3.1. Results of Automatic Assessment of Image Exposure Quality

3.1.1. Cross-Validation Performance

To rigorously evaluate the effectiveness and generalizability of the exposure quality classifier, a five-fold cross-validation protocol was applied. The aggregated results are presented in Table 5, where each metric is reported as the mean ± standard deviation across all folds.
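The evaluation protocol can be sketched as follows. This is a minimal sketch that assumes stratified fold assignment (the text specifies only that five folds were used) and a sample standard deviation for the reported ± values. The helper names `stratified_kfold` and `mean_sd` are hypothetical. The usage example reuses the 400 well-exposed and 150 poorly exposed labeled images from Table 7.

```python
import random
import statistics
from collections import defaultdict


def stratified_kfold(labels, k=5, seed=0):
    """Assign each sample index to one of k folds while preserving class proportions.

    Assumption: stratification is shown because the dataset is class-imbalanced;
    the study's exact splitting procedure is not specified.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class's shuffled indices round-robin across the folds.
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)
    return folds


def mean_sd(values):
    """Return (mean, sample standard deviation) of per-fold metric values."""
    return statistics.mean(values), statistics.stdev(values)


# 1 = well-exposed, 0 = poorly exposed (class sizes from Table 7).
labels = [1] * 400 + [0] * 150
folds = stratified_kfold(labels)
print([len(f) for f in folds], [sum(labels[i] for i in f) for f in folds])
```

Each validation fold then holds 80 well-exposed and 30 poorly exposed images. Every metric is computed per fold, and `mean_sd` produces the mean ± SD entries.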

The XGBoost model achieved a mean overall accuracy of nearly 97% (Table 5), meaning that almost all images were correctly classified as either well-exposed or poorly exposed. Importantly, the balanced accuracy shows that the classifier maintains similar sensitivity for both classes. This mitigates the risk of bias towards the majority class, an essential consideration given the class imbalance in the dataset. The high precision indicates that when the model labels an image as well-exposed, the prediction is correct in the vast majority of cases, so false positives are kept to a minimum. The high recall indicates that the classifier detects nearly all truly well-exposed images, which limits the number of false negatives. The resulting F1-score reflects a robust balance between precision and recall.
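For reference, all of these metrics follow from the four confusion-matrix counts. The sketch below assumes that the well-exposed class is the positive class, and the function name is illustrative. When applied to the pooled counts in Table 7, it yields pooled values. These can differ slightly from the fold-averaged values in Table 5.

```python
def binary_metrics(tp, fp, fn, tn):
    """Standard binary classification metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)      # share of positive predictions that are correct
    recall = tp / (tp + fn)         # sensitivity: share of actual positives found
    specificity = tn / (tn + fp)    # share of actual negatives correctly rejected
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "balanced_accuracy": (recall + specificity) / 2,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
    }


# Pooled counts from Table 7, with "good" (well-exposed) as the positive class.
pooled = binary_metrics(tp=388, fp=5, fn=12, tn=145)
print({name: round(value, 4) for name, value in pooled.items()})
```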

A key strength of the model is its very high area under the precision–recall curve (AUC-PR; Table 5). This metric is particularly informative for imbalanced datasets because it reflects performance on the positive class rather than being dominated by correct rejections. The high value implies that the classifier consistently ranks well-exposed images above poorly exposed ones across a wide range of decision thresholds. Its performance therefore does not depend critically on the choice of a single cut-off.
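To illustrate how AUC-PR summarizes many thresholds at once, the sketch below computes average precision. This is the step-wise AUC-PR estimator used, for example, by scikit-learn's `average_precision_score`. It is an assumption whether the study used this estimator or trapezoidal interpolation. For a classifier that ranks at random, the expected AUC-PR equals the prevalence of the positive class, and the reported value should be read against that baseline.

```python
def average_precision(y_true, scores):
    """Step-wise area under the precision-recall curve (average precision).

    AP = sum_k (R_k - R_{k-1}) * P_k, with one step per distinct score.
    """
    pairs = sorted(zip(scores, y_true), key=lambda p: -p[0])
    n_pos = sum(y_true)
    tp = fp = 0
    ap = 0.0
    prev_recall = 0.0
    i = 0
    while i < len(pairs):
        # Treat all samples that share the same score as a single threshold step.
        s = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == s:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        recall = tp / n_pos
        precision = tp / (tp + fp)
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


print(average_precision([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1]))
```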

The low standard deviations across all metrics indicate that the classifier's performance is stable across folds and does not hinge on any particular train–test partition. Such consistency supports the robustness of the feature set and the model's suitability for automated, scalable quality control.

These results have direct practical implications for downstream phenological analysis. As a reliable, automated pre-filtering step, the exposure quality classifier ensures that the images used to train and evaluate the deep learning models for phenological phase classification are predominantly well-exposed. This reduces the risk of confounding errors related to image quality and increases the robustness of subsequent models. It also supports the development of reliable, high-throughput phenological monitoring systems under real-world conditions.

3.1.2. Per-Fold Results

To provide a comprehensive view of the classifier's performance and its generalizability, Table 6 reports the detailed metrics for each individual fold of the five-fold cross-validation. The per-fold results allow assessment of not only the average effectiveness but also the variability and robustness of the XGBoost-based exposure quality model across different random train–test splits.

A close examination of Table 6 reveals that, despite inevitable differences in the composition of the validation data across folds, the model maintained consistently high performance. Accuracy ranged from 0.945 (Fold 4) to 0.991 (Fold 1). This spread of less than five percentage points suggests that the classifier is not overly sensitive to specific data subsets. Balanced accuracy, which accounts for class imbalance, also remained high (0.952–0.994 across folds), confirming that the model performs comparably on well-exposed and poorly exposed images.

Looking more closely at precision and recall, we note that the model’s recall (the ability to correctly identify well-exposed images) is particularly stable and high, with values between 0.933 and 1.000. This implies that only a small fraction of well-exposed images is erroneously discarded—a crucial property in applications where it is preferable to retain as many good-quality samples as possible for downstream phenological analysis.

Precision, on the other hand, exhibited slightly greater variation across folds, ranging from 0.853 (Fold 4) to 0.968 (Fold 1). This reflects occasional increases in false positives, where some poorly exposed images are mistakenly retained as well-exposed. The modest dip in precision in Folds 4 and 5 could be attributed to the presence of borderline or ambiguous cases in these validation subsets—such as images with exposure levels near the classification threshold, or with lighting conditions that are not clearly distinguishable by automated features. In practice, this means that while the vast majority of accepted images will indeed be well-exposed, a small number of borderline-quality images may be included—an acceptable compromise for many large-scale or high-throughput monitoring tasks.

The F1-score, the harmonic mean of precision and recall, ranged from 0.906 (Fold 4) to 0.984 (Fold 1), again demonstrating the high overall reliability of the model. Notably, the area under the precision–recall curve (AUC-PR) remained exceptionally high for all folds (from 0.991 to 1.000). This is especially important given the imbalanced class distribution, in which the well-exposed class predominates.

Such consistently high AUC-PR values indicate that the model can effectively discriminate between well- and poorly exposed images across a range of threshold settings, providing flexibility for future adjustments of sensitivity or specificity according to project needs. In addition, the combination of high recall and balanced accuracy ensures that almost all well-exposed images are captured, and there is no substantial bias toward the majority class.
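The threshold flexibility described above could be exploited by choosing the operating point from validation scores instead of using a fixed 0.5 cut-off. The following is a minimal sketch with a hypothetical helper. It returns the highest threshold that meets a target recall, together with the precision obtained at that threshold.

```python
def threshold_for_recall(y_true, scores, target_recall):
    """Return (threshold, precision) for the highest threshold meeting target_recall.

    A sample counts as predicted positive when its score >= threshold.
    Returns None if no threshold reaches the target (e.g. no positives).
    """
    n_pos = sum(y_true)
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= t)
        if n_pos and tp / n_pos >= target_recall:
            fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= t)
            return t, tp / (tp + fp)
    return None


print(threshold_for_recall([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1], target_recall=1.0))
```

Raising the target recall lowers the threshold, and this typically costs precision, which is the trade-off that a high AUC-PR keeps small.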

The small, fold-specific variations observed are typical for limited or heterogeneous datasets and reflect the presence of challenging examples near the decision boundary. These variations also highlight the importance of rigorous cross-validation in performance assessment, because results from a single train–test split might over- or underestimate the true generalization ability of the classifier.

From a practical perspective, the robustness demonstrated across all validation folds means that the trained classifier can be confidently deployed as an automated pre-filtering tool in large-scale phenological image datasets. Its high recall helps preserve valuable, well-exposed data for downstream deep learning analyses. The moderate rate of false positives poses little risk for the intended applications, especially because subsequent visual or algorithmic checks can further refine the selection if necessary.

In conclusion, the per-fold analysis confirms the exposure quality classifier's stability, reliability, and suitability for real-world phenological image pipelines. Its ability to maintain high performance across all data partitions, even in the presence of ambiguous or borderline cases, suggests potential for transferring this approach to other datasets and environmental monitoring scenarios. The amount of retraining or tuning required in such settings would still need to be verified.

3.1.3. Confusion Matrix for Exposure Quality Classification

The performance of the XGBoost-based exposure quality classifier was further analyzed by examining the aggregated confusion matrix presented in Table 7. The matrix summarizes the results of exposure classification for the manually labeled test set, aggregated over all five cross-validation folds.

The confusion matrix reveals that among the 400 images annotated as well-exposed (“Actual: good”), the model correctly classified 388 as well-exposed and misclassified only 12 as poorly exposed. For images labeled as poorly exposed (“Actual: bad”), 145 out of 150 were correctly identified, while only 5 were erroneously classified as well-exposed.

These results indicate that the classifier achieves very high sensitivity (recall) for both classes: only 3% of well-exposed images and 3.3% of poorly exposed images were misclassified. The high true positive rates for both categories highlight the model’s ability to robustly distinguish between acceptable and poor image exposure in diverse, real-world conditions.
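The per-class rates follow directly from the counts in Table 7. Precision depends on which class is designated positive. Computing it for both classes from the same counts therefore makes clear which convention a reported precision value refers to:

```latex
\begin{aligned}
\mathrm{Recall}_{\mathrm{good}} &= \frac{388}{388 + 12} = \frac{388}{400} = 0.970, &\quad
\mathrm{Recall}_{\mathrm{bad}} &= \frac{145}{145 + 5} = \frac{145}{150} \approx 0.967,\\
\mathrm{Precision}_{\mathrm{good}} &= \frac{388}{388 + 5} = \frac{388}{393} \approx 0.987, &\quad
\mathrm{Precision}_{\mathrm{bad}} &= \frac{145}{145 + 12} = \frac{145}{157} \approx 0.924.
\end{aligned}
```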

From a practical perspective, this means that only a small fraction of high-quality images would be inadvertently discarded by the pre-filtering step, while nearly all poorly exposed images are reliably detected and excluded from further phenological analysis. The low number of false positives (bad images accepted as good) helps ensure that downstream deep learning models are trained on data of consistently high visual quality, which supports their generalizability and robustness.

The overall low rate of misclassification, confirmed both by quantitative metrics and the confusion matrix structure, demonstrates the effectiveness of the feature-based, data-driven approach adopted in this study. The classifier’s performance justifies its deployment as an automated quality-control module in high-throughput phenological image pipelines, where manual curation is infeasible.

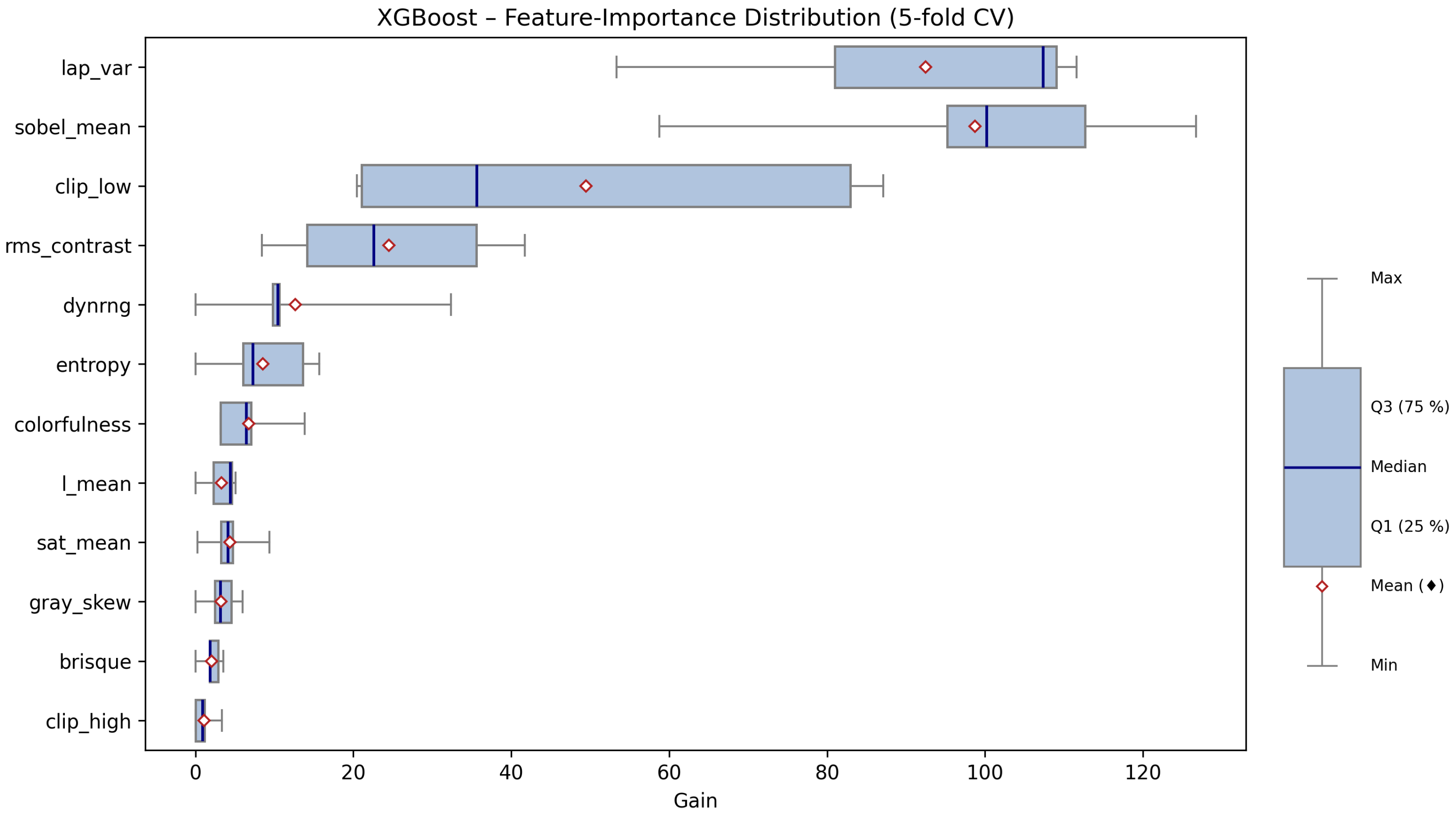

3.1.4. Feature Importance Analysis

A crucial aspect of deploying machine learning models in real-world image analysis pipelines is understanding which features drive the classification decisions. To this end, we performed a detailed feature importance analysis for the XGBoost exposure quality classifier. The analysis was based on the “gain” metric, which measures the improvement in the training objective (loss reduction) contributed by the tree splits that use a given feature.
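For readers unfamiliar with the metric, the split gain used by XGBoost is shown below. Here G and H are the sums of first- and second-order gradients of the loss over the samples that fall into the left (L) and right (R) child, lambda is the L2 penalty on leaf weights, and gamma is the per-leaf complexity penalty. With XGBoost's `importance_type="gain"`, the importance of a feature f is the average of this quantity over the set S_f of splits that use f. It is an assumption that this default was used here rather than `"total_gain"`.

```latex
\mathrm{Gain}_{\mathrm{split}} = \frac{1}{2}\left[\frac{G_L^2}{H_L + \lambda} + \frac{G_R^2}{H_R + \lambda} - \frac{(G_L + G_R)^2}{H_L + H_R + \lambda}\right] - \gamma,
\qquad
\mathrm{Gain}(f) = \frac{1}{|S_f|} \sum_{s \in S_f} \mathrm{Gain}_{\mathrm{split}}(s)
```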

Table 8 provides a comprehensive summary of feature importance statistics, including the median, quartiles, mean, maximum, minimum, and interquartile range (IQR) for each feature, aggregated across all cross-validation folds. These statistics allow us to identify not only which features are most influential, but also how stable their importance is across different data splits.
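The per-feature summaries in Table 8 can be derived from the gain values collected in each fold, for example via XGBoost's `Booster.get_score(importance_type="gain")`. The following is a minimal sketch that assumes linear-interpolation quartiles. The gain values in the usage line are illustrative placeholders, not results of this study.

```python
import statistics


def summarize_gains(gains_by_fold):
    """Summarize one feature's per-fold gain values (min, quartiles, mean, max, IQR).

    Quartiles use linear interpolation ('inclusive'); the study's method is an assumption.
    """
    q1, median, q3 = statistics.quantiles(gains_by_fold, n=4, method="inclusive")
    return {
        "min": min(gains_by_fold),
        "q1": q1,
        "median": median,
        "mean": statistics.mean(gains_by_fold),
        "q3": q3,
        "max": max(gains_by_fold),
        "iqr": q3 - q1,
    }


# Hypothetical per-fold gains for a single feature (illustrative only).
print(summarize_gains([90.0, 100.0, 110.0, 120.0, 130.0]))
```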

The results demonstrate a pronounced hierarchy among the twelve extracted image features. The three most important features are lap_var (Laplacian variance), sobel_mean (mean Sobel gradient magnitude), and clip_low (fraction of underexposed pixels). For example, lap_var exhibits the highest median gain (107.4) with a relatively narrow interquartile range (IQR = 28.0), which indicates a strong and consistent contribution to model performance across all folds. Similarly, sobel_mean (median = 100.2) and clip_low (median = 35.7, but with a wide IQR) are key predictors. These features capture image sharpness, edge content, and the prevalence of dark, underexposed regions, respectively. High values of lap_var and sobel_mean are typical for well-exposed, in-focus images. clip_low identifies images in which a significant fraction of pixels are too dark, a common artifact of underexposure.
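The three dominant features can be computed as sketched below for a grayscale image stored as a list of rows of 8-bit values. This pure-Python illustration rests on several assumptions: a 4-neighbour Laplacian kernel, 3×3 Sobel kernels evaluated on interior pixels only, and hypothetical clipping thresholds of 5 and 250 for clip_low and clip_high. The study's exact kernels and thresholds are not given here. A production pipeline would more likely use OpenCV's `cv2.Laplacian` and `cv2.Sobel`.

```python
import math
import statistics

LAPLACIAN = ((0, 1, 0), (1, -4, 1), (0, 1, 0))
SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def _filter(img, kernel, r, c):
    """Correlate a 3x3 kernel with the neighbourhood centred on pixel (r, c)."""
    return sum(kernel[i][j] * img[r + i - 1][c + j - 1]
               for i in range(3) for j in range(3))


def _interior(img):
    """Yield (row, col) for all pixels that have a full 3x3 neighbourhood."""
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            yield r, c


def lap_var(img):
    """Variance of the Laplacian response; low values indicate blur or flat images."""
    return statistics.pvariance([_filter(img, LAPLACIAN, r, c) for r, c in _interior(img)])


def sobel_mean(img):
    """Mean Sobel gradient magnitude, a proxy for edge content."""
    return statistics.fmean([math.hypot(_filter(img, SOBEL_X, r, c), _filter(img, SOBEL_Y, r, c))
                             for r, c in _interior(img)])


def clip_fraction(img, low=5, high=250):
    """Fractions of pixels at or below `low` (clip_low) and at or above `high` (clip_high)."""
    pixels = [p for row in img for p in row]
    n = len(pixels)
    return sum(p <= low for p in pixels) / n, sum(p >= high for p in pixels) / n
```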

The second tier of features includes rms_contrast, dynrng, and entropy. These features measure global contrast and brightness variability. For instance, rms_contrast (median = 22.6) and dynrng (median = 10.5) are important for distinguishing images that, despite not being severely underexposed or blurred, may suffer from flat lighting or a lack of tonal diversity. The entropy feature quantifies the overall complexity of the luminance distribution, with higher values corresponding to more visually rich and well-exposed images.
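The second-tier features have similarly compact definitions. In this sketch, rms_contrast is the standard deviation of intensities scaled to [0, 1], and entropy is the Shannon entropy of the 8-bit histogram. dynrng is assumed to be the spread between the 1st and 99th intensity percentiles. The percentile choice and the function names are assumptions rather than the study's documented implementation.

```python
import math
import statistics
from collections import Counter


def rms_contrast(pixels):
    """RMS contrast: population standard deviation of intensities scaled to [0, 1]."""
    return statistics.pstdev([p / 255 for p in pixels])


def dynamic_range(pixels, lo_pct=1, hi_pct=99):
    """Spread between low and high intensity percentiles, scaled to [0, 1] (assumed definition)."""
    cuts = statistics.quantiles(pixels, n=100, method="inclusive")  # 99 percentile cut points
    return (cuts[hi_pct - 1] - cuts[lo_pct - 1]) / 255


def entropy(pixels):
    """Shannon entropy (bits) of the 8-bit intensity histogram."""
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(pixels).values())
```

A flat, low-contrast image scores near zero on all three, whereas an image that uses the full tonal range approaches the maximum entropy of 8 bits.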

Features related to color properties, such as colorfulness, sat_mean (mean saturation), and l_mean (mean lightness), show moderate but still non-negligible importance. These metrics help the classifier to recognize images where exposure errors manifest as washed-out colors or overall dullness, phenomena frequently encountered in natural image acquisition under suboptimal lighting.
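The color features could be computed along the following lines. The sketch assumes that colorfulness follows the Hasler and Süsstrunk opponent-channel metric, that sat_mean is the mean HSV saturation, and that l_mean is the mean HLS lightness. CIELAB L* would be an equally plausible choice for l_mean, and none of these definitions is confirmed by the text.

```python
import colorsys
import math
import statistics


def colorfulness(rgb_pixels):
    """Hasler-Suesstrunk colorfulness from the opponent channels rg and yb."""
    rg = [r - g for r, g, _ in rgb_pixels]
    yb = [0.5 * (r + g) - b for r, g, b in rgb_pixels]
    std_root = math.hypot(statistics.pstdev(rg), statistics.pstdev(yb))
    mean_root = math.hypot(statistics.fmean(rg), statistics.fmean(yb))
    return std_root + 0.3 * mean_root


def sat_and_lightness(rgb_pixels):
    """Mean HSV saturation and mean HLS lightness of 8-bit RGB pixels, both in [0, 1]."""
    scaled = [(r / 255, g / 255, b / 255) for r, g, b in rgb_pixels]
    sat = statistics.fmean([colorsys.rgb_to_hsv(*p)[1] for p in scaled])
    light = statistics.fmean([colorsys.rgb_to_hls(*p)[1] for p in scaled])
    return sat, light
```

Washed-out or dull images yield low colorfulness and low saturation, which matches the failure pattern described above.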

The features gray_skew (skewness of the lightness histogram) and brisque (a no-reference image quality index) have relatively low average importance, but they may still contribute in specific edge cases. Notably, clip_high (fraction of overexposed pixels) is consistently the least important feature, with both median and mean gains near zero in most folds. This suggests that, within this dataset, severe overexposure was either much less common or less diagnostically useful than underexposure.

These findings are further illustrated in Figure 5, which displays a horizontal boxplot of gain values for each feature. The graphical summary provides several key insights:

Features with the highest medians (lap_var, sobel_mean, and clip_low) are consistently dominant, regardless of fold.

Some features, like clip_low and rms_contrast, show substantial variability (wide IQRs), indicating their influence can fluctuate depending on the particular cross-validation split. This reflects the heterogeneous nature of real-world image data.

Features clustered near zero (e.g., clip_high, brisque) appear as short, compact boxplots, confirming their marginal role in the classification.

The robust and reproducible ranking of feature importance across folds suggests that the model's predictions are grounded in meaningful, interpretable aspects of image quality rather than in spurious associations. From a practical standpoint, this analysis provides direct guidance for future improvements or adaptations of the exposure classifier:

Emphasis should be placed on further refining sharpness and edge-based metrics, because these contribute most to the classifier's decisions.

In applications or datasets where overexposure is a larger concern, the feature set might need to be expanded or re-weighted accordingly.

For transfer to other domains or imaging conditions, the current feature hierarchy should be re-validated to ensure continued model interpretability and trustworthiness.

In summary, the feature importance analysis confirms that exposure quality in field phenological images is most effectively assessed using a combination of sharpness, edge, and underexposure metrics. The stability of these findings across cross-validation folds underscores the robustness of the data-driven approach, supporting its use as a reliable pre-filtering step in automated, large-scale environmental monitoring pipelines.

3.1.5. Inference and Final Well-Exposed Subset

Once trained, the exposure quality model was applied in batch mode to the entire Linden_ROI_civil dataset. For each image, the model predicted the probability of being well-exposed. Images classified as “good” (with predicted probability above 0.5) were retained in the final working set (Linden_ROI_wellExposed), while images assessed as “bad” were discarded from further phenological analysis.
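The batch filtering step reduces to a threshold on the predicted probabilities. The following is a minimal sketch that assumes the probabilities have already been computed, for instance taken from column 1 of an `XGBClassifier.predict_proba` output if "good" was encoded as label 1. The function name and file names are hypothetical.

```python
def split_by_exposure(probabilities, threshold=0.5):
    """Partition images by predicted probability of being well-exposed.

    `probabilities` maps image identifiers to P(well-exposed). Images strictly
    above `threshold` are kept, following the "above 0.5" rule in the text.
    """
    kept = sorted(k for k, p in probabilities.items() if p > threshold)
    discarded = sorted(k for k, p in probabilities.items() if p <= threshold)
    return kept, discarded


kept, discarded = split_by_exposure({"img_001.jpg": 0.93, "img_002.jpg": 0.41})
print(kept, discarded)
```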

The class distribution after this filtering step is summarized in Table 9.

This additional exposure-based filtering ensured that the dataset used for the main flowering classification task was not only phenologically annotated, but also highly consistent in terms of image quality. The removal of poorly exposed images is expected to improve the accuracy and robustness of all subsequent analyses and machine learning models.

3.2. Results of Deep Learning Models for Linden Flowering Phase Classification

3.2.1. Confusion Matrix Analysis

To evaluate the effectiveness of the selected deep learning architectures in the task of automatic classification of the phenological flowering phase of small-leaved lime (Tilia cordata Mill.), we performed a detailed analysis of the aggregated confusion matrices and classification metrics. Results are presented for seven models, ConvNeXt Tiny, EfficientNetB3, MobileNetV3 Large, ResNet50, Swin Transformer Tiny, VGG16, and Vision Transformer (ViT-B/16), summarizing predictions across all folds of the cross-validation.

Figure 6 presents the confusion matrices for each of the analyzed models. All architectures achieved very high accuracy in classifying the majority class (non-flowering; class 0), with the number of true negatives exceeding 18,900 in each configuration (representing approximately 95.9% of all samples). The number of false positives and false negatives was very low (usually below 20 cases out of nearly 20,000 samples), indicating high precision and sensitivity of the models, even in the presence of a significant class imbalance.

The best performance in correctly classifying positive samples (flowering; class 1) was obtained for the VGG16, ResNet50, and ConvNeXt Tiny models. These models misclassified only 10, 12, and 11 positive cases, respectively, out of 788 positive samples (i.e., sensitivity of 98.7%, 98.5%, and 98.6% for class 1). Similarly, these models produced very few false positive predictions, that is, flowering assigned to a non-flowering image (from 7 to 23 cases, i.e., at most about 0.12% of all negative samples).

The remaining architectures (EfficientNetB3, MobileNetV3 Large, Swin-Tiny, and ViT-B/16) also demonstrated high performance, although they made slightly more errors in the positive class (up to 32 false negatives for MobileNetV3 Large). Nevertheless, even in these cases the sensitivity for class 1 was at least 95.9% (756 of 788 flowering images correctly detected).
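The class-1 sensitivities quoted in this subsection follow from sensitivity = (P − FN) / P with P = 788 flowering images, assuming that every model was evaluated on the same 788 positives. The sketch below reproduces this arithmetic. For example, the 32 false negatives of MobileNetV3 Large correspond to 756/788 ≈ 95.9%.

```python
def sensitivity_pct(n_positive, false_negatives):
    """Percentage of positive (flowering) images that were correctly detected."""
    return 100 * (n_positive - false_negatives) / n_positive


N_POS = 788  # flowering images, as stated in the text
for model, fn in [("VGG16", 10), ("ConvNeXt Tiny", 11), ("ResNet50", 12),
                  ("MobileNetV3 Large", 32)]:
    print(f"{model}: {sensitivity_pct(N_POS, fn):.1f}%")
```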

It is important to note that all considered models maintained a very good balance between precision and recall (F1-score for class 1 above 0.94), despite the strong class imbalance (ratio of approximately 24:1 in favor of class 0). Inspection of the misclassified images (Section 3.2.4) indicates that most errors concern borderline cases or images with challenging lighting conditions.

In summary, the convolutional networks VGG16, ResNet50, and ConvNeXt Tiny achieve the highest sensitivity and specificity for both flowering and non-flowering classes. The transformer-based models (ViT-B/16, Swin-Tiny) and the efficiency-oriented architectures (EfficientNetB3, MobileNetV3 Large) provide a very comparable level of performance. The choice of model for practical applications should consider not only accuracy but also computational complexity and inference time.

These results demonstrate that state-of-the-art deep learning architectures enable highly accurate automatic classification of flowering phases, even under strong class imbalance and varying illumination conditions. Such models can provide a robust foundation for automated, high-throughput phenological monitoring of trees in real-world field settings.

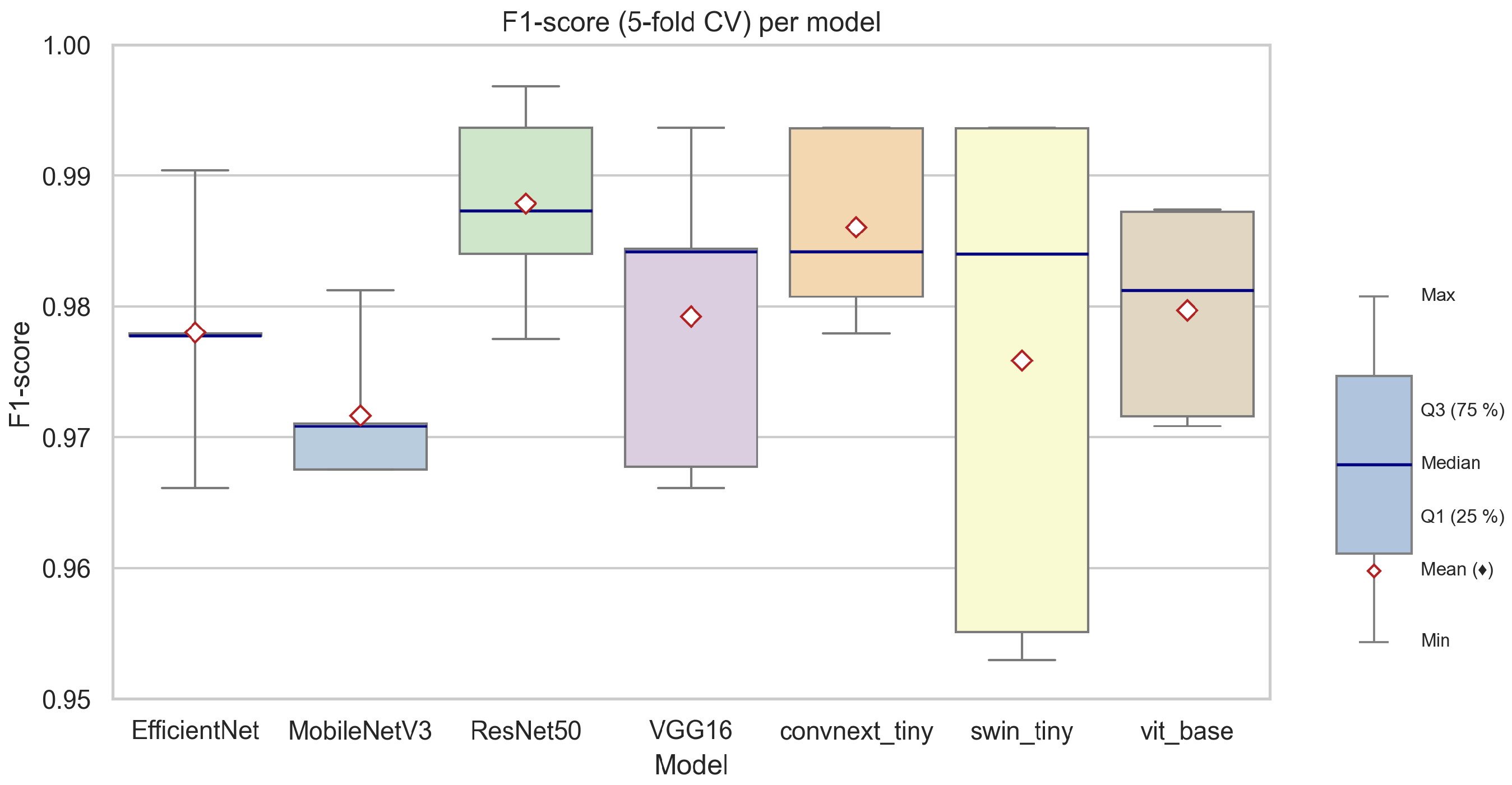

3.2.2. Quantitative Comparison of Classification Metrics

A comprehensive quantitative comparison of the evaluated deep learning models for the classification of linden flowering phases is presented in Table 10, which reports cross-validated results for seven architectures. The models were benchmarked using several standard classification metrics: balanced accuracy, overall accuracy, precision, sensitivity (recall), specificity, F1-score, and Matthews correlation coefficient (MCC). Each metric is reported as mean ± standard deviation across the 5-fold cross-validation.
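MCC is the least familiar of these metrics. It uses all four confusion-matrix cells and is symmetric with respect to which class is called positive, which keeps it informative under strong class imbalance. The following is a minimal sketch of the standard formula. Returning 0.0 when the denominator is zero is a common convention and an assumption here.

```python
import math


def mcc(tp, fp, fn, tn):
    """Matthews correlation coefficient from binary confusion-matrix counts."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom


print(mcc(tp=10, fp=0, fn=0, tn=90))  # perfect classification
```

MCC ranges from -1 (total disagreement) through 0 (chance level) to 1 (perfect agreement). Unlike accuracy, it cannot be inflated simply by predicting the majority class.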

Across all models, the achieved classification performance was exceptionally high. The highest mean F1-scores were observed for ResNet50 (0.9879 ± 0.0077) and ConvNeXt Tiny (0.9860 ± 0.0073), with VGG16, EfficientNetB3, and ViT-B/16 also yielding very strong results (mean F1-score ≈ 0.98). The lowest (though still robust) F1-score was reported for MobileNetV3 Large (0.9716 ± 0.0056), indicating this lightweight architecture is marginally less effective in this specific phenological context.

Figure 7 provides a visual summary of the F1-scores achieved by each model across the five cross-validation folds. The boxplot demonstrates that all models maintained high and consistent F1-scores, with narrow interquartile ranges and only minimal variability between folds. Notably, transformer-based models (ViT-B/16, Swin-Tiny) exhibited a slightly wider spread in F1-score distribution compared to classical convolutional networks, which may indicate a higher sensitivity to fold-specific data variability, particularly in minority class samples.

When analyzing individual metrics, all models demonstrated excellent specificity (above 0.99), confirming their ability to correctly identify non-flowering images, which dominate the dataset. Precision and recall values, especially for the minority (flowering) class, were also consistently high. For example, ResNet50 achieved both high precision (0.9910) and recall (0.9847), resulting in the overall best F1-score and MCC, suggesting superior reliability in detecting flowering phases without sacrificing performance on the majority class.
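As a consistency check, the F1-score can be recomputed from the mean precision and recall reported for ResNet50. Table 10 averages F1 per fold, so the harmonic mean of the averaged precision and recall (about 0.9878) need not match the mean F1-score (0.9879) exactly. A discrepancy of this size is expected.

```python
def f1_from_pr(precision, recall):
    """F1-score as the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


print(round(f1_from_pr(0.9910, 0.9847), 4))
```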

ConvNeXt Tiny combined near-top accuracy with the second-shortest total computation time (1586 s; Section 3.2.5), which makes it an attractive choice for large-scale or real-time deployments. EfficientNetB3 also offered excellent accuracy. In our setup, however, it required the longest training time despite being designed for parameter and FLOP efficiency. MobileNetV3 Large, optimized for resource-limited scenarios, maintained competitive performance with only a slight reduction in recall and F1-score, likely attributable to its smaller model capacity.

In summary, the results indicate that all tested deep learning architectures are well-suited for the automatic classification of flowering phenological phases in linden trees, and the performance differences between the top models are minor in practical terms. The small standard deviations across folds support the robustness and generalizability of the results. Among the tested models, ResNet50 and ConvNeXt Tiny appear to provide the best combination of accuracy and consistency across folds. Transformer-based models show promise, particularly in scenarios where learning complex, spatially distributed patterns is crucial.

These findings are further illustrated by the F1-score boxplot (Figure 7), which facilitates an intuitive comparison of both the central tendency (median and mean) and the variability of each architecture's performance. The overall consistency and high level of metrics obtained validate the effectiveness of deep learning models for real-world, automated phenological monitoring based on image data.

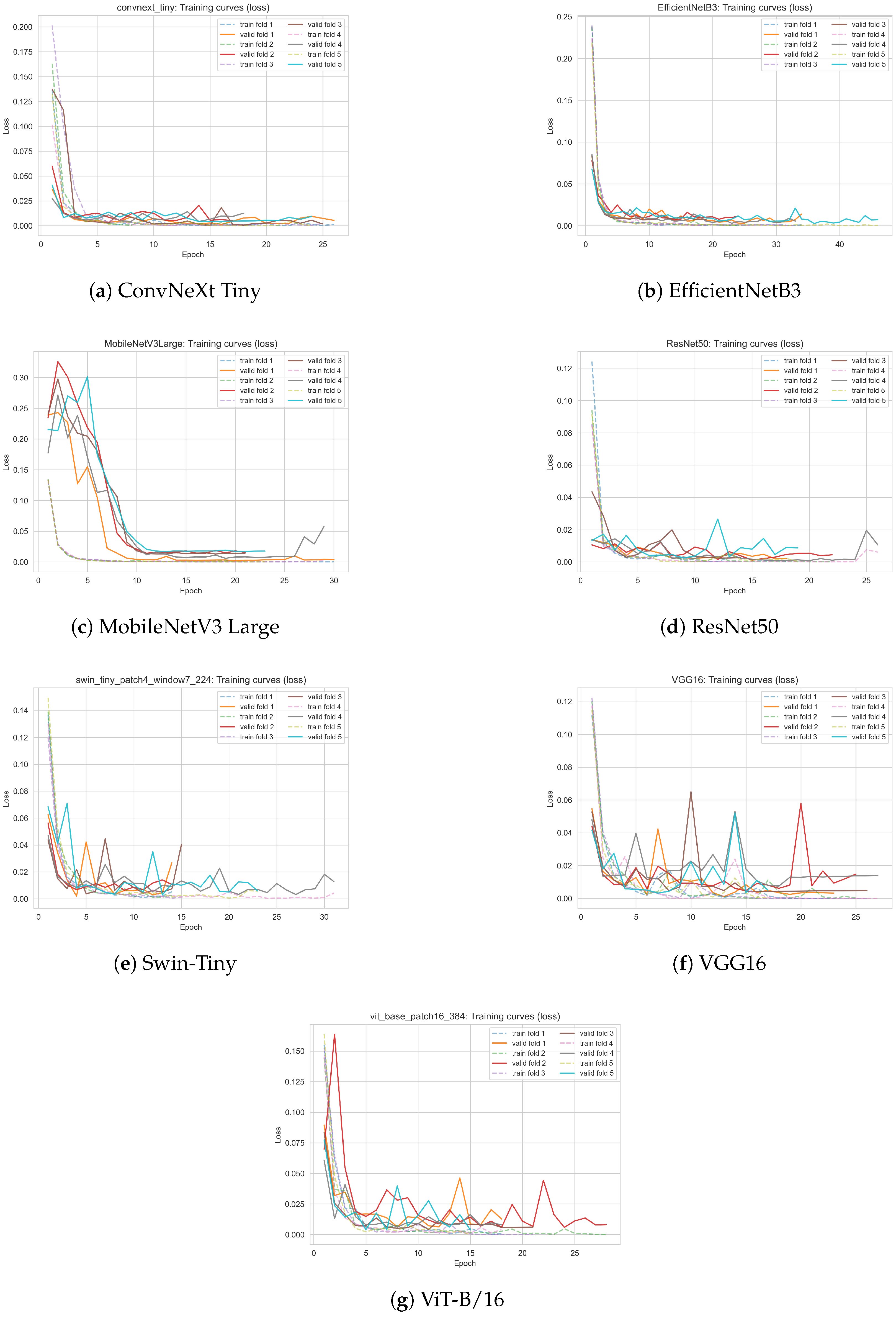

3.2.3. Analysis of Training and Validation Loss Curves for Deep Learning Models

To gain deeper insight into the learning dynamics and convergence behavior of all evaluated deep learning models, we present the training and validation loss curves for each architecture. The loss curves, obtained during 5-fold cross-validation, illustrate both the speed of convergence and the stability of training for each model.

Figure 8 displays the evolution of the training and validation loss as a function of the epoch for all analyzed models: ConvNeXt Tiny, EfficientNetB3, MobileNetV3 Large, ResNet50, Swin Transformer Tiny, VGG16, and Vision Transformer (ViT-B/16). For each model, both training and validation losses are plotted for all cross-validation folds, allowing for a direct visual assessment of convergence, potential overfitting, and variability between folds.

The following key observations can be made:

All models demonstrate rapid convergence, with the training loss sharply decreasing during the first few epochs and reaching low, stable values.

The validation loss closely follows the training loss, indicating good generalization and absence of significant overfitting for any architecture.

The lowest and most stable validation losses were observed for the convolutional models ConvNeXt Tiny, ResNet50, and VGG16 and for the transformer-based models ViT-B/16 and Swin-Tiny, confirming their robustness on the phenological classification task.

Minor fluctuations in the validation loss curves for some folds, especially for MobileNetV3 Large and VGG16, likely reflect the limited number of positive-class (flowering) samples and challenging real-world image variability.
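The visual checks listed above could also be quantified. The hypothetical helper below is not part of the described pipeline. For one fold, it reports the epoch with the lowest validation loss and the gap between validation and training loss. A gap that keeps growing after the validation minimum would be a sign of overfitting.

```python
def loss_diagnostics(train_loss, val_loss):
    """Summarize one fold's per-epoch training and validation loss curves."""
    best = min(range(len(val_loss)), key=val_loss.__getitem__)
    return {
        "best_epoch": best + 1,                           # 1-based epoch of minimum validation loss
        "gap_at_best": val_loss[best] - train_loss[best],
        "final_gap": val_loss[-1] - train_loss[-1],
        "epochs_after_best": len(val_loss) - 1 - best,    # epochs trained past the minimum
    }


print(loss_diagnostics([0.7, 0.3, 0.1, 0.05], [0.72, 0.35, 0.2, 0.3]))
```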

The training curves corroborate the quantitative results reported in Table 10. They show that all tested models are capable of fast and stable optimization on the curated dataset of linden tree images, with no indication of divergence, underfitting, or severe overfitting.

3.2.4. Qualitative Analysis of Misclassified Images

A manual inspection of the misclassified subset revealed two recurring failure modes that explain the handful of errors still made by the best-performing models (cf. Table 6 and Table 10):

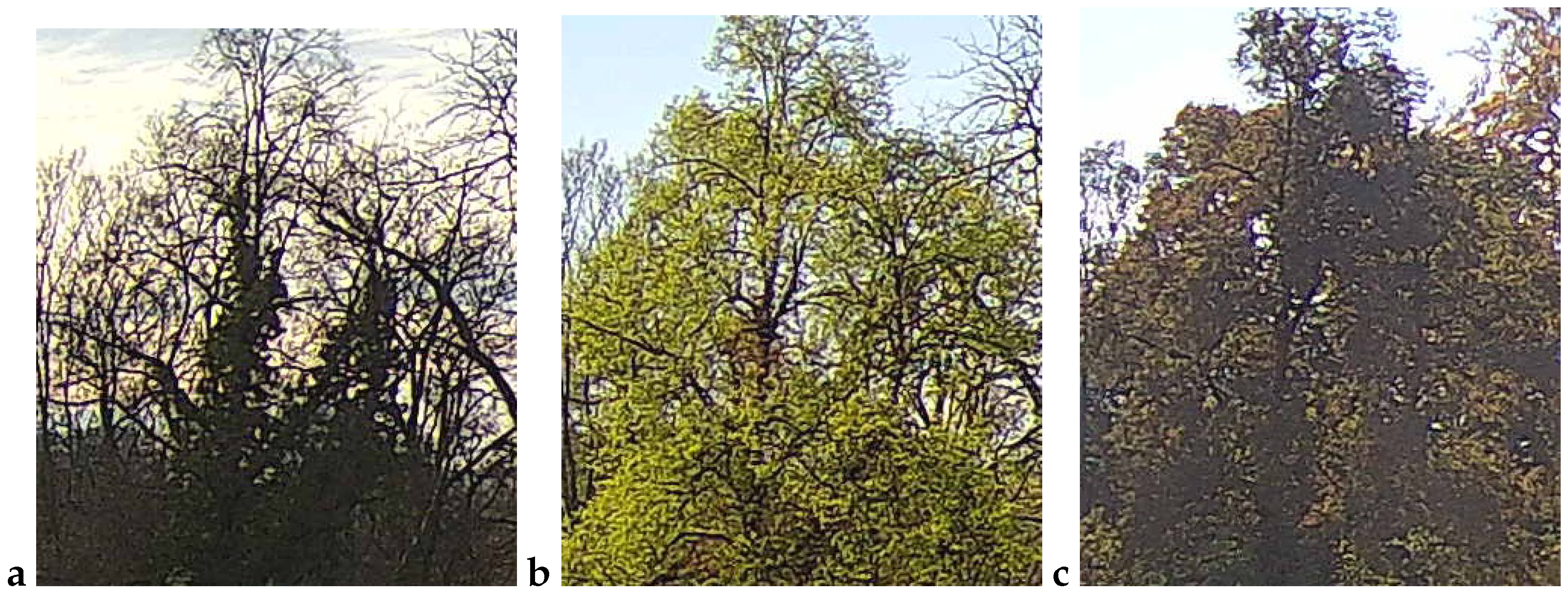

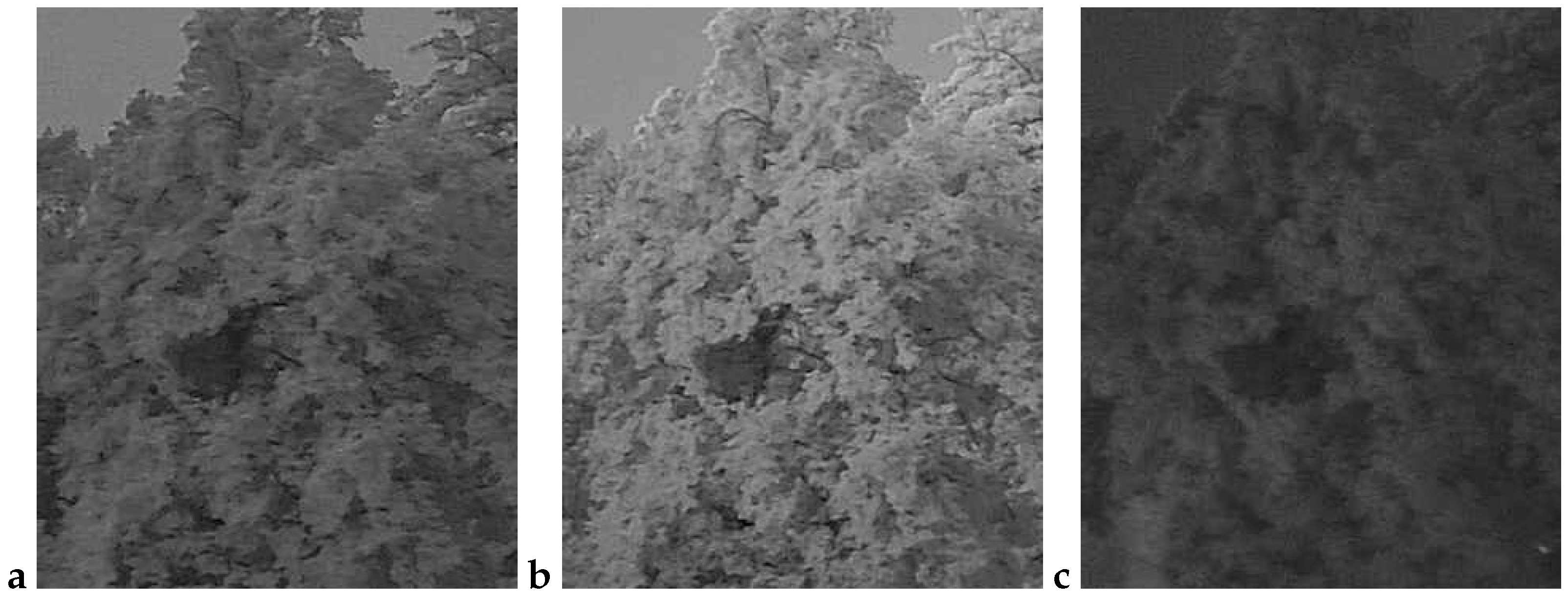

False negatives (FNs): flowering images predicted as non-flowering. These cases (Figure 9) are dominated by situations in which bracts and flowers are heavily shaded, back-lit, or partially veiled by morning mist. In all three scenarios the bracts are present, but their color and texture contrast against the surrounding foliage is strongly attenuated. As a result, the model relies on the more salient (dark-green) context and misses the subtle floral signal. Notably, panels a and b were taken at 16:10 and 18:00 CEST on 21 June 2022, i.e., at the very onset of peak bloom (BBCH 61–63). Panel c (24 June 05:32 CEST) precedes sunrise and exhibits a pronounced blue cast with low dynamic range. These examples confirm that early-phase, low-light flowering remains the most challenging condition.

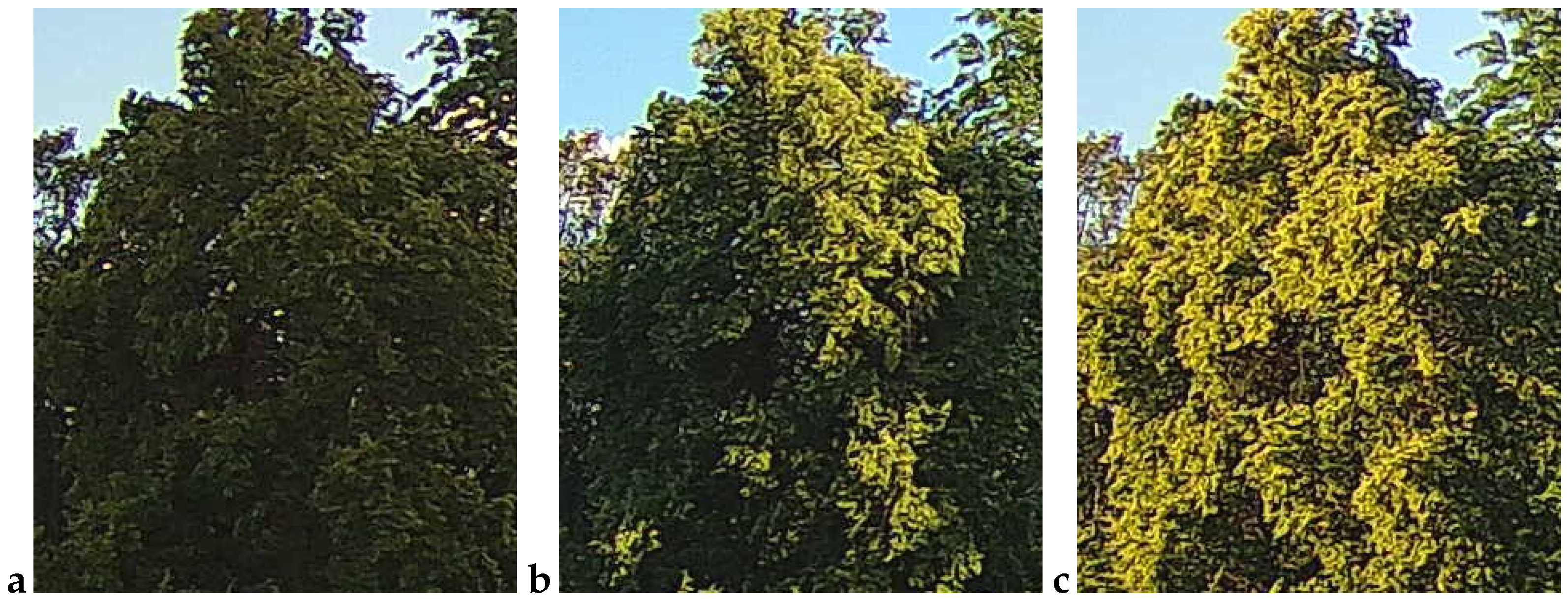

False positives (FPs): vegetative images predicted as flowering. The converse error type (Figure 10) arises primarily when non-flowering canopies display locally yellow-green highlights that mimic the color signature of bracts. Panel a (30 May 14:55 CEST) shows young, still-expanding leaves with a lighter hue. Panel b (7 June 05:21 CEST) was taken in light drizzle and thin fog, which softened edges and brightened leaf margins. Panel c (16 July 18:07 CEST) captures late-season chlorosis and strong sun flecks that create bract-like patches. All three scenes illustrate how extreme illumination or physiological color changes outside the flowering window can deceive the classifier.

Overall, misclassifications are tied less to the neural architecture and more to transient imaging artifacts that suppress or imitate the fine-scale chromatic–textural cues of linden bracts. Future work should therefore explore (i) temporal smoothing (e.g., majority voting within a ±1-day window), (ii) color-constancy preprocessing, and (iii) explicit modeling of shading geometry to further reduce these edge-case errors.
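The temporal smoothing proposed in (i) could take the following form. This sketch rests on several assumptions: each image carries an acquisition timestamp (for example from EXIF metadata), labels are 0/1 model predictions, and ties keep the image's own prediction. The helper name is hypothetical.

```python
from datetime import datetime, timedelta


def smooth_predictions(records, window=timedelta(days=1)):
    """Replace each binary prediction with the majority vote within +/- window.

    `records` is a list of (timestamp, label) pairs with labels 0 or 1.
    """
    smoothed = []
    for t, own in records:
        votes = [lab for u, lab in records if abs(u - t) <= window]
        ones = sum(votes)
        zeros = len(votes) - ones
        # On a tie, keep the image's own prediction.
        smoothed.append(own if ones == zeros else int(ones > zeros))
    return smoothed


records = [(datetime(2022, 6, 21, 12, 0), 1), (datetime(2022, 6, 21, 16, 10), 0),
           (datetime(2022, 6, 22, 12, 0), 1)]
print(smooth_predictions(records))  # the isolated 0 is outvoted by its neighbours
```

The quadratic scan keeps the logic transparent. For long image series, a sliding window over timestamp-sorted records would be more efficient.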

3.2.5. Computation Time Analysis

A critical aspect of deploying deep learning models for automated phenological monitoring is their computational efficiency—both during training and inference. Computation time directly affects the scalability and practical applicability of proposed solutions, especially in scenarios involving large-scale environmental datasets or resource-constrained edge devices.

All model training, validation, and inference tasks described in this study were performed on a high-performance HPE ProLiant DL384 Gen12 server with the following hardware configuration:

Processors: 2 × NVIDIA Grace A02 CPUs, each with 72 cores (total 144 cores) at 3375 MHz, 72 threads per CPU.

GPUs: 2 × NVIDIA GH200 (144 GB HBM3e each).

Memory: 960 GB DDR5 (2 slots, 6400 MHz).

Storage: 2 × 1.92 TB SSD.

This server platform, equipped with state-of-the-art Grace Hopper Superchips and high-bandwidth HBM3e memory, enabled efficient parallel processing and reduced training times for all evaluated neural network architectures.

Table 11 summarizes the total computation times (in seconds) required for training, testing (inference on validation folds), and metrics calculation for each evaluated model. These times are aggregated across all five cross-validation folds to provide a realistic estimate of end-to-end resource consumption.

The results indicate substantial variability in computation time among the tested architectures. The fastest model in our benchmark was the Vision Transformer (ViT-B/16), which required a total of 1395 s for complete cross-validation training and evaluation. ConvNeXt Tiny (1586 s) and Swin-Tiny (1629 s) also achieved relatively fast overall times, highlighting the efficiency of modern transformer-based designs and of modernized convolutional architectures such as ConvNeXt. Classical convolutional networks such as ResNet50 and VGG16 required moderately longer total computation times (2022 s and 2051 s, respectively), but they still offer an attractive trade-off between accuracy and speed.

MobileNetV3 Large, although designed for efficiency on mobile and embedded platforms, required a total computation time of 2769 s, roughly 35% longer than ResNet50 and VGG16. A likely reason is its extensive use of depthwise separable convolutions. These reduce the number of arithmetic operations, but their low arithmetic intensity means that they tend to use GPU hardware less efficiently than standard dense convolutions.

EfficientNetB3 required the longest total time (4630 s). This likely reflects its deeper and wider compound-scaled architecture, which also relies on depthwise convolutions, and the correspondingly higher computational demands during training and backpropagation.

The observed differences in test (inference) times were much less pronounced and remained below 35 s for all architectures, suggesting that once trained, all models are suitable for real-time or near-real-time application in automated image pipelines.
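To give a sense of scale, the 35 s upper bound on total test time can be converted into an approximate per-image latency. The sketch assumes that each of the nearly 20,000 images is scored once across the five validation folds, as in standard k-fold cross-validation. It also assumes that the reported time covers inference only, because the text does not specify whether data loading is included.

```python
def per_image_ms(total_seconds, n_images):
    """Average inference time per image in milliseconds."""
    return 1000 * total_seconds / n_images


# Upper bound from the text (35 s) over roughly 20,000 images (approximate dataset size).
print(f"{per_image_ms(35, 20_000):.2f} ms per image")
```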