An End-to-End Computationally Lightweight Vision-Based Grasping System for Grocery Items

Abstract

1. Introduction

1.1. Related Work

1.1.1. Grasp Detection

1.1.2. Benchmarking

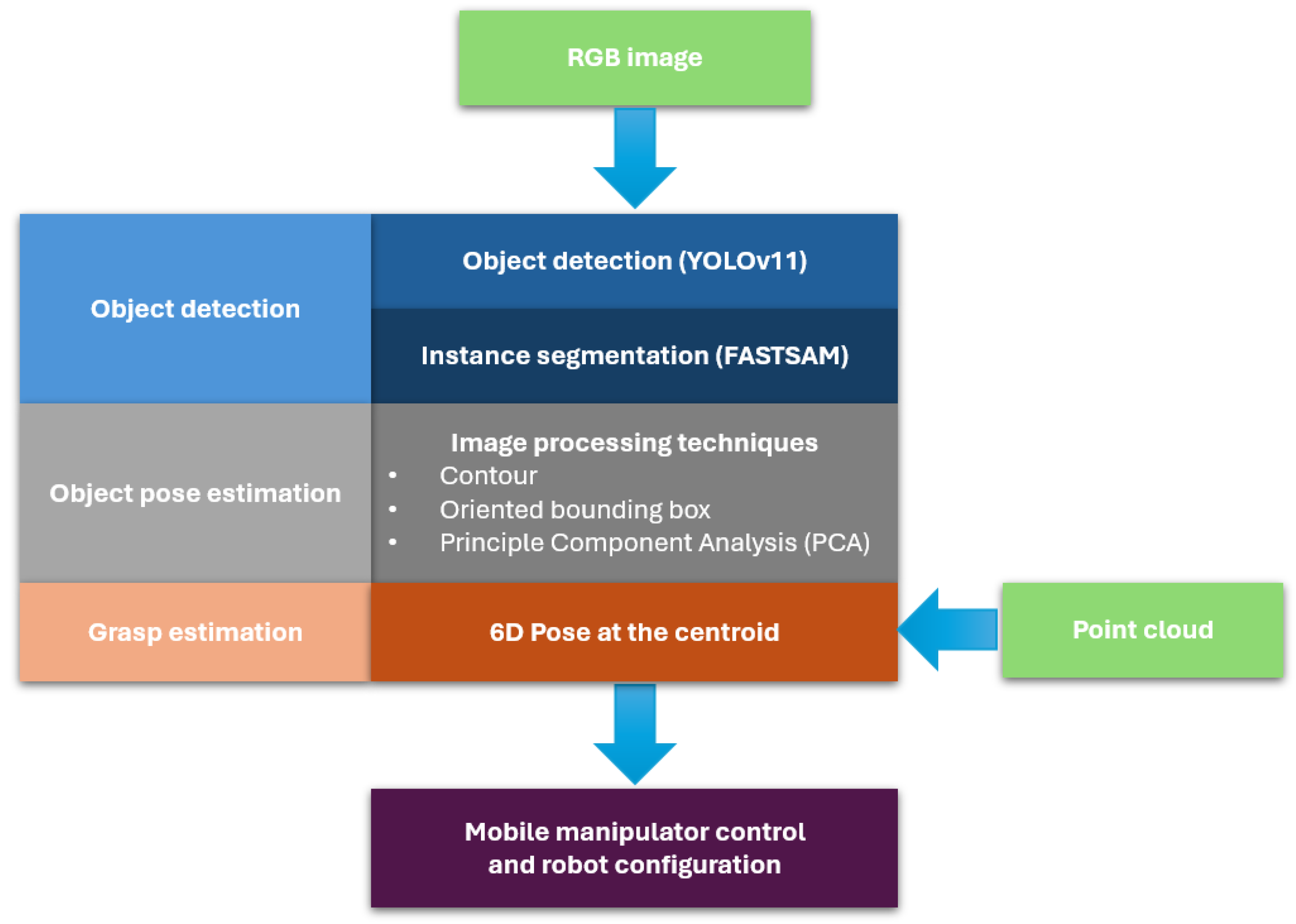

- Core Lightweight System Architecture: The essential modules of the proposed grasp detection system, from object detection to grasp estimation, were analyzed and defined. Each module was evaluated and optimized individually for both computational efficiency and a high success rate in real-world robotic grasping tasks. The overall system architecture and its core functions are presented in detail.

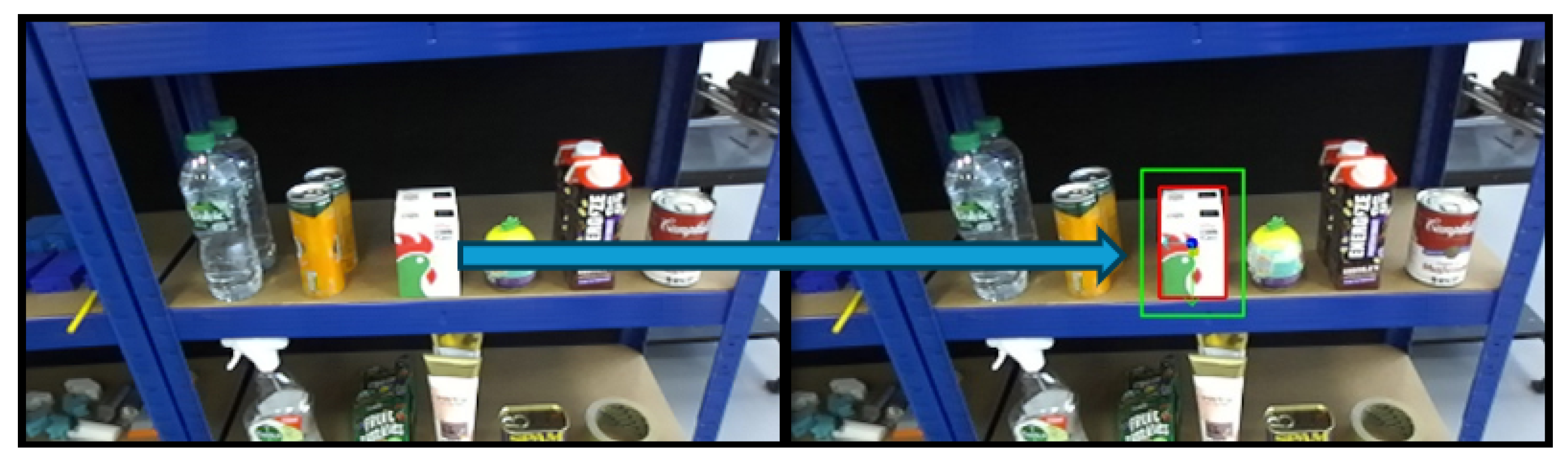

- Improved Traditional Object Pose Estimation Method: An efficient pose estimation method based on traditional image processing techniques was developed to avoid the complexity and computational demands typically associated with complex networks. The method predicts the pose of unseen objects precisely, without requiring datasets or prior grasping knowledge. It can also be combined with various object detection algorithms, which makes it applicable across different domains.

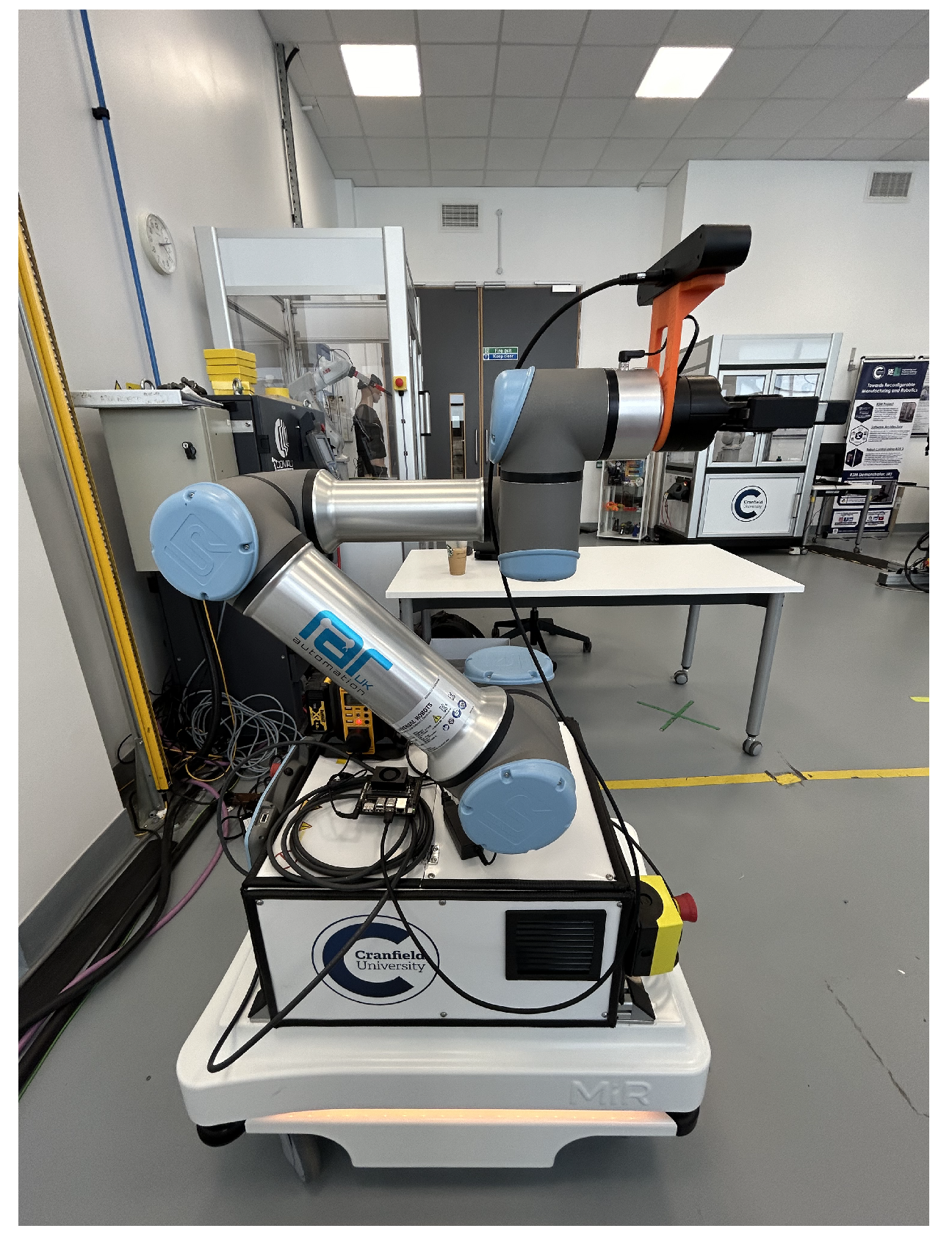

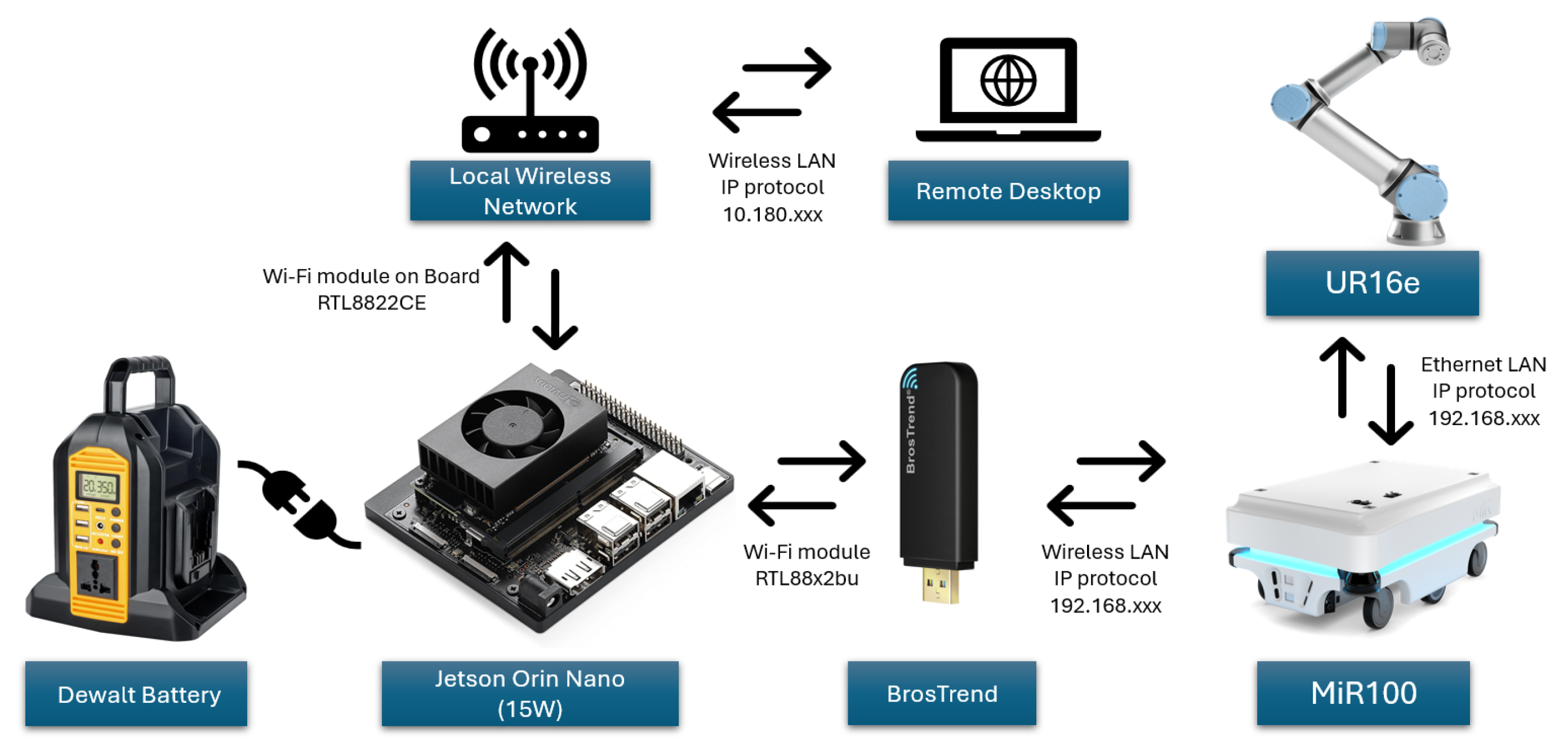

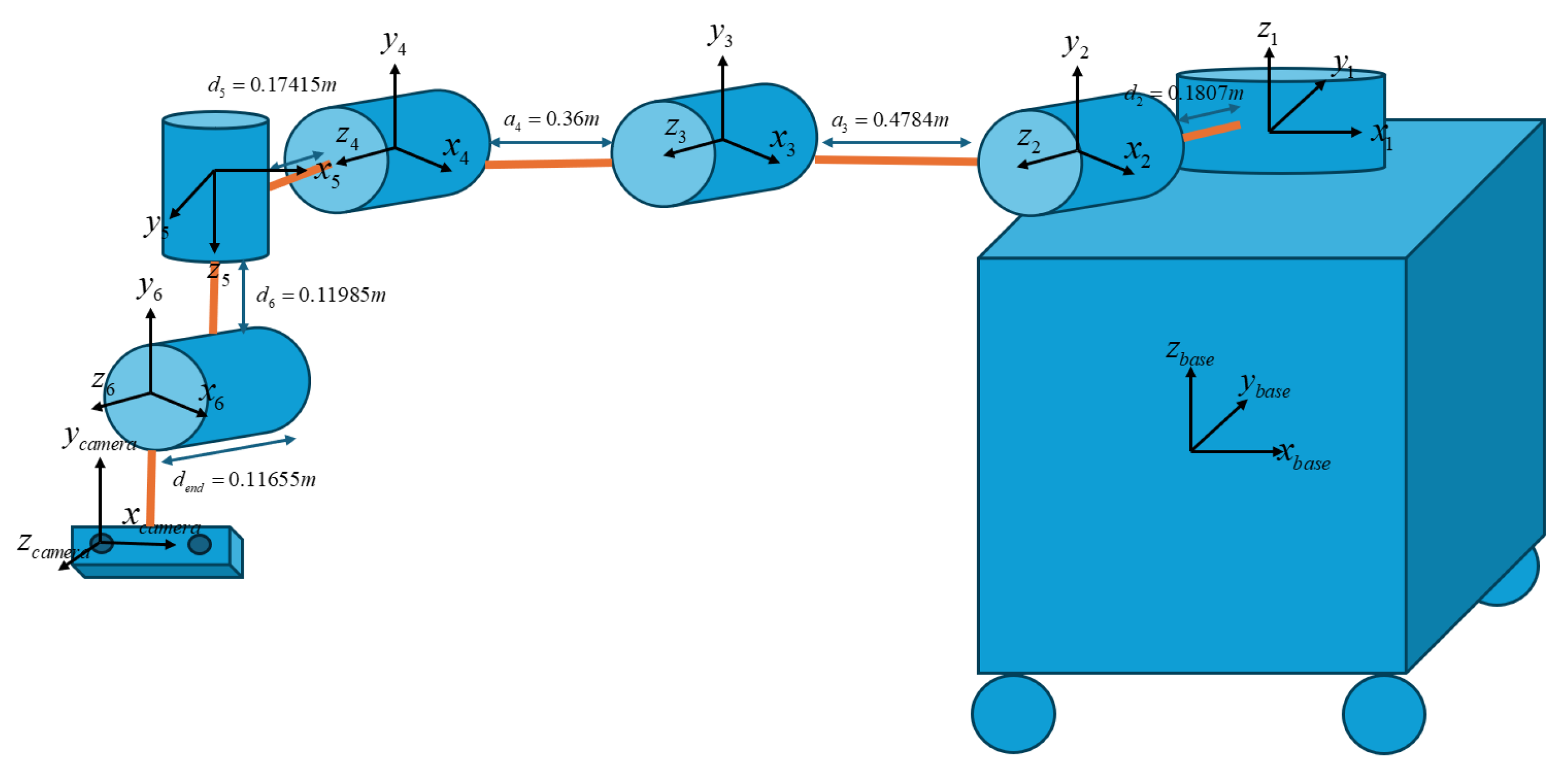

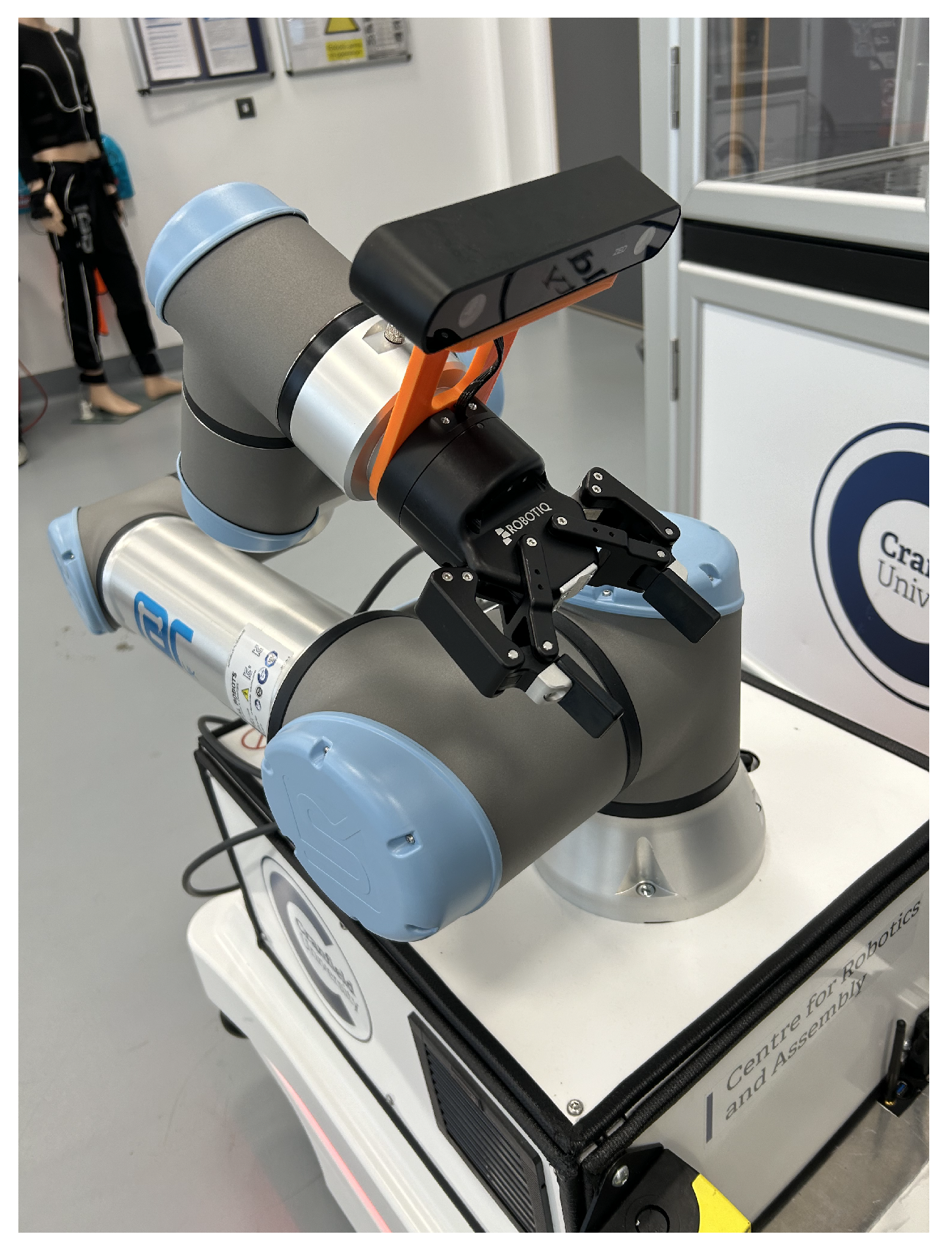

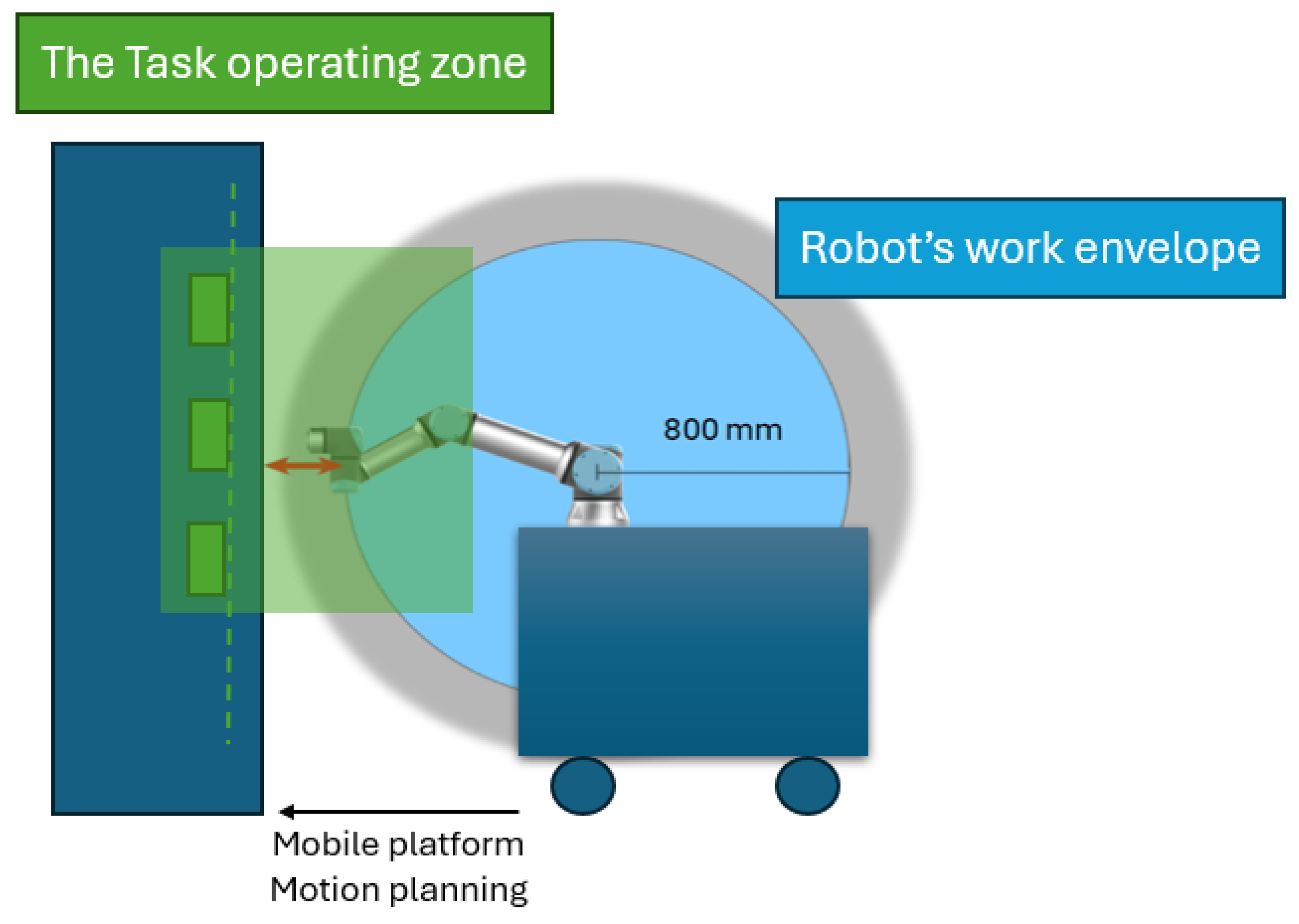

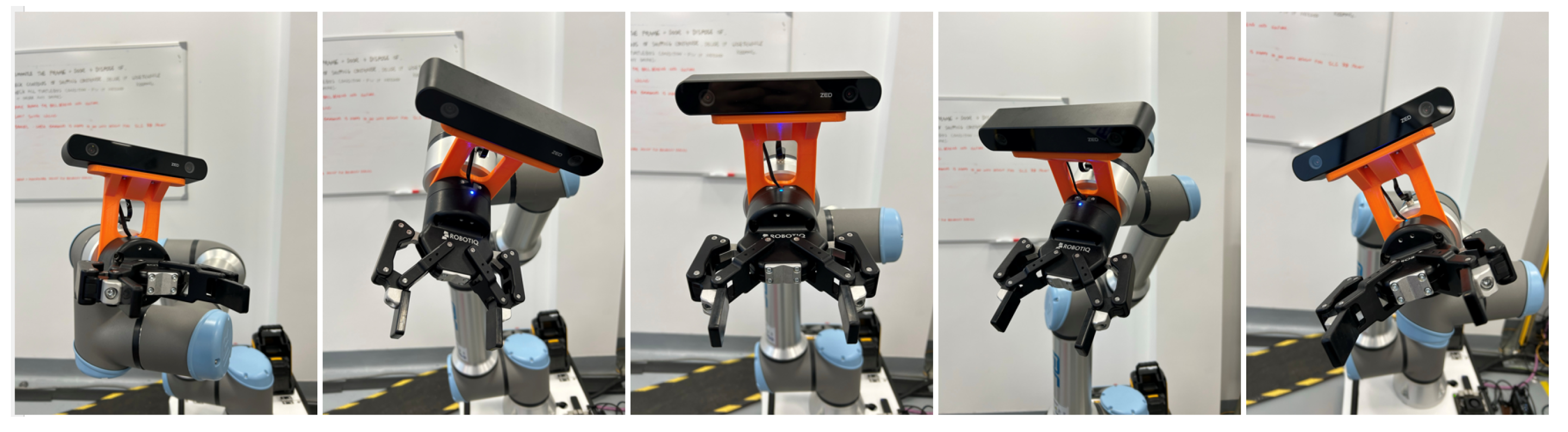

- Kinematic Model and Control of a Mobile Manipulator: A complete calculation model of the mobile manipulator system was derived, including the transformation from the camera frame to the robot frame. The robotic arm (UR16e) was mounted on a mobile platform (MiR100), and a unified motion control framework was developed. This framework integrates forward and inverse kinematics to coordinate both joint positioning and end-effector orientation (roll, pitch, and yaw), enabling precise robot operation.

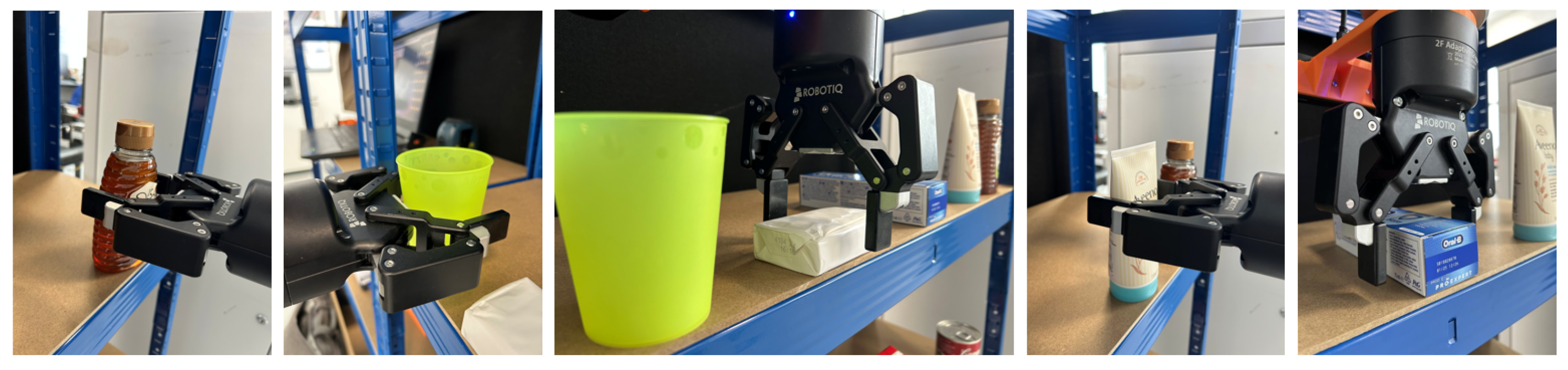

- Dataset and Benchmark for Grocery Pick-and-Pack Tasks: A dedicated dataset and benchmark for vision-based grasping of grocery items in real-world environments were created and made publicly available. The object detection dataset includes realistic items commonly found in supermarkets. The benchmark framework supports evaluation across diverse robot and gripper configurations and uses standardized metrics such as individual success rate, average success rate, processing time, and the compute-to-speed ratio. These metrics offer a unified and reproducible means of validating system performance and enabling fair comparisons with related works.

2. Proposed Methodology

2.1. An End-to-End Grasp Detection System

2.2. Comprehensive Core Modules

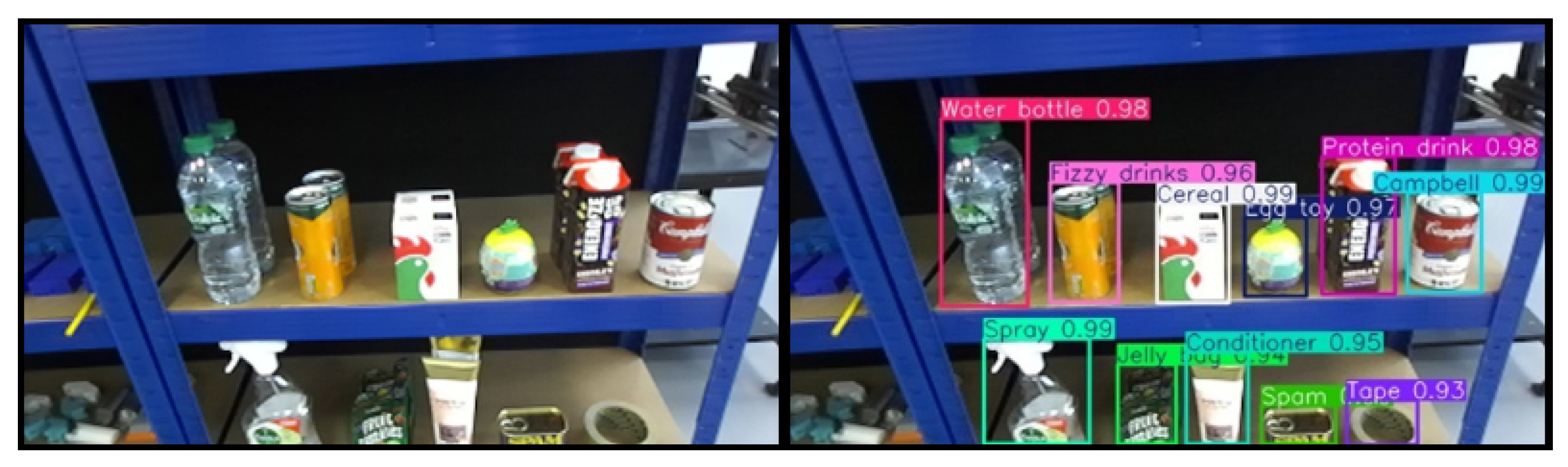

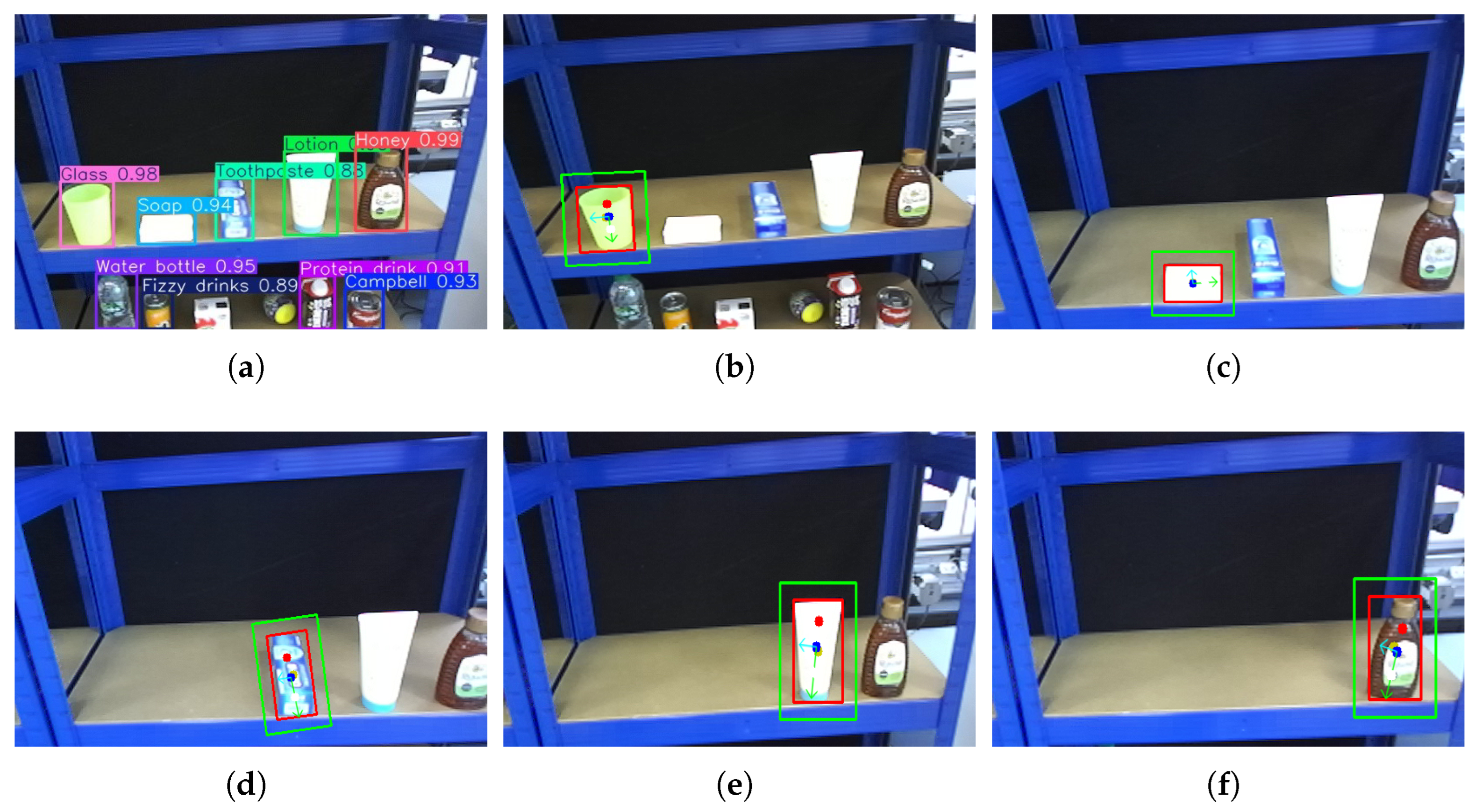

2.2.1. Object Detection, YOLOv11

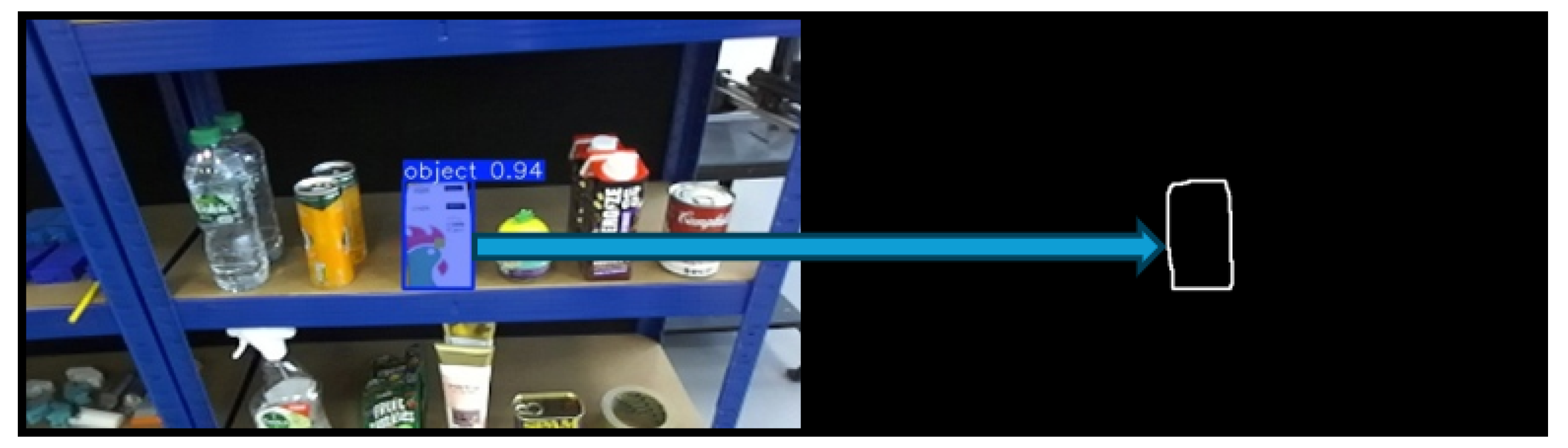

2.2.2. Object Segmentation

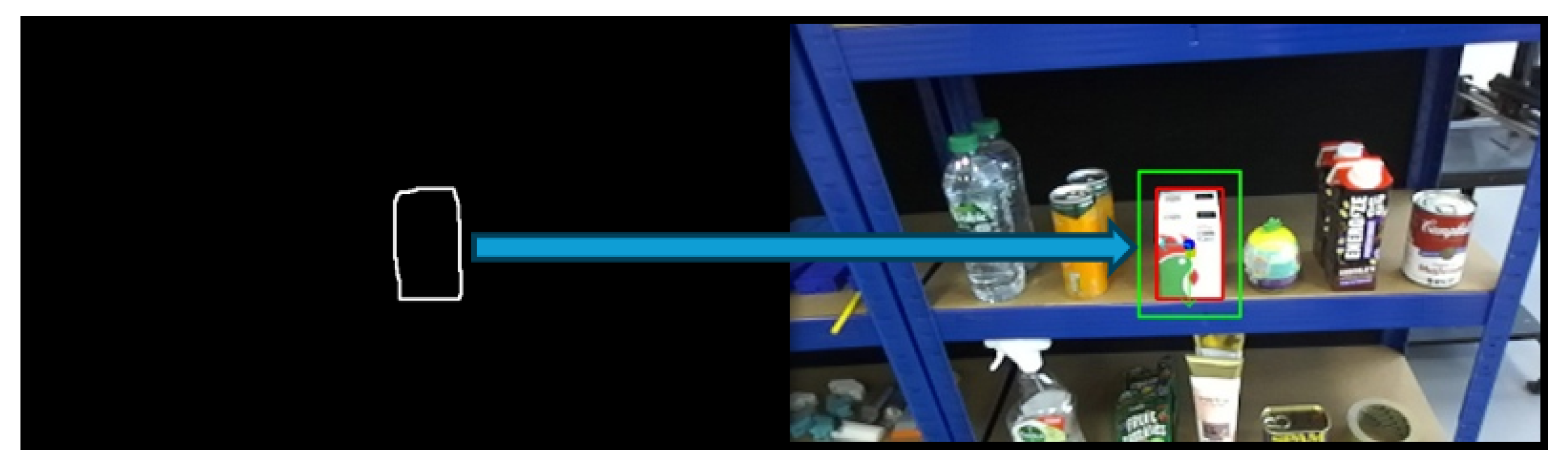

2.2.3. Image Processing and Principal Component Analysis (PCA)

- PCA for object pose estimation

- Centroid Calculation:

- Covariance Matrix:

- Eigen Decomposition:

- Orientation Angle:
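The four PCA steps listed above (centroid, covariance matrix, eigen decomposition, orientation angle) can be illustrated with a minimal NumPy sketch. It assumes the input is a binary segmentation mask of a single object. The function name `pca_orientation` and the angle convention (radians, measured from the image x-axis, folded into (-π/2, π/2]) are illustrative choices and are not taken from the paper.

```python
import numpy as np


def pca_orientation(mask):
    """Estimate the centroid and in-plane orientation of a binary object mask.

    Hypothetical helper for illustration; not the authors' implementation.
    Returns (centroid_xy, angle_rad), where angle_rad is the direction of the
    major axis in image coordinates (x to the right, y downward).
    """
    # Collect the (x, y) coordinates of all foreground pixels.
    ys, xs = np.nonzero(mask)
    pts = np.column_stack((xs, ys)).astype(float)
    if len(pts) < 2:
        raise ValueError("mask must contain at least two foreground pixels")

    # Step 1: centroid of the pixel cloud.
    centroid = pts.mean(axis=0)

    # Step 2: 2x2 covariance matrix of the centered coordinates.
    centered = pts - centroid
    cov = centered.T @ centered / (len(pts) - 1)

    # Step 3: eigen decomposition (eigh is intended for symmetric matrices).
    eigvals, eigvecs = np.linalg.eigh(cov)
    major_axis = eigvecs[:, np.argmax(eigvals)]

    # Step 4: orientation angle of the major axis.
    angle = np.arctan2(major_axis[1], major_axis[0])

    # An axis has no direction, so v and -v are equivalent: fold the angle.
    if angle > np.pi / 2:
        angle -= np.pi
    elif angle <= -np.pi / 2:
        angle += np.pi
    return centroid, angle


if __name__ == "__main__":
    # Synthetic horizontal bar: the major axis should lie along the x-axis.
    demo = np.zeros((20, 40), dtype=np.uint8)
    demo[8:12, 5:35] = 1
    c, a = pca_orientation(demo)
    print("centroid:", c, "angle (deg):", np.degrees(a))
```

Because the eigenvector sign is arbitrary, the sketch folds the angle into a half-turn range; a parallel gripper is symmetric under a 180° rotation, so this loses no information for that gripper type.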

2.3. Fallback Strategy

2.4. The Design and Calculation Model of a Mobile Manipulator

- Yaw Control: Rotation Matrix Around the Z-axis:

- Pitch Control: Rotation Matrix Around the Y-axis:

- Roll Control: Rotation Matrix Around the X-axis:

- Robot Rotation Vector to Rotation Matrix:

- Composed Rotation:

- Rotation Matrix to Axis–Angle Vector:
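The orientation steps listed above can be sketched in NumPy as follows. The elementary rotations and the conversion between a rotation vector (axis multiplied by angle) and a rotation matrix use the standard Rodrigues formula. The composition order in `apply_rpy_offset` (yaw, then pitch, then roll, applied in the base frame before the current orientation) is an assumption made for illustration, as are all function names; the paper's own convention may differ.

```python
import numpy as np


def rot_z(yaw):
    """Rotation matrix for a rotation of `yaw` radians about the z-axis."""
    c, s = np.cos(yaw), np.sin(yaw)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def rot_y(pitch):
    """Rotation matrix for a rotation of `pitch` radians about the y-axis."""
    c, s = np.cos(pitch), np.sin(pitch)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_x(roll):
    """Rotation matrix for a rotation of `roll` radians about the x-axis."""
    c, s = np.cos(roll), np.sin(roll)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rotvec_to_matrix(rvec):
    """Rodrigues formula: rotation vector (axis * angle) to rotation matrix."""
    rvec = np.asarray(rvec, dtype=float)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    k = rvec / theta
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def matrix_to_rotvec(R):
    """Rotation matrix to rotation vector, including the angle-near-pi case."""
    R = np.asarray(R, dtype=float)
    cos_theta = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    theta = np.arccos(cos_theta)
    if theta < 1e-12:
        return np.zeros(3)
    # Antisymmetric part of R equals 2 * sin(theta) * axis.
    v = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    if np.pi - theta < 1e-6:
        # sin(theta) is close to zero: recover the axis from the symmetric part,
        # which equals cos(theta) * I + (1 - cos(theta)) * axis * axis^T.
        outer = ((R + R.T) / 2.0 - cos_theta * np.eye(3)) / (1.0 - cos_theta)
        i = int(np.argmax(np.diag(outer)))
        axis = outer[:, i] / np.sqrt(outer[i, i])
        if np.dot(axis, v) < 0.0:
            axis = -axis
    else:
        axis = v / (2.0 * np.sin(theta))
    return theta * axis


def apply_rpy_offset(current_rvec, roll=0.0, pitch=0.0, yaw=0.0):
    """Compose a roll/pitch/yaw offset with the current tool orientation.

    Assumed order: R_new = Rz(yaw) @ Ry(pitch) @ Rx(roll) @ R_current.
    """
    r_offset = rot_z(yaw) @ rot_y(pitch) @ rot_x(roll)
    return matrix_to_rotvec(r_offset @ rotvec_to_matrix(current_rvec))


if __name__ == "__main__":
    # Tool pointing straight down (rotation of pi about x), then yawed by 30 deg.
    print(apply_rpy_offset([np.pi, 0.0, 0.0], yaw=np.radians(30.0)))
```

The separate branch for angles close to π matters in practice, since a downward-pointing tool is commonly expressed as a rotation of exactly π about a horizontal axis, where the usual antisymmetric-part formula divides by a value close to zero.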

2.5. Benchmark Methodology

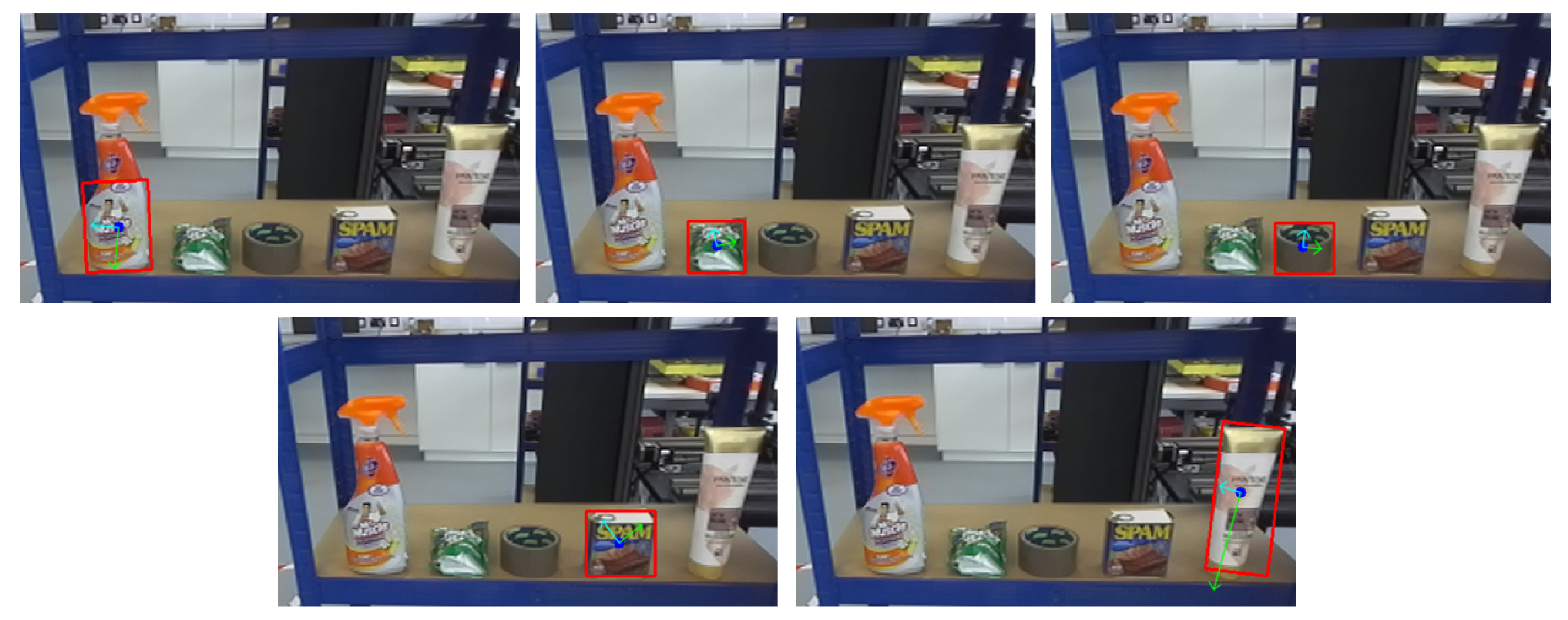

- Level 1: Basic Geometry and Upright Pose

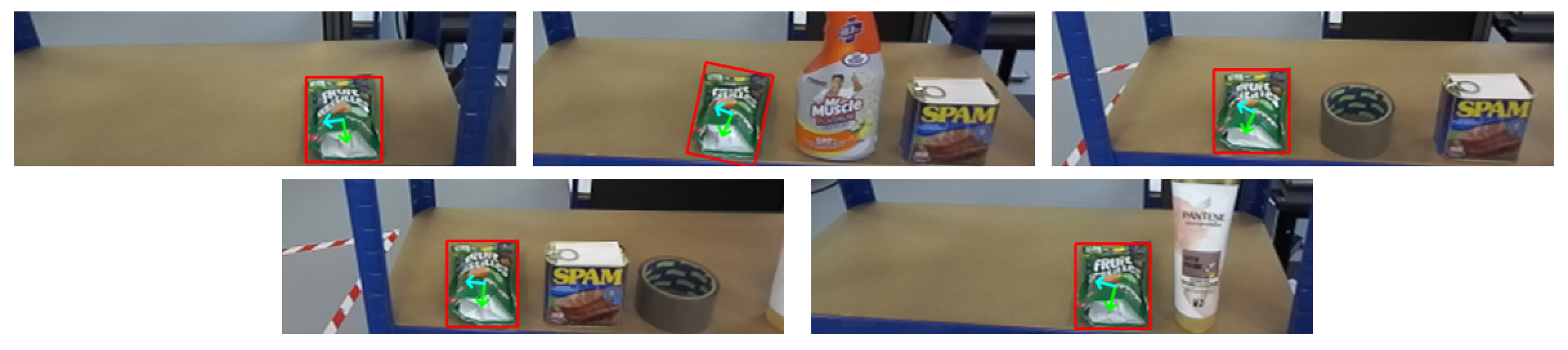

- Level 2: Mixed Poses and Complex Shapes

- Level 3: Grasp Constraints and Affordance Awareness

3. Experiments and Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Link i | | | | |
|---|---|---|---|---|
| 1 | 0 | 0 | 0 | |
| 2 | 0 | 0.1807 | | |
| 3 | −0.4784 | 0 | 0 | |
| 4 | −0.36 | 0 | 0 | |
| 5 | 0 | 0.17415 | | |
| 6 | 0 | − | 0.11985 | |

| Grocery Items | Weight (g) | Execution Times (s) | Average Time (s) | Success Rate | Power Consumption (W) | CSR |
|---|---|---|---|---|---|---|
| Water bottle | 526 | 4.40, 4.19, 4.64, 4.51, 4.41 | 4.430 | 100% (5/5) | 17 | 0.0133 |
| Sparkling can | 268 | 4.73, 4.33, 4.47, 4.47, 4.43 | 4.486 | 100% (5/5) | 17 | 0.0131 |
| Cereal | 32 | 4.48, 4.62, 4.47, 4.39, 4.44 | 4.480 | 100% (5/5) | 17 | 0.0131 |
| Egg-shaped toy | 53 | 4.27, 4.95, 4.46, 4.21, 4.55 | 4.488 | 100% (5/5) | 17 | 0.0131 |
| Protein drink | 387 | 5.65, 4.63, 4.24, 4.88, 4.36 | 4.752 | 100% (5/5) | 17 | 0.0124 |
| Campbell | 337 | 4.49, 4.30, 4.33, 4.31, 4.34 | 4.354 | 100% (5/5) | 17 | 0.0135 |

| Grocery Items | Weight (g) | Execution Times (s) | Average Time (s) | Success Rate | Power Consumption (W) | CSR |
|---|---|---|---|---|---|---|
| Glass | 17 | 4.75, 4.36, 4.60, 4.38, 4.58 | 4.534 | 100% (5/5) | 17 | 0.0130 |
| Soap | 95 | 5.14, 4.96, 4.38, 4.37, 4.43 | 4.656 | 100% (5/5) | 17 | 0.0126 |
| Toothpaste | 130 | 4.24, 4.45, 5.55, -, 4.78 | 4.755 | 80% (4/5) | 17 | 0.0124 |
| Lotion | 167 | 4.38, 4.47, 4.58, 5.00, 4.56 | 4.598 | 100% (5/5) | 17 | 0.0128 |
| Honey | 363 | 4.31, 4.83, 4.29, 4.52, 4.76 | 4.542 | 100% (5/5) | 17 | 0.0130 |
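The per-item figures in the two result tables can be recomputed with a short sketch. The CSR formula is not stated in this part of the text. The tabulated values are, however, consistent with CSR = 1 / (P × t_avg), where P is the 17 W figure and t_avg is the mean execution time over successful trials, so the code below adopts that relation as an assumption. The function name `summarize_trials` and the use of `None` for a failed trial are illustrative.

```python
def summarize_trials(times_s, power_w):
    """Return (average time, success rate, CSR) for one grocery item.

    times_s: execution times in seconds, with None marking a failed trial.
    power_w: power draw in watts (17 W in the tables above).
    CSR is computed as 1 / (power_w * average_time), a relation inferred
    from the tabulated values rather than quoted from the paper.
    """
    completed = [t for t in times_s if t is not None]
    if not completed:
        raise ValueError("at least one successful trial is required")
    average_time = sum(completed) / len(completed)
    success_rate = len(completed) / len(times_s)
    csr = 1.0 / (power_w * average_time)
    return average_time, success_rate, csr


if __name__ == "__main__":
    # Rows taken from the tables above; the toothpaste row has one failed trial.
    rows = {
        "Water bottle": [4.40, 4.19, 4.64, 4.51, 4.41],
        "Toothpaste": [4.24, 4.45, 5.55, None, 4.78],
    }
    for item, times in rows.items():
        avg, rate, csr = summarize_trials(times, power_w=17)
        print(f"{item}: avg={avg:.3f} s, success={rate:.0%}, CSR={csr:.4f}")
```

Under this assumed relation, a higher CSR corresponds to a shorter average execution time at the same power draw, which matches the ordering of the rows in both tables.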

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mansakul, T.; Tang, G.; Webb, P.; Rice, J.; Oakley, D.; Fowler, J. An End-to-End Computationally Lightweight Vision-Based Grasping System for Grocery Items. Sensors 2025, 25, 5309. https://doi.org/10.3390/s25175309