3.1. Overview

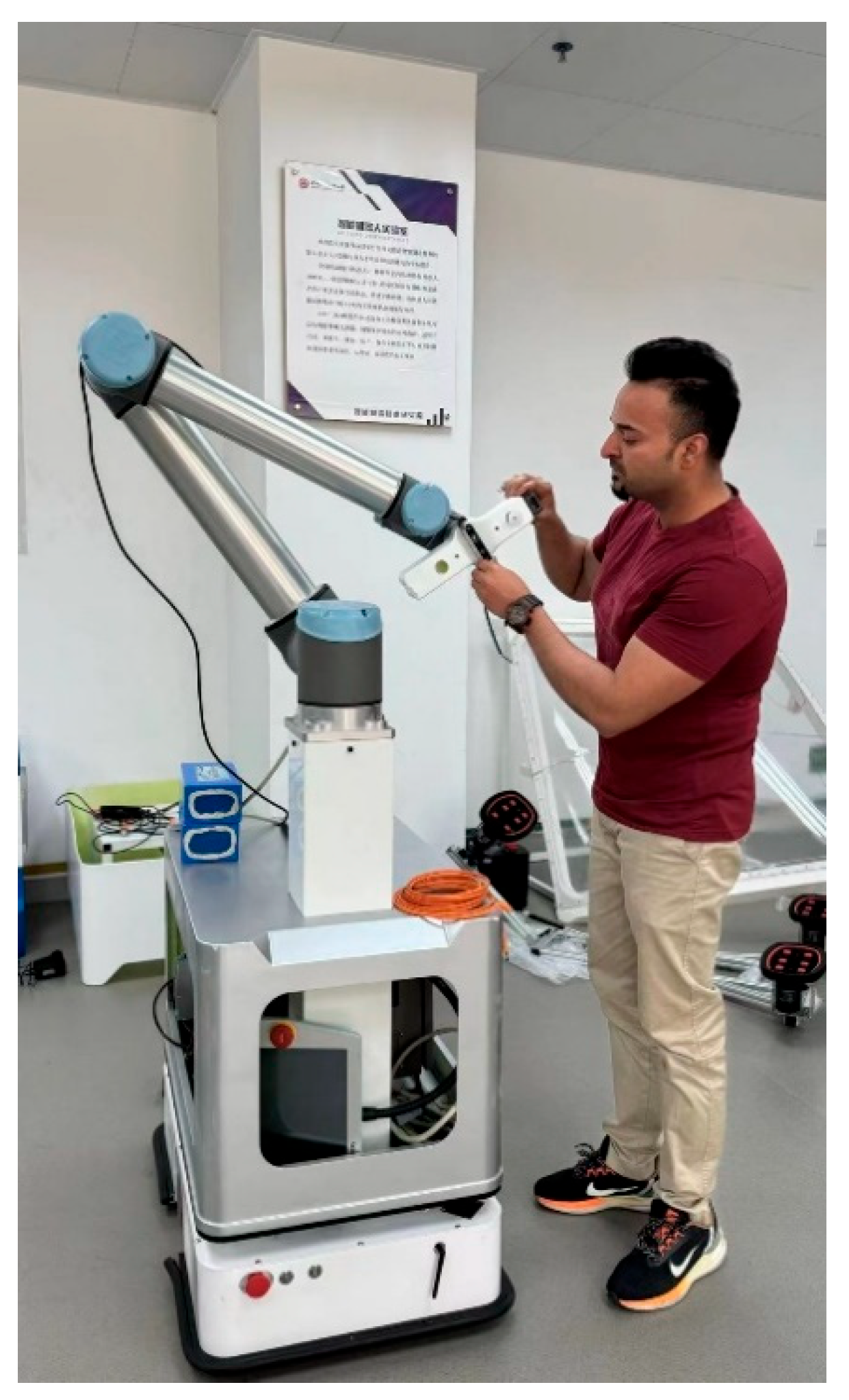

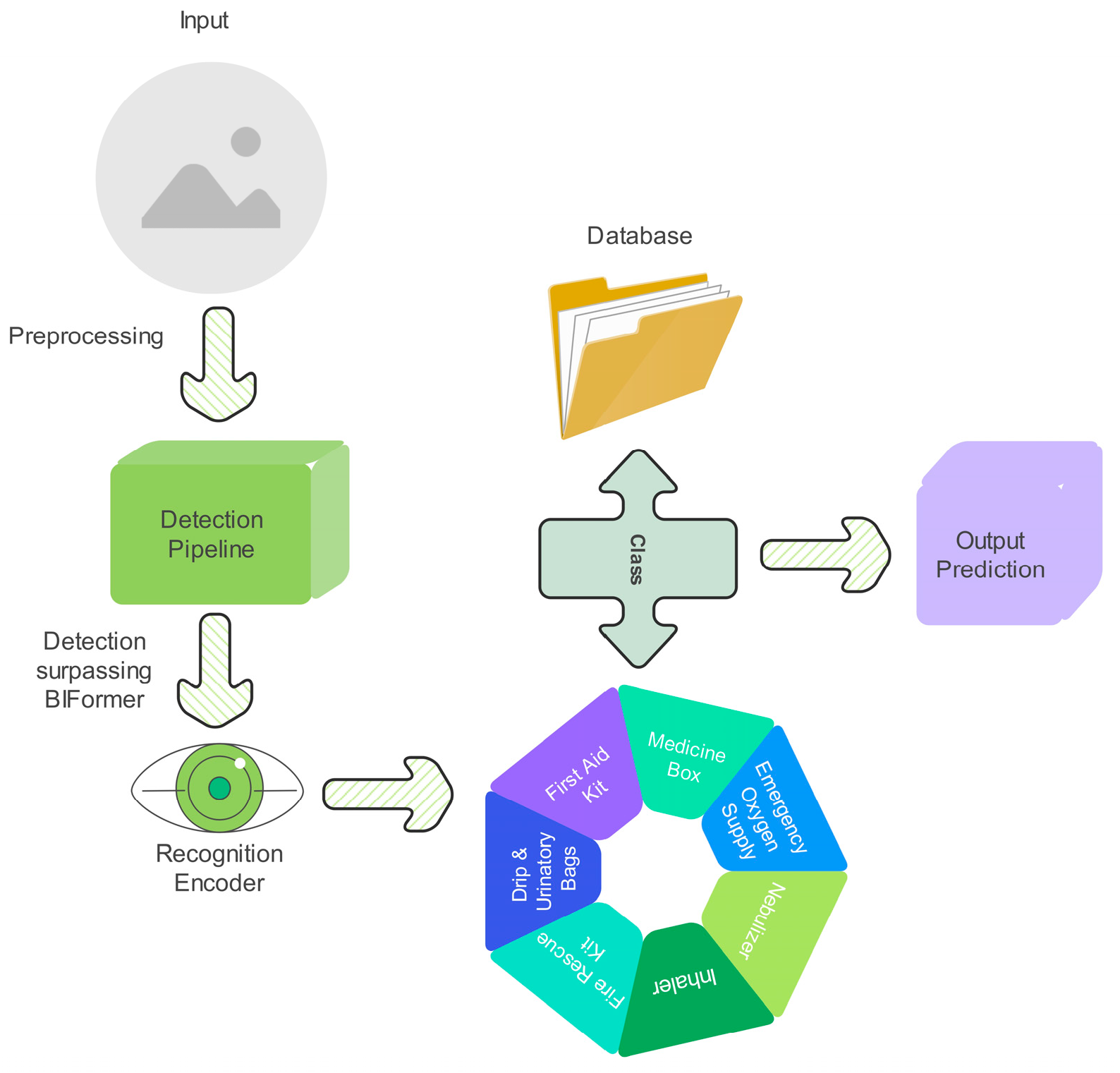

The proposed model is designed for an autonomous healthcare mobile robot that detects and recognizes medicines and medical supplies on healthcare shelves using a two-stage deep learning framework, as shown in Figure 2. The pipeline processes input image frames in real time in four steps: preprocessing, object detection with EfficientDet, feature refinement with BiFormer, and fine-grained classification with ResNet-18 trained using triplet loss and online hard negative mining (OHNM). The goal is to accurately localize, distinguish, and recognize medical supplies and medicines in cluttered environments where objects exhibit occlusion, visual similarity, and orientation variation. The system captures high-resolution image frames from the robot's onboard camera. These frames are resized, normalized, and preprocessed before being passed to the EfficientDet-based object detection module. Given an input image $I \in \mathbb{R}^{H \times W \times C}$, EfficientDet extracts multi-scale feature representations using its Bidirectional Feature Pyramid Network (BiFPN) and compound scaling, which improves localization precision for small and occluded objects. The model outputs bounding box predictions $\{(x_i, y_i, w_i, h_i, c_i)\}_{i=1}^{N}$. Here, $(x_i, y_i)$ are the bounding box coordinates, $(w_i, h_i)$ are the bounding box width and height, and $c_i$ is the class confidence score. Conventional CNN-based detectors process fixed feature maps. BiFPN instead enables bidirectional feature fusion, computed as:

$$P_i^{td} = \mathrm{Conv}\left(\frac{w_1 \cdot P_i^{in} + w_2 \cdot \mathrm{Resize}\left(P_{i+1}^{td}\right)}{w_1 + w_2 + \epsilon}\right) \tag{1}$$

where $P_i^{in}$ and $P_{i+1}^{td}$ are feature maps from different network stages. The terms $w_1$ and $w_2$ are learnable fusion weights that let the network adaptively weight the importance of each input, and $\epsilon$ is a small constant for numerical stability. $\mathrm{Resize}(\cdot)$ upsamples the feature map from the adjacent coarser level. Once objects are detected, their region proposals are passed to the BiFormer-based feature refinement module. Standard CNNs suffer from feature redundancy and spatial misalignment, especially in healthcare shelf environments where objects differ only slightly in their labels. BiFormer improves classification by applying bi-level routing attention, which selectively attends to discriminative spatial features. Given input features $X$, this attention mechanism filters out irrelevant features and makes classification more robust in cluttered scenes.

Finally, the refined feature maps are fed into the ResNet-18-based classification module, which learns a discriminative embedding space for fine-grained recognition. Instead of cross-entropy loss, the system uses triplet loss for metric learning. This keeps medicines with similar packaging but different shapes or sizes separable in feature space. The triplet loss function is formulated as:

$$\mathcal{L}_{triplet} = \sum_{i=1}^{N} \max\left(0,\; d\left(f(x_i^a), f(x_i^p)\right) - d\left(f(x_i^a), f(x_i^n)\right) + \alpha\right) \tag{2}$$

where $N$ is the number of training samples. The samples $x_i^a$, $x_i^p$, and $x_i^n$ are, respectively, the anchor (query medicine image), the positive sample (same category as the anchor), and the negative sample (different category). $f(\cdot)$ is the embedding network. The terms $d(f(x_i^a), f(x_i^p))$ and $d(f(x_i^a), f(x_i^n))$ are Euclidean distances in the embedding space, which keep similar samples close while separating dissimilar ones. Finally, $\alpha$ is a fixed positive margin that enforces a minimum gap between the anchor-positive and anchor-negative distances, encouraging discriminative feature separation.

Additionally, OHNM is used to prioritize difficult negative samples, further improving classification accuracy. The recognized objects are mapped back to their bounding boxes and integrated into the robot's inventory management system. This updates the healthcare facility's databases for automated medicine tracking and retrieval. The model achieves over 20 FPS on a Jetson AGX Orin, enabling real-time deployment on the healthcare mobile robot. This deep learning-driven automation improves efficiency and reduces reliance on manual inventory work.

3.2. Dataset Collection and Preprocessing

To ensure the robustness and generalization of the proposed healthcare mobile robot's vision-based detection and recognition system, a custom dataset was carefully curated. It comprises high-resolution images of medicine boxes and medical supplies, including first aid kits, emergency oxygen supplies, inhalers, nebulizers, fire rescue kits, and drip and urinary bags. All images were captured under diverse real-world conditions, as shown in Figure 3 and Figure 4.

Publicly available datasets often lack the occlusion-heavy, cluttered, and dynamically arranged shelf images found in real healthcare environments. This dataset was therefore self-collected to simulate healthcare storage conditions. It was compiled with a multi-camera setup to provide diverse viewpoints suited to real-time robotic vision. Acquisition was carried out on healthcare-style shelves in various settings and yielded more than 5000 images in total (after data augmentation) of different medicines and medical supplies. The images cover three groups of conditions:

- different lighting conditions (natural daylight, artificial healthcare-facility lighting, and low-light emergency-room environments);
- varying shelf arrangements (stacked medicines, randomly placed medical supplies, and rotated boxes);
- occlusion and overlap cases that simulate cluttered real-world healthcare settings.

Each medicine or medical supply was captured from multiple angles to support robust feature learning. This allows the system to recognize objects even when they are only partially visible.

Table 1 summarizes the dataset metadata (resolutions, per-class counts, and camera specifications).

The dataset was manually annotated with bounding boxes and class labels, providing precise ground truth for EfficientDet-based object detection and ResNet-based fine-grained recognition. Formally, the dataset is defined as a set of labeled image samples:

$$\mathcal{D} = \{(I_j, y_j)\}_{j=1}^{N}$$

where $N$ is the number of samples and $I_j \in \mathbb{R}^{H \times W \times C}$ is an image sample of height $H$, width $W$, and $C$ channels. The label $y_j$ is the corresponding object category for supervised training. Before training, all collected images passed through a standardized preprocessing pipeline. Its purpose is to ensure uniformity, reduce noise, and support feature extraction across different lighting and occlusion conditions. The preprocessing steps are as follows:

1. Image Resizing and Normalization: All images are resized to a fixed 1024 × 1024 pixels to match the input requirements of EfficientDet and ResNet-18. Pixel values are scaled to the [0, 1] range using min-max normalization, which stabilizes gradient updates during training:

$$I_{norm} = \frac{I - I_{min}}{I_{max} - I_{min}}$$

2. Data Augmentation and Illumination: Images are randomly rotated within a fixed angular range, flipped (horizontally and vertically), and cropped to simulate variations in real-world medicine shelf orientations. Brightness and contrast adjustments are then applied as

$$I_{aug} = \lambda I + \delta, \quad \lambda \sim \mathcal{U}(a_{\lambda}, b_{\lambda}), \quad \delta \sim \mathcal{U}(a_{\delta}, b_{\delta})$$

where $\lambda$ (contrast) and $\delta$ (brightness) are sampled from uniform distributions $\mathcal{U}(a, b)$, with $a$ and $b$ the lower and upper bounds of each range. This helps the model adapt to the different lighting scenarios found in healthcare environments.

3. Noise Injection: Gaussian noise with variance $\sigma^2$ is added to simulate the sensor noise encountered during robotic navigation:

$$I_{noisy} = I + \eta, \quad \eta \sim \mathcal{N}(0, \sigma^2)$$

4. Bounding Box Refinement for EfficientDet: To improve object detection, bounding boxes are refined using Intersection over Union (IoU)-based filtering. This discards low-confidence annotations and yields accurate region proposals:

$$\mathrm{IoU}(B_1, B_2) = \frac{|B_1 \cap B_2|}{|B_1 \cup B_2|}$$

where $B_1$ and $B_2$ are overlapping bounding boxes.

5. Hard Sample Mining for Recognition Training: Difficult-to-classify images are prioritized in mini-batches so that ResNet-18 learns fine-grained details more effectively. Examples include medicines and medical supplies that differ in size but show only minor visual differences.
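The preprocessing steps above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' pipeline. The contrast and brightness bounds, the noise level `sigma`, and the duplicate-box threshold are assumed values, since the paper does not state them. `drop_duplicate_boxes` is a hypothetical reading of "IoU-based filtering": it drops annotations that almost completely overlap one already kept.

```python
import numpy as np


def min_max_normalize(img: np.ndarray) -> np.ndarray:
    """Scale pixel values to [0, 1]: (I - I_min) / (I_max - I_min)."""
    img = img.astype(np.float32)
    lo, hi = float(img.min()), float(img.max())
    if hi == lo:  # constant image: avoid division by zero
        return np.zeros_like(img)
    return (img - lo) / (hi - lo)


def jitter_brightness_contrast(img, rng, contrast=(0.8, 1.2), brightness=(-0.1, 0.1)):
    """I' = lam * I + delta with lam ~ U(contrast), delta ~ U(brightness).
    The bounds are illustrative assumptions, not values from the paper."""
    lam = rng.uniform(*contrast)
    delta = rng.uniform(*brightness)
    return np.clip(lam * img + delta, 0.0, 1.0)


def add_gaussian_noise(img, rng, sigma=0.02):
    """Additive zero-mean Gaussian noise with std sigma (variance sigma**2); sigma is assumed."""
    return np.clip(img + rng.normal(0.0, sigma, size=img.shape), 0.0, 1.0)


def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2) corner coordinates."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def drop_duplicate_boxes(boxes, thresh=0.9):
    """Hypothetical IoU filter: keep a box only if it does not overlap an
    already-kept box by more than `thresh` (e.g. a duplicated annotation)."""
    kept = []
    for box in boxes:
        if all(iou(box, k) <= thresh for k in kept):
            kept.append(box)
    return kept
```

Rotation, flipping, and cropping are omitted here. They are usually delegated to an augmentation library that also transforms the bounding boxes consistently with the image.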

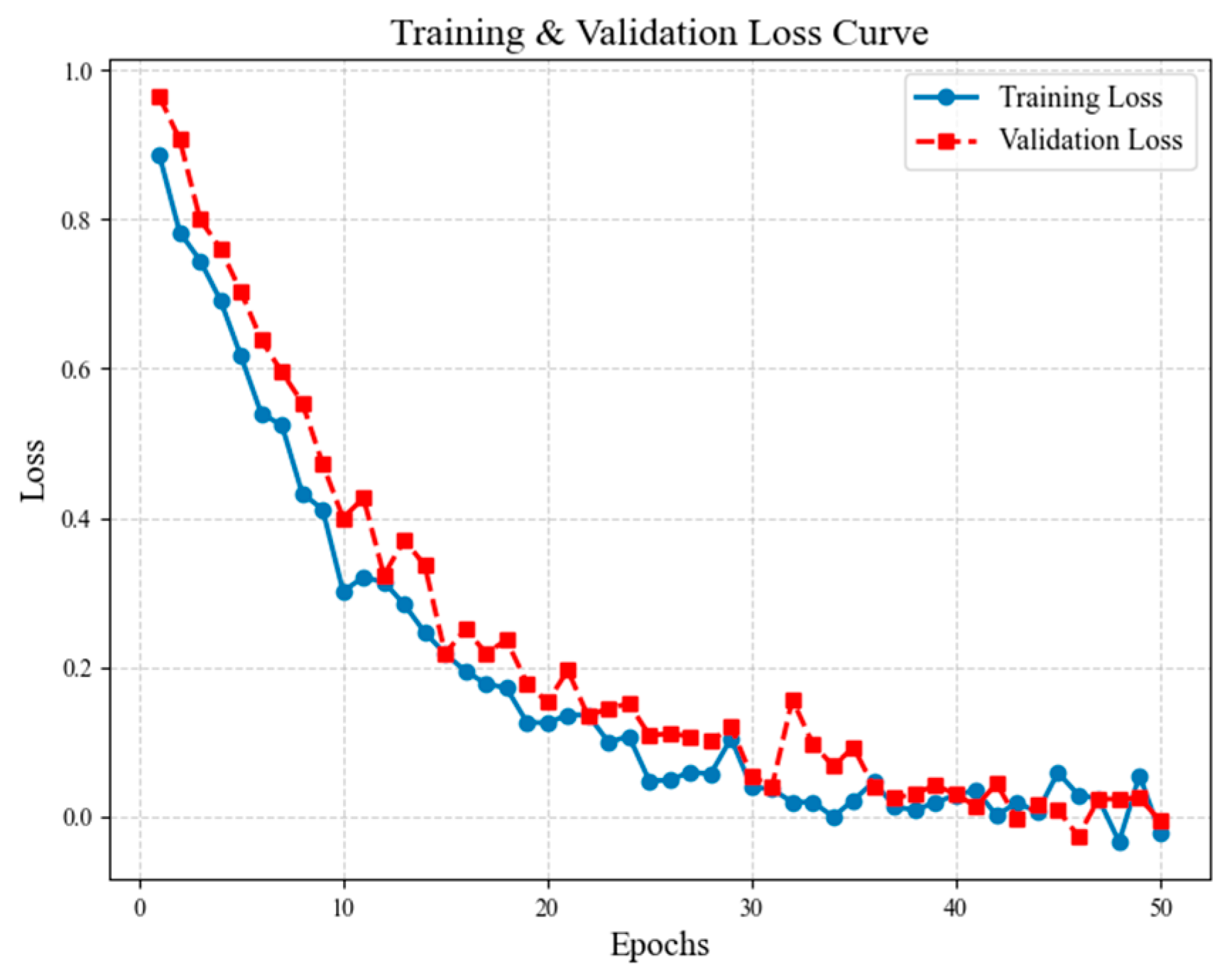

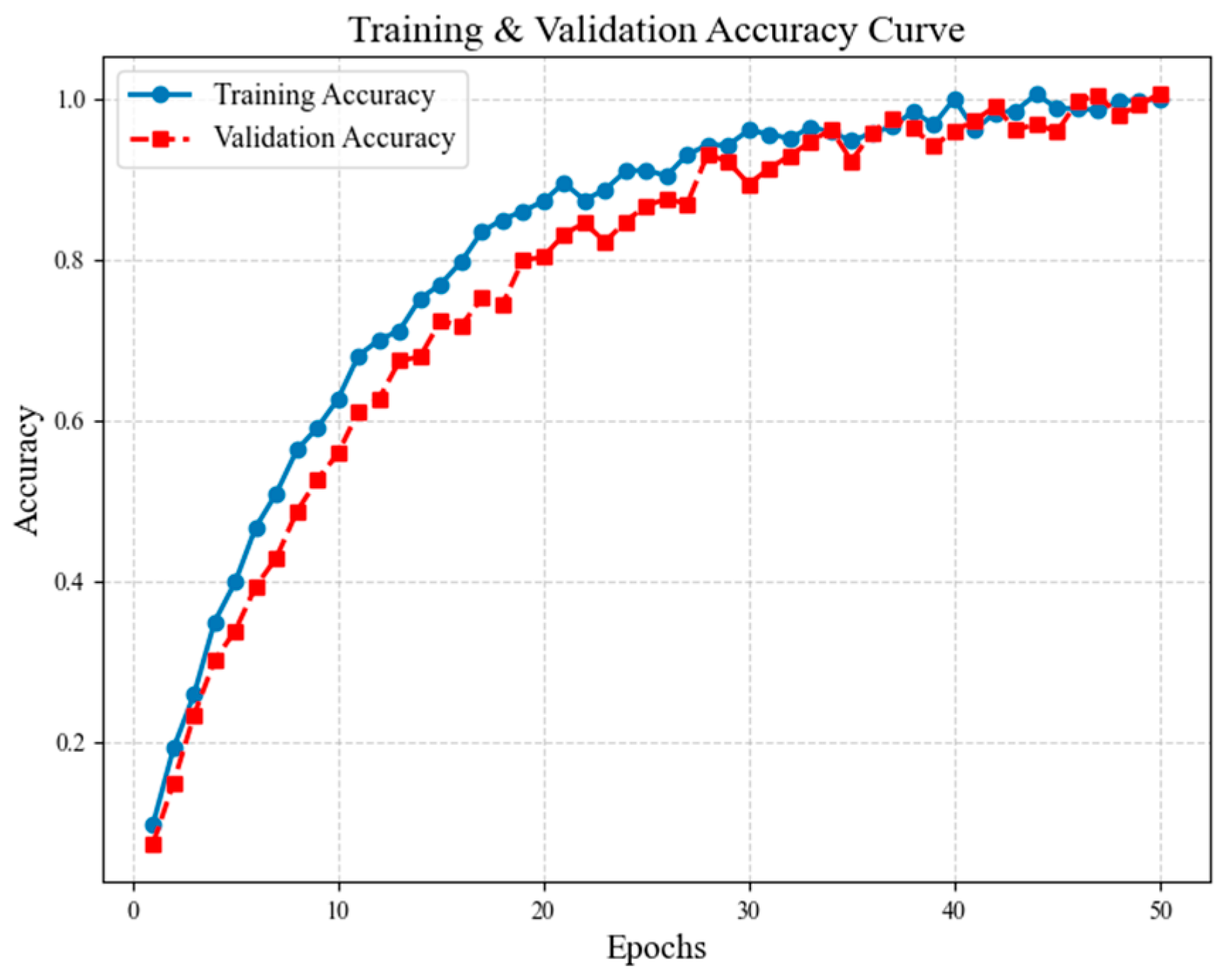

To detect overfitting and ensure unbiased evaluation, the dataset is split into three subsets:

- a training set (70%), used for model learning;
- a validation set (20%), used to tune hyperparameters;
- a test set (10%), used for performance evaluation under unseen conditions.

This structured data collection and preprocessing pipeline is designed to give the EfficientDet-BiFormer-ResNet hybrid model high accuracy, robust generalization, and real-time inference for healthcare robotic applications.
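A 70/20/10 split of this kind can be sketched as below. The sketch assumes a simple shuffled random split with a fixed seed; the paper does not say whether its split was random, stratified by class, or grouped by source photograph. If the 5000+ count includes augmented copies, splitting by original photograph before augmentation would keep near-duplicates from leaking between subsets.

```python
import random


def split_dataset(items, train=0.7, val=0.2, seed=0):
    """Shuffle once, then cut into train/val/test; the test set takes the remainder."""
    items = list(items)
    random.Random(seed).shuffle(items)  # seeded so the split is reproducible
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


# Example with 5000 placeholder image IDs
train_ids, val_ids, test_ids = split_dataset(range(5000))
```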

3.3. Object Detection Module

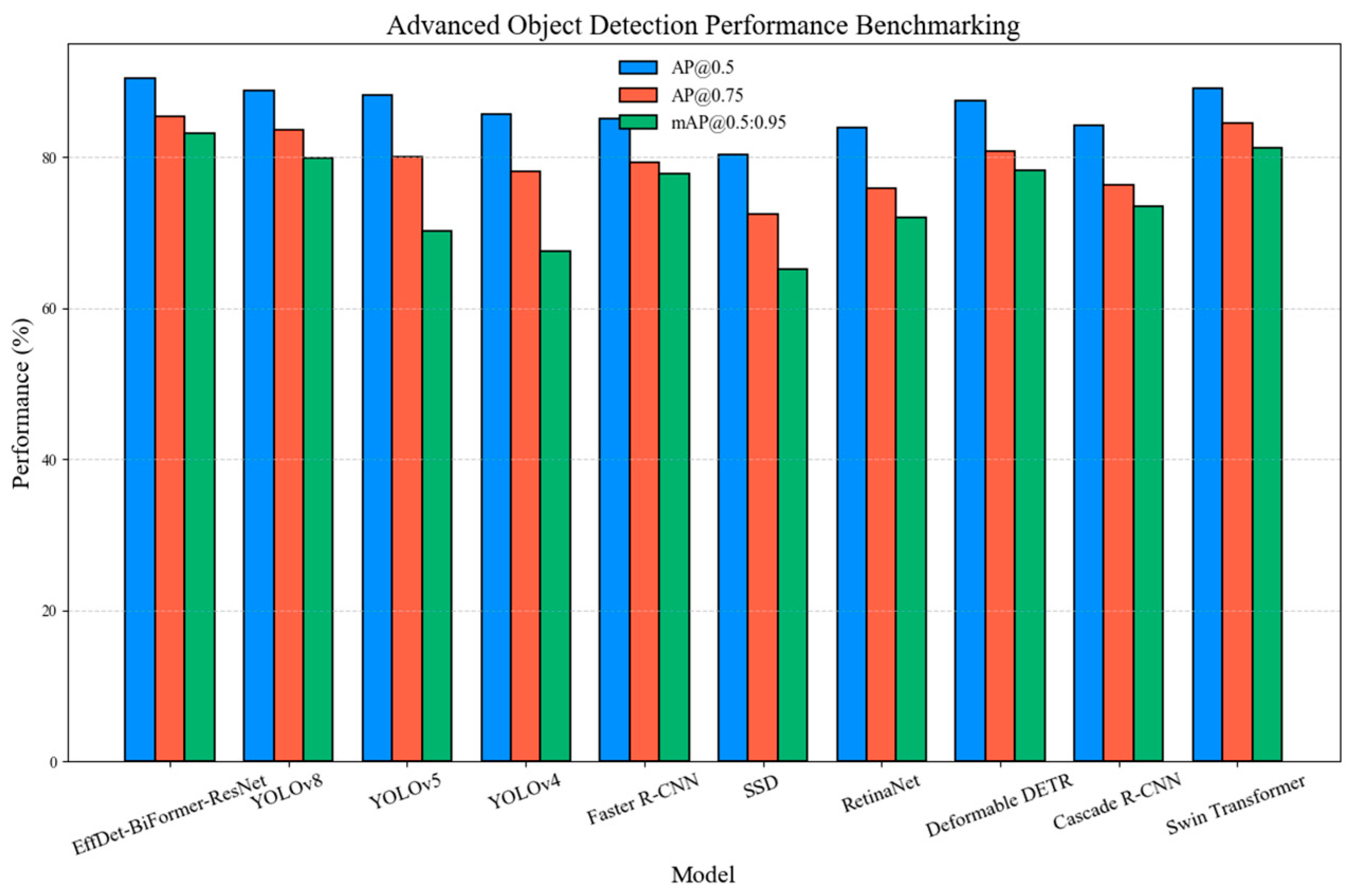

Traditional object detectors, such as Faster R-CNN, SSD, and YOLO variants, have been highly successful in general object detection, yet they struggle in specialized domains such as medical inventory. YOLO-based models offer fast inference but often sacrifice detection accuracy for small and overlapping objects. SSD lacks the hierarchical feature fusion needed to handle scale variation, which is critical when small medicine boxes sit alongside larger medical supplies.

The object detection module is the first stage of the proposed vision pipeline. It is responsible for precisely localizing medicines and medical supplies within complex healthcare shelf environments. Medical storage settings have cluttered arrangements, variable lighting, and frequent occlusions, so the detector must provide robust multi-scale detection with real-time efficiency. To achieve this, EfficientDet is employed as the detection model. It uses Bidirectional Feature Pyramid Networks (BiFPNs) for multi-scale feature aggregation and compound scaling to balance accuracy, computational cost, and model depth.

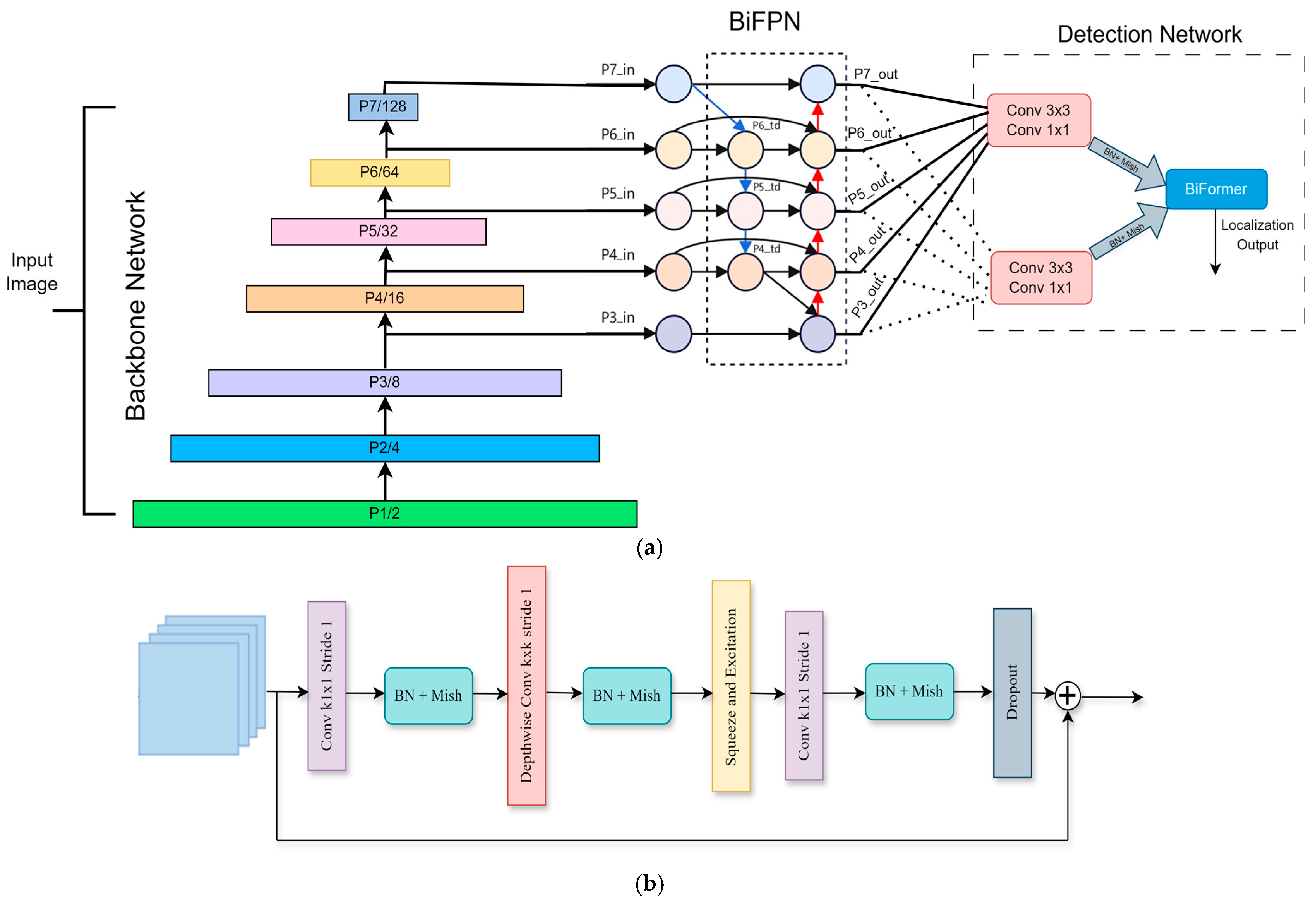

EfficientDet addresses these challenges through two primary architectural innovations. The first is BiFPN, a multi-scale feature aggregation mechanism that enhances small-object detection and improves feature propagation across the levels of the feature hierarchy. The second is compound scaling, described below.

BiFPN has three connection types:

- top-down cross-scale connections;
- bottom-up cross-scale connections;
- additional connections between the original input and output nodes at the same hierarchical level.

The resulting BiFPN layer is stacked multiple times to balance detection precision against computational cost.

As shown in the EfficientDet network diagram in Figure 5a, EfficientDet has three stages:

1. Feature extraction by the backbone, whose architecture was obtained with Neural Architecture Search (NAS) and then enlarged with compound scaling.
2. Feature integration, in which BiFPN fuses cross-layer features and enriches the multi-scale representations, improving detection performance.
3. Detection and localization, in which dedicated classification and regression networks predict each object's class and location.
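To make "compound scaling" concrete, here is a small sketch of the scaling rules published in the EfficientDet paper. A single coefficient φ jointly sets the input resolution, the BiFPN width and depth, and the depth of the box/class heads. These are the raw formulas; the released D0 to D7 configurations round or hand-adjust some values, especially channel widths. The paper does not name the EfficientDet variant it uses. Its stated 1024 × 1024 input happens to match φ = 4 under this rule, but that is only an inference.

```python
import math


def efficientdet_scaling(phi: int) -> dict:
    """Raw compound-scaling heuristics from the EfficientDet paper.
    The published configurations round some of these values."""
    return {
        "input_resolution": 512 + 128 * phi,      # R_input (pixels per side)
        "bifpn_width": 64 * 1.35 ** phi,          # W_bifpn (channels, before rounding)
        "bifpn_depth": 3 + phi,                   # D_bifpn (stacked BiFPN layers)
        "head_depth": 3 + math.floor(phi / 3),    # D_box = D_class (head conv layers)
    }


cfg = efficientdet_scaling(4)
```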

The initial and final layers of EfficientDet typically consist of simple convolutional layers with batch normalization and the Mish activation function, mainly using 3 × 3 and 1 × 1 kernels. The intermediate layers consistently use a series of Mobile Inverted Bottleneck Convolution (MBConv) blocks. Each MBConv block proceeds in three steps:

1. A 1 × 1 convolution expands the channel dimension of the input features.
2. Depthwise separable convolutions (DSCs) with either 3 × 3 or 5 × 5 depthwise kernels process the expanded features.
3. A Squeeze-and-Excitation (SE) module reweights the channels, and a final 1 × 1 projection convolution compresses the dimensionality back down, refining the feature representation.

Figure 5b shows this structure in detail. EfficientDet also uses compound scaling to jointly adjust network depth, width, and input resolution. The result is a compact yet powerful architecture suitable for deployment on resource-constrained platforms such as robots. The core of EfficientDet is BiFPN, which combines bidirectional pathways with weighted feature fusion. This improves learning efficiency during training while supporting real-time processing and accurate object detection. Through its bidirectional cross-scale connections, features from multiple resolutions interact effectively, which improves detection accuracy for small objects on healthcare shelves. Formally, for each feature level $i$, the BiFPN representation is computed as in Equation (1).

This mechanism allows the detector to dynamically prioritize critical features on cluttered shelves. It prevents small objects, such as individual medicine boxes, from being overwhelmed by dominant background elements.
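The weighted fusion in Equation (1) can be sketched with NumPy as the "fast normalized fusion" used in EfficientDet. Learnable weights are kept non-negative with a ReLU and normalized by their sum plus a small ε. The sketch fixes the weights instead of learning them. It uses nearest-neighbour upsampling for $\mathrm{Resize}(\cdot)$, assumes `(C, H, W)` feature maps, and omits the convolution that follows each fusion node; function names are illustrative.

```python
import numpy as np


def upsample2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def fast_normalized_fusion(features, weights, eps=1e-4):
    """sum_i w_i * F_i / (eps + sum_j w_j), with weights clamped to be non-negative."""
    w = np.maximum(np.asarray(weights, dtype=np.float64), 0.0)  # ReLU on the weights
    fused = sum(wi * f for wi, f in zip(w, features))
    return fused / (eps + w.sum())


def top_down_node(p_in, p_coarser, weights):
    """Top-down BiFPN node of Equation (1) before its convolution (omitted here)."""
    return fast_normalized_fusion([p_in, upsample2x(p_coarser)], weights)


p4 = np.ones((8, 4, 4))          # finer level P_i^in
p5 = np.full((8, 2, 2), 3.0)     # coarser level, upsampled to 4x4 before fusion
out = top_down_node(p4, p5, [1.0, 1.0])
```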

3.3.1. Bounding Box Regression and Training Optimization

EfficientDet uses an anchor-free object detection strategy, where bounding boxes are generated based on scale-invariant keypoints instead of predefined anchor sizes. This reduces computational redundancy while ensuring that detection generalizes well to varying shelf layouts. The detection module predicts a set of bounding boxes $B$ and confidence scores $c$, defined as:

$$B = \{(x_i, y_i, w_i, h_i, c_i)\}_{i=1}^{N}$$

where $N$ denotes the number of detected instances and $(x_i, y_i)$ are the center coordinates of the detected object. The pair $(w_i, h_i)$ gives the width and height of the bounding box. The score $c_i$ is the classification confidence, i.e., the probability that the object belongs to a particular class. The bounding box loss $\mathcal{L}_{box}$ is computed between each predicted bounding box $\hat{b}_i$ and its ground-truth annotation $b_i$.

The EfficientDet model was trained with a weighted focal loss. This loss reduces the contribution of easy examples relative to hard ones, so the detector focuses on difficult-to-detect shelf objects instead of being dominated by easily recognizable objects. The focal loss is defined as:

$$\mathrm{FL}(p_t) = -\alpha\,(1 - p_t)^{\gamma} \log(p_t)$$

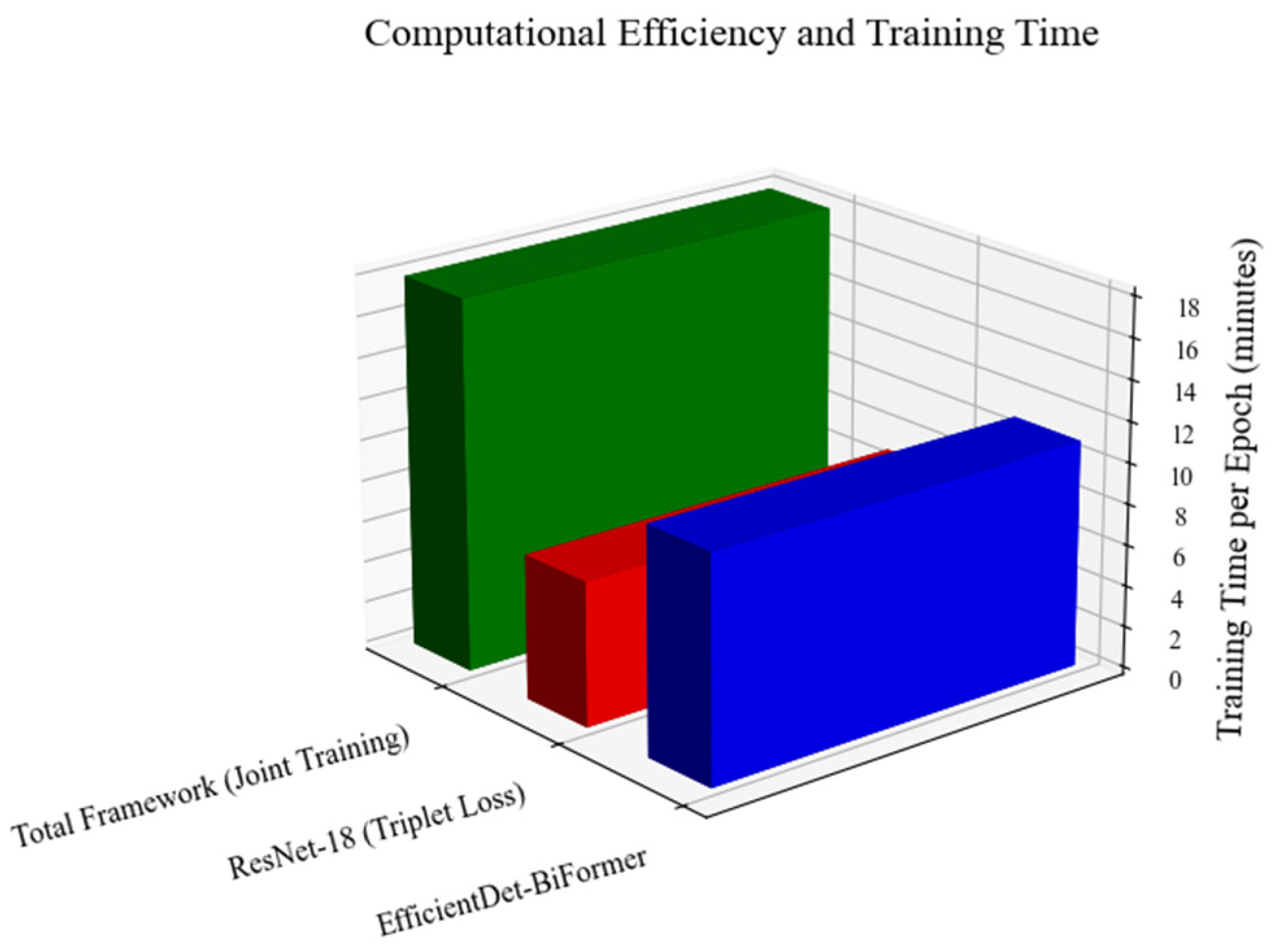

where $p_t$ is the predicted probability for the target class, and $\gamma$ is the focusing parameter that controls how much weight misclassified samples receive. $\alpha$ is a class-specific weighting factor that prevents majority classes from dominating.

For real-time deployment on the healthcare mobile robot, EfficientDet was trained with the following hyperparameters:

- input image size: 1024 × 1024;
- batch size: 16;
- optimizer: Adam with cosine learning rate decay;
- initial learning rate: 0.0002;
- training epochs: 100.

These settings are discussed in detail in subsequent sections.
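As an illustration of how the focal loss down-weights easy examples, here is a NumPy sketch of the binary (one-vs-all) form popularized by RetinaNet. There, α weights the positive class and 1 − α the negative class. The values α = 0.25 and γ = 2 are RetinaNet's common defaults, assumed here because the paper does not report its settings.

```python
import numpy as np


def focal_loss(probs, targets, alpha=0.25, gamma=2.0, eps=1e-7):
    """Mean binary focal loss  -alpha_t * (1 - p_t)**gamma * log(p_t).
    probs: predicted foreground probabilities; targets: 0/1 labels (same shape)."""
    probs = np.clip(np.asarray(probs, dtype=np.float64), eps, 1.0 - eps)
    targets = np.asarray(targets)
    p_t = np.where(targets == 1, probs, 1.0 - probs)          # probability of the true class
    alpha_t = np.where(targets == 1, alpha, 1.0 - alpha)      # class-dependent weight
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))


easy = focal_loss([0.9], [1])   # confidently correct prediction
hard = focal_loss([0.1], [1])   # confidently wrong prediction
```

With γ = 0 and α = 0.5, the expression reduces to half the ordinary binary cross-entropy. This makes it easy to see that γ is what shrinks the loss of well-classified examples.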

3.3.2. BiFormer for Feature Refinement Module

Self-attention is widely recognized for modeling long-range dependencies and has become a key component of modern object detection frameworks [38]. However, its benefits come at the cost of a substantial memory footprint and considerable computational overhead. To mitigate these issues, researchers have introduced a variety of handcrafted sparse attention patterns to reduce model complexity [39]. These patterns ease the computational burden but still fail to fully capture long-range relationships.

BiFormer contributes novelty by using dynamic sparse attention to selectively focus on the discriminative details of each detected region. This differs significantly from traditional feature fusion layers such as FPN or BiFPN, which merge features across resolutions in a fixed, content-independent way. BiFormer instead introduces bi-level routing attention. Each query region first selects the most relevant regions through coarse region-to-region routing. Fine-grained token-to-token attention is then computed within those selected regions. Such adaptive attention has been shown to outperform uniform attention mechanisms in visually cluttered scenarios. Zhu et al. demonstrated that BiFormer improves object differentiation in complex visual contexts [11]. It suppresses irrelevant spatial noise and emphasizes semantically coherent regions, even when these regions are not spatially contiguous.

These characteristics suit our healthcare inventory scenes, where visual confusion caused by occlusion or similar packaging can significantly hinder CNN-based methods. In our pipeline, BiFormer is positioned after EfficientDet's BiFPN output. There, it enhances the contextual representation by selecting discriminative attention patterns aligned with visual semantics. Thus, even without attention-map visualizations, existing evidence supports its effectiveness as a mid-level context enhancer for fine-grained differentiation of medical inventory. By routing attention to fine details, BiFormer helps the system confidently distinguish look-alike items, reducing misclassification. This integration therefore refines contextual features better than EfficientDet alone. The evidence is fewer false positives and a higher mAP when BiFormer is included (mAP increases from 71.4% to 83.2% in our ablation).

The EfficientDet-based detection module effectively localizes healthcare shelf objects. However, traditional CNN-based feature extractors often struggle to differentiate visually similar medical items, especially when packaging varies only minimally. Many medicines have near-identical box designs, minor color differences, or nearly indistinguishable text labels, so conventional recognition models are prone to misclassification and false positives. To address this challenge, we incorporate BiFormer (Bi-level Routing Transformer) as a feature refinement module. Its context-aware attention mechanisms strengthen the discriminative capability of the vision pipeline.

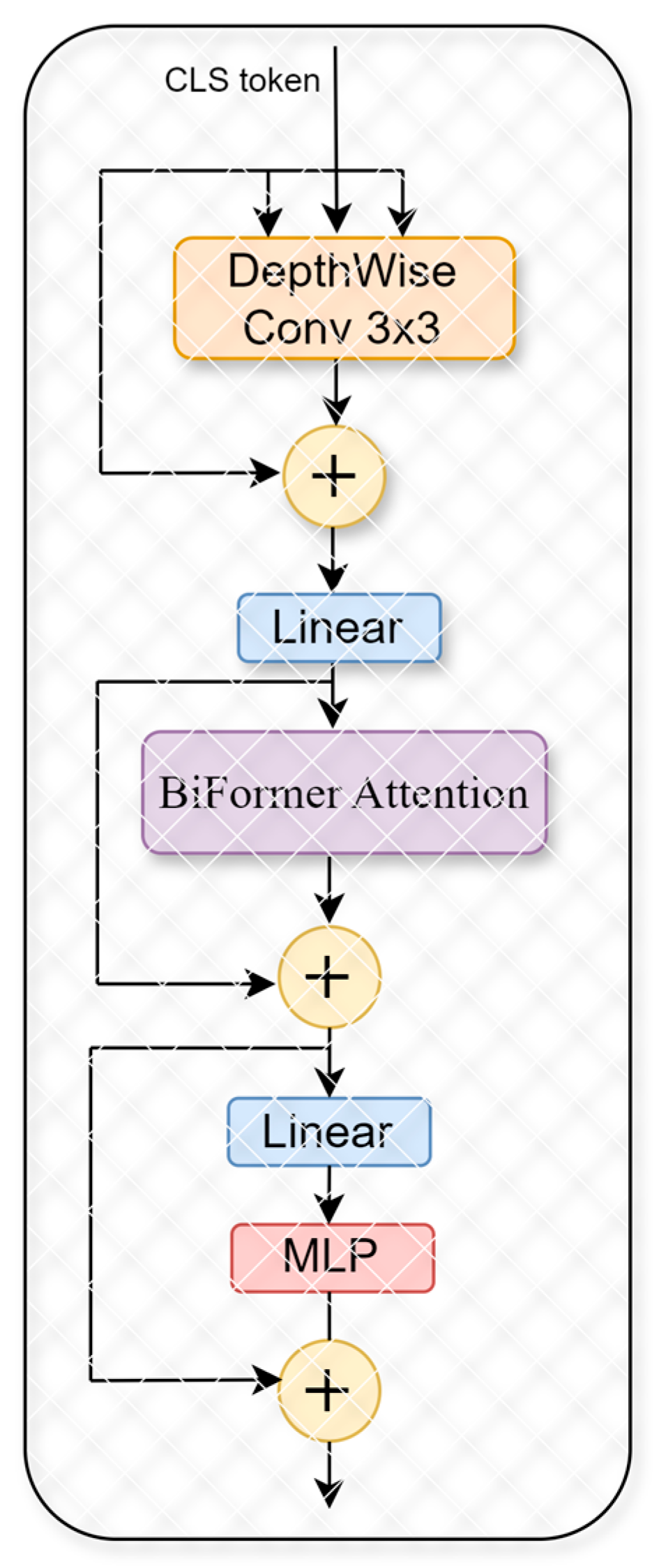

In this study, we use BiFormer's improved general-purpose visual network framework. The fundamental innovation behind BiFormer is its two-stage attention mechanism, inspired by [40]:

1. It first filters out less relevant key-value pairs at a coarse, regional scope.
2. It then refines attention at a fine, token-level granularity.

This dual-stage approach makes the network more adaptable to diverse content while improving computational efficiency and reducing memory requirements. BiFormer thus preserves the strengths of the Transformer architecture while offering more flexible content perception and resource management. Figure 6 depicts the structure of the BiFormer model. Its bi-level routing attention mechanism ensures that the model selectively focuses on the most informative spatial features.

Given an input feature map $X \in \mathbb{R}^{H \times W \times C}$ derived from the EfficientDet backbone, BiFormer applies attention-based feature selection. The feature map is first divided into $S \times S$ non-overlapping regions, each containing $\frac{HW}{S^2}$ feature vectors of dimension $C$. After reshaping, the input becomes $X^r \in \mathbb{R}^{S^2 \times \frac{HW}{S^2} \times C}$. Linear projections then yield the queries, keys, and values:

$$Q = X^r W^q, \quad K = X^r W^k, \quad V = X^r W^v$$

where $W^q, W^k, W^v \in \mathbb{R}^{C \times C}$ are the projection weights. Region-level queries and keys $Q^r, K^r \in \mathbb{R}^{S^2 \times C}$ are obtained by averaging $Q$ and $K$ within each region. A region-to-region affinity matrix is then computed by matrix multiplication, with each element $A^r_{ij}$ capturing the semantic relationship between regions $i$ and $j$:

$$A^r = Q^r \left(K^r\right)^{\top}$$

Self-attention in conventional Transformers computes relationships between all tokens in an image. The bi-level routing strategy instead first prunes irrelevant regions and only then applies token-level attention. This lets the network concentrate on highly informative areas, considerably lowering computational demands while improving recognition accuracy, particularly on densely stocked medical shelves. Token-to-token attention is guided by a region-level routing index matrix $I^r \in \mathbb{N}^{S^2 \times k}$, which keeps the indices of the top $k$ entries in each row of $A^r$:

$$I^r = \mathrm{topkIndex}\left(A^r\right)$$

Row $i$ of $I^r$ lists the $k$ regions most relevant to region $i$. These regions, though effectively targeted, may be scattered across the entire feature map. The key and value tensors of the routed regions are gathered by:

$$K^g = \mathrm{gather}\left(K, I^r\right), \quad V^g = \mathrm{gather}\left(V, I^r\right)$$

The gathered key-value pairs then undergo attention, producing the final output representation $Z$:

$$Z = \mathrm{Attention}\left(Q, K^g, V^g\right) + \mathrm{LCE}(V)$$

where $\mathrm{LCE}(V)$ denotes a local context enhancement component. It enriches the descriptive power of local context features while keeping the computational cost low.
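To make the routing steps concrete, here is a minimal single-head NumPy sketch of bi-level routing attention. It follows the sequence above: region partition, region-averaged queries and keys, affinity $A^r$, top-$k$ routing, key/value gathering, and token-level attention. Several simplifications are assumptions of this sketch. It uses an `(H, W, C)` input with `H` and `W` divisible by `S` and random projection weights. It has no LCE term, no multi-head split, and no output projection, all of which BiFormer itself includes. Function names are illustrative, not BiFormer's reference code.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def bi_level_routing_attention(x, wq, wk, wv, S, k):
    """Single-head bi-level routing attention (no LCE term, no output projection).

    x: (H, W, C) feature map with H and W divisible by S.
    Returns the (H, W, C) output Z and the (S*S, k) routing index matrix.
    """
    H, W, C = x.shape
    h, w = H // S, W // S
    # Partition into S*S non-overlapping regions of h*w tokens each: (S^2, h*w, C)
    xr = x.reshape(S, h, S, w, C).transpose(0, 2, 1, 3, 4).reshape(S * S, h * w, C)
    q, key, v = xr @ wq, xr @ wk, xr @ wv
    # Region-level queries/keys (per-region means) and region-to-region affinity A^r
    a_r = q.mean(axis=1) @ key.mean(axis=1).T                # (S^2, S^2)
    idx = np.argsort(-a_r, axis=1)[:, :k]                     # top-k routed regions per region
    # Gather keys/values of the routed regions: (S^2, k*h*w, C)
    k_g = key[idx].reshape(S * S, k * h * w, C)
    v_g = v[idx].reshape(S * S, k * h * w, C)
    # Token-to-token attention restricted to the gathered tokens
    attn = softmax(q @ k_g.transpose(0, 2, 1) / np.sqrt(C))  # (S^2, h*w, k*h*w)
    z = attn @ v_g                                            # (S^2, h*w, C)
    # Undo the region partition back to (H, W, C)
    z = z.reshape(S, S, h, w, C).transpose(0, 2, 1, 3, 4).reshape(H, W, C)
    return z, idx


rng = np.random.default_rng(0)
H = W = 8; C = 4; S = 2
x = rng.normal(size=(H, W, C))
wq, wk, wv = (rng.normal(size=(C, C)) for _ in range(3))
z_sparse, routes = bi_level_routing_attention(x, wq, wk, wv, S=S, k=1)
```

With `k = S * S` every region is routed, and the result reduces to ordinary dense attention over all tokens, which is a convenient sanity check. The computational savings come from choosing a small `k`.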

3.4. Recognition Module

The final stage of the vision pipeline is the recognition module, which performs fine-grained classification of the detected medicines and medical supplies. The ResNet-18 backbone was selected for two reasons. It has low inference latency on embedded robotic platforms, and it offers a proven balance of accuracy and computational efficiency in data-limited, fine-grained classification settings [41]. By coupling ResNet-18 with triplet loss and online hard negative mining (OHNM), we achieve competitive discriminative performance without the complexity and higher power demands of deeper architectures [42].

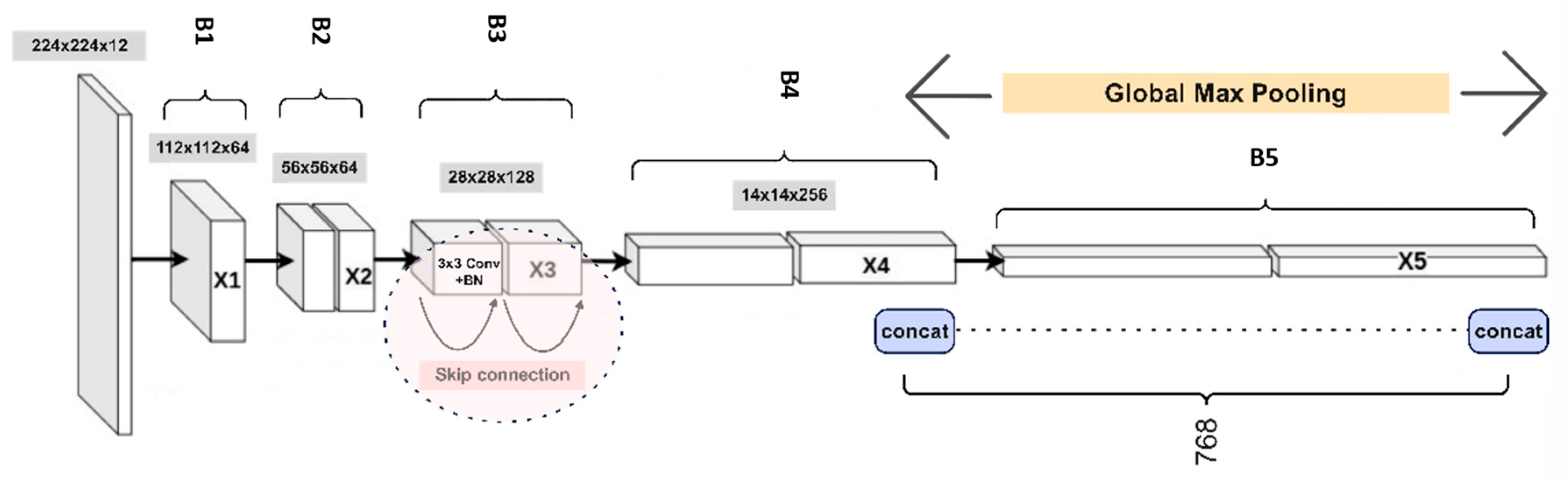

As illustrated in Figure 7, the recognition framework classifies each object identified in the shelf image by the preceding stage. ResNet-18 was chosen as the feature extractor because its residual learning mechanism mitigates vanishing gradients while maintaining computational efficiency. The embedder shown in Figure 7 is a ResNet-18 network pre-trained on the ImageNet-1K [43] dataset. The embedding module consists of five consecutive convolutional blocks, labeled $B_1$ to $B_5$. These blocks produce progressively smaller feature maps with spatial sizes of 112, 56, 28, 14, and 7, respectively, denoted $X_1$, $X_2$, $X_3$, $X_4$, and $X_5$. In this study, the final image descriptors $\mathbf{d}_4$ and $\mathbf{d}_5$ were obtained by applying the MAC (Maximum Activations of Convolutions) [44] operation to the outputs of blocks $B_4$ and $B_5$:

$$\mathbf{d}_4 = \mathrm{MAC}(X_4), \quad \mathbf{d}_5 = \mathrm{MAC}(X_5), \quad \text{where } \mathrm{MAC}(X)_c = \max_{u,v} X_{c,u,v}$$
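MAC pooling simply keeps the strongest response of each channel. A NumPy sketch follows, assuming channel-first `(C, H, W)` maps and the standard ResNet-18 widths for a 224 × 224 input, which is consistent with the 112-to-7 spatial sizes above. Under those assumptions, $B_4$ outputs 256 channels at 14 × 14 and $B_5$ outputs 512 channels at 7 × 7. The random arrays are stand-ins used only to show shapes, not real activations.

```python
import numpy as np


def mac(feature_map: np.ndarray) -> np.ndarray:
    """MAC pooling: the maximum activation of each channel over all spatial positions.
    feature_map: (C, H, W) -> descriptor of length C."""
    return feature_map.max(axis=(1, 2))


rng = np.random.default_rng(0)
# Stand-ins for ResNet-18 block outputs on a 224x224 crop (random values, shapes only)
x4 = rng.random((256, 14, 14))   # B4 output
x5 = rng.random((512, 7, 7))     # B5 output
d4, d5 = mac(x4), mac(x5)
```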

Given an input image cropped from an EfficientDet bounding box proposal, ResNet-18 extracts high-level feature representations. It applies a series of convolutional layers, batch normalization, and Mish activations, followed by global average pooling. The feature transformation at each residual block is given by:

$$\mathbf{y} = \sigma\left(W_2\,\sigma\left(W_1 \mathbf{x} + \mathbf{b}_1\right) + \mathbf{b}_2 + \mathbf{x}\right)$$

where $\mathbf{x}$ is the input feature and $W_1$ and $W_2$ are learnable weight matrices for the convolutional operations. $\mathbf{b}_1$ and $\mathbf{b}_2$ are bias terms, and $\sigma$ is the activation function. The skip connections keep gradient propagation stable, enabling deeper feature learning without degradation. The final feature vector from ResNet-18 is then projected into a 128-dimensional embedding space, where metric learning is applied for fine-grained classification.
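The residual equation can be checked numerically with a small NumPy sketch. Here $W_1$ and $W_2$ are dense matrices, exactly as the equation is written; in the real network they are 3 × 3 convolutions with batch normalization. Following the text, σ is taken to be Mish. The 512-dimensional input matches ResNet-18's pooled feature width. `w_proj` is a hypothetical stand-in for the 128-dimensional projection head, with random values.

```python
import numpy as np


def mish(x):
    """Mish activation: x * tanh(softplus(x)), with softplus computed stably."""
    return x * np.tanh(np.logaddexp(0.0, x))


def residual_block(x, w1, b1, w2, b2, act=mish):
    """y = act(W2 @ act(W1 @ x + b1) + b2 + x): the shortcut adds x unchanged."""
    return act(w2 @ act(w1 @ x + b1) + b2 + x)


rng = np.random.default_rng(0)
d = 512                                            # pooled ResNet-18 feature width
x = rng.normal(size=d)
w1, w2 = rng.normal(scale=0.01, size=(d, d)), rng.normal(scale=0.01, size=(d, d))
b1, b2 = np.zeros(d), np.zeros(d)
y = residual_block(x, w1, b1, w2, b2)
w_proj = rng.normal(scale=0.01, size=(128, d))     # hypothetical 128-d projection head
embedding = w_proj @ y
```

If both weight matrices are zero, the block reduces to σ(x). This identity path is what keeps gradients flowing through deep stacks.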

The ResNet-18-based embedder is trained offline on structured triplets so that it learns discriminative embeddings. Each triplet comprises an anchor image, a positive image from the same semantic class, and a negative image from a different semantic class. General object classification tasks involve distinct objects with clearly differentiating features. Medical inventory, by contrast, shows strong inter-class similarity due to similar packaging and near-identical outlines. The medical supplies in our dataset also occasionally share structural similarities, which makes traditional softmax-based classification inadequate for precise recognition. Instead of conventional cross-entropy loss, the model is therefore trained with triplet loss for metric learning. Minimizing this loss maps images of the same class close together in the embedding space and pushes images of different classes further apart. For each training sample, a triplet (anchor, positive, negative) is selected, and the loss is computed as in Equation (2).
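Equation (2) can be written in a few lines of NumPy as below. Two assumptions are made: $d$ is the plain (non-squared) Euclidean distance, and the margin is α = 0.2, a common default, since the paper does not report its value. Many FaceNet-style implementations use squared distances instead, which changes the scale at which the margin acts.

```python
import numpy as np


def triplet_loss(f_a, f_p, f_n, alpha=0.2):
    """Equation (2): sum over N triplets of max(0, d(a, p) - d(a, n) + alpha),
    with d the Euclidean distance. f_a, f_p, f_n: (N, D) embedding arrays."""
    d_ap = np.linalg.norm(f_a - f_p, axis=1)   # anchor-positive distances
    d_an = np.linalg.norm(f_a - f_n, axis=1)   # anchor-negative distances
    return float(np.sum(np.maximum(0.0, d_ap - d_an + alpha)))


f_a = np.zeros((2, 2))
f_p = np.array([[0.0, 1.0], [0.0, 1.0]])
f_n = np.array([[0.0, 3.0], [0.0, 1.0]])  # first negative is far, second ties the positive
loss = triplet_loss(f_a, f_p, f_n)
```

In this example the first triplet already satisfies the margin and contributes nothing. The second contributes exactly α. This is why random triplets often give little gradient, which motivates OHNM.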

Training with triplet loss produces highly discriminative feature embeddings. In standard triplet selection, however, random negatives often contribute little to learning, because many are already well separated from the anchor. OHNM is therefore integrated into the training pipeline so that the most difficult samples are prioritized, which substantially improves classification robustness. Formally, for each anchor-positive pair, OHNM selects the negative sample $x^n_*$ that maximizes the triplet loss, i.e., the negative closest to the anchor in the embedding space. The model thus concentrates on the hardest classification cases, which improves generalization to unseen medical inventory:

$$x^n_* = \arg\max_{x^n} \mathcal{L}\left(x^a, x^p, x^n\right) = \arg\min_{x^n} d\left(f(x^a), f(x^n)\right)$$

During training, for each mini-batch of size $b$, the hardest negative for every positive sample is selected from within the same batch. These challenging negatives strengthen the model's discriminative power. Restricting the search to the batch also reduces the computational cost of mining compared with searching the whole training set. Mathematically,

$$\mathcal{L}_{OHNM} = \sum_{x \in \mathcal{B}} \max\left(0,\; d\left(f(x^a), f(x^p)\right) - \min_{x^n \in \mathcal{B}} d\left(f(x^a), f(x^n)\right) + \alpha\right)$$

where $\mathcal{B}$ is a mini-batch of data and $\mathcal{T}(\cdot)$ denotes the image augmentation operator. $x^a = \mathcal{T}(x)$ is an anchor generated from a sample $x \in \mathcal{B}$ by data augmentation, $x^p$ is a positive sample, and $x^n$ is a negative sample. In the final stage, following Equations (15)–(21), the block-4 and block-5 MAC descriptors $\mathbf{d}_4$ and $\mathbf{d}_5$ are concatenated into a single descriptor and L2-normalized:

$$\mathbf{d} = \frac{\left[\mathbf{d}_4; \mathbf{d}_5\right]}{\left\|\left[\mathbf{d}_4; \mathbf{d}_5\right]\right\|_2}$$
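In-batch hard negative mining and the final descriptor normalization can be sketched together in NumPy. The sketch makes three assumptions: Euclidean distance, a margin α = 0.2, and that any other same-class sample in the batch serves as a positive. The paper generates anchors and positives by augmentation, which this sketch does not model. Function names are illustrative.

```python
import numpy as np


def pairwise_distances(emb):
    """Euclidean distance matrix for a (b, D) batch of embeddings."""
    sq = np.sum(emb ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * emb @ emb.T
    return np.sqrt(np.maximum(d2, 0.0))  # clamp tiny negatives from round-off


def batch_hard_negative_loss(emb, labels, alpha=0.2):
    """Sum over all (anchor, positive) pairs in the batch of
    max(0, d(a, p) - min_n d(a, n) + alpha), using the hardest in-batch negative."""
    labels = np.asarray(labels)
    dist = pairwise_distances(emb)
    same = labels[:, None] == labels[None, :]
    hardest_neg = np.where(same, np.inf, dist).min(axis=1)   # closest other-class sample
    pos_mask = same & ~np.eye(len(labels), dtype=bool)       # exclude anchor == positive
    hinge = np.maximum(0.0, dist - hardest_neg[:, None] + alpha)
    return float(hinge[pos_mask].sum())


def final_descriptor(d4, d5, eps=1e-12):
    """Concatenate the block-4 and block-5 MAC descriptors and L2-normalize."""
    d = np.concatenate([d4, d5])
    return d / max(float(np.linalg.norm(d)), eps)


emb = np.array([[0.0, 0.0], [0.0, 1.0], [0.0, 1.5], [5.0, 5.0]])
loss = batch_hard_negative_loss(emb, [0, 0, 1, 2])
```

The full distance matrix is computed once per batch, and each anchor's hardest negative is then a row-wise minimum. This is what makes mining within the mini-batch cheap compared with searching the whole training set.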