1. Introduction

Simultaneous localization and mapping (SLAM) has become one of the crucial technologies in mobile robotics, driven by the ongoing development and popularity of mobile robots [1], specifically for enabling robots to navigate and perform tasks autonomously in unknown settings. There is a great deal of interest in SLAM applications and research. In particular, vision-based SLAM (VSLAM) stands out in that it can accurately pinpoint a robot’s location and create a comprehensive map of its environment at a minimal hardware cost, facilitating autonomous navigation and obstacle avoidance.

Visual SLAM has garnered significant research attention in recent years due to its advantages over laser-based SLAM systems, particularly its reliance on cost-effective camera sensors as the primary sensing modality. This attention has driven the development of a series of mature VSLAM algorithms, such as ORB-SLAM [2,3,4], RGB-D SLAM [5], LSD-SLAM [6], and Direct Sparse Mapping [7]. Among existing approaches, the ORB-SLAM series represents the most established feature point-based visual SLAM framework and is widely regarded as a benchmark in the field. Campos et al. [4] proposed ORB-SLAM3, the most advanced algorithm in the series, which demonstrates robust real-time performance across diverse environments, including various indoor, outdoor, and multi-scale scenarios. Despite significant progress, VSLAM algorithms still face many challenges in practical applications, because the above algorithms are mainly designed for static environments to ensure the robustness and efficiency of the system. In real life, however, dynamic objects such as people and animals are unavoidable, which makes it difficult for current algorithms to adapt. The fundamental cause lies in the pervasive presence of moving objects within dynamic scenes. VSLAM algorithms typically extract both static and dynamic feature points, with the latter predominantly emerging from highly textured regions in the environment [8]. Consequently, dynamic feature points lead to a significant accumulation of erroneous feature correspondences, substantially degrading the stability and reliability of the SLAM system. In this case, faulty pose estimation and feature matching result in erroneous localization, inconsistencies in the environmental map, and potentially many overlapping images inside the generated map. To enhance the precision and resilience of indoor positioning systems, researchers have employed weighting algorithms based on physical distances and clustering techniques for virtual access points [9]. This approach has also informed our own research. Thus, an increasing number of researchers in the field of mobile robot navigation focus on VSLAM algorithms that can adapt to dynamic scenes, minimize interference from dynamic objects, and improve positioning accuracy and system robustness [10].

To overcome the previously discussed challenges, an increasing number of researchers are accounting for dynamic objects in VSLAM algorithms, thereby introducing the concept of dynamic VSLAM. Existing dynamic VSLAM systems handle dynamic objects with two main families of strategies: geometry-based and deep learning-based methods. Geometry-based methods identify dynamic elements by analyzing the motion consistency of objects in a scene. Optical flow and background removal are two popular techniques for identifying and removing dynamic objects, which lessens their influence on the SLAM system. However, these approaches (including DM-SLAM [11] and ESD-SLAM [12]) remain limited in rapidly changing dynamic environments or complex scenes due to their fundamental dependence on the stability of visual features in image sequences. Conversely, deep learning-based techniques mainly use convolutional neural networks (CNNs) to recognize dynamic objects in the scene. These techniques are effective in dynamic object recognition, but they frequently suffer from high computational costs and limited real-time processing capability. For example, DynaSLAM [13] combines ORB-SLAM2 with the Mask R-CNN instance segmentation algorithm to remove dynamic feature points. While this approach enhances SLAM accuracy in dynamic environments, its significantly increased computational complexity and resource demands constrain real-time performance.

In summary, this paper proposes BMP-SLAM, a semantic SLAM system based on object recognition for indoor dynamic settings, to improve the positioning accuracy of VSLAM algorithms in dynamic situations while enabling real-time operation. To minimize the influence of dynamic objects on the positioning and mapping of the SLAM system, BMP-SLAM combines object recognition with a motion probability propagation model based on Bayes’ theorem. Concurrently, it creates dense point cloud maps to help mobile robots accomplish tasks. The primary contributions of this paper are summarized as follows:

(1) To address the poor positioning accuracy and robustness of SLAM systems in dynamic environments, BMP-SLAM integrates YOLOv5 to construct a dynamic object detection thread, which identifies and processes moving objects efficiently in real time and significantly improves the positioning accuracy and robustness of the SLAM system in dynamic environments.

(2) YOLOv5 may suffer from false positives and missed detections, leading to incomplete removal of dynamic features. Additionally, the geometric redundancy in dynamic bounding boxes can result in erroneous elimination of static features within the detected regions. To address these limitations, we propose the Bayesian Moving Probability model, which combines historical information and time-series data. With this model, the dynamic estimation of a key point does not rely on a single observation but is strengthened by accumulated data. This approach significantly improves the SLAM system’s ability to perceive and understand the dynamic environment.

(3) To support complex robot navigation tasks, a dense point cloud mapping thread is constructed. It builds a static dense point cloud map by combining the semantic information captured by the dynamic target detection thread with the Bayesian Moving Probability model to cull dynamic feature points.

3. Materials and Methods

3.1. System Architecture

In real-world applications, SLAM algorithms continue to face numerous obstacles, particularly in dynamic situations, where moving objects can significantly degrade the stability and accuracy of the system. To solve this problem, the research in this paper focuses mainly on reducing the negative impact of dynamic environments on algorithm performance. ORB-SLAM3 leads other VSLAM algorithms thanks to advantages such as efficient feature extraction, powerful loop closure detection, and an effective relocalization strategy. Therefore, the algorithm in this paper is built on ORB-SLAM3 and improved so that it achieves excellent performance even in dynamic environments.

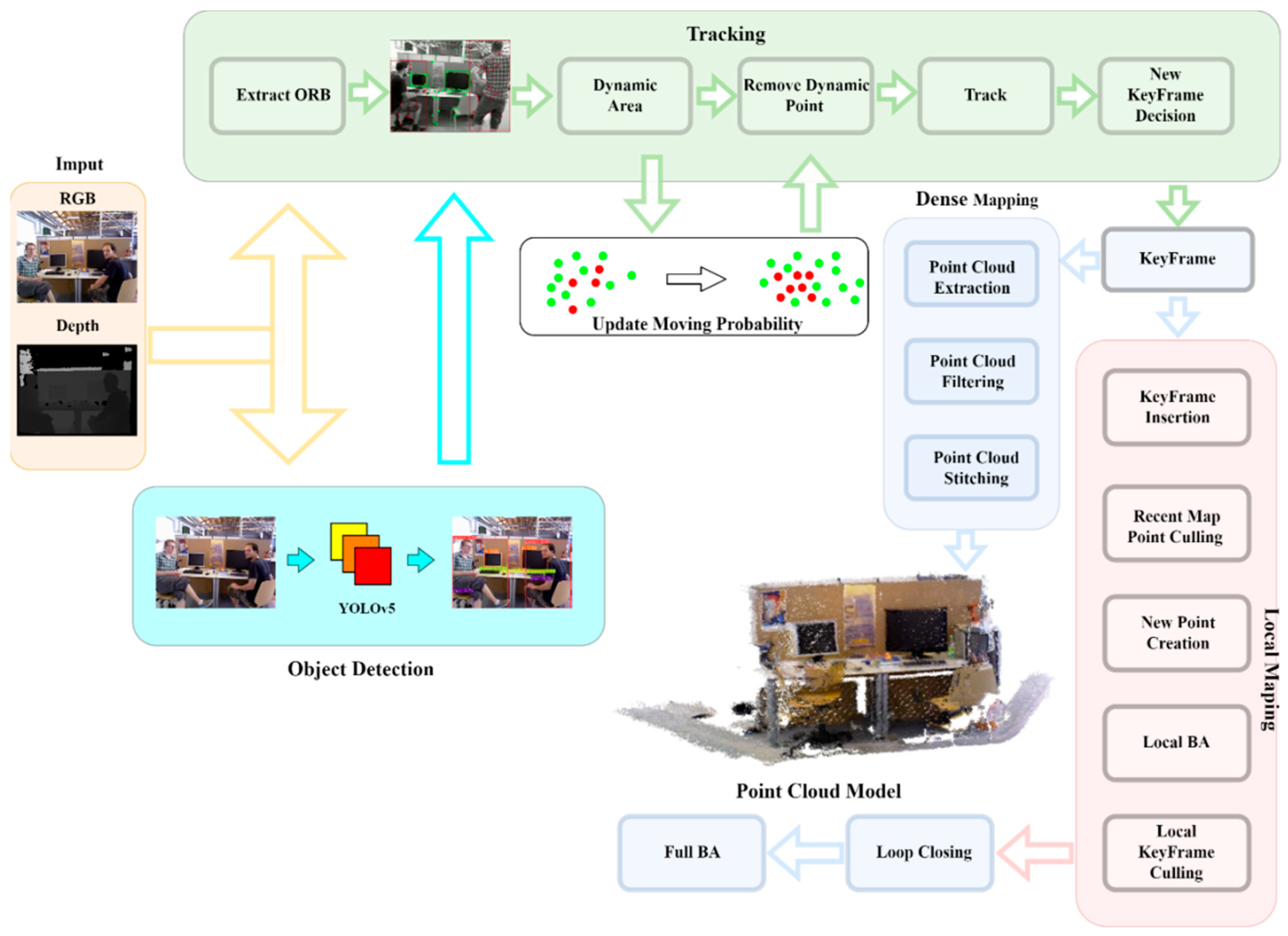

ORB-SLAM3 primarily consists of four core threads: tracking, local mapping, loop closure detection, and map maintenance. This study adds three new modules to that foundation, as illustrated in Figure 1: dynamic object identification, the Bayesian Moving Probability model, and dense point cloud reconstruction. First, the depth camera supplies the captured RGB and depth images, and the tracking thread and the dynamic target detection thread then run simultaneously. The tracking thread extracts ORB feature points from the input RGB image to obtain the feature point information in the scene. The dynamic target detection thread uses YOLOv5 to acquire semantic information from the RGB image, such as each target’s label and bounding box coordinates. Subsequently, based on the label information, targets are classified as high-dynamic, medium-dynamic, or low-dynamic objects, and the dynamic region is determined by combining the coordinates of the ORB feature points with the coordinates of the target bounding boxes. The key points in the different regions are then propagated through the Bayesian motion probability propagation model to reject dynamic key points, so that ORB-SLAM3 does not retain them in subsequent pose tracking and mapping, which improves the accuracy and robustness of the system in dynamic environments. Finally, 3D information is extracted from the RGB and depth images of the keyframes updated in the tracking thread; a local point cloud of appropriate size and density is generated using voxel grid filtering, transformed to the global coordinate system, and merged with the global map to create a dense point cloud map of the indoor 3D scene.

3.2. Dynamic Target Detection

To decrease the effect of dynamic targets on the SLAM system’s localization and map creation, semantic information is retrieved via object detection and fed into the BMP-SLAM system as semantic constraints, improving the system’s accuracy and robustness in dynamic situations. YOLOv5 employs CSPDarknet as its backbone network, which optimizes the transmission of feature maps via CSP (Cross Stage Partial) connections, decreasing computation while retaining effective feature extraction. It also offers outstanding real-time performance and high detection accuracy, and it can handle complicated indoor dynamic settings; hence, BMP-SLAM employs YOLOv5 for dynamic target identification.

The YOLOv5 network architecture primarily consists of the Backbone, Neck, and Prediction networks, as shown in Figure 2. The Backbone network uses CSPDarknet as its framework, combining Focus layers for initial feature extraction with multiple convolutional layers and CSP structures to extract image features. The Neck network adopts the PANet (Path Aggregation Network) structure to enhance the fusion of features at different scales, thereby improving the model’s detection capability for targets of varying sizes. The Prediction network performs category classification and bounding box regression at multiple scales, using anchor boxes to predict the position and size of targets, which ensures effective detection across various scales. With this structure, YOLOv5 efficiently extracts features from images (Backbone), fuses these features at different scales for effective target detection (Neck), and ultimately predicts the target’s category and location accurately (Prediction).

Since BMP-SLAM is primarily meant for indoor dynamic scenes, we pre-train YOLOv5 on the COCO [31] dataset, which contains the bulk of common indoor items. The detection results are shown in Figure 3. As seen in Figure 3a, an RGB camera captures the image sequence that is used as the input to YOLOv5 in this paper. The outputs after YOLOv5 processing are shown in Figure 3b, where distinct objects yield bounding boxes of varied sizes along with label information.

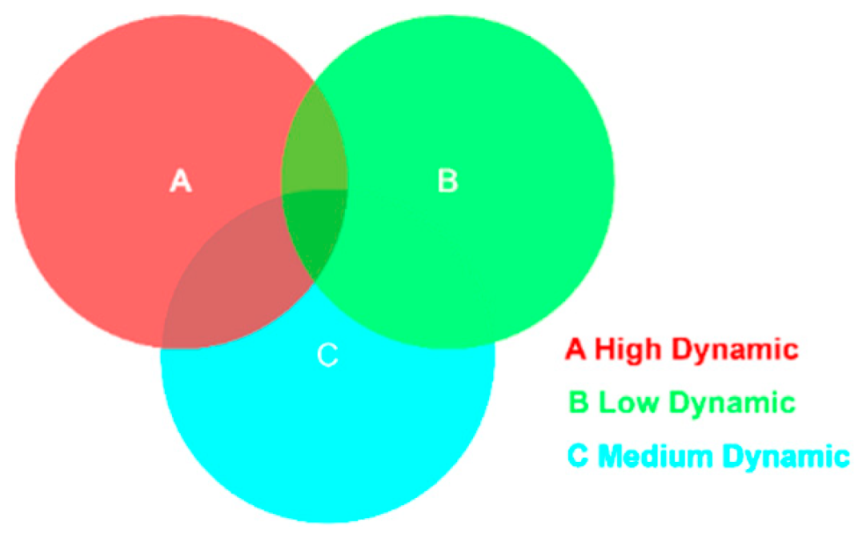

However, although YOLOv5 detection determines the categories of objects in the surrounding environment, it cannot by itself distinguish dynamic objects from static ones. We therefore define three sets for common indoor objects. High-dynamic objects, such as humans, cats, dogs, and other animals, may move actively. Medium-dynamic objects, such as remote controls, mobile phones, and bags, may move, but their movement is typically driven by external forces rather than autonomous motion. Low-dynamic objects, such as monitors, refrigerators, and tables, do not move under normal conditions. Dynamic and static objects are then identified by matching the object categories detected by YOLOv5 against this a priori knowledge of the three sets.
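The category matching described above can be sketched as a simple lookup from detector labels to the three sets. This is a minimal illustration, not the authors' implementation: the label strings follow the COCO class names, and the exact membership of each set (beyond the examples named in the text) and the `UNKNOWN` fallback are assumptions.

```python
from enum import Enum


class Mobility(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"
    UNKNOWN = "unknown"  # assumed fallback for labels outside the three sets


# Illustrative sets keyed by COCO class names; membership is an assumption
# based on the examples in the text (people, pets, phones, monitors, ...).
HIGH_DYNAMIC = {"person", "cat", "dog"}
MEDIUM_DYNAMIC = {"remote", "cell phone", "handbag", "backpack"}
LOW_DYNAMIC = {"tv", "refrigerator", "dining table"}


def mobility_of(label: str) -> Mobility:
    """Map a detected class label to its a priori mobility level."""
    if label in HIGH_DYNAMIC:
        return Mobility.HIGH
    if label in MEDIUM_DYNAMIC:
        return Mobility.MEDIUM
    if label in LOW_DYNAMIC:
        return Mobility.LOW
    return Mobility.UNKNOWN


if __name__ == "__main__":
    for name in ("person", "cell phone", "tv", "potted plant"):
        print(name, "->", mobility_of(name).value)
```

Keeping the prior as plain sets makes it easy to adapt the grouping to a different deployment environment without touching the detector.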

Although BMP-SLAM can identify dynamic regions after target detection and a priori category matching, a challenge arises because each YOLOv5 bounding box encloses the entire object, so the bounding boxes of different objects can overlap. In particular, removing all key points within the bounding box of a high-dynamic object might discard critical information and reduce the localization accuracy of the BMP-SLAM system. To address this issue, BMP-SLAM includes a preprocessing step that refines dynamic region determination: a key point in the current frame is assigned to the dynamic region only if it falls within the bounding box of a high-dynamic object while remaining outside the bounding boxes of all low-dynamic objects.

Figure 4 depicts a schematic diagram of the dynamic region judging procedure.
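The dynamic region rule can be expressed as a small predicate. The sketch below assumes axis-aligned boxes given as `(x_min, y_min, x_max, y_max)` in pixel coordinates; the function names and the example boxes are hypothetical.

```python
from typing import Iterable, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), assumed layout
Point = Tuple[float, float]              # (u, v) pixel coordinates


def in_box(pt: Point, box: Box) -> bool:
    """True if the pixel lies inside the box (borders included)."""
    u, v = pt
    x_min, y_min, x_max, y_max = box
    return x_min <= u <= x_max and y_min <= v <= y_max


def is_in_dynamic_region(pt: Point,
                         high_boxes: Iterable[Box],
                         low_boxes: Iterable[Box]) -> bool:
    """Dynamic only if inside a high-dynamic box and outside every low-dynamic box."""
    inside_high = any(in_box(pt, b) for b in high_boxes)
    inside_low = any(in_box(pt, b) for b in low_boxes)
    return inside_high and not inside_low


if __name__ == "__main__":
    person = (100.0, 50.0, 300.0, 400.0)    # hypothetical high-dynamic box
    monitor = (250.0, 200.0, 350.0, 300.0)  # hypothetical low-dynamic box overlapping it
    print(is_in_dynamic_region((150.0, 100.0), [person], [monitor]))  # on the person only
    print(is_in_dynamic_region((270.0, 250.0), [person], [monitor]))  # in the overlap
```

Key points in the overlap are kept as static candidates, which is what preserves features on a monitor partly covered by a person's bounding box.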

Following these processing steps, dynamic areas within the scene are accurately identified, as demonstrated in Figure 5. Figure 5a displays the original, unprocessed image, while Figure 5b shows the result after processing. In these figures, green dots signify static feature points, and red dots designate dynamic feature points. Additionally, red rectangles enclose high-dynamic objects, whereas green rectangles outline low-dynamic objects. Notably, low-dynamic objects, such as computers, initially appear within the bounding boxes of high-dynamic objects, such as people. The processed results nevertheless separate dynamic from static regions: feature points on high-dynamic objects are correctly marked as dynamic, whereas those on low-dynamic objects, exemplified by the computer, are marked as static. Because the dynamic region excludes low-dynamic objects, dynamic feature points are identified more accurately.

3.3. Bayesian Moving Probability Model

Applying YOLOv5 directly to dynamic object recognition may lead to problems such as over-sensitivity to anomalies and under-utilization of historical data. To overcome these limitations, this paper proposes the Bayesian Moving Probability Model, which combines historical data and time-series data so that the dynamic estimation of a key point does not depend on a single observation. This improves the VSLAM system’s comprehension of the dynamic environment.

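In this paper, we denote the state of key point $p_t^i$ in image frame $F$ at a given moment $t$ as $m_t^i \in \{d, s\}$, and define its dynamic probability as $P(m_t^i = d)$ and its static probability as $P(m_t^i = s)$. The initial dynamic and static probabilities of the key points are set to 0.5. After the dynamic target detection thread, each key point receives an observation $z_t^i$: $z_t^i = d$ if the key point is located in the dynamic region, and $z_t^i = s$ if it is located outside the dynamic region. Since complex environments may introduce noise or detection errors in the target detection model, we propose an observation model for the key point state to reduce the impact of such errors:

$$
\begin{aligned}
P(z_t^i = d \mid m_t^i = d) &= 0.9, & P(z_t^i = s \mid m_t^i = d) &= 0.1, \\
P(z_t^i = s \mid m_t^i = s) &= 0.9, & P(z_t^i = d \mid m_t^i = s) &= 0.1.
\end{aligned} \tag{1}
$$

In Equation (1), $P(z_t^i = d \mid m_t^i = d)$ represents the probability of accurately observing a key point as dynamic in a dynamic environment, while $P(z_t^i = s \mid m_t^i = d)$ represents the probability of a key point being misclassified as static in a dynamic environment. $P(z_t^i = s \mid m_t^i = s)$ denotes the probability that a key point is observed to be static in a static environment, while $P(z_t^i = d \mid m_t^i = s)$ refers to the probability that a key point is misclassified as dynamic in a static environment. To reduce the impact of such erroneous observations, we set the correct dynamic and static probabilities to 0.9, and the incorrect static and dynamic probabilities to 0.1.

We consider the motion probability $P(m_{t-1}^i)$ of key point $p_{t-1}^i$ in the previous frame as the prior probability. The observation probability is then obtained through the dynamic object detection thread and the observation model, and the posterior probability (the motion probability of the key point) of each key point is updated according to Bayes’ theorem. Assume that $m_t^i$ denotes the key point state of the current frame and $z_t^i$ denotes the observation of the current frame. The motion probability of the current-frame key point $p_t^i$ is then the posterior $P(m_t^i \mid z_t^i)$, with the prior dynamic probability $P(m_{t-1}^i = d)$ and static probability $P(m_{t-1}^i = s)$ serving as the initial probabilities for the current-frame key point:

$$
P(m_t^i = d \mid z_t^i) = \frac{P(z_t^i \mid m_t^i = d)\, P(m_{t-1}^i = d)}{P(z_t^i)} \tag{2}
$$

$$
P(m_t^i = s \mid z_t^i) = \frac{P(z_t^i \mid m_t^i = s)\, P(m_{t-1}^i = s)}{P(z_t^i)} \tag{3}
$$

In Equations (2) and (3), $P(z_t^i)$ is calculated according to the total probability formula:

$$
P(z_t^i) = P(z_t^i \mid m_t^i = d)\, P(m_{t-1}^i = d) + P(z_t^i \mid m_t^i = s)\, P(m_{t-1}^i = s)
$$

If the key point is located in the dynamic region ($z_t^i = d$), its motion probability is:

$$
P(m_t^i = d \mid z_t^i = d) = \frac{0.9\, P(m_{t-1}^i = d)}{0.9\, P(m_{t-1}^i = d) + 0.1\, P(m_{t-1}^i = s)}
$$

If the key point is located in the static region ($z_t^i = s$), its motion probability is:

$$
P(m_t^i = d \mid z_t^i = s) = \frac{0.1\, P(m_{t-1}^i = d)}{0.1\, P(m_{t-1}^i = d) + 0.9\, P(m_{t-1}^i = s)}
$$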

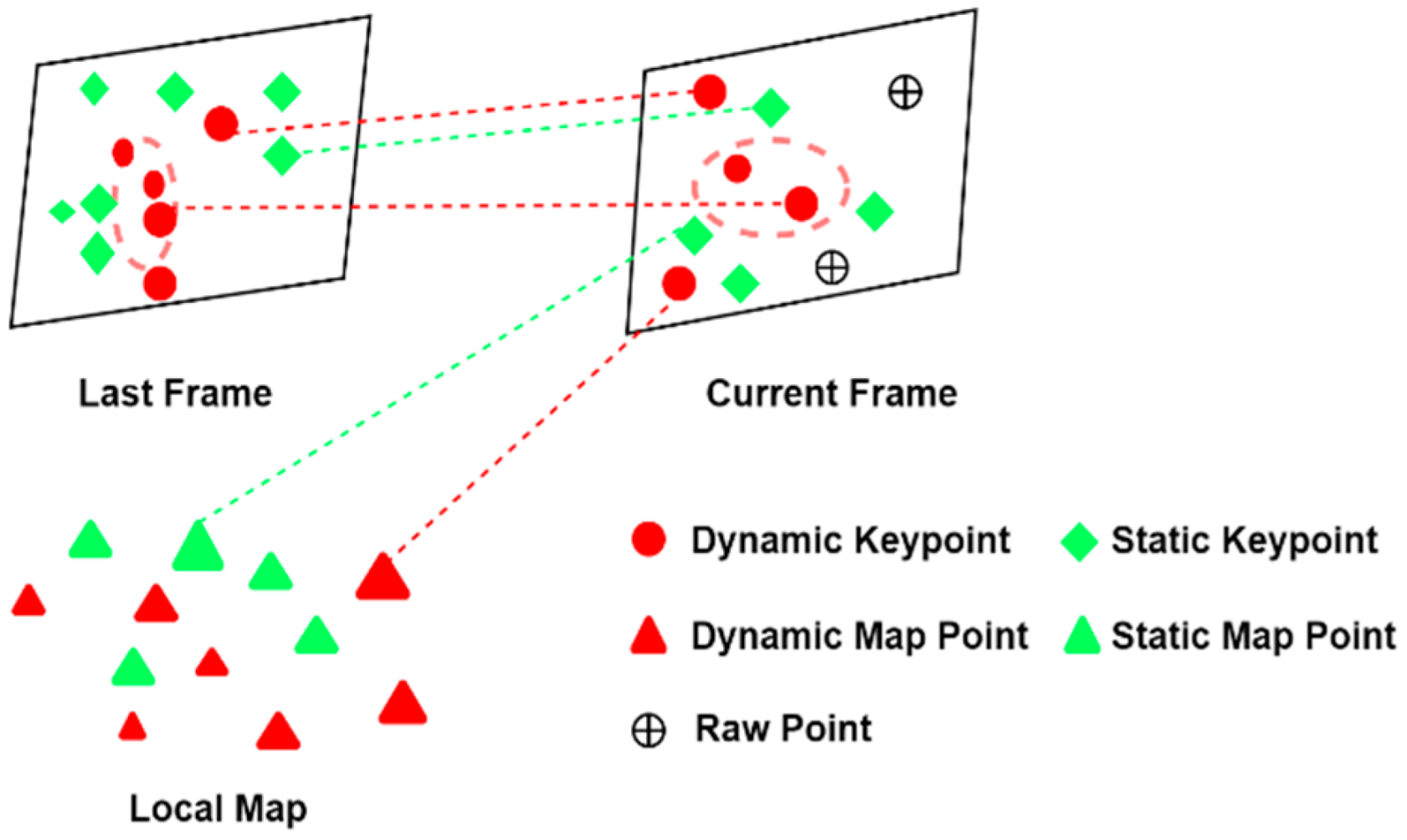

If the computed motion probability of the current key point is greater than a set threshold, the key point is eliminated so that ORB-SLAM3 does not retain it in the later stages of pose tracking and mapping. If it is below the threshold, propagation of the dynamic probability continues, using the posterior probability at the current moment as the prior probability for the next moment. The propagation is illustrated in Figure 6, where the red dashed circles indicate dynamic areas and larger key points have a higher dynamic probability.
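The propagation can be sketched in a few lines. The example below uses the observation model values from the text (0.9 for a correct observation, 0.1 for an incorrect one) and the initial probability of 0.5; the function names and the culling threshold of 0.95 are assumptions for illustration, since the text does not state the threshold value.

```python
from typing import Iterable, List, Optional, Tuple

P_CORRECT = 0.9  # probability of observing the true state (from the text)
P_WRONG = 0.1    # probability of observing the wrong state (from the text)


def update_dynamic_probability(prior_dynamic: float, observed_dynamic: bool) -> float:
    """One Bayes update of a key point's dynamic probability.

    observed_dynamic is True when the key point falls in the dynamic region.
    """
    prior_static = 1.0 - prior_dynamic
    if observed_dynamic:
        like_dynamic, like_static = P_CORRECT, P_WRONG
    else:
        like_dynamic, like_static = P_WRONG, P_CORRECT
    # Total probability of the observation.
    evidence = like_dynamic * prior_dynamic + like_static * prior_static
    return like_dynamic * prior_dynamic / evidence


def propagate(observations: Iterable[bool],
              threshold: float = 0.95,  # hypothetical value, not from the paper
              initial: float = 0.5) -> Tuple[List[float], Optional[int]]:
    """Propagate the probability over frames; stop when the key point is culled.

    Returns the probability history and the frame index of culling (or None).
    """
    history: List[float] = []
    prob = initial
    for frame, observed_dynamic in enumerate(observations):
        prob = update_dynamic_probability(prob, observed_dynamic)
        history.append(prob)
        if prob > threshold:
            return history, frame
    return history, None


if __name__ == "__main__":
    # Two consecutive dynamic observations: 0.5 -> 0.9 -> 0.81 / 0.82 (about 0.988).
    print(propagate([True, True, True]))
    # A single contradicting observation pulls 0.9 back to 0.5.
    print(update_dynamic_probability(0.9, False))
```

Because a single observation moves the probability only part of the way, one false positive from the detector does not immediately remove a static key point, which is the behaviour the model is designed to provide.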

3.4. Dense Point Cloud Reconstruction

The maps constructed by ORB-SLAM3 mainly rely on spatial geometric features, which are obtained by extracting and tracking ORB feature points in RGB images. However, this feature point-dependent approach faces specific challenges in dynamic environments, where moving objects may create ghosting or overlapping effects on the map, limiting the performance of ORB-SLAM3 in dynamic scenes. In addition, because ORB-SLAM3 mainly constructs feature point-based maps, the generated maps are relatively sparse and may not be sufficient for complex robot navigation tasks that require high-density map information.

To address the above issues, we added a dense point cloud reconstruction thread to ORB-SLAM3, aiming to construct a denser, static point cloud map, as shown in Figure 7. This thread combines keyframes with the semantic information obtained by the added dynamic target detection thread to exclude dynamic objects and generate a dense static point cloud map. This approach significantly improves the performance and accuracy of SLAM algorithms when constructing maps in dynamic environments.

The dense point cloud building thread we have constructed builds stable and detailed static dense point cloud maps, even in dynamic environments. First, we use the depth and RGB image data of each keyframe to extract two-dimensional pixel coordinates and their depth values. Next, dynamic points are rejected based on the dynamic probability of the 2D pixel points.

Then, a coordinate transformation is performed according to the pinhole camera model (see Equations (9) and (10) for mathematical expressions) to generate the corresponding point cloud data. Because the camera inevitably has overlapping viewpoints during operation, neighbouring keyframes contain a large amount of redundant information. We remove this redundant information through voxel grid filtering to obtain a new local point cloud map. Finally, we stitch the local point clouds generated from each keyframe into a complete global dense point cloud map (see Equation (11) for the mathematical expression).

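$$
s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = K \left( R \begin{bmatrix} x \\ y \\ z \end{bmatrix} + t \right) \tag{9}
$$

$$
K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix} \tag{10}
$$

where $(u, v)$ are the 2D pixel coordinates; $s$ denotes the proportionality factor between the depth value and the actual spatial distance; $K$ denotes the camera intrinsic matrix; $R$ denotes the camera rotation matrix; $[x, y, z]^T$ denotes the 3D spatial coordinates corresponding to the 2D pixel point; $t$ denotes the translation vector; $f_x$ and $f_y$ denote the camera focal lengths in the horizontal and vertical directions, respectively; and $c_x$ and $c_y$ denote the offsets of the pixel coordinates from the origin of the imaging plane, respectively.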
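As an illustration of the pinhole back-projection step, the sketch below converts a depth image into camera-frame 3D points. It takes the camera frame as the reference (identity rotation, zero translation), so that a pixel $(u, v)$ with raw depth $d$ gives $z = d / s$, $x = (u - c_x) z / f_x$, and $y = (v - c_y) z / f_y$. The function name, the optional dynamic mask, and the plain-list data layout are assumptions chosen to keep the example dependency-free.

```python
from typing import List, Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]


def backproject_depth(depth: Sequence[Sequence[float]],
                      fx: float, fy: float, cx: float, cy: float,
                      depth_scale: float,
                      dynamic_mask: Optional[Sequence[Sequence[bool]]] = None
                      ) -> List[Point3D]:
    """Back-project valid depth pixels to 3D points in the camera frame.

    depth[v][u] is the raw depth value at row v, column u; values <= 0 are
    treated as missing. Pixels flagged in dynamic_mask are skipped, which
    stands in for the rejection of dynamic points described in the text.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d <= 0:
                continue  # no depth measurement at this pixel
            if dynamic_mask is not None and dynamic_mask[v][u]:
                continue  # pixel judged dynamic, excluded from the map
            z = d / depth_scale
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Tiny 2x2 depth image with one valid pixel; intrinsics are made-up values.
    depth_image = [[0, 0], [0, 2000]]
    print(backproject_depth(depth_image, fx=2.0, fy=2.0, cx=0.0, cy=0.0,
                            depth_scale=1000.0))
```

A production system would vectorize this loop, but the per-pixel form makes the correspondence with the intrinsic parameters explicit.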

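$$
M = \bigcup_{k=1}^{n} \left( R_k C_k + t_k \right) \tag{11}
$$

where $M$ denotes the constructed global dense point cloud map, $C_k$ denotes the local point cloud for the $k$-th keyframe, $n$ is the number of keyframes, and the rotation matrix $R_k$ and translation vector $t_k$ denote the camera pose of that keyframe.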
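The filtering and stitching steps can be sketched as follows: each local cloud is downsampled with a voxel grid (one centroid per occupied voxel), transformed by its keyframe pose, and appended to the global map. This is a simplified stand-in under stated assumptions, not the paper's implementation: the pose `(R, t)` is assumed to map camera coordinates to world coordinates, the voxel size is a free parameter, and all function names are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Point3D = Tuple[float, float, float]
Matrix3 = Sequence[Sequence[float]]  # 3x3 rotation, row-major


def voxel_grid_filter(points: Sequence[Point3D], voxel_size: float) -> List[Point3D]:
    """Replace all points that share a voxel with their centroid."""
    buckets: Dict[Tuple[int, int, int], List[Point3D]] = defaultdict(list)
    for p in points:
        key = (math.floor(p[0] / voxel_size),
               math.floor(p[1] / voxel_size),
               math.floor(p[2] / voxel_size))
        buckets[key].append(p)
    return [
        (sum(q[0] for q in pts) / len(pts),
         sum(q[1] for q in pts) / len(pts),
         sum(q[2] for q in pts) / len(pts))
        for pts in buckets.values()
    ]


def transform(points: Sequence[Point3D], R: Matrix3, t: Sequence[float]) -> List[Point3D]:
    """Apply p_world = R * p_camera + t to every point (assumed pose convention)."""
    return [
        (R[0][0] * x + R[0][1] * y + R[0][2] * z + t[0],
         R[1][0] * x + R[1][1] * y + R[1][2] * z + t[1],
         R[2][0] * x + R[2][1] * y + R[2][2] * z + t[2])
        for x, y, z in points
    ]


def merge_keyframes(keyframes: Sequence[Tuple[Sequence[Point3D], Matrix3, Sequence[float]]],
                    voxel_size: float) -> List[Point3D]:
    """Filter each local cloud, move it to the global frame, and stitch the results."""
    global_map: List[Point3D] = []
    for local_points, R, t in keyframes:
        local = voxel_grid_filter(local_points, voxel_size)
        global_map.extend(transform(local, R, t))
    return global_map


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    cloud = [(0.01, 0.01, 0.01), (0.03, 0.03, 0.03), (1.0, 1.0, 1.0)]
    print(merge_keyframes([(cloud, identity, (1.0, 0.0, 0.0))], voxel_size=0.05))
```

Overlap between neighbouring keyframes can still leave duplicate points after stitching, so a further voxel pass over the merged map is a common refinement.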

5. Conclusions

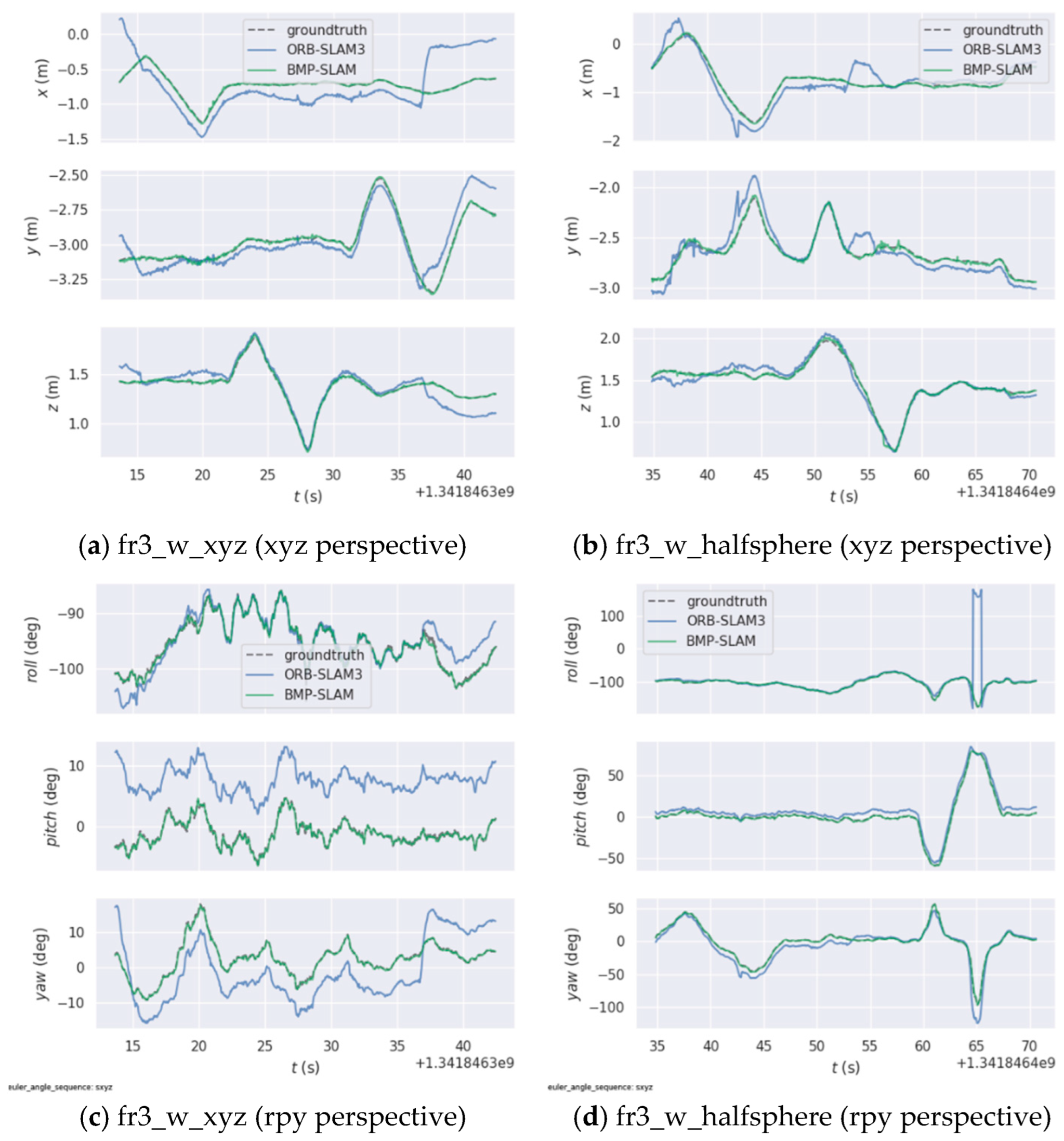

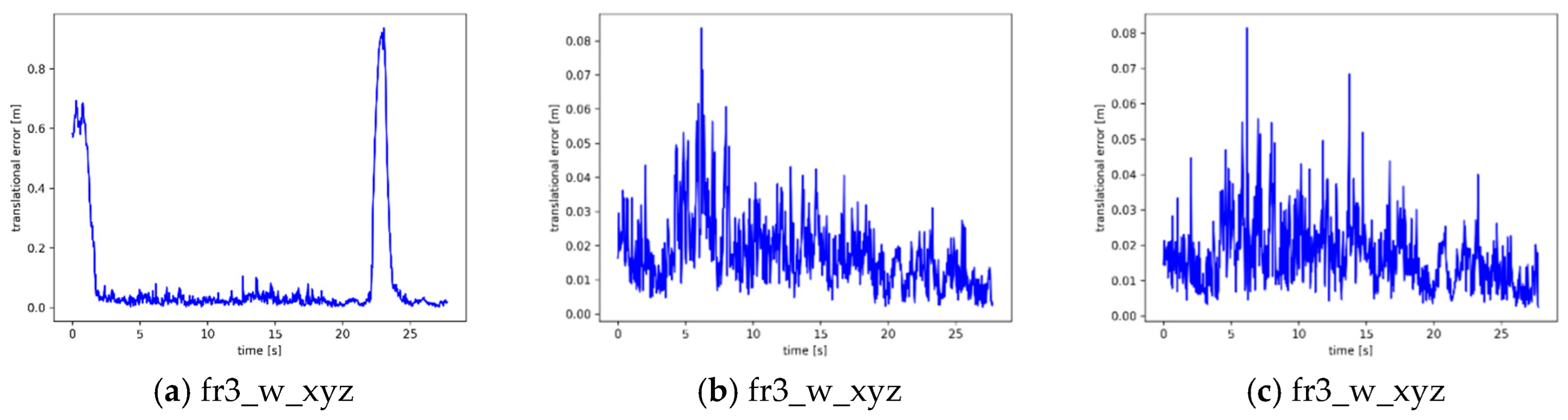

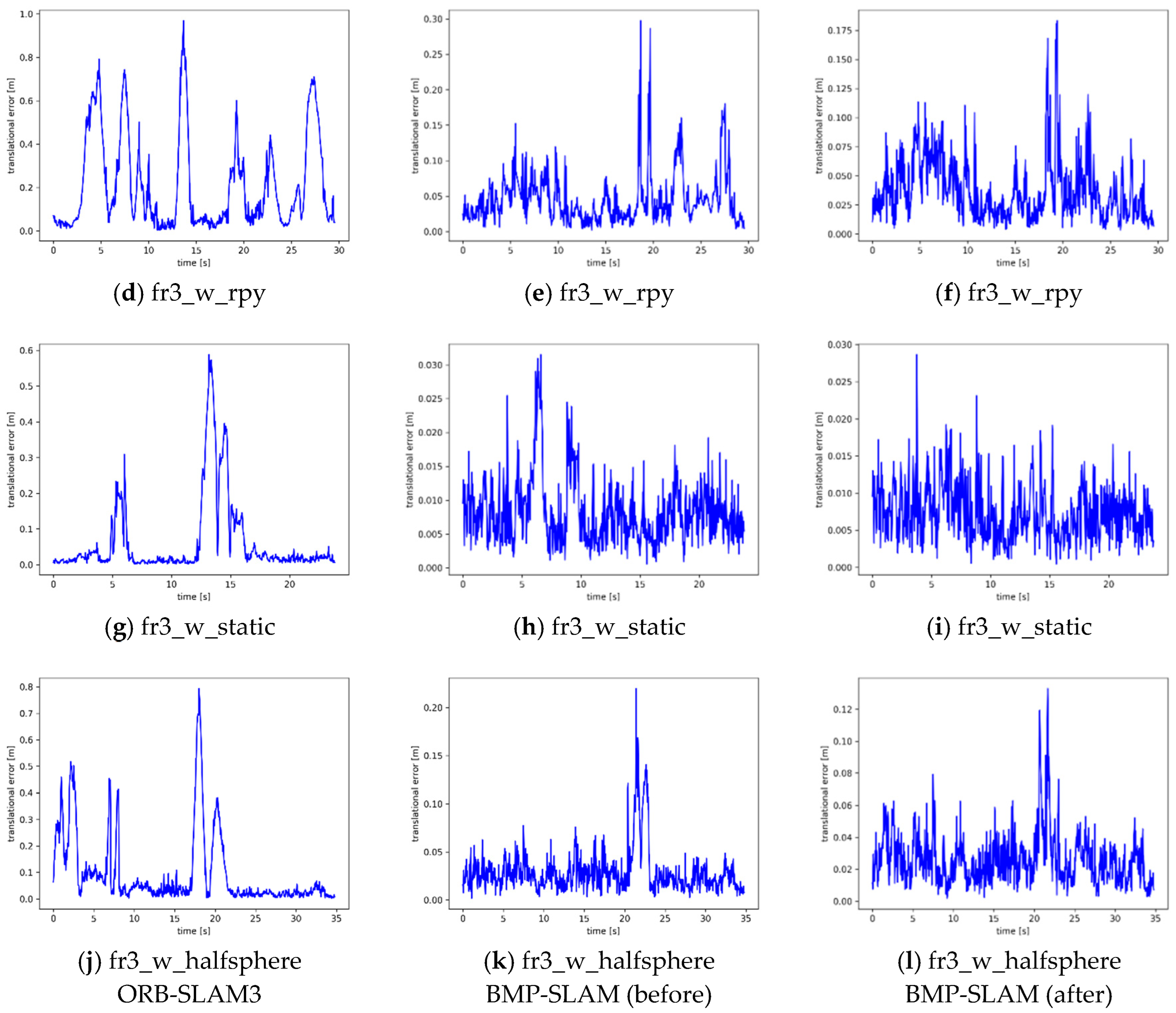

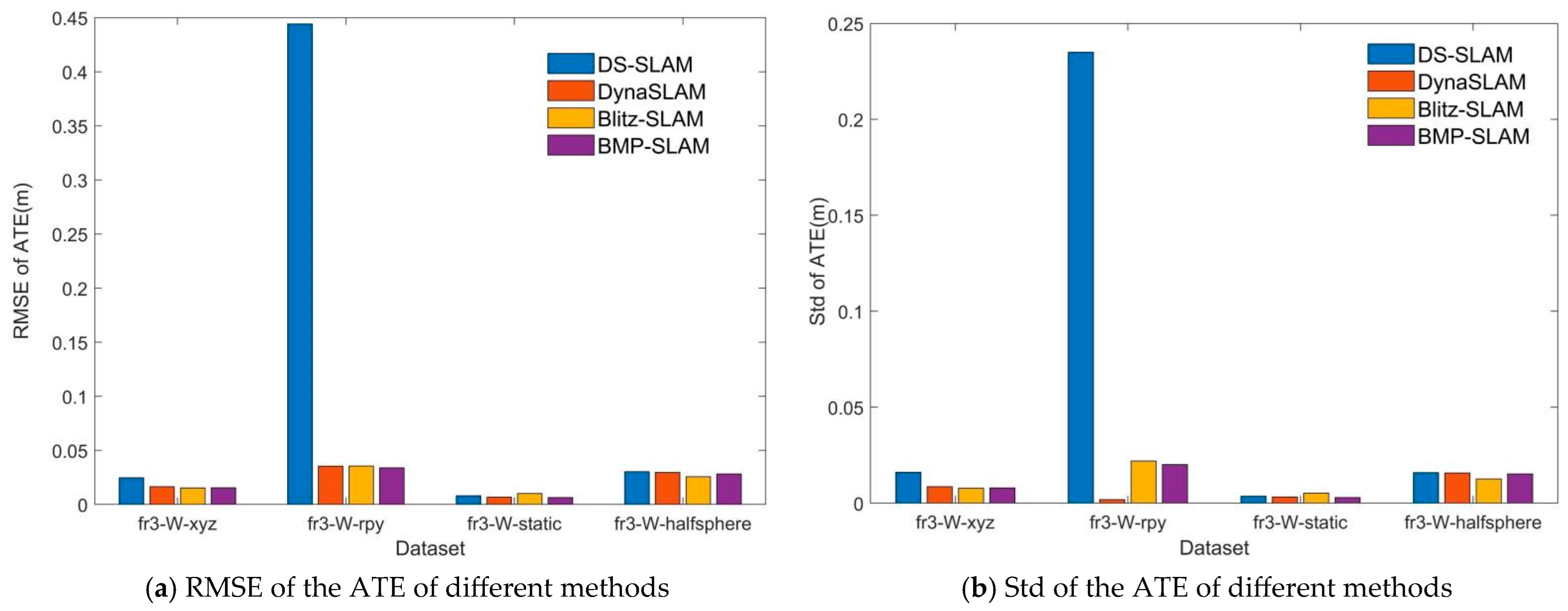

In this paper, we propose a target detection-based VSLAM algorithm (BMP-SLAM) for indoor dynamic scenes, aiming to reduce the impact of dynamic objects on the SLAM system. BMP-SLAM builds upon ORB-SLAM3 by incorporating an improved YOLOv5 object detection algorithm, which runs in a dedicated dynamic object detection thread to capture environmental semantic information and identify dynamic regions. In addition, the Bayesian Moving Probability Model is integrated into the tracking thread for data association, which achieves effective culling of dynamic feature points and significantly improves the accuracy and robustness of the system in dynamic environments. BMP-SLAM also combines keyframe information with the semantic information provided by the dynamic target detection thread to reject dynamic objects, enabling dense point cloud map construction for indoor 3D scenes. To evaluate the performance of BMP-SLAM, four highly dynamic scene sequences from the TUM RGB-D dataset were selected for extensive experiments. The experimental findings demonstrate that BMP-SLAM significantly outperforms ORB-SLAM3 in dynamic environments. Moreover, the ATE and RPE of BMP-SLAM are significantly lower than those of DS-SLAM and slightly lower than those of DynaSLAM and Blitz-SLAM, yielding more stable and precise camera trajectory tracking. Even compared with other excellent dynamic SLAM methods, it achieves a smaller trajectory error and more accurate localization, which further validates the robustness of BMP-SLAM. In addition, the dense point cloud map constructed by BMP-SLAM can effectively meet the requirements of complex indoor robot navigation tasks.

Although BMP-SLAM performs well in dynamic indoor environments, it has some limitations that we will try to address in future work. Because the algorithm relies on YOLOv5 for dynamic object detection, it depends strongly on the training dataset. As a result, the removal of dynamic features may be less effective when the environment contains dynamic objects that are not defined in the training set. Therefore, the ability to handle unknown dynamic objects needs to be enhanced by incorporating more advanced target detection techniques or adopting unsupervised learning methods, improving the adaptability and robustness of the algorithm in diverse environments.