Lightweight Image Super-Resolution Reconstruction Network Based on Multi-Order Information Optimization

Abstract

1. Introduction

- We propose a self-calibrating high-frequency information enhancement block (SCHIEB). Its adaptive high-frequency enhancement mechanism lets the network dynamically adjust its feature representation across different image regions, which addresses the insufficient high-frequency expression of traditional distillation networks.

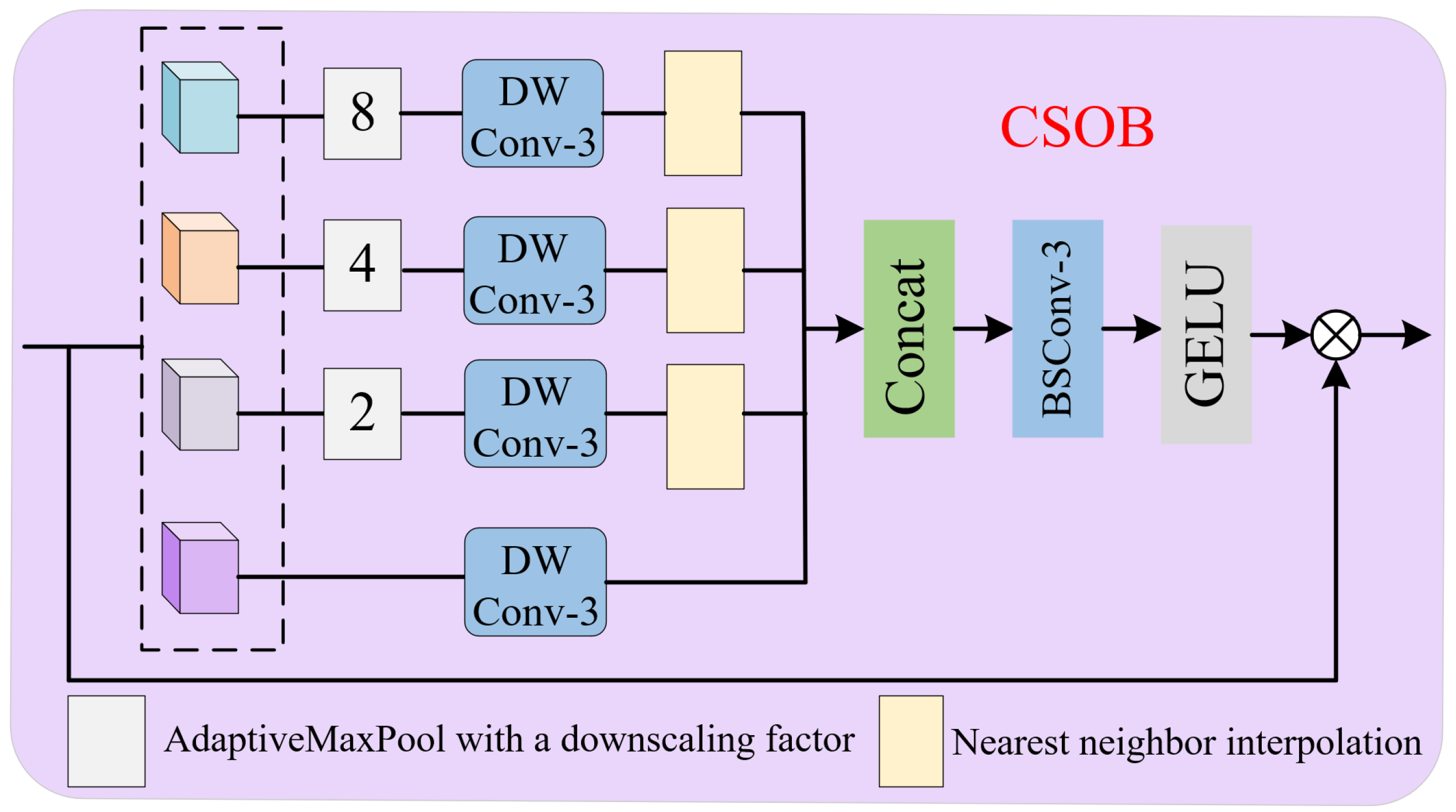

- We design a multi-scale high-frequency information refinement block (MSHIRB). It combines lightweight multiplicity sampling with multi-branch feature extraction to capture the remaining multi-scale information and high-frequency details, which addresses the limited feature diversity of traditional distillation networks.

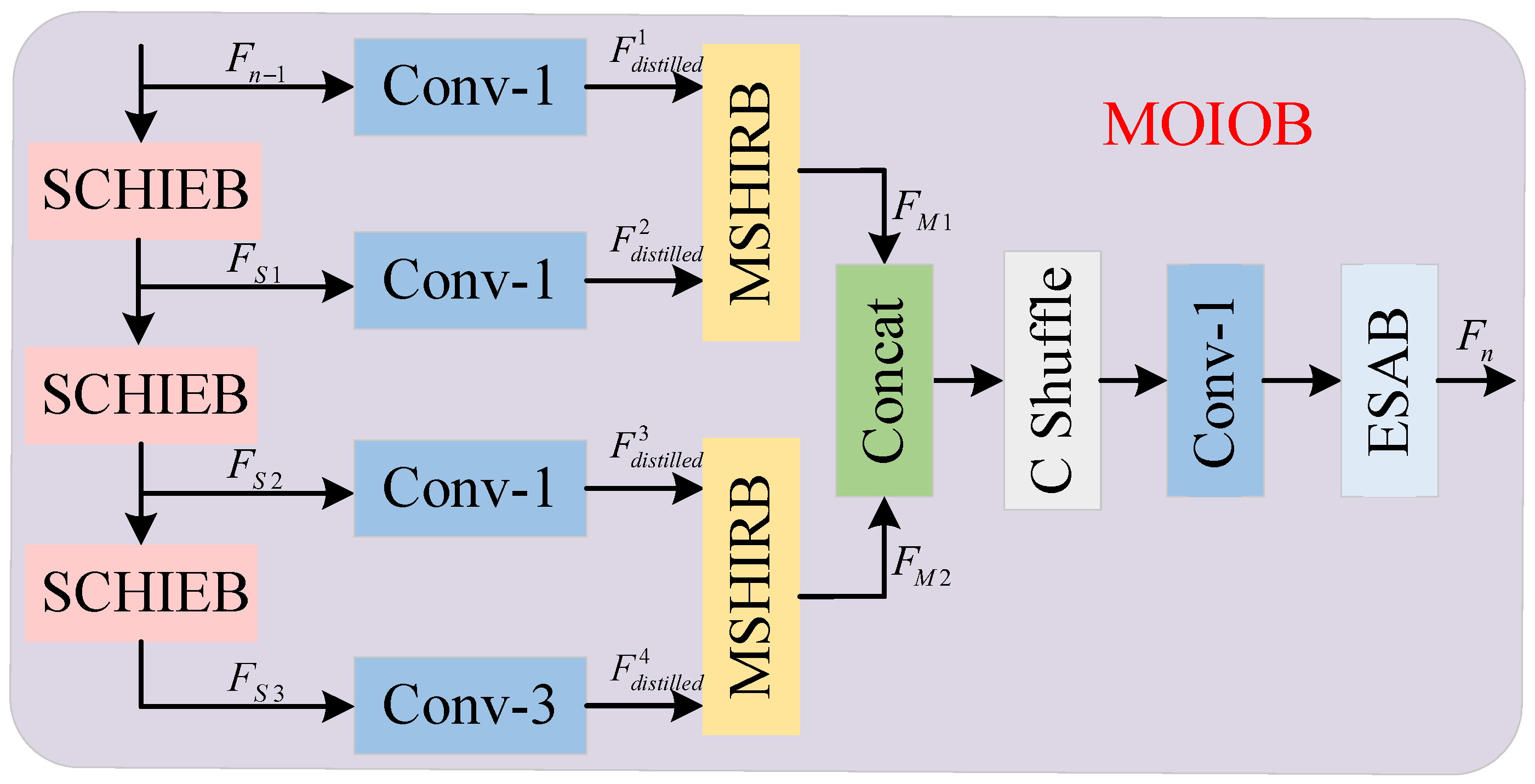

- We propose a multi-order information optimization block (MOIOB). Compared with traditional distillation blocks, it establishes a complete information optimization path that extracts high-frequency features more effectively and removes redundant information, thereby improving detail recovery.

2. Related Work

2.1. Lightweight SR Network

2.2. Lightweight SR Network Based on Information Distillation

3. Multi-Order Information Optimization Network

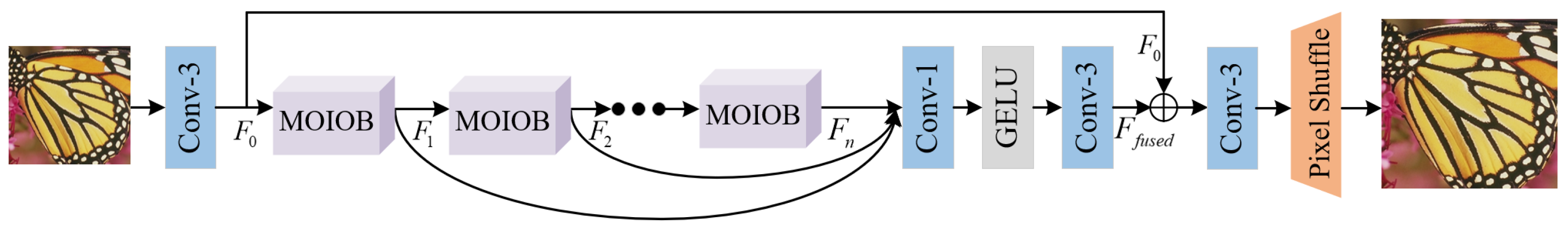

3.1. Network Architecture

3.2. Multi-Order Information Optimization Block

3.3. Self-Calibrating High-Frequency Information Enhancement Block

3.4. Multi-Scale High-Frequency Information Refinement Block

4. Experimental Results and Analysis

4.1. Experimental Setup

4.2. Datasets and Evaluation Indicators

4.3. Network Performance Comparison

4.3.1. Comparison of Objective Quantitative Indicators

4.3.2. Comparison of Subjective Visual Effects

4.3.3. Comparison with Transformer-Based Networks

4.4. Ablation Studies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Liu, Y.; Zhang, M.; Jiang, B.; Hou, B.; Liu, D.; Chen, J.; Lian, H. Flexible alignment super-resolution network for multi-contrast magnetic resonance imaging. IEEE Trans. Multimed. 2023, 26, 5159–5169.

2. Ren, S.; Guo, K.; Zhou, X.; Hu, B.; Zhu, F.; Luo, E. Medical image super-resolution based on semantic perception transfer learning. IEEE/ACM Trans. Comput. Biol. Bioinform. 2022, 20, 2598–2609.

3. Cheng, D.; Chen, J.; Kou, Q.; Nie, S.; Zhang, J. Super-resolution reconstruction of lightweight mine images by fusing hierarchical features and attention mechanisms. J. Instrum. 2022, 43, 73–84.

4. Kou, Q.; Cheng, Z.; Cheng, D.; Chen, J.; Zhang, J. Lightweight super resolution method based on blueprint separable convolution for mine image. J. China Coal Soc. 2024, 49, 4038–4050.

5. Jiang, H.; Asad, M.; Liu, J.; Zhang, H.; Cheng, D. Single image detail enhancement via metropolis theorem. Multimed. Tools Appl. 2024, 83, 36329–36353.

6. Cheng, D.; Yuan, H.; Qian, J.; Kou, Q.; Jiang, H. Image Super-Resolution Algorithms Based on Deep Feature Differentiation Network. J. Electron. Inf. 2024, 46, 1033–1042.

7. Chao, J.; Zhou, Z.; Gao, H.; Gong, J.; Zeng, Z.; Yang, Z. A novel learnable interpolation approach for scale-arbitrary image super-resolution. In Proceedings of the Thirty-Second International Joint Conference on Artificial Intelligence, Macao, China, 19–25 August 2023; pp. 564–572.

8. Li, X.; Zhang, Y.; Ge, Z.; Cao, G.; Shi, H.; Fu, P. Adaptive nonnegative sparse representation for hyperspectral image super-resolution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4267–4283.

9. Chen, W.; Huang, G.; Mo, F.; Lin, J. Image super-resolution reconstruction algorithm with adaptive aggregation of hierarchical information. J. Comput. Eng. Appl. 2024, 60, 221–231.

10. Zhang, J.; Jia, Y.; Zhu, H.; Li, H.; Du, J. 3D-MRI Super-Resolution Algorithm Fusing Attention and Dilated Encoder-Decoder. J. Comput. Eng. Appl. 2024, 60, 228–236.

11. Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part IV 13. Springer: Berlin/Heidelberg, Germany, 2014; pp. 184–199.

12. Dong, C.; Loy, C.C.; Tang, X. Accelerating the super-resolution convolutional neural network. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 391–407.

13. Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883.

14. Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.

15. Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144.

16. Liu, J.; Tang, J.; Wu, G. Residual feature distillation network for lightweight image super-resolution. In Proceedings of the Computer Vision–ECCV 2020 Workshops, Glasgow, UK, 23–28 August 2020; Proceedings, Part III 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 41–55.

17. Tai, Y.; Yang, J.; Liu, X. Image super-resolution via deep recursive residual network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3147–3155.

18. Yu, L.; Li, X.; Li, Y.; Jiang, T.; Wu, Q.; Fan, H.; Liu, S. DIPNet: Efficiency distillation and iterative pruning for image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 1692–1701.

19. Sun, L.; Dong, J.; Tang, J.; Pan, J. Spatially-adaptive feature modulation for efficient image super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 13190–13199.

20. Lu, Z.; Li, J.; Liu, H.; Huang, C.; Zhang, L.; Zeng, T. Transformer for single image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 457–466.

21. Li, W.; Li, J.; Gao, G.; Deng, W.; Zhou, J.; Yang, J.; Qi, G.J. Cross-receptive focused inference network for lightweight image super-resolution. IEEE Trans. Multimed. 2023, 26, 864–877.

22. Hui, Z.; Wang, X.; Gao, X. Fast and accurate single image super-resolution via information distillation network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 723–731.

23. Hui, Z.; Gao, X.; Yang, Y.; Wang, X. Lightweight image super-resolution with information multi-distillation network. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 2024–2032.

24. Kong, F.; Li, M.; Liu, S.; Liu, D.; He, J.; Bai, Y.; Chen, F.; Fu, L. Residual local feature network for efficient super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 766–776.

25. Li, Z.; Liu, Y.; Chen, X.; Cai, H.; Gu, J.; Qiao, Y.; Dong, C. Blueprint separable residual network for efficient image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 833–843.

26. Liu, J.; Zhang, W.; Tang, Y.; Tang, J.; Wu, G. Residual feature aggregation network for image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 2359–2368.

27. Finder, S.; Amoyal, R.; Treister, E.; Freifeld, O. Wavelet Convolutions for Large Receptive Fields. arXiv 2024, arXiv:2407.05848.

28. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

29. Timofte, R.; Agustsson, E.; Van Gool, L.; Yang, M.H.; Zhang, L. NTIRE 2017 challenge on single image super-resolution: Methods and results. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 114–125.

30. Bevilacqua, M.; Roumy, A.; Guillemot, C.; Alberi-Morel, M.L. Low-Complexity Single-Image Super-Resolution Based On Nonnegative Neighbor Embedding; BMVA Press: Durham, UK, 2012.

31. Zeyde, R.; Elad, M.; Protter, M. On single image scale-up using sparse-representations. In Proceedings of the Curves and Surfaces: 7th International Conference, Avignon, France, 24–30 June 2010; Revised Selected Papers 7. Springer: Berlin/Heidelberg, Germany, 2012; pp. 711–730.

32. Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings of the Eighth IEEE International Conference on Computer Vision. ICCV 2001, Vancouver, BC, Canada, 7–14 July 2001; Volume 2, pp. 416–423.

33. Huang, J.B.; Singh, A.; Ahuja, N. Single image super-resolution from transformed self-exemplars. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5197–5206.

34. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

35. Huang, H.; Shen, L.; He, C.; Dong, W.; Liu, W. Differentiable neural architecture search for extremely lightweight image super-resolution. IEEE Trans. Circuits Syst. Video Technol. 2022, 33, 2672–2682.

36. Park, K.; Soh, J.W.; Cho, N.I. A dynamic residual self-attention network for lightweight single image super-resolution. IEEE Trans. Multimed. 2021, 25, 907–918.

37. Wang, K.; Yang, X.; Jeon, G. Hybrid attention feature refinement network for lightweight image super-resolution in metaverse immersive display. IEEE Trans. Consum. Electron. 2023, 70, 3232–3244.

38. Wang, Y.; Zhang, T. OSFFNet: Omni-stage feature fusion network for lightweight image super-resolution. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 26–27 February 2024; Volume 38, pp. 5660–5668.

39. Liu, Y.; Jia, Q.; Zhang, J.; Fan, X.; Wang, S.; Ma, S.; Gao, W. Hierarchical similarity learning for aliasing suppression image super-resolution. IEEE Trans. Neural Networks Learn. Syst. 2022, 35, 2759–2771.

40. Yasir, M.; Ullah, I.; Choi, C. Depthwise channel attention network (DWCAN): An efficient and lightweight model for single image super-resolution and metaverse gaming. Expert Syst. 2024, 41, e13516.

41. Song, W.; Yan, X.; Guo, W.; Xu, Y.; Ning, K. MSWSR: A Lightweight Multi-Scale Feature Selection Network for Single-Image Super-Resolution Methods. Symmetry 2025, 17, 431.

42. Li, F.; Cong, R.; Wu, J.; Bai, H.; Wang, M.; Zhao, Y. SRConvNet: A transformer-style convnet for lightweight image super-resolution. Int. J. Comput. Vis. 2025, 133, 173–189.

43. Luo, X.; Qu, Y.; Xie, Y.; Zhang, Y.; Li, C.; Fu, Y. Lattice network for lightweight image restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 4826–4842.

44. Choi, H.; Lee, J.; Yang, J. N-gram in swin transformers for efficient lightweight image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 2071–2081.

45. Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image restoration using swin transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 1833–1844.

46. Gao, G.; Wang, Z.; Li, J.; Li, W.; Yu, Y.; Zeng, T. Lightweight bimodal network for single-image super-resolution via symmetric CNN and recursive transformer. arXiv 2022, arXiv:2204.13286.

47. Li, J.; Ke, Y. Hybrid convolution-transformer for lightweight single image super-resolution. In Proceedings of the ICASSP 2024, 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 2395–2399.

| Scale | Method | Params | FLOPs | Set5 (PSNR/SSIM) | Set14 (PSNR/SSIM) | B100 (PSNR/SSIM) | Urban100 (PSNR/SSIM) |
|---|---|---|---|---|---|---|---|
| ×2 | EDSR-baseline [15] | 1370 K | 316.3 G | 37.99/0.9604 | 33.57/0.9175 | 32.16/0.8994 | 31.98/0.9272 |
| ×2 | IMDN [23] | 694 K | 186.7 G | 38.00/0.9605 | 33.63/0.9177 | 32.19/0.8996 | 32.17/0.9283 |
| ×2 | RFDN [16] | 534 K | 95.0 G | 38.05/0.9606 | 33.68/0.9184 | 32.16/0.8994 | 32.12/0.9278 |
| ×2 | BSRN [25] | 332 K | 73.0 G | 38.10/0.9610 | 33.74/0.9193 | 32.24/0.9006 | 32.34/0.9303 |
| ×2 | SAFMN [19] | 228 K | 52.0 G | 38.00/0.9605 | 33.54/0.9177 | 32.16/0.8995 | 31.84/0.9256 |
| ×2 | DLSR [35] | 322 K | 68.0 G | 38.04/0.9606 | 33.67/0.9183 | 32.21/0.9002 | 32.26/0.9297 |
| ×2 | DRSAN [36] | 1190 K | 274.6 G | 38.14/0.9611 | 33.75/0.9188 | 32.25/0.9010 | 32.46/0.9317 |
| ×2 | HAFRN [37] | 496 K | - | 38.05/0.9606 | 33.66/0.9187 | 32.21/0.8999 | 32.20/0.9289 |
| ×2 | OSFFNet [38] | 516 K | 83.2 G | 38.11/0.9610 | 33.72/0.9190 | 32.29/0.9012 | 32.67/0.9331 |
| ×2 | HSRNet [39] | 1260 K | - | 38.07/0.9607 | 33.78/0.9197 | 32.26/0.9006 | 32.53/0.9320 |
| ×2 | DWCAN [40] | 401 K | - | 37.60/0.9598 | 33.33/0.9160 | 32.07/0.8987 | 31.95/0.9267 |
| ×2 | MSWSR [41] | 312 K | 243.3 G | 38.01/0.9610 | 33.71/0.9193 | 32.22/0.9003 | 32.29/0.9301 |
| ×2 | SRConvNet-L [42] | 885 K | 160 G | 38.14/0.9610 | 33.81/0.9199 | 32.28/0.9010 | 32.59/0.9321 |
| ×2 | MOION | 816 K | 163.74 G | 38.16/0.9611 | 33.92/0.9204 | 32.32/0.9014 | 32.69/0.9339 |
| ×3 | EDSR-baseline [15] | 1555 K | 160.2 G | 34.37/0.9270 | 30.28/0.8417 | 29.09/0.8052 | 28.15/0.8527 |
| ×3 | IMDN [23] | 703 K | 84.0 G | 34.36/0.9270 | 30.32/0.8417 | 29.09/0.8046 | 28.17/0.8519 |
| ×3 | RFDN [16] | 541 K | 42.2 G | 34.41/0.9273 | 30.34/0.8420 | 29.09/0.8050 | 28.21/0.8525 |
| ×3 | BSRN [25] | 340 K | 33.3 G | 34.46/0.9277 | 30.47/0.8449 | 29.18/0.8068 | 28.39/0.8567 |
| ×3 | SAFMN [19] | 233 K | 23.0 G | 34.34/0.9267 | 30.33/0.8418 | 29.08/0.8048 | 27.95/0.8474 |
| ×3 | DLSR [35] | 329 K | - | 34.49/0.9279 | 30.39/0.8428 | 29.13/0.8061 | 28.26/0.8548 |
| ×3 | DRSAN [36] | 1290 K | 133.4 G | 34.59/0.9286 | 30.42/0.8443 | 29.18/0.8079 | 28.52/0.8593 |
| ×3 | HAFRN [37] | 505 K | - | 34.45/0.9276 | 30.40/0.8433 | 29.12/0.8058 | 28.16/0.8528 |
| ×3 | OSFFNet [38] | 524 K | 37.8 G | 34.58/0.9287 | 30.48/0.8450 | 29.21/0.8080 | 28.49/0.8595 |
| ×3 | HSRNet [39] | - | - | 34.47/0.9278 | 30.40/0.8435 | 29.15/0.8066 | 28.42/0.8579 |
| ×3 | DWCAN [40] | 401 K | - | 34.29/0.9258 | 30.29/0.8410 | 29.00/0.8027 | 28.18/0.8521 |
| ×3 | MSWSR [41] | 307 K | 249.6 G | 34.40/0.9277 | 30.35/0.8437 | 29.12/0.8067 | 28.22/0.8548 |
| ×3 | SRConvNet-L [42] | 906 K | 74 G | 34.59/0.9288 | 30.50/0.8455 | 29.22/0.8081 | 28.56/0.8600 |
| ×3 | MOION | 825 K | 73.72 G | 34.69/0.9294 | 30.57/0.8467 | 29.24/0.8091 | 28.68/0.8629 |
| ×4 | EDSR-baseline [15] | 1518 K | 114.0 G | 32.09/0.8938 | 28.58/0.7813 | 27.57/0.7357 | 26.04/0.7849 |
| ×4 | IMDN [23] | 715 K | 48.0 G | 32.21/0.8948 | 28.58/0.7811 | 27.56/0.7353 | 26.04/0.7838 |
| ×4 | RFDN [16] | 550 K | 23.9 G | 32.24/0.8952 | 28.61/0.7819 | 27.57/0.7360 | 26.11/0.7858 |
| ×4 | BSRN [25] | 352 K | 19.4 G | 32.35/0.8966 | 28.73/0.7847 | 27.65/0.7387 | 26.27/0.7908 |
| ×4 | SAFMN [19] | 240 K | 14.0 G | 32.18/0.8948 | 28.60/0.7813 | 27.58/0.7359 | 25.97/0.7809 |
| ×4 | DLSR [35] | 338 K | 20 G | 32.33/0.8963 | 28.68/0.7832 | 27.61/0.7374 | 26.19/0.7892 |
| ×4 | DRSAN [36] | 1270 K | 88.7 G | 32.34/0.8960 | 28.65/0.7841 | 27.63/0.7390 | 26.33/0.7936 |
| ×4 | HAFRN [37] | 517 K | - | 32.24/0.8953 | 28.60/0.7816 | 27.58/0.7365 | 26.02/0.7849 |
| ×4 | OSFFNet [38] | 537 K | 22.0 G | 32.39/0.8976 | 28.75/0.7852 | 27.66/0.7393 | 26.36/0.7950 |
| ×4 | HSRNet [39] | 1285 K | - | 32.28/0.8960 | 28.68/0.7840 | 27.64/0.7388 | 26.28/0.7934 |
| ×4 | DWCAN [40] | 401 K | - | 32.20/0.8938 | 28.56/0.2809 | 27.41/0.7339 | 26.06/0.7851 |
| ×4 | MSWSR [41] | 316 K | 257.6 G | 32.26/0.8966 | 28.67/0.7843 | 27.62/0.7379 | 26.17/0.7896 |
| ×4 | SRConvNet-L [42] | 902 K | 45 G | 32.44/0.8976 | 28.77/0.7857 | 27.69/0.7402 | 26.47/0.7970 |
| ×4 | MOION | 837 K | 42.13 G | 32.51/0.8984 | 28.85/0.7874 | 27.72/0.7418 | 26.55/0.8005 |
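The PSNR values in the table above are assumed to follow the standard definition, PSNR = 10·log10(MAX² / MSE), reported in dB. The sketch below is only an orientation aid in plain Python: the function name `psnr`, the toy 3×3 patches, and the 8-bit peak value of 255 are illustrative assumptions, and the color-space and border-cropping conventions behind the reported numbers are not stated in this section.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Both images are nested lists (rows of pixel intensities).
    """
    ref = [p for row in reference for p in row]
    est = [p for row in estimate for p in row]
    if len(ref) != len(est):
        raise ValueError("images must contain the same number of pixels")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(ref, est)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical 3x3 grayscale patches (not taken from any benchmark).
hr_patch = [[52, 55, 61], [59, 79, 61], [76, 62, 59]]
sr_patch = [[54, 55, 60], [59, 77, 61], [75, 62, 60]]
print(f"PSNR: {psnr(hr_patch, sr_patch):.2f} dB")
```

Because PSNR is logarithmic in the mean squared error, the gaps of a few hundredths of a dB between neighboring rows correspond to small relative changes in MSE.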

| Scale | Method | Params | FLOPs | Set5 (PSNR/SSIM) | Set14 (PSNR/SSIM) | B100 (PSNR/SSIM) | Urban100 (PSNR/SSIM) |
|---|---|---|---|---|---|---|---|
| ×2 | SwinIR-light [45] | 878 K | 195.6 G | 38.14/0.9611 | 33.86/0.9206 | 32.31/0.9012 | 32.76/0.9340 |
| ×2 | LBNet [46] | - | - | - | - | - | - |
| ×2 | ESRT [20] | 677 K | 191.4 G | 38.03/0.9600 | 33.75/0.9184 | 32.25/0.9001 | 32.58/0.9318 |
| ×2 | NGSwin [44] | 998 K | 140.4 G | 38.05/0.9610 | 33.79/0.9199 | 32.27/0.9008 | 32.53/0.9324 |
| ×2 | DRSAN [36] | 1190 K | 274.6 G | 38.14/0.9611 | 33.75/0.9188 | 32.25/0.9010 | 32.46/0.9317 |
| ×2 | CFIN [21] | 675 K | 116.9 G | 38.14/0.9610 | 33.80/0.9199 | 32.26/0.9006 | 32.48/0.9311 |
| ×2 | HCFormer [47] | 911 K | - | 38.06/0.9609 | 34.18/0.9253 | 32.45/0.9051 | 32.67/0.9359 |
| ×2 | MOION | 816 K | 163.74 G | 38.16/0.9611 | 33.92/0.9204 | 32.32/0.9014 | 32.69/0.9339 |
| ×3 | SwinIR-light [45] | 886 K | 87.2 G | 34.62/0.9289 | 30.54/0.8463 | 29.20/0.8082 | 28.66/0.8624 |
| ×3 | LBNet [46] | 736 K | 68.4 G | 34.47/0.9277 | 30.38/0.8417 | 29.13/0.8061 | 28.42/0.8559 |
| ×3 | ESRT [20] | 770 K | 96.4 G | 34.42/0.9268 | 30.43/0.8433 | 29.15/0.8063 | 28.46/0.8574 |
| ×3 | NGSwin [44] | 1007 K | 66.6 G | 34.52/0.9282 | 30.53/0.8456 | 29.19/0.8078 | 28.52/0.8603 |
| ×3 | DRSAN [36] | 1290 K | 133.4 G | 34.59/0.9286 | 30.42/0.8443 | 29.18/0.8079 | 28.52/0.8593 |
| ×3 | CFIN [21] | 681 K | 53.5 G | 34.65/0.9289 | 30.45/0.8443 | 29.18/0.8071 | 28.49/0.8583 |
| ×3 | HCFormer [47] | 923 K | - | 34.51/0.9279 | 30.55/0.8459 | 29.31/0.8104 | 28.56/0.8613 |
| ×3 | MOION | 825 K | 73.72 G | 34.69/0.9294 | 30.57/0.8467 | 29.24/0.8091 | 28.68/0.8629 |
| ×4 | SwinIR-light [45] | 897 K | 49.6 G | 32.44/0.8976 | 28.77/0.7858 | 27.69/0.7406 | 26.47/0.7980 |
| ×4 | LBNet [46] | 742 K | 38.9 G | 32.29/0.8960 | 28.68/0.7832 | 27.62/0.7382 | 26.27/0.7906 |
| ×4 | ESRT [20] | 751 K | 67.7 G | 32.19/0.8947 | 28.69/0.7833 | 27.69/0.7379 | 26.39/0.7962 |
| ×4 | NGSwin [44] | 1019 K | 36.4 G | 32.33/0.8963 | 28.78/0.7859 | 27.66/0.7396 | 26.45/0.7963 |
| ×4 | DRSAN [36] | 1270 K | 88.7 G | 32.34/0.8960 | 28.65/0.7841 | 27.63/0.7390 | 26.33/0.7936 |
| ×4 | CFIN [21] | 699 K | 31.2 G | 32.49/0.8985 | 28.74/0.7849 | 27.68/0.7396 | 26.39/0.7946 |
| ×4 | HCFormer [47] | 940 K | 58.7 G | 32.41/0.8976 | 28.84/0.7874 | 27.66/0.7413 | 26.51/0.7987 |
| ×4 | MOION | 837 K | 42.13 G | 32.51/0.8984 | 28.85/0.7874 | 27.72/0.7418 | 26.55/0.8005 |

| Scale | WTConv-5 | CSOB | MSHIRB | Params | FLOPs | Urban100 (PSNR/SSIM) |
|---|---|---|---|---|---|---|
| ×4 | × | × | × | 162 K | 8.79 G | 25.73/0.7734 |
| ×4 | ✔ | × | × | 200 K | 9.64 G | 25.85/0.7770 |
| ×4 | × | ✔ | × | 194 K | 10.30 G | 25.84/0.7771 |
| ×4 | × | × | ✔ | 175 K | 9.43 G | 25.82/0.7758 |
| ×4 | ✔ | ✔ | × | 231 K | 11.14 G | 25.90/0.7797 |
| ×4 | × | ✔ | ✔ | 207 K | 10.93 G | 25.92/0.7806 |
| ×4 | ✔ | × | ✔ | 213 K | 10.27 G | 25.88/0.7789 |
| ×4 | ✔ | ✔ | ✔ | 244 K | 11.78 G | 25.94/0.7810 |
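One way to read the ablation table above is as a cost/benefit ledger relative to the baseline row with all three components disabled. The sketch below copies the ×4 rows into a dictionary and computes the differences. The names `ABLATION`, `BASELINE`, and `gain_over_baseline` are illustrative and not from the paper, and only the PSNR part of each Urban100 entry is used.

```python
# (WTConv-5, CSOB, MSHIRB) -> (params in K, FLOPs in G, Urban100 PSNR in dB)
# Values are copied from the x4 ablation table above.
ABLATION = {
    (False, False, False): (162, 8.79, 25.73),
    (True, False, False): (200, 9.64, 25.85),
    (False, True, False): (194, 10.30, 25.84),
    (False, False, True): (175, 9.43, 25.82),
    (True, True, False): (231, 11.14, 25.90),
    (False, True, True): (207, 10.93, 25.92),
    (True, False, True): (213, 10.27, 25.88),
    (True, True, True): (244, 11.78, 25.94),
}

BASELINE = ABLATION[(False, False, False)]


def gain_over_baseline(config):
    """Return (extra params in K, extra FLOPs in G, PSNR gain in dB)."""
    params, flops, psnr_db = ABLATION[config]
    return (
        params - BASELINE[0],
        round(flops - BASELINE[1], 2),
        round(psnr_db - BASELINE[2], 2),
    )


# List the configurations from lowest to highest PSNR.
for config in sorted(ABLATION, key=lambda c: ABLATION[c][2]):
    d_params, d_flops, d_psnr = gain_over_baseline(config)
    print(config, f"+{d_params} K params, +{d_flops} G FLOPs, +{d_psnr} dB")
```

On these numbers, enabling all three components costs 82 K parameters and 2.99 G FLOPs over the baseline for a 0.21 dB gain on Urban100.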

| Scale | Multiplicity Sampling (MS) | MBFEB | Params | FLOPs | Urban100 (PSNR/SSIM) |
|---|---|---|---|---|---|
| ×4 | × | × | 162 K | 8.79 G | 25.73/0.7734 |
| ×4 | × | ✔ | 166 K | 8.89 G | 25.80/0.7757 |
| ×4 | ✔ | × | 172 K | 9.34 G | 25.75/0.7742 |
| ×4 | ✔ | ✔ | 175 K | 9.43 G | 25.82/0.7758 |

| Scale | Branch Name | Params | FLOPs | Urban100 (PSNR/SSIM) |
|---|---|---|---|---|
| ×4 | SCB | 195 K | 9.21 G | 25.81/0.7765 |
| ×4 | AB | 121 K | 6.39 G | 25.61/0.7692 |
| ×4 | Dual-Branch | 200 K | 9.64 G | 25.85/0.7770 |

| Scale | Combination | Params | FLOPs | Urban100 (PSNR/SSIM) |
|---|---|---|---|---|
| ×4 | 3-3-3 | 164 K | 8.83 G | 25.70/0.7724 |
| ×4 | 3-5-5 | 164 K | 8.84 G | 25.72/0.7725 |
| ×4 | 3-7-7 | 164 K | 8.84 G | 25.73/0.7733 |
| ×4 | 5-3-3 | 166 K | 8.87 G | 25.75/0.7735 |
| ×4 | 5-5-5 | 166 K | 8.88 G | 25.77/0.7747 |
| ×4 | 5-7-7 | 166 K | 8.89 G | 25.80/0.7757 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gao, S.; Li, L.; Cui, W.; Jiang, H.; Ge, H. Lightweight Image Super-Resolution Reconstruction Network Based on Multi-Order Information Optimization. Sensors 2025, 25, 5275. https://doi.org/10.3390/s25175275
