Enhancing Real-World Fall Detection Using Commodity Devices: A Systematic Study

Abstract

1. Introduction

- We demonstrated the effectiveness of combining accelerometer data from the wrist and the opposite hip for fall detection. We compared the accuracy of models trained on different combinations of sensor location and sensor type, and obtained the best F1-score using accelerometer data from both the wrist and the opposite hip.

- We designed a two-stage user study protocol to evaluate the real-world performance of the fall-detection model in a controlled environment (the laboratory) and an uncontrolled environment (the participant’s home).

- We demonstrated that incorporating user feedback on misclassified participant data, collected while the system is used in controlled and uncontrolled environments, substantially reduces the number of false positives and is a viable approach to deploying a real-world fall-detection system for the targeted older adults.
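As an illustration of the first contribution, the following minimal sketch shows one way wrist and hip accelerometer streams could be combined into six-channel windows for a classifier. It is not the authors' pipeline: the function name `fuse_windows`, the window length, and the stride are hypothetical, and the sketch assumes both streams are already time-aligned and sampled at the same rate.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # one (x, y, z) accelerometer reading


def fuse_windows(
    wrist: Sequence[Sample],
    hip: Sequence[Sample],
    window: int = 128,  # hypothetical window length in samples
    stride: int = 64,   # hypothetical hop between consecutive windows
) -> List[List[Tuple[float, ...]]]:
    """Concatenate time-aligned wrist and hip samples into 6-channel windows."""
    # Assumption: sample i of both streams was recorded at the same instant.
    n = min(len(wrist), len(hip))  # drop the unmatched tail of the longer stream
    # Each fused sample is (wrist_x, wrist_y, wrist_z, hip_x, hip_y, hip_z).
    fused = [tuple(wrist[i]) + tuple(hip[i]) for i in range(n)]
    # Slide a fixed-size window over the fused stream; partial windows are skipped.
    return [fused[s:s + window] for s in range(0, n - window + 1, stride)]
```

Each returned window is a list of `window` fused samples and can be passed to any sequence model that accepts six input channels. Streams shorter than one window yield an empty list.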

2. Background

3. Related Work

4. Methodology

4.1. Datasets

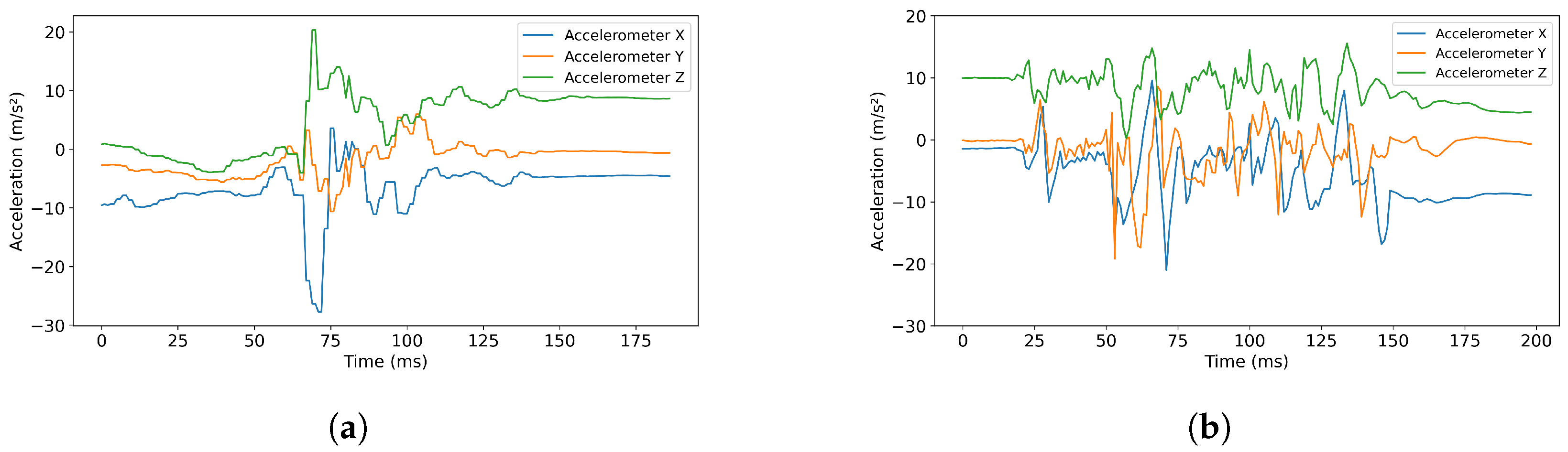

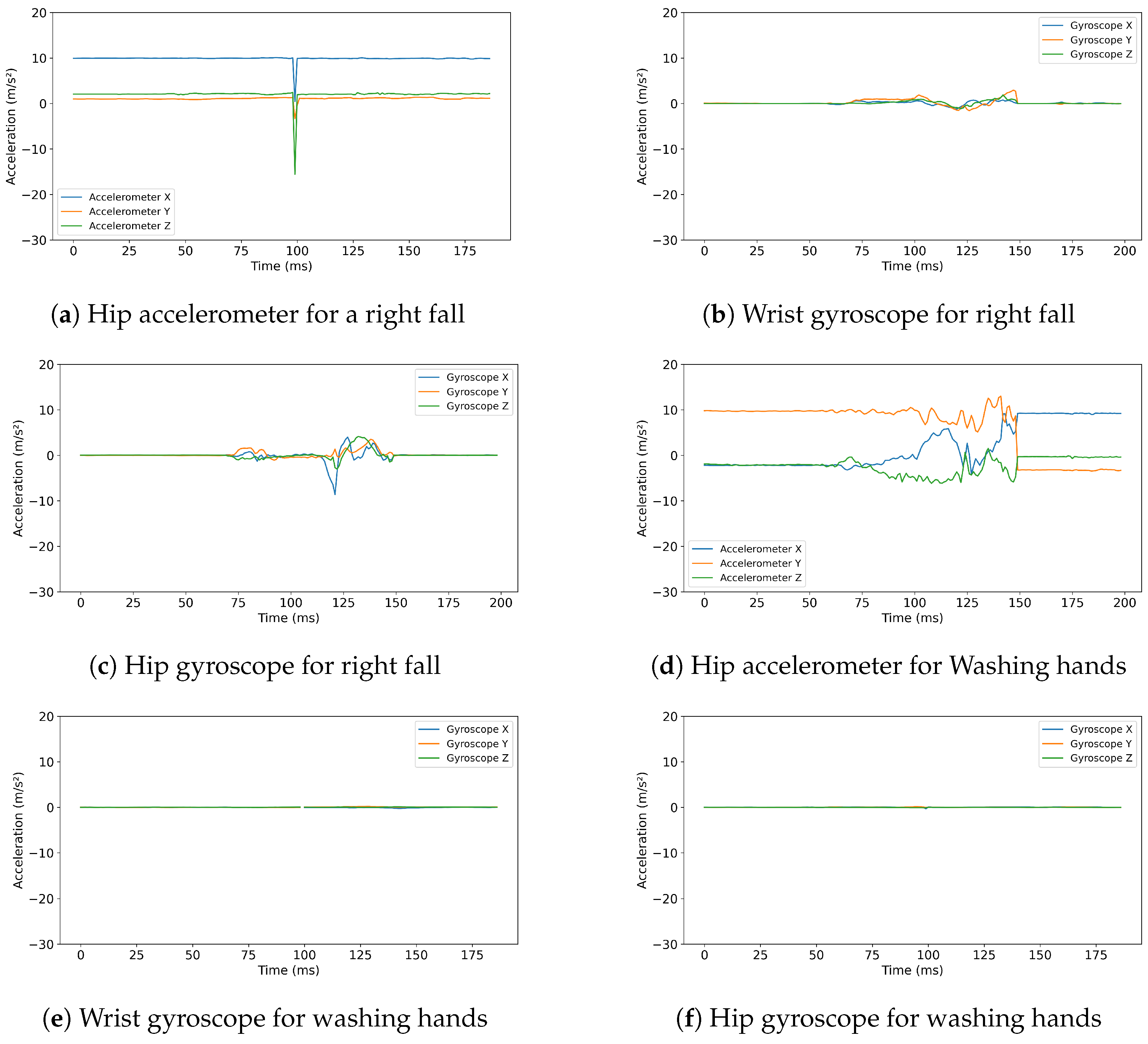

4.2. Data Preprocessing

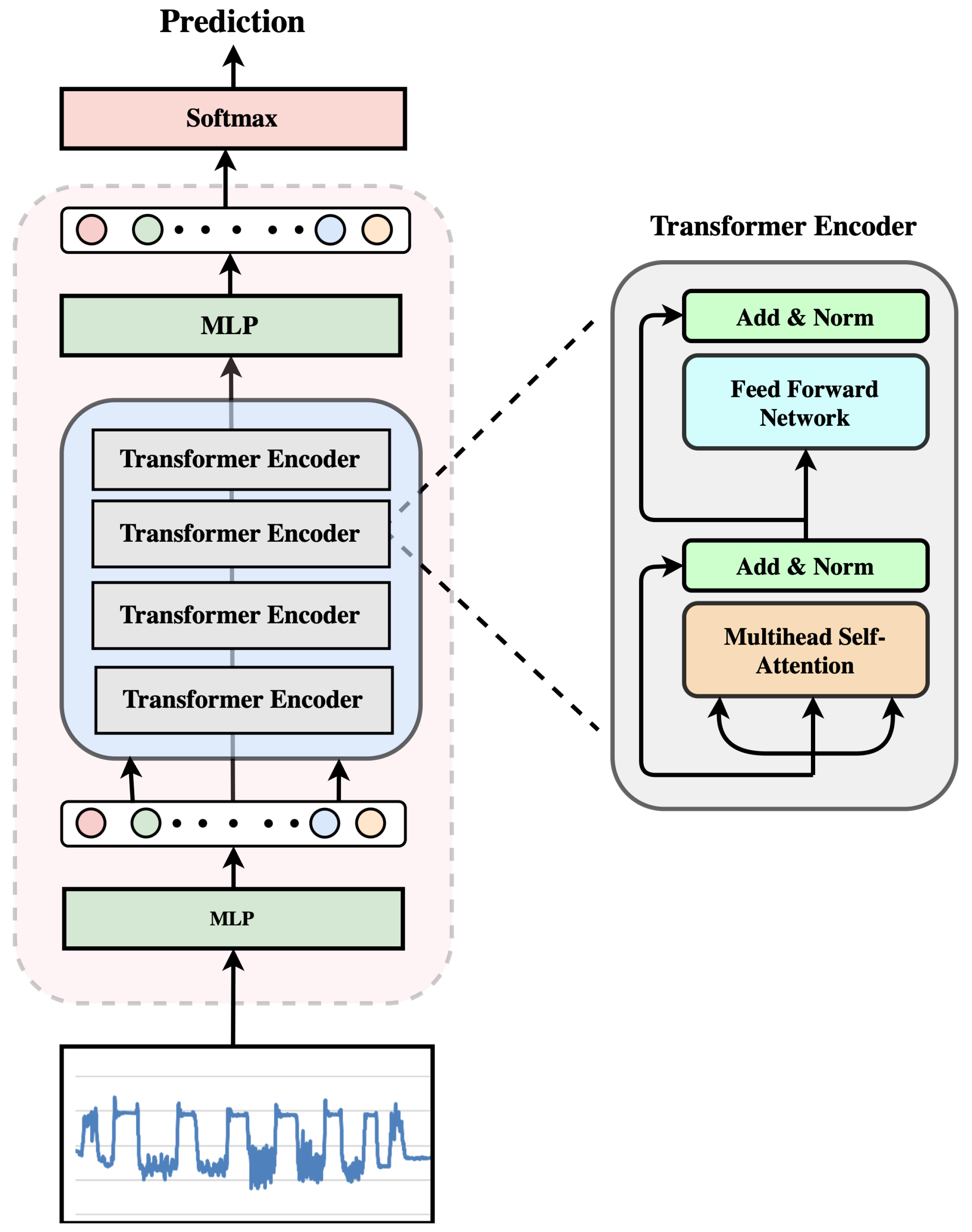

4.3. Computation Model

4.4. Experiments

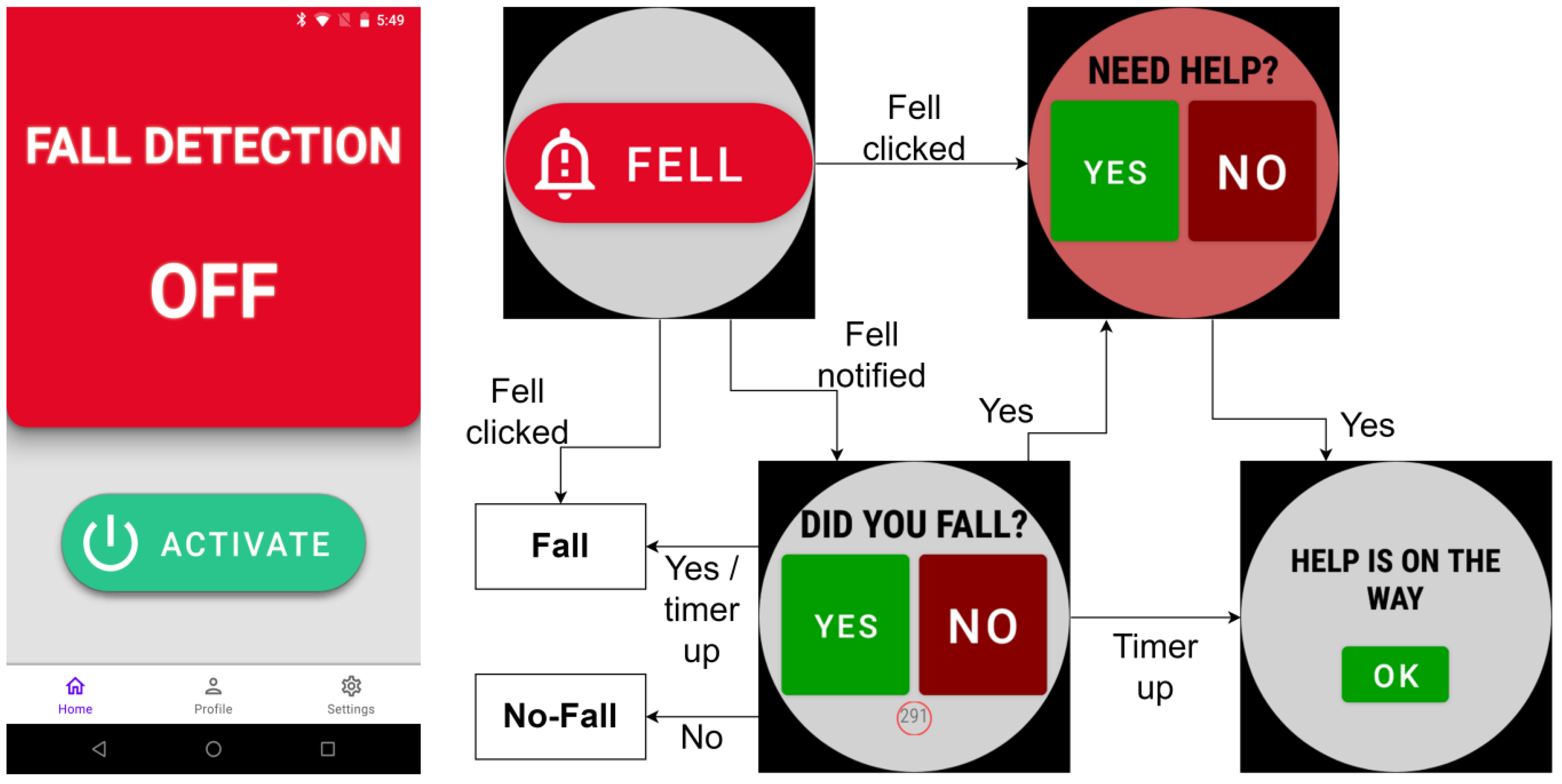

The SmartFall App

5. Results

5.1. Offline Evaluation

5.2. Real World Evaluation

6. User-Feedback Integration

6.1. Methodology

6.2. Evaluation with Retrained Model

6.3. Evaluation with Older Adults

7. Discussion

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Wang, X.; Ellul, J.; Azzopardi, G. Elderly fall-detection systems: A literature survey. Front. Robot. AI 2020, 7, 71.

2. Nahian, M.J.A.; Raju, M.H.; Tasnim, Z.; Mahmud, M.; Ahad, M.A.R.; Kaiser, M.S. Contactless fall detection for the elderly. In Contactless Human Activity Analysis; Springer: Cham, Switzerland, 2021.

3. Yan, L.; Du, Y. Exploring Trends and Clusters in Human Posture Recognition Research: An Analysis Using CiteSpace. Sensors 2025, 25, 632.

4. Mohammed Sharook, K.; Premkumar, A.; Aishwaryaa, R.; Amrutha, J.M.; Deepthi, L.R. Fall Detection Using Transformer Model. In Proceedings of the ICT Infrastructure and Computing; Springer: Singapore, 2023.

5. Wang, S.; Wu, J. Patch-Transformer Network: A Wearable-Sensor-Based Fall Detection Method. Sensors 2023, 23, 6360.

6. Chaudhuri, S.; Thompson, H.; Demiris, G. Fall Detection Devices and their use with Older Adults: A Systematic Review. J. Geriatr. Phys. Ther. 2014, 37, 178–196.

7. Maray, N.; Ngu, A.H.; Ni, J.; Debnath, M.; Wang, L. Transfer Learning on Small Datasets for Improved Fall Detection. Sensors 2023, 23, 1105.

8. Ngu, A.H.; Metsis, V.; Coyne, S.; Srinivas, P.; Salad, T.; Mahmud, U.; Chee, K.H. Personalized Watch-Based Fall Detection Using a Collaborative Edge-Cloud Framework. Int. J. Neural Syst. 2022, 32, 2250048.

9. SmartFallMM Dataset. Available online: https://anonymous.4open.science/r/smartfallmm-4588 (accessed on 10 August 2025).

10. Yi, X.; Zhou, Y.; Xu, F. TransPose: Real-time 3D Human Translation and Pose Estimation with Six Inertial Sensors. arXiv 2021, arXiv:2105.04605.

11. Mauldin, T.R.; Canby, M.E.; Metsis, V.; Ngu, A.H.; Rivera, C.C. SmartFall: A Smartwatch-Based Fall Detection System Using Deep Learning. Sensors 2018, 18, 3363.

12. SmartFall Dataset. 2018. Available online: https://userweb.cs.txstate.edu/~hn12/data/SmartFallDataSet/ (accessed on 10 August 2025).

13. Mauldin, T.R.; Ngu, A.H.; Metsis, V.; Canby, M.E. Ensemble Deep Learning on Wearables Using Small Datasets. ACM Trans. Comput. Healthc. 2021, 2, 1–30.

14. Şengül, G.; Karakaya, M.; Misra, S.; Abayomi-Alli, O.O.; Damaševičius, R. Deep learning based fall detection using smartwatches for healthcare applications. Biomed. Signal Process. Control 2022, 71, 103242.

15. Kulurkar, P.; Kumar Dixit, C.; Bharathi, V.; Monikavishnuvarthini, A.; Dhakne, A.; Preethi, P. AI based elderly fall prediction system using wearable sensors: A smart home-care technology with IOT. Meas. Sens. 2023, 25, 100614.

16. Vavoulas, G.; Chatzaki, C.; Malliotakis, T.; Pediaditis, M.; Tsiknakis, M. The MobiAct Dataset: Recognition of Activities of Daily Living using Smartphones. In Proceedings of the International Conference on Information and Communication Technologies for Ageing Well and e-Health (ICT4AGEINGWELL 2016), Rome, Italy, 21–22 April 2016; INSTICC, SciTePress: Setúbal, Portugal, 2016; pp. 143–151.

17. Zhang, J.; Li, Z.; Liu, Y.; Li, J.; Qiu, H.; Li, M.; Hou, G.; Zhou, Z. An Effective Deep Learning Framework for Fall Detection: Model Development and Study Design. J. Med. Internet Res. 2024, 26, e56750.

18. Vavoulas, G.; Pediaditis, M.; Spanakis, E.G.; Tsiknakis, M. The MobiFall dataset: An initial evaluation of fall detection algorithms using smartphones. In Proceedings of the 13th IEEE International Conference on BioInformatics and BioEngineering, Chania, Greece, 10–13 November 2013; pp. 1–4.

19. Buzpinar, M. Machine Learning Approaches for Fall Detection Using Integrated Data from Multi-Brand Sensors. 2024. Available online: https://www.researchsquare.com/article/rs-4673031/v1 (accessed on 10 July 2025).

20. Özdemir, A.; Barshan, B. Detecting falls with wearable sensors using machine learning techniques. Sensors 2014, 14, 10691–10708.

21. Andrenacci, I.; Boccaccini, R.; Bolzoni, A.; Colavolpe, G.; Costantino, C.; Federico, M.; Ugolini, A.; Vannucci, A. A Comparative Evaluation of Inertial Sensors for Gait and Jump Analysis. Sensors 2021, 21, 5990.

22. Geurts, P.; Ernst, D.; Wehenkel, L. Extremely randomized trees. Mach. Learn. 2006, 63, 3–42.

23. Yhdego, H.; Paolini, C.; Audette, M. Toward Real-Time, Robust Wearable Sensor Fall Detection Using Deep Learning Methods: A Feasibility Study. Appl. Sci. 2023, 13, 4988.

24. Noraxon. MyoRESEARCH 3.14 User Manual. 2019. Available online: https://www.noraxon.com/article/myoresearch-3-14-user-manual/ (accessed on 5 April 2025).

25. Dutta, L.; Bharali, S. TinyML meets IoT: A comprehensive survey. Internet Things 2021, 16, 100461.

26. Abreha, H.G.; Hayajneh, M.; Serhani, M.A. Federated learning in edge computing: A systematic survey. Sensors 2022, 22, 450.

27. Haque, S.T.; Debnath, M.; Yasmin, A.; Mahmud, T.; Ngu, A.H.H. Experimental Study of Long Short-Term Memory and Transformer Models for Fall Detection on Smartwatches. Sensors 2024, 24, 6235.

28. Chow, D.H.K.; Tremblay, L.; Lam, C.Y.; Yeung, A.W.Y.; Cheng, W.H.W.; Tse, P.T.W. Comparison between accelerometer and gyroscope in predicting level-ground running kinematics by treadmill running kinematics using a single wearable sensor. Sensors 2021, 21, 4633.

29. Klenk, J.; Schwickert, L.; Palmerini, L.; Mellone, S.; Bourke, A.; Ihlen, E.A.; Kerse, N.; Hauer, K.; Pijnappels, M.; Synofzik, M.; et al. The FARSEEING real-world fall repository: A large-scale collaborative database to collect and share sensor signals from real-world falls. Eur. Rev. Aging Phys. Act. 2016, 13, 8.

30. Aziz, O.; Musngi, M.; Park, E.J.; Mori, G.; Robinovitch, S.N. A comparison of accuracy of fall-detection algorithms (threshold-based vs. machine learning) using waist-mounted tri-axial accelerometer signals from a comprehensive set of falls and non-fall trials. Med. Biol. Eng. Comput. 2017, 55, 45–55.

31. Bagala, F.; Becker, C.; Cappello, A.; Chiari, L.; Aminian, K.; Hausdorff, J.M.; Zijlstra, W.; Klenk, J. Evaluation of accelerometer-based fall-detection algorithms on real-world falls. PLoS ONE 2012, 7, e37062.

32. Harari, Y.; Shawen, N.; Mummidisetty, C.K.; Albert, M.V.; Kording, K.P.; Jayaraman, A. A smartphone-based online system for fall detection with alert notifications and contextual information of real-life falls. J. Neuroeng. Rehabil. 2021, 18, 124.

33. Ruiz-Garcia, J.C.; Tolosana, R.; Vera-Rodriguez, R.; Moro, C. CareFall: Automatic fall detection through wearable devices and AI methods. arXiv 2023, arXiv:2307.05275.

| Paper | Placement/Sensors/Sampling Rate | Feature Extraction | Model | Device Name/Type | Dataset/Activities | Test Type/F1-Score | Limitations |
|---|---|---|---|---|---|---|---|
| Mauldin et al. [11] | Left Wrist/Accelerometer/32 Hz | No | GRU | Microsoft (MS) Band smartwatch/Commodity | SmartFall2018 [12]/ADLs: jogging, sitting, waving, walking. Falls: front, back, left, right | Real-world/0.73 | (1) Relied on data from a single sensor only. (2) F1-score dropped in real-world testing from 0.87 to 0.73. (3) MS Band is discontinued. |
| Şengül et al. [14] | Left Wrist/Accelerometer + Gyroscope/50 Hz | Yes | BiLSTM | Sony SmartWatch 3 SWR50/Commodity | Dataset not available/ADLs: sitting, squatting, walking, running. Falls: while walking, from a chair | Offline/0.99 | (1) Not tested in the real world; relied on cloud-based prediction, causing latency. (2) Limited fall diversity (only 2 fall types), reducing generalizability. (3) The device runs outdated Android Wear 1.5 with no updates. |
| Kulurkar et al. [15] | Waist + Wrist/Accelerometer/50 Hz | No | 1DConvLSTM | LSM6DS0/Specialized Sensor | MobiAct [16]/ADLs: 9 different classes. Falls: 4 different classes | Real-world/0.96 | (1) Relied on cloud-based inference, increasing latency. (2) Used an IIR low-pass filter, which may suppress sharp fall signals. (3) Data collected and tested in different positions. (4) The initial LSTM model trained on waist data raises concerns about its generalizability to wearable sensors on other body locations. |
| Zhang et al. [17] | Left Wrist/Accelerometer + Gyroscope/87–200 Hz | No | Two-stream CNN with Self-Attention | Huawei Watch 3/Commodity | MobiFall [18]/ADLs: 9 different classes. Falls: 8 different classes | Real-world/0.95 | (1) Trained on waist-mounted data but tested on a wrist-worn device, creating a modality mismatch. (2) The IMU devices used a high sampling rate that is not available in commodity watches. |
| Buzpinar [19] | Waist/Accelerometer + Gyroscope/25 Hz | No | Extra Trees Classifier | Xsens MTw Awinda and ATD-BMX055/Specialized Sensor | MTW-IMU and ATD [20]/ADLs: 16 different classes. Falls: 20 different classes | Offline/0.99 | (1) Not tested in real-world scenarios. (2) Used high-precision data [21] for training, which is not available from commodity devices. (3) Results may not generalize to smartwatches or phones used in real-world deployments. |
| Yhdego et al. [23] | Shank/Accelerometer + Gyroscope/200 Hz | Yes | Model with CNN, LSTM, and Transformer components | Noraxon myoMOTION/Specialized Sensor | Dataset not available/ADLs: unspecified. Falls: near-fall, forward, backward, obstacle | Offline/0.96 | (1) Not tested in real-world scenarios. (2) Dataset details unclear; fall and ADL class diversity not reported. (3) Used specialized IMUs with a high sampling rate, not representative of consumer devices. |

| Training Data | Precision | Recall | F1 Score |
|---|---|---|---|
| Wrist Accelerometer | 0.79 | 0.84 | 0.81 |
| Hip Accelerometer | 0.67 | 0.94 | 0.78 |
| Wrist Gyroscope | 0.76 | 0.60 | 0.67 |
| Hip Gyroscope | 0.59 | 0.81 | 0.68 |
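The F1 scores in the single-sensor table above follow from the standard definition of F1 as the harmonic mean of precision and recall, F1 = 2PR / (P + R). The sketch below recomputes them from the tabulated precision and recall values as a consistency check. The helper name `f1_score` is ours, and because the table entries are already rounded to two decimals, a recomputed value in other tables may differ from the reported one in the last digit.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; returns 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from the single-sensor table above.
rows = {
    "Wrist Accelerometer": (0.79, 0.84),
    "Hip Accelerometer": (0.67, 0.94),
    "Wrist Gyroscope": (0.76, 0.60),
    "Hip Gyroscope": (0.59, 0.81),
}

# Recompute F1 for every row, rounded to the table's two decimal places.
recomputed = {name: round(f1_score(p, r), 2) for name, (p, r) in rows.items()}
```

For these four rows the recomputed values match the reported F1 scores (0.81, 0.78, 0.67, and 0.68).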

| Training Data | Precision | Recall | F1 Score |
|---|---|---|---|
| | 0.63 | 0.98 | 0.76 |
| | 0.76 | 0.78 | 0.77 |
| | 0.87 | 0.92 | 0.88 |
| | 0.76 | 0.73 | 0.75 |
| | 0.82 | 0.86 | 0.84 |
| | 0.92 | 0.68 | 0.78 |
| | 0.72 | 0.96 | 0.82 |
| | 0.71 | 0.98 | 0.82 |
| | 0.71 | 0.75 | 0.73 |
| | 0.59 | 1 | 0.74 |
| | 0.69 | 0.97 | 0.81 |

| Participant | Model | Precision | Recall | F1 Score |
|---|---|---|---|---|
| Participant 1 | | 0.58 | 0.84 | 0.69 |
| | | 0.71 (+0.13) | 0.88 (+0.04) | 0.79 (+0.1) |
| Participant 2 | | 0.62 | 0.84 | 0.71 |
| | | 0.78 (+0.16) | 0.84 (+0) | 0.81 (+0.1) |
| Participant 3 | | 0.7 | 0.84 | 0.76 |
| | | 0.88 (+0.18) | 0.92 (+0.08) | 0.9 (+0.14) |
| Participant 4 | | 0.64 | 0.72 | 0.68 |
| | | 0.72 (+0.08) | 0.84 (+0.12) | 0.78 (+0.1) |
| Participant 5 | | 0.69 | 0.78 | 0.73 |
| | | 0.76 (+0.07) | 0.8 (+0.02) | 0.78 (+0.05) |
| Participant 6 | | 0.7 | 0.84 | 0.76 |
| | | 0.75 (+0.05) | 0.88 (+0.04) | 0.81 (+0.05) |
| Participant 7 | | 0.73 | 0.78 | 0.75 |
| | | 0.78 (+0.05) | 0.71 (−0.07) | 0.75 (+0) |
| Participant 8 | | 0.71 | 0.79 | 0.75 |
| | | 0.77 (+0.06) | 0.8 (+0.01) | 0.78 (+0.03) |
| Participant 9 | | 0.69 | 0.72 | 0.71 |
| | | 0.79 (+0.1) | 0.76 (+0.04) | 0.78 (+0.07) |
| Participant 10 | | 0.66 | 0.84 | 0.74 |
| | | 0.81 (+0.15) | 0.84 (+0) | 0.82 (+0.08) |
| Average | | 0.67 | 0.8 | 0.73 |
| | | 0.78 (+0.11) | 0.83 (+0.03) | 0.8 (+0.07) |

| Training Data | Precision | Recall | F1-Score |
|---|---|---|---|
| Initial dataset + TP + FP | 0.84 | 0.93 | 0.88 |
| Initial dataset + TP + FP + TN | 0.99 | 0.54 | 0.70 |
| Initial dataset + TP + FP + 1/2 TN | 0.99 | 0.62 | 0.76 |
| Initial dataset + TP + FP + 1/4 TN | 0.98 | 0.63 | 0.77 |
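The training-data variants in the table above combine the initial dataset with feedback samples labeled as true positives (TP), false positives (FP), and all or part of the true negatives (TN). The sketch below shows one way such a retraining set could be assembled. It is an illustration, not the authors' procedure: the function name `build_retraining_set`, the `tn_fraction` parameter, and the use of seeded uniform random sampling to pick the TN subset are all assumptions.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")  # one labeled sample, e.g. a (window, label) pair


def build_retraining_set(
    initial: Sequence[T],
    tp: Sequence[T],
    fp: Sequence[T],
    tn: Sequence[T],
    tn_fraction: float = 0.0,  # hypothetical knob: 0, 0.25, 0.5, or 1.0 in the table
    seed: int = 0,             # fixed seed so the TN subset is reproducible
) -> List[T]:
    """Return initial + TP + FP samples plus a random fraction of the TN samples."""
    if not 0.0 <= tn_fraction <= 1.0:
        raise ValueError("tn_fraction must be between 0 and 1")
    # Assumption: the TN subset is drawn uniformly at random without replacement.
    k = int(len(tn) * tn_fraction)
    sampled_tn = random.Random(seed).sample(list(tn), k)
    return list(initial) + list(tp) + list(fp) + sampled_tn
```

Calling the function with `tn_fraction` set to 0, 1.0, 0.5, and 0.25 would correspond to the four rows of the table, in that order.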

| Participant | Precision | Recall | F1 Score |
|---|---|---|---|
| Participant 1 | 0.96 (+0.25) | 0.92 (+0.04) | 0.94 (+0.15) |
| Participant 2 | 0.86 (+0.08) | 0.96 (+0.12) | 0.91 (+0.1) |
| Participant 3 | 0.92 (+0.04) | 0.96 (+0.04) | 0.94 (+0.04) |
| Participant 4 | 0.93 (+0.21) | 1 (+0.16) | 0.96 (+0.18) |
| Participant 5 | 0.95 (+0.19) | 0.92 (+0.12) | 0.94 (+0.16) |
| Participant 6 | 0.89 (+0.14) | 0.96 (+0.08) | 0.92 (+0.11) |
| Participant 7 | 0.91 (+0.13) | 0.84 (+0.13) | 0.87 (+0.12) |
| Participant 8 | 0.91 (+0.14) | 0.84 (+0.04) | 0.87 (+0.09) |
| Participant 9 | 0.91 (+0.12) | 0.92 (+0.16) | 0.92 (+0.14) |
| Participant 10 | 0.89 (+0.08) | 0.96 (+0.12) | 0.92 (+0.1) |
| Average | 0.91 (+0.13) | 0.93 (+0.1) | 0.92 (+0.12) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yasmin, A.; Mahmud, T.; Haque, S.T.; Alamgeer, S.; Ngu, A.H.H. Enhancing Real-World Fall Detection Using Commodity Devices: A Systematic Study. Sensors 2025, 25, 5249. https://doi.org/10.3390/s25175249
