Multi-Agent DDPG-Based Multi-Device Charging Scheduling for IIoT Smart Grids

Abstract

1. Introduction

- We propose a coordination framework based on multi-agent deep deterministic policy gradient (MADDPG) for sensor-integrated Industrial IoT (IIoT) environments. The framework combines real-time multi-device sensor data with multi-agent reinforcement learning to schedule electric vehicle (EV) charging. It exploits the characteristics of the smart sensor infrastructure, its real-time data collection capabilities, and distributed communication protocols to improve coordination and reduce charging costs in industrial park settings.

- We design an MADDPG-based algorithm in which multiple EVs learn coordinated policies with continuous control over charging power. Each EV agent decides autonomously from its local observations, which accommodates the diverse battery capacities and charging requirements of heterogeneous EVs. Continuous control avoids the discrete action spaces that constrain value-based approaches such as QMIX.

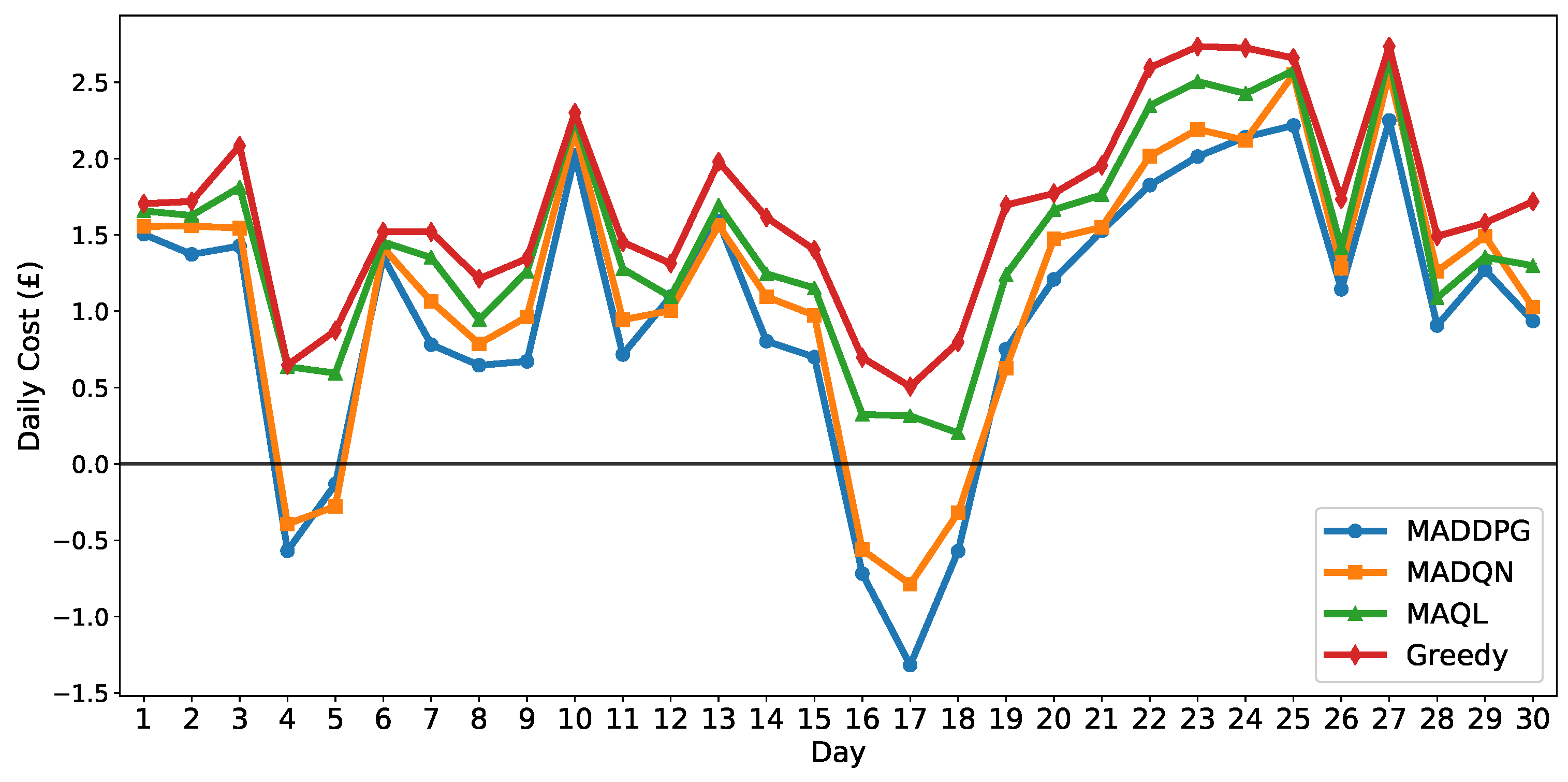

- We conduct a comprehensive experimental evaluation that demonstrates the effectiveness of the proposed sensor-integrated approach. Over a 30-day evaluation period, it achieves a 43.5% cost reduction compared with baseline methods while maintaining grid stability and satisfying each EV’s charging requirements under realistic industrial park scenarios with dynamic pricing, real-time sensor monitoring, and varying EV fleet sizes.
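
The continuous-control point in the contributions above can be illustrated with a minimal sketch. It assumes a hypothetical linear actor with a tanh output, which is not taken from the paper; the function names, weights, and observation layout are all illustrative. A negative output is read as discharging. The second function shows the rounding step a discrete-action method would need instead.

```python
import math

def actor_action(local_obs, weights, bias, p_max_kw):
    """Hypothetical deterministic actor: local observation -> charging power (kW)."""
    z = sum(w * o for w, o in zip(weights, local_obs)) + bias
    # tanh bounds the raw output to (-1, 1); scaling maps it to (-p_max, p_max).
    return p_max_kw * math.tanh(z)

def nearest_discrete(power_kw, levels_kw):
    """Snap a desired power to the closest level of a discrete action set."""
    return min(levels_kw, key=lambda level: abs(level - power_kw))

# Illustrative local observation: SoC, normalized time to departure, normalized price.
obs = [0.35, 0.8, 0.12]
p = actor_action(obs, weights=[-1.2, 0.9, -2.0], bias=0.3, p_max_kw=8.0)
p_discrete = nearest_discrete(p, levels_kw=[-8.0, -4.0, 0.0, 4.0, 8.0])
print(f"continuous: {p:.3f} kW, nearest discrete level: {p_discrete} kW")
```

Because each agent has its own `p_max_kw`, the same actor structure can serve EVs with different power limits without redefining the action set.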

2. System Model and Problem Formulation

2.1. System Model

2.2. Battery State Model

2.3. Problem Formulation

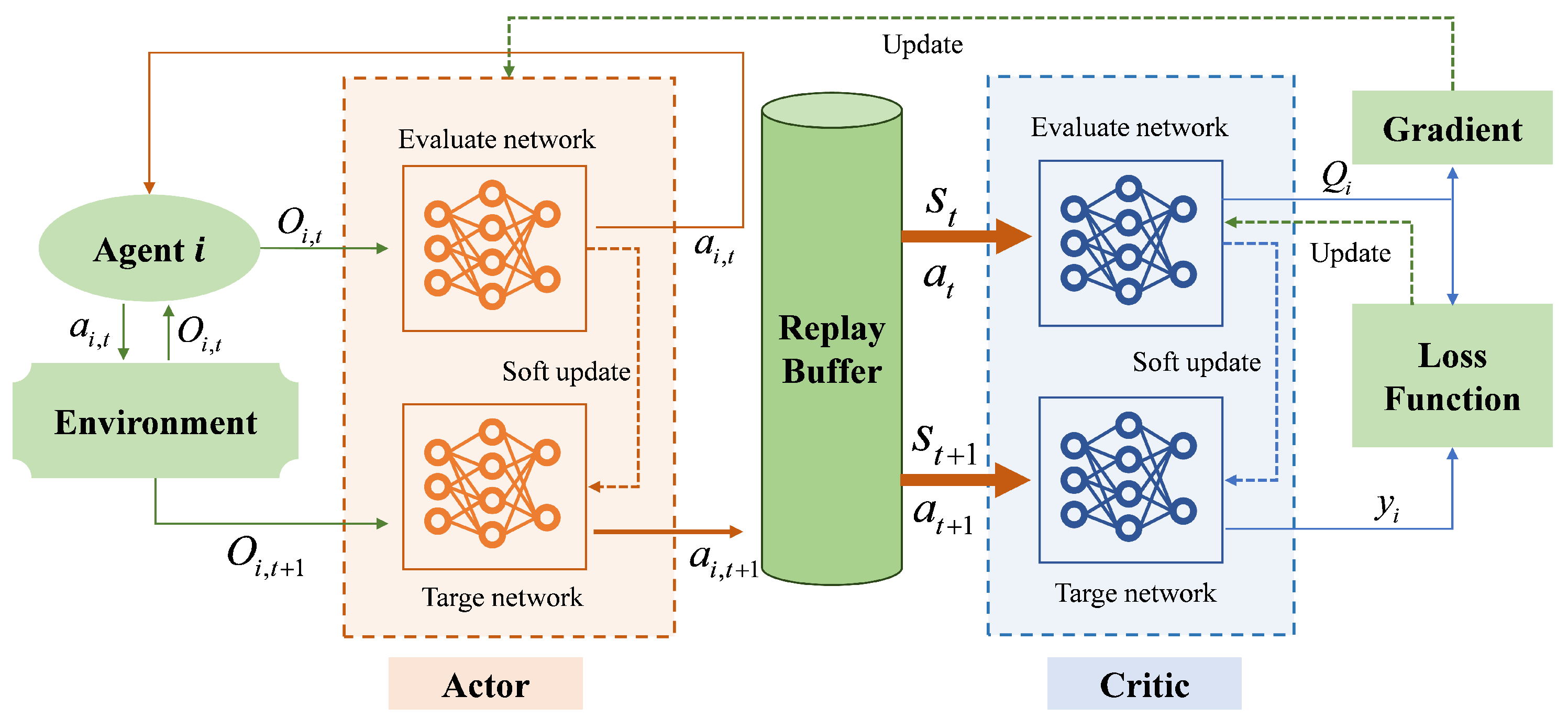

3. Charging Scheduling Algorithm Based on MADDPG

3.1. Multi-Agent MDP Formulation

3.2. MADDPG Algorithm Implementation

Algorithm 1. MADDPG-based Multi-Agent EV Charging Scheduling

3.3. Computational Complexity Analysis

4. Experimental Results and Discussion

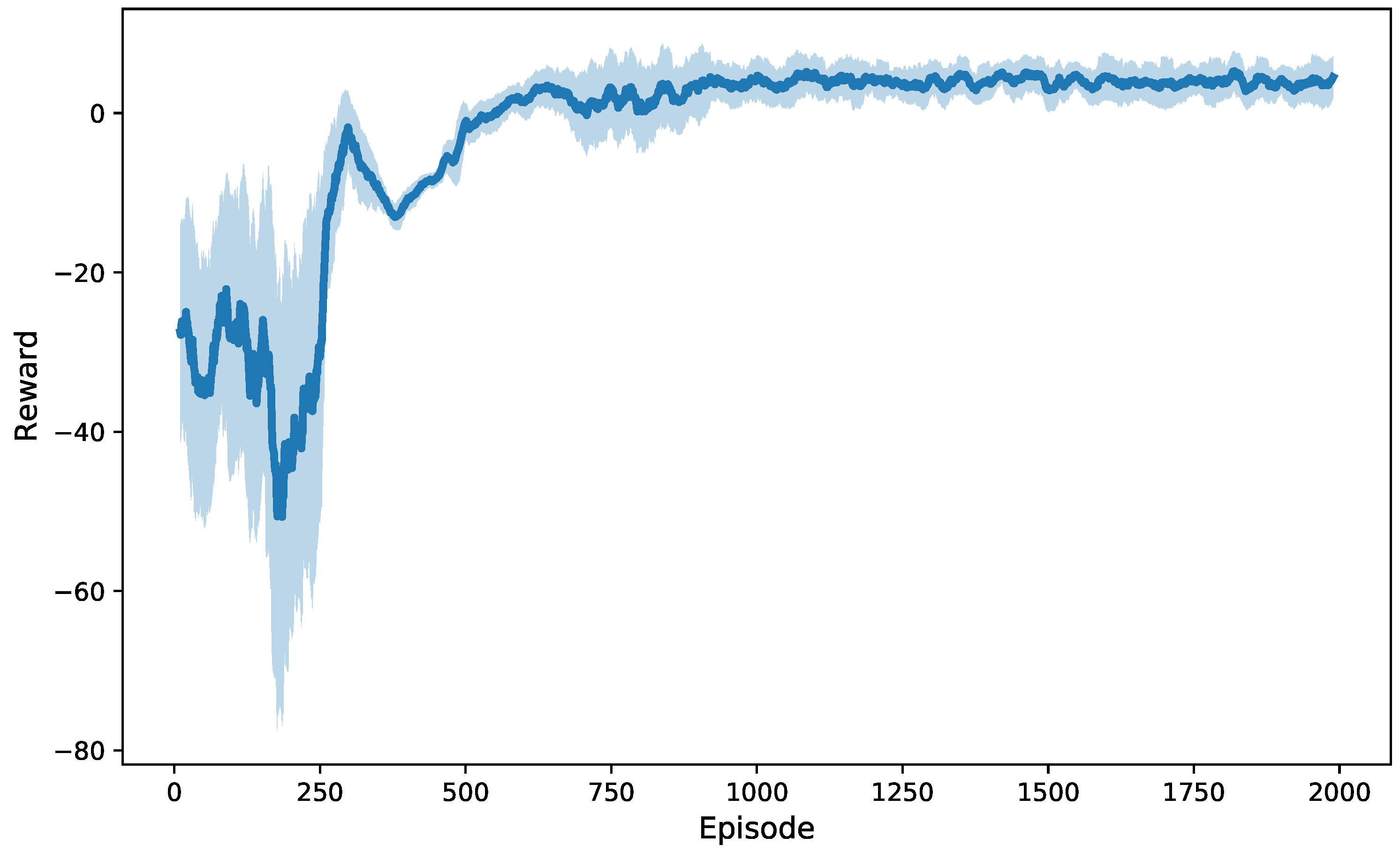

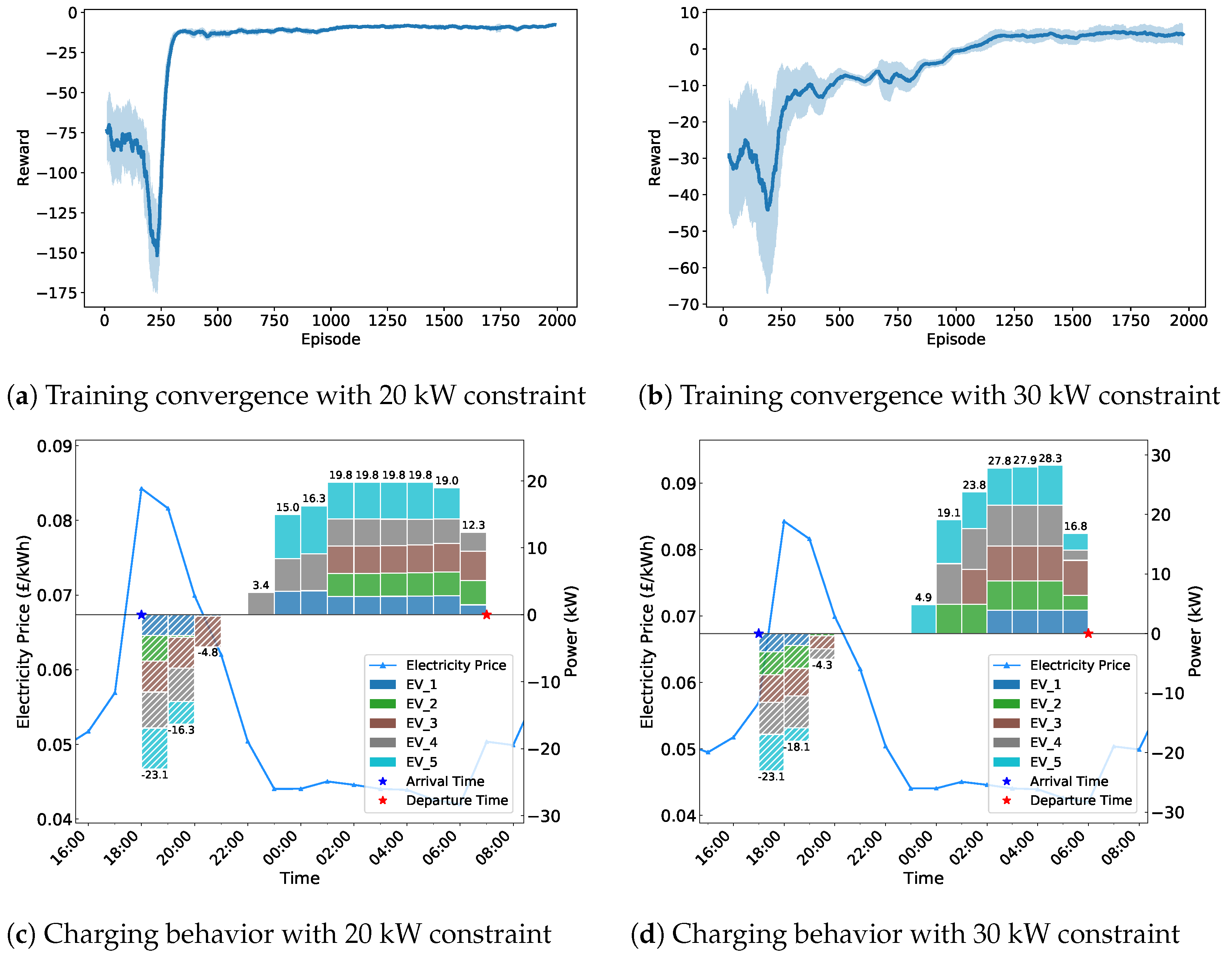

4.1. Setup and Training

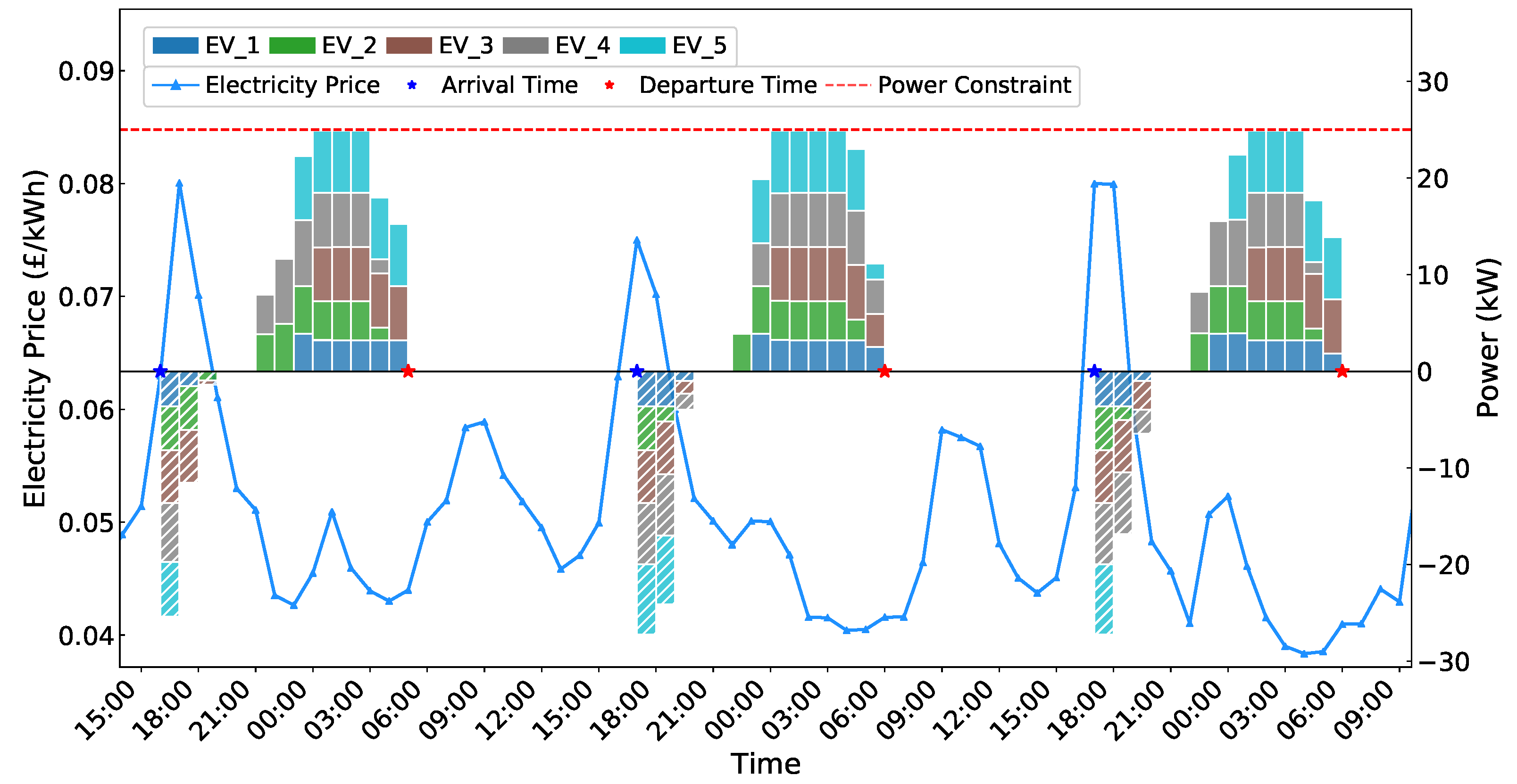

- EVs use lithium-ion batteries with constant charging and discharging power rates; battery capacities and maximum charging power limits vary across vehicles.

- All EVs participate in charging and discharging processes within the industrial park using conventional slow-charging methods.

- Charging and discharging decisions respond to dynamic electricity prices obtained through real-time pricing signals. External disturbances and physical queuing effects at charging stations are not considered; this assumption is suitable for IIoT environments with sufficient charging infrastructure and centralized management, where such effects can reasonably be neglected [32,33].

- EVs commence charging immediately upon arrival at charging stations, with charging periods aligned to hourly intervals.

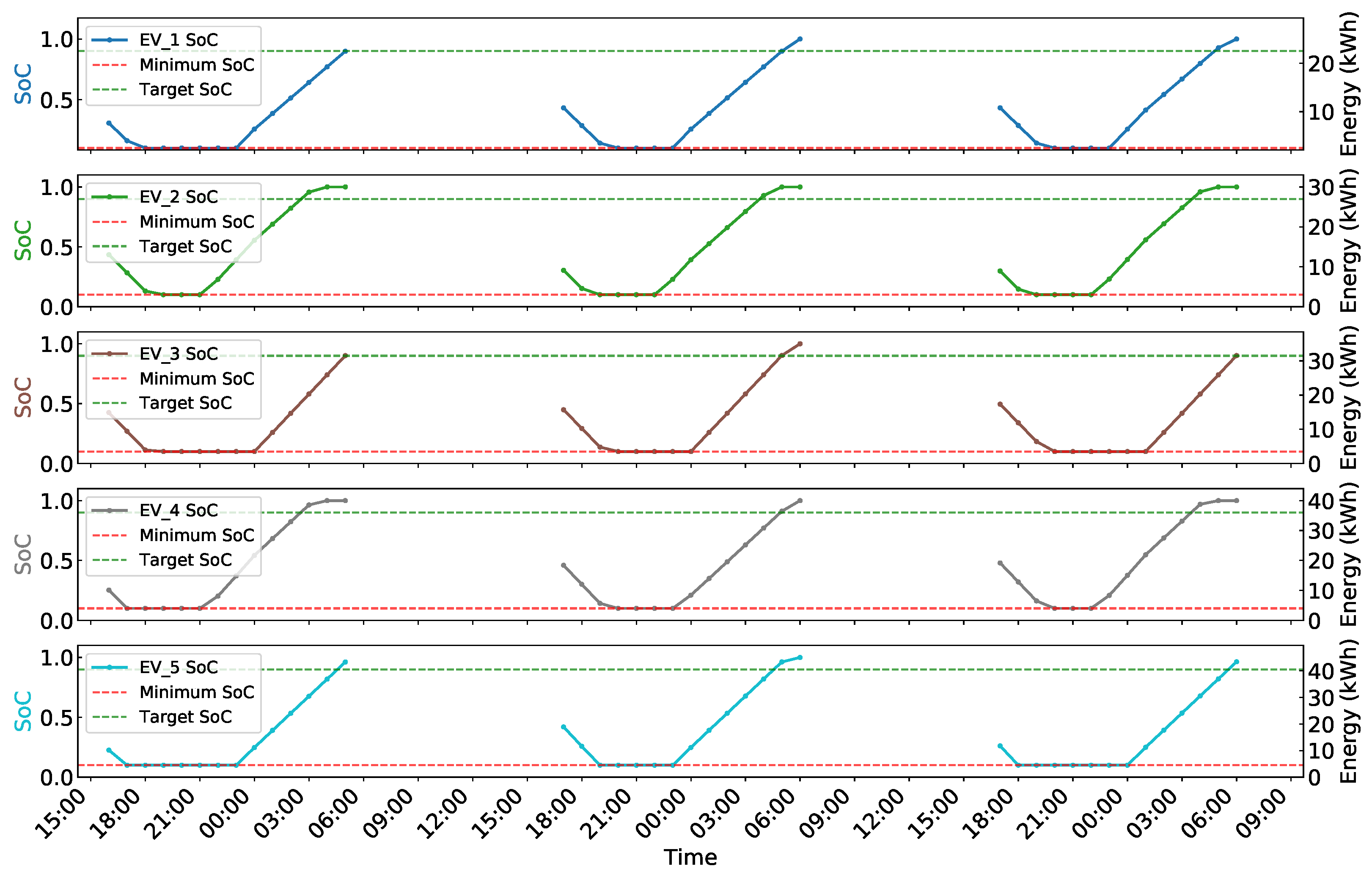

- Battery safety is maintained by constraining SoC between 0.1 and 1.0, with continuous monitoring through simulated sensor feedback systems.
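
The hourly slot length and the SoC bounds listed above can be combined into a one-step battery update. The following is a minimal sketch under stated assumptions: charging is lossless (no efficiency term), power is constant within a slot, and any request that would push SoC outside [0.1, 1.0] is truncated. The function name `step_soc` and its signature are hypothetical and not taken from the paper.

```python
def step_soc(soc, power_kw, capacity_kwh, dt_h=1.0, soc_min=0.1, soc_max=1.0):
    """Advance SoC over one time slot; returns (new_soc, applied_power_kw).

    Positive power charges the battery, negative power discharges it.
    Assumes a lossless battery (illustrative simplification).
    """
    # Largest charging / discharging power that keeps SoC within its bounds.
    p_hi = (soc_max - soc) * capacity_kwh / dt_h
    p_lo = (soc_min - soc) * capacity_kwh / dt_h
    applied = max(p_lo, min(p_hi, power_kw))
    return soc + applied * dt_h / capacity_kwh, applied

# A 40 kWh battery at 95% SoC can only absorb about 2 kW over a one-hour slot.
new_soc, applied = step_soc(soc=0.95, power_kw=8.0, capacity_kwh=40.0)
print(f"applied {applied:.2f} kW, SoC -> {new_soc:.2f}")
```

Truncating the request inside the environment is one option; another common choice is to leave the action unchanged and penalize violations in the reward.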

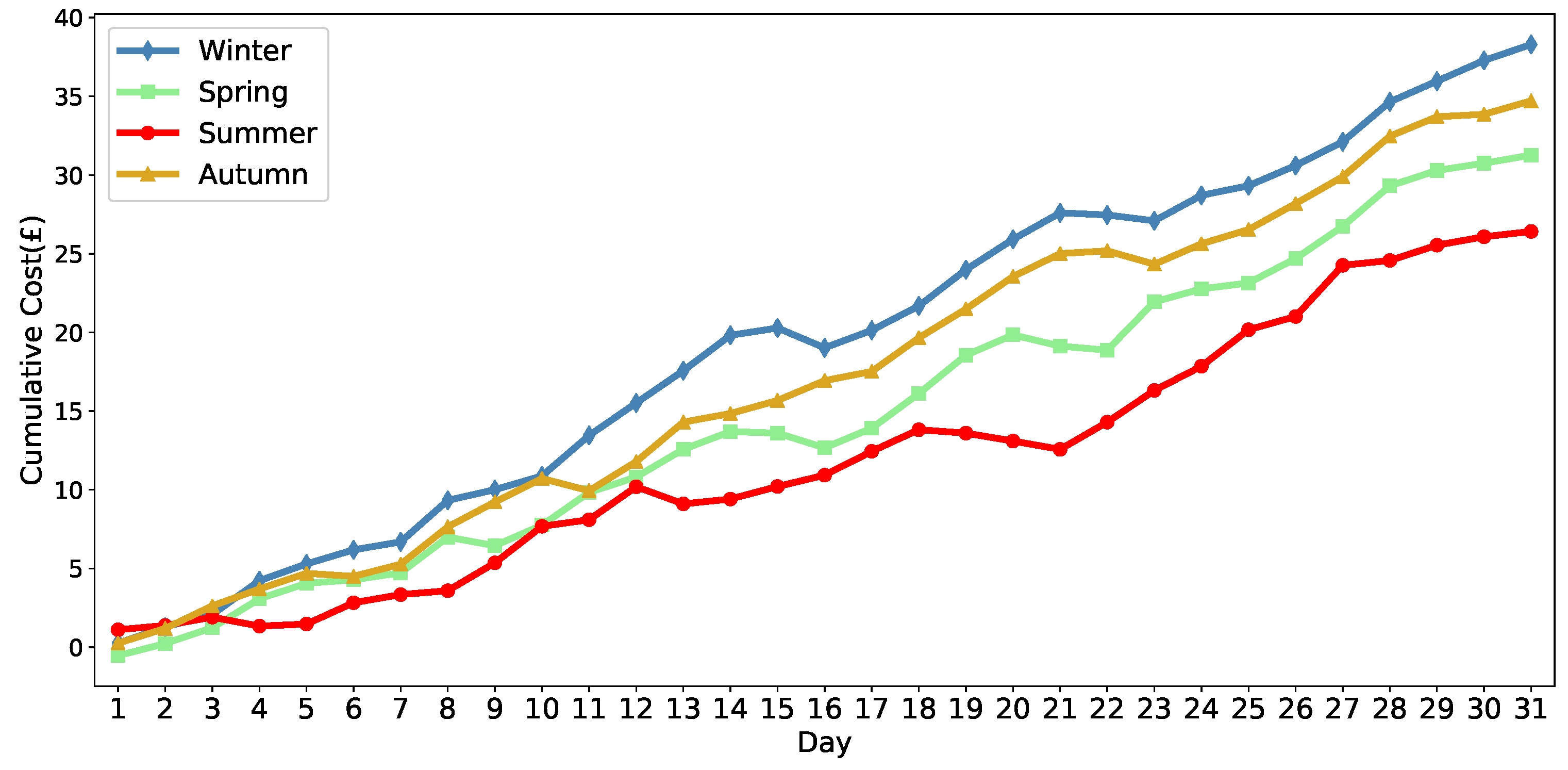

4.2. Performance Evaluation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Seed | MADDPG | MADQN | MAQL | Greedy |
|---|---|---|---|---|
| 0 | 27.8945 | 32.1456 | 39.8234 | 47.2341 |
| 5 | 28.9567 | 33.4789 | 41.2567 | 48.5678 |
| 12 | 30.2341 | 35.8901 | 44.7891 | 51.2345 |
| 23 | 29.1234 | 33.7856 | 42.1456 | 49.1789 |
| 25 | 28.4567 | 32.8945 | 40.5678 | 48.0123 |
| 42 | 31.0789 | 37.2341 | 46.5789 | 52.8901 |
| 47 | 29.7891 | 34.9567 | 43.4567 | 50.3456 |
| 52 | 28.1345 | 31.8904 | 39.2341 | 47.9891 |
| 55 | 30.8901 | 36.7891 | 45.9234 | 53.4567 |
| 66 | 29.4567 | 34.2345 | 42.7891 | 49.8234 |
| 78 | 28.7234 | 33.1567 | 40.8901 | 48.9567 |
| 85 | 30.5678 | 36.1234 | 44.3456 | 51.7891 |
| 99 | 29.2341 | 33.9567 | 41.6789 | 49.5678 |
| 101 | 27.6789 | 31.5678 | 38.7891 | 46.3456 |
| 123 | 31.3456 | 38.1234 | 47.8901 | 54.2345 |
| 157 | 29.8234 | 35.4567 | 43.8234 | 50.7891 |
| 178 | 28.5678 | 32.7891 | 40.1234 | 48.8901 |
| 186 | 30.1234 | 35.6789 | 44.5678 | 51.4567 |
| 195 | 29.6789 | 34.5678 | 42.3456 | 49.9234 |
| 225 | 29.3456 | 33.0123 | 41.4567 | 49.6789 |
| Mean | 29.4552 | 34.3866 | 42.6238 | 50.0182 |
| Std. Dev. | 1.0496 | 1.8505 | 2.5111 | 2.0544 |
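
The summary rows of the table above can be recomputed from the per-seed values. The sketch below does this for the MADDPG column using Python's standard library. The tabulated standard deviation appears consistent with the sample (n − 1) estimator, `statistics.stdev`, rather than the population form; this is an observation from the numbers, not something the paper states.

```python
from statistics import mean, stdev

# Per-seed values for MADDPG, copied from the table above (20 seeds).
maddpg = [
    27.8945, 28.9567, 30.2341, 29.1234, 28.4567,
    31.0789, 29.7891, 28.1345, 30.8901, 29.4567,
    28.7234, 30.5678, 29.2341, 27.6789, 31.3456,
    29.8234, 28.5678, 30.1234, 29.6789, 29.3456,
]

maddpg_mean = mean(maddpg)
maddpg_std = stdev(maddpg)  # sample standard deviation (divides by n - 1)
print(f"mean = {maddpg_mean:.4f}, sample std = {maddpg_std:.4f}")
```

The same two calls apply unchanged to the MADQN, MAQL, and Greedy columns.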

References

1. Jiang, F.; Yuan, X.; Hu, L.; Xie, G.; Zhang, Z.; Li, X.; Hu, J.; Wang, C.; Wang, H. A Comprehensive Review of Energy Storage Technology Development and Application for Pure Electric Vehicles. J. Energy Storage 2024, 86, 111159.

2. Husain, I.; Ozpineci, B.; Islam, M.S.; Gurpinar, E.; Su, G.J.; Yu, W.; Chowdhury, S.; Xue, L.; Rahman, D.; Sahu, R. Electric Drive Technology Trends, Challenges, and Opportunities for Future Electric Vehicles. Proc. IEEE 2021, 109, 1039–1059.

3. Das, H.S.; Rahman, M.M.; Li, S.; Tan, C.W. Electric Vehicles Standards, Charging Infrastructure, and Impact on Grid Integration: A Technological Review. Renew. Sustain. Energy Rev. 2020, 120, 109618.

4. Zhang, X.; Chan, K.W.; Li, H.; Wang, H.; Qiu, J.; Wang, G. Deep-Learning-Based Probabilistic Forecasting of Electric Vehicle Charging Load with a Novel Queuing Model. IEEE Trans. Cybern. 2020, 51, 3157–3170.

5. Liu, C.; Chai, K.K.; Zhang, X.; Lau, E.T.; Chen, Y. Adaptive Blockchain-Based Electric Vehicle Participation Scheme in Smart Grid Platform. IEEE Access 2018, 6, 25657–25665.

6. Kataray, T.; Nitesh, B.; Yarram, B.; Sinha, S.; Cuce, E.; Shaik, S.; Vigneshwaran, P.; Roy, A. Integration of Smart Grid with Renewable Energy Sources: Opportunities and Challenges–A Comprehensive Review. Sustain. Energy Technol. Assess. 2023, 58, 103363.

7. Mounir, M.; Sayed, S.G.; El-Dakroury, M.M.E. Securing the Future: Real-Time Intrusion Detection in IIoT Smart Grids Through Innovative AI Solutions. J. Cybersecur. Inf. Manag. 2025, 15, 208–244.

8. Meydani, A.; Shahinzadeh, H.; Ramezani, A.; Moazzami, M.; Nafisi, H.; Askarian-Abyaneh, H. State-of-the-Art Analysis of Blockchain-Based Industrial IoT (IIoT) for Smart Grids. In Proceedings of the 2024 9th International Conference on Technology and Energy Management (ICTEM), Tehran, Iran, 14–15 February 2024; pp. 1–12.

9. Zeng, H.; Wang, J.; Wei, Z.; Zhu, X.; Jiang, Y.; Wang, Y.; Masouros, C. Multicluster-Coordination Industrial Internet of Things: The Era of Nonorthogonal Transmission. IEEE Veh. Technol. Mag. 2022, 17, 84–93.

10. Hou, W.; Zhu, X.; Cao, J.; Zeng, H.; Jiang, Y. Composite Robot Aided Coexistence of eMBB, URLLC and mMTC in Smart Factory. In Proceedings of the IEEE 96th Vehicular Technology Conference (VTC2022-Fall), London, UK, 26–29 September 2022; pp. 1–6.

11. Li, Z.; Zhu, X.; Cao, J. Localization Accuracy Under Age of Information Influence for Industrial IoT. In Proceedings of the IEEE International Workshop on Radio Frequency and Antenna Technologies (iWRF&AT), Shenzhen, China, 31 May–3 June 2024; pp. 404–409.

12. Hou, W.; Wei, Z.; Zhu, X.; Cao, J.; Jiang, Y. Toward Proximity Surveillance and Data Collection in Industrial IoT: A Multi-Stage Statistical Optimization Design. IEEE Wirel. Commun. Lett. 2024, 13, 1536–1540.

13. Aldossary, M. Enhancing Urban Electric Vehicle (EV) Fleet Management Efficiency in Smart Cities: A Predictive Hybrid Deep Learning Framework. Smart Cities 2024, 7, 3678–3704.

14. Ren, J.; Wang, H.; Yang, W.; Liu, Y.; Tsang, K.F.; Lai, L.L.; Chung, L.C. A Novel Genetic Algorithm-Based Emergent Electric Vehicle Charging Scheduling Scheme. In Proceedings of the IECON 2019–45th Annual Conference of the IEEE Industrial Electronics Society, Lisbon, Portugal, 14–17 October 2019; Volume 1, pp. 4289–4292.

15. Tan, K.M.; Ramachandaramurthy, V.K.; Yong, J.Y.; Padmanaban, S.; Mihet-Popa, L.; Blaabjerg, F. Minimization of Load Variance in Power Grids—Investigation on Optimal Vehicle-to-Grid Scheduling. Energies 2017, 10, 1880.

16. Shi, Y.; Tuan, H.D.; Savkin, A.V.; Duong, T.Q.; Poor, H.V. Model Predictive Control for Smart Grids with Multiple Electric-Vehicle Charging Stations. IEEE Trans. Smart Grid 2018, 10, 2127–2136.

17. Costa, J.S.; Lunardi, A.; Lourenço, L.F.N.; Oliani, I.; Sguarezi Filho, A.J. Lyapunov-Based Finite Control Set Applied to an EV Charger Grid Converter Under Distorted Voltage. IEEE Trans. Transp. Electr. 2024, 11, 3549–3557.

18. Du, Y.; Li, F. Intelligent Multi-Microgrid Energy Management Based on Deep Neural Network and Model-Free Reinforcement Learning. IEEE Trans. Smart Grid 2020, 11, 1066–1076.

19. Wan, Z.; Li, H.; He, H.; Prokhorov, D. Model-Free Real-Time EV Charging Scheduling Based on Deep Reinforcement Learning. IEEE Trans. Smart Grid 2019, 10, 5246–5257.

20. Zhang, F.; Yang, Q.; An, D. CDDPG: A Deep-Reinforcement-Learning-Based Approach for Electric Vehicle Charging Control. IEEE Internet Things J. 2021, 8, 3075–3087.

21. Wang, S.; Bi, S.; Zhang, Y.A. Reinforcement Learning for Real-Time Pricing and Scheduling Control in EV Charging Stations. IEEE Trans. Ind. Inform. 2021, 17, 849–859.

22. Li, H.; Li, G.; Lie, T.T.; Li, X.; Wang, K.; Han, B.; Xu, J. Constrained Large-Scale Real-Time EV Scheduling Based on Recurrent Deep Reinforcement Learning. Int. J. Electr. Power Energy Syst. 2023, 144, 108603.

23. Wang, L.; Liu, S.; Wang, P.; Xu, L.; Hou, L.; Fei, A. QMIX-Based Multi-Agent Reinforcement Learning for Electric Vehicle-Facilitated Peak Shaving. In Proceedings of the IEEE Global Communications Conference (GLOBECOM), Kuala Lumpur, Malaysia, 4–8 December 2023; pp. 1693–1698.

24. Zhao, Z.; Lee, C.K.M.; Yan, X.; Wang, H. Reinforcement Learning for Electric Vehicle Charging Scheduling: A Systematic Review. Transp. Res. Part E Logist. Transp. Rev. 2024, 190, 103698.

25. Zhang, Q.; Wang, Y. Correlated Information Scheduling in Industrial Internet of Things Based on Multi-Heterogeneous-Agent-Reinforcement-Learning. IEEE Trans. Netw. Sci. Eng. 2023, 11, 1065–1076.

26. Yan, L.; Chen, X.; Chen, Y.; Wen, J. A Cooperative Charging Control Strategy for Electric Vehicles Based on Multiagent Deep Reinforcement Learning. IEEE Trans. Ind. Inform. 2022, 18, 8765–8775.

27. Park, K.; Moon, I. Multi-Agent Deep Reinforcement Learning Approach for EV Charging Scheduling in a Smart Grid. Appl. Energy 2022, 328, 120111.

28. Li, H.; Han, B.; Li, G.; Wang, K.; Xu, J.; Khan, M.W. Decentralized Collaborative Optimal Scheduling for EV Charging Stations Based on Multi-Agent Reinforcement Learning. IET Gener. Transm. Distrib. 2024, 18, 1172–1183.

29. Gao, Y.; Wang, W.; Yu, N. Consensus Multi-Agent Reinforcement Learning for Volt-Var Control in Power Distribution Networks. IEEE Trans. Smart Grid 2021, 12, 3594–3604.

30. Liang, Y.; Ding, Z.; Zhao, T.; Lee, W.J. Real-Time Operation Management for Battery Swapping-Charging System via Multi-Agent Deep Reinforcement Learning. IEEE Trans. Smart Grid 2022, 14, 559–571.

31. Nordpool Group. Historical Market Data. 2024. Available online: https://www.nordpoolgroup.com/ (accessed on 22 September 2024).

32. Viegas, M.A.A.; da Costa, C.T., Jr. Fuzzy Logic Controllers for Charging/Discharging Management of Battery Electric Vehicles in a Smart Grid. J. Control Autom. Electr. Syst. 2021, 32, 1214–1227.

33. Ren, L.; Yuan, M.; Jiao, X. Electric Vehicle Charging and Discharging Scheduling Strategy Based on Dynamic Electricity Price. Eng. Appl. Artif. Intell. 2023, 123, 106320.

34. Mhaisen, N.; Fetais, N.; Massoud, A. Real-Time Scheduling for Electric Vehicles Charging/Discharging Using Reinforcement Learning. In Proceedings of the IEEE International Conference on Informatics, IoT, and Enabling Technologies (ICIoT), Doha, Qatar, 2–5 February 2020; pp. 1–6.

| Parameter | Distribution |
|---|---|
| Arrival Time (hour) | |
| Departure Time (hour) | |
| Initial SoC | |
| Target SoC | |

| Parameter | Value |
|---|---|
| Actor Learning Rate | |
| Critic Learning Rate | |
| Discount Factor | 0.98 |
| Target Network Soft Update Rate | 0.005 |
| Replay Buffer Size | 100,000 |
| Minimum Replay Buffer Size | 2000 |
| Batch Size B | 128 |
| Total Training Episodes E | 2000 |
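
Two of the hyperparameters above enter the standard DDPG-style update rules directly: the discount factor appears in the critic's temporal-difference target, and the soft update rate controls how slowly the target networks track the online ones. The sketch below shows both with plain Python lists standing in for network parameters. The function names are hypothetical, and the paper's own implementation may differ.

```python
GAMMA = 0.98   # discount factor from the table above
TAU = 0.005    # target network soft update rate from the table above

def td_target(reward, next_q, done, gamma=GAMMA):
    """Critic target y = r + gamma * Q_target(s', a'), with no bootstrap at episode end."""
    return reward + gamma * (0.0 if done else next_q)

def soft_update(target_params, online_params, tau=TAU):
    """Polyak averaging: theta_target <- tau * theta + (1 - tau) * theta_target."""
    return [tau * w + (1.0 - tau) * w_t
            for w_t, w in zip(target_params, online_params)]

target = [0.0, 1.0]
online = [1.0, 1.0]
target = soft_update(target, online)
print(target)
print(td_target(reward=1.0, next_q=10.0, done=False))
```

With a rate of 0.005, each update moves a target parameter only 0.5% of the way toward its online counterpart, which is what keeps the critic targets slowly varying.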

| Parameter | Value |
|---|---|
| Number of EVs | 5 |
| EV Battery Capacity Range | 25–40 kWh |
| Max Charging Power per EV | 4.0–8.0 kW |
| Total Power Constraint | 25.0 kW |
| Grid Energy Capacity | 30 kWh |
| Time Slot Duration | 1 h |
| SoC Constraints | [0.1, 1.0] |
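
With five EVs and per-vehicle limits of up to 8.0 kW, the requested powers can sum to more than the 25.0 kW total constraint. How the paper enforces the constraint is not shown here, so the following is only one plausible sketch: clip each request to its per-EV limit, then scale all requests proportionally if the total is still too high. It considers charging only (non-negative power), and the function name and example limits are hypothetical.

```python
def enforce_power_limits(requested_kw, p_max_kw, total_limit_kw=25.0):
    """Clip each request to [0, p_max], then rescale if the sum exceeds the total limit."""
    clipped = [max(0.0, min(p, p_max)) for p, p_max in zip(requested_kw, p_max_kw)]
    total = sum(clipped)
    if total <= total_limit_kw:
        return clipped
    # Proportional scaling preserves the relative shares of the agents.
    scale = total_limit_kw / total
    return [p * scale for p in clipped]

# Illustrative per-EV limits within the 4.0-8.0 kW range; every EV requests its maximum.
limits = [4.0, 5.0, 6.0, 7.0, 8.0]
feasible = enforce_power_limits(requested_kw=limits, p_max_kw=limits)
print([round(p, 3) for p in feasible], round(sum(feasible), 3))
```

Proportional scaling is simple and treats agents evenly. A priority rule based on urgency, such as time to departure, would be an alternative design.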

Cumulative Cost (GBP)

| Scheduling Algorithm | Day 5 | Day 10 | Day 15 | Day 20 | Day 25 | Day 30 |
|---|---|---|---|---|---|---|
| MADDPG | 3.6043 | 9.0806 | 13.9893 | 13.3423 | 23.0675 | 29.4552 |
| MADQN | 3.9888 | 10.4051 | 15.9883 | 16.4228 | 26.8537 | 34.3866 |
| MAQL | 6.3302 | 13.6059 | 20.0723 | 23.8202 | 35.4404 | 42.6238 |
| Greedy | 7.0304 | 14.9308 | 22.6946 | 28.1631 | 40.8343 | 50.0182 |
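
A relative cost reduction can be derived from the Day 30 column as (baseline − MADDPG) / baseline. The short sketch below applies this to each baseline in the table. The variable and function names are illustrative. The headline percentage quoted in the contributions may rest on a different baseline or run, which the text here does not specify, so the values computed from this table need not coincide with it.

```python
# Day 30 cumulative costs (GBP), copied from the table above.
day30 = {"MADDPG": 29.4552, "MADQN": 34.3866, "MAQL": 42.6238, "Greedy": 50.0182}

def relative_reduction(ours, baseline):
    """Fraction by which `ours` undercuts `baseline`."""
    return (baseline - ours) / baseline

reductions = {
    name: relative_reduction(day30["MADDPG"], cost)
    for name, cost in day30.items()
    if name != "MADDPG"
}
for name, r in reductions.items():
    print(f"MADDPG vs {name}: {100 * r:.1f}% lower cumulative cost")
```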

Daily Cost (GBP)

| Scheduling Algorithm | Mean | Median | IQR (Q1–Q3) | Worst Case | Best Case | Std. Dev. |
|---|---|---|---|---|---|---|
| MADDPG | 0.986 | 1.121 | 0.704–1.521 | 2.250 | −1.318 | 0.903 |
| MADQN | 1.149 | 1.272 | 0.950–1.558 | 2.560 | −0.788 | 0.886 |
| MAQL | 1.442 | 1.354 | 1.110–1.746 | 2.669 | 0.203 | 0.670 |
| Greedy | 1.670 | 1.655 | 1.360–1.974 | 2.735 | 0.507 | 0.626 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zeng, H.; Huang, Y.; Zhan, K.; Yu, Z.; Zhu, H.; Li, F. Multi-Agent DDPG-Based Multi-Device Charging Scheduling for IIoT Smart Grids. Sensors 2025, 25, 5226. https://doi.org/10.3390/s25175226
