Intention Prediction for Active Upper-Limb Exoskeletons in Industrial Applications: A Systematic Literature Review

Abstract

1. Introduction

2. Background

2.1. Active Upper-Limb Industrial Exoskeletons

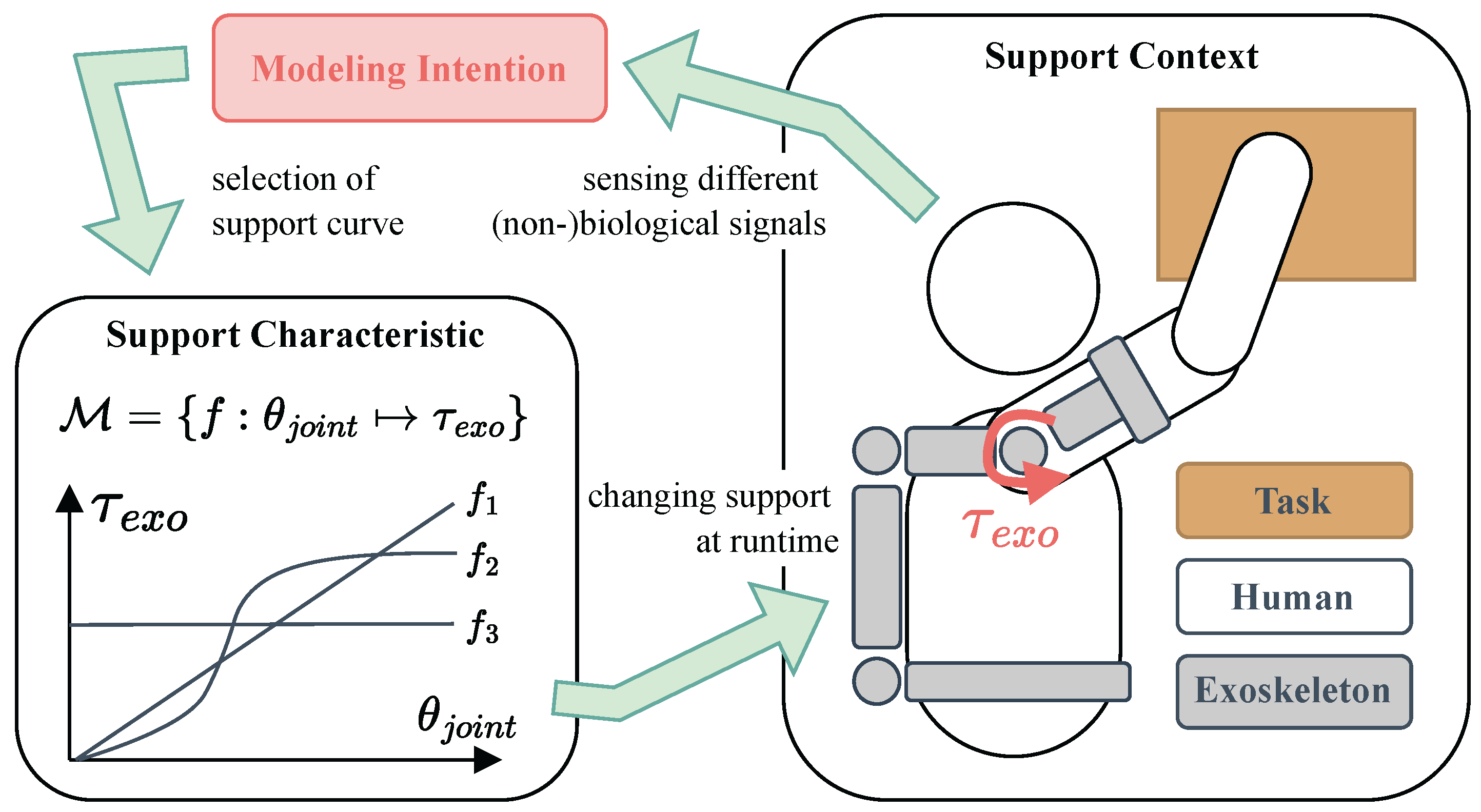

2.2. Intention Prediction

3. Research Objectives and Methodology

3.1. Objectives and Dimensions

3.2. Methodology

3.2.1. Eligibility Criteria

3.2.2. Databases and Search Strategy

3.2.3. Selection Process

3.2.4. Data Collection and Synthesis

4. Results

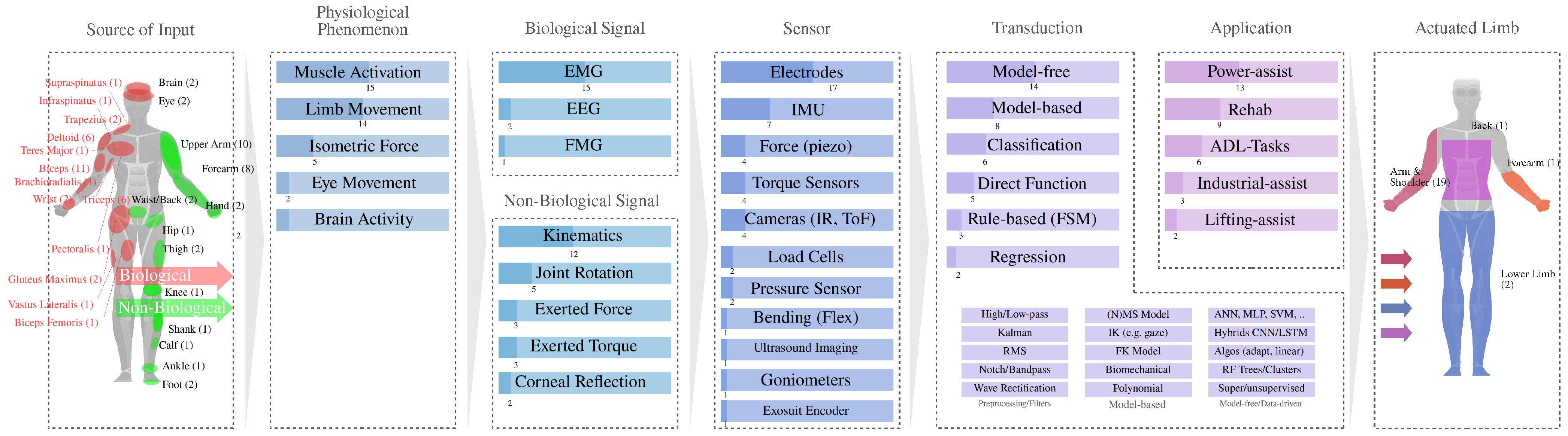

4.1. Intention Cues

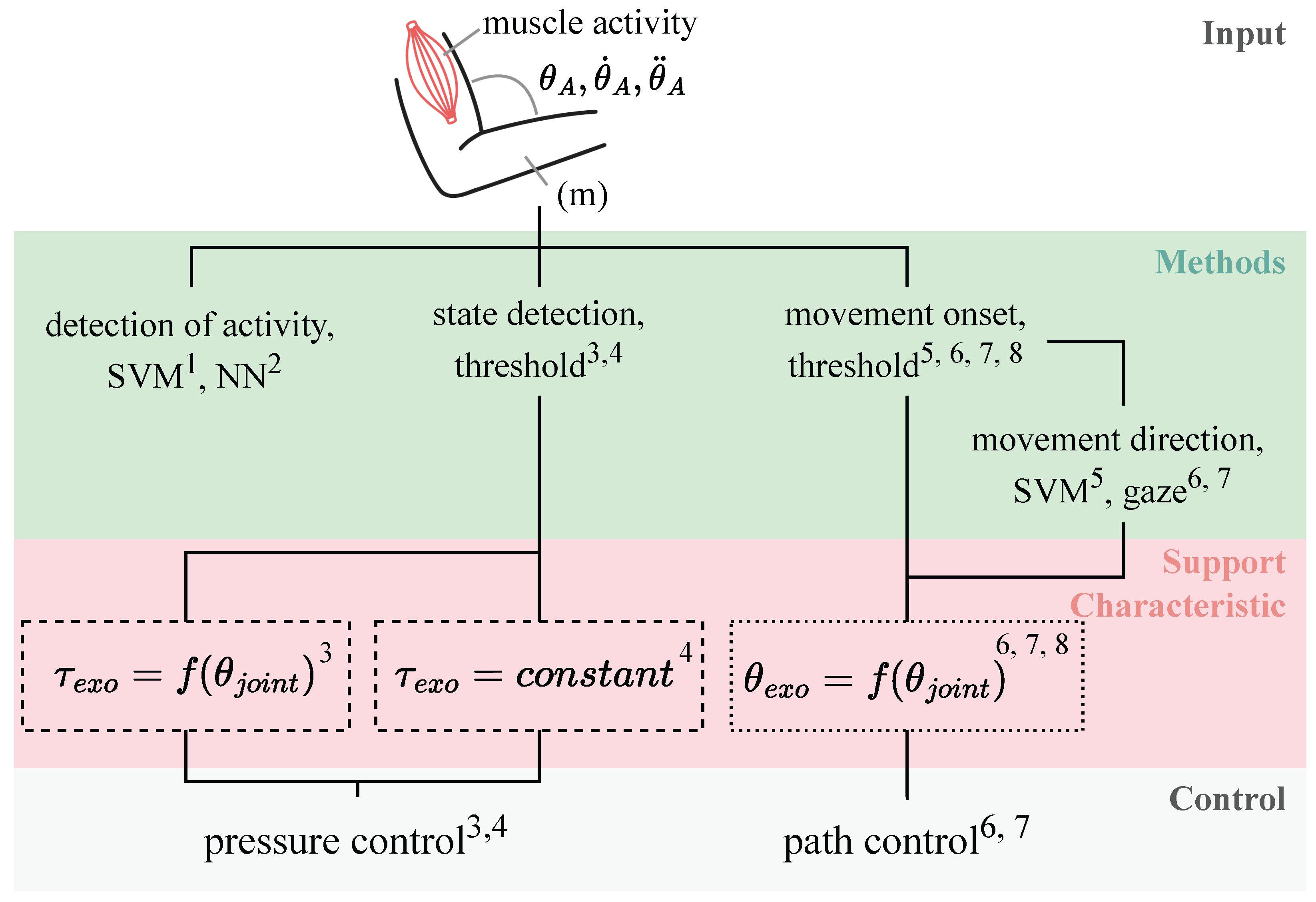

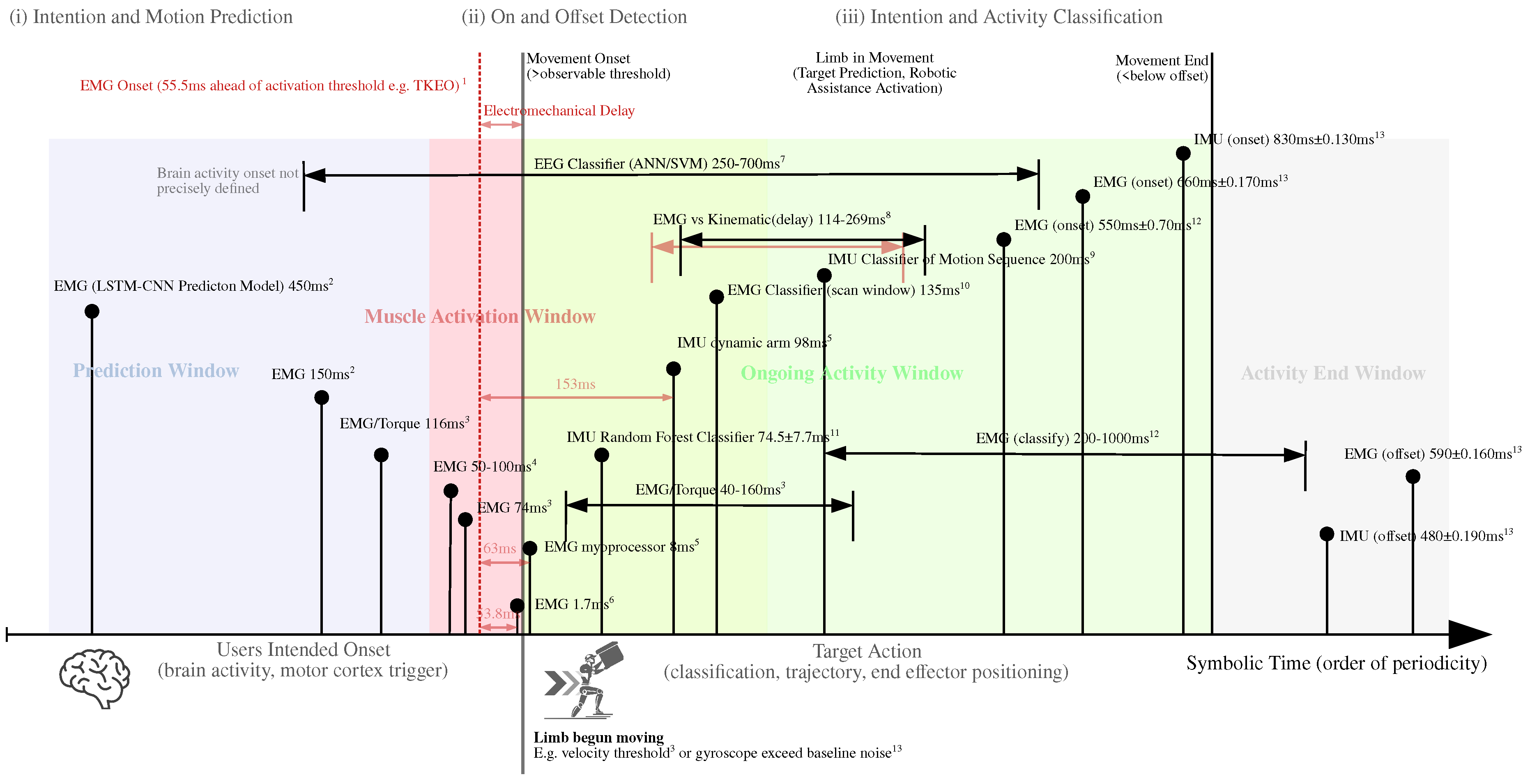

4.1.1. Intention and Activity Prediction

4.1.2. Detect Onset and Offset of Activity

4.1.3. Intention and Activity Classification

4.2. Sensors and Signals

4.2.1. Biological Signals

4.2.2. Non-Biological Signals

4.3. Computation and Models

4.3.1. Classification

4.3.2. Model-Based Regression

4.3.3. Model-Free Regression

4.4. Time

4.4.1. System Response Time

4.4.2. Analysis Times

4.5. Support Characteristic

4.6. Users and Evaluation

5. Discussion

5.1. Intention Cues

5.2. Sensors and Signals

5.3. Computation and Models

5.4. Time

5.5. Exoskeleton Implementations

6. Future Research and Recommendations

- EMG-based muscle activity of target, antagonist, and non-target muscle groups to assess whole-body load and stress distribution.

- Motion capture-based kinematic changes in whole-body movements, analyzed via principal component analysis (PCA); see, for example, [114].

- Somatic indicators such as metabolic cost (via respirometry), heart rate, and skin temperature.

- Functional task performance (execution time and execution quality).
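As an illustration of the PCA-based movement analysis named in the list above, the following is a minimal sketch and not the procedure of [114]. It assumes a hypothetical recording of three joint angles per frame (the synthetic `demo_postures` data and all function names are illustrative) and extracts only the first principal component, using power iteration on the covariance matrix.

```python
import math
import random

def demo_postures(frames=100):
    """Synthetic (assumed) posture data: [shoulder, elbow, trunk] angles in degrees."""
    return [[30.0 * math.sin(0.1 * t),   # shoulder elevation
             15.0 * math.sin(0.1 * t),   # elbow flexion, coupled to the shoulder
             0.5 * math.cos(0.37 * t)]   # small, mostly independent trunk sway
            for t in range(frames)]

def center(rows):
    """Subtract the per-column mean from every frame."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    return [[r[j] - means[j] for j in range(d)] for r in rows]

def covariance(centered):
    """Sample covariance matrix (d x d) of already-centered data."""
    n, d = len(centered), len(centered[0])
    return [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
            for i in range(d)]

def first_component(cov, iterations=200, seed=0):
    """Leading eigenvector and eigenvalue of cov via power iteration."""
    rng = random.Random(seed)
    d = len(cov)
    v = [rng.uniform(0.1, 1.0) for _ in range(d)]  # arbitrary non-zero start
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigenvalue = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    return v, eigenvalue

def explained_variance_ratio(cov, eigenvalue):
    """Share of total variance (trace of cov) captured by one component."""
    return eigenvalue / sum(cov[i][i] for i in range(len(cov)))

cov = covariance(center(demo_postures()))
pc1, variance = first_component(cov)
print("PC1 loadings:", [round(x, 3) for x in pc1])
print("explained variance ratio:", round(explained_variance_ratio(cov, variance), 4))
```

Comparing the loadings and explained variance of such components between conditions with and without exoskeleton support is one way kinematic changes could be quantified. A real analysis would typically use a numerical library and retain several components.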

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Detailed Results

Appendix A.1. Biological Signals

| Elbow Torque | Elbow Shoulder Torque | Elbow Shoulder Angle | Motion Classification | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Muscle | [70] | [64] | [63] | [65] | [7] | [53] | [68] | [75] | [59] | [54] | [57] | [67] | [50] | |

| Upper Limb | Biceps Brachii | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||

| Triceps Brachii | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||||

| Brachioradialis | ✓ | |||||||||||||

| Anterior Deltoid | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||||

| Posterior Deltoid | ✓ | ✓ | ✓ | ✓ | ||||||||||

| Medial Deltoid | ✓ | ✓ | ✓ | ✓ | ||||||||||

| Lateral Deltoid | ✓ | |||||||||||||

| Supraspinatus | ✓ | |||||||||||||

| Infraspinatus | ✓ | |||||||||||||

| Teres Major | ✓ | |||||||||||||

| Pectoralis Major | ✓ | |||||||||||||

| Trapezius | ✓ | ✓ | ||||||||||||

| Wrist Flexor | ✓ | |||||||||||||

| Wrist Extensor | ✓ | |||||||||||||

| Lower Limb | Gluteus Maximus | ✓ | ✓ | |||||||||||

| Biceps Femoris | ✓ | |||||||||||||

| Vastus Lateralis | ✓ | |||||||||||||

Appendix A.2. Users and Evaluation

| | Ref. | Model Type | Evaluation Metric | Result |
|---|---|---|---|---|
| Classification | [52] | SVM; activity classification | accuracy; offline | 73.1% |
| | [54] | SVM; activity classification | accuracy; offline | 90–98% |
| | | | accuracy; online | 81–99% |
| | [59] | SVM; movement intention | accuracy | 93.03% |
| | | SVM; movement direction | accuracy | 78.28% |
| | [51] | Random Forest | accuracy | no support; support |
| | [55] | thresholding; kinematics-based | accuracy | 99% |
| | [57] | thresholding; kinematics-based | accuracy | 92.6% |
| | | thresholding; EMG-based | accuracy | 96.2% |
| | [67] | thresholding; EMG/force-based | false positives; online | 3.9% |
| | [50] | thresholding; kinematics/force-based | accuracy | 100% unimpaired; 0% impaired |
| | [75] | thresholding; kinematics-based | accuracy | 98.7–99.7% |
| | | thresholding; EMG-based | accuracy | 57.4–95.6% |
| Regression | [53] | biomechanical model | R² | elbow shoulder |
| | | | RMSE | elbow shoulder |
| | [63] | biomechanical model | R² | |
| | | | RMSE | |
| | [65] | biomechanical model | correlation coefficient | 98.04% |
| | | | RMSE | 1.90 Nm |
| | [71] | biomechanical model | RMSE | |
| | [73] | mechanical geodesics model | RMSE | 0.02–0.13 mm |
| | [51] | Random Forest | R² | |
| | | | RMSE | |
| | [60] | Extreme Learning Machine | RMSE | ° |
| | [68] | hybrid CNN-LSTM model | accuracy | 91.2% |
| | [69] | NARX neural network | RMSE | 0.87–1.1° |
| | [70] | multilayer neural network | mean absolute error | 0.58 at 0 kg; 1.53 at 5 lb |
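The evaluation metrics named in the table above follow standard definitions. The sketch below shows how they are commonly computed. The function names and sample values are illustrative assumptions, not data from the reviewed studies, and individual papers may define R² differently (for example, as a squared correlation coefficient).

```python
import math

def accuracy(true_labels, predicted_labels):
    """Fraction of samples whose predicted class matches the true class."""
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)

def rmse(measured, predicted):
    """Root mean square error, in the unit of the signal (e.g., Nm or degrees)."""
    squared = sum((m - p) ** 2 for m, p in zip(measured, predicted))
    return math.sqrt(squared / len(measured))

def mean_absolute_error(measured, predicted):
    """Mean of the absolute prediction errors."""
    return sum(abs(m - p) for m, p in zip(measured, predicted)) / len(measured)

def r_squared(measured, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_m = sum(measured) / len(measured)
    ss_res = sum((m - p) ** 2 for m, p in zip(measured, predicted))
    ss_tot = sum((m - mean_m) ** 2 for m in measured)
    return 1.0 - ss_res / ss_tot

# Hypothetical example values (not taken from any reviewed study).
torque_measured = [1.0, 2.0, 3.0, 4.0]    # Nm
torque_predicted = [1.5, 2.0, 2.5, 4.0]   # Nm
labels_true = ["lift", "rest", "lift", "reach"]
labels_pred = ["lift", "rest", "reach", "reach"]

print("accuracy:", accuracy(labels_true, labels_pred))
print("RMSE:", round(rmse(torque_measured, torque_predicted), 4))
print("MAE:", mean_absolute_error(torque_measured, torque_predicted))
print("R^2:", round(r_squared(torque_measured, torque_predicted), 4))
```

Because RMSE and mean absolute error carry the unit of the predicted quantity, values reported for torque (Nm), angle (°), and position (mm) are not directly comparable across studies.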

References

1. Weidner, R.; Linnenberg, C.; Hoffmann, N.; Prokop, G.; Edwards, V. Exoskelette für den industriellen Kontext: Systematisches Review und Klassifikation. In Proceedings of the Gesellschaft für Arbeitswissenschaft e.V.: Dokumentation des 66. Arbeitswissenschaftlichen Kongresses, Berlin, Germany, 16–18 March 2020.

2. de Looze, M.P.; Bosch, T.; Krause, F.; Stadler, K.S.; O’Sullivan, L.W. Exoskeletons for industrial application and their potential effects on physical work load. Ergonomics 2016, 59, 671–681.

3. Bär, M.; Steinhilber, B.; Rieger, M.A.; Luger, T. The influence of using exoskeletons during occupational tasks on acute physical stress and strain compared to no exoskeleton—A systematic review and meta-analysis. Appl. Ergon. 2021, 94, 103385.

4. European Agency for Safety and Health at Work; IKEI; Panteia; de Kok, J.; Vroonhof, P.; Snijders, J.; Roullis, G.; Clarke, M.; Peereboom, K.; van Dorst, P.; et al. Work-Related Musculoskeletal Disorders: Prevalence, Costs and Demographics in the EU; Publications Office: Luxembourg, 2019.

5. Ott, O.; Ralfs, L.; Weidner, R. Framework for qualifying exoskeletons as adaptive support technology. Front. Robot. AI 2022, 9, 951382.

6. Wandke, H. Assistance in human–machine interaction: A conceptual framework and a proposal for a taxonomy. Theor. Issues Ergon. Sci. 2005, 6, 129–155.

7. Dinh, B.K.; Xiloyannis, M.; Antuvan, C.W.; Cappello, L.; Masia, L. Hierarchical Cascade Controller for Assistance Modulation in a Soft Wearable Arm Exoskeleton. IEEE Robot. Autom. Lett. 2017, 2, 1786–1793.

8. De Bock, S.; Ghillebert, J.; Govaerts, R.; Elprama, S.A.; Marusic, U.; Serrien, B.; Jacobs, A.; Geeroms, J.; Meeusen, R.; De Pauw, K. Passive Shoulder Exoskeletons: More Effective in the Lab Than in the Field? IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 173–183.

9. Wang, W.; Li, R.; Chen, Y.; Jia, Y. Human Intention Prediction in Human-Robot Collaborative Tasks. In Proceedings of the Companion of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, 5–8 March 2018; pp. 279–280.

10. Wang, W.; Li, R.; Chen, Y.; Sun, Y.; Jia, Y. Predicting Human Intentions in Human–Robot Hand-Over Tasks Through Multimodal Learning. IEEE Trans. Autom. Sci. Eng. 2022, 19, 2339–2353.

11. Bi, L.; Feleke, A.G.; Guan, C. A review on EMG-based motor intention prediction of continuous human upper limb motion for human-robot collaboration. Biomed. Signal Process. Control 2019, 51, 113–127.

12. Wei, Z.; Zhang, Z.Q.; Xie, S.Q. Continuous Motion Intention Prediction Using sEMG for Upper-Limb Rehabilitation: A Systematic Review of Model-Based and Model-Free Approaches. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 32, 1487–1504.

13. Zongxing, L.; Jie, Z.; Ligang, Y.; Jinshui, C.; Hongbin, L. The Human–Machine Interaction Methods and Strategies for Upper and Lower Extremity Rehabilitation Robots: A Review. IEEE Sens. J. 2024, 24, 13773–13787.

14. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

15. Kim, S.; Nussbaum, M.A.; Smets, M. Usability, User Acceptance, and Health Outcomes of Arm-Support Exoskeleton Use in Automotive Assembly: An 18-month Field Study. JOEM 2022, 64, 202–211.

16. Siedl, S.M.; Mara, M. What Drives Acceptance of Occupational Exoskeletons? Focus Group Insights from Workers in Food Retail and Corporate Logistics. Int. J. Hum.-Comput. Interact. 2022, 39, 4080–4089.

17. Nasr, A.; Ferguson, S.; McPhee, J. Model-Based Design and Optimization of Passive Shoulder Exoskeletons. J. Comput. Nonlinear Dyn. 2022, 17, 051004.

18. Gantenbein, J.; Dittli, J.; Meyer, J.T.; Gassert, R.; Lambercy, O. Intention Detection Strategies for Robotic Upper-Limb Orthoses: A Scoping Review Considering Usability, Daily Life Application, and User Evaluation. Front. Neurorobot. 2022, 16, 815693.

19. Li, J.; Gu, X.; Qiu, S.; Zhou, X.; Cangelosi, A.; Loo, C.K.; Liu, X. A Survey of Wearable Lower Extremity Neurorehabilitation Exoskeleton: Sensing, Gait Dynamics, and Human–Robot Collaboration. IEEE Trans. Syst. Man. Cybern. Syst. 2024, 54, 3675–3693.

20. Luo, S.; Meng, Q.; Li, S.; Yu, H. Research of Intent Recognition in Rehabilitation Robots: A Systematic Review. Disabil. Rehabil. Assist. Technol. 2024, 19, 1307–1318.

21. Smith, G.B.; Belle, V.; Petrick, R.P.A. Intention Recognition with ProbLog. Front. Artif. Intell. 2022, 5, 806262.

22. Shibata, Y.; Tsuji, K.; Loo, C.K.; Sun, G.; Yamazaki, Y.; Hashimoto, T. Vision-based HRV Measurement for HRI Study. In Proceedings of the 2018 IEEE Symposium Series on Computational Intelligence (SSCI), Bengaluru, India, 18–21 November 2018; pp. 1779–1784.

23. Kothig, A.; Muñoz, J.; Akgun, S.A.; Aroyo, A.M.; Dautenhahn, K. Connecting Humans and Robots Using Physiological Signals—Closing-the-Loop in HRI. In Proceedings of the 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN), Vancouver, BC, Canada, 8–12 August 2021; pp. 735–742.

24. Mulla, D.M.; Keir, P.J. Neuromuscular control: From a biomechanist’s perspective. Front. Sports Act. Living 2023, 5, 1217009.

25. Asghar, A.; Jawaid Khan, S.; Azim, F.; Shakeel, C.S.; Hussain, A.; Niazi, I.K. Review on electromyography based intention for upper limb control using pattern recognition for human-machine interaction. Proc. Inst. Mech. Eng. H 2022, 236, 628–645.

26. Belardinelli, A. Gaze-Based Intention Estimation: Principles, Methodologies, and Applications in HRI. ACM Trans. Hum.-Robot Interact. 2024, 13, 1–30.

27. Canning, C.; Scheutz, M. Functional near-infrared spectroscopy in human-robot interaction. ACM Trans. Hum.-Robot Interact. 2013, 2, 62–84.

28. Heredia, J.; Lopes-Silva, E.; Cardinale, Y.; Diaz-Amado, J.; Dongo, I.; Graterol, W.; Aguilera, A. Adaptive Multimodal Emotion Detection Architecture for Social Robots. IEEE Access 2022, 10, 20727–20744.

29. Schröder, T.; Stewart, T.C.; Thagard, P. Intention, Emotion, and Action: A Neural Theory Based on Semantic Pointers. Cogn. Sci. 2014, 38, 851–880.

30. Cohen, A.O.; Dellarco, D.V.; Breiner, K.; Helion, C.; Heller, A.S.; Rahdar, A.; Pedersen, G.; Chein, J.; Dyke, J.P.; Galvan, A.; et al. The Impact of Emotional States on Cognitive Control Circuitry and Function. J. Cogn. Neurosci. 2016, 28, 446–459.

31. Admoni, H.; Scassellati, B. Social eye gaze in human-robot interaction: A review. ACM Trans. Hum.-Robot Interact. 2017, 6, 25–63.

32. Lübbert, A.; Göschl, F.; Krause, H.; Schneider, T.R.; Maye, A.; Engel, A.K. Socializing Sensorimotor Contingencies. Front. Hum. Neurosci. 2021, 15, 624610.

33. Bremers, A.; Pabst, A.; Parreira, M.T.; Ju, W. Using Social Cues to Recognize Task Failures for HRI: Overview, State-of-the-Art, and Future Directions. arXiv 2024, arXiv:2301.11972.

34. Wyer, R.S.; Srull, T.K. Human cognition in its social context. Psychol. Rev. 1986, 93, 322–359.

35. Niemann, F.; Lüdtke, S.; Bartelt, C.; Hompel, M. Context-Aware Human Activity Recognition in Industrial Processes. Sensors 2021, 22, 134.

36. Riboni, D.; Bettini, C. OWL 2 modeling and reasoning with complex human activities. PMC 2011, 7, 379–395.

37. Wei, D.; Chen, L.; Zhao, L.; Zhou, H.; Huang, B. A Vision-Based Measure of Environmental Effects on Inferring Human Intention During Human Robot Interaction. IEEE Sens. J. 2022, 22, 4246–4256.

38. Danner, U.N.; Aarts, H.; de Vries, N.K. Habit vs. intention in the prediction of future behaviour: The role of frequency, context stability and mental accessibility of past behaviour. Br. J. Soc. Psychol. 2008, 47, 245–265.

39. Wang, X.; Shen, H.; Yu, H.; Guo, J.; Wei, X. Hand and Arm Gesture-based Human-Robot Interaction: A Review. In Proceedings of the 6th International Conference on Algorithms, Computing and Systems, ICACS ’22, Larissa, Greece, 16–18 September 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 1–7.

40. Shrinah, A.; Bahraini, M.S.; Khan, F.; Asif, S.; Lohse, N.; Eder, K. On the Design of Human-Robot Collaboration Gestures. arXiv 2024, arXiv:2402.19058.

41. Koochaki, F.; Najafizadeh, L. Predicting Intention Through Eye Gaze Patterns. In Proceedings of the 2018 IEEE Biomedical Circuits and Systems Conference (BioCAS), Cleveland, OH, USA, 17–19 October 2018; pp. 1–4.

42. Menolotto, M.; Komaris, D.S.; Tedesco, S.; O’Flynn, B.; Walsh, M. Motion Capture Technology in Industrial Applications: A Systematic Review. Sensors 2020, 20, 5687.

43. Ji, Y.; Yang, Y.; Shen, F.; Shen, H.T.; Li, X. A Survey of Human Action Analysis in HRI Applications. IEEE Trans. Circ. Syst. Video Technol. 2020, 30, 2114–2128.

44. Zunino, A.; Cavazza, J.; Koul, A.; Cavallo, A.; Becchio, C.; Murino, V. Predicting Human Intentions from Motion Cues Only: A 2D+3D Fusion Approach. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 27–31 October 2017; pp. 591–599.

45. Rinchi, O.; Ghazzai, H.; Alsharoa, A.; Massoud, Y. LiDAR Technology for Human Activity Recognition: Outlooks and Challenges. IEEE IoTM 2023, 6, 143–150.

46. Farina, D.; Merletti, R.; Enoka, R.M. The extraction of neural strategies from the surface EMG. J. Appl. Physiol. 2004, 96, 1486–1495.

47. Bello, V.; Bodo, E.; Pizzurro, S.; Merlo, S. In Vivo Recognition of Vascular Structures by Near-Infrared Transillumination. Proceedings 2019, 42, 24.

48. Zhang, S.; Li, Y.; Zhang, S.; Shahabi, F.; Xia, S.; Deng, Y.; Alshurafa, N. Deep Learning in Human Activity Recognition with Wearable Sensors: A Review on Advances. Sensors 2022, 22, 1476.

49. Beck, M.; Pöppel, K.; Spanring, M.; Auer, A.; Prudnikova, O.; Kopp, M.; Klambauer, G.; Brandstetter, J.; Hochreiter, S. xLSTM: Extended Long Short-Term Memory. arXiv 2024, arXiv:2405.04517.

50. Zabaleta, H.; Bureau, M.; Eizmendi, G.; Olaiz, E.; Medina, J.; Perez, M. Exoskeleton design for functional rehabilitation in patients with neurological disorders and stroke. In Proceedings of the 2007 IEEE 10th International Conference on Rehabilitation Robotics, Noordwijk, The Netherlands, 13–15 June 2007; pp. 112–118.

51. Zhang, X.; Tricomi, E.; Missiroli, F.; Lotti, N.; Masia, L. Real-Time Assistive Control via IMU Locomotion Mode Detection in a Soft Exosuit: An Effective Approach to Enhance Walking Metabolic Efficiency. IEEE/ASME Trans. Mechatron. 2023, 29, 1797–1808.

52. Bandara, D.S.V.; Arata, J.; Kiguchi, K. A noninvasive brain-computer interface approach for predicting motion intention of activities of daily living tasks for an upper-limb wearable robot. Int. J. Adv. Robot. Syst. 2018, 15, 1729881418767310.

53. Buongiorno, D.; Barsotti, M.; Barone, F.; Bevilacqua, V.; Frisoli, A. A Linear Approach to Optimize an EMG-Driven Neuromusculoskeletal Model for Movement Intention Detection in Myo-Control: A Case Study on Shoulder and Elbow Joints. Front. Neurorobot. 2018, 12, e00074.

54. Chen, B.; Zhou, Y.; Chen, C.; Sayeed, Z.; Hu, J.; Qi, J.; Frush, T.; Goitz, H.; Hovorka, J.; Cheng, M.; et al. Volitional control of upper-limb exoskeleton empowered by EMG sensors and machine learning computing. Array 2023, 17, 100277.

55. Gandolla, M.; Luciani, B.; Pirovano, D.; Pedrocchi, A.; Braghin, F. A force-based human machine interface to drive a motorized upper limb exoskeleton. a pilot study. In Proceedings of the 2022 International Conference on Rehabilitation Robotics (ICORR), Rotterdam, The Netherlands, 25–29 July 2022; pp. 1–6.

56. Gao, Y.; Su, Y.; Dong, W.; Du, Z.; Wu, Y. Intention detection in upper limb kinematics rehabilitation using a GP-based control strategy. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 5032–5038.

57. Heo, U.; Feng, J.; Kim, S.J.; Kim, J. sEMG-Triggered Fast Assistance Strategy for a Pneumatic Back Support Exoskeleton. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 2175–2185.

58. Huo, W.; Huang, J.; Wang, Y.; Wu, J.; Cheng, L. Control of upper-limb power-assist exoskeleton based on motion intention recognition. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 2243–2248.

59. Irastorza-Landa, N.; Sarasola-Sanz, A.; López-Larraz, E.; Bibián, C.; Shiman, P.; Birbaumer, N.; Ramos-Murguialday, A. Design of continuous EMG classification approaches towards the control of a robotic exoskeleton in reaching movements. In Proceedings of the 2017 International Conference on Rehabilitation Robotics (ICORR), London, UK, 17–20 July 2017; pp. 128–133.

60. Khan, A.M.; Yun, D.W.; Zuhaib, K.M.; Iqbal, J.; Yan, R.J.; Khan, F.; Han, C. Estimation of Desired Motion Intention and Compliance Control for Upper Limb Assist Exoskeleton. Int. J. Control Autom. Syst. 2017, 15, 802–814.

61. Liao, Y.T.; Yang, H.; Lee, H.H.; Tanaka, E. Development and Evaluation of a Kinect-Based Motion Recognition System based on Kalman Filter for Upper-Limb Assistive Device. In Proceedings of the 2019 58th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Hiroshima, Japan, 10–13 September 2019; pp. 1621–1626.

62. Losey, D.P.; McDonald, C.G.; Battaglia, E.; O’Malley, M.K. A Review of Intent Detection, Arbitration, and Communication Aspects of Shared Control for Physical Human-Robot Interaction. Appl. Mech. Rev. 2018, 70, 010804.

63. Lotti, N.; Xiloyannis, M.; Durandau, G.; Galofaro, E.; Sanguineti, V.; Masia, L.; Sartori, M. Adaptive Model-Based Myoelectric Control for a Soft Wearable Arm Exosuit: A New Generation of Wearable Robot Control. Robot. Autom. Mag. 2020, 27, 43–53.

64. Lotti, N.; Xiloyannis, M.; Missiroli, F.; Bokranz, C.; Chiaradia, D.; Frisoli, A.; Riener, R.; Masia, L. Myoelectric or Force Control? A Comparative Study on a Soft Arm Exosuit. IEEE Trans. Robot. 2022, 38, 1363–1379.

65. Lu, L.; Wu, Q.; Chen, X.; Shao, Z.; Chen, B.; Wu, H. Development of a sEMG-based torque estimation control strategy for a soft elbow exoskeleton. Robot. Auton. Syst. 2019, 111, 88–98.

66. Novak, D.; Riener, R. Enhancing patient freedom in rehabilitation robotics using gaze-based intention detection. In Proceedings of the 2013 IEEE 13th International Conference on Rehabilitation Robotics (ICORR), Seattle, WA, USA, 24–26 June 2013; pp. 1–6.

67. Riener, R.; Novak, D. Movement Onset Detection and Target Estimation for Robot-Aided Arm Training. Automatisierungstechnik 2015, 63, 286–298.

68. Sedighi, P.; Li, X.; Tavakoli, M. EMG-Based Intention Detection Using Deep Learning for Shared Control in Upper-Limb Assistive Exoskeletons. IEEE Robot. Autom. Lett. 2024, 9, 41–48.

69. Sun, L.; An, H.; Ma, H.; Gao, J. Real-Time Human Intention Recognition of Multi-Joints Based on MYO. IEEE Access 2020, 8, 4235–4243.

70. Toro-Ossaba, A.; Tejada, J.C.; Rúa, S.; Núñez, J.D.; Peña, A. Myoelectric Model Reference Adaptive Control with Adaptive Kalman Filter for a soft elbow exoskeleton. Control Eng. Pract. 2024, 142, 105774.

71. Treussart, B.; Geffard, F.; Vignais, N.; Marin, F. Controlling an upper-limb exoskeleton by EMG signal while carrying unknown load. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 9107–9113.

72. Woo, J.H.; Sahithi, K.K.; Kim, S.T.; Choi, G.R.; Kim, B.S.; Shin, J.G.; Kim, S.H. Machine Learning Based Recognition of Elements in Lower-Limb Movement Sequence for Proactive Control of Exoskeletons to Assist Lifting. IEEE Access 2023, 11, 127107–127118.

73. Zarrin, R.S.; Zeiaee, A.; Langari, R.; Buchanan, J.J.; Robson, N. Towards autonomous ergonomic upper-limb exoskeletons: A computational approach for planning a human-like path. Robot. Auton. Syst. 2021, 145, 103843.

74. Zhang, L.; Liu, G.; Han, B.; Wang, Z.; Zhang, T.; Payandeh, S. sEMG Based Human Motion Intention Recognition. J. Robot. 2019, 2019, 3679174.

75. Zhou, Y.M.; Hohimer, C.; Proietti, T.; O’Neill, C.T.; Walsh, C.J. Kinematics-Based Control of an Inflatable Soft Wearable Robot for Assisting the Shoulder of Industrial Workers. IEEE Robot. Autom. Lett. 2021, 6, 2155–2162.

76. Lobo-Prat, J.; Kooren, P.N.; Stienen, A.H.; Herder, J.L.; Koopman, B.F.; Veltink, P.H. Non-invasive control interfaces for intention detection in active movement-assistive devices. J. Neuroeng. Rehabil. 2014, 11, 168.

77. Cavanagh, P.R.; Komi, P.V. Electromechanical delay in human skeletal muscle under concentric and eccentric contractions. Eur. J. Appl. Physiol. Occup. Physiol. 1979, 42, 159–163.

78. Bai, O.; Rathi, V.; Lin, P.; Huang, D.; Battapady, H.; Fei, D.Y.; Schneider, L.; Houdayer, E.; Chen, X.; Hallett, M. Prediction of human voluntary movement before it occurs. Clin. Neurophysiol. 2010, 122, 364.

79. Bonchek-Dokow, E.; Kaminka, G.A. Towards computational models of intention detection and intention prediction. Cogn. Syst. Res. 2014, 28, 44–79.

80. Tversky, A.; Kahneman, D.; Slovic, P. Judgment Under Uncertainty: Heuristics and Biases; Cambridge University Press: Cambridge, UK, 1982.

81. Gallese, V.; Goldman, A. Mirror neurons and the simulation theory of mind-reading. Trends Cogn. Sci. 1998, 2, 493–501.

82. Laferriere, P.; Lemaire, E.D.; Chan, A.D.C. Surface Electromyographic Signals Using Dry Electrodes. IEEE Trans. Instrum. Meas. 2011, 60, 3259–3268.

83. Rescio, G.; Sciurti, E.; Giampetruzzi, L.; Carluccio, A.M.; Francioso, L.; Leone, A. Preliminary Study on Wearable Smart Socks with Hydrogel Electrodes for Surface Electromyography-Based Muscle Activity Assessment. Sensors 2025, 25, 1618.

84. Martins, J.; Cerqueira, S.M.; Catarino, A.W.; da Silva, A.F.; Rocha, A.M.; Vale, J.; Ângelo, M.; Santos, C.P. Integrating sEMG and IMU Sensors in an e-Textile Smart Vest for Forward Posture Monitoring: First Steps. Sensors 2024, 24, 4717.

85. Ju, B.; Mark, J.I.; Youn, S.; Ugale, P.; Sennik, B.; Adcock, B.; Mills, A.C. Feasibility assessment of textile electromyography sensors for a wearable telehealth biofeedback system. Wearable Technol. 2025, 6, e26.

86. Hossain, G.; Rahman, M.; Hossain, I.Z.; Khan, A. Wearable socks with single electrode triboelectric textile sensors for monitoring footsteps. Sens. Actuators A Phys. 2022, 333, 113316.

87. Khan, A.; Rashid, M.; Grabher, G.; Hossain, G. Autonomous Triboelectric Smart Textile Sensor for Vital Sign Monitoring. ACS Appl. Mater. Interfaces 2024, 16, 31807–31816.

88. Alam, T.; Saidane, F.; Faisal, A.A.; Khan, A.; Hossain, G. Smart-textile strain sensor for human joint monitoring. Sens. Actuators A Phys. 2022, 341, 113587.

89. Cortesi, S.; Crabolu, M.; Mekikis, P.V.; Bellusci, G.; Vogt, C.; Magno, M. A Proximity-Based Approach for Dynamically Matching Industrial Assets and Their Operators Using Low-Power IoT Devices. IEEE Internet Things J. 2025, 12, 3350–3362.

90. Al-Okby, M.F.R.; Junginger, S.; Roddelkopf, T.; Thurow, K. UWB-Based Real-Time Indoor Positioning Systems: A Comprehensive Review. Appl. Sci. 2024, 14, 1005.

91. Wu, X.; Liang, J.; Yu, Y.; Li, G.; Yen, G.G.; Yu, H. Embodied Perception Interaction, and Cognition for Wearable Robotics: A Survey. IEEE Trans. Cogn. Dev. Syst. 2024, 1–18.

92. Pesenti, M.; Invernizzi, G.; Mazzella, J.; Bocciolone, M.; Pedrocchi, A.; Gandolla, M. IMU-based human activity recognition and payload classification for low-back exoskeletons. Sci. Rep. 2023, 13, 1184.

93. Niemann, F.; Reining, C.; Moya Rueda, F.; Nair, N.R.; Steffens, J.A.; Fink, G.A.; ten Hompel, M. LARa: Creating a Dataset for Human Activity Recognition in Logistics Using Semantic Attributes. Sensors 2020, 20, 4083.

94. Yoshimura, N.; Morales, J.; Maekawa, T.; Hara, T. OpenPack: A Large-Scale Dataset for Recognizing Packaging Works in IoT-Enabled Logistic Environments. In Proceedings of the 2024 IEEE International Conference on Pervasive Computing and Communications (PerCom), Biarritz, France, 11–15 March 2024; pp. 90–97.

95. Yan, H.; Tan, H.; Ding, Y.; Zhou, P.; Namboodiri, V.; Yang, Y. Language-centered Human Activity Recognition. arXiv 2024, arXiv:2410.00003.

96. Zhang, Z.; Yang, B.; Chen, X.; Shi, W.; Wang, H.; Luo, W.; Huang, J. MindEye-OmniAssist: A Gaze-Driven LLM-Enhanced Assistive Robot System for Implicit Intention Recognition and Task Execution. arXiv 2025, arXiv:2503.13250.

97. Ahmadi, E.; Wang, C. Interpretable Locomotion Prediction in Construction Using a Memory-Driven LLM Agent With Chain-of-Thought Reasoning. arXiv 2025, arXiv:2504.15263.

98. Zheng, T.; Glock, C.H.; Grosse, E.H. Opportunities for using eye tracking technology in manufacturing and logistics: Systematic literature review and research agenda. Comput. Ind. Eng. 2022, 171, 108444.

99. Pesenti, M.; Antonietti, A.; Gandolla, M.; Pedrocchi, A. Towards a Functional Performance Validation Standard for Industrial Low-Back Exoskeletons: State of the Art Review. Sensors 2021, 21, 808.

100. Ralfs, L.; Hoffmann, N.; Glitsch, U.; Heinrich, K.; Johns, J.; Weidner, R. Insights into evaluating and using industrial exoskeletons: Summary report, guideline, and lessons learned from the interdisciplinary project “Exo@Work”. Int. J. Ind. Ergon. 2023, 97, 103494.

101. Crea, S.; Beckerle, P.; Looze, M.D.; Pauw, K.D.; Grazi, L.; Kermavnar, T.; Masood, J.; O’Sullivan, L.W.; Pacifico, I.; Rodriguez-Guerrero, C.; et al. Occupational exoskeletons: A roadmap toward large-scale adoption. Methodology and challenges of bringing exoskeletons to workplaces. Wearable Technol. 2021, 2, 1729881418767310.

102. Lieck, L.; Anyfantis, I.; Irastorza, X.; Munar, L.; Müller, B.; Milczarek, M.; Cockburn, W.; Smith, A. Occupational Safety and Health in Europe—State and Trends 2023; Publications Office of the European Union: Luxembourg, 2023.

103. Hollnagel, E.; Wears, R.L.; Braithwaite, J. From Safety-I to Safety-II: A White Paper. The resilient health care net: Published simultaneously by the University of Southern Denmark, University of Florida, USA, and Macquarie University, Australia. 2015. Available online: https://www.england.nhs.uk/signuptosafety/wp-content/uploads/sites/16/2015/10/safety-1-safety-2-whte-papr.pdf (accessed on 13 July 2025).

104. Vanderhaegen, F. Erik Hollnagel: Safety-I and Safety-II, the past and future of safety management. Cogn. Tech. Work 2015, 17, 461–464.

105. Ray, A.; Kolekar, M.H. Transfer learning and its extensive appositeness in human activity recognition: A survey. Expert Syst. Appl. 2024, 240, 122538.

106. Brolin, A.; Thorvald, P.; Case, K. Experimental study of cognitive aspects affecting human performance in manual assembly. Prod. Manuf. Res. 2017, 5, 141–163.

107. Wood, W.; Mazar, A.; Neal, D.T. Habits and Goals in Human Behavior: Separate but Interacting Systems. Perspect. Psychol. Sci. 2022, 17, 590–605.

108. Li, H.; Zhang, Z.; Wang, W. HabitAction: A Video Dataset for Human Habitual Behavior Recognition. arXiv 2024, arXiv:2408.13463.

109. Siedl, S.M.; Mara, M.; Stiglbauer, B. The Role of Social Feedback in Technology Acceptance: A One-Week Diary Study with Exoskeleton Users at the Workplace. Int. J. Hum.-Comput. Interact. 2024, 13, 8210–8223.

110. Siedl, S.M.; Mara, M. Exoskeleton acceptance and its relationship to self-efficacy enhancement, perceived usefulness, and physical relief: A field study among logistics workers. Wearable Technol. 2021, 2, e10.

111. Davis, K.G.; Reid, C.R.; Rempel, D.D.; Treaster, D. Introduction to the human factors special issue on user-centered design for exoskeleton. Hum. Factors 2020, 62, 333–336.

112. Ralfs, L.; Hoffmann, N.; Weidner, R. Method and Test Course for the Evaluation of Industrial Exoskeletons. Appl. Sci. 2021, 11, 9614.

113. Kopp, V.; Holl, M.; Schalk, M.; Daub, U.; Bances, E.; García, B.; Schalk, I.; Siegert, J.; Schneider, U. Exoworkathlon: A prospective study approach for the evaluation of industrial exoskeletons. Wearable Technol. 2022, 3, e22.

114. Van Andel, S.; Mohr, M.; Schmidt, A.; Werner, I.; Federolf, P. Whole-body movement analysis using principal component analysis: What is the internal consistency between outcomes originating from the same movement simultaneously recorded with different measurement devices? Front. Bioeng. Biotechnol. 2022, 10, 1006670.

| Research Question | Analysis Dimensions |
|---|---|
| (RQ1) How? | |
| A. Sensing and Prediction Approach | |
| A1. What intention cues are used? | Intention Cue |
| A2. What types of sensors are employed? | Sensors |
| A3. What methods are applied to predict intention? | Method |
| B. Temporal Context Consideration | |
| B1. What temporal aspects are considered? | Timing |
| B2. How do sensors influence the temporal context? | Sensors |
| (RQ2) Why? | |
| C. Purpose and Targeting | |
| C1. What is the prediction objective? | Support Characteristic |
| C2. Who are the target users? | Target Users |
| D. Control and Evaluation | |
| D1. How is the intention prediction integrated into control? | Support Characteristic |
| D2. How is the system evaluated? | Evaluation, Target Users |

| Reference | Intention Cue | Sensors | Method | Timing | Support Characteristic | Target Users | Evaluation |
|---|---|---|---|---|---|---|---|
| Bandara et al. [52] | ✓ | ✓ | ✓ | ✓ | - | - | - |
| Bi et al. [11] | ✓ | ✓ | ✓ | - | - | - | ✓ |
| Buongiorno et al. [53] | - | ✓ | ✓ | - | ✓ | ✓ | - |
| Chen et al. [54] | ✓ | ✓ | ✓ | ✓ | - | - | - |
| Dinh et al. [7] | - | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Gandolla et al. [55] | ✓ | ✓ | ✓ | - | ✓ | ✓ | ✓ |
| Gantenbein et al. [18] | ✓ | ✓ | - | - | - | - | ✓ |
| Gao et al. [56] | - | ✓ | ✓ | - | ✓ | ✓ | ✓ |
| Heo et al. [57] | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Huo et al. [58] | - | ✓ | ✓ | - | ✓ | ✓ | ✓ |
| Irastorza-Landa et al. [59] | ✓ | ✓ | ✓ | ✓ | - | - | - |
| Khan et al. [60] | - | ✓ | ✓ | - | ✓ | ✓ | ✓ |
| Liao et al. [61] | - | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Losey et al. [62] | ✓ | ✓ | ✓ | ✓ | - | - | - |
| Lotti et al., 2020 [63] | ✓ | - | ✓ | ✓ | ✓ | ✓ | ✓ |
| Lotti et al., 2022 [64] | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Lu et al. [65] | - | ✓ | ✓ | - | ✓ | ✓ | ✓ |
| Novak and Riener [66] | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Riener and Novak [67] | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Sedighi et al. [68] | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | - |
| Sun et al. [69] | ✓ | - | ✓ | ✓ | - | - | ✓ |
| Toro-Ossaba et al. [70] | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | - |
| Treussart et al. [71] | ✓ | ✓ | ✓ | - | ✓ | ✓ | - |
| Woo et al. [72] | ✓ | ✓ | ✓ | - | - | - | - |
| Zabaleta et al. [50] | ✓ | ✓ | ✓ | - | ✓ | ✓ | ✓ |
| Zarrin et al. [73] | ✓ | - | ✓ | - | ✓ | ✓ | ✓ |
| Zhang et al., 2019 [74] | ✓ | ✓ | ✓ | - | - | - | - |
| Zhang et al., 2023 [51] | - | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Zhou et al. [75] | ✓ | - | ✓ | ✓ | ✓ | ✓ | ✓ |
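The coverage matrix above can be summarized per analysis dimension by counting check marks column by column. The following is a minimal sketch of such a tally, assuming the rows are available as pipe-delimited text; the function name `tally_coverage` and the constant names are hypothetical, and only three rows are copied from the table as an excerpt, so the resulting counts describe the excerpt and not the full set of studies.

```python
from collections import Counter

# Column labels for the seven analysis dimensions (order as in the table).
DIMENSIONS = [
    "Intention Cue", "Sensors", "Method", "Timing",
    "Support Characteristic", "Target Users", "Evaluation",
]

# Excerpt: three rows copied from the table (not the full matrix).
EXCERPT = """\
| Bandara et al. [52] | ✓ | ✓ | ✓ | ✓ | - | - | - |
| Bi et al. [11] | ✓ | ✓ | ✓ | - | - | - | ✓ |
| Buongiorno et al. [53] | - | ✓ | ✓ | - | ✓ | ✓ | - |
"""


def tally_coverage(table_rows: str) -> Counter:
    """Count, per dimension, how many rows carry a check mark."""
    counts = Counter({dim: 0 for dim in DIMENSIONS})
    for line in table_rows.splitlines():
        # Drop the outer pipes, then split into cells.
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        if len(cells) != len(DIMENSIONS) + 1:
            continue  # skip lines that are not full table rows
        for dim, mark in zip(DIMENSIONS, cells[1:]):
            if mark == "✓":
                counts[dim] += 1
    return counts


if __name__ == "__main__":
    for dim, n in tally_coverage(EXCERPT).items():
        print(f"{dim}: {n}")
```

Passing the complete set of table rows instead of `EXCERPT` would give the per-dimension coverage across all included studies.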

| Activity Type | Description | Examples | Signal, Sensor | Control | References |
|---|---|---|---|---|---|
| Force | high weights, low velocity, small motion range | lifting objects, heavy tool handling, hammering | kinematic, mechanical cues; IMUs, embedded motor sensors, force/torque sensors | admittance control, torque control | [7,53,58,63,71] |
| Varying | different weights, different velocities, small motion range | construction work, commissioning, workplace setup | kinematic, mechanical cues; IMUs, embedded motor sensors, force/torque sensors, context information | torque control, model reference adaptive control | [57,67,70,71,75] |
| Speed | low-middle weights, high velocity, high motion range | object sorting, transporting, drilling, cleaning | kinematic cues; IMUs, embedded motor sensors | position control | [51,55,68,73] |
| Precision | low weights, low velocity, small motion range | small part assembly, painting, welding | kinematic cues; IMUs, embedded motor sensors, camera | admittance control, position control | [55,58,60,61,68,73] |

Table annotations: High Torque Accuracy; High Motion Accuracy.
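The mapping from activity type to signals, sensors, and control strategies in the table above lends itself to a simple lookup structure. The sketch below is one possible encoding, assuming the table entries are used verbatim; the class `ActivityProfile` and the helper functions are hypothetical names introduced for illustration and do not come from any of the reviewed systems.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ActivityProfile:
    """One table row: cues, sensors, and control strategies (hypothetical type)."""
    signals: tuple[str, ...]
    sensors: tuple[str, ...]
    control: tuple[str, ...]


# Entries transcribed from the activity-type table.
ACTIVITY_PROFILES = {
    "force": ActivityProfile(
        signals=("kinematic", "mechanical"),
        sensors=("IMUs", "embedded motor sensors", "force/torque sensors"),
        control=("admittance control", "torque control"),
    ),
    "varying": ActivityProfile(
        signals=("kinematic", "mechanical"),
        sensors=("IMUs", "embedded motor sensors", "force/torque sensors",
                 "context information"),
        control=("torque control", "model reference adaptive control"),
    ),
    "speed": ActivityProfile(
        signals=("kinematic",),
        sensors=("IMUs", "embedded motor sensors"),
        control=("position control",),
    ),
    "precision": ActivityProfile(
        signals=("kinematic",),
        sensors=("IMUs", "embedded motor sensors", "camera"),
        control=("admittance control", "position control"),
    ),
}


def candidate_controllers(activity_type: str) -> tuple[str, ...]:
    """Return the control strategies the table lists for an activity type."""
    return ACTIVITY_PROFILES[activity_type.lower()].control


def activities_for_controller(control: str) -> list[str]:
    """Return the activity types for which the table lists a control strategy."""
    return [name for name, p in ACTIVITY_PROFILES.items() if control in p.control]


if __name__ == "__main__":
    print(candidate_controllers("Speed"))
    print(activities_for_controller("torque control"))
```

A frozen dataclass is used here so the profiles stay read-only; a plain dictionary of lists would serve equally well for a quick lookup.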

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hochreiter, D.; Schmermbeck, K.; Vazquez-Pufleau, M.; Ferscha, A. Intention Prediction for Active Upper-Limb Exoskeletons in Industrial Applications: A Systematic Literature Review. Sensors 2025, 25, 5225. https://doi.org/10.3390/s25175225
