InstructSee: Instruction-Aware and Feedback-Driven Multimodal Retrieval with Dynamic Query Generation

Abstract

1. Introduction

- Instruction-aware dynamic query generation: We propose a novel mechanism that adaptively determines the number and semantic composition of Q-Former queries based on the complexity of the input instruction.

- Integration of LLM reasoning: The framework leverages the semantic reasoning capabilities of LLMs to enhance instruction interpretation and context-aware visual–textual alignment.

- Iterative refinement via user feedback: We introduce a user-in-the-loop query refinement process that enables the model to progressively adapt to implicit retrieval intentions through multiple rounds of interaction.

- Lightweight and generalizable design: Our architecture maintains compatibility with BLIP2-based retrieval pipelines, introducing minimal computational overhead while demonstrating strong generalization across diverse retrieval tasks and datasets.

2. Related Work

2.1. Multimodal Retrieval with Transformers

2.2. Instruction-Aware and Feedback-Driven Vision–Language Models

2.3. Semantic Reasoning with Large Language Models

3. Methodology

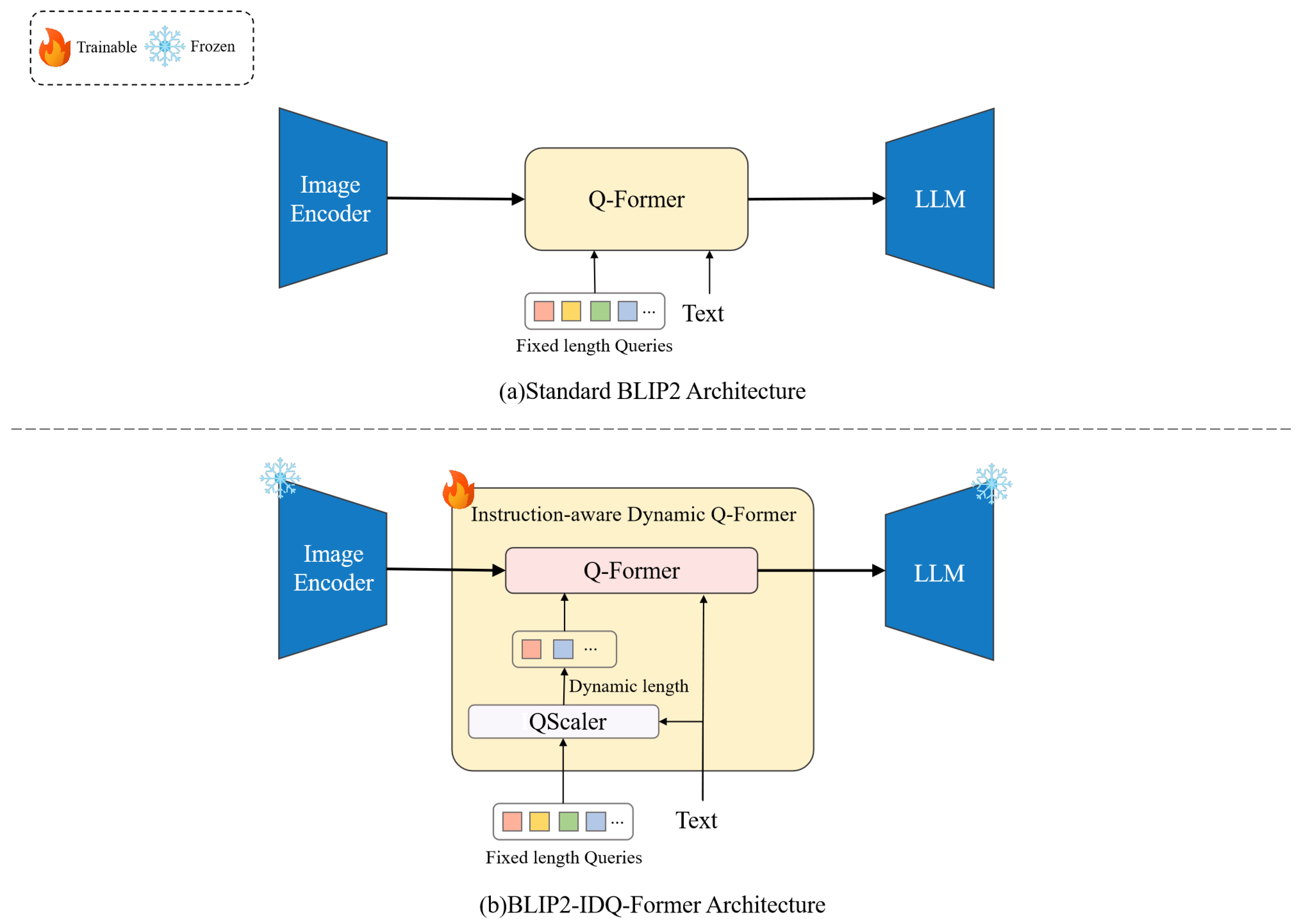

3.1. Fixed-Length Query Design in BLIP2

- For simple instructions, the surplus query tokens introduce redundant representations.

- For complex instructions, the 32-token cap constrains the model’s capacity to capture fine-grained visual details relevant to user intent.

3.2. Instruction-Adaptive Token Scaling via QScaler

Algorithm 1: Overall Pipeline of BLIP2-IDQ-Former

Input: instruction $I$, image $V$, base query length $L_{\text{base}}$, maximum query length $L_{\max}$
Output: task-specific output $O$

Step 1: Instruction complexity estimation
- Tokenize $I$ and encode it with the frozen language encoder to obtain a contextual embedding.
- Predict a normalized complexity score $r$ with the learnable QScaler module.
- During training, inject Gaussian noise into $r$ for robustness.

Step 2: Dynamic query allocation
- Compute the query length $L$ from $r$, $L_{\text{base}}$, and $L_{\max}$.
- Select the first $L$ learnable tokens from the token bank.
- Retrieve the first $L$ positional encodings from the pretrained matrix.
- Add the positional encodings to the tokens to form the query sequence.

Step 3: Cross-modal encoding
- Extract visual embeddings of $V$ with the frozen image encoder.
- Fuse them with the query sequence via Q-Former cross-attention.
- Project the multimodal features into the LLM input space via the projection head.

Step 4: Output generation
- Feed the projected features into the frozen LLM.
- Obtain the task-specific output $O$.

return $O$
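The dynamic query allocation of Algorithm 1 can be sketched as follows. The algorithm does not state how the complexity score is mapped to a query length, so this sketch assumes a linear interpolation between the base and maximum query lengths, rounded up, with the score clipped to [0, 1]; the noise scale, the function names, and the toy token bank are hypothetical.

```python
import math
import random
from typing import List, Optional, Sequence

Matrix = List[List[float]]


def allocate_query_length(r: float, base_len: int, max_len: int,
                          training: bool = False, noise_std: float = 0.05,
                          rng: Optional[random.Random] = None) -> int:
    """Map a normalized complexity score r to a query length in [base_len, max_len].

    Assumption: linear interpolation rounded up; the exact rule is hypothetical.
    """
    if not 0 < base_len <= max_len:
        raise ValueError("expected 0 < base_len <= max_len")
    if training:
        rng = rng or random.Random()
        r += rng.gauss(0.0, noise_std)  # Gaussian noise injection (training only)
    r = min(max(r, 0.0), 1.0)           # clip back to the normalized range
    return base_len + math.ceil(r * (max_len - base_len))


def build_query_sequence(token_bank: Sequence[Sequence[float]],
                         pos_enc: Sequence[Sequence[float]],
                         length: int) -> Matrix:
    """Take the first `length` learnable tokens and add their positional encodings."""
    if length > min(len(token_bank), len(pos_enc)):
        raise ValueError("requested length exceeds the token bank or encodings")
    return [[t + p for t, p in zip(tok, pe)]
            for tok, pe in zip(token_bank[:length], pos_enc[:length])]


if __name__ == "__main__":
    bank = [[float(i)] * 4 for i in range(32)]  # toy 32 x 4 token bank
    pos = [[0.1] * 4 for _ in range(32)]        # toy positional encodings
    length = allocate_query_length(0.5, base_len=8, max_len=32)
    queries = build_query_sequence(bank, pos, length)
    print(length, len(queries))  # 20 20
```

Selecting a prefix of a single token bank (rather than separate banks per length) keeps every query length consistent with the same learned tokens and positional encodings, which matches the "first L tokens" wording of Step 2.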

3.3. Instruction-Conditioned Cross-Attention

3.4. Training Strategy

- The Q-Former transformer layers responsible for cross-modal feature fusion;

- The learnable query token matrix, which provides the dynamically selected token subsets;

- The QScaler regression head, which predicts the instruction-conditioned complexity scores.
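A minimal sketch of how the three trainable components could be separated from the frozen modules (image encoder, language encoder, LLM) by parameter name. The prefixes are hypothetical; real checkpoints may name these modules differently. With PyTorch, the same filter could be applied over `model.named_parameters()`, calling `param.requires_grad_(...)` according to the group each name falls into.

```python
from typing import Iterable, List, Tuple

# Hypothetical parameter-name prefixes for the three trainable components;
# real checkpoints may use different module names.
TRAINABLE_PREFIXES: Tuple[str, ...] = (
    "qformer.",      # Q-Former transformer layers (cross-modal fusion)
    "query_tokens",  # learnable query token matrix (token bank)
    "qscaler.",      # QScaler regression head
)


def split_parameters(names: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Partition parameter names into (trainable, frozen) groups by prefix.

    Anything not matched, e.g. the image encoder, the language encoder,
    and the LLM, is placed in the frozen group.
    """
    trainable: List[str] = []
    frozen: List[str] = []
    for name in names:
        (trainable if name.startswith(TRAINABLE_PREFIXES) else frozen).append(name)
    return trainable, frozen


if __name__ == "__main__":
    names = [
        "visual_encoder.blocks.0.attn.qkv.weight",
        "qformer.encoder.layer.0.crossattention.query.weight",
        "query_tokens",
        "qscaler.head.weight",
        "llm.decoder.layers.0.fc1.weight",
    ]
    trainable, frozen = split_parameters(names)
    print(trainable)
    print(frozen)
```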

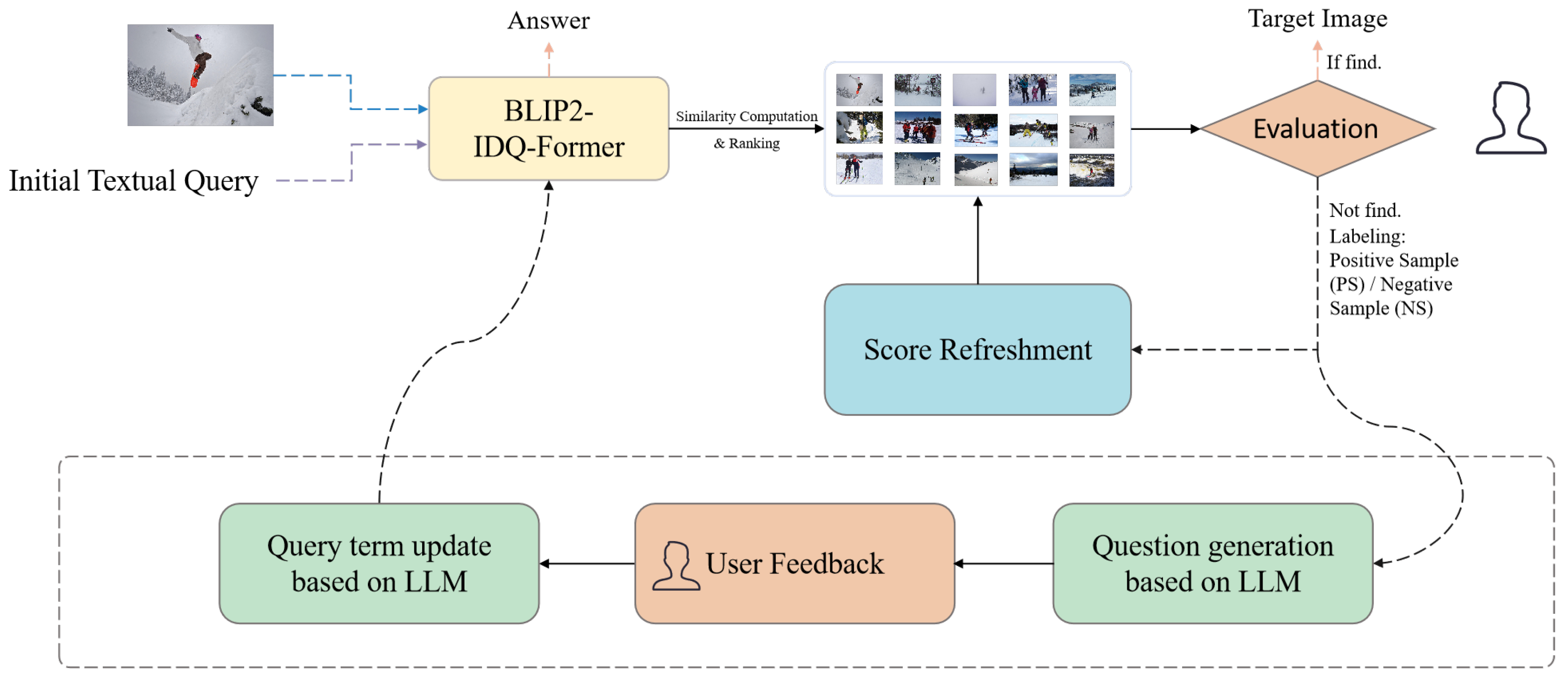

4. System Overview

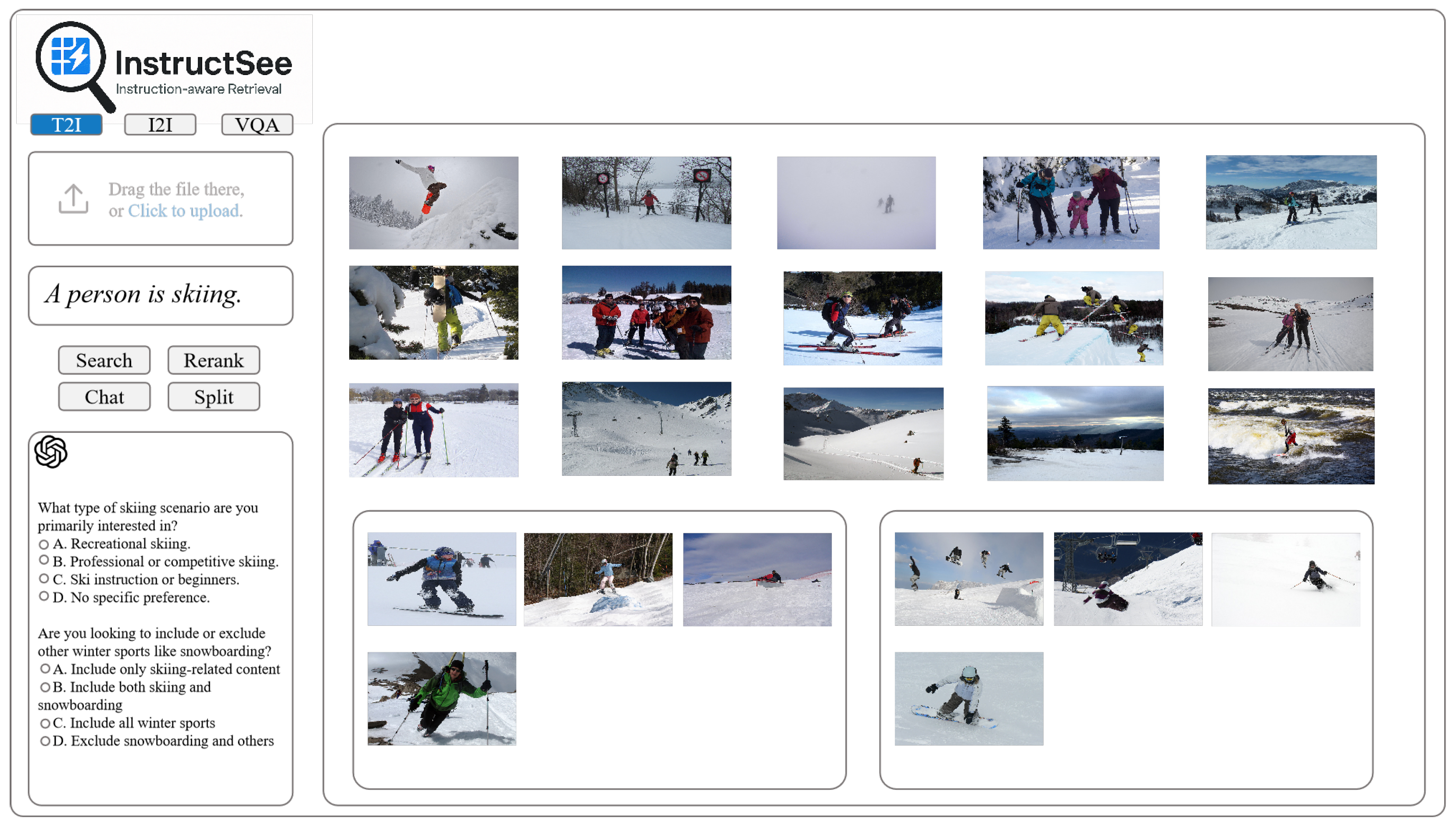

4.1. Task Modes and Input Interface

- Visual Question Answering (VQA): Given an input image and a user-issued question (e.g., “What is the person doing?”), the system directly generates an answer using its multimodal encoder-decoder, without a ranking process.

- Image-to-Image Retrieval (I2I): Given a reference image, the system computes similarity scores between its embedding and a gallery of pre-encoded images to retrieve visually or semantically similar samples.

- Text-to-Image Retrieval (T2I): Given a natural language description, the system encodes the textual query and ranks gallery images according to semantic similarity.
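In both retrieval modes, ranking reduces to scoring every pre-encoded gallery embedding against the query embedding; VQA bypasses this step. A minimal sketch, assuming the query (text or image) and the gallery images are already vectors in a shared embedding space and that cosine similarity is the score; the scoring function and names here are assumptions, not the system's exact implementation.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_gallery(query: Sequence[float],
                 gallery: Sequence[Sequence[float]],
                 top_k: int = 5) -> List[Tuple[int, float]]:
    """Return (gallery_index, score) pairs for the top_k most similar items."""
    scored = [(i, cosine(query, g)) for i, g in enumerate(gallery)]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # stable for ties
    return scored[:top_k]


if __name__ == "__main__":
    query = [1.0, 0.0]                              # text or image query embedding
    gallery = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]  # pre-encoded gallery images
    print(rank_gallery(query, gallery, top_k=2))
```

Because the gallery is encoded once ahead of time, each new query costs one encoder pass plus one similarity per gallery item.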

4.2. User Feedback and Interactive Refinement

- Score Refreshing: The system updates similarity scores by integrating the embedding of the original query with those of the user-labeled positive and negative samples (PS/NS), allowing local re-ranking without altering the semantic composition of the query.

- LLM-Guided Semantic Refinement: For more nuanced adjustment, the system employs an LLM interaction module [12] that proceeds in three steps:

  - Clarifying single-choice questions are generated from the original query and the PS/NS captions to uncover latent intent dimensions (e.g., action, object attributes, and scene context).

  - The user-selected answers are incorporated to synthesize a semantically refined query.

  - The refined query is embedded and used to re-rank the gallery as follows:

    $$s_i^{\text{new}} = \lambda\,\operatorname{sim}(\mathbf{q}^{*}, \mathbf{g}_i) + (1 - \lambda)\,s_i^{\text{old}},$$

    where $\mathbf{q}^{*}$ denotes the refined query embedding, $\mathbf{g}_i$ is the embedding of gallery image $i$, $s_i^{\text{old}}$ is the previous similarity score, $\operatorname{sim}(\cdot,\cdot)$ is the similarity function used for ranking, and $\lambda \in [0, 1]$ balances the updated semantic content against the prior similarity scores.
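The two feedback paths can be sketched as follows. The text states only that Score Refreshing integrates the query and PS/NS embeddings, so this sketch substitutes a Rocchio-style update (move toward the positive centroid, away from the negative centroid) with hypothetical weights `alpha`, `beta`, `gamma`. Re-ranking is implemented as a convex combination of the new similarity and the previous score, assuming cosine similarity and a weight `lam` in [0, 1]. In the real system the refined query would come from re-encoding the LLM-rewritten text; here a refreshed vector stands in for it.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 0.0 if na == 0.0 or nb == 0.0 else dot / (na * nb)


def centroid(vectors: Sequence[Sequence[float]], dim: int) -> Vector:
    """Mean of the given vectors, or a zero vector when none are labeled."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def refresh_query(query: Sequence[float],
                  positives: Sequence[Sequence[float]],
                  negatives: Sequence[Sequence[float]],
                  alpha: float = 1.0, beta: float = 0.75,
                  gamma: float = 0.25) -> Vector:
    """Rocchio-style stand-in for Score Refreshing (hypothetical weights)."""
    dim = len(query)
    pos_c = centroid(positives, dim)
    neg_c = centroid(negatives, dim)
    return [alpha * q + beta * p - gamma * n
            for q, p, n in zip(query, pos_c, neg_c)]


def rerank(refined_query: Sequence[float],
           gallery: Sequence[Sequence[float]],
           prev_scores: Sequence[float],
           lam: float = 0.5) -> Tuple[List[int], List[float]]:
    """new[i] = lam * cos(q*, g_i) + (1 - lam) * old[i]; returns (order, scores)."""
    if len(gallery) != len(prev_scores):
        raise ValueError("gallery and prev_scores must have the same length")
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    new_scores = [lam * cosine(refined_query, g) + (1.0 - lam) * s
                  for g, s in zip(gallery, prev_scores)]
    order = sorted(range(len(gallery)), key=lambda i: new_scores[i], reverse=True)
    return order, new_scores


if __name__ == "__main__":
    gallery = [[0.0, 1.0], [1.0, 0.0], [0.6, 0.8]]
    query = [1.0, 0.2]
    base_scores = [cosine(query, g) for g in gallery]
    # User marks item 2 as positive (PS) and item 1 as negative (NS).
    q_new = refresh_query(query, positives=[gallery[2]], negatives=[gallery[1]])
    order, scores = rerank(q_new, gallery, base_scores, lam=0.5)
    print(order, [round(s, 3) for s in scores])
```

Keeping part of the previous score (`lam < 1`) damps sudden reorderings between feedback rounds, while `lam = 1` ranks purely by the refined query.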

4.3. Prompt Design for Instruction Interpretation

- Question Generation Prompt: This template incorporates the original query along with natural language captions of the user-labeled positive and negative samples. The LLM is instructed to generate two to three single-choice clarifying questions that contrast salient semantic attributes (e.g., action, background, clothing) and thereby distinguish relevant from irrelevant results [16].

- Query Update Prompt: This template extends the interaction by including the original instruction, the generated questions, the user-selected answers, and the sample captions. From this information, the LLM synthesizes a refined, semantically enriched query that more accurately reflects the clarified retrieval objective.
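The two templates can be sketched as plain string builders. The wording below is hypothetical and is not the authors' prompt text; the sketch only shows which fields each template carries (query and PS/NS captions for question generation; additionally the generated questions and selected answers for the query update).

```python
from typing import Sequence


def _bullets(lines: Sequence[str]) -> str:
    """Render captions as a bulleted block, with a placeholder if empty."""
    return "\n".join(f"- {line}" for line in lines) or "- (none)"


def question_generation_prompt(query: str, pos_captions: Sequence[str],
                               neg_captions: Sequence[str],
                               n_questions: int = 3) -> str:
    """Hypothetical Question Generation Prompt (two to three questions)."""
    if n_questions not in (2, 3):
        raise ValueError("the template asks for two to three questions")
    return (
        "You help a user refine an image search.\n"
        f"Original query: {query}\n"
        f"Captions of results marked relevant:\n{_bullets(pos_captions)}\n"
        f"Captions of results marked irrelevant:\n{_bullets(neg_captions)}\n"
        f"Write {n_questions} single-choice clarifying questions. Each question "
        "should contrast one salient attribute (e.g., action, background, "
        "clothing) that separates the relevant results from the irrelevant ones."
    )


def query_update_prompt(query: str, questions: Sequence[str],
                        answers: Sequence[str], pos_captions: Sequence[str],
                        neg_captions: Sequence[str]) -> str:
    """Hypothetical Query Update Prompt built from the clarified answers."""
    if len(questions) != len(answers):
        raise ValueError("every question needs exactly one selected answer")
    qa = "\n".join(f"Q{i}: {q}\nA{i}: {a}"
                   for i, (q, a) in enumerate(zip(questions, answers), start=1))
    return (
        f"Original query: {query}\n"
        f"Clarifying questions and the user's answers:\n{qa}\n"
        f"Relevant captions:\n{_bullets(pos_captions)}\n"
        f"Irrelevant captions:\n{_bullets(neg_captions)}\n"
        "Rewrite the original query as one concise search query that "
        "reflects the user's answers."
    )


if __name__ == "__main__":
    print(question_generation_prompt(
        "a man riding", ["a man riding a horse on a beach"],
        ["a man riding a bicycle in a city"], n_questions=2))
```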

5. Results

5.1. Experimental Setup

5.1.1. Datasets

5.1.2. Evaluation Strategy

5.1.3. Baselines

- CLIP [23]: a dual-encoder architecture trained on large-scale image–text pairs, widely adopted for zero-shot image–text retrieval;

- BLIP2 [1]: employs a fixed-length Q-Former with 32 learnable query tokens for vision–language alignment and serves as the primary backbone for our approach;

- DynamicViT [24]: dynamically sparsifies visual tokens according to the input, reducing computational redundancy in vision transformers;

- CrossGET [2]: a token-reduction method that uses cross-modal guidance to ensemble redundant tokens, accelerating vision–language transformers.

5.1.4. Implementation Details

5.2. Quantitative Results

5.2.1. Evaluation Metrics

5.2.2. Performance Comparison

5.2.3. Practical Feedback Protocol and Convergence Analysis

- Score Refreshing: The system updates similarity scores by integrating the embeddings of the original query with those of positive/negative samples (PS/NS), enabling local re-ranking while preserving the semantic composition of the query.

- LLM-Guided Semantic Refinement: The system employs an LLM interaction module to generate semantically refined query variants, which are re-encoded and re-ranked to better capture nuanced retrieval intents.

5.3. Ablation Study

5.3.1. Effect of QScaler-Guided Query Length Adaptation

5.3.2. Effect of Training the Q-Former

6. Discussion

6.1. Instruction-Aware Query Allocation Improves Semantic Alignment

6.2. Joint Optimization of Q-Former and QScaler Is Essential

6.3. Token Efficiency and Inference Trade-Offs

6.4. Extensibility Beyond Retrieval

6.5. Ethical Considerations and Robustness

6.6. Limitations and Future Work

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Li, J.; Li, D.; Savarese, S.; Hoi, S. BLIP-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. In Proceedings of the International Conference on Machine Learning. PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 19730–19742.

- Shi, D.; Tao, C.; Rao, A.; Yang, Z.; Yuan, C.; Wang, J. CrossGET: Cross-guided ensemble of tokens for accelerating vision-language transformers. arXiv 2023, arXiv:2305.17455.

- Gu, G.; Wu, Z.; He, J.; Song, L.; Wang, Z.; Liang, C. TalkSee: Interactive video retrieval engine using large language model. In Proceedings of the International Conference on Multimedia Modeling, Amsterdam, The Netherlands, 29 January–2 February 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 387–393.

- Chen, W.; Shi, C.; Ma, C.; Li, W.; Dong, S. DepthBLIP-2: Leveraging Language to Guide BLIP-2 in Understanding Depth Information. In Proceedings of the Asian Conference on Computer Vision, Hanoi, Vietnam, 8–12 December 2024; pp. 2939–2953.

- Paul, D.; Parvez, M.R.; Mohammed, N.; Rahman, S. VideoLights: Feature Refinement and Cross-Task Alignment Transformer for Joint Video Highlight Detection and Moment Retrieval. arXiv 2024, arXiv:2412.01558.

- Jiang, Z.; Meng, R.; Yang, X.; Yavuz, S.; Zhou, Y.; Chen, W. VLM2Vec: Training vision-language models for massive multimodal embedding tasks. arXiv 2024, arXiv:2410.05160.

- Zhang, L.; Wu, H.; Chen, Q.; Deng, Y.; Siebert, J.; Li, Z.; Han, Y.; Kong, D.; Cao, Z. VLDeformer: Vision–language decomposed transformer for fast cross-modal retrieval. Knowl.-Based Syst. 2022, 252, 109316.

- Dai, W.; Li, J.; Li, D.; Tiong, A.M.H.; Zhao, J.; Wang, W.; Li, B.; Fung, P.; Hoi, S. InstructBLIP: Towards General-purpose Vision-Language Models with Instruction Tuning. arXiv 2023, arXiv:2305.06500.

- Liu, H.; Li, C.; Wu, Q.; Lee, Y.J. Visual Instruction Tuning. arXiv 2023, arXiv:2304.08485.

- Zhu, D.; Chen, J.; Shen, X.; Li, X.; Elhoseiny, M. MiniGPT-4: Enhancing Vision-Language Understanding with Advanced Large Language Models. arXiv 2023, arXiv:2304.10592.

- Liu, Y.; Zhang, Y.; Cai, J.; Jiang, X.; Hu, Y.; Yao, J.; Wang, Y.; Xie, W. LamRA: Large multimodal model as your advanced retrieval assistant. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 4015–4025.

- Fu, T.J.; Hu, W.; Du, X.; Wang, W.Y.; Yang, Y.; Gan, Z. Guiding instruction-based image editing via multimodal large language models. arXiv 2023, arXiv:2309.17102.

- Zhu, Y.; Zhang, P.; Zhang, C.; Chen, Y.; Xie, B.; Liu, Z.; Wen, J.R.; Dou, Z. INTERS: Unlocking the power of large language models in search with instruction tuning. arXiv 2024, arXiv:2401.06532.

- Weller, O.; Van Durme, B.; Lawrie, D.; Paranjape, A.; Zhang, Y.; Hessel, J. Promptriever: Instruction-trained retrievers can be prompted like language models. arXiv 2024, arXiv:2409.11136.

- Hu, Z.; Wang, C.; Shu, Y.; Paik, H.Y.; Zhu, L. Prompt perturbation in retrieval-augmented generation based large language models. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; pp. 1119–1130.

- Xiao, Z.; Chen, Y.; Zhang, L.; Yao, J.; Wu, Z.; Yu, X.; Pan, Y.; Zhao, L.; Ma, C.; Liu, X.; et al. Instruction-ViT: Multi-modal prompts for instruction learning in ViT. arXiv 2023, arXiv:2305.00201.

- Bao, Y. Prompt Tuning Empowering Downstream Tasks in Multimodal Federated Learning. In Proceedings of the 2024 11th International Conference on Behavioural and Social Computing (BESC), Harbin, China, 16–18 August 2024; pp. 1–7.

- Lin, T.Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. arXiv 2015, arXiv:1405.0312.

- Siragusa, I.; Contino, S.; La Ciura, M.; Alicata, R.; Pirrone, R. MedPix 2.0: A Comprehensive Multimodal Biomedical Data set for Advanced AI Applications. arXiv 2024, arXiv:2407.02994.

- Hu, Y.; Yuan, J.; Wen, C.; Lu, X.; Liu, Y.; Li, X. RSGPT: A remote sensing vision language model and benchmark. ISPRS J. Photogramm. Remote Sens. 2025, 224, 272–286.

- Karpathy, A.; Fei-Fei, L. Deep Visual-Semantic Alignments for Generating Image Descriptions. arXiv 2015, arXiv:1412.2306.

- Zhou, J.; Zheng, Y.; Chen, W.; Zheng, Q.; Su, H.; Zhang, W.; Meng, R.; Shen, X. Beyond content relevance: Evaluating instruction following in retrieval models. arXiv 2024, arXiv:2410.23841.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. arXiv 2021, arXiv:2103.00020.

- Rao, Y.; Zhao, W.; Liu, B.; Lu, J.; Zhou, J.; Hsieh, C.J. DynamicViT: Efficient Vision Transformers with Dynamic Token Sparsification. arXiv 2021, arXiv:2106.02034.

| Configuration | Value |
|---|---|
| Vision Encoder Initialization | Pretrained BLIP2 ViT-L/14 |
| LLM Initialization | Pretrained OPT-2.7B (frozen) |
| Q-Former Initialization | BLIP2 Q-Former weights |
| QScaler Initialization | Random |
| Learning Rate | |
| Optimizer | AdamW |
| Epochs | 1 |
| GPU Hardware | 1 × NVIDIA RTX 4090 (24 GB) |

| Model | COCO | MedPix | RSICap | Memory (MB) | Throughput (images/s) |
|---|---|---|---|---|---|
| CLIP | 0.2572 | 0.2531 | 0.2726 | 2880 | 250.00 |
| DynamicViT | 0.7982 | - | 0.5501 | 14,561 | 29.41 |
| CrossGET | 0.9387 | 0.3478 | 0.4981 | 16,648 | 33.33 |
| BLIP2 | 0.8569 | 0.3473 | 0.5394 | 7213 | 52.63 |
| Ours | 0.9411 | 0.3231 | 0.4946 | 15,149 | 29.41 |

| Setting | Sim↑ | R@5↑ | MRR↑ | Q-Len | FLOPs↓ (G) | Thr↑ (img/s) |
|---|---|---|---|---|---|---|
| With QScaler | 0.94 | 0.34 | 0.25 | 17.80 | 258.29 | 21.85 |
| Without QScaler | 0.93 | 0.35 | 0.26 | 32.00 | 259.63 | 21.66 |

| Setting | Sim↑ | R@5↑ | MRR↑ | Q-Len | FLOPs↓ (G) | Thr↑ (img/s) |
|---|---|---|---|---|---|---|
| Trainable Q-Former | 0.94 | 0.34 | 0.25 | 17.80 | 258.29 | 21.85 |
| Frozen Q-Former | 0.93 | 0.27 | 0.20 | 16.42 | 258.10 | 21.89 |
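The ablation tables report R@5 and MRR, whose definitions are not reproduced in this section. A minimal sketch of the standard definitions, assuming a query counts as a hit at K if any relevant gallery item appears among its top K results, and that MRR uses the rank of the first relevant item (contributing 0 when none is retrieved); the function names are illustrative.

```python
from typing import Hashable, Sequence, Set


def _check(rankings: Sequence[Sequence[Hashable]],
           relevant: Sequence[Set[Hashable]]) -> None:
    if not rankings or len(rankings) != len(relevant):
        raise ValueError("rankings must be non-empty and aligned with relevant sets")


def recall_at_k(rankings: Sequence[Sequence[Hashable]],
                relevant: Sequence[Set[Hashable]], k: int = 5) -> float:
    """Fraction of queries with at least one relevant item in the top k."""
    _check(rankings, relevant)
    hits = sum(1 for ranked, rel in zip(rankings, relevant)
               if any(item in rel for item in ranked[:k]))
    return hits / len(rankings)


def mean_reciprocal_rank(rankings: Sequence[Sequence[Hashable]],
                         relevant: Sequence[Set[Hashable]]) -> float:
    """Average of 1 / rank of the first relevant item (0 if none retrieved)."""
    _check(rankings, relevant)
    total = 0.0
    for ranked, rel in zip(rankings, relevant):
        for position, item in enumerate(ranked, start=1):
            if item in rel:
                total += 1.0 / position
                break
    return total / len(rankings)


if __name__ == "__main__":
    rankings = [[3, 1, 2], [5, 4, 6], [7, 8, 9]]  # gallery ids, best first
    relevant = [{1}, {6}, {0}]                    # ground-truth ids per query
    print(recall_at_k(rankings, relevant, k=5))
    print(mean_reciprocal_rank(rankings, relevant))
```

Under these definitions, MRR rewards placing the relevant item at rank 1 more strongly than R@5, which is insensitive to ordering within the top five.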

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gu, G.; Xue, Y.; Wu, Z.; Song, L.; Liang, C. InstructSee: Instruction-Aware and Feedback-Driven Multimodal Retrieval with Dynamic Query Generation. Sensors 2025, 25, 5195. https://doi.org/10.3390/s25165195

Gu G, Xue Y, Wu Z, Song L, Liang C. InstructSee: Instruction-Aware and Feedback-Driven Multimodal Retrieval with Dynamic Query Generation. Sensors. 2025; 25(16):5195. https://doi.org/10.3390/s25165195

Chicago/Turabian Style: Gu, Guihe, Yuan Xue, Zhengqian Wu, Lin Song, and Chao Liang. 2025. "InstructSee: Instruction-Aware and Feedback-Driven Multimodal Retrieval with Dynamic Query Generation" Sensors 25, no. 16: 5195. https://doi.org/10.3390/s25165195

APA Style: Gu, G., Xue, Y., Wu, Z., Song, L., & Liang, C. (2025). InstructSee: Instruction-Aware and Feedback-Driven Multimodal Retrieval with Dynamic Query Generation. Sensors, 25(16), 5195. https://doi.org/10.3390/s25165195