We first introduce the implementation details, evaluation datasets, and performance metrics. Then, we present both quantitative and qualitative comparisons with state-of-the-art methods. Finally, ablation studies are conducted to verify the effectiveness and contribution of each component.

4.1. Implementation and Evaluation Details

This section outlines the implementation settings, datasets used for training and evaluation, and the metrics employed to assess model performance.

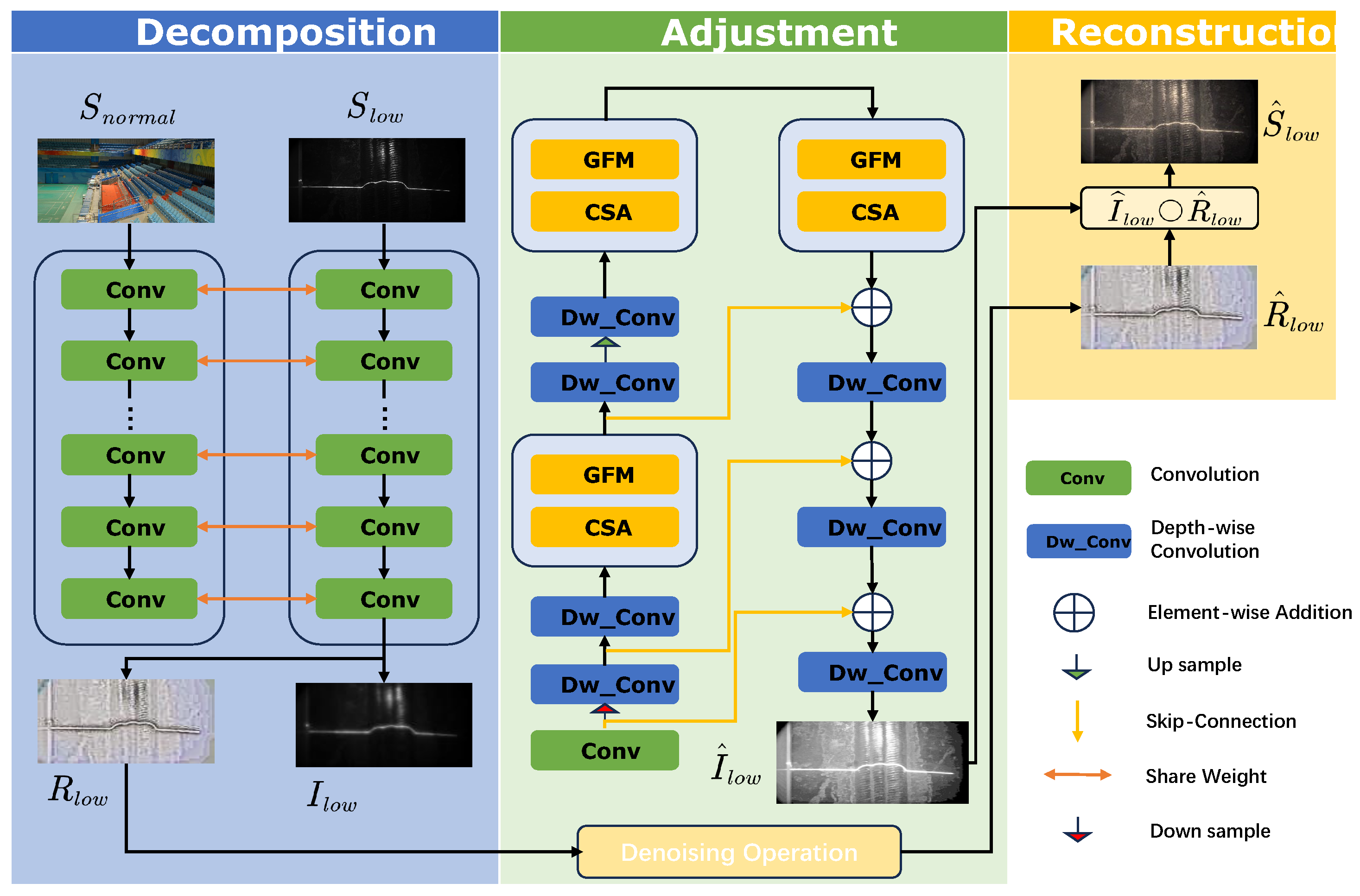

All models were implemented in PyTorch 1.0.0 and trained on an NVIDIA RTX 3090 GPU (24 GB). The decomposition network (DD-Net) was trained on the LOLv1 dataset [17], which contains 485 training pairs and 15 testing pairs, supplemented by 1000 synthetic pairs to enhance generalization. DD-Net employed a lightweight five-layer convolutional architecture with ReLU activations between most layers to preserve essential features. Training was performed using stochastic gradient descent (SGD) with a batch size of 16 on image patches. The loss function consisted of multiple terms weighted by hyperparameters, which were empirically determined based on performance on a validation set to balance the contribution of each loss component.

For illumination curve estimation, 2002 images from 360 multi-exposure sequences in SICE Part 1 [33] were used. All images were resized to a common resolution, and training used a batch size of 8. Convolutional weights were initialized with a Gaussian distribution (mean 0, std 0.02), and biases were set to constants. Optimization was performed using Adam, and the loss terms were weighted.

We evaluated our model on several standard benchmarks, including LOLv1 [17], LOLv2 [34] (115 image pairs in total), and a custom weld seam dataset with 112 low-/normal-light pairs. For further validation, we used the Part2 subset of the SICE dataset [33], which contains 229 multi-exposure sequences. Following [33], we selected the first three or four low-light images (depending on sequence length) from each sequence and paired them with the reference image, resizing all images to 1200 × 900 × 3. This resulted in 767 paired low-/normal-light images, referred to as the Part2 testing set. Evaluation metrics included PSNR [35,36] and SSIM [37,38,39] for distortion measurement, and LPIPS [40] (AlexNet backbone [41]) for perceptual quality assessment.
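As a point of reference for the distortion metrics, the following is a minimal sketch of how PSNR can be computed for a pair of 8-bit images. It assumes flattened pixel lists and a peak value of 255. The function names `mse` and `psnr` and the toy pixel values are illustrative, not taken from our evaluation code.

```python
import math


def mse(reference, enhanced):
    """Mean squared error between two equally sized flat pixel sequences."""
    if len(reference) != len(enhanced):
        raise ValueError("images must have the same number of pixels")
    return sum((r - e) ** 2 for r, e in zip(reference, enhanced)) / len(reference)


def psnr(reference, enhanced, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    error = mse(reference, enhanced)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / error)


# Toy 8-pixel example (hypothetical values, assumed 8-bit range).
reference = [52, 55, 61, 59, 79, 61, 76, 61]
enhanced = [54, 55, 60, 57, 80, 63, 75, 61]
print(f"PSNR: {psnr(reference, enhanced):.2f} dB")
```

Higher PSNR indicates lower pixel-wise distortion. SSIM and LPIPS are typically computed with established library implementations rather than by hand.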

4.2. Image Enhancement Performance

We compared our method to a diverse set of state-of-the-art low-light enhancement algorithms, including the unpaired learning-based CLIP-LIT [31], several zero-reference approaches such as Zero-DCE [42], Zero-DCE++ [43], RUAS [44], and SCI [45], and the classical supervised method Retinex-Net [17].

As summarized in Table 1 and illustrated in Figure 5, we evaluated our method against a variety of supervised, zero-reference, and unpaired enhancement techniques.

The classical supervised method Retinex-Net [17] showed moderate results with PSNR 16.77, SSIM 0.56, and a relatively high LPIPS of 0.47, reflecting challenges in handling complex lighting. Among the zero-reference methods, RUAS [44] achieved strong performance (PSNR 18.23, SSIM 0.72, and LPIPS 0.35), ranking second overall. Other zero-reference methods such as SCI [45] and Zero-DCE [42] showed comparable perceptual quality (LPIPS 0.32) but lower fidelity (PSNR and SSIM). Zero-DCE++ [43] exhibited the weakest structural preservation, with SSIM 0.45. The unpaired method CLIP-LIT [31] had the lowest PSNR (14.82), with SSIM 0.52, but maintained reasonable perceptual quality (LPIPS 0.30). Our method outperformed all others in SSIM (0.79) and LPIPS (0.19), with a PSNR (18.88) comparable to that of RUAS (18.23). This demonstrates superior structural fidelity and perceptual quality, effectively balancing enhancement and texture preservation.

As shown in Table 2, we further compared these methods on the SICE Part2 testing set, which contained 767 low-/normal-light image pairs. Retinex-Net and CLIP-LIT again showed moderate performance. Zero-DCE achieved strong perceptual quality (LPIPS 0.207), while SCI offered a good balance across metrics. However, our method consistently outperformed all others, achieving the best PSNR (19.97), SSIM (0.812), and lowest LPIPS (0.201), demonstrating its robustness across scenes with diverse exposure levels.

Overall, the zero-reference methods dominated over supervised and unpaired approaches on LOL and SICE, with our method setting a new state of the art in perceptual quality and structural preservation.

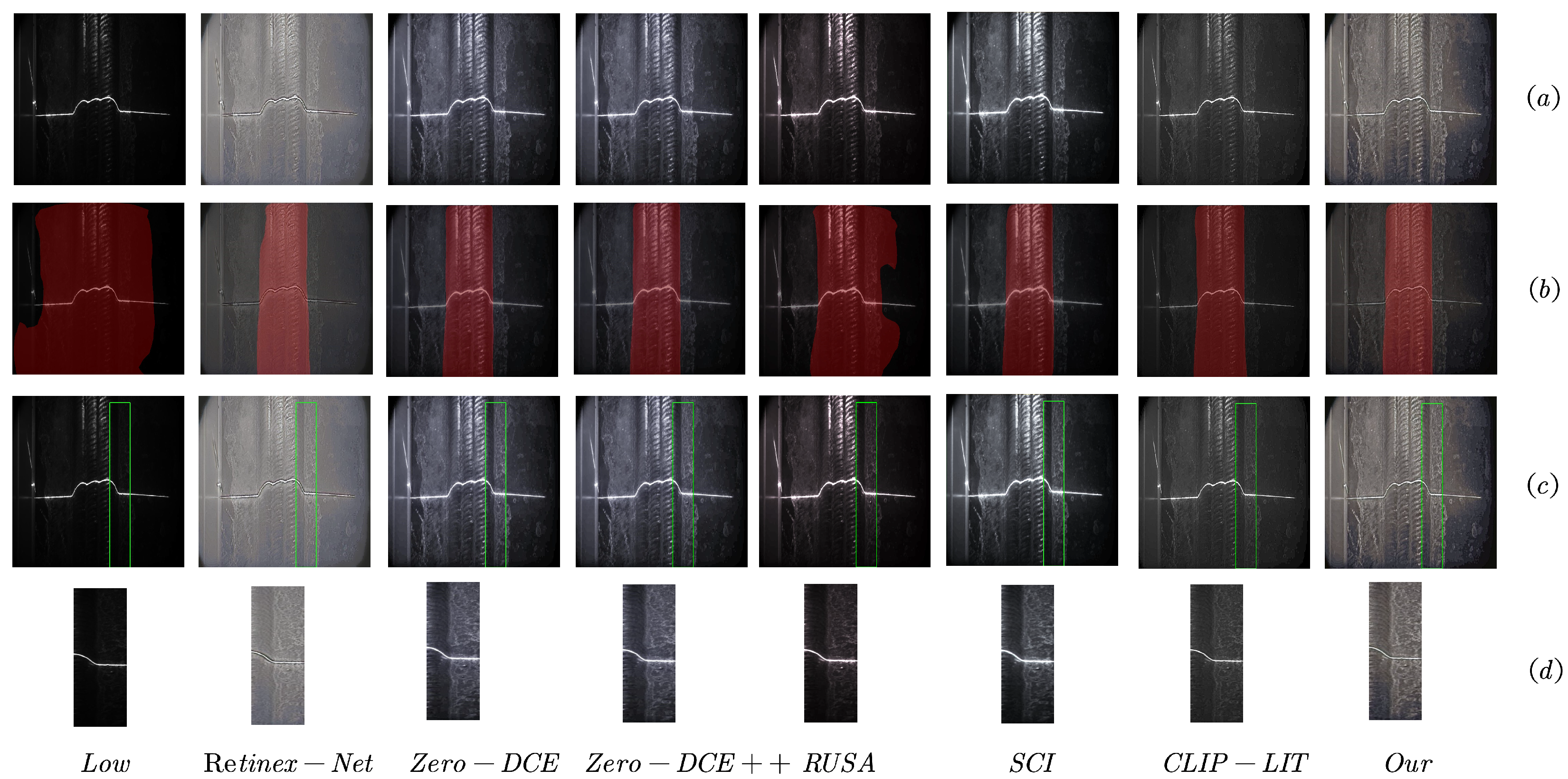

On the weld seam dataset, as shown in Table 3 and Figure 6, our method also demonstrated superior generalization capability and enhancement quality, making it well suited for industrial scenarios with challenging illumination conditions. Specifically, we adopted 223 pairs of weld seam images captured under both low-light and normal-light conditions to evaluate the enhancement performance more comprehensively. Here, the low-light condition is defined as an environment with ambient illumination below 200 lux, typical of welding and industrial sites where lighting is insufficient and uneven, thus posing significant challenges for image acquisition and feature recognition.

Quantitatively, our method achieved the highest PSNR (17.38) and SSIM (0.78), along with the lowest LPIPS (0.30), clearly outperforming all baseline methods. These results indicate that our model not only restores image fidelity more accurately but also preserves perceptual quality more effectively, which is critical in complex, low-light industrial environments where accurate feature representation is essential for downstream tasks such as weld seam detection and robot guidance.

Among the baseline methods, Retinex-Net [17], a supervised method, showed relatively strong performance, with a PSNR of 16.52 and an SSIM of 0.66, but suffered from a higher LPIPS of 0.40, reflecting limitations in perceptual realism. The zero-reference method Zero-DCE [42] also performed reasonably well (PSNR 12.65, SSIM 0.70, LPIPS 0.38), indicating better generalizability than several other baselines.

However, the other methods struggled significantly. For example, RUAS [44] and Zero-DCE++ [43] exhibited weak structural fidelity and poor perceptual scores, with low SSIM values of 0.37 and 0.35, respectively. CLIP-LIT [31], although based on unpaired learning, also failed to produce competitive results, with a PSNR of only 9.19 and an LPIPS as high as 0.44. These findings emphasize the challenges of low-light enhancement in real-world industrial data, where many existing methods suffer from insufficient robustness or structural degradation. In contrast, the strong performance of our approach underscores its robustness and adaptability to non-ideal lighting conditions, making it a promising solution for practical deployment in low-light weld inspection and automation tasks.

Table 4 compares different methods in terms of model complexity and runtime. The FLOPs were calculated based on an input image size of 1200 × 900 × 3. Our method has only 0.15 M parameters, 88.1 G FLOPs, and a runtime of 0.0031 s, demonstrating a good balance between performance and efficiency. Retinex-Net and SCI have similarly small parameter counts but much higher FLOPs (587.47 G and 188.87 G, respectively), resulting in significantly lower computational efficiency. While Zero-DCE++ is the most lightweight and fastest method, its enhancement capability is limited. Overall, our method achieved an effective trade-off between complexity and speed, making it more suitable for practical deployment.
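To illustrate how FLOPs scale with input resolution, the following rough sketch counts the operations of a stack of stride-1, same-padded convolutions at the 1200 × 900 input size. It assumes two FLOPs per multiply-accumulate and ignores biases and activations. The layer widths are hypothetical and do not correspond to the UICE-Net configuration or to any of the compared methods.

```python
def conv2d_flops(height, width, in_ch, out_ch, kernel=3):
    """FLOPs of one stride-1, same-padded convolution.

    Assumes 2 FLOPs per multiply-accumulate; bias and activation are ignored.
    """
    macs = height * width * in_ch * out_ch * kernel * kernel
    return 2 * macs


# Hypothetical (in_channels, out_channels) pairs, NOT the UICE-Net layout.
layers = [(3, 32), (32, 32), (32, 3)]
height, width = 900, 1200

total = sum(conv2d_flops(height, width, cin, cout) for cin, cout in layers)
print(f"{total / 1e9:.2f} GFLOPs for {len(layers)} layers")
```

Because the count grows linearly with the number of pixels, networks with similar parameter counts can differ substantially in FLOPs depending on the resolution at which their layers operate.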

4.3. Ablation Study

To verify the effectiveness of each component in the proposed UICE-Net architecture, we conducted comprehensive ablation experiments. All models were trained on the publicly available SICE dataset [33] and evaluated on the LOL and weld datasets. Each model was trained for 100 epochs to ensure reliable and fair comparison.

In our ablation setup, we progressively removed individual modules from the full UICE-Net to assess their contribution to the overall performance. The evaluation was performed using the LOL and weld datasets to demonstrate the model's generalizability under domain shift.

Table 5 presents the results of this study.

Compared to the baseline, the complete UICE-Net achieved significant performance improvements across all evaluation metrics. PSNR increased from 18.34 to 18.88, which corresponds to a relative improvement of approximately 2.9%. SSIM improved from 0.61 to 0.79, a notable increase of approximately 29.5%, indicating much better preservation of structural information. Meanwhile, the LPIPS score decreased from 0.39 to 0.19, representing a 51.3% reduction, which highlights the enhanced perceptual quality of the output images.
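The relative changes quoted above follow from the standard definition (after − before) / before. The short sketch below reproduces them from the reported baseline and full-model values. The helper name `relative_change` is illustrative.

```python
def relative_change(before, after):
    """Signed relative change from `before` to `after`, in percent."""
    if before == 0:
        raise ValueError("relative change is undefined for a zero baseline")
    return (after - before) / before * 100.0


# (metric, baseline, full UICE-Net) as quoted in the text.
reported = [("PSNR", 18.34, 18.88), ("SSIM", 0.61, 0.79), ("LPIPS", 0.39, 0.19)]

for name, before, after in reported:
    print(f"{name}: {relative_change(before, after):+.1f}%")
```

A negative value for LPIPS corresponds to an improvement, since lower LPIPS indicates closer perceptual similarity to the reference.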

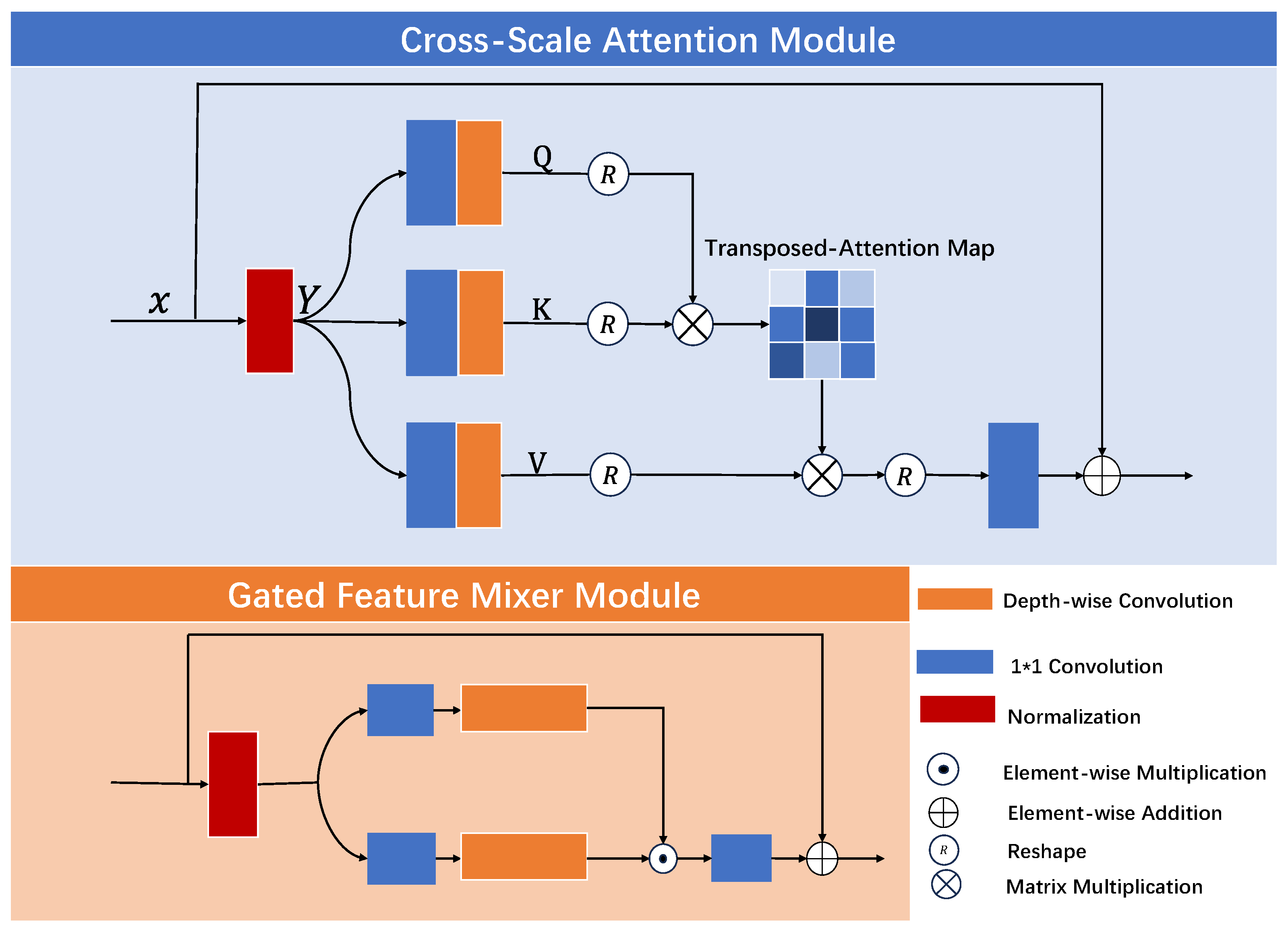

Removing the GFM module led to a drop in SSIM from 0.79 to 0.65 (a 17.7% decrease) and a rise in LPIPS from 0.19 to 0.25 (a 31.6% increase), suggesting that GFM plays a vital role in preserving structure and enhancing visual quality. Similarly, removing the CSA module resulted in PSNR degradation from 18.88 to 18.37 (a 2.7% decrease), and LPIPS worsening from 0.19 to 0.36 (an 89.5% increase), indicating that CSA significantly contributes to both fidelity and perceptual quality. These findings confirm the effectiveness and necessity of each module in the UICE-Net architecture.

In addition to module-wise ablation, we further investigated the impact of the training strategy on model performance. Specifically, we compared two approaches using the SICE dataset: (1) directly training UICE-Net on the raw input images without any preprocessing, and (2) first decomposing images into illumination and reflectance components using Retinex theory, then training the UICE-Net solely on the illumination maps. This comparison aimed to evaluate whether explicit illumination modeling improves low-light enhancement.

All models were trained for 100 epochs under identical conditions. The evaluation results on the LOL and weld datasets are summarized in Table 6, which shows that training on Retinex-decomposed illumination maps significantly outperformed direct training on raw images.

These results demonstrate that explicitly decomposing images into illumination and reflectance components via Retinex theory, and then training UICE-Net on the illumination component, enables the model to capture and enhance lighting information more effectively under low-light conditions. Specifically, PSNR improved from 18.51 to 18.88, an increase of approximately 2.0%. SSIM improved substantially from 0.56 to 0.79, a relative increase of approximately 41.1%. Meanwhile, LPIPS decreased from 0.34 to 0.19, a reduction of approximately 44.1%, reflecting significantly better perceptual quality. Compared to direct end-to-end training on raw images, the decomposition strategy therefore improved both the objective image quality metrics and the perceptual similarity and structural fidelity of the outputs.

4.4. Application to Low-Light Weld Seam Segmentation

To further assess the practical effectiveness of low-light image enhancement, as highlighted in recent reviews and empirical studies [46,47], we evaluated its impact on a downstream task: weld seam segmentation under challenging illumination conditions. Specifically, we constructed a dedicated dataset comprising 5000 training images captured under normal lighting and 683 testing images collected in real-world low-light environments. The segmentation task was performed using the lightweight yet effective PIDNet model. To evaluate the segmentation performance, we adopted four widely used metrics: Intersection over Union (IoU) [48], mean Intersection over Union (mIoU) [49], accuracy [50], and mean accuracy (mAcc) [50], which together provided a comprehensive assessment of pixel-level accuracy and region-level consistency.
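For clarity, the four segmentation metrics can be derived from a per-class confusion matrix, as in the minimal sketch below. It assumes that per-class accuracy means the fraction of ground-truth pixels of a class that are correctly labeled, which is a common convention but is not spelled out above. The toy labels and helper names are illustrative.

```python
def confusion_matrix(truth, pred, num_classes):
    """counts[t][p] = number of pixels with true class t predicted as class p."""
    counts = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(truth, pred):
        counts[t][p] += 1
    return counts


def per_class_scores(counts):
    """Return (IoU list, accuracy list), one entry per class."""
    n = len(counts)
    ious, accs = [], []
    for c in range(n):
        tp = counts[c][c]
        fn = sum(counts[c]) - tp                        # class c pixels missed
        fp = sum(counts[r][c] for r in range(n)) - tp   # other pixels labeled c
        ious.append(tp / (tp + fp + fn) if tp + fp + fn else 0.0)
        accs.append(tp / (tp + fn) if tp + fn else 0.0)
    return ious, accs


# Toy flattened label maps: 0 = background, 1 = weld seam line (hypothetical).
truth = [0, 0, 0, 0, 1, 1, 1, 0]
pred = [0, 0, 0, 1, 1, 1, 0, 0]

ious, accs = per_class_scores(confusion_matrix(truth, pred, 2))
miou = sum(ious) / len(ious)
macc = sum(accs) / len(accs)
print(f"IoU={ious}, Acc={accs}, mIoU={miou:.4f}, mAcc={macc:.4f}")
```

mIoU and mAcc are the unweighted means over classes, so the thin weld seam line class contributes as much as the much larger background class.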

As shown in Table 7 and Figure 7, our method achieved the highest segmentation performance across all metrics. Specifically, we obtained IoU values of 98.20% and 95.10% for the background and weld seam line classes, respectively, with corresponding accuracies of 98.75% and 98.90%. These results significantly surpassed all baseline and state-of-the-art enhancement methods, clearly demonstrating the benefit of our approach in supporting robust downstream vision tasks.

The second-best performer was CLIP-LIT, which also delivered strong segmentation performance but still lagged behind our method. Other methods such as Zero-DCE, Zero-DCE++, RUAS, SCI, and Retinex-Net offered moderate improvements over the original low-light images but suffered from visual artifacts, insufficient contrast recovery, or structural distortion that negatively impacted segmentation accuracy.

In comparison, segmentation on the original low-light images (i.e., without any enhancement) produced the lowest scores—particularly for the weld seam line class, where accuracy dropped to only 5.97%. This underscores the significant challenge posed by poor illumination in real-world welding scenarios and the necessity of effective enhancement techniques.

To complement the quantitative results, we also present a visual comparison of the enhanced weld seam images captured under low-light conditions in Figure 7. Our method was capable of directly enhancing such images without requiring additional illumination or pre-processing.

As observed in Figure 7, methods like CLIP-LIT and Retinex-Net failed to fully restore edge details, often resulting in blurry or low-contrast outputs. While Zero-DCE, Zero-DCE++, and SCI improved visual clarity and edge contrast, their results often appeared unnaturally bright and deviated from the appearance of weld seams under standard lighting conditions. In contrast, our method produced visually consistent outputs that preserved structural fidelity and maintained natural texture, making it more suitable for industrial applications.