SimID: Wi-Fi-Based Few-Shot Cross-Domain User Recognition with Identity Similarity Learning

Abstract

1. Introduction

- We propose SimID, a Wi-Fi-based user identification system that generalizes well to new actions, new users, and new scenes.

- SimID is built on a Prototypical Network and SE-ResNet, which together generate high-level identity features from Wi-Fi signals, maximizing the similarity among samples of the same identity while maximizing the separation between different identities.
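The prototype-and-similarity idea in the contributions above can be illustrated with a minimal sketch. It assumes the identity embeddings have already been produced by some encoder (plain Python lists stand in for encoder outputs here), and it uses the negative squared Euclidean distance of the original Prototypical Networks formulation as the similarity; SimID's actual similarity function, encoder outputs, and loss may differ. All names (`compute_prototypes`, `identity_probabilities`, `episode_loss`, `user_a`, `user_b`) are hypothetical and chosen for illustration only.

```python
import math
from collections import defaultdict


def compute_prototypes(support):
    """Average the support embeddings of each identity into one prototype.

    support: list of (identity, embedding) pairs, embedding = list of floats.
    """
    grouped = defaultdict(list)
    for identity, embedding in support:
        grouped[identity].append(embedding)
    return {
        identity: [sum(column) / len(embeddings) for column in zip(*embeddings)]
        for identity, embeddings in grouped.items()
    }


def neg_sq_euclidean(a, b):
    """Similarity score: larger (closer to zero) means more similar."""
    return -sum((x - y) ** 2 for x, y in zip(a, b))


def identity_probabilities(query, prototypes, similarity=neg_sq_euclidean):
    """Softmax over the similarities between a query and every prototype."""
    scores = {k: similarity(query, p) for k, p in prototypes.items()}
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {k: math.exp(s - top) for k, s in scores.items()}
    total = sum(exps.values())
    return {k: v / total for k, v in exps.items()}


def episode_loss(queries, prototypes):
    """Mean negative log-probability of the true identity over the query set."""
    losses = [
        -math.log(identity_probabilities(embedding, prototypes)[identity])
        for identity, embedding in queries
    ]
    return sum(losses) / len(losses)


# Toy 2-way, 2-shot episode with made-up 2-D embeddings.
support = [
    ("user_a", [1.0, 0.0]), ("user_a", [0.8, 0.2]),
    ("user_b", [0.0, 1.0]), ("user_b", [0.2, 0.8]),
]
prototypes = compute_prototypes(support)
probs = identity_probabilities([0.9, 0.1], prototypes)
predicted = max(probs, key=probs.get)
```

Minimizing `episode_loss` pulls query embeddings toward the prototype of their own identity and pushes them away from the other prototypes, which is one way to realize the similarity and separation objective described above. Passing a different `similarity` callable (for example, a cosine similarity) changes the comparison without touching the rest of the sketch.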

2. Related Work

2.1. Wi-Fi Action Recognition

2.2. Wi-Fi User Identification

2.3. Few-Shot Learning

3. SimID System

3.1. Problem Formulation

3.2. Training Strategy

3.2.1. Signal Processing

3.2.2. Training Support Set and Training Query Set Sampling

3.2.3. Prototype Computation and Loss Function

3.3. Test Strategy

3.4. Hyper Parameters

4. Evaluation

4.1. Datasets and Data Splitting

- (1) Cross-Person-Cross-Scene (CPCS): In a practical setting, we may deploy SimID in new scenes for new users. We use samples from all four scenes to evaluate SimID’s generalization capabilities. The training phase utilizes actions from users of Scene 1. To evaluate SimID on unseen subjects from unseen scenes, we define the test user set as from three other scenes. Additionally, the action set is defined as

- (2) Cross-Action (CA): In practical deployments, users may perform actions that were never observed during system training. We use samples from Scene 1 to evaluate SimID’s generalization capabilities. The training phase utilizes actions , where are selected from the ‘Human-Object Interaction’ category, from ‘Fitness’, from ‘Body Motion’, and from ‘Human-Computer Interaction’. To evaluate SimID on unseen actions, we define the test set as . Additionally, the user set is defined as and all the samples are from Scene 1.

- (3) Cross-Person (CP): In practical deployments, the composition of household users is inherently dynamic: new family members may join or visitors may temporarily interact with the system. We use samples from Scene 1 to evaluate SimID’s generalization capabilities. The training phase utilizes users . To evaluate SimID on unseen subjects, we define the test user set as . Additionally, the action set is defined as and all samples are from Scene 1.

- (4) Cross-Action-Cross-Person (CACP): In practical deployments, new household members or visitors may exhibit behavioral patterns that significantly differ from those observed during system training. We use samples from Scene 1 to evaluate SimID’s generalization capabilities. The training phase utilizes actions from users . To evaluate SimID on unseen subjects’ unseen actions, we have and . All samples are from Scene 1.
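The four splitting protocols above share one pattern: training and test samples are selected by filtering on user, action, and scene, with at least one of these held out at test time. The sketch below illustrates that pattern on a toy dataset. The `Sample` record, the `split` helper, and every user, action, and scene ID are hypothetical placeholders; they are not the actual ID sets used in the paper's evaluation.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Sample:
    user: int
    action: int
    scene: int


def split(samples, train, test):
    """Select training and test samples by allowed users, actions, and scenes.

    train / test: dicts with keys 'users', 'actions', 'scenes' mapping to sets.
    """
    def keep(sample, allowed):
        return (sample.user in allowed["users"]
                and sample.action in allowed["actions"]
                and sample.scene in allowed["scenes"])

    return ([s for s in samples if keep(s, train)],
            [s for s in samples if keep(s, test)])


# Toy dataset: 3 users x 4 actions x 2 scenes (all IDs are placeholders).
samples = [Sample(u, a, s) for u, a, s in product([1, 2, 3], [1, 2, 3, 4], [1, 2])]
all_actions = {1, 2, 3, 4}

# Cross-Person (CP): unseen users, same actions, same scene.
cp_train, cp_test = split(
    samples,
    train={"users": {1, 2}, "actions": all_actions, "scenes": {1}},
    test={"users": {3}, "actions": all_actions, "scenes": {1}},
)

# Cross-Action-Cross-Person (CACP): unseen users performing unseen actions.
cacp_train, cacp_test = split(
    samples,
    train={"users": {1, 2}, "actions": {1, 2}, "scenes": {1}},
    test={"users": {3}, "actions": {3, 4}, "scenes": {1}},
)

# Cross-Person-Cross-Scene (CPCS): unseen users in an unseen scene.
cpcs_train, cpcs_test = split(
    samples,
    train={"users": {1, 2}, "actions": all_actions, "scenes": {1}},
    test={"users": {3}, "actions": all_actions, "scenes": {2}},
)
```

A Cross-Action (CA) split follows the same shape, with identical user sets on both sides and disjoint action sets. Keeping the held-out sets disjoint from the training sets is what makes each protocol a test of generalization rather than memorization.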

4.2. Results

4.2.1. Different Few-Shot Learning Networks

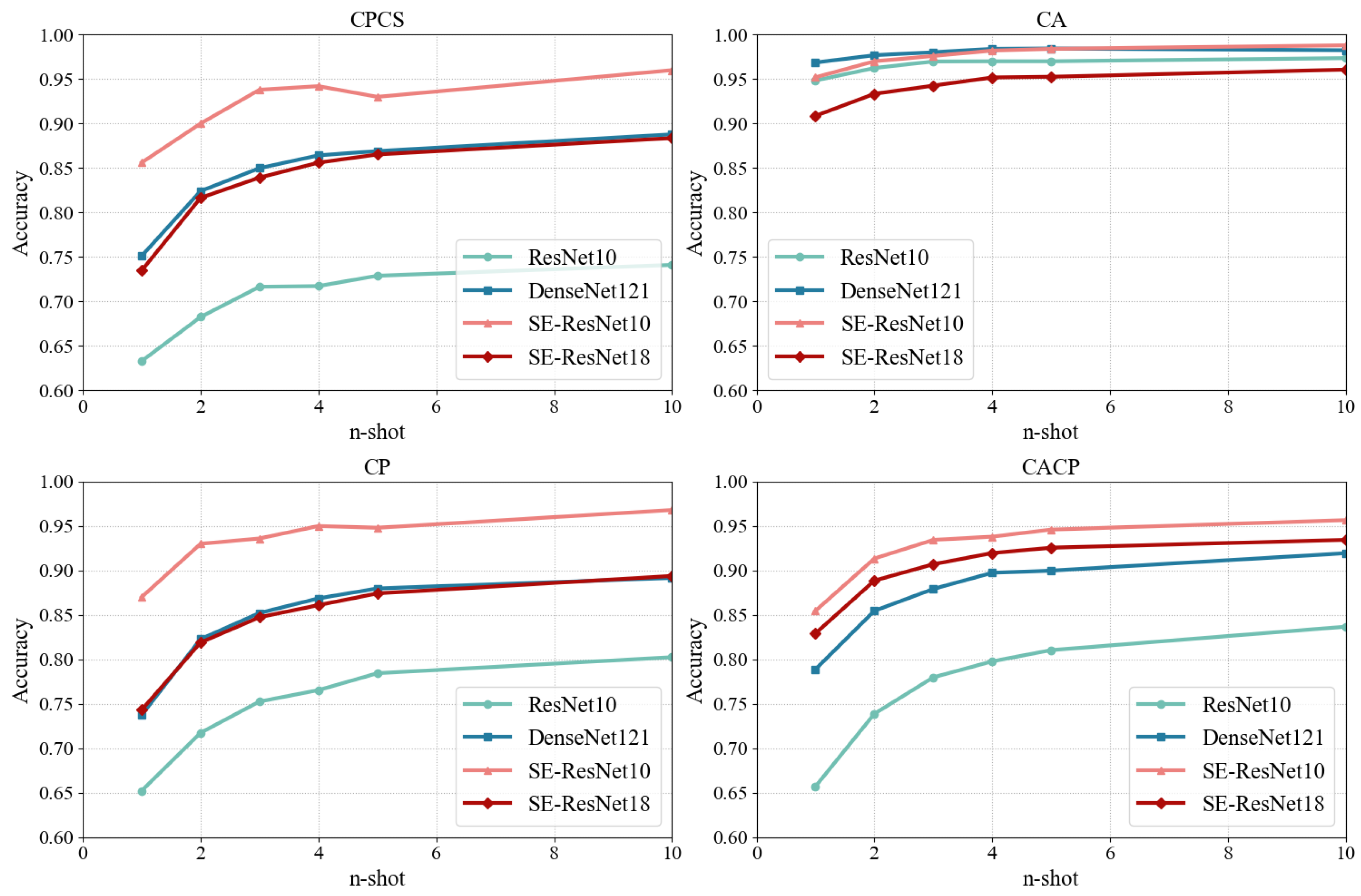

4.2.2. Different Feature Encoders

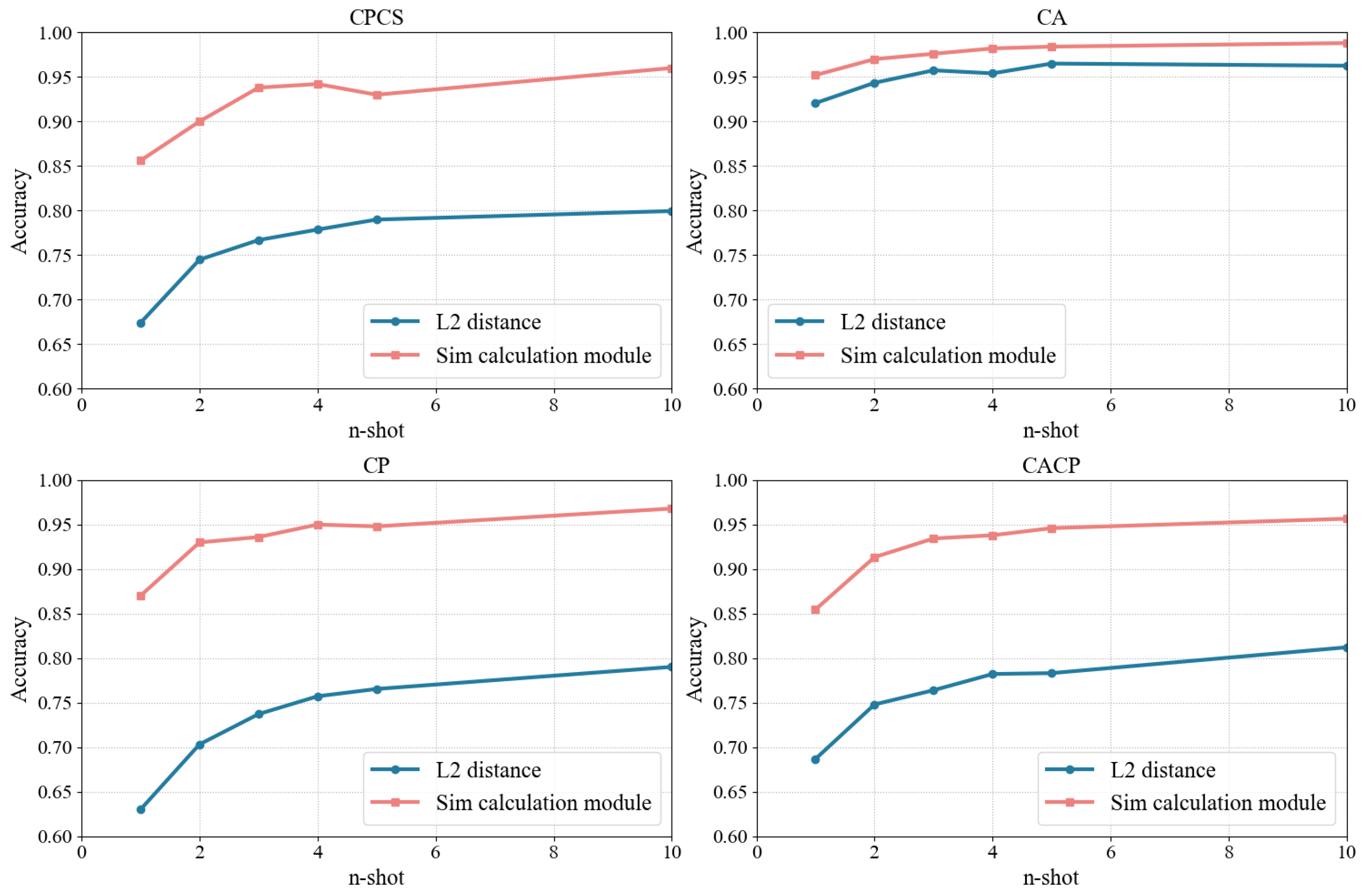

4.2.3. Different Similarity Computation Methods

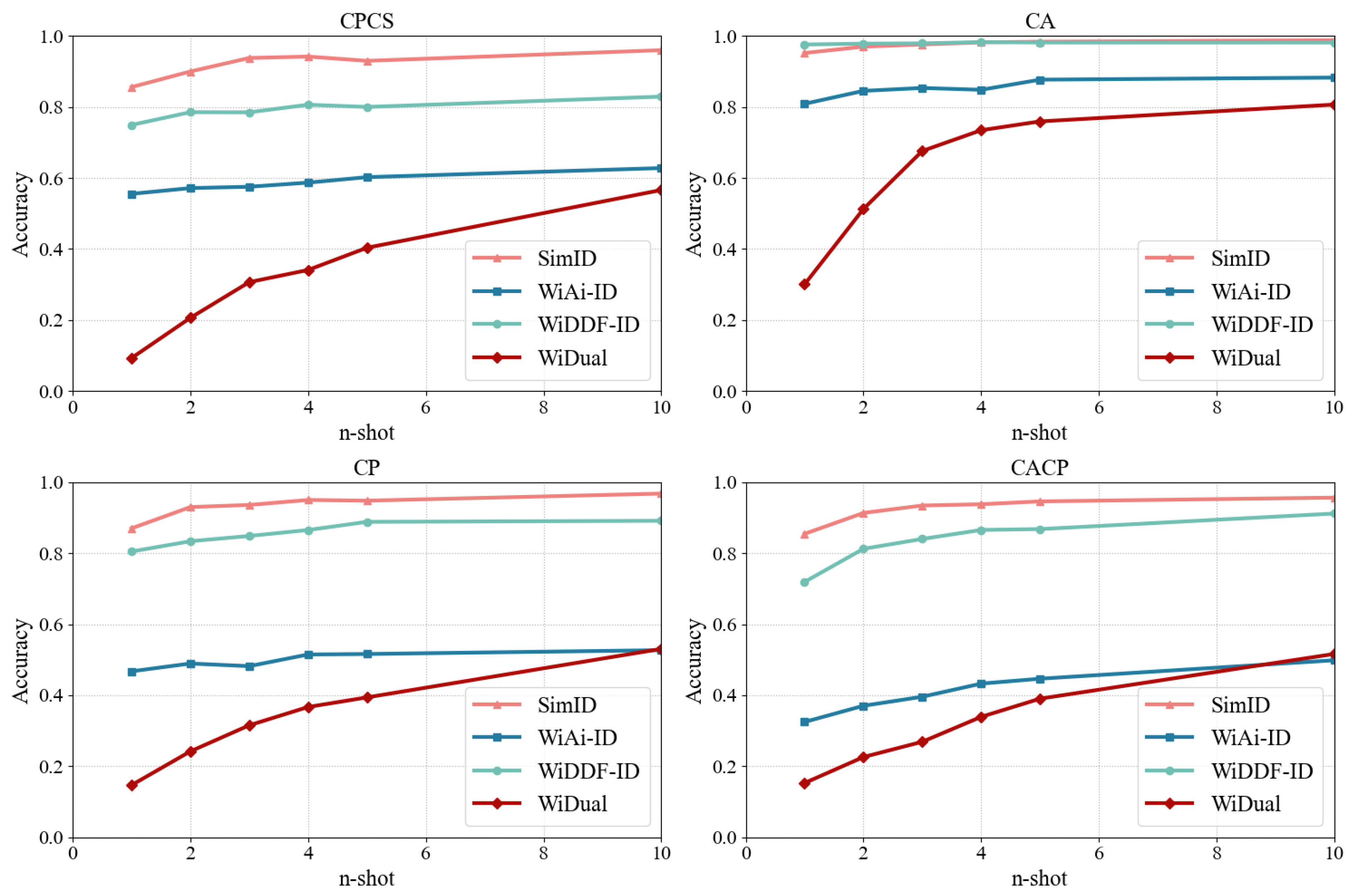

4.2.4. SimID vs. Conventional Methods

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 03 | 85.90% | 91.15% | 90.86% | 94.95% | 93.43% | 94.65% | 91.82% |

| 04 | 81.24% | 87.71% | 91.51% | 91.46% | 91.79% | 93.37% | 89.51% |

| 05 | 91.41% | 96.04% | 97.39% | 97.50% | 95.70% | 98.12% | 96.03% |

| 06 | 77.70% | 84.31% | 86.95% | 85.97% | 88.43% | 89.63% | 85.50% |

| 07 | 78.53% | 84.84% | 86.91% | 90.12% | 89.93% | 90.93% | 86.88% |

| 13 | 84.11% | 90.30% | 91.32% | 90.89% | 92.99% | 94.01% | 90.61% |

| 23 | 79.85% | 86.52% | 86.00% | 86.91% | 87.95% | 86.80% | 85.67% |

| 24 | 90.47% | 94.27% | 93.67% | 94.54% | 95.30% | 96.13% | 94.06% |

| 31 | 88.76% | 91.27% | 92.74% | 95.79% | 94.24% | 95.58% | 93.06% |

| average | 84.22% | 89.60% | 90.82% | 92.01% | 92.20% | 93.25% | 90.35% |

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 03 | 81.99% | 88.32% | 90.02% | 88.72% | 91.92% | 93.65% | 89.10% |

| 04 | 82.37% | 88.72% | 92.77% | 93.58% | 92.32% | 92.72% | 90.41% |

| 05 | 96.11% | 97.21% | 98.19% | 98.55% | 98.24% | 99.24% | 97.92% |

| 06 | 77.56% | 87.59% | 84.83% | 88.41% | 89.71% | 87.83% | 85.99% |

| 07 | 82.91% | 87.10% | 89.09% | 91.71% | 91.20% | 92.90% | 89.15% |

| 13 | 84.43% | 91.59% | 91.49% | 95.14% | 93.70% | 95.70% | 92.01% |

| 23 | 74.87% | 81.33% | 84.91% | 83.42% | 85.76% | 86.80% | 82.85% |

| 24 | 89.13% | 91.88% | 91.39% | 94.71% | 93.94% | 95.07% | 92.69% |

| 31 | 88.93% | 93.31% | 94.45% | 94.63% | 93.20% | 95.74% | 93.38% |

| average | 84.26% | 89.67% | 90.79% | 92.10% | 92.22% | 93.30% | 90.39% |

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 03 | 85.90% | 91.15% | 90.86% | 94.95% | 93.43% | 94.65% | 91.82% |

| 04 | 81.24% | 87.71% | 91.51% | 91.46% | 91.79% | 93.37% | 89.51% |

| 05 | 91.41% | 96.04% | 97.39% | 97.50% | 95.70% | 98.12% | 96.03% |

| 06 | 77.70% | 84.31% | 86.95% | 85.97% | 88.43% | 89.63% | 85.50% |

| 07 | 78.53% | 84.84% | 86.91% | 90.12% | 89.93% | 90.93% | 86.88% |

| 13 | 84.11% | 90.30% | 91.32% | 90.89% | 92.99% | 94.01% | 90.61% |

| 23 | 79.85% | 86.52% | 86.00% | 86.91% | 87.95% | 86.80% | 85.67% |

| 24 | 90.47% | 94.27% | 93.67% | 94.54% | 95.30% | 96.13% | 94.06% |

| 31 | 88.76% | 91.27% | 92.74% | 95.79% | 94.24% | 95.58% | 93.06% |

| average | 84.22% | 89.60% | 90.82% | 92.01% | 92.20% | 93.25% | 90.35% |

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 03 | 83.90% | 89.71% | 90.44% | 91.73% | 92.67% | 94.15% | 90.43% |

| 04 | 81.80% | 88.21% | 92.14% | 92.51% | 92.06% | 93.04% | 89.96% |

| 05 | 93.70% | 96.62% | 97.79% | 98.02% | 96.95% | 98.68% | 96.96% |

| 06 | 77.63% | 85.92% | 85.88% | 87.17% | 89.07% | 88.72% | 85.73% |

| 07 | 80.66% | 85.96% | 87.99% | 90.91% | 90.56% | 91.90% | 88.00% |

| 13 | 84.27% | 90.94% | 91.40% | 92.97% | 93.35% | 94.85% | 91.30% |

| 23 | 77.28% | 83.85% | 85.45% | 85.13% | 86.84% | 86.80% | 84.23% |

| 24 | 89.80% | 93.06% | 92.51% | 94.62% | 94.61% | 95.60% | 93.37% |

| 31 | 88.85% | 92.28% | 93.59% | 95.20% | 93.72% | 95.66% | 93.22% |

| average | 84.21% | 89.62% | 90.80% | 92.03% | 92.20% | 93.27% | 90.35% |

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 01 | 91.02% | 90.70% | 93.04% | 94.56% | 95.57% | 94.48% | 93.23% |

| 02 | 96.76% | 96.94% | 96.71% | 98.87% | 97.37% | 97.01% | 97.28% |

| 03 | 92.74% | 93.96% | 92.94% | 94.05% | 95.51% | 97.65% | 94.47% |

| 04 | 98.24% | 99.40% | 99.47% | 99.47% | 100.00% | 99.39% | 99.33% |

| 05 | 90.14% | 95.76% | 99.37% | 95.40% | 98.84% | 98.15% | 96.28% |

| 06 | 91.14% | 94.61% | 97.63% | 97.55% | 97.21% | 97.55% | 95.95% |

| 07 | 98.78% | 99.33% | 99.31% | 96.91% | 99.36% | 98.83% | 98.76% |

| 08 | 96.25% | 100.00% | 99.44% | 100.00% | 99.38% | 99.38% | 99.07% |

| 09 | 98.19% | 97.99% | 98.73% | 98.82% | 100.00% | 100.00% | 98.95% |

| 10 | 95.71% | 96.36% | 98.91% | 97.81% | 98.77% | 99.39% | 97.83% |

| 11 | 99.40% | 99.43% | 100.00% | 100.00% | 100.00% | 100.00% | 99.81% |

| 12 | 99.36% | 99.41% | 99.42% | 100.00% | 100.00% | 98.92% | 99.52% |

| 13 | 94.34% | 95.71% | 99.44% | 98.73% | 97.35% | 97.77% | 97.22% |

| 14 | 83.72% | 89.87% | 91.95% | 90.86% | 93.37% | 93.42% | 90.53% |

| 15 | 90.06% | 92.36% | 97.37% | 92.77% | 94.54% | 96.41% | 93.92% |

| 16 | 99.39% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% | 99.90% |

| 17 | 95.27% | 96.91% | 96.95% | 100.00% | 99.47% | 99.45% | 98.01% |

| 18 | 100.00% | 99.43% | 100.00% | 100.00% | 100.00% | 100.00% | 99.90% |

| 19 | 100.00% | 99.41% | 100.00% | 100.00% | 100.00% | 100.00% | 99.90% |

| 20 | 95.73% | 97.45% | 99.37% | 98.11% | 97.53% | 98.97% | 97.86% |

| 21 | 78.98% | 94.12% | 90.36% | 92.90% | 92.98% | 93.45% | 90.47% |

| 22 | 93.79% | 97.08% | 94.89% | 93.79% | 97.31% | 99.35% | 96.03% |

| 23 | 93.17% | 92.35% | 94.59% | 92.78% | 90.85% | 96.43% | 93.36% |

| 24 | 82.39% | 91.93% | 88.69% | 95.63% | 94.61% | 90.91% | 90.69% |

| 25 | 89.88% | 96.59% | 95.48% | 95.57% | 98.73% | 98.01% | 95.71% |

| 26 | 91.56% | 96.89% | 100.00% | 98.80% | 97.63% | 97.58% | 97.08% |

| 27 | 95.32% | 93.98% | 96.49% | 97.02% | 95.57% | 98.69% | 96.18% |

| 28 | 84.15% | 88.30% | 91.52% | 94.64% | 93.90% | 94.74% | 91.21% |

| 29 | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% |

| 30 | 96.51% | 98.78% | 98.88% | 97.01% | 98.31% | 98.73% | 98.04% |

| average | 93.73% | 96.17% | 97.03% | 97.07% | 97.47% | 97.82% | 96.55% |

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 01 | 93.83% | 96.89% | 93.63% | 92.67% | 94.97% | 96.25% | 94.71% |

| 02 | 94.71% | 95.96% | 95.45% | 99.43% | 98.67% | 99.39% | 97.27% |

| 03 | 92.22% | 88.61% | 94.05% | 94.61% | 95.51% | 93.26% | 93.04% |

| 04 | 98.82% | 99.40% | 100.00% | 98.95% | 99.44% | 100.00% | 99.43% |

| 05 | 93.43% | 96.34% | 98.74% | 97.08% | 98.27% | 99.38% | 97.21% |

| 06 | 94.74% | 98.14% | 99.40% | 97.55% | 99.43% | 99.38% | 98.10% |

| 07 | 97.01% | 94.90% | 97.30% | 98.74% | 97.50% | 97.69% | 97.19% |

| 08 | 94.48% | 97.31% | 98.32% | 97.97% | 100.00% | 98.76% | 97.81% |

| 09 | 97.60% | 100.00% | 99.36% | 100.00% | 100.00% | 99.42% | 99.40% |

| 10 | 92.86% | 96.95% | 99.45% | 96.24% | 98.77% | 98.19% | 97.08% |

| 11 | 98.82% | 99.43% | 98.77% | 100.00% | 99.40% | 99.47% | 99.31% |

| 12 | 99.36% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% | 99.89% |

| 13 | 99.34% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% | 99.89% |

| 14 | 87.27% | 91.61% | 96.48% | 92.44% | 93.89% | 94.67% | 92.73% |

| 15 | 83.16% | 86.83% | 92.50% | 90.06% | 92.02% | 93.06% | 89.61% |

| 16 | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% |

| 17 | 99.38% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% | 99.90% |

| 18 | 98.29% | 99.43% | 99.42% | 100.00% | 100.00% | 100.00% | 99.52% |

| 19 | 96.72% | 97.13% | 98.18% | 98.10% | 97.50% | 100.00% | 97.94% |

| 20 | 90.75% | 97.45% | 96.32% | 96.89% | 96.34% | 97.46% | 95.87% |

| 21 | 90.85% | 96.39% | 93.75% | 94.74% | 95.78% | 96.91% | 94.74% |

| 22 | 84.83% | 95.95% | 92.78% | 95.57% | 95.26% | 96.20% | 93.43% |

| 23 | 85.71% | 91.41% | 91.62% | 94.89% | 94.85% | 91.22% | 91.62% |

| 24 | 88.51% | 88.10% | 91.98% | 90.00% | 90.29% | 96.39% | 90.88% |

| 25 | 93.79% | 97.14% | 96.57% | 96.79% | 96.89% | 98.67% | 96.64% |

| 26 | 92.76% | 95.12% | 98.62% | 97.62% | 98.21% | 98.77% | 96.85% |

| 27 | 91.06% | 92.31% | 95.38% | 97.60% | 96.79% | 95.57% | 94.79% |

| 28 | 88.00% | 93.79% | 93.79% | 94.64% | 96.25% | 96.43% | 93.82% |

| 29 | 99.37% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% | 99.89% |

| 30 | 96.51% | 99.39% | 98.88% | 99.39% | 99.43% | 97.48% | 98.51% |

| average | 93.81% | 96.20% | 97.02% | 97.07% | 97.52% | 97.80% | 96.57% |

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 01 | 91.02% | 90.70% | 93.04% | 94.56% | 95.57% | 94.48% | 93.23% |

| 02 | 96.76% | 96.94% | 96.71% | 98.87% | 97.37% | 97.01% | 97.28% |

| 03 | 92.74% | 93.96% | 92.94% | 94.05% | 95.51% | 97.65% | 94.47% |

| 04 | 98.24% | 99.40% | 99.47% | 99.47% | 100.00% | 99.39% | 99.33% |

| 05 | 90.14% | 95.76% | 99.37% | 95.40% | 98.84% | 98.15% | 96.28% |

| 06 | 91.14% | 94.61% | 97.63% | 97.55% | 97.21% | 97.55% | 95.95% |

| 07 | 98.78% | 99.33% | 99.31% | 96.91% | 99.36% | 98.83% | 98.76% |

| 08 | 96.25% | 100.00% | 99.44% | 100.00% | 99.38% | 99.38% | 99.07% |

| 09 | 98.19% | 97.99% | 98.73% | 98.82% | 100.00% | 100.00% | 98.95% |

| 10 | 95.71% | 96.36% | 98.91% | 97.81% | 98.77% | 99.39% | 97.83% |

| 11 | 99.40% | 99.43% | 100.00% | 100.00% | 100.00% | 100.00% | 99.81% |

| 12 | 99.36% | 99.41% | 99.42% | 100.00% | 100.00% | 98.92% | 99.52% |

| 13 | 94.34% | 95.71% | 99.44% | 98.73% | 97.35% | 97.77% | 97.22% |

| 14 | 83.72% | 89.87% | 91.95% | 90.86% | 93.37% | 93.42% | 90.53% |

| 15 | 90.06% | 92.36% | 97.37% | 92.77% | 94.54% | 96.41% | 93.92% |

| 16 | 99.39% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% | 99.90% |

| 17 | 95.27% | 96.91% | 96.95% | 100.00% | 99.47% | 99.45% | 98.01% |

| 18 | 100.00% | 99.43% | 100.00% | 100.00% | 100.00% | 100.00% | 99.90% |

| 19 | 100.00% | 99.41% | 100.00% | 100.00% | 100.00% | 100.00% | 99.90% |

| 20 | 95.73% | 97.45% | 99.37% | 98.11% | 97.53% | 98.97% | 97.86% |

| 21 | 78.98% | 94.12% | 90.36% | 92.90% | 92.98% | 93.45% | 90.47% |

| 22 | 93.79% | 97.08% | 94.89% | 93.79% | 97.31% | 99.35% | 96.03% |

| 23 | 93.17% | 92.35% | 94.59% | 92.78% | 90.85% | 96.43% | 93.36% |

| 24 | 82.39% | 91.93% | 88.69% | 95.63% | 94.61% | 90.91% | 90.69% |

| 25 | 89.88% | 96.59% | 95.48% | 95.57% | 98.73% | 98.01% | 95.71% |

| 26 | 91.56% | 96.89% | 100.00% | 98.80% | 97.63% | 97.58% | 97.08% |

| 27 | 95.32% | 93.98% | 96.49% | 97.02% | 95.57% | 98.69% | 96.18% |

| 28 | 84.15% | 88.30% | 91.52% | 94.64% | 93.90% | 94.74% | 91.21% |

| 29 | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% |

| 30 | 96.51% | 98.78% | 98.88% | 97.01% | 98.31% | 98.73% | 98.04% |

| average | 93.73% | 96.17% | 97.03% | 97.07% | 97.47% | 97.82% | 96.55% |

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 01 | 92.40% | 93.69% | 93.33% | 93.60% | 95.27% | 95.36% | 93.94% |

| 02 | 95.72% | 96.45% | 96.08% | 99.15% | 98.01% | 98.18% | 97.27% |

| 03 | 92.48% | 91.21% | 93.49% | 94.33% | 95.51% | 95.40% | 93.74% |

| 04 | 98.53% | 99.40% | 99.73% | 99.21% | 99.72% | 99.69% | 99.38% |

| 05 | 91.76% | 96.05% | 99.05% | 96.23% | 98.55% | 98.76% | 96.73% |

| 06 | 92.90% | 96.34% | 98.51% | 97.55% | 98.31% | 98.45% | 97.01% |

| 07 | 97.89% | 97.07% | 98.29% | 97.82% | 98.42% | 98.26% | 97.96% |

| 08 | 95.36% | 98.64% | 98.88% | 98.98% | 99.69% | 99.07% | 98.43% |

| 09 | 97.90% | 98.98% | 99.04% | 99.40% | 100.00% | 99.71% | 99.17% |

| 10 | 94.26% | 96.66% | 99.18% | 97.02% | 98.77% | 98.79% | 97.45% |

| 11 | 99.11% | 99.43% | 99.38% | 100.00% | 99.70% | 99.73% | 99.56% |

| 12 | 99.36% | 99.71% | 99.71% | 100.00% | 100.00% | 99.46% | 99.71% |

| 13 | 96.77% | 97.81% | 99.72% | 99.36% | 98.66% | 98.87% | 98.53% |

| 14 | 85.46% | 90.73% | 94.16% | 91.64% | 93.63% | 94.04% | 91.61% |

| 15 | 86.47% | 89.51% | 94.87% | 91.39% | 93.26% | 94.71% | 91.70% |

| 16 | 99.70% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% | 99.95% |

| 17 | 97.28% | 98.43% | 98.45% | 100.00% | 99.74% | 99.72% | 98.94% |

| 18 | 99.14% | 99.43% | 99.71% | 100.00% | 100.00% | 100.00% | 99.71% |

| 19 | 98.33% | 98.26% | 99.08% | 99.04% | 98.73% | 100.00% | 98.91% |

| 20 | 93.18% | 97.45% | 97.82% | 97.50% | 96.93% | 98.21% | 96.85% |

| 21 | 84.50% | 95.24% | 92.02% | 93.81% | 94.36% | 95.15% | 92.51% |

| 22 | 89.09% | 96.51% | 93.82% | 94.67% | 96.28% | 97.75% | 94.69% |

| 23 | 89.29% | 91.88% | 93.09% | 93.82% | 92.81% | 93.75% | 92.44% |

| 24 | 85.34% | 89.97% | 90.30% | 92.73% | 92.40% | 93.57% | 90.72% |

| 25 | 91.79% | 96.87% | 96.02% | 96.18% | 97.81% | 98.34% | 96.17% |

| 26 | 92.16% | 96.00% | 99.31% | 98.20% | 97.92% | 98.17% | 96.96% |

| 27 | 93.14% | 93.13% | 95.93% | 97.31% | 96.18% | 97.11% | 95.47% |

| 28 | 86.03% | 90.96% | 92.64% | 94.64% | 95.06% | 95.58% | 92.49% |

| 29 | 99.68% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% | 99.95% |

| 30 | 96.51% | 99.08% | 98.88% | 98.18% | 98.86% | 98.10% | 98.27% |

| average | 93.72% | 96.16% | 97.02% | 97.06% | 97.49% | 97.80% | 96.54% |

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 21 | 91.14% | 95.67% | 95.80% | 96.63% | 97.08% | 96.24% | 95.43% |

| 22 | 87.53% | 91.91% | 91.68% | 96.07% | 95.73% | 96.15% | 93.18% |

| 23 | 83.23% | 88.32% | 91.20% | 91.67% | 92.55% | 92.98% | 89.99% |

| 24 | 82.95% | 89.46% | 91.03% | 92.11% | 90.48% | 91.60% | 89.60% |

| 25 | 78.31% | 87.55% | 89.11% | 90.96% | 90.56% | 91.21% | 87.95% |

| 26 | 83.37% | 90.00% | 91.79% | 94.36% | 93.79% | 95.74% | 91.51% |

| 27 | 86.42% | 93.86% | 94.89% | 94.35% | 95.23% | 96.85% | 93.60% |

| 28 | 80.63% | 92.70% | 91.97% | 92.35% | 93.16% | 96.49% | 91.22% |

| 29 | 86.90% | 90.96% | 91.40% | 92.51% | 92.60% | 94.55% | 91.49% |

| 30 | 90.65% | 93.44% | 95.16% | 94.83% | 97.49% | 99.20% | 95.13% |

| average | 85.11% | 91.39% | 92.40% | 93.58% | 93.87% | 95.10% | 91.91% |

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 21 | 85.11% | 90.27% | 91.61% | 92.94% | 93.76% | 93.89% | 91.26% |

| 22 | 87.88% | 93.53% | 96.15% | 95.28% | 96.99% | 96.15% | 94.33% |

| 23 | 84.79% | 89.38% | 90.51% | 91.30% | 91.04% | 92.59% | 89.94% |

| 24 | 76.43% | 85.39% | 87.52% | 89.22% | 89.73% | 93.75% | 87.01% |

| 25 | 88.04% | 92.84% | 93.95% | 95.37% | 96.16% | 96.96% | 93.88% |

| 26 | 88.50% | 95.98% | 94.24% | 95.66% | 97.10% | 96.87% | 94.72% |

| 27 | 85.08% | 91.15% | 92.43% | 93.41% | 93.01% | 93.71% | 91.47% |

| 28 | 72.17% | 82.19% | 83.82% | 87.22% | 85.58% | 90.99% | 83.66% |

| 29 | 95.00% | 98.26% | 97.48% | 98.28% | 98.72% | 98.94% | 97.78% |

| 30 | 93.56% | 97.23% | 98.40% | 98.35% | 97.68% | 97.82% | 97.17% |

| average | 85.66% | 91.62% | 92.61% | 93.70% | 93.98% | 95.17% | 92.12% |

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 21 | 91.14% | 95.67% | 95.80% | 96.63% | 97.08% | 96.24% | 95.43% |

| 22 | 87.53% | 91.91% | 91.68% | 96.07% | 95.73% | 96.15% | 93.18% |

| 23 | 83.23% | 88.32% | 91.20% | 91.67% | 92.55% | 92.98% | 89.99% |

| 24 | 82.95% | 89.46% | 91.03% | 92.11% | 90.48% | 91.60% | 89.60% |

| 25 | 78.31% | 87.55% | 89.11% | 90.96% | 90.56% | 91.21% | 87.95% |

| 26 | 83.37% | 90.00% | 91.79% | 94.36% | 93.79% | 95.74% | 91.51% |

| 27 | 86.42% | 93.86% | 94.89% | 94.35% | 95.23% | 96.85% | 93.60% |

| 28 | 80.63% | 92.70% | 91.97% | 92.35% | 93.16% | 96.49% | 91.22% |

| 29 | 86.90% | 90.96% | 91.40% | 92.51% | 92.60% | 94.55% | 91.49% |

| 30 | 90.65% | 93.44% | 95.16% | 94.83% | 97.49% | 99.20% | 95.13% |

| average | 85.11% | 91.39% | 92.40% | 93.58% | 93.87% | 95.10% | 91.91% |

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 21 | 88.02% | 92.89% | 93.66% | 94.75% | 95.39% | 95.05% | 93.29% |

| 22 | 87.70% | 92.71% | 93.86% | 95.67% | 96.36% | 96.15% | 93.74% |

| 23 | 84.00% | 88.84% | 90.86% | 91.49% | 91.79% | 92.78% | 89.96% |

| 24 | 79.55% | 87.38% | 89.24% | 90.64% | 90.10% | 92.66% | 88.26% |

| 25 | 82.89% | 90.12% | 91.46% | 93.11% | 93.28% | 93.99% | 90.81% |

| 26 | 85.86% | 92.89% | 93.00% | 95.00% | 95.41% | 96.30% | 93.08% |

| 27 | 85.74% | 92.49% | 93.64% | 93.88% | 94.11% | 95.26% | 92.52% |

| 28 | 76.17% | 87.13% | 87.70% | 89.71% | 89.21% | 93.66% | 87.26% |

| 29 | 90.77% | 94.47% | 94.34% | 95.31% | 95.56% | 96.69% | 94.52% |

| 30 | 92.08% | 95.30% | 96.76% | 96.56% | 97.58% | 98.50% | 96.13% |

| average | 85.28% | 91.42% | 92.45% | 93.61% | 93.88% | 95.11% | 91.96% |

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 21 | 82.68% | 86.97% | 91.27% | 91.65% | 91.44% | 91.29% | 89.22% |

| 22 | 84.19% | 92.22% | 92.48% | 93.87% | 93.78% | 97.86% | 92.40% |

| 23 | 91.86% | 93.28% | 94.48% | 95.29% | 96.87% | 96.79% | 94.76% |

| 24 | 85.51% | 89.94% | 93.00% | 94.35% | 94.73% | 96.02% | 92.26% |

| 25 | 84.60% | 90.35% | 92.32% | 92.25% | 92.38% | 94.98% | 91.15% |

| 26 | 78.27% | 85.26% | 88.61% | 89.04% | 91.25% | 94.73% | 87.86% |

| 27 | 87.38% | 94.28% | 95.11% | 96.19% | 96.12% | 98.05% | 94.52% |

| 28 | 79.08% | 84.26% | 89.21% | 91.11% | 90.63% | 92.46% | 87.79% |

| 29 | 84.71% | 91.68% | 95.44% | 95.33% | 96.92% | 98.34% | 93.74% |

| 30 | 94.41% | 97.30% | 96.18% | 96.84% | 97.84% | 97.84% | 96.74% |

| average | 85.27% | 90.55% | 92.81% | 93.59% | 94.20% | 95.83% | 92.04% |

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 21 | 81.87% | 88.75% | 89.51% | 93.97% | 91.79% | 93.89% | 89.96% |

| 22 | 80.71% | 86.65% | 91.75% | 88.27% | 89.98% | 90.47% | 87.97% |

| 23 | 85.39% | 89.39% | 92.43% | 93.82% | 94.83% | 95.45% | 91.89% |

| 24 | 78.56% | 85.05% | 88.11% | 89.48% | 91.82% | 93.20% | 87.70% |

| 25 | 89.81% | 94.16% | 94.23% | 96.87% | 97.26% | 100.00% | 95.39% |

| 26 | 91.10% | 93.86% | 93.96% | 96.13% | 94.94% | 96.15% | 94.36% |

| 27 | 82.50% | 87.08% | 90.67% | 92.32% | 91.28% | 95.57% | 89.90% |

| 28 | 81.82% | 90.19% | 93.79% | 91.93% | 94.99% | 97.15% | 91.65% |

| 29 | 94.39% | 96.29% | 97.37% | 97.51% | 98.22% | 99.07% | 97.14% |

| 30 | 89.75% | 95.28% | 96.96% | 96.65% | 97.08% | 98.26% | 95.66% |

| average | 85.59% | 90.67% | 92.88% | 93.69% | 94.22% | 95.92% | 92.16% |

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 21 | 82.68% | 86.97% | 91.27% | 91.65% | 91.44% | 91.29% | 89.22% |

| 22 | 84.19% | 92.22% | 92.48% | 93.87% | 93.78% | 97.86% | 92.40% |

| 23 | 91.86% | 93.28% | 94.48% | 95.29% | 96.87% | 96.79% | 94.76% |

| 24 | 85.51% | 89.94% | 93.00% | 94.35% | 94.73% | 96.02% | 92.26% |

| 25 | 84.60% | 90.35% | 92.32% | 92.25% | 92.38% | 94.98% | 91.15% |

| 26 | 78.27% | 85.26% | 88.61% | 89.04% | 91.25% | 94.73% | 87.86% |

| 27 | 87.38% | 94.28% | 95.11% | 96.19% | 96.12% | 98.05% | 94.52% |

| 28 | 79.08% | 84.26% | 89.21% | 91.11% | 90.63% | 92.46% | 87.79% |

| 29 | 84.71% | 91.68% | 95.44% | 95.33% | 96.92% | 98.34% | 93.74% |

| 30 | 94.41% | 97.30% | 96.18% | 96.84% | 97.84% | 97.84% | 96.74% |

| average | 85.27% | 90.55% | 92.81% | 93.59% | 94.20% | 95.83% | 92.04% |

| User | 1-Shot | 2-Shot | 3-Shot | 4-Shot | 5-Shot | 10-Shot | Average |

|---|---|---|---|---|---|---|---|

| 21 | 82.27% | 87.85% | 90.38% | 92.80% | 91.62% | 92.57% | 89.58% |

| 22 | 82.41% | 89.35% | 92.11% | 90.98% | 91.84% | 94.02% | 90.12% |

| 23 | 88.50% | 91.30% | 93.44% | 94.55% | 95.84% | 96.12% | 93.29% |

| 24 | 81.89% | 87.43% | 90.49% | 91.85% | 93.25% | 94.59% | 89.92% |

| 25 | 87.13% | 92.22% | 93.27% | 94.50% | 94.76% | 97.43% | 93.22% |

| 26 | 84.20% | 89.35% | 91.20% | 92.45% | 93.06% | 95.43% | 90.95% |

| 27 | 84.87% | 90.54% | 92.84% | 94.22% | 93.64% | 96.79% | 92.15% |

| 28 | 80.43% | 87.13% | 91.44% | 91.52% | 92.76% | 94.75% | 89.67% |

| 29 | 89.29% | 93.93% | 96.39% | 96.40% | 97.57% | 98.70% | 95.38% |

| 30 | 92.02% | 96.28% | 96.57% | 96.74% | 97.46% | 98.05% | 96.19% |

| average | 85.30% | 90.54% | 92.81% | 93.60% | 94.18% | 95.84% | 92.05% |


- Vinyals, O.; Blundell, C.; Lillicrap, T.; Kavukcuoglu, K.; Wierstra, D. Matching Networks for One Shot Learning. Adv. Neural Inf. Process. Syst. 2016, 29, 3637–3645. [Google Scholar]

- Hoffer, E.; Ailon, N. Deep Metric Learning Using Triplet Network. In Similarity-Based Pattern Recognition, Proceedings of the Third International Workshop, SIMBAD 2015, Copenhagen, Denmark, 12–14 October 2015; Springer International Publishing: Cham, Switzerland, 2015; pp. 84–92. [Google Scholar] [CrossRef]

- Finn, C.; Abbeel, P.; Levine, S. Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; Precup, D., Teh, Y.W., Eds.; Proceedings of Machine Learning Research. JMLR: Cambridge, MA, USA, 2017; Volume 70, pp. 1126–1135. [Google Scholar]

- Selesnick, I.; Burrus, C. Generalized digital Butterworth filter design. In Proceedings of the 1996 IEEE International Conference on Acoustics, Speech, and Signal Processing Conference Proceedings, Atlanta, GA, USA, 7–13 May 2002; pp. 1367–1370. [Google Scholar] [CrossRef]

- IEEE Std 802.11n-2009; IEEE Standard for Information Technology—Telecommunications and Information Exchange Between Systems— Local and Metropolitan Area Networks—Specific Requirements—Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications—Amendment 5: Enhancements for Higher Throughput. IEEE: New York, NY, USA, 2009.

- Wang, F.; Zhang, T.; Zhao, B.; Xing, L.; Wang, T.; Ding, H.; Han, T.X. A survey on wi-fi sensing generalizability: Taxonomy, techniques, datasets, and future research prospects. arXiv 2025, arXiv:2503.08008. [Google Scholar]

| Hyper Parameter | Meaning | Value |
|---|---|---|
| B | size of the training query set, or batch size | 128 |
| | number of samples per category in the training support set | 4 |
| | number of samples per category in the test support set, or shot | |
| | the total number of iterations executed during training | 20,000 |
| | learning rate | * |
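
As a rough illustration of how the hyperparameters above might enter an episodic training loop, the following Python sketch draws one training episode: a support set with a fixed number of samples per identity and a query set of size B. The function name `sample_episode`, its arguments, and the choice to keep the support and query sets disjoint are assumptions made for illustration, not details taken from the SimID implementation.

```python
import random
from collections import defaultdict


def sample_episode(labels, k_support=4, query_size=128, seed=None):
    """Return (support, query) index lists for one hypothetical training episode.

    labels      -- identity label of every training sample
    k_support   -- samples per identity in the support set (4 in the table)
    query_size  -- size of the query set, i.e. batch size B (128 in the table)
    """
    rng = random.Random(seed)

    # Group sample indices by identity.
    by_identity = defaultdict(list)
    for index, identity in enumerate(labels):
        by_identity[identity].append(index)

    # Draw k_support samples per identity, without replacement.
    support = []
    for identity, indices in by_identity.items():
        if len(indices) < k_support:
            raise ValueError(f"identity {identity!r} has fewer than {k_support} samples")
        support.extend(rng.sample(indices, k_support))

    # Assumption: the query set is drawn from samples not used as support.
    support_set = set(support)
    remaining = [i for i in range(len(labels)) if i not in support_set]
    query = rng.sample(remaining, min(query_size, len(remaining)))
    return support, query


# Toy data: 10 identities with 40 samples each.
labels = [user for user in range(10) for _ in range(40)]
support, query = sample_episode(labels, seed=0)
print(len(support), len(query))  # 40 128
```

Under these assumptions, one such episode would be drawn at each of the 20,000 training iterations.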

| Scene | Scene 1 | Scene 2 | Scene 3 | Scene 4 |
|---|---|---|---|---|
| Identity | | | | |

| | CPCS | CA | CP | CACP |
|---|---|---|---|---|
| train | 21,120 | 19,200 | 19,200 | 12,800 |
| test | 8640 | 9600 | 9600 | 3200 |
| Total | 29,760 | 28,800 | 28,800 | 16,000 |

| Network | Dataset | n-Shot in Test | | | | | | |
|---|---|---|---|---|---|---|---|---|
| | | 1 | 2 | 3 | 4 | 5 | 10 | Average |
| Siamese Networks | CPCS | 70.83% | 71.93% | 74.65% | 76.22% | 75.76% | 76.90% | 74.38% |
| | CA | 92.76% | 93.98% | 94.56% | 94.70% | 94.78% | 95.36% | 94.36% |
| | CP | 64.03% | 67.27% | 68.81% | 69.65% | 70.83% | 72.45% | 68.84% |
| | CACP | 62.23% | 65.27% | 65.57% | 67.21% | 68.53% | 71.07% | 66.65% |
| | average | 72.46% | 74.61% | 75.90% | 76.95% | 77.48% | 78.95% | 76.06% |
| Relation Network | CPCS | 70.23% | 76.27% | 76.88% | 77.60% | 79.35% | 81.38% | 76.95% |
| | CA | 93.85% | 94.60% | 95.12% | 94.83% | 95.67% | 94.67% | 94.79% |
| | CP | 62.55% | 67.45% | 67.22% | 67.53% | 67.05% | 69.63% | 66.90% |
| | CACP | 65.42% | 69.32% | 71.93% | 72.20% | 73.55% | 73.53% | 70.99% |
| | average | 73.01% | 76.91% | 77.79% | 78.04% | 78.91% | 79.80% | 77.41% |
| Prototypical Network | CPCS | 85.60% | 90.00% | 93.80% | 94.20% | 93.00% | 96.00% | 92.10% |
| | CA | 95.20% | 97.00% | 97.60% | 98.20% | 98.40% | 98.80% | 97.53% |
| | CP | 87.00% | 93.00% | 93.60% | 95.00% | 94.80% | 96.80% | 93.37% |
| | CACP | 85.46% | 91.34% | 93.44% | 93.80% | 94.60% | 95.66% | 92.38% |
| | average | 88.32% | 92.84% | 94.61% | 95.30% | 95.20% | 96.82% | 93.85% |
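
The "Average" column and "average" row in the table above appear to be plain arithmetic means over shots and over datasets, respectively. The short Python sketch below recomputes them for the Prototypical Network rows and compares the results with the reported values; the variable names and the tolerance of 0.006 percentage points (to absorb two-decimal rounding) are choices made here for illustration.

```python
# Prototypical Network accuracies (%) for 1, 2, 3, 4, 5, and 10 shots.
accuracy = {
    "CPCS": [85.60, 90.00, 93.80, 94.20, 93.00, 96.00],
    "CA":   [95.20, 97.00, 97.60, 98.20, 98.40, 98.80],
    "CP":   [87.00, 93.00, 93.60, 95.00, 94.80, 96.80],
    "CACP": [85.46, 91.34, 93.44, 93.80, 94.60, 95.66],
}
# Values reported in the table.
reported_per_dataset = {"CPCS": 92.10, "CA": 97.53, "CP": 93.37, "CACP": 92.38}
reported_per_shot = [88.32, 92.84, 94.61, 95.30, 95.20, 96.82]
reported_overall = 93.85


def mean(values):
    values = list(values)
    return sum(values) / len(values)


# Mean over shots for each dataset ("Average" column).
per_dataset = {name: mean(row) for name, row in accuracy.items()}
# Mean over datasets for each shot count ("average" row).
per_shot = [mean(column) for column in zip(*accuracy.values())]
# Mean over all 24 entries (bottom-right cell).
overall = mean(value for row in accuracy.values() for value in row)

# Largest gap between recomputed and reported values.
deviations = [abs(per_dataset[n] - reported_per_dataset[n]) for n in accuracy]
deviations += [abs(a - b) for a, b in zip(per_shot, reported_per_shot)]
deviations.append(abs(overall - reported_overall))
max_deviation = max(deviations)

print(f"largest deviation from reported values: {max_deviation:.4f}")
assert max_deviation < 0.006  # consistent with rounding to two decimals
```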

| Feature Encoder | ResNet10 | DenseNet121 | SE-ResNet18 | SE-ResNet10 |
|---|---|---|---|---|
| Trainable parameters | 3.11 M | 5.64 M | 5.37 M | 3.16 M |
| Inference time (ms) | | | | |
| Accuracy | 79.62% | 88.39% | 87.87% | 93.85% |

| Feature Encoder | Dataset | n-Shot in Test | | | | | | |
|---|---|---|---|---|---|---|---|---|
| | | 1 | 2 | 3 | 4 | 5 | 10 | Average |
| ResNet10 | CPCS | 63.28% | 68.24% | 71.64% | 71.72% | 72.88% | 74.10% | 70.31% |
| | CA | 94.84% | 96.24% | 96.98% | 97.00% | 97.00% | 97.36% | 96.57% |
| | CP | 65.22% | 71.74% | 75.26% | 76.54% | 78.44% | 80.24% | 74.57% |
| | CACP | 65.70% | 73.88% | 77.98% | 79.78% | 81.04% | 83.68% | 77.01% |
| | average | 72.26% | 77.53% | 80.47% | 81.26% | 82.34% | 83.85% | 79.62% |
| DenseNet121 | CPCS | 75.10% | 82.40% | 84.98% | 86.42% | 86.90% | 88.78% | 84.10% |
| | CA | 96.86% | 97.68% | 98.02% | 98.40% | 98.44% | 98.24% | 97.94% |
| | CP | 73.74% | 82.28% | 85.22% | 86.86% | 87.98% | 89.16% | 84.21% |
| | CACP | 78.88% | 85.46% | 87.92% | 89.74% | 89.98% | 91.94% | 87.32% |
| | average | 81.15% | 86.96% | 89.04% | 90.36% | 90.83% | 92.03% | 88.39% |
| SE-ResNet18 | CPCS | 73.46% | 81.64% | 83.92% | 85.60% | 86.52% | 88.36% | 83.25% |
| | CA | 90.86% | 93.34% | 94.26% | 95.18% | 95.26% | 96.06% | 94.16% |
| | CP | 74.32% | 81.90% | 84.74% | 86.10% | 87.42% | 89.38% | 83.98% |
| | CACP | 82.92% | 88.86% | 90.70% | 91.96% | 92.56% | 93.44% | 90.07% |
| | average | 80.39% | 86.44% | 88.41% | 89.71% | 90.44% | 91.81% | 87.87% |
| SE-ResNet10 | CPCS | 85.60% | 90.00% | 93.80% | 94.20% | 93.00% | 96.00% | 92.10% |
| | CA | 95.20% | 97.00% | 97.60% | 98.20% | 98.40% | 98.80% | 97.53% |
| | CP | 87.00% | 93.00% | 93.60% | 95.00% | 94.80% | 96.80% | 93.37% |
| | CACP | 85.46% | 91.34% | 93.44% | 93.80% | 94.60% | 95.66% | 92.38% |
| | average | 88.32% | 92.84% | 94.61% | 95.30% | 95.20% | 96.82% | 93.85% |

| Distance | Dataset | n-Shot in Test | | | | | | |
|---|---|---|---|---|---|---|---|---|
| | | 1 | 2 | 3 | 4 | 5 | 10 | Average |
| L2 | CPCS | 67.38% | 74.48% | 76.68% | 77.86% | 78.98% | 79.94% | 75.89% |
| | CA | 92.06% | 94.34% | 95.74% | 95.40% | 96.50% | 96.26% | 95.05% |
| | CP | 63.00% | 70.32% | 73.72% | 75.72% | 76.54% | 79.02% | 73.05% |
| | CACP | 68.68% | 74.80% | 76.40% | 78.22% | 78.32% | 81.22% | 76.27% |
| | average | 72.78% | 78.49% | 80.64% | 81.80% | 82.59% | 84.11% | 80.07% |
| Sim Computation Module | CPCS | 85.60% | 90.00% | 93.80% | 94.20% | 93.00% | 96.00% | 92.10% |
| | CA | 95.20% | 97.00% | 97.60% | 98.20% | 98.40% | 98.80% | 97.53% |
| | CP | 87.00% | 93.00% | 93.60% | 95.00% | 94.80% | 96.80% | 93.37% |
| | CACP | 85.46% | 91.34% | 93.44% | 93.80% | 94.60% | 95.66% | 92.38% |
| | average | 88.32% | 92.84% | 94.61% | 95.30% | 95.20% | 96.82% | 93.85% |

| System | Dataset | n-Shot in Test | | | | | | |
|---|---|---|---|---|---|---|---|---|
| | | 1 | 2 | 3 | 4 | 5 | 10 | Average |
| WiDFF-ID | CPCS | 74.94% | 78.54% | 78.50% | 80.64% | 80.02% | 82.92% | 79.26% |
| | CA | 97.60% | 97.84% | 97.92% | 98.28% | 98.16% | 98.16% | 97.99% |
| | CP | 80.48% | 83.38% | 84.86% | 86.54% | 88.84% | 89.14% | 85.54% |
| | CACP | 71.92% | 81.24% | 84.02% | 86.56% | 86.80% | 91.18% | 83.62% |
| | average | 81.24% | 85.25% | 86.33% | 88.01% | 88.46% | 90.35% | 86.60% |
| WiAi-ID | CPCS | 55.54% | 57.16% | 57.54% | 58.70% | 60.24% | 62.80% | 58.66% |
| | CA | 80.90% | 84.54% | 85.36% | 84.84% | 87.70% | 88.28% | 85.27% |
| | CP | 46.74% | 48.94% | 48.20% | 51.48% | 51.62% | 52.70% | 49.95% |
| | CACP | 32.48% | 37.04% | 39.58% | 43.28% | 44.66% | 49.86% | 41.15% |
| | average | 53.92% | 56.92% | 57.67% | 59.58% | 61.06% | 63.41% | 58.76% |
| WiDual | CPCS | 9.28% | 20.62% | 30.68% | 34.10% | 40.36% | 56.62% | 31.94% |
| | CA | 30.04% | 51.28% | 67.62% | 73.46% | 75.94% | 80.68% | 63.17% |
| | CP | 14.68% | 24.20% | 31.54% | 36.70% | 39.40% | 53.06% | 33.26% |
| | CACP | 15.28% | 22.60% | 26.90% | 33.92% | 39.04% | 51.64% | 31.56% |
| | average | 17.32% | 29.68% | 39.19% | 44.55% | 48.69% | 60.50% | 39.99% |
| SimID | CPCS | 85.60% | 90.00% | 93.80% | 94.20% | 93.00% | 96.00% | 92.10% |
| | CA | 95.20% | 97.00% | 97.60% | 98.20% | 98.40% | 98.80% | 97.53% |
| | CP | 87.00% | 93.00% | 93.60% | 95.00% | 94.80% | 96.80% | 93.37% |
| | CACP | 85.46% | 91.34% | 93.44% | 93.80% | 94.60% | 95.66% | 92.38% |
| | average | 88.32% | 92.84% | 94.61% | 95.30% | 95.20% | 96.82% | 93.85% |

| System | WiDFF-ID | WiAi-ID | WiDual | SimID |
|---|---|---|---|---|
| Working distance | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Z.; Ouyang, L.; Chen, S.; Ding, H.; Wang, G.; Wang, F. SimID: Wi-Fi-Based Few-Shot Cross-Domain User Recognition with Identity Similarity Learning. Sensors 2025, 25, 5151. https://doi.org/10.3390/s25165151
