Abstract

The axle-box bearing is a critical load-bearing component in high-speed trains and is prone to failure under long-term heavy-duty operation, affecting both operational efficiency and safety. Current deep-learning-based fault diagnosis methods face two key challenges: difficulty in capturing temporal features across multiple scales simultaneously, and limited capability in modeling local sequential patterns. To address these issues, we propose P2IFormer, a fault diagnosis model based on multi-granularity patch-to-image embedding. The raw vibration sequence is divided into equal-length patch sequences under multiple granularities, each defined by a fixed window size. Each patch is then transformed into a Gramian Angular Field (GAF) image to extract spatial features and generate granularity-specific embeddings. A multi-granularity self-attention mechanism is used to model both intra- and inter-granularity dependencies. The resulting multi-granularity features are fused and fed into a softmax classifier for final fault prediction. Experiments conducted under four constant-speed conditions and one variable-speed condition demonstrate that P2IFormer achieves over 99.5% accuracy across all scenarios, significantly outperforming existing CNN- and Transformer-based methods.

1. Introduction

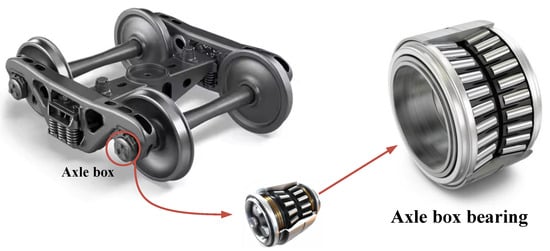

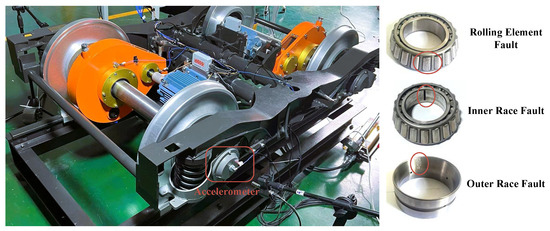

High-speed trains, as a vital component of modern transportation systems, are widely used for intercity passenger and freight transport due to their high speed and large carrying capacity. The axle-box bearing (Figure 1) is a key load-bearing component connecting the wheelset and the bogie and plays a crucial role in ensuring the train’s operational performance and safety. However, due to frequent dynamic loads and complex wheel–rail interactions, axle-box bearings are prone to wear and degradation. If their condition is not diagnosed accurately and promptly, and appropriate maintenance is not carried out, such degradation may lead to severe malfunctions or even major accidents. Therefore, developing effective fault diagnosis methods for axle-box bearings is of great practical importance for ensuring the safe operation of high-speed trains, reducing maintenance costs, and improving overall operational efficiency [1,2].

Figure 1.

The structural location of the axle-box bearing in a high-speed train bogie.

The health condition of high-speed train axle-box bearings is directly related to the safety and reliability of train operations. However, fault diagnosis in practical scenarios faces multiple challenges. On the one hand, it is extremely difficult to obtain sufficient fault samples under real operating conditions, and the available data are often highly imbalanced. On the other hand, the complex and variable operating conditions of trains, such as changes in rotational speed and noise interference, significantly increase the difficulty of diagnosis [3,4]. Traditional diagnostic methods typically rely on signal processing in the time domain, frequency domain, or time-frequency domain, combined with machine learning models such as support vector machines for fault identification [5,6,7]. These approaches are heavily dependent on manual feature extraction, which is not only time-consuming and expertise-intensive but also poorly adaptable to variable operating conditions, thereby limiting the accuracy and robustness of diagnostic models [8].

In recent years, the rapid development of deep learning has driven its widespread application in fault diagnosis. Compared with traditional methods, deep learning models can directly extract high-level features from raw vibration signals, reducing reliance on manual feature engineering and significantly improving diagnostic accuracy [9]. Chen et al. [10] proposed a feature-aligned multi-scale convolutional neural network (MSCNN-FA) that converts vibration signals into time-frequency images using short-time Fourier transform (STFT) and incorporates a feature alignment module with a multi-scale convolution strategy, achieving a diagnostic accuracy of 99.2%. Sun et al. [11] developed a fault diagnosis method that combines multi-scale CNN and long short-term memory (LSTM) networks, where the multi-scale CNN extracts local features from time-frequency images and the LSTM captures long-term temporal dependencies, resulting in an accuracy of approximately 98%. Zhao et al. [12] introduced a multi-scale convolutional neural network–bidirectional LSTM–attention mechanism (MSCNN-BiLSTM-AM) approach, in which the multi-scale CNN extracts spatiotemporal features, the BiLSTM captures bidirectional temporal dependencies, and the attention mechanism enhances the representation of key features, achieving a diagnostic accuracy of 99.5%. To address the issue of data imbalance, Hou et al. [13] and Luo et al. [14] designed improved generative adversarial networks (GANs), which achieved diagnostic accuracies of 97.8% and 98.2%, respectively, on the CWRU dataset, demonstrating strong robustness under imbalanced data conditions.

In fault diagnosis research, a widely used approach is to transform time-series signals into two-dimensional representations to enhance the expressive capacity of temporal features and to apply two-dimensional convolutional neural networks (2D-CNNs) for fault identification [15]. Among such methods, the transformation of time-series data into images using the Gramian Angular Field (GAF) has been extensively adopted [16,17]. For example, Bai et al. [18] proposed a GAF-based SEDenseNet method that converts vibration signals into two-dimensional images via GAF and incorporates a squeeze-and-excitation (SE) module to optimize feature extraction, achieving a diagnostic accuracy of 97.5%. Yu et al. [19] presented a GAF-based bearing fault diagnosis approach that similarly maps vibration signals to images and employs a generative adversarial network (GAN) to synthesize training samples, reaching 96.8% accuracy and demonstrating robustness under class-imbalanced conditions. Tong et al. [20] developed a method using the Gramian Angular Difference Field (GADF) combined with channel attention and SimAM attention mechanisms, obtaining 98.7% accuracy. However, the inherently local receptive field of convolutional neural networks limits their capacity to model global contextual information, which can reduce diagnostic performance when handling long sequences or complex fault patterns.

Transformer architectures, through their intrinsic self-attention mechanisms, enable the direct modeling of long-range dependencies across all positions in sequential data, thereby demonstrating substantial efficacy in fault diagnosis [21,22]. For instance, Hou et al. [23] proposed the Diagnosisformer model, which extracts frequency-domain features using Fast Fourier Transform (FFT) and integrates a multi-feature parallel fusion encoder and a cross-flip decoder, achieving a diagnostic accuracy of 99.85% on the CWRU dataset. However, Transformers have certain limitations in modeling local patterns and extracting multi-granularity temporal features. To address these issues, hybrid CNN–Transformer architectures have been proposed. Chen et al. [24] introduced an efficient cross-space multi-scale CNN–Transformer parallel architecture (ECMCTP) that generates time-frequency images using the continuous wavelet transform (CWT) and employs parallel CNN and Transformer branches to capture local and global features, delivering excellent diagnostic accuracy and noise robustness. Han et al. [25] developed a multi-task MT-ConvFormer model that generates time-frequency representations via short-time Fourier transform (STFT) and integrates CNNs’ local feature extraction with Transformers’ long-range dependency modeling, achieving a diagnostic accuracy close to 98%.

To address the limitations of existing fault diagnosis methods in local feature modeling and multi-scale feature extraction, this paper proposes P2IFormer, a fault diagnosis model for high-speed train axle-box bearings based on multi-granularity patch-to-image embedding. The proposed approach first segments raw vibration signals into equal-length patch sequences at multiple granularities using a set of predefined window sizes, enabling structured multi-granularity modeling of the time series. For each granularity level, the individual patches are then transformed into corresponding Gramian Angular Field (GAF) images, enhancing the visual expression of local temporal features. These images are subsequently processed to extract deep features and mapped into granularity-specific embedding representations. Building on these embeddings, P2IFormer employs a multi-granularity self-attention mechanism to model both intra-granularity and inter-granularity contextual dependencies, achieving deep fusion of features across different granularities. Finally, a linear projection layer followed by a softmax function is used to perform fault classification. The experimental results under various constant-speed and variable-speed conditions demonstrate that the proposed method achieves excellent diagnostic performance, significantly outperforming existing CNN- and Transformer-based models. The main contributions of this paper are summarized as follows:

- (1) A fault diagnosis model for high-speed train axle-box bearings, named P2IFormer, is developed based on a patch-to-image embedding framework. By combining multi-granularity segmentation of time series with image transformation, patch sequences at different granularities are converted into multi-channel Gramian Angular Field (GAF) images, significantly enhancing the modeling of local temporal features.

- (2) A granularity-specific image embedding module is designed to generate feature representations for each granularity. Patch images at each granularity are processed through feature extraction, pooling, and linear projection, and then uniformly encoded as granularity-specific tokens. This provides high-quality representations to support effective multi-granularity interaction modeling.

- (3) The proposed method is evaluated under various constant-speed and variable-speed operating conditions. The results demonstrate that P2IFormer achieves over 99.5% accuracy across all scenarios, significantly outperforming existing CNN- and Transformer-based methods.

2. Related Work

2.1. Transformer-Based Approaches for Bearing Fault Diagnosis

In recent years, the Transformer architecture has gained significant attention in the field of fault diagnosis due to its superior global modeling capability [26]. Compared with traditional convolutional neural networks (CNNs), Transformers leverage self-attention mechanisms to capture dependencies between arbitrary time steps, effectively addressing the limitations of CNNs, which are restricted to local feature extraction and struggle to model long-range temporal dependencies. In addition, Transformers support parallel computation and offer stronger representational capacity. In bearing fault diagnosis, some studies have applied or modified standard Transformer models to improve global feature extraction [27,28], while others have explored hybrid models that combine Transformers with CNNs to exploit their complementary strengths in local and global feature modeling [29,30]. However, most existing Transformer-based methods rely on fixed-length patch segmentation strategies, which hinder the ability to simultaneously capture multi-granularity temporal features and thus limit the modeling of complex fault patterns.

2.2. Image-Based Fault Diagnosis Through Time-Series Transformation

Transforming time-series data into images for fault diagnosis has emerged as an effective and rapidly evolving approach in recent years, with particularly notable success in bearing fault diagnosis. The core idea of this method is to map one-dimensional vibration signals into two-dimensional image representations using specific transformation algorithms, enabling powerful image-based models such as convolutional neural networks (CNNs) and vision Transformers to perform feature extraction and fault classification tasks [31]. Common transformation techniques include time-frequency representation methods such as short-time Fourier transform (STFT), wavelet transform (WT), and continuous wavelet transform (CWT), as well as structural mapping methods like Gramian Angular Field (GAF) and Markov Transition Field (MTF) [32,33,34,35,36]. These image-based techniques not only reveal the local dynamics and global trends within time-series data, but also enhance the ability of visual models to identify complex fault patterns.

2.3. Multi-Scale Feature Extraction for Fault Diagnosis

In recent years, multi-scale feature extraction has received increasing attention in bearing fault diagnosis, aiming to capture critical signal patterns distributed across different temporal and frequency ranges. The core idea behind such methods is to process vibration signals using varying receptive fields or segmentation granularities, thereby enabling joint modeling of both local details and global structures. In convolutional neural networks (CNNs), multi-scale architectures are typically constructed using parallel branches with different convolutional kernel sizes to extract and fuse features at multiple scales [37,38,39]. More recently, attention mechanisms have been incorporated into multi-scale CNN frameworks to enhance the model’s focus on salient features and improve the robustness of feature representations [40,41]. However, most existing multi-scale methods lack explicit modeling of the relationships between different scales, making it difficult to fully capture the semantic dependencies among features of varying scales.

3. Methodology

3.1. Problem Definition

The fault diagnosis of high-speed train axle-box bearings can be formulated as a multivariate time-series classification task. Let $x \in \mathbb{R}^{T \times C}$ denote a segment of vibration signal, where $T$ represents the sequence length (i.e., the number of time steps) and $C$ denotes the number of sensor channels (e.g., vibration, temperature). Each sample is associated with a label $y \in \{1, 2, \dots, M\}$, indicating one of $M$ predefined bearing conditions or fault types (e.g., normal, inner race fault, outer race fault). Given a training set $\mathcal{D} = \{(x_k, y_k)\}_{k=1}^{N}$ composed of $N$ labeled instances, the objective is to learn a classification function $f: \mathbb{R}^{T \times C} \rightarrow \{1, 2, \dots, M\}$ that can accurately predict the fault category for any unseen sample $x$.

3.2. Overview of the P2IFormer Architecture

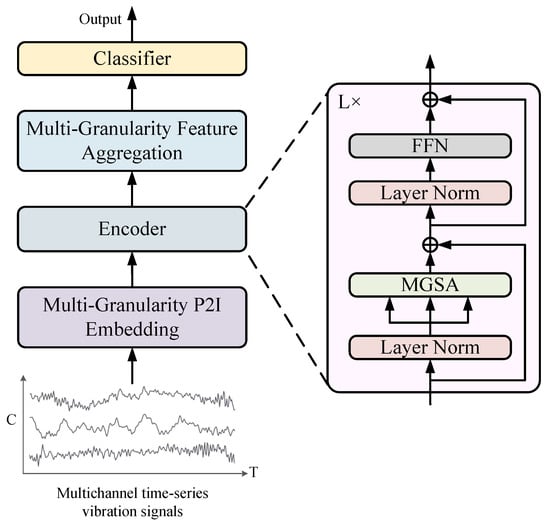

The overall architecture of the proposed P2IFormer is illustrated in Figure 2. The input to the model is a multivariate vibration signal segment collected from axle-box bearings. First, the signal is processed by the Multi-Granularity Patch-to-Image (P2I) Embedding module, which partitions the sequence into multiple granularities using different window sizes and converts each patch into a Gramian Angular Field (GAF) image. Each image is then encoded into a latent token representation via a feature extraction module. Subsequently, the resulting multi-granularity embeddings are fed into a stack of encoder layers, each consisting of layer normalization, the Multi-Granularity Self-Attention (MGSA) module, and a feed-forward network. The MGSA mechanism models both intra-granularity and inter-granularity dependencies by leveraging learnable router tokens. To integrate the semantic information across different granularities, the updated router tokens from all granularities are concatenated in the Multi-Granularity Feature Aggregation module and projected into a unified feature space. Finally, the aggregated representation is passed through a fully connected layer followed by a softmax classifier to determine the bearing fault category.

Figure 2.

The overall architecture of the proposed P2IFormer model. The input consists of multi-channel vibration signal sequences collected from axle-box bearings. These signals are first processed by the Multi-Granularity P2I Embedding module, which splits the time series into patch sequences at multiple granularities and transforms them into image representations. The resulting embeddings are passed through a stack of encoder layers, each consisting of layer normalization, Multi-Granularity Self-Attention (MGSA), and a feed-forward network (FFN) with residual connections. The outputs from different granularities are integrated via the Multi-Granularity Feature Aggregation module. Finally, the fused features are classified into fault categories using a softmax classifier.

3.3. Multi-Granularity Patch-to-Image Embedding

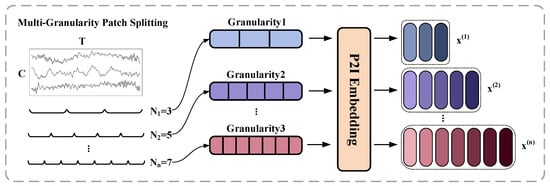

To effectively capture temporal features across different scales, this study introduces a Multi-Granularity Patch-to-Image (P2I) Embedding module, as illustrated in Figure 3. The input multivariate time series is first divided into patch sequences under multiple granularity levels by applying sliding windows of different lengths. Each patch is then passed into the P2I module, which internally performs Gramian Angular Field (GAF) transformation and feature extraction through convolutional and attention-based operations. This process yields a token embedding for each patch. By applying the P2I module to all patches within each granularity level, a complete granularity-specific embedding sequence is constructed. The resulting embeddings across all granularities, denoted as $\{E^{(1)}, E^{(2)}, \dots, E^{(n)}\}$, serve as the foundation for subsequent multi-granularity attention modeling.

Figure 3.

Illustration of the multi-granularity patch-to-image (P2I) embedding process.

3.3.1. Multi-Granularity Patch Splitting

To effectively capture fault-related patterns in axle-box bearing vibration signals across multiple temporal scales, this study introduces a multi-granularity sequence partitioning strategy. Let the original multivariate time series be $x \in \mathbb{R}^{T \times C}$, where $T$ denotes the sequence length and $C$ is the number of sensor channels. A set of $n$ patch lengths $\{p_1, p_2, \dots, p_n\}$ is selected. At granularity level $i$, the sequence $x$ is divided into $N_i$ non-overlapping patches of length $p_i$, denoted as $\{x^{(i)}_1, x^{(i)}_2, \dots, x^{(i)}_{N_i}\}$, where $x^{(i)}_j \in \mathbb{R}^{p_i \times C}$. Short patches capture fine-grained local dynamics (e.g., short-term transients), while long patches capture coarse-grained global trends, so the model observes both simultaneously. This partitioning provides a structured foundation for the subsequent image transformation and deep feature extraction, strengthening the model’s temporal perception across multiple granularities.
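The partitioning can be sketched in NumPy as follows. This is a minimal illustration, assuming $N_i = \lfloor T / p_i \rfloor$ and that the trailing $T \bmod p_i$ samples that cannot fill a whole patch are discarded; for the 256-point samples and patch lengths 85, 51, and 36 of Section 4.2, this gives 3, 5, and 7 patches. The function names are hypothetical.

```python
import numpy as np

def split_patches(x: np.ndarray, p: int) -> np.ndarray:
    """Split a (T, C) series into floor(T / p) non-overlapping patches -> (N_i, p, C).

    Assumption: the trailing T mod p samples that cannot fill a patch are dropped.
    """
    T, C = x.shape
    n_patches = T // p
    # Row-major reshape: patch k holds rows k*p .. k*p + p - 1 of x.
    return x[: n_patches * p].reshape(n_patches, p, C)

def multi_granularity_split(x: np.ndarray, patch_lengths) -> list:
    """One (N_i, p_i, C) patch array per granularity level."""
    return [split_patches(x, p) for p in patch_lengths]

rng = np.random.default_rng(0)
x = rng.standard_normal((256, 3))        # T = 256 points, C = 3 channels
levels = multi_granularity_split(x, [85, 51, 36])
print([lv.shape for lv in levels])       # [(3, 85, 3), (5, 51, 3), (7, 36, 3)]
```

With $T = 256$, the three levels keep 255, 255, and 252 of the 256 samples, respectively.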

3.3.2. Patch-to-Image Embedding

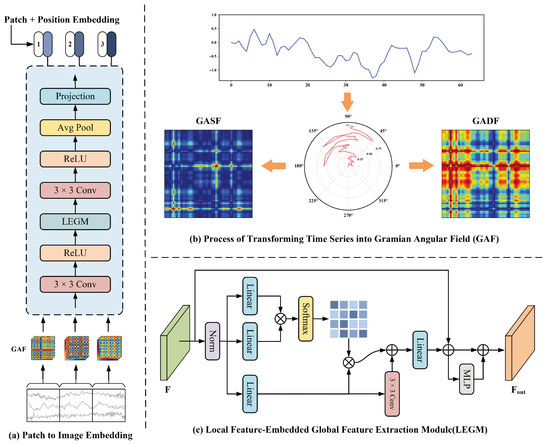

To enhance the ability to learn local fault-sensitive features under multiple temporal scales, each patch $x^{(i)}_j$ at granularity level $i$ is processed through the Patch-to-Image Embedding (P2I) module, as illustrated in Figure 4a. This module consists of three main stages: image transformation, deep feature extraction, and token generation. Ultimately, it produces a $d$-dimensional embedding vector for each patch.

Figure 4.

The architecture of the Patch-to-Image (P2I) Embedding module. Subfigure (a) illustrates the P2I embedding process at a granularity level where the time series is split into 3 non-overlapping patches; each time-series patch is first transformed into a Gramian Angular Field (GAF) image. These images are then processed through a convolutional backbone combined with the Local Feature-Embedded Global Feature Extraction Module (LEGM) to extract deep semantic features. A projection layer finally outputs the embedding token for each patch. Subfigure (b) shows the GAF transformation procedure, encoding temporal dependencies via polar coordinate mapping. Subfigure (c) depicts the structure of the LEGM module, which jointly captures local spatial patterns and long-range dependencies through the integration of convolution and self-attention mechanisms.

First, each patch $x^{(i)}_j \in \mathbb{R}^{p_i \times C}$, where $p_i$ denotes the patch length and $C$ is the number of sensor channels, is converted into a 2D image using the Gramian Angular Field (GAF) technique [42], as shown in Figure 4b. This method transforms the 1D time-domain subsequence into a 2D structured representation that captures temporal dependencies and dynamics. The GAF generation process includes the following steps:

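- Step 1: Normalization. Each channel-wise sequence $s = (s_1, \dots, s_{p_i})$ is rescaled to the range $[-1, 1]$ using min–max scaling: $\tilde{s}_t = \dfrac{(s_t - \max(s)) + (s_t - \min(s))}{\max(s) - \min(s)}$.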

- Step 2: Polar coordinate mapping. Each normalized data point is mapped to a point in the polar coordinate system: $\phi_t = \arccos(\tilde{s}_t)$ and $r_t = t / p_i$ for $t = 1, \dots, p_i$, where $\phi_t$ and $r_t$ represent the angular coordinate and radial coordinate in the polar space, respectively.

- Step 3: GAF image generation. The Gramian Angular Summation Field (GASF) and Gramian Angular Difference Field (GADF) are defined as $\mathrm{GASF}_{ij} = \cos(\phi_i + \phi_j)$ and $\mathrm{GADF}_{ij} = \sin(\phi_i - \phi_j)$, where $i$ and $j$ denote the row and column indices in the resulting GAF image and $\phi_i$ and $\phi_j$ are the polar angles corresponding to the time-series values at time steps $i$ and $j$, respectively.
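The three GAF steps, together with the per-channel stacking described next, can be sketched in NumPy as follows. This is a minimal illustration, assuming normalization to $[-1, 1]$; the radial coordinate is not computed because the GASF and GADF matrices depend only on the angles. The names `rescale`, `gaf`, and `patch_to_gaf` are hypothetical.

```python
import numpy as np

def rescale(s: np.ndarray) -> np.ndarray:
    """Step 1 (assumed target range [-1, 1]): min-max scale a 1-D sequence."""
    lo, hi = float(s.min()), float(s.max())
    if hi == lo:                                      # constant segment: avoid division by zero
        return np.zeros(s.shape, dtype=float)
    return ((s - hi) + (s - lo)) / (hi - lo)

def gaf(s: np.ndarray, kind: str = "difference") -> np.ndarray:
    """Steps 2-3: polar angles, then the (p, p) GASF or GADF matrix."""
    phi = np.arccos(np.clip(rescale(s), -1.0, 1.0))   # clip guards arccos against rounding
    if kind == "summation":
        return np.cos(phi[:, None] + phi[None, :])    # GASF_ij = cos(phi_i + phi_j)
    if kind == "difference":
        return np.sin(phi[:, None] - phi[None, :])    # GADF_ij = sin(phi_i - phi_j)
    raise ValueError(f"unknown GAF kind: {kind}")

def patch_to_gaf(patch: np.ndarray, kind: str = "difference") -> np.ndarray:
    """Encode each channel of a (p, C) patch independently and stack to (C, p, p)."""
    return np.stack([gaf(patch[:, c], kind) for c in range(patch.shape[1])])

rng = np.random.default_rng(0)
patch = rng.standard_normal((85, 3))    # one patch at the p = 85 level, 3 channels
img = patch_to_gaf(patch)               # GADF image of shape (3, 85, 85)
```

Because the image side equals the patch length, the 85-, 51-, and 36-point patches produce 85 × 85, 51 × 51, and 36 × 36 images, as used in Section 4.2.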

Each channel undergoes the above process independently, and the resulting GAF images are stacked along the channel dimension, forming a multi-channel image $G \in \mathbb{R}^{C \times p_i \times p_i}$. To extract high-level semantic features, the image is first processed by a convolution followed by a ReLU activation:

$$F = \mathrm{ReLU}\big(\mathrm{Conv}(G)\big),$$

where F denotes the intermediate feature map.

Next, the Local Feature-Embedded Global Feature Extraction Module (LEGM), shown in Figure 4c, is applied to capture both fine-grained local details and long-range dependencies through a hybrid structure combining convolution and self-attention [43]. Given the feature map $F$, three learnable linear transformations are applied to produce the query $Q$, key $K$, and value $V$:

$$Q = F W_Q,\qquad K = F W_K,\qquad V = F W_V,$$

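where $W_Q$, $W_K$, and $W_V$ are learnable projection matrices. The attention map $A$ and attention-enhanced feature $F_{\mathrm{att}}$ are then computed using scaled dot-product attention:

$$A = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{D}}\right),\qquad F_{\mathrm{att}} = A V,$$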

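where $D$ is the feature dimension. In addition, $V$ is passed through a convolutional layer to emphasize spatial locality, producing $V_{\mathrm{conv}} = \mathrm{Conv}(V)$, which is fused with the attention output:

$$F_{\mathrm{fuse}} = F_{\mathrm{att}} + V_{\mathrm{conv}}.$$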

The fused feature map is then passed through a linear layer and added residually to the original convolution-enhanced map $F$, followed by an MLP layer:

$$F' = F + \mathrm{Linear}(F_{\mathrm{fuse}}),\qquad F_{\mathrm{out}} = \mathrm{MLP}(F').$$
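The LEGM computation can be sketched in NumPy on a channel-last feature map of shape $(H, W, D)$. This is a simplified, single-head illustration rather than the module of [43]: it attends over all spatial positions, and the 3×3 depthwise kernel applied to $V$, the additive fusion, and the ReLU MLP are assumptions. The names `legm_like` and `depthwise_conv3x3` are hypothetical.

```python
import numpy as np

def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)        # shift for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def depthwise_conv3x3(v: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Zero-padded 3x3 depthwise convolution of an (H, W, D) map with a (3, 3, D) kernel."""
    H, W, _ = v.shape
    padded = np.pad(v, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros_like(v)
    for di in range(3):
        for dj in range(3):
            out += padded[di:di + H, dj:dj + W, :] * kernel[di, dj]
    return out

def legm_like(F: np.ndarray, p: dict) -> np.ndarray:
    """Attention branch plus a convolutional branch on V, then linear + residual and an MLP."""
    H, W, D = F.shape
    X = F.reshape(H * W, D)                         # each spatial position becomes a token
    Q, K, V = X @ p["W_Q"], X @ p["W_K"], X @ p["W_V"]
    A = softmax(Q @ K.T / np.sqrt(D))               # attention map, (H*W, H*W)
    F_att = A @ V                                   # global (attention) branch
    V_conv = depthwise_conv3x3(V.reshape(H, W, D), p["K_conv"]).reshape(H * W, D)
    F_fuse = F_att + V_conv                         # fusion by addition (assumed)
    F_res = X + F_fuse @ p["W_lin"]                 # linear layer + residual to F
    F_out = np.maximum(F_res @ p["W_1"], 0.0) @ p["W_2"]  # two-layer MLP, ReLU hidden (assumed)
    return F_out.reshape(H, W, D)

rng = np.random.default_rng(0)
H, W, D = 8, 8, 16                                  # hypothetical feature-map size
params = {k: rng.standard_normal((D, D)) / np.sqrt(D) for k in ("W_Q", "W_K", "W_V", "W_lin")}
params["K_conv"] = rng.standard_normal((3, 3, D)) / 3.0
params["W_1"] = rng.standard_normal((D, 2 * D)) / np.sqrt(D)
params["W_2"] = rng.standard_normal((2 * D, D)) / np.sqrt(2 * D)
F_out = legm_like(rng.standard_normal((H, W, D)), params)
```

The attention branch sees every position, while the depthwise convolution only mixes each position with its 3×3 neighbourhood; adding the two is what lets the block combine global and local context.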

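To obtain the final token representation for the patch, $F_{\mathrm{out}}$ is processed by convolution, ReLU activation, global average pooling, and a linear projection:

$$z^{(i)}_j = W_p\, \mathrm{GAP}\Big(\mathrm{ReLU}\big(\mathrm{Conv}(F_{\mathrm{out}})\big)\Big) + b_p,$$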

where $W_p$ and $b_p$ are learnable parameters and $z^{(i)}_j \in \mathbb{R}^{d}$ is the patch-level token. To enhance the model’s sensitivity to patch ordering and temporal structure, a learnable positional encoding is added. Specifically, each patch position $j$ at granularity level $i$ is associated with a trainable positional vector $e^{(i)}_j \in \mathbb{R}^{d}$, which is added to the patch embedding:

$$\hat{z}^{(i)}_j = z^{(i)}_j + e^{(i)}_j,$$

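where $\hat{z}^{(i)}_j$ denotes the final token embedding for the $j$-th patch at granularity level $i$. Finally, the embedding vectors of all patches at granularity level $i$ form a sequence-level embedding:

$$E^{(i)} = \big[\hat{z}^{(i)}_1;\, \hat{z}^{(i)}_2;\, \dots;\, \hat{z}^{(i)}_{N_i}\big] \in \mathbb{R}^{N_i \times d},$$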

where $N_i$ is the number of patches at granularity level $i$ and $d$ is the embedding dimension. After converting all patches into embeddings at their respective granularities, the original multivariate time series is represented as a set of granularity-specific embeddings $\{E^{(1)}, E^{(2)}, \dots, E^{(n)}\}$.
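The token-generation steps (convolution, ReLU, global average pooling, linear projection, then the positional vector) can be sketched as below. The 1×1 convolution, written as a per-pixel matrix product, all sizes, and the helper names are hypothetical; the positional table is given one spare row for the router position used in Section 3.4.

```python
import numpy as np

def patch_token(F_out, W_conv, b_conv, W_p, b_p):
    """(H, W, D) feature map -> d-dim token: 1x1 conv, ReLU, global average pooling, linear."""
    G = np.maximum(F_out @ W_conv + b_conv, 0.0)   # 1x1 conv as a per-pixel linear map (assumed kernel size)
    pooled = G.mean(axis=(0, 1))                   # global average pooling over H and W -> (D',)
    return W_p @ pooled + b_p                      # patch-level token z_j^(i) in R^d

def granularity_embedding(feature_maps, conv, proj, pos):
    """Stack the N_i patch tokens of one level and add learnable positions -> (N_i, d)."""
    Z = np.stack([patch_token(F, *conv, *proj) for F in feature_maps])
    return Z + pos[: len(feature_maps)]            # row j receives positional vector e_j^(i)

rng = np.random.default_rng(0)
D, D_prime, d, N_i = 16, 32, 64, 3                 # hypothetical sizes; 3 patches at the coarsest level
maps = [rng.standard_normal((8, 8, D)) for _ in range(N_i)]
conv = (rng.standard_normal((D, D_prime)), np.zeros(D_prime))
proj = (rng.standard_normal((d, D_prime)) / np.sqrt(D_prime), np.zeros(d))
pos = rng.standard_normal((N_i + 1, d))            # spare last row for the router position
E_i = granularity_embedding(maps, conv, proj, pos)
print(E_i.shape)                                   # (3, 64)
```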

3.4. Multi-Granularity Self-Attention

To effectively model both intra- and inter-granularity contextual dependencies, a multi-granularity self-attention module is introduced. This module consists of two sequential stages: intra-granularity self-attention and inter-granularity self-attention [44]. First, for each of the $n$ granularity levels, a learnable router vector is initialized to summarize the global semantic representation at that granularity. These router vectors serve not only as semantic anchors within each granularity but also as bridges for communication across different granularities. For the $i$-th granularity level with $N_i$ patches, the router vector $r^{(i)} \in \mathbb{R}^{d}$ is initialized as the sum of a trainable global token embedding $g^{(i)}$ and a learnable positional encoding at position $N_i + 1$, formulated as

$$r^{(i)} = g^{(i)} + e^{(i)}_{N_i + 1}.$$

3.4.1. Intra-Granularity Self-Attention

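At each granularity level $i$, the patch embedding sequence $E^{(i)}$ is concatenated vertically with its corresponding router vector $r^{(i)}$ to form the extended sequence

$$\tilde{E}^{(i)} = E^{(i)} \,\Vert\, r^{(i)} \in \mathbb{R}^{(N_i + 1) \times d},$$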

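where the symbol $\Vert$ denotes concatenation along the row dimension and the router vector is appended as an additional token after the patch embeddings. Next, standard multi-head self-attention is applied:

$$Q^{(i)} = \tilde{E}^{(i)} W_Q^{\mathrm{intra}},\quad K^{(i)} = \tilde{E}^{(i)} W_K^{\mathrm{intra}},\quad V^{(i)} = \tilde{E}^{(i)} W_V^{\mathrm{intra}},\qquad \tilde{E}^{(i)} \leftarrow \mathrm{MHSA}\big(Q^{(i)}, K^{(i)}, V^{(i)}\big).$$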

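Here, $Q^{(i)}$, $K^{(i)}$, and $V^{(i)}$ are the query, key, and value matrices and $W_Q^{\mathrm{intra}}$, $W_K^{\mathrm{intra}}$, and $W_V^{\mathrm{intra}}$ are trainable linear projection weights. This intra-granularity attention focuses on learning temporal dependencies within each individual granularity level.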

3.4.2. Inter-Granularity Self-Attention

After intra-granularity self-attention is completed for all levels, the updated router vectors each contain global semantic information representative of their respective granularity levels. These router vectors encode the contextual dependencies aggregated from the patch embeddings within their own granularities, effectively summarizing granularity-specific features. To enable cross-granularity interaction, all updated router vectors are concatenated to form the cross-granularity router matrix

$$R = \big[r^{(1)};\, r^{(2)};\, \dots;\, r^{(n)}\big] \in \mathbb{R}^{n \times d}.$$

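Next, standard multi-head self-attention is applied over the router matrix $R$ to model inter-granularity semantic dependencies:

$$R \leftarrow \mathrm{MHSA}\big(R W_Q^{\mathrm{inter}},\, R W_K^{\mathrm{inter}},\, R W_V^{\mathrm{inter}}\big),$$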

where $W_Q^{\mathrm{inter}}$, $W_K^{\mathrm{inter}}$, and $W_V^{\mathrm{inter}}$ are learnable projection matrices.
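Section 3.4 can be summarized by the following NumPy sketch of one MGSA layer. It is a simplified illustration, not the authors' implementation: it assumes the intra-granularity projection weights are shared across levels, omits layer normalization, residual connections, and the feed-forward network, and writes the cross-granularity routers back into each level's sequence so that a following layer could use them. All function names are hypothetical.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)              # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def mhsa(X, W_Q, W_K, W_V, W_O, n_heads):
    """Multi-head self-attention over the rows (tokens) of X, shape (L, d)."""
    L, d = X.shape
    dh = d // n_heads
    def heads(M):                                         # (L, d) -> (n_heads, L, dh)
        return M.reshape(L, n_heads, dh).transpose(1, 0, 2)
    Q, K, V = heads(X @ W_Q), heads(X @ W_K), heads(X @ W_V)
    A = softmax(Q @ K.transpose(0, 2, 1) / np.sqrt(dh))   # (n_heads, L, L)
    return (A @ V).transpose(1, 0, 2).reshape(L, d) @ W_O # concatenate heads, output projection

def init_routers(embeddings, globals_, pos_tables):
    """Append r_i = g_i + e_{N_i + 1} after the N_i patch tokens of every level."""
    # 0-based row index N_i corresponds to position N_i + 1.
    return [np.vstack([E, (g + pos[E.shape[0]])[None, :]])
            for E, g, pos in zip(embeddings, globals_, pos_tables)]

def mgsa_layer(extended, w_intra, w_inter, n_heads):
    # Stage 1: intra-granularity attention inside each (N_i + 1, d) sequence.
    updated = [mhsa(X, *w_intra, n_heads) for X in extended]
    # Stage 2: stack the n updated routers into R (n, d) and attend across granularities.
    R = mhsa(np.stack([X[-1] for X in updated]), *w_inter, n_heads)
    # Write the mixed routers back as the last token of each level (assumed).
    return [np.vstack([X[:-1], r[None, :]]) for X, r in zip(updated, R)]

rng = np.random.default_rng(0)
d, n_heads, N = 16, 4, [3, 5, 7]                          # assumed patch counts for p = 85, 51, 36
def rand_w():
    return [rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(4)]
w_intra, w_inter = rand_w(), rand_w()
embeddings = [rng.standard_normal((n, d)) for n in N]
globals_ = [rng.standard_normal(d) for _ in N]
pos_tables = [rng.standard_normal((n + 1, d)) for n in N]
out = mgsa_layer(init_routers(embeddings, globals_, pos_tables), w_intra, w_inter, n_heads)
print([o.shape for o in out])                             # [(4, 16), (6, 16), (8, 16)]
```

In this design only the $n$ router tokens cross granularity boundaries, so the inter-granularity stage costs $O(n^2)$ rather than attending over all $\sum_i N_i$ patch tokens jointly.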

3.5. Multi-Granularity Feature Aggregation

After processing through the encoder with multi-granularity self-attention, each updated router vector $r^{(i)}$ encodes both intra- and inter-granularity semantic information specific to granularity level $i$. To integrate global representations from all $n$ granularities, we concatenate these router vectors along the feature dimension to form a single high-dimensional fused vector:

$$r_{\mathrm{cat}} = r^{(1)} \oplus r^{(2)} \oplus \dots \oplus r^{(n)} \in \mathbb{R}^{nd}.$$

Here, $\oplus$ denotes the concatenation operation along the feature axis. Subsequently, the fused vector is projected to a target dimension $d_h$ via a fully connected layer followed by a GELU activation function:

$$h = \mathrm{GELU}\big(W_f\, r_{\mathrm{cat}} + b_f\big),$$

where $W_f \in \mathbb{R}^{d_h \times nd}$ and $b_f \in \mathbb{R}^{d_h}$, with $d_h$ the target dimension, are the learnable parameters of the projection layer. The output $h$ serves as the final fused representation, integrating semantic features from all granularities. This vector is then used as the input to the classifier for fault type prediction.
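A minimal sketch of the aggregation step, assuming the exact (erf-based) form of GELU rather than its tanh approximation; the sizes and function names are hypothetical.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)                     # elementwise error function

def gelu(x):
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + _erf(x / math.sqrt(2.0)))

def aggregate(routers, W_f, b_f):
    """h = GELU(W_f concat(r_1, ..., r_n) + b_f) for n router vectors of size d."""
    r_cat = np.concatenate(routers)               # (n * d,), concatenation along features
    return gelu(W_f @ r_cat + b_f)                # (d_h,)

rng = np.random.default_rng(0)
n, d, d_h = 3, 16, 32                             # hypothetical sizes
routers = [rng.standard_normal(d) for _ in range(n)]
W_f = rng.standard_normal((d_h, n * d)) / np.sqrt(n * d)
h = aggregate(routers, W_f, np.zeros(d_h))
```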

3.6. Classifier

After multi-granularity feature aggregation, a unified representation vector $h$ is obtained, encapsulating both global and cross-granularity semantic information. In the classification stage, this vector is fed into a simple yet effective classifier to predict the fault category. Specifically, for a classification task with $M$ fault types, $h$ is passed through a fully connected layer followed by a softmax activation to produce the class probability distribution

$$\hat{y} = \mathrm{softmax}\big(W_c\, h + b_c\big),$$

where $W_c$ and $b_c$ are learnable parameters. The output $\hat{y} \in \mathbb{R}^{M}$ gives the predicted probability of each fault class. During training, the model is optimized using the cross-entropy loss, which measures the discrepancy between the predicted probability distribution $\hat{y}$ and the ground-truth label $y$:

$$\mathcal{L}_{\mathrm{CE}} = -\sum_{m=1}^{M} \mathbb{1}[y = m]\, \log \hat{y}_m.$$
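The classifier and its training loss reduce to a few lines. This sketch assumes 0-based class labels and averages the per-sample loss over a batch, a common convention that is assumed here rather than stated in the text; the function names are hypothetical.

```python
import numpy as np

def predict_proba(h, W_c, b_c):
    """y_hat = softmax(W_c h + b_c), a length-M probability vector."""
    logits = W_c @ h + b_c
    e = np.exp(logits - logits.max())     # subtracting the max leaves the softmax unchanged
    return e / e.sum()

def cross_entropy(y_hat, y):
    """Single-sample cross-entropy; labels are 0-based here, i.e. y in {0, ..., M-1}."""
    return float(-np.log(y_hat[y]))

def batch_loss(H, Y, W_c, b_c):
    """Mean cross-entropy over a batch H of shape (B, d_h) with integer labels Y."""
    return float(np.mean([cross_entropy(predict_proba(h, W_c, b_c), y) for h, y in zip(H, Y)]))

M, d_h = 5, 32                            # five bearing conditions; d_h is hypothetical
p = predict_proba(np.ones(d_h), np.zeros((M, d_h)), np.zeros(M))
```

With all-zero weights the classifier is uniform, so every class receives probability 1/5 and the loss equals $\log 5 \approx 1.609$, the usual starting point for a five-class problem.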

4. Experiments and Results

4.1. Dataset Description

The dataset used in this study was collected from an experimental platform simulating the axle-box bearings of high-speed trains, specifically using the HRB352213 bearing model. It includes one healthy state and four typical fault types: inner race fault, outer race fault, rolling element fault, and combination fault. Vibration signals were acquired using a triaxial accelerometer mounted on one side of the bearing, capturing three-channel data that comprehensively reflect the dynamic characteristics of the bearing. A photograph of the experimental platform is shown in Figure 5. During the experiments, the bearings were operated under four constant-speed conditions (20 Hz, 40 Hz, 60 Hz, and 80 Hz) and one variable-speed condition. In the variable-speed scenario, the rotational speed follows a triangular waveform: it increases linearly from 0 Hz to 40 Hz and then decreases linearly back to 0 Hz, with the entire cycle lasting approximately 2 s and peaking at 1 s. This speed variation simulates the actual acceleration and deceleration processes of high-speed trains, enabling the evaluation of the model’s robustness under dynamic operating conditions. The vibration signals were sampled at 25.6 kHz, and each recording lasts 10 s, providing sufficient resolution for capturing fault-related features.

Figure 5.

Axle-box bearing experimental platform.

4.2. Experimental Setup

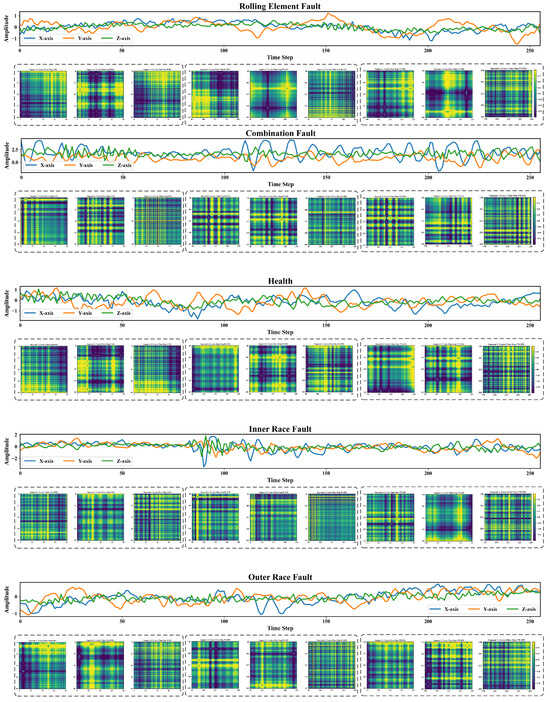

In this study, the collected vibration signals were segmented into training samples using a sliding window technique with a window size of 256 and a step size of 64. As a result, 4093 samples were generated for each fault category, yielding a total of 20,465 samples for model training, validation, and testing. Each vibration signal sample was segmented into three granularity levels using predefined patch lengths of 85, 51, and 36, respectively. At each level, the sample was divided into non-overlapping patches of the corresponding length. These patches were subsequently transformed into Gramian Angular Field (GAF) images, with the resulting image size equal to the respective patch length (i.e., 85 × 85, 51 × 51, and 36 × 36 for each granularity level). Figure 6 illustrates example vibration signal samples for each fault category at the 80 Hz rotational speed, along with their corresponding GADF images at the patch length of 85. The entire dataset was divided into training, validation, and test sets in a 60%:20%:20% ratio.
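The window counts and split sizes can be checked with a short sketch. Assuming each window must lie entirely inside a record, a record of $L$ points yields $\lfloor (L - 256)/64 \rfloor + 1$ windows. The record length $2^{18} = 262{,}144$ points used below (about 10.24 s at 25.6 kHz) is our assumption, chosen because it reproduces the reported 4093 windows per class; the helper names are hypothetical.

```python
import numpy as np

def n_windows(length, window=256, step=64):
    """Number of complete windows of `window` points taken every `step` points."""
    return 0 if length < window else (length - window) // step + 1

def sliding_windows(record, window=256, step=64):
    """Cut a (L, C) record into an array of shape (n_windows, window, C)."""
    n = n_windows(len(record), window, step)
    if n == 0:
        return np.empty((0, window) + record.shape[1:])
    return np.stack([record[k * step : k * step + window] for k in range(n)])

def split_sizes(n_total, percents=(60, 20, 20)):
    """Integer train/val/test sizes for a percentage split; the remainder goes to test."""
    n_train = n_total * percents[0] // 100
    n_val = n_total * percents[1] // 100
    return n_train, n_val, n_total - n_train - n_val

print(n_windows(2 ** 18))        # 4093 windows per class (assumed 262,144-point record)
print(split_sizes(5 * 4093))     # (12279, 4093, 4093) for the 20,465 samples
```

Integer arithmetic is used for the split so that the three subsets always sum exactly to the total.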

Figure 6.

Visualization of vibration signal segments for each fault category under the 80 Hz rotational speed condition and their corresponding GADF images (patch length = 85).

The overall performance and stability of the proposed model are influenced by various architectural and hyperparameter settings, such as the embedding dimension and the number of attention heads. The detailed network configuration and hyperparameter values adopted in this study are summarized in Table 1.

Table 1.

Hyperparameter settings of the proposed P2IFormer model.

To comprehensively evaluate the robustness and generalization capability of the proposed P2IFormer model under varying operating conditions, extensive experiments were conducted across four constant-speed scenarios (20 Hz, 40 Hz, 60 Hz, and 80 Hz), as well as one variable-speed condition. Several representative baseline models were selected for comparison, including CNN-LSTM [45], WDCNN [46], DenseNet [36], ResNet [47], Vision Transformer (ViT) [48], and ECMCTP [24]. All experiments were implemented using the PyTorch 2.0 deep learning framework in a Python 3.8 environment and executed on a workstation equipped with an NVIDIA RTX 3090 GPU (24 GB memory).

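To quantitatively assess the performance of the models, average accuracy and F1-score were employed as evaluation metrics. Their definitions are as follows:

$$\mathrm{Accuracy} = \frac{1}{M}\sum_{i=1}^{M} \frac{TP_i + TN_i}{TP_i + TN_i + FP_i + FN_i},\qquad \mathrm{F1} = \frac{1}{M}\sum_{i=1}^{M} \frac{2\, P_i R_i}{P_i + R_i},$$

with per-class precision $P_i = TP_i / (TP_i + FP_i)$ and recall $R_i = TP_i / (TP_i + FN_i)$.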

Here, $TP_i$, $TN_i$, $FP_i$, and $FN_i$ denote the true positives, true negatives, false positives, and false negatives for class $i$, respectively, and $M$ is the number of classes. Accuracy summarizes overall correctness, while the macro-averaged F1-score weights every class equally and therefore remains informative under class-imbalanced multi-class conditions.
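Both metrics can be computed from one-vs-rest counts as sketched below, assuming accuracy is the class average of one-vs-rest accuracies (the definitions list a per-class $TN_i$) and F1 is macro-averaged. Function names are hypothetical, and mapping zero denominators to 0 is an assumed convention.

```python
import numpy as np

def per_class_counts(y_true, y_pred, M):
    """One-vs-rest TP, TN, FP, FN for each of the M classes."""
    cm = np.zeros((M, M), dtype=int)              # rows: true class, columns: predicted class
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp                      # predicted as i, truly another class
    fn = cm.sum(axis=1) - tp                      # truly i, predicted as another class
    tn = cm.sum() - tp - fp - fn
    return tp, tn, fp, fn

def average_accuracy_and_macro_f1(y_true, y_pred, M):
    tp, tn, fp, fn = (c.astype(float) for c in per_class_counts(y_true, y_pred, M))
    acc = np.mean((tp + tn) / (tp + tn + fp + fn))
    prec = np.divide(tp, tp + fp, out=np.zeros(M), where=(tp + fp) > 0)
    rec = np.divide(tp, tp + fn, out=np.zeros(M), where=(tp + fn) > 0)
    f1 = np.divide(2 * prec * rec, prec + rec, out=np.zeros(M), where=(prec + rec) > 0)
    return float(acc), float(f1.mean())

acc, f1 = average_accuracy_and_macro_f1([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], M=3)
```

For these toy labels, the class-averaged accuracy is 14/18 ≈ 0.778, while the plain fraction of correct predictions is 4/6 ≈ 0.667, so the choice of accuracy definition matters when numbers are compared across studies.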

4.3. Performance Evaluation Under Constant-Speed Conditions

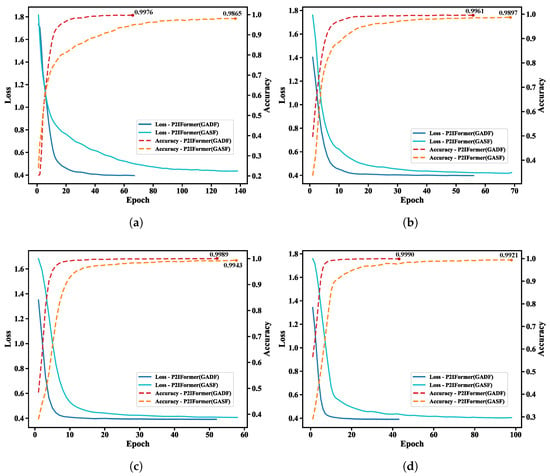

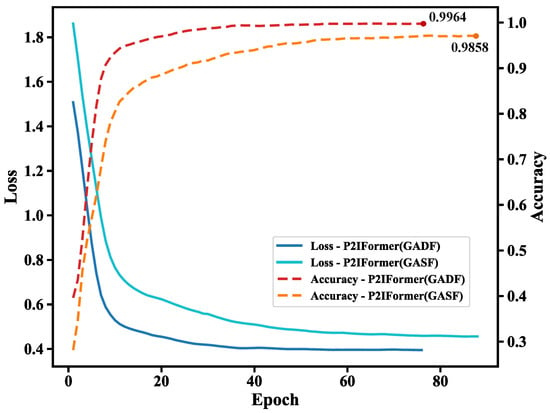

To evaluate the fault recognition capability of the proposed P2IFormer model under varying operating conditions, the model was independently trained and validated under four constant-speed scenarios: 20 Hz, 40 Hz, 60 Hz, and 80 Hz. During the patch-to-GAF image transformation stage, two Gramian Angular Field encoding methods—Gramian Angular Summation Field (GASF) and Gramian Angular Difference Field (GADF)—were employed to examine their effects on model performance. The corresponding loss and accuracy curves on the validation set are illustrated in Figure 7.

Figure 7.

Validation loss and accuracy curves of the P2IFormer model under four constant-speed conditions using GASF and GADF encodings: (a) 20 Hz, (b) 40 Hz, (c) 60 Hz, and (d) 80 Hz.

As shown in Figure 7, P2IFormer with GADF encoding consistently exhibits faster convergence and higher final accuracy across all speed conditions. For instance, at 20 Hz (Figure 7a) and 40 Hz (Figure 7b), the GADF-based model converges within the first 20 epochs and reaches final accuracies of 99.76% and 99.61%, respectively, whereas the GASF-based model lags slightly behind, with accuracies of 98.65% and 98.97%. Similarly, under the 60 Hz (Figure 7c) and 80 Hz (Figure 7d) conditions, the GADF-based model maintains superior performance, exceeding 99.8% accuracy and demonstrating excellent robustness and stability.

These results suggest that GADF encoding better preserves local temporal variations and yields more discriminative representations of fault patterns, enabling P2IFormer to achieve consistently high diagnostic accuracy across rotational speeds. The advantage of GADF over GASF can be traced to their mathematical formulations and their capacity to capture temporal dynamics. Letting $\phi_i$ denote the polar-coordinate angle of the $i$-th rescaled sample, the GADF matrix is built from angular differences, $\mathrm{GADF}_{ij} = \sin(\phi_i - \phi_j)$, which gives an anti-symmetric structure ($\mathrm{GADF}_{ij} = -\mathrm{GADF}_{ji}$) that encodes directional information and thus reflects upward or downward trends within the sequence. Moreover, its diagonal elements are always zero, which removes redundant self-correlations and yields a more compact representation. In contrast, GASF relies on angular summation, $\mathrm{GASF}_{ij} = \cos(\phi_i + \phi_j)$, producing a symmetric matrix ($\mathrm{GASF}_{ij} = \mathrm{GASF}_{ji}$) that lacks directional sensitivity. Its non-zero diagonal elements, $\cos(2\phi_i)$, retain self-correlations that may introduce irrelevant redundancy in classification tasks. These properties are consistent with the faster convergence and higher classification accuracy observed with GADF during training. GADF was therefore selected as the default encoding for the subsequent comparative experiments between P2IFormer and baseline models such as DenseNet, ResNet, and ViT. The final evaluation results are summarized in Table 2.
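The two encodings can be made concrete with a short NumPy sketch that follows the GAF formulation of Wang and Oates [42]. It assumes min-max rescaling of each patch to $[-1, 1]$ before computing $\phi_i = \arccos(\tilde{x}_i)$; the rescaling range used for P2IFormer is not stated in this section, and `gramian_angular_fields` is a hypothetical helper rather than the authors' code.

```python
import numpy as np


def gramian_angular_fields(x):
    """Return (GASF, GADF) images for a 1-D sequence x."""
    x = np.asarray(x, dtype=float)
    lo, hi = x.min(), x.max()
    # Rescale to [-1, 1] so arccos is defined (assumed; [0, 1] is another common choice).
    x_scaled = np.zeros_like(x) if hi == lo else 2.0 * (x - lo) / (hi - lo) - 1.0
    phi = np.arccos(np.clip(x_scaled, -1.0, 1.0))  # polar angles in [0, pi]
    gasf = np.cos(phi[:, None] + phi[None, :])      # cos(phi_i + phi_j): symmetric
    gadf = np.sin(phi[:, None] - phi[None, :])      # sin(phi_i - phi_j): anti-symmetric, zero diagonal
    return gasf, gadf


# Example: encode one hypothetical 32-sample patch into two 32 x 32 images.
patch = np.sin(np.linspace(0.0, 2.0 * np.pi, 32))
gasf, gadf = gramian_angular_fields(patch)
print(gasf.shape, gadf.shape)  # (32, 32) (32, 32)
```

Comparing `gadf` with `-gadf.T` and its diagonal with zero confirms the anti-symmetry and zero diagonal described above, while `np.diag(gasf)` equals $\cos(2\phi_i)$ and is generally non-zero.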

Table 2.

Comparison of fault diagnosis performance across different models under four constant-speed conditions. Accuracy and F1-score are expressed as percentages (%).

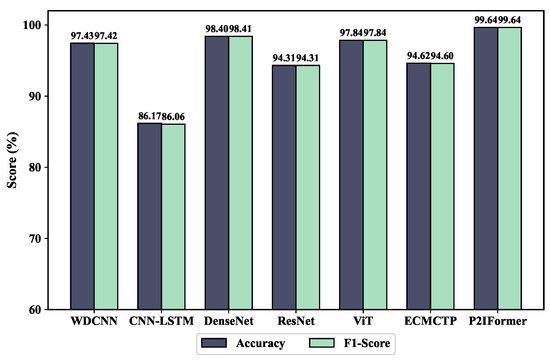

As shown in Table 2, the proposed P2IFormer achieves the highest accuracy and F1-score under the 20 Hz, 40 Hz, and 60 Hz speed conditions, demonstrating superior fault classification performance. At the 80 Hz high-speed condition, the DenseNet model slightly outperforms P2IFormer, yielding the best results. Overall, the performance advantage of P2IFormer becomes more evident as the rotational speed decreases, indicating stronger robustness and generalization capability under low-speed scenarios.

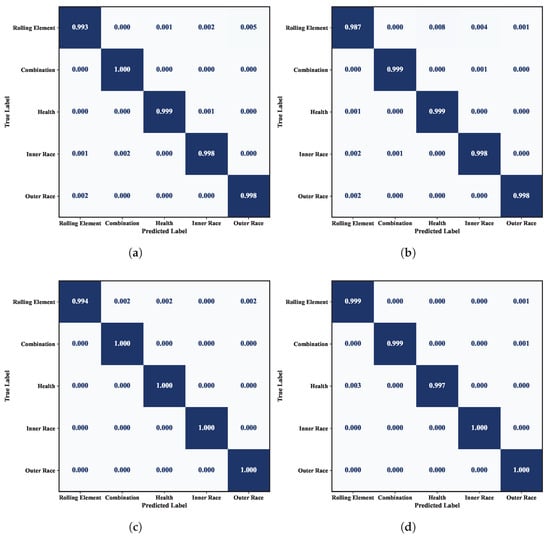

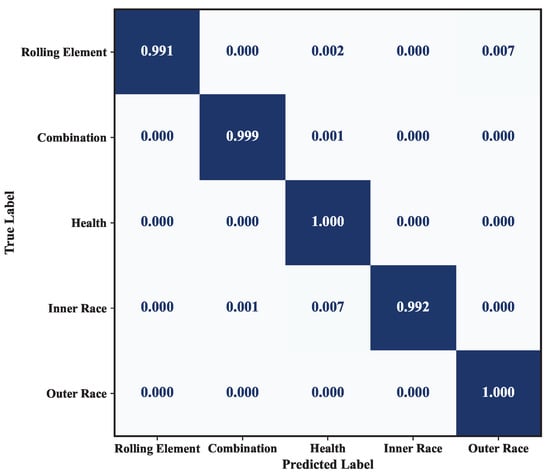

In terms of model complexity, P2IFormer contains 17.14 million parameters, considerably more than lightweight models such as WDCNN (0.029 M), CNN-LSTM (0.093 M), and ECMCTP (0.781 M), but far fewer than large-scale models such as ResNet (42.51 M) and ViT (85.80 M). Although its training time per epoch (43 s) is moderately higher than that of the lightweight models, P2IFormer maintains a reasonable computational cost, making it feasible to deploy in environments with sufficient computational resources. The confusion matrices under different speed conditions, shown in Figure 8, further validate the stability and effectiveness of P2IFormer in multi-class fault diagnosis tasks.

Figure 8.

Confusion matrices of the P2IFormer model under constant-speed conditions: (a) 20 Hz, (b) 40 Hz, (c) 60 Hz, and (d) 80 Hz.

4.4. Performance Evaluation Under the Variable-Speed Condition

Similar to the experiments conducted under constant-speed conditions, the performance of the proposed model was further evaluated under a variable-speed scenario. As shown in Figure 9, the validation loss and accuracy curves demonstrate the model’s effectiveness in handling non-stationary signals caused by speed variations. Compared to constant-speed settings, variable-speed conditions better reflect real-world operating environments, posing greater challenges to the model’s robustness and generalization capability. The results show that the model maintains strong convergence and diagnostic performance under these complex conditions. Notably, when using the GADF encoding, the model achieves faster convergence and higher accuracy, reaching 99.64% on the validation set, compared to 98.58% with GASF encoding.

Figure 9.

Validation loss and accuracy curves of P2IFormer using GASF and GADF encodings under the variable-speed condition.

The fault diagnosis performance of different models under the variable-speed condition is compared in Figure 10. The CNN-LSTM model performs worst, achieving only 86.17% accuracy and an F1-score of 86.06%, which indicates limited robustness to the non-stationary characteristics of vibration signals caused by varying speed. In contrast, the proposed P2IFormer achieves the best performance, with both accuracy and F1-score reaching 99.64%. This reflects its stronger capability in modeling temporal dependencies and integrating multi-granularity features, making it more adaptive to complex operating conditions. ResNet, ViT, and DenseNet also achieve relatively high accuracy and F1-scores; among them, DenseNet reaches 98.40% accuracy, slightly outperforming the other two, though still trailing P2IFormer. WDCNN performs moderately well but falls short of ResNet and ViT, particularly in handling non-stationary input sequences. The confusion matrix on the test set is shown in Figure 11.

Figure 10.

Comparison of accuracy and F1-score for different models under the variable-speed condition.

Figure 11.

Confusion matrix of the proposed P2IFormer under the variable-speed condition.

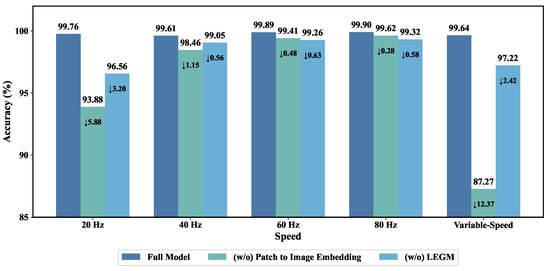

4.5. Ablation Study

To evaluate the contribution of each core component in the proposed P2IFormer model, a series of ablation experiments were conducted. The corresponding results are illustrated in Figure 12 and Table 3.

Figure 12.

Ablation study results on the effectiveness of the patch-to-image embedding and LEGM.

Table 3.

Performance of single-granularity variants and accuracy drop compared to the full multi-granularity model.

To validate the effectiveness of the Patch-to-Image Embedding module, we replaced it with a conventional patch embedding in which each patch is projected directly into a high-dimensional vector by a multilayer perceptron (MLP). As shown in Figure 12, this substitution causes a substantial drop in accuracy under all speed conditions, with reductions of 5.88% and 12.37% in the 20 Hz and variable-speed scenarios, respectively. This indicates that image-based embedding is better at capturing spatiotemporal structure from raw sequences. We also examined the impact of the Local Feature-Embedded Global Feature Extraction Module (LEGM) by removing it while retaining the patch-to-image embedding framework and using standard convolutional layers for feature extraction instead. Performance declined in all cases, indicating that LEGM contributes meaningfully by integrating local and global information.

To evaluate the effectiveness of the multi-granularity strategy, we designed three single-granularity variants with the number of patches per sample set to 3, 5, and 7, respectively. These models retain the core architecture but use standard self-attention for feature learning. As shown in Table 3, all single-granularity models underperform the full model, especially under the variable-speed and low-speed (20 Hz) settings. This demonstrates that the multi-granularity approach enhances the model’s ability to capture diverse temporal patterns and improves robustness under non-stationary conditions.
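A multi-granularity input is the same signal split at several patch counts. The NumPy sketch below shows equal-length, non-overlapping patching at the three granularities used here (3, 5, and 7 patches per sample). The 1024-sample segment length and the choice to drop trailing samples that do not fill a whole patch are assumptions for illustration, and `multi_granularity_patches` is a hypothetical name.

```python
import numpy as np


def multi_granularity_patches(signal, patch_counts=(3, 5, 7)):
    """Split a 1-D signal into equal-length, non-overlapping patches at several granularities."""
    signal = np.asarray(signal, dtype=float)
    patches = {}
    for n in patch_counts:
        patch_len = len(signal) // n   # window size for this granularity
        usable = patch_len * n         # drop trailing samples that do not fill a patch (assumption)
        patches[n] = signal[:usable].reshape(n, patch_len)
    return patches


# Example: a hypothetical 1024-sample vibration segment.
segment = np.arange(1024, dtype=float)
for n, p in multi_granularity_patches(segment).items():
    print(n, p.shape)  # 3 (3, 341) / 5 (5, 204) / 7 (7, 146)
```

In the full model, each row of every granularity would then be encoded as a GAF image, whereas a single-granularity variant keeps only one of the three entries.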

4.6. Robustness Evaluation Under Noisy Conditions

To further validate the robustness and practical applicability of the proposed model in real-world industrial environments, we compared the fault diagnosis performance of P2IFormer and the baseline models under different noise levels. Specifically, Gaussian white noise of varying intensity was added to the original vibration signals to simulate five signal-to-noise ratio (SNR) conditions: −6 dB, −3 dB, 0 dB, 3 dB, and 6 dB, replicating the typical background interference encountered in real applications. The SNR is defined as

$$\mathrm{SNR} = 10\log_{10}\left(\frac{P_{\mathrm{signal}}}{P_{\mathrm{noise}}}\right),$$

where $P_{\mathrm{signal}}$ and $P_{\mathrm{noise}}$ represent the power of the signal and noise, respectively.
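The noise-injection step can be sketched by inverting the SNR definition, so that the target noise power is $P_{\mathrm{noise}} = P_{\mathrm{signal}} / 10^{\mathrm{SNR}/10}$. The NumPy snippet below assumes the noise power is set per signal segment from its mean-square value; the test tone, sampling rate, and function name are hypothetical, and the authors' exact procedure may differ.

```python
import numpy as np


def add_white_gaussian_noise(signal, snr_db, rng=None):
    """Return signal plus zero-mean Gaussian noise scaled to a target SNR in dB."""
    rng = np.random.default_rng() if rng is None else rng
    signal = np.asarray(signal, dtype=float)
    p_signal = np.mean(signal ** 2)                 # average signal power
    p_noise = p_signal / (10.0 ** (snr_db / 10.0))  # invert SNR = 10 * log10(P_signal / P_noise)
    noise = rng.normal(0.0, np.sqrt(p_noise), size=signal.shape)
    return signal + noise


# Example: corrupt a hypothetical segment at each SNR level used in the study.
rng = np.random.default_rng(0)
clean = np.sin(2 * np.pi * 50 * np.arange(20_000) / 10_000)  # assumed 50 Hz tone sampled at 10 kHz
noisy = {snr: add_white_gaussian_noise(clean, snr, rng) for snr in (-6, -3, 0, 3, 6)}
```

At −6 dB, for example, the added noise carries about four times the power of the signal ($10^{0.6} \approx 3.98$).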

Under each SNR condition, the classification accuracy of all models was evaluated in five speed scenarios: 20 Hz, 40 Hz, 60 Hz, 80 Hz, and variable speed. As shown in Table 4, P2IFormer achieved the best accuracy in most speed and noise settings, demonstrating strong robustness to noise. Under the low-speed (20 Hz) and variable-speed conditions in particular, it outperformed all other models by a clear margin, reaching 90.26% and 90.23% accuracy at −6 dB, respectively. Under the 80 Hz condition, however, ECMCTP achieved the highest accuracy at all noise levels. The DenseNet-based model also showed relatively stable performance across conditions, ranking second only to P2IFormer in overall average accuracy.

Table 4.

Classification accuracy (%) under different SNR levels grouped by noise level.

4.7. Discussion

The experimental results indicate that P2IFormer outperforms all baseline models in accuracy and F1-score under the 20 Hz, 40 Hz, and 60 Hz constant-speed conditions, with the 80 Hz condition as the only exception, where the DenseNet-based model with GADF encoding performed slightly better. The advantage of P2IFormer is most pronounced under the low-speed (20 Hz) and variable-speed scenarios. We attribute this mainly to two aspects of the architecture. First, the multi-granularity patching strategy, combined with GAF-based time-series-to-image conversion, allows the model to capture both local dynamics and global trends at multiple temporal granularities, enriching the diversity and expressiveness of the feature representations. Second, the multi-granularity self-attention mechanism models both intra- and inter-granularity contextual dependencies, further improving the integration of semantic information.
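The intra- and inter-granularity modeling described above can be illustrated with a heavily simplified NumPy sketch. It is not the P2IFormer implementation: it uses single-head attention with identity query/key/value projections, summarizes each granularity by the mean of its tokens, and fuses by averaging, loosely following the router-token idea of Medformer [44]. Learned projections, multiple heads, normalization, and feed-forward layers are omitted, and all names are hypothetical.

```python
from typing import Dict

import numpy as np


def attention(x: np.ndarray) -> np.ndarray:
    """Single-head scaled dot-product self-attention with identity Q/K/V projections."""
    scores = x @ x.T / np.sqrt(x.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)    # numerical stability before exp
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # row-wise softmax
    return weights @ x


def multi_granularity_attention(tokens: Dict[int, np.ndarray]) -> np.ndarray:
    """tokens maps granularity -> (num_patches, d) embeddings; returns a fused (d,) feature."""
    intra = {g: attention(t) for g, t in tokens.items()}         # intra-granularity dependencies
    keys = sorted(intra)
    summaries = np.stack([intra[g].mean(axis=0) for g in keys])  # one summary token per granularity
    summaries = attention(summaries)                             # inter-granularity dependencies
    fused = [intra[g] + summaries[k] for k, g in enumerate(keys)]  # broadcast context back
    return np.mean([f.mean(axis=0) for f in fused], axis=0)     # mean fusion before a classifier


# Example: hypothetical 64-dim token embeddings for 3, 5, and 7 patches.
rng = np.random.default_rng(0)
tokens = {n: rng.normal(size=(n, 64)) for n in (3, 5, 7)}
feature = multi_granularity_attention(tokens)  # shape (64,), would feed a softmax classifier
```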

The ablation study confirms that the Patch-to-Image Embedding module, the Local Feature-Embedded Global Feature Extraction Module (LEGM), and the multi-granularity strategy each contribute positively to the final performance, supporting the rationale of the proposed architectural design. Furthermore, in the robustness evaluation under noise levels from −6 dB to 6 dB SNR, P2IFormer maintained high diagnostic accuracy across all rotational speeds. Its performance remained stable even under low-SNR and variable-speed conditions, with accuracy above 90% at −6 dB in both the 20 Hz and variable-speed scenarios, highlighting its noise resistance and adaptability to complex environments.

In terms of model complexity and computational cost, P2IFormer has 17.14 million parameters, substantially fewer than ViT (85.80 M) and ResNet (42.51 M) but far more than lightweight models such as WDCNN (0.029 M), CNN-LSTM (0.093 M), and ECMCTP (0.781 M). It also requires a longer per-epoch training time (43 s) than these lightweight baselines, indicating a greater demand for computational resources.

5. Conclusions

This paper proposes P2IFormer, a deep learning model designed to address the limitations of existing fault diagnosis methods for high-speed train axle-box bearings in multi-scale modeling and temporal feature extraction. The model first segments the raw vibration signals using multiple window lengths, generating patch sequences at various granularities to enable structured modeling across different temporal scales. Each patch is then transformed into a Gramian Angular Field (GAF) image, enhancing the representation of local temporal features. A Local Feature-Embedded Global Feature Extraction Module (LEGM) subsequently extracts deep semantic features and generates token embeddings at each granularity. A multi-granularity self-attention mechanism then captures both intra- and inter-granularity semantic dependencies, integrating fault information across granularities and improving the model’s ability to distinguish complex fault patterns. Experimental results show that P2IFormer achieves fault diagnosis accuracy exceeding 99.5% under four constant-speed conditions and one variable-speed condition, generally outperforming mainstream Transformer- and CNN-based models. These results indicate strong robustness and generalization across diverse operating scenarios. Ablation and noise robustness experiments further confirm the effectiveness of the model’s key components and its resilience to noise interference. Nevertheless, P2IFormer has a larger parameter count and longer training time than lightweight models such as WDCNN and ECMCTP, indicating higher computational requirements. Future research will focus on lightweight model design to reduce computational resource requirements and improve deployment efficiency, as well as on improved strategies for scenarios with limited fault samples and imbalanced datasets, aiming to enhance the model’s adaptability and robustness in real-world industrial applications.

Author Contributions

Conceptualization, W.M.; methodology, W.M.; validation, Z.W.; formal analysis, X.F.; investigation, L.C.; writing—original draft preparation, C.Z.; writing—review and editing, C.Z.; visualization, L.C.; supervision, Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors upon request.

Acknowledgments

During the preparation of this manuscript, the authors used ChatGPT (OpenAI, GPT-4) to improve the clarity and fluency of English expressions. All outputs were carefully reviewed and edited by the authors, who take full responsibility for the final content of the paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Xie, S.; Tan, H.; Yang, C.; Yan, H. A review of fault diagnosis methods for key systems of the high-speed train. Appl. Sci. 2023, 13, 4790.

- Ma, W.; Wang, J.; Zhang, C.; Jia, Q.; Zhu, L.; Ji, W.; Wang, Z. Application of Variational Graph Autoencoder in Traction Control of Energy-Saving Driving for High-Speed Train. Appl. Sci. 2024, 14, 2037.

- Wu, J.; Li, Y.; Jia, L.; An, G.; Li, Y.F.; Antoni, J.; Xin, G. Semi-supervised fault diagnosis of wheelset bearings in high-speed trains using autocorrelation and improved flow Gaussian mixture model. Eng. Appl. Artif. Intell. 2024, 132, 107861.

- Zhao, L.; Yang, S.; Liu, Y. Weak fault feature extraction of axle box bearing based on pre-identification and singular value decomposition. Machines 2022, 10, 1213.

- Jin, Z.; He, D.; Wei, Z. Intelligent fault diagnosis of train axle box bearing based on parameter optimization VMD and improved DBN. Eng. Appl. Artif. Intell. 2022, 110, 104713.

- Hu, W.; Xin, G.; Wu, J.; An, G.; Li, Y.; Feng, K.; Antoni, J. Vibration-based bearing fault diagnosis of high-speed trains: A literature review. High-Speed Railw. 2023, 1, 219–223.

- Yu, M.; Zhang, Y.; Yang, C. Rolling bearing faults identification based on multiscale singular value. Adv. Eng. Inform. 2023, 57, 102040.

- Yang, Z.; Wu, B.; Shao, J.; Lu, X.; Zhang, L.; Xu, Y.; Chen, G. Fault detection of high-speed train axle bearings based on a hybridized physical and data-driven temperature model. Mech. Syst. Signal Process. 2024, 208, 111037.

- Shaalan, A.A.; Mefteh, W.; Frihida, A.M. Review on deep learning classifiers for faults diagnosis of rotating industrial machinery. Serv. Oriented Comput. Appl. 2024, 18, 361–379.

- Chen, J.; Huang, R.; Zhao, K.; Wang, W.; Liu, L.; Li, W. Multiscale convolutional neural network with feature alignment for bearing fault diagnosis. IEEE Trans. Instrum. Meas. 2021, 70, 1–10.

- Sun, H.; Fan, Y. A new bearing fault diagnosis method based on multi-scale CNN and LSTM. In Proceedings of the International Conference on Mechatronics and Intelligent Control (ICMIC 2023), Wuhan, China, 21–23 July 2023; SPIE: Bellingham, WA, USA, 2023; Volume 12793, pp. 440–461.

- Dengfeng, Z.; Chaoyang, T.; Zhijun, F.; Yudong, Z.; Junjian, H.; Wenbin, H. Multi scale convolutional neural network combining BiLSTM and attention mechanism for bearing fault diagnosis under multiple working conditions. Sci. Rep. 2025, 15, 13035.

- Hou, Y.; Ma, J.; Wang, J.; Li, T.; Chen, Z. Enhanced generative adversarial networks for bearing imbalanced fault diagnosis of rotating machinery. Appl. Intell. 2023, 53, 25201–25215.

- Luo, J.; Zhang, Y.; Yang, F.; Jing, X. Imbalanced data fault diagnosis of rolling bearings using enhanced relative generative adversarial network. J. Mech. Sci. Technol. 2024, 38, 541–555.

- Zhao, J.; Wang, W.; Huang, J.; Ma, X. A comprehensive review of deep learning-based fault diagnosis approaches for rolling bearings: Advancements and challenges. AIP Adv. 2025, 15, 020702.

- Hakim, M.; Omran, A.A.B.; Ahmed, A.N.; Al-Waily, M.; Abdellatif, A. A systematic review of rolling bearing fault diagnoses based on deep learning and transfer learning: Taxonomy, overview, application, open challenges, weaknesses and recommendations. Ain Shams Eng. J. 2023, 14, 101945.

- Shen, J.; Wu, Z.; Cao, Y.; Zhang, Q.; Cui, Y. Research on Fault Diagnosis of Rolling Bearing Based on Gramian Angular Field and Lightweight Model. Sensors 2024, 24, 5952.

- Bai, R.; Wang, H.; Sun, W.; Shi, Y. Fault diagnosis method for rotating machinery based on SEDenseNet and Gramian Angular Field. Maint. Reliab. I Niezawodn. 2024, 26, 191445.

- Yu, P.; Li, R.b.; Cao, J.; Qin, J.h. Bearing fault diagnosis method for unbalance data based on Gramian angular field. J. Intell. Fuzzy Syst. 2024, 47, 45–54.

- Tong, A.; Zhang, J.; Xie, L. Intelligent fault diagnosis of rolling bearing based on Gramian angular difference field and improved dual attention residual network. Sensors 2024, 24, 2156.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

- Wang, R.; Dong, E.; Cheng, Z.; Liu, Z.; Jia, X. Transformer-based intelligent fault diagnosis methods of mechanical equipment: A survey. Open Phys. 2024, 22, 20240015.

- Hou, Y.; Wang, J.; Chen, Z.; Ma, J.; Li, T. Diagnosisformer: An efficient rolling bearing fault diagnosis method based on improved Transformer. Eng. Appl. Artif. Intell. 2023, 124, 106507.

- Chen, Q.; Zhang, F.; Wang, Y.; Yu, Q.; Lang, G.; Zeng, L. Bearing fault diagnosis based on efficient cross space multiscale CNN transformer parallelism. Sci. Rep. 2025, 15, 12344.

- Han, Y.; Zhang, F.; Li, Z.; Wang, Q.; Li, C.; Lai, P.; Li, T.; Teng, F.; Jin, Z. Mt-ConvFormer: A multi-task bearing fault diagnosis method using a combination of CNN and transformer. IEEE Trans. Instrum. Meas. 2024, 74, 3501816.

- Lv, J.; Xiao, Q.; Zhai, X.; Shi, W. A high-performance rolling bearing fault diagnosis method based on adaptive feature mode decomposition and Transformer. Appl. Acoust. 2024, 224, 110156.

- Chen, F.; Wang, X.; Zhu, Y.; Yuan, W.; Hu, Y. Time–frequency Transformer with shifted windows for journal bearing-rotor systems fault diagnosis under multiple working conditions. Meas. Sci. Technol. 2023, 34, 085121.

- Cen, J.; Yang, Z.; Wu, Y.; Hu, X.; Jiang, L.; Chen, H.; Si, W. A mask self-supervised learning-based transformer for bearing fault diagnosis with limited labeled samples. IEEE Sens. J. 2023, 23, 10359–10369.

- Fang, X.; Deng, X.; Chen, J.; Liu, M.; Fu, Y.; Huang, G.; Zhou, C. Convolution Transformer Based Fault Diagnosis Method For Aircraft Engine Bearings. In Proceedings of the 2024 43rd Chinese Control Conference (CCC), Kunming, China, 28–31 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 5038–5042.

- Liu, W.; Zhang, Z.; Zhang, J.; Huang, H.; Zhang, G.; Peng, M. A novel fault diagnosis method of rolling bearings combining convolutional neural network and transformer. Electronics 2023, 12, 1838.

- Zhang, S.; Zhang, S.; Wang, B.; Habetler, T.G. Deep learning algorithms for bearing fault diagnostics—A comprehensive review. IEEE Access 2020, 8, 29857–29881.

- Zhang, M. Multi-resolution short-time Fourier transform providing deep features for 3D CNN to classify rolling bearing fault vibration signals. Eng. Res. Express 2024, 6, 035201.

- Toma, R.N.; Toma, F.H.; Kim, J.M. Comparative analysis of continuous wavelet transforms on vibration signal in bearing fault diagnosis of induction motor. In Proceedings of the 2021 International Conference on Electronics, Communications and Information Technology (ICECIT), Khulna, Bangladesh, 14–16 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–4.

- Yu, S.; Liu, Z.; Wang, S.; Zhang, G. A novel adaptive gramian angle field based intelligent fault diagnosis for motor rolling bearings. J. Phys. Conf. Ser. 2024, 2785, 012042.

- Dou, S.; Cheng, X.; Du, Y.; Wang, Z.; Liu, Y. Gearbox fault diagnosis based on Gramian angular field and TLCA-MobileNetV3 with limited samples. Int. J. Metrol. Qual. Eng. 2024, 15, 15.

- Zhou, Y.; Long, X.; Sun, M.; Chen, Z. Bearing fault diagnosis based on Gramian angular field and DenseNet. Math. Biosci. Eng. 2022, 19, 14086–14101.

- Yin, Z.; Zhang, F.; Xu, G.; Han, G.; Bi, Y. Multi-scale rolling bearing fault diagnosis method based on transfer learning. Appl. Sci. 2024, 14, 1198.

- Ding, S.; Rui, Z.; Lei, C.; Zhuo, J.; Shi, J.; Lv, X. A rolling bearing fault diagnosis method based on Markov transition field and multi-scale Runge-Kutta residual network. Meas. Sci. Technol. 2023, 34, 125150.

- Deng, J.; Liu, H.; Fang, H.; Shao, S.; Wang, D.; Hou, Y.; Chen, D.; Tang, M. MgNet: A fault diagnosis approach for multi-bearing system based on auxiliary bearing and multi-granularity information fusion. Mech. Syst. Signal Process. 2023, 193, 110253.

- Xue, L.; Ningyun, L.; Chuang, C.; Tianzhen, H.; Bin, J. Attention mechanism based multi-scale feature extraction of bearing fault diagnosis. J. Syst. Eng. Electron. 2023, 34, 1359–1367.

- Hu, B.; Liu, J.; Xu, Y. A novel multi-scale convolutional neural network incorporating multiple attention mechanisms for bearing fault diagnosis. Measurement 2025, 242, 115927.

- Wang, Z.; Oates, T. Encoding time series as images for visual inspection and classification using tiled convolutional neural networks. In Proceedings of the Workshops at the Twenty-Ninth AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; Volume 1, pp. 1–7.

- Zhang, Y.; Zhou, S.; Li, H. Depth information assisted collaborative mutual promotion network for single image dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 2846–2855.

- Wang, Y.; Huang, N.; Li, T.; Yan, Y.; Zhang, X. Medformer: A multi-granularity patching transformer for medical time-series classification. arXiv 2024, arXiv:2405.19363.

- Chen, X.; Zhang, B.; Gao, D. Bearing fault diagnosis base on multi-scale CNN and LSTM model. J. Intell. Manuf. 2021, 32, 971–987.

- Zhang, W.; Peng, G.; Li, C.; Chen, Y.; Zhang, Z. A new deep learning model for fault diagnosis with good anti-noise and domain adaptation ability on raw vibration signals. Sensors 2017, 17, 425.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).