Intelligent Vehicle Target Detection Algorithm Based on Multiscale Features

Abstract

1. Introduction

- (1) Environmental complexity degrades feature quality, increasing detection errors.

- (2) Extreme scale variations, from distant cyclists to nearby trucks, strain fixed-receptive-field convolutions, reducing localization accuracy.

- (3) Severe occlusions obscure critical features, leading to missed or misclassified targets.
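To make the second challenge concrete, the sketch below computes the receptive field of a stack of convolution layers using the standard recurrence (each layer widens the field by `(kernel - 1) * dilation` times the accumulated stride). The layer stacks are hypothetical and chosen only for illustration; they are not taken from the paper's network.

```python
from typing import Iterable, Tuple


def receptive_field(layers: Iterable[Tuple[int, int, int]]) -> int:
    """Return the receptive field, in input pixels, of a stack of conv layers.

    Each layer is given as (kernel_size, stride, dilation).
    """
    rf = 1    # a single input pixel sees only itself
    jump = 1  # spacing, in input pixels, between adjacent outputs so far
    for kernel, stride, dilation in layers:
        rf += (kernel - 1) * dilation * jump
        jump *= stride
    return rf


# Hypothetical stacks (assumptions for illustration only).
small_stack = [(3, 1, 1), (3, 1, 1)]               # two plain 3x3 convs
strided_stack = [(3, 2, 1), (3, 2, 1), (3, 1, 1)]  # two stride-2 convs, then a 3x3
dilated_stack = [(3, 1, 1), (3, 1, 2)]             # second conv uses dilation 2

rf_small = receptive_field(small_stack)
rf_strided = receptive_field(strided_stack)
rf_dilated = receptive_field(dilated_stack)
print(rf_small, rf_strided, rf_dilated)  # 5 15 7
```

Because the field is fixed once the layer stack is fixed, a target much larger or much smaller than it is seen either partially or with a great deal of surrounding context, which is one motivation for multi-scale designs.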

- (1)

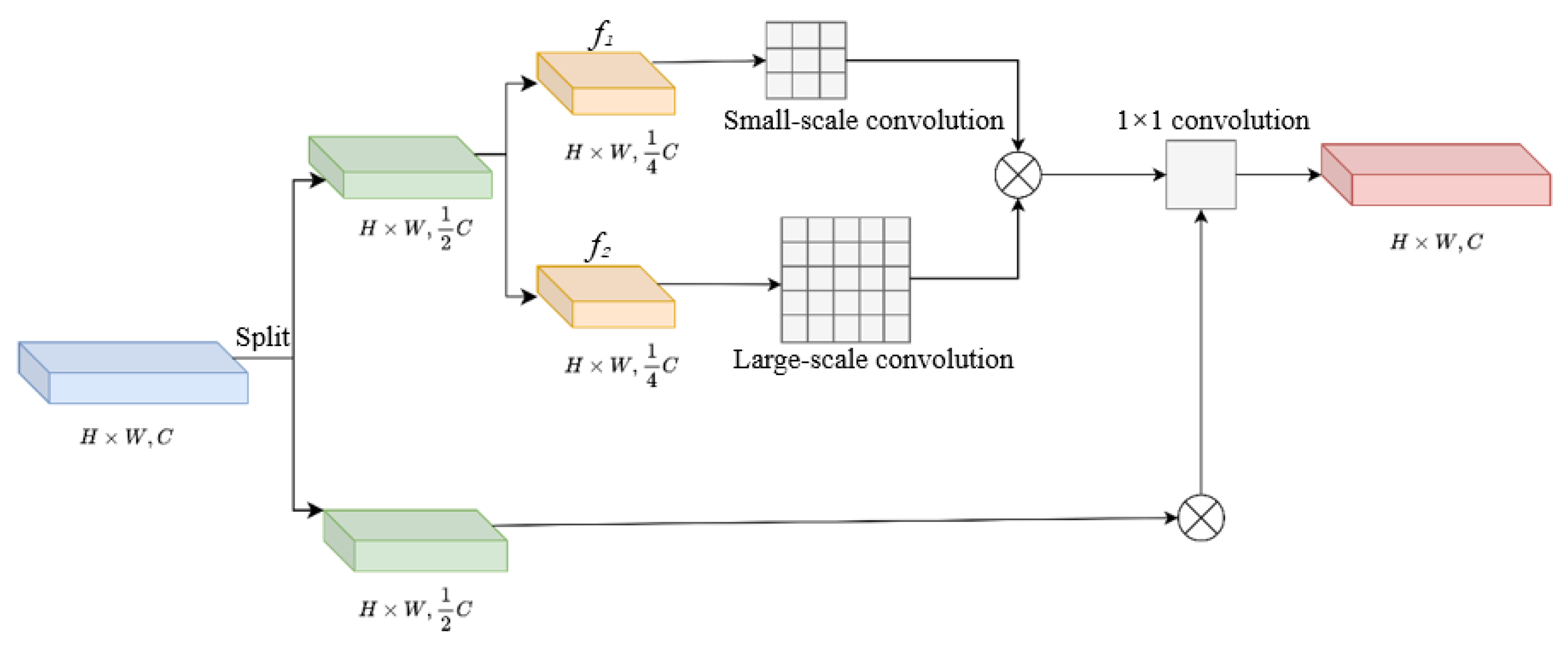

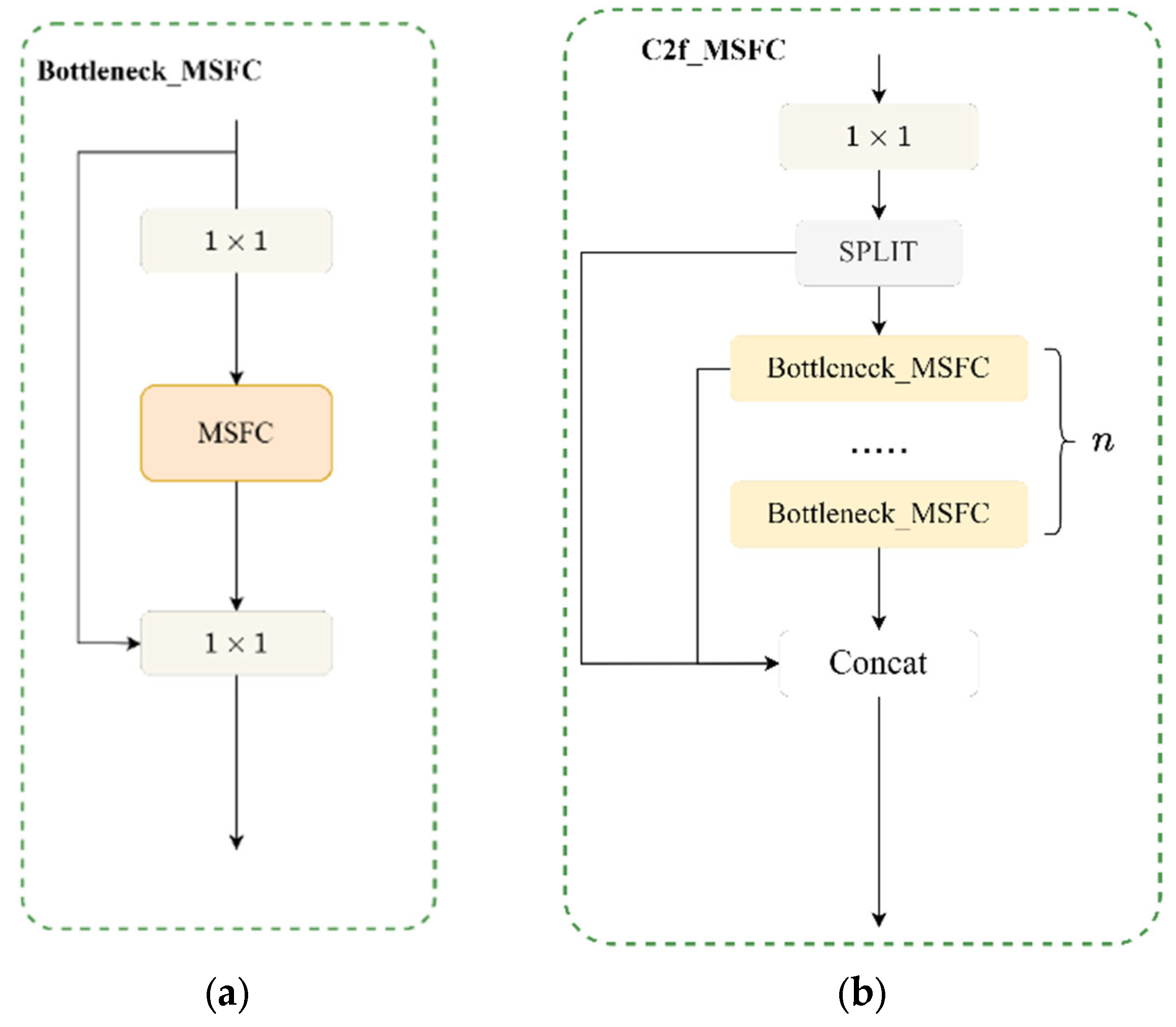

- A multi-scale flexible convolution (MSFC) to dynamically adapt to varying feature scales, reducing computational overhead.

- (2)

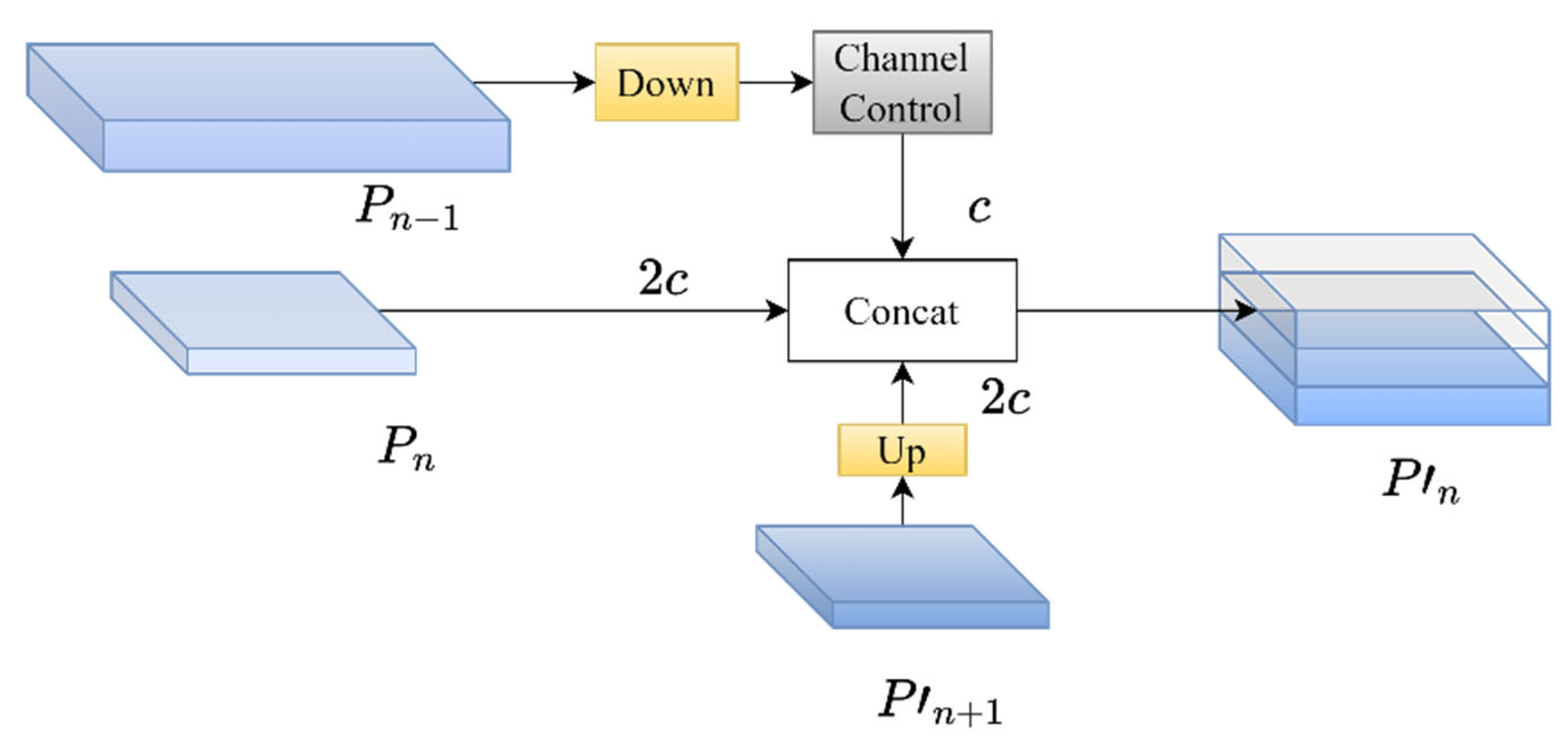

- A reconstructed neck network integrating Shallow Auxiliary Fusion (SAF) and Advanced Auxiliary Fusion (AAF) to optimize multi-scale feature interaction.

- (3)

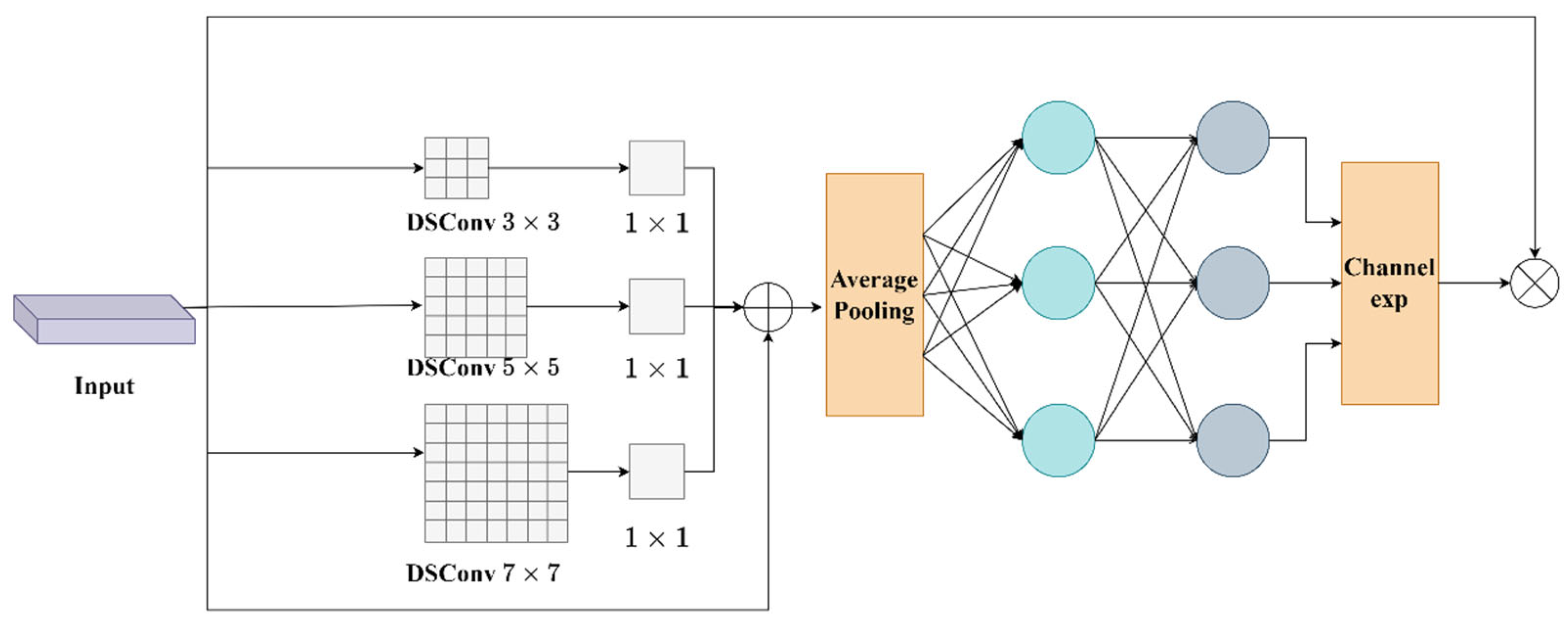

- A SEAM, combining multi-scale convolutions and channel attention, to boost feature extraction robustness.
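As a rough illustration of how a multi-scale convolution can lower cost, the sketch below compares the weight count of a dense convolution with that of a generic channel-split design, in which each group of channels gets a depthwise convolution with its own kernel size and a 1×1 convolution then mixes the result. This is not the paper's MSFC, whose internals are not given here; the function names, channel counts, and kernel sizes are all assumptions.

```python
from typing import Sequence


def standard_conv_params(c_in: int, c_out: int, kernel: int) -> int:
    """Weights in a dense kernel x kernel convolution (bias omitted)."""
    return c_in * c_out * kernel * kernel


def split_multiscale_params(c_in: int, c_out: int, kernels: Sequence[int]) -> int:
    """Weights when input channels are split evenly across depthwise branches.

    Each branch applies a depthwise conv with its own kernel size; a 1x1
    pointwise conv then mixes the concatenated channels (bias omitted).
    """
    if c_in % len(kernels) != 0:
        raise ValueError("c_in must divide evenly across the branches")
    per_branch = c_in // len(kernels)
    depthwise = sum(per_branch * k * k for k in kernels)
    pointwise = c_in * c_out
    return depthwise + pointwise


# Hypothetical layer sizes, for illustration only.
dense_3x3 = standard_conv_params(64, 64, 3)
multi = split_multiscale_params(64, 64, kernels=(1, 3, 5, 7))
print(dense_3x3, multi, round(multi / dense_3x3, 3))  # 36864 5440 0.148
```

Under these assumptions the split design covers kernel sizes up to 7×7 with roughly 15% of the weights of a single dense 3×3 layer, which shows how scale coverage and lower overhead can coexist.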

2. Related Work

2.1. Target Detection Method

2.2. Evolution of YOLO Series in Intelligent Driving

2.3. Research on the Object Detection Algorithm of YOLOv10

3. Methodology

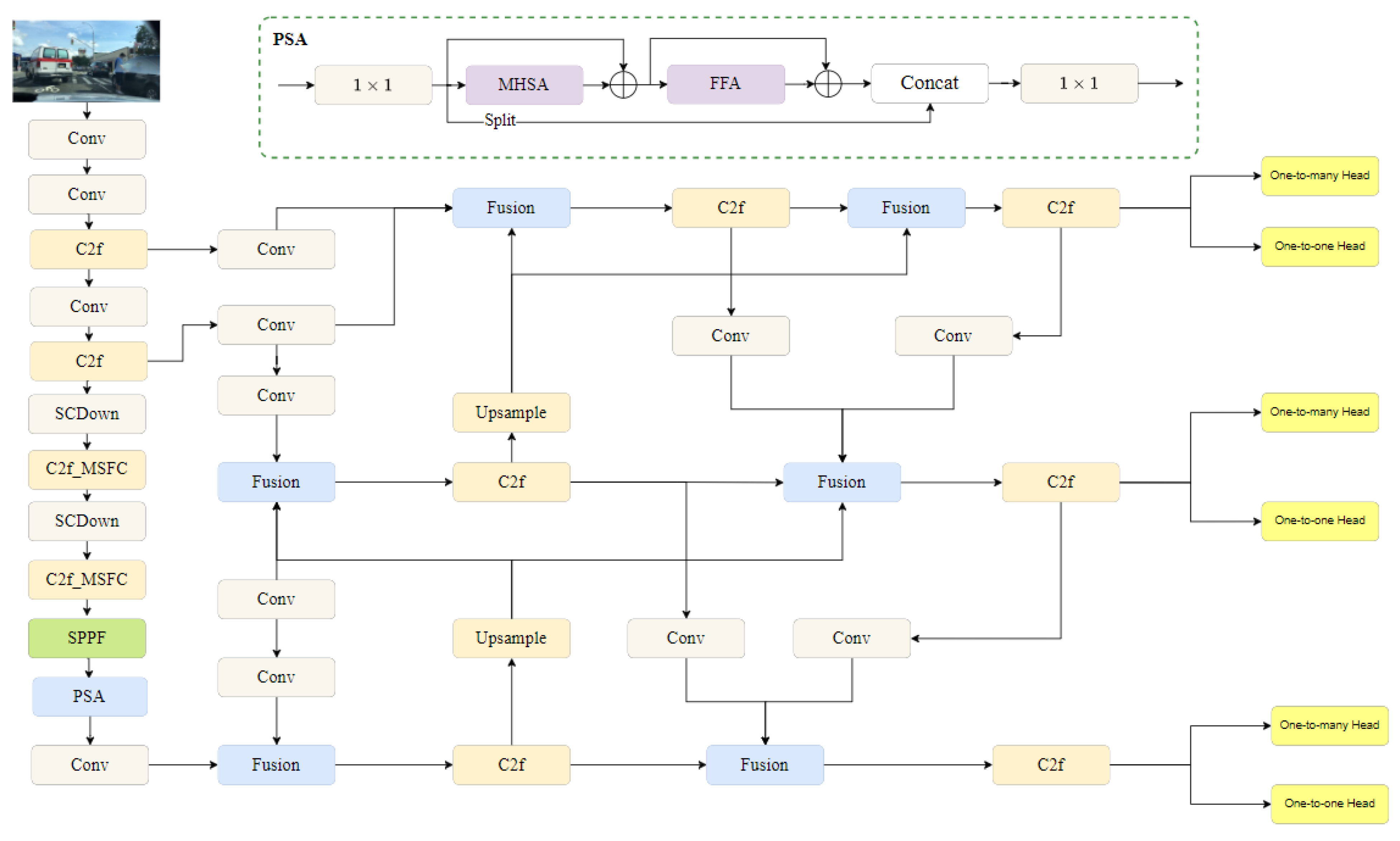

3.1. Overall Architecture of Improved YOLOv10

3.2. Design of Multi-Scale Flexible Convolution

3.3. Design of Neck Network

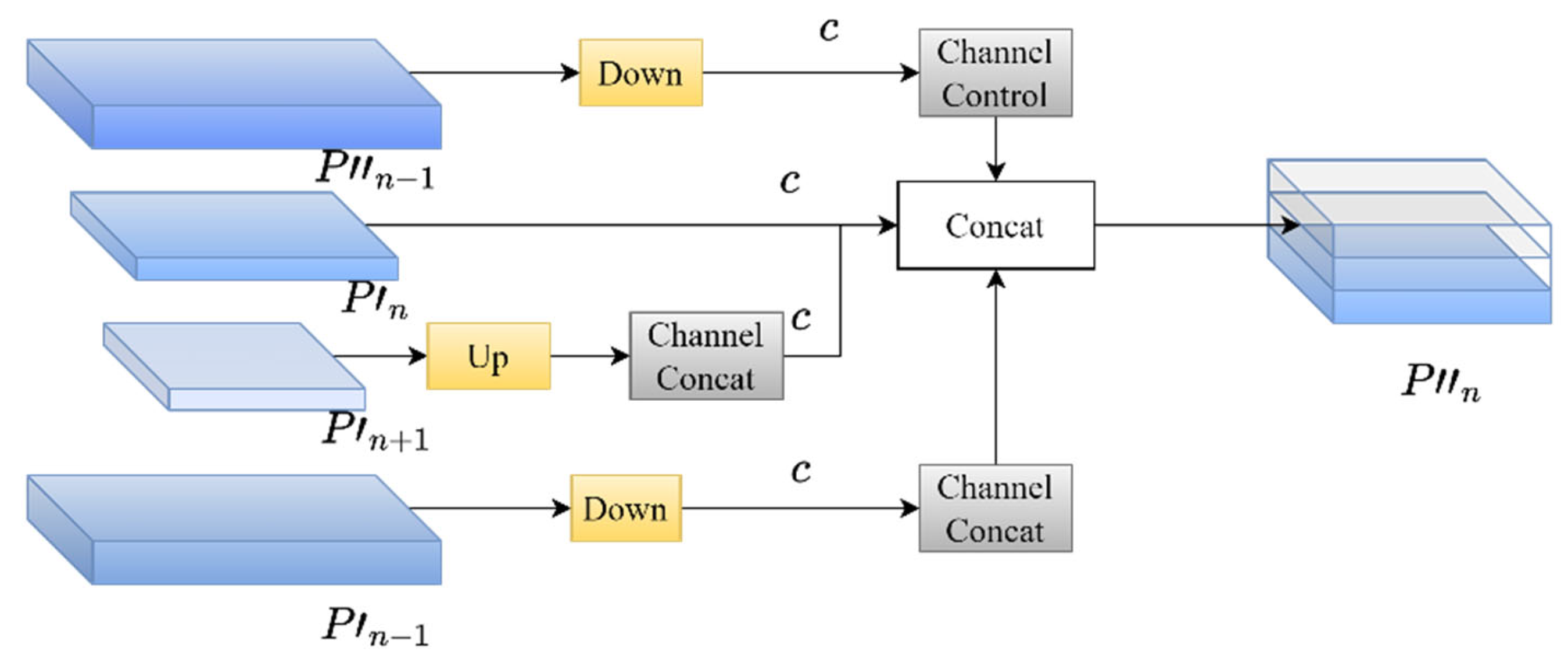

3.3.1. Superficial Assisted Fusion (SAF)

3.3.2. Advanced Assisted Fusion (AAF)

3.4. Detection Head Improvements

4. Experimentation and Analysis

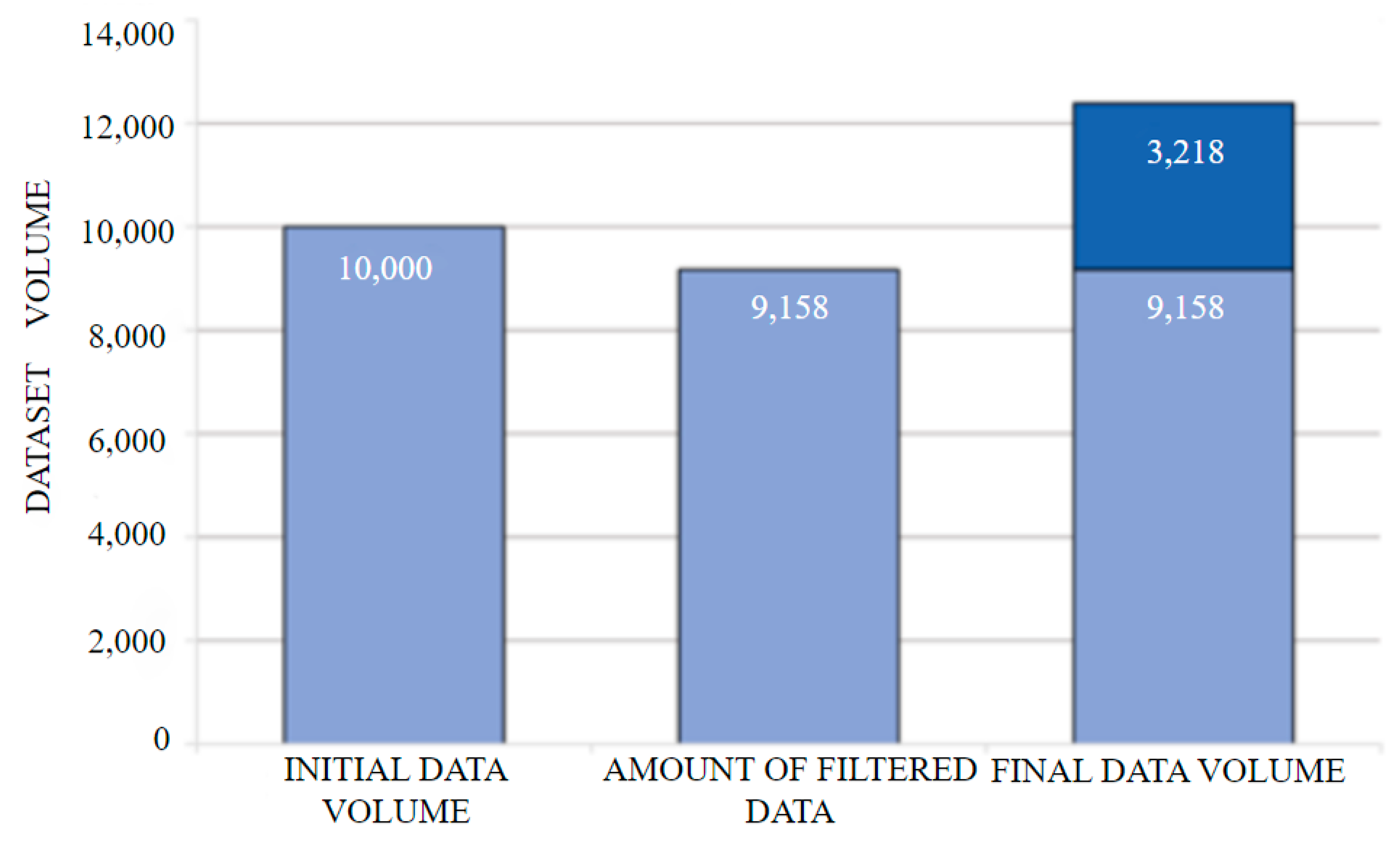

4.1. Dataset Construction

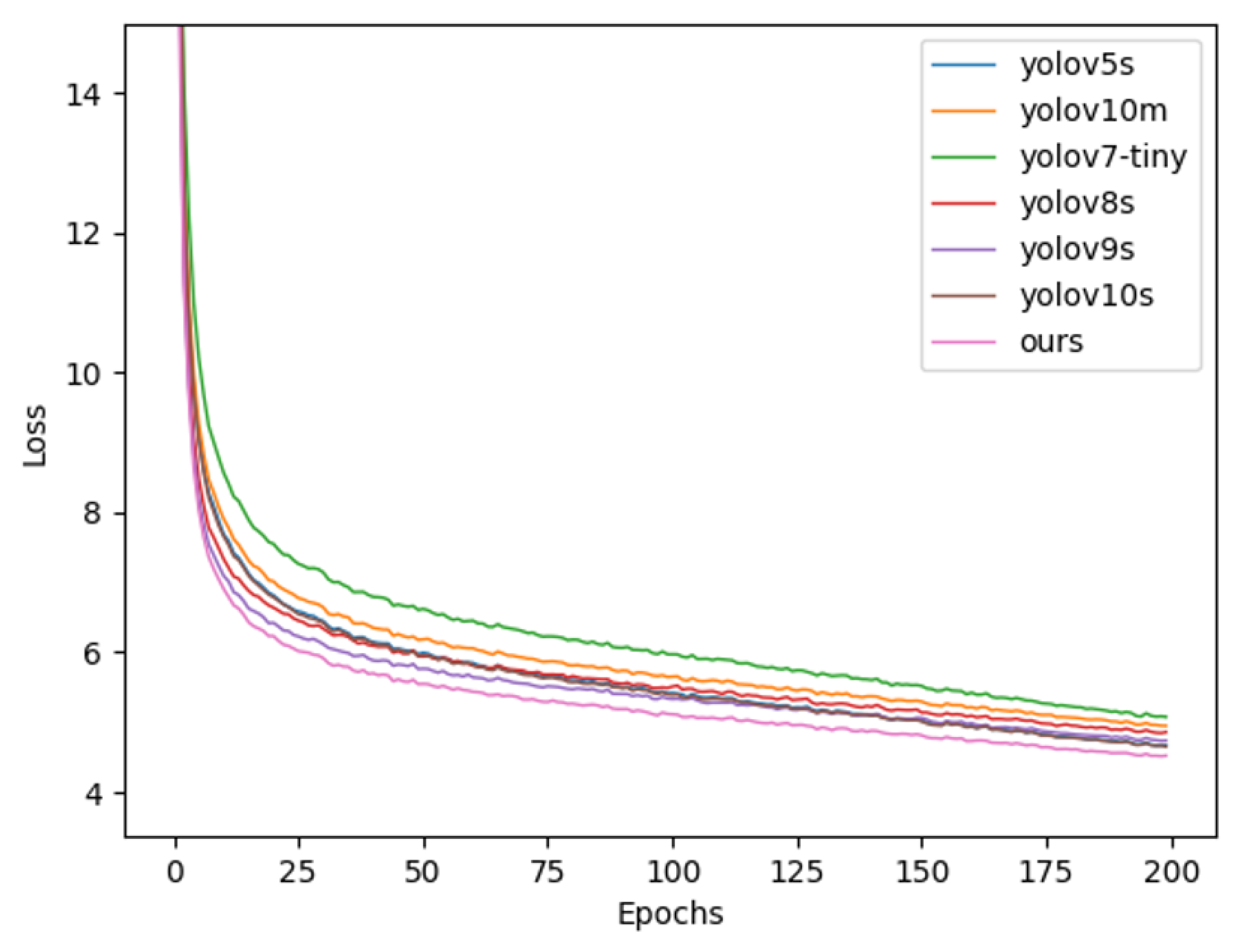

4.2. Model Training and Experimental Validation

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameters | Configuration |
|---|---|
| CPU | Intel i5-13600KF CPU @ 3.5 GHz |
| RAM | 32 GB |
| GPU | NVIDIA GeForce RTX 4070 Ti SUPER |
| GPU memory | 16 GB |
| Virtual environment | Anaconda 3.0 |
| Language | Python 3.9 |
| CUDA | 12.3 |
| Deep learning framework | PyTorch 1.12.0 |
| OpenCV | 4.9.0 |
| OS | Windows 10 |

| Parameters | Configuration |
|---|---|
| Epochs | 200 |
| Batch size | 8 |
| Learning rate | 0.0001 |
| Image size | 640 × 640 |
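The training settings above can be captured in a small configuration object. The sketch below is one possible encoding; the class name, field names, and the `steps_per_epoch` helper are assumptions, and the image count used in the usage line is a made-up number rather than the paper's dataset size.

```python
from dataclasses import asdict, dataclass
from typing import Tuple


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters from the training table (field names are hypothetical)."""

    epochs: int = 200
    batch_size: int = 8
    learning_rate: float = 1e-4
    image_size: Tuple[int, int] = (640, 640)

    def steps_per_epoch(self, num_images: int) -> int:
        """Optimizer steps per epoch, counting a final partial batch."""
        return -(-num_images // self.batch_size)  # ceiling division


config = TrainConfig()
print(asdict(config))
# 1000 images is an illustrative value, not the size of the paper's dataset.
print(config.steps_per_epoch(num_images=1000))  # 125
```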

| Label | YOLOv10s | MSFC | MAFPN | SEAM |
|---|---|---|---|---|
| A | √ | | | |
| B | √ | √ | | |
| C | √ | | √ | |
| D | √ | | | √ |
| E | √ | √ | √ | |
| F | √ | √ | | √ |
| G | √ | | √ | √ |
| H (ours) | √ | √ | √ | √ |
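An ablation grid of this kind can be generated programmatically. The sketch below enumerates every subset of the three modules on top of the YOLOv10s baseline and labels them A to H, assuming the conventional order (baseline, single modules, pairs, all three). That label-to-subset mapping is an assumption, not something the table states explicitly.

```python
from itertools import combinations
from string import ascii_uppercase

MODULES = ("MSFC", "MAFPN", "SEAM")


def ablation_variants(modules=MODULES):
    """Map labels A, B, ... to the module subsets added to the baseline.

    Subsets are ordered by size, then by the order of `modules`
    (an assumed convention).
    """
    subsets = [
        combo
        for size in range(len(modules) + 1)
        for combo in combinations(modules, size)
    ]
    return dict(zip(ascii_uppercase, subsets))


variants = ablation_variants()
for label, enabled in variants.items():
    print(label, "YOLOv10s", *enabled)
```

Three modules yield 2³ = 8 variants, matching the eight rows A to H.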

| Experiments | mAP50 (%) | mAP50-95 (%) | P (%) | R (%) | FLOPs (G) | Params (M) | FPS (f/s) | Model Size (MB) |
|---|---|---|---|---|---|---|---|---|
| A | 91.1 | 69.2 | 91.2 | 83.1 | 24.4 | 8.04 | 270 | 15.7 |
| B | 91.4 | 69.1 | 90.8 | 82.9 | 21.0 | 7.84 | 227 | 16.9 |
| C | 91.9 | 69.9 | 92.2 | 82.7 | 21.7 | 6.98 | 244 | 14.5 |
| D | 93.8 | 71.2 | 93.4 | 85.6 | 22.3 | 13.26 | 151 | 27.3 |
| E | 91.7 | 69.9 | 91.4 | 82.8 | 20.7 | 6.40 | 196 | 13.4 |
| F | 93.2 | 70.5 | 90.1 | 82.2 | 21.1 | 13.00 | 139 | 15.8 |
| G | 93.5 | 70.5 | 90.4 | 83.1 | 21.8 | 12.13 | 135 | 15.5 |
| H (ours) | 93.0 | 70.9 | 92.4 | 82.8 | 20.9 | 11.56 | 128 | 13.4 |
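The trade-off between the baseline (row A) and the full model (row H) is easier to read as deltas. The sketch below computes accuracy changes in percentage points and cost changes in percent, using only values from the table; the dictionary keys and helper name are assumptions.

```python
# Values copied from rows A and H of the ablation results table.
baseline = {"mAP50": 91.1, "mAP50-95": 69.2, "FLOPs": 24.4,
            "Params": 8.04, "FPS": 270, "Size": 15.7}
ours = {"mAP50": 93.0, "mAP50-95": 70.9, "FLOPs": 20.9,
        "Params": 11.56, "FPS": 128, "Size": 13.4}


def relative_change(new: float, old: float) -> float:
    """Percent change from old to new."""
    return 100.0 * (new - old) / old


# Accuracy metrics: absolute difference in percentage points.
point_gain = {k: round(ours[k] - baseline[k], 1) for k in ("mAP50", "mAP50-95")}

# Cost metrics: relative change in percent.
cost_change = {k: round(relative_change(ours[k], baseline[k]), 1)
               for k in ("FLOPs", "Params", "FPS", "Size")}

print(point_gain)   # {'mAP50': 1.9, 'mAP50-95': 1.7}
print(cost_change)  # {'FLOPs': -14.3, 'Params': 43.8, 'FPS': -52.6, 'Size': -14.6}
```

Read this way, the full model gains 1.9 points of mAP50 and cuts FLOPs by about 14%, while the parameter count rises by about 44% and throughput roughly halves.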

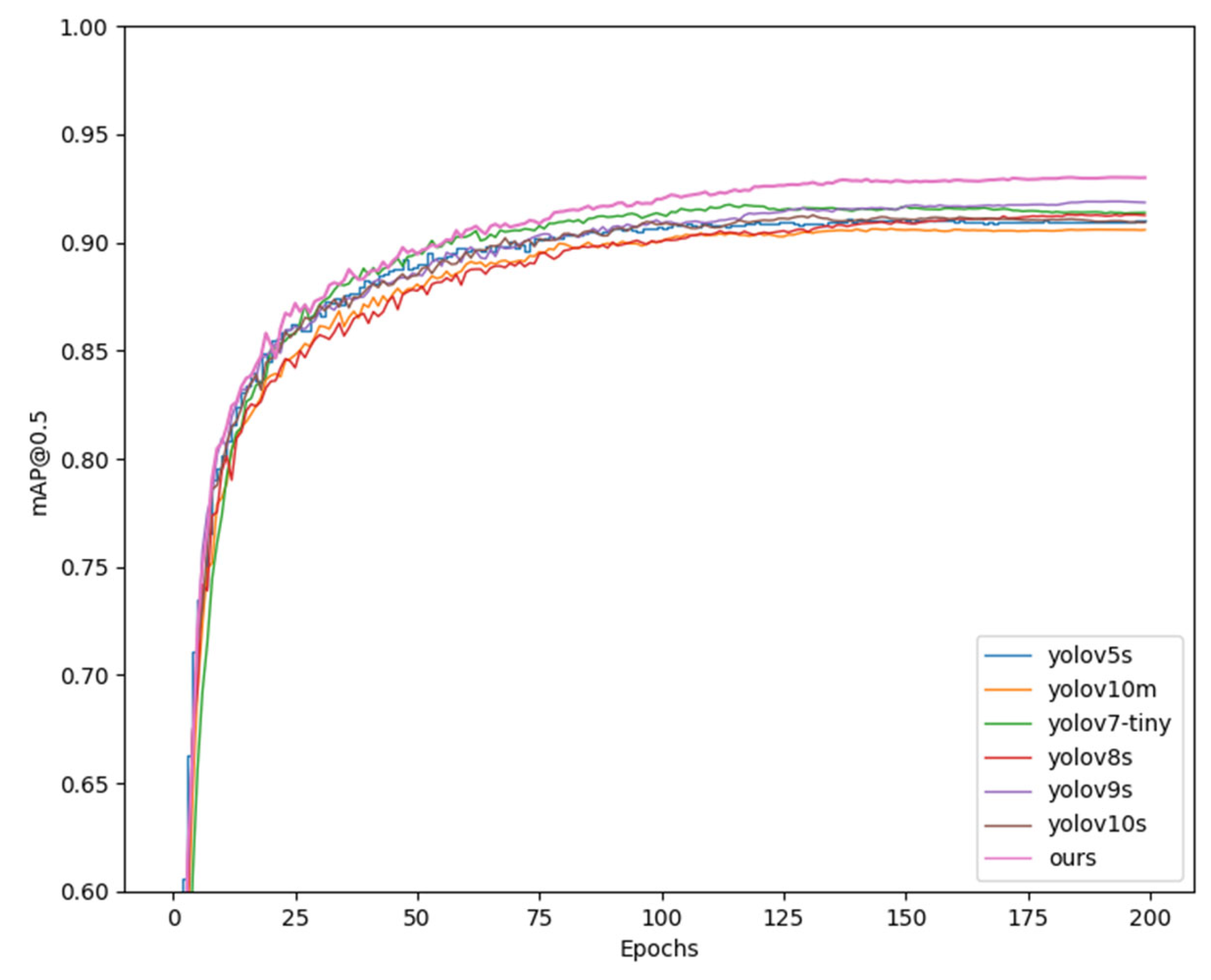

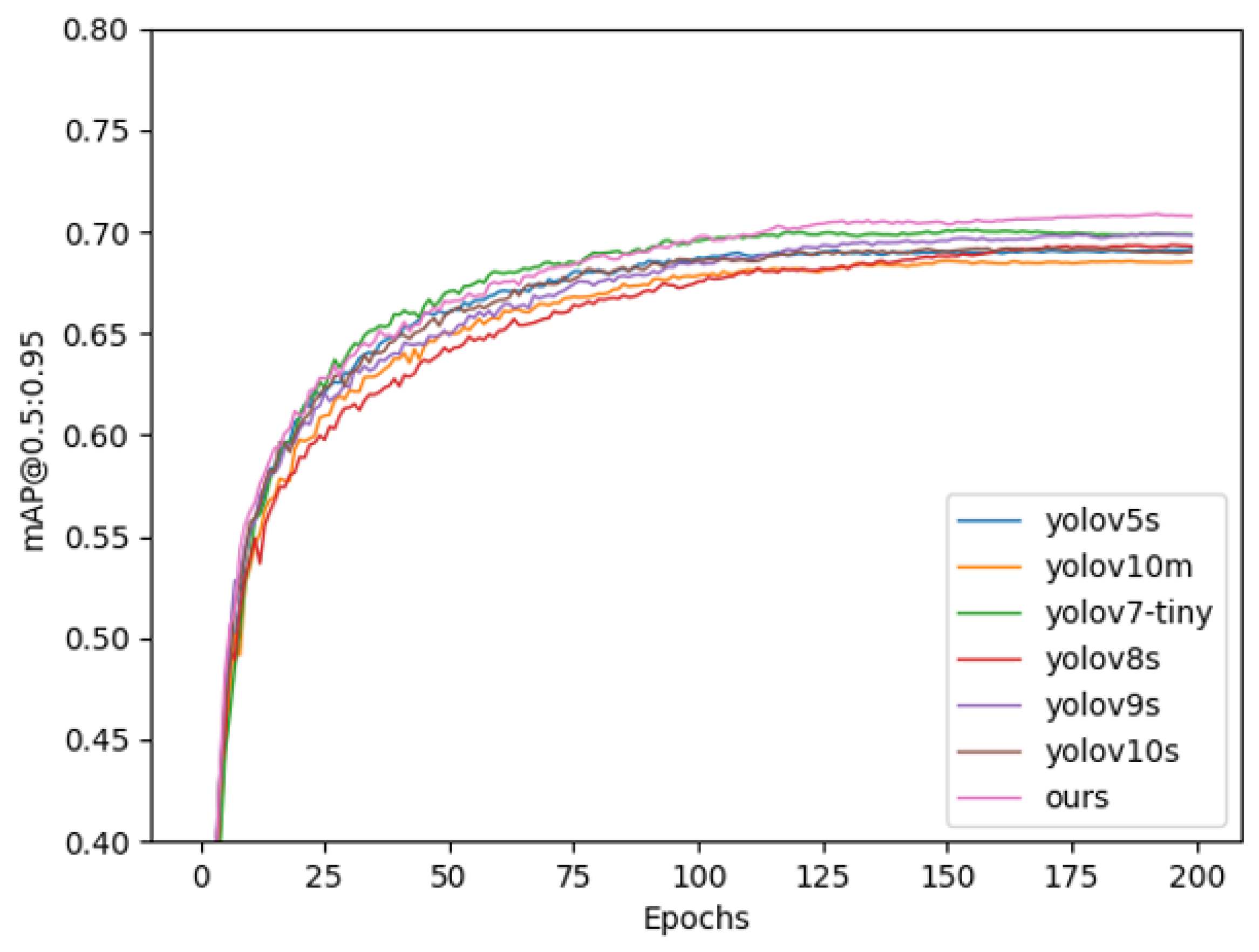

| Detectors | mAP50 (%) | mAP50-95 (%) | P (%) | R (%) | Params (M) | FLOPs (G) | Model Size (MB) | FPS (f/s) |
|---|---|---|---|---|---|---|---|---|
| YOLOv10s | 91.1 | 69.2 | 91.2 | 83.1 | 8.04 | 24.4 | 15.7 | 270 |
| YOLOv10m | 91.6 | 70.5 | 91.9 | 83.2 | 15.31 | 63.4 | 31.9 | 250 |
| YOLOv9s | 91.6 | 70.3 | 91.3 | 83.1 | 7.17 | 26.7 | 15.2 | 222 |
| YOLOv8s | 91.3 | 69.2 | 90.3 | 84.1 | 11.1 | 22.5 | 21.4 | 238 |
| YOLOv7-tiny | 90.5 | 69.0 | 91.1 | 83.1 | 6.0 | 13.0 | 12.3 | 276 |
| YOLOv5s | 91.1 | 69.2 | 92.8 | 81.3 | 9.11 | 23.8 | 18.5 | 256 |
| Ours | 93.0 | 70.9 | 92.4 | 82.8 | 11.56 | 20.9 | 13.4 | 128 |
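Two derived quantities can help when reading the comparison: the F1 score, which combines precision and recall, and the per-frame latency implied by the FPS column. Neither is reported in the table; the sketch below computes them from the listed P, R, and FPS values, and the helper names are assumptions.

```python
# (precision %, recall %, FPS) copied from the comparison table.
detectors = {
    "YOLOv10s": (91.2, 83.1, 270),
    "YOLOv10m": (91.9, 83.2, 250),
    "YOLOv9s": (91.3, 83.1, 222),
    "YOLOv8s": (90.3, 84.1, 238),
    "YOLOv7-tiny": (91.1, 83.1, 276),
    "YOLOv5s": (92.8, 81.3, 256),
    "Ours": (92.4, 82.8, 128),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same unit as the inputs)."""
    return 2.0 * precision * recall / (precision + recall)


def latency_ms(fps: float) -> float:
    """Average time per frame in milliseconds implied by a throughput in FPS."""
    return 1000.0 / fps


for name, (p, r, fps) in detectors.items():
    print(f"{name:12s} F1={f1_score(p, r):.2f}%  latency={latency_ms(fps):.2f} ms")
```

For the proposed model, 128 FPS corresponds to about 7.8 ms per frame, compared with about 3.7 ms for YOLOv10s at 270 FPS.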

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, A.; Ning, X.; Zöldy, M.; Chen, J.; Xu, G. Intelligent Vehicle Target Detection Algorithm Based on Multiscale Features. Sensors 2025, 25, 5084. https://doi.org/10.3390/s25165084
