Single Shot High-Accuracy Diameter at Breast Height Measurement with Smartphone Embedded Sensors

Abstract

1. Introduction

2. Materials and Methods

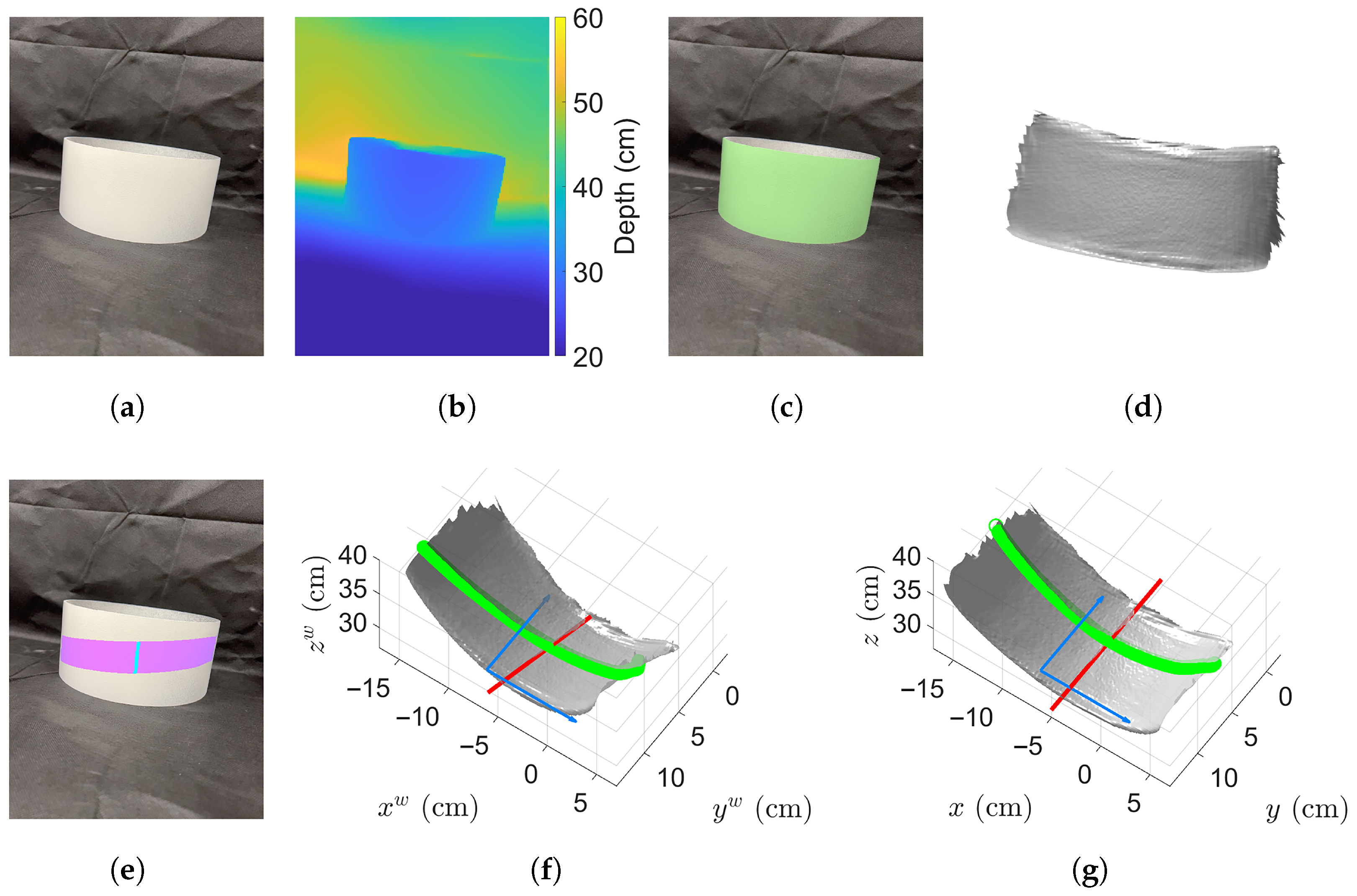

2.1. 3D Point Cloud Reconstruction

2.2. Tree Trunk and Ground Segmentation

2.3. Growth Orientation and Breast Height Location Estimation

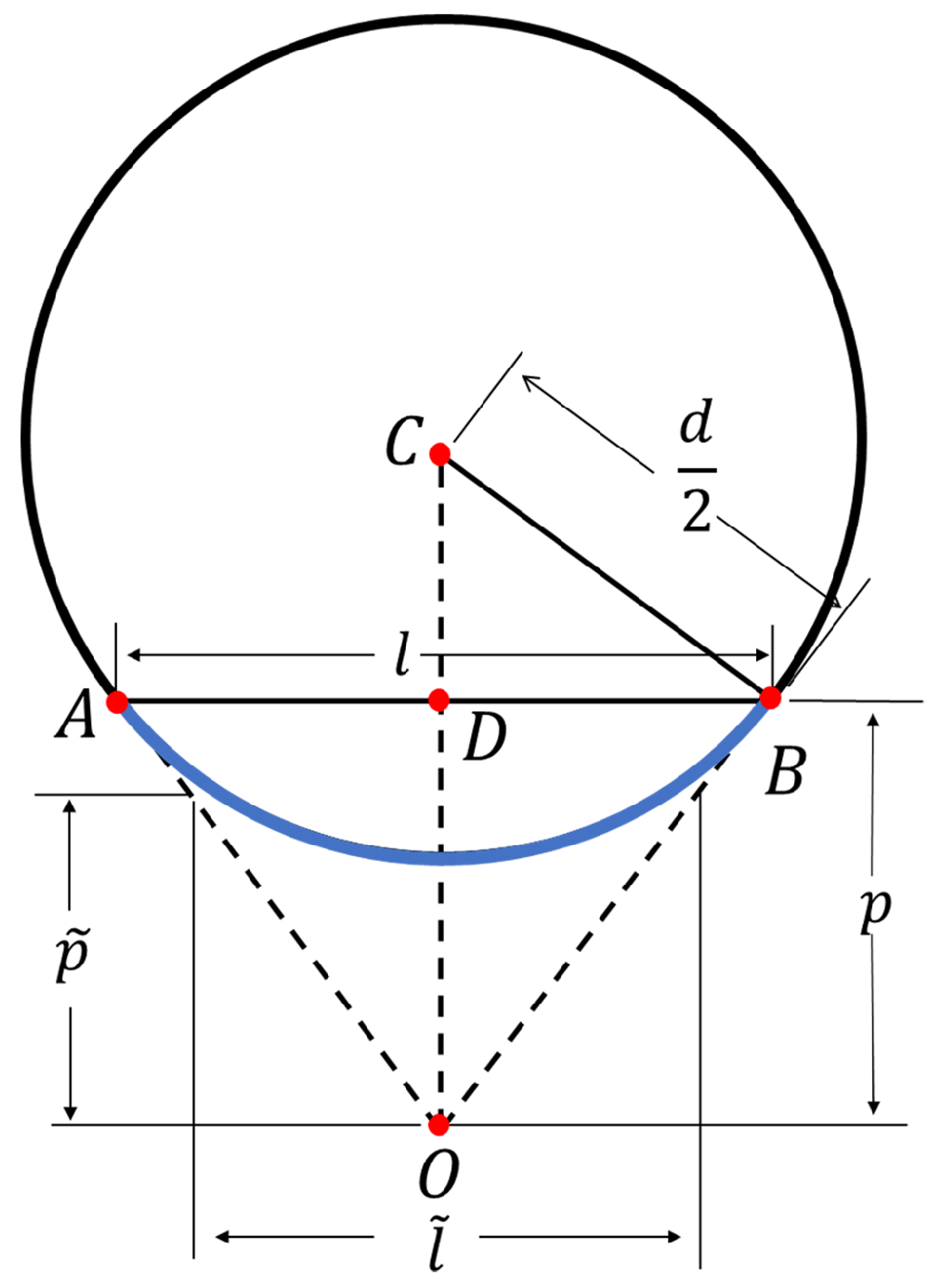

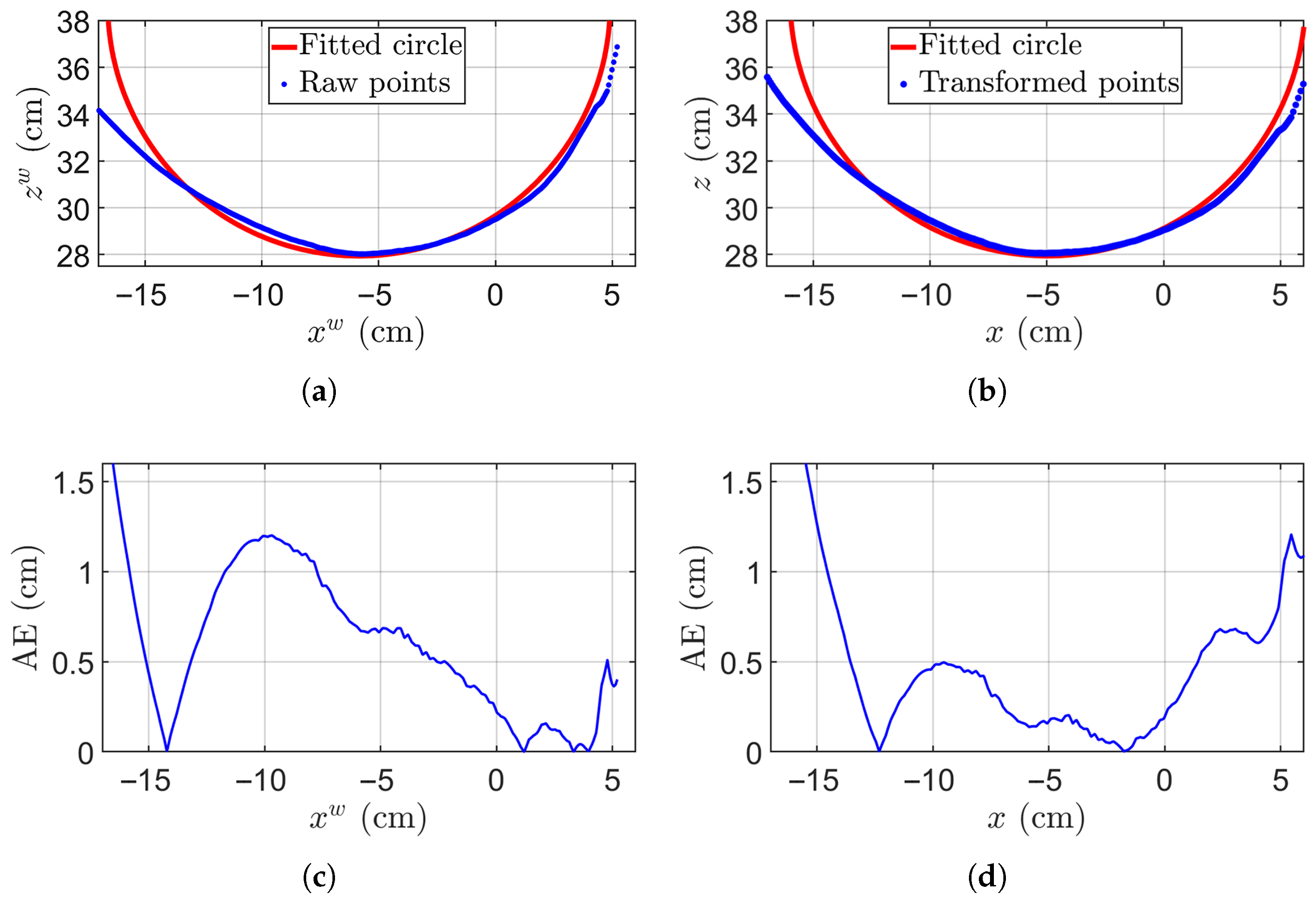

2.4. Initial DBH Estimation and Improvement

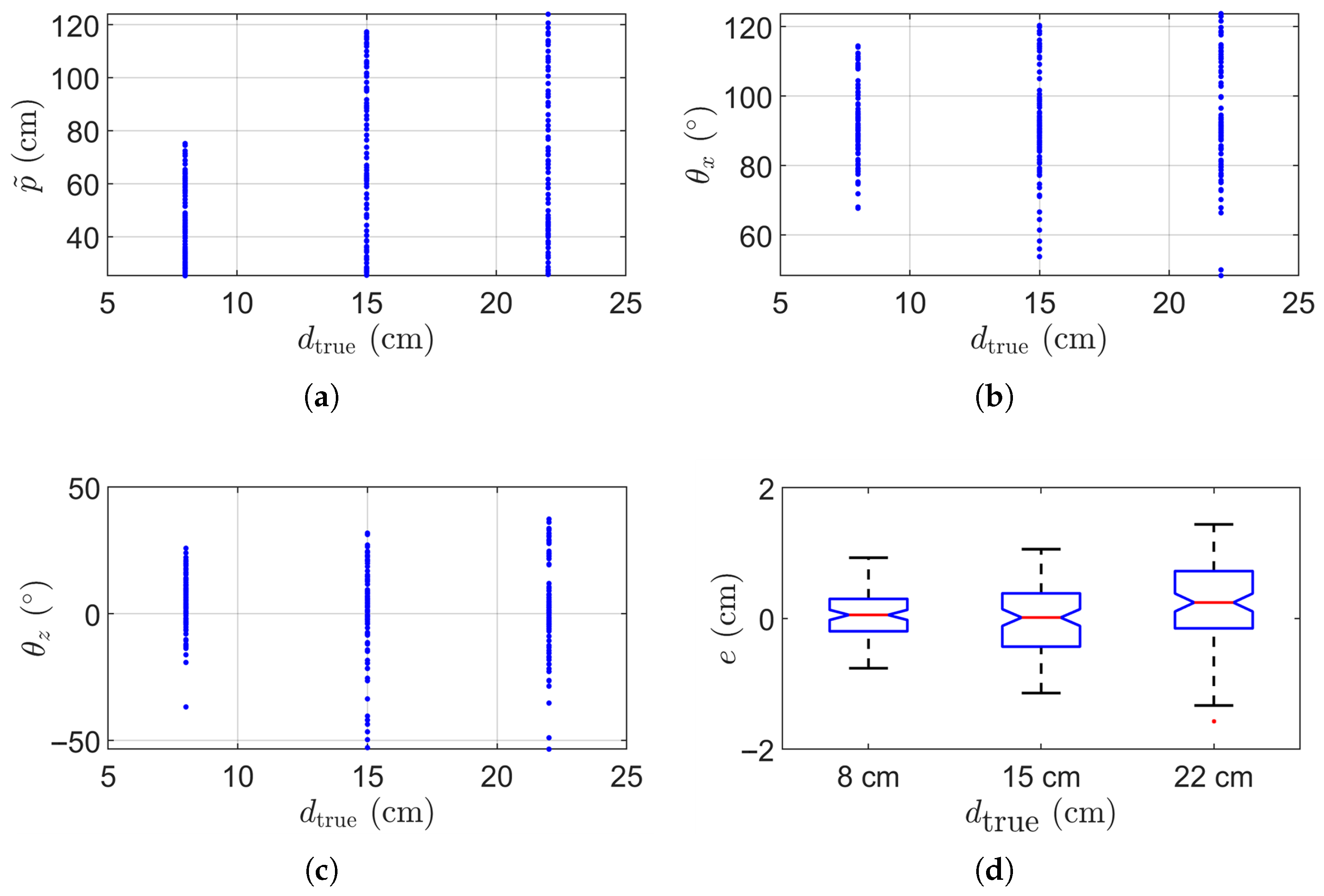

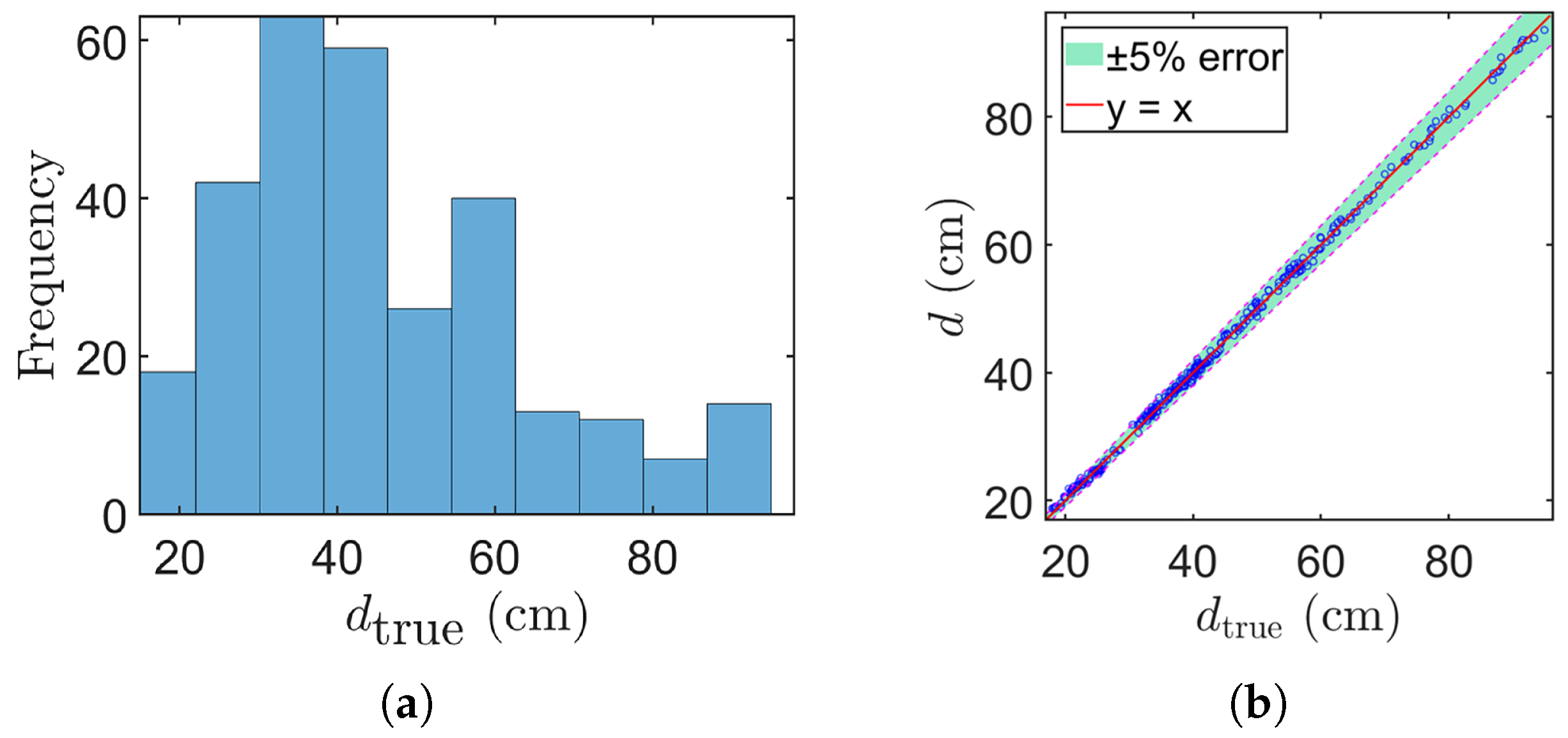

3. Results

4. Discussion

- High Accuracy. The proposed method achieves high accuracy through a rigorous mathematical formulation and improves computational efficiency through an approximation coupled with a pre-computed LUT. The method developed for the integrated sensor [2] estimates DBH by using the point on the trunk cross-section circle closest to the camera center to determine the chord depth, and then computes the diameter from the circle geometry, achieving a best-case RMSE of 1.02 cm. The method designed for ARTreeWatch (Android Studio 4.0) [34] combines motion tracking through visual-inertial odometry with feature and plane detection, followed by circle fitting for DBH estimation, and reaches a best-case RMSE of 1.04 cm. In comparison, our method achieves an RMSE of 0.63 cm, roughly 38% and 39% lower than these two methods, respectively.

- High Efficiency. Our proposed methodology significantly improves the efficiency of measuring DBH in forest settings. A caliper measurement takes approximately 20 s per tree, whereas our smartphone-based approach requires less than 1 s. The method is even more advantageous for large trees, which can be difficult to measure directly with a caliper and may require a collaborative effort when a tape is used. The mobility of a smartphone, coupled with immediate data processing and storage, streamlines the entire measurement process.

- High Flexibility. Unlike traditional methods, which often require the image plane to be parallel to the tangential plane of the tree trunk at breast height to ensure accuracy, our approach relaxes this constraint by estimating the trunk orientation and re-projecting the point cloud prior to DBH estimation, thereby making data capture more flexible.

- Depth range limit. The limited depth range of the iPhone LiDAR (Apple Inc., Cupertino, CA, USA) sensor (i.e., 0.25–5 m) poses challenges if the tree is too small or too far away. Moreover, because the method assumes a cylindrical tree trunk, a single snapshot may not yield an accurate DBH estimate when the trunk does not satisfy this condition.

- Non-cylindrical trunk. The proposed method assumes that the tree trunk is cylindrical, yet in natural forests, trunks deviate from this shape through tapering, fluting, or leaning. Nevertheless, our forest measurement results are encouraging, considering that we did not select trees with near-cylindrical trunks but instead captured all trees within the sampled area. In practice, measurements could be taken from different perspectives to further improve DBH accuracy.

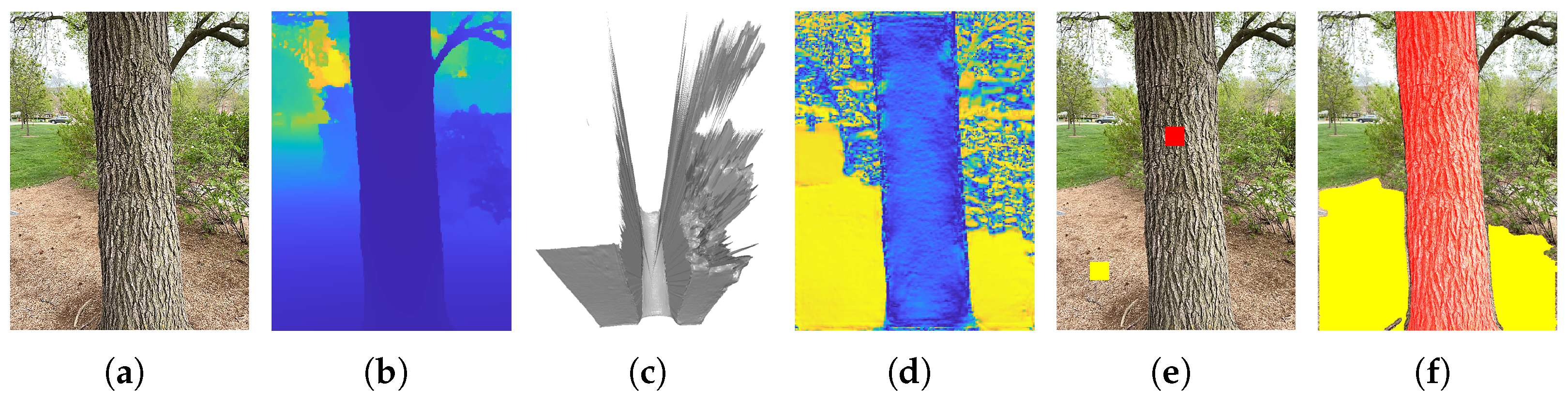

- Segmentation challenge. Our proposed method assumes that the tree trunk has been recognized and segmented properly. However, tree trunk segmentation is extremely challenging. We found that the Segment Anything Model (SAM) often fails if the tree trunk is not clean or the background is complex. To recognize tree trunks automatically and robustly, it may be necessary to train a new artificial intelligence model specifically for this purpose. The current algorithm requires precise segmentation of the tree trunk and ground areas. It fails if the tree trunk cannot be detected accurately, for example, when the trunk is occluded at the DBH location (e.g., by vines and leaves) so that its boundary cannot be precisely located. Segmentation may also be difficult where understory vegetation or complex terrain obscures a clear delineation. The problem becomes more complicated when the ground area has dense vegetation, because the “ground” may then be detected incorrectly. Segmentation failures occurred at a rate of 2.65% across the evaluated dataset, primarily due to partial occlusion, suboptimal lighting conditions, and complex trunk textures. Figure 11 presents three representative examples. Figure 11a shows understory vegetation and leaves partially obscuring the trunk, which can cause segmentation and measurement to fail; the segmented trunk is shown as the yellow area in Figure 11d. Figure 11b shows uneven illumination (i.e., a portion of the trunk appears excessively dark), resulting in the segmentation failure shown in Figure 11e. Figure 11c shows local variations in appearance caused by intricate bark textures, which can mislead the model and cause the segmentation errors shown in Figure 11f.
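The occlusion failures described under Segmentation challenge could, in principle, be flagged before any DBH computation. The sketch below is a hypothetical heuristic, not part of the proposed pipeline: it assumes a binary trunk mask (for example, one produced by SAM) and an image row passing through the breast-height location roughly perpendicular to the trunk, and it treats anything other than a single, sufficiently wide run of trunk pixels in that row as suspicious. The names `trunk_row_runs` and `looks_occluded` and the `min_width_px` threshold are illustrative assumptions.

```python
import numpy as np


def trunk_row_runs(mask_row):
    """Return (start, end) column pairs of contiguous trunk pixels; end is exclusive."""
    row = np.asarray(mask_row, dtype=bool).astype(np.int8)
    # Zero-pad both sides so runs touching the image border are still closed.
    diff = np.diff(np.concatenate(([0], row, [0])))
    starts = np.flatnonzero(diff == 1)   # background -> trunk transitions
    ends = np.flatnonzero(diff == -1)    # trunk -> background transitions
    return list(zip(starts.tolist(), ends.tolist()))


def looks_occluded(mask, row_index, min_width_px=5):
    """Hypothetical heuristic: flag a breast-height row whose trunk mask is
    empty, split into several pieces, or implausibly narrow."""
    runs = trunk_row_runs(mask[row_index])
    if len(runs) != 1:
        return True
    start, end = runs[0]
    return (end - start) < min_width_px
```

A vine crossing the trunk at breast height typically splits the row into two runs, which this check reports; it cannot, however, detect a mask that is wrong but still contiguous, such as the bark-texture case.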
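The accuracy figures quoted under High Accuracy are root mean square errors, and the Abbreviations also list AE and MAE. As a reminder of how these metrics relate, here is a minimal sketch that computes them from paired smartphone estimates and caliper reference values; the function name `error_metrics` and the sample values are hypothetical and are not data from this study.

```python
import math


def error_metrics(estimated, reference):
    """Return per-tree absolute errors, MAE, and RMSE (same units as the inputs)."""
    if len(estimated) != len(reference) or len(estimated) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    ae = [abs(e - r) for e, r in zip(estimated, reference)]  # AE per tree
    mae = sum(ae) / len(ae)
    rmse = math.sqrt(sum(x * x for x in ae) / len(ae))
    return ae, mae, rmse


if __name__ == "__main__":
    # Hypothetical smartphone DBH estimates vs. caliper readings, in cm.
    ae, mae, rmse = error_metrics([30.5, 25.0, 41.2], [30.0, 25.4, 40.0])
    print(f"MAE = {mae:.2f} cm, RMSE = {rmse:.2f} cm")
```

Because RMSE squares each absolute error before averaging, it is always at least as large as MAE and weights occasional large misses (such as those caused by segmentation failures) more heavily.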
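The circle geometry mentioned for the integrated-sensor method [2] can be illustrated with the standard tangent-ray relation. If the two silhouette edges of a circular cross-section subtend a half-angle α at the camera center and the nearest surface point lies at range d from it, then sin α = r / (d + r), so r = d sin α / (1 - sin α). The sketch below assumes a pinhole camera with horizontal focal length `fx` and principal point `cx` in pixels, and an image row that passes through the principal point and lies in the plane of the cross-section. It is a generic illustration, not the exact formulation of [2] or of the proposed method, and the function name is hypothetical.

```python
import math


def diameter_from_tangent_rays(d_closest, u_left, u_right, fx, cx):
    """Diameter of a circular cross-section from one view.

    d_closest: Euclidean range from the camera center to the nearest trunk
               surface point (not the z-depth).
    u_left, u_right: pixel columns of the two trunk silhouette edges.
    fx, cx: horizontal focal length and principal point, in pixels.
    """
    if d_closest <= 0:
        raise ValueError("d_closest must be positive")
    # Angle of each silhouette (tangent) ray relative to the optical axis.
    theta_left = math.atan((u_left - cx) / fx)
    theta_right = math.atan((u_right - cx) / fx)
    # The tangent rays are symmetric about the ray through the circle center,
    # so half of the angle between them is the half-angle alpha.
    s = math.sin(0.5 * abs(theta_right - theta_left))
    # sin(alpha) = r / (d_closest + r)  =>  r = d_closest * s / (1 - s)
    return 2.0 * d_closest * s / (1.0 - s)
```

The relation holds wherever the trunk sits in the field of view, because only the angle between the two tangent rays matters, not their absolute direction.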
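The High Accuracy point attributes part of the method's speed to an approximation coupled with a pre-computed LUT. The general pattern is sketched below under the assumption of a smooth scalar function evaluated over a known range: the function is sampled once, and each later query is answered by linear interpolation between samples. The class name `LookupTable1D` is hypothetical, and any function passed to it in testing is only a stand-in for whatever quantity the authors actually tabulate.

```python
import numpy as np


class LookupTable1D:
    """Hypothetical helper: sample a scalar function once, then answer
    queries by piecewise-linear interpolation between the samples."""

    def __init__(self, func, lo, hi, n=1024):
        if not lo < hi or n < 2:
            raise ValueError("need lo < hi and at least two samples")
        self.lo, self.hi = float(lo), float(hi)
        self.xs = np.linspace(self.lo, self.hi, n)
        # One-off cost: n evaluations of the (possibly expensive) function.
        self.ys = np.array([func(x) for x in self.xs], dtype=float)

    def __call__(self, x):
        x = np.asarray(x, dtype=float)
        # np.interp would silently clamp out-of-range queries; refuse instead.
        if np.any((x < self.lo) | (x > self.hi)):
            raise ValueError("query outside the tabulated range")
        return np.interp(x, self.xs, self.ys)
```

For a twice-differentiable function, the interpolation error is bounded by h²/8 times the maximum of |f''|, where h is the sample spacing, so doubling the table size reduces the worst-case error by roughly a factor of four.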
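The High Flexibility point rests on estimating the trunk orientation and re-projecting the point cloud before measuring. A minimal sketch of that idea, assuming the trunk axis direction and a ground-level point on the axis are already known (for example, from the orientation and ground-segmentation steps), keeps only points in a thin slab around breast height, projects them onto the plane perpendicular to the axis, and fits a circle. The algebraic (Kåsa) least-squares circle fit used here is a swapped-in generic technique, closer to the circle fitting reported for ARTreeWatch [34] than to the LUT-based approximation of the proposed method; the function names and the `half_thickness` default are assumptions.

```python
import numpy as np


def orthonormal_basis(axis):
    """Unit trunk axis a plus two unit vectors e1, e2 spanning the plane normal to a."""
    a = np.asarray(axis, dtype=float)
    a = a / np.linalg.norm(a)
    # Any helper vector not parallel to a works as a starting direction.
    helper = np.array([1.0, 0.0, 0.0]) if abs(a[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    e1 = np.cross(a, helper)
    e1 /= np.linalg.norm(e1)
    e2 = np.cross(a, e1)  # already unit length because a and e1 are orthonormal
    return a, e1, e2


def cross_section_diameter(points, axis, origin, height, half_thickness=0.02):
    """Diameter at `height` along the axis from a (possibly partial) trunk point cloud.

    points: (N, 3) trunk points; axis: trunk direction; origin: a point on the
    axis at ground level; height and half_thickness in the same units as points.
    """
    a, e1, e2 = orthonormal_basis(axis)
    rel = np.asarray(points, dtype=float) - np.asarray(origin, dtype=float)
    t = rel @ a  # signed position of each point along the trunk axis
    slab = rel[np.abs(t - height) <= half_thickness]
    if len(slab) < 3:
        raise ValueError("too few points near the requested height")
    # Re-project the slab onto the plane perpendicular to the axis.
    x, y = slab @ e1, slab @ e2
    # Kasa fit: x^2 + y^2 + D x + E y + F = 0 is linear in (D, E, F).
    A = np.column_stack([x, y, np.ones_like(x)])
    (D, E, F), *_ = np.linalg.lstsq(A, -(x**2 + y**2), rcond=None)
    cx, cy = -D / 2.0, -E / 2.0
    return 2.0 * np.sqrt(cx**2 + cy**2 - F)
```

Because the slab is cut along the estimated axis rather than the vertical, a leaning trunk still yields a circular rather than elongated cross-section. The Kåsa fit is linear and fast, but on short, noisy arcs (as in a single-view capture) it tends to underestimate the radius, so a geometric fit could be used to refine it.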
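To see why measurements from different perspectives can help when a trunk is not cylindrical (the Non-cylindrical trunk point), consider an elliptical cross-section with semi-axes a and b. Under an orthographic approximation, the apparent width seen from a viewing direction at angle φ to the major axis is 2·sqrt(a² sin²φ + b² cos²φ), which ranges from 2b to 2a, so a single shot can land anywhere in that interval. The sketch below, with hypothetical function names and an idealized elliptical shape that ignores fluting and perspective, averages widths over several viewing angles.

```python
import numpy as np


def apparent_width(a, b, phi):
    """Orthographic silhouette width of an ellipse with semi-axes a (major) and b
    (minor), viewed along a direction at angle phi (radians) to the major axis."""
    phi = np.asarray(phi, dtype=float)
    return 2.0 * np.sqrt((a * np.sin(phi)) ** 2 + (b * np.cos(phi)) ** 2)


def mean_width(a, b, phis):
    """Average of the single-view widths over the given viewing angles."""
    return float(np.mean(apparent_width(a, b, phis)))
```

With two perpendicular views the average is a + b, the mean of the major and minor diameters, which mirrors the common field practice of averaging two perpendicular caliper readings. With many evenly spaced views the average approaches perimeter / π (Cauchy's formula for convex shapes), which is the diameter a girth tape would report.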

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DBH | Diameter at breast height |
| AE | Absolute error |
| MAE | Mean absolute error |
| RMSE | Root mean square error |
| LUT | Lookup table |
| LiDAR | Light Detection and Ranging |
| RGB | Red, green and blue |

References

1. West, P.W. Tree and Forest Measurement; Springer: Berlin/Heidelberg, Germany, 2009; Volume 20.

2. Shao, T.; Qu, Y.; Du, J. A low-cost integrated sensor for measuring tree diameter at breast height (DBH). Comput. Electron. Agric. 2022, 199, 107140.

3. Clark, N.A.; Wynne, R.; Schmoldt, D.; Winn, M. An assessment of the utility of a non-metric digital camera for measuring standing trees. Comput. Electron. Agric. 2000, 28, 151–169.

4. Marzulli, M.I.; Raumonen, P.; Greco, R.; Persia, M.; Tartarino, P. Estimating tree stem diameters and volume from smartphone photogrammetric point clouds. For. Int. J. For. Res. 2020, 93, 411–429.

5. Mokroš, M.; Liang, X.; Surový, P.; Valent, P.; Čerňava, J.; Chudý, F.; Tunák, D.; Saloň, Š.; Merganič, J. Evaluation of close-range photogrammetry image collection methods for estimating tree diameters. ISPRS Int. J. Geo-Inf. 2018, 7, 93.

6. Piermattei, L.; Karel, W.; Wang, D.; Wieser, M.; Mokroš, M.; Surový, P.; Koreň, M.; Tomaštík, J.; Pfeifer, N.; Hollaus, M. Terrestrial structure from motion photogrammetry for deriving forest inventory data. Remote Sens. 2019, 11, 950.

7. Liang, X.; Jaakkola, A.; Wang, Y.; Hyyppä, J.; Honkavaara, E.; Liu, J.; Kaartinen, H. The use of a hand-held camera for individual tree 3D mapping in forest sample plots. Remote Sens. 2014, 6, 6587–6603.

8. Roberts, J.; Koeser, A.; Abd-Elrahman, A.; Wilkinson, B.; Hansen, G.; Landry, S.; Perez, A. Mobile terrestrial photogrammetry for street tree mapping and measurements. Forests 2019, 10, 701.

9. Surový, P.; Yoshimoto, A.; Panagiotidis, D. Accuracy of reconstruction of the tree stem surface using terrestrial close-range photogrammetry. Remote Sens. 2016, 8, 123.

10. Vastaranta, M.; González Latorre, E.; Luoma, V.; Saarinen, N.; Holopainen, M.; Hyyppä, J. Evaluation of a smartphone app for forest sample plot measurements. Forests 2015, 6, 1179–1194.

11. Brovkina, O.; Navrátilová, B.; Novotný, J.; Albert, J.; Slezák, L.; Cienciala, E. Influences of vegetation, model, and data parameters on forest aboveground biomass assessment using an area-based approach. Ecol. Inform. 2022, 70, 101754.

12. Kissling, W.D.; Shi, Y.; Koma, Z.; Meijer, C.; Ku, O.; Nattino, F.; Seijmonsbergen, A.C.; Grootes, M.W. Laserfarm–A high-throughput workflow for generating geospatial data products of ecosystem structure from airborne laser scanning point clouds. Ecol. Inform. 2022, 72, 101836.

13. Zhang, L.; Grift, T.E. A monocular vision-based diameter sensor for Miscanthus giganteus. Biosyst. Eng. 2012, 111, 298–304.

14. Giannetti, F.; Puletti, N.; Quatrini, V.; Travaglini, D.; Bottalico, F.; Corona, P.; Chirici, G. Integrating terrestrial and airborne laser scanning for the assessment of single-tree attributes in Mediterranean forest stands. Eur. J. Remote Sens. 2018, 51, 795–807.

15. Gonzalez de Tanago, J.; Lau, A.; Bartholomeus, H.; Herold, M.; Avitabile, V.; Raumonen, P.; Martius, C.; Goodman, R.C.; Disney, M.; Manuri, S.; et al. Estimation of above-ground biomass of large tropical trees with terrestrial LiDAR. Methods Ecol. Evol. 2018, 9, 223–234.

16. Brede, B.; Calders, K.; Lau, A.; Raumonen, P.; Bartholomeus, H.M.; Herold, M.; Kooistra, L. Non-destructive tree volume estimation through quantitative structure modelling: Comparing UAV laser scanning with terrestrial LIDAR. Remote Sens. Environ. 2019, 233, 111355.

17. Indirabai, I.; Nair, M.H.; Jaishanker, R.N.; Nidamanuri, R.R. Terrestrial laser scanner based 3D reconstruction of trees and retrieval of leaf area index in a forest environment. Ecol. Inform. 2019, 53, 100986.

18. Liang, X.; Hyyppä, J.; Kaartinen, H.; Lehtomäki, M.; Pyörälä, J.; Pfeifer, N.; Holopainen, M.; Brolly, G.; Francesco, P.; Hackenberg, J.; et al. International benchmarking of terrestrial laser scanning approaches for forest inventories. ISPRS J. Photogramm. Remote Sens. 2018, 144, 137–179.

19. Cabo, C.; Del Pozo, S.; Rodríguez-Gonzálvez, P.; Ordóñez, C.; González-Aguilera, D. Comparing terrestrial laser scanning (TLS) and wearable laser scanning (WLS) for individual tree modeling at plot level. Remote Sens. 2018, 10, 540.

20. Oveland, I.; Hauglin, M.; Giannetti, F.; Schipper Kjørsvik, N.; Gobakken, T. Comparing three different ground based laser scanning methods for tree stem detection. Remote Sens. 2018, 10, 538.

21. Hyyppä, E.; Yu, X.; Kaartinen, H.; Hakala, T.; Kukko, A.; Vastaranta, M.; Hyyppä, J. Comparison of backpack, handheld, under-canopy UAV, and above-canopy UAV laser scanning for field reference data collection in boreal forests. Remote Sens. 2020, 12, 3327.

22. Gülci, S.; Yurtseven, H.; Akay, A.O.; Akgul, M. Measuring tree diameter using a LiDAR-equipped smartphone: A comparison of smartphone- and caliper-based DBH. Environ. Monit. Assess. 2023, 195, 678.

23. Magnuson, R.; Erfanifard, Y.; Kulicki, M.; Gasica, T.A.; Tangwa, E.; Mielcarek, M.; Stereńczak, K. Mobile Devices in Forest Mensuration: A Review of Technologies and Methods in Single Tree Measurements. Remote Sens. 2024, 16, 3570.

24. Holcomb, A.; Tong, L.; Keshav, S. Robust single-image tree diameter estimation with mobile phones. Remote Sens. 2023, 15, 772.

25. Ye, W.; Qian, C.; Tang, J.; Liu, H.; Fan, X.; Liang, X.; Zhang, H. Improved 3D stem mapping method and elliptic hypothesis-based DBH estimation from terrestrial laser scanning data. Remote Sens. 2020, 12, 352.

26. Olofsson, K.; Holmgren, J.; Olsson, H. Tree stem and height measurements using terrestrial laser scanning and the RANSAC algorithm. Remote Sens. 2014, 6, 4323–4344.

27. Pitkänen, T.P.; Raumonen, P.; Kangas, A. Measuring stem diameters with TLS in boreal forests by complementary fitting procedure. ISPRS J. Photogramm. Remote Sens. 2019, 147, 294–306.

28. Cabo, C.; Ordóñez, C.; López-Sánchez, C.A.; Armesto, J. Automatic dendrometry: Tree detection, tree height and diameter estimation using terrestrial laser scanning. Int. J. Appl. Earth Obs. Geoinf. 2018, 69, 164–174.

29. Huang, H.; Li, Z.; Gong, P.; Cheng, X.; Clinton, N.; Cao, C.; Ni, W.; Wang, L. Automated methods for measuring DBH and tree heights with a commercial scanning lidar. Photogramm. Eng. Remote Sens. 2011, 77, 219–227.

30. Liu, C.; Xing, Y.; Duanmu, J.; Tian, X. Evaluating different methods for estimating diameter at breast height from terrestrial laser scanning. Remote Sens. 2018, 10, 513.

31. Wieser, M.; Mandlburger, G.; Hollaus, M.; Otepka, J.; Glira, P.; Pfeifer, N. A case study of UAS borne laser scanning for measurement of tree stem diameter. Remote Sens. 2017, 9, 1154.

32. Zhang, W.; Wan, P.; Wang, T.; Cai, S.; Chen, Y.; Jin, X.; Yan, G. A novel approach for the detection of standing tree stems from plot-level terrestrial laser scanning data. Remote Sens. 2019, 11, 211.

33. Liu, G.; Wang, J.; Dong, P.; Chen, Y.; Liu, Z. Estimating individual tree height and diameter at breast height (DBH) from terrestrial laser scanning (TLS) data at plot level. Forests 2018, 9, 398.

34. Wu, F.; Wu, B.; Zhao, D. Real-time measurement of individual tree structure parameters based on augmented reality in an urban environment. Ecol. Inform. 2023, 77, 102207.

35. Wu, X.; Zhou, S.; Xu, A.; Chen, B. Passive measurement method of tree diameter at breast height using a smartphone. Comput. Electron. Agric. 2019, 163, 104875.

36. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

37. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment Anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 4015–4026.

38. Fish, J.; Belytschko, T. A First Course in Finite Elements; Wiley: New York, NY, USA, 2007; Volume 1.

| (cm) | 50 | 150 | 250 | 350 |
|---|---|---|---|---|
| (cm) | | | | |
| 10 | 10.69 | 10.21 | 10.11 | 10.07 |
| 30 | 35.73 | 32.22 | 31.37 | 30.99 |
| 50 | 63.43 | 55.93 | 53.75 | 52.75 |
| 70 | 92.33 | 81.04 | 77.16 | 75.29 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xiang, W.; Fei, S.; Zhang, S. Single Shot High-Accuracy Diameter at Breast Height Measurement with Smartphone Embedded Sensors. Sensors 2025, 25, 5060. https://doi.org/10.3390/s25165060

Xiang W, Fei S, Zhang S. Single Shot High-Accuracy Diameter at Breast Height Measurement with Smartphone Embedded Sensors. Sensors. 2025; 25(16):5060. https://doi.org/10.3390/s25165060

Chicago/Turabian Style: Xiang, Wang, Songlin Fei, and Song Zhang. 2025. "Single Shot High-Accuracy Diameter at Breast Height Measurement with Smartphone Embedded Sensors" Sensors 25, no. 16: 5060. https://doi.org/10.3390/s25165060

APA Style: Xiang, W., Fei, S., & Zhang, S. (2025). Single Shot High-Accuracy Diameter at Breast Height Measurement with Smartphone Embedded Sensors. Sensors, 25(16), 5060. https://doi.org/10.3390/s25165060