Combined Particle Swarm Optimization and Reinforcement Learning for Water Level Control in a Reservoir

Abstract

1. Introduction

1.1. Impact of Floods at International Level

- International flood impacts: Floods cause thousands of deaths annually and force millions of people from their homes. Regions with poorly developed infrastructure, such as Southeast Asia and sub-Saharan Africa, are particularly vulnerable.

- Infrastructure destruction: Roads, bridges, buildings, and other essential structures are frequently destroyed, which can paralyze transportation, trade, and public services. Reconstruction costs are often high.

- Economic impact: Flooding damages agriculture through the loss of crops and fertile land, which directly threatens food security. It also disrupts industrial activities and tourism, reducing national GDP in many countries.

- Public health issues: Flooding can contribute to the spread of communicable diseases, such as malaria, dengue fever, and cholera, due to contaminated water and lack of hygiene. Access to safe drinking water and food also becomes a major challenge.

- Environmental degradation: Natural ecosystems are affected, and wildlife habitats can be destroyed. Waste and chemical pollution during floods have long-lasting effects on the environment.

- Role of climate change: Rising sea levels and extreme weather events, such as torrential rain, have made flooding more frequent and severe. This particularly affects coastal communities and cities along large rivers.

1.2. The Role of Dams in Flood Prevention

1.3. Related Literature on Flow Monitoring and Prediction

1.4. A Comparison Between Different Similar Control Methods and the Proposed PSO + RL Method

1.4.1. Output Feedback Based Adaptive Optimal Output Regulation for Continuous-Time Strict-Feedback Nonlinear Systems

- Does not require measurement of internal states: it relies only on observable outputs.

- Adaptive: the lake is a system with high uncertainties (meteorological variations, tributary flows, evaporation). The method adapts in real time to changes, even if the exact model of the system is not completely known.

- Optimal: the method optimizes performance against a reference rather than only stabilizing the system. It does not merely keep the level within a range but makes it follow an optimal trajectory (e.g., maintaining an optimal average level for flood safety).

- Suitable for strict-feedback systems: the output depends on a series of subsystems linked in a chain.

- Guaranteed stability (Lyapunov-based): the controller is designed to be mathematically stable using Lyapunov theory, so the water level can be guaranteed not to become uncontrollable, even under perturbations.

- Requires fine and sensitive tuning of the parameters: adaptation parameters, feedback gains, and Lyapunov functions must be tuned carefully. Inappropriate values may cause oscillations, sluggish behavior, or even temporary instability.

- Higher complexity than classical control (e.g., PID).

- Requires an adaptive observer in full versions, which may increase computational cost.

- Possible slow convergence: because it is adaptive, the method has a "learning" or adjustment phase. In applications with rapid variations (e.g., sudden torrential rain), it may adapt too slowly to react in time.

Comparison Between Output Feedback Adaptive and PSO + RL Methods

Conclusion

1.4.2. Model-Free Output Feedback Optimal Tracking Control for Two-Dimensional Batch Processes

- The input is H(t), the actual water level measured by the sensor;

- The output is Dv(t), the gate opening;

- The gate is controlled so that the water level H(t) tracks the desired value Hd(t).

Advantages and Disadvantages of the Method

- No need for an explicit mathematical model: There is no need to identify the differential equations that describe the process. The system learns only from the data. It is the ideal method for complex or poorly modeled processes.

- Based on real-time monitored data: The method learns from input-output data collected in real time.

- Feedback only on output: It does not require measuring all internal states of the system but only the observable outputs.

- Optimal tracking: It allows optimal control over a desired value (e.g., maintaining the level), not just stable regulation.

- Suitable for 2D processes: The method extends to systems that evolve along two dimensions, for example, time and level.

- Flexible and adaptable to changes: It automatically adapts if conditions change (e.g., lake level rises due to rain). Other perturbations (precipitation) can be added to the state data without changing the fundamentals of the method.

- Requires large amounts of data: The quality of control depends on the volume and diversity of historical data available. If the data is incomplete, performance decreases.

- Computational complexity: Learning algorithms (especially in 2D and with Q functions) can require large resources (memory, processing time), especially in real-time applications.

- No formal guarantees of stability: In many cases, control stability cannot be guaranteed theoretically, especially in online learning. Validation through simulation or experimental testing is required.

- Difficulty in tuning: The choice of RL parameters (learning rate, exploration policy, discount factor) strongly influences performance and may require expertise.

- Risk of overfitting: If historical data does not cover enough variation, the learned policy may perform poorly in new conditions.

- Low interpretability: Compared to classical control (PID), the learned policy is often difficult to understand or explain to human operators.

Control Method Comparison Between This Method and the Method Presented in the Paper

Conclusions

- It learns control policies optimally from interaction with the environment;

- It can adapt the control strategy to unpredictable conditions (drought, torrential rains);

- Optimization with PSO can improve the parameters of the RL network for increased performance.

- It does not require the system model;

- It is directly applicable to the output feedback;

- It is stable, easier to implement, and can work well in less variable environments.

1.5. The Need to Optimize Control with Intelligent Algorithms

1.6. Practical Application of the Paper

1.7. Original Contributions of the Study

1.7.1. The Difference Between What Exists and the Study Presented in the Paper

- It proposes a hybrid combination of PSO and RL, in which PSO is used as a pre-training or initial optimization method for RL, which is not frequently encountered in the dam control literature or in water control applications;

- Integration with real SCADA systems and physical testing, not just in simulation, provides added practical value.

1.7.2. The Difference Between the Bibliographic References and the Study Presented in the Paper

- Ref. [16] uses RL for water level control but does not integrate PSO;

- Ref. [18] optimizes a PID controller with PSO for flow control, but without real-time adaptation through RL;

- Ref. [17] develops an intelligent RL-based control model for hydropower plants, but the lack of physical testing limits its applicability.

1.7.3. The Main Difficulty of the Proposed Method

- PSO, which searches globally and offline;

- RL, which learns locally, online, and can have unstable behaviors if not well tuned.

1.7.4. Clarity of Contribution

1.8. Real-World Applications

- Flood prevention in urban areas:

- Efficient hydroelectric power generation:

- Smart agricultural irrigation:

- SCADA systems for smart dams:

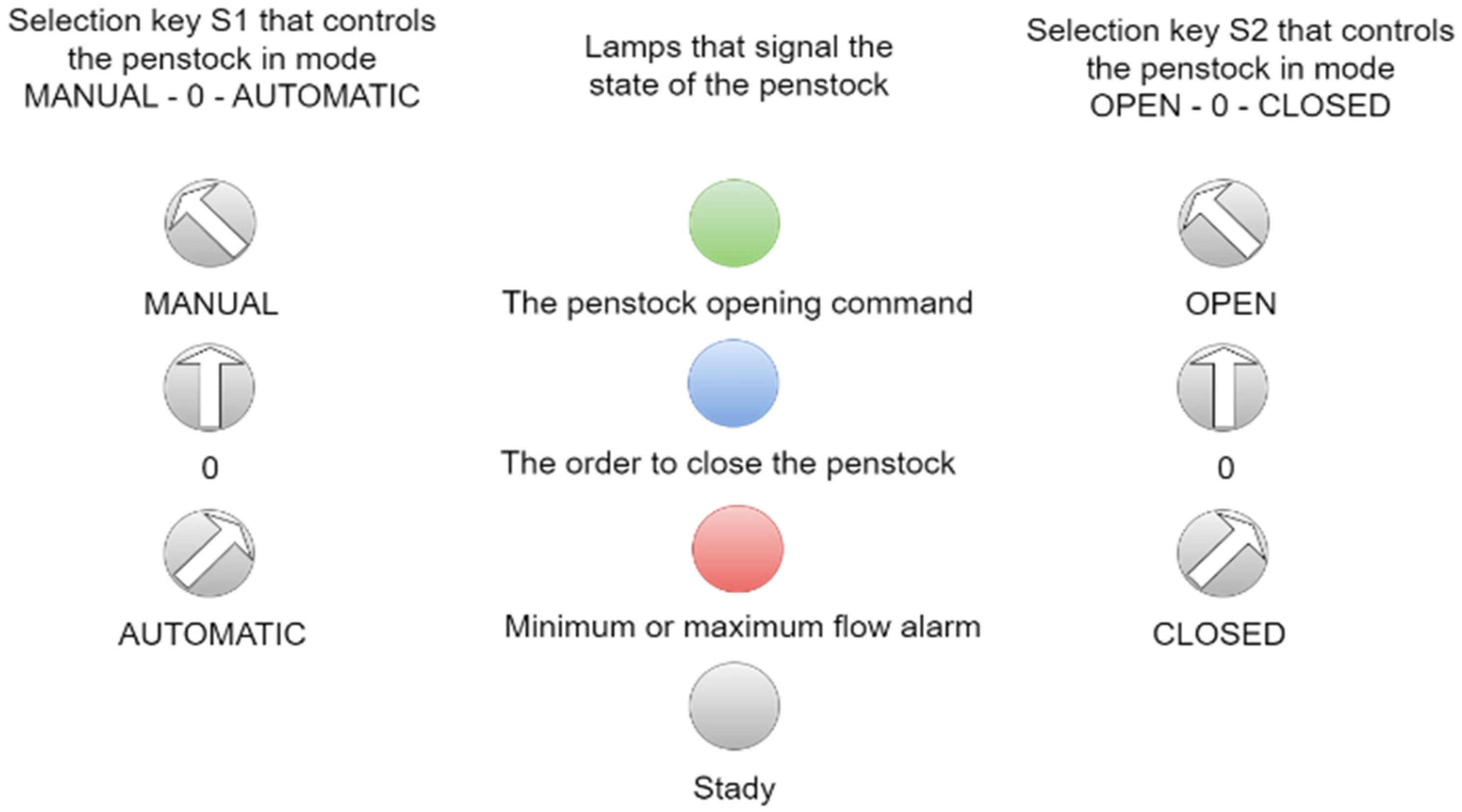

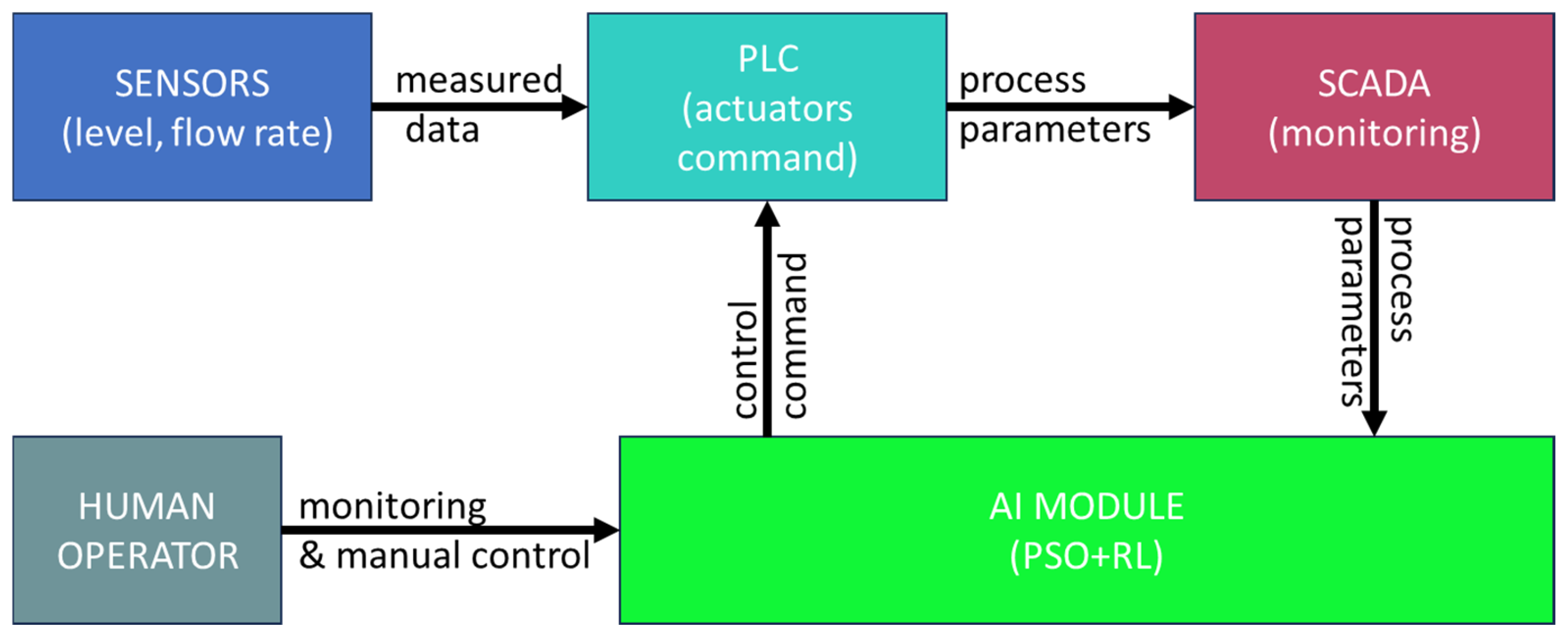

2. Automation Application

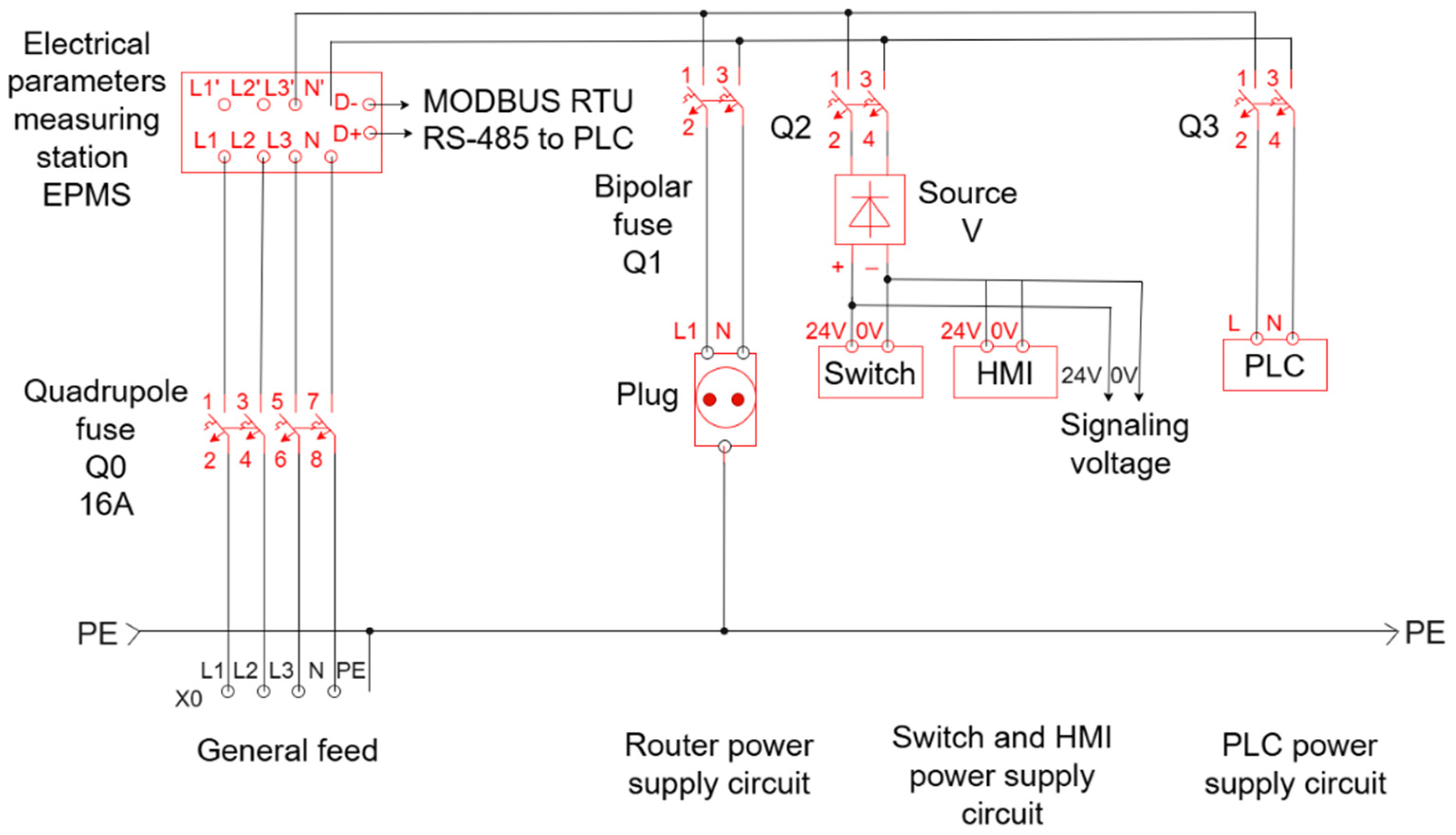

2.1. The Electrical Project

- Power supply of automation equipment (PLC, sensors, actuators);

- Electrical protection through automatic fuses and contactors;

- Integration of input/output modules for data acquisition and device control;

- Interconnection with the SCADA system via Ethernet/serial network.

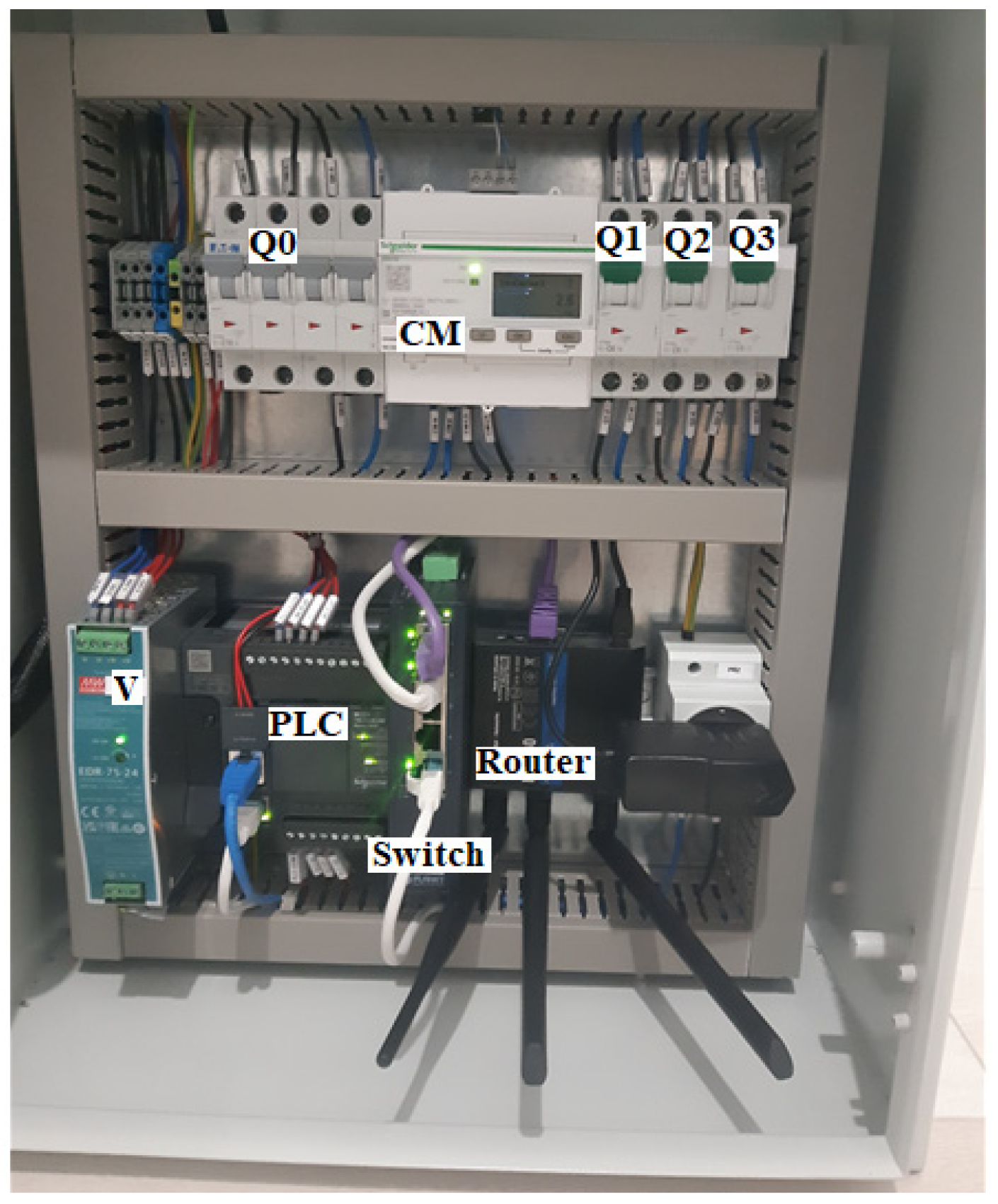

2.2. The Components Inside the Automation Panel

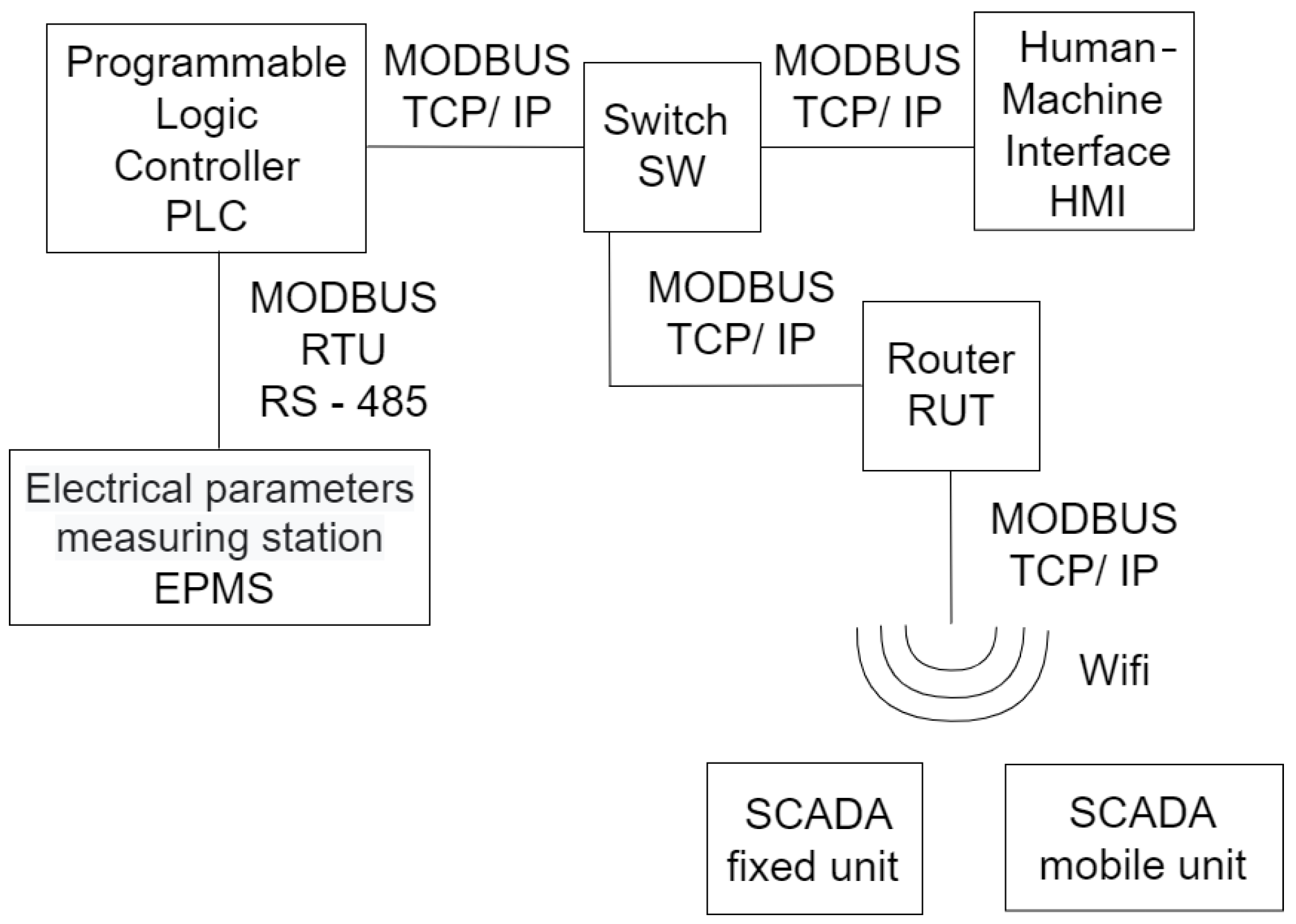

2.3. SCADA Architecture

- Apure KS-SMY2 Water Hydrostatic Level Transmitter (GL Environmental Technology Co., Ltd., Shanghai, China), 4–20 mA, 0–10 V.

- Opening and closing the segment gate with the flap are performed by means of left and right chain mechanisms. Each mechanism is driven by a motor controlled by a frequency converter.

3. Making the Software for the Automation Application

3.1. Realization of the Software for the Programmable Automaton

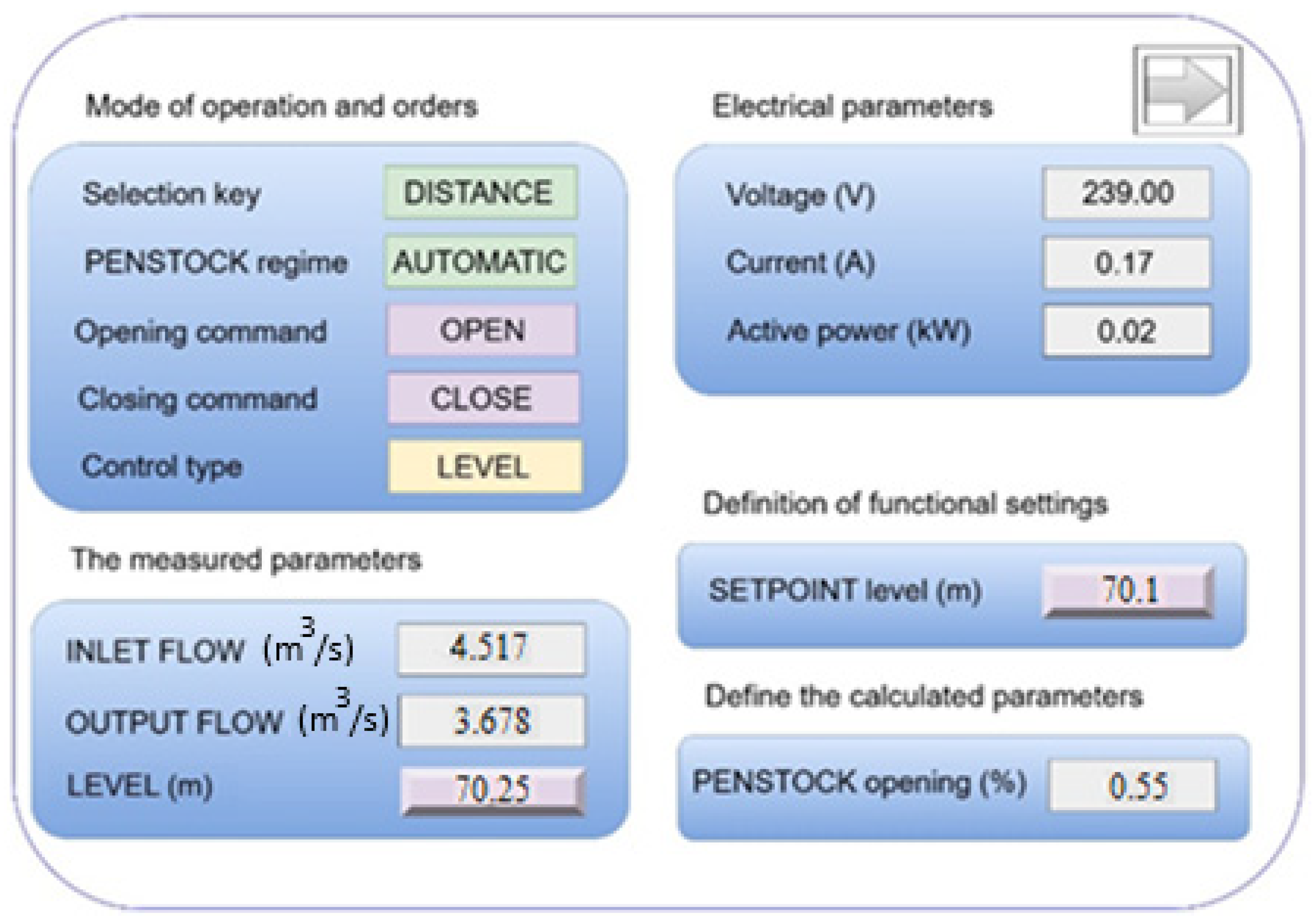

3.2. Realization of Monitoring, Control, and Data Acquisition System Software (SCADA)

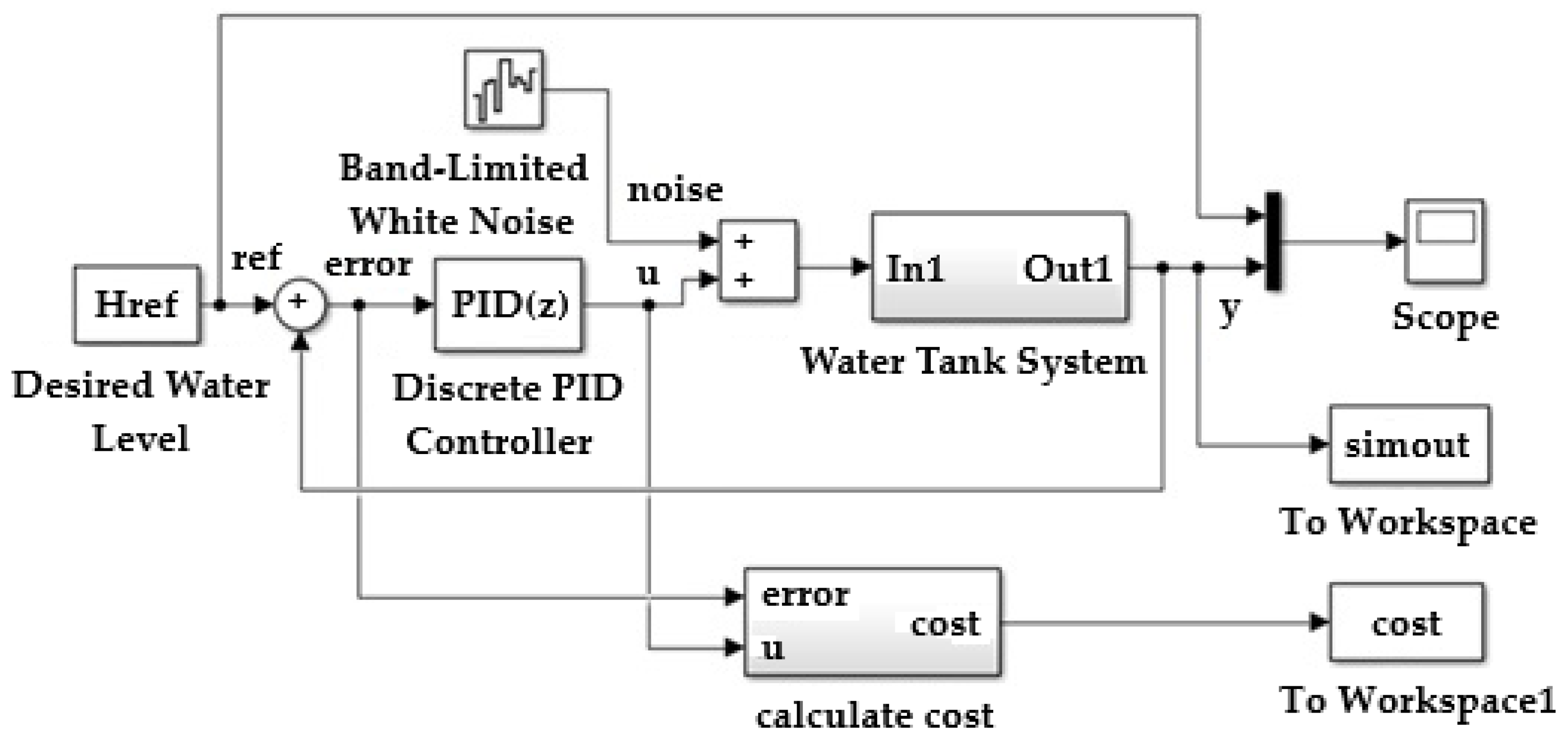

4. Optimization of PID Controller Parameters

The Need to Optimize PID Parameters

- Weather conditions (precipitation, snowmelt) can vary suddenly;

- The inlet flow is difficult to control;

- Reaction processes (opening of valves) have delays and nonlinearities.

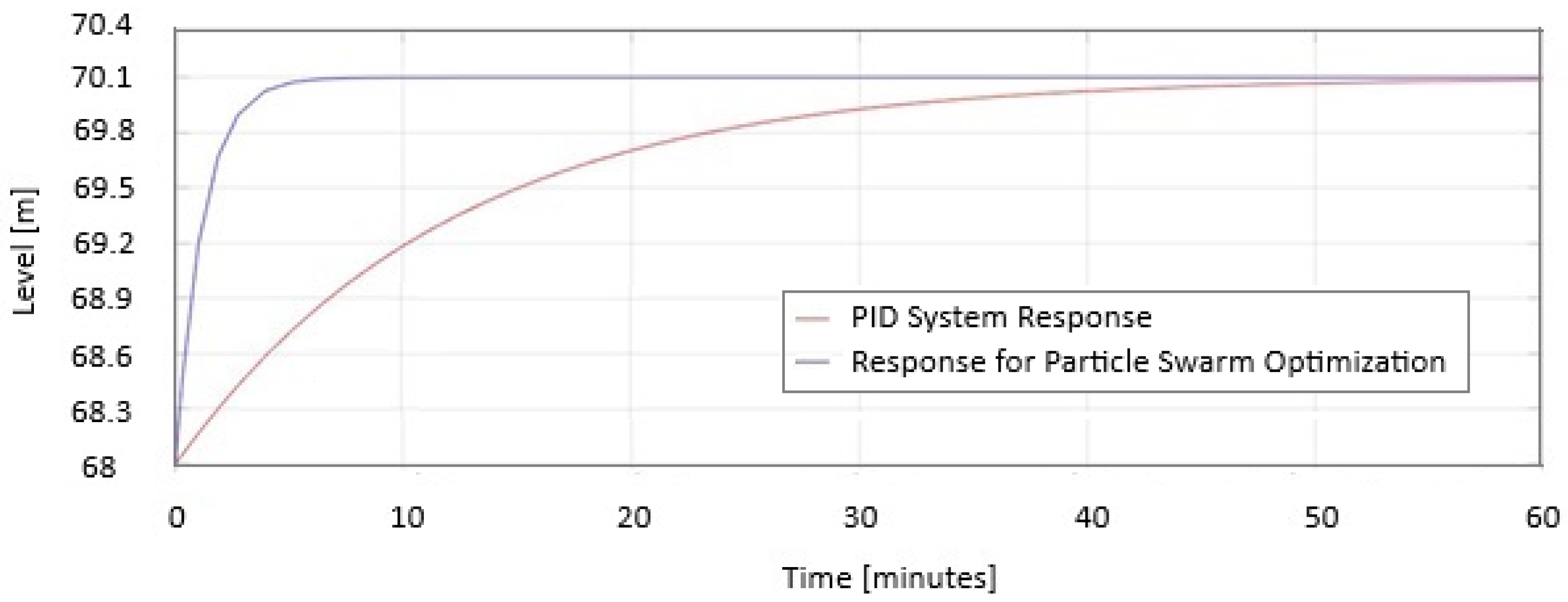

5. The Proposed Method of Optimizing the Parameters

5.1. Comparison Between the Optimization Methods of the PID Controller

5.2. Optimal Tuning of PID Controller Using Particle Swarm Optimization

- represents the rate of variation in the level at time t;

- is the time-varying level of the lake;

- is the surface of the reservoir;

- is the section constant of the beam (its width);

- is the opening of the sluice;

- is the operating speed of the sluice;

- represents the measured input flow.

- Identifying the equilibrium point;

- Linearizing the equation;

- Applying the Laplace transform;

- Determining the transfer function;

- Identifying the equilibrium point.

- Linearizing the equation

- = 10,000 ;

- = 1 [m];

- = 9.81 ;

- represents the initial level of the lake = 70 [m];

- represents the initial opening of the sluice = 0.05 [m].

- Kp = 10.1;

- Ki = 7.56;

- Kd = 0.14.

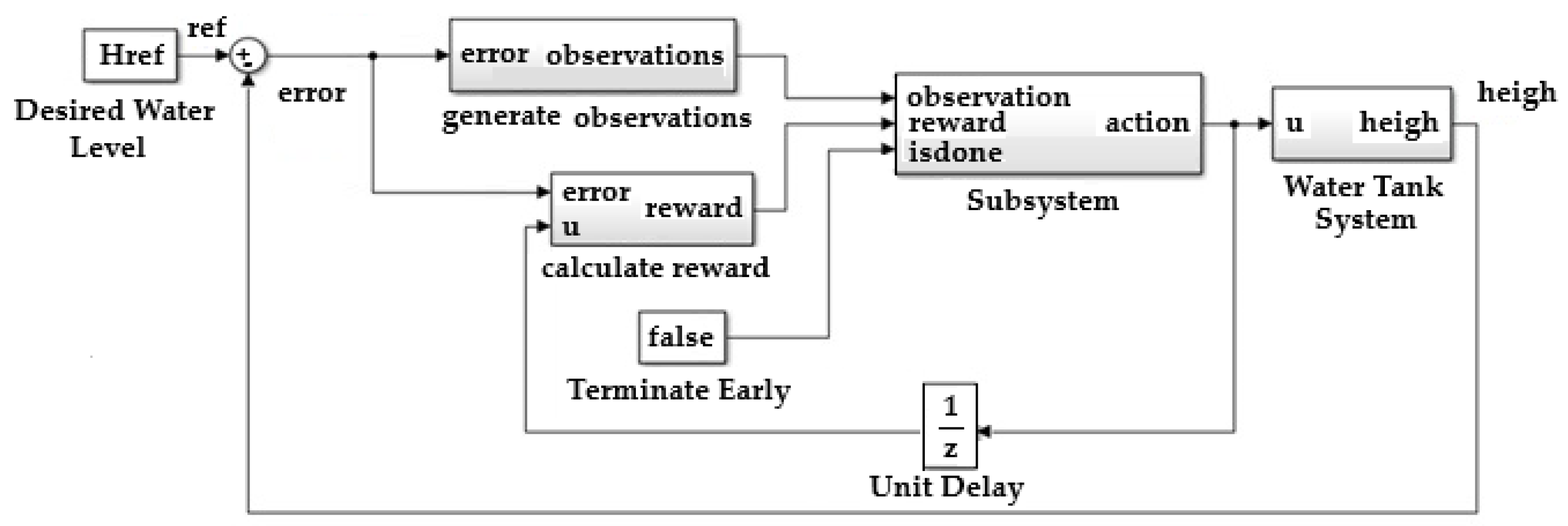

5.3. Tuning the PID Controller Using Reinforcement Learning in MATLAB

- An agent reinforcement learning block was inserted;

- The wave vector is created.

- The reward function for the reinforcement learning agent is defined as the negative of the Linear Quadratic Gaussian cost, as follows (8):

- is the height of water in the tank;

- is the water reference height.

- Creation of reinforcement learning water storage environment function: Randomize the reference signal and initial reservoir water height at the beginning of each episode.

- Linearization function and calculation of stability margins of the SISO water storage system.

- Simple actor and critic neural network creation functions using TensorFlow or PyTorch.
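
The reward and episode-reset steps listed above can be illustrated with a minimal Python sketch. It is not the paper's equation (8): the quadratic form, the effort weight `EFFORT_WEIGHT`, the control input `u`, and the height range used for randomization are all assumptions chosen for illustration, and the function names are hypothetical.

```python
import random

# Hypothetical weight on control effort (assumption, not taken from the paper).
EFFORT_WEIGHT = 0.01


def lqg_style_reward(h, h_ref, u, effort_weight=EFFORT_WEIGHT):
    """Negative quadratic cost: penalizes tracking error and control effort."""
    error = h_ref - h
    return -(error ** 2 + effort_weight * u ** 2)


def reset_episode(rng, h_range=(0.0, 20.0)):
    """Draw a random reference and a random initial water height for an episode.

    The range is an illustrative assumption, not a value from the paper.
    """
    h_ref = rng.uniform(*h_range)
    h0 = rng.uniform(*h_range)
    return h_ref, h0


rng = random.Random(42)           # fixed seed for repeatable episodes
h_ref, h0 = reset_episode(rng)
reward = lqg_style_reward(h=h0, h_ref=h_ref, u=0.0)
```

Because the reward is the negative of a cost, it is never positive, and it reaches zero only when the level matches the reference with no control effort.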

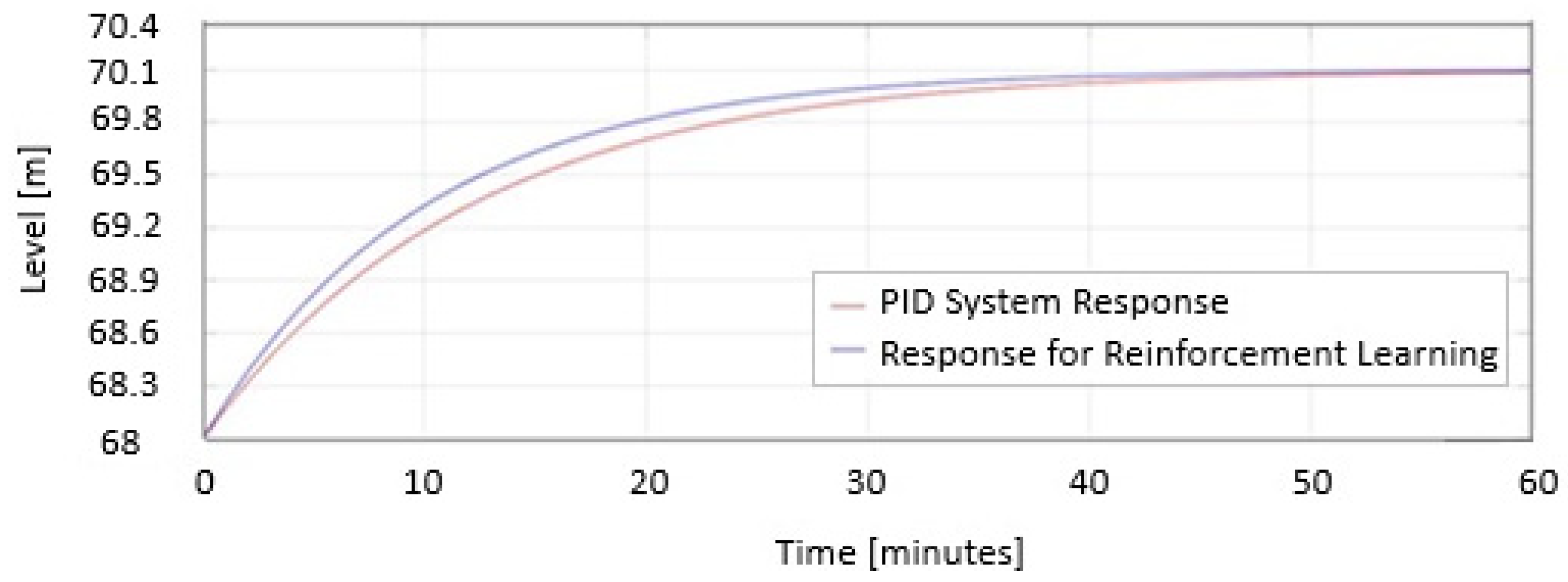

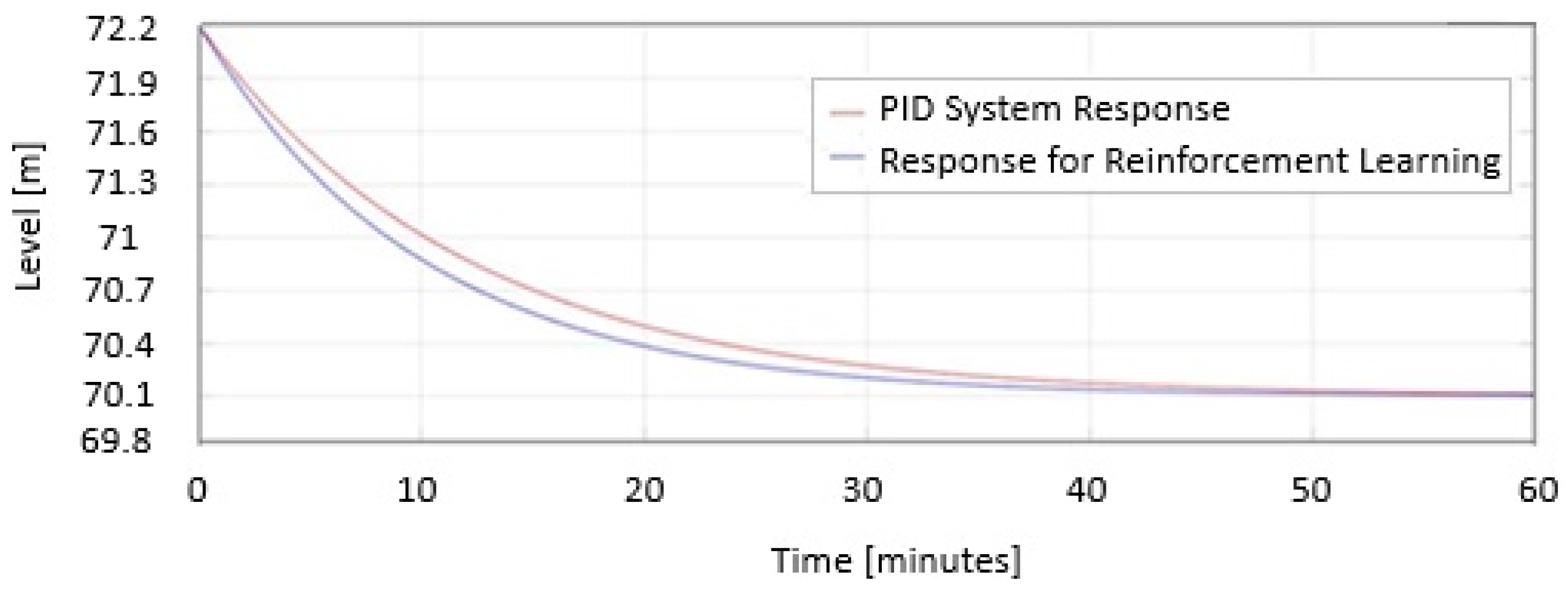

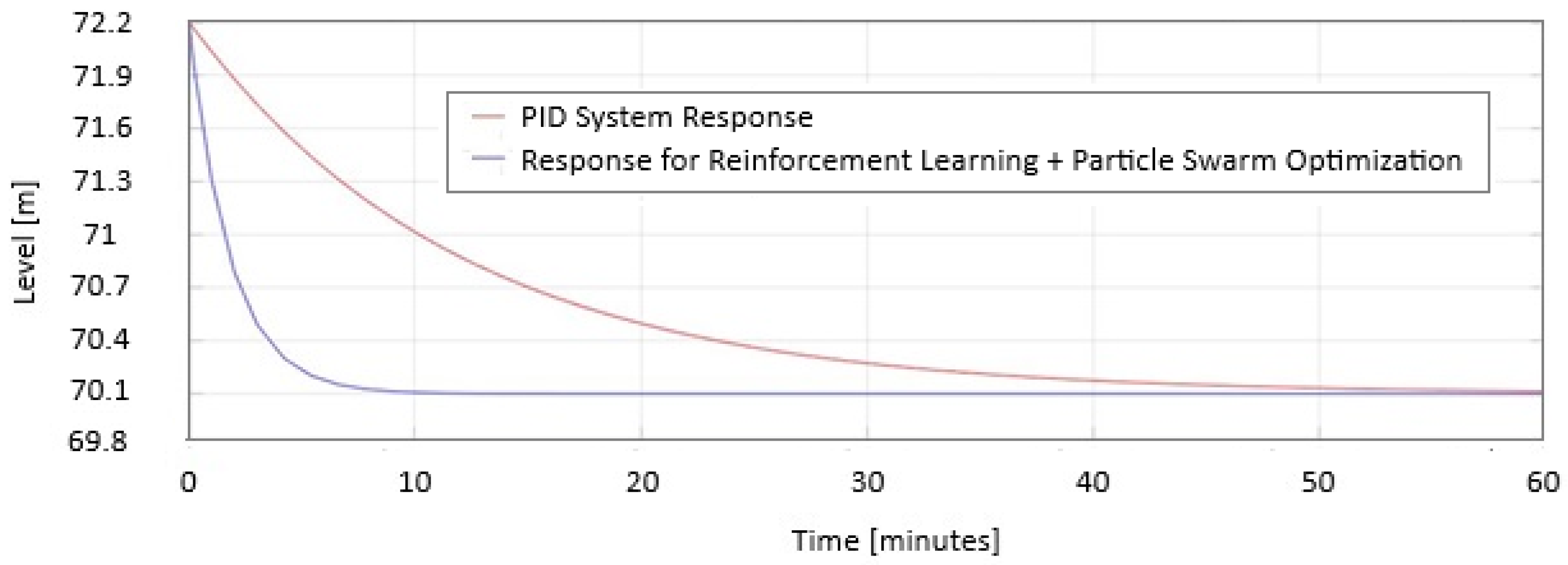

5.4. The Physical System Response

5.5. The Performance Evaluation of the Proposed PSO + RL System

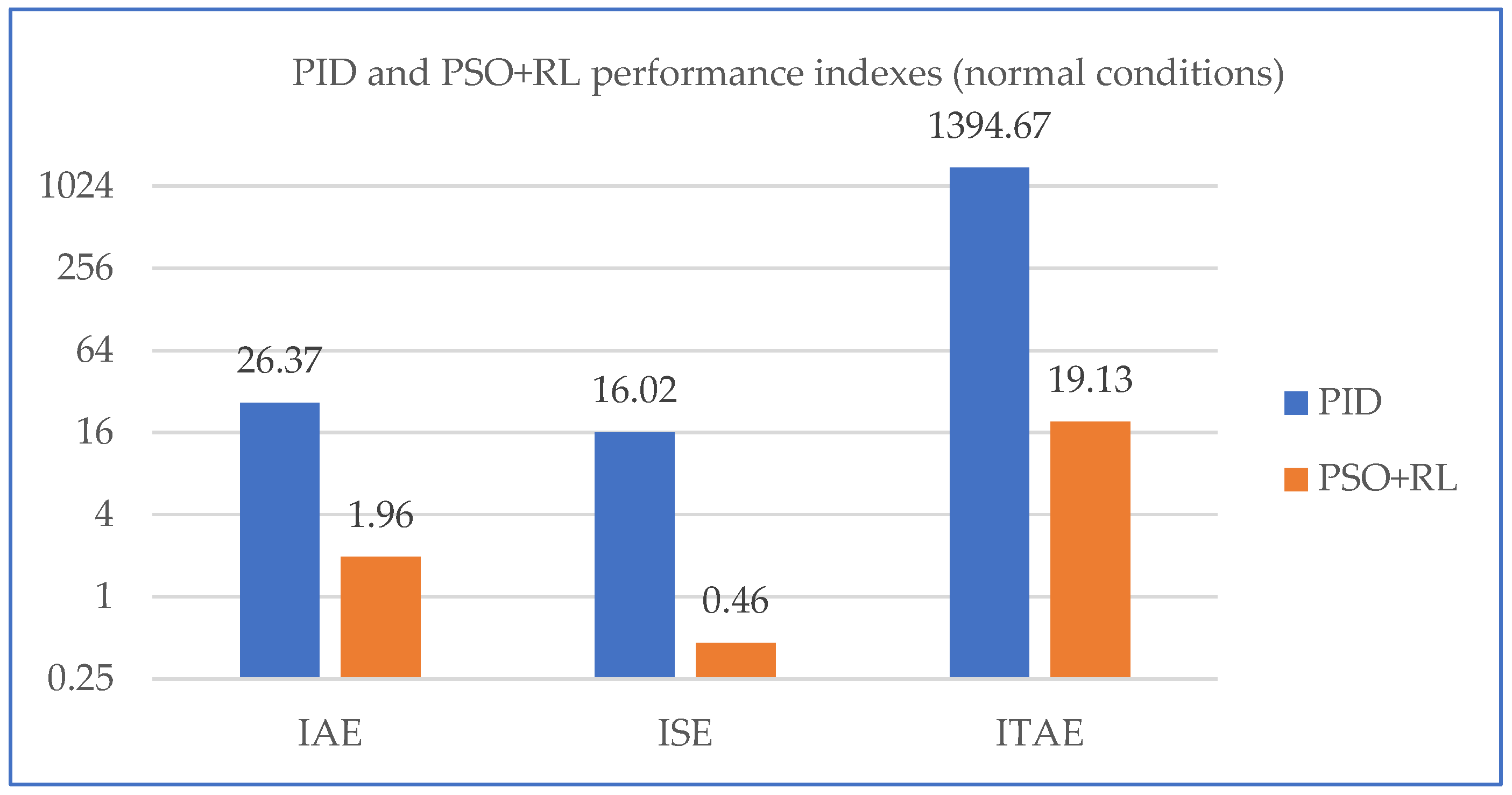

5.5.1. The Performance Evaluation Under Normal Conditions

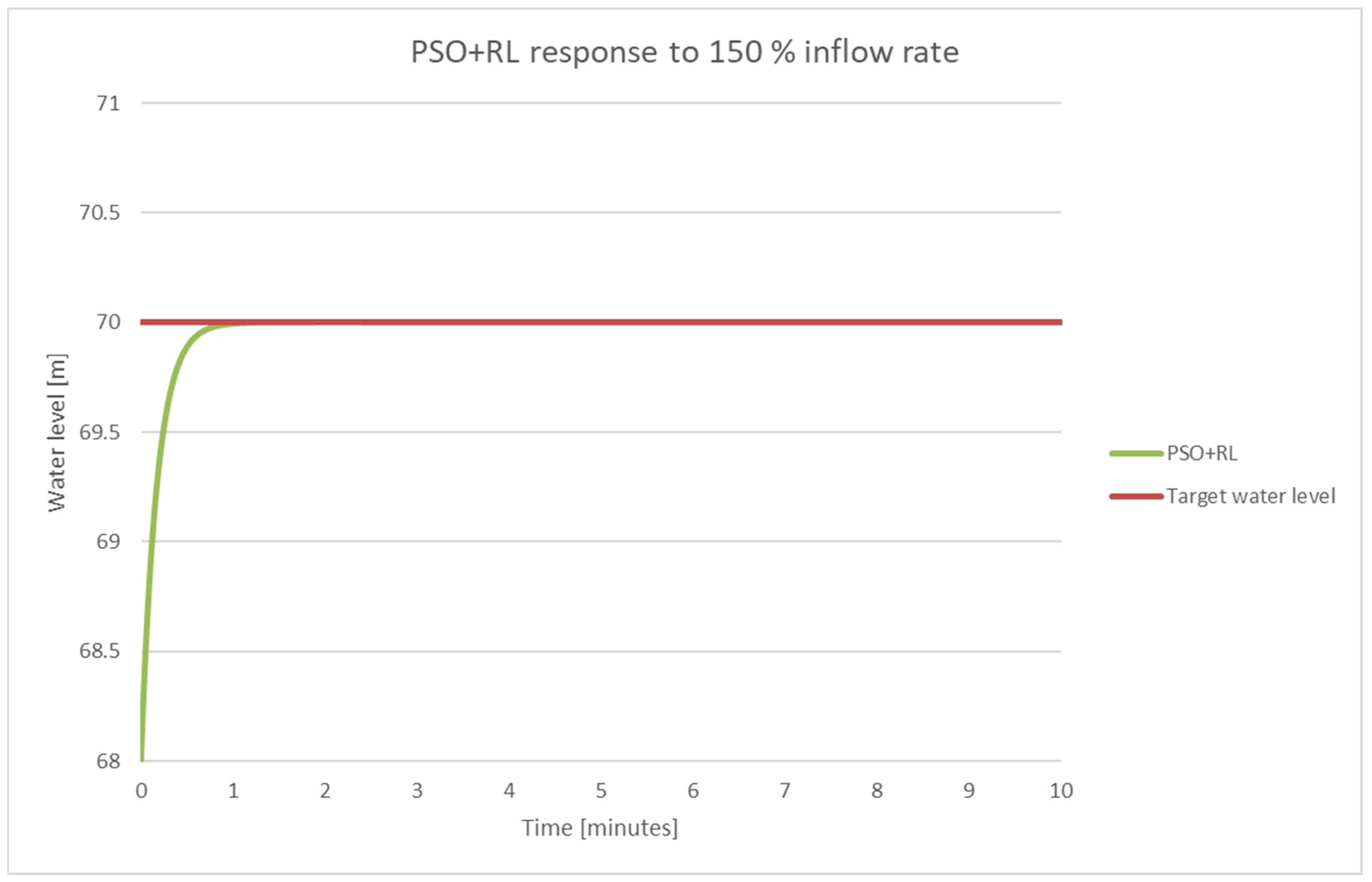

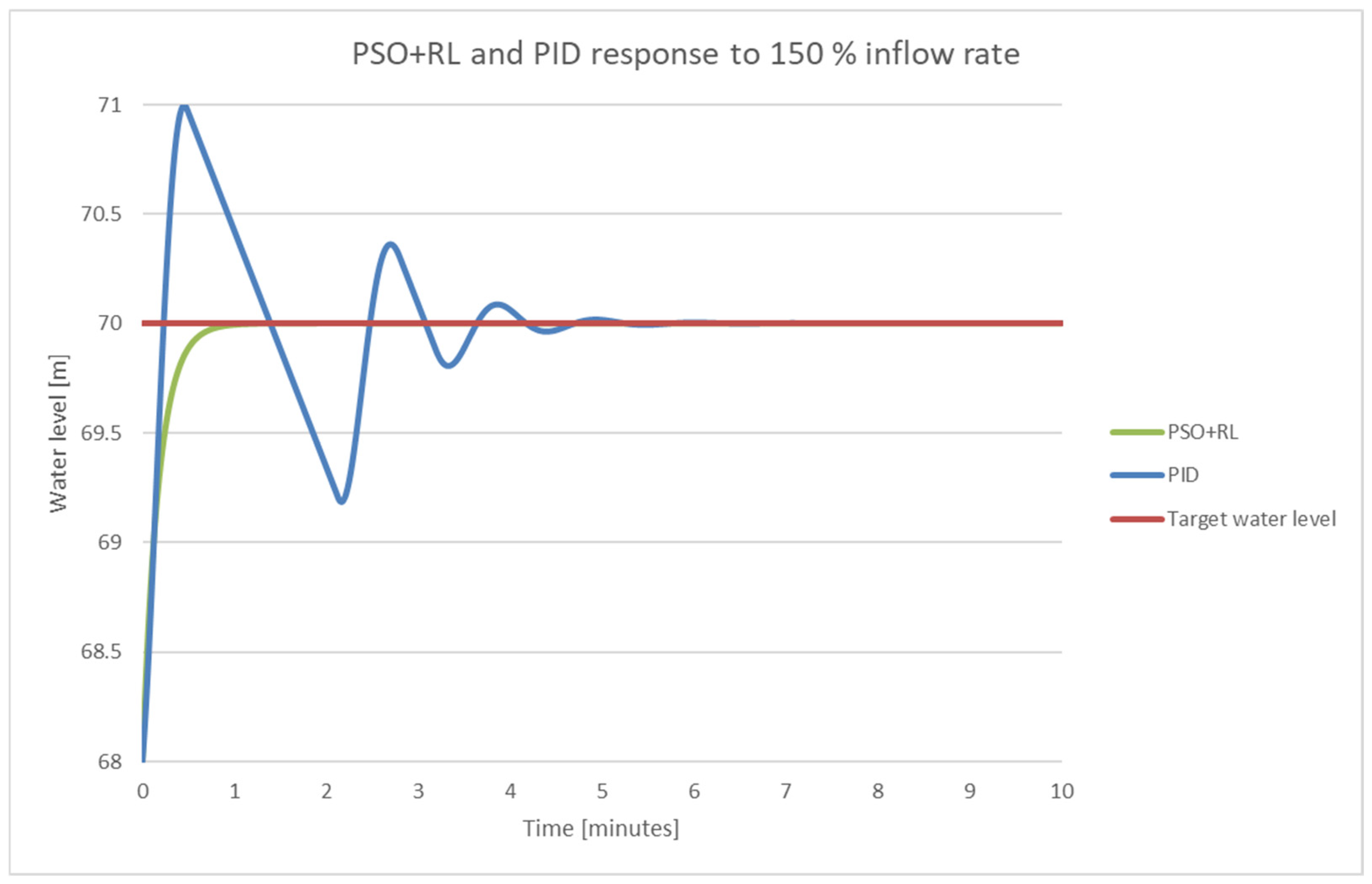

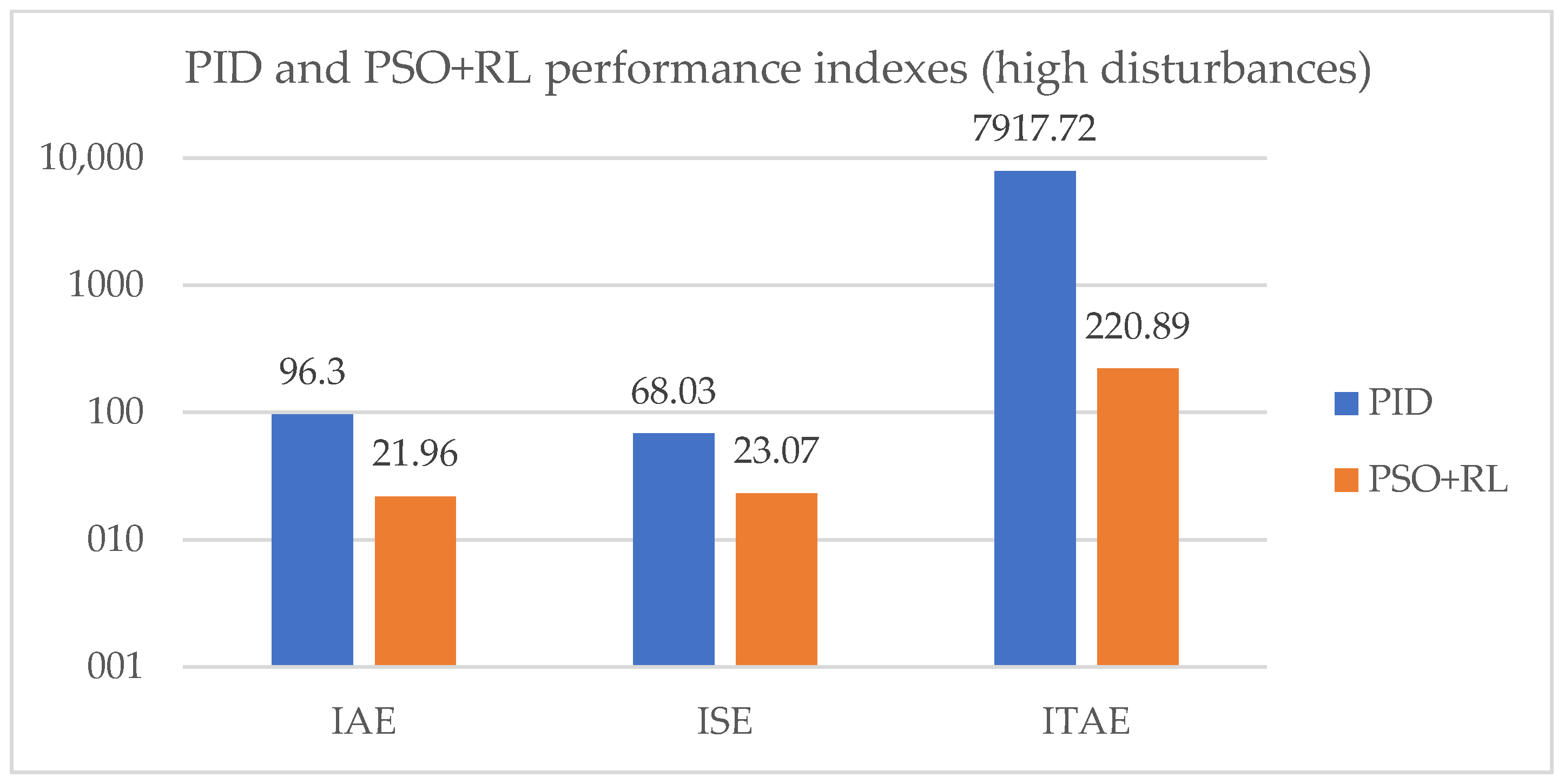

5.5.2. The Performance Evaluation Under High Disturbances

5.6. Robustness and Reproducibility Analysis

5.6.1. Robustness Evaluation

- Sensitivity to inflow rate variations

- Robustness to measurement noise

- Response under extreme disturbance

5.6.2. Reproducibility Evaluation

- Fixed random seed: All PSO and RL simulations were executed using a fixed random seed (np.random.seed(42)), ensuring identical initial conditions across independent runs.

- Multiple runs: The optimization was repeated in 50 independent runs. The mean and standard deviation of the main performance indexes were calculated.

- Full parameter disclosure: All PSO hyperparameters (number of particles = 20; inertia weight = 0.7; cognitive coefficient = 1.5; social coefficient = 1.5) and RL training parameters (actor learning rate = 0.01; critic learning rate = 0.01; batch size = 128; training episodes = 100) are reported.

- Code availability: The complete Python source code for PSO is provided in Appendix A.
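
To make the reported PSO settings concrete, the following is a minimal global-best PSO sketch that uses the hyperparameters listed above (20 particles, inertia weight 0.7, cognitive and social coefficients 1.5, 50 iterations, seed 42). It is not the Appendix A code. The cost function `toy_cost`, the target triple `TARGET`, and the search bounds are hypothetical stand-ins for a closed-loop simulation cost, and the standard-library `random.Random` replaces NumPy seeding to keep the example dependency-free.

```python
import random


def pso(objective, bounds, n_particles=20, n_iter=50,
        inertia=0.7, cognitive=1.5, social=1.5, seed=42):
    """Minimize `objective` over the box `bounds` with a basic global-best PSO."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]                 # personal best positions
    best_val = [objective(p) for p in pos]         # personal best costs
    g = min(range(n_particles), key=best_val.__getitem__)
    gbest_pos, gbest_val = best_pos[g][:], best_val[g]

    for _ in range(n_iter):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (inertia * vel[i][d]
                             + cognitive * r1 * (best_pos[i][d] - pos[i][d])
                             + social * r2 * (gbest_pos[d] - pos[i][d]))
                lo, hi = bounds[d]
                # Keep the particle inside the search box.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = objective(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < gbest_val:
                    gbest_val, gbest_pos = val, pos[i][:]
    return gbest_pos, gbest_val


# Hypothetical stand-in cost: squared distance to an arbitrary gain triple.
TARGET = (10.0, 7.5, 0.15)
BOUNDS = [(0.0, 20.0), (0.0, 20.0), (0.0, 1.0)]


def toy_cost(gains):
    return sum((g - t) ** 2 for g, t in zip(gains, TARGET))


gains, cost = pso(toy_cost, BOUNDS)
```

With a fixed seed the search is deterministic, so repeated calls return the same gains. Varying `seed` across runs is one way to obtain the mean and standard deviation mentioned in the list above.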

6. Computational Issues

6.1. Computational Requirements

6.1.1. Hardware Platform

- CPU: Intel Core i7-11700K @ 3.6 GHz (8 cores, 16 threads);

- GPU: NVIDIA RTX 3070 with 8 GB VRAM, CUDA 11.4 support;

- RAM: 32 GB DDR4;

- Software environment: Python 3.10 with TensorFlow 2.12 and MATLAB R2023a.

Training and Optimization Performance Are Summarized as Follows

- PSO Time: The PSO module required approximately 8 min for 50 iterations to provide initial PID parameters guiding the RL agent;

- RL Training Time: The RL agent required approximately 2 h for 100 episodes to achieve stable performance with cumulative reward > −355;

- Inference Latency: After training, the control policy delivered actions in 4.2 ms per step, well below the control loop sampling time of 0.1 s, ensuring real-time feasibility.

Resource Utilization

- CPU usage averaged 12%;

- GPU usage remained below 5%;

- Memory consumption was less than 4 GB RAM, leaving sufficient resources for additional monitoring tasks.

6.1.2. Fallback and Safety Mechanisms

- A safe PID controller (Kp = 5.3; Ki = 2.1; Kd = 0.06) operates as a fallback mechanism, automatically taking control if the RL inference exceeds 50 ms or produces invalid outputs;

- The sluice opening speed is physically limited to v = 0.03 m/s, preventing dangerous commands regardless of the controller decision;

- A watchdog system continuously monitors inference latency and performance indexes to trigger fallback when necessary.
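
The fallback logic described above can be sketched as follows. This is an illustration under stated assumptions, not the deployed PLC/SCADA code: the function names are hypothetical, "invalid output" is interpreted here as a missing or non-finite value, and the speed limit, which the text describes as physical, is mirrored in software as a per-step clamp using the 0.1 s sampling time.

```python
import math

LATENCY_LIMIT_S = 0.050   # fall back if RL inference exceeds 50 ms
MAX_GATE_SPEED = 0.03     # m/s, limit on sluice opening speed
SAMPLE_TIME_S = 0.1       # control loop sampling time


def select_command(rl_output, rl_latency_s, pid_output):
    """Return (command, source): the RL action if timely and valid, else the safe PID."""
    valid = isinstance(rl_output, (int, float)) and math.isfinite(rl_output)
    if valid and rl_latency_s <= LATENCY_LIMIT_S:
        return float(rl_output), "rl"
    return float(pid_output), "pid_fallback"


def rate_limit(previous_opening, requested_opening,
               max_speed=MAX_GATE_SPEED, dt=SAMPLE_TIME_S):
    """Clamp the change in gate opening to max_speed * dt per control step."""
    max_step = max_speed * dt
    delta = requested_opening - previous_opening
    delta = max(-max_step, min(max_step, delta))
    return previous_opening + delta


command, source = select_command(rl_output=0.20, rl_latency_s=0.0042, pid_output=0.10)
next_opening = rate_limit(previous_opening=0.05, requested_opening=command)
```

With these values the gate can move at most 0.003 m per control step, so even an aggressive command from either controller translates into a gradual change in opening.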

6.2. Conclusions

7. Practical Feasibility and Limitations

- Training data requirements and quality:

- Robustness to noise and unexpected disturbances:

- Computational constraints and latency:

- Risks associated with online tuning:

- Need for extensive validation:

8. Conclusions

9. Future Perspectives

- Integration of weather forecasting into the RL agent: Incorporating short- and medium-term meteorological predictions (e.g., precipitation forecasts, temperature, inflow estimations) will enable more proactive and anticipatory decision-making, especially in the face of torrential rains or prolonged droughts.

- Scalability and deployment across multiple reservoirs: The current system can be scaled and adapted for real-time coordination between multiple dams in Romania, improving water distribution, energy efficiency, and flood protection at a regional level.

- Transition to online reinforcement learning: Future systems could include online learning agents that continue to improve based on new environmental data, ensuring adaptability to climate change and anthropogenic interventions.

- Enhancing robustness and cybersecurity: With increased autonomy and remote operation, the system should integrate fault-tolerant mechanisms and cybersecurity protocols to maintain safe and reliable operation of critical infrastructure.

- Extension to multi-objective control: Further developments could target not only flood prevention and level regulation, but also hydropower optimization, environmental flow preservation, and sediment control in dam reservoirs.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

- Berghuijs, W.-R.; Aabers, E.-E.; Larsen, J.-R.; Trancoso, R.; Woods, R.-A. Recent changes in extreme floods across multiple continents. Environ. Res. Lett. 2017, 12, 114035.

- Arnell, N.-W.; Gosling, S.-N. The impacts of climate change on river flood risk at the global scale. Clim. Change 2016, 134, 387–401.

- Berga, L. The Role of Hydropower in Climate Change Mitigation and Adaptation: A Review. Engineering 2016, 2, 313–318.

- Babei, M.; Moeini, R.; Ehsanzadeh, E. Artificial Neural Network and Support Vector Machine Models for Inflow Prediction of Dam Reservoir (Case Study: Zayandehroud Dam Reservoir). Water Resour. Manag. 2019, 33, 1–16.

- Kim, B.-J.; Lee, Y.-T.; Kim, B.-H. A Study on the Optimal Deep Learning Model for Dam Inflow Prediction. Water 2022, 14, 2766.

- Bin, L.; Jie, Y.; Dexiu, H. Dam monitoring data analysis methods: A literature review. Struct. Control Health Monit. 2019, 27, e2501.

- Mata, J. Interpretation of concrete dam behaviour with artificial neural network and multiple linear regression models. Eng. Struct. 2011, 33, 903–910.

- Lin, C.; Li, T.; Chen, S.; Liu, X. Gaussian process regression-based forecasting model of dam deformation. Neural Comput. Appl. 2019, 31, 8503–8518.

- Chang, F.-J.; Hsu, K.; Chang, L.-C. Flood Forecasting Using Machine Learning Methods; MDPI: Basel, Switzerland, 2019; p. 376. ISBN 978-3-03897-549-6.

- Liu, X.; Li, Z.; Sun, L.; Khailah, E.Y.; Wang, J. Critical review of statistical model of dam monitoring data. J. Build. Eng. 2023, 80, 108106.

- Valipour, M.; Banihabib, M.-E.; Behbahani, S.-M.-R. Comparison of the ARMA, ARIMA, and the autoregressive artificial neural network models in forecasting the monthly inflow of Dez dam reservoir. J. Hydrol. 2013, 476, 433–441.

- Jiang, H. Overview and development of PID control. Appl. Comput. Eng. 2024, 66, 187–191.

- Kim, J.-J.; Lee, J.-J. Trajectory Optimization With Particle Swarm Optimization for Manipulator Motion Planning. IEEE Trans. Ind. Inform. 2015, 11, 620–631.

- Szymon, B.; Sara, B.; Michal, F.; Pawel, N.; Tomasz, K.; Jacek, C.; Krzysztof, S.; Piotr, L. PID Controller tuning by Virtual Commissioning-a step to Industry 4.0. J. Phys. Conf. Ser. 2022, 2198, 012010.

- Chen, S.-Y.; Yu, Y.; Da, Q.; Tan, J. Stabilizing Reinforcement Learning in Dynamic Environment with Application to Online Recommendation; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1187–1196.

- Li, Z.; Bai, L.; Tian, W.; Yan, H.; Hu, W.; Xin, K. Online Control of the Raw Water System of a High-Sediment River Based on Deep Reinforcement Learning. Water 2023, 15, 1131.

- Amini, A.; Rey-Mermet, S.; Crettenand, S. A hybrid methodology for assessing hydropower plants under flexible operations: Leveraging experimental data and machine learning techniques. Appl. Energy 2025, 383, 1–24.

- Shuprajhaa, T.; Kanth, S.; Srinivasan, K. Reinforcement learning based adaptive PID controller design for control of linear/nonlinear unstable processes. Appl. Soft Comput. 2022, 128, 109450.

- Thiago, A.M.E.; Mario, R.; Sebastian, R.; Uwe, H. Energy Price as an Input to Fuzzy Wastewater Level Control in Pump Storage Operation. IEEE Access 2023, 11, 93701–93712.

- Muhammad, I.; Rathiah, H.; Noor-Elaiza, A.-K. An Overview of Particle Swarm Optimization Variant. Procedia Eng. 2013, 53, 491–496.

- Tareq, M.-S.; Ayman, A.-E.-S.; Mohammed, A.; Qasem, A.-T.; Mhd, A.-S.; Seyedall, M. Particle Swarm Optimization: A Comprehensive Survey. IEEE Access 2022, 10, 10031–10061.

- Kristian, S.; Anton, C. When is PID a good choice? IFAC-PapersOnLine 2018, 51, 250–255.

- Pei, J. Summary of PID Control System of Liquid Level of a Single Capacity Tank. Int. Conf. Adv. Opt. Comput. Sci. 2021, 1865, 022061.

- Nathan, P.-L.; Gregory, E.-S.; Philip, D.-L.; Michael, G.-F.; Johan, U.-B.; Gopaluni, R.-B. Optimal PID and Antiwindup Control Design as a Reinforcement Learning Problem. IFAC-PapersOnLine 2020, 53, 236–241.

- Wang, D.; Tan, D.; Liu, L. Particle swarm optimization algorithm: An overview. In Soft Computing; Springer: Berlin/Heidelberg, Germany, 2017; Volume 22, pp. 387–408.

- Adam, P.-P.; Jaroslaw, J.-N.; Agneieszka, E.-P. Population size in Particle Swarm Optimization. Swarm Evol. Comput. 2020, 58, 100718.

- Zhang, Y.; Wang, S.; Ji, G. A Comprehensive Survey on Particle Swarm Optimization Algorithm and Its Applications. Math. Probl. Eng. 2015, 2015, 931256.

- Wang, C. A modified particle swarm optimization algorithm based on velocity updating mechanism. Ain Shams Eng. J. 2019, 10, 847–866.

- Meetu, J.; Vibha, S.; Singh, S. An Overview of Variants and Advancements of PSO Algorithm. Appl. Sci. 2022, 12, 8392.

- Hu, J.; Wang, Z.; Qiao, S.; Gan, J. The fitness evaluation strategy in particle swarm optimization. Appl. Math. Comput. 2011, 217, 8655–8670.

- Matthew, B.; Sam, R.; Jane, X.-W.; Zeb, K.-N.; Charles, B.; Demis, H. Reinforcement Learning, Fast and Slow. Trends Cogn. Sci. 2019, 23, 408–422.

- Zhang, Y. Elite archives-driven particle swarm optimization for large scale numerical optimization and its engineering applications. Swarm Evol. Comput. 2023, 76, 101212.

| Criterion | Output Feedback Adaptive Optimal Control | Particle Swarm Optimization + Reinforcement Learning |
|---|---|---|
| Method type | Feedback-based control + adaptation | Global optimization + learning |
| Real-time control? | Yes, for initial design | PSO is more suitable for offline optimization |
| Dynamic adaptation? | Yes: adaptive online control | No: PSO optimizes static parameters or offline policies |
| Complexity | Yes: go on | Large (optimization + learning) |
| Theoretical stability | Medium to large (nonlinear equations + adaptation) | No strict theoretical guarantee |
| Robustness | It can be proved formally (Lyapunov) | Excellent in global exploration |

| Aspect | Model-Free Output Feedback Control | PSO + RL |
|---|---|---|
| Model independence | Completely model-independent | PSO may require an approximate model for guidance |
| Convergence speed | Fast if the data is good | PSO accelerates RL → faster convergence than simple RL |
| Response quality | Good for tracking a reference in a 2D system | Very good when PSO is well guided by a mathematical model |
| Parameter optimization | Does not optimize RL hyperparameters | Yes: optimizes policies, networks, or other RL parameters |
| Robustness | Good against process variations (through data learning) | Excellent: searches for good solutions globally and avoids getting stuck in local minima |

| Aspect | Model-Free 2D Tracking | PSO + RL |
|---|---|---|
| Data requirements | Strongly dependent on historical data | Less dependent: PSO can explore and learn |
| Computational complexity | Medium to large | Large: PSO and RL combined involve multiple evaluations per iteration |
| Guaranteed stability? | Not under all conditions | Not exactly: PSO optimizes performance but does not ensure formal stability |
| Exploration vs. exploitation | Based on RL policies | PSO can get stuck in a local optimum without diversification |
| Sensitive tuning | Requires correct choice of reward function | Wrong choice of PSO parameters affects performance |

| Controller Type | Kp | Ti | Td |
|---|---|---|---|
| PID | 4 | 5 | 0.1 |
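
The table above gives the controller in terms of Kp, Ti, and Td, while other parts of the paper report Kp, Ki, and Kd. As a hedged illustration, the sketch below converts between the two, assuming the table uses the ideal (standard) PID form, in which Ki = Kp/Ti and Kd = Kp·Td. The source does not state which form or time units apply, so the resulting gains, the class `PID`, and the 0.1 s step are illustrative assumptions only.

```python
def standard_to_parallel(kp, ti, td):
    """Convert ideal-form settings (Kp, Ti, Td) to parallel gains (Kp, Ki, Kd)."""
    if ti <= 0:
        raise ValueError("Ti must be positive")
    return kp, kp / ti, kp * td


class PID:
    """Minimal discrete PID in parallel form (no anti-windup, no filtering)."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None

    def step(self, setpoint, measurement):
        error = setpoint - measurement
        self.integral += error * self.dt
        # No derivative action on the first sample (no previous error yet).
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


kp, ki, kd = standard_to_parallel(4.0, 5.0, 0.1)   # values from the table above
controller = PID(kp, ki, kd, dt=0.1)
u = controller.step(setpoint=70.0, measurement=69.0)
```

Under that assumption the table's settings correspond to Ki = 0.8 and Kd = 0.4 in the same time units as Ti and Td.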

| Characteristics | Reinforcement Learning | Particle Swarm Optimization |
|---|---|---|
| Optimization method | Trial and error, based on rewards | Search by swarm behavior |
| Application | Sequential and complex problems | Continuous and uncomplicated problems |
| Efficiency | Effective in continuous learning | Fast for simple functions |
| Risk of getting stuck | Exploration-exploitation | Local minima |
| Convergence | Requires a lot of data; converges slowly | Fast convergence |
| Complexity | Complex and high computational resources | Easy to implement |

| Parameter | Value |
|---|---|
| Mini-batch size | 128 samples |
| Gaussian noise variance | 0.1 |
| Fully connected layer size | 32 |
| Actor learning rate | 0.01 |

| Tuned Parameters and Step Response Characteristics | Kp | Ki | Kd | Overshoot [%] | Settling Time [h] |
|---|---|---|---|---|---|
| Tuning based on TD3 | 7.3 | 6.1 | 0.06 | 0 | 0.3 |
| Tuning based on linearization | 106 | 5.9 | 0.03 | 1 | 0.1 |

| Day | Target Level [m] | Obtained Level [m] | Stabilization Time [min] | Level Oscillations [m] |
|---|---|---|---|---|
| 1 | 70 | 69.2 | 50 | ±0.8 |
| 2 | 70 | 69.5 | 55 | ±0.5 |
| 3 | 70 | 69.8 | 58 | ±0.2 |

| Day | Stationary Error [m] | Stabilization Time [min] | IAE | ISE | ITAE |
|---|---|---|---|---|---|
| 1 | 0.8 | 50 | 40 | 32 | 2000 |
| 2 | 0.5 | 55 | 27.5 | 13.75 | 1512 |
| 3 | 0.2 | 58 | 11.6 | 2.32 | 672 |

| Day | Target Level [m] | Obtained Level [m] | Stabilization Time [min] | Level Oscillations [m] |
|---|---|---|---|---|
| 1 | 70 | 69.8 | 9.8 | ±0.2 |
| 2 | 70 | 69.9 | 10 | ±0.1 |
| 3 | 70 | 69.7 | 9.7 | ±0.3 |

| Day | Stationary Error [m] | Stabilization Time [min] | IAE | ISE | ITAE |
|---|---|---|---|---|---|
| 1 | 0.2 | 9.8 | 1.96 | 0.392 | 19.2 |
| 2 | 0.1 | 10 | 1 | 0.1 | 10 |
| 3 | 0.3 | 9.7 | 2.91 | 0.873 | 28.2 |
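
For readers who want to recompute the performance indexes, the sketch below shows two ways of obtaining IAE, ISE, and ITAE. The first function applies the usual integral definitions to a sampled error signal using a rectangle rule. The second is a shortcut that the per-day rows above appear to be consistent with (IAE = e·T, ISE = e²·T, ITAE = e·T², with e the stationary error and T the stabilization time). Both function names are hypothetical, and the shortcut is inferred from the tabulated numbers, not stated by the authors. For a truly constant error, the exact integral of t·|e| over [0, T] would be e·T²/2.

```python
def performance_indexes(errors, dt):
    """Rectangle-rule IAE, ISE, and ITAE for error samples spaced dt apart."""
    iae = sum(abs(e) for e in errors) * dt
    ise = sum(e * e for e in errors) * dt
    itae = sum((k * dt) * abs(e) for k, e in enumerate(errors)) * dt
    return iae, ise, itae


def constant_error_shortcut(e, t):
    """Shortcut matching the tabulated rows: (e*T, e^2*T, e*T^2)."""
    return abs(e) * t, e * e * t, abs(e) * t * t


# Day 1 of the table above: stationary error 0.2 m, stabilization time 9.8 min.
iae, ise, itae = constant_error_shortcut(0.2, 9.8)

# Sampled version on a hypothetical error trace (10 samples of 1.0, dt = 0.1).
sampled = performance_indexes([1.0] * 10, dt=0.1)
```

When a logged error trace is available, the sampled version is preferable because it captures the transient and not only the final offset.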

| Control System | IAE [m·s] | ISE [m²·s] | ITAE [m·s²] |
|---|---|---|---|
| PID | 96.3 | 68.03 | 7917.72 |
| PSO + RL | 21.96 | 23.07 | 220.88 |

| Scenario | Inflow Rate [m³/s] | Settling Time [min] | ISE | IAE | ITAE | Max Level [m] |
|---|---|---|---|---|---|---|
| Nominal | 125 | 1 | 0.15 | 3 | 3 | 69.95 |
| +10% Increase | 137.5 | 1.1 | 0.2376 | 3.96 | 4.356 | 69.94 |
| −10% Decrease | 112.5 | 1.2 | 0.18 | 3.6 | 4.32 | 69.95 |
| +20% Increase | 150 | 1.3 | 0.3822 | 5.46 | 7.098 | 69.93 |
| −20% Decrease | 100 | 1.3 | 0.2808 | 4.68 | 6.084 | 69.94 |

| Metric | Value |
|---|---|
| Hardware used | Intel i7-11700K, 32 GB RAM, RTX 3070 |
| PSO time | ~8 min (50 iterations) |
| RL training time | ~2 h (100 episodes) |
| Average inference time | 4.2 ms per decision |
| Sampling period | 0.1 s |
| CPU usage (online) | ~12% |
| GPU usage (online) | ~5% |
| Fallback strategy | Safe PID controller |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Niculescu-Faida, O.; Popescu, C. Combined Particle Swarm Optimization and Reinforcement Learning for Water Level Control in a Reservoir. Sensors 2025, 25, 5055. https://doi.org/10.3390/s25165055