Sweet—An Open Source Modular Platform for Contactless Hand Vascular Biometric Experiments

Abstract

1. Introduction

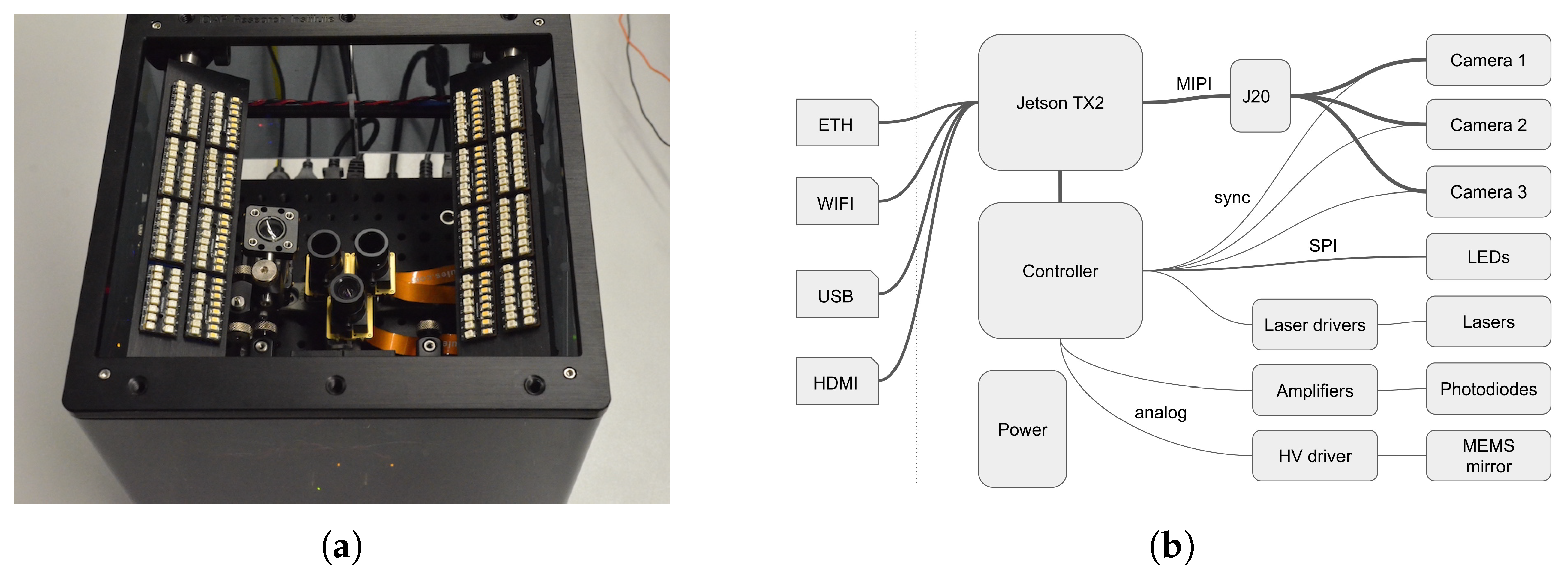

2. Related Work

3. Hardware Design

3.1. The Sweet Sensor Platform

- NIR HD camera pair, with multi-spectral illumination at 850 nm and 950 nm, for vein recognition (VR).

- Color HD camera with white illumination, used for surface features and presentation attack detection (PAD).

- Stereo Vision (SV) depth measurement using the NIR camera pair and laser dot projectors.

- Photometric Stereo (PS) to obtain fine-grained depth resolution and texture from a set of frames, each illuminated from a different angle.
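Stereo-vision depth measurement rests on triangulation: for a rectified camera pair with focal length f (in pixels) and baseline B, a point observed with disparity d lies at depth Z = f·B/d. The following is a minimal sketch, assuming the NIR pair has already been rectified and a disparity map is available from some stereo matcher. The function name and the focal length and baseline in the example are hypothetical values, not the Sweet calibration.

```python
import numpy as np


def disparity_to_depth(disparity, focal_px, baseline_mm):
    """Convert a disparity map (pixels) to depth (mm) for a rectified stereo pair.

    Pixels with non-positive disparity (no valid match) are returned as NaN.
    """
    disparity = np.asarray(disparity, dtype=np.float64)
    depth = np.full(disparity.shape, np.nan)
    valid = disparity > 0
    # Triangulation for rectified cameras: Z = f * B / d
    depth[valid] = focal_px * baseline_mm / disparity[valid]
    return depth


if __name__ == "__main__":
    # Hypothetical camera: 1400 px focal length, 60 mm baseline.
    d = np.array([[210.0, 168.0], [0.0, 140.0]])
    print(disparity_to_depth(d, focal_px=1400.0, baseline_mm=60.0))
```

Because depth is inversely proportional to disparity, a one-pixel matching error costs more depth accuracy for distant points than for near ones, which is one motivation for refining the coarse stereo surface with photometric stereo.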

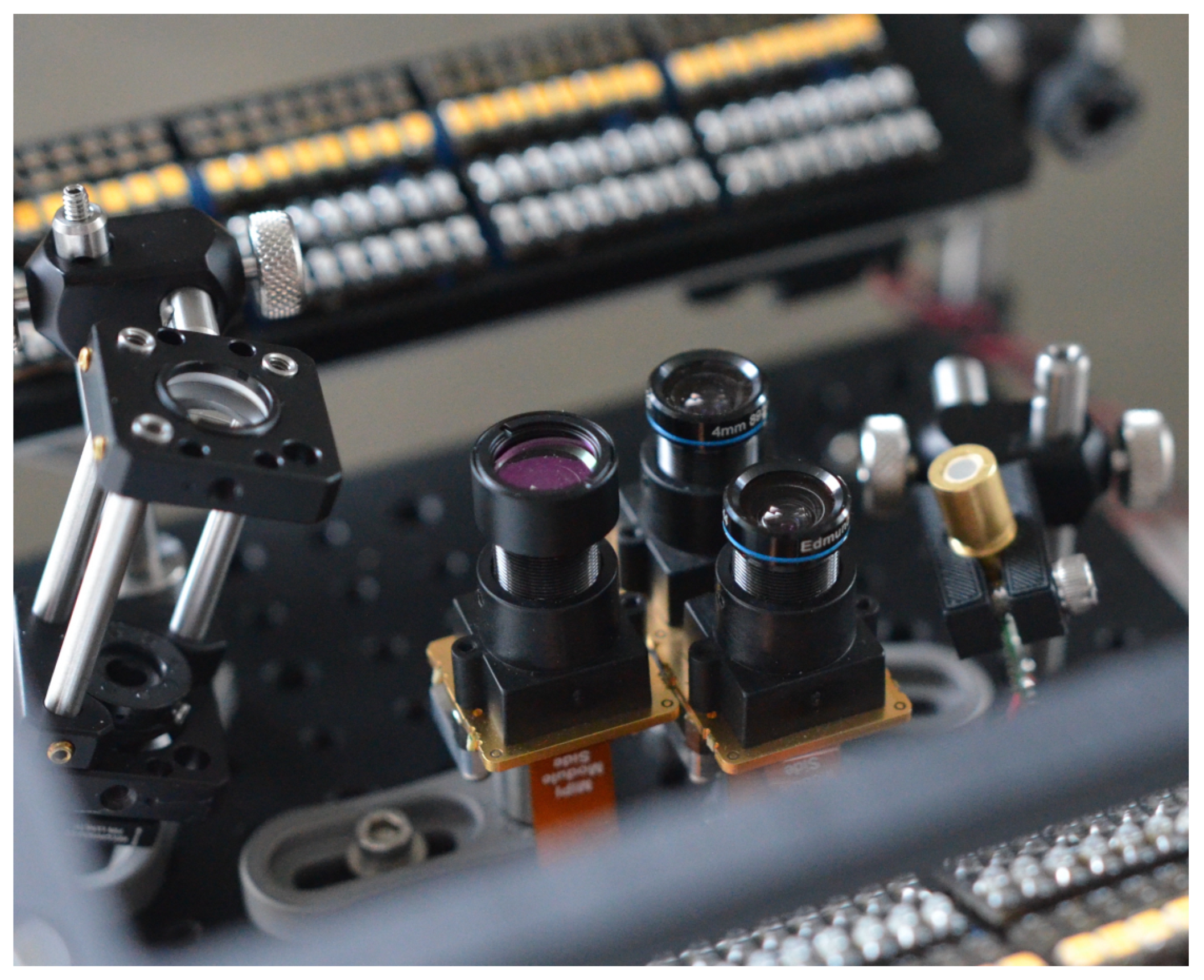

3.2. Camera and Optics

3.3. Jetson Acquisition and Processing Platform

3.4. Illumination, Lasers and Controller

3.5. Software

3.6. Lasers and Eye Safety Considerations

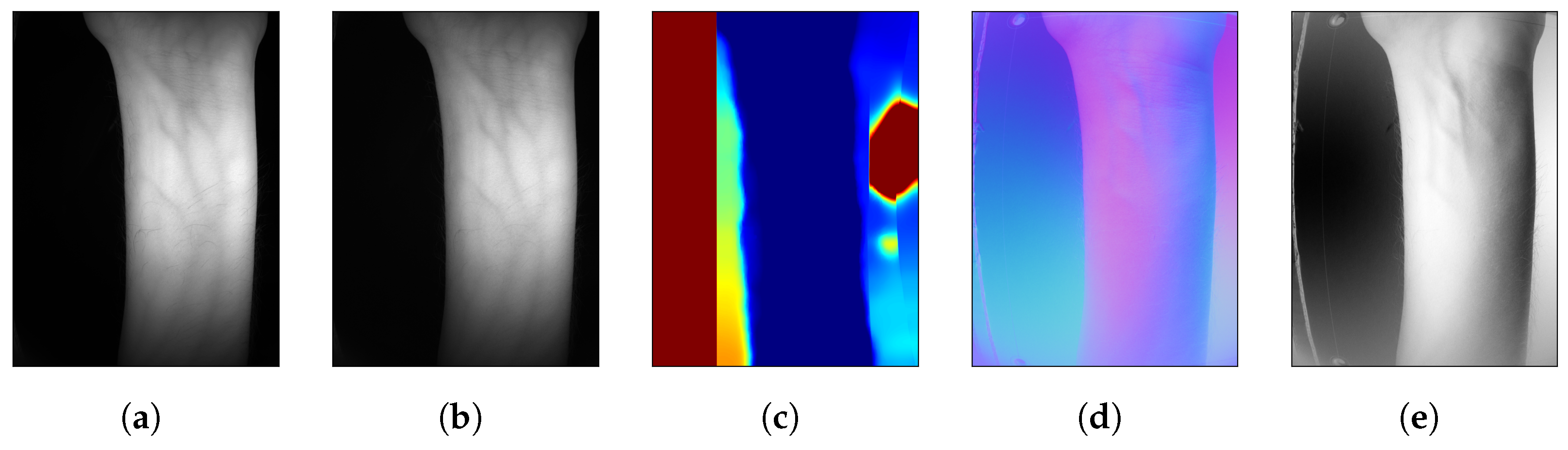

4. Calibration, Capture, Pre-Processing and Stereo Reconstruction

4.1. Illumination and Light Field Calibration

4.2. Camera Gain Calibration and Frame Alignment

4.3. Camera Calibration and Sensors Characterization

4.4. Stereo Reconstruction and Camera Views Alignment

4.5. Photometric Stereo

- The light vectors are assumed constant over the whole image, as if emitted by infinitely distant isotropic sources.

- The hand skin is modeled as a Lambertian reflector, without specular reflection.

- The hand surface is assumed smooth.
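Under these assumptions, the intensity of a pixel under light k is I_k = ρ (l_k · n), where ρ is the albedo, n the unit surface normal and l_k the light direction. With three or more non-coplanar light directions, the vector ρn can be recovered per pixel by linear least squares, as in Woodham's classic photometric stereo method. The sketch below assumes linear (gamma-free), shadow-free intensities and known unit light directions; the function name and the synthetic light directions are illustrative only. Integrating the resulting normal field into a height map, which is where the smoothness assumption is used, is not shown.

```python
import numpy as np


def photometric_stereo(images, light_dirs):
    """Estimate per-pixel albedo and unit normals from K images.

    images:     array (K, H, W) of linear intensities, one image per light.
    light_dirs: array (K, 3) of unit light directions, constant over the image
                (distant-source assumption).
    Returns albedo (H, W) and normals (H, W, 3).
    """
    images = np.asarray(images, dtype=np.float64)
    k, h, w = images.shape
    intensities = images.reshape(k, -1)  # (K, H*W), one column per pixel
    # Lambertian model without shadows or specularities: I = L @ (rho * n)
    g, *_ = np.linalg.lstsq(light_dirs, intensities, rcond=None)  # (3, H*W)
    albedo = np.linalg.norm(g, axis=0)  # rho = |g|
    normals = np.zeros_like(g)
    nonzero = albedo > 1e-12
    normals[:, nonzero] = g[:, nonzero] / albedo[nonzero]  # n = g / |g|
    return albedo.reshape(h, w), normals.T.reshape(h, w, 3)


if __name__ == "__main__":
    # Synthetic check: four hypothetical lights, a 1x2-pixel "image".
    L = np.array([[0.0, 0.0, 1.0], [0.6, 0.0, 0.8], [0.0, 0.6, 0.8], [-0.6, 0.0, 0.8]])
    true_n = np.array([[0.0, 0.0, 1.0], [0.6, 0.0, 0.8]])
    imgs = ((L @ true_n.T) * np.array([0.8, 0.5])).reshape(4, 1, 2)
    rho, n = photometric_stereo(imgs, L)
    print(np.round(rho, 3), np.round(n, 3))
```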

5. Vein Recognition Experiments

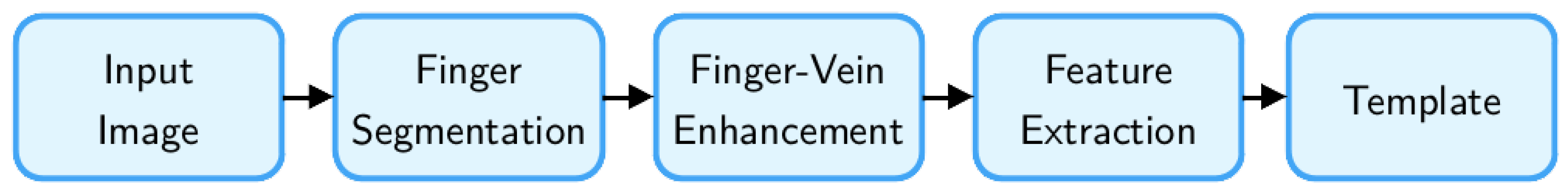

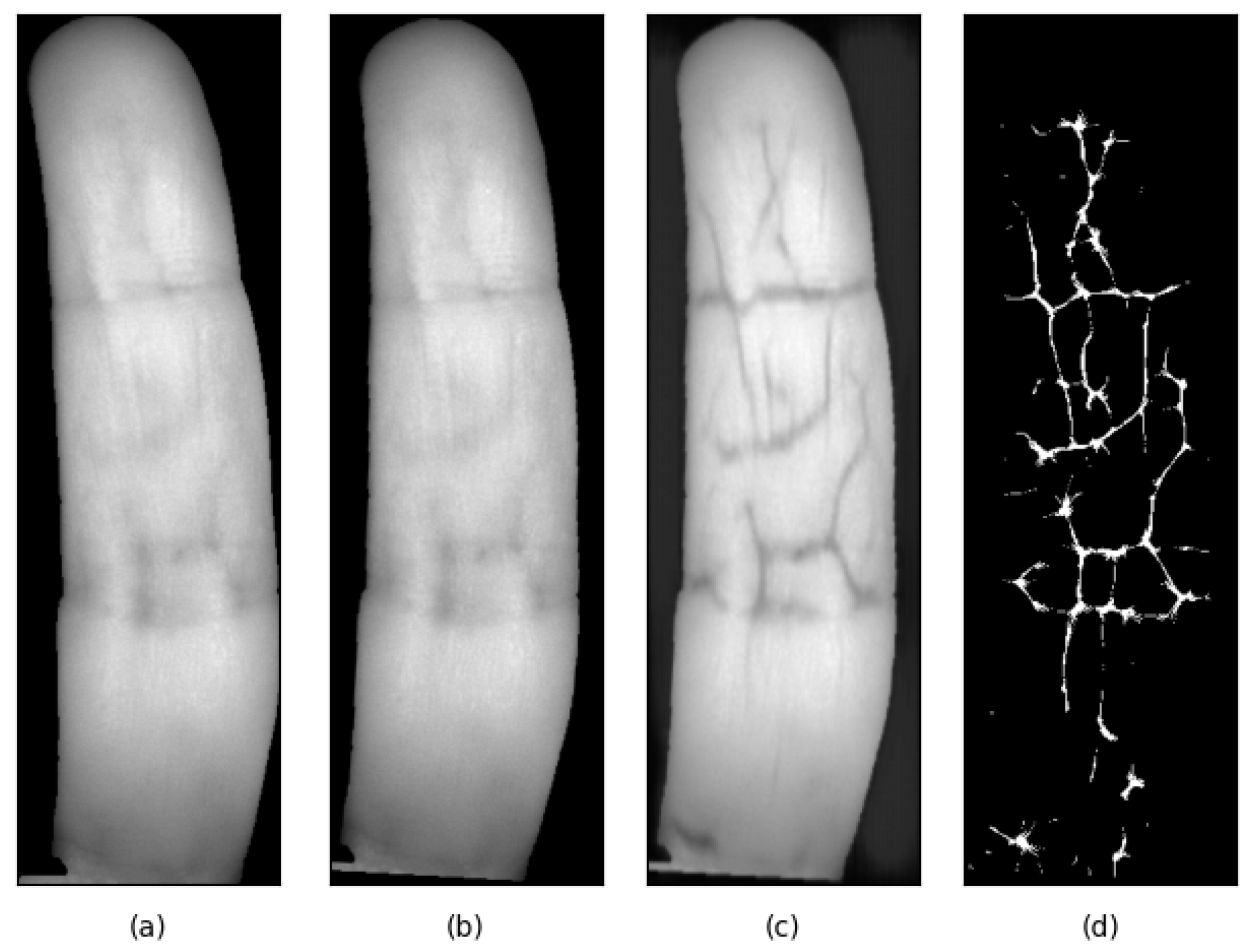

5.1. Finger-Vein Template Creation

5.2. Finger-Vein Matching

- FMR (False Match Rate): The proportion of comparison attempts between samples from different identities that are incorrectly accepted as a match.

- FNMR (False Non-Match Rate): The proportion of comparison attempts between samples from the same identity that are incorrectly rejected as a non-match.

- HTER (Half Total Error Rate): The average of FMR and FNMR, computed as: HTER = 0.5 × (FMR + FNMR).
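These three rates can be computed directly from lists of genuine (same-identity) and impostor (different-identity) comparison scores at a given decision threshold. The following is a minimal sketch, assuming similarity scores where higher means more similar and a match decision of score >= threshold; the function name and example scores are made up. In dev/eval protocols the threshold is usually fixed on the dev set and then applied unchanged to the eval set; the exact threshold criterion behind the result tables is not given in this excerpt.

```python
def error_rates(genuine, impostor, threshold):
    """Return (FMR, FNMR, HTER) in percent at a given decision threshold.

    genuine:  scores of same-identity comparisons.
    impostor: scores of different-identity comparisons.
    Assumes similarity scores: a comparison counts as a match if score >= threshold.
    """
    if not genuine or not impostor:
        raise ValueError("need at least one genuine and one impostor score")
    # FMR: impostor comparisons wrongly accepted as matches.
    fmr = 100.0 * sum(s >= threshold for s in impostor) / len(impostor)
    # FNMR: genuine comparisons wrongly rejected as non-matches.
    fnmr = 100.0 * sum(s < threshold for s in genuine) / len(genuine)
    # HTER: average of the two error rates.
    hter = 0.5 * (fmr + fnmr)
    return fmr, fnmr, hter


if __name__ == "__main__":
    genuine = [0.9, 0.8, 0.75, 0.4]  # made-up scores
    impostor = [0.1, 0.2, 0.35, 0.6, 0.3]
    print(error_rates(genuine, impostor, threshold=0.5))  # -> (20.0, 25.0, 22.5)
```

The same HTER formula reproduces the HTER columns of the result tables. For example, P1 on the dev set in the first table gives 0.5 × (0.1 + 3.82) = 1.96.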

5.3. Dataset Construction

5.4. Hand Recognition Experiments

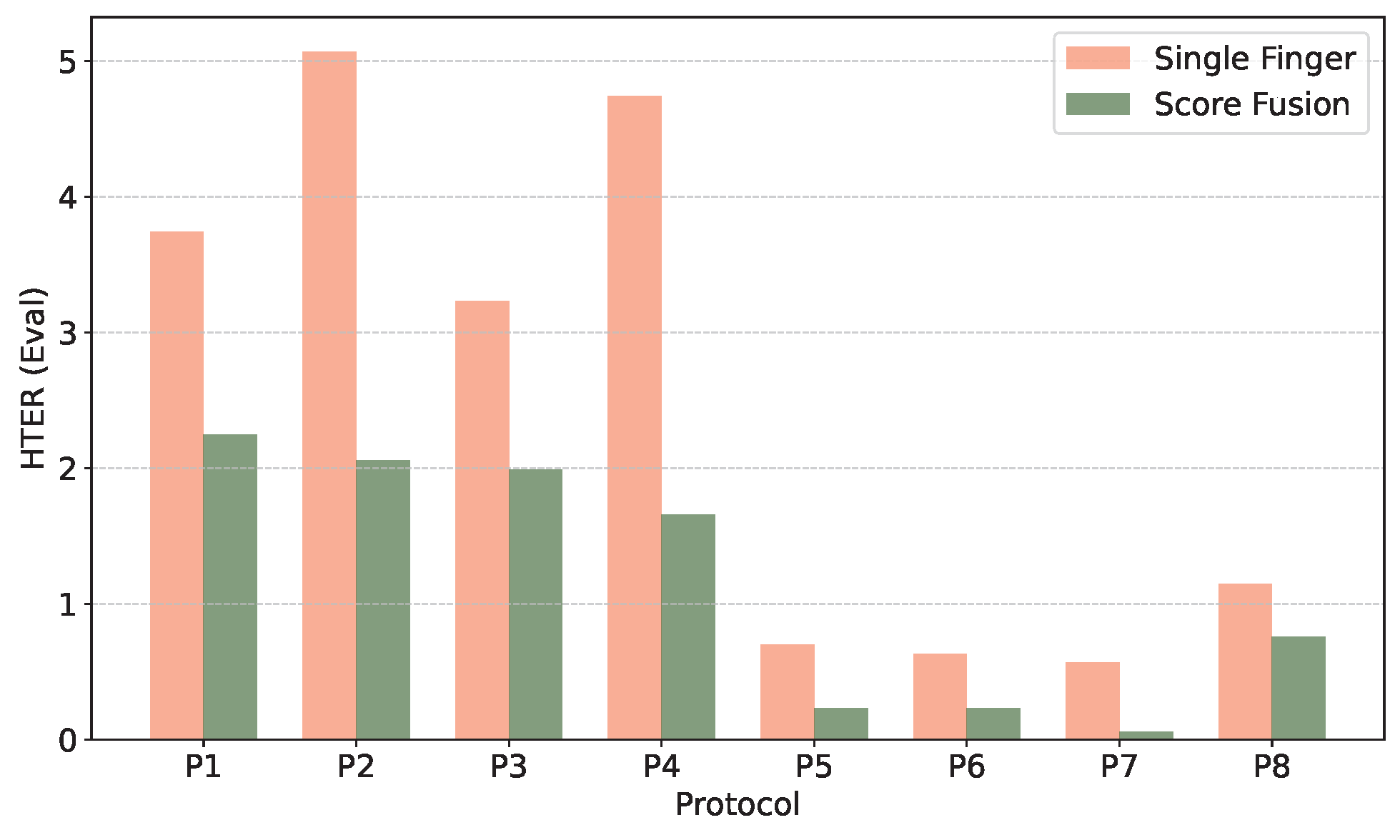

5.4.1. Single Finger Recognition

- The numbers of enrolment and probe samples for the protocols with NIR-950 illumination are consistently smaller than those for the NIR-850 protocols;

- The numbers of images used for enrolment are not exactly 180 (three fingers each for 60 subjects).

- Increased familiarity with the data-capture procedure: subjects were consistently asked to present the left hand first, so their right-hand presentations may have been better; or

- Simply the right-handedness of most subjects, which may lead to smaller variability in the right-hand data than in the left-hand data.
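The two sample-count observations made above can be checked against the protocol table. The sketch below copies the per-protocol counts from that table; it assumes a nominal enrolment count of 3 × 60 = 180 per set, as stated in the text, and pairs each NIR-950 protocol with the NIR-850 protocol whose name differs only in the wavelength (P1/P2, P3/P4, P5/P6, P7/P8). The constant and function names are illustrative.

```python
# Per-protocol image counts copied from the protocol table:
# (Dev enrol, Dev probe, Eval enrol, Eval probe).
COUNTS = {
    "P1": (159, 8064, 159, 7836), "P2": (141, 4908, 138, 4665),
    "P3": (159, 7968, 156, 7791), "P4": (147, 6372, 144, 6300),
    "P5": (156, 7200, 156, 6998), "P6": (153, 6138, 136, 4644),
    "P7": (156, 7116, 156, 7032), "P8": (144, 4842, 123, 3969),
}

# Nominal enrolment count per set: three fingers for each of 60 subjects.
EXPECTED_ENROL = 3 * 60

# (NIR-850 protocol, NIR-950 protocol) pairs differing only in wavelength.
PAIRS = [("P1", "P2"), ("P3", "P4"), ("P5", "P6"), ("P7", "P8")]


def enrol_shortfall(counts, expected=EXPECTED_ENROL):
    """Missing enrolment images (Dev, Eval) per protocol, relative to the nominal count."""
    return {p: (expected - c[0], expected - c[2]) for p, c in counts.items()}


def nir950_always_smaller(counts, pairs=PAIRS):
    """True if every NIR-950 count (enrol and probe, Dev and Eval) is below its NIR-850 pair."""
    return all(
        counts[p950][i] < counts[p850][i]
        for p850, p950 in pairs
        for i in range(4)
    )


if __name__ == "__main__":
    print(enrol_shortfall(COUNTS))
    print(nir950_always_smaller(COUNTS))
```

On the table values, every protocol falls short of 180 enrolment images in both sets (by 21 to 57 images), and every NIR-950 count is below its NIR-850 counterpart.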

5.4.2. Hand-Recognition Based on Finger-Score Fusion
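The fusion rule itself is not described in this excerpt. As an illustration only, the sketch below uses the mean (sum) rule, one common choice for score-level fusion: the comparison scores of the individual fingers of a hand are averaged into a single hand score, which can then be thresholded like a finger score. The function name, finger names and score values are hypothetical, and the finger scores are assumed to lie on a common scale.

```python
from statistics import fmean


def fuse_hand_score(finger_scores):
    """Mean-rule fusion of per-finger comparison scores for one hand comparison.

    finger_scores: mapping of finger name -> similarity score (hypothetical keys),
    assumed to share a common scale. Fingers without a score (e.g. a failed
    capture) are simply absent from the mapping and therefore ignored.
    """
    if not finger_scores:
        raise ValueError("at least one finger score is required")
    return fmean(finger_scores.values())


if __name__ == "__main__":
    # One weak finger comparison is partly compensated by the two others.
    print(fuse_hand_score({"index": 0.42, "middle": 0.38, "ring": 0.10}))
```

Averaging lets well-presented fingers compensate for a poorly presented one, which is one reason score fusion can reduce FNMR relative to single-finger recognition.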

6. Concluding Remarks

Author Contributions

Funding

Conflicts of Interest


| Age Group (Years) | Male | Female |
|---|---|---|
| 18–30 | 22 | 19 |
| 31–50 | 20 | 18 |
| 51 and above | 20 | 21 |
| Total | 62 | 58 |

| Id | Protocol | # Images Dev (Enrol) | # Images Dev (Probe) | # Images Eval (Enrol) | # Images Eval (Probe) |
|---|---|---|---|---|---|
| P1 | LH_left_850 | 159 | 8064 | 159 | 7836 |
| P2 | LH_left_950 | 141 | 4908 | 138 | 4665 |
| P3 | LH_right_850 | 159 | 7968 | 156 | 7791 |
| P4 | LH_right_950 | 147 | 6372 | 144 | 6300 |
| P5 | RH_left_850 | 156 | 7200 | 156 | 6998 |
| P6 | RH_left_950 | 153 | 6138 | 136 | 4644 |
| P7 | RH_right_850 | 156 | 7116 | 156 | 7032 |
| P8 | RH_right_950 | 144 | 4842 | 123 | 3969 |

| Protocol | Dev FMR (%) | Dev FNMR (%) | Dev HTER (%) | Eval FMR (%) | Eval FNMR (%) | Eval HTER (%) |
|---|---|---|---|---|---|---|
| P1 | 0.1 | 3.82 | 1.96 | 0.17 | 7.3 | 3.74 |
| P2 | 0.08 | 8.7 | 4.39 | 0.09 | 10.05 | 5.07 |
| P3 | 0.08 | 5.77 | 2.93 | 0.0 | 6.46 | 3.23 |
| P4 | 0.09 | 7.74 | 3.91 | 0.02 | 9.45 | 4.74 |
| P5 | 0.09 | 0.66 | 0.38 | 0.79 | 0.61 | 0.7 |
| P6 | 0.09 | 0.57 | 0.33 | 0.66 | 0.60 | 0.63 |
| P7 | 0.09 | 0.33 | 0.21 | 0.68 | 0.45 | 0.57 |
| P8 | 0.09 | 1.39 | 0.74 | 0.04 | 2.27 | 1.15 |

| Protocol | Dev FMR (%) | Dev FNMR (%) | Dev HTER (%) | Eval FMR (%) | Eval FNMR (%) | Eval HTER (%) |
|---|---|---|---|---|---|---|
| P1 | 0.1 | 2.68 | 1.39 | 0.20 | 4.29 | 2.25 |
| P2 | 0.08 | 4.2 | 2.14 | 0.54 | 3.57 | 2.06 |
| P3 | 0.05 | 2.71 | 1.38 | 0.05 | 3.93 | 1.99 |
| P4 | 0.06 | 3.04 | 1.55 | 0.6 | 2.67 | 1.66 |
| P5 | 0.06 | 0.0 | 0.03 | 0.46 | 0.0 | 0.23 |
| P6 | 0.07 | 0.0 | 0.03 | 0.45 | 0.0 | 0.23 |
| P7 | 0.06 | 0.0 | 0.03 | 0.11 | 0.0 | 0.06 |
| P8 | 0.08 | 0.46 | 0.27 | 0.0 | 1.51 | 0.76 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Geissbühler, D.; Bhattacharjee, S.; Kotwal, K.; Clivaz, G.; Marcel, S. Sweet—An Open Source Modular Platform for Contactless Hand Vascular Biometric Experiments. Sensors 2025, 25, 4990. https://doi.org/10.3390/s25164990