Train-YOLO: An Efficient and Lightweight Network Model for Train Component Damage Detection

Abstract

1. Introduction

2. Dataset Preparation

2.1. Data Collection

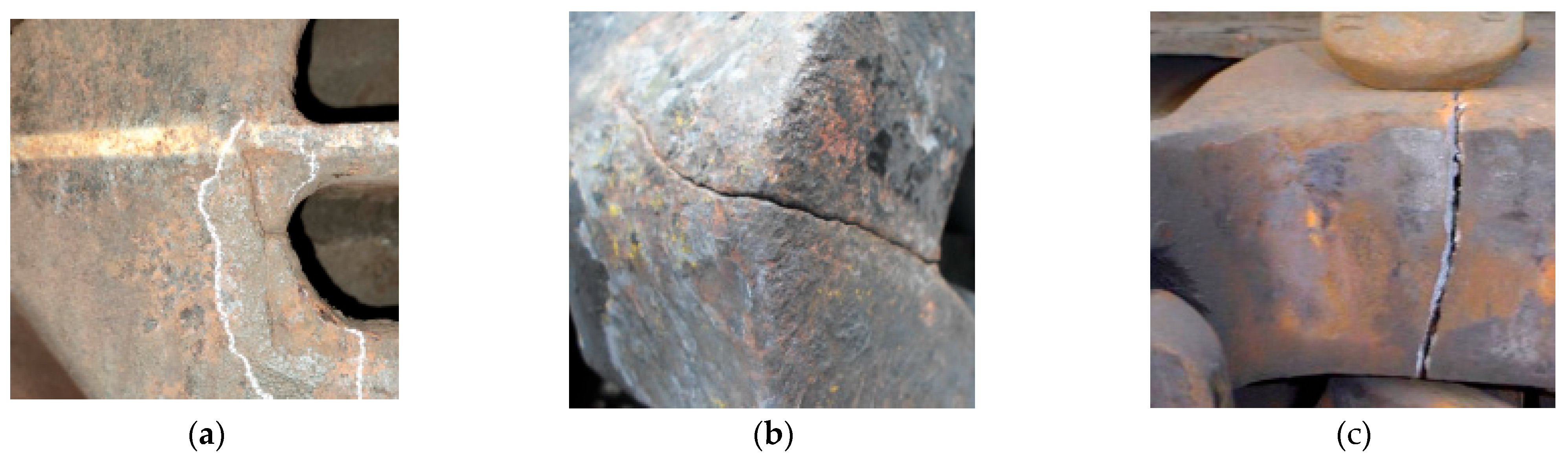

2.2. Fault Categorization

2.3. Data Augmentation

3. Proposed Method: Models and Improvements

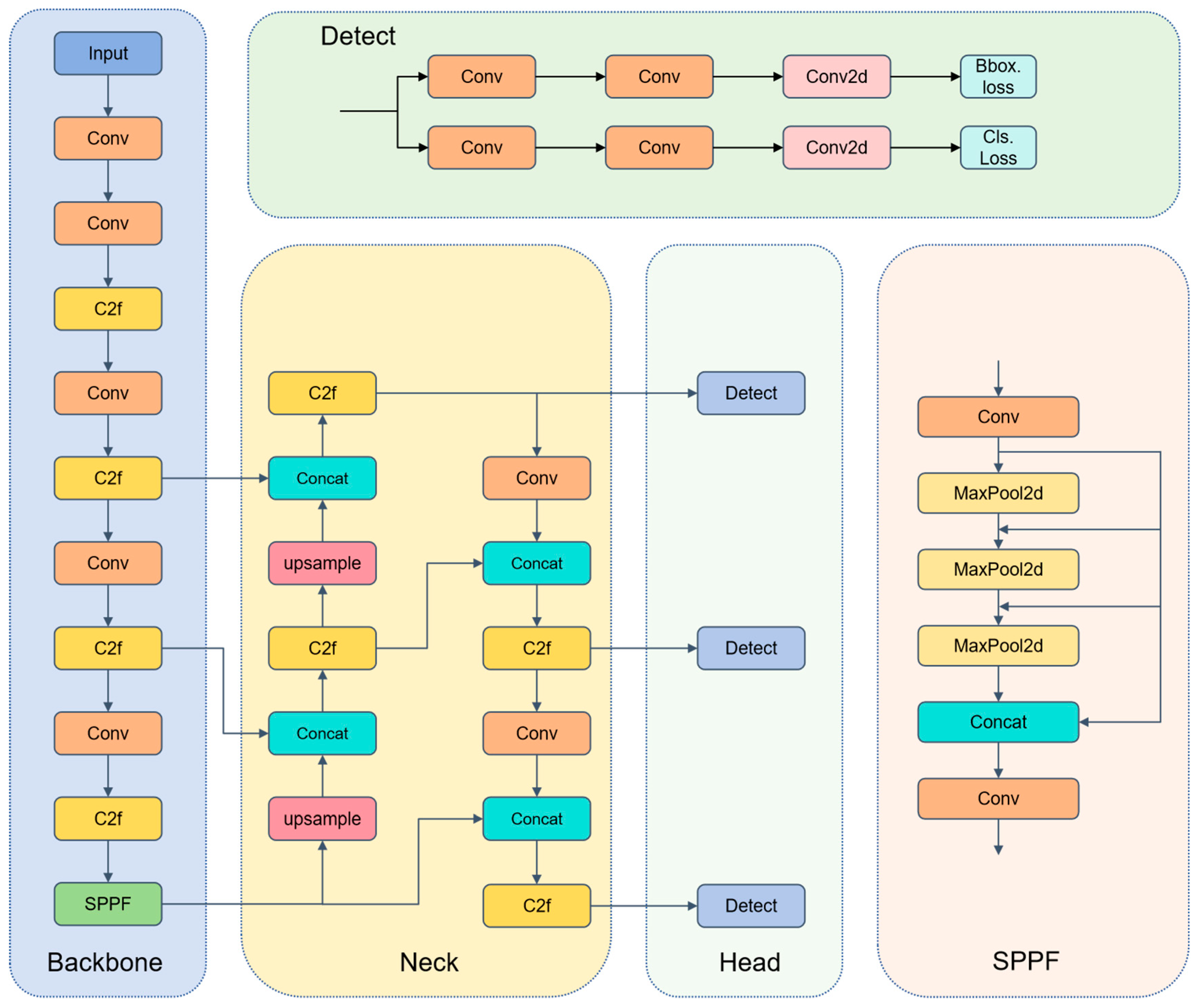

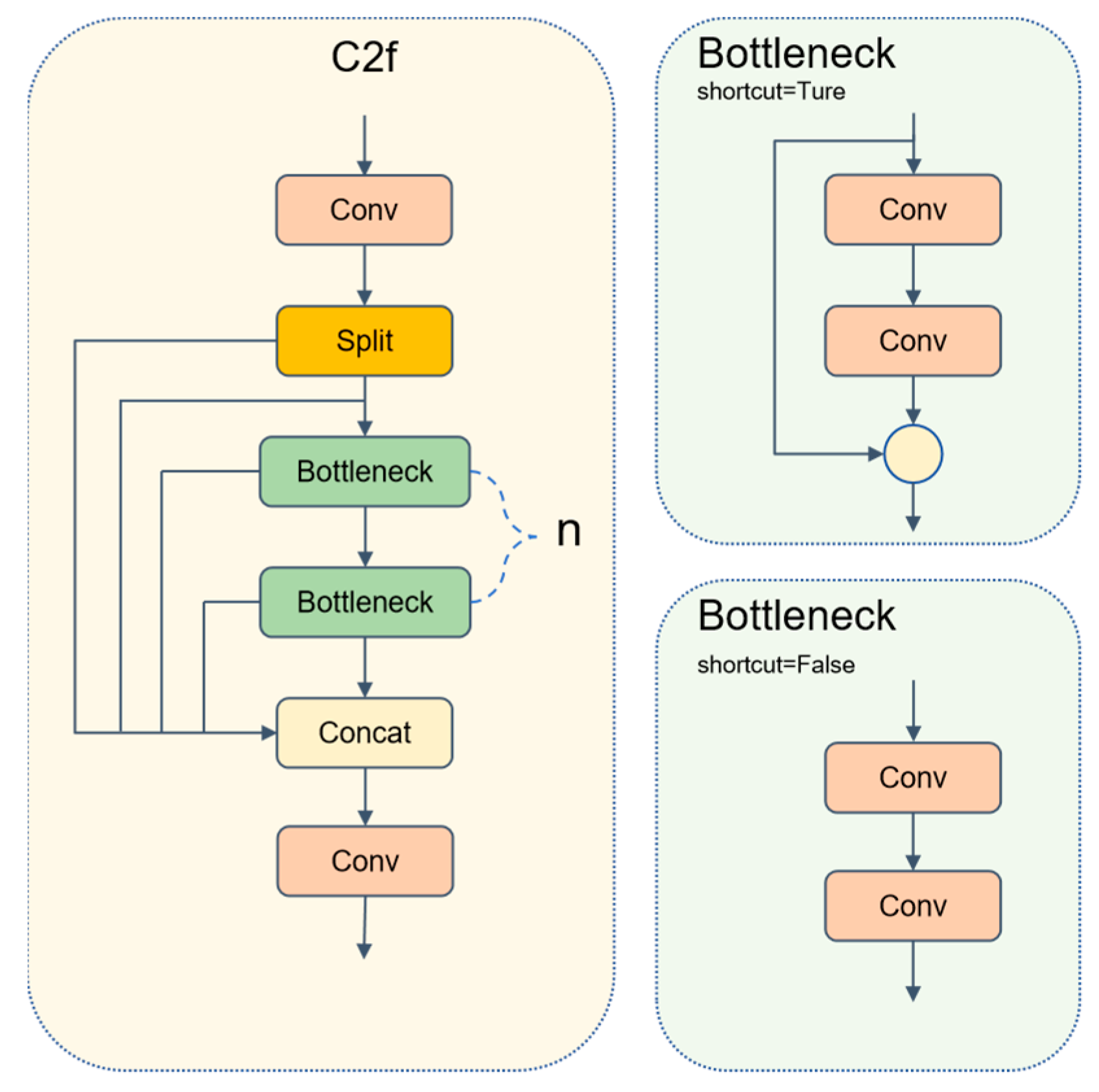

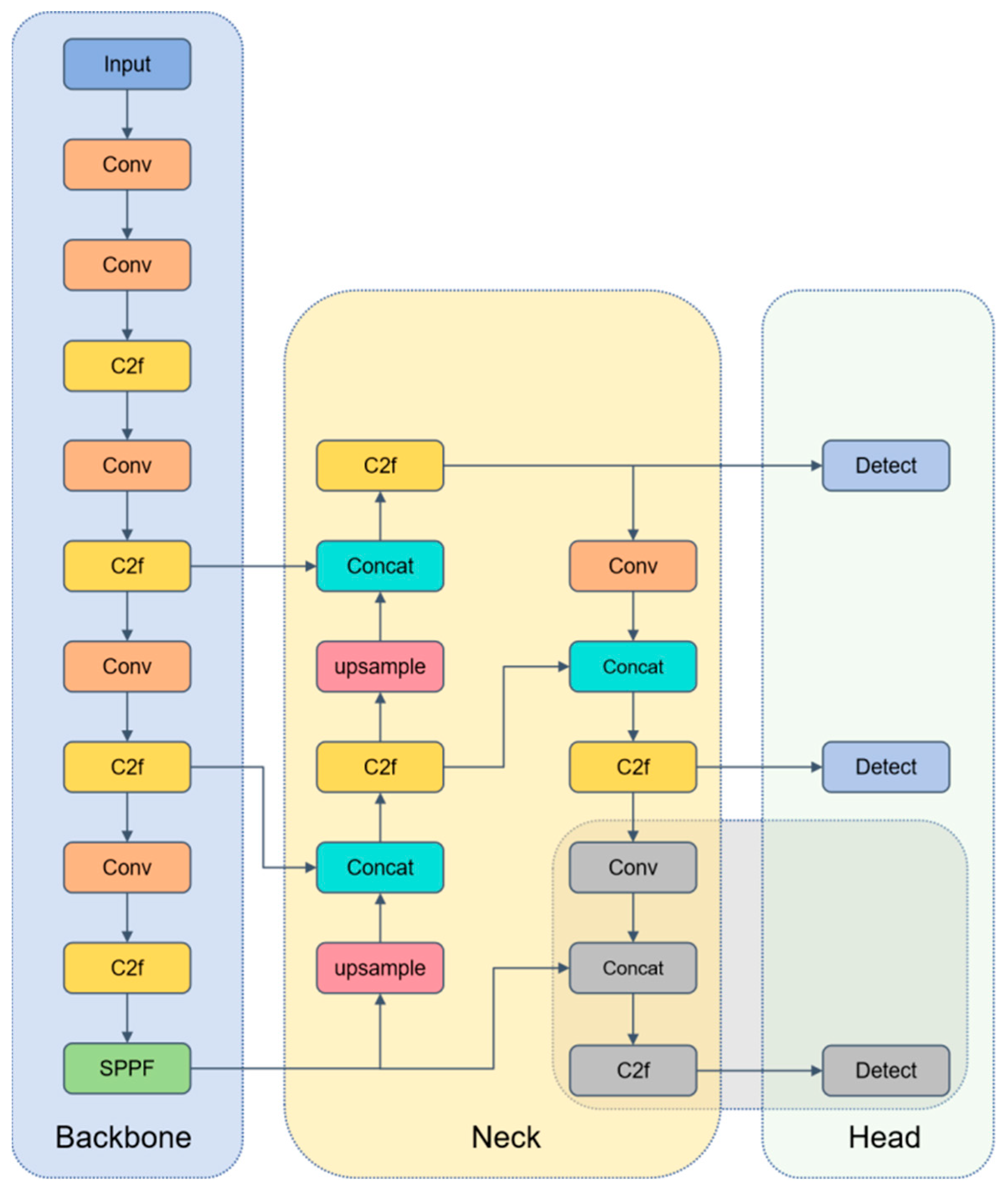

3.1. Original YOLOv8n Model

3.2. Improvement of the YOLOv8 Model: Train-YOLO Model

3.3. ADown

3.4. C2f-Rep

3.5. DHD

4. Experiments

4.1. Experimental Configuration and Training Parameters

4.2. Evaluation Indicators

4.3. Ablation Experiments

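- The introduction of the ADown module raised recall by 2.7 percentage points (to 79.2%) and mAP@50 by 3.1 points (to 85.8%), at the cost of a 0.7-point drop in precision. It also reduced the parameter count from 3.01 M to 2.71 M and the model size from 5.98 MB to 5.44 MB.

- The implementation of the C2f-Rep module kept detection accuracy close to the baseline (precision −1.5 points, recall +1.4 points, mAP@50 −0.8 points) while reducing computation from 8.2 to 7.3 GFLOPs and the model size to 5.39 MB.

- The incorporation of the DHD structure reduced the parameter count from 3.01 M to 2.00 M and the model size to 4.01 MB, and raised precision by 5.1 points to 92.9%, although recall fell by 2.4 points.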

4.4. Comparative Experiments

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Item | Configuration |
|---|---|
| CPU | AMD Ryzen 9 7945HX |
| GPU | NVIDIA GeForce RTX 4060 |
| Operating system | Windows 11 |
| Framework | PyTorch 2.2.2 |
| IDE | PyCharm 2023.2.2 |
| Python version | 3.11.8 |

| Parameter | Setting |
|---|---|
| Input image size | 640 × 640 |
| Epochs | 300 |
| Batch size | 8 |
| Initial learning rate | 0.01 |
| Optimizer | SGD |
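The training settings above can be collected into a single configuration. The sketch below assumes the Ultralytics YOLOv8 package as the training wrapper, which the paper does not state (it lists only PyTorch 2.2.2). Because the Train-YOLO model definition with ADown, C2f-Rep and DHD is not given here, the stock `yolov8n.yaml` baseline stands in for it, and the dataset file `train_damage.yaml` is a hypothetical placeholder.

```python
"""Hypothetical training configuration mirroring the training-parameter table.

Assumption: the Ultralytics YOLOv8 package is used as the training wrapper;
the paper itself only lists PyTorch 2.2.2 as the framework.
"""

# Hyperparameters copied from the training-parameter table.
TRAIN_ARGS = {
    "imgsz": 640,        # input image size 640 x 640
    "epochs": 300,
    "batch": 8,
    "lr0": 0.01,         # initial learning rate
    "optimizer": "SGD",
}


def train(model_cfg: str = "yolov8n.yaml", data_cfg: str = "train_damage.yaml"):
    """Launch training with the table's settings.

    model_cfg defaults to the stock YOLOv8n config as a stand-in for the
    unpublished Train-YOLO definition; data_cfg is a hypothetical dataset file.
    """
    # Imported lazily so the configuration can be inspected without ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(model_cfg)  # builds an untrained network from the YAML config
    return model.train(data=data_cfg, **TRAIN_ARGS)
```

Calling `train()` would start a 300-epoch run; keeping the hyperparameters in one dictionary makes it easy to reuse the same settings across the ablation variants.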

| Model | Params/M | GFLOPs | P/% | Recall/% | mAP@50/% | F1 Score/% | Size/MB |
|---|---|---|---|---|---|---|---|
| YOLOv8 | 3.01 | 8.2 | 87.8 | 76.5 | 82.7 | 81.7 | 5.98 |
| YOLOv8 + ADown | 2.71 (−0.30) | 7.5 (−0.7) | 87.1 (−0.7) | 79.2 (+2.7) | 85.8 (+3.1) | 83.0 (+1.3) | 5.44 (−0.54) |
| YOLOv8 + C2f-Rep | 2.68 (−0.33) | 7.3 (−0.9) | 86.3 (−1.5) | 77.9 (+1.4) | 81.9 (−0.8) | 81.9 (+0.2) | 5.39 (−0.59) |
| YOLOv8 + DHD | 2.00 (−1.01) | 7.3 (−0.9) | 92.9 (+5.1) | 74.1 (−2.4) | 84.0 (+1.3) | 82.4 (+0.7) | 4.01 (−1.97) |
| YOLOv8 + DHD + C2f-Rep | 1.67 (−1.34) | 6.5 (−1.7) | 84.5 (−3.3) | 76.7 (+0.2) | 81.9 (−0.8) | 80.4 (−1.3) | 3.43 (−2.55) |
| YOLOv8 + DHD + ADown | 1.71 (−1.30) | 6.7 (−1.5) | 89.3 (+1.5) | 77.3 (+0.8) | 84.6 (+1.9) | 82.9 (+1.2) | 3.48 (−2.50) |
| YOLOv8 + ADown + C2f-Rep | 2.40 (−0.61) | 6.6 (−1.6) | 87.8 (0.0) | 78.9 (+2.4) | 83.6 (+0.9) | 83.1 (+1.4) | 4.86 (−1.12) |
| Train-YOLO | 1.38 (−1.63) | 5.8 (−2.4) | 92.9 (+5.1) | 78.6 (+2.1) | 84.9 (+2.2) | 85.2 (+3.5) | 2.90 (−3.08) |
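The bracketed entries in the ablation table are signed differences from the YOLOv8 baseline row. The short sketch below recomputes them and also expresses the savings of the final model as relative reductions; all numbers are copied from the table, and the helper names are illustrative only.

```python
"""Recompute ablation deltas and relative reductions from the table values."""

# (Params/M, GFLOPs, Size/MB) for the baseline and the final model, from the table.
BASELINE = {"params_m": 3.01, "gflops": 8.2, "size_mb": 5.98}
TRAIN_YOLO = {"params_m": 1.38, "gflops": 5.8, "size_mb": 2.90}


def absolute_change(new: float, base: float) -> float:
    """Signed difference new - base, as in the bracketed table entries."""
    return round(new - base, 2)


def relative_reduction(new: float, base: float) -> float:
    """Percentage decrease from base to new (positive means smaller)."""
    return round(100.0 * (base - new) / base, 1)


if __name__ == "__main__":
    for key in BASELINE:
        b, n = BASELINE[key], TRAIN_YOLO[key]
        print(f"{key}: {absolute_change(n, b):+.2f} ({relative_reduction(n, b)}% smaller)")
    # Precision change for the C2f-Rep variant: 86.3 vs. the 87.8 baseline.
    print(f"C2f-Rep precision: {absolute_change(86.3, 87.8):+.1f} points")
```

For Train-YOLO this gives reductions of about 54.2% in parameters, 29.3% in GFLOPs and 51.5% in model size relative to YOLOv8.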

| Model | P | Recall | mAP@50 | F1 Score | Size/MB |
|---|---|---|---|---|---|
| SSD | 0.79 | 0.402 | 0.743 | 0.533 | 91.6 |
| Faster R-CNN | 0.515 | 0.811 | 0.281 | 0.630 | 108 |
| YOLOv3 | 0.915 | 0.768 | 0.851 | 0.835 | 207.8 |
| YOLOv5 | 0.824 | 0.727 | 0.800 | 0.772 | 5.3 |
| YOLOv8 | 0.878 | 0.765 | 0.827 | 0.817 | 5.98 |
| NanoDet | 0.834 | 0.585 | 0.775 | 0.688 | 16.2 |
| EfficientDet-Lite | 0.426 | 0.382 | 0.411 | 0.403 | 12.0 |
| Train-YOLO | 0.929 | 0.786 | 0.849 | 0.852 | 2.90 |
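The F1 column of the comparison table is consistent with the standard harmonic mean of precision and recall, F1 = 2PR / (P + R). The sketch below recomputes it from the tabulated P and R; the values are copied from the table, and the function names are illustrative.

```python
"""Check the F1 column of the comparison table against F1 = 2PR / (P + R)."""

# (precision, recall, reported F1) copied from the comparison table.
RESULTS = {
    "SSD": (0.79, 0.402, 0.533),
    "Faster R-CNN": (0.515, 0.811, 0.630),
    "YOLOv3": (0.915, 0.768, 0.835),
    "YOLOv5": (0.824, 0.727, 0.772),
    "YOLOv8": (0.878, 0.765, 0.817),
    "NanoDet": (0.834, 0.585, 0.688),
    "EfficientDet-Lite": (0.426, 0.382, 0.403),
    "Train-YOLO": (0.929, 0.786, 0.852),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def max_f1_discrepancy(results=RESULTS) -> float:
    """Largest gap between recomputed and reported F1 over all models."""
    return max(abs(f1_score(p, r) - f1) for p, r, f1 in results.values())


if __name__ == "__main__":
    for name, (p, r, f1) in RESULTS.items():
        print(f"{name}: recomputed {f1_score(p, r):.4f}, reported {f1:.3f}")
```

Every recomputed value agrees with the reported one to within 0.001; the small remaining gaps (e.g. 0.8176 vs. 0.817 for YOLOv8) are what rounding P and R to three decimals would produce.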

| Fault Type | P (Train-YOLO) | P (YOLOv8) | R (Train-YOLO) | R (YOLOv8) | mAP (Train-YOLO) | mAP (YOLOv8) |
|---|---|---|---|---|---|---|
| Fine cracks | 0.932 | 0.875 | 0.655 | 0.628 | 0.764 | 0.729 |
| Coarse cracks | 0.934 | 0.916 | 0.812 | 0.757 | 0.856 | 0.818 |
| Fractures | 0.923 | 0.843 | 0.889 | 0.911 | 0.927 | 0.934 |
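The overall mAP@50 values in the comparison table (0.849 for Train-YOLO, 0.827 for YOLOv8) match, to three decimals, the unweighted mean of the three per-class values above. This suggests, as an assumption rather than something the text states, that the per-class "mAP" column is per-class AP@50 and that the overall figure is a macro-average over fault types. A minimal sketch of that averaging:

```python
"""Macro-average the per-class AP values from the fault-type table."""

from statistics import mean

# Per-class AP (the table's "mAP" columns) in the order:
# fine cracks, coarse cracks, fractures.
PER_CLASS_AP = {
    "Train-YOLO": [0.764, 0.856, 0.927],
    "YOLOv8": [0.729, 0.818, 0.934],
}


def macro_map(ap_values):
    """Unweighted mean over classes, the usual definition of mAP."""
    return mean(ap_values)


if __name__ == "__main__":
    for model, aps in PER_CLASS_AP.items():
        print(f"{model}: mAP@50 = {macro_map(aps):.3f}")
```

Because every class has equal weight, Train-YOLO's gains on fine and coarse cracks outweigh its slight drop on fractures (0.927 vs. 0.934) in the overall score.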

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zong, H.; Jiang, Y.; Huang, X. Train-YOLO: An Efficient and Lightweight Network Model for Train Component Damage Detection. Sensors 2025, 25, 4953. https://doi.org/10.3390/s25164953
