A Contrastive Representation Learning Method for Event Classification in Φ-OTDR Systems

Abstract

1. Introduction

2. Methodology

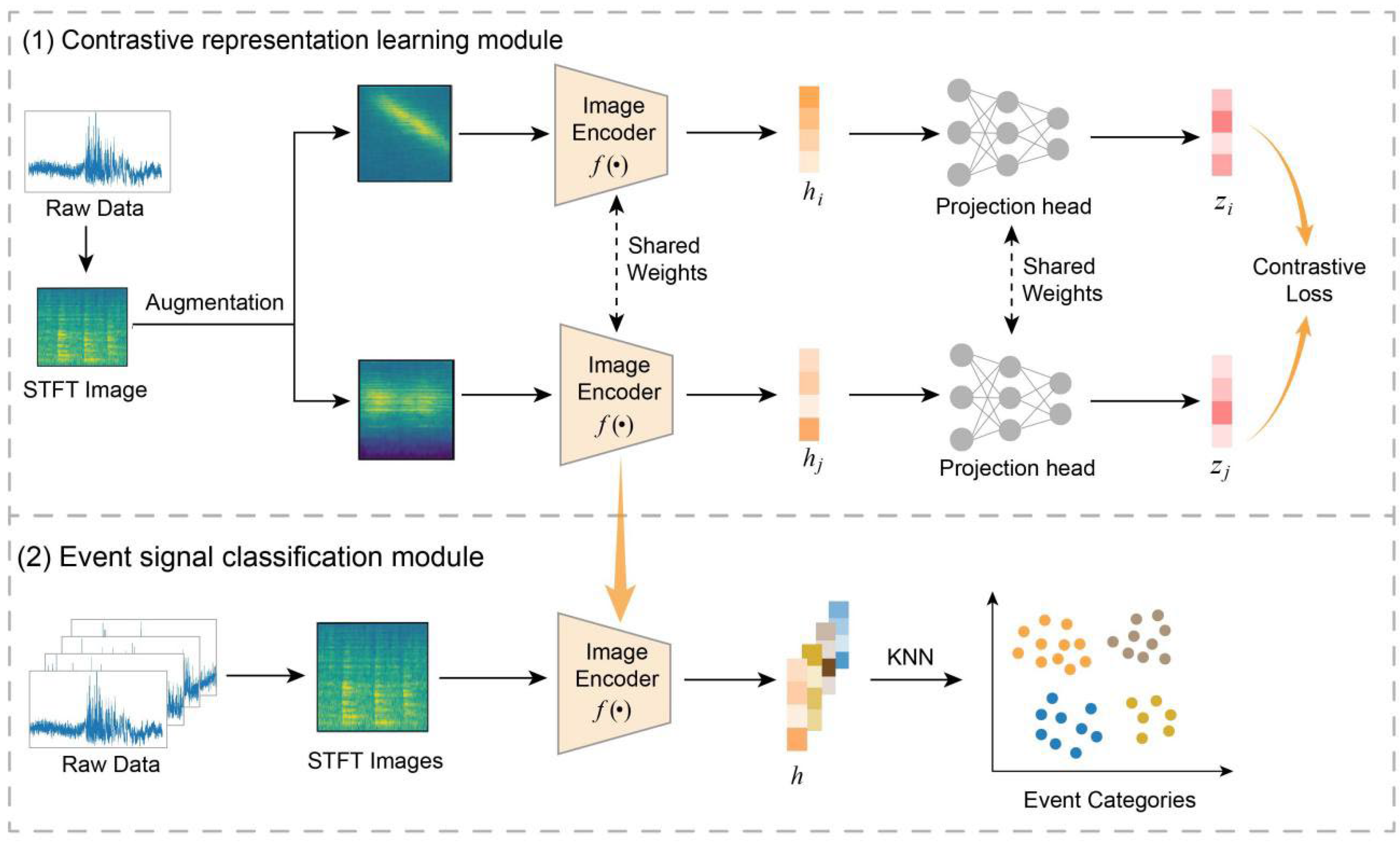

2.1. Overview of CLWTNet

2.2. Contrastive Representation Learning Module

2.2.1. Signal Transformation

2.2.2. Image Encoder

2.2.3. Projection Head

2.2.4. Contrastive Loss Function

2.3. Event Signal Classification Module

3. Experiments and Results

3.1. Data Collection and Preprocessing

3.2. Evaluation Metrics

3.3. Performance Comparison

- AE [34]: AE encodes an STFT image into a low-dimensional representation and then decodes that representation to reconstruct the STFT image. It is trained in an unsupervised manner, without requiring labeled samples.

- DAE [35]: DAE is a variant of AE. It first applies a corruption process to the STFT image and then reconstructs the original, uncorrupted STFT image from the low-dimensional representation, which makes the representations learned from STFT images more robust.

- VAE [36]: VAE uses probabilistic principles to map the STFT image to the parameters of a probability distribution in the latent space. A latent representation is then sampled from this distribution and used to reconstruct the STFT image. VAE is trained in an unsupervised manner by optimizing the reconstruction loss on unlabeled samples.

- CLNet [29]: CLNet is a contrastive representation learning method and a variant of CLWTNet. Whereas CLWTNet builds its image encoder from WTConv layers, CLNet builds it from conventional CNN layers.

- ResNet [37]: This is a neural network architecture that utilizes residual connections to mitigate the vanishing gradient problem, allowing gradients to flow more effectively through the network during training. It is frequently used in image classification tasks.

- AlexNet [38]: This is a deep convolutional neural network that includes multiple convolutional layers, ReLU activations, and dropout regularization. It has had a profound influence on computer vision research.

- MFM [11]: MFM manually extracts features from each event signal and uses them as representations for classification.
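The difference between the three autoencoder baselines comes down to what the encoder sees and how the latent code is produced. The following is a minimal sketch of those two steps only, not the baselines' actual implementation. The masking-noise corruption, the `drop_prob` value, the toy 2×3 "STFT image", and all function names are assumptions chosen for illustration; the source does not specify the corruption process or the latent dimensionality.

```python
import math
import random


def corrupt(image, drop_prob, rng):
    """DAE-style masking noise (assumed): zero each pixel with probability drop_prob."""
    return [[0.0 if rng.random() < drop_prob else px for px in row] for row in image]


def reparameterize(mu, log_var, rng):
    """VAE-style sampling: z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def mse(a, b):
    """Mean squared reconstruction error between two equally sized 2-D lists."""
    diffs = [(x - y) ** 2 for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


rng = random.Random(0)
stft = [[0.2, 0.8, 0.5], [0.9, 0.1, 0.4]]  # toy stand-in for an STFT image

# AE: the encoder sees `stft` and the reconstruction target is `stft`.
# DAE: the encoder sees `noisy`, but the reconstruction target is still `stft`.
noisy = corrupt(stft, drop_prob=0.3, rng=rng)

# VAE: the encoder outputs distribution parameters; the decoder sees a sample z.
z = reparameterize(mu=[0.0, 1.0], log_var=[-2.0, -2.0], rng=rng)

print("corruption error:", mse(stft, noisy))
print("latent sample:", z)
```

In all three cases the training signal is a reconstruction error against the clean image, which is why none of them needs labels.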

| Method Name | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| AE | 0.774 | 0.769 | 0.768 | 0.767 |
| DAE | 0.787 | 0.786 | 0.781 | 0.781 |
| VAE | 0.798 | 0.795 | 0.789 | 0.789 |
| CLNet | 0.873 | 0.870 | 0.867 | 0.867 |
| MFM | 0.820 | 0.816 | 0.815 | 0.814 |
| ResNet | 0.925 | 0.922 | 0.920 | 0.919 |
| AlexNet | 0.924 | 0.919 | 0.918 | 0.917 |
| CLWTNet | 0.922 | 0.919 | 0.921 | 0.915 |
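For readers who want to reproduce the four columns above, the sketch below computes accuracy together with macro-averaged precision, recall, and F1-score from a confusion matrix. Macro averaging is an assumption here, since this excerpt does not state the averaging scheme; the function name `macro_metrics` and the toy two-class matrix are hypothetical.

```python
def macro_metrics(confusion):
    """Compute accuracy and macro-averaged precision, recall, and F1.

    confusion[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    accuracy = sum(confusion[k][k] for k in range(n)) / total

    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f)

    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,  # mean of per-class F1, not the F1 of the mean P and R
    }


# Toy two-class example (not data from the paper).
toy = [[8, 2],
       [1, 9]]
metrics = macro_metrics(toy)
print(metrics)
```

Because the macro F1-score is the mean of per-class F1 values, it can fall below both the macro precision and the macro recall. Rows such as CLWTNet, where the F1-score is lower than both, would be consistent with this kind of per-class averaging, although the source does not confirm it.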

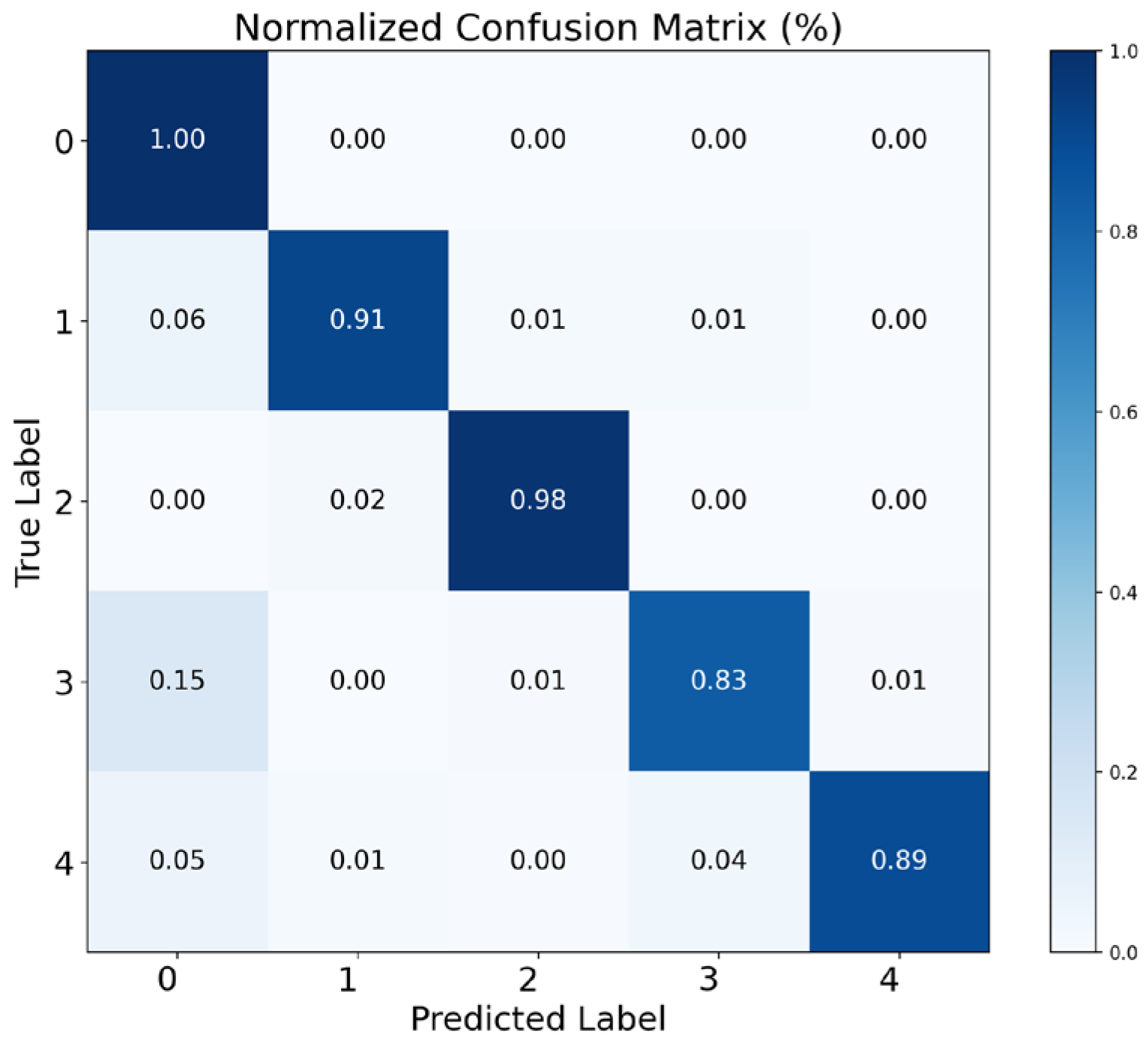

3.4. Analysis of Classification Performance

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Yang, Y.; Zhang, H.; Li, Y. Pipeline Safety Early Warning by Multifeature-Fusion CNN and LightGBM Analysis of Signals From Distributed Optical Fiber Sensors. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.

- Juarez, J.C.; Taylor, H.F. Field Test of a Distributed Fiber-Optic Intrusion Sensor System for Long Perimeters. Appl. Opt. 2007, 46, 1968–1971.

- Lopez-Higuera, J.M.; Rodriguez Cobo, L.; Quintela Incera, A.; Cobo, A. Fiber Optic Sensors in Structural Health Monitoring. J. Light. Technol. 2011, 29, 587–608.

- Merlo, S.; Malcovati, P.; Norgia, M.; Pesatori, A.; Svelto, C.; Pniov, A.; Zhirnov, A.; Nesterov, E.; Karassik, V. Runways Ground Monitoring System by Phase-Sensitive Optical-Fiber OTDR. In Proceedings of the 2017 IEEE International Workshop on Metrology for AeroSpace (MetroAeroSpace), Padua, Italy, 21 June 2017; pp. 523–529.

- Wu, H.; Li, X.; Peng, Z.; Rao, Y. A Novel Intrusion Signal Processing Method for Phase-Sensitive Optical Time-Domain Reflectometry (Φ-OTDR). In Proceedings of the 23rd International Conference on Optical Fiber Sensors, Santander, Spain, 2 June 2014; p. 91575.

- Timofeev, A.V.; Groznov, D.I. Classification of Seismoacoustic Emission Sources in Fiber Optic Systems for Monitoring Extended Objects. Optoelectron. Instrum. Data Process. 2020, 56, 50–60.

- Zhou, Y.; Zhang, G.; Li, Q.; Teng, W.; Yang, Q. Distributed Optical Fiber Intrusion Warning Based on Multi-Model Fusion. In Proceedings of the 2020 7th International Conference on Information, Cybernetics, and Computational Social Systems (ICCSS), Guangzhou, China, 13 November 2020; pp. 843–848.

- Qin, Z.; Chen, H.; Chang, J. Signal-to-Noise Ratio Enhancement Based on Empirical Mode Decomposition in Phase-Sensitive Optical Time Domain Reflectometry Systems. Sensors 2017, 17, 1870.

- Wiesmeyr, C.; Litzenberger, M.; Waser, M.; Papp, A.; Garn, H.; Neunteufel, G.; Döller, H. Real-Time Train Tracking from Distributed Acoustic Sensing Data. Appl. Sci. 2020, 10, 448.

- Zhan, Y.; Xu, L.; Han, M.; Zhang, W.; Lin, G.; Cui, X.; Li, Z.; Yang, Y. Multi-Dimensional Feature Extraction Method for Distributed Optical Fiber Sensing Signals. J. Opt. 2024, 53, 662–675.

- Jia, H.; Liang, S.; Lou, S.; Sheng, X. A K-Nearest Neighbor Algorithm-Based Near Category Support Vector Machine Method for Event Identification of Φ-OTDR. IEEE Sens. J. 2019, 19, 3683–3689.

- Fedorov, A.K.; Anufriev, M.N.; Zhirnov, A.A.; Stepanov, K.V.; Nesterov, E.T.; Namiot, D.E.; Karasik, V.E.; Pnev, A.B. Note: Gaussian Mixture Model for Event Recognition in Optical Time-Domain Reflectometry Based Sensing Systems. Rev. Sci. Instrum. 2016, 87, 036107.

- Wang, Z.; Lou, S.; Liang, S.; Sheng, X. Multi-Class Disturbance Events Recognition Based on EMD and XGBoost in φ-OTDR. IEEE Access 2020, 8, 63551–63558.

- Shi, Y.; Wang, Y.; Zhao, L.; Fan, Z. An Event Recognition Method for Φ-OTDR Sensing System Based on Deep Learning. Sensors 2019, 19, 3421.

- Kandamali, D.F.; Cao, X.; Tian, M.; Jin, Z.; Dong, H.; Yu, K. Machine Learning Methods for Identification and Classification of Events in Φ-OTDR Systems: A Review. Appl. Opt. 2022, 61, 2975–2997.

- Wu, H.; Chen, J.; Liu, X.; Xiao, Y.; Wang, M.; Zheng, Y.; Rao, Y. One-Dimensional CNN-Based Intelligent Recognition of Vibrations in Pipeline Monitoring With DAS. J. Light. Technol. 2019, 37, 4359–4366.

- Sun, Q.; Li, Q.; Chen, L.; Quan, J.; Li, L. Pattern Recognition Based on Pulse Scanning Imaging and Convolutional Neural Network for Vibrational Events in Φ-OTDR. Optik 2020, 219, 165205.

- Wu, H.; Yang, M.; Yang, S.; Lu, H.; Wang, C.; Rao, Y. A Novel DAS Signal Recognition Method Based on Spatiotemporal Information Extraction With 1DCNNs-BiLSTM Network. IEEE Access 2020, 8, 119448–119457.

- Zhang, Y.; Zhao, W.; Dong, L.; Zhang, C.; Peng, G.; Shang, Y.; Liu, G.; Yao, C.; Liu, S.; Wan, N.; et al. Intrusion Event Identification Approach for Distributed Vibration Sensing Using Multimodal Fusion. IEEE Sens. J. 2024, 24, 37114–37124.

- Zeng, Y.; Zhang, J.; Zhong, Y.; Deng, L.; Wang, M. STNet: A Time-Frequency Analysis-Based Intrusion Detection Network for Distributed Optical Fiber Acoustic Sensing Systems. Sensors 2024, 24, 1570.

- Le-Xuan, T.; Nguyen-Chi, T.; Bui-Tien, T.; Tran-Ngoc, H. ResUNet4T: A Potential Deep Learning Model for Damage Detection Based on a Numerical Case Study of a Large-Scale Bridge Using Time-Series Data. Eng. Struct. 2025, 340, 120668.

- Shi, Y.; Liu, H.; Zhang, W.; Cheng, Z.; Chen, J.; Sun, Q. Event Recognition Method Based on Feature Synthesizing for a Zero-Shot Intelligent Distributed Optical Fiber Sensor. Opt. Express 2024, 32, 8321–8334.

- Wang, J.; Huang, S.; Wang, C.; Qu, S.; Wang, W.; Liu, G.; Yao, C.; Wan, N.; Kong, X.; Zhao, H.; et al. Multichannel Hybrid Parallel Classification Network Based on Siamese Network for the DAS Event Recognition System. IEEE Sens. J. 2025, 25, 2629–2637.

- Shiloh, L.; Eyal, A.; Giryes, R. Efficient Processing of Distributed Acoustic Sensing Data Using a Deep Learning Approach. J. Light. Technol. 2019, 37, 4755–4762.

- Zhang, J.; Zhao, X.; Zhao, Y.; Zhong, X.; Wang, Y.; Meng, F.; Ding, J.; Niu, Y.; Zhang, X.; Dong, L.; et al. Unsupervised Learning Method for Events Identification in Φ-OTDR. Opt. Quantum Electron. 2022, 54, 457.

- Shi, Y.; Dai, S.; Liu, X.; Zhang, Y.; Wu, X.; Jiang, T. Event Recognition Method Based on Dual-Augmentation for a Φ-OTDR System with a Few Training Samples. Opt. Express 2022, 30, 31232–31243.

- Zhou, Y.; Yang, G.; Xu, L.; Wang, L.; Tang, M. Mixed Event Separation and Identification Based on a Convolutional Neural Network Trained with the Domain Transfer Method for a Φ-OTDR Sensing System. Opt. Express 2024, 32, 25849–25865.

- Wu, Z.; Xiong, Y.; Yu, S.X.; Lin, D. Unsupervised Feature Learning via Non-Parametric Instance Discrimination. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 16 December 2018; pp. 3733–3742.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A Simple Framework for Contrastive Learning of Visual Representations. In Proceedings of the 37th International Conference on Machine Learning, PMLR, Vienna, Austria, 21 November 2020; pp. 1597–1607.

- Hu, H.; Wang, X.; Zhang, Y.; Chen, Q.; Guan, Q. A Comprehensive Survey on Contrastive Learning. Neurocomputing 2024, 610, 128645.

- Finder, S.E.; Amoyal, R.; Treister, E.; Freifeld, O. Wavelet Convolutions for Large Receptive Fields. In Proceedings of the Computer Vision-ECCV 2024, Milan, Italy, 29 September–4 October 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Springer: Cham, Switzerland, 2025; pp. 363–380.

- Cao, X.; Su, Y.; Jin, Z.; Yu, K. An Open Dataset of φ-OTDR Events with Two Classification Models as Baselines. Results Opt. 2023, 10, 100372.

- Lyu, Z.; Zhu, C.; Pu, Y.; Chen, Z.; Yang, K. Yang Two-Stage Intrusion Events Recognition for Vibration Signals From Distributed Optical Fiber Sensors. IEEE Trans. Instrum. Meas. 2024, 73, 1–10.

- Zhai, J.; Zhang, S.; Chen, J.; He, Q. Autoencoder and Its Various Variants. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 415–419.

- Vincent, P.; Larochelle, H.; Lajoie, I.; Bengio, Y.; Manzagol, P.A.; Bottou, L. Stacked Denoising Autoencoders: Learning Useful Representations in a Deep Network with a Local Denoising Criterion. J. Mach. Learn. Res. 2010, 11, 3371–3408.

- Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. In Proceedings of the International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2012; Volume 25.

| Event Type | Label | Number of Samples | Description |
|---|---|---|---|
| Background | 0 | 547 | Signals were collected during the day and at night, in the absence of intentional interference. |
| Digging | 1 | 793 | A person used a shovel to dig near the sensing fiber at a rate of once per second. |
| Knocking | 2 | 890 | A person used a shovel to tap near the sensing fiber at a rate of once per second. |
| Watering | 3 | 863 | A person used a watering can to pour water near the sensing fiber from a height of about half a meter. |
| Shaking | 4 | 620 | The fence carrying the sensing fiber was shaken by a person to simulate climbing. |
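The sample counts in this table determine how balanced the five classes are. As a rough illustration, the sketch below computes each class's share of the dataset and the accuracy a classifier would reach by always predicting the largest class. The counts are copied from the table; treating the evaluation split as having the same proportions is an assumption, and the variable names are hypothetical.

```python
# Sample counts per event type, copied from the dataset table.
counts = {
    "Background": 547,
    "Digging": 793,
    "Knocking": 890,
    "Watering": 863,
    "Shaking": 620,
}

total = sum(counts.values())
shares = {name: n / total for name, n in counts.items()}

# Accuracy of a trivial classifier that always predicts the most frequent class,
# assuming the evaluation split follows the same class proportions.
majority_class = max(counts, key=counts.get)
majority_baseline = counts[majority_class] / total

print(f"total samples: {total}")
for name, share in shares.items():
    print(f"{name:<10} {share:.3f}")
print(f"majority class: {majority_class}, baseline accuracy: {majority_baseline:.3f}")
```

The classes are moderately imbalanced, which is one reason to read precision, recall, and F1-score alongside accuracy.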

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, T.; Peng, X.; Liu, Y.; Yin, K.; Li, P. A Contrastive Representation Learning Method for Event Classification in Φ-OTDR Systems. Sensors 2025, 25, 4744. https://doi.org/10.3390/s25154744