Adaptive Covariance Matrix for UAV-Based Visual–Inertial Navigation Systems Using Gaussian Formulas

Abstract

1. Introduction

- A novel covariance matrix estimation method is proposed that adapts efficiently to image quality, using the Laplacian operator to evaluate the motion blur score.

- A novel VINS framework is constructed, transforming the adaptive covariance matrix into visual uncertainties using Gaussian formulas to improve the system’s performance, especially in dynamic environments.

- Extensive simulation and field experiments validate the effectiveness of our method, demonstrating significant improvements in navigation performance compared to the traditional VINS method.

2. Methodology

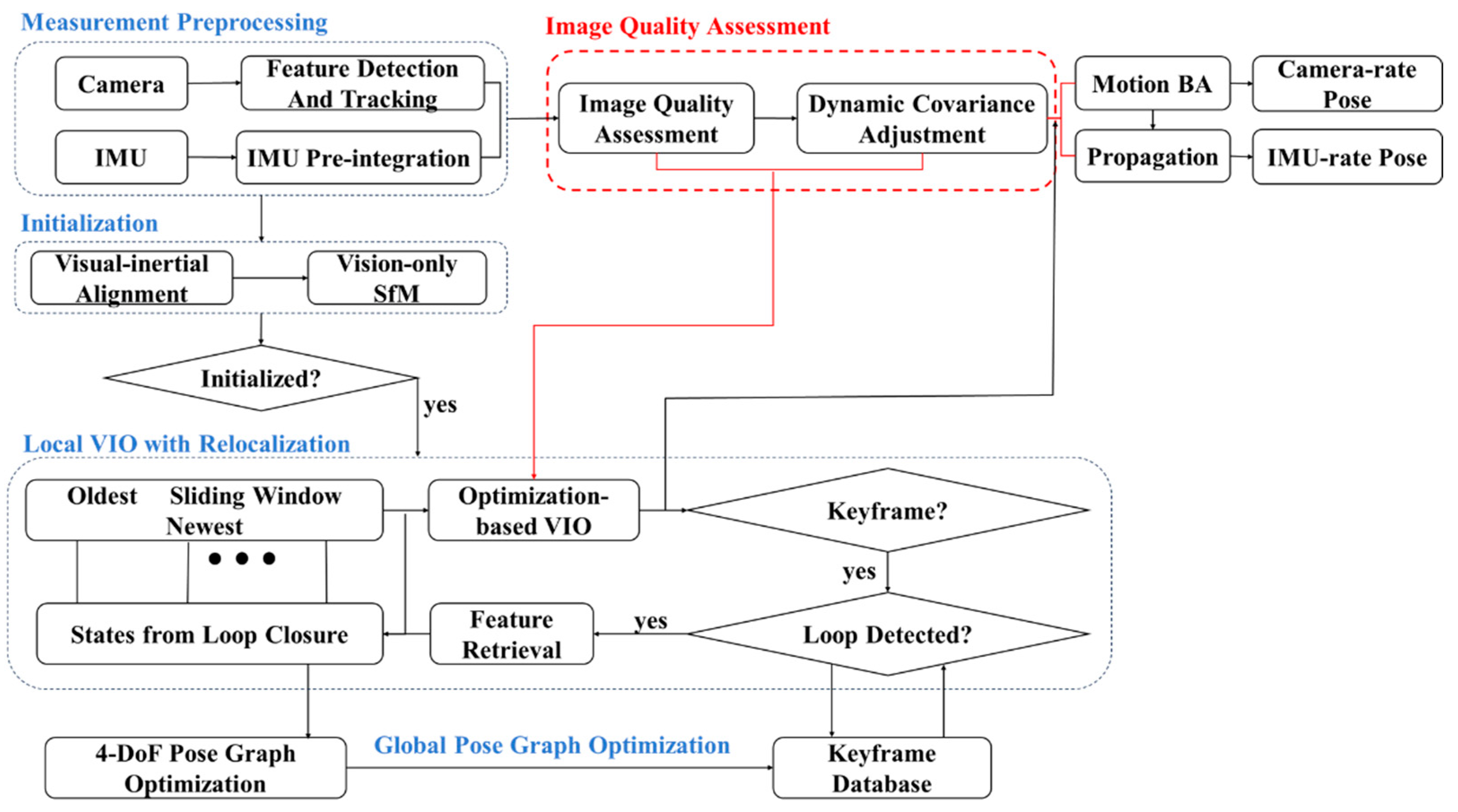

2.1. System Overview

2.2. Image Quality Calculation

- (a) Image Acquisition: Obtain the image to be analyzed (Lines 2–3).

- (b) Gaussian Smoothing: Apply Gaussian smoothing to the image to reduce noise (Lines 4–7).

- (c) Laplacian Calculation: Compute the Laplacian of the smoothed image using the convolution kernel (Lines 8–9).

- (d) Variance Computation: Calculate the variance of the Laplacian result to quantify the image blur (Lines 10–17).

| Algorithm 1: Calculate Image Blur Using Laplacian Algorithm |
|---|
| Input: Image |
| Output: Image Blur |
| 1: function CALCULATE_IMAGE_BLUR(image) |
| 2:     image ← LOAD_IMAGE(image) |
| 3:     (h, w) ← DIMENSIONS(image) |
| 4:     sigma ← 1.0 |
| 5:     gaussian_kernel_size ← 3 |
| 6:     gaussian_kernel ← GAUSSIAN_KERNEL(gaussian_kernel_size, sigma) |
| 7:     smoothed_image ← CONVOLVE(image, gaussian_kernel, (h, w)) |
| 8:     laplacian_kernel ← [[0, −1, 0], [−1, 4, −1], [0, −1, 0]] |
| 9:     laplacian_image ← CONVOLVE(smoothed_image, laplacian_kernel, (h, w)) |
| 10:     sum_pixels ← 0 |
| 11:     for each pixel in laplacian_image do |
| 12:         sum_pixels ← sum_pixels + pixel |
| 13:     mean ← sum_pixels/(h * w) |
| 14:     variance_sum ← 0 |
| 15:     for each pixel in laplacian_image do |
| 16:         variance_sum ← variance_sum + (pixel − mean)^2 |
| 17:     Image Blur ← variance_sum/(h * w) |
| 18:     return Image Blur |
| 19: end function |
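The steps of Algorithm 1 can be sketched in plain Python as follows. This is a minimal illustration rather than the authors' implementation. It assumes a grayscale image given as a nested list of floats. It also assumes replicated borders during filtering, since Algorithm 1 does not specify border handling. The helper names `gaussian_kernel`, `convolve`, and `calculate_image_blur` are chosen here to mirror the pseudocode. As is usual for variance-of-Laplacian measures, a larger returned value corresponds to a sharper image.

```python
import math

# 3x3 Laplacian kernel from Algorithm 1 (line 8).
LAPLACIAN_KERNEL = [[0, -1, 0], [-1, 4, -1], [0, -1, 0]]


def gaussian_kernel(size=3, sigma=1.0):
    """Return a normalized size x size Gaussian kernel as nested lists."""
    half = size // 2
    raw = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
            for x in range(-half, half + 1)]
           for y in range(-half, half + 1)]
    total = sum(sum(row) for row in raw)
    return [[value / total for value in row] for row in raw]


def convolve(image, kernel):
    """Same-size 2D filtering with replicated borders.

    Border replication is an assumption. Both kernels used here are
    symmetric, so correlation and convolution give the same result.
    """
    h, w = len(image), len(image[0])
    k = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for dr in range(-k, k + 1):
                for dc in range(-k, k + 1):
                    rr = min(max(r + dr, 0), h - 1)  # clamp row index
                    cc = min(max(c + dc, 0), w - 1)  # clamp column index
                    acc += image[rr][cc] * kernel[dr + k][dc + k]
            out[r][c] = acc
    return out


def calculate_image_blur(image):
    """Variance of the Laplacian of the Gaussian-smoothed image."""
    h, w = len(image), len(image[0])
    smoothed = convolve(image, gaussian_kernel(3, 1.0))   # lines 4-7
    laplacian = convolve(smoothed, LAPLACIAN_KERNEL)      # lines 8-9
    mean = sum(sum(row) for row in laplacian) / (h * w)   # lines 10-13
    variance_sum = sum((p - mean) ** 2 for row in laplacian for p in row)
    return variance_sum / (h * w)                         # lines 14-17


if __name__ == "__main__":
    # Synthetic vertical step edge, and a copy blurred twice beforehand.
    sharp = [[0.0] * 8 + [255.0] * 8 for _ in range(16)]
    soft = convolve(convolve(sharp, gaussian_kernel()), gaussian_kernel())
    print("sharp score:", calculate_image_blur(sharp))
    print("soft score: ", calculate_image_blur(soft))
```

The explicit loops follow the structure of the pseudocode. For real image sizes a vectorized array library would be the more practical choice.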

2.3. Adaptive Covariance Matrix Estimation

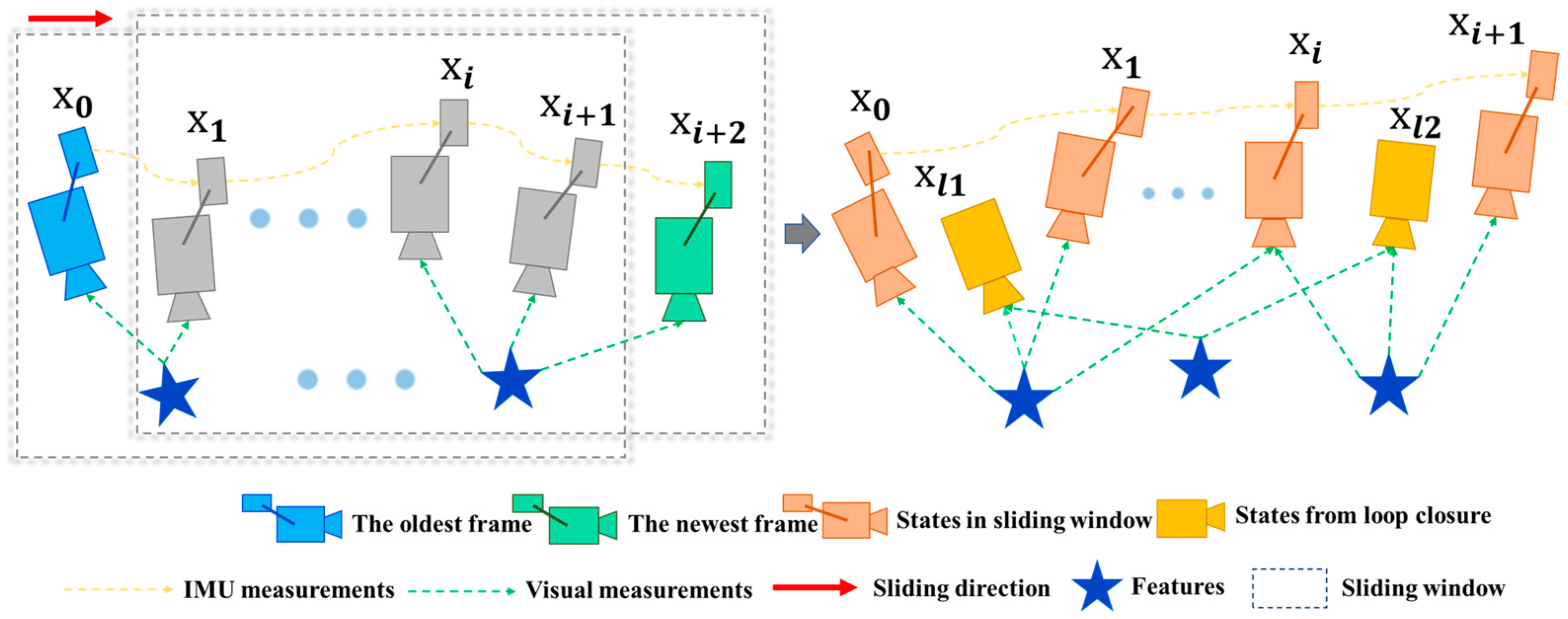

2.4. Adaptive Integration of Visual–Inertial Odometry with Global Optimization

3. Experiments and Analysis

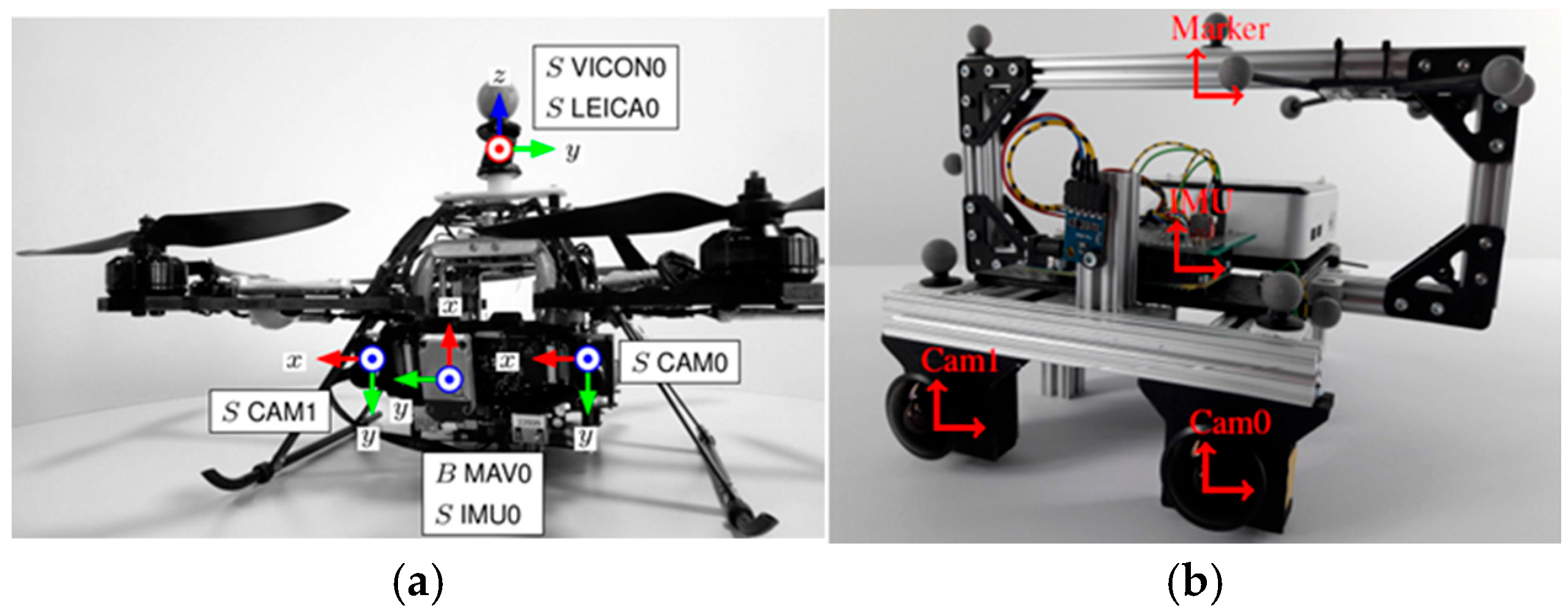

3.1. Experimental Data

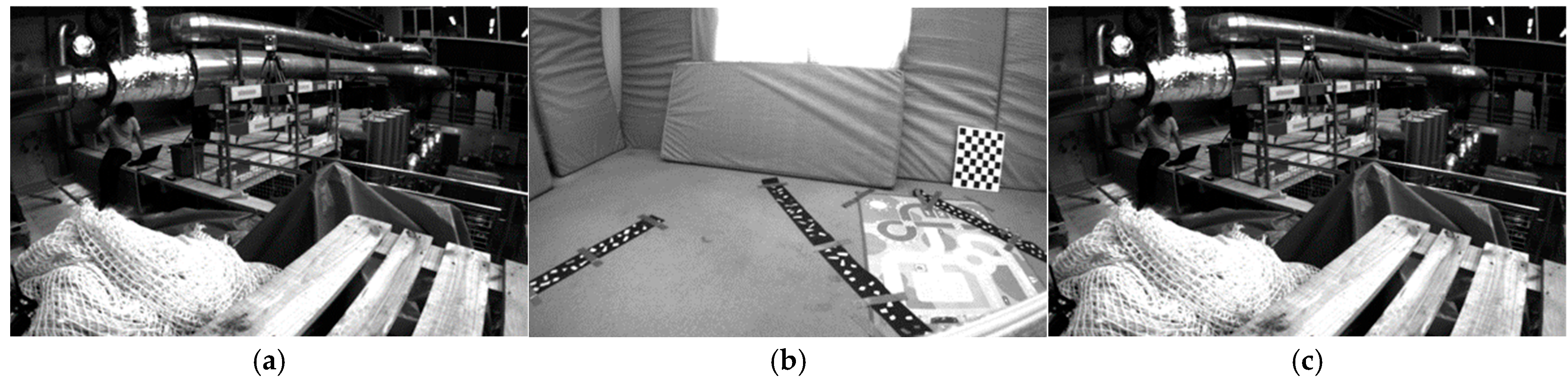

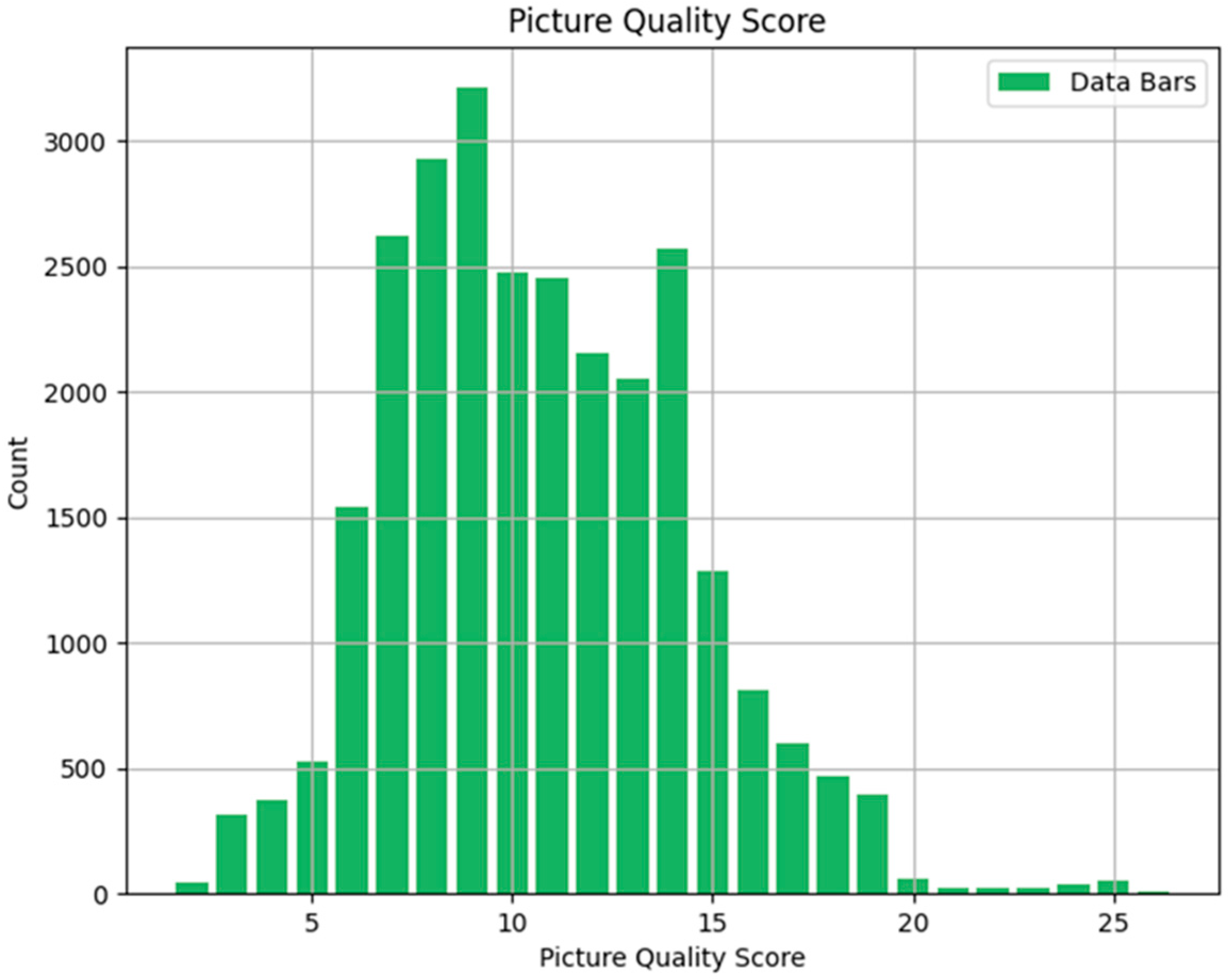

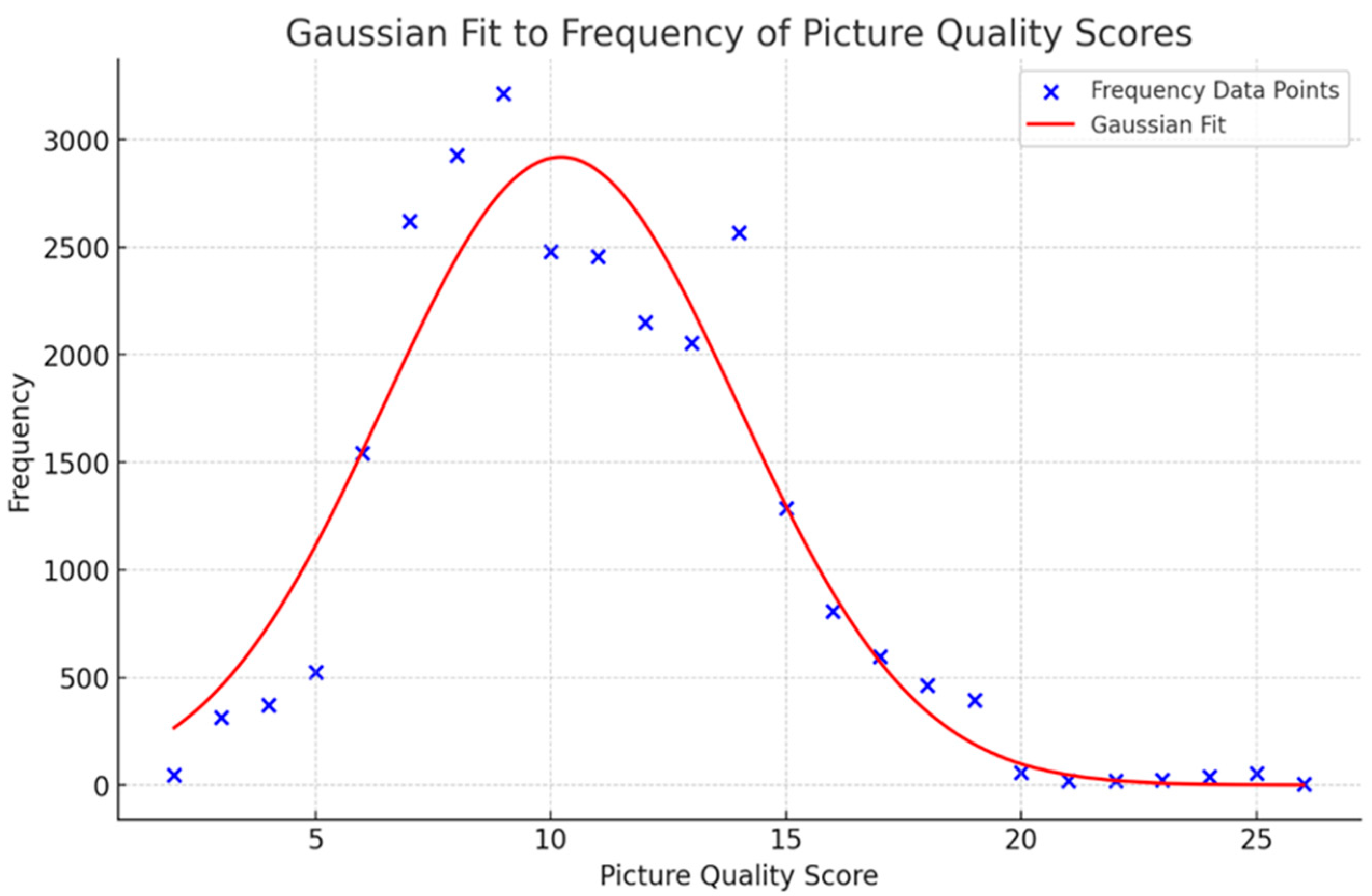

3.2. Image Blur Processing and Quality Assessment

3.3. Experimental Analysis of Adaptive VINS System Based on Image Quality

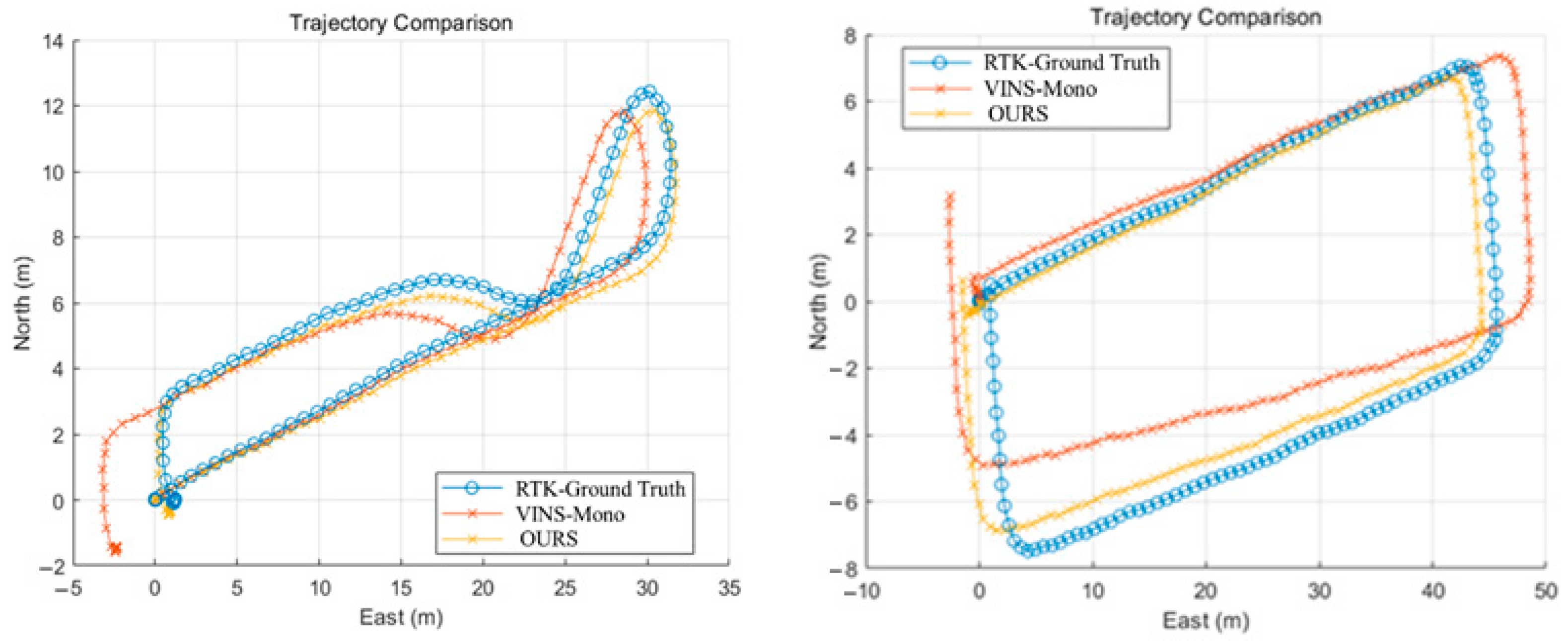

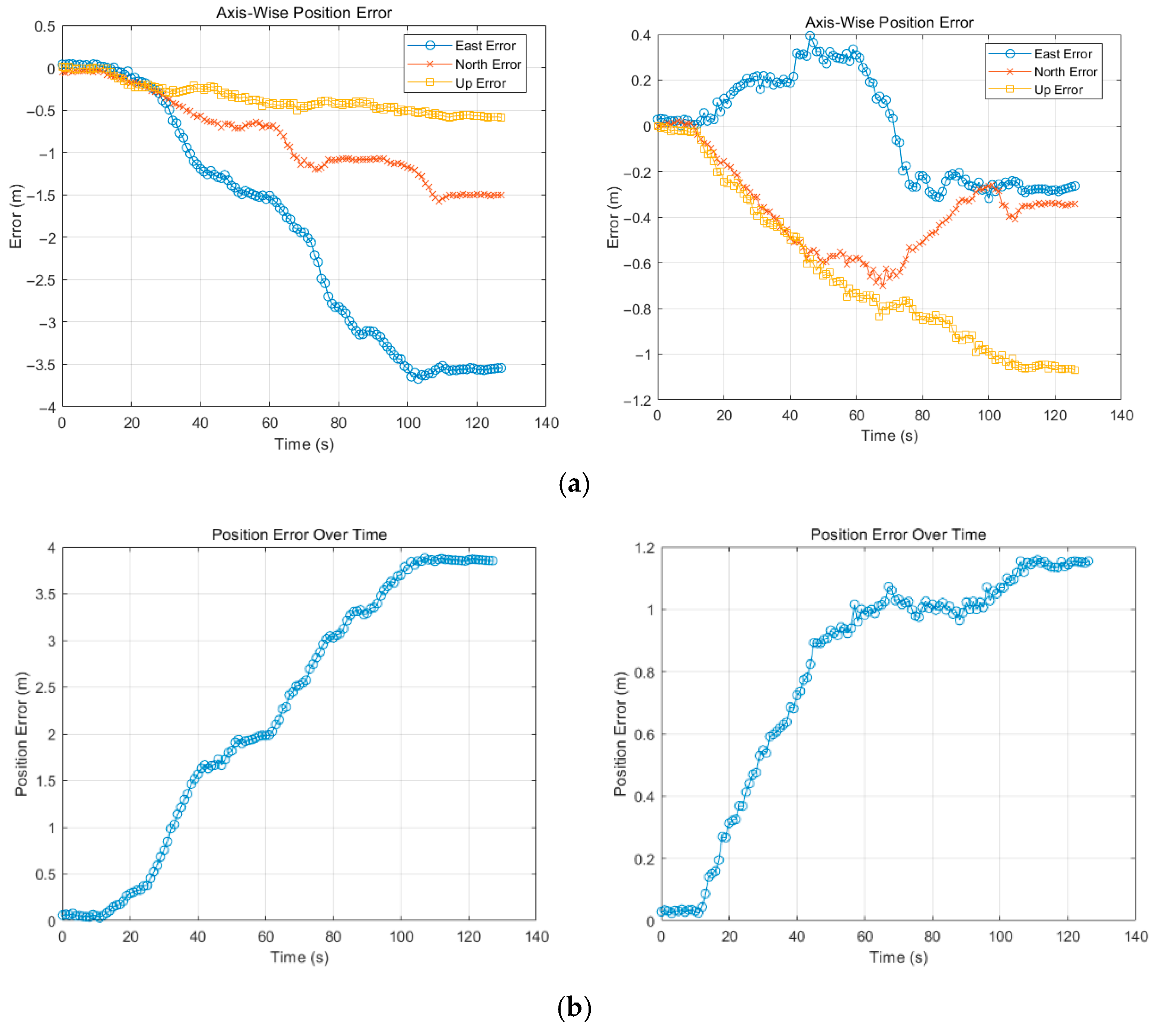

3.4. Field Experiments Outdoors

4. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sequence | Environment | Key Features |
|---|---|---|
| MH01 | Machine Hall | High-speed flight, cluttered environment |
| MH02 | Machine Hall | Complex, unstructured environment, varying lighting |
| MH03 | Machine Hall | Fast motion, dark scenes, high angular velocities |
| MH04 | Machine Hall | Challenging navigation with dynamic obstacles |
| MH05 | Machine Hall | High-speed, complex trajectories, low-light conditions |
| V101 | Vicon Room | Controlled environment, standard trajectories |
| V102 | Vicon Room | Varying viewpoints, moderate motion |
| V103 | Vicon Room | Detailed feature tracking, controlled lighting |
| V201 | Vicon Room | More complex paths, higher speeds |
| V202 | Vicon Room | Diverse scene changes, increased difficulty |
| V203 | Vicon Room | Long trajectories, mixed motion scenarios |
| Room1 | Indoor Room | Moderate lighting, structured scenes with furniture |
| Room2 | Indoor Room | Cluttered scenes, varied illumination |
| Room3 | Indoor Room | Rapid motion, dynamic lighting changes |

| Dataset | Ours | VINS-Mono | Optimization Rate |
|---|---|---|---|
| MH01 | 0.226439 | 0.249729 | 9.33% |
| MH02 | 0.152096 | 0.160133 | 5.02% |
| MH03 | 0.292502 | 0.356147 | 17.87% |
| MH04 | 0.285233 | 0.367638 | 22.41% |
| MH05 | 0.297453 | 0.327509 | 9.18% |
| V101 | 0.18902 | 0.196633 | 3.87% |
| V102 | 0.183044 | 0.241726 | 24.28% |
| V103 | 0.283535 | 0.304758 | 6.96% |
| V201 | 0.134362 | 0.166646 | 19.37% |
| V202 | 0.202847 | 0.26214 | 23.23% |
| V203 | 0.325277 | 0.441438 | 26.31% |
| Room1 | 0.188013 | 0.224466 | 16.24% |
| Room2 | 0.266701 | 0.32504 | 17.95% |
| Room3 | 1.031089 | 2.17341 | 52.56% |
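The Optimization Rate column appears to be the relative error reduction with respect to VINS-Mono, i.e. (VINS-Mono − Ours) / VINS-Mono. That formula is an assumption inferred from the tabulated values, not stated in the table. The function name `optimization_rate` is likewise chosen here for illustration. A short sketch that recomputes a few rows under this assumption:

```python
def optimization_rate(ours, baseline):
    """Relative error reduction of `ours` versus `baseline`, in percent.

    Assumed definition: 100 * (baseline - ours) / baseline.
    """
    if baseline <= 0:
        raise ValueError("baseline error must be positive")
    return 100.0 * (baseline - ours) / baseline


# (Ours, VINS-Mono) pairs copied from the table above.
results = {
    "MH01": (0.226439, 0.249729),
    "MH04": (0.285233, 0.367638),
    "V203": (0.325277, 0.441438),
    "Room3": (1.031089, 2.17341),
}

if __name__ == "__main__":
    for name, (ours, baseline) in results.items():
        print(f"{name}: {optimization_rate(ours, baseline):.2f}%")
```

For these four sequences the recomputed values agree with the table to two decimal places (9.33%, 22.41%, 26.31%, and 52.56%).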

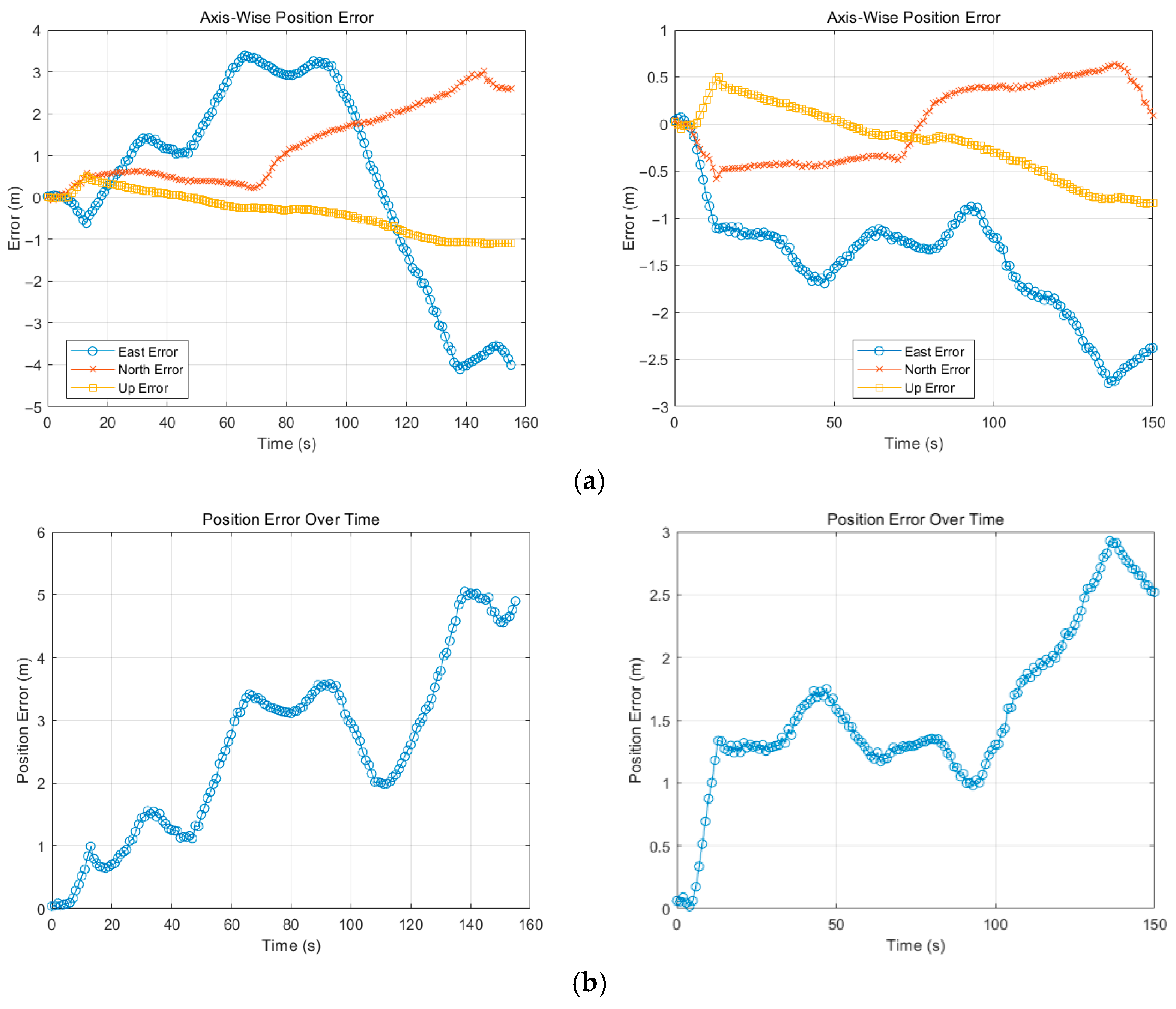

| Metric | Method | E (m) | N (m) | U (m) | 3D (m) |
|---|---|---|---|---|---|
| RMS (60 s *) | VINS-Mono | 1.03 | 0.58 | 0.23 | 1.20 |
| | Ours | 0.22 | 0.41 | 0.41 | 0.62 |
| RMS (100 s) | VINS-Mono | 1.91 | 0.89 | 0.32 | 2.13 |
| | Ours | 0.22 | 0.48 | 0.61 | 0.81 |
| RMS (full) | VINS-Mono | 2.32 | 1.06 | 0.38 | 2.58 |
| | Ours | 0.23 | 0.46 | 0.72 | 0.88 |
| STD (60 s) | VINS-Mono | 0.71 | 0.36 | 0.12 | 0.77 |
| | Ours | 0.12 | 0.23 | 0.26 | 0.35 |
| STD (100 s) | VINS-Mono | 1.15 | 0.48 | 0.14 | 1.22 |
| | Ours | 0.21 | 0.22 | 0.31 | 0.37 |
| STD (full) | VINS-Mono | 1.30 | 0.53 | 0.17 | 1.38 |
| | Ours | 0.23 | 0.20 | 0.35 | 0.37 |
| MAE (60 s) | VINS-Mono | 0.69 | 0.33 | 0.22 | 0.81 |
| | Ours | 0.17 | 0.31 | 0.35 | 0.50 |
| MAE (100 s) | VINS-Mono | 1.49 | 0.61 | 0.32 | 1.66 |
| | Ours | 0.19 | 0.38 | 0.55 | 0.71 |
| MAE (full) | VINS-Mono | 1.92 | 0.77 | 0.37 | 2.12 |
| | Ours | 0.21 | 0.37 | 0.65 | 0.79 |
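For the RMS rows, the 3D column is consistent with the root-sum-square of the E, N, and U components. The sketch below checks this relationship on values copied from the table above. The function name `rms_3d` is hypothetical. The relationship is an observation about the tabulated numbers and is not expected to hold for the STD or MAE rows, which do not combine in this way.

```python
import math


def rms_3d(east, north, up):
    """Combine per-axis RMS errors into a 3D RMS error (root-sum-square)."""
    return math.sqrt(east ** 2 + north ** 2 + up ** 2)


# (E, N, U, tabulated 3D) RMS values in metres, copied from the table above.
rms_rows = {
    "VINS-Mono, 60 s": (1.03, 0.58, 0.23, 1.20),
    "Ours, 60 s": (0.22, 0.41, 0.41, 0.62),
    "VINS-Mono, full": (2.32, 1.06, 0.38, 2.58),
    "Ours, full": (0.23, 0.46, 0.72, 0.88),
}

if __name__ == "__main__":
    for label, (e, n, u, tabulated) in rms_rows.items():
        print(f"{label}: computed {rms_3d(e, n, u):.2f} m, "
              f"tabulated {tabulated:.2f} m")
```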

| Metric | Method | E (m) | N (m) | U (m) | 3D (m) |
|---|---|---|---|---|---|
| RMS (60 s *) | VINS-Mono | 0.53 | 0.46 | 0.28 | 0.75 |
| | Ours | 0.93 | 0.39 | 0.29 | 1.05 |
| RMS (100 s) | VINS-Mono | 2.04 | 0.63 | 0.24 | 2.15 |
| | Ours | 1.21 | 0.37 | 0.20 | 1.28 |
| RMS (full) | VINS-Mono | 2.39 | 1.53 | 0.58 | 2.90 |
| | Ours | 1.58 | 0.41 | 0.42 | 1.69 |
| STD (60 s) | VINS-Mono | 0.51 | 0.24 | 0.16 | 0.41 |
| | Ours | 0.49 | 0.19 | 0.17 | 0.52 |
| STD (100 s) | VINS-Mono | 1.29 | 0.33 | 0.24 | 1.15 |
| | Ours | 0.41 | 0.25 | 0.19 | 0.40 |
| STD (full) | VINS-Mono | 2.35 | 0.91 | 0.47 | 1.40 |
| | Ours | 0.62 | 0.41 | 0.37 | 0.65 |
| MEAN (60 s) | VINS-Mono | 0.38 | 0.40 | 0.23 | 0.63 |
| | Ours | 0.80 | 0.34 | 0.25 | 0.91 |
| MEAN (100 s) | VINS-Mono | 1.67 | 0.54 | 0.20 | 1.82 |
| | Ours | 1.15 | 0.34 | 0.17 | 1.22 |
| MEAN (full) | VINS-Mono | 2.02 | 1.24 | 0.45 | 2.54 |
| | Ours | 1.46 | 0.38 | 0.33 | 1.56 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cong, Y.; Su, W.; Jiang, N.; Zong, W.; Li, L.; Xu, Y.; Xu, T.; Wu, P. Adaptive Covariance Matrix for UAV-Based Visual–Inertial Navigation Systems Using Gaussian Formulas. Sensors 2025, 25, 4745. https://doi.org/10.3390/s25154745
