Abstract

Amid the global shift toward clean energy, wind power has emerged as a critical pillar of the modern energy matrix. To improve the reliability and maintainability of wind farms, this work proposes a novel hybrid fault detection approach that combines expert-driven diagnostic knowledge with data-driven modeling. The framework integrates autoencoder-based neural networks with Failure Mode and Symptoms Analysis (FMSA), leveraging the strengths of both methodologies to enhance anomaly detection, feature selection, and fault localization. The methodology comprises five main stages: (i) the identification of failure modes and their observable symptoms using FMSA, (ii) the acquisition and preprocessing of SCADA monitoring data, (iii) the development of dedicated autoencoder models trained exclusively on healthy operational data, (iv) the implementation of an anomaly detection strategy based on the reconstruction error and a persistence-based rule to reduce false positives, and (v) evaluation using performance metrics. The approach adopts a fault-specific modeling strategy, in which each turbine and failure mode is associated with a customized autoencoder. The methodology was first validated using OpenFAST 3.5 simulated data comprising normal conditions and an induced 1% mass imbalance fault on a blade, enabling the verification of its effectiveness under controlled conditions. Subsequently, the methodology was applied to a real-world SCADA case study of wind turbines operated by EDP, employing historical operational data that include thermal measurements and operational variables such as wind speed and generated power. The proposed system achieved 99% classification accuracy on simulated data and detected anomalies up to 60 days before reported failures in real operational conditions, successfully identifying degradations in components such as the transformer, gearbox, generator, and hydraulic group.
The integration of FMSA improves feature selection and fault localization, enhancing both the interpretability and precision of the detection system. This hybrid approach demonstrates the potential to support predictive maintenance in complex industrial environments.

1. Introduction

In recent years, the growing concern over environmental degradation has driven the increasing prominence of sustainability topics in modern society [1]. The dependence on fossil fuels for electricity generation, characterized by environmental harm and resource finitude, underscores the urgency of transitioning to renewable sources. Among these, wind energy has emerged as a key pillar for building a more sustainable energy future. Technological development is, thus, essential to enhance the efficiency of renewable sources and facilitate their broader integration.

The global wind energy sector has experienced continuous growth, with the Global Wind Energy Council reporting 77.6 GW of newly installed capacity in 2022 and projecting annual additions exceeding 100 GW by 2024 [2]. Despite this significant growth, ensuring operational reliability and effective maintenance strategies remains challenging, particularly because wind farms are located in remote and often inaccessible sites, which complicates routine inspections and interventions.

Effective asset maintenance is essential to guarantee the continuous and efficient operation of wind turbines. Recent advances in artificial intelligence (AI) and data-driven technologies have substantially improved predictive maintenance, especially in fault detection and diagnosis tasks. Data-driven approaches leverage operational data from supervisory control and data acquisition (SCADA) and condition monitoring systems (CMSs) to monitor asset health in real time. Among these methodologies, deep learning techniques, particularly autoencoder-based neural networks, have demonstrated promising results in anomaly detection applications. Autoencoders effectively model complex patterns in high-dimensional data without relying extensively on labeled datasets.

Previous studies have demonstrated the effectiveness of autoencoders in various applications. For instance, in the study by Cui and Tjernberg [3], autoencoders and recurrent units were used to model the normal behavior of power transformers, enabling the early detection of operational risks before sensor faults occurred.

Similarly, Radaideh et al. [4] explored the use of recurrent and convolutional LSTM autoencoders for anomaly detection in time series signals from power electronics. Additionally, Qi et al. [5] proposed a method for detecting arc faults in photovoltaic energy systems using the wavelet transform and LSTM autoencoders.

Huynh et al. [6] developed an unsupervised framework using multilayer autoencoders for anomaly detection in power grids. Rosa et al. [7] also employed deep neural networks based on autoencoders for fault detection in hydroelectric plants. Their work was later expanded to include failure prognosis, demonstrating that the remaining useful life of components can be predicted in a semi-supervised manner using autoencoders.

Tang et al. [2] propose a hybrid approach for fault diagnosis in wind turbine generators, combining a stacking algorithm with an adaptive threshold mechanism. The model is structured into two layers: in the first, several base classifiers are employed; in the second, a logistic regression-based meta-classifier integrates their outputs, forming the decision-making structure. The authors also introduce an adaptive threshold calculated from the mean and standard deviation of the estimated fault probability during the machine’s healthy condition. The methodology was initially validated on simulated data from an experimental test bench using a three-phase asynchronous generator subjected to induced faults. Subsequently, the approach was tested on a real-world dataset provided by EDP, focusing on Turbine T07, which presented a recorded generator bearing failure.

Bindingsbø et al. [8] propose a methodology based on interpretable machine learning for fault detection in wind turbine generator bearings. The technique involves predicting bearing temperature under normal operating conditions. To ensure model interpretability, the authors employ the Shapley Additive Explanations (SHAP) tool, which identifies each input variable’s contribution to the model’s predictions and increases confidence in the results. Several algorithms, including linear regression, random forest, support vector regression, and XGBoost, were tested and compared, with XGBoost ultimately selected as the most effective based on metrics such as RMSE, MAE, and execution time. The methodology was applied to a dataset provided by EDP, with the case study focusing on Turbine T07. The results demonstrate that the model is capable of detecting failures in advance.

Du et al. [9] present a denoising autoencoder designed to detect anomalies in wind turbines. The DAE is trained on historical normal data to enhance robustness against noise, and the reconstruction error is evaluated using an exponentially weighted moving average control chart to reduce false positives. The model’s performance is assessed through sparse fault estimation, which identifies the key variables contributing to the detected anomalies.
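To make the role of the control chart concrete, the following is a minimal sketch of an exponentially weighted moving average (EWMA) applied to a reconstruction-error series. It is not the implementation used in [9]; the smoothing factor `lam`, the limit width `k`, the use of the asymptotic control limit, and the error values are illustrative assumptions.

```python
from statistics import mean, stdev


def ewma(values, lam=0.2, start=None):
    """Exponentially weighted moving average z_t = lam*x_t + (1-lam)*z_{t-1}."""
    z = values[0] if start is None else start
    smoothed = []
    for x in values:
        z = lam * x + (1.0 - lam) * z
        smoothed.append(z)
    return smoothed


def ewma_alarms(errors, mu, sigma, lam=0.2, k=3.0):
    """Flag samples whose EWMA exceeds the asymptotic upper control limit."""
    ucl = mu + k * sigma * (lam / (2.0 - lam)) ** 0.5
    flags = [z > ucl for z in ewma(errors, lam, start=mu)]
    return flags, ucl


# Hypothetical reconstruction errors: a healthy baseline, then a monitored
# period with one isolated spike (0.16) followed by a sustained drift.
healthy = [0.10, 0.12, 0.09, 0.11, 0.10, 0.13, 0.08, 0.11]
monitored = [0.11, 0.16, 0.10, 0.25, 0.27, 0.29, 0.31, 0.33]

mu, sigma = mean(healthy), stdev(healthy)
alarms, ucl = ewma_alarms(monitored, mu, sigma)
```

In this toy series the isolated spike exceeds a raw mean-plus-three-sigma limit but does not raise an EWMA alarm, whereas the sustained drift does, which is the false-positive reduction the chart is meant to provide.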

Chokr et al. [10] propose an approach for anomaly detection in wind farms using a bidirectional long short-term memory autoencoder, designed for high-dimensional multivariate time series data. The model was trained and tested with real SCADA data from five wind turbines, demonstrating its ability to detect anomalies based on normal data. The study highlights the importance of capturing temporal dependencies and the challenges in selecting detection thresholds, contributing to improved safety and reliability in renewable energy systems.

Miele, Bonacina, and Corsini [11] propose an anomaly detection approach for wind turbines using an autoencoder applied to multivariate time series derived from SCADA systems. The proposed architecture consists of an encoder and decoder based on convolutional neural networks. Input data are organized into sliding windows, and the model is trained to reconstruct the machine’s normal behavior. The detection methodology comprises two main stages: (i) the definition of a global Mahalanobis indicator, which assesses global multivariate deviations based on the reconstruction error distribution during normal operation, and (ii) the calculation of local residual indicators, which quantify the reconstruction error for each monitored feature. The model was validated using a publicly available real SCADA dataset provided by EDP. The proposed model, MTGCAE, was benchmarked against two reference architectures from the literature, LSTM-AE and CNN–LSTM, demonstrating superior performance across all evaluation metrics.

Nogueira et al. [12] propose a fault detection methodology for wind turbines based on autoencoder neural networks applied to SCADA time-series data. The approach consists of a structured six-step procedure. The autoencoder was implemented using a multi-layer perceptron architecture and trained with only healthy operational data. Anomalies were identified through the reconstruction error, quantified by the root mean squared error, and a static detection threshold was defined as the mean RMSE plus three standard deviations. The methodology was validated using a real-world SCADA dataset from the EDP Open Data. However, only one failure mode was analyzed: overheating of the high-voltage transformer of Turbine T01, caused by fan degradation, which led to a total turbine shutdown. Although Failure Mode and Symptoms Analysis (FMSA) was not performed, the authors recognize its potential for future integration to improve feature selection and enhance fault localization. The model successfully detected a deviation in the reconstruction error before the failure recorded in the log, indicating its anomaly detection capability in wind turbine monitoring systems.
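The static threshold described above can be sketched in a few lines. This is an illustration rather than the code of [12]: the function names are hypothetical, the RMSE values are invented, and the use of the sample standard deviation is an assumption.

```python
from math import sqrt
from statistics import mean, stdev


def rmse(x, x_hat):
    """Root mean squared error between one sample and its reconstruction."""
    return sqrt(sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x))


def static_threshold(healthy_rmse, k=3.0):
    """Threshold = mean + k * (sample) standard deviation of healthy RMSE."""
    return mean(healthy_rmse) + k * stdev(healthy_rmse)


# Hypothetical RMSE values obtained on healthy training data.
healthy_rmse = [0.10, 0.12, 0.09, 0.11, 0.10, 0.13, 0.08, 0.11]
threshold = static_threshold(healthy_rmse)

# New samples are flagged when their RMSE exceeds the threshold.
flags = [e > threshold for e in [0.11, 0.14, 0.20]]
```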

Despite the strong performance of autoencoder-based models in anomaly detection, their practical applicability heavily depends on the interpretability of results and the careful selection of input data, highlighting the need for integrating structured methods to support these tasks. The primary objective of this study is to develop and validate a novel fault detection framework for wind turbines by integrating autoencoder-based neural networks with Failure Mode and Symptoms Analysis (FMSA). This integration addresses one of the main limitations of data-driven approaches: the lack of physical meaning behind the input variables and the resulting anomalies. FMSA plays a central role in this framework by guiding the feature selection process based on engineering knowledge and historical failure evidence. Unlike purely statistical or correlation-based methods, FMSA enables the identification of measurable symptoms that are causally linked to specific failure mechanisms. Moreover, it allows the construction of interpretable models even in the absence of labeled fault data, supporting the early deployment of detection tools. In real-world scenarios, where faults may not have occurred yet in the monitored turbine, FMSA provides a proactive and structured way to isolate the most relevant input signals, enhancing the observability of degradation patterns and improving the reliability of the resulting anomaly detection system. As shown in Table 1, this approach distinguishes itself from recent studies by systematically incorporating expert knowledge through FMSA, which is rarely addressed explicitly in the existing literature.

Table 1.

Comparison of recent studies on data-driven fault detection methods.

To demonstrate the applicability and effectiveness of the proposed methodology, the developed framework was applied to two complementary case studies. The first involved simulated data generated via OpenFAST, allowing the validation of the model under controlled conditions with a known fault scenario. The second study relied on real operational data provided by the EDP Open Data platform, encompassing historical SCADA records and documented failures from actual wind turbines. This dual approach enables a comprehensive assessment of the method’s robustness and generalizability.

The novelty of this research resides in its systematic integration of autoencoders with FMSA, an approach scarcely explored in existing literature. While previous studies primarily rely on either deep learning architectures or interpretability-driven methods separately, the present study uniquely combines these elements to enhance the accuracy, interpretability, and reliability of fault detection. By explicitly linking observed anomalies identified by autoencoders with specific fault symptoms and degradation patterns through FMSA, this work provides a comprehensive and actionable diagnostic tool, capable of supporting informed and proactive maintenance decisions in wind energy operations.

2. Theoretical Background

This section introduces the theoretical foundations of the proposed methodology. First, the Failure Mode and Symptoms Analysis (FMSA) method is briefly described, followed by an explanation of autoencoder neural networks. These two techniques form the analytical basis for the fault detection approach developed in this study.

2.1. Failure Mode and Symptoms Analysis

FMSA is a structured approach used to systematically identify potential failures and their observable symptoms in operational data. According to ISO 13379 [13], FMSA enables the mapping of specific failure modes to measurable operational symptoms, supporting the selection of relevant features for fault detection models.

The FMSA process follows the main steps outlined below:

- Each component is analyzed based on historical records and technical insights to identify potential failure modes and the mechanisms that lead to their occurrence.

- For each identified failure mode, associated symptoms are mapped. These may range from physical signs, such as vibrations or abnormal noise, to anomalies observed in operational data.

- The severity of each failure, in terms of its impact on turbine operation and safety, is assessed. This evaluation supports the prioritization of critical failures and the identification of symptoms that require monitoring.
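As an illustration of how the outcome of these steps can feed a detection model, the sketch below stores a small FMSA table as a nested dictionary and derives the input features for one failure mode. The failure modes, symptoms, and signal names are hypothetical placeholders, not the table used in this study.

```python
# Hypothetical FMSA table: failure mode -> symptoms -> SCADA signals.
FMSA = {
    "generator_bearing_degradation": {
        "component": "generator",
        "symptoms": {
            "bearing temperature rise": ["gen_bearing_temp"],
            "winding temperature rise": ["gen_phase_temp"],
        },
    },
    "transformer_overheating": {
        "component": "transformer",
        "symptoms": {
            "winding temperature rise": ["hv_trafo_temp"],
        },
    },
}

# Operating-condition variables added to every model (an assumption).
CONTEXT_SIGNALS = ["wind_speed", "active_power"]


def select_features(fmsa, failure_mode, available, context=()):
    """Return context signals plus symptom signals that are actually recorded."""
    wanted = list(context)
    for signals in fmsa[failure_mode]["symptoms"].values():
        wanted.extend(signals)
    selected = []
    for name in wanted:  # keep order, drop duplicates and unrecorded signals
        if name in available and name not in selected:
            selected.append(name)
    return selected


available = {"wind_speed", "active_power", "gen_bearing_temp", "hv_trafo_temp"}
features = select_features(
    FMSA, "generator_bearing_degradation", available, CONTEXT_SIGNALS
)
```

Here `gen_phase_temp` is dropped because it is absent from the recorded signals, so the symptom it represents would not be observable by the model.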

In contrast to traditional methodologies such as Failure Mode and Effects Analysis (FMEA) or Failure Mode, Effects, and Criticality Analysis (FMECA), FMSA specifically emphasizes symptom-based detection and monitoring strategies, assessing the effectiveness of different monitoring techniques in identifying failure symptoms rather than focusing solely on failure occurrences [14]. Gonzalez et al. (2023) [15] applied FMSA combined with FMECA to evaluate monitoring techniques in feed-drive systems, demonstrating the importance of selecting suitable detection strategies to maximize confidence and reduce unnecessary sensor deployment.

FMSA can also be employed to establish robust correlations between possible failure modes and specific signals monitored in industrial systems, such as those collected by SCADA systems in wind farms. By linking each identified failure mode with measurable symptoms, such as abnormal temperature fluctuations or excessive vibration, the analysis supports the technical validity and interpretability of the features used in anomaly detection models. Unlike traditional applications that emphasize prioritization and risk scoring, FMSA can serve as an analytical tool to support attribute selection. This aligns with recent literature [14,15] highlighting the benefits of symptom-based clustering in enhancing the sensitivity and specificity of predictive models, particularly those employing unsupervised techniques like autoencoders.

2.2. Autoencoder

The autoencoder is a specific type of neural network, known for its ability to reconstruct input data and is widely used in tasks such as compression, reconstruction, and anomaly detection [16]. It is an unsupervised representation learning algorithm in which the target values are set equal to the inputs. To prevent the autoencoder from learning a trivial identity mapping by simply copying the input data directly to the output without extracting any meaningful information, it is necessary to constrain the number of neurons in the hidden layers. This limitation forces the network to compress the data into a lower-dimensional representation, thereby preserving only the most relevant or informative aspects of the input. These aspects correspond to latent patterns, correlations, or structures present in the data, while noise and irrelevant variations tend to be discarded.

The autoencoder consists of two main components: the Encoder $f_{\mathrm{enc}}$ and the Decoder $f_{\mathrm{dec}}$. The Encoder maps the input data $x$ to a compact latent representation $h = f_{\mathrm{enc}}(x)$, whereas the Decoder reconstructs the data from this compressed latent representation back to its original space, $\hat{x} = f_{\mathrm{dec}}(h)$. During training, the objective is to minimize a loss function that measures the discrepancy between the input data and their corresponding reconstructions at the output. This process forces the model to learn a compressed representation that retains the most relevant information from the original data. By minimizing this loss function using gradient-based optimization algorithms, the model parameters in both the encoder and the decoder are iteratively adjusted to improve the reconstruction capability of the network. For the backpropagation process to work properly, both components of the autoencoder must consist of continuous and differentiable functions [9].

Since the model proposed in this work is based on artificial neural networks, formal definitions are appropriate. According to [17], a feedforward neural network can be defined as $\mathcal{N} = (L, W, B)$, where $L = \{l_1, \dots, l_r\}$ represents the set of $r$ layers, with each layer $l_i$ composed of $n_i$ neurons; $W = \{W^{(2)}, \dots, W^{(r)}\}$ is the set of weight matrices, with $W^{(i)}$ connecting layer $l_{i-1}$ to the subsequent layer $l_i$; and $B = \{b^{(2)}, \dots, b^{(r)}\}$ is the set of bias vectors applied to each respective layer. The activation of the j-th neuron in layer $l_i$ is computed through the general feedforward propagation equation:

$$a_j^{(i)} = \sigma_i\left(\sum_{k=1}^{n_{i-1}} w_{jk}^{(i)}\, a_k^{(i-1)} + b_j^{(i)}\right)$$

where $\sigma_i$ is the activation function of layer $i$, $a_k^{(i-1)}$ denotes the activation of the k-th neuron in the previous layer, $w_{jk}^{(i)}$ is the weight associated with the connection between neurons k and j, and $b_j^{(i)}$ is the bias applied to neuron j in layer $l_i$.

In the specific case of an autoencoder, this operation can be represented in a more compact, vectorized form, considering a single hidden layer in the encoder and a single output layer in the decoder. For an input vector $x \in \mathbb{R}^n$, the encoder projects the data into a latent space as follows:

$$h = \sigma_e(W_e x + b_e)$$

where $W_e$ is the weight matrix between the input and hidden layers, $b_e$ is the bias vector, and $\sigma_e$ is the activation function used in the encoder. In the decoding step, the latent vector $h$ is used to reconstruct the input, resulting in an output $\hat{x}$ given by

$$\hat{x} = \sigma_d(W_d h + b_d)$$

where $W_d$ and $b_d$ are the weight matrix and bias vector of the output layer, respectively, and $\sigma_d$ is the decoder’s activation function. The model is trained to minimize the difference between $x$ and $\hat{x}$.
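The encoding and decoding steps can be illustrated with a short forward pass, assuming the standard single-hidden-layer form in which the latent vector is an activation applied to an affine map of the input and the reconstruction is an activation applied to an affine map of the latent vector. The sigmoid encoder, identity decoder, random weights, and dimensions below are illustrative assumptions; no training is performed.

```python
import math
import random


def sigmoid(v):
    """Element-wise logistic function."""
    return [1.0 / (1.0 + math.exp(-a)) for a in v]


def identity(v):
    """Linear (no-op) activation."""
    return list(v)


def affine(W, x, b):
    """Return W x + b for a matrix given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]


def encode(x, W_e, b_e, act=sigmoid):
    """Latent vector h = act(W_e x + b_e)."""
    return act(affine(W_e, x, b_e))


def decode(h, W_d, b_d, act=identity):
    """Reconstruction x_hat = act(W_d h + b_d)."""
    return act(affine(W_d, h, b_d))


rng = random.Random(0)
n, m = 4, 2  # input and latent dimensions (illustrative)
W_e = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(m)]
b_e = [0.0] * m
W_d = [[rng.uniform(-0.5, 0.5) for _ in range(m)] for _ in range(n)]
b_d = [0.0] * n

x = [0.2, 0.7, 0.1, 0.9]
h = encode(x, W_e, b_e)      # compressed representation, length m
x_hat = decode(h, W_d, b_d)  # reconstruction, length n
```

With untrained weights the reconstruction is arbitrary; training would adjust the four parameter arrays so that `x_hat` approaches `x` on healthy data.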

As the autoencoder must reconstruct the input as accurately as possible during the training process, the parameters should be adjusted to minimize the reconstruction error between the original input data and the reconstructed output [18]. The reconstruction error can be quantified by the mean absolute error (MAE), mean square error (MSE), root mean square error (RMSE), or another similar metric. Analysis of the distribution of these metrics allows anomaly thresholds to be defined, with a substantial increase in the reconstruction error suggesting the presence of anomalies, indicating that the model is having difficulty reconstructing anomalous data with the same accuracy as normal data.
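For reference, the three metrics named above can be written for a single sample $x \in \mathbb{R}^n$ and its reconstruction $\hat{x}$ as follows. The per-sample averaging over the $n$ features is an assumption about how the error is aggregated; averaging over a time window would follow the same pattern.

```latex
\mathrm{MAE}(x, \hat{x}) = \frac{1}{n} \sum_{i=1}^{n} \lvert x_i - \hat{x}_i \rvert, \qquad
\mathrm{MSE}(x, \hat{x}) = \frac{1}{n} \sum_{i=1}^{n} (x_i - \hat{x}_i)^2, \qquad
\mathrm{RMSE}(x, \hat{x}) = \sqrt{\mathrm{MSE}(x, \hat{x})}
```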

In the context of predictive maintenance, the autoencoder has proven particularly effective in the early detection of asset failures. Typically, the autoencoder is trained using monitoring data that represent the asset’s healthy operational behavior. When the trained autoencoder is later applied to operational data containing failures or degradation, it is unable to reconstruct the input as effectively, resulting in noticeable discrepancies between the input and output data. These discrepancies can then be used to identify anomalies in complex systems [16].

In addition, the autoencoder can be applied to diagnostic and prognostic tasks. When coupled with a classifier, it can help diagnose problems based on previously recognized patterns [19]. Similarly, by extrapolating the residuals or using them in supervised models, the autoencoder can be used to predict the future behavior of systems [19].

Although autoencoders share conceptual similarities with traditional dimensionality reduction techniques such as principal component analysis (PCA) and t-distributed stochastic neighbor embedding (t-SNE), their applicability to unsupervised fault detection presents distinct advantages. PCA, a linear method, is limited in its capacity to capture nonlinear relationships between variables, which are often critical in complex systems such as wind turbines. In contrast, autoencoders, being neural network-based models, offer nonlinear mappings and greater representational power, allowing them to learn more intricate latent structures. While t-SNE is effective for visualization purposes, particularly in two or three dimensions, it is non-parametric and non-invertible, making it unsuitable for reconstruction-based anomaly detection. Autoencoders, on the other hand, are trained to minimize reconstruction error, which directly supports the identification of deviations from normal operating patterns. These properties make autoencoders particularly suitable for condition monitoring tasks where subtle and nonlinear degradation behaviors need to be captured without supervision.

3. The Proposed Framework

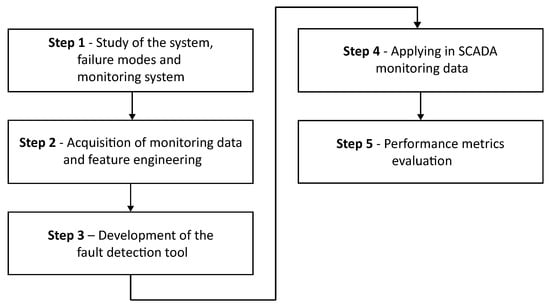

The proposed approach relies on detecting fault degradation patterns using the extrapolation of the reconstruction error from a deep autoencoder trained exclusively with monitoring data from the machine’s normal operational condition. This study focuses on identifying and isolating potential divergences in monitoring measurements, which may indicate a fault. The proposed methodology is structured into five main stages, which are illustrated in Figure 1.

Figure 1.

Flowchart describing the development of the proposed approach.

3.1. Step 1—Study of the System, Failure Modes, and Monitoring System

In this first stage, a comprehensive study of the operating principles of wind turbines and their main failure modes is carried out. The focus is on the complete architecture of the turbine, its internal interactions, and the joint impact of each component on overall performance, providing a solid basis for the subsequent stages.

The FMSA is used to map possible failure modes and their respective observable symptoms in the operational data. This process is conducted on the basis of a specialized literature review, the manufacturer’s technical documents, and a history of recorded failures, making it possible to identify which monitored variables are most likely to reflect changes in the system’s behavior during the degradation process. For each failure mode identified, typical symptoms that can be observed during its progression are associated, such as abnormal temperature variations in certain components, which make up the set of attributes that are candidates for input into the model.

Although FMSA offers methods for calculating prioritization indexes and incorporating detection scales, this work does not use these classifications. The emphasis is on establishing a link among physical symptoms, sensor signals, and degradation mechanisms to ensure that the attributes used as input in the detection models are technically justifiable and faithfully represent the manifestation of the failure process. This guarantees a set of key characteristics and parameters capable of distinguishing the identified failure modes and ensures that autoencoder training focuses on attributes based on real field evidence and not on simple statistical correlation or empirical heuristics.

This form of structuring by failure mode and symptom is supported by recent literature. According to [20], the clustering approach has proven to be highly effective in condition-based maintenance contexts, as it directs the selection of signals based on their observability in relation to each failure mode. The authors point out that an observability-oriented detection model outperforms generic models that do not consider the alignment between symptom and cause. Similarly, the authors of [21] demonstrate that segmenting input data into groups of variables related to specific failure modes, rather than using a single set for all cases, significantly increases the sensitivity of multivariate methods such as PCA and autoencoders. Clustering by failure mode, according to the authors, helps to reduce statistical noise, improve interpretability, and allow the model to specialize in identifying degradation patterns that are distinct from each other.

Although FMSA can, in theory, map a wide range of failure modes, it is essential to recognize that not all of them can be incorporated into the predictive model, especially when there are no monitored attributes that reliably represent them. In many cases, the relevant symptoms are not recorded by the monitoring system or are of poor quality, which compromises their usefulness for modeling purposes. For this reason, only failure modes whose symptoms are measurable with adequate frequency and quality are effectively integrated into the set of attributes used for training.
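This filtering step can be sketched as a simple availability and completeness check. The 10% missing-data limit, the failure modes, and the signal names below are hypothetical; the study does not state a numerical criterion.

```python
import math


def missing_fraction(series):
    """Share of samples that are None or NaN (1.0 for an empty series)."""
    if not series:
        return 1.0
    bad = sum(
        1 for v in series
        if v is None or (isinstance(v, float) and math.isnan(v))
    )
    return bad / len(series)


def usable_failure_modes(fm_signals, data, max_missing=0.1):
    """Keep failure modes whose symptom signals all exist and are mostly complete."""
    usable = {}
    for mode, signals in fm_signals.items():
        if all(s in data and missing_fraction(data[s]) <= max_missing
               for s in signals):
            usable[mode] = signals
    return usable


# Hypothetical mapping from failure modes to the signals of their symptoms.
fm_signals = {
    "transformer_overheating": ["hv_trafo_temp"],
    "gearbox_bearing_wear": ["gear_bearing_temp", "gear_vibration"],
    "hydraulic_leak": ["hyd_oil_temp"],
}

# Hypothetical recorded data: no vibration channel, sparse hydraulic readings.
data = {
    "hv_trafo_temp": [60.0, 61.0, 62.0, 61.5],
    "gear_bearing_temp": [55.0, 56.0, 55.5, 57.0],
    "hyd_oil_temp": [40.0, None, float("nan"), 41.0],
}

usable = usable_failure_modes(fm_signals, data)
```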

However, one of the advantages of FMSA over purely empirical approaches is the possibility of considering failures not yet observed in the actual maintenance history, based on consolidated technical knowledge and evidence documented in the literature. This makes it possible to anticipate degradation behavior even for components that have not previously failed in the system under analysis. For example, even if a component such as the generator bearing has never failed in the field, it is known from studies and experience in similar systems that it may show a rise in temperature as a symptom of degradation. Information like this can be previously mapped in the FMSA and used to select relevant attributes to be implemented in the model.

FMSA, thus, acts as a bridge between expert knowledge and algorithm modeling, structuring input data based on reliable causal relationships. This alignment among fault, symptom, and sensor contributes to building more robust models, capable of identifying faults more sensitively, more accurately, and in advance.

3.2. Step 2—Acquisition of Monitoring Data and Feature Engineering

At this stage, data acquisition is a determining factor in the robustness of the anomaly detection system. The dataset used in this study comes from sensors installed in a wind turbine in real operation, covering highly critical attributes such as rotation speeds, temperatures, and power levels, in order to capture a complete overview of operating conditions.

To train the algorithm, it is necessary to divide the database into two parts: one containing records representative of the turbine’s normal operating behavior and the other containing data associated with degradation conditions. This separation is necessary because the model is initially trained exclusively with data considered to be healthy, in order to learn the typical and recurring patterns of normal system operation. Based on this learning, the model develops an ability to accurately reconstruct normal inputs. Subsequently, when exposed to data containing signs of failure or degradation, the algorithm tends to present higher reconstruction errors, since these patterns were not present in the training set.
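One simple way to obtain the two parts is to split the records by time relative to a logged failure. The sketch below assumes time-stamped records and a hypothetical 30-day exclusion window before the failure; the window length actually used in this study is not implied by this example.

```python
from datetime import datetime, timedelta


def split_by_failure(records, failure_time, exclusion_days=30):
    """Split time-stamped records into a healthy set and an evaluation set.

    Records older than `failure_time - exclusion_days` are treated as healthy;
    records from that cutoff up to the failure form the evaluation set.
    """
    cutoff = failure_time - timedelta(days=exclusion_days)
    healthy = [r for r in records if r["timestamp"] < cutoff]
    evaluation = [r for r in records if cutoff <= r["timestamp"] <= failure_time]
    return healthy, evaluation


# Hypothetical daily records over 100 days, with a failure logged on the last day.
start = datetime(2016, 1, 1)
records = [
    {"timestamp": start + timedelta(days=i), "gen_bearing_temp": 50.0 + 0.01 * i}
    for i in range(100)
]
failure_time = start + timedelta(days=99)

healthy, evaluation = split_by_failure(records, failure_time)
```

Only `healthy` would be used to fit the autoencoder; `evaluation` is kept aside to check whether the reconstruction error rises as the failure approaches.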

To characterize the normal operational state, historical records from failure-free periods or maintenance expert insights are utilized. In certain cases, reference data may also include readings collected immediately after repairs or during a predefined time interval preceding failure occurrences. Although it is not feasible to establish an absolute threshold for distinguishing between normal and abnormal conditions in real-world operational data, this strategy seeks to capture gradual deviations that diverge from standard functioning patterns.

Data quality is another fundamental aspect of this phase. It is imperative that records classified under “normal operation” do not contain significant gaps or systematic errors that could compromise the model training process. Therefore, preliminary data audits must be performed to correct inconsistencies and ensure the reliability of sensor readings. Only after this verification has been completed does the preprocessing phase begin, followed by the implementation of the fault detection model.
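A preliminary audit of this kind can be as simple as checking for gaps in the time axis and for readings outside a plausible range. The sketch below assumes a 10-minute sampling interval and a temperature range of -40 to 200 degrees Celsius; both values are assumptions to be replaced with those of the actual monitoring system.

```python
from datetime import datetime, timedelta


def find_gaps(timestamps, step=timedelta(minutes=10)):
    """Return (start, end) pairs where consecutive samples are more than `step` apart."""
    ordered = sorted(timestamps)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > step]


def out_of_range(values, low, high):
    """Indices of readings that are missing or outside [low, high]."""
    return [
        i for i, v in enumerate(values)
        if v is None or not (low <= v <= high)
    ]


# Hypothetical series with two missing samples and two invalid readings.
t0 = datetime(2016, 1, 1)
stamps = [t0 + timedelta(minutes=10) * i for i in (0, 1, 2, 5, 6)]
temps = [61.0, 62.5, -999.0, None, 63.0]

gaps = find_gaps(stamps)
bad_idx = out_of_range(temps, low=-40.0, high=200.0)
```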

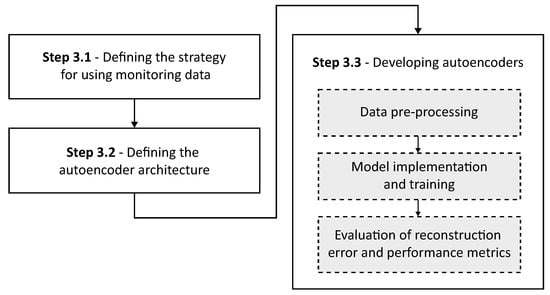

3.3. Step 3—Development of the Fault Detection Tool

With a solid foundation established in the previous stage, the third stage involves the development of autoencoder models. These models are designed to detect faults in the wind turbine system, one model per specific failure mode, taking advantage of both the detailed study of the system and the monitoring data. Figure 2 illustrates the substeps corresponding to Stage 3 of the research.

Figure 2.

Visual representation of the sub-stages of step 3.

3.3.1. Defining the Strategy for Using the Monitoring Data

As highlighted by [22], the available dataset allows the development of a number of algorithmic strategies for fault detection, each with its own particularities.

- Strategy 1—Specific model for each wind turbine: This approach involves creating an individual anomaly detection model for each wind turbine. This strategy can offer good accuracy as the model is adapted to a specific system, taking into account the particularities of each turbine. On the other hand, there is a higher computational cost and effort involved in maintaining multiple models;

- Strategy 2—General model for all the turbines in a wind farm: In this case, a single anomaly detection model is created for all the wind turbines in a specific wind farm. The advantage of this approach is that machine learning algorithms can be trained on a more comprehensive dataset collected from multiple turbines, resulting in a more informative training set that can lead to a good detection model, at the cost of potential loss of accuracy due to not considering individual specificities of each wind turbine;

- Strategy 3—Specific model for each failure mode: This strategy focuses on creating specific predictive models for each failure mode, training the algorithms on data targeted to each specific failure. This requires the creation of an individual training set for each failure mode, grouping together similar failure data from all turbines, as well as including a set of data without failures.

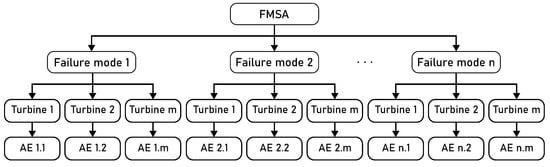

In this work, an adaptation of Strategy 3 for the autoencoder development was selected, taking into account both the specificity of the failure modes and the particularities of each turbine. Based on the mapping of possible failure modes and their respective symptoms through FMSA and the analysis of monitoring data, customized autoencoders were developed for each failure mode, and for each turbine in particular. By training specific autoencoders, each focused on a particular failure mode, it becomes possible not only to reduce computational costs and processing time but also to increase the efficiency of the system, allowing for more accurate detection of the failure process. This approach also provides greater flexibility in adapting the models to operational variations and the environmental characteristics of each wind turbine. In addition, individualized training of the autoencoders provides better interpretability of the results, as the reconstruction error patterns can be traced directly to a specific failure mode and turbine. The system chosen for this strategy is detailed in Figure 3.

Figure 3.

FMSA-guided development of turbine- and fault-specific autoencoder models.

Some events are external to the operation of the wind turbines and, although they can affect the subsystem, they are not included in the analysis, such as stoppages caused by weather conditions or human interference. This delimitation aims to focus on failures endogenous to the plant, allowing a more in-depth understanding of the system’s vulnerabilities and critical points.

3.3.2. Defining the Autoencoder Architecture

The architecture of the autoencoder (i.e., the arrangement of layers and the number of neurons in each layer) must be carefully designed to balance complexity and generalization capacity. Various architectures, such as multi-layer perceptron (MLP), convolutional neural networks (CNN) or long short-term memory networks (LSTM), can be used depending on the specific requirements and the nature of the data.

In general, the size of the input layer corresponds to the number of features in each time window, representing all the sensor variables. In the encoder, a dense layer arrangement is adopted whose number of neurons decreases progressively (e.g., 32-16-8). This architecture allows the model to learn increasingly compact representations of the data.

At the center of the network, the latent space layer contains the fewest neurons, since it holds the compressed representation of the data. This layer acts as a bottleneck, forcing the model to learn the most important features and discard noise and redundant information.

In the decoder, the architecture mirrors that of the encoder, progressively expanding (e.g., 8-16-32 neurons) and reconstructing the original data based on the latent representation.
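As a rough illustration of the mirrored layout described above, the following Python sketch lists the layer widths of a symmetric dense autoencoder and counts its trainable parameters. The function names, the latent size of 4, and the 12 input features are hypothetical values chosen for illustration; they are not the configuration adopted in this work.

```python
def symmetric_layer_sizes(n_features, encoder_units=(32, 16, 8), latent_dim=4):
    """Return layer widths: input, encoder, bottleneck, mirrored decoder, output."""
    if latent_dim >= encoder_units[-1]:
        raise ValueError("the bottleneck should be narrower than the last encoder layer")
    decoder_units = tuple(reversed(encoder_units))  # decoder mirrors the encoder
    return [n_features, *encoder_units, latent_dim, *decoder_units, n_features]


def dense_parameter_count(layer_sizes):
    """Count weights and biases of the dense layers joining consecutive widths."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical example: 12 input features per time window.
sizes = symmetric_layer_sizes(n_features=12)
n_params = dense_parameter_count(sizes)
print(sizes)
print(n_params)
```

Counting parameters in this way gives a quick sense of model capacity before any training is attempted, which is useful when comparing candidate architectures.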

The process also follows the usual steps of adjusting parameters and evaluating the model:

- Definition of the loss function: Generally, the mean squared error (MSE) or the mean absolute error (MAE) is adopted as the reconstruction metric.

- Choice of optimizer: Optimizers such as Adam, root mean square propagation (RMSProp) or stochastic gradient descent (SGD) can be used, depending on the behavior of the model and the desired performance.

- Setting hyperparameters: This involves selecting the number of layers, number of neurons in each layer, dropout rate, regularization techniques, activation functions (e.g., ReLU, sigmoid or tanh), and number of training epochs.

When adjusting hyperparameters, different combinations are explored to find the configuration with the lowest reconstruction error on the validation data. The search can be performed either manually or automatically, using algorithms such as grid search, which exhaustively evaluates every combination in a predefined grid of values. Techniques such as random search or Bayesian optimization can also be used, which usually converge more quickly to low-error regions of the search space. At the end of this phase, the autoencoder architecture has been adapted to the characteristics of the problem and validated in terms of its reconstruction capacity.
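The grid search mentioned above can be sketched in a few lines of Python. This is a minimal illustration only: `toy_validation_loss` is a hypothetical placeholder standing in for "train the autoencoder with these settings and return the validation reconstruction error", and the grid values are assumptions.

```python
from itertools import product


def grid_search(param_grid, validation_loss):
    """Evaluate every combination in param_grid and return the best one."""
    names = list(param_grid)
    best_params, best_loss = None, float("inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        loss = validation_loss(params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


def toy_validation_loss(params):
    # Hypothetical stand-in: a real version would train a model and
    # return its reconstruction error on the validation set.
    return (params["latent_dim"] - 4) ** 2 + abs(params["learning_rate"] - 1e-3)


grid = {"latent_dim": [2, 4, 8], "learning_rate": [1e-2, 1e-3, 1e-4]}
best_params, best_loss = grid_search(grid, toy_validation_loss)
print(best_params, best_loss)
```

The cost of this exhaustive search grows with the product of the grid sizes, which is why random search or Bayesian optimization become attractive for larger search spaces.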

3.3.3. Developing Autoencoders

This subsection deals with the process of developing the networks. It begins by describing the pre-processing of the data, which is a fundamental stage in guaranteeing the quality and consistency of the information supplied to the model. This is followed by the implementation and training of the autoencoders. This workflow allows the correct learning of normal operating patterns and enables the identification of possible faults or degradations in turbine performance.

Data pre-processing is a fundamental stage in preparing raw data for analysis. This process involves various activities aimed at correcting inconsistencies, removing noise, and standardizing the variables. This initial care has a direct impact on faster convergence during training and on the model’s generalization power, allowing for more reliable detection of any abnormal operating events.

Initially, the data is cleaned, which includes checking for and dealing with missing values. Where feasible, imputation techniques such as interpolation are applied to fill in measurement gaps over time and maintain signal continuity. If the missing data is too numerous or inconsistent, it may be necessary to discard certain samples so as not to compromise the learning of the model.

Next, outlier filtering is performed on the data from the normal operating state, in order to identify and remove values that deviate significantly from the turbine’s normal behavior. These values may correspond to measurement errors or very specific operating conditions that do not represent routine operation. Statistical methods such as the standard deviation and the interquartile range (IQR) are used for this purpose. The IQR corresponds to the difference between the third quartile (Q3) and the first quartile (Q1) of the data distribution, from which the lower and upper limits are derived.

Any data point that falls below the lower limit is considered an outlier. These values are significantly lower than those of the majority of the data set and are potential candidates for removal or further investigation. On the other hand, any data point that exceeds the upper limit is also considered an outlier. These values are much higher than those of the majority of the dataset and require special attention.
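A minimal Python sketch of IQR-based filtering follows. It assumes the conventional limits $Q_1 - k\,\mathrm{IQR}$ and $Q_3 + k\,\mathrm{IQR}$ with $k = 1.5$; this multiplier is a common default and an assumption here, since the value used in this work is not stated. The function names and sample readings are hypothetical.

```python
from statistics import quantiles


def iqr_limits(values, k=1.5):
    """Return (lower, upper) limits Q1 - k*IQR and Q3 + k*IQR."""
    # "inclusive" interpolates between observed values of the sample.
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def remove_outliers(values, k=1.5):
    """Keep only the values lying inside the IQR limits."""
    lower, upper = iqr_limits(values, k)
    return [v for v in values if lower <= v <= upper]


# Hypothetical sensor readings with one obvious spike.
readings = [10, 11, 12, 13, 14, 15, 16, 17, 18, 100]
lower, upper = iqr_limits(readings)
cleaned = remove_outliers(readings)
print(lower, upper, cleaned)
```

In practice the limits would be computed per variable on healthy data only, so that genuine fault signatures are not filtered out of the evaluation sets.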

Another fundamental step in data pre-processing is standardization, in which the values of each attribute are updated so that the resulting set has an average value equal to zero and a standard deviation of one. This standardization makes it possible to compare quantities with different scales and contributes to numerical stability and accelerated convergence of the model during training. This prevents variables with higher magnitudes from dominating learning.

Mathematically, standardization using the Z-score technique consists of subtracting the mean ($\mu$) and dividing by the standard deviation ($\sigma$) of each attribute, i.e., $z = (x - \mu)/\sigma$.
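The Z-score transformation can be illustrated with the short Python sketch below. It assumes that the mean and standard deviation are estimated on the training data and then reused for new observations; this is a common practice rather than something stated in the text, and the helper names and temperature values are hypothetical.

```python
from statistics import fmean, pstdev


def fit_standardizer(train_column):
    """Estimate the mean and (population) standard deviation of one attribute."""
    mu = fmean(train_column)
    sigma = pstdev(train_column, mu)
    if sigma == 0:
        raise ValueError("a constant attribute cannot be standardized")
    return mu, sigma


def standardize(column, mu, sigma):
    """Apply z = (x - mu) / sigma to every value."""
    return [(x - mu) / sigma for x in column]


# Hypothetical bearing temperatures (degrees Celsius) from healthy operation.
train_temperature = [60.0, 62.0, 64.0, 66.0, 68.0]
mu, sigma = fit_standardizer(train_temperature)
z_train = standardize(train_temperature, mu, sigma)
z_new = standardize([72.0], mu, sigma)  # new reading, scaled with training statistics
print(mu, sigma, z_train, z_new)
```

Reusing the training statistics keeps the scale of new data comparable with what the model saw during training, so that a shift in the raw signal remains visible after standardization.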

The autoencoder must be trained exclusively with normal operating data, so that the model learns the typical operating patterns of the wind turbine. During training, the autoencoder adjusts its internal weights to reduce the discrepancy between the inputs and the reconstructed outputs, promoting the internalization of the regularities present in the data.

At the end of the training epochs, the model is submitted to a validation process in order to assess its generalization capacity and its effectiveness in detecting degradation. To do this, a separate dataset known as the hold-out set is used, which can include both records of normal operation not seen during training and data representative of degradation conditions, to assess the model’s ability to detect deviations.

During this stage, performance indicators are monitored, such as the validation loss function and reconstruction curves over time, which help to verify whether the model is overfitting or underfitting. These indicators serve as a basis for determining whether the model has reached a satisfactory configuration or whether additional adjustments to the architecture or hyperparameters are required.

The evaluation of the autoencoder’s performance is conducted on the basis of test data, focusing on the model’s ability to reconstruct representative samples of normal operating conditions and to identify degradation patterns or anomalous conditions. Initially, the accuracy with which the model reproduces the input data is analyzed, which reflects the autoencoder’s effectiveness in capturing the intrinsic patterns of normal data. At the same time, the sensitivity of the model in detecting atypical behaviour is assessed, characterized by significant increases in the reconstruction error when subjected to operating conditions that diverge from the learned patterns.

Specific metrics are used to measure the quality of the reconstruction and the efficiency of the model in detecting anomalies, in particular, the MSE. This metric is widely used to quantify the difference between the signal sequences reconstructed by the autoencoder and the values observed in the real data. A substantial increase in MSE is a reliable indicator of anomalies, as it reflects the model’s difficulty in reconstructing data that deviates from previously learned patterns.
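The per-sample reconstruction MSE can be written as a short Python sketch. The function names and the toy vectors are hypothetical; a real pipeline would obtain the reconstructions from the trained autoencoder.

```python
def reconstruction_mse(x, x_hat):
    """Mean squared error between one input vector and its reconstruction."""
    if len(x) != len(x_hat):
        raise ValueError("input and reconstruction must have the same length")
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def reconstruction_errors(inputs, reconstructions):
    """Compute one MSE value per sample."""
    return [reconstruction_mse(x, x_hat)
            for x, x_hat in zip(inputs, reconstructions)]


# Toy example: the first sample is reconstructed perfectly, the second is not.
inputs = [[0.0, 1.0, -1.0], [0.5, 0.5, 0.5]]
reconstructions = [[0.0, 1.0, -1.0], [1.5, 0.5, -0.5]]
errors = reconstruction_errors(inputs, reconstructions)
print(errors)
```

A sample that follows the learned normal patterns yields an error close to zero, whereas a poorly reconstructed sample yields a clearly larger value.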

3.4. Step 4—Applying in SCADA Monitoring Data

The application of the developed tool was carried out in two complementary stages. Initially, the model was tested with simulated data, containing representative records of different operating conditions and an induced failure mode. This first phase made it possible to validate the model’s ability to reconstruct normal behavior and detect anomaly patterns in a controlled environment, in which failure is previously known and labeled. From these data, indicators were generated that demonstrate the model’s potential to support predictive maintenance strategies.

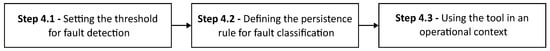

The tool was then applied to a set of real operational data from SCADA systems, covering the operational variables of wind turbines in operation. These data include historical records of continuous operation and documented failure events, allowing an analysis under conditions closer to the industrial environment. Figure 4 presents the sub-steps that make up Step 4 of this paper.

Figure 4.

Stages of step 4.

3.4.1. Setting the Threshold for Fault Detection

Once the model has been trained, the identification of anomalies is based on the difference between the input values and the values reconstructed by the autoencoder. To formalize this process, a threshold is set for the reconstruction error which, when consistently exceeded, indicates the presence of anomalous behaviour.

The anomaly detection threshold was set using a non-parametric approach, taking the value corresponding to the 99.7th percentile of the MSE obtained during the autoencoder training phase (99.7% being the coverage of the mean ± three standard deviations in a normal distribution). This choice is justified by the lack of adherence of the reconstruction errors to a normal distribution, which makes it impossible to apply parametric criteria based on the mean and standard deviation. Let $E = \{e_1, e_2, \ldots, e_N\}$ be the set of reconstruction errors, where each error is defined as

$$e_i = \frac{1}{d}\sum_{j=1}^{d}\left(x_{i,j} - \hat{x}_{i,j}\right)^2,$$

with $x_i$ representing the input, $\hat{x}_i$ the respective reconstruction generated by the autoencoder, and $d$ the number of features. The threshold is set as

$$\tau = P_{99.7}(E),$$

where $P_{99.7}(E)$ corresponds to the value below which 99.7% of the reconstruction errors observed during normal operation lie. This value represents an upper tolerance limit, without the need to assume symmetry, normality, or any other parametric form for the underlying distribution. The choice of percentile is based on coverage and safety criteria, seeking to restrict the signaling of anomalies to significant deviations from the behavior reconstructed by the model, regardless of the probabilistic structure of the data. Anomalies are detected by checking whether the reconstruction error of a new observation satisfies $e_{\text{new}} > \tau$. However, to avoid undue alarms caused by transient fluctuations or occasional noise, a temporal persistence rule was adopted. An event is classified as an anomaly only when $n$ consecutive samples exceed the threshold.
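The percentile-based threshold can be sketched in Python as follows. The linear interpolation between order statistics is an assumption (it is a common default in numerical libraries, but the interpolation rule used in this work is not stated), and the synthetic error values are purely illustrative.

```python
import math


def percentile(values, q):
    """q-th percentile (0 to 100), linearly interpolated between order statistics."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    position = (q / 100.0) * (len(ordered) - 1)
    lower = math.floor(position)
    upper = min(lower + 1, len(ordered) - 1)
    fraction = position - lower
    return ordered[lower] + fraction * (ordered[upper] - ordered[lower])


def detection_threshold(training_errors, q=99.7):
    """Threshold taken from the reconstruction errors of healthy training data."""
    return percentile(training_errors, q)


# Synthetic reconstruction errors standing in for the training-phase MSE values.
training_errors = [i / 1000 for i in range(1001)]
tau = detection_threshold(training_errors)
is_anomalous = [e > tau for e in (0.5, 0.999)]
print(tau, is_anomalous)
```

Because the threshold is read directly from the empirical distribution, no assumption about the shape of the error distribution is needed.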

3.4.2. Defining the Persistence Rule for Fault Classification

After the data have been reconstructed by the autoencoder and the reconstruction error vector has been calculated, an additional verification routine is adopted to decide whether degradation is actually present. This approach seeks to deal with false positives arising from point variations or noise, ensuring greater reliability in the detection of anomalies.

Fault detection is based on a persistence criterion, which assesses whether reconstruction errors remain consistently above the established threshold. If a sequence of consecutive MSE values exceeds the threshold, a persistent anomaly is flagged, indicating the onset of degradation behaviour.

The persistence logic was implemented using a sliding window of fixed size n, applied to the reconstruction error vector. The value of n is adjustable and can be set based on the characteristics of the system or the desired level of sensitivity, preferably calibrated with expert support. Operation is as follows:

- Persistence for activation of fault state (state 1): The system monitors the reconstruction error vector over time, applying a sliding window with n consecutive readings. If all the values within this window are greater than or equal to a certain threshold, the system changes the state to failure (state 1), indicating the detection of a persistent anomalous condition. This criterion was established to ensure that small momentary deviations in the error data do not result in incorrect fault detection.

- Persistence for return to normal state (state 0): Similarly, reversion to the normal operating state (state 0) only occurs if n consecutive readings in the sliding window show reconstruction error values below the threshold. This criterion prevents a brief correction in the system’s behavior, which may be transient, from being interpreted as a complete return to the normal condition.

This persistence introduces an additional layer of robustness to fault detection, as it prevents the system from repeatedly switching between states in response to momentary fluctuations or noise in the data, thus ensuring that the transition between states only occurs in cases of consistent variations. This approach is in line with the need for stability in industrial systems, where temporary variations are common and where the early and consistent identification of faults can avoid unnecessary interventions and high operating costs.

For this work, n = 20 was adopted, i.e., the machine is classified as failing when 20 consecutive points exceed the established threshold. This persistence was defined to reduce false positives caused by noise or transient weather phenomena, which can temporarily interfere with sensor readings, since the aim is to identify only progressive faults. These 20 points are equivalent to 3 h and 20 min of machine operation, which corresponds to a sampling interval of 10 min. It should also be noted that the selection of this value is based on a global analysis of the system and the knowledge of experts in the field, as too few points could generate false positives, while too many would delay decision-making.
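The two-way persistence logic described above can be sketched as a small state machine in Python. This is one possible reading of the rule, not the implementation used in this work: the function name is hypothetical, and the comparison with the threshold follows the "greater than or equal to" wording of the activation criterion.

```python
def persistence_states(errors, threshold, n=20):
    """Return the 0/1 detection state after each reconstruction error.

    The state switches to 1 (fault) after n consecutive errors at or above
    the threshold, and back to 0 (normal) after n consecutive errors below it.
    """
    state = 0
    above = below = 0
    states = []
    for error in errors:
        if error >= threshold:
            above += 1
            below = 0
        else:
            below += 1
            above = 0
        if state == 0 and above >= n:
            state = 1
        elif state == 1 and below >= n:
            state = 0
        states.append(state)
    return states


# One sub-threshold sample followed by sustained exceedances.
fault_errors = [0.1] + [1.0] * 30
states = persistence_states(fault_errors, threshold=0.5, n=20)
first_alarm = states.index(1) + 1  # 1-based observation number

# Two bursts of 19 exceedances separated by one normal sample: no alarm.
spiky_states = persistence_states([1.0] * 19 + [0.1] + [1.0] * 19, 0.5, n=20)
print(first_alarm, max(spiky_states))
```

In the first example the alarm is raised only once 20 consecutive exceedances have accumulated, while the second example shows that shorter bursts never change the state.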

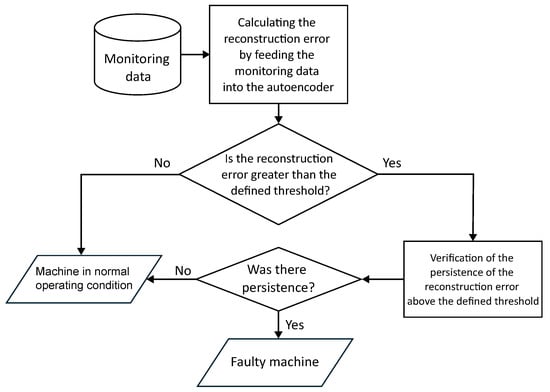

3.4.3. Using the Tool in an Operational Context

The application of the tool in an operational context follows the flow presented in Figure 5, detailing the stages of the monitoring and fault detection process. The process begins with the acquisition of monitoring data from sensors installed in the turbine. These data are processed by the autoencoder, which calculates the reconstruction error by comparing the input values with the values reconstructed by the model, previously trained under normal operating conditions.

Figure 5.

Flowchart of the application of the tool in an operational context.

The first analysis is to check whether the reconstruction error exceeds the previously defined threshold, which represents the expected range of variation for normal data. If the error remains below this threshold, the machine is considered to be in a normal operating state, reflecting stable and adequate system conditions. On the other hand, if the reconstruction error exceeds the threshold, an additional analysis stage begins, which assesses the persistence of this anomalous behavior.

Checking for persistence avoids generating false alarms due to temporary fluctuations in the data, such as noise or transient variations. Only when the reconstruction error remains consistently above the threshold over a predetermined period does the system classify the machine as being in a fault state.

3.5. Step 5—Performance Metrics Evaluation

The final stage of this research aims to discuss the applicability of the proposed model for automatic fault detection, by analyzing its performance on both simulated and real wind turbine operating data. After training the autoencoder, the models generated are experimentally validated using a separate dataset exclusively for testing.

When a labeled database is available, i.e., one in which normal operation records and fault events are previously identified, it becomes possible to use specific evaluation metrics in addition to the average reconstruction error. Metrics such as the true positive rate, which represents the proportion of faults correctly identified, and the false positive rate, which indicates the proportion of normal conditions incorrectly classified as anomalies, can be used for a more robust quantitative assessment. These metrics are calculated exclusively during the testing phase, from a reference vector containing known periods of degradation. Thus, the model is first evaluated in a controlled environment, using simulated data with known labels, in which the periods of failure and degradation are explicitly defined.
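For reference, the two rates mentioned above follow the standard definitions below, where TP, FN, FP, and TN denote the numbers of true positives, false negatives, false positives, and true negatives, with "fault" taken as the positive class (a labeling convention assumed here):

$$\mathrm{TPR} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}}, \qquad \mathrm{FPR} = \frac{\mathrm{FP}}{\mathrm{FP} + \mathrm{TN}}.$$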

Based on the results obtained from the simulation data, the model is then applied to a set of unlabeled real data from the SCADA system. In this scenario, the absence of explicit labels makes it impossible to use supervised metrics directly. Thus, the evaluation is based on anticipating the fault record present in EDP’s operational log, using the temporal persistence rule to ensure that the fault classification is robust and not influenced by transient fluctuations in the reconstruction error.

In addition, the local variability present in the data is taken into account, especially in equipment subject to multiple operating modes, intermittent operating cycles or natural transitions between operating states. In these situations, the dynamic behavior of the machine can generate momentary fluctuations in the reconstruction error that do not necessarily indicate faults, yet can compromise the sensitivity of the model and lead to misinterpretations.

For this reason, the definition of the detection threshold and the size of the persistence window must take into account the operational characteristics of the monitored equipment, ideally with the help of experts. This phase is crucial to ensure a balance between sensitivity (the ability to identify real faults) and specificity (avoiding false positives).

4. Results

This section examines the applicability of the formulated method and presents the results of applying autoencoder techniques to a simulated database and a real wind farm operation database. Finally, it discusses limitations, further improvements, and future opportunities.

The incorporation of expert knowledge into the proposed methodology is formalized through the development of the FMSA, which serves as a cornerstone for fault-mode-driven variable selection and system understanding. This analytical framework was constructed with the active participation of three highly qualified experts, all holding doctoral degrees in engineering. Two of them are recognized specialists in reliability engineering, with extensive experience in methodologies such as FMEA, FMSA, and failure diagnostics across complex industrial systems. The third expert brings deep practical expertise in wind turbine operation, maintenance routines, and failure mechanisms, having worked directly with utility-scale wind energy systems. Their combined contributions ensured that FMSA was not merely a theoretical construct, but a rigorously validated framework grounded in field knowledge and reliability science.

4.1. Simulated Data

To support the initial validation of the proposed methodology, a simulated database was employed to assess the model’s effectiveness in detecting structural faults in wind turbines. The simulations were based on the 5 MW reference wind turbine model developed by the National Renewable Energy Laboratory (NREL), which is widely adopted in aeroelastic and structural dynamics research. These synthetic data were generated under controlled fault conditions, allowing for precise control over failure scenarios and the availability of fault labels, information that is not present in the real SCADA dataset provided by EDP. Such labeling enables the evaluation of detection performance through objective metrics, such as accuracy, sensitivity, and detection time. Additionally, the use of simulated scenarios facilitates consistent benchmarking and reproducibility in future comparative studies with other anomaly detection techniques. The simulation and post-processing procedures are thoroughly described in [23], including the fault injection mechanisms and modeling assumptions, which enhance the transparency and interpretability of the results.

The simulation modeling was conducted in the OpenFAST software, and the database was provided by the Wind Engineering Laboratory (LEVE) of the University of São Paulo. The dataset covers nine normal operating conditions, generated from the combination of three average wind speeds, 7 m/s (torque control region), 10.5 m/s (transition zone), and 14 m/s (above the nominal speed), with three levels of turbulence (12%, 14%, and 16%), according to the criteria of the [24] standard.

To represent the failure condition, the presence of a mass imbalance of 1% at the end of one of the blades was simulated, in accordance with the guidelines of the [24] standard, which consider failures induced by ice formation or loss of material due to atmospheric discharges. This level of asymmetry represents an incipient but realistic fault, capable of generating centrifugal forces and anomalous vibrational patterns, without compromising the turbine’s continuous operation.

The methodology for generating the simulated data and the details of the modeling are described in [23], which presents a structured approach for synthesizing condition monitoring signals using OpenFAST. The study provides complete documentation of the turbine adopted, including geometric, structural, and dynamic properties, as well as the signal processing flow required to transform the raw data from the simulation into a structured database suitable for the application of machine learning and fault detection techniques.

The final database contains 11,232 simulated records of normal operation and 442 records of unbalanced turbine conditions. The selection of the model’s input attributes is based on the expected sensitivity of the signals to structural variations caused by unbalance, also provided by the work of [23].

An FMSA was carried out on the simulated database, as illustrated in Table 2, from which the most relevant attributes for detecting the fault under study were selected. Next, the variables representative of the machine’s operating conditions were identified, with the aim of contextualizing the behaviour of the signals and ensuring that the analyses were consistent with the equipment’s operating regime.

Table 2.

FMSA worksheet for the simulated fault.

For the anomaly detection algorithm, a fully connected autoencoder with symmetric architecture and five hidden layers was used. Training was conducted only on normal operation data. The autoencoder hyperparameters, such as the number of layers, units per layer, activation functions, and optimizer, were selected through a structured empirical process, rather than via exhaustive grid search or automated tuning algorithms. This decision is justified by the nature of the datasets used in this study, both simulated and real-world operational data, which are relatively low-dimensional and exhibit limited structural variability. Under such conditions, highly complex or over-parameterized architectures are not required to achieve adequate reconstruction performance. Given the goal of detecting deviations from normal operation in relatively stable signals, simpler architectures tend to generalize better, reduce overfitting risk, and allow for efficient training and deployment. The adopted architectures were refined iteratively based on validation loss trends and empirical evaluation of reconstruction error behavior, balancing model capacity and robustness. This approach aligns with findings from previous literature, where lightweight autoencoder configurations have been shown to perform effectively in condition monitoring tasks when the input space is well behaved and domain-specific preselection of features is applied. Moreover, the use of the FMSA as a prior knowledge layer significantly reduces input dimensionality and noise, further mitigating the need for large-scale hyperparameter optimization. A summary of the final configurations adopted is presented in Table 3.

Table 3.

Autoencoder hyperparameters for simulated data.

The dataset was stratified into three subsets: 7862 samples (70%) were used for model training, 1685 samples (15%) for validation during the weight adjustment process, and 1685 samples (15%) for final performance evaluation in the testing phase. All features were standardized using the z-score method to ensure the normalization of attribute scales and to improve training convergence.
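The subset sizes quoted above can be reproduced with the following Python sketch. It assumes a seeded random shuffle of the 11,232 normal-operation records; the actual splitting procedure (random, stratified by operating condition, or chronological) is not detailed here, so the function and its parameters are illustrative only.

```python
import random


def split_indices(n_samples, train_frac=0.70, val_frac=0.15, seed=0):
    """Shuffle sample indices and cut them into train/validation/test parts."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    n_train = round(train_frac * n_samples)
    n_val = round(val_frac * n_samples)
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


train_idx, val_idx, test_idx = split_indices(11232)
print(len(train_idx), len(val_idx), len(test_idx))
```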

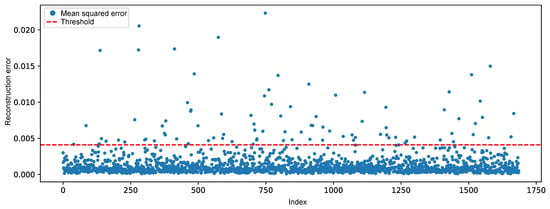

After training, the model achieved a mean squared error of 0.0007821 on the training set and 0.000797 on the validation set, indicating good generalization capability and absence of overfitting. The detection threshold, corresponding to the 99.7th percentile of the reconstruction errors, was computed, resulting in a threshold of .

Figure 6 illustrates the reconstruction error for the test samples under normal operating conditions. It can be observed that the majority of the points remain consistently below the threshold. This behavior is expected, as the autoencoder was trained exclusively on data representing normal system operation, learning to accurately reconstruct the typical patterns of the system. The low magnitude of the errors confirms the model’s ability to adequately represent normal behavior.

Figure 6.

Reconstruction error over time of the simulated (normal) test data.

Although most of the data exhibited low reconstruction errors, occasional values exceeding the threshold were observed, resulting in false positives. These deviations can be attributed to noise, natural operational fluctuations, or local data peculiarities that were not fully captured during training.

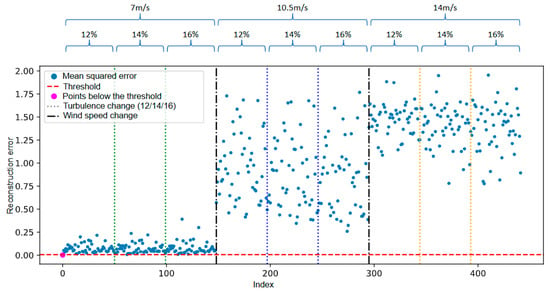

Figure 7 illustrates the temporal evolution of the reconstruction error generated by the autoencoder for the simulated fault scenario involving a 1% mass imbalance in one of the turbine blades. The blue points represent the mean squared error values computed for each observation, while the red dashed line indicates the predefined anomaly detection threshold. The points highlighted in magenta correspond to samples that remain below the detection threshold. The dotted and dashed vertical lines indicate transitions in operational conditions during the simulation: thin dashed lines mark changes in wind turbulence levels, while the black double-dashed lines denote changes in average wind speed.

Figure 7.

Reconstruction error over time of simulated fault data.

It is observed that, from the second transition in wind speed onwards, the reconstruction error increases significantly and persistently. This behavior demonstrates that the autoencoder, trained exclusively on data representative of normal operation, was able to capture the deviations caused by the anomalous behavior together with the shift in operating conditions. The increase in error beyond the predefined threshold indicates that the model responded sensitively to the onset of degradation.

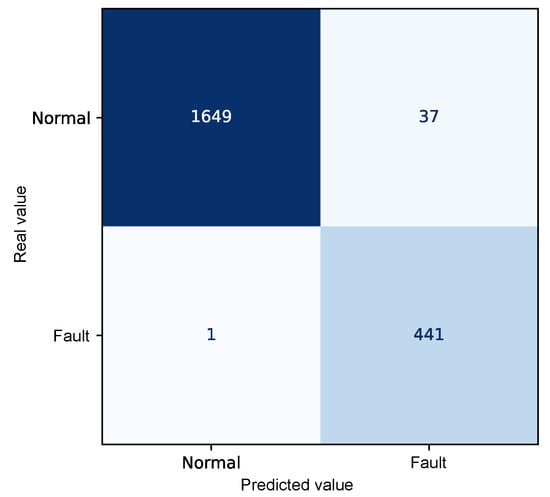

The autoencoder model was evaluated using confusion matrix analysis applied to the combined test and fault dataset, comprising a total of 2128 instances. Figure 8 presents the confusion matrix derived from the reconstruction errors of the autoencoder, summarizing the relationship between the predicted values and the actual class labels “Normal” and “Fault”.

Figure 8.

Confusion matrix relating the autoencoder’s reconstruction error to the detection threshold.

The resulting confusion matrix shows that 1649 observations corresponding to the normal operating state exhibited reconstruction errors below the defined threshold and were, therefore, correctly identified as regular operating conditions. Similarly, 441 records associated with faulty conditions presented reconstruction errors above the threshold, correctly indicating the presence of a fault.

However, 38 misclassifications were observed. In 37 of these cases, normal operating data produced reconstruction errors above the threshold, leading to their incorrect identification as anomalous conditions (false positives). Only one faulty instance exhibited an error below the threshold and was not detected by the model, constituting a false negative.

The quantitative performance analysis of the model, based on metrics derived from the confusion matrix, demonstrates a high discriminative capability for fault detection, as shown in Table 4.

Table 4.

Model evaluation of the reconstruction error detection model on simulated data from normal and faulty operation.

The overall accuracy achieved was 98.2%, reflecting the total percentage of correctly detected instances. A precision of 99.9% was observed for the “Normal” class and 92.3% for the “Fault” class, indicating that most samples identified as normal or faulty indeed correspond to their actual class labels. The recall rate, which expresses the proportion of correctly identified instances within each class, was 97.8% for the “Normal” class and 99.8% for the “Fault” class, highlighting the model’s effectiveness in detecting anomalous events.

Additionally, the F1-Score, defined as the harmonic mean between precision and recall, reached 98.9% for the “Normal” class and 95.9% for the “Fault” class, reinforcing the model’s balance between sensitivity and specificity. Both macro and weighted averages also support the robustness of the model, with all metrics exceeding 96%, indicating consistent performance even in the presence of class imbalance.
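The reported figures can be cross-checked directly from the confusion matrix counts given above (1649 true negatives, 37 false positives, 1 false negative, 441 true positives, with “Fault” as the positive class). The short Python sketch below is only a verification aid; the helper names are hypothetical.

```python
def class_metrics(tp, fp, fn):
    """Precision, recall and F1-score for one class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def pct(value):
    """Express a ratio as a percentage rounded to one decimal place."""
    return round(100 * value, 1)


# Counts from the confusion matrix (positive class = "Fault").
tn, fp, fn, tp = 1649, 37, 1, 441

accuracy = (tp + tn) / (tp + tn + fp + fn)
fault = class_metrics(tp=tp, fp=fp, fn=fn)
# For the "Normal" class the roles swap: TN are its hits,
# FN are wrongly labeled normal, FP are normal samples it missed.
normal = class_metrics(tp=tn, fp=fn, fn=fp)

print(pct(accuracy))
print([pct(v) for v in normal])
print([pct(v) for v in fault])
```

Deriving the per-class values from the four counts makes it easy to confirm that the accuracy, precision, recall, and F1-score quoted in the text are mutually consistent.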

It is important to emphasize that, since this is an unsupervised approach based on autoencoders, the separation between “Normal” and “Fault” instances does not result from a conventional supervised classification process. Instead, anomaly detection is conducted by comparing the reconstruction error generated by the model against a predefined decision threshold. The effectiveness of the method is, therefore, directly linked to the autoencoder’s ability to accurately reconstruct normal operating patterns, such that significant deviations in reconstruction error can be interpreted as potential indicators of failure.

In a realistic application, fault identification is not based solely on checking the reconstruction error at each observation, but rather on the persistence of deviations over time. The simulated data representing the fault condition were therefore submitted to the detection logic using the temporal persistence rule, which establishes that the transition from the normal operating state to the anomalous state is only confirmed when the reconstruction error exceeds the detection threshold in a sustained manner for a window of $n$ points, in this case, $n = 20$ consecutive samples.

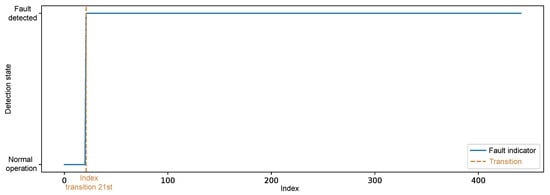

According to the reconstruction error of the fault data, it was observed that the first observation of the set was below the threshold, as shown in Figure 7; however, the function responsible for detection only classified the change of state to an anomalous condition from the 21st observation, when the persistence of the error was validated, as illustrated in Figure 9.

Figure 9.

Detection state over time of simulated failure data.

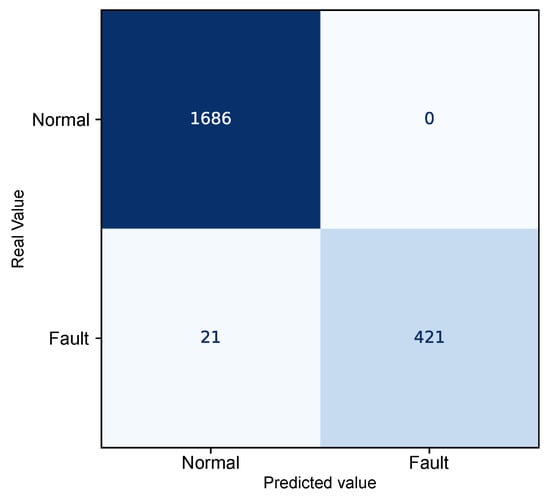

After applying the persistence window, the model’s final metrics were obtained, now adjusted to reflect only sustained state changes over time. These classifications were then compared with the actual labels of the instances, enabling a quantitative assessment of the model’s performance. Figure 10 shows the resulting confusion matrix after applying the persistence rule, accompanied by the main evaluation metrics presented in Table 5.

Figure 10.

Confusion matrix after persistence window.

Table 5.

Classification performance evaluation after applying the persistence rule on simulated data of normal operation and failure.

The performance metrics obtained after applying the persistence rule show the robustness of the proposed approach. The overall accuracy was 99.0%, with high F1-scores for both the “Normal” class (99.4%) and the “Failure” class (97.6%), indicating a balance between precision and recall.

The recall of the “Normal” class was 100%, which means that all instances of regular operation were correctly recognized, without generating undue alarms. This result reinforces the importance of the persistence rule as an effective strategy for mitigating false positives, by requiring that deviations in the reconstruction error remain above the threshold for a minimum time interval before concluding that a fault has occurred. This requirement acts as a temporal filter, capable of ruling out one-off fluctuations or transient noise that could otherwise lead to erroneous classifications.

On the other hand, the recall of the “Failure” class was 95.2%, reflecting the existence of 21 observations which, although fully associated with the anomalous regime, were not immediately classified as a failure. This stems directly from the time window defined in the persistence rule, which requires the anomalous condition to persist for at least 20 consecutive samples for the change of state to be confirmed. Although this approach introduces an inherent detection delay, it contributes to system reliability by prioritizing signaling stability and avoiding hasty detections.
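The reported figures can be cross-checked with a short calculation. Since the recall of the “Normal” class is 100%, no normal instance was flagged as a failure, so the precision of the “Failure” class must be 100%. The sketch below combines that precision with the reported recall of 95.2%; the helper name `f1_score` is illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Normal recall = 100% implies zero false positives for the Failure class.
failure_precision = 1.0
failure_recall = 0.952  # value reported in the text
failure_f1 = f1_score(failure_precision, failure_recall)  # approximately 0.975
```

The result is in line with the reported F1-score of 97.6%, with the small difference attributable to the rounding of the recall.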

Regarding the quantification of how early the model can detect anomalies before actual failure, it is important to highlight a key limitation of the simulated dataset. As described in [23], the simulation framework does not model the complete progression of failures until the end of the asset’s life. Instead, it introduces three predefined levels of degradation severity, which allow the system to distinguish between healthy and degraded states, but not to estimate time-to-failure or the full degradation trajectory. Consequently, the current model’s output enables the identification of anomalous behavior indicative of early-stage degradation but does not allow for the precise quantification of advance warning time. Extending the simulation framework to include full-life failure progression would be necessary to assess detection lead time with greater precision.

Initially, the proposed methodology was validated using simulated fault data, which provided a controlled environment for analyzing the model’s response to well-defined anomalous patterns. Given the promising results obtained in the simulation, we moved on to a more challenging stage, representative of the real context, using historical data from EDP’s wind turbine SCADA system. The aim of this transition is to assess the model’s generalizability in the face of the complexities inherent in field operation, such as noise, operational variability, transient events, and unmodeled uncertainties. The results obtained from applying the model to real data are presented below.

4.2. EDP Database

The dataset used in this study comes from the Energias de Portugal (EDP) Open Data platform (https://www.edp.com/en/innovation/data, accessed on 12 July 2024) and was collected from an offshore wind farm located in the Gulf of Guinea, West Africa. It comprises continuous monitoring and fault history records for five wind turbines, equipped with SCADA systems that monitor several key parameters of the turbines’ main components, as well as environmental measurements. The data were collected at regular 10 min intervals between 1 January 2016 and 31 December 2017 and comprise 81 features.

The features include environmental parameters, such as wind speed and direction, and turbine operating conditions, such as component temperature and active power. Of these, 25 features are related to the temperature of the main turbine components, the notation and description of which are detailed in Table 6. It is important to note that some features, although associated with the same physical attribute, provide different statistics, such as average, minimum, maximum, and standard deviation, for the five turbines named T01, T06, T07, T09, and T11.

Table 6.

SCADA temperature features.

According to ISO 16079 [25], the evolution of a mechanical failure can be detected initially through anomalies in vibration signals, often acquired by condition monitoring systems (CMS), followed by oil degradation, acoustic noise, and temperature, which is usually monitored by the SCADA system, until the problem becomes visually perceptible. However, due to limitations of the dataset employed in this study, the available monitoring variables are restricted to SCADA measurements. As such, the present methodology focuses exclusively on thermal variables to identify anomalies indicative of potential failure. This constraint inherently reduces the observability of early-stage degradation processes and may delay detection in comparison to methodologies that leverage higher-sensitivity signals such as vibration. Despite this limitation, prior studies such as [26] have demonstrated that temperature remains a relevant indicator for the detection of specific failure modes, particularly in components such as transformers and bearings, where thermal imbalance is a direct consequence of mechanical or electrical degradation. Therefore, although the absence of CMS data imposes constraints on fault detectability and temporal resolution, SCADA-based temperature monitoring still allows for the identification of deviations consistent with abnormal operational behavior, especially in the later stages of fault development.

The dataset includes historical records, also called a logbook, covering 28 anomalies documented over two years of operation. These events are categorized by affected component, covering critical systems such as the generator, generator bearing, gearbox, transformer, and hydraulic group. The records, or logs, detail relevant information about each occurrence, such as the type of failure, its severity, and the approximate times at which the events were detected or reported.

According to [10], the anomaly file is filled in by the turbine operators, recording the moment a fault was detected, a component was affected, or a repair was carried out. However, during the analysis of the data made available by EDP’s Open Data platform, an inconsistency was identified: the SCADA data for Turbine 09 are not available, even though the logbook records several anomalies attributed to this unit. This absence prevents the recorded events from being correlated with the operating history of the turbine in question, limiting its use in quantitative analyses or in the validation of models. Table 7 presents a summary of the faults reported in the logbook.

Table 7.

Example summary of the turbine failure log.

The wind turbines are class 2 according to the [24] standard, have a three-bladed rotor with a diameter of 90 m and a hub height of 80 m, and are equipped with asynchronous generators connected to three-stage planetary gearboxes. The maximum rotor speed is 14.9 rpm, with a nominal power of 2 MW and a nominal wind speed of 12 m/s [27]. The technical data sheet is summarized in Table 8.

Table 8.

Technical description of each turbine.

4.3. Failure Mode and Symptoms Analysis

The analysis was conducted taking into account data availability and quality restrictions, so that only failure modes actually observed in the history could be analyzed. This decision aimed to optimize the study for failures that had concrete information in the maintenance log, minimizing the risk of including hypothetical scenarios or those that were poorly supported by real data.

In this sense, each fault identified was correlated with possible symptoms that manifest themselves before or during its occurrence, such as temperature variations. This mapping showed which variables are most relevant for the early detection of each fault, serving as a basis for the careful selection of signals to be used in training the autoencoder.

As a result of this targeted approach, a set of key characteristics and parameters capable of distinguishing the failure modes identified from the available field data was established and is consolidated in Table 9. This means that autoencoder training can focus on a representative dataset, prioritizing variables whose relationship with failures is backed up by concrete evidence.

Table 9.

Failure mode and symptoms analysis for wind turbine components.

In addition, the FMSA adaptation used allows efforts to be concentrated on components and subsystems whose criticality has been confirmed by real occurrences, avoiding overloading the models with speculative or inadequately documented failure scenarios. As a result, the analysis increases the reliability and coherence of the set of variables that will be used in training, ensuring that each failure mode and its symptoms are given due prominence in the modeling and anomaly detection process.

4.4. Data Extraction

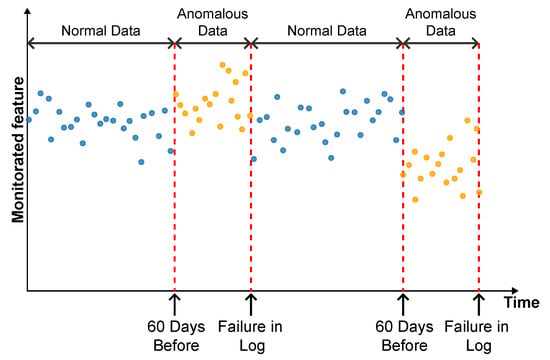

As highlighted by [22], there is evidence that, in this database, incipient failures can manifest themselves in the monitoring data up to 60 days before their effective detection. In this work, this hypothesis was adopted to delimit the data classified as normal, so as to avoid including possible anomalies in the training set of the detection model, as illustrated in Figure 11.

Figure 11.

Extraction of normal data.

As described by [10], the log is filled in by the machine operators, which makes it impossible to be certain as to the exact moment when the failure began or whether the date recorded corresponds to a turbine shutdown for maintenance, as it is possible to observe signs of failures on dates prior to the official date of the log.

In order to analyze the fault data, a period of approximately 40 days prior to logging was defined for each failure mode studied, as the aim of this work is to evaluate the ability of the tools developed to detect the recorded failure, avoiding the inclusion of possible previous failures that were not actually recorded.
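The two time windows described in this section can be sketched as a simple labeling function. This is an illustration under stated assumptions, not the extraction code used in the study: the function name `label_timestamp`, the three label strings, and the example dates are hypothetical. In particular, treating records after the logged date as normal is an assumption, since the text does not specify how they were handled.

```python
from datetime import datetime, timedelta
from typing import Sequence


def label_timestamp(
    ts: datetime,
    failure_times: Sequence[datetime],
    exclusion_days: int = 60,
    fault_days: int = 40,
) -> str:
    """Label a SCADA record by its lead time to any logged failure.

    "fault":    within `fault_days` before a logged failure
    "excluded": within `exclusion_days` before a logged failure, but
                outside the fault window (kept out of the training set)
    "normal":   everything else (assumption: includes post-failure data)
    """
    leads = [ft - ts for ft in failure_times]
    if any(timedelta(0) <= lead <= timedelta(days=fault_days) for lead in leads):
        return "fault"
    if any(timedelta(0) <= lead <= timedelta(days=exclusion_days) for lead in leads):
        return "excluded"
    return "normal"


# Hypothetical logbook entry, for illustration only.
logged = [datetime(2017, 6, 1)]
labels = [
    label_timestamp(datetime(2017, 5, 20), logged),  # 12 days before the log
    label_timestamp(datetime(2017, 4, 10), logged),  # 52 days before the log
    label_timestamp(datetime(2017, 1, 1), logged),   # well before the log
]
```

Records that fall between 40 and 60 days before a logged event are used neither for training nor for fault analysis in this sketch, which reflects the two different windows adopted above.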

4.5. Data Preparation

After selecting the most representative characteristics, the data were subjected to a preparation process to make them suitable for the subsequent modeling stages. First, the data were cleaned, eliminating records with missing values. Subsequently, the data were converted and restructured into a format compatible with the analysis algorithms, so that all the variables were in numerical format and organized in a matrix structure.

The occurrence of atypical values in the data, commonly known as “outliers”, can compromise the accuracy and reliability of the autoencoder, since these extreme values reflect measurement oscillations or unusual operating conditions that do not represent the standard behavior of the system. After separating the records considered “normal”, i.e., with the machine operating without faults, specific filtering was carried out to remove possible outliers. This ensured that the dataset used for training was effectively representative of the standard operating regime.

It is important to note that this filtering applies exclusively to data labeled as normal, since the purpose is to eradicate possible incorrect readings from the sensors or discrepant values that would not manifest themselves in genuinely normal operating circumstances. Data classified as anomalous or faulty are not subject to this removal.

The identification and filtering of extreme points in normal data was carried out using statistical methods based on the standard deviation and the interquartile range. According to established practice, any data point that falls below the lower limit is considered an outlier; such values are substantially lower than the majority of the dataset and are candidates for removal or further investigation. Conversely, any data point that exceeds the upper limit is also considered an outlier, since it is significantly higher in magnitude than the majority of the dataset.
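As an illustration of the interquartile-range part of this filtering, the sketch below computes lower and upper limits and keeps only the values between them. The multiplier of 1.5 (the common Tukey fences) is an assumption, since the exact limits are not stated here, and the function names and temperature values are hypothetical. The standard-deviation criterion is not shown.

```python
import statistics
from typing import List, Sequence, Tuple


def iqr_limits(values: Sequence[float], k: float = 1.5) -> Tuple[float, float]:
    """Return (lower, upper) limits as Q1 - k*IQR and Q3 + k*IQR.

    Assumption: k = 1.5, the conventional Tukey fence.
    """
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def remove_outliers(values: Sequence[float], k: float = 1.5) -> List[float]:
    """Keep only values inside the IQR-based limits."""
    lower, upper = iqr_limits(values, k)
    return [v for v in values if lower <= v <= upper]


# Hypothetical temperature readings from normal operation (degrees Celsius).
readings = [60.0, 61.0, 62.0, 63.0, 64.0, 65.0, 66.0, 67.0, 120.0]
filtered = remove_outliers(readings)  # the isolated 120.0 reading is dropped
```

Consistent with the text, such a filter would be applied only to records labeled as normal, one feature at a time.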

After the cleaning and filtering stage, the data were standardized using the Z-score technique. For this purpose, a statistical standardizer was used, which calculates the mean and standard deviation of the attributes from a reference subset. In this study, the reference sample was made up exclusively of data labeled as “normal operation”, in order to prevent the presence of anomalies from influencing the calculation of the standardization metrics.

This processing ensures that all attributes contribute equally to the training of the autoencoder model. Without this standardization, variables with greater numerical amplitude could dominate the learning process, compromising the model’s ability to capture subtle patterns or correlations between attributes of different scales. The decision to calibrate the standardizer only with normal data maintains the model’s consistency with its operating principle: learning to reconstruct only typical operating patterns, from which significant deviations are interpreted as potential anomalies.
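A minimal sketch of this standardization step for a single feature is given below. The function names and the numerical values are hypothetical, and the use of the population standard deviation is an assumption, as the text does not state which estimator the standardizer uses.

```python
import statistics
from typing import List, Sequence, Tuple


def fit_standardizer(normal_values: Sequence[float]) -> Tuple[float, float]:
    """Estimate mean and standard deviation from normal-operation data only.

    Assumption: population standard deviation (divides by N).
    """
    return statistics.fmean(normal_values), statistics.pstdev(normal_values)


def standardize(values: Sequence[float], mean: float, std: float) -> List[float]:
    """Apply the Z-score transform using previously fitted statistics."""
    if std == 0:
        raise ValueError("standard deviation is zero; feature is constant")
    return [(v - mean) / std for v in values]


# Hypothetical values for one temperature feature.
normal_temps = [60.0, 62.0, 64.0, 66.0, 68.0]
fault_temps = [80.0]

mean, std = fit_standardizer(normal_temps)      # fitted on normal data only
z_normal = standardize(normal_temps, mean, std)
z_fault = standardize(fault_temps, mean, std)   # same statistics reused
```

Because the statistics come only from normal data, an abnormal reading keeps a large standardized value instead of being absorbed into the mean and standard deviation.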

4.6. Development of Autoencoder for Fault Detection

After preprocessing, the autoencoder model was implemented and trained. The selected architecture was a fully connected multi-layer perceptron. Training was performed with the hyperparameters described in Table 10, over 150 epochs, ensuring model convergence.

Table 10.

Autoencoder hyperparameters.

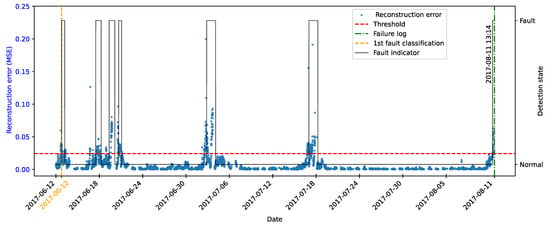

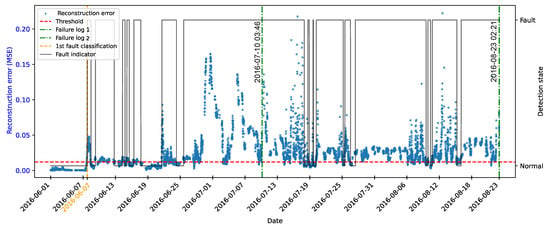

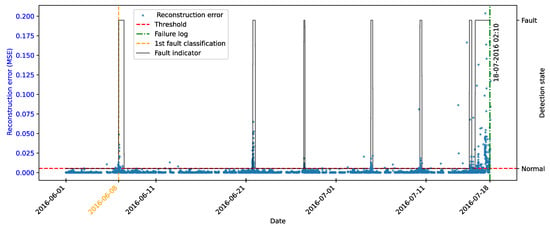

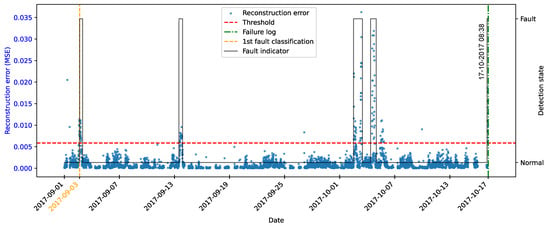

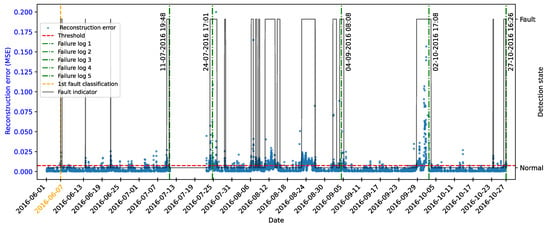

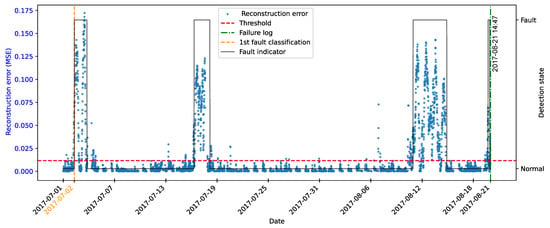

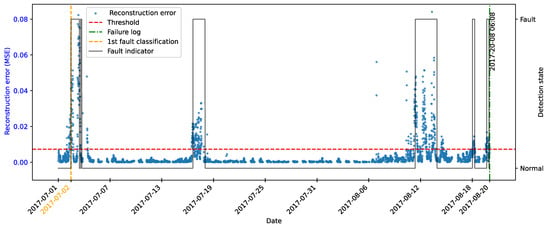

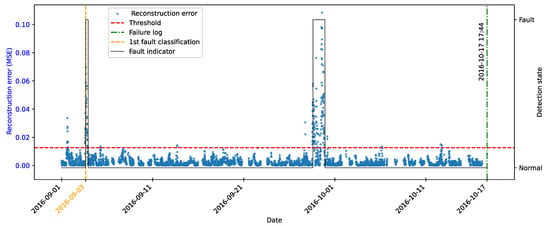

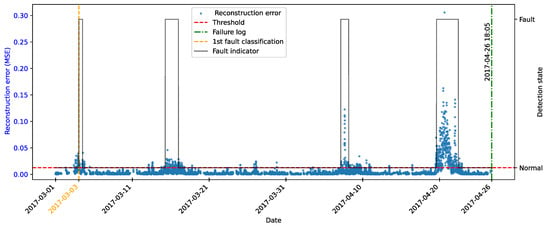

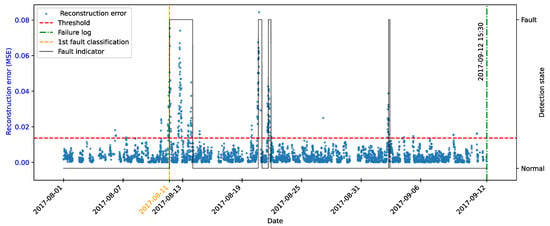

The autoencoder was developed to compress the input data (encoding phase) and then reconstruct them (decoding phase), leading the model to learn the fundamental characteristics of the normal operating regime. The reconstruction error is monitored while the model is in use, so that behavior deviating significantly from these patterns can be detected and characterized as an anomaly or incipient fault.
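The encode, decode, and reconstruction-error cycle can be sketched with a small fully connected autoencoder written in NumPy. This is not the model used in the study: only the multi-layer perceptron architecture and the 150 epochs come from the text, while the single hidden layer of two units, the tanh activation, the learning rate, the full-batch gradient descent, and the synthetic data are all assumptions made for illustration. The hyperparameters of Table 10 are not reproduced.

```python
import numpy as np


def train_autoencoder(X, hidden=2, epochs=150, lr=0.05, seed=0):
    """Train a one-hidden-layer autoencoder with full-batch gradient descent.

    Returns the parameters and the mean squared error at each epoch.
    """
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    W1 = rng.normal(0.0, 0.3, size=(d, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.3, size=(hidden, d))
    b2 = np.zeros(d)
    losses = []
    for _ in range(epochs):
        H = np.tanh(X @ W1 + b1)        # encoding phase
        X_hat = H @ W2 + b2             # decoding phase
        diff = X_hat - X
        losses.append(float(np.mean(diff ** 2)))
        # Backpropagation of the mean squared error
        g_out = 2.0 * diff / diff.size
        g_hid = (g_out @ W2.T) * (1.0 - H ** 2)
        W2 -= lr * (H.T @ g_out)
        b2 -= lr * g_out.sum(axis=0)
        W1 -= lr * (X.T @ g_hid)
        b1 -= lr * g_hid.sum(axis=0)
    return (W1, b1, W2, b2), losses


def reconstruction_error(params, X):
    """Per-sample mean squared reconstruction error."""
    W1, b1, W2, b2 = params
    X_hat = np.tanh(X @ W1 + b1) @ W2 + b2
    return np.mean((X_hat - X) ** 2, axis=1)


# Synthetic "normal" data: four standardized features that move together.
rng = np.random.default_rng(1)
z = rng.normal(size=(500, 1))
X_normal = z @ np.ones((1, 4)) + 0.1 * rng.normal(size=(500, 4))

params, losses = train_autoencoder(X_normal)
normal_err = reconstruction_error(params, X_normal)
# A sample that breaks the learned correlation between features.
anomaly_err = reconstruction_error(params, np.array([[3.0, -3.0, 3.0, -3.0]]))
```

Because the model only learns the joint behavior present in the normal data, a sample that violates it is reconstructed poorly, and its error can then be compared against the decision threshold.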