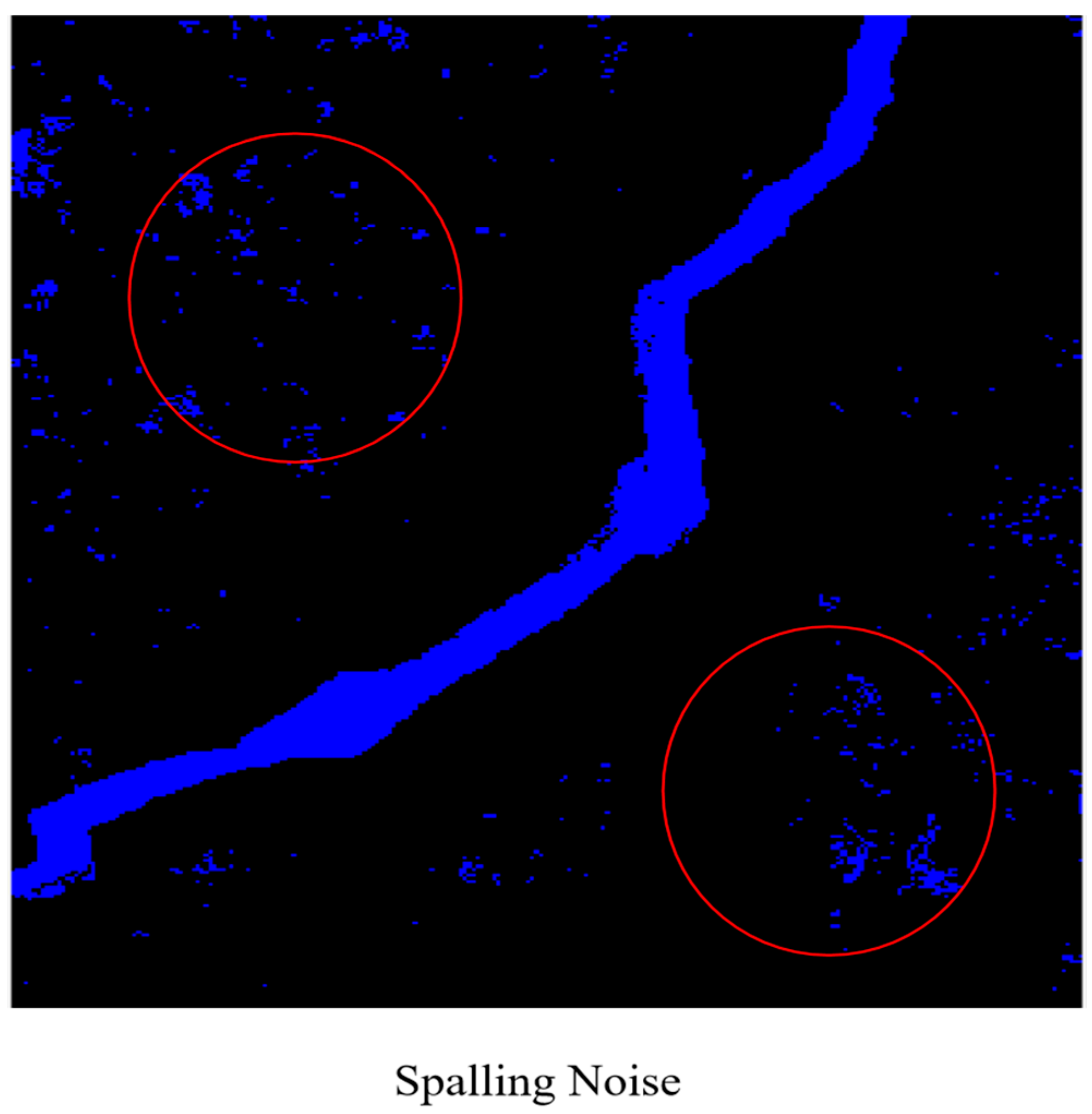

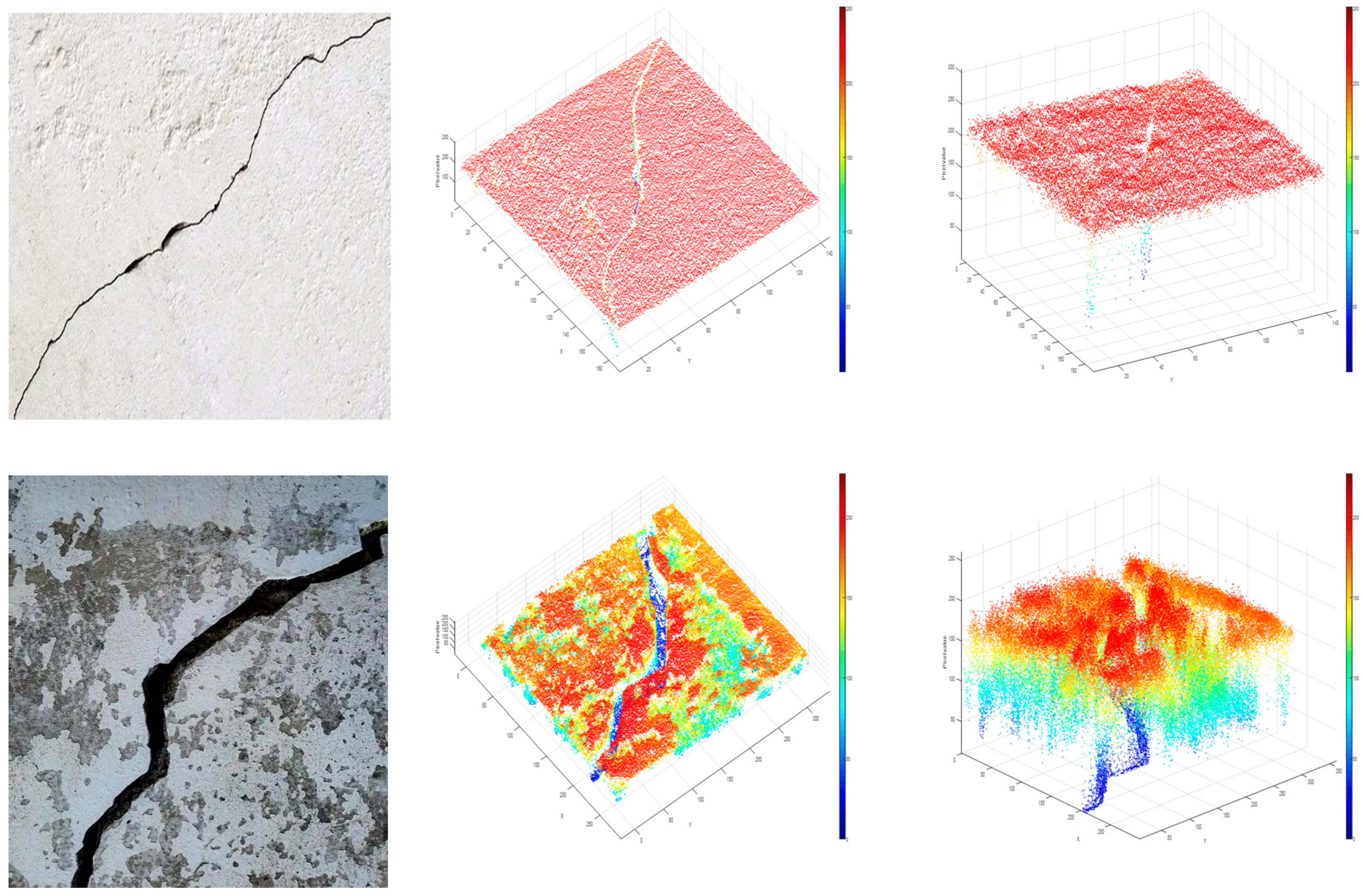

Traditional segmentation algorithms struggle with complex scenarios like blurred crack edges and noise. This study focuses on the fuzzy C-means (FCM) algorithm. It compares FCM’s performance with hard-clustering algorithms (C-means, K-means) and density-based algorithms (DBSCAN). The aim is to offer a more robust segmentation solution for feature fusion-based crack detection using FCM.

3.2. Comparison of Different Clustering Algorithms

This study conducted the following experiments to evaluate the crack segmentation quality of different algorithms by controlling their respective parameters:

C-Means Algorithm: The key parameter is the number of cluster centers. Repeated experiments showed that setting the number of cluster centers to 3 effectively distinguishes cracks, background, and other interfering objects (e.g., stains, textures), ensuring accurate crack segmentation. Increasing the number of cluster centers sometimes allows finer local distinctions in complex images, but the overall improvement in crack detection is limited and comes with increased computational complexity [21]. Therefore, the number of cluster centers was set to 3.

K-Means Algorithm: In addition to the number of cluster centers (also set to 3, as for the C-means algorithm), the key parameter is the number of iterations. Experiments with values of 50, 100, 150, and 200 showed that 100 iterations are sufficient for the algorithm to converge, giving stable results while avoiding the computational overhead of additional iterations. Thus, the number of iterations was set to 100 [22].

DBSCAN Algorithm: The key parameters are the neighborhood radius (ε) and the minimum number of samples (MinPts). After analyzing the distribution of crack image data and conducting multiple experiments, it was determined that setting ε to 3 and MinPts to 10 best preserves the integrity of cracks while effectively removing scattered noise points, preventing over- or under-segmentation [5].

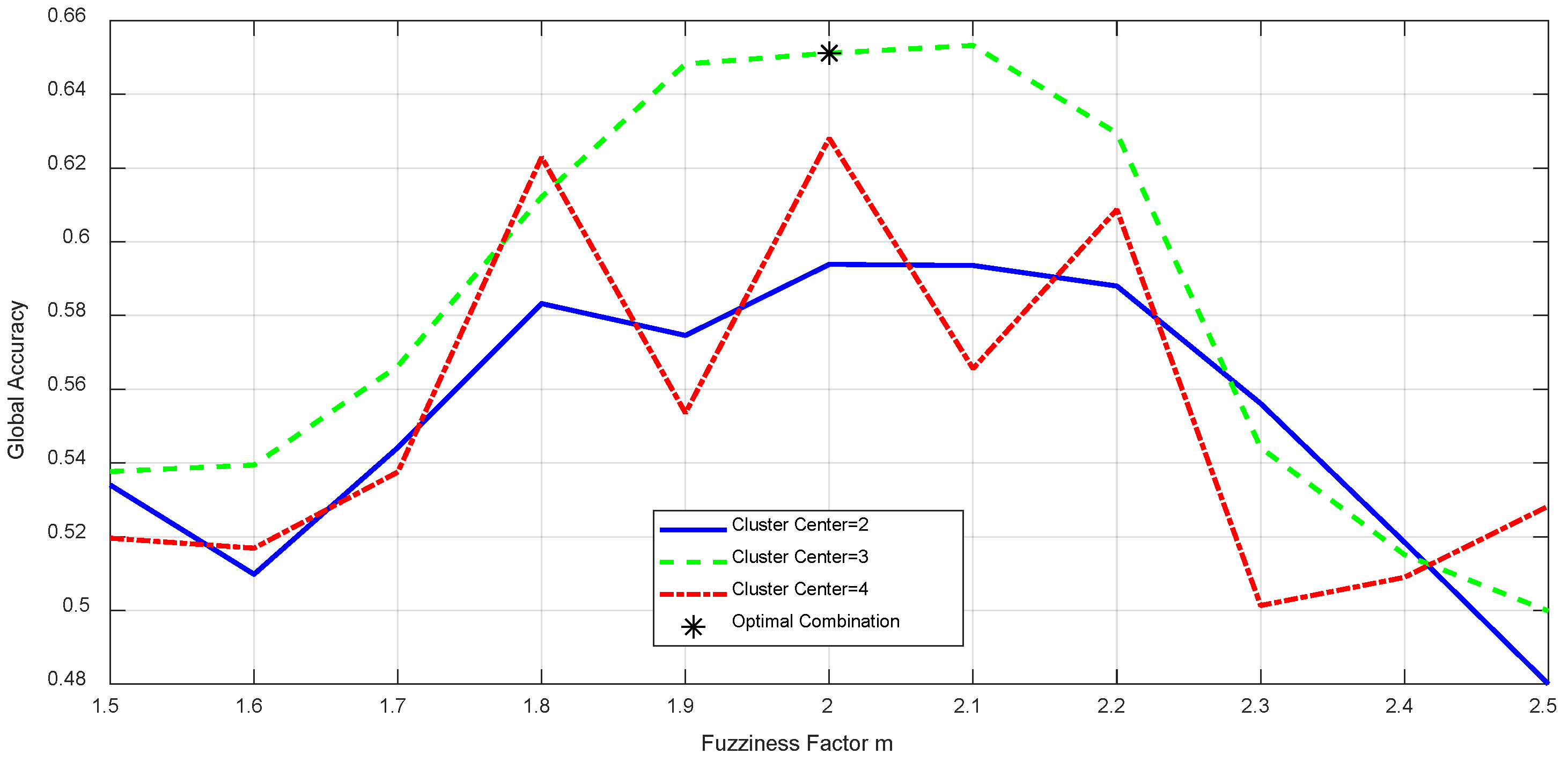

FCM Algorithm: The key parameters are the fuzziness factor (m) and the number of cluster centers. Experiments with m values from 1.5 to 2.5 in increments of 0.1 revealed that m = 2.0 achieves the best balance between segmentation accuracy, edge smoothness, and noise resistance.

The experimental results comparing the performance of fuzzy factors under different cluster centers are shown in Figure 12.

Both higher and lower m values can reduce crack recognition accuracy. The number of cluster centers was also set to 3, consistent with the C-means and K-means algorithms. This choice is based on the common categories of targets in crack images, and experiments showed that three cluster centers can effectively separate cracks, background, and interfering objects, ensuring accurate segmentation and facilitating comparison with other algorithms. The comparison results are presented in Table 2.

The data in Table 2 demonstrate that, for clustering algorithms that require the number of cluster centers to be selected, setting it to 3 is the most reasonable choice for crack segmentation.
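The FCM configuration described above (fuzziness factor m = 2.0, three cluster centers) can be illustrated with a minimal NumPy sketch of the standard FCM update rules. This is not the implementation used in the study: the function name `fuzzy_c_means`, the random initialization, the iteration cap, and the convergence tolerance are assumptions made for illustration.

```python
import numpy as np

def fuzzy_c_means(x, n_clusters=3, m=2.0, max_iter=100, tol=1e-5, seed=0):
    """Standard fuzzy C-means on x of shape (n_samples, n_features).

    Returns (centers, memberships); memberships has shape
    (n_samples, n_clusters) and each row sums to 1.
    max_iter, tol, and seed are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    # Random initial memberships, normalized per sample.
    u = rng.random((x.shape[0], n_clusters))
    u /= u.sum(axis=1, keepdims=True)
    for _ in range(max_iter):
        w = u ** m
        # Centers are membership-weighted means of the samples.
        centers = (w.T @ x) / w.sum(axis=0)[:, None]
        dist = np.linalg.norm(x[:, None, :] - centers[None, :, :], axis=2)
        dist = np.fmax(dist, 1e-10)  # avoid division by zero
        # u_ij is proportional to d_ij^(-2/(m-1)), normalized over clusters.
        inv = dist ** (-2.0 / (m - 1.0))
        new_u = inv / inv.sum(axis=1, keepdims=True)
        converged = np.abs(new_u - u).max() < tol
        u = new_u
        if converged:
            break
    return centers, u
```

For an image channel, one would typically pass `channel.reshape(-1, 1).astype(float)` as `x` and take `memberships.argmax(axis=1)` as the hard label of each pixel.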

For each test image, the segmentation results of each algorithm are saved, and the corresponding evaluation metrics, including accuracy, recall, and Dice coefficient, are calculated to quantitatively assess the clustering and segmentation quality of the algorithms. The specific calculation methods are as follows:

1. Global Accuracy:

Defined as the ratio of correctly predicted pixels to the total number of pixels. This indicator reflects the overall performance of the model on all pixels.

TP (true positives): the number of positive-class samples that the model correctly predicts as positive.

TN (true negatives): the number of negative-class samples that the model correctly predicts as negative.

FP (false positives): the number of negative-class samples that the model incorrectly predicts as positive.

FN (false negatives): the number of positive-class samples that the model incorrectly predicts as negative.

2. Recall: the proportion of samples correctly predicted as positive by the model among all actual positive samples.

3. Dice coefficient (DC): the DC is used to evaluate the spatial overlap between the predicted crack regions and the true crack regions.
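The three metrics defined above can be computed directly from a predicted binary mask and a ground-truth mask. The sketch below uses the standard definitions, Accuracy = (TP + TN) / (TP + TN + FP + FN), Recall = TP / (TP + FN), and Dice = 2TP / (2TP + FP + FN), with crack pixels treated as the positive class. The function name `segmentation_metrics` and the handling of empty masks are assumptions for illustration.

```python
import numpy as np

def segmentation_metrics(pred, truth):
    """Accuracy, recall, and Dice for binary masks (crack = positive)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))      # crack predicted as crack
    tn = int(np.sum(~pred & ~truth))    # background predicted as background
    fp = int(np.sum(pred & ~truth))     # background predicted as crack
    fn = int(np.sum(~pred & truth))     # crack predicted as background
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    # Assumed conventions for degenerate cases with no positive pixels.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    dice = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 1.0
    return {"accuracy": accuracy, "recall": recall, "dice": dice}
```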

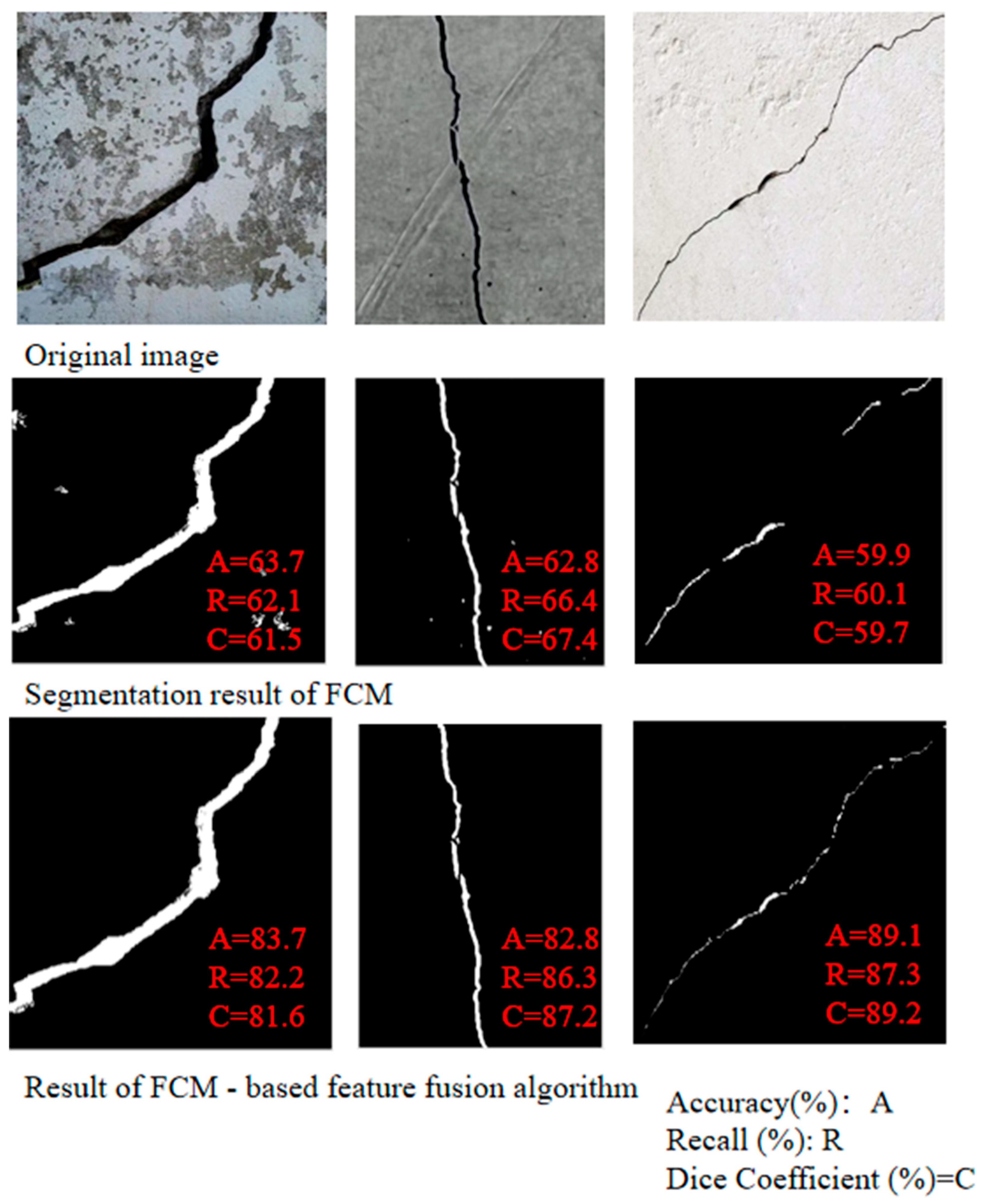

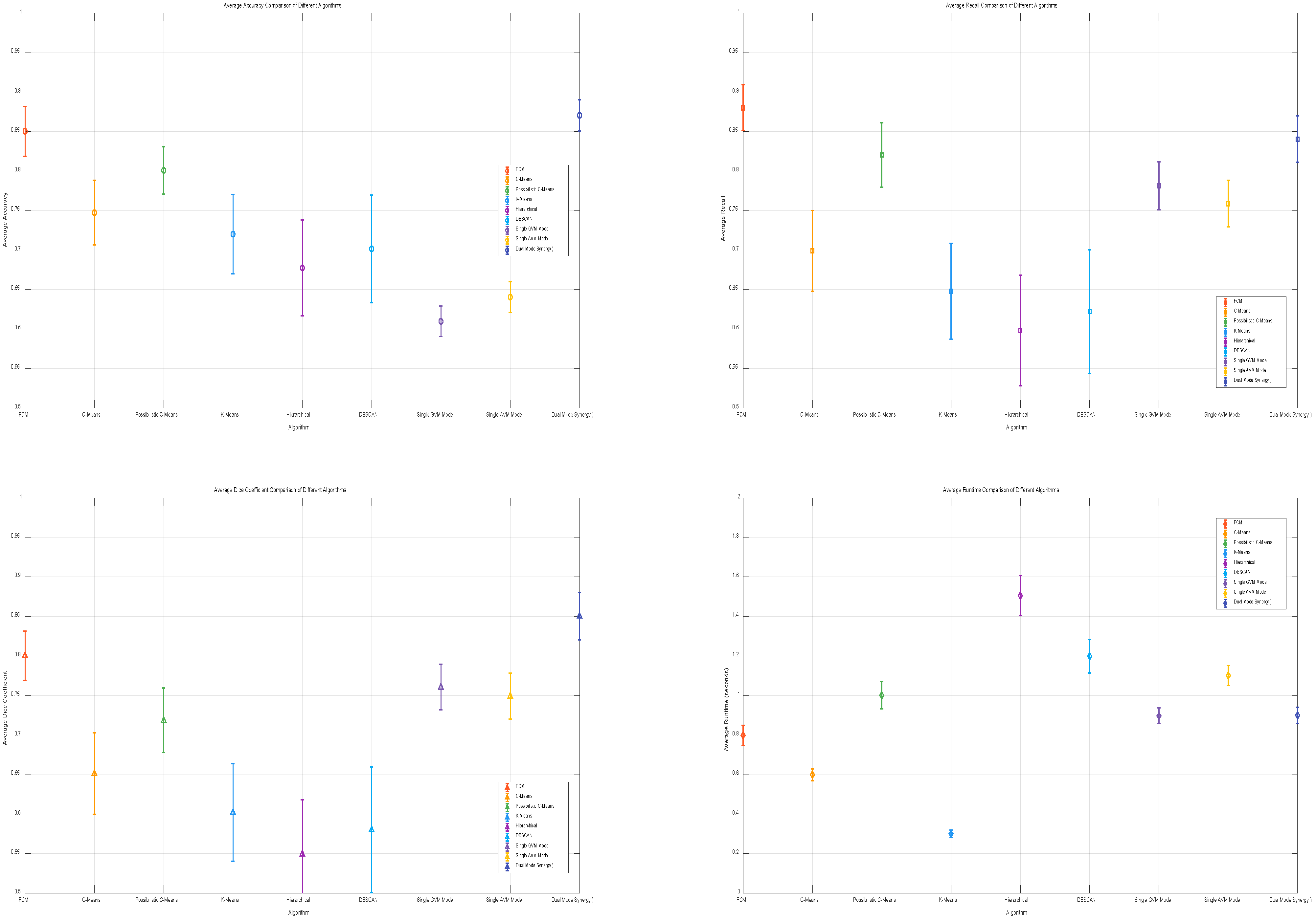

After carrying out ablation studies to pinpoint the optimal parameters for the FCM algorithm, we proceeded to experimentally assess different algorithms under fixed parameters. In the realm of concrete crack detection, we rigorously evaluated their segmentation effectiveness across multiple metrics, including accuracy, recall, Dice coefficient, and runtime. The results of this comparative analysis are visually represented in

Figure 13 and detailed in

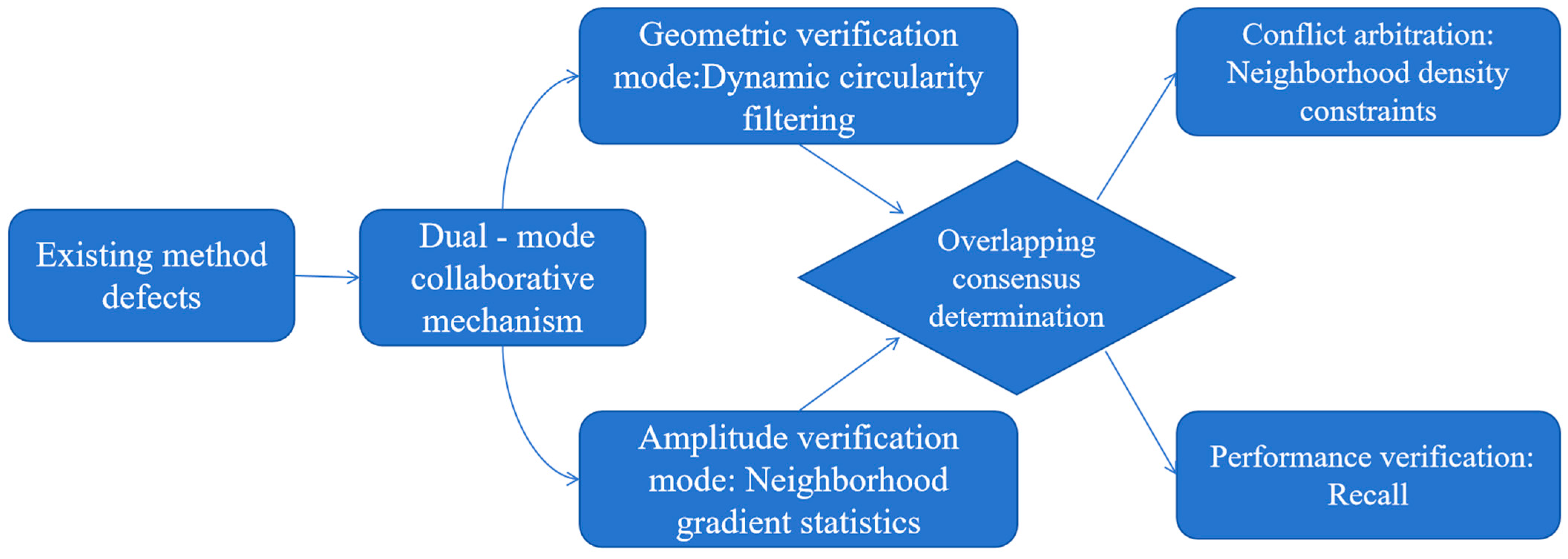

Table 3. Notably, the proposed dual-mode collaborative verification signifies a paradigm shift from conventional single-feature detection approaches. It ingeniously leverages the complementary nature of geometric and amplitude features to counteract the vulnerabilities inherent in traditional detection methods, thereby enhancing the overall performance and reliability of concrete crack detection.

Specific analyses are as follows:

Average Accuracy: the FCM algorithm shows the best accuracy, outperforming other algorithms such as C-means, possibilistic C-means, K-means, hierarchical, and DBSCAN; this suggests that FCM has a superior ability to recognize and classify pixels in crack segmentation.

Average Recall: the FCM algorithm has the highest recall, indicating its strong sensitivity in identifying crack pixels and effectively capturing crack details.

Average Dice Coefficient: the FCM algorithm achieves the best Dice coefficient, reflecting the highest similarity between segmentation results and ground truth; this further demonstrates its excellent performance in crack segmentation.

Average Runtime: although the runtime of FCM is longer than that of K-means, its significant advantages in accuracy, recall, and Dice coefficient make it a more effective choice for crack segmentation; the additional time is justified for applications requiring high precision and recall.

3.3. Parameter Selection Validation for the FCM-Based Feature Fusion Crack Segmentation Algorithm

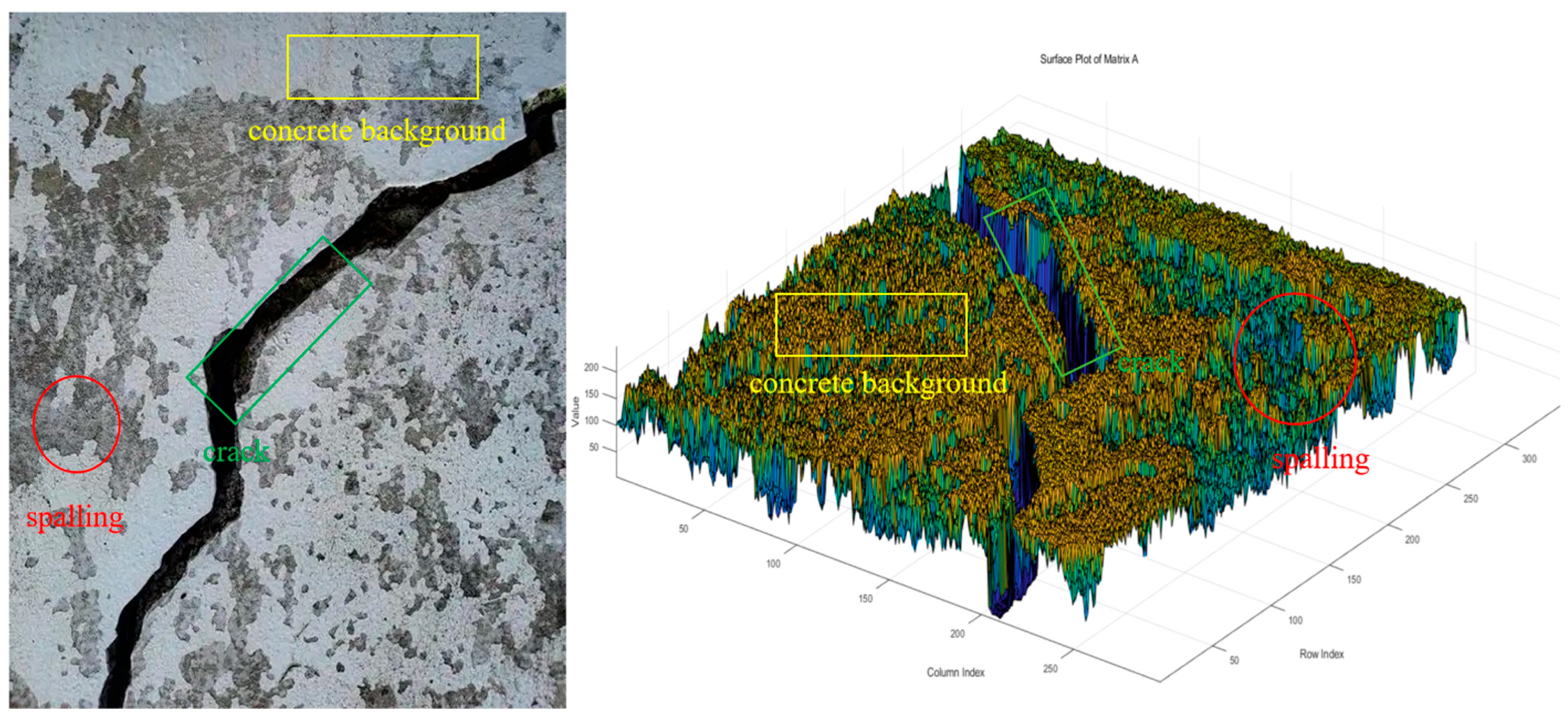

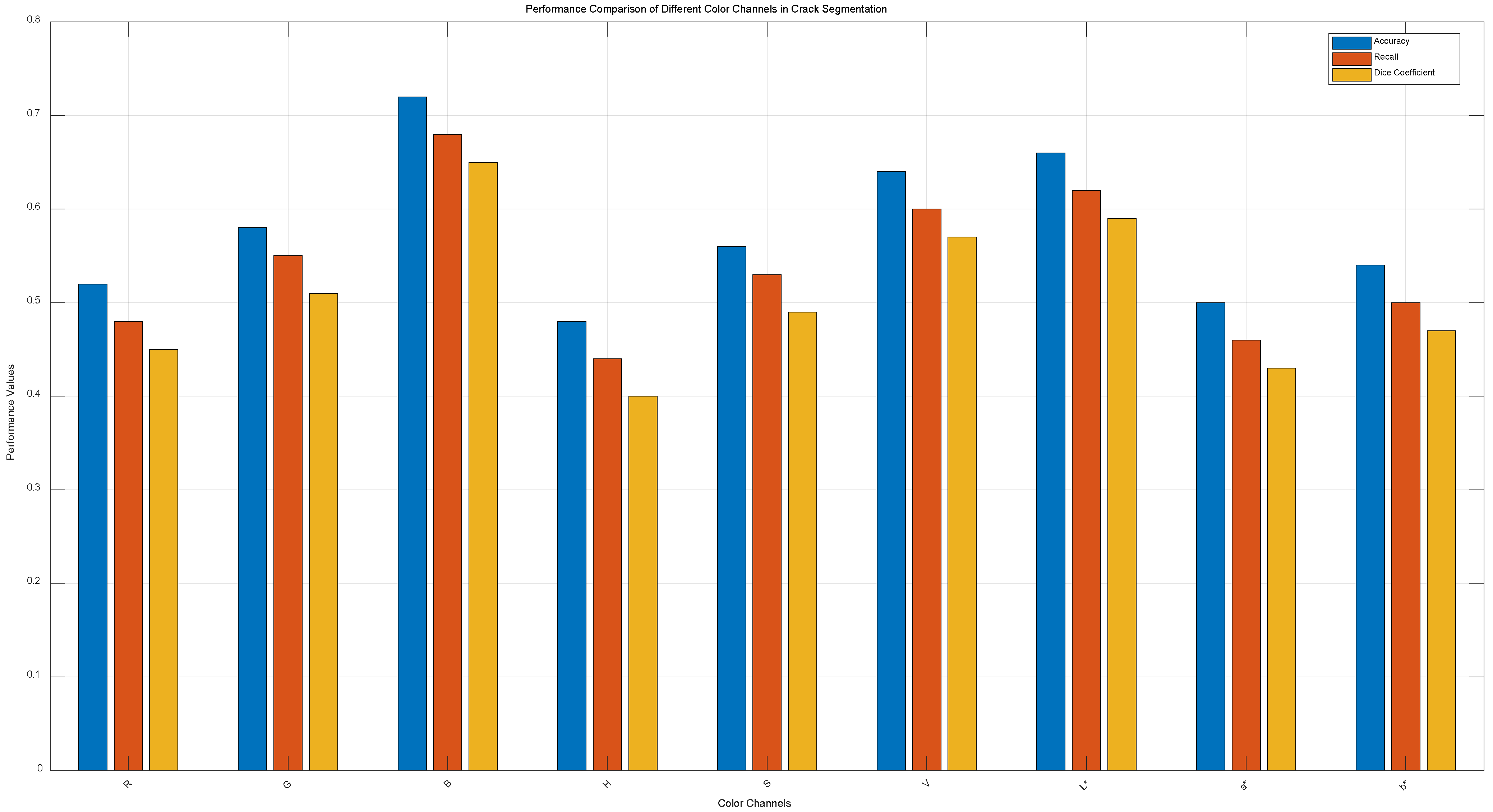

1. The method proposed in the paper selects the B-channel pixel values from RGB as representatives to simplify calculations.

To validate the B-channel’s advantage, a quantitative comparison of the segmentation performance of R/G/B channels, as well as the H/S/V channels in HSV and the L/a/b channels in Lab color spaces, was conducted.

The same batch of test images was used, with each channel individually input into the FCM algorithm to compute metrics like accuracy, recall, and Dice coefficient.

A statistical analysis of the segmentation performance across different channels was performed, and the results are shown in Figure 14, demonstrating that the B-channel in RGB yields superior segmentation results compared with the channels of the HSV and Lab color spaces.
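Feeding a single channel into a clustering algorithm amounts to flattening that channel into a one-feature-per-pixel array. A minimal sketch, assuming the image is a NumPy array of shape (height, width, 3) in R, G, B order (the helper name `channel_as_features` is hypothetical):

```python
import numpy as np

def channel_as_features(rgb_image, channel="B"):
    """Return one RGB channel as a float array of shape (n_pixels, 1).

    Assumes channels are stored in R, G, B order. Note that OpenCV's
    imread returns B, G, R order, so the indices would need to change.
    """
    index = {"R": 0, "G": 1, "B": 2}[channel]
    return rgb_image[..., index].reshape(-1, 1).astype(float)
```

The same pattern applies to H/S/V or L/a/b channels once the image has been converted to the corresponding color space.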

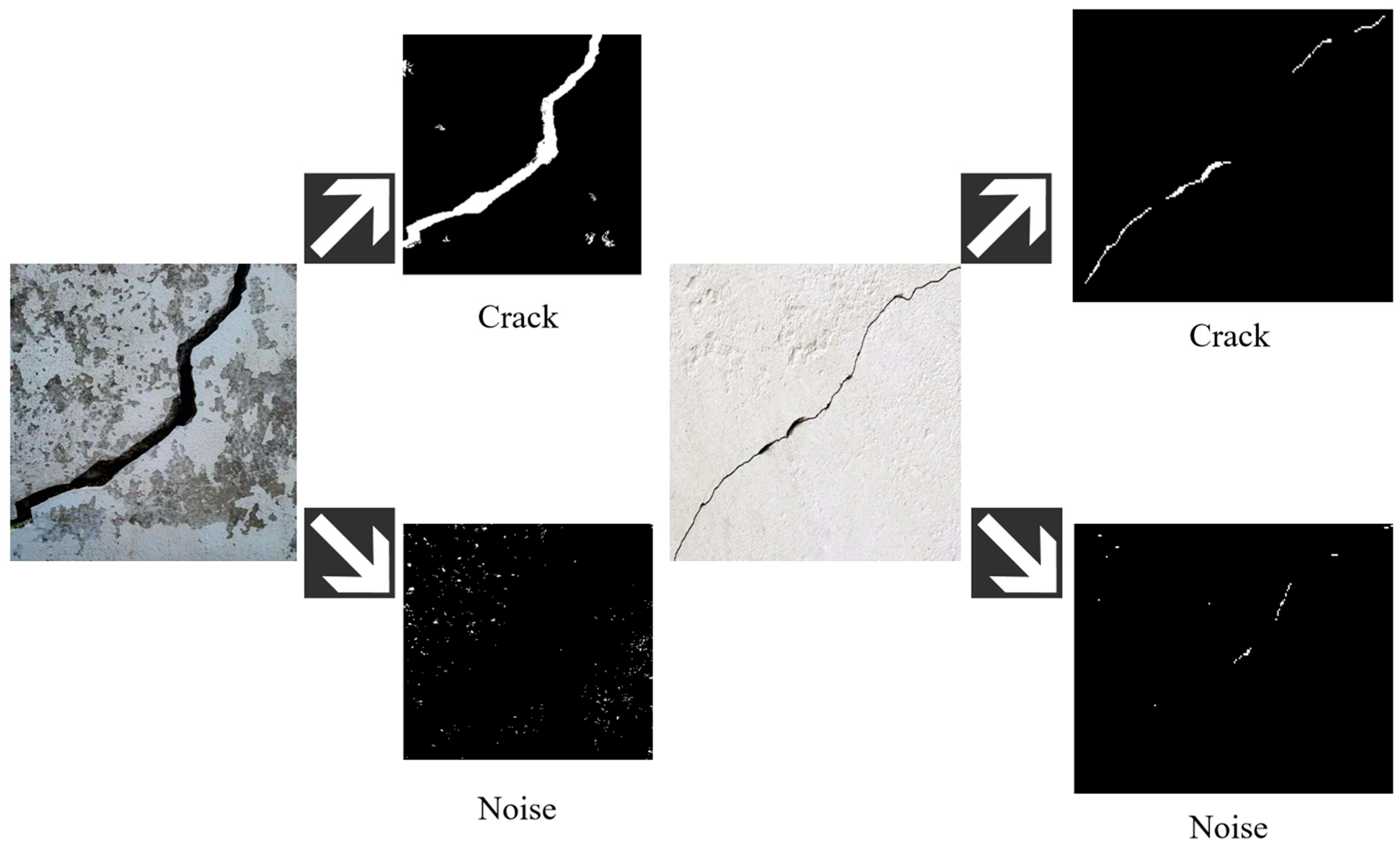

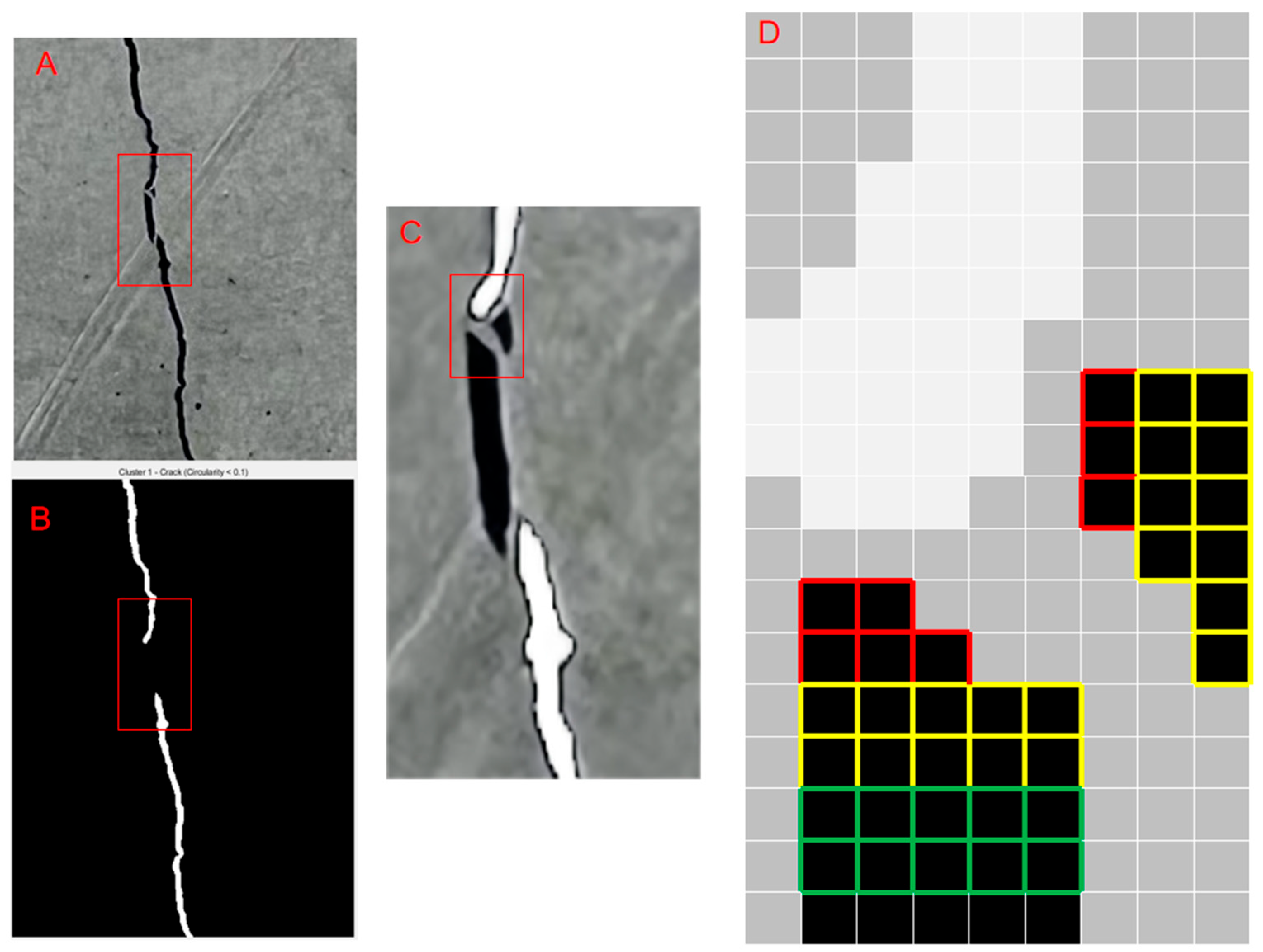

2. The algorithm proposed in the article uses the crack with the lowest circularity as a reference and searches for pixels within a neighborhood range that meet the pixel intensity criteria. This process aims to compensate for small cracks mistakenly deleted due to geometric feature misjudgments. After the search, the circularity corresponding to the number of pixels is matched to jointly identify cracks.
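For reference, circularity is commonly defined as 4πA / P², where A is the area and P the perimeter of a connected region; it equals 1 for a perfect circle and approaches 0 for elongated shapes such as cracks. The sketch below assumes this common definition, which may differ in detail from the one used in the paper.

```python
import math

def circularity(area, perimeter):
    """Common circularity measure 4*pi*A / P^2 (assumed definition)."""
    if perimeter <= 0:
        raise ValueError("perimeter must be positive")
    return 4.0 * math.pi * area / perimeter ** 2
```

Under this definition, a thin 1 × 100 pixel strip has a circularity of roughly 0.03, which is why low circularity is a useful cue for crack-like regions.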

Experiments are conducted to validate the neighborhood search range and k value for images of a fixed size. The study assesses the sensitivity of different neighborhood window sizes and k values to crack continuity restoration and noise introduction in 256 × 256 images. The experimental method tests neighborhood window sizes of 3 × 3, 5 × 5, and 7 × 7, with k values of 1.5, 2, and 2.5. The segmentation accuracy, recall rate, and Dice coefficient are evaluated, with a focus on crack discontinuity restoration and noise misidentification rates.

The impact of window size on computational complexity is also analyzed. Results are presented in Figure 15. The analysis shows that for fixed 256 × 256 images, the best segmentation results are achieved with a neighborhood size of 5 × 5 and a k value of 2.
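The neighborhood search can be pictured as scanning a window around each pixel of a reference crack and adding nearby pixels that satisfy an intensity criterion. The sketch below is a simplified illustration only: the function name `recover_neighbors` is hypothetical, and because the exact role of k is not defined in this section, the intensity criterion is reduced to a fixed, assumed `threshold` on the channel value.

```python
import numpy as np

def recover_neighbors(channel, crack_mask, window=5, threshold=60):
    """Add dark pixels found within a window x window neighborhood
    of any pixel in crack_mask (boolean array, same shape as channel).

    `threshold` is an assumed stand-in for the paper's intensity
    criterion; pixels with channel value <= threshold are accepted.
    """
    r = window // 2
    h, w = channel.shape
    recovered = crack_mask.copy()
    for y, x in zip(*np.nonzero(crack_mask)):
        # Clip the window at the image borders.
        y0, y1 = max(0, y - r), min(h, y + r + 1)
        x0, x1 = max(0, x - r), min(w, x + r + 1)
        patch = channel[y0:y1, x0:x1]
        recovered[y0:y1, x0:x1] |= patch <= threshold
    return recovered
```

A larger window reconnects more distant crack fragments but also scans more pixels per seed and admits more dark noise, which is the trade-off examined in the experiment above.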

3.4. Performance Evaluation of the FCM-Based Feature Fusion Crack Segmentation Algorithm

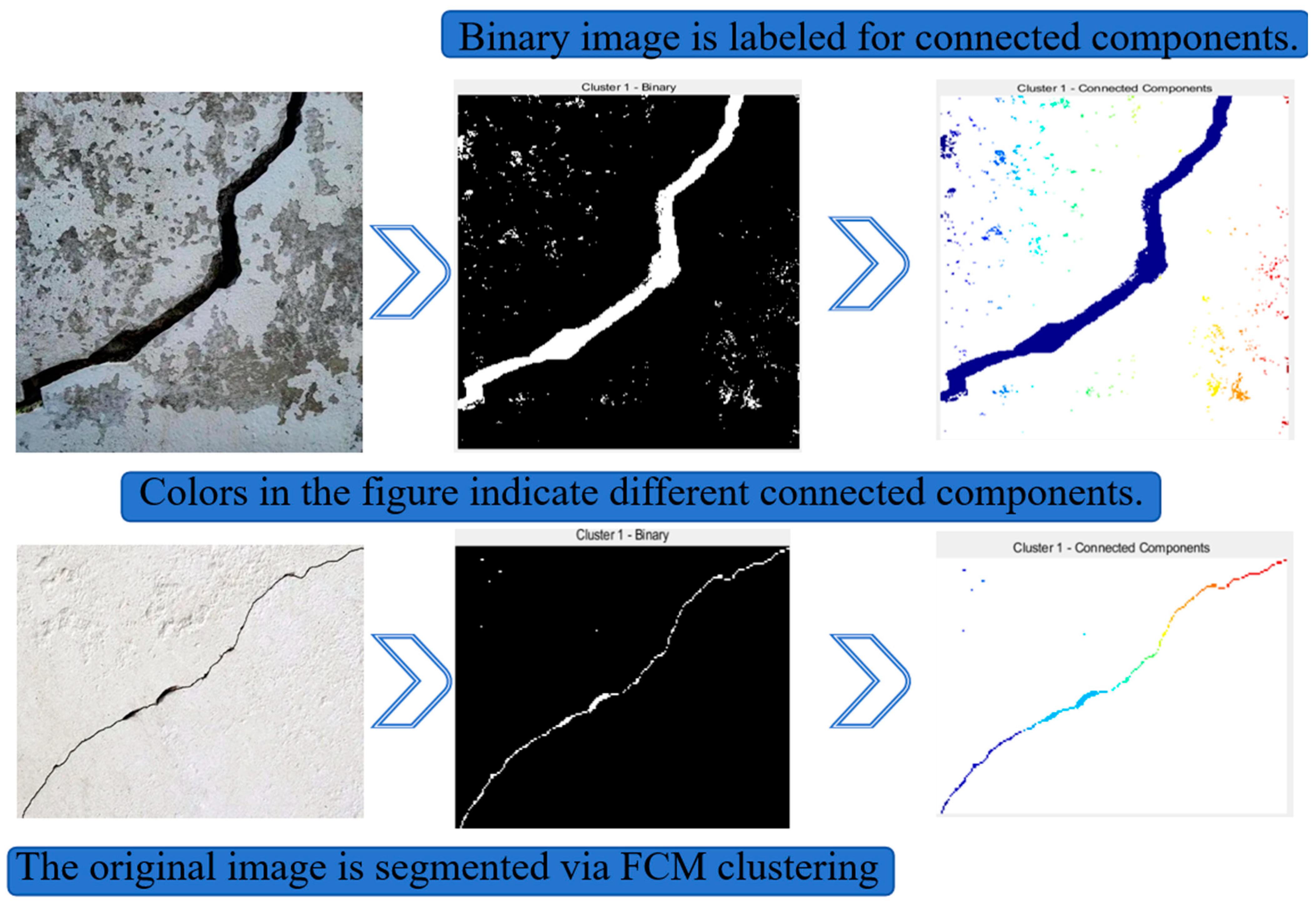

After demonstrating the rationale for choosing the FCM algorithm among clustering algorithms, this paper further enhances the semantic segmentation of cracks in concrete crack images by integrating connected region labeling with roundness and pixel feature analysis. The segmented data are organized and divided into training and testing datasets to train a model and evaluate the accuracy of image segmentation using the testing dataset. The proposed algorithm is compared with several existing methods: traditional image segmentation algorithms such as the Otsu algorithm (V. Vivekananthan et al., 2023) [23] and the region-growing algorithm (Li Yi et al., 2025) [24]; deep learning-based segmentation algorithms such as the basic U-Net network (Zhu Suya et al., 2019) [25] and SegNet (Badrinarayanan et al., 2017) [26]; FCM-related improved algorithms such as the genetic algorithm combined FCM (GACM) and the multi-space cooperative clustering FCM algorithm (Chen et al., 2020) [18]; and other advanced algorithms in relevant fields, such as Mask R-CNN (Yoro et al., 2020) [27] and DeepLabv3+ (Chen et al., 2017) [8].

Implementation details of the comparative algorithms are as follows:

To ensure the fairness of the experiments, all comparative algorithms are evaluated based on the same dataset, the same preprocessing procedure, and the same test set, and their hyperparameter settings and operating environment are recorded.

In the experiment, the detailed specifications of the computational platform are as follows:

Operating System: Windows 10 64-bit

CPU: Intel Core i7-9700K @ 3.60 GHz (Intel, Santa Clara, CA, USA)

GPU: NVIDIA GeForce RTX 2080 Ti (11 GB VRAM) (Nvidia, Santa Clara, CA, USA)

RAM: 32 GB DDR4

Programming Language: Python 3.8

Deep Learning Frameworks: TensorFlow 2.4.1, PyTorch 1.9.0

Image Processing Libraries: OpenCV 4.5.1, PIL 8.2.0

1. Deep Learning Algorithms

(1) U-Net

Network Structure: based on the original U-Net architecture [25], it consists of 4 downsampling layers and 4 upsampling layers.

The convolutional kernel size in each layer is 3 × 3, with ReLU as the activation function.

The output layer uses Sigmoid.

Optimizer: Adam optimizer with an initial learning rate of 0.001, decay coefficients β1 = 0.9 and β2 = 0.999.

Loss Function: Binary Cross-Entropy.

Training Strategy: Batch Size: 16; Epochs: 100.

Early Stopping: training terminates when the validation loss does not decrease for 5 consecutive rounds.

Data Augmentation: random horizontal flipping (probability 0.5), rotation (±15°), and brightness adjustment (±10%).

Pretrained Model: none (trained from scratch).
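The early-stopping rule stated for U-Net (stop once the validation loss has not decreased for 5 consecutive rounds) can be sketched in a framework-independent way. The function name `early_stop_epoch` is hypothetical, and the sketch assumes one validation loss per round and that "not decrease" means "no new minimum".

```python
def early_stop_epoch(val_losses, patience=5):
    """Return the 1-based round at which training would stop,
    or None if the patience limit is never reached."""
    best = float("inf")
    stale = 0  # consecutive rounds without a new best loss
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    return None
```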

(2) SegNet

Network Structure: uses SegNet-Basic [26], which has a symmetric encoder–decoder structure with max-pooling index preservation.

Optimizer: SGD (momentum 0.9) with an initial learning rate of 0.01, decaying by 0.1 times every 20 epochs.

Loss Function: Dice Loss + L2 regularization (weight decay coefficient 1 × 10⁻⁴).

Training Strategy: Batch Size: 8; Epochs: 120.

Data Augmentation: same as U-Net.
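The SegNet learning-rate schedule (initial rate 0.01, multiplied by 0.1 every 20 epochs) is a step decay. A minimal sketch, assuming zero-based epoch indices and the hypothetical helper name `step_lr`:

```python
def step_lr(epoch, base_lr=0.01, gamma=0.1, step_size=20):
    """Step-decay learning rate for a zero-based epoch index."""
    return base_lr * gamma ** (epoch // step_size)
```

In PyTorch, the same schedule is usually expressed with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=20, gamma=0.1)`.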

(3) Mask R-CNN

Backbone Network [26]: ResNet-50 (pre-trained on ImageNet).

Optimizer: AdamW with a learning rate of 0.0001.

Anchor Settings: anchor scales [32, 64, 128, 256] and aspect ratios [0.5, 1, 2].

Training Strategy: the first 3 layers of the backbone network are frozen, and fine-tuning is performed for 50 epochs.

2. Traditional Image Processing Algorithms

(1) Otsu Thresholding [23]

Gray-Level Number: 256 levels (consistent with the input image).

Multi-Threshold Extension: employs dual-threshold optimization [3] to segment background, noise, and cracks (number of classes = 3).

Post-processing: morphological opening (3 × 3 rectangular kernel) to remove small noise.
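The dual-threshold extension of Otsu's method chooses two thresholds that maximize the between-class variance of the three resulting classes. The sketch below is an exhaustive-search illustration of that idea, not the optimized procedure of the cited work; the function name `dual_otsu` is hypothetical, and the morphological opening step is omitted.

```python
import numpy as np

def dual_otsu(gray, levels=256):
    """Return thresholds (t1, t2) splitting gray levels into
    [0, t1), [t1, t2), [t2, levels) with maximal between-class variance.

    gray must be an array of non-negative integers below `levels`.
    """
    hist = np.bincount(gray.ravel(), minlength=levels).astype(float)
    p = hist / hist.sum()
    # Prefix sums: w[i] = sum of p[:i], s[i] = sum of j * p[j] for j < i.
    w = np.concatenate(([0.0], np.cumsum(p)))
    s = np.concatenate(([0.0], np.cumsum(p * np.arange(levels))))
    best_score, best_t = -1.0, (0, 0)
    for t1 in range(1, levels - 1):
        for t2 in range(t1 + 1, levels):
            score = 0.0
            for lo, hi in ((0, t1), (t1, t2), (t2, levels)):
                wk = w[hi] - w[lo]
                if wk > 0:
                    # w_k * mu_k^2; maximizing the sum over classes is
                    # equivalent to maximizing between-class variance.
                    score += (s[hi] - s[lo]) ** 2 / wk
            if score > best_score:
                best_score, best_t = score, (t1, t2)
    return best_t
```

Given the thresholds, `np.digitize(gray, [t1, t2])` assigns each pixel a class label of 0, 1, or 2.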

(2) Region-Growing Algorithm [24]

Seed Point Selection: Automatically selects the 10 points with the lowest B-channel pixel values as initial seeds.

Similarity Threshold: The difference between neighboring pixels and seed points is ≤15 (normalized threshold 0.06).

Stopping Condition: When the growth rate of the region area is <1% or the number of iterations ≥ 100.
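The seed selection and similarity rule above can be sketched as a breadth-first region growing. This is an illustrative simplification under stated assumptions: 4-connectivity is assumed, each candidate pixel is compared with the value of the seed it was grown from, and growth simply runs until no pixel can be added rather than applying the 1% growth-rate or 100-iteration stopping condition. The function name `region_grow` is hypothetical.

```python
from collections import deque

import numpy as np

def region_grow(channel, n_seeds=10, max_diff=15):
    """Grow regions from the n_seeds darkest pixels of a 2-D channel."""
    h, w = channel.shape
    # Indices of the darkest pixels in the flattened channel.
    flat_idx = np.argsort(channel, axis=None)[:n_seeds]
    mask = np.zeros((h, w), dtype=bool)
    queue = deque()
    for i in flat_idx:
        y, x = divmod(int(i), w)
        mask[y, x] = True
        queue.append((y, x, int(channel[y, x])))
    while queue:
        y, x, seed_val = queue.popleft()
        for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w and not mask[ny, nx]:
                # Accept the neighbor if it is close to the seed value.
                if abs(int(channel[ny, nx]) - seed_val) <= max_diff:
                    mask[ny, nx] = True
                    queue.append((ny, nx, seed_val))
    return mask
```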

(3) Improved FCM Algorithm

Fuzzy Factor: m = 2 (consistent with the method in this paper).

Spatial Constraint: introduces neighborhood spatial weights [6,9], with weight coefficient λ = 0.5.

These comparative experiments aim to validate the effectiveness and superiority of the proposed algorithm across multiple dimensions, including accuracy, recall, Dice coefficient, and runtime. The specific results are presented in Table 4. The qualitative results of different algorithms in complex scenarios are shown in Figure 16.

In Figure 16, panels A–H show, respectively, the original image and the results of the region-growing algorithm, SegNet, the genetic algorithm combined FCM, the multi-space cooperative clustering FCM algorithm, Mask R-CNN, DeepLabv3+, and the FCM-based feature fusion algorithm. As can be seen from Figure 16, the algorithm proposed in this paper achieves good image segmentation performance in complex scenarios, such as the presence of shadows, severe concrete spalling, and fine cracks.

3.5. Validation of Algorithm Reliability for Actual Bridge Detection Through Practical Experiments

To conduct a thorough evaluation of the crack detection capabilities of our proposed FCM-based clustering algorithm with multi-feature fusion within actual bridge environments, systematic in situ bridge tests were meticulously designed and implemented. Data acquisition encompassed a diverse range of bridge types, varying environmental conditions, and different structural components, thereby ensuring a comprehensive assessment of the algorithm’s applicability and robustness.

3.5.1. Experimental Design Details

(1) Test Bridge Descriptions

To meet the test requirements, we selected three representative bridges for inspection. For the bridge defects observed during inspection, we compare the algorithm proposed in this paper with deep learning algorithms and traditional thresholding algorithms. The specific bridge information is as follows:

Beam Bridge No. 1: Situated along a key urban thoroughfare, it is a 15-year-old reinforced concrete bridge with a main span of 30 m. It shows local concrete spalling and cracks.

Arch Bridge No. 2: It is a concrete-decked bridge with a 50-m span located in a suburban area. It has water stains and shadow obstructions.

Cable-Stayed Bridge No. 3: a modern bridge with complex surface patterns and uneven deck lighting.

(2) Data Acquisition

A DJI Matrice 300 RTK unmanned aerial vehicle (UAV), manufactured by DJI (Shenzhen, China) and equipped with a high-resolution 20 MP optical camera and an inertial measurement unit (IMU), was used for imaging. The UAV flew along predetermined routes to capture images of decks, piers, abutments, and beam soffits. To test the algorithm’s adaptability to varying illumination, images were acquired in the morning, at noon, and in the evening. In total, 120 raw images with a resolution of 5472 × 3078 pixels were collected, covering linear, mesh-like, and dendritic cracks.

(3) Data Annotation and Preprocessing

Two professional bridge inspectors manually labeled the images to guarantee objectivity and precision. The preprocessing steps included Gaussian filtering for noise reduction, contrast-limited adaptive histogram equalization (CLAHE) for contrast enhancement, and perspective correction to mitigate environmental interference and ensure image quality.

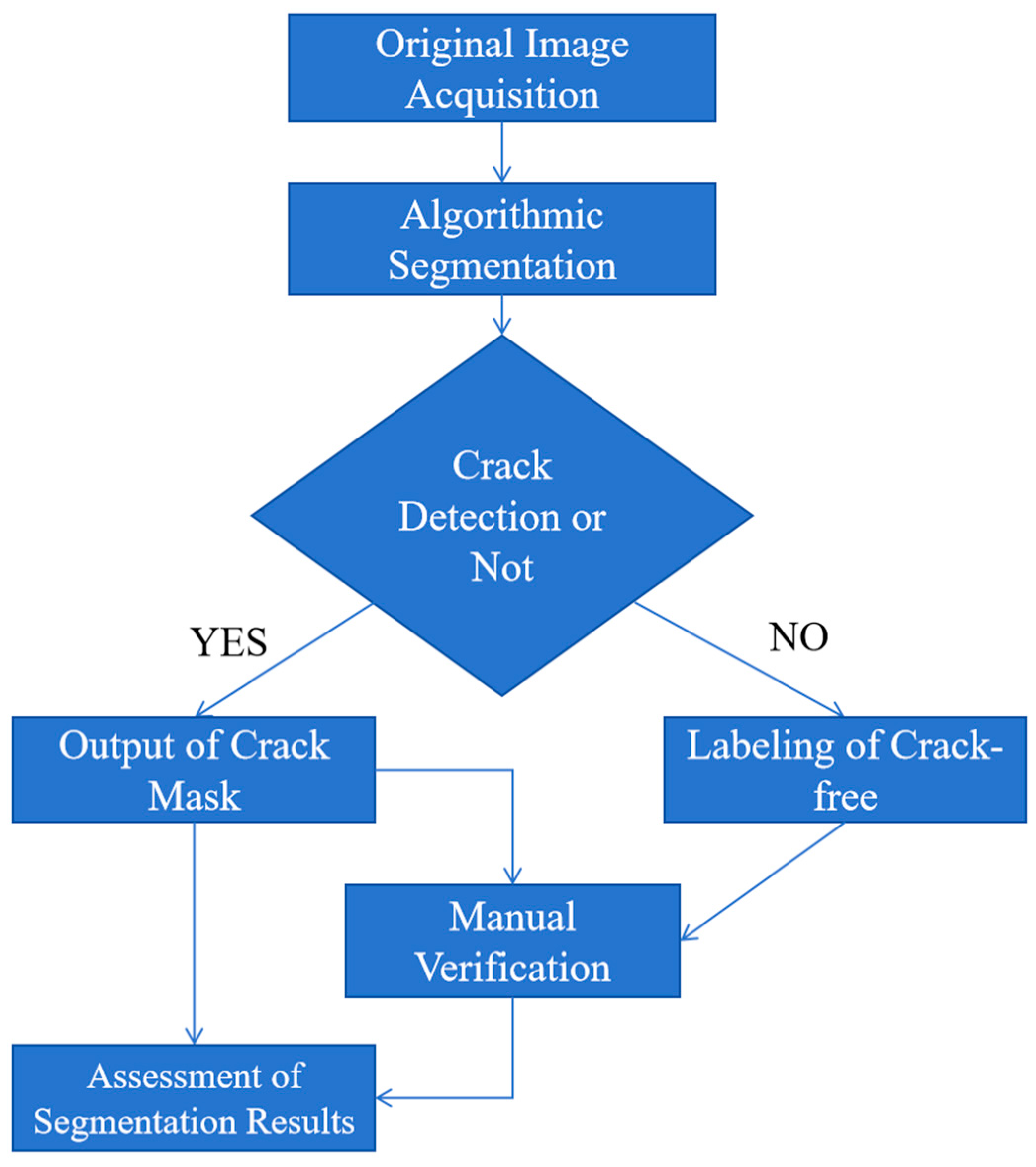

3.5.2. Algorithm Testing Procedure

The specific experimental procedure is shown in Figure 17. Using manual annotation as the benchmark, the procedure evaluates the crack detection capability of the proposed algorithm across different environments, categorizes the image segmentation outcomes based on accuracy, recall, and Dice coefficient, and summarizes the algorithm’s limitations and advantages.

3.5.3. Experimental Data Analysis

(1) Statistical Analysis of Experimental Results

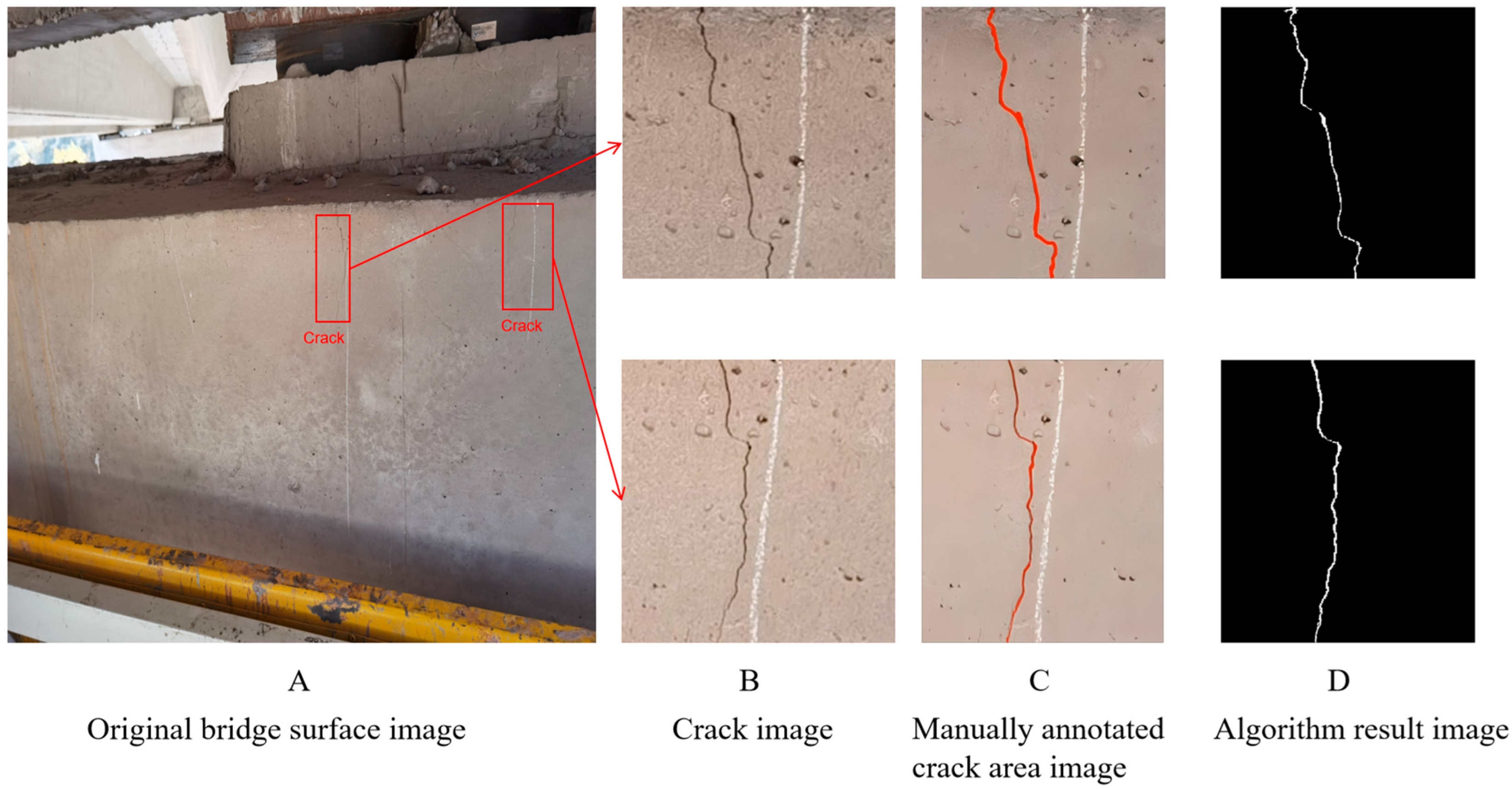

The experimental results, as presented in Table 5, indicate that the proposed FCM-clustering-based crack detection algorithm with multi-feature fusion performs strongly in both precision and recall metrics. The algorithm achieves a mean accuracy of 87.5% and maintains an average processing time of approximately 0.86 s per image.

Figure 18 offers a visual representation of the algorithm’s performance on real-bridge images, providing an intuitive validation of its effectiveness in complex practical scenarios. As depicted in Figure 18, the left-side image is the original bridge surface image acquired by a drone, reflecting the complexity of actual field conditions. The middle image presents the crack areas manually annotated by experts, which serve as the benchmark for evaluating the algorithm’s performance. The right-side image illustrates the crack detection results achieved using the proposed FCM clustering-based algorithm with multi-feature fusion. The visual comparison among these images confirms the algorithm’s ability to accurately identify cracks in complex environments, thus demonstrating its effectiveness and robustness in real bridge detection situations.

(2) Comparison with Other Algorithms

To verify the effectiveness of the proposed algorithm, the collected dataset was tested using the following three methods: the traditional thresholding algorithm, the deep learning algorithm, and the algorithm presented in this paper. The focus was on analyzing the segmentation performance of each algorithm on images collected from real bridge sites. The specific performance results are shown in Table 6.

(3) Multi-Condition Robustness Analysis

As shown in Figure 19, we compared the image segmentation performance of different algorithms under complex conditions (dense cracks, intersecting cracks). The limitations of each algorithm under complex conditions are as follows:

1. Traditional thresholding algorithm:

Highly sensitive: sensitive to changes in image brightness and contrast, and prone to over-segmentation or under-segmentation in dense crack areas [30].

Poor adaptability: struggles to adapt to complex conditions, with a high probability of misjudgment or incorrect connection at intersecting cracks.

Low robustness: performance drops significantly in complex conditions.

2. Deep learning U-Net++ algorithm:

Data dependent: requires high diversity and representativeness of the training dataset; insufficient data in complex conditions can lead to overfitting or poor generalization [31].

High computational resource demand: training and inference require substantial computational resources and time, limiting practical applications [32].

3. Proposed FCM with feature fusion algorithm:

Limited feature fusion: despite performance improvements from feature fusion, its adaptability to extremely complex conditions (e.g., extremely dense cracks with multi-directional intersections) is still restricted.

Imperfect detail processing: there is room for improvement in handling details at intersecting crack junctions, with a tendency for misjudgment.