An Overview of Autonomous Parking Systems: Strategies, Challenges, and Future Directions

Abstract

1. Introduction

1.1. Context and Motivation

1.2. Recent Drivers of Progress

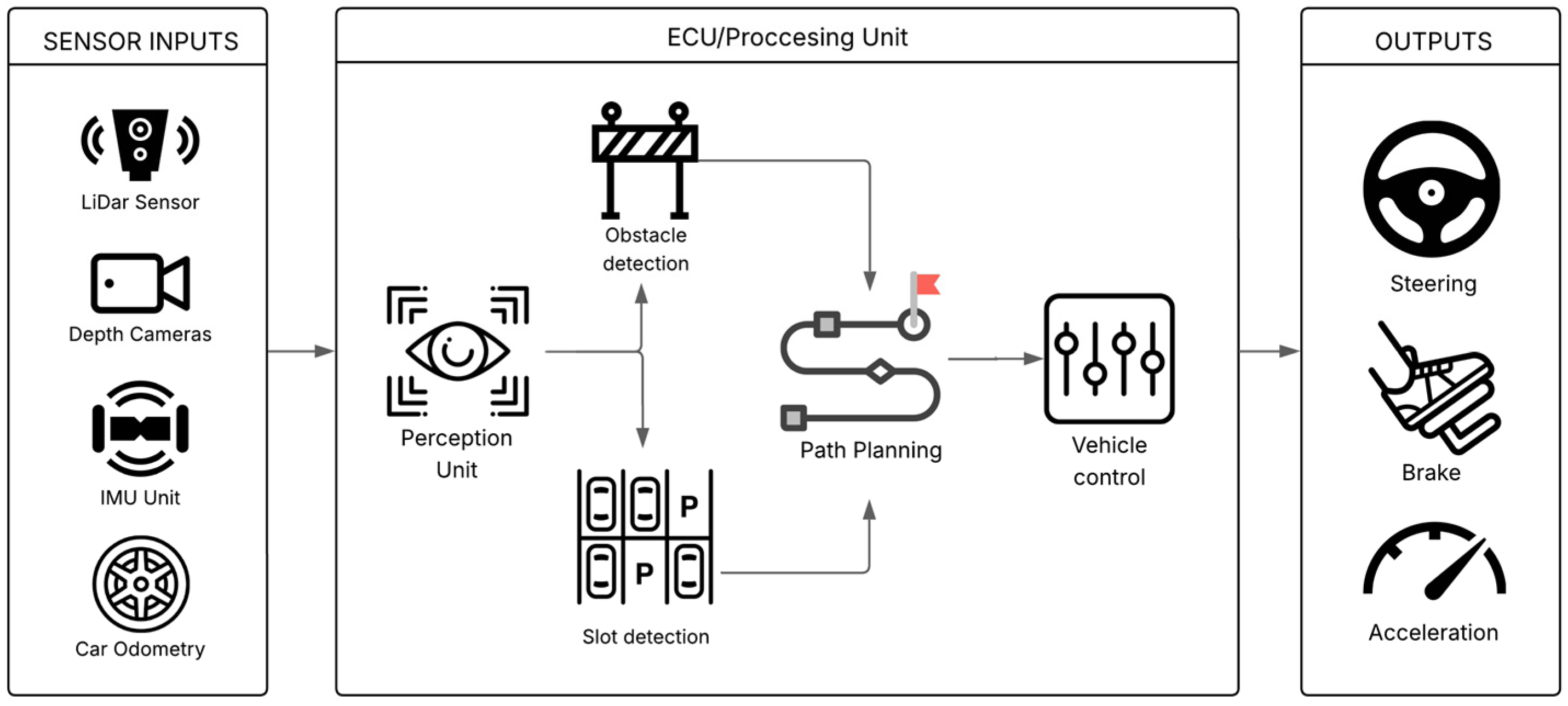

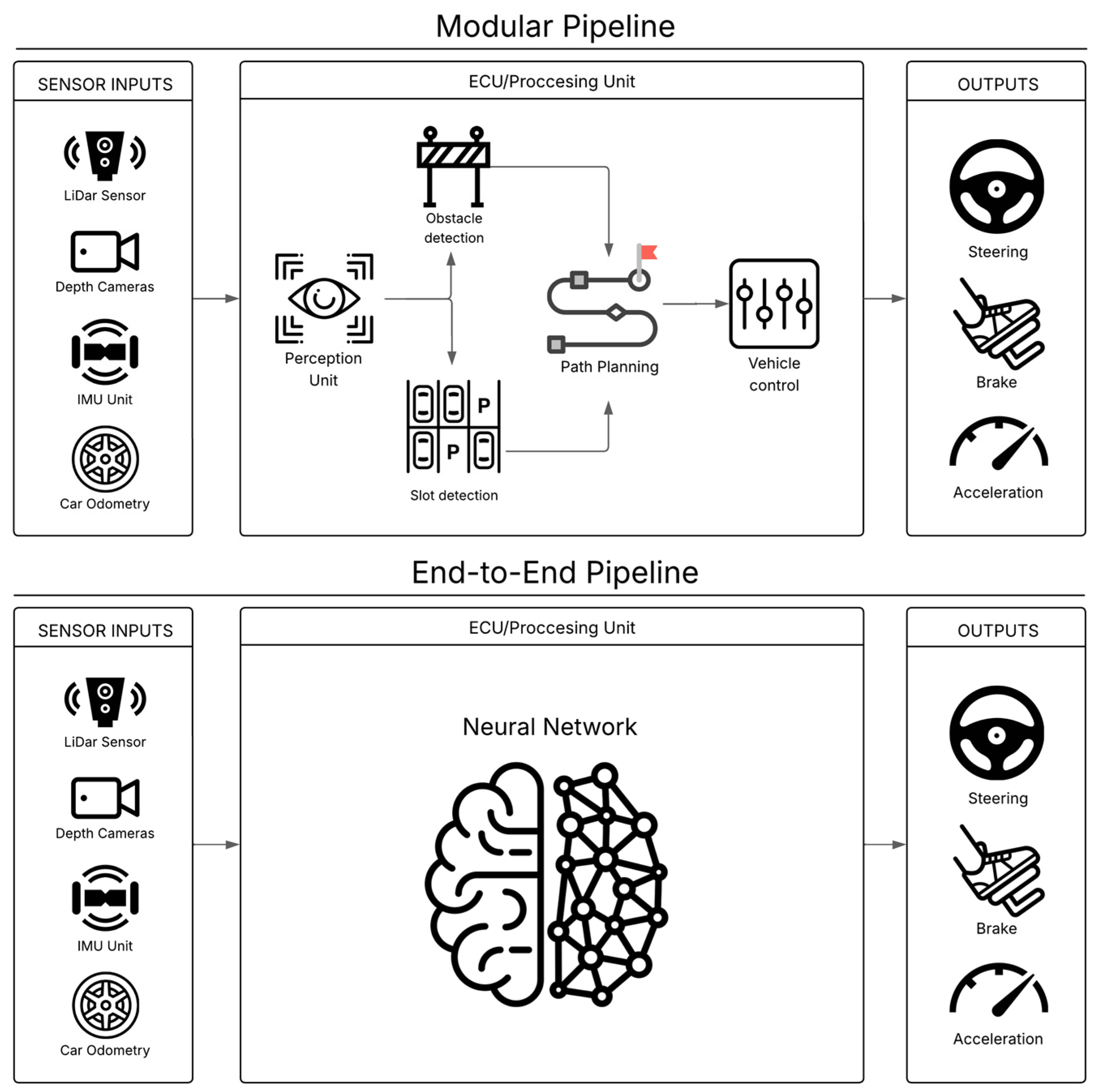

2. Perception Methods for Parking Environments

2.1. Sensor Technologies and Fusion

2.1.1. Critical Analysis of Sensor Technologies

2.1.2. Critical Analysis of Sensor Fusion Approaches

2.2. Deep Learning for Parking Space and Obstacle Detection

2.3. Achieving Robustness in Diverse Conditions

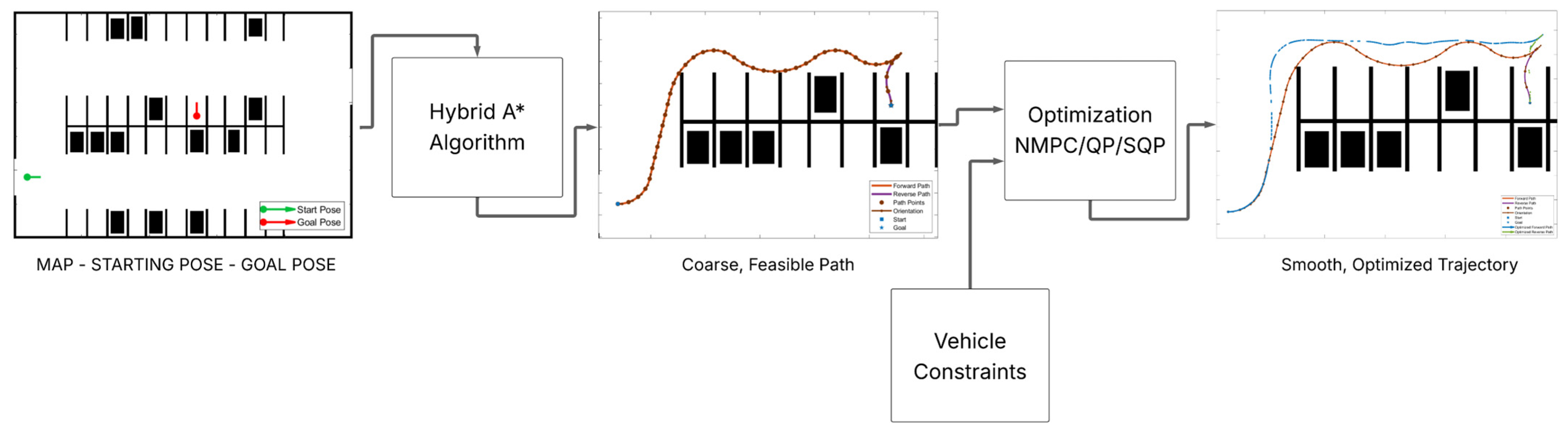

3. Path Planning Algorithms for APS

3.1. Optimization and Control-Based Planning

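- Minimize the cost functional

  $$J = \Phi\big(x(t_f), t_f\big) + \int_{t_0}^{t_f} L\big(x(t), u(t), t\big)\,dt$$

  subject to the system dynamics

  $$\dot{x}(t) = f\big(x(t), u(t), t\big),$$

  the initial conditions

  $$x(t_0) = x_0,$$

  and the path and terminal constraints

  $$g\big(x(t), u(t), t\big) \le 0, \qquad h\big(x(t_f), t_f\big) = 0,$$

  where $J$ is the performance index, $\Phi$ is the terminal cost, $L$ is the instantaneous cost (Lagrangian), $x$ is the state vector, $u$ is the control input vector, $f$ represents the vehicle dynamics, $g$ are path constraints (e.g., actuator limits, obstacle avoidance), and $h$ are terminal constraints (e.g., reaching the desired parking pose).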
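To make the structure of this optimal control problem concrete, the following minimal sketch evaluates a discretized version of the cost for a candidate control sequence. It is an illustration under stated assumptions, not a method from the cited works: the kinematic bicycle model, the forward-Euler integration, the control-effort running cost, the squared-pose-error terminal cost, and all names and default values (`bicycle_step`, `evaluate_ocp`, `wheelbase`, `max_steer`, `max_speed`) are hypothetical choices. The terminal constraint is handled only as a soft penalty through the terminal cost, and the heading error is not wrapped to a principal interval.

```python
import math


def bicycle_step(state, control, dt, wheelbase=2.7):
    """One forward-Euler step of a kinematic bicycle model (assumed dynamics)."""
    x, y, theta = state
    v, delta = control  # speed [m/s], steering angle [rad]
    return (
        x + dt * v * math.cos(theta),
        y + dt * v * math.sin(theta),
        theta + dt * v * math.tan(delta) / wheelbase,
    )


def evaluate_ocp(x0, controls, goal, dt=0.1, max_steer=0.6, max_speed=2.0):
    """Return (cost, feasible) for a candidate control sequence.

    cost     = terminal cost + rectangle-rule integral of the running cost
    feasible = False if any actuator limit (a path constraint) is violated
    """
    state = x0
    running = 0.0
    feasible = True
    for v, delta in controls:
        # Path constraints: actuator limits on speed and steering angle.
        if abs(v) > max_speed or abs(delta) > max_steer:
            feasible = False
        # Running cost (assumed): control effort.
        running += dt * (v * v + delta * delta)
        # System dynamics: propagate the state one step.
        state = bicycle_step(state, (v, delta), dt)
    # Terminal cost (assumed): squared error to the desired parking pose.
    terminal = sum((s - g) ** 2 for s, g in zip(state, goal))
    return terminal + running, feasible
```

An optimizer would search over `controls` to minimize the returned cost while keeping the sequence feasible; obstacle-avoidance constraints would be added as further checks inside the loop.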

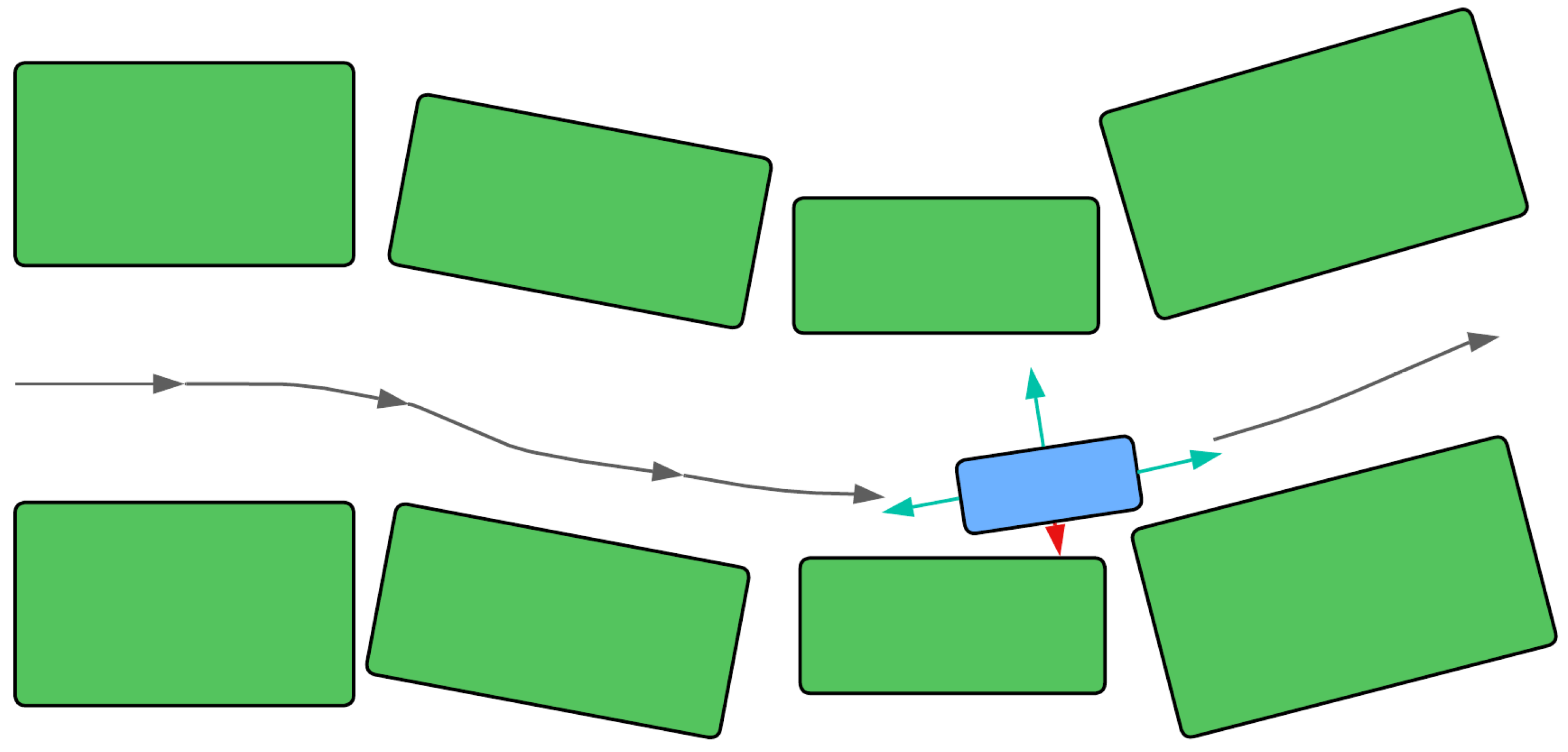

3.2. Search and Sampling-Based Planning

3.3. Addressing Constraints and Complexities

3.4. Path Smoothing and Refinement

4. Vehicle Control Strategies

4.1. Advanced Trajectory Tracking Controllers

- Model Predictive Control (MPC) remains a prominent technique due to its inherent ability to handle constraints (on states and inputs) explicitly and optimize control actions over a future prediction horizon [13,75]. It can anticipate future path requirements and adjust current inputs accordingly, leading to smoother control [4]. A common discrete-time cost function for MPC in trajectory tracking is:

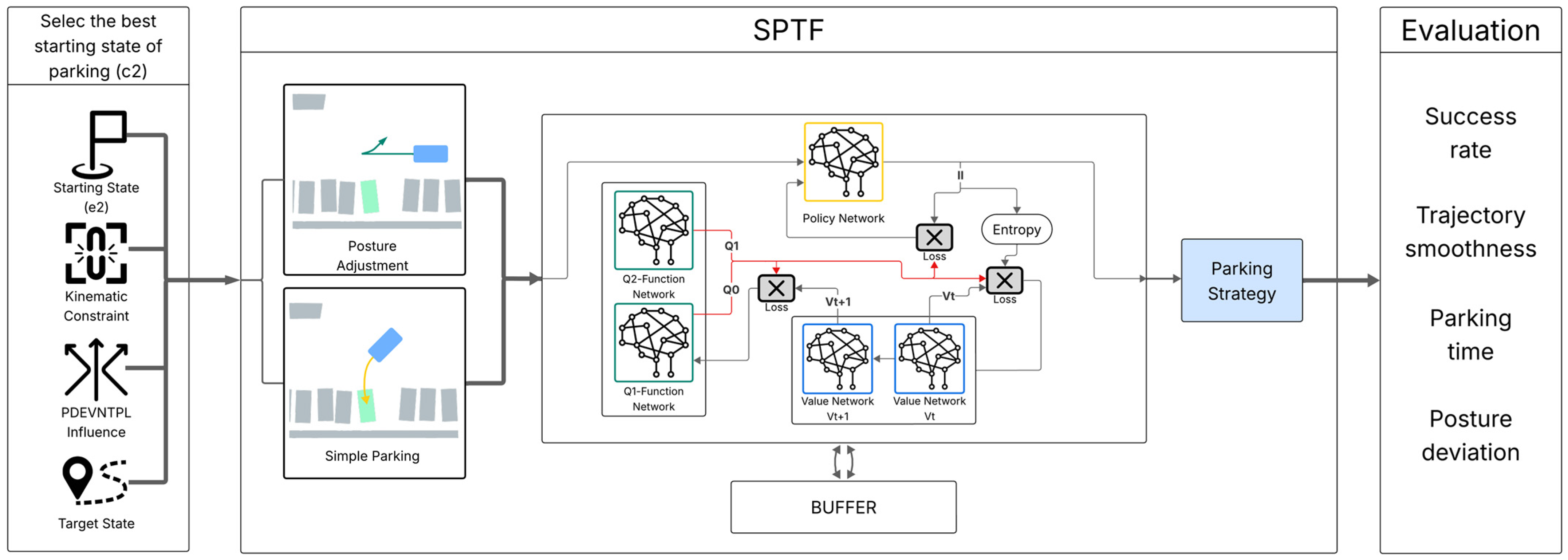
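One widely used quadratic form of a discrete-time MPC tracking cost, given here as an assumption rather than as the exact expression used in the cited works, is

$$J = \sum_{k=0}^{N-1}\Big[(x_k - x_k^{\mathrm{ref}})^\top Q\,(x_k - x_k^{\mathrm{ref}}) + u_k^\top R\,u_k\Big] + (x_N - x_N^{\mathrm{ref}})^\top P\,(x_N - x_N^{\mathrm{ref}}),$$

where $N$ is the prediction horizon and $Q$, $R$, $P$ are weighting matrices. The sketch below evaluates this cost for the special case of diagonal weights; the function name `mpc_tracking_cost` and its argument layout are hypothetical.

```python
def mpc_tracking_cost(states, refs, controls, q, r, p):
    """Quadratic tracking cost with diagonal weights (illustrative sketch).

    states, refs: N+1 state vectors (predicted and reference trajectories)
    controls:     N control vectors
    q, r, p:      diagonal entries of the stage, input, and terminal weights
    """
    def weighted_sq(vec, weights):
        # Computes vec^T diag(weights) vec.
        return sum(w * v * v for w, v in zip(weights, vec))

    n = len(controls)
    if len(states) != n + 1 or len(refs) != n + 1:
        raise ValueError("need N+1 states and references for N controls")

    cost = 0.0
    for k in range(n):
        err = [s - t for s, t in zip(states[k], refs[k])]
        cost += weighted_sq(err, q) + weighted_sq(controls[k], r)
    # Terminal term on the final predicted state.
    err_n = [s - t for s, t in zip(states[n], refs[n])]
    cost += weighted_sq(err_n, p)
    return cost
```

In a full controller this cost would be minimized over `controls` at every sampling instant, subject to the vehicle model and to state and input constraints, with only the first control applied before re-solving.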

- Reinforcement Learning (RL) techniques, in which an agent learns a control policy through trial-and-error interaction with an environment (or, more commonly, a simulation) so as to maximize a cumulative reward signal, are gaining traction [84,85]. Algorithms like Soft Actor-Critic (SAC), whose architecture is detailed in Figure 7, have been used to train parking strategies that explicitly balance multiple objectives, including safety, comfort (e.g., minimizing jerk), efficiency (e.g., minimizing time), and accuracy (e.g., final pose error) [15,82]. SAC maximizes an entropy-regularized objective that augments the expected return with the entropy of the policy.

- Neural Network (NN)-Based Controllers. Beyond standard RL algorithms, researchers are developing bespoke NN-based controllers. One example is a pseudo-neural network (PNN) steering controller with a physics-driven structure that combines feedforward components and feedback terms and is trained via supervised learning on data from high-fidelity simulations [12].
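For the SAC bullet above, the maximum-entropy objective as formulated in the SAC paper by Haarnoja et al. (listed in the References) is

$$J(\pi) = \sum_{t} \mathbb{E}_{(s_t, a_t) \sim \rho_\pi}\Big[r(s_t, a_t) + \alpha\,\mathcal{H}\big(\pi(\cdot \mid s_t)\big)\Big],$$

where $\alpha$ is the temperature that trades reward against policy entropy $\mathcal{H}$. The sketch below computes a single-trajectory Monte Carlo estimate of this quantity for a policy with discrete actions. The function names, the discrete-action simplification, the default temperature, and the optional discount factor `gamma` are assumptions made for illustration; practical parking controllers typically use continuous actions and learned critics.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def entropy_regularized_return(rewards, policies, alpha=0.2, gamma=1.0):
    """Sum of (reward + alpha * policy entropy) along one sampled trajectory.

    rewards:  reward received at each time step
    policies: action probabilities of the policy at each visited state
    alpha:    temperature weighting the entropy bonus (assumed value)
    gamma:    discount factor (1.0 reproduces the undiscounted sum)
    """
    total = 0.0
    for t, (reward, probs) in enumerate(zip(rewards, policies)):
        total += gamma ** t * (reward + alpha * entropy(probs))
    return total
```

A larger `alpha` rewards more exploratory (higher-entropy) policies, while `alpha = 0` recovers the ordinary cumulative reward.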

4.2. Ensuring Precision, Stability, and Handling Constraints

4.3. Critical Evaluation of Vehicle Control Strategies in Autonomous Parking Systems (APS)

Introduction to the Comparative Table

5. Comparative Analysis of Recent APS Research and System Developments

5.1. Introduction to Comparative Framework

5.2. Comparative Table of Pivotal APS Studies and Systems

5.3. Analysis of Overarching Trends and Innovations

5.4. Identified Gaps and Future Research Pointers from Comparative Analysis

6. System Aspects and Emerging Challenges

6.1. System Integration, Simulation, and Validation

6.2. Security Vulnerabilities in APS

6.3. User Interaction, Acceptance, and Trust

6.4. Operational Challenges

7. Future Directions

- End-to-End Learning with Verifiability and Safety Guarantees. A key emerging research direction is the development of end-to-end learning approaches that map sensor inputs directly to control actions. While still a nascent area for production systems, this paradigm holds significant potential for simplifying the traditional, modular APS pipeline and discovering novel, holistic solutions [7,72]. However, this approach intensifies challenges related to data dependency, interpretability, and safety verification. Future work must integrate mechanisms for robust interpretability, comprehensive uncertainty quantification, and, where possible, formal verification or runtime monitoring with safety fallbacks into these architectures.

- Robust Multi-Modal Perception and Advanced Fusion. A crucial research need is sensor fusion that degrades gracefully when one or more modalities deteriorate or fail completely, resolves conflicting information reliably using uncertainty-aware methods, and adapts dynamically to extreme environmental conditions [6,7,8,9,10,17,62,64,65]. This includes developing better domain adaptation methods and building self-assessment capabilities into perception systems so that they flag low-confidence situations.

- Safety Verification and Validation (V&V) for AI-based Systems. Developing more rigorous, scalable, and widely accepted V&V methodologies specifically tailored for AI-driven systems is paramount. This includes advancing formal methods applicable to neural networks, investing in large-scale realistic simulation platforms with a strong focus on automated edge case generation, and establishing standardized safety metrics and benchmarks aligned with automotive safety standards like ISO 26262 and SOTIF (ISO 21448) [4,119,122,123,124].

- Human-Centric APS Design and Trust Calibration. Deeper investigation into XAI techniques that provide causal, contrastive, and actionable explanations is needed [117,118]. Research should focus on adaptive HMIs that manage user expectations and reduce cognitive load. Longitudinal studies on trust dynamics—how trust is built, lost, and potentially repaired over time—are needed to ensure users neither dangerously over-trust an imperfect system nor under-utilize its capabilities [5].

- Cooperative and Multi-Agent Systems with Scalable Coordination. As vehicle connectivity (Vehicle-to-Everything (V2X)) increases, research into decentralized, robust, and scalable algorithms for coordinating multiple autonomous vehicles in shared parking environments (e.g., efficient allocation of spots, collision-free maneuvering, negotiation for shared resources) will become increasingly important [79,120,125].

- Cooperative operation covers not only vehicle-to-vehicle (V2V) coordination but also vehicle-to-infrastructure (V2I) cooperation, which presents significant opportunities. For instance, future work could explore smart parking lots that communicate directly with vehicles to guide them to available spots, or cloud services that take over part of the path-planning computation and so reduce the load on the vehicle’s onboard systems.

- Proactive Cybersecurity for Connected APS Architectures. Dedicated research is urgently needed to identify specific vulnerabilities in distributed APS architectures—spanning sensors, ECUs, V2X links, and backend cloud infrastructure—and to develop tailored, adaptive, and resilient intrusion detection and prevention mechanisms [68,120,125].

- Addressing the Data Bottleneck Systematically and Collaboratively. Concerted efforts towards creating large-scale, high-quality, diverse, and well-annotated public datasets are essential [7,65,71,72]. This may involve exploring novel data collection strategies, advancing synthetic data generation techniques, and investigating federated learning approaches.
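As a small illustration of the uncertainty-aware fusion and self-assessment ideas raised in the perception item of this list, the following sketch fuses redundant range measurements by inverse-variance weighting, tolerates failed sensors, and raises a low-confidence flag when sensors disagree or the fused uncertainty is too large. It is a simplified example under stated assumptions and not a method from the cited works: independent Gaussian measurement errors are assumed, and the function name `fuse_ranges`, the gating rule, and the thresholds `max_fused_std` and `gate_sigma` are hypothetical.

```python
import math


def fuse_ranges(measurements, max_fused_std=0.3, gate_sigma=3.0):
    """Fuse (value, std) range measurements; None marks a failed sensor.

    Returns (fused_value, fused_std, low_confidence).
    """
    valid = [m for m in measurements if m is not None]
    if not valid:
        # Every modality failed: nothing to fuse, always flag.
        return None, math.inf, True

    # Inverse-variance weights: more certain sensors count more.
    weights = [1.0 / (std * std) for _, std in valid]
    total = sum(weights)
    fused = sum(w * z for w, (z, _) in zip(weights, valid)) / total
    fused_std = math.sqrt(1.0 / total)

    # Self-assessment: flag conflicting sensors or a weak fused estimate.
    conflict = any(abs(z - fused) > gate_sigma * std for z, std in valid)
    low_confidence = conflict or fused_std > max_fused_std
    return fused, fused_std, low_confidence
```

A downstream planner could treat the flag as a trigger for a conservative fallback, such as slowing down or stopping the maneuver.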

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AAPS | Advanced Automated Parking System |
| ADAS | Advanced Driver Assistance Systems |
| AI | Artificial Intelligence |
| ANGS | Number of Gear Shifts |
| APA | Autonomous Parking Assist |
| APF | Artificial Potential Field |
| APS | Autonomous Parking Systems |
| ATE | Absolute Trajectory Error |
| AV | Autonomous Vehicle |
| AVP | Autonomous Valet Parking |
| BEV | Bird’s Eye View |
| Bi-RRT* | Bidirectional Rapidly exploring Random Tree* |
| CAN | Controller Area Network |
| CAV | Connected Automated Vehicles |
| CFAR | Constant False Alarm Rate |
| CILQR | Constrained Iterative Linear Quadratic Regulator |
| CMDP | Constraint-driven Markov Decision Process |
| CMOS | Complementary Metal-Oxide Semiconductor |
| CNN | Convolutional Neural Networks |
| CP | Control Points |
| CV | Computer Vision |
| DAVIS | Dynamic and Active Pixel Vision Sensor |
| DL | Deep Learning |
| DRL | Deep Reinforcement Learning |
| DVS | Dynamic Vision Sensor |
| ECU | Electronic Control Unit |
| EKF | Extended Kalman Filter |
| EMPC | Economic Model Predictive Control |
| E2E | End-to-End |
| FAST-LIO | Fast LiDAR-Inertial Odometry |
| FoV | Field-of-View |
| FPGA | Field-Programmable Gate Array |
| FPS | Frames Per Second |
| GA | Genetic Algorithm |
| GF-LIO | Global Factor-based LiDAR-Inertial Odometry |
| GP | Gaussian Process |
| GPS | Global Positioning System |
| GPU | Graphics Processing Unit |
| HDR | High Dynamic Range |
| HER | Hindsight Experience Replay |
| HMI | Human–Machine Interface |
| HMRP | Human-Manipulated Risk Perception |
| ICBS | Improved Conflict-Based Search |
| IDS | Intrusion Detection Systems |
| IMU | Inertial Measurement Unit |
| IR | Infrared |
| I-STC | Improved Safe Travel Corridor |
| LIO-SAM | LiDAR-Inertial Odometry via Smoothing and Mapping |
| LKF | Linear Kalman Filter |
| LPV | Linear Parameter Varying |
| LSS | Lift Scene Splatting |
| LWIR | Long-Wave Infrared |
| mAP | mean Average Precision |
| MBSE | Model-Based Systems Engineering |
| MEMS | Micro-Electro-Mechanical Systems |
| ML | Machine Learning |
| MORL | Multi-Objective Reinforcement Learning |
| MPC | Model Predictive Control |
| MPS | Mobis Parking System |
| MSCKF | Multi-State Constraint Kalman Filter |
| MTBF | Mean Time Between Failures |
| NIR | Near-Infrared |
| NMPC | Nonlinear Model Predictive Control |
| NN | Neural Network |
| OCP | Optimal Control Problem |
| ODD | Operational Design Domain |
| OGM | Occupancy Grid Map |
| OPA | Optical Phased Array |
| PDM | Probabilistic Diffusion Model |
| PID | Proportional Integral Derivative |
| PINS | Pontryagin’s Indirect Method Solver |
| PL | Path Length |
| PNN | Pseudo-Neural Network |
| PSR | Parking Success Rate |
| QP | Quadratic Programming |
| ReLU | Rectified Linear Unit |
| RGB | Red, Green, Blue |
| RL | Reinforcement Learning |
| RPROP | Resilient Propagation |
| RS | Reeds-Shepp |
| RRT | Rapidly exploring Random Tree |
| RRT* | Rapidly exploring Random Tree Star |
| RRT-Connect | Rapidly exploring Random Tree Connect |
| SAC | Soft Actor-Critic |
| SLAM | Simultaneous Localization and Mapping |
| SMC | Satisfiability Modulo Convex |
| SNR | Signal-to-Noise Ratio |
| SoC | System-on-Chip |
| SOTA | State-Of-The-Art |
| SOTIF | Safety Of The Intended Functionality |
| SQP | Sequential Quadratic Programming |
| SSL | Solid-State LiDAR |
| SWIR | Short-Wave Infrared |
| T-ITS | IEEE Transactions on Intelligent Transportation Systems |
| T-IV | IEEE Transactions on Intelligent Vehicles |
| UGV | Unmanned Ground Vehicle |
| UKF | Unscented Kalman Filter |
| UX | User Experience |
| V&V | Verification and Validation |
| V2X | Vehicle-to-Everything |
| V2I | Vehicle-to-Infrastructure |
| VIO | Visual-Inertial Odometry |
| XAI | Explainable AI |
| YOLO | You Only Look Once |

References

- Wang, W.; Song, Y.; Zhang, J.; Deng, H. Automatic parking of vehicles: A review of literatures. Int. J. Automot. Technol. 2014, 15, 967–978.

- Hossain, M.; Rahim, M.; Rahman, M.; Ramasamy, D. Artificial Intelligence Revolutionising the Automotive Sector: A Comprehensive Review of Current Insights, Challenges, and Future Scope. Comput. Mater. Contin. 2025, 82, 3643–3692.

- Yu, B.; Lin, L.; Chen, J. (Eds.) Recent Advance in Intelligent Vehicle; MDPI-Multidisciplinary Digital Publishing Institute: Basel, Switzerland, 2024; ISBN 978-3-7258-1469-5.

- Samak, T.V.; Samak, C.V.; Brault, J.; Harber, C.; McCane, K.; Smereka, J.; Brudnak, M.; Gorsich, D.; Krovi, V. A Systematic Digital Engineering Approach to Verification & Validation of Autonomous Ground Vehicles in Off-Road Environments. arXiv 2025.

- Deng, M.; Guo, Y.; Guo, Y.; Wang, C. The Role of Technical Safety Riskiness and Behavioral Interventions in the Public Acceptance of Autonomous Vehicles in China. J. Transp. Eng. Part A Syst. 2023, 149, 04022122.

- Feng, Z. Application and Development of Radar Sensors in Autonomous Driving Technology. Appl. Comput. Eng. 2025, 140, 48–52.

- Gao, K.; Zhou, L.; Liu, M.; Knoll, A. E2E Parking Dataset: An Open Benchmark for End-to-End Autonomous Parking. arXiv 2025.

- Chen, J.; Li, F.; Liu, X.; Yuan, Y. Robust Parking Space Recognition Approach Based on Tightly Coupled Polarized Lidar and Pre-Integration IMU. Appl. Sci. 2024, 14, 9181.

- Wang, X.; Miao, H.; Liang, J.; Li, K.; Tan, J.; Luo, R.; Jiang, Y. Multi-Dimensional Research and Progress in Parking Space Detection Techniques. Electronics 2025, 14, 748.

- Chinnaiah, M.C.; Vani, G.D.; Karumuri, S.R.; Srikanthan, T.; Lam, S.-K.; Narambhatla, J.; Krishna, D.H.; Dubey, S. Geometry-Based Parking Assistance Using Sensor Fusion for Robots With Hardware Schemes. IEEE Sens. J. 2024, 24, 8821–8834.

- Jiang, J.; Tang, R.; Kang, W.; Xu, Z.; Qian, C. Two-Stage Efficient Parking Space Detection Method Based on Deep Learning and Computer Vision. Appl. Sci. 2025, 15, 1004.

- Pagot, E.; Piccinini, M.; Bertolazzi, E.; Biral, F. Fast Planning and Tracking of Complex Autonomous Parking Maneuvers With Optimal Control and Pseudo-Neural Networks. IEEE Access 2023, 11, 124163–124180.

- Zhang, P.; Zhou, S.; Hu, J.; Zhao, W.; Zheng, J.; Zhang, Z.; Gao, C. Automatic parking trajectory planning in narrow spaces based on Hybrid A* and NMPC. Sci. Rep. 2025, 15, 1384.

- Li, Y.; Li, G.; Wang, X. Research on Trajectory Planning of Autonomous Vehicles in Constrained Spaces. Sensors 2024, 24, 5746.

- Tang, X.; Yang, Y.; Liu, T.; Lin, X.; Yang, K.; Li, S. Path Planning and Tracking Control for Parking via Soft Actor-Critic Under Non-Ideal Scenarios. IEEE/CAA J. Autom. Sin. 2024, 11, 181–195.

- Ahn, S.; Oh, T.; Yoo, J. Collision Avoidance Path Planning for Automated Vehicles Using Prediction Information and Artificial Potential Field. Sensors 2024, 24, 7292.

- Nahata, D.; Othman, K. Exploring the challenges and opportunities of image processing and sensor fusion in autonomous vehicles: A comprehensive review. AIMS Electron. Electr. Eng. 2023, 7, 271–321.

- Hasanujjaman, M.; Chowdhury, M.Z.; Jang, Y.M. Sensor Fusion in Autonomous Vehicle with Traffic Surveillance Camera System: Detection, Localization, and AI Networking. Sensors 2023, 23, 3335.

- Automated Parking|Develop APS Efficiently. Available online: https://www.appliedintuition.com/use-cases/automated-parking (accessed on 19 May 2025).

- Mehta, M.D. Sensor Fusion Techniques in Autonomous Systems: A Review of Methods and Applications. Int. Res. J. Eng. 2025, 12, 1902–1908.

- Beránek, F.; Diviš, V.; Gruber, I. Soiling Detection for Advanced Driver Assistance Systems. In Proceedings of the Seventeenth International Conference on Machine Vision (ICMV 2024), Edinburgh, UK, 10–13 October 2024; SPIE: Bellingham, WA, USA, 2024; Volume 13517, pp. 174–182.

- Heimberger, M.; Horgan, J.; Hughes, C.; McDonald, J.; Yogamani, S. Computer vision in automated parking systems: Design, implementation and challenges. Image Vis. Comput. 2017, 68, 88–101.

- Kumar, S.; Truong, H.; Sharma, S.; Sistu, G.; Scanlan, T.; Grua, E.; Eising, C. Minimizing Occlusion Effect on Multi-View Camera Perception in BEV with Multi-Sensor Fusion. arXiv 2025.

- The Rise of Ultrasonic Sensor Technology in Park Assist Systems Market to Grow to USD 3,277.4 Million, with a CAGR of 14.4%|Future Market Insights, Inc. Morningstar, Inc. 2025. Available online: https://www.accessnewswire.com/newsroom/en/automotive/the-rise-of-ultrasonic-sensor-technology-in-park-assist-systems-market-to-grow-to-usd-3-2-987599 (accessed on 19 May 2025).

- Automotive Applications. Available online: https://www.monolithicpower.com/en/learning/mpscholar/sensors/real-world-applications/automotive-applications (accessed on 19 May 2025).

- Lim, B.S.; Keoh, S.L.; Thing, V.L.L. Autonomous vehicle ultrasonic sensor vulnerability and impact assessment. In Proceedings of the 2018 IEEE 4th World Forum on Internet of Things (WF-IoT), Singapore, 5–8 February 2018; pp. 231–236.

- Acharya, R. Sensor blockage in autonomous vehicles: AI-driven detection and mitigation strategies. World J. Adv. Eng. Technol. Sci. 2025, 15, 321–331.

- Yeong, D.J.; Panduru, K.; Walsh, J. Exploring the Unseen: A Survey of Multi-Sensor Fusion and the Role of Explainable AI (XAI) in Autonomous Vehicles. Sensors 2025, 25, 856.

- Lopac, N.; Jurdana, I.; Brnelić, A.; Krljan, T. Application of Laser Systems for Detection and Ranging in the Modern Road Transportation and Maritime Sector. Sensors 2022, 22, 5946.

- Mak, H. Explore the Different Types of Lidar Technology: From Mechanical to Solid-State Lidar. Available online: https://globalgpssystems.com/lidar/explore-the-different-types-of-lidar-technology-from-mechanical-to-solid-state-lidar/ (accessed on 19 May 2025).

- Solid State Lidar. Neuvition|Solid-State Lidar, Lidar Sensor Suppliers, Lidar Technology, Lidar Sensor. Available online: https://www.neuvition.com/solid-state-lidar-neuvition (accessed on 19 May 2025).

- Wei, W.; Shirinzadeh, B.; Nowell, R.; Ghafarian, M.; Ammar, M.M.A.; Shen, T. Enhancing Solid State LiDAR Mapping with a 2D Spinning LiDAR in Urban Scenario SLAM on Ground Vehicles. Sensors 2021, 21, 1773.

- Royo, S.; Ballesta-Garcia, M. An Overview of Lidar Imaging Systems for Autonomous Vehicles. Appl. Sci. 2019, 9, 4093.

- Emilio, M.D.P. The Future of LiDAR Lies in ADAS. EE Times. 2023. Available online: https://www.eetimes.com/the-future-of-lidar-lies-in-adas/ (accessed on 19 May 2025).

- Han, Z.; Wang, J.; Xu, Z.; Yang, S.; He, L.; Xu, S.; Wang, J.; Li, K. 4D Millimeter-Wave Radar in Autonomous Driving: A Survey. arXiv 2024.

- Lee, J.-E. 4D Radar Reimagining Next-Gen Mobility. Geospatial World. 2023. Available online: https://geospatialworld.net/prime/business-and-industry-trends/4d-radar-reimagining-next-gen-mobility/ (accessed on 19 May 2025).

- Ding, F.; Wen, X.; Zhu, Y.; Li, Y.; Lu, C.X. RadarOcc: Robust 3D Occupancy Prediction with 4D Imaging Radar. arXiv 2024.

- Gallego, G.; Delbruck, T.; Orchard, G.; Bartolozzi, C.; Taba, B.; Censi, A.; Leutenegger, S.; Davison, A.J.; Conradt, J.; Daniilidis, K.; et al. Event-Based Vision: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 154–180.

- Huang, J.-T. Indoor Localization and Mapping with 4D mmWave Imaging Radar. Master’s Thesis, Carnegie Mellon University, Pittsburgh, PA, USA, 2024. Available online: https://www.ri.cmu.edu/publications/indoor-localization-and-mapping-with-4d-mmwave-imaging-radar/ (accessed on 19 May 2025).

- 4D Single-Chip Waveguide Radar & Multi-Chip Cascade 4D Imaging Radar Report Analysis-Electronics Headlines-EEWORLD. Available online: https://en.eeworld.com.cn/mp/ICVIS/a387462.jspx (accessed on 19 May 2025).

- Wang, H.; Guo, R.; Ma, P.; Ruan, C.; Luo, X.; Ding, W.; Zhong, T.; Xu, J.; Liu, Y.; Chen, X. Towards Mobile Sensing with Event Cameras on High-agility Resource-constrained Devices: A Survey. arXiv 2025.

- Chen, Y.; Gilitshenski, M.I.; Amini, A. Real World Application of Event-Based End to End Autonomous Driving; MIT Department of Mathematics: Cambridge, MA, USA, 2020.

- Vollmer, M.; Möllmann, K. Infrared Thermal Imaging: Fundamentals, Research and Applications, 1st ed.; Wiley: Hoboken, NJ, USA, 2017; ISBN 978-3-527-41351-5.

- Kebbati, Y.; Puig, V.; Ait-Oufroukh, N.; Vigneron, V.; Ichalal, D. Optimized adaptive MPC for lateral control of autonomous vehicles. In Proceedings of the 2021 9th International Conference on Control, Mechatronics and Automation (ICCMA), Belval, Luxembourg, 11 November 2021; IEEE: Belval, Luxembourg, 2021; pp. 95–103.

- Mirlach, J.; Wan, L.; Wiedholz, A.; Keen, H.E.; Eich, A. R-LiViT: A LiDAR-Visual-Thermal Dataset Enabling Vulnerable Road User Focused Roadside Perception. arXiv 2025.

- Ni, X.; Kuehnel, C.; Jiang, X. Thermal Detection of People with Mobility Restrictions for Barrier Reduction at Traffic Lights Controlled Intersections. arXiv 2025.

- Multispectral Drones & Cameras. Available online: https://advexure.com/collections/multispectral-drones-sensors (accessed on 19 May 2025).

- Snapshot Multispectral Cameras. Spectral Devices. Available online: https://spectraldevices.com/collections/snapshot-multispectral-cameras (accessed on 19 May 2025).

- Sun, J.; Yin, M.; Wang, Z.; Xie, T.; Bei, S. Multispectral Object Detection Based on Multilevel Feature Fusion and Dual Feature Modulation. Electronics 2024, 13, 443.

- Zhang, Y.; Shi, P.; Li, J. LiDAR-Based Place Recognition For Autonomous Driving: A Survey. ACM Comput. Surv. 2025, 57, 1–36.

- Kuang, Y.; Hu, T.; Ouyang, M.; Yang, Y.; Zhang, X. Tightly Coupled LIDAR/IMU/UWB Fusion via Resilient Factor Graph for Quadruped Robot Positioning. Remote Sens. 2024, 16, 4171.

- Ibrahim, Q.; Ali, Z. A Comprehensive Review of Autonomous Vehicle Architecture, Sensor Integration, and Communication Networks: Challenges and Performance Evaluation. Preprint 2025.

- Yang, L.; Tao, Y.; Li, M.; Zhou, J.; Jiao, K.; Li, Z. Lidar-Inertial SLAM Method Integrated with Visual QR Codes for Indoor Mobile Robots. Res. Sq. 2025.

- Huang, C.; Wang, Y.; Sun, X.; Yang, S. Research on Digital Terrain Construction Based on IMU and LiDAR Fusion Perception. Sensors 2024, 25, 15.

- Sahoo, L.K.; Varadarajan, V. Deep learning for autonomous driving systems: Technological innovations, strategic implementations, and business implications—A comprehensive review. Complex Eng. Syst. 2025, 5, 83.

- Liu, L.; Lee, J.; Shin, K.G. RT-BEV: Enhancing Real-Time BEV Perception for Autonomous Vehicles. In Proceedings of the 2024 IEEE Real-Time Systems Symposium (RTSS), York, UK, 10 December 2024; IEEE: New York, NY, USA, 2024; pp. 267–279.

- Shi, K.; He, S.; Shi, Z.; Chen, A.; Xiong, Z.; Chen, J.; Luo, J. Radar and Camera Fusion for Object Detection and Tracking: A Comprehensive Survey. arXiv 2024.

- Yang, B.; Li, J.; Zeng, T. A Review of Environmental Perception Technology Based on Multi-Sensor Information Fusion in Autonomous Driving. World Electr. Veh. J. 2025, 16, 20.

- Paz, D.; Zhang, H.; Li, Q.; Xiang, H.; Christensen, H. Probabilistic Semantic Mapping for Urban Autonomous Driving Applications. arXiv 2020.

- Wang, S.; Ahmad, N.S. A Comprehensive Review on Sensor Fusion Techniques for Localization of a Dynamic Target in GPS-Denied Environments. IEEE Access 2025, 13, 2252–2285.

- Zaim, H.Ç.; Yolaçan, E.N. Taxonomy of sensor fusion techniques for various application areas: A review. NOHU J. Eng. Sci. 2025, 14, 392–411.

- Jiang, M.; Xu, G.; Pei, H.; Feng, Z.; Ma, S.; Zhang, H.; Hong, W. 4D High-Resolution Imagery of Point Clouds for Automotive mmWave Radar. IEEE Trans. Intell. Transport. Syst. 2024, 25, 998–1012.

- Edwards, A.; Giacobbe, M.; Abate, A. On the Trade-off Between Efficiency and Precision of Neural Abstraction. arXiv 2023.

- Marti, E.; de Miguel, M.A.; Garcia, F.; Perez, J. A Review of Sensor Technologies for Perception in Automated Driving. IEEE Intell. Transp. Syst. Mag. 2019, 11, 94–108.

- Fan, Z.; Zhang, L.; Wang, X.; Shen, Y.; Deng, F. LiDAR, IMU, and camera fusion for simultaneous localization and mapping: A systematic review. Artif. Intell. Rev. 2025, 58, 174.

- Ganesh, N.; Shankar, R.; Mahdal, M.; Murugan, J.S.; Chohan, J.S.; Kalita, K. Exploring Deep Learning Methods for Computer Vision Applications across Multiple Sectors: Challenges and Future Trends. Comput. Model. Eng. Sci. 2024, 139, 103–141.

- Yan, S.; O’Connor, N.E.; Liu, M. U-Park: A User-Centric Smart Parking Recommendation System for Electric Shared Micromobility Services. IEEE Trans. Artif. Intell. 2024, 5, 5179–5193.

- Kalbhor, A.; Nair, R.S.; Phansalkar, S.; Sonkamble, R.; Sharma, A.; Mohan, H.; Wong, C.H.; Lim, W.H. PARKTag: An AI–Blockchain Integrated Solution for an Efficient, Trusted, and Scalable Parking Management System. Technologies 2024, 12, 155.

- Ajeenkya Dy Patil School of Engineering, P. International Conference on Multidisciplinary Research in Engineering & Technology (ICMRET-2025). Int. J. Multidiscip. Res. Sci. Eng. Technol. 2025, 3, 1–375.

- ISO 26262-1:2018. Available online: https://www.iso.org/standard/68383.html (accessed on 6 July 2025).

- Zhang, R.; Ma, Y.; Li, T.; Lin, Z.; Wu, Y.; Chen, J.; Zhang, L.; Hu, J.; Qiu, T.Z.; Guo, K. A Robust Real-Time Lane Detection Method with Fog-Enhanced Feature Fusion for Foggy Conditions. arXiv 2025.

- Wang, Y.; Xing, S.; Can, C.; Li, R.; Hua, H.; Tian, K.; Mo, Z.; Gao, X.; Wu, K.; Zhou, S.; et al. Generative AI for Autonomous Driving: Frontiers and Opportunities. arXiv 2025.

- Xiao, R.; Zhong, C.; Zeng, W.; Cheng, M.; Wang, C. Novel Convolutions for Semantic Segmentation of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–13.

- Han, Z.; Sun, H.; Huang, J.; Xu, J.; Tang, Y.; Liu, X. Path Planning Algorithms for Smart Parking: Review and Prospects. World Electr. Veh. J. 2024, 15, 322.

- Hsu, T.-H.; Liu, J.-F.; Yu, P.-N.; Lee, W.-S.; Hsu, J.-S. Development of an automatic parking system for vehicle. In Proceedings of the 2008 IEEE Vehicle Power and Propulsion Conference, Harbin, China, 3–5 September 2008; pp. 1–6.

- Cui, G.; Yin, Y.; Xu, Q.; Song, C.; Li, G.; Li, S. Efficient Path Planning for Automated Valet Parking: Integrating Hybrid A* Search with Geometric Curves. Int. J. Automot. Technol. 2025, 26, 243–253.

- Tao, F.; Ding, Z.; Fu, Z.; Li, M.; Ji, B. Efficient path planning for autonomous vehicles based on RRT* with variable probability strategy and artificial potential field approach. Sci. Rep. 2024, 14, 24698.

- Li, J.; Huang, C.; Pan, M. Path-planning algorithms for self-driving vehicles based on improved RRT-Connect. Transp. Saf. Environ. 2023, 5, tdac061.

- Zeng, D.; Chen, H.; Yu, Y.; Hu, Y.; Deng, Z.; Leng, B.; Xiong, L.; Sun, Z. UGV Parking Planning Based on Swarm Optimization and Improved CBS in High-Density Scenarios for Innovative Urban Mobility. Drones 2023, 7, 295.

- Hu, Z.; Chen, X.; Yang, Z.; Yu, M.G.; Qin, H.; Gao, M. Path Planning with Multiple Obstacle-Avoidance Modes for Intelligent Vehicles. Automob. Eng. 2025, 47, 402.

- Jin, X.; Tao, Y.; Opinat Ikiela, N.V. Trajectory Planning Design for Parallel Parking of Autonomous Ground Vehicles with Improved Safe Travel Corridor. Symmetry 2024, 16, 1129.

- Wang, Z.; Chen, Z.; Jiang, M.; Qin, T.; Yang, M. RL-OGM-Parking: Lidar OGM-Based Hybrid Reinforcement Learning Planner for Autonomous Parking. arXiv 2025. [Google Scholar] [CrossRef]

- Aryan Rezaie, A. Development of Path Tracking Control Strategies for Autonomous Vehicles and Validation Using a High-Fidelity Driving Simulator. Master’s Thesis, Politecnico di Torino, Torino, Italia, 2025. [Google Scholar]

- Sutton, R.S.; Barto, A. Reinforcement learning: An introduction. In Adaptive Computation and Machine Learning, 2nd ed.; The MIT Press: Cambridge, MA, USA; London, UK, 2020; ISBN 978-0-262-03924-6. [Google Scholar]

- Zhang, Z.; Luo, Y.; Chen, Y.; Zhao, H.; Ma, Z.; Liu, H. Automated Parking Trajectory Generation Using Deep Reinforcement Learning. arXiv 2025. [Google Scholar] [CrossRef]

- Zhang, C.; Zhou, R.; Lei, L.; Yang, X. Research on Automatic Parking System Strategy. World Electr. Veh. J. 2021, 12, 200. [Google Scholar] [CrossRef]

- Wang, J.; Li, Q.; Ma, Q. Research on Active Avoidance Control of Intelligent Vehicles Based on Layered Control Method. World Electr. Veh. J. 2025, 16, 211. [Google Scholar] [CrossRef]

- Nuhel, A.K.; Al Amin, M.; Paul, D.; Bhatia, D.; Paul, R.; Sazid, M.M. Model Predictive Control (MPC) and Proportional Integral Derivative Control (PID) for Autonomous Lane Keeping Maneuvers: A Comparative Study of Their Efficacy and Stability. In Cognitive Computing and Cyber Physical Systems; Pareek, P., Gupta, N., Reis, M.J.C.S., Eds.; Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering; Springer Nature: Cham, Switzerland, 2024; Volume 537, pp. 107–121. ISBN 978-3-031-48890-0. [Google Scholar]

- Li, Y.; Huang, C.; Yang, D.; Liu, W.; Li, J. Learning Based MPC for Autonomous Driving Using a Low Dimensional Residual Model. arXiv 2024. [Google Scholar] [CrossRef]

- Zhao, Y. Automatic parking planning control method based on improved A* algorithm. arXiv 2024. [Google Scholar] [CrossRef]

- Yu, L.; Wang, X.; Hou, Z. Path Tracking for Driverless Vehicle Under Parallel Parking Based on Model Predictive Control; SAE International: Warrendale, PA, USA, 2021. [Google Scholar]

- Batkovic, I. Enabling Safe Autonomous Driving in Uncertain Environments: Based on a Model Predivtive Control Approach; Chalmers University of Technology: Göteborg, Sweden, 2022; ISBN 978-91-7905-623-0. [Google Scholar]

- Liang, K.; Yang, G.; Cai, M.; Vasile, C.-I. Safe Navigation in Dynamic Environments Using Data-Driven Koopman Operators and Conformal Prediction. arXiv 2025. [Google Scholar] [CrossRef]

- Ammaturo, P. Energy-Efficient Adaptive Cruise Control: An Economic MPC Framework Based on Constant Time Gap. Master’s Thesis, Politecnico di Torino: Torino TO, Italia, 2025. [Google Scholar]

- Kiran, B.R.; Sobh, I.; Talpaert, V.; Mannion, P.; Sallab, A.A.A.; Yogamani, S.; Pérez, P. Deep Reinforcement Learning for Autonomous Driving: A Survey. arXiv 2021. [Google Scholar] [CrossRef]

- Zhang, P.; Xiong, L.; Yu, Z.; Fang, P.; Yan, S.; Yao, J.; Zhou, Y. Reinforcement Learning-Based End-to-End Parking for Automatic Parking System. Sensors 2019, 19, 3996. [Google Scholar] [CrossRef]

- Kim, T.; Kang, T.; Son, S.; Ko, K.W.; Har, D. Goal-Conditioned Reinforcement Learning Approach for Autonomous Parking in Complex Environments. In Proceedings of the 2025 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Fukuoka, Japan, 18 February 2025; pp. 465–470. [Google Scholar]

- pg3328. Autonomous-Parking-Using-Reinforcement-Learning. Available online: https://github.com/pg3328/Autonomous-Parking-Using-Reinforcement-Learning (accessed on 19 May 2025).

- Gao, F.; Wang, X.; Fan, Y.; Gao, Z.; Zhao, R. Constraints Driven Safe Reinforcement Learning for Autonomous Driving Decision-Making. IEEE Access 2024, 12, 128007–128023.

- Li, Z.; Jin, G.; Yu, R.; Chen, Z.; Li, N.; Han, W.; Xiong, L.; Leng, B.; Hu, J.; Kolmanovsky, I.; et al. A Survey of Reinforcement Learning-Based Motion Planning for Autonomous Driving: Lessons Learned from a Driving Task Perspective. arXiv 2025.

- Haarnoja, T.; Zhou, A.; Abbeel, P.; Levine, S. Soft Actor-Critic: Off-Policy Maximum Entropy Deep Reinforcement Learning with a Stochastic Actor. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; Dy, J., Krause, A., Eds.; Volume 80, pp. 1861–1870. Available online: https://proceedings.mlr.press/v80/haarnoja18b.html (accessed on 19 May 2025).

- Sun, X.; Khedr, H.; Shoukry, Y. Formal Verification of Neural Network Controlled Autonomous Systems. arXiv 2018.

- Heinen, M.R.; Osorio, F.S.; Heinen, F.; Kelber, C. SEVA3D: Using Artificial Neural Networks to Autonomous Vehicle Parking Control. In Proceedings of the 2006 IEEE International Joint Conference on Neural Network Proceedings, Vancouver, BC, Canada, 16–21 July 2006; pp. 4704–4711.

- Ivanov, R.; Carpenter, T.J.; Weimer, J.; Alur, R.; Pappas, G.J.; Lee, I. Verifying the Safety of Autonomous Systems with Neural Network Controllers. ACM Trans. Embed. Comput. Syst. 2021, 20, 1–26.

- Shen, X.; Choi, Y.; Wong, A.; Borrelli, F.; Moura, S.; Woo, S. Parking of Connected Automated Vehicles: Vehicle Control, Parking Assignment, and Multi-agent Simulation. arXiv 2024.

- Yuan, Y.; Wang, S.; Su, Z. Precise and Generalized Robustness Certification for Neural Networks. arXiv 2023.

- Chen, J.; Li, Y.; Wu, X.; Liang, Y.; Jha, S. Robust Out-of-distribution Detection for Neural Networks. arXiv 2021.

- Jiang, M.; Li, Y.; Zhang, S.; Chen, S.; Wang, C.; Yang, M. HOPE: A Reinforcement Learning-Based Hybrid Policy Path Planner for Diverse Parking Scenarios. IEEE Trans. Intell. Transport. Syst. 2025, 26, 6130–6141.

- Xu, G.; Chen, L.; Zhao, X.; Liu, W.; Yu, Y.; Huang, F.; Wang, Y.; Chen, Y. Dual-Layer Path Planning Model for Autonomous Vehicles in Urban Road Networks Using an Improved Deep Q-Network Algorithm with Proportional–Integral–Derivative Control. Electronics 2025, 14, 116.

- Fulton, N.; Platzer, A. Safe AI for CPS (Invited Paper). In Proceedings of the 2018 IEEE International Test Conference (ITC), Phoenix, AZ, USA, 29 October–1 November 2018; pp. 1–7.

- Yuan, Z.; Wang, Z.; Li, X.; Li, L.; Zhang, L. Hierarchical Trajectory Planning for Narrow-Space Automated Parking with Deep Reinforcement Learning: A Federated Learning Scheme. Sensors 2023, 23, 4087.

- Lu, Y.; Ma, H.; Smart, E.; Yu, H. Enhancing Autonomous Driving Decision: A Hybrid Deep Reinforcement Learning-Kinematic-Based Autopilot Framework for Complex Motorway Scenes. IEEE Trans. Intell. Transport. Syst. 2025, 26, 3198–3209.

- Hamidaoui, M.; Talhaoui, M.Z.; Li, M.; Midoun, M.A.; Haouassi, S.; Mekkaoui, D.E.; Smaili, A.; Cherraf, A.; Benyoub, F.Z. Survey of Autonomous Vehicles’ Collision Avoidance Algorithms. Sensors 2025, 25, 395.

- Chen, C.; Geneva, P.; Peng, Y.; Lee, W.; Huang, G. Monocular Visual-Inertial Odometry with Planar Regularities. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May 2023; pp. 6224–6231.

- Cui, C.; Ma, Y.; Lu, J.; Wang, Z. REDFormer: Radar Enlightens the Darkness of Camera Perception With Transformers. IEEE Trans. Intell. Veh. 2024, 9, 1358–1368.

- Li, Q.; He, H.; Hu, M.; Wang, Y. Spatio-Temporal Joint Trajectory Planning for Autonomous Vehicles Based on Improved Constrained Iterative LQR. Sensors 2025, 25, 512.

- Ma, J.; Feng, X. Analysing the Effects of Scenario-Based Explanations on Automated Vehicle HMIs from Objective and Subjective Perspectives. Sustainability 2024, 16, 63.

- Research and Markets. Automated Parking System Market Report 2025, with Profiles of Key Players Including Westfalia Technologies, Unitronics Systems, Klaus Multiparking Systems, Robotic Parking Systems & City Lift Parking. Available online: https://www.globenewswire.com/news-release/2025/01/28/3016636/28124/en/Automated-Parking-System-Market-Report-2025-with-Profiles-of-Key-Players-including-Westfalia-Technologies-Unitronics-Systems-Klaus-Multiparking-Systems-Robotic-Parking-Systems-City.html (accessed on 19 May 2025).

- Liang, J.; Li, Y.; Yin, G.; Xu, L.; Lu, Y.; Feng, J.; Shen, T.; Cai, G. A MAS-Based Hierarchical Architecture for the Cooperation Control of Connected and Automated Vehicles. IEEE Trans. Veh. Technol. 2023, 72, 1559–1573.

- Fonzone, A.; Fountas, G.; Downey, L. Automated bus services: To whom are they appealing in their early stages? Travel Behav. Soc. 2024, 34, 100647.

- Patel, M.; Jung, R.; Khatun, M. A Systematic Literature Review on Safety of the Intended Functionality for Automated Driving Systems. arXiv 2025, arXiv:2503.02498.

- Song, Q.; Tan, K.; Runeson, P.; Persson, S. Critical scenario identification for realistic testing of autonomous driving systems. Softw. Qual. J. 2023, 31, 441–469.

- Zhao, X.; Yan, Y. A Deep Reinforcement Learning and Graph Convolution Approach to On-Street Parking Search Navigation. Sensors 2025, 25, 2389.

- Aledhari, M.; Razzak, R.; Rahouti, M.; Yazdinejad, A.; Parizi, R.M.; Qolomany, B.; Guizani, M.; Qadir, J.; Al-Fuqaha, A. Safeguarding connected autonomous vehicle communication: Protocols, intra- and inter-vehicular attacks and defenses. Comput. Secur. 2025, 151, 104352.

- Chen, Z.; Xu, H.; Zhao, J.; Liu, H. Curbside Parking Monitoring With Roadside LiDAR. Transp. Res. Rec. J. Transp. Res. Board 2023, 2677, 824–838.

| Sensor Technology | Key Reported Strengths | Inherent Weaknesses/Practical Limitations | Specific Fusion Challenges | Cost vs. Performance Considerations |

|---|---|---|---|---|

| Standard Cameras (RGB, Fisheye, Rectilinear) | - Rich semantic info (color, texture) for classification, recognition, identification [18]. - Fisheye: Wide FoV for near-vehicle sensing [19]. - Cost-effective; foundational ADAS/APS component [18]. - Note: Performance limits are a system challenge. - Advanced AI/DL support; mature algorithms [18]. - Passive sensing; avoids interference [18]. | - Illumination dependent: Degrades in low light, glare, shadows [18]. Critical for APSs in garages/night. - Susceptible to adverse weather (rain, fog, snow) [20]. - Limited direct depth (monocular); stereo adds cost/complexity [18]. - Lens occlusion/soiling: Critical reliability issue [18,21]. - Fisheye distortion: Requires correction, adds overhead [22]. | - Calibration (intrinsic/extrinsic) with 3D sensors critical [20]. - Temporal synchronization with other sensors essential [20]. - Data association: Matching features/objects across sensors. - Resolving conflicting data from different sensor types. - Handling sensor degradation (soiling, occlusion) and adapting fusion [21,23]. | - Low unit cost: Enables multi-camera 360° APS [18]. - High processing cost: Rich data needs powerful ECUs (Electronic Control Units)/SoCs [22]. - Data deluge can be a bottleneck. - Central to basic APSs (with ultrasonics); limited in diverse conditions [22]. - Insufficient alone for advanced APS safety/ODDs (Operational Design Domains); needs fusion [21,22]. |

| Ultrasonic Sensors | - Extremely low cost: Ubiquitous for basic parking aid [24]. - Reliable short-range detection (<5–10 m): Crucial for final maneuvers, low obstacles [18]. - Acts as “last-centimeter” guardian. - Independent of lighting conditions [18]. - Detects various material types (large, hard surfaces) [18]. | - Limited range (very short distances) [18]. - Poor object classification/identification; only distance [18]. - Sparse data, hard to fuse intelligently. - Narrow FoV per sensor; multiple units needed, may leave gaps [25]. - Susceptible to environment (wind, temp, heavy rain/snow) [18]. - Struggles with soft, curved, thin, small, sound-absorbing objects [18]. - Contamination/damage: Susceptible to dirt, ice; silent failures possible [26]. | - Complementary fusion only: Provides short-range safety bubble [19]. - Data association: Linking sparse pings to rich camera/LiDAR data. - Handling false positives/negatives: Robust filtering needed. - Sensor degradation detection (obstruction/damage) important [26,27]. - Self-check or cross-validation needed. | - Extremely low cost: Main driver for adoption [24]. - Essential for low-speed maneuver safety [28]. - Not standalone for advanced APS; part of larger suite [19]. |

| Solid-State LiDAR (SSL) (e.g., MEMS, OPA, Flash) | - Improved reliability/durability: No/fewer moving parts [29]. - Compact size and lower weight: Easier vehicle integration [29]. - Potential for lower cost (mass production) [30]. - “Democratization” of LiDAR is key. - High data rate/resolution: Detailed 3D mapping/detection [29]. - Fast scanning (some types); Flash LiDAR illuminates entire scene [30]. - Good performance in various lighting (active sensor) [18]. | - Limited FoV per unit: Multiple units often needed for 360°, offsetting cost benefits [31]. - Shorter range (historically/certain types), though improving [29]. - Adverse weather performance degraded (rain, snow, fog) [18]. - Thermal management challenges: Heat build-up affects performance/durability [31]. - Near-field detection issues/blind spots (sub-meter to few meters) for some types [32]. - Irregular scan patterns (some MEMS): Can complicate processing [33]. | - Calibration of multiple SSL units: Crucial and complex [20]. - Data synchronization: Multiple SSLs and other sensors. - Point cloud registration/stitching: Merging data from multiple SSLs. - High data bandwidth and processing demands [20]. - Handling sensor degradation (soiling, thermal effects). | - Current cost still a factor, especially for multiple units [29]. - Trade-off: FoV/Range vs. Cost (lower-cost SSLs may be limited). - Performance benefits for APS: High resolution/accuracy for small obstacles, mapping, localization [30]. - Mass-market viability target: Achieving performance at suitable price [34]. |

| 4D Imaging Radar | - Adverse weather robustness: Excellent in rain, fog, snow [18]. - Crucial baseline sensor for APS continuity. - Direct velocity measurement (Doppler) [18]. - Elevation information (4th D): Better 3D object detection/classification [35]. - Long detection range [36]. - Can “see through” some non-metallic obstructions [35]. - Improved angular resolution (vs. traditional radar) [36]. | - Lower resolution than LiDAR/Cameras: Difficult for detailed classification/boundaries [18]. - Point cloud sparsity (vs. LiDAR) [37]. - Noisy data/clutter/multipath: Especially in dense metallic environments (garages) [18]. - Limited material differentiation (no color/texture) [18]. - Challenges with stationary objects (differentiation) [35]. - Frequency regulation hurdles for higher resolution [35]. - Short-range blind spots (ground reflection/DC noise) [35]. | - Fusing sparse radar with dense camera/LiDAR: Sophisticated techniques needed [38]. - Calibration and Synchronization: Precise spatio-temporal alignment crucial [20]. - Resolving conflicting detections. - Computational load for raw 4D radar tensor processing (e.g., RadarOcc [37]). - Handling sensor degradation. | - Higher cost for high performance (vs. traditional radar/cameras); projected cheaper than LiDAR [35]. - Production costs a barrier [39]. - Single-chip solutions aim to reduce cost for mass-market [40]. - Value for APS: All-weather, velocity, elevation data for reliability [35]. - Trade-off: Resolution vs. Cost (higher-res is more expensive) [35]. |

| Event-Based Cameras (DVS, DAVIS) | - High temporal resolution (µs-scale): Captures fast dynamics (e.g., a pedestrian suddenly appearing) [38]. - Low latency (µs to sub-ms): Critical for reactive APSs [38]. - High Dynamic Range (HDR >120 dB): Adapts to extreme lighting (garage entry/exit) [38]. - Reduced motion blur [38]. - Potential low power consumption (data only on change) [38]. - Data sparsity: Efficiently represents dynamic scenes [41]. | - No/Limited static scene info: Major limitation for APSs (stationary obstacles/lines) [38]. - Positioned as “dynamic specialists.” - Grayscale info: Typically event polarity, not absolute intensity/color (DAVIS adds frames) [38]. - Noisy events/data interpretation: Requires specialized algorithms [38]. - Lack of inherent semantic info [41]. - High data volume (highly dynamic scenes): Can negate low-bandwidth advantage [41]. - The “data paradox.” - Maturity and cost: Emerging tech, cost may be higher, algorithms less mature [38]. | - Fusing asynchronous data with synchronous frame-based data: Significant challenge [19]. - Requires new algorithmic paradigms (change-driven). - Event representation for fusion: Converting sparse events can lose info/add cost. - Calibration: Precise spatio-temporal alignment needed. - Complementary fusion for static scenes: Relies heavily on frame-based cameras/LiDAR [42]. | - Higher unit cost (currently) vs. standard CMOS cameras [38]. - Potential system-level savings (low power/data in some scenarios); depends on processing. - Niche performance benefits: Unmatched HDR/high-speed. Useful for sudden intrusions/extreme lighting in APS. - Not a standalone APS solution: Due to static scene issues; more for specialized dynamic threat detection. |

| Thermal Cameras (LWIR) | - Low/No visible light detection: Operates in complete darkness (e.g., underground garages) [18]. - “Lifesaver” for detecting pedestrians/animals when visible cameras fail. - Robustness to visual obscurants (smoke, haze, light fog) [43]. - Good for detecting animate objects (pedestrians/animals via heat) [43]. - Reduced glare issues (sunlight/headlights) [28]. - High contrast imaging (for warm objects) [43]. | - Lower resolution vs. visible cameras [44]. - Lack of color and fine texture: Grayscale (temperature-based); no detailed visual ID [43]. - “What, not who” limitation. - Cannot see through glass/water [43]. - Temperature-dependent contrast: Image quality affected by ambient/object temp difference [43]. - Difficulty with isothermal scenes/cold obstacles [43]. - Higher cost than standard cameras [43]. | - Fusing low-res thermal with high-res visible: Requires careful registration/scaling. - Different data modalities: Fusing heat-based with reflection-based (visible, LiDAR) images. - Calibration and synchronization: Precise spatio-temporal alignment essential [45]. - Complementary role: Often supplements visible cameras (low-light pedestrian detection) [45]. - Fusion logic needs to weigh inputs based on conditions. | - Higher cost component: Increases sensor suite cost [46]. - Cost–benefit often positions it for premium APS/specific ODDs. - Significant performance boost in specific APS scenarios (dark lots, underground, missed pedestrians/animals) [46]. - Niche capability vs. Cost trade-off: Depends on target ODD/safety requirements. - Deep Learning for Thermal (e.g., YOLO-Thermal [44]) aims to improve performance. |

| Multispectral Cameras (RGB + NIR, Red Edge, etc.) | - Improved object/material discrimination: Captures multiple spectral bands (visible, NIR, SWIR) for detailed material analysis [47]. - Potential “surface condition specialist” (black ice, oil slicks with SWIR). - Enhanced performance in specific conditions (e.g., NIR for light haze/fog penetration) [47]. - Simultaneous multiband imaging (snapshot cameras) for dynamic scenes [48]. | - Higher cost and complexity vs. standard RGB cameras [47]. - Data volume and processing: Multiple bands increase data/computational load [48]. - Data richness can become a “curse of dimensionality.” - Limited bands vs. hyperspectral: Less finesse in spectral analysis [47]. - Application-specific band selection: General APSs might not leverage all specialized bands. - Illumination dependent: Still reliant on external/active illumination in specific bands. | - Registration of multiple bands: Ensuring perfect alignment crucial (snapshot cameras mitigate) [48]. - Fusion with other sensor modalities: Integrating multiband with LiDAR, radar, thermal. - Feature extraction and selection: Identifying relevant spectral features for APSs (e.g., wet vs. dry pavement). - Real-time processing: Can be computationally demanding [49]. | - High cost for automotive integration: Likely prohibitive for mass APS [47]. - Niche APS benefits: E.g., road surface condition detection. - Justifying performance gain vs. Cost: Incremental gain must be significant. - Visible–Infrared focus: Current research often on visible–IR fusion for object detection [49]. - Could be a cost–performance step if key bands are identified for critical APS issues. |

| Sensor Fusion Approach | Key Reported Strengths | Inherent Weaknesses/Practical Limitations | Specific Fusion Challenges | Cost vs. Performance Considerations |

|---|---|---|---|---|

| Tightly-Coupled LiDAR-IMU Fusion (e.g., LIO-SAM, FAST-LIO, GF-LIO) | - Improved SLAM (Simultaneous Localization and Mapping)/Odometry: Higher accuracy/robustness in state estimation/mapping, especially GPS-denied (garages) [50]. - De facto standard for robust localization in critical APS ODDs. - Motion distortion correction: IMU de-skews LiDAR clouds [50]. - Enhanced state estimation (degenerate scenarios): IMU aids when LiDAR data sparse [50]. - Improved attitude estimation (roll, pitch, yaw) [51]. - Real-time capability with modern algorithms [50]. | - Computational complexity: Joint optimization is intensive [50]. - Balancing accuracy vs. real-time on embedded hardware. - Sensitivity to initialization and calibration: Small errors amplified [50]. - IMU noise and bias drift: Needs accurate online estimation [52]. - Dependence on accurate sensor models [53]. | - Precise spatio-temporal calibration (LiDAR-IMU) [20]. - Complexity of IMU preintegration [50]. - Factor graph optimization management (size/complexity) [50]. - Loop closure integration with LIO factors [50]. - Handling reflective/symmetric structures (LiDAR-specific garage challenges) [51,54]. | - Adds IMU cost (higher-grade IMUs improve performance but cost more). - Significant performance gain for APS localization/mapping in GPS-denied areas [54]. - Enables higher automation levels (prerequisite for precise, continuous localization). - Computational cost implication: May need more powerful ECUs. |

| BEV (Bird’s Eye View) Fusion (Cameras, LiDAR, Radar) | - Unified spatial representation: Common top-down view for fusing heterogeneous data [55]. - BEV as “lingua franca” for sensor modalities. - Facilitates multimodal fusion: Combines features from different perspectives/structures [55]. - Improved situational awareness: 360° view for navigation/decision-making [56]. - Potential for dense fusion: Preserves more contextual info [57]. - Directly applicable to planning: BEV maps usable by motion planners. | - Information loss in view transformation: Projecting to BEV can cause distortion, ambiguity (distant/occluded objects) [7]. - Lack of depth-aware vision-to-BEV can cause gaps. - Standard 2D BEV loses height info; drives research to 3D/pseudo-3D BEV. - Handling occlusions: Difficult, especially with camera-only systems [55]. - Computational cost: Generating/processing BEV from multiple high-res sensors can be intensive [56]. - Dependence on accurate calibration and synchronization [20]. - Fixed grid resolution: Limits accuracy vs. computational load. | - Camera-to-BEV transformation: Accurate projection, often needs depth estimation or learned transformers (e.g., LSS) [7]. - LiDAR/Radar-to-BEV representation: Efficiently projecting sparse/dense data. - Cross-modal feature alignment and fusion: Camera appearance, LiDAR geometry, radar velocity [55]. - Temporal fusion in BEV: Incorporating history to improve consistency/handle occlusions [7]. - Synchronization delays for multi-camera BEV (RT-BEV aims to mitigate) [56]. - Handling sensor degradation: Affects fused BEV quality. | - Sensor suite cost (multi-camera, LiDAR, radar). - Vision-centric BEV aims for lower cost but faces performance limits [56]. - Computational hardware: Requires powerful ECUs/SoCs with GPUs [56]. - Performance gains for APS: Holistic understanding for complex maneuvers, spot finding, clutter navigation [19]. - Scalability: Sensor number/resolution impacts performance/cost. |

| Probabilistic Fusion Frameworks (e.g., Kalman Filters, Particle Filters, Bayesian Networks, Occupancy Grids) | - Uncertainty management: Explicitly model/manage sensor noise, environmental variability, model inaccuracies [20]. - Crucial for safety-critical APS decisions. - Conflict resolution: Principled ways to fuse conflicting info by weighting data based on reliability/uncertainty [20]. - Improved SNR and fault tolerance (Raw Data Fusion) [58]. - State estimation and tracking: Kalman/Particle filters widely used [20]. - Semantic mapping: Probabilistic generation of semantic maps (road, curb) with confidence levels [59]. - Occupancy Grids: Represent free space/obstacles probabilistically for APS path planning [58]. | - Model dependence: Performance relies on accuracy of system/sensor models [20]. - Computational complexity: Some methods (Particle Filters for high-dim, full Bayesian) very expensive for real-time APS [20]. - Assumptions (e.g., Gaussian noise for LKF/EKF): May not hold in real-world APSs [60]. - Data association challenges: Associating measurements to tracks/map features in clutter. - Scalability: Maintaining real-time performance with increasing objects/map size. | - Data alignment and synchronization: Critical prerequisite [20]. - Handling heterogeneous data: Integrating diverse sensor types (point clouds, images, radar) with different noise/resolutions [20]. - Dynamic noise covariance estimation: Adaptive estimation important but challenging [28]. - Non-linearities and Non-Gaussianities: Standard KFs struggle; need EKF, UKF, Particle Filters (with own trade-offs) [61]. - Computational latency for high-bandwidth data. | - Trade-off: Robustness vs. Computational Cost (sophisticated models cost more). - Enabling safer decisions: Quantifying uncertainty allows more informed APS actions. - Development cost: Implementing/validating complex probabilistic fusion requires expertise/testing. - Use of lower-cost sensors: Raw Data Fusion may enable them by improving SNR and overcoming individual sensor failures. |
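
The conflict-resolution idea in the probabilistic fusion row (weighting each input by its reliability) can be illustrated with a scalar Kalman measurement update. The sketch below is only a minimal illustration under a Gaussian-noise assumption; the `Estimate` class, the `kalman_update` helper, and all numeric readings and variances are hypothetical and are not taken from any cited system.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    mean: float      # estimated distance to an obstacle [m]
    variance: float  # uncertainty of that estimate [m^2]


def kalman_update(prior: Estimate, z: float, r: float) -> Estimate:
    """Fuse one scalar measurement z (noise variance r) into the prior."""
    k = prior.variance / (prior.variance + r)  # Kalman gain, between 0 and 1
    mean = prior.mean + k * (z - prior.mean)   # pull the mean toward z
    variance = (1.0 - k) * prior.variance      # uncertainty always shrinks
    return Estimate(mean, variance)


# Hypothetical readings of the same obstacle distance.
prior = Estimate(mean=2.0, variance=1.0)
after_camera = kalman_update(prior, z=1.60, r=0.25)  # noisy monocular depth
fused = kalman_update(after_camera, z=1.50, r=0.01)  # precise ultrasonic ping

print(after_camera, fused)
```

Because the assumed ultrasonic variance is much smaller than the camera variance, the fused mean ends up close to the ultrasonic reading, which is the reliability-based weighting the table describes. Real APS pipelines would use multidimensional states and the EKF/UKF or particle-filter variants listed in the same row.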

| Method Category | Specific Method/Key Innovation | Dataset(s) Used | Key Performance Metrics Reported | Robustness Aspects Addressed/Target Platform | Reference(s) |

|---|---|---|---|---|---|

| Object Detection (YOLO) | Improved YOLOv5-OBB (Oriented BBox, Backbone opt., CA mechanism) | Homemade | mAP +8.4%, FPS +2.87, Size −1 M | Lighting variations; low-compute embedded | [3] |

| Object Detection (YOLO) | YOLOv5 (Fine-tuned) | PKLot, Custom | Valid. Acc: 92.9% | Real time (PARKTag system) | [68] |

| Hybrid (DL + CV) | Two-Stage: YOLOv11 (Key points) + CV (Special kernel for rotation) | ps2.0 (Public) | Acc: 98.24%, Inf. Time: 12.3 ms (Desktop), 16.8 ms (Laptop) | Speed; varied cond. (ps2.0) | [11] |

| Segmentation | Mask R-CNN | Tongji Parking-slot DS | Precision: 94.5%, Recall: 94.5% | Lighting variability; occlusions | [69] |

| Segmentation | Novel Convolutions (Directional, Large Field) for low-level features | Public Remote Sensing | Improved perf. vs. baseline | Potential for parking | [73] |

| End-to-End Learning | LSS-based Transformer/BEVFusion (Camera -> Control) | CARLA (Simulated) | Success: 85.16%, Pos Err: 0.24 m, Orient Err: 0.34 deg | End-to-end pipeline, dataset creation | [7] |

| Category | Specific Method/Combination | Key Features | Constraint Handling | Smoothing Method | Validation | Reference(s) |

|---|---|---|---|---|---|---|

| Search + Opt. | Hybrid A* + NMPC | - Hierarchical - NMPC optimizes coarse path | - Narrow spaces - Kinematics | Implicit (NMPC) | Sim, Real | [13] |

| Search + Opt. | Hybrid A* + QP Smoothing + S-Curve Speed Planning | - Adaptive search - Improved heuristic - QP smoothing - S-curve speed | - Constrained env. - Kinematics | - QP - S-Curve | Sim, Real | [14] |

| Search + Opt. | Graph Search (Hybrid A*) + Numerical Opt. + I-STC | - Hierarchical - Warm start - I-STC simplifies collision constraints | - Parallel parking - Narrow spaces | Numerical Opt. | Sim | [81] |

| Search + Opt. | Hybrid A* + GA (Genetic Algorithm) Opt. + Geometric Curves (Bézier and Clothoid) | - Hierarchical - GA local opt. - Curve smoothing | - Tight spaces - AVP | - Bézier - Clothoid | Sim | [76] |

| Search + APF | RRT* + Improved APF + Variable Probability Strategy | - Guided sampling - APF avoids local minima - Adaptive sampling | Obstacle avoidance | Enhanced APF | Sim | [77] |

| Optimal Control | Indirect OCP (Minimum Time) + pNN Controller | - Efficient OCP solver (PINS) - Smooth 3D penalty functions - Complex maneuvers | - Narrow spaces - Unstructured - Kinematics | Implicit (OCP) | Sim, Real | [12] |

| Prediction + Opt. | Prediction + APF + Bézier Curve + SQP Optimization | - Integrates prediction - APF target selection - SQP optimizes Bézier CPs | - Dynamic obstacles - Collision avoidance | Bézier Curve + SQP | Sim | [16] |

| Reinforcement Learning | Soft Actor-Critic (SAC) + Segmented Training Framework | - Optimizes safety, comfort, efficiency, accuracy - Handles neighbor deviation | - Non-ideal scenarios - Kinematics | Implicit (Learned Policy) | Sim | [15] |

| Reinforcement Learning | Hybrid RL (Rule-based RS + Learning-based Planner) | - Combines rule-based feasibility with learned adjustments | Complex environments | Implicit (Learned Policy) | Sim, Real | [82] |

| Multi-Agent | Improved Conflict-Based Search (ICBS) + Swarm Opt. (IACA-IA) + Adaptive A* | - Multi-UGV coordination - Conflict resolution - Slot allocation | - High-density - Multi-vehicle | Adaptive A* | Sim | [79] |
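
Several rows above pair a coarse planner with Bézier smoothing under kinematic constraints. As a rough illustration of how such a check might look, the sketch below evaluates a Bézier curve with De Casteljau's algorithm and compares its approximate peak curvature with the limit implied by a minimum turning radius. The control points, the 4.5 m turning radius, and all function names are hypothetical; the curvature is a sampled three-point (Menger) approximation, not the procedure of any cited work.

```python
import math


def bezier_point(ctrl, t):
    """Evaluate a Bézier curve at t in [0, 1] with De Casteljau's algorithm."""
    pts = list(ctrl)
    while len(pts) > 1:
        pts = [((1 - t) * x0 + t * x1, (1 - t) * y0 + t * y1)
               for (x0, y0), (x1, y1) in zip(pts, pts[1:])]
    return pts[0]


def menger_curvature(a, b, c):
    """Curvature of the circle through three points (0 if they are collinear)."""
    cross = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    denom = math.dist(a, b) * math.dist(b, c) * math.dist(a, c)
    return 0.0 if denom == 0.0 else 2.0 * abs(cross) / denom


def max_curvature(ctrl, samples=200):
    """Approximate the peak curvature from consecutive sampled point triples."""
    pts = [bezier_point(ctrl, i / samples) for i in range(samples + 1)]
    return max(menger_curvature(a, b, c)
               for a, b, c in zip(pts, pts[1:], pts[2:]))


def is_drivable(ctrl, min_turn_radius):
    """True if the sampled curvature never exceeds 1 / min_turn_radius."""
    return max_curvature(ctrl) <= 1.0 / min_turn_radius


# Hypothetical control points [m] for a gentle left turn into a slot.
ctrl = [(0.0, 0.0), (2.0, 0.0), (4.0, 1.0), (4.0, 3.0)]
print(max_curvature(ctrl), is_drivable(ctrl, min_turn_radius=4.5))
```

In an optimization-based variant such as the SQP row, a curvature bound of this kind would typically appear as a constraint on the control points rather than as an after-the-fact check.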

| Control Strategy | Operating Principles | Reported Advantages for APS | Verification/Safety and Robustness Challenges | Handling Precision vs. Comfort/Efficiency Trade-Offs |

|---|---|---|---|---|

| Model Predictive Control (MPC)—Standard | - Explicit vehicle model (e.g., bicycle [88]) predicts future states over the prediction horizon (Np) [89]. - Optimizes control sequence over the control horizon (Nc) by minimizing cost function (tracking error, effort, constraints) [88]. - Applies first control input; repeats (receding horizon) [90]. - Explicitly handles system constraints (actuator/state limits, obstacle avoidance) [89,90]. | - High precision/accuracy for tight spaces [90]. - Optimal performance and constraint handling (vehicle limits, obstacles) [89]. - Improved comfort (smoother motion vs. simpler controllers) [91]. - Enhanced robustness (vs. some traditional methods) [91]. - Predictive capability: Proactive control [89]. - Solves constrained motion problems optimally [46]. | - Model fidelity: Performance/safety depend on model accuracy; mismatches problematic [89]. - Obtaining perfect models for all conditions is challenging. - Computational complexity: Real-time optimization can be demanding [89]. - Recursive feasibility: Ensuring a solution exists at every step is a major challenge [92]. - Tuning complexity: Many parameters (weights, horizons) need careful tuning [46]. - Uncertainty handling: Standard MPC assumes deterministic models; robust variants increase complexity/conservatism [92]. | - Adjusting weights in cost function [88]. - Precision: ↑ weight on tracking error. - Comfort: Penalize aggressive inputs (steering rate, acceleration). - Efficiency: Penalize path length/time. - Hard constraints for precision; soft for comfort/feasibility. - Prediction/control horizons (Np, Nc) influence behavior. |

| Learning-Based MPC (e.g., residual models, Koopman, GP, NN-adaptive) | - Integrates ML to improve prediction model or compensate for deficiencies [89]. - Residual Model Learning: Learns unknown dynamics (GP, NN) to augment nominal model [89]. - Koopman Operator Theory: Lifts nonlinear dynamics to linear space for prediction [93]. - NN-Adaptive LPV-MPC: NN adapts LPV-MPC parameters online (e.g., tire stiffness) [44]. | - Enhanced model accuracy and control performance [89]. - Adaptability to varying conditions (road friction, tires) [46]. - Reduced dimensionality for learning (residual models) [89]. | - Safety of learned components (GP, NN): Formal verification difficult [93]. - Data requirements and generalization: Poor OOD performance risks safety [89]. - Verification of hybrid system (MPC + learned parts). - Computational overhead of online learning/adaptation. - Defining valid feature space for learned component to avoid unsafe extrapolation [89]. | - MPC cost function still key for balance. - Learned component might implicitly balance if trained on such data. - Economic MPC (EMPC): Cost function directly optimizes efficiency (e.g., energy [94]) alongside performance. - Learning helps achieve trade-offs if model is more accurate. |

| Reinforcement Learning (RL)—General | - Agent learns actions (steering, accel.) by interacting with environment (parking scenario) [95]. - Learns policy (state -> action) to maximize cumulative reward [95]. - Trial-and-error learning, often in simulation [95]. - Model-free (direct policy) or model-based (learns model) [49]. - End-to-end RL: Sensor inputs -> control commands [96]. | - Adaptability to complex/dynamic environments [95,97]. - Learning complex maneuvers for tight spaces [98]. - Optimization through interaction; potential for novel solutions [95]. - Reduced reliance on hand-engineered rules [99]. | - ‘Black box’ nature: Formal safety verification very difficult [95]. - Safety during learning (exploration): Mostly simulated, leading to sim-to-real gap [95]. - Sim-to-real transfer gap: Policies often perform poorly in real world [82]. - Bridging gap (domain randomization, OGM (Occupancy Grid Map) [82]) is critical. - Robustness to OOD states: Catastrophic failure possible [99]. - Reward hacking and specification: Defining correct reward function is hard [99]. - Stability and convergence issues [96]. - Data bias and sufficiency [95]. - Computational demands for training DRL [95]. | - Primarily via Reward Function Design [99]: - Precision: Reward proximity/alignment; penalize collisions/deviations. - Comfort: Penalize jerk, high accel/decel, large steering rates. - Efficiency: Reward speed, short paths, low energy; penalize maneuvers. - Multi-Objective RL (MORL): Explicitly multiple rewards, balancing complex. - Constraint-driven safety RL (e.g., CMDP): Safety as hard constraint [99]. - Hierarchical RL: Different rewards per level (strategic vs. maneuver) [100]. - Reward changes can lead to unpredictable policy changes. |

| Reinforcement Learning (RL)—Soft Actor-Critic (SAC) | - Off-policy, model-free, actor-critic DRL for continuous actions [99]. - Actor (policy) decides actions; Critic (value/Q-net) evaluates [99,101]. - Entropy regularization: Maximizes reward + policy entropy (encourages exploration) [101]. - Off-policy learning from replay buffer (sample efficiency) [99,101]. | - High parking success rates (simulated) [97]. - Reduced maneuver times (vs. traditional/other DRL) [101]. - Robust handling of dynamic obstacles [101]. - Fine-grained vehicle control (continuous actions) [101]. - Improved sample efficiency (with HER for sparse rewards) [97]. | - Same general RL challenges: Black box, verification, safety, sim-to-real, OOD, reward, stability, data, computation [95]. - SAC properties may mitigate some training issues (sample efficiency, exploration) but fundamental safety challenges persist. | - Similar to general RL (reward function design). - Entropy regularization might naturally lead to smoother policies (comfort) [101]. - Explicit control over trade-offs still heavily relies on reward component formulation. |

| Neural Network (NN) Based Controllers—Direct NN Controllers (End-to-End) | - NN (often deep) maps sensor inputs (LiDAR [102], camera, sonar [103]) or states to control commands [102]. - Learns mapping from data (imitation learning [102]) or RL. - Internal layers learn hierarchical features. - NNs with ReLU: Complex piecewise linear functions [102]. - Sigmoid/tanh: Smooth non-linearities [104]. | - Learning complex kinematics/dynamics from data [103]. - Automatic generation of control commands [103]. - Adaptability to different situations (if trained on diverse data) [103]. - Reduced parking time at system level with CAVs (Connected Automated Vehicles) [105]. - Handling high-dimensional inputs (e.g., LiDAR images) [102]. | - ‘Black box’ and lack of interpretability: Hard to debug or provide safety guarantees [102]. - Formal verification difficulties: Computationally challenging for deep NNs [102]. - Techniques exist (hybrid systems, SMC, reachability) but have limits. - System-level safety (closed-loop) very complex. - Robustness to OOD states/adversarial attacks: NNs can be brittle [106]. - Robust OOD detection essential [107]. - Data dependency and generalization issues [106]. - Sim-to-real gap. - Lack of precise mathematical specifications for “correct” behavior [102]. | - Implicitly learned from training data (imitation or RL reward). - If trained on human data, mimics that balance. - Hard to predictably adjust behavior post-deployment without retraining. - Neural abstractions trade abstraction precision vs. verification time [108]. |

| Neural Network (NN) Based Controllers—Jordan Neural Networks | - Recurrent NN: Output fed back as input via “context units” [103]. - Inputs: Sensor readings, odometer, current maneuver state. - Outputs: Control commands (speed, steering), next maneuver state [103]. - Learns from examples of successful maneuvers (supervised learning, e.g., RPROP) [103]. | - Automatic knowledge acquisition from examples [103]. - Adaptability and robustness: Potential generalization to new situations (vs. rigid rules) [103]. - Simplified development: Focus on collecting examples [103]. | - Similar to direct NN: Black box, verification, data dependency, generalization, sim-to-real. - Recurrent structure adds complexity to formal analysis. - Ensuring stability/convergence of recurrent dynamics can be challenging. | - Trade-offs primarily learned implicitly from training examples. - Quality of demonstrated maneuvers dictates learned policy balance. - Explicit tuning post-training difficult without new data. |

| Hybrid RL Approaches—RL + Rule-Based Planners (e.g., RL with Reeds-Shepp (RS) curves, A*) | - Combines stability/guarantees of traditional planners (RS, A*) with RL’s adaptability [82]. - Rule-based planner: Initial reference/candidate paths [109]. - RL agent: Refines trajectory, selects candidates, or makes high-level decisions [82]. - E.g., RS path + RL speed/steering adjustments from LiDAR OGM [82]. | - Improved generalizability/adaptability [82]. - Higher planning success rates (vs. pure rule-based/RL) [110]. - Enhanced training efficiency for RL (rule-based guidance) [110]. - Bridging sim-to-real gap (e.g., LiDAR OGM for consistent representation) [82]. | - Complexity of verifying hybrid systems: Interaction between learning and rule-based parts [111]. - Safety of the RL component (black box, OOD). - Interface consistency/robustness: Misinterpretations cause failures. - Balancing control authority between RL and traditional parts. - “Weakest link” problem: Safety depends on both components and interaction. | - Traditional planner: Focus on feasible/efficient paths (precision, efficiency) [109]. - RL agent: Refines for comfort, adapts to dynamics, optimizes further [100]. - Modular assignment simplifies design/tuning. |

| Hybrid RL Approaches—RL + Model Predictive Control (MPC) | - Integrates RL with MPC. - RL: High-level decisions (strategy, MPC objectives/constraints) [110]. - MPC: Low-level trajectory optimization/control [110]. - Alt: RL learns model for MPC or tunes MPC parameters. | - Optimal low-level control: MPC for constraint-aware execution [110]. - Strategic high-level learning: RL adapts MPC for different scenarios. - Improved trajectory quality and planning time (NN hierarchical DRL + opt. layer) [112]. | - Verification complexity of interacting learning/model-based parts [111]. - Safety of RL decision-making component. - Ensuring MPC recursive feasibility given RL goals. - Potential negative interference if RL gives unsuitable objectives to MPC. - “Weakest link” problem applies. | - Hierarchical task decomposition. - RL: Higher-level strategic goals (efficiency, context adaptation). - MPC: Manages precision/comfort for low-level execution (cost function). |

| Hybrid RL Approaches—DRL + Kinematic-Based Co-pilot | - DRL agent learns primary driving policy [113]. - Kinematic co-pilot: Guidance/constraints for DRL during training (efficiency) and operation (safety/decision support) [113]. - May include rule-based system to assess/mediate final actions for safety [113]. | - Enhanced training efficiency for DRL (kinematic guidance) [113]. - Flexible decision-making guidance from co-pilot [113]. - Improved safety/reliability: Rule-based system as safety net [113]. | - Verification of interaction (DRL, co-pilot, rule-supervisor) is complex. - Ensuring co-pilot guidance is always safe/beneficial. - Determining override logic for rules (not too conservative/missing DRL insights). - “Weakest link” principle applies. | - Kinematic co-pilot/rules enforce comfort/safety (precision) constraints, guiding DRL [113]. - DRL reward function still primary driver for efficiency/other aspects within safety envelope. |
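
The reward-design trade-offs listed in the RL rows of the table (rewarding proximity and alignment, penalizing collisions, jerk, steering rates, and extra maneuvers) can be illustrated with a minimal sketch. The names `StepInfo`, `RewardWeights`, and `parking_reward`, the weighted-sum form, and every weight value below are assumptions made for illustration; they are not taken from any of the cited works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StepInfo:
    """Per-step quantities a simulator might expose (hypothetical fields)."""
    dist_to_goal: float    # m, distance to the target parking pose
    heading_error: float   # rad, orientation difference to the target pose
    jerk: float            # m/s^3, longitudinal jerk
    steering_rate: float   # rad/s
    collided: bool
    gear_shift: bool       # True if the direction of travel changed this step


@dataclass(frozen=True)
class RewardWeights:
    """Illustrative weights only; real values require tuning."""
    precision: float = 1.0
    comfort: float = 0.1
    efficiency: float = 0.05
    collision_penalty: float = 100.0


def parking_reward(info: StepInfo, w: RewardWeights = RewardWeights()) -> float:
    """Weighted sum of precision, comfort, and efficiency terms."""
    # Precision: smaller distance and heading error give a higher reward.
    precision = -(info.dist_to_goal + abs(info.heading_error))
    # Comfort: penalize jerk and fast steering.
    comfort = -(abs(info.jerk) + abs(info.steering_rate))
    # Efficiency: constant per-step time cost plus a penalty per gear shift.
    efficiency = -(1.0 + (1.0 if info.gear_shift else 0.0))
    reward = (w.precision * precision
              + w.comfort * comfort
              + w.efficiency * efficiency)
    if info.collided:
        reward -= w.collision_penalty
    return reward
```

Raising `comfort` relative to `precision` in such a formulation would favor smoother but possibly less accurate maneuvers, which is one way to see why the table notes that reward changes can lead to unpredictable policy changes.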

| Study/System | Core Technological Innovation | Sensor Suite Utilized | AI/ML Methodologies Applied | Key Performance Metrics/Findings | Specific Relevance/Contribution to APS Advancement |

|---|---|---|---|---|---|

| RL-OGM-Parking [82] | Hybrid RL (Reeds-Shepp + SAC) planner using LiDAR OGM. | LiDAR | - DRL (SAC) - Rule-based (Reeds-Shepp) | - High PSR (Parking Success Rate) - Reduced ANGS (Number of Gear Shifts) and PL (Path Length) - Outperforms pure rule/learning. | Addresses sim-to-real for learned planners; stable/adaptive maneuvers. |

| SAC-based DRL for Parking [15] | DRL (SAC) for continuous vehicle control. | LiDAR, Camera | DRL (SAC) | - High PSR - Reduced maneuver times - Robust to dynamic obstacles - Outperforms traditional and other DRL. | Fine-grained control, efficient path gen in dynamic scenarios. |

| U-Park [67] | User-centric smart parking recommendation for e-micromobility. | Implies Camera (CNN for space detection) | CNN (parking space detection, hazy/foggy) | Tailored recommendations (user pref., conditions). | Extends smart parking to micromobility; user-centric, robust perception. |

| Diffusion Model Planning [114] | Diffusion models for diverse, feasible motion trajectories. | General AV sensors | Diffusion Models | SOTA PDM score (94.85) on NAVSIM. | Potential for diverse, smooth, context-aware parking trajectories. |

| 4D Imaging Radar for Automotive [62] | 4D mmWave radar for high-res point clouds (range, azimuth, elevation, velocity). | 4D mmWave Radar | Doppler/angle resolution algos, dynamic CFAR (Constant False Alarm Rate) | Demonstrated 4D high-res imagery in parking lots. | Enhances all-weather perception, velocity measurement for APS. |

| Monocular VIO (Visual-Inertial Odometry) with Planar Regularities [114] | Monocular VIO regularized by planar features using MSCKF (Multi-State Constraint Kalman Filter). | Monocular Camera, IMU | - MSCKF - Custom Plane Detection | Improved ATE (Absolute Trajectory Error) (1–3 cm accuracy, structured env.). | Precise, cost-effective ego-motion for structured parking (garages). |

| E2E Parking Dataset and Model [7] | Open-source dataset (10k+ scenarios) and benchmark for E2E parking. | Multi-camera, Vehicle sensors | - E2E Learning (Transformers) - BEV representation | Baseline: 85.16% success, 0.24 m pos err, 0.34 deg orient err. | Facilitates reproducible research, standardized E2E model benchmarking. |

| REDFormer [115] | Transformer-based camera-radar fusion for 3D object detection (low-visibility). | Camera, Radar (nuScenes) | - Transformers - BEV Fusion | Improved perf. in rain/night on nuScenes. | Enhances APS perception robustness in adverse conditions. |

| Improved CILQR (Constrained Iterative Linear Quadratic Regulator) [116] | Enhanced Constrained Iterative LQR for stable, human-like, efficient trajectory planning. | Assumed std. AV sensors | - CILQR - Hybrid A* | Improved human-like driving, traffic efficiency, real-time capability. | Potential for higher quality, smoother, natural parking trajectories. |

| AVP HMI (Human–Machine Interface) Explanations [117] | Scenario-based XAI framework for AVP HMI. | N/A (HMI study) | XAI principles | Improved driver trust and UX; reduced mental workload; better user perf. | Improves user understanding, trust, acceptance of AVP via transparency. |

| Hyundai Mobis Parking System [118] | Commercial Advanced Automated Parking System (AAPS). | Ultrasonic, Surround-view cameras | Proprietary map gen and autonomous parking algos | Seamless autonomous parking (single touch). | Real-world deployment, commercialization of in-vehicle APS tech. |
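
Several rows above report metrics such as parking success rate (PSR), gear shifts, path length, and final pose error. As a rough sketch of how such figures could be aggregated from a batch of trials, consider the following. The `Trial` record and `summarize` function are hypothetical, and averaging the secondary metrics over successful trials only is an assumption; the cited studies may define these quantities differently.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass(frozen=True)
class Trial:
    """Outcome of one parking attempt (hypothetical record layout)."""
    success: bool
    gear_shifts: int
    path_length_m: float
    pos_error_m: float
    yaw_error_deg: float


def summarize(trials: List[Trial]) -> Dict[str, float]:
    """Aggregate PSR and mean per-trial metrics over successful trials."""
    if not trials:
        raise ValueError("at least one trial is required")
    ok = [t for t in trials if t.success]
    nan = float("nan")  # reported when no trial succeeded
    return {
        "psr": len(ok) / len(trials),
        "avg_gear_shifts": mean([t.gear_shifts for t in ok]) if ok else nan,
        "avg_path_length_m": mean([t.path_length_m for t in ok]) if ok else nan,
        "avg_pos_error_m": mean([t.pos_error_m for t in ok]) if ok else nan,
        "avg_yaw_error_deg": mean([t.yaw_error_deg for t in ok]) if ok else nan,
    }
```

Reporting the success rate alongside the error statistics matters because a planner can achieve low average pose error simply by failing on the hard scenarios.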

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Olmos Medina, J.S.; Maradey Lázaro, J.G.; Rassõlkin, A.; González Acuña, H. An Overview of Autonomous Parking Systems: Strategies, Challenges, and Future Directions. Sensors 2025, 25, 4328. https://doi.org/10.3390/s25144328
