Abstract

Lane detection is an essential task in the field of computer vision and autonomous driving. This involves identifying and locating road markings on the road surface. This capability not only helps drivers keep the vehicle in the correct lane, but also provides critical data for advanced driver assistance systems and autonomous vehicles. Traditional lane detection models work mainly on the 2D image plane and achieve remarkable results. However, these models often assume a flat-world scenario, which does not correspond to real-world conditions, where roads have elevation variations and road markings may be curved. Our approach solves this challenge by focusing on 3D lane detection without relying on the inverse perspective mapping technique. Instead, we introduce a new framework using the MambaVision-S-1K backbone, which combines Mamba-based processing with Transformer capabilities to capture both local detail and global contexts from monocular images. This hybrid approach allows accurate modeling of lane geometry in three dimensions, even in the presence of elevation variations. By replacing the traditional convolutional neural network backbone with MambaVision, our proposed model significantly improves the capability of 3D lane detection systems. Our method achieved state-of-the-art performance on the ONCE-3DLanes dataset, thus demonstrating its superiority in accurately capturing lane curvature and elevation variations. These results highlight the potential of integrating advanced backbones based on Vision Transformers in the field of autonomous driving for more robust and reliable lane detection. The code will be available online.

1. Introduction

Detecting and pinpointing lanes on roads is an important task for computer vision and self-driving vehicles. Lane detection systems can help drivers stay in their lane and provide crucial information for advanced driver assistance systems and autonomous vehicles. Traditional lane detection methods use image processing techniques to recognize lane markings in camera images. Recently, deep learning has led to the creation of more precise and durable lane detection models based on convolutional neural networks (CNNs) and Transformer models. However, lane detection remains difficult due to varying road conditions, lighting conditions, camera perspectives, and the need for real-time performance.

There are two main approaches to lane detection: 2D and 3D models. Two-dimensional methods use CNNs such as ResNet [1], EfficientNet [2], and MobileNet [3,4] to detect lane markings in monocular images. These models are fast and effective in ideal conditions, but may struggle with poor weather, lighting, or degraded lane markings. In contrast, 3D models estimate the position and orientation of lane markings in 3D space, offering improved accuracy and robustness. Although most 3D models use inverse perspective mapping (IPM) [5] to create a bird’s-eye view (BEV), not all rely on this method. However, 3D models are generally more computationally intensive and depend on precise transformations.

Previous state-of-the-art methods hypothesized wrongly the construction of the BEV image, assuming that the lines have no height. This made the obtained image not accurate because they started from the premise that the world is flat. The authors of those works tried to determine the coordinates in the 3D space of the traffic lanes depending on the curvature of the lane lines. It is understandable that it was very difficult, nearly impossible, to generate a more accurate top-down view image because, at that time, there were no datasets in which the exact coordinates of the traffic lanes could be mapped in 3D space. At that time, there existed only synthetic datasets such as Apollo [6], which were obtained from various game engines.

Developing a 3D lane detection dataset poses a significant challenge, primarily due to the requirement of precise and comprehensive ground truth annotations. Typically, this process involves manually labeling lane markings in a 3D space, which can be time-consuming and requires specialized equipment, such as LiDAR sensors.

Another challenge relates to generating a diverse and representative set of scenes that accurately capture a wide range of real-world scenarios, including various road types, weather, and lighting conditions. This undertaking requires careful planning, execution, and access to appropriate data collection sites.

Fortunately, we now have at our disposal three large datasets that contain a multitude of driving scenarios from real situations, whose annotations are very well detailed. The datasets to which we refer are OpenLane [7], OpenLaneV2 [8], and ONCE-3DLanes [9]. One of the things that differentiates these datasets is the evaluation metrics. A well-constructed dataset plays a crucial role for lane detection tasks because, when annotations are inconsistent, training becomes noisy and slow, and the resulting model struggles to generalize. Imperfect ground truths such as missed or misaligned lane markings can degrade the performance of deep neural networks, which is supported by recent results from the PSSCL framework [10]. Furthermore, careful selection of cleaner data during training significantly improves the robustness to such label noise.

Certainly, there are several methods that can work alongside those mentioned in order to obtain better results. These methods involve the inclusion of several sensors, such as RADAR or LiDAR, in contemplation of better understanding the surrounding environment by generating point clouds or depth maps. The main disadvantage of those methods is that, as we add more sensors, the computational cost increases drastically and it becomes more and more difficult and expensive to be used in real life.

Transformers are increasingly being used in computer vision applications such as image classification, object detection, and semantic segmentation [11,12], as they are capable of capturing long-range dependencies and contextual information in the input data. Unlike CNNs, which process input data sequentially and extract features using local receptive fields, Transformers utilize a self-attention mechanism to compute global interactions between all input elements, thereby enabling them to capture both local and global contextual information. However, their computational requirements grow exponentially with sequence length, creating challenges for training and deployment.

For computer vision tasks, Transformer-based models such as Vision Transformers (ViT) [13], DETR [14], and SETR [15] have demonstrated state-of-the-art performance on challenging datasets such as ImageNet [16], COCO [17], and Cityscapes [18]. Transformers have several advantages over CNNs, including the ability to model long-range dependencies and context more effectively, less dependence on predefined architectures, and the ability to handle variable-sized inputs. However, with the recent introduction of Mamba Vision models, even better results have been achieved, surpassing the performance of ViTs in many computer vision tasks by combining the strengths of state-space models and Transformers for enhanced accuracy and efficiency.

The main objective of our research is to integrate the Transformer [19] and Mamba Vision [20] architectures into current state-of-the-art models and replace the classic convolutional neural networks in order to achieve better results.

The main contributions of the paper are as follows:

- We propose a simple hybrid backbone that mixes MambaVision-S-1K CNN blocks with a Transformer, so the model learns both fine details and global context.

- Our approach works directly on front-view images and skips any IPM or bird’s-eye view step.

- We fine-tune and optimize the whole model so it can run well on systems which do not require the latest and best configuration.

- We achieve state-of-the-art results on ONCE-3DLanes and also very good results on all subsets of the Apollo 3D Lane Detection benchmark.

The code will be available at https://github.com/raul-cap/mamba-3d-lane-detection (accessed on 29 July 2025).

2. Related Work

A study on 3D-LaneNet [21] has applied two new concepts: an intra-network inverse perspective mapping that provides a dual representation information flow for both a regular image captured by the camera and its top-down view, and an anchor per column representation, which makes the lane detection task more like an object detection problem, replacing the common methods such as outlier rejection and clustering. This is the first work to make use of the top-view representation of the standard image to determine the lane in 3D space.

Being the first work to approach this topic, 3D-LaneNet inspired most of the works that followed, as well as the work entitled “Gen-LaneNet: A Generalized and Scalable Approach for 3D Lane Detection” [6]. With this paper, the authors noticed and tried to remove the drawbacks of 3D-LaneNet. One of the drawbacks is that the bird’s-eye projection does not align with the image feature resulting from IPM in the presence of a non-zero slope. Ref. [21] uses an unsuitable coordinate frame in its anchor representation, causing ground truth lanes to be misaligned with visual features. On the other hand, the authors proposed the so-called “virtual top view”, which aligns with the IPM image. Ref. [22] is also an extension of the first state-of-the-art methods [6,21]. The authors have introduced a new attention mechanism called Dual Attention. Its purpose is to help the model work better and improve accuracy in more complicated driving situations and scenarios. Besides this attention module, another change compared to the previous models is the use of the linear-interpolation loss function, which is used to locate the 3D lane markings with greater precision.

Although other previously presented works all had 3D-LaneNet as their starting point and each work represented an improvement of the basic one, in CLGo [23], the authors applied a different strategy. Among the only similarities to the other works is that its architecture has two stages. However, the first stage is no longer used for image segmentation, but instead for finding the best camera pose and polynomial parameters using geometric constraints. In this case, finding the best camera pose is equivalent to finding the camera height and the pitch angle of the camera. This is performed by using a Transformer encoder–decoder.

The backbone of this work replaces the classic backbones that used convolutional neural networks with one based on Transformers, which are mainly used to enhance image features. The convolution is only used to extract the convolutional features that will be inputs for the Transformer encoder (TRE). By decoding the characteristics using the Transformer decoder (TRD), the camera pose is obtained. The authors added a lane branch to interpret 3D lanes to aid in camera pose learning. First, the 3D lanes are obtained, and then they are projected into the 2D plane by conducting a homographic transformation. Thus, the model learns the best camera pose by comparing the results to the ground truths in both 3D and 2D planes.

In [7], the authors proposed a method entitled PersFormer, which is an end-to-end monocular 3D lane detector model that can simultaneously detect 2D and 3D lanes. Like the latest state-of-the-art models, the method is based on the Transformer architecture. However, the Transformer is not used as the backbone for feature extraction, which is the first part of this network. The second part of the model is the Perspective Transformer, which is relatively complex, and also the inspiration for the name of the model. The general idea behind the Perspective Transformer is to generate an accurate BEV of the input image by taking into consideration the front view features generated by the ResNet [1] backbone, the coordinates of the transformation matrix from IPM, and the camera intrinsic and extrinsic parameters, such as camera height, and the pitch angle of the camera. The authors used a Transformer to attend local context and aggregate global features to produce a solid representation in BEV rather than just projecting the one-to-one feature correspondence from front view to BEV. In addition to the proposed model, the authors highlighted one of the existing problems in the 3D lane detection task, namely, the lack of data for the learning process. They addressed this problem by introducing a new large-scale dataset called OpenLane.

With the same purpose as [7], previously presented, ONCE-3DLanes [9] addresses the problem of insufficient data for 3D lane detection and introduces a new real-world autonomous driving dataset to encourage development on this subject. In addition to this, the authors also proposed a model for 3D lane detection called SALAD that does not require human-made anchors and also does not require transformation to BEV or top-view.

In [24], a lane-aware query generator is introduced, which adapts to lane-specific characteristics and improves detection accuracy by jointly leveraging lane-level and point-level embeddings. Furthermore, LATR uses a Dynamic 3D Ground Positional Embedding that iteratively refines a 3D ground plane to better align with the actual road geometry. Thus, it addresses the limitations of traditional assumptions related to a fixed 3D space. Using these algorithms, the method achieved state-of-the-art results on the ONCE-3DLanes dataset and very impressive results on other datasets without using BEV image generation.

The state-space model (SSM) introduced by Mamba [25] addresses ViT problems by providing linear-time complexity. Its results match or even exceed the performance of Transformers in natural language processing tasks. Mamba’s key innovation lies in the efficient handling of long sequences, making it a scalable solution compared to traditional Transformers.

Vision Mamba [26] further improves upon this by incorporating bidirectional SSMs to mitigate the weaknesses of Transformers in capturing global context and spatial relationships. However, the added complexity of processing sequences in bidirectional SSMs can lead to challenges such as increased latency, overfitting, and uncertainty in accuracy improvement. Despite these challenges, ViTs and CNNs often outperform Mamba-based models in visual tasks because of their efficiency and reliability.

Recently, hybrid models that combine Mamba and Transformer architectures have gained considerable attention. Ref. [20] introduced MambaVision, a novel hybrid model designed for computer vision tasks that combines the strengths of both Mamba and Transformers. It employs a hierarchical structure with multi-resolution CNN-based residual blocks to swiftly extract features across different resolutions.

Related to our design choices, we also consider advances in detection architectures beyond lane detection. Ref. [27] shows how combining multiple feature streams can remain robust even when one modality, for example, thermal or RGB, is noisy or unreliable. This idea is similar to our goal of making geometric cues robust against imperfect or uncertain signals. In another line of work, ref. [14] replaces dense attention with sparse, reference-point-based attention, which improves greatly the efficiency and accuracy in detection. This mechanism directly inspires the use of deformable attention in the decoder in our work.

3. Method Overview

Motivated by the success of the latest state-of-the-art LATR [24] model, we propose a 3D lane detection model with Dual Transformer. In this section, we will present the overall architecture and implementation details.

The objective of the proposed solution is to obtain the coordinates of the traffic lanes in a three-dimensional space, starting from an image that is captured by a camera mounted on the front of the vehicle. Because we do not generate a BEV image for the lane detection, we no longer require precise calibration of the well-defined intrinsic and extrinsic parameters of the camera, such as the height of the camera, the focal length, or the pitch angle, as we previously did in [6,21]. Concretely, we still use a simple pinhole projection to map 3D points to the image, but we let the ground plane be adjusted during training with two residuals corresponding to pitch and height , which are supervised in the lane pixels by . On ONCE-3DLanes, the camera extrinsics are unavailable. So, we follow previous works [7,24] and use approximate camera settings, and the learned plane compensates for the resulting mismatch. Thus, we can use this method to approximate the camera extrinsics on every dataset.

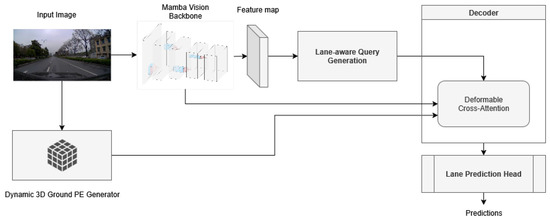

The architecture of our proposed model is shown in Figure 1. The process begins by extracting feature maps from the input image using the MambaVision backbone, which was chosen for its ability to capture both fine-grained details and broader contextual features effectively. After this important step, the incoming process is inspired and similar to LATR. Lane-aware queries are generated, where the number of queries corresponds to the number of lanes, and the number of points represents each lane. These queries are then designed to interact with the extracted feature maps using a deformable attention mechanism, which allows the model to dynamically focus on the most relevant parts of the image.

Figure 1.

Proposed method architecture. The model begins by extracting a feature map X using the MambaVision backbone, followed by generating lane-aware queries Q that refine the feature map through a deformable attention mechanism. Next, the Dynamic 3D Ground Positional Embedding introduces 3D context, updating the feature map to , after which the dynamic plane is iteratively updated to align with real-world geometry using a transformation matrix, and, finally, the prediction head processes the refined feature map to produce accurate 3D lane predictions.

To further enhance the model’s understanding of the scene, we employ a Dynamic 3D Ground Positional Embedding Generator. This component integrates three-dimensional spatial information into the two-dimensional features which ensure that the model accurately reflects lane geometry without relying on static 3D structures. Finally, a prediction head processes the enhanced queries and leads to the generation of the final lane predictions. Each component of this architecture will be explained in more detail in the following sections.

3.1. Backbone

MambaVision serves as the feature extraction backbone for the object detection and segmentation models. In these frameworks, the backbone network is responsible for extracting rich feature maps from the input image, which are then used by subsequent layers to detect objects and generate segmentation masks. The hierarchical architecture of MambaVision, which includes both CNN-based residual blocks and Transformer blocks, makes it highly effective for this role.

CNN layers in MambaVision, specifically in Stages 1 and 2, quickly process high-resolution features. Given an input image , where H and W are the height and width of the input image, which is an RGB image with three channels, the number of channels is denoted with C. These layers extract feature maps through a series of convolutions and pooling operations:

where denotes the convolutional operations, including residual connections, which are formulated as

Here, represents the activation function, typically ReLU, and BN denotes batch normalization. These residual connections help maintain spatial details crucial for accurately locating and identifying objects by capturing both low-level and high-level features.

In Stages 3 and 4, MambaVision employs a combination of MambaVision Mixer blocks and Transformer blocks. The MambaVision Mixer blocks utilize Structured SSMs to capture both short- and long-range dependencies. The SSM in MambaVision can be expressed using the following state-space equations:

where is the hidden state, is the input, and is the output. The parameters , , and are learnable. These parameters are discretized for computational efficiency. This allows the MambaVision Mixer to process sequences effectively and capture complex dependencies.

The output of the MambaVision Mixer block can be represented as

where are the lower-resolution feature maps. The Mixer block combines convolutional operations with SSMs, capturing both local and global context.

The Transformer blocks in Stages 3 and 4 further enhance the model’s ability to maintain global context. Using the multi-head self-attention mechanism, the Transformer blocks process the feature maps as

where , , and are the query, key, and value matrices derived from , and is the dimensionality of the key vectors. This self-attention mechanism allows the model to capture dependencies across the entire image to ensure a comprehensive understanding of object relationships and spatial configurations.

The combined output from the MambaVision Mixer and Transformer blocks provides enriched feature maps that are well-suited for generating accurate segmentation masks and object detections:

where denotes the transformation applied by the Transformer block. This hierarchical combination of CNNs, SSMs, and Transformers within MambaVision makes it a powerful backbone for object detection and segmentation tasks, which balances both computational efficiency and accuracy.

The LATR model begins by processing the input image I using the MambaVision-S-1K backbone to extract a feature map X. The feature map captures the necessary visual details from the front view of the driving scene and provides a rich set of features for further processing:

Here, C is the number of channels, H is the height, and W is the width of the feature map.

3.2. Lane-Aware Query Generation

After extracting the feature map, the model generates lane-aware queries Q using the lane-aware query generator. These queries are tailored to each detected lane and incorporate both lane- and point-level embeddings. The lane-aware queries Q are represented as

where captures the overall lane structure and is computed using instance activation maps (IAMs) derived from the feature map X, and captures detailed information at specific points along the lane, leveraging the learnable weights corresponding to the predefined longitudinal coordinates.

3.3. Interaction via Deformable Attention

The lane-aware queries Q then interact with the feature map X through a deformable attention mechanism. This mechanism allows the model to selectively focus on relevant regions in the image by dynamically adjusting its attention based on the queries:

where is the refined feature map after the deformable attention operation, which uses the lane-aware queries to capture relevant features for lane detection.

3.4. Dynamic 3D Ground Positional Embedding (PE) Generation

To integrate three-dimensional context, the model employs a Dynamic 3D Ground Positional Embedding Generator. This component enhances the feature map with 3D spatial information by projecting a hypothetical 3D ground plane into the 2D feature space:

where are the 2D coordinates on the feature map (in pixels), is the homogeneous scale factor of the projection, is a 3D point on the ground plane, and T is the pinhole camera projection matrix.

A 3D ground plane P is initialized and iteratively refined using a transformation matrix D to align with the real-world ground. This embedding step enhances the feature map , which allows the model to accurately represent the lane geometry:

During training, the ground plane is adjusted step by step by adjusting the pitch and height. We train it together with the lane losses using a simple plane alignment loss (Section 3.7). This learned, adjustable plane works better than a fixed plane or a fixed frustum.

3.5. Iterative Plane Update

We describe the transformation matrix D using the pitch angle and the vertical shift . With these, we construct the matrix:

D is used to update the 3D ground plane, adjusting its position to better fit the ground truth road surface. In the decoder layer t, the plane grid from the previous step, , is updated by multiplying it by this matrix:

The update is based on the residuals calculated between the predicted 3D ground plane and the actual 3D lane annotations.

3.6. Prediction Head for Final Lane Predictions

Finally, the enhanced feature map is fed into a prediction head that uses an MLP to output the final 3D lane predictions. This head estimates the 3D coordinates of the lane points and their visibility:

This final step produces the predicted 3D positions and classifications of the lanes, providing a comprehensive 3D lane model. This workflow avoids the use of traditional 3D surrogates like IPM and leverages Transformer-based attention mechanisms for efficient and accurate 3D lane detection.

3.7. Loss Function

The loss function is computed the same as in [24] and combines several components to ensure accurate 3D lane detection, focusing on regression, visibility, and classification.

The primary loss for 3D lane prediction consists of

where and are regression losses for the x- and z-coordinates of the lane points, is the visibility loss, using binary cross-entropy, and is the classification loss, handled by focal loss to manage class imbalance.

The overall loss function is a weighted combination of 3D lane prediction, segmentation, and 3D ground plane alignment losses:

where trains a simple lane versus background mask obtained by drawing the ground-truth lane lines on the image and makes the learned ground plane, composed of pitch and height, to match the 3D lane annotations at labeled pixels.

We supervise the ground plane with a simple alignment loss computed only at lane pixels:

where is the 3D canvas induced by the current ground plane and stores the metric 3D of the annotated lane points. Training is end-to-end, so this loss is optimized together with the lane losses, and gradients pass through the plane update, so the plane aligns to the road surface over time.

The values we have used for the base weights are the following: , , , , and all others . We find that they are stable across both datasets.

This structure ensures that the model optimizes not only for accurate lane positioning but also for visibility and correct ground plane alignment.

4. Experiments

In this section, we present the training details alongside the results of the proposed model on the ONCE-3DLanes (https://once-3dlanes.github.io/3dlanes/, accessed on 29 July 2025) and Apollo 3D Lane Detection (https://developer.apollo.auto/synthetic.html, accessed on 29 July 2025) datasets.

4.1. Training Details

In our experiments, we set the batch size to 2 and used three workers during training. The number of categories for classification is 2, one representing the lane and the other the background, and we defined a positive threshold of 0.3 for classification. The labels corresponding to the lane and background created labels by drawing the ground-truth lane lines on the image and making them a bit thicker, 3 pixels at 1/4 of the resolution of the feature map. The pixels on these thick lines are labeled ’lane’ and all other pixels are ’background’. This auxiliary head is used only during training and it is dropped at inference.

Our model follows an encoder–decoder architecture based on [24]. We resize the input images to a resolution of 720 × 960 and they are then fed to the backbone. The encoder is initialized with the MambaVision-S-1K pretrained model, and we employ a Feature Pyramid Network (FPN) as the neck, with input channels [192, 384, 768] and output dimension of 192. Those input channels correspond to the feature map channels extracted from the last three stages of the MambaVision backbone, which represent different levels of spatial detail. The FPN outputs four feature maps and each one is passed through additional convolutions with the largest scale aggregated as input for the decoder.

For the decoder, we apply deformable attention with four heads, eight sample points, and 192-dimensional embeddings. A six-layer Transformer decoder is used for object detection and segmentation, with 12 queries and 20 anchor points per query distributed across the y axis.

All models are trained using the AdamW optimizer with a learning rate of and a weight decay of 0.01. We set the learning rate multiplier for sampling_offsets to 0.1.

The proposed method was trained and evaluated on a system that has the following specifications: Intel 4790K CPU (Intel, Santa Clara, CA, USA), one NVIDIA RTX 3060 Ti GPU (NVIDIA, Santa Clara, CA, USA), 16GB DDR3 RAM, and 1TB SSD storage. We strongly believe that slightly better results can be achieved by fine-tuning the hyper-parameters, but due to our system limitation, these were the best results we could achieve.

4.2. Evaluation Metrics

The evaluation metrics used to evaluate the performance of the proposed model on the ONCE-3DLanes dataset are the F1 score, precision, recall, and CD error. Precision represents the ratio between all correctly identified positive instances and all instances that were predicted as positive. Recall refers to the ratio of true positives out of all the actual positive instances in the dataset. The F1 score represents the harmonic mean between precision and recall. Those three metrics can be mathematically expressed as

where is the number of true positives, the number of false positives, and the number of false negatives.

The CD error represents the Chamfer distance between the predicted lane line and the ground truth.

The Apollo dataset uses the F1 score and, besides it, errors that are computed using the Euclidean distance and measured in meters. It compares the matched lanes for the near range (0–40 m) and the far range (40–100 m).

4.3. Results on ONCE-3DLanes

The ONCE-3DLanes [9] dataset is a collection of annotated data for autonomous driving, which includes lane layout information in 3D space. To create this dataset, a pipeline was developed that can automatically generate precise 3D lane location data from 2D annotations. This is achieved by utilizing the explicit relationship between point clouds and image pixels, having over 211,000 road scenes, and resulting in a high-quality dataset. The model was trained for 20 epochs, with the best results being achieved in the 11th epoch.

The results obtained by our model on the ONCE-3DLanes dataset, as shown in Table 1, indicate a significant improvement over current state-of-the-art methods across all key metrics. Specifically, our model achieved an F1 score of 82.39%, outperforming the previous highest score of 80.59% achieved by LATR [24]. This indicates a superior balance between precision and recall in detecting lane markings.

Table 1.

Evaluation results on ONCE-3DLanes. Best values are highlighted in bold.

4.4. Results on Apollo 3D Lane Detection

The Apollo 3D Lane Detection dataset, introduced in [6], is an extension of the original Apollo Synthetic Dataset. The dataset was built using the Unity game engine to encourage research and development in the field of autonomous driving. The main reason behind it was the lack of labeled real-world data. The Apollo 3D Lane Detection dataset contains a total of 6000 samples from the virtual highway map, 1500 samples from the urban map, and 3000 samples from the residential area, along with the corresponding depth map, semantic segmentation map, and 3D lane line information.

The dataset is split into three sets to evaluate the algorithms. The “balanced scenes” set follows a standard five-fold split for unbiased data training and testing. The “rarely observed scenes” set uses a subset of testing data from a complex urban map to evaluate generalization capabilities. The “scenes with visual variations” set evaluates methods under illumination changes, such as 3D examples from before dawn.

The performance of our model on the Apollo 3D Lane Detection dataset, particularly in the balanced scenes category, Table 2, highlights its strength compared to current state-of-the-art methods. Our model achieved an F1 score of 97.0%, which is second after the best of 97.4% achieved by [30]. This performance in F1 score indicates that our model strikes an excellent balance between precision and recall, outperforming models like [24,28], which also performed well but fell slightly short in this aspect.

Table 2.

Evaluation results on Apollo 3D Lane Detection balanced scenes. Best values are highlighted in bold.

For the rarely observed and visual variant scenes, as shown in Table 3 and Table 4, our model delivers solid performance. In the rarely observed scenes, while [32] achieves the highest F1 score, our model remains competitive with an F1 score of 95.1%, positioning it closely to other top-performing methods. Similarly, in the visual variant scenes, our model achieves an F1 score of 94.5%, indicating reliable performance across diverse environmental and visual conditions.

Table 3.

Evaluation results on Apollo 3D Lane Detection rarely observed scenes. Best values are highlighted in bold.

Table 4.

Evaluation results on Apollo 3D Lane Detection visual variant scenes. Best values are highlighted in bold.

Our backbone, MambaVision-S-1K, is composed from the following hybrid blocks: Conv, Mamba, Transformer. The Mamba and the self-attention blocks from the Transformer have the ability to capture and model long-term relationships, but weaker inductive biases than CNNs. Thus, they rely more on the amount and diversity of data to learn these relationships correctly. In [20], it was observed that, when trained only on ImageNet-1K, which has around 1.2 M images, MambaVision-S performs comparably to ResNet, but really gains advantage on larger sets such as ImageNet-22K. As the Apollo subsets have much fewer images than ONCE-3DLanes, it was expected that our configuration would not perform as well on this dataset as on ONCE-3DLanes. Although there is room for improvement in these more challenging scenarios, our model still shows good overall performance.

4.5. Runtime and Complexity Comparison

At our evaluation resolution, 720 × 960, our MambaVision-S-based model counts 62.6 M parameters and 175.5 GFLOPs (FLOPs ), in comparison to LATR, which uses the ResNet-50 backbone and has 46.8 M parameters and 127.9 GFLOPs. Those results were not presented in [24], but we used their official GitHub repository to download a pretrained model and calculate the complexity under the same input. Although this may seem like an increase in complexity, our model remains substantially more efficient than PersFormer, which is the baseline model for LATR in this line of work, despite being moderately heavier than [24]. The authors of [7] did not publish the complexity of the model, but thanks to GroupLane’s work [33], we were able to obtain them. PersFormer working with EfficientNet-B7 [2] is much heavier, having 572.4 GFLOPs, which is ∼3.26 times more than our computational cost.

We ran the inference on our system, which uses NVIDIA RTX 3060 Ti GPU (NVIDIA, Santa Clara, CA, USA), with batch size 1 and excluded the first five warmup iterations. We measure 14.44 FPS for our model versus 15.84 FPS for LATR under the same configuration. In terms of latency, it is ∼69.3 ms per frame for ours and ∼63.1 ms for LATR, which represents a difference of ∼6.1 ms (∼8.8% in FPS). In practice, this is a modest difference on our system, and both models operate in essentially almost the same real-time regime at this resolution.

5. Conclusions

In conclusion, we have proposed an innovative 3D lane detection model that uses the MambaVision backbone integrated with Transformer-based attention mechanisms. This approach significantly improves lane detection performance in 3D space and addresses the challenges posed by non-flat surfaces and variable road conditions. Using deformable attention and lane-aware queries, the model dynamically focuses on relevant regions of the image, resulting in enhanced accuracy for lane detection tasks. Our method achieved state-of-the-art results on the ONCE-3DLanes dataset and competed closely with other methods on the Apollo 3D Lane Detection dataset, demonstrating its superiority in terms of precision, recall, and F1 score. These results highlight the potential of Transformer-enhanced backbones for robust 3D lane detection in autonomous driving applications.

Author Contributions

Conceptualization, R.-M.C. and C.-A.P.; methodology, R.-M.C. and C.-A.P.; software, R.-M.C.; validation, R.-M.C.; formal analysis, R.-M.C.; investigation, R.-M.C.; resources, C.-A.P.; data curation, R.-M.C.; writing—original draft preparation, R.-M.C.; writing—review and editing, C.-A.P.; visualization, R.-M.C.; supervision, C.-A.P.; project administration, C.-A.P.; funding acquisition, C.-A.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The APC was funded by Politehnica University of Timișoara.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar] [CrossRef]

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning Research, Long Beach, CA, USA, 9–15 June 2019; Chaudhuri, K., Salakhutdinov, R., Eds.; PMLR: Cambridge, MA, USA, 2019; Volume 97, pp. 6105–6114. [Google Scholar]

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861. [Google Scholar]

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018. [Google Scholar] [CrossRef]

- Wang, J.; Mei, T.; Kong, B.; Wei, H. An approach of lane detection based on Inverse Perspective Mapping. In Proceedings of the 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014. [Google Scholar] [CrossRef]

- Guo, Y.; Chen, G.; Zhao, P.; Zhang, W.; Miao, J.; Wang, J.; Choe, T.E. Gen-LaneNet: A Generalized and Scalable Approach for 3D Lane Detection. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 666–681. [Google Scholar] [CrossRef]

- Chen, L.; Sima, C.; Li, Y.; Zheng, Z.; Xu, J.; Geng, X.; Li, H.; He, C.; Shi, J.; Qiao, Y.; et al. PersFormer: 3D Lane Detection via Perspective Transformer and the OpenLane Benchmark. In Proceedings of the Computer Vision—ECCV 2022, Tel Aviv, Israel, 23–27 October 2020; Springer Nature Switzerland: Cham, Switzerland, 2022; pp. 550–567. [Google Scholar] [CrossRef]

- Wang, H.; Li, T.; Li, Y.; Chen, L.; Sima, C.; Liu, Z.; Wang, B.; Jia, P.; Wang, Y.; Jiang, S.; et al. Openlane-v2: A topology reasoning benchmark for unified 3D HD mapping. Adv. Neural Inf. Process. Syst. 2024, 36, 18873–18884. [Google Scholar]

- Yan, F.; Nie, M.; Cai, X.; Han, J.; Xu, H.; Yang, Z.; Ye, C.; Fu, Y.; Mi, M.B.; Zhang, L. ONCE-3DLanes: Building Monocular 3D Lane Detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022. [Google Scholar] [CrossRef]

- Zhang, Q.; Zhu, Y.; Cordeiro, F.R.; Chen, Q. PSSCL: A progressive sample selection framework with contrastive loss designed for noisy labels. Pattern Recognit. 2025, 161, 111284. [Google Scholar] [CrossRef]

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 213–229. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Online, 3–7 May 2021. [Google Scholar]

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A Survey on Vision Transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 87–110. [Google Scholar] [CrossRef] [PubMed]

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. In Proceedings of the International Conference on Learning Representations, Online, 3–7 May 2021. [Google Scholar]

- Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.; et al. Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021. [Google Scholar] [CrossRef]

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252. [Google Scholar] [CrossRef]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV, Zurich, Switzerland, 6–12 September 2014; Springer International Publishing: Berlin/Heidelberg, Germany, 2014; pp. 740–755. [Google Scholar] [CrossRef]

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.u.; Polosukhin, I. Attention is All you Need. In Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2017; Volume 30. [Google Scholar]

- Hatamizadeh, A.; Kautz, J. Mambavision: A hybrid mamba-transformer vision backbone. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 25261–25270. [Google Scholar]

- Garnett, N.; Cohen, R.; Pe’er, T.; Lahav, R.; Levi, D. 3D-LaneNet: End-to-End 3D Multiple Lane Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019. [Google Scholar] [CrossRef]

- Jin, Y.; Ren, X.; Chen, F.; Zhang, W. Robust Monocular 3D Lane Detection with Dual Attention. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021. [Google Scholar] [CrossRef]

- Liu, R.; Chen, D.; Liu, T.; Xiong, Z.; Yuan, Z. Learning to Predict 3D Lane Shape and Camera Pose from a Single Image via Geometry Constraints. In Proceedings of the AAAI Conference on Artificial Intelligence, online, 22 February–1 March 2022; Association for the Advancement of Artificial Intelligence (AAAI): Washington, DC, USA, 2022; Volume 36, pp. 1765–1772. [Google Scholar] [CrossRef]

- Luo, Y.; Zheng, C.; Yan, X.; Kun, T.; Zheng, C.; Cui, S.; Li, Z. Latr: 3d lane detection from monocular images with transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 7941–7952. [Google Scholar]

- Gu, A.; Dao, T. Mamba: Linear-Time Sequence Modeling with Selective State Spaces. In Proceedings of the 1st Conference on Language Modeling, Philadelphia, PA, USA, 7–9 October 2024. [Google Scholar]

- Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision Mamba: Efficient visual representation learning with bidirectional state space model. In Proceedings of the 41st International Conference on Machine Learning (ICML’24), Vienna, Austria, 21–27 July 2024. [Google Scholar]

- Tang, H.; Li, Z.; Zhang, D.; He, S.; Tang, J. Divide-and-Conquer: Confluent Triple-Flow Network for RGB-T Salient Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 1958–1974. [Google Scholar] [CrossRef] [PubMed]

- Huang, S.; Shen, Z.; Huang, Z.; Ding, Z.h.; Dai, J.; Han, J.; Wang, N.; Liu, S. Anchor3dlane: Learning to regress 3D anchors for monocular 3D lane detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 17451–17460. [Google Scholar]

- Ai, J.; Ding, W.; Zhao, J.; Zhong, J. WS-3D-Lane: Weakly Supervised 3D Lane Detection With 2D Lane Labels, 2023. arXiv 2022, arXiv:2209.11523. [Google Scholar] [CrossRef]

- Pittner, M.; Janai, J.; Condurache, A.P. LaneCPP: Continuous 3D Lane Detection using Physical Priors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 10639–10648. [Google Scholar]

- Bai, Y.; Chen, Z.; Fu, Z.; Peng, L.; Liang, P.; Cheng, E. CurveFormer: 3D Lane Detection by Curve Propagation with Curve Queries and Attention. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 7062–7068. [Google Scholar] [CrossRef]

- Wang, R.; Qin, J.; Li, K.; Li, Y.; Cao, D.; Xu, J. Bev-lanedet: An efficient 3d lane detection based on virtual camera via key-points. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 1002–1011. [Google Scholar]

- Li, Z.; Han, C.; Ge, Z.; Yang, J.; Yu, E.; Wang, H.; Zhang, X.; Zhao, H. GroupLane: End-to-End 3D Lane Detection with Channel-wise Grouping. IEEE Robot. Autom. Lett. 2024, 9, 10487–10494. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).