Abstract

Optical coherence tomography (OCT) uses light to acquire high-resolution 3D images and is widely applied in fields such as ophthalmology and forensic science. A popular technique for visualizing the top (en face) view is to slice the volume with a flat horizontal plane or to apply statistical functions along the depth axis. However, when the target is a thin layer, strong reflections from other layers can interfere with it, rendering the flat-plane approach ineffective. We apply Otsu-based thresholding to extract the object’s foreground and then use least squares with Tikhonov regularization to fit a polynomial surface that describes the sample’s structural morphology. This surface is used to obtain a latent fingerprint image and its residues at different depths from a translucent tape, which cannot be analyzed with conventional en face OCT because of strong reflection from the diffusive surface; our method achieves a feature similarity index (FSIM) of 0.7020, compared with 0.6445 for the traditional en face image. The method is also compatible with other signal processing techniques, as demonstrated by an ink thickness measurement of a thermal-printed label that is confirmed by a microscopic image. Our approach enables OCT to observe targets embedded in samples of arbitrary posture and morphology and can be readily adapted to other optical imaging technologies.

1. Introduction

Optical coherence tomography (OCT) is a powerful imaging technique that employs light to capture detailed, non-invasive images of subsurface structures. With superior axial resolution, OCT has revolutionized 3D imaging in various fields, including ophthalmology [1,2,3], dentistry [4], and industrial applications such as precision component testing [5]. Moreover, OCT has found significant use in forensic science, particularly in identifying counterfeits [6,7], automotive paint trace inspection [8], and analyzing fingerprints [9,10,11], due to its ability to reveal details obscured under a surface without damaging the evidence.

A single OCT measurement retrieves the intensity profile along one spatial (depth) axis, which can be extended to volumetric structural information by scanning along two additional orthogonal directions. To display the internal structure, cross-sectional images can be obtained by slicing the OCT volume along the depth direction [12,13,14], whereas en face images are typically extracted by slicing horizontally at a specific depth [6] or by applying statistical functions (such as the average or maximum intensity) along the depth axis to form a global projection [15].

Traditional en face imaging uses a flat horizontal plane as a “knife” to slice the volume, so the sample must be manually flattened to obtain more information [16], which can cause contamination or damage. Moreover, when imaging non-planar samples such as tilted or curved ones (especially those with very thin layers), strong reflections from non-target layers obscure the target layer, resulting in low image contrast.

One possible solution is to disregard strongly reflecting layers and select only the target layers by “virtual flattening” via surface thresholding [6]; however, its potential for curved en face imaging has been largely overlooked in general OCT applications. Existing works are either designed specifically for ophthalmology [17,18], require a special lens design [19], or do not focus on visualizing en face images [20,21]. A comparison with the existing literature is given in Table A1.

In this study, we propose a method for extracting OCT en face images along arbitrary curved surfaces, with adaptive threshold selection and data processing capability. Our algorithm adaptively selects appropriate thresholds and then calculates the shape of the curved surface, while mitigating strong interference caused by reflection from highly reflective samples. To verify the feasibility of this method, we applied it to two challenging scenarios: (1) detecting thin latent fingerprints on a diffusive translucent tape and (2) measuring the ink thickness of a thermal-printed label (by image frequency analysis). Both are beyond the capabilities of traditional processing techniques because of the low contrast within an image plane and the thinness of the targets (only a few microns). Because our method can also be adapted to other optical imaging techniques, and to support rapid knowledge dissemination, we have made our data and code publicly available.

2. Experimental Setup

2.1. SD-OCT System

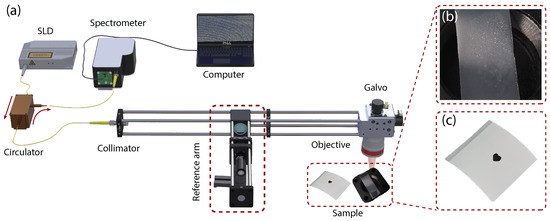

The spectral-domain optical coherence tomography (SD-OCT) system built in this study is shown in Figure 1a. The light source is a superluminescent light-emitting diode with a center wavelength of 850 nm, a bandwidth of 50 nm, and an output power of 15 mW. The axial resolution of the system is . The incident light is split into the sample arm and the reference arm by a beam splitter with a 90:10 splitting ratio to enhance the signal from the sample. A dispersion compensator with a focusing lens is incorporated in the reference arm to minimize the dispersion mismatch between the two arms. The interferogram formed by light backscattered from the sample arm and reflected from the reference arm is recorded with a spectrometer of 0.05 nm spectral resolution.
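For orientation, the coherence-limited axial resolution of an OCT system with a Gaussian source spectrum is commonly estimated as δz = (2 ln 2/π)·λ0²/Δλ in air. The short sketch below evaluates this textbook formula for the stated 850 nm center wavelength and 50 nm bandwidth. It assumes an ideal Gaussian spectrum and ignores dispersion and spectrometer effects, so it is an estimate and not a measurement of this system; the function name is hypothetical.

```python
import math


def axial_resolution_um(center_nm: float, bandwidth_nm: float, n: float = 1.0) -> float:
    """Coherence-limited axial resolution (FWHM) for a Gaussian source spectrum.

    delta_z = (2 ln 2 / pi) * lambda0**2 / (n * delta_lambda); n is the medium's refractive index.
    """
    dz_nm = (2.0 * math.log(2.0) / math.pi) * center_nm**2 / (n * bandwidth_nm)
    return dz_nm / 1000.0  # nm -> um


print(f"{axial_resolution_um(850, 50):.2f} um in air")  # prints "6.38 um in air"
```

Inside a medium with refractive index n, the same estimate shrinks by a factor of n.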

Figure 1.

Experimental setup: (a) equipment and samples, (b) translucent tape with latent fingerprint, and (c) a thermal-printed label.

2.2. OCT Volume

A three-dimensional OCT volume consists of two lateral axes (X and Y) and one axial axis, which in the raw data is spectral (frequency). Our OCT volume contains 1024 voxels along the X-axis, 256 voxels along the Y-axis, and 2048 frequency-domain voxels along the third orthogonal axis. To extract spatial information from the raw data, the source spectrum is estimated as the median of each X-frequency plane (i.e., the median over X). A Fourier transform with a Hann window (to minimize spectral leakage) is then applied along the source-subtracted frequency axis, yielding 1024 voxels of depth information; the other half is a mirror image, as expected for the Fourier transform of a real-valued signal, and is discarded. Multiple scan volumes can be combined to improve the signal-to-noise ratio by a factor of the square root of the number of scans (assuming uncorrelated noise).
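The reconstruction steps above can be sketched in NumPy as follows. This is a minimal illustration, not the authors’ released code: it assumes the raw volume is stored as an array of shape (Y, X, K) with the spectral axis last and already linear in wavenumber, and it omits wavelength-to-wavenumber resampling and numerical dispersion correction, which the text does not describe. The function name `spectra_to_depth` is hypothetical.

```python
import numpy as np


def spectra_to_depth(raw: np.ndarray) -> np.ndarray:
    """Convert raw SD-OCT spectra of shape (Y, X, K) into depth magnitudes of shape (Y, X, K // 2).

    Hypothetical helper: assumes the spectral axis is last and already linear in wavenumber.
    """
    raw = np.asarray(raw, dtype=np.float64)
    # Source spectrum: median over X within each X-frequency plane (one per Y position).
    source = np.median(raw, axis=1, keepdims=True)
    fringes = raw - source
    # Hann window along the spectral axis to reduce spectral leakage.
    fringes = fringes * np.hanning(raw.shape[-1])
    depth = np.fft.fft(fringes, axis=-1)
    # The FFT of a real-valued signal is conjugate-symmetric, so keep only the first half.
    return np.abs(depth[..., : raw.shape[-1] // 2])
```

Several co-registered depth volumes could then be combined, for example with `np.mean([spectra_to_depth(v) for v in volumes], axis=0)`. Note that the median background also removes any fringe that is identical across a whole B-scan, which is harmless when the surface depth varies along X.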

3. Curved Slicing Method

To automatically discover objects’ boundaries and layers, we extract the foreground voxels (by Otsu-based binarization), detect the object’s structural morphology (by curve fitting), and select the target layer (by a weighting technique). The resulting 3D surface is then ready for further analysis.

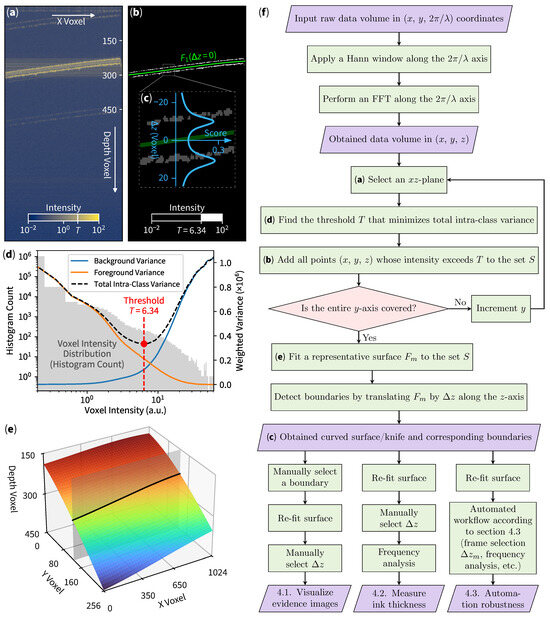

3.1. Foreground Voxels Extraction

The binarization process, based on Otsu’s method, separates the object of interest from the background. Otsu’s technique automatically determines an optimal threshold by maximizing the between-class variance of the background and foreground voxels; equivalently, the optimal threshold minimizes the weighted sum of the variances within the foreground and background groups. Once the threshold (marked T in Figure 2a) is found, each voxel’s intensity in the original slice is compared with it: voxels above the threshold are classified as foreground (regions of interest), and those below as background. This process (illustrated in Figure 2d) creates a binary mask for each slice (Figure 2b).
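A from-scratch NumPy sketch of Otsu’s threshold for one B-scan slice follows. It maximizes the between-class variance σ_b²(t) = (μ_T·ω0(t) − μ(t))² / (ω0(t)·ω1(t)) over histogram split points, where ω0 and ω1 are the background and foreground weights, μ(t) is the cumulative first moment up to bin t, and μ_T is the global mean. The 256-bin histogram and the helper names are illustrative assumptions; scikit-image’s `skimage.filters.threshold_otsu` is a library implementation of the same method.

```python
import numpy as np


def otsu_threshold(values: np.ndarray, bins: int = 256) -> float:
    """Otsu's threshold: maximize the between-class variance over histogram split points."""
    hist, edges = np.histogram(np.ravel(values), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    p = hist / hist.sum()                 # bin probabilities
    w0 = np.cumsum(p)                     # background weight if split after bin i
    w1 = 1.0 - w0                         # foreground weight
    mu = np.cumsum(p * centers)           # cumulative first moment up to bin i
    mu_t = mu[-1]                         # global mean intensity
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b2 = (mu_t * w0 - mu) ** 2 / (w0 * w1)
    sigma_b2 = np.nan_to_num(sigma_b2, nan=0.0, posinf=0.0, neginf=0.0)
    # Upper edge of the best background bin, so every background bin lies at or below it.
    return float(edges[np.argmax(sigma_b2) + 1])


def foreground_mask(slice_2d: np.ndarray) -> np.ndarray:
    """Binary mask of voxels brighter than the slice's Otsu threshold."""
    return slice_2d > otsu_threshold(slice_2d)
```

Returning the upper bin edge rather than the bin center keeps the mask consistent with the histogram split: no voxel from a background bin can end up above the threshold.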

Figure 2.

Curved slicing method. (a) Original B-scan (cross-sectional) slice with threshold indicated as T. (b) Image after binarization (white voxels) and surface fitting (green line). (c) Inset indicating boundary score for automated boundary detection. (d) Otsu’s thresholding operation, with slice voxel histogram in background (left axis) and variance calculations in foreground (right axis). (e) 3D surface indicating curved en face slice with the gray sheet as a cross-sectional B-scan used in (a,b). (f) Flow chart describing our method.

3.2. Structural Morphology Detection

Once the foreground voxels are identified through binarization, their locations are used to determine the object’s boundary. Curve fitting is performed on these 3D voxel coordinates to find the surface equation (polynomial, spline, or other) that best fits the boundary layers. For rough surfaces, periodic functions or even deep learning methods may be used to model the relatively complicated shape. In our case, a polynomial surface equation suffices:

with a fixed polynomial degree (chosen for the nature of our sample) used throughout this paper. To fit the surface, we select N representative voxels and find the polynomial coefficients that minimize the sum of squared depth residuals. To prevent overfitting, we apply Tikhonov regularization [22], which adds a squared-norm penalty on the coefficients weighted by a fixed regularization parameter, as implemented in the Scikit-learn library [23]. This step yields a surface that best represents the object’s structural morphology while suppressing shot noise and rejecting reflection artifacts. We then describe the fitted surface as
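The regularized fit can be sketched without external dependencies as below. It assumes a full bivariate polynomial z = Σ_{i+j≤d} a_ij·x^i·y^j and solves the Tikhonov (ridge) normal equations (AᵀA + αI′)a = Aᵀz, where I′ is the identity with the intercept entry set to zero so that the constant term is not penalized. The degree and α defaults are placeholders rather than the values used in this paper, and `poly_terms`/`fit_surface` are hypothetical names; in scikit-learn, `PolynomialFeatures` followed by `Ridge` plays the same role.

```python
import numpy as np


def poly_terms(x, y, degree):
    """Design matrix with columns x**i * y**j for all i + j <= degree (column 0 is the constant)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    cols = [x**i * y**j for i in range(degree + 1) for j in range(degree + 1 - i)]
    return np.stack(cols, axis=-1)


def fit_surface(x, y, z, degree=2, alpha=1e-3):
    """Ridge (Tikhonov) least-squares polynomial surface; returns a callable f(x, y) -> depth."""
    A = poly_terms(x, y, degree)
    penalty = alpha * np.eye(A.shape[1])
    penalty[0, 0] = 0.0  # do not penalize the intercept column
    coef = np.linalg.solve(A.T @ A + penalty, A.T @ np.asarray(z, dtype=np.float64))
    return lambda xq, yq: poly_terms(xq, yq, degree) @ coef
```

The returned callable evaluates the surface on arrays of any shape, such as a `np.meshgrid` of lateral indices. When coordinates run up to 1023, rescaling x and y (and applying the same scaling at evaluation) keeps the high-order columns well conditioned.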

where the label m indexes individual instances of the surface, each shifted up or down along the depth axis by a corresponding offset. The initially fitted surface (the green line in Figure 2b) corresponds to the unshifted instance. The full 3D representation of the surface in Figure 2e is drawn by iterating x and y over the full X and Y ranges of the volume.
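Once a surface is available, a curved en face image is obtained by reading one voxel per A-scan at the (shifted) surface depth. The sketch below assumes a volume indexed as (Y, X, Z) with depth last, a callable `surface(x, y)` that returns depths in voxels, and nearest-voxel sampling (interpolation would be a natural refinement). For comparison, it also shows the conventional flat average projection over a fixed depth range. Both function names are hypothetical.

```python
import numpy as np


def curved_en_face(volume, surface, offset=0.0):
    """Sample a (Y, X, Z) volume along z = surface(x, y) + offset using the nearest voxel."""
    ny, nx, nz = volume.shape
    yy, xx = np.meshgrid(np.arange(ny), np.arange(nx), indexing="ij")
    z = np.rint(surface(xx, yy) + offset).astype(int)
    z = np.clip(z, 0, nz - 1)  # keep indices inside the volume
    return np.take_along_axis(volume, z[..., None], axis=-1)[..., 0]


def flat_average_en_face(volume, z_start, z_stop):
    """Conventional en face projection: mean intensity over a fixed depth range."""
    return volume[..., z_start:z_stop].mean(axis=-1)
```

On a tilted sample, the flat projection mixes the target layer with every other layer in the depth window, whereas the curved slice follows the target layer voxel by voxel.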

3.3. Target Layer Selection

To automatically select a specific boundary layer, we apply the weighting technique used for outlier rejection [24] to obtain the boundary score

The key idea is that as the surface is shifted along the depth axis z, the distances between it and the points near it decrease, shrinking the denominator terms and thus increasing the sum of inverse distances. The added 1 keeps each term finite (at most 1) when a point lies exactly on the surface and also acts as a normalizing factor. As shown in Figure 2c, the boundary score (blue graph) rises whenever the green surface crosses a boundary because the distances decrease. The magnitude of the score can also serve as an approximation for selecting inlier points. For example, to select the second (bottom) layer for further analysis, we first move the green surface down the depth axis to the second boundary; the points representing that boundary (around 30% of the foreground points in the volume) are then the closest to the surface and raise the boundary score. We then discard the remaining 70% of the points as outliers and repeat the curve fitting. This second fit inherently eliminates noise from other layers owing to the outlier rejection.
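The boundary score and the inlier selection can be sketched as follows. The exact form of the score is not reproduced here; this version assumes one form consistent with the description, B(m) = Σ_n 1 / (1 + |z_n − (f(x_n, y_n) + m)|), where (x_n, y_n, z_n) are foreground voxels and m is a depth offset in voxels. The use of absolute distance, the 30% default, and the function names are assumptions.

```python
import numpy as np


def boundary_score(x, y, z, surface, offsets):
    """Sum of 1 / (1 + |distance|) between foreground voxels and each shifted surface."""
    base = surface(x, y)
    return np.array([np.sum(1.0 / (1.0 + np.abs(z - (base + m)))) for m in offsets])


def peak_offsets(offsets, scores):
    """Offsets at which the boundary score has a local maximum (candidate boundaries)."""
    s = np.asarray(scores)
    is_peak = (s[1:-1] > s[:-2]) & (s[1:-1] >= s[2:])
    return np.asarray(offsets)[1:-1][is_peak]


def closest_fraction(x, y, z, surface, offset, keep=0.3):
    """Keep the `keep` fraction of voxels closest to the shifted surface; the rest are outliers."""
    dist = np.abs(z - (surface(x, y) + offset))
    n_keep = max(1, int(round(keep * dist.size)))
    idx = np.argsort(dist)[:n_keep]
    return x[idx], y[idx], z[idx]
```

The retained points would then be passed back to the curve-fitting step to obtain the refined surface of the selected layer.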

4. Results and Discussions

We demonstrate (1) the capability of curved slicing by exploring a latent fingerprint (multi-layer surface extraction and visualization) and (2) its extensibility by integrating 2D image frequency analysis to measure ink thickness (image processing integrations).

4.1. Multi-Layer Surface Extraction and Visualization

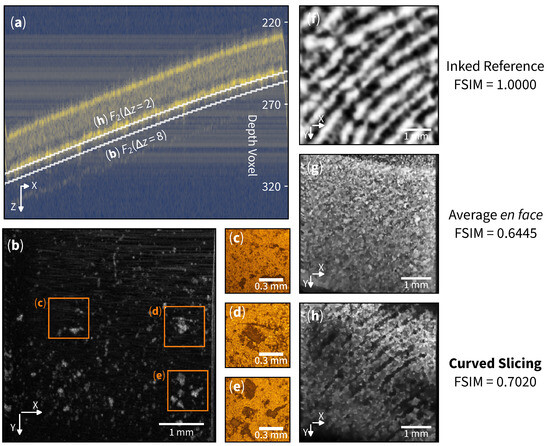

Curved en face slicing is a promising technique in forensic science, particularly for the non-invasive exploration of latent fingerprint evidence. We collected a fingerprint sample from a volunteer using the adhesive side of a translucent tape, followed by an OCT scan of the matte side, as depicted in Figure 1b. The resulting cross-sectional B-scan is shown in Figure 3a.

Figure 3.

Latent fingerprint residue detection. (a) Cross-section of translucent tape with bright yellow lines indicating tape boundaries. indicates the curved slicing function (selecting the second layer). (b) Curved en face slice at showing another layer of residue. (c–e) Microscopic images at each region. (f) Inked reference from fingerprint. (g) Traditional average en face from to . (h) Curved en face slice at showing a layer of resolvable latent fingerprint pattern. Images (b,f–h) are enhanced for clarity.

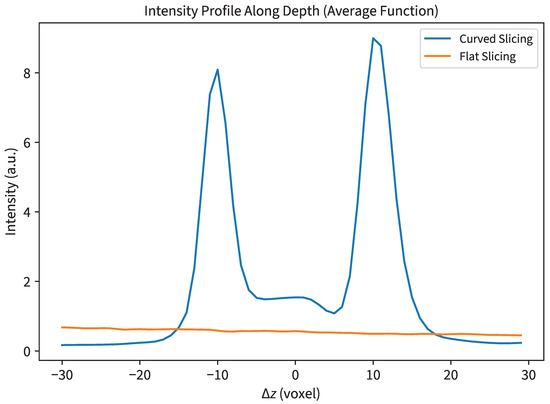

The average en face image traditionally used in biological sample analysis (Figure 3g) struggles to visualize sub-surface structures within this volume; a profile comparing curved and flat slicing is shown in Appendix A (Figure A1). This limitation arises because the image (signal) is overshadowed by strong material reflections (interference). Unlike biological samples with complex structures, in which each substructure typically exhibits high contrast (either high reflection or high absorption), the fingerprint information in this sample is confined to the superficial layers of the tape and has relatively low contrast.

The flexibility of curved slicing around the glue layer of the tape allows us to explore specific layers in detail. We first define a curved slice on the second boundary as . Then, slicing on layer reveals surface structures that exhibit latent fingerprint-like patterns (Figure 3h). The Feature Similarity Index (FSIM) [25] is used to evaluate structural similarity: compared with the inked reference (Figure 3f), the curved slice achieves an FSIM of 0.7020, versus 0.6445 for the traditional en face image.

Examining layer uncovers dust-like residues scattered across the surface (Figure 3b). These patterns are in good agreement with the microscopic images in Figure 3c–e. The evidence revealed by the curved slicing method can be crucial in forensic investigations, enabling detailed identification and analysis of foreign matter associated with a crime scene.

4.2. Image Processing Integrations

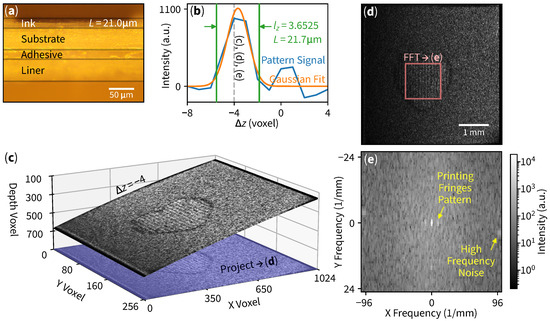

The interoperability of our curved slicing method with digital signal processing techniques enables ink thickness measurement. Our objective is to detect and count the layers within the material’s structure. We used OCT to scan the surface side of a thermal-printed label (Figure 1c); its cross-sectional microscopic image is shown in Figure 4a.

Figure 4.

Ink thickness measurement in a thermal-printed label. (a) Cross-sectional microscopic image of a printed label paper showing ink thickness . (b) Pattern signals at each slice. The of the Gaussian curve fit amplitude determines the layer count , which converts to ink thickness . (c) Three-dimensional curved slice at showing a heart pattern with its projection (or flattening). Images are enhanced for clarity. (d) Unprocessed en face at ; the middle square region is used to perform 2D Fourier transform. (e) A two-dimensional frequency-domain image with DC in the center. Arrows indicate a periodic printing pattern and high frequency noise. The subtraction of intensities results in a data point of the pattern signal on (b).

The processing workflow begins with curved slicing at each layer, which in this example is at (Figure 4c). The central region of the flattened curved slice reveals a periodic printing pattern in Figure 4d, which is then subjected to 2D FFT analysis as shown in Figure 4e.

The frequency-domain image shows three bright low-spatial-frequency spots near the center: one representing the DC offset and two symmetric spots corresponding to fringes from the thermal printing process. Areas close to the edge of the image exhibit high-frequency behavior (speckle noise). We subtract the high-frequency noise intensity from the printing-pattern spot intensity to obtain a single “pattern signal” data point, plotted on the blue line in Figure 4b (the current example slice is marked by a dashed line). Repeating this workflow of slicing, projection, and Fourier transform for every layer yields the full pattern signal.
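The per-slice pattern signal can be sketched as follows. The text does not specify how the spectral regions are delimited, so this version assumes circular regions in frequency space: an annulus just outside the DC term for the printing-pattern spots, and everything beyond a larger radius for high-frequency noise. The function name, the size of the central square region, and all radii are hypothetical.

```python
import numpy as np


def pattern_signal(en_face, half_size=32, dc_radius=2, low_radius=16, high_radius=28):
    """Printing-pattern peak minus high-frequency noise peak in the centered 2D spectrum.

    Radii are in frequency bins and are illustrative choices, not values from the paper.
    """
    cy, cx = en_face.shape[0] // 2, en_face.shape[1] // 2
    roi = en_face[cy - half_size:cy + half_size, cx - half_size:cx + half_size]
    # Remove the mean so the DC term does not dominate, then center the spectrum.
    spec = np.abs(np.fft.fftshift(np.fft.fft2(roi - roi.mean())))
    n = roi.shape[0]
    fy, fx = np.meshgrid(np.arange(n) - n // 2, np.arange(n) - n // 2, indexing="ij")
    r = np.hypot(fx, fy)
    sideband = spec[(r > dc_radius) & (r <= low_radius)].max()  # printing-pattern spots
    noise = spec[r >= high_radius].max()                        # speckle near the edges
    return float(sideband - noise)
```

Calling this function on the curved slice at every depth offset produces the pattern signal as a function of depth.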

The measurement starts with the raw signal in Figure 4b, to which a Gaussian curve is then fitted. A cutoff point (or representative signal width [26]) at of the Gaussian fit amplitude is used for layer counting. To convert voxel lengths into real-world lengths, we define physical coordinates by scaling the voxel coordinates along X, Y, and Z by 4.8828, 19.5312, and 4.2068 μm/voxel, respectively. Since the surface is tilted, the real thickness corresponds to the length projected along the normal of the local gradient plane. The vector that defines a gradient plane at point is defined by

with its corresponding unit vector . The layer thickness L is calculated by projecting layer count (in voxel) with basis onto vector , yielding

Substituting the refractive index of paper [27], voxels, and the other parameters into (4) results in a calculated thickness of . The thickness obtained with our curved slicing method is consistent with that of the cross-sectional microscopic image, demonstrating the high compatibility of our approach with other data processing techniques.
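One way to carry out the projection described above is sketched below under stated assumptions. The surface depth f(x, y) is in voxels, and the local normal in physical units is taken as n = (−(s_z/s_x)·∂f/∂x, −(s_z/s_y)·∂f/∂y, 1), with s_x, s_y, s_z the voxel sizes given above. The vertical run of layer voxels is converted to a perpendicular thickness by multiplying by the z component of the unit normal (the cosine of the tilt). It further assumes that the Z voxel size is an optical path length, so dividing by the refractive index gives a physical thickness. The refractive index is left as a parameter, the function names are hypothetical, and this is not necessarily the exact form of the paper’s equation.

```python
import numpy as np

VOXEL_UM = np.array([4.8828, 19.5312, 4.2068])  # X, Y, Z voxel sizes from the text (um/voxel)


def unit_normal(dfdx, dfdy, voxel_um=VOXEL_UM):
    """Unit normal of z = f(x, y) in physical units, given scalar gradients in voxel units."""
    sx, sy, sz = voxel_um
    n = np.array([-dfdx * sz / sx, -dfdy * sz / sy, 1.0])
    return n / np.linalg.norm(n)


def layer_thickness_um(layer_count, dfdx, dfdy, refractive_index, voxel_um=VOXEL_UM):
    """Project a vertical run of `layer_count` voxels onto the local surface normal."""
    depth_um = layer_count * voxel_um[2]             # vertical (optical) extent
    cos_tilt = unit_normal(dfdx, dfdy, voxel_um)[2]  # z component of the unit normal
    return depth_um * cos_tilt / refractive_index    # optical -> physical length
```

For a flat surface the cosine is 1 and the thickness reduces to the voxel count times the Z voxel size divided by the refractive index; any tilt shortens it.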

4.3. Automated Workflow Robustness Analysis

To assess the robustness of our curved slicing method, we constructed an automated ink thickness measurement workflow. We printed five samples and, for one randomly selected sample, obtained OCT volumes in six different orientations. The experiment is conducted as follows:

- 1.

- Obtain the OCT volume.

- 2.

- Perform curved slicing to obtain the surface equation and its corresponding boundary (according to the flowchart in Figure 2f).

- 3.

- For each slice, perform dimensionality reduction:

- 3.1.

- Apply 2D FFT to obtain the 2D frequency domain.

- 3.2.

- Reduce the dimension by applying maxpool to the frequency domain image.

- 3.3.

- Extract locations and intensities in the frequency domain.

- 3.4.

- Sort the information by intensity (as shown in Figure 5a, where each line represents each slice).

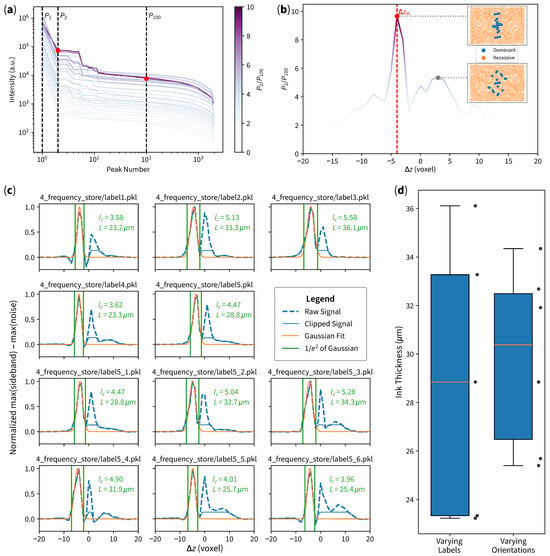

Figure 5. Automated ink thickness measurement results. (a) Intensity distribution sorted in descending order; each line represents each slice , and the color of each line represents the ratio of each slice. (b) Ratio according to each , where the maximum ratio corresponds to candidate slice . The top inset shows dominant and recessive frequency-domain data points of , where the points are aligned according to the inked pattern. The bottom inset shows frequency-domain data points of a non-candidate slice, where points are distributed in a random manner (with higher spatial frequency indicating possible noise). (c) Signal processing results of each case. (d) Box plot of measurement results, with standard deviations of for different labels and for different orientations.

Figure 5. Automated ink thickness measurement results. (a) Intensity distribution sorted in descending order; each line represents each slice , and the color of each line represents the ratio of each slice. (b) Ratio according to each , where the maximum ratio corresponds to candidate slice . The top inset shows dominant and recessive frequency-domain data points of , where the points are aligned according to the inked pattern. The bottom inset shows frequency-domain data points of a non-candidate slice, where points are distributed in a random manner (with higher spatial frequency indicating possible noise). (c) Signal processing results of each case. (d) Box plot of measurement results, with standard deviations of for different labels and for different orientations.

- 4.

- Find the candidate slice which has the largest ratio, where is the second-largest intensity (probably the sideband, shown in Figure 5a as the middle dashed line), and is the 100th-largest intensity (probably noise, shown in Figure 5a as the right-most dashed line). The ratios of all slices are separately plotted as shown in Figure 5b for visualization purposes.

- 5.

- In the candidate slice , divide frequency-domain data points into two sets: the 23 largest intensities as dominant locations and the rest as recessive locations (shown as the top inset in Figure 5b).

- 6.

- For each :

- 6.1.

- The largest intensity near dominant locations of is considered as the sideband.

- 6.2.

- The largest intensity near recessive locations of is considered as noise.

- 6.3.

- Signal is considered as sideband subtract noise. Refer to the blue dashed line plotted in Figure 5c.

- 7.

- Perform the initial Gaussian fit to the signal.

- 8.

- Clip the signal at the right side ( of Gaussian) for curve-fitting stability. Refer to the blue solid line plotted in Figure 5c.

- 9.

- Perform the second Gaussian fit to the clipped signal. Refer to the orange line of plots in Figure 5c

- 10.

- Calculate the ink thickness according to (5).
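Steps 3 and 4 of the workflow can be sketched as follows. The second-largest and 100th-largest intensities follow the text; the pooling size, the helper names, and the reading that the largest value is usually the DC term are assumptions made for illustration.

```python
import numpy as np


def max_pool(img, k):
    """Non-overlapping k x k max pooling (trailing rows/columns that do not fit are dropped)."""
    h, w = (img.shape[0] // k) * k, (img.shape[1] // k) * k
    return img[:h, :w].reshape(h // k, k, w // k, k).max(axis=(1, 3))


def slice_ratio(en_face, pool=2):
    """Second-largest over 100th-largest pooled spectral magnitude for one slice."""
    spec = np.abs(np.fft.fftshift(np.fft.fft2(en_face)))
    values = np.sort(max_pool(spec, pool).ravel())[::-1]  # descending intensities
    return values[1] / values[99]


def candidate_slice(slices, pool=2):
    """Index of the slice with the largest ratio, plus the ratios of all slices."""
    ratios = np.array([slice_ratio(s, pool) for s in slices])
    return int(np.argmax(ratios)), ratios
```

A slice that contains the periodic printing pattern has a strong sideband well above the noise floor, so its ratio stands out, while slices containing only speckle give ratios close to 1.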

The results are shown in Figure 5: panel (c) shows the individual measurement results (raw signal, clipped signal, and Gaussian fit), and panel (d) shows a box plot for both varying labels and varying orientations.
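Steps 7 to 9 can be sketched as below. To stay dependency-free, each Gaussian fit uses Caruana’s method (a least-squares parabola fitted to the logarithm of the points above a floor fraction of the maximum), swapped in here for a general nonlinear least-squares fit such as `scipy.optimize.curve_fit`. The clip fraction and the width cutoff fraction are hypothetical parameters because their values are not reproduced in this text, and the function names are illustrative. The returned width in voxels plays the role of the layer count in the thickness calculation.

```python
import numpy as np


def fit_gaussian(z, s, floor=0.2):
    """Caruana-style Gaussian fit: least-squares parabola on log(s) for points above floor * max(s).

    Returns (amplitude, center, sigma).
    """
    z = np.asarray(z, dtype=np.float64)
    s = np.asarray(s, dtype=np.float64)
    use = s > floor * s.max()
    c2, c1, c0 = np.polyfit(z[use], np.log(s[use]), 2)
    if c2 >= 0:
        raise ValueError("log-signal is not concave; no Gaussian peak found")
    sigma = np.sqrt(-1.0 / (2.0 * c2))
    center = -c1 / (2.0 * c2)
    amplitude = np.exp(c0 - c1**2 / (4.0 * c2))
    return amplitude, center, sigma


def two_pass_layer_count(z, s, clip_fraction, cutoff_fraction):
    """Initial fit, right-side clip, second fit, then full width at cutoff_fraction * amplitude."""
    z = np.asarray(z, dtype=np.float64)
    s = np.asarray(s, dtype=np.float64)
    _, mu, sigma = fit_gaussian(z, s)
    # Clip to the right of the point where the first fit falls to clip_fraction of its peak.
    z_clip = mu + sigma * np.sqrt(-2.0 * np.log(clip_fraction))
    keep = z <= z_clip
    _, mu, sigma = fit_gaussian(z[keep], s[keep])
    # Width (in voxels) over which the second fit exceeds cutoff_fraction of its amplitude.
    width = 2.0 * sigma * np.sqrt(-2.0 * np.log(cutoff_fraction))
    return width, mu
```

Clipping the right-hand tail before the second fit limits the influence of signal from deeper, non-ink layers on the fitted peak.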

The candidate slice obtained by our workflow is very stable, being either or . After the initial Gaussian fit, signal clipping, and second Gaussian fit, the peak of the second Gaussian curve lies at voxel, which is also stable despite the changing samples and orientations. The consistency of the peak locations and signal characteristics confirms the robustness of our method. The standard deviation across different labels is , whereas that across different orientations of the same label is , indicating that the method is more sensitive to label-to-label variation than to orientation.

The consistent results across samples and orientations indicate that our method is robust to samples with different 3D curvature that may exhibit different random noise. The surface re-fitting and candidate slice selection workflow ensures effective noise rejection as long as the surface can be described by a surface equation. Although some surface equations can be numerically unstable, we did not observe any overfitting in our experiments. With a robust automated workflow, our technique could also be extended to other imaging technologies that require 3D data processing, such as 3D X-ray and CT scans [28].

5. Conclusions

In this paper, we have shown that curved en face slicing is a powerful technique for analyzing samples with complex surface structures. Adaptive threshold selection based on Otsu’s method is used for foreground selection, which serves as the basis for polynomial surface fitting (with Tikhonov regularization to prevent overfitting). A boundary score enables automated boundary selection, after which the curve-fitting process is repeated for each boundary (the boundaries differ slightly). The resulting “curved knife” slices through each depth layer to extract en face images, which we used to (1) reveal a latent fingerprint and its residues at different depths under a translucent tape, (2) integrate data processing techniques (2D Fourier transform and Gaussian curve fitting) for ink thickness measurement of a thermal-printed label, and (3) automate the ink thickness measurement workflow. Future work may include complex surface modeling with generative adversarial networks for applications that require high precision in handling multilayer structures.

Our approach demonstrates versatility and potential for wide-ranging applications. For example, it could be used to detect counterfeit banknotes (through thickness measurement or pattern inspection), to inspect surface damage in biological samples by curved slicing, and to conduct quality inspections, all without destroying the evidence, specimens, or samples. With an automated workflow, curved slicing can be applied to many applications that require 3D volume processing. Together with the provided data and code, we anticipate that this work will support further research and practical applications.

Author Contributions

Conceptualization, M.L., P.L. and L.S.; formal analysis, P.L. and M.L.; investigation, M.L., P.L. and Y.L. (Yueyang Liu); methodology, P.L. and M.L.; resources, L.S. and Y.L. (Yang Li); software, P.L., X.L. and M.L.; validation, M.L., P.L. and Y.L. (Yueyang Liu); visualization, P.L., M.L. and Y.L. (Yueyang Liu); writing—original draft, M.L., P.L.; writing—review and editing, M.L., P.L., Y.L. (Yueyang Liu), Y.L. (Yang Li) and L.S.; supervision, L.S. and Y.L. (Yang Li). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original data and code presented in the study are openly available in FigShare at http://dx.doi.org/10.6084/m9.figshare.28645661, accessed on 3 May 2025.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| OCT | Optical coherence tomography |
| SD-OCT | Spectral-domain optical coherence tomography |

Appendix A

Table A1.

Comparison of surface-flattening algorithms from existing studies.


| Criteria | RTS a | FMC b | MAI c | LED d | HSL e | This Work |

|---|---|---|---|---|---|---|

| Good contrast and sharpness of images | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

| Designed for different samples | ✓ | ✓ | ||||

| Discovery of multiple boundaries | ✓ | ✓ | ✓ | |||

| Slicing/flattening methodology | SW 1 | SW 1 | SW 1 | SW 1 | HW 2 | SW 1 |

| Focusing on obtaining en face images | ✓ | ✓ |

a Regularized thin-plate spline [20]. b Flat mirror calibration [21]. c Maximum A-scan intensity [17]. d Laplace edge detection [18]. e A hypercentric scanning lens with self-reference [19]. 1 Software-based flattening or slicing. 2 Hardware-based flattening or slicing.

Figure A1.

Average profile along the depth axis comparing curved slicing and flat slicing for the multi-layer sample in Section 4.1.

References

1. Dhalla, A.H.; Nankivil, D.; Bustamante, T.; Kuo, A.; Izatt, J.A. Simultaneous swept source optical coherence tomography of the anterior segment and retina using coherence revival. Opt. Lett. 2012, 37, 1883–1885.

2. Ngo, L.; Cha, J.; Han, J.H. Deep Neural Network Regression for Automated Retinal Layer Segmentation in Optical Coherence Tomography Images. IEEE Trans. Image Process. 2020, 29, 303–312.

3. Han, S.B.; Liu, Y.C.; Noriega, K.M.; Mehta, J.S. Applications of Anterior Segment Optical Coherence Tomography in Cornea and Ocular Surface Diseases. J. Ophthalmol. 2016, 2016, 4971572.

4. Luong, M.N.; Shimada, Y.; Araki, K.; Yoshiyama, M.; Tagami, J.; Sadr, A. Diagnosis of Occlusal Caries with Dynamic Slicing of 3D Optical Coherence Tomography Images. Sensors 2020, 20, 1659.

5. Wu, X.; Gao, W.; He, Y.; Liu, H. Quantitative measurement of subsurface damage with self-referenced spectral domain optical coherence tomography. Opt. Mater. Express 2017, 7, 3919–3933.

6. Marques, M.J.; Green, R.; King, R.; Clement, S.; Hallett, P.; Podoleanu, A. Sub-surface characterisation of latest-generation identification documents using optical coherence tomography. Sci. Justice 2021, 61, 119–129.

7. Zhang, N.; Jiang, P.; Wang, W.; Wang, C.; Xie, L.; Li, Z.; Huang, W.; Shi, G.; Wang, L.; Yan, Y.; et al. Initial Study for the Determination of the Sequence of Intersecting Lines between Gel Pens and Seals by Optical Coherence Tomography*. J. Forensic Sci. 2020, 65, 2071–2079.

8. Zhang, N.; Wang, C.; Sun, Z.; Mei, H.; Huang, W.; Xu, L.; Xie, L.; Guo, J.; Yan, Y.; Li, Z.; et al. Characterization of automotive paint by optical coherence tomography. Forensic Sci. Int. 2016, 266, 239–244.

9. He, B.; Shi, Y.; Sun, Z.; Li, X.; Hu, X.; Wang, L.; Xie, L.; Yan, Y.; Li, Z.; Li, Z.; et al. Rapid, autonomous and ultra-large-area detection of latent fingerprints using object-driven optical coherence tomography. Opt. Express 2024, 32, 31090.

10. Zhang, N.; Wang, C.; Sun, Z.; Li, Z.; Xie, L.; Yan, Y.; Xu, L.; Guo, J.; Huang, W.; Li, Z.; et al. Detection of latent fingerprint hidden beneath adhesive tape by optical coherence tomography. Forensic Sci. Int. 2018, 287, 81–87.

11. Strąkowski, M.R.; Strąkowska, P.; Pluciński, J. Latent fingerprint imaging by spectroscopic optical coherence tomography. Opt. Lasers Eng. 2023, 167, 107622.

12. Margolis, R.; Spaide, R.F. A Pilot Study of Enhanced Depth Imaging Optical Coherence Tomography of the Choroid in Normal Eyes. Am. J. Ophthalmol. 2009, 147, 811–815.

13. Li, A.; Cheng, J.; Yow, A.P.; Wall, C.; Wong, D.W.K.; Tey, H.L.; Liu, J. Epidermal segmentation in high-definition optical coherence tomography. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 3045–3048.

14. Weissman, J.; Hancewicz, T.; Kaplan, P. Optical coherence tomography of skin for measurement of epidermal thickness by shapelet-based image analysis. Opt. Express 2004, 12, 5760.

15. Kashani, A.H.; Chen, C.L.; Gahm, J.K.; Zheng, F.; Richter, G.M.; Rosenfeld, P.J.; Shi, Y.; Wang, R.K. Optical coherence tomography angiography: A comprehensive review of current methods and clinical applications. Prog. Retin. Eye Res. 2017, 60, 66–100.

16. Yu, X.; Xiong, Q.; Luo, Y.; Wang, N.; Wang, L.; Tey, H.L.; Liu, L. Contrast Enhanced Subsurface Fingerprint Detection Using High-Speed Optical Coherence Tomography. IEEE Photonics Technol. Lett. 2017, 29, 70–73.

17. Auksorius, E.; Borycki, D.; Stremplewski, P.; Liżewski, K.; Tomczewski, S.; Niedźwiedziuk, P.; Sikorski, B.L.; Wojtkowski, M. In vivo imaging of the human cornea with high-speed and high-resolution Fourier-domain full-field optical coherence tomography. Biomed. Opt. Express 2020, 11, 2849.

18. Jones, C.K.; Li, B.; Wu, J.H.; Nakaguchi, T.; Xuan, P.; Liu, T.Y.A. Comparative analysis of alignment algorithms for macular optical coherence tomography imaging. Int. J. Retin. Vitr. 2023, 9, 60.

19. Ruggeri, M.; Giuffrida, F.P.; Vy Truong, N.L.; Parel, J.M.; Cabot, F.; Shousha, M.A.; Manns, F.; Ho, A. Wide-field self-referenced optical coherence tomography imaging of the corneal microlayers. Opt. Lett. 2025, 50, 1204.

20. Garvin, M.; Abramoff, M.; Wu, X.; Russell, S.; Burns, T.; Sonka, M. Automated 3-D Intraretinal Layer Segmentation of Macular Spectral-Domain Optical Coherence Tomography Images. IEEE Trans. Med. Imaging 2009, 28, 1436–1447.

21. Van der Jeught, S.; Buytaert, J.A.N.; Bradu, A.; Podoleanu, A.G.; Dirckx, J.J.J. Real-time correction of geometric distortion artefacts in large-volume optical coherence tomography. Meas. Sci. Technol. 2013, 24, 057001.

22. Rifkin, R.M.; Lippert, R.A. Notes on Regularized Least Squares; Technical report; Massachusetts Institute of Technology: Cambridge, MA, USA, 2007.

23. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

24. Farin, G.E.; Hansford, D. Mathematical Principles for Scientific Computing and Visualization; A K Peters: Wellesley, MA, USA, 2008.

25. Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A Feature Similarity Index for Image Quality Assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

26. Dickson, L.D. Characteristics of a Propagating Gaussian Beam. Appl. Opt. 1970, 9, 1854.

27. Fabritius, T.; Saarela, J.; Myllyla, R. Determination of the refractive index of paper with clearing agents. Proc. SPIE 2006, 6053, 60530X.

28. Paluszny, M.; Ríos, D. Retrieving 3D medical data along fitted curved slices and their display. BMC Med. Inform. Decis. Mak. 2020, 20, 23.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).