Abstract

With the advent of the Internet of Things (IoT), large volumes of sensitive data are produced from IoT devices, driving the adoption of Machine Learning as a Service (MLaaS) to overcome their limited computational resources. However, as privacy concerns in MLaaS grow, the demand for Privacy-Preserving Machine Learning (PPML) has increased. Fully Homomorphic Encryption (FHE) offers a promising solution by enabling computations on encrypted data without exposing the raw data. However, FHE-based neural network inference suffers from substantial overhead due to expensive primitive operations, such as ciphertext rotation and bootstrapping. While previous research has primarily focused on optimizing the efficiency of these computations, our work takes a different approach by concentrating on the rotation keyset design, a pre-generated data structure prepared before execution. We systematically explore three key design spaces (KDS) that influence rotation keyset design and propose an optimized keyset that reduces both computational overhead and memory consumption. To demonstrate the effectiveness of our new KDS design, we present two case studies that achieve up to 11.29× memory reduction and 1.67–2.55× speedup, highlighting the benefits of our optimized keyset.

1. Introduction

Smart devices and sensors are now used in various fields such as the Internet of Things (IoT). Being very close to our daily lives, they generate vast amounts of data and process the data to provide meaningful results for diverse applications. For instance, wearable devices detect human behavior and collect privacy-sensitive health-related data, such as electrocardiograms (ECGs) [1,2] and blood pressure [3,4,5].

Driven by advances in AI, the analysis of such data has continually developed and evolved. Table 1 presents prior works demonstrating AI models trained on datasets that could be acquired through various sensors embedded in wearable or mobile devices. In general, the larger the AI model, the higher the quality (accuracy) of the AI service [6,7,8]. Such large models require larger amounts of memory to store codes, parameters, and hyperparameters. However, conventional smart devices typically have limited resources, which makes it infeasible to implement and run the full inference computation model on them [9,10].

Table 1.

Examples of AI models in the literature using datasets collected via sensors.

To address such concerns, AI service providers typically perform inference tasks on remote servers, a paradigm known as Machine Learning as a Service (MLaaS). Specifically, the user sends a query containing input data to the server, which performs inference and returns the result to the user’s device. However, this practice raises another issue related to the privacy of the users’ sensitive personal data [41,42,43,44,45]. A somewhat contradictory characteristic of modern AI services is that, as they become more deeply integrated into our daily lives, they increasingly rely on highly sensitive and private user data as input. Although such data are typically transmitted in encrypted form, they are often processed in raw and unencrypted form at the remote server. This creates a serious risk—unauthorized access or data leakage during processing can result in serious privacy violations, raising significant ethical and security concerns [46,47,48,49,50]. Although regulations such as the General Data Protection Regulation (GDPR) have been enacted to address privacy issues, they do not alter the following fundamental limitation: the need to decrypt data during processing.

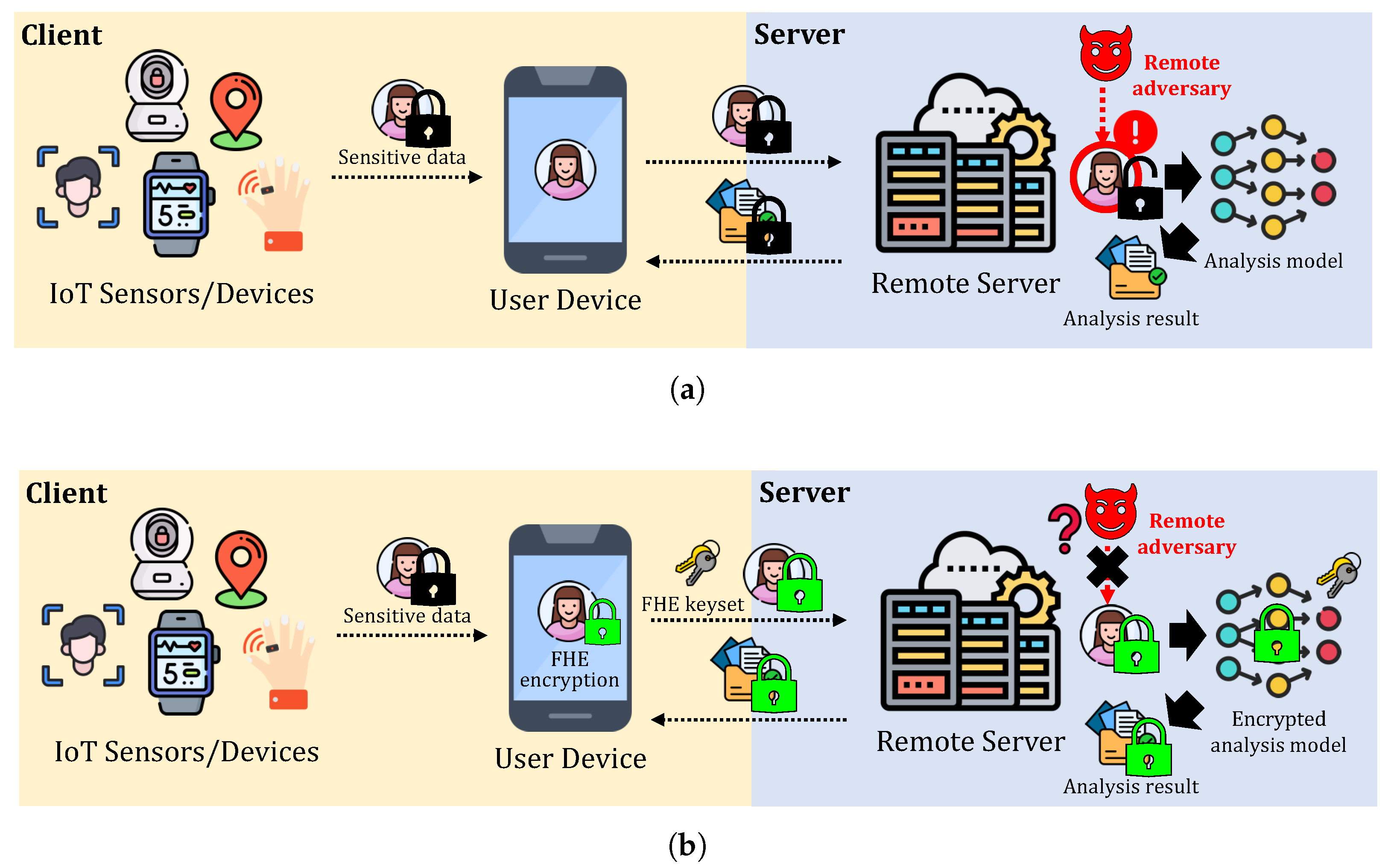

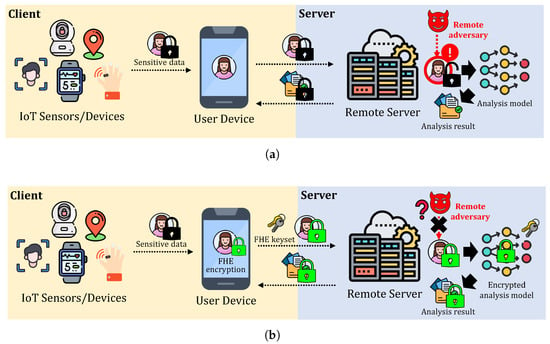

Privacy-Preserving Machine Learning (PPML) has emerged as a critical research area to address these challenges, aiming to enable secure model inference while preventing the exposure of sensitive data. Among the various cryptographic techniques proposed for PPML, Fully Homomorphic Encryption (FHE) is one of the most promising and widely researched. FHE allows computations to be performed directly on encrypted data, ensuring that sensitive information remains secure throughout the entire computation process. Figure 1 illustrates MLaaS with and without FHE: FHE-encrypted MLaaS protects the data throughout the entire remote inference, including the results, whereas conventional MLaaS exposes sensitive data to the server. However, this enhanced security comes at the cost of increased computational overhead. FHE ciphertexts are typically represented as high-dimensional polynomial structures, leading to significant memory and computational requirements. In addition to basic arithmetic operations such as addition and multiplication, FHE computations involve expensive primitives, including relinearization, rotation, and bootstrapping, all of which contribute to considerable performance degradation compared to unencrypted computations. As a result, improving the efficiency of FHE-based machine learning has been a key challenge in the deployment of practical PPML solutions.

Figure 1.

Comparison of two-party MLaaS to a remote server using data from IoT sensors/devices. The black lock represents data encryption using conventional encryption schemes (e.g., AES), while the green lock represents encryption with the FHE. (a): MLaaS with conventional encryption, where both sensitive data and analysis results are exposed to the server due to the lack of support for computations on encrypted data. (b): MLaaS with FHE, where sensitive data remains protected throughout the entire analysis process. Note that the transmitted FHE rotation keyset does not include a secret key.

While prior works [51,52,53,54,55] have significantly contributed to improving FHE efficiency, the configuration of the FHE keyset directly affects both performance and memory consumption but has received little attention in prior research. This paper presents a comprehensive analysis of the FHE keyset design space (KDS) and introduces novel methodologies to optimize keysets tailored to specific applications. An FHE keyset typically consists of public keys, relinearization keys, and rotation keys, and it also includes bootstrapping keys if bootstrapping is used. These keys are pre-generated on the client side and transmitted to the server before execution, where key assignment can be tailored dynamically. For instance, a rotation by index 7 can be computed either directly by using the rotation key for index 7 or indirectly by decomposing 7 into 4 + 2 + 1 and applying three separate rotations using the corresponding keys. Despite its significance, existing studies have largely treated the keyset as a fixed control variable rather than an optimizable component. In contrast, our work identifies the keyset’s design as a manipulable variable that can be systematically optimized to improve both execution speed and memory efficiency.

Treating the keyset design as a manipulable variable, we observe that a keyset can be designed with three parameters: rotation index, ciphertext level, and the number of temporal primes, α (we provide details of their specifications in the following sections). Although some prior works have suggested the possibility of modified keysets, they typically consider only one or two of these three factors. Moreover, they lack application awareness, focusing only on shallow analyses involving single multiplications or rotations without considering end-to-end inference workloads. A key novelty of our work is the introduction of a path-finding methodology for discovering optimal keysets tailored to a specific neural network architecture. Our exploration of the keyset design space (KDS) encompasses all three parameters in a comprehensive way. To demonstrate the practical utility of our approach, we consider PPML as the target application, designing different keysets for different neural networks. Based on our experiments, we propose several keyset designs that differ from the customary ones, resulting in better performance or lower memory consumption. The results suggest that our keyset design approach achieves 1.67–2.55× faster inference compared to previous approaches while reducing memory consumption by up to 11.29×.

The remainder of this paper is organized as follows. Section 2 provides the essential preliminaries for understanding this work. Section 3 presents the two key observations that motivate it. Section 4 discusses related research. Section 5 introduces the three factors of the keyset design space in detail and proposes our approach, which aims to minimize both latency and memory consumption. Section 6 presents the experimental results, and Section 7 covers additional aspects and the broader implications of our work. Finally, Section 8 concludes the paper.

2. Background

This section presents the essential theoretical background of the RNS-CKKS scheme that is used throughout our study. Readers seeking more details about RNS-CKKS or FHE are referred to [56,57].

2.1. RNS-CKKS

The CKKS scheme [56,57] is a homomorphic encryption scheme designed for computation over real and complex numbers, allowing arithmetic operations to be performed directly on encrypted data. It encodes vectors of real or complex numbers into plaintext polynomials by the inverse of the canonical embedding with a scaling factor $\Delta$, which returns a plaintext polynomial $m(X)$ for a given vector $\mathbf{z}$. The plaintext space is defined as a polynomial ring $R_Q = \mathbb{Z}_Q[X]/(X^N + 1)$, where N is a power of two, determining the degree of the polynomial, and Q is a ciphertext modulus that influences both precision and security. To improve computational efficiency, RNS-CKKS utilizes the Residue Number System (RNS). In RNS-CKKS, the ciphertext modulus Q is decomposed into a product of smaller primes based on the Chinese Remainder Theorem (CRT), which is defined as $Q = \prod_{i=0}^{L} q_i$. This decomposition enables parallel computation and eliminates expensive large-integer arithmetic. RNS-CKKS supports arithmetic operations directly on ciphertexts, including addition and multiplication. The addition is performed element-wise between two ciphertexts $\mathsf{ct}_1$ and $\mathsf{ct}_2$, and their sum is computed as $\mathsf{ct}_{\mathrm{add}} = \mathsf{ct}_1 + \mathsf{ct}_2$. The addition does not significantly increase noise, making it inexpensive. Multiplication is also element-wise: for two given ciphertexts $\mathsf{ct}_1$ and $\mathsf{ct}_2$, their product is defined as $\mathsf{ct}_{\mathrm{mult}} = \mathsf{ct}_1 \cdot \mathsf{ct}_2$. The other types of operation RNS-CKKS supports are rescaling and rotation. Unlike addition, multiplication increases noise and squares the scale factor ($\Delta^2$), requiring rescaling to restore the scale factor ($\Delta$). Rescaling prevents excessive scale growth by dividing the ciphertext coefficients by a prime modulus, $q_l$. Rescaling ensures numerical stability and prevents overflow. Rotation is another crucial operation that requires a special pre-computed rotation key, which will be detailed in the following sections.
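The benefit of the RNS representation described above can be illustrated with plain integers. The following is a minimal sketch, not an FHE implementation: the three toy moduli and the helper names (`to_rns`, `from_rns`, `rns_add`, `rns_mul`) are assumptions chosen for illustration, and real RNS-CKKS applies the same idea to polynomial coefficients with much larger primes.

```python
from math import prod


def to_rns(x, primes):
    """Represent integer x by its residues modulo each prime."""
    return [x % q for q in primes]


def from_rns(residues, primes):
    """Recombine residues into an integer modulo Q using the CRT."""
    big_q = prod(primes)
    total = 0
    for r, q in zip(residues, primes):
        q_hat = big_q // q  # product of all the other primes
        total += r * q_hat * pow(q_hat, -1, q)  # pow(.., -1, q) is the modular inverse
    return total % big_q


def rns_add(ra, rb, primes):
    """Add two RNS values limb by limb; limbs never interact."""
    return [(x + y) % q for x, y, q in zip(ra, rb, primes)]


def rns_mul(ra, rb, primes):
    """Multiply two RNS values limb by limb."""
    return [(x * y) % q for x, y, q in zip(ra, rb, primes)]


# Toy moduli (assumed for illustration only).
PRIMES = [97, 101, 103]
Q = prod(PRIMES)

a, b = 123456, 654321
ra, rb = to_rns(a, PRIMES), to_rns(b, PRIMES)
print(from_rns(rns_add(ra, rb, PRIMES), PRIMES) == (a + b) % Q)
print(from_rns(rns_mul(ra, rb, PRIMES), PRIMES) == (a * b) % Q)
```

Because each limb is processed independently, the per-prime loops can run in parallel, which is the property RNS-CKKS exploits.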

2.2. Ciphertext Level and Bootstrapping

As previously mentioned, the ciphertext modulus is expressed as $Q_l = q_0 \cdot \prod_{i=1}^{l} q_i$, where $q_0$ is the base modulus, and $q_i$ denotes the prime moduli forming the RNS representation [57]. Here, the parameter l represents the ciphertext level, which indicates how many homomorphic multiplications can still be performed. When homomorphic multiplications are performed, the ciphertext level must be reduced to keep the ciphertext scale constant as $\Delta$. This process, known as rescale, reduces the ciphertext modulus Q by removing its last prime factor. After each multiplication, the ciphertext modulus is updated as $Q_{l-1} = Q_l / q_l$. Since ciphertexts in RNS-CKKS have a fixed scaling factor $\Delta$, rescaling reduces the scale by dividing by $q_l$, $\Delta^2 / q_l \approx \Delta$, and the ciphertext level is reduced from l to $l - 1$.
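The level bookkeeping described above can be traced with a small numeric sketch. It tracks only the scale and the level, not an actual ciphertext; the toy primes close to $\Delta = 2^{10}$ and the function names are assumptions made for illustration.

```python
from math import prod

DELTA = 2 ** 10                      # toy scaling factor (assumed)
PRIMES = [1021, 1019, 1031, 1033]    # toy q_0 .. q_3, each close to DELTA


def modulus_at_level(level):
    """Q_l = q_0 * q_1 * ... * q_l."""
    return prod(PRIMES[: level + 1])


def multiply_then_rescale(scale, level):
    """Model one multiplication followed by rescale.

    Multiplication squares the scale; rescale divides by q_l and
    drops the level by one, removing q_l from the modulus chain.
    """
    if level == 0:
        raise ValueError("no levels left; bootstrapping would be required")
    squared_scale = scale * scale
    return squared_scale / PRIMES[level], level - 1


scale, level = float(DELTA), len(PRIMES) - 1
new_scale, new_level = multiply_then_rescale(scale, level)
print(new_level, round(new_scale, 2))
print(modulus_at_level(level) // PRIMES[level] == modulus_at_level(new_level))
```

The rescaled scale lands close to, but not exactly at, $\Delta$ because $q_l$ only approximates $\Delta$.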

Once a ciphertext reaches the lowest level, its exhausted levels must be recovered before further multiplications can be performed. Bootstrapping [51,58] enables this recovery by performing homomorphic operations in four steps: slotToCoeff, modRaise, modReduction, and coeffToSlot. Bootstrapping begins with slotToCoeff at the lowest level of a ciphertext, which homomorphically performs CKKS decoding operations (i.e., evaluation of the DFT matrix), including several rotations and multiplications. When modRaise raises the ciphertext modulus, the encrypted space is converted, so the message cannot be decrypted correctly. Thus, homomorphic modular reduction is evaluated to obtain a properly encrypted message. Finally, coeffToSlot homomorphically performs CKKS encoding (i.e., evaluation of the iDFT matrix), including rotations and multiplications.

2.3. Key Switching

Key switching is a fundamental operation in RNS-CKKS that transforms ciphertexts into an equivalent form under a different secret key. This process is necessary for rotation operations to enable ciphertext slot manipulations and for relinearization operations to ensure that the ciphertext remains decryptable after multiplication under the same secret key. Key switching allows a ciphertext encrypted under a secret key $s$ to be transformed into an equivalent ciphertext under a secret key $s'$, ensuring continued compatibility in homomorphic computations. For example, after multiplication between two ciphertexts, the result dimension increases from two components to three, $(d_0, d_1, d_2)$, where the term $d_2$ is under the squared secret key $s^2$. At this point, we need to switch the key back to s for correct decryption, which can be achieved by key switching.

Let a ciphertext at level l consist of two polynomials $(c_0, c_1)$. The decryption under a secret key $s$ is performed as $m \approx c_0 + c_1 \cdot s \pmod{Q_l}$. Key switching transforms the ciphertext such that it can be decrypted under $s'$, preserving correctness: $c_0' + c_1' \cdot s' \approx c_0 + c_1 \cdot s \pmod{Q_l}$. To accomplish this transformation, the key-switching procedure expresses the ciphertext in decomposed form and applies key-switching keys. Key switching relies on digit decomposition, breaking down the second polynomial component, $c_1 = \sum_{i=0}^{\mathrm{dnum}-1} c_{1,i} B^i$, where $\mathrm{dnum}$ is the number of decomposition digits, and B is the decomposition base. The final transformed ciphertext is computed as $(c_0', c_1') = (c_0, 0) + \sum_{i=0}^{\mathrm{dnum}-1} c_{1,i} \cdot \mathsf{swk}_l^{(i)}$, where $\mathsf{swk}_l$ is the pre-computed key-switching key for level l.
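The role of digit decomposition can be seen with scalars in place of polynomials. The sketch below is a toy model under strong assumptions: the "key" entries are simply $B^i$ times the source key, with no encryption, masking, or noise, and all names are hypothetical. It only shows why the inner product of the digits with the key entries recovers the product of the decomposed value and the source key.

```python
def digit_decompose(value, base, num_digits):
    """Split a non-negative integer into num_digits base-B digits (least significant first)."""
    if value < 0:
        raise ValueError("value must be non-negative")
    digits = []
    for _ in range(num_digits):
        value, digit = divmod(value, base)
        digits.append(digit)
    if value:
        raise ValueError("value does not fit in the requested number of digits")
    return digits


def recompose(digits, base):
    """Inverse of digit_decompose: sum of digit_i * B^i."""
    return sum(d * base ** i for i, d in enumerate(digits))


def toy_switch_term(c1, source_key, base, num_digits, modulus):
    """Inner product of the digits of c1 with toy key entries B^i * source_key.

    A real key-switching key hides these entries under the target key
    and adds noise; both are omitted here.
    """
    digits = digit_decompose(c1, base, num_digits)
    toy_keys = [pow(base, i, modulus) * source_key % modulus for i in range(num_digits)]
    return sum(d * k for d, k in zip(digits, toy_keys)) % modulus


print(digit_decompose(1234, 16, 3))
print(toy_switch_term(1234, 77, 16, 3, 65537) == (1234 * 77) % 65537)
```

Each digit is small (below B), which is what keeps the noise growth bounded in the real scheme when the digits multiply noisy key entries.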

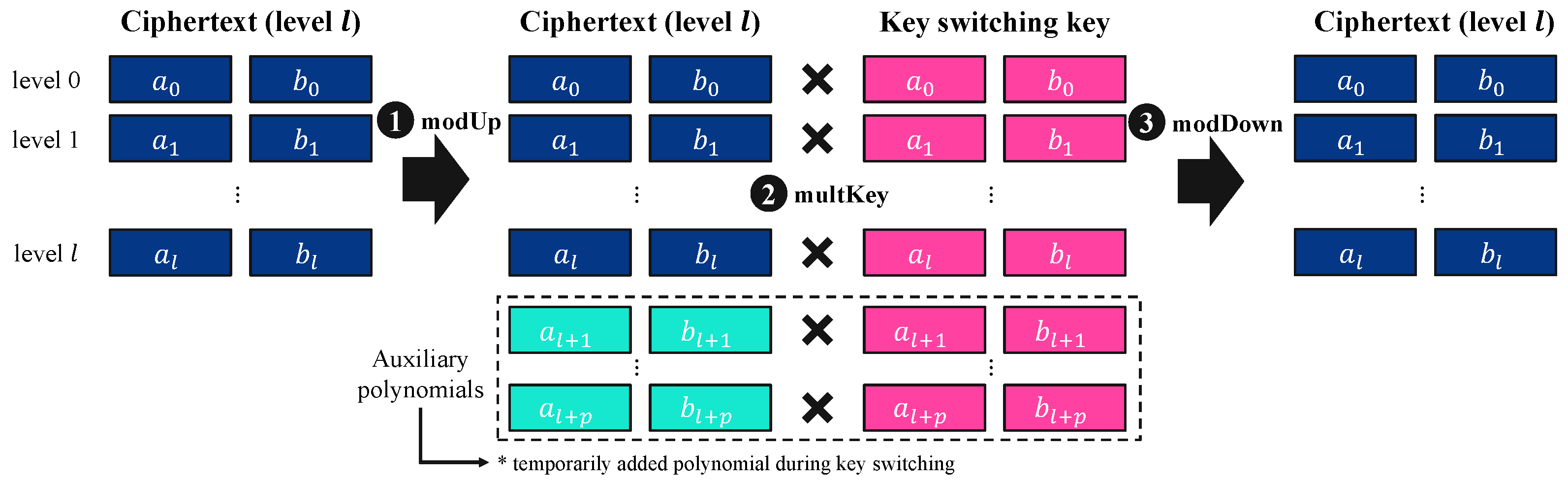

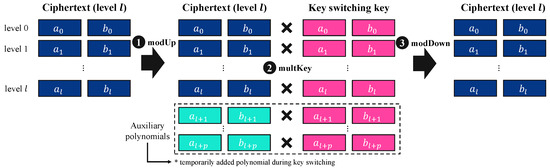

Figure 2 illustrates the key-switching process, which consists of three main steps: modUp, multKey, and modDown. For a given ciphertext at level l, the initial modulus is $Q_l$, and modUp expands the modulus to $P \cdot Q_l$, where $P$ is the product of the temporal primes. This ensures that the ciphertext and the key-switching key are compatible for the upcoming transformation. The modulus-raised ciphertext is now expressed as a set of components over the extended modulus, where each component corresponds to a different level. Then, in multKey, the ciphertext components are multiplied by the corresponding components of the precomputed key-switching key, enabling the secret key transition. This step effectively transforms the ciphertext to be decryptable under a different secret key. After the key-switching transformation, through modDown, the ciphertext modulus is reduced back to $Q_l$. This step ensures that the ciphertext returns to its original modulus level and that the secret key is successfully switched to the new secret key. At the end of this process, the transformed ciphertext remains valid for decryption under the new secret key.

Figure 2.

Overview of key-switching process.

2.4. Rotation

Rotation enables slot-wise manipulation of encrypted vectors, which is crucial for matrix–vector multiplications and convolutional operations in deep learning. Since RNS-CKKS batches multiple plaintext values into a single ciphertext, an efficient rotation mechanism is required. Rotation in RNS-CKKS operates at different ciphertext levels and requires specific rotation indices to determine how far the ciphertext slots should be shifted. Since the ciphertext modulus decreases with each multiplication, rotation must be performed at the correct level, and each level l requires a corresponding rotation key. The rotation index r determines how far the slots are shifted. A ciphertext encoding $(z_0, z_1, \ldots, z_{n-1})$ rotated by r positions results in $(z_r, z_{r+1}, \ldots, z_{n-1}, z_0, \ldots, z_{r-1})$. Since storing keys for all rotation indices is impractical, widely used FHE libraries [59,60] only precompute power-of-two rotation keys (e.g., 1, 2, 4, 8, …), computing arbitrary rotations recursively. Rotation is performed using a Galois automorphism $\phi_g$, $\phi_g: X \mapsto X^g$, where g is derived from the rotation index r. Since applying $\phi_g$ alters the ciphertext’s structure, key switching is needed. The modified ciphertext is $(\phi_g(c_0), \phi_g(c_1))$. Since $\phi_g(c_1)$ is not encrypted with the correct key, it is decomposed and key-switched, $\phi_g(c_1) = \sum_{i=0}^{\mathrm{dnum}-1} c'_{1,i} B^i$. The final rotated ciphertext is $(\phi_g(c_0), 0) + \sum_{i=0}^{\mathrm{dnum}-1} c'_{1,i} \cdot \mathsf{rtk}_{r,l}^{(i)}$, where $\mathsf{rtk}_{r,l}$ is the rotation key at level l.
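The slot shift and the mapping from a rotation index to a Galois element can be sketched on plaintext values. This is an illustration only: it assumes the generator 5 and the relation $g = 5^r \bmod 2N$, a common convention (some libraries use a different generator), and the function names are hypothetical.

```python
def rotate_slots(slots, r):
    """Cyclically shift a slot vector left by r positions."""
    r %= len(slots)
    return slots[r:] + slots[:r]


def galois_element(r, ring_degree, generator=5):
    """Galois element g for rotation index r, assuming g = generator^r mod 2N.

    With N a power of two there are N/2 slots, and the generator has
    order N/2 modulo 2N, so the index can be reduced modulo N/2.
    """
    num_slots = ring_degree // 2
    return pow(generator, r % num_slots, 2 * ring_degree)


N = 16  # toy ring degree (assumed), giving 8 slots
slots = list(range(N // 2))
print(rotate_slots(slots, 3))
print(galois_element(1, N), galois_element(2, N))

# Composing two rotations multiplies their Galois elements modulo 2N,
# which is why a rotation by 7 can be realized as rotations by 4, 2, and 1.
g7 = galois_element(7, N)
g421 = galois_element(4, N) * galois_element(2, N) * galois_element(1, N) % (2 * N)
print(g7 == g421)
```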

2.5. FHE Keyset Structure and Generation

The FHE keyset in RNS-CKKS consists of multiple precomputed keys that facilitate key switching for relinearization and rotation. The keyset includes relinearization keys ($\mathsf{rlk}$), which are used to reduce the ciphertext’s size after ciphertext multiplication, and rotation keys ($\mathsf{rtk}_r$), which enable homomorphic slot shifting by r for different indices. Since both keys rely on key switching, FHE keyset generation is based on the same key-switching key-generation algorithm $\mathsf{KSGen}(s_{\mathrm{from}}, s_{\mathrm{to}})$, where $s_{\mathrm{from}}$ is the key under which the ciphertext is currently encrypted and $s_{\mathrm{to}}$ is the key to which it is switched. $\mathsf{KSGen}$ generates the switching keys:

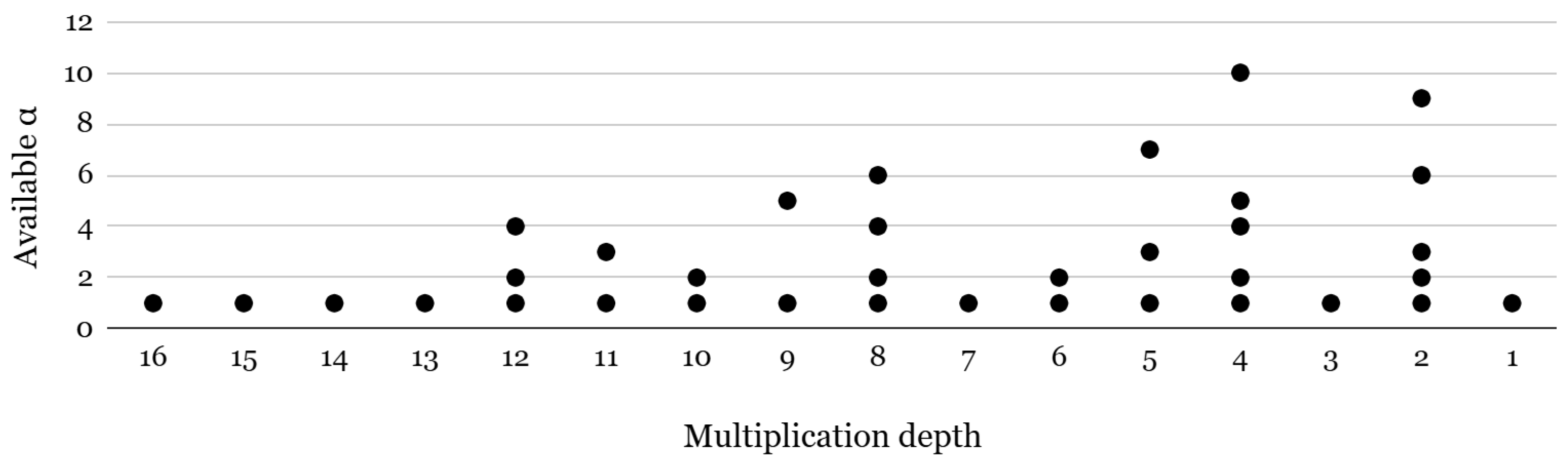

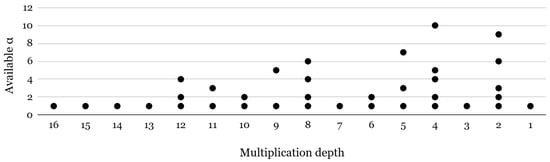

where the key components are defined modulo each temporal prime and modulo each ciphertext prime, respectively, using a randomly sampled polynomial. Based on $\mathsf{KSGen}$ and using a secret key s, the relinearization key is generated as $\mathsf{rlk} = \mathsf{KSGen}(s^2, s)$, and the rotation key for the index r is generated as $\mathsf{rtk}_r = \mathsf{KSGen}(\phi_g(s), s)$. Note that these keys are selectively generated based on the application. For example, if the application only consists of arithmetic operations, $\mathsf{rtk}_r$ is never used and therefore does not need to be generated. We hereafter focus on the generation of rotation keys, which are dynamically selected based on the rotation indices required by a given application, as relinearization keys exhibit comparatively lower variability.

Using the above definition, the rotation keyset structure is determined by three main parameters: the rotation index (r), the ciphertext level (l), and the number of temporal primes (α).

The rotation index (r) defines how far the ciphertext slots are shifted. To support all possible rotations, a separate rotation key must be precomputed for each index. However, storing keys for every possible rotation would consume a large amount of memory. To reduce memory overhead, widely used FHE libraries [59,60] store rotation keys only for power-of-two indices. When a non-power-of-two rotation is needed (e.g., r = 7), it is decomposed into a sequence of power-of-two rotations (e.g., 4 + 2 + 1), requiring multiple operations instead of one.

The ciphertext level (l) represents how many more multiplications can be performed before bootstrapping is needed, as the ciphertexts are initialized at the highest modulus and gradually decrease through modulus switching as the computations progress. Since key switching depends on the modulus level, each level requires a corresponding key.

The number of temporal primes (α) indicates how many primes are appended to split ciphertext coefficients before key switching. Figure 3 shows the available options of α at different multiplication depths in a bootstrappable setup. For example, for a multiplication depth of 9, α = 5 and α = 1 are two options that developers can choose.

Figure 3.

Available α options per multiplication depth for 128-bit security.

3. Motivation

This section introduces two key observations that motivated our research. First, we examine the challenges associated with selecting the appropriate parameters for the rotation keyset design, which significantly impact the efficiency of FHE-based computations. Then, we present a preliminary analysis of the trade-off between memory consumption and computational performance in encrypted neural network inference.

3.1. Difficulty in Parameter Setting for Key Switching

Key switching is one of the most computationally expensive operations among RNS-CKKS operations, particularly in ciphertext multiplication and rotation. As shown in Table 2, these operations exhibit significantly higher latency than other FHE primitives due to the key-switching process. Moreover, rotation plays a dominant role in bootstrapping latency, as it is executed multiple times during the bootstrapping process, especially in the coeffToSlot and slotToCoeff transformations. Thus, the efficiency of key switching directly affects the overall performance of an FHE-based application.

Table 2.

Latency of RNS-CKKS operations for $N = 2^{16}$ (ms, α = 1).

Among the various parameters in FHE, α (or dnum) is the main parameter directly affecting key-switching efficiency. Both are used during the key-switching process: α denotes the number of temporal primes added to a ciphertext, and dnum represents the number of decomposition digits, which determines the extent to which the ciphertext is expanded. Given the maximum multiplicative depth (#Q), α and dnum need to satisfy the condition

$$\mathrm{dnum} = \left\lceil \frac{\#Q}{\alpha} \right\rceil.$$

To simplify the discussion, we consider only cases where #Q is divisible by α, which aligns with the default setting of the widely used FHE libraries [59,61]. The total modulus ($PQ$) should be split into two parts: one for $Q$ and another for the temporal primes, $P$. Since the total modulus budget (i.e., the $PQ$ pool) is limited to achieve a certain security level (e.g., 128-bit security), developers must carefully allocate it. This allocation directly impacts the performance of RNS-CKKS operations, including bootstrapping and rotation, as well as the total multiplicative depth supported by the circuit. For example, bootstrapping with one α setting is 1.65× faster than with another, although the former supports a maximum multiplicative depth of only 9 compared to 16 for the latter. Despite its significant impact, this allocation has not been thoroughly analyzed. Prior works [53,62] typically rely on pre-defined parameter settings from existing libraries.
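The interplay between α, dnum, and the modulus budget can be tabulated with a short sketch. It takes dnum = ⌈#Q/α⌉ as the relation between the two parameters, which is an assumption based on the standard hybrid key-switching formulation, and it further assumes a hypothetical budget of equally sized primes shared between $Q$ and $P$. Real parameter selection is also constrained by the security level and by prime bit sizes, which this sketch ignores.

```python
from math import ceil


def dnum_for(num_q_primes, alpha):
    """Number of decomposition digits, assuming dnum = ceil(#Q / alpha)."""
    return ceil(num_q_primes / alpha)


def split_budget(total_primes, alpha):
    """Split a hypothetical prime budget into #Q ciphertext primes and alpha temporal primes.

    Returns (#Q, dnum). Every temporal prime is one prime that Q cannot use.
    """
    num_q = total_primes - alpha
    if num_q <= 0:
        raise ValueError("alpha consumes the entire budget")
    return num_q, dnum_for(num_q, alpha)


def divisible_alphas(num_q_primes):
    """Values of alpha that divide #Q evenly (the simplified case)."""
    return [a for a in range(1, num_q_primes + 1) if num_q_primes % a == 0]


BUDGET = 32  # hypothetical number of equally sized primes in the PQ pool
for alpha in (1, 2, 4, 8):
    num_q, dnum = split_budget(BUDGET, alpha)
    print(f"alpha={alpha}: #Q={num_q}, dnum={dnum}")
```

Under these assumptions, a larger α leaves fewer primes for $Q$ (less multiplicative depth) but needs fewer decomposition digits, which is the trade-off discussed above.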

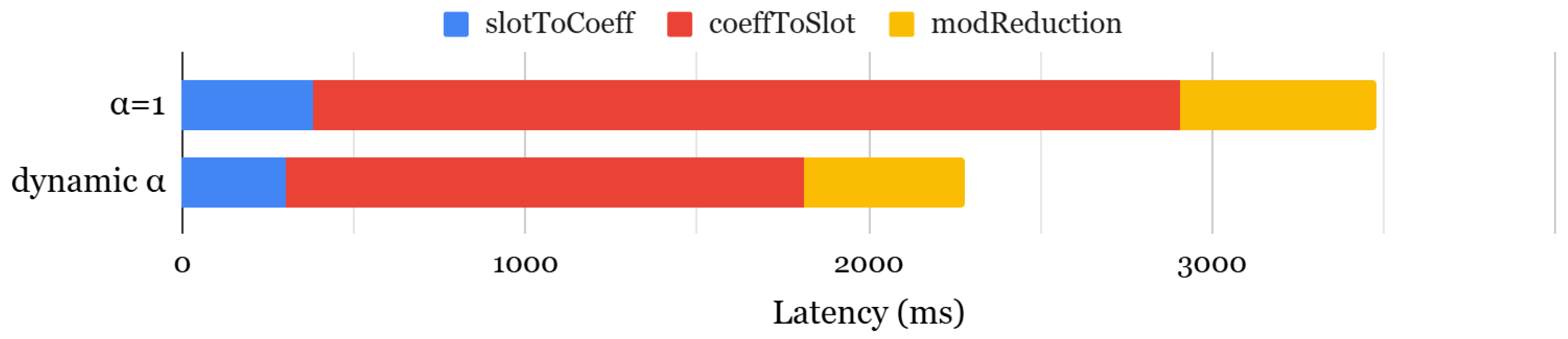

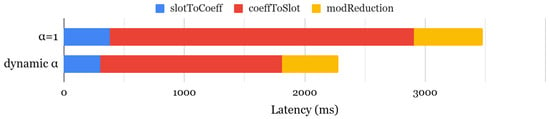

Recent work [63] has proposed a dynamic α selection framework to optimize key-switching efficiency by dynamically selecting the best α configuration at each ciphertext level. Unlike static α configurations, this approach evaluates various α options at runtime and chooses the optimal one while preserving the required security level. Figure 4 compares bootstrapping latency between static and dynamic α configurations, showing that the dynamic approach accelerates the slotToCoeff and coeffToSlot operations by 1.27× and 1.67×, respectively, leading to an overall 1.52× speedup. However, this performance gain comes at the cost of increased memory usage, as rotation keys must be generated separately for different α values, resulting in 1.52× higher memory consumption. While the original work focused on polynomial evaluation, we extended this analysis to assess the impact of dynamic α selection on both bootstrapping and encrypted neural network inference, providing a more comprehensive understanding.

Figure 4.

Comparison of bootstrapping latency.

3.2. Memory–Latency Trade-Off of Rotations in Neural Networks

FHE-based neural networks require frequent ciphertext rotations, particularly in arithmetic layers such as convolutional and fully connected layers. This overhead is further amplified in multiplexed convolution, a technique that maximizes slot utilization by packing multiple data points, including multiple channels, into a single ciphertext. This method employs single-input single-output (SISO) convolution [64], improving ciphertext slot utilization but increasing the number of required rotations. For example, FHE-MP-CNN [54] generates a rotation keyset of 257 indices for CIFAR-10 ResNet-20. To support multiple rotation indices in neural networks, existing approaches typically employ one of two key-generation strategies:

- All-Required Key Generation: This approach precomputes rotation keys for all required indices in the neural network. Although it eliminates additional rotations, it demands excessive memory storage, making it impractical for large-scale models.

- Power-of-Two Key Generation: Instead of generating keys for all indices, this approach generates rotation keys only for power-of-two indices and performs recursive rotations to achieve arbitrary shifts. Although this method reduces memory consumption, it introduces additional computational latency. For example, a rotation of index 3 requires two consecutive rotations (2 + 1) in the power-of-two approach, whereas the all-required method completes rotation in a single step.

Several widely used FHE libraries, including HEAAN [59] and SEAL [60], implement the power-of-two approach to optimize memory usage. However, this optimization comes at the cost of an increased inference latency due to additional rotations. Conversely, FHE-MP-CNN adopts the all-required strategy to minimize rotation overhead, but this results in excessive memory consumption. In our experiments, we found that storing rotation keys for all required indices in FHE-MP-CNN needs approximately 307 GB, which is 9× larger than the memory footprint of the power-of-two approach. This stark contrast highlights a fundamental trade-off. While aggressive key generation improves computational efficiency, it leads to impractically high memory usage, whereas memory-efficient key-generation strategies increase inference latency due to recursive rotations.
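The two key-generation strategies can be compared by counting keys and rotation calls. The sketch below is a simplified model with hypothetical function names: it considers positive indices only and uses the plain binary decomposition, so the number of rotation calls for an index equals its Hamming weight.

```python
def power_of_two_steps(index):
    """Decompose a positive rotation index into power-of-two steps, largest first."""
    if index <= 0:
        raise ValueError("index must be positive")
    steps = []
    bit = 1
    while index:
        if index & 1:
            steps.append(bit)
        index >>= 1
        bit <<= 1
    return steps[::-1]


def compare_strategies(required_indices, num_slots):
    """Count stored keys and rotation calls for both strategies.

    num_slots is assumed to be a power of two, and every required
    index is assumed to lie strictly between 0 and num_slots.
    """
    pow2_keys = [1 << k for k in range(num_slots.bit_length() - 1)]
    return {
        "all_required": {
            "keys": len(set(required_indices)),
            "rotations": len(required_indices),
        },
        "power_of_two": {
            "keys": len(pow2_keys),
            "rotations": sum(len(power_of_two_steps(r)) for r in required_indices),
        },
    }


print(power_of_two_steps(7))
print(compare_strategies([3, 7, 8], num_slots=16))
```

In this toy case the all-required set happens to be smaller than the power-of-two set. For a network that needs hundreds of distinct indices the key count reverses, while the power-of-two strategy still pays extra rotation calls.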

4. Related Works

4.1. Performance Optimization on FHE and FHE-Based Neural Networks

To mitigate the performance bottlenecks associated with FHE, extensive research efforts have been devoted to accelerating its computations. Some works introduced hardware accelerator designs [51,52,65,66] that take advantage of the inherent parallelism of FHE operations and efficiently map them to fully utilize the high computational capabilities of various hardware platforms such as FPGA [52], cloud service instances with GPUs [66], and customized ASIC chips [51]. Others introduced FHE operation schedulers [53,67,68,69,70], micro-scheduling the FHE operation primitives in order to reduce computational latency. Still others devised customized sets of FHE operations tailored to specific applications. For example, FHE-MP-CNN [54] and HCNN [71] introduced efficient methods for computing convolutional layers using FHE. AutoFHE [55] and AESPA [72] adopt re-training and post-processing to design FHE-friendly neural networks. Although these prior works have contributed significantly to improving FHE efficiency, they have primarily focused on optimizing the computation itself. A critical aspect that has been largely overlooked is the design of the FHE keyset, which plays a crucial role in balancing computational efficiency and memory consumption. In this work, we focus on the FHE keyset and propose a method that selectively generates only the necessary keys by considering not only rotation indices but also ciphertext levels, thereby improving overall system efficiency.

4.2. Keyset Design Space Exploration

Several prior works have investigated the potential of extending keysets to improve performance. Two studies [63,73] explored increasing dnum to enhance key-switching efficiency. These works introduced methodologies to ensure that the keyset configuration achieves optimal latency for single multiplications. Other studies [61,74,75] focused on generating extended rotation keys to accelerate ciphertext rotations. However, these approaches fail to explore the full keyset design space, as they do not account for all three keyset components simultaneously. Our work differs in that it systematically explores the entire keyset design space by considering all three factors—α selection, rotation index selection, and ciphertext-level optimization—as interdependent variables. Doing so provides a more comprehensive framework for optimizing keyset configurations in homomorphic encryption.

4.3. Keyset Design in Other Homomorphic Encryption Schemes

Although our study focuses on the CKKS scheme, we note that our findings are applicable to other Ring Learning With Errors (RLWE)-based schemes such as BGV and BFV. These schemes share similar structural properties with CKKS, suggesting that our keyset optimization approach can also be extended to optimize their performance. On the other hand, FHEW and TFHE rely on Learning With Errors (LWE), which exhibits fundamentally different characteristics. Unlike CKKS, these schemes execute operations in a long sequential loop, where each component of the secret key is processed in order. Despite this difference, they still rely on keyset configurations, albeit with different determinants of key size and structure. We anticipate that similar research could be conducted for these schemes to explore keyset optimization strategies tailored to LWE-based cryptosystems, which we leave as a future research direction.

5. Keyset Design Space (KDS) Exploration

In this section, we analyze the impact of three key factors that influence the efficiency of key switching: α (or dnum), rotation index, and ciphertext level. To ensure that our analysis focuses solely on key switching, we fix the bootstrapping parameters and set the scaling factor to $\Delta = 2^{50}$. For a bootstrappable FHE setting with $N = 2^{16}$, the bootstrapping procedure consumes 15 levels. In this configuration, the maximum multiplicative depth of a ciphertext is 31 when α = 1, including bootstrapping.

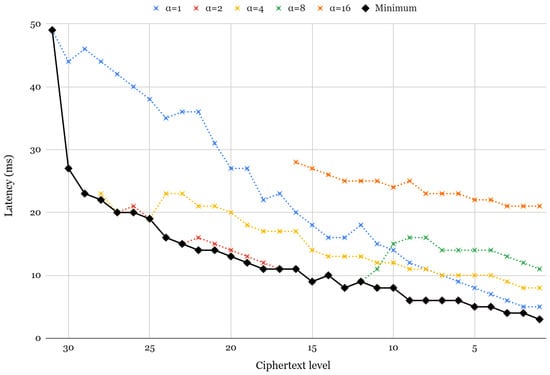

5.1. Impact of α

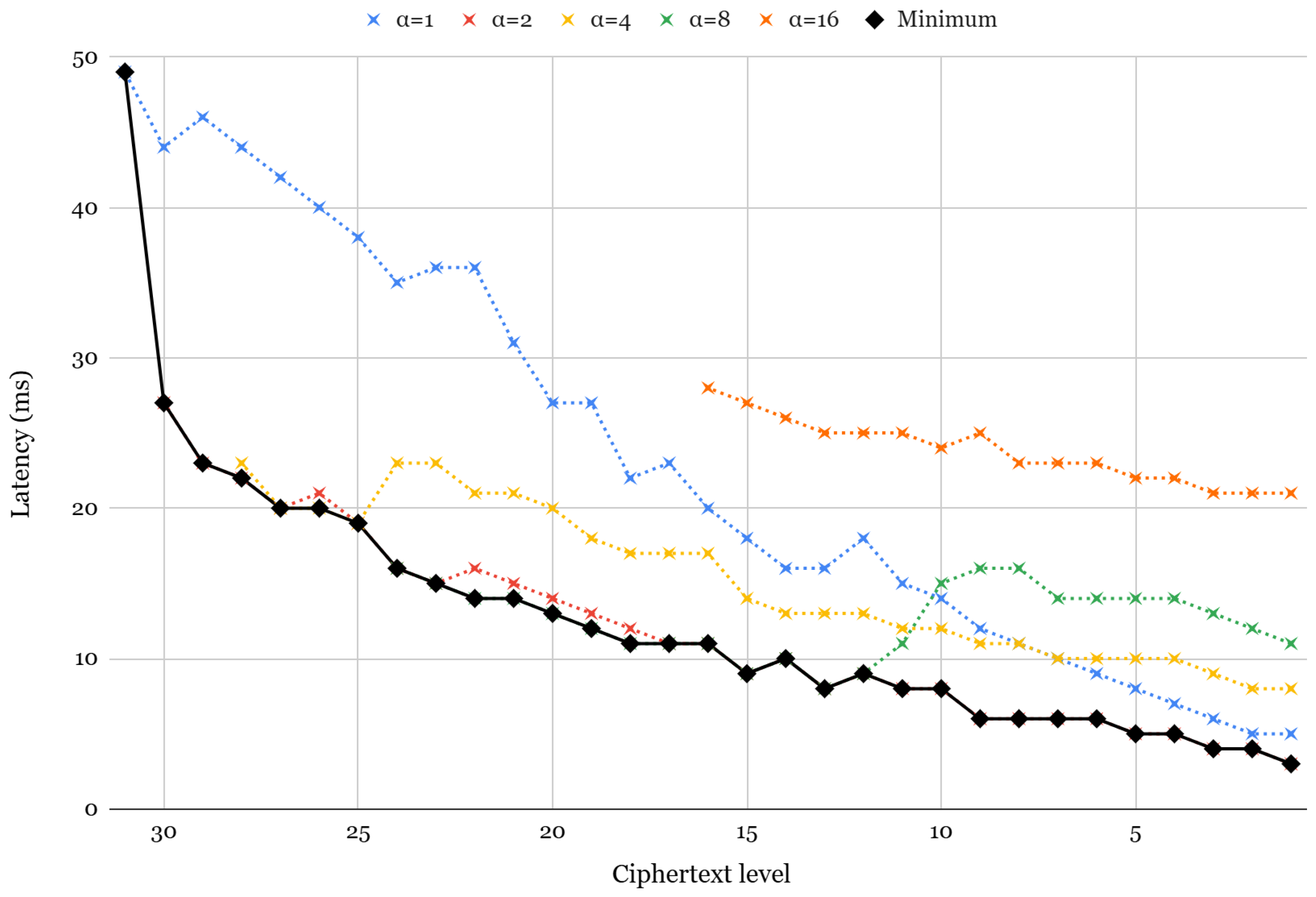

To evaluate the impact of α on key-switching performance, we first measure the latency of ciphertext multiplication (with relinearization) for different α values, as shown in Figure 5. This analysis follows the framework proposed in [63] and provides insight into how varying α influences key-switching efficiency. Note that since all ciphertexts must satisfy 128-bit security during key switching, the starting level at which each α can be applied varies. Each dotted line in the figure represents the latency for static α values of 1, 2, 4, 8, and 16, whereas the solid line indicates the optimal α selection at each level. Key switching involves a transformation known as modUp, where the ciphertext is expanded to a higher modulus before performing operations with the switching key. The effective size of the ciphertext during key switching at level l is given by the following:

Figure 5.

Latency of relinearization for different α values.

Thus, for lower ciphertext levels, increasing α does not always improve latency, as additional polynomials introduced by modUp can create computational overhead.

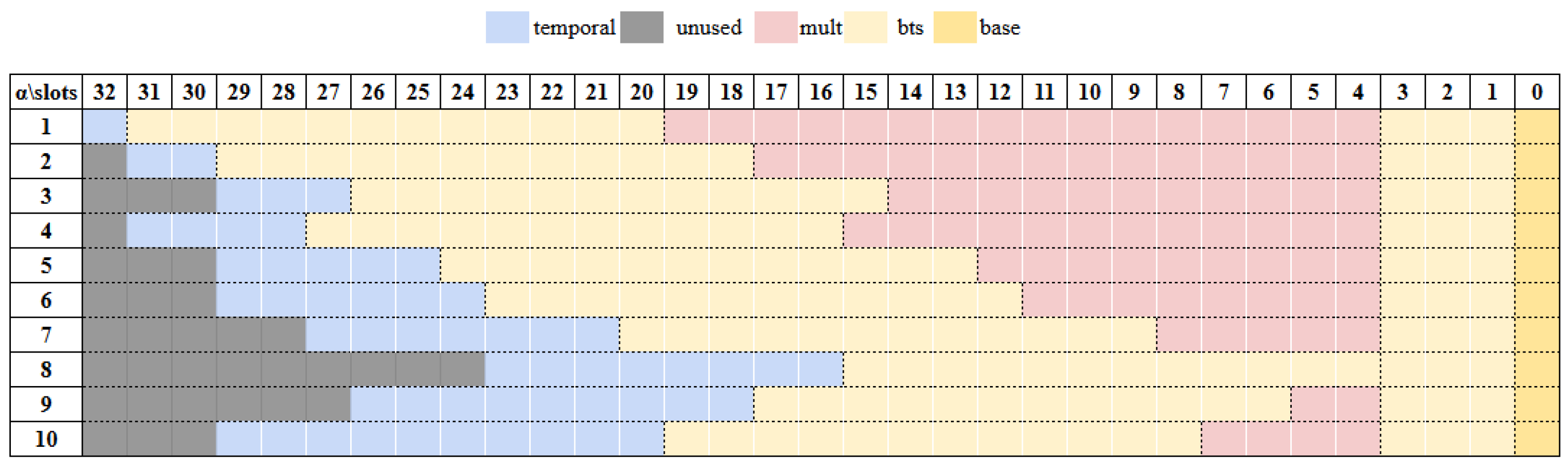

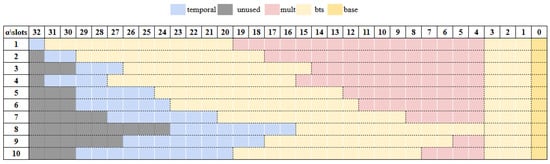

Moreover, the impact of α varies depending on whether bootstrapping is used. In this study, we fix the bootstrapping configuration (e.g., approximated function for the modReduction phase) to isolate the impact of α selection. During bootstrapping, ciphertexts are first transformed in slotToCoeff; then, their modulus is raised to the highest level, followed by a sequence of modReduction. Figure 6 provides a visualization of the prime usage for different values of α, optimized for the maximum multiplication depth. Each α configuration determines a unique multiplicative depth, and the gray slots in the figure represent unused primes that are excluded from the total modulus count, providing a clearer view of the allocation. The yellow slots indicate primes dedicated to bootstrapping, which consumes 15 levels. For a 128-bit security setting, a ciphertext achieves a maximum multiplicative depth of 31 when using a single temporal prime (α = 1), which allows up to 16 sequential multiplications. As α increases, the available multiplicative depth generally decreases; however, due to the inverse relationship between dnum and α, this dependency leads to non-uniform trade-offs between α selection and computational efficiency.

Figure 6.

Grid of prime usage.

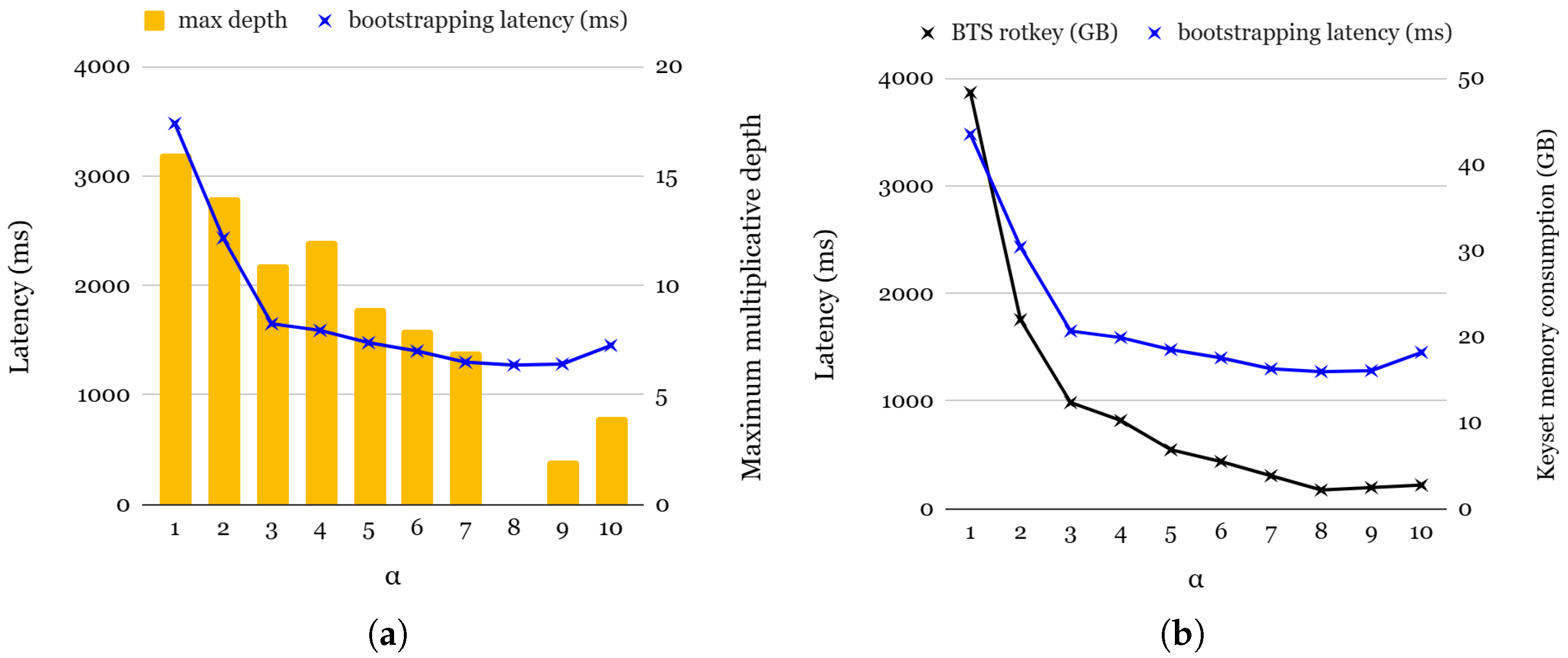

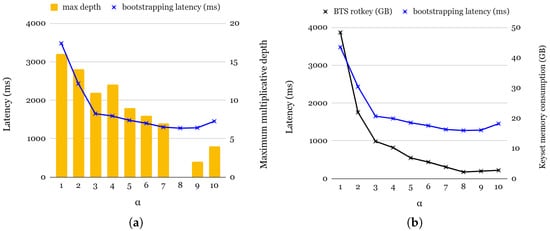

To further analyze the impact of α, we measure both the bootstrapping latency and the memory consumption of the rotation keysets. Figure 7a presents the bootstrapping latency and maximum multiplication depth across different α values. Since bootstrapping involves multiple key switchings, including ciphertext rotations in coeffToSlot and slotToCoeff, as well as Cmult operations in modReduction, the choice of α significantly influences the overall latency. Lower α values correspond to higher starting levels in the coeffToSlot step, resulting in slower bootstrapping. Although higher α values generally improve bootstrapping performance, we observe an anomalous slowdown for α = 9 and α = 10. This is attributed to the ciphertext’s expansion caused by modUp, which offsets the expected benefits of higher α values. For practical applications requiring various multiplication depths, selecting an optimal α is essential. Among the range of viable α values (3 to 7), all exhibit similar bootstrapping latency. However, α = 4 provides the best trade-off by achieving the highest multiplicative depth while maintaining a reasonable bootstrapping efficiency.

Figure 7.

(a): Bootstrapping latency and rotation keyset memory consumption for bootstrapping; (b): bootstrapping latency and maximum depth for multiplication.

Figure 7b presents the memory consumption of the rotation keyset during bootstrapping. Interestingly, memory consumption decreases as α increases. The total memory footprint of the rotation keyset at level l is determined by the following:

Since α and dnum are inversely proportional, lower dnum values typically result in lower memory consumption. However, there are exceptions for α = 9 and α = 10, where an increase in #Q leads to slightly higher memory usage compared to the case of α = 8.
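As a rough illustration of this relationship, the following sketch estimates the size of a single rotation key under a commonly used hybrid key-switching layout. The layout (dnum = ⌈(l + 1)/α⌉ pairs of polynomials, each stored over l + 1 + α primes with 64-bit coefficients) and the function name `rotation_key_bytes` are assumptions made for illustration; they are not necessarily the exact expression used in this work or in HEaaN.

```python
import math


def rotation_key_bytes(level: int, alpha: int, log_n: int = 16, word_bytes: int = 8) -> int:
    """Estimate the size in bytes of one rotation key at a given ciphertext level.

    Assumed layout (hybrid key switching): the key holds
    dnum = ceil((level + 1) / alpha) pairs of polynomials, and each polynomial
    is stored over (level + 1 + alpha) RNS primes with N = 2**log_n coefficients.
    """
    n = 1 << log_n
    num_q = level + 1                  # ciphertext primes in use at this level
    dnum = math.ceil(num_q / alpha)    # number of decomposition digits
    limbs = num_q + alpha              # ciphertext primes plus temporal primes
    return 2 * dnum * limbs * n * word_bytes


if __name__ == "__main__":
    for alpha in (1, 2, 5, 10):
        size_mib = rotation_key_bytes(level=29, alpha=alpha) / 2**20
        print(f"alpha={alpha:2d}: {size_mib:7.1f} MiB per rotation key")
```

Under these assumptions, at a fixed level of 29, moving from α = 1 to α = 5 shrinks one key from 930 MiB to 210 MiB, because the fivefold drop in dnum outweighs the four additional temporal primes. The sketch holds the level fixed, whereas in practice a larger α also changes how many ciphertext primes fit within the security budget.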

5.2. Impact of Rotation Index Selection

To generate a rotation keyset for the many rotations in neural networks, two main approaches have been proposed: all-required and power-of-two. The all-required approach generates all necessary rotation keys by performing a pre-analysis of the neural network's architecture to identify the required rotation indices. This allows every rotation in the neural network to be handled by a single rotation call, minimizing rotation latency. On the other hand, the power-of-two approach generates keys only for indices that are a power of two, including both positive and negative values. It then performs recursive rotation calls using these generated keys, which introduces additional computational overhead, as shown in Table 3. For example, a rotation by an index k with a Hamming weight of 3 is approximately 3.8× slower than a rotation by an index of 1.
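The recursive behavior of the power-of-two approach can be sketched as follows. This is a minimal plaintext model, assuming a plain binary decomposition in which each set bit of the index costs one rotation call; the helper names are hypothetical, and an actual FHE library may decompose indices differently (for example, by mixing positive and negative powers).

```python
def power_of_two_steps(index: int) -> list[int]:
    """Split a rotation index into power-of-two rotations, one per set bit."""
    sign = -1 if index < 0 else 1
    magnitude = abs(index)
    steps = []
    bit = 0
    while magnitude:
        if magnitude & 1:
            steps.append(sign * (1 << bit))
        magnitude >>= 1
        bit += 1
    return steps


def rotate(slots: list, index: int) -> list:
    """Plaintext stand-in for a left rotation of a non-empty slot vector."""
    k = index % len(slots)
    return slots[k:] + slots[:k]


def rotate_recursive(slots: list, index: int) -> list:
    """Apply one rotation call per power-of-two step, as a power-of-two keyset would."""
    for step in power_of_two_steps(index):
        slots = rotate(slots, step)
    return slots
```

In this model, an index with Hamming weight 3 (e.g., 7 = 1 + 2 + 4) needs three rotation calls, whereas the all-required approach would serve it with one call at the cost of one extra key.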

Table 3.

Relative latency of rotations for N = 2^16.

This difference also affects the overall latency of neural network inference. Table 4 shows the latency of CIFAR-10 ResNet-20 using two different types of ReLU evaluation. Since MPCNN uses a higher-degree ReLU approximation than AESPA, it consumes more levels, resulting in greater multiplication depth and more frequent bootstrapping. For the rotations in each neural network evaluation, the all-required approach achieves lower latency than the power-of-two approach: 1.95× for MPCNN and 1.93× for AESPA. The other operations and bootstrapping are not affected by the index selection approach. As a result, the all-required approach is 1.14–1.36× faster overall than the power-of-two approach, thanks to its non-recursive rotation calls. However, it has a disadvantage: increased memory usage for the rotation keyset. Whereas the power-of-two approach generates rotation keys only for power-of-two indices, the all-required approach must generate a key for every required index. This typically exceeds the number of rotation keys in a keyset generated by power-of-two (e.g., 257 indices are required for ResNet-20), resulting in higher memory consumption.

Table 4.

Comparison of CIFAR-10 ResNet-20 inference latency.

5.3. Impact of the Ciphertext Level

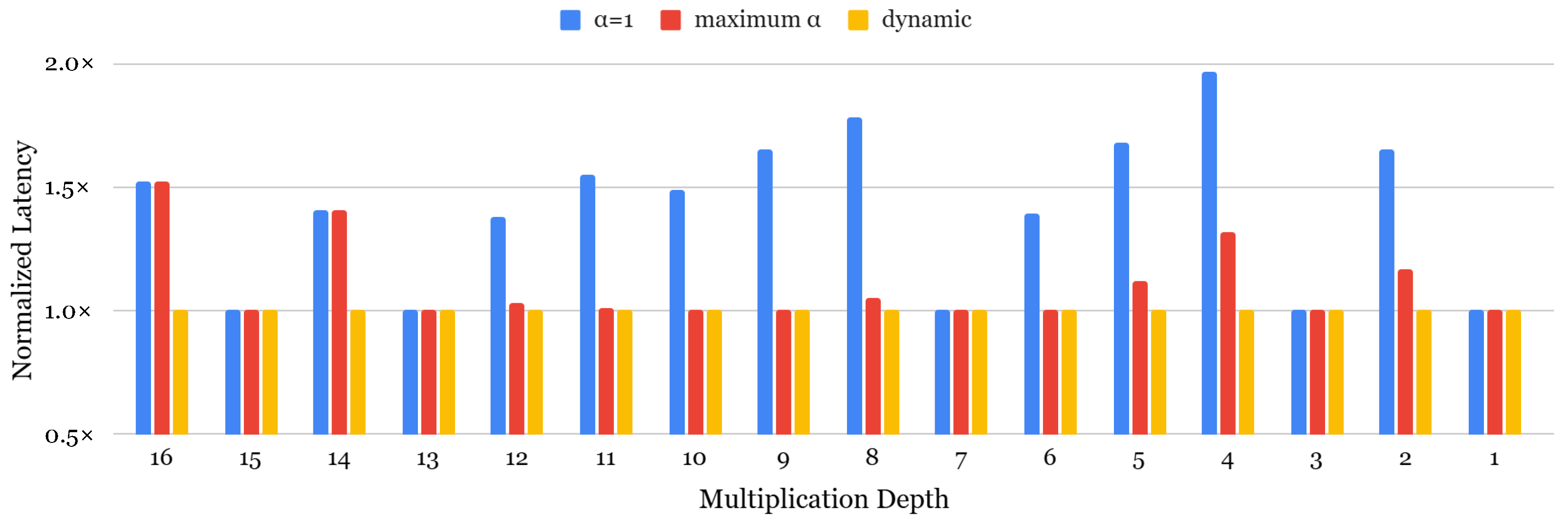

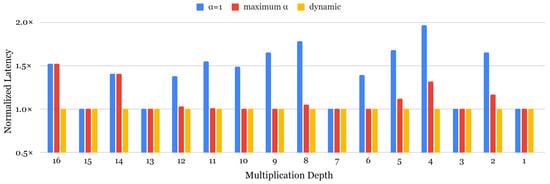

The ciphertext level is a new factor in rotation keyset design that has not been the main focus of previous work. Our key observation is that ciphertext levels for rotations are often lower than the maximum level, even though FHE libraries typically generate rotation keys up to the maximum level by default. This implies that rotation keys for higher levels are never used, wasting memory. Note that this consideration of levels does not significantly impact latency in our evaluation environment. Therefore, we aim to eliminate this unused memory allocation to minimize overall memory consumption.

To advance our dynamic α selection approach with level-aware rotation keyset generation, we first analyze the bootstrapping latency of the dynamic α approach [63], as shown in Figure 8. For almost all multiplication depths, the dynamic α selection strategy achieves the lowest latency. Since smaller multiplication depths allow a larger α to be used at higher levels, the acceleration is particularly evident compared to α = 1. There are two special cases for the effects of dynamic α. First, for multiplication depths of 15, 13, 7, 3, and 1, the dynamic strategy shows no noticeable acceleration over α = 1 because the corresponding number of primes is a prime number, so the only available α is 1 for these depths. Second, for multiplication depths of 9 and 6, the latency results for dynamic α and max α are identical. This is because the possible α values are {1, 5} for depth 9 and {1, 2} for depth 6, so the ciphertexts at each level are optimized to α = 5 and α = 2, respectively. As a higher α is not beneficial for low-level ciphertexts, dynamic α achieves the best performance in all cases, with an average speedup of 1.41× over α = 1 and 1.12× over max α.
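The special cases above can be reproduced with a small sketch. It rests on two assumptions that are inferred from the listed depths rather than stated in the text: that α must evenly divide the number of ciphertext primes, and that this number equals the multiplication depth plus the 15 bootstrapping levels plus one base prime. The upper bound of 10 on α and the function name are likewise illustrative.

```python
def alpha_candidates(depth: int, bootstrap_levels: int = 15, max_alpha: int = 10) -> list[int]:
    """List the alpha values that evenly divide the assumed number of ciphertext primes."""
    # Assumption: primes = multiplication depth + bootstrapping levels + one base prime.
    num_primes = depth + bootstrap_levels + 1
    return [a for a in range(1, max_alpha + 1) if num_primes % a == 0]


if __name__ == "__main__":
    for depth in (15, 13, 9, 7, 6, 3, 1):
        print(depth, alpha_candidates(depth))
```

Under these assumptions, depths 15, 13, 7, 3, and 1 yield only α = 1, while depths 9 and 6 yield {1, 5} and {1, 2}, matching the candidate sets described above.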

Figure 8.

Bootstrapping speedup of dynamic α compared to static α.

To reduce memory consumption, we propose a level-aware rotation keyset generation strategy, which generates only the keys that are actually needed given the ciphertext level, including the rotation keyset for bootstrapping. The rotation keyset is generally generated from level 0 to the maximum available level (1 + #Q), as defined by the parameter setting. This also generates rotation keys that will never be used, wasting memory. Table 5 describes the total memory consumption of the rotation keyset for bootstrapping. As mentioned earlier, for multiplication depths of 15, 13, 7, 3, and 1, dynamic α does not provide a benefit, since the only available option is α = 1. For other cases, dynamic α results in lower memory consumption than the α = 1 case. However, compared to the max α approach, pure dynamic α uses more memory because it generates keys for multiple α values. To optimize this, we generate the rotation keyset in a level-aware manner, which is denoted as dynamic+level-aware in the table. In some cases, the memory usage of our strategy is greater than that of the max α approach, but this trade-off enables a comparable level of performance improvement, as shown in Figure 8.

Table 5.

Rotation keyset memory consumption for bootstrapping.

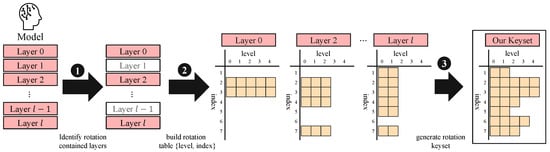

5.4. Combining the Three Factors to Generate a Rotation Keyset for Neural Networks

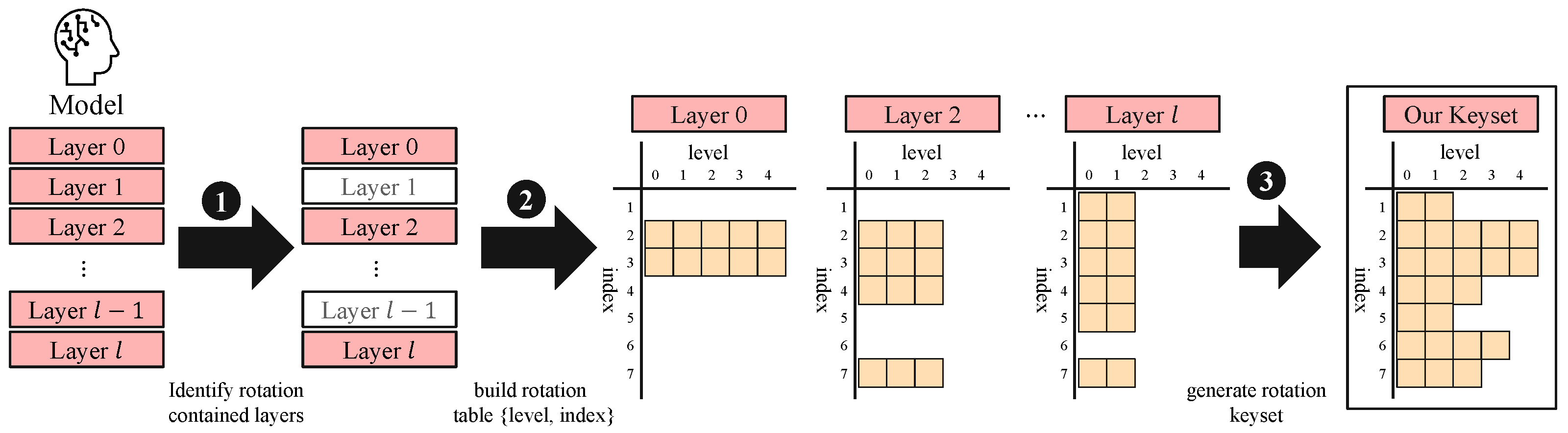

Combining insights from the three factors of KDS presented above, we extend our level-aware strategy to neural networks, as demonstrated in Figure 9. We begin by analyzing the neural network’s computation to identify the level at which each rotation call occurs in each layer, determining which levels are actually used. Since our goal is to generate the rotation keyset for the neural network, we exclude layers such as activation or batch normalization, which operate in an SIMD manner and do not perform rotations. Then, we construct a rotation table for each layer that involves rotations, mapping rotation indices to ciphertext levels. This provides a comprehensive view of rotation requirements across the entire neural network and lets us construct a minimal set of required rotation keys for the target neural network. Finally, we generate level-aware rotation keys based on the rotation table, with α optimized for each level. This approach ensures compatibility with the RNS structure while avoiding the generation of unnecessary keys. By precisely tailoring the key generation to the actual needs of the neural network, we achieve substantial memory savings without sacrificing the ability to perform any required rotation.
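The rotation-table step can be sketched as follows. This is a simplified illustration, not the actual implementation: the layer description format (dictionaries with `name`, `level`, and `rotations` fields) and both function names are hypothetical, and the per-level α optimization is omitted.

```python
from collections import defaultdict


def build_rotation_table(layers: list[dict]) -> dict[int, set[int]]:
    """Map each required rotation index to the ciphertext levels at which it is used.

    `layers` is a hypothetical pre-analysis result. Layers without rotations
    (e.g., activations) carry an empty rotation list and contribute nothing.
    """
    table = defaultdict(set)
    for layer in layers:
        for index in layer["rotations"]:
            table[index].add(layer["level"])
    return dict(table)


def required_keys(table: dict[int, set[int]]) -> list[tuple[int, int]]:
    """Flatten the table into the sorted (index, level) pairs that need a key."""
    return sorted((index, level) for index, levels in table.items() for level in levels)


if __name__ == "__main__":
    layers = [
        {"name": "conv1", "level": 12, "rotations": [1, 2, 32]},
        {"name": "act1", "level": 11, "rotations": []},
        {"name": "conv2", "level": 9, "rotations": [1, 32, 64]},
    ]
    table = build_rotation_table(layers)
    print(required_keys(table))
```

Only the (index, level) pairs that actually occur are listed, so indices and levels that no layer uses never receive a key. Whether a key generated at a higher level can also serve lower levels depends on the library and on the α chosen per level, so the sketch deliberately keeps every pair.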

Figure 9.

Overview of our approach.

6. Evaluation

6.1. Experimental Setup

We conducted our experiments on a system equipped with two Intel Xeon Gold 6326 CPUs (2.9 GHz), providing 64 threads and 1 TB of main memory. All evaluations were performed on Ubuntu 22.04 LTS using the HEaaN library [59]. We measured the memory consumption of rotation keys used for inference and bootstrapping to evaluate the trade-offs between latency and memory efficiency. To demonstrate the effectiveness of our approach in real-world scenarios, we present two case studies with experimental results.

6.2. Compared Baselines

We compared our method with several baseline approaches based on different keyset design space (KDS) factors, including index selection, α selection, and level selection.

- Index Selection Baselines: We considered two widely adopted index selection strategies:

  - Power-of-Two (Pot): Generates a default rotation keyset consisting of power-of-two indices (both positive and negative) and performs recursive rotations for arbitrary indices.

  - All-Required: Generates rotation keys for all indices required during inference, eliminating the need for recursive rotation calls.

  The keyset generated by all-required depends on the benchmark, whereas power-of-two produces the same keyset across all benchmarks.

- α Selection Baselines: We compared our approach with two different α configurations:

  - α = 1 (P1): A standard option in several FHE libraries [60], which maximizes the available multiplicative depth.

  - Dynamic α (Pdyn): A strategy that dynamically selects the optimal α to minimize key-switching latency.

- Level-Selection Baselines: We compared our approach with a naive full-level key-generation strategy, where rotation keys are generated for all ciphertext levels. Since prior research has not explicitly considered level-aware key selection, this serves as a reference for evaluating the effectiveness of our approach.

6.3. Case Study 1: Wireless Intrusion Detection System (IDS) Based on Gated Recurrent Unit (GRU)

This intrusion detection system (IDS) case involves a wireless sensor that captures network traffic and analyzes it to determine whether the activity is malicious. As identified in previous works [76,77,78], attributes extracted from network traffic can reveal sensitive internal device behaviors, such as root privileges or user actions. Although a single packet may not appear sensitive on its own, it can indirectly expose private information, for example, by allowing the presence of a specific individual to be inferred [79]. For our evaluation of IDS, we use the NSL-KDD dataset [38], an improved version of the widely used KDD99 [37] dataset. Each record in NSL-KDD contains 41 features and is labeled with one of five categories: benign or one of four attack types (Denial-of-Service (DoS), User-to-Root (U2R), Remote-to-Local (R2L), and Probing (Probe) attacks). As our baseline model, we adopt a GRU-based model proposed in prior work [39], which demonstrates a high attack classification accuracy of 99.19%. We followed the data pre-processing method and used the same model architecture proposed in the original paper. The model consists of one GRU layer with 128 hidden units followed by three fully connected layers using the sigmoid activation function. To evaluate non-arithmetic functions under FHE, we use the degree-7 polynomial approximation implemented in MHEGRU [80].

6.3.1. End-to-End Inference Latency

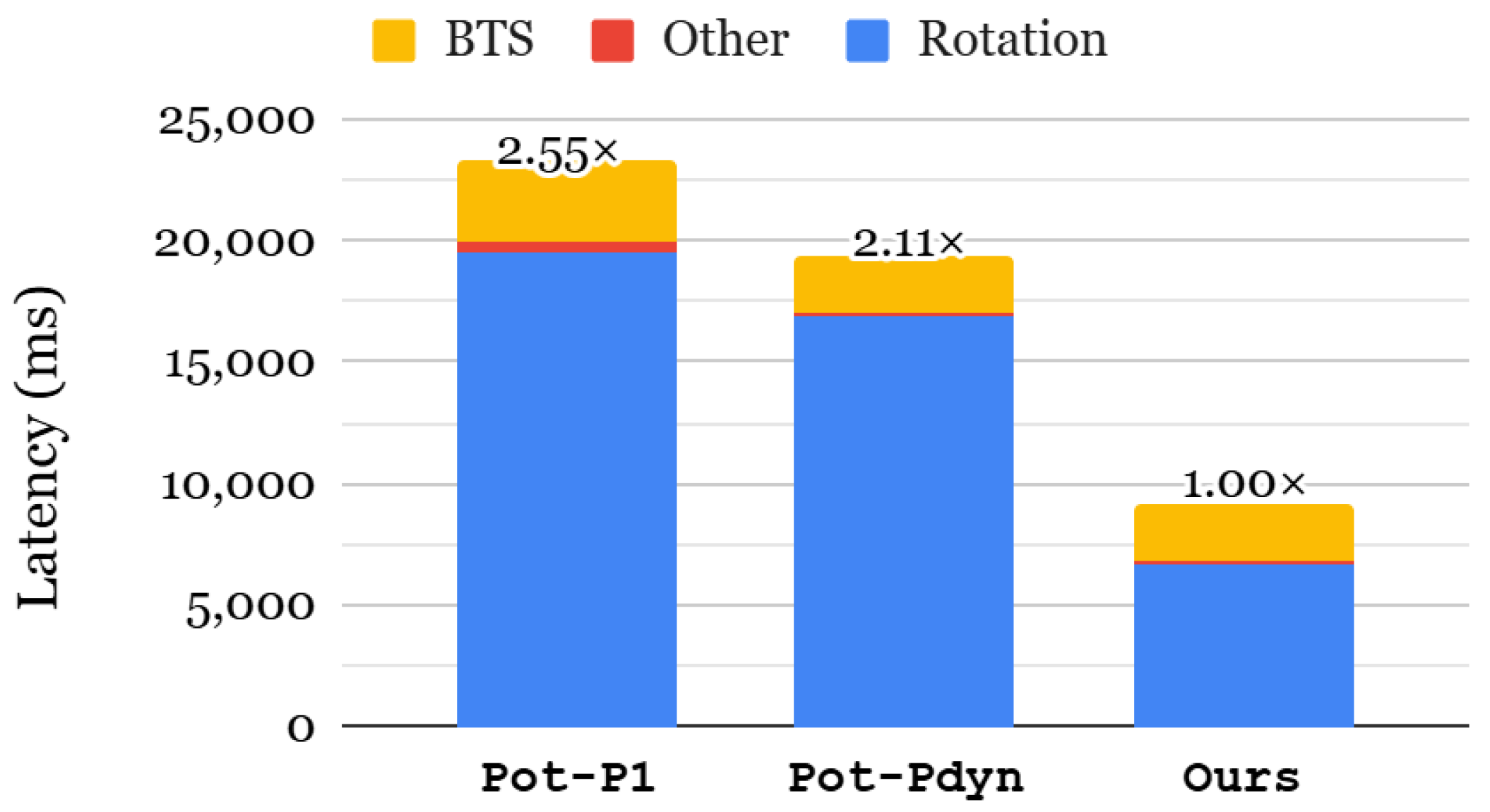

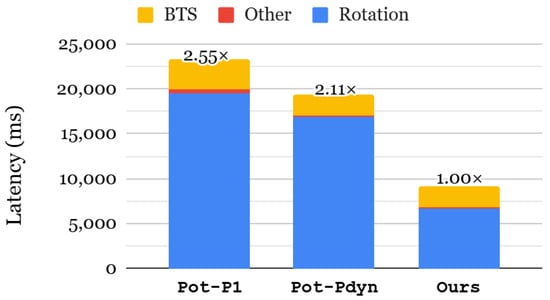

Figure 10 shows the end-to-end latency of the GRU-MLP model, comparing different KDS approaches. Since the latency of our method is identical to that of all-required index selection, we omit its result. The most time-consuming part of the GRU-MLP model is rotation, because both GRU and MLP layers rely heavily on matrix–vector multiplications, which involve numerous rotations. We first evaluate the impact of dynamic α selection by comparing Pot-P1 and Pot-Pdyn. Dynamic α selection reduces rotation and BTS cost by 1.16× and 1.52×, respectively, resulting in an overall inference speedup of 1.21×. Since the GRU-MLP model requires 254 distinct rotation indices, the all-required index selection results in a 2.53× speedup in rotation, leading to a 2.11× improvement in the end-to-end inference latency.

Figure 10.

End-to-end latency of GRU-MLP using NSL-KDD dataset.

6.3.2. Memory Consumption

Table 6 presents the memory consumption of the rotation keyset for the GRU-MLP model. As previously discussed, the model requires 254 distinct rotation indices, resulting in substantial memory overhead with the all-required index selection. In contrast, the power-of-two index selection reduces the memory requirement by 5.50–6.61× compared to all-required across the different configurations. However, as shown in Figure 10, power-of-two selection is 2.11–2.55× slower than all-required. To retain the performance benefits of all-required while mitigating its memory overhead, we adopted a level-selection strategy that generates only the necessary keys. Since dynamic α selection includes many unused keys at different levels, our selective key-generation approach efficiently reduces the overall memory usage. As a result, our proposed KDS improves both latency and memory efficiency over prior methods.

Table 6.

Memory consumption for rotation keyset of GRU-MLP model.

6.4. Case Study 2: Image Classification Based on Convolutional Neural Network (CNN)

Convolutional neural networks (CNNs) are widely adopted for analyzing image data, making them well-suited for sensors such as cameras that produce image data. To assess the effectiveness of our approach on CNNs, we evaluated two widely used architectures—ResNet-20 [6] and SqueezeNet [81]—on the CIFAR-10 dataset. Additionally, we compared our results with two baseline FHE CNNs that differ in ReLU depth: one using a low-depth ReLU function (AESPA) and another employing a high-depth ReLU function (MPCNN). AESPA utilizes a square function as its activation function, which consumes only one level, whereas MPCNN employs a composite function composed of multiple polynomials [82], resulting in significantly deeper multiplicative depths (e.g., 14).

6.4.1. End-to-End Inference Latency

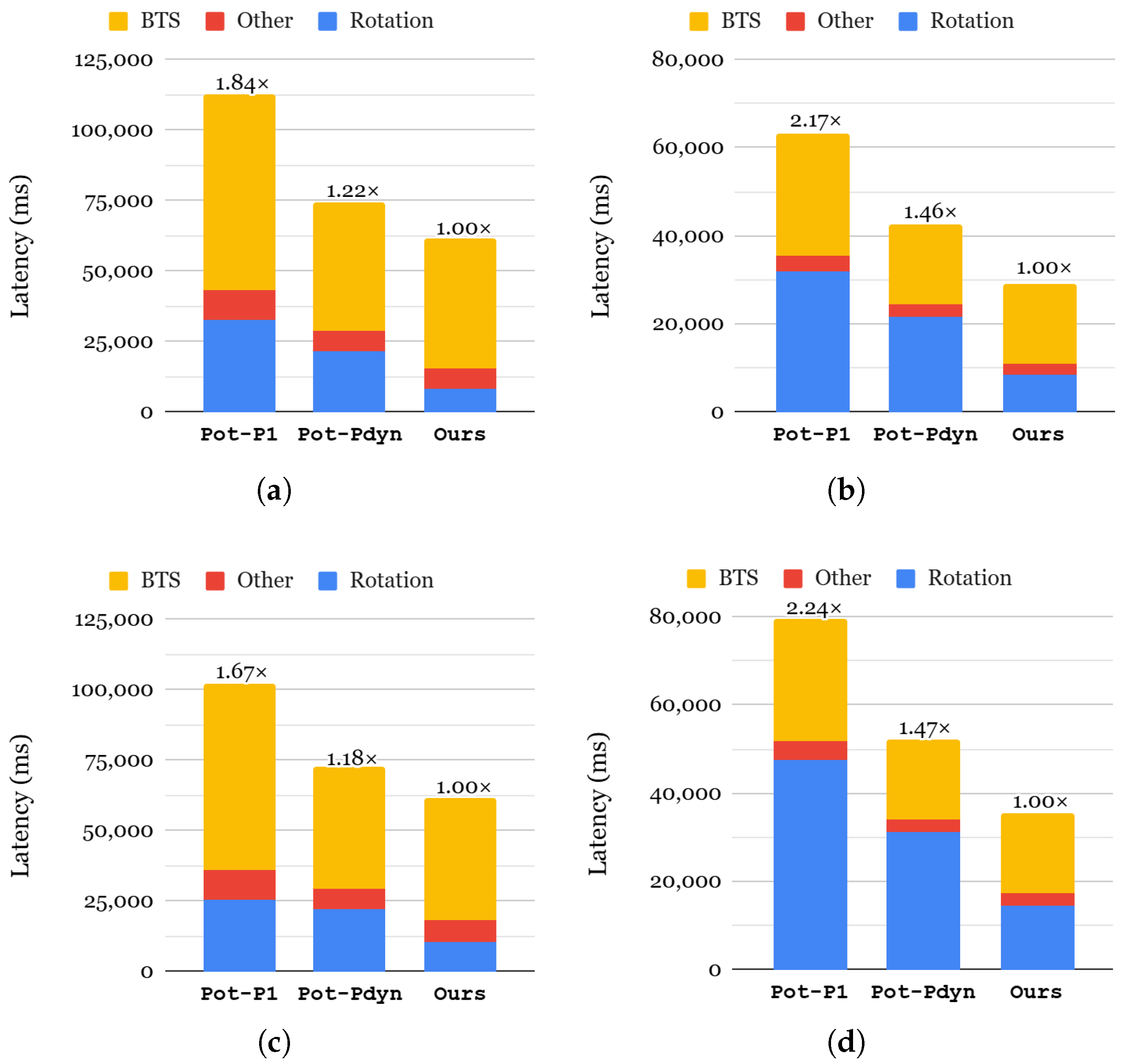

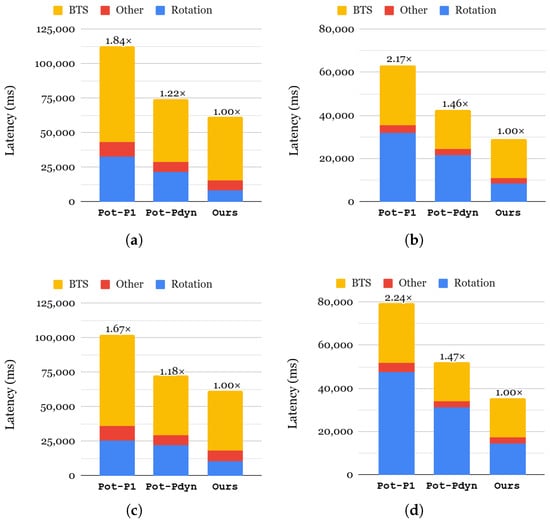

Figure 11 presents the end-to-end inference latency of our approach compared to two different α selection strategies. Note that level selection affects memory consumption rather than inference latency; therefore, we do not compare latency across level-selection approaches. For the two baselines, Pot-P1 and Pot-Pdyn, we chose power-of-two as the index selection to highlight the effectiveness of each KDS factor sequentially. Compared to Pot-P1, Pot-Pdyn achieves 1.41–1.52× faster inference for each neural network evaluation thanks to the acceleration in key switching. As represented in each bar, the latencies of all components are reduced, especially by 1.16–1.52× in rotation and 1.49× in bootstrapping. This demonstrates the effectiveness of dynamic α selection (Pot-Pdyn) compared to α = 1 (Pot-P1) for neural networks. The difference between our approach and Pot-Pdyn lies in index selection, for which we use all-required. By generating keys for all indices required by a network, our approach improves the rotation latency, which in turn improves overall inference performance: 1.22–1.45× for SqueezeNet and 1.18–1.47× for ResNet-20.

Figure 11.

Comparison of the end-to-end latency of neural networks using the CIFAR-10 dataset (a): SqueezeNet using MPCNN; (b): SqueezeNet using AESPA; (c): ResNet-20 using MPCNN; (d): ResNet-20 using AESPA.

6.4.2. Memory Consumption

Table 7 summarizes the memory consumption of rotation keysets under different KDS factor selections. The all-required index selection incurs the highest memory overhead, over 490 GB, because it generates keys for all required indices, resulting in greater memory consumption than the power-of-two index selection. For α selection, the dynamic strategy nearly doubles memory consumption because it requires storing multiple sets of rotation keys for different α values. While this configuration improves computational efficiency, it introduces a substantial trade-off in terms of storage requirements. The last row of Table 7 presents the results for our approach, which achieves the lowest memory consumption among all configurations. Our rotation keyset is designed to generate only the necessary keys up to the required ciphertext level for each neural network. For example, instead of generating rotation keys up to the maximum multiplicative depth, we limit key generation to the highest level required after bootstrapping (e.g., level 19 for a multiplication depth of 16). This significantly reduces memory usage while maintaining computational efficiency. Another notable finding is that our method is the only one that generates distinct keysets for all four benchmark cases, demonstrating its adaptability to different neural network architectures. Unlike baseline approaches that apply the same keyset to all benchmarks, our strategy adjusts key selection based on application-specific requirements, further optimizing memory efficiency.

Table 7.

Comparison of rotation keyset memory consumption.

7. Discussion and Future Work

7.1. Deploying Neural Networks Directly on IoT Devices

The simplest way to protect a user’s private data is to perform inference locally on the IoT device itself. To accommodate local inference on IoT devices, several works have turned to quantization or pruning [83,84,85,86], which make it possible to deploy AI models efficiently on edge devices by reducing the computational and memory overhead [8,87,88]. However, such approaches are not free optimizations; they trade off accuracy, degrading the quality of the model. The impact is frequently severe, with accuracy dropping by up to 60% [83]. To deliver high-quality models without compromise (i.e., without the accuracy loss associated with quantization), AI service providers typically perform inference on remote servers through MLaaS, which is the scenario adopted in this work. Additionally, service providers who consider their trained models intellectual property can avoid disclosing the model to clients.

7.2. Application to Diverse Neural Networks

Numerous studies have explored efficient methods for evaluating neural networks over FHE, including AESPA and MPCNN. For instance, AutoFHE [55] dynamically assigns polynomial approximations to different ReLU layers within a convolutional neural network (CNN), using a neural architecture search to predict their impact on accuracy and performance. In our evaluation on the AESPA model, which adopts the lowest-degree polynomial as its activation function, we observed the most varied distribution of ciphertext levels across layers. Based on this observation, we envision that our approach can effectively support the dynamic selection of activation functions. In addition, our approach is broadly applicable to a wide range of FHE-based neural networks, as it does not impose limitations on analyzing rotation indices. By only generating the necessary rotation keys while accounting for ciphertext levels, we anticipate that our method can improve efficiency across different architectures.

7.3. Future Works

In this subsection, we outline several directions for future work to help the research community further advance this study:

- Automated FHE Keyset Configuration: The automation of FHE-applied applications has been widely studied in recent years. Several works have aimed to improve usability by automating various aspects of FHE deployment, such as FHE parameter selection [67], bootstrapping placement [53,55], and FHE-specific operation scheduling (e.g., rescaling) [89,90]. Building on these efforts, developing an automated algorithm to determine the optimal FHE keyset configuration represents a promising research direction. Given our observation that optimized keysets can lead to significant improvements in either memory efficiency or inference performance, designing keyset selection algorithms tailored to application-level requirements is a valuable next step.

- FHE Keyset Configuration via Key Extension: While our work focuses on dynamic α selection, a recent study [73] proposed extending key sizes to improve performance. Specifically, they decompose keys for certain operations (including rotations), which increases memory overhead but reduces the complexity of NTT/iNTT transformations. Although this approach shows promise, several challenges remain before it can be practically adopted, including managing the trade-off between memory usage and execution speed, as well as integration with existing FHE toolchains. Extending the key induces much higher memory consumption, so performance depends heavily on the memory bandwidth of the hardware platform. Considering that the minimal keyset already consumes tens of GB, the increase leads to higher memory transfer latency. Meanwhile, note that our results were obtained on CPUs with DDR4 DRAM, where each memory channel is only 64 bits wide. Since memory bandwidth is already low and each key occupies megabytes (leaving few cache hits), memory transfer is already saturated because keys must be read for every FHE operation. As a result, the increased memory consumption from key extension does not lead to extensive performance degradation on such a platform. This contrast suggests that the characteristics of the hardware platform must be considered when choosing a keyset design, because a keyset that is efficient on one platform does not necessarily perform well on others.

8. Conclusions

In this work, we presented a comprehensive analysis of the key design space and introduced an approach to designing an efficient keyset for FHE-based neural networks. We presented three components of the key design space (KDS) and analyzed the impact of each on latency and memory consumption in neural networks. To demonstrate the applicability of our approach to sensor-based devices, we conducted two case studies: one for intrusion detection systems and the other for image classification. Our evaluation demonstrated that our level-aware approach reduces memory consumption by up to 11.29× while achieving an average 2.09× faster latency across different neural networks. We envision that our approach facilitates the practical deployment of FHE-based neural networks for resource-constrained environments, making PPML more feasible in real-world applications.

Author Contributions

Conceptualization, Y.P., H.O. and Y.J.; methodology, Y.J.; validation, H.O. and Y.J.; formal analysis, Y.P.; investigation, Y.J.; resources, Y.J.; data curation, Y.J. and S.H.; writing—original draft preparation, Y.P.; writing—review and editing, Y.P. and H.O.; visualization, Y.J. and S.H.; supervision, Y.P.; project administration, Y.J.; funding acquisition, Y.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partly supported by the National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (RS-2023-00277326 and RS-2022-00166529); the Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korean government (MSIT) (No. 2021-0-00528, Development of Hardware-centric Trusted Computing Base and Standard Protocol for Distributed Secure Data Box; No. RS-2023-00277060, Development of open edge AI SoC hardware and software platform; No. RS-2024-00438729, Development of Full Lifecycle Privacy-Preserving Techniques using Anonymized Confidential Computing); the Korea Planning & Evaluation Institute of Industrial Technology (KEIT) grant funded by the Korean Government (MOTIE) (No. RS-2024-00406121, Development of an Automotive Security Vulnerability-based Threat Analysis System (R&D)); and by the BK21 FOUR program of the Education and Research Program for Future ICT Pioneers, Seoul National University, in 2025.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| IoT | Internet of Things |
| MLaaS | Machine Learning as a Service |
| PPML | Privacy-Preserving Machine Learning |
| RLWE | Ring Learning with Errors |
| FHE | Fully Homomorphic Encryption |
| KDS | Keyset design space |
| RNS | Residue Number System |
| IDS | Intrusion detection system |

References

- Xie, L.; Li, Z.; Zhou, Y.; He, Y.; Zhu, J. Computational diagnostic techniques for electrocardiogram signal analysis. Sensors 2020, 20, 6318. [Google Scholar] [CrossRef] [PubMed]

- Neri, L.; Oberdier, M.T.; van Abeelen, K.C.; Menghini, L.; Tumarkin, E.; Tripathi, H.; Jaipalli, S.; Orro, A.; Paolocci, N.; Gallelli, I.; et al. Electrocardiogram monitoring wearable devices and artificial-intelligence-enabled diagnostic capabilities: A review. Sensors 2023, 23, 4805. [Google Scholar] [CrossRef] [PubMed]

- Arakawa, T. Recent research and developing trends of wearable sensors for detecting blood pressure. Sensors 2018, 18, 2772. [Google Scholar] [CrossRef] [PubMed]

- Konstantinidis, D.; Iliakis, P.; Tatakis, F.; Thomopoulos, K.; Dimitriadis, K.; Tousoulis, D.; Tsioufis, K. Wearable blood pressure measurement devices and new approaches in hypertension management: The digital era. J. Hum. Hypertens. 2022, 36, 945–951. [Google Scholar] [CrossRef]

- Lazazzera, R.; Belhaj, Y.; Carrault, G. A new wearable device for blood pressure estimation using photoplethysmogram. Sensors 2019, 19, 2557. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; PMLR: Cambridge, MA, USA, 2019; pp. 6105–6114. [Google Scholar]

- Li, X.; Li, Y.; Li, Y.; Cao, T.; Liu, Y. Flexnn: Efficient and adaptive dnn inference on memory-constrained edge devices. In Proceedings of the 30th Annual International Conference on Mobile Computing and Networking, Washington, DC, USA, 30 September–4 October 2024; pp. 709–723. [Google Scholar]

- Manage Your App’s Memory. Android Developers. 2023. Available online: https://developer.android.com/topic/performance/memory (accessed on 5 May 2025).

- Liu, Z.; Luo, P.; Wang, X.; Tang, X. Large-scale celebfaces attributes (celeba) dataset. Retrieved August 2018, 15, 11. [Google Scholar]

- Said, Y.; Barr, M. Human emotion recognition based on facial expressions via deep learning on high-resolution images. Multimed. Tools Appl. 2021, 80, 25241–25253. [Google Scholar] [CrossRef]

- Ning, X.; Xu, S.; Nan, F.; Zeng, Q.; Wang, C.; Cai, W.; Li, W.; Jiang, Y. Face editing based on facial recognition features. IEEE Trans. Cogn. Dev. Syst. 2022, 15, 774–783. [Google Scholar] [CrossRef]

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695. [Google Scholar]

- Liu, Z.; Luo, P.; Wang, X.; Tang, X. Deep learning face attributes in the wild. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3730–3738. [Google Scholar]

- Kim, M.; Jain, A.K.; Liu, X. Adaface: Quality adaptive margin for face recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 18750–18759. [Google Scholar]

- Korshunova, I.; Shi, W.; Dambre, J.; Theis, L. Fast face-swap using convolutional neural networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3677–3685. [Google Scholar]

- Li, Z.; Cao, M.; Wang, X.; Qi, Z.; Cheng, M.M.; Shan, Y. Photomaker: Customizing realistic human photos via stacked id embedding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 8640–8650. [Google Scholar]

- Fei, B.; Lyu, Z.; Pan, L.; Zhang, J.; Yang, W.; Luo, T.; Zhang, B.; Dai, B. Generative diffusion prior for unified image restoration and enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 9935–9946. [Google Scholar]

- Andriluka, M.; Pishchulin, L.; Gehler, P.; Schiele, B. 2D Human Pose Estimation: New Benchmark and State of the Art Analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3686–3693. [Google Scholar]

- Goel, S.; Pavlakos, G.; Rajasegaran, J.; Kanazawa, A.; Malik, J. Humans in 4D: Reconstructing and tracking humans with transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Vancouver, BC, Canada, 17–24 June 2023; pp. 14783–14794. [Google Scholar]

- Zheng, C.; Wu, W.; Chen, C.; Yang, T.; Zhu, S.; Shen, J.; Kehtarnavaz, N.; Shah, M. Deep learning-based human pose estimation: A survey. ACM Comput. Surv. 2023, 56, 1–37. [Google Scholar] [CrossRef]

- Busso, C.; Bulut, M.; Lee, C.C.; Kazemzadeh, A.; Mower, E.; Kim, S.; Chang, J.N.; Lee, S.; Narayanan, S.S. IEMOCAP: Interactive emotional dyadic motion capture database. Lang. Resour. Eval. 2008, 42, 335–359. [Google Scholar] [CrossRef]

- Chudasama, V.; Kar, P.; Gudmalwar, A.; Shah, N.; Wasnik, P.; Onoe, N. M2fnet: Multi-modal fusion network for emotion recognition in conversation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4652–4661. [Google Scholar]

- Moody, G.B.; Mark, R.G. The impact of the MIT-BIH arrhythmia database. IEEE Eng. Med. Biol. Mag. 2001, 20, 45–50. [Google Scholar] [CrossRef]

- Mousavi, S.; Afghah, F. Inter-and intra-patient ecg heartbeat classification for arrhythmia detection: A sequence to sequence deep learning approach. In Proceedings of the ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1308–1312. [Google Scholar]

- Tuli, S.; Casale, G.; Jennings, N.R. Tranad: Deep transformer networks for anomaly detection in multivariate time series data. arXiv 2022, arXiv:2201.07284. [Google Scholar] [CrossRef]

- Wagner, P.; Strodthoff, N.; Bousseljot, R.D.; Kreiseler, D.; Lunze, F.I.; Samek, W.; Schaeffter, T. PTB-XL, a large publicly available electrocardiography dataset. Sci. Data 2020, 7, 1–15. [Google Scholar] [CrossRef]

- Xiao, Q.; Lee, K.; Mokhtar, S.A.; Ismail, I.; Pauzi, A.L.b.M.; Zhang, Q.; Lim, P.Y. Deep learning-based ECG arrhythmia classification: A systematic review. Appl. Sci. 2023, 13, 4964. [Google Scholar] [CrossRef]

- Malakouti, S.M. Heart disease classification based on ECG using machine learning models. Biomed. Signal Process. Control 2023, 84, 104796. [Google Scholar] [CrossRef]

- Reiss, A.; Stricker, D. Introducing a new benchmarked dataset for activity monitoring. In Proceedings of the 2012 16th International Symposium on Wearable Computers, Newcastle, UK, 18–22 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 108–109. [Google Scholar]

- Zhang, S.; Li, Y.; Zhang, S.; Shahabi, F.; Xia, S.; Deng, Y.; Alshurafa, N. Deep learning in human activity recognition with wearable sensors: A review on advances. Sensors 2022, 22, 1476. [Google Scholar] [CrossRef]

- Dua, N.; Singh, S.N.; Semwal, V.B. Multi-input CNN-GRU based human activity recognition using wearable sensors. Computing 2021, 103, 1461–1478. [Google Scholar] [CrossRef]

- Anguita, D.; Ghio, A.; Oneto, L.; Parra, X.; Reyes-Ortiz, J.L. A public domain dataset for human activity recognition using smartphones. In Proceedings of the ESANN, Bruges, Belgium, 24–26 April 2013; Volume 3, pp. 3–4.

- Mutegeki, R.; Han, D.S. A CNN-LSTM approach to human activity recognition. In Proceedings of the 2020 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Fukuoka, Japan, 19–21 February 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 362–366.

- Murad, A.; Pyun, J.Y. Deep recurrent neural networks for human activity recognition. Sensors 2017, 17, 2556.

- Dua, D.; Graff, C. UCI Machine Learning Repository. 2017. Available online: https://kdd.ics.uci.edu/databases/kddcup99/kddcup99.html (accessed on 5 May 2025).

- Tavallaee, M.; Bagheri, E.; Lu, W.; Ghorbani, A.A. A detailed analysis of the KDD CUP 99 data set. In Proceedings of the 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications, Ottawa, ON, Canada, 8–10 July 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 1–6.

- Xu, C.; Shen, J.; Du, X.; Zhang, F. An intrusion detection system using a deep neural network with gated recurrent units. IEEE Access 2018, 6, 48697–48707.

- Fu, Y.; Du, Y.; Cao, Z.; Li, Q.; Xiang, W. A deep learning model for network intrusion detection with imbalanced data. Electronics 2022, 11, 898.

- Winkler, T.; Rinner, B. Privacy and security in video surveillance. In Intelligent Multimedia Surveillance: Current Trends and Research; Springer: Berlin/Heidelberg, Germany, 2013; pp. 37–66.

- Liranzo, J.; Hayajneh, T. Security and privacy issues affecting cloud-based IP camera. In Proceedings of the 2017 IEEE 8th Annual Ubiquitous Computing, Electronics and Mobile Communication Conference (UEMCON), New York City, NY, USA, 19–21 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 458–465.

- Li, J.; Zhang, Z.; Yu, S.; Yuan, J. Improved secure deep neural network inference offloading with privacy-preserving scalar product evaluation for edge computing. Appl. Sci. 2022, 12, 9010.

- Zhu, W.; Sun, Y.; Liu, J.; Cheng, Y.; Ji, X.; Xu, W. CamPro: Camera-based anti-facial recognition. arXiv 2023, arXiv:2401.00151.

- Bigelli, L.; Contoli, C.; Freschi, V.; Lattanzi, E. Privacy preservation in sensor-based Human Activity Recognition through autoencoders for low-power IoT devices. Internet Things 2024, 26, 101189.

- Newaz, A.I.; Sikder, A.K.; Rahman, M.A.; Uluagac, A.S. A survey on security and privacy issues in modern healthcare systems: Attacks and defenses. ACM Trans. Comput. Healthc. 2021, 2, 1–44.

- Celik, Z.B.; McDaniel, P.; Tan, G. Soteria: Automated IoT safety and security analysis. In Proceedings of the 2018 USENIX Annual Technical Conference (USENIX ATC 18), Boston, MA, USA, 11–13 July 2018; pp. 147–158.

- Ometov, A.; Molua, O.L.; Komarov, M.; Nurmi, J. A survey of security in cloud, edge, and fog computing. Sensors 2022, 22, 927.

- Nassif, A.B.; Talib, M.A.; Nasir, Q.; Albadani, H.; Dakalbab, F.M. Machine learning for cloud security: A systematic review. IEEE Access 2021, 9, 20717–20735.

- Attaullah, H.; Sanaullah, S.; Jungeblut, T. Analyzing Machine Learning Models for Activity Recognition Using Homomorphically Encrypted Real-World Smart Home Datasets: A Case Study. Appl. Sci. 2024, 14, 9047.

- Kim, S.; Kim, J.; Kim, M.J.; Jung, W.; Kim, J.; Rhu, M.; Ahn, J.H. BTS: An accelerator for bootstrappable fully homomorphic encryption. In Proceedings of the 49th Annual International Symposium on Computer Architecture, New York, NY, USA, 18–22 June 2022; pp. 711–725.

- Riazi, M.S.; Laine, K.; Pelton, B.; Dai, W. HEAX: An architecture for computing on encrypted data. In Proceedings of the Twenty-Fifth International Conference on Architectural Support for Programming Languages and Operating Systems, Lausanne, Switzerland, 16–20 March 2020; pp. 1295–1309.

- Cheon, S.; Lee, Y.; Kim, D.; Lee, J.M.; Jung, S.; Kim, T.; Lee, D.; Kim, H. DaCapo: Automatic Bootstrapping Management for Efficient Fully Homomorphic Encryption. In Proceedings of the 33rd USENIX Security Symposium (USENIX Security 24), Philadelphia, PA, USA, 14–16 August 2024; pp. 6993–7010.

- Lee, E.; Lee, J.W.; Lee, J.; Kim, Y.S.; Kim, Y.; No, J.S.; Choi, W. Low-complexity deep convolutional neural networks on fully homomorphic encryption using multiplexed parallel convolutions. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; PMLR: Cambridge, MA, USA, 2022; pp. 12403–12422.

- Ao, W.; Boddeti, V.N. AutoFHE: Automated Adaption of CNNs for Efficient Evaluation over FHE. In Proceedings of the 33rd USENIX Security Symposium (USENIX Security 24), Philadelphia, PA, USA, 14–16 August 2024; pp. 2173–2190.

- Cheon, J.H.; Kim, A.; Kim, M.; Song, Y. Homomorphic encryption for arithmetic of approximate numbers. In Advances in Cryptology—ASIACRYPT 2017, Proceedings of the 23rd International Conference on the Theory and Applications of Cryptology and Information Security, Hong Kong, China, 3–7 December 2017; Proceedings, Part I 23; Springer: Berlin/Heidelberg, Germany, 2017; pp. 409–437.

- Cheon, J.H.; Han, K.; Kim, A.; Kim, M.; Song, Y. A full RNS variant of approximate homomorphic encryption. In Selected Areas in Cryptography—SAC 2018, Proceedings of the 25th International Conference, Calgary, AB, Canada, 15–17 August 2018; Revised Selected Papers 25; Springer: Berlin/Heidelberg, Germany, 2019; pp. 347–368.

- Han, K.; Ki, D. Better bootstrapping for approximate homomorphic encryption. In Proceedings of the Cryptographers’ Track at the RSA Conference, San Francisco, CA, USA, 24–28 February 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 364–390.

- CryptoLab. HEaaN Private AI Homomorphic Encryption Library. 2025. Available online: https://heaan.it/ (accessed on 5 May 2025).

- Microsoft SEAL, Version 4.1; Microsoft Research, Redmond, WA, USA. 2023. Available online: https://github.com/Microsoft/SEAL (accessed on 5 May 2025).

- Lattigo v5. EPFL-LDS, Tune Insight SA. 2023. Available online: https://github.com/tuneinsight/lattigo (accessed on 5 May 2025).

- Bae, Y.; Cheon, J.H.; Kim, J.; Park, J.H.; Stehlé, D. HERMES: Efficient ring packing using MLWE ciphertexts and application to transciphering. In Proceedings of the Annual International Cryptology Conference, Santa Barbara, CA, USA, 20–24 August 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 37–69.

- Hwang, I.; Seo, J.; Song, Y. Optimizing HE operations via Level-aware Key-switching Framework. In Proceedings of the 11th Workshop on Encrypted Computing & Applied Homomorphic Cryptography, Copenhagen, Denmark, 26 November 2023; pp. 59–67.

- Juvekar, C.; Vaikuntanathan, V.; Chandrakasan, A. GAZELLE: A low latency framework for secure neural network inference. In Proceedings of the 27th USENIX Security Symposium (USENIX Security 18), Baltimore, MD, USA, 15–17 August 2018; pp. 1651–1669.

- Wang, W.; Huang, X.; Emmart, N.; Weems, C. VLSI design of a large-number multiplier for fully homomorphic encryption. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2013, 22, 1879–1887.

- Turan, F.; Roy, S.S.; Verbauwhede, I. HEAWS: An Accelerator for Homomorphic Encryption on the Amazon AWS FPGA. IEEE Trans. Comput. 2020, 69, 1185–1196.

- Dathathri, R.; Kostova, B.; Saarikivi, O.; Dai, W.; Laine, K.; Musuvathi, M. EVA: An Encrypted Vector Arithmetic Language and Compiler for Efficient Homomorphic Computation. In Proceedings of the 41st ACM SIGPLAN Conference on Programming Language Design and Implementation, London, UK, 15–20 June 2020; pp. 546–561.

- Dathathri, R.; Saarikivi, O.; Chen, H.; Laine, K.; Lauter, K.; Maleki, S.; Musuvathi, M.; Mytkowicz, T. CHET: An optimizing compiler for fully-homomorphic neural-network inferencing. In Proceedings of the 40th ACM SIGPLAN Conference on Programming Language Design and Implementation, Phoenix, AZ, USA, 22–26 June 2019; pp. 142–156.

- Malik, R.; Sheth, K.; Kulkarni, M. Coyote: A Compiler for Vectorizing Encrypted Arithmetic Circuits. In Proceedings of the 28th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, Vancouver, BC, Canada, 25–29 March 2023; Volume 3, pp. 118–133.

- Viand, A.; Jattke, P.; Haller, M.; Hithnawi, A. HECO: Automatic code optimizations for efficient fully homomorphic encryption. arXiv 2022, arXiv:2202.01649.

- Al Badawi, A.; Jin, C.; Lin, J.; Mun, C.F.; Jie, S.J.; Tan, B.H.M.; Nan, X.; Aung, K.M.M.; Chandrasekhar, V.R. Towards the AlexNet Moment for Homomorphic Encryption: HCNN, the First Homomorphic CNN on Encrypted Data with GPUs. IEEE Trans. Emerg. Top. Comput. 2020, 9, 1330–1343.

- Park, J.; Kim, M.J.; Jung, W.; Ahn, J.H. AESPA: Accuracy preserving low-degree polynomial activation for fast private inference. arXiv 2022, arXiv:2201.06699.

- Kim, M.; Lee, D.; Seo, J.; Song, Y. Accelerating HE operations from key decomposition technique. In Proceedings of the Annual International Cryptology Conference, Santa Barbara, CA, USA, 20–24 August 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 70–92.

- Chan, J.L.; Lee, W.K.; Wong, D.C.K.; Yap, W.S.; Goi, B.M. ARK: Adaptive Rotation Key Management for Fully Homomorphic Encryption Targeting Memory Efficient Deep Learning Inference. Cryptol. ePrint Arch. 2024; 2024/1948.

- Joo, Y.; Ha, S.; Oh, H.; Paek, Y. Rotation Keyset Generation Strategy for Efficient Neural Networks Using Homomorphic Encryption. In Proceedings of the International Conference on Artificial Intelligence Computing and Systems (AIComps), Jeju, Republic of Korea, 16–18 December 2024; KIPS: Seoul, Republic of Korea, 2024; pp. 37–42.

- Li, J.; Li, Z.; Tyson, G.; Xie, G. Your privilege gives your privacy away: An analysis of a home security camera service. In Proceedings of the IEEE INFOCOM 2020-IEEE Conference on Computer Communications, Toronto, ON, Canada, 6–9 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 387–396.

- Apthorpe, N.; Huang, D.Y.; Reisman, D.; Narayanan, A.; Feamster, N. Keeping the smart home private with smart(er) IoT traffic shaping. arXiv 2018, arXiv:1812.00955.

- Acar, A.; Fereidooni, H.; Abera, T.; Sikder, A.K.; Miettinen, M.; Aksu, H.; Conti, M.; Sadeghi, A.R.; Uluagac, S. Peek-a-boo: I see your smart home activities, even encrypted! In Proceedings of the 13th ACM Conference on Security and Privacy in Wireless and Mobile Networks, Linz, Austria, 8–10 July 2020; pp. 207–218.

- Copos, B.; Levitt, K.; Bishop, M.; Rowe, J. Is Anybody Home? Inferring Activity From Smart Home Network Traffic. In Proceedings of the 2016 IEEE Security and Privacy Workshops (SPW), San Jose, CA, USA, 22–26 May 2016; pp. 245–251.

- Jang, J.; Lee, Y.; Kim, A.; Na, B.; Yhee, D.; Lee, B.; Cheon, J.H.; Yoon, S. Privacy-preserving deep sequential model with matrix homomorphic encryption. In Proceedings of the 2022 ACM on Asia Conference on Computer and Communications Security, Nagasaki, Japan, 30 May–3 June 2022; pp. 377–391.

- Iandola, F.N. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

- Lee, E.; Lee, J.W.; No, J.S.; Kim, Y.S. Minimax approximation of sign function by composite polynomial for homomorphic comparison. IEEE Trans. Dependable Secur. Comput. 2021, 19, 3711–3727.

- Wu, H.; Judd, P.; Zhang, X.; Isaev, M.; Micikevicius, P. Integer quantization for deep learning inference: Principles and empirical evaluation. arXiv 2020, arXiv:2004.09602.

- Li, Z.; Li, H.; Meng, L. Model compression for deep neural networks: A survey. Computers 2023, 12, 60.

- Molchanov, P.; Tyree, S.; Karras, T.; Aila, T.; Kautz, J. Pruning convolutional neural networks for resource efficient inference. arXiv 2016, arXiv:1611.06440.

- Kuzmin, A.; Nagel, M.; Van Baalen, M.; Behboodi, A.; Blankevoort, T. Pruning vs quantization: Which is better? Adv. Neural Inf. Process. Syst. 2023, 36, 62414–62427.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- Sun, Z.; Yu, H.; Song, X.; Liu, R.; Yang, Y.; Zhou, D. MobileBERT: A compact task-agnostic BERT for resource-limited devices. arXiv 2020, arXiv:2004.02984.

- Lee, Y.; Cheon, S.; Kim, D.; Lee, D.; Kim, H. ELASM: Error-Latency-Aware Scale Management for Fully Homomorphic Encryption. In Proceedings of the 32nd USENIX Security Symposium (USENIX Security 23), Anaheim, CA, USA, 9–11 August 2023; pp. 4697–4714.

- Lee, Y.; Cheon, S.; Kim, D.; Lee, D.; Kim, H. Performance-aware scale analysis with reserve for homomorphic encryption. In Proceedings of the 29th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, La Jolla, CA, USA, 27 April–1 May 2024; Volume 1, pp. 302–317.