Improvement of SAM2 Algorithm Based on Kalman Filtering for Long-Term Video Object Segmentation

Abstract

1. Introduction

2. Related Work

2.1. Visual Object Segmentation (VOS)

2.2. Segment Anything Model (SAM)

3. Methods

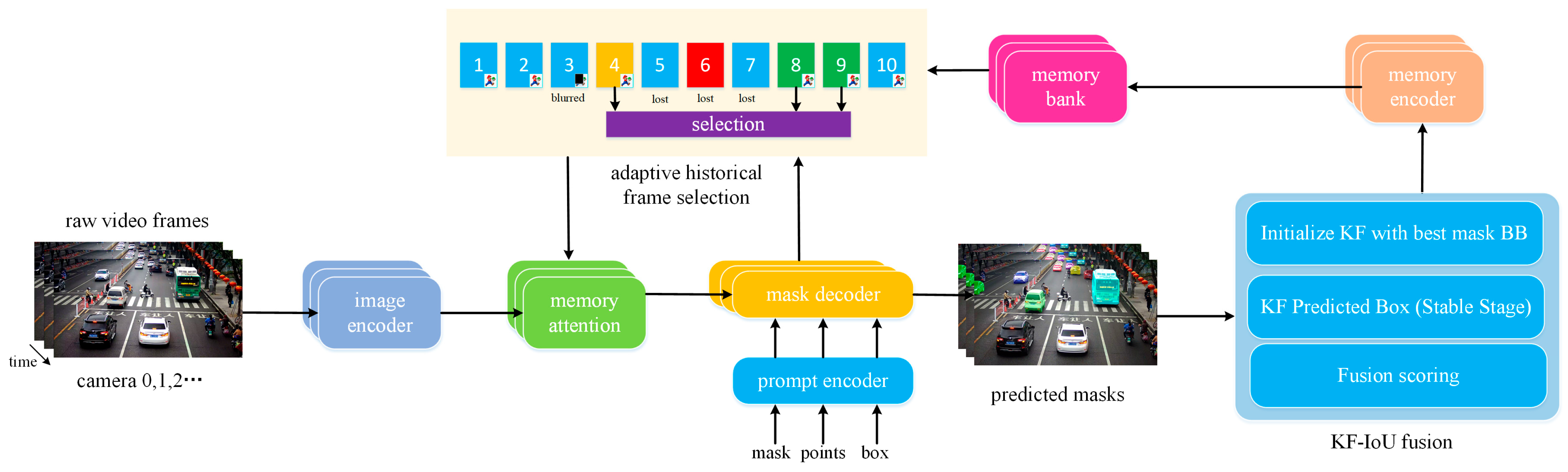

3.1. Baseline SAM2 Overview

- Image Encoder: SAM2 employs a ViT-Hiera backbone [38] to extract hierarchical visual embeddings. These dense features are used for both image and video inference.

- Prompt Encoder: User-defined prompts (e.g., clicks, boxes, and masks) are encoded into task-specific embeddings to guide segmentation.

- Memory Bank: SAM2 maintains a memory bank of previous frame features to support video tracking. Features are encoded using a memory encoder and aligned through memory attention.

- Mask Decoder: Based on fused visual and memory features, the mask decoder generates segmentation masks conditioned on prompts. In video sequences, it also ensures temporal stability.
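The role of the memory bank among these components can be pictured with a toy sketch. It assumes a rolling window of recent frames plus a separate store for prompted frames; the class name `MemoryBank`, the capacity, and the string stand-ins for features are illustrative assumptions, not SAM2's actual data structures.

```python
from collections import deque


class MemoryBank:
    """Toy memory bank: prompted frames are kept, recent frames roll over."""

    def __init__(self, max_recent=6):  # capacity is an assumed value
        self.prompted = {}                      # frame_idx -> feature, never evicted
        self.recent = deque(maxlen=max_recent)  # (frame_idx, feature), oldest dropped first

    def add(self, frame_idx, feature, prompted=False):
        if prompted:
            self.prompted[frame_idx] = feature
        else:
            self.recent.append((frame_idx, feature))

    def context(self):
        """Frame indices that memory attention would condition on, in order."""
        return sorted(self.prompted) + [idx for idx, _ in self.recent]


bank = MemoryBank(max_recent=3)
bank.add(0, "feat0", prompted=True)   # the user-prompted frame
for idx in range(1, 6):
    bank.add(idx, f"feat{idx}")       # frames produced during tracking
print(bank.context())  # [0, 3, 4, 5]
```

Frames 1 and 2 fall out of the rolling window, while the prompted frame 0 stays available as a reference for the mask decoder.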

3.2. Kalman Filtering-IoU Fusion Framework

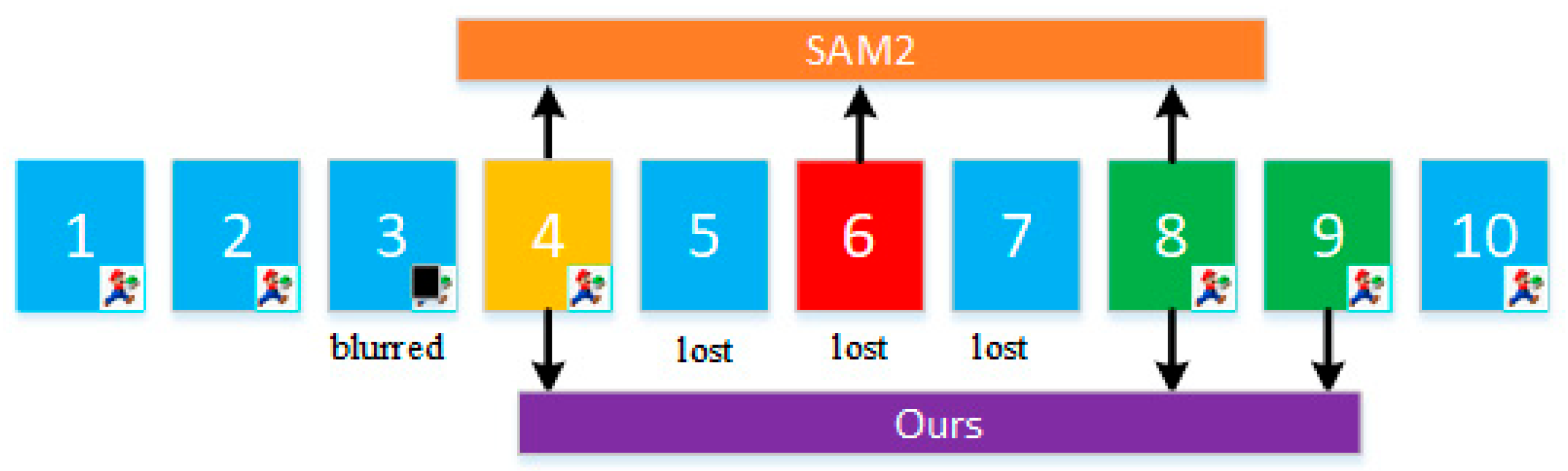

3.3. Adaptive Historical Frame Selection Strategy Based on Dynamic Threshold

3.4. Memory Management and Optimization for Efficient Video Segmentation

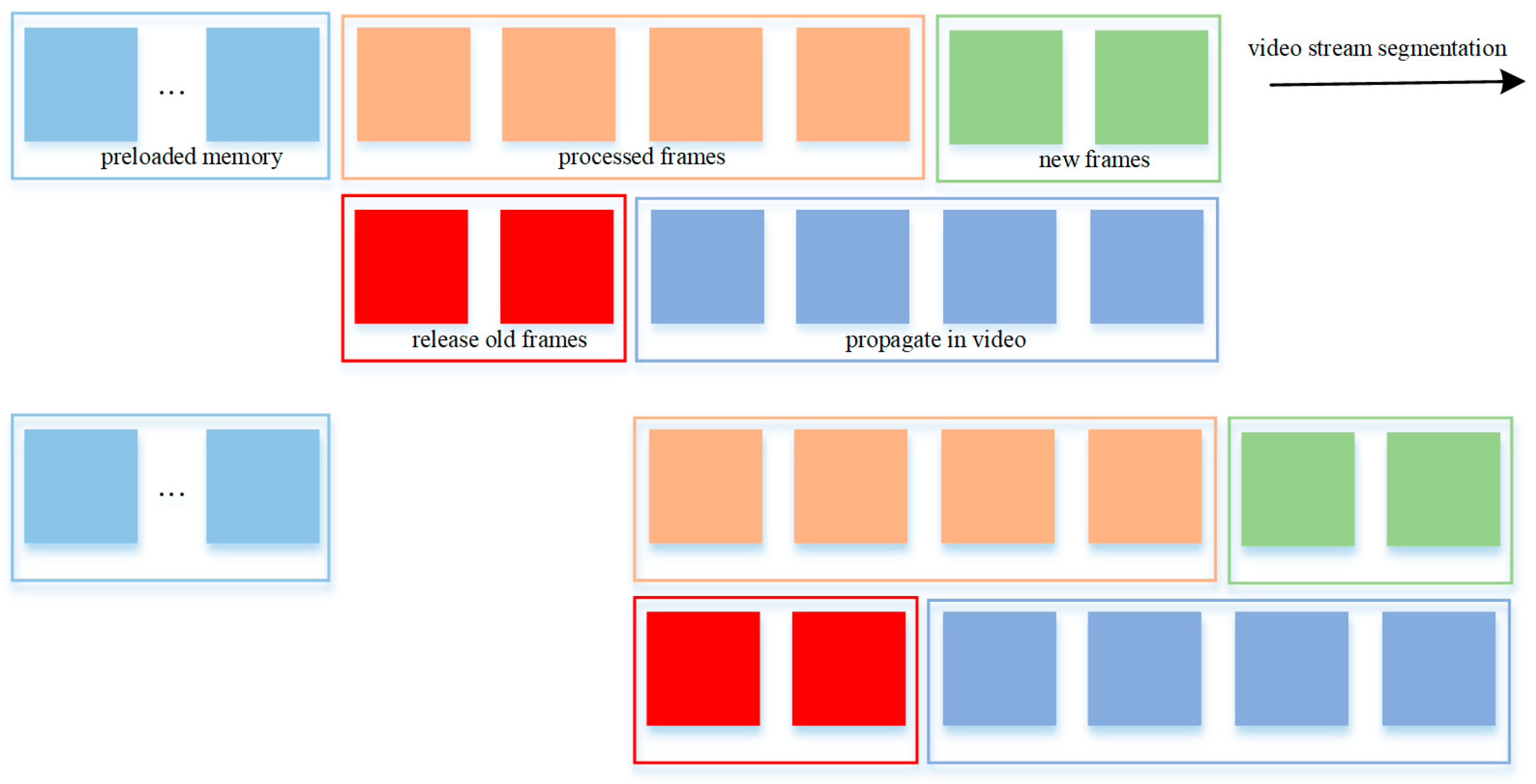

3.4.1. Dynamic Memory Management

- Offloading frames to CPU RAM: By setting the offload_video_to_cpu parameter in the init_state method [39], video frames are transferred from GPU VRAM to CPU RAM, reducing the GPU memory footprint without sacrificing availability during inference.

- Manual VRAM release after memory attention calculation: Intermediate variables generated during the Memory Attention computation were found to persist unnecessarily in VRAM. We added explicit cleanup operations to release these variables immediately after use, significantly lowering peak memory usage.
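The explicit-release pattern in the second item can be sketched as follows. This is a minimal illustration under stated assumptions: `Scratch` and `attend_and_release` are hypothetical stand-ins for the intermediate tensors and the memory attention step, and the PyTorch cache flush only runs when PyTorch and a CUDA device are present. In SAM2 itself this would accompany a call to `init_state` with `offload_video_to_cpu` enabled, as described in the first item.

```python
import gc
import weakref

try:
    import torch  # optional; the sketch also runs without it
except ImportError:
    torch = None


class Scratch:
    """Stand-in for a large intermediate tensor (hypothetical)."""

    def __init__(self, values):
        self.values = values


def attend_and_release(memory, query, probe=None):
    """Compute a toy attention result, then free the intermediate explicitly."""
    scores = Scratch([m * query for m in memory])  # intermediate buffer
    if probe is not None:
        probe.append(weakref.ref(scores))          # lets a caller check it was freed
    result = sum(scores.values)
    del scores                                     # drop the last strong reference
    gc.collect()
    if torch is not None and torch.cuda.is_available():
        torch.cuda.empty_cache()                   # return cached blocks to the driver
    return result


probe = []
out = attend_and_release([1, 2, 3], 2, probe)
print(out, probe[0]() is None)
```

The weak reference is only there to make the release observable; in a real pipeline the `del` and cache flush would sit directly after the memory attention call.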

3.4.2. Efficient Propagation and Result Management

- Limiting propagation length: We cap the propagation length at M frames, so that each correction operation affects only a fixed number of historical frames. This balances correction robustness against computational cost, reducing the total number of frames processed during inference from N to approximately (M/K) × N, where K is the frame buffer size.

- Immediate release of processed segmentation results: We observed that caching segmentation results in the video_segments dictionary led to linear memory growth. To counteract this, we implemented proactive deallocation, releasing each frame’s segmentation results immediately after use. This prevents unbounded dictionary expansion and stabilizes memory usage.
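Both measures can be illustrated with a short sketch. It assumes that one correction fires per K-frame buffer and propagates back over at most M frames, and that segmentation results live in a plain dictionary keyed by frame index; the helper names `total_propagated_frames` and `drain_segments` are hypothetical.

```python
def total_propagated_frames(n_frames, k, m):
    """Frames visited when a correction fires every k frames and looks back at most m."""
    total = 0
    for end in range(k, n_frames + 1, k):
        total += min(m, end)  # near the start fewer than m frames may exist
    return total


def drain_segments(video_segments, handle):
    """Pass each frame's result to `handle` and drop it from the cache right away."""
    for frame_idx in sorted(video_segments):
        handle(frame_idx, video_segments.pop(frame_idx))


# N = 1200 frames, buffer size K = 40, propagation cap M = 20
print(total_propagated_frames(1200, k=40, m=20))  # 600, i.e. (20/40) * 1200

cache = {2: "mask2", 0: "mask0", 1: "mask1"}
seen = []
drain_segments(cache, lambda idx, mask: seen.append(idx))
print(seen, len(cache))  # [0, 1, 2] 0
```

Because every entry is popped as soon as it is consumed, the dictionary never holds more than one propagation window of results.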

3.4.3. Continuous Old Frame Clearing

- Retention Threshold Mechanism: Only frames that lie more than max_inference_state_frames behind the current frame are cleared. Crucially, max_inference_state_frames [39,40] must be greater than the max_frame_num_to_track argument of the propagate_in_video() function, so that historically relevant frames are retained until they are no longer needed.

- Automatic Cleanup After Propagation: Following each propagation step, frames exceeding the retention limit are automatically released from memory. Frames stored in the preload memory bank are preserved indefinitely.

- Memory Bounds During Inference: The upper bound of VRAM usage is dictated by the memory required for newly processed frames, retained frames, and the fixed-size preload memory bank. The lower bound corresponds to the memory required for the maximum propagation length plus the preload bank. This strategy ensures memory usage remains within a predictable range, effectively balancing efficiency and safety.
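The retention rule can be sketched as a small cleanup routine. This is an illustration under assumptions, not the released implementation: frames are held in a dictionary keyed by frame index, the preload memory bank is modelled as a set of protected indices, and `release_old_frames` is a hypothetical helper name.

```python
def release_old_frames(frames, current_idx, max_inference_state_frames,
                       max_frame_num_to_track, preloaded=frozenset()):
    """Delete frames farther back than the retention window; return their indices."""
    if max_inference_state_frames <= max_frame_num_to_track:
        raise ValueError(
            "max_inference_state_frames must exceed max_frame_num_to_track"
        )
    cutoff = current_idx - max_inference_state_frames
    stale = [i for i in frames if i < cutoff and i not in preloaded]
    for i in stale:
        del frames[i]  # frees the per-frame state
    return stale


frames = {i: f"frame{i}" for i in range(100)}
removed = release_old_frames(
    frames,
    current_idx=99,
    max_inference_state_frames=40,
    max_frame_num_to_track=30,
    preloaded={0, 1},  # preload memory bank frames are never cleared
)
print(len(removed), len(frames))  # 57 43
```

After the call, the retained set consists of the two preloaded frames plus frames 59 to 99, so the number of resident frames stays bounded regardless of video length.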

3.4.4. Advanced Memory Optimization Techniques

- Index Mapping Decoupling: We refactored frame access logic to use an independent frame index mapping table, decoupling logical frame references from physical storage. This allows precise tracking of required frames without relying on sequential indexing.

- Dynamic State Synchronization: The frame index mapping table is dynamically maintained during state initialization and updates, ensuring real-time alignment between logical indices and the current frame sequence.

- Coordinated Cleanup Strategy: A dedicated function systematically removes outdated frames that exceed retention thresholds. This function synchronizes updates to both the image cache container and the logical index table, preserving data-reference consistency.

- Global Frame Statistics: We modified the historical frame counter to track the total number of frames ever loaded rather than just those currently active. This distinction enables accurate memory accounting and avoids overestimating active storage needs.
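The four points above can be combined in one small data structure. The sketch below is a simplified illustration: `FrameStore`, `index_map`, `total_loaded`, and `drop_before` are hypothetical names, and images are represented by strings rather than tensors.

```python
class FrameStore:
    """Image cache addressed by logical frame index rather than list position."""

    def __init__(self):
        self.images = []       # physical storage, compacted on cleanup
        self.index_map = {}    # logical frame index -> position in self.images
        self.total_loaded = 0  # frames ever loaded; never decremented

    def append(self, image):
        logical_idx = self.total_loaded
        self.index_map[logical_idx] = len(self.images)
        self.images.append(image)
        self.total_loaded += 1
        return logical_idx

    def get(self, logical_idx):
        return self.images[self.index_map[logical_idx]]

    def drop_before(self, logical_idx):
        """Remove older frames, updating cache and index table together."""
        keep = sorted(i for i in self.index_map if i >= logical_idx)
        self.images = [self.images[self.index_map[i]] for i in keep]
        self.index_map = {i: pos for pos, i in enumerate(keep)}


store = FrameStore()
for n in range(5):
    store.append(f"f{n}")
store.drop_before(3)             # frames 0 to 2 are released
new_idx = store.append("f5")     # logical numbering continues from the global counter
print(store.get(4), len(store.images), store.total_loaded, new_idx)  # f4 3 6 5
```

Logical index 4 still resolves to the same frame after cleanup even though its physical position changed, and the global counter keeps new frames from reusing old indices.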

4. Experiments

4.1. Metrics and Datasets

4.2. Environment and Parameters

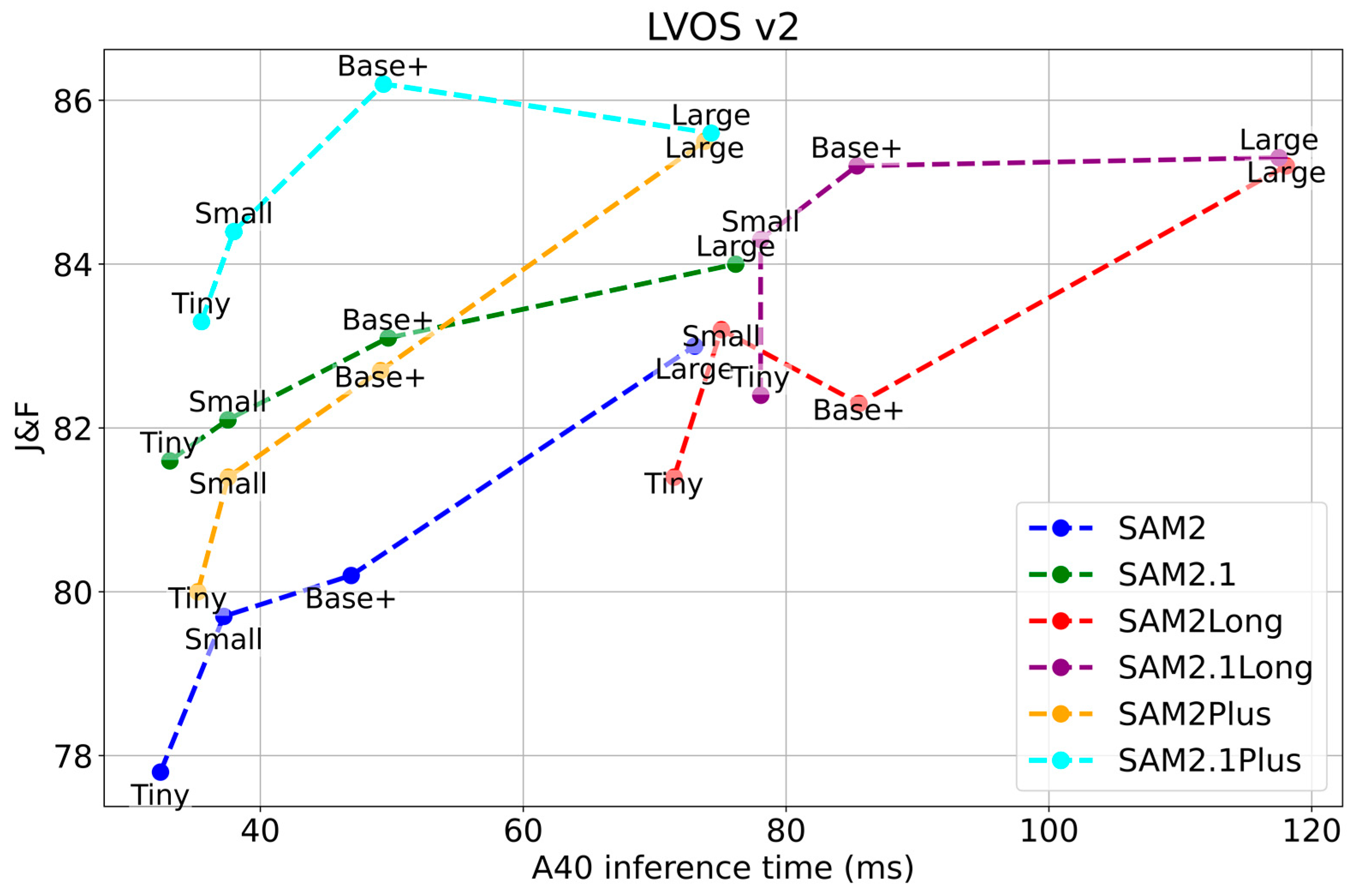

4.3. Quantitative Results

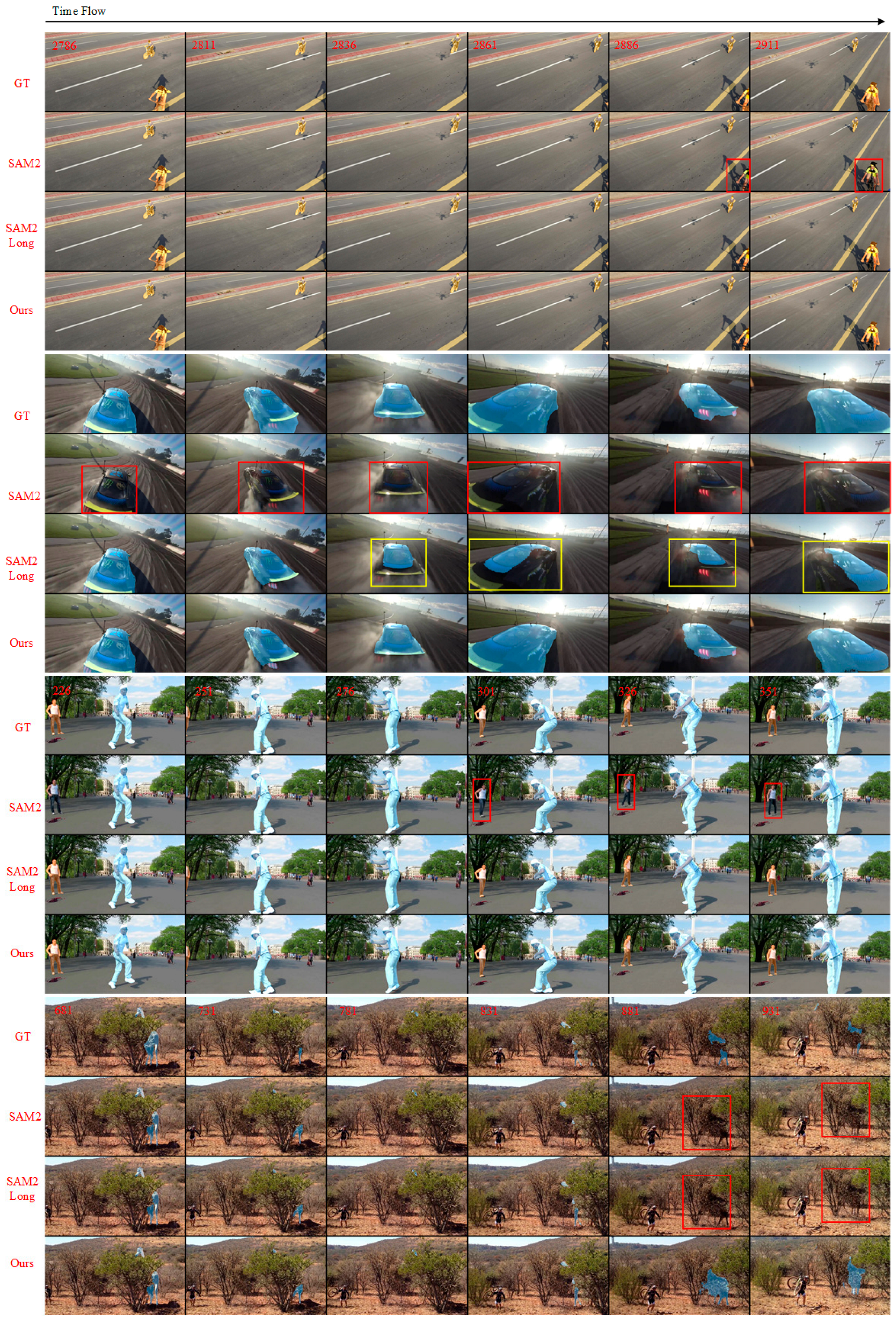

4.4. Qualitative Results

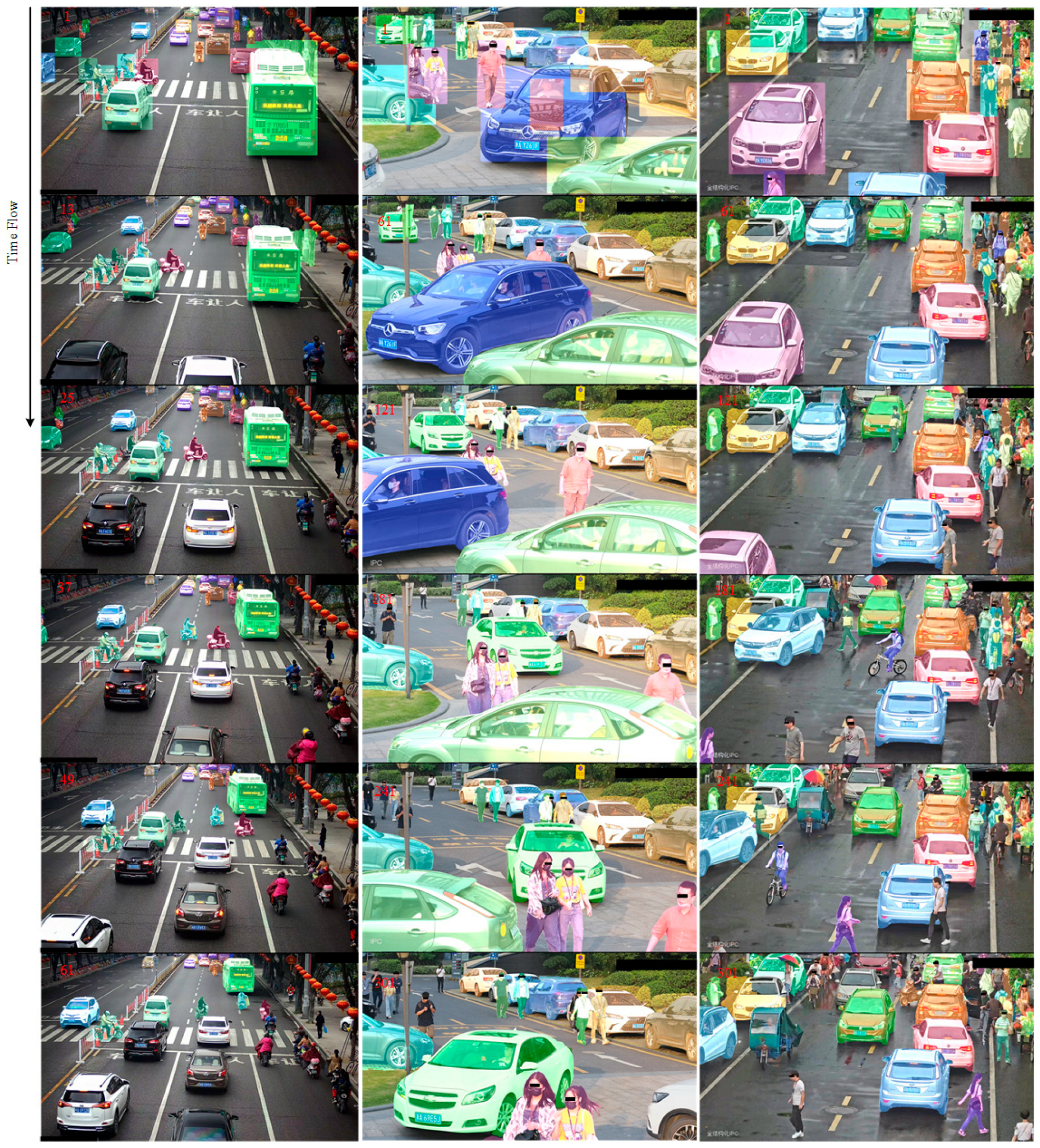

4.5. Long Video Segmentation in Real Scenes with SAM2Plus

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y. Segment Anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 4015–4026.

2. Ravi, N.; Gabeur, V.; Hu, Y.-T.; Hu, R.; Ryali, C.K.; Ma, T.; Khedr, H.; Rädle, R.; Rolland, C.; Gustafson, L.; et al. SAM 2: Segment Anything in Images and Videos. arXiv 2024, arXiv:2408.00714.

3. Jiaxing, Z.; Hao, T. SAM2 for Image and Video Segmentation: A Comprehensive Survey. arXiv 2025, arXiv:2503.12781.

4. Ding, S.; Qian, R.; Dong, X.-W.; Zhang, P.; Zang, Y.; Cao, Y.; Guo, Y.; Lin, D.; Wang, J. SAM2Long: Enhancing SAM 2 for Long Video Segmentation with a Training-Free Memory Tree. arXiv 2024, arXiv:2410.16268.

5. Yang, C.-Y.; Huang, H.-W.; Chai, W.; Jiang, Z.; Hwang, J.-N. SAMURAI: Adapting Segment Anything Model for Zero-Shot Visual Tracking with Motion-Aware Memory. arXiv 2024, arXiv:2411.11922.

6. Basar, T. Contributions to the Theory of Optimal Control. 2001, pp. 147–166. Available online: https://www.jstor.org/stable/1993703 (accessed on 2 July 2025).

7. Cao, J.; Weng, X.; Khirodkar, R.; Pang, J.; Kitani, K. Observation-Centric SORT: Rethinking SORT for Robust Multi-Object Tracking. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 9686–9696.

8. Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649.

9. Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. ByteTrack: Multi-Object Tracking by Associating Every Detection Box. In Computer Vision—ECCV 2022, Proceedings of the 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022.

10. Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple Online and Realtime Tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016.

11. Yang, S.; Thormann, K.; Baum, M. Linear-Time Joint Probabilistic Data Association for Multiple Extended Object Tracking. In Proceedings of the 2018 IEEE 10th Sensor Array and Multichannel Signal Processing Workshop (SAM), Sheffield, UK, 8–11 July 2018; pp. 6–10.

12. Blackman, S.S. Multiple hypothesis tracking for multiple target tracking. IEEE Aerosp. Electron. Syst. Mag. 2004, 19, 5–18.

13. Chaoqun, Y.; Xiaowei, L.; Zhiguo, S.; Heng, Z.; Xianghui, C. Augmented LRFS-based filter: Holistic tracking of group objects. Signal Process. 2025, 226, 109665.

14. Pont-Tuset, J.; Perazzi, F.; Caelles, S.; Arbeláez, P.; Sorkine-Hornung, A.; Gool, L.V. The 2017 DAVIS Challenge on Video Object Segmentation. arXiv 2017, arXiv:1704.00675.

15. Oh, S.W.; Lee, J.-Y.; Xu, N.; Kim, S.J. Space-Time Memory Networks for Video Object Segmentation with User Guidance. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 442–455.

16. Yang, Z.; Wei, Y.; Yang, Y. Collaborative Video Object Segmentation by Foreground-Background Integration. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; pp. 332–348.

17. Qin, Z.; Zhou, S.; Wang, L.; Duan, J.; Hua, G.; Tang, W. MotionTrack: Learning Robust Short-Term and Long-Term Motions for Multi-Object Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 17939–17948.

18. Caelles, S.; Maninis, K.K.; Pont-Tuset, J.; Leal-Taixé, L.; Cremers, D.; Van Gool, L. One-Shot Video Object Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 22–25 July 2017; pp. 5320–5329.

19. Chou, Y.S.; Wang, C.Y.; Lin, S.D.; Liao, H.Y.M. How Incompletely Segmented Information Affects Multi-Object Tracking and Segmentation (MOTS). In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 2086–2090.

20. Chen, Z.; Fang, G.; Ma, X.; Wang, X. SlimSAM: 0.1% Data Makes Segment Anything Slim. In Proceedings of the Advances in Neural Information Processing Systems 37 (NeurIPS 2024), Vancouver, BC, Canada, 10–15 December 2024; pp. 39434–39461.

21. Cuttano, C.; Trivigno, G.; Rosi, G.; Masone, C.; Averta, G. SAMWISE: Infusing wisdom in SAM2 for Text-Driven Video Segmentation. arXiv 2024, arXiv:2411.17646.

22. Hong, L.; Liu, Z.; Chen, W.; Tan, C.; Feng, Y.; Zhou, X.; Guo, P.; Li, J.; Chen, Z.; Gao, S.; et al. LVOS: A Benchmark for Large-scale Long-term Video Object Segmentation. arXiv 2024, arXiv:2404.19326.

23. Xu, N.; Yang, L.; Fan, Y.; Yang, J.; Yue, D.; Liang, Y.; Price, B.; Cohen, S.; Huang, T. YouTube-VOS: Sequence-to-Sequence Video Object Segmentation. In Proceedings of the Computer Vision—ECCV 2018: 15th European Conference, Munich, Germany, 8–14 September 2018; Proceedings, Part V. pp. 603–619.

24. Ding, H.; Liu, C.; He, S.; Jiang, X.; Torr, P.H.S.; Bai, S. MOSE: A New Dataset for Video Object Segmentation in Complex Scenes. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 20167–20177.

25. Zhao, X.; Ding, W.-Y.; An, Y.; Du, Y.; Yu, T.; Li, M.; Tang, M.; Wang, J. Fast Segment Anything. arXiv 2023, arXiv:2306.12156.

26. Ultralytics. Available online: https://github.com/ultralytics/ultralytics (accessed on 6 May 2025).

27. Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 17–18 April 2024.

28. Zhang, C.; Han, D.; Qiao, Y.; Kim, J.U.; Bae, S.-H.; Lee, S.; Hong, C.-S. Faster Segment Anything: Towards Lightweight SAM for Mobile Applications. arXiv 2023, arXiv:2306.14289.

29. Zhang, C.; Han, D.; Zheng, S.; Choi, J.H.; Kim, T.-H.; Hong, C.-S. MobileSAMv2: Faster Segment Anything to Everything. arXiv 2023, arXiv:2312.09579.

30. Xiong, Y.; Varadarajan, B.; Wu, L.; Xiang, X.; Xiao, F.; Zhu, C.; Dai, X.; Wang, D.; Sun, F.; Iandola, F.; et al. EfficientSAM: Leveraged Masked Image Pretraining for Efficient Segment Anything. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024.

31. Song, Y.; Pu, B.; Wang, P.; Jiang, H.; Dong, D.; Cao, Y.; Shen, Y. SAM-Lightening: A Lightweight Segment Anything Model with Dilated Flash Attention to Achieve 30 times Acceleration. arXiv 2024, arXiv:2403.09195.

32. Zhang, Z.; Cai, H.; Han, S. EfficientViT-SAM: Accelerated Segment Anything Model Without Performance Loss. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 17–21 June 2024; pp. 7859–7863.

33. Zhou, C.; Li, X.; Loy, C.C.; Dai, B. EdgeSAM: Prompt-In-the-Loop Distillation for On-Device Deployment of SAM. arXiv 2023, arXiv:2312.06660.

34. Ma, J.; He, Y.; Li, F.; Han, L.; You, C.; Wang, B. Segment anything in medical images. Nat. Commun. 2024, 15, 654.

35. Chen, T.; Lu, A.; Zhu, L.; Ding, C.; Yu, C.; Ji, D.; Li, Z.; Sun, L.; Mao, P.; Zang, Y. SAM2-Adapter: Evaluating & Adapting Segment Anything 2 in Downstream Tasks: Camouflage, Shadow, Medical Image Segmentation, and More. arXiv 2024, arXiv:2408.04579.

36. Videnovic, J.; Lukezic, A.; Kristan, M. A Distractor-Aware Memory for Visual Object Tracking with SAM2. In Proceedings of the Computer Vision and Pattern Recognition Conference (CVPR), Nashville, TN, USA, 11–15 June 2025.

37. Jiang, J.; Wang, Z.; Zhao, M.; Li, Y.; Jiang, D.S. SAM2MOT: A Novel Paradigm of Multi-Object Tracking by Segmentation. arXiv 2025, arXiv:2504.04519.

38. Ryali, C.; Hu, Y.-T.; Bolya, D.; Wei, C.; Fan, H.; Huang, P.-Y.; Aggarwal, V.; Chowdhury, A.; Poursaeed, O.; Hoffman, J.; et al. Hiera: A hierarchical vision transformer without the bells-and-whistles. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; Volume 202, pp. 29441–29454.

39. sam2. Available online: https://github.com/facebookresearch/sam2 (accessed on 6 May 2025).

40. Wang, Z.; Zhou, Q.; Liu, Z. Det-SAM2: Technical Report on the Self-Prompting Segmentation Framework Based on Segment Anything Model 2. arXiv 2024, arXiv:2411.18977.

41. lvos-Evaluation. Available online: https://github.com/LingyiHongfd/lvos-evaluation (accessed on 6 May 2025).

42. Bhat, G.; Lawin, F.J.; Danelljan, M.; Robinson, A.; Felsberg, M.; Van Gool, L.; Timofte, R. Learning What to Learn for Video Object Segmentation. In Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020.

43. Cheng, H.K.; Tai, Y.W.; Tang, C.K. Rethinking Space-Time Networks with Improved Memory Coverage for Efficient Video Object Segmentation. Adv. Neural Inf. Process. Syst. 2021, 34, 11781–11794.

44. Li, M.; Hu, L.; Xiong, Z.; Zhang, B.; Pan, P.; Liu, D. Recurrent Dynamic Embedding for Video Object Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

45. Yang, Z.; Wei, Y.; Yang, Y. Associating Objects with Transformers for Video Object Segmentation. Adv. Neural Inf. Process. Syst. 2021, 34, 2491–2502.

46. Cheng, H.K.; Schwing, A.G. XMem: Long-Term Video Object Segmentation with an Atkinson-Shiffrin Memory Model. In Computer Vision—ECCV 2022, Proceedings of the 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022.

47. Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

| Method | Backbone | SA-V Val J&F | SA-V Val J | SA-V Val F | SA-V Test J&F | SA-V Test J | SA-V Test F |
|---|---|---|---|---|---|---|---|
| SAM2 | Tiny | 73.5 | 70.1 | 76.9 | 74.6 | 71.1 | 78.0 |
| SAM2Long | Tiny | 77.0 | 73.2 | 80.7 | 78.7 | 74.6 | 82.7 |
| SAM2Plus | Tiny | 74.3 | 70.4 | 78.1 | 76.4 | 72.4 | 80.3 |
| SAM2.1 | Tiny | 75.1 | 71.6 | 78.6 | 76.3 | 72.7 | 79.8 |
| SAM2.1Long | Tiny | 78.9 | 75.2 | 82.7 | 79.0 | 75.2 | 82.9 |
| SAM2.1Plus | Tiny | 75.5 | 71.7 | 79.4 | 76.7 | 72.8 | 80.7 |
| SAM2 | Small | 73.0 | 69.7 | 76.3 | 74.6 | 71.0 | 78.1 |
| SAM2Long | Small | 77.7 | 73.9 | 81.5 | 78.1 | 74.1 | 82.0 |
| SAM2Plus | Small | 74.3 | 70.3 | 78.2 | 75.4 | 71.4 | 79.4 |
| SAM2.1 | Small | 76.9 | 73.5 | 80.3 | 76.9 | 73.3 | 80.5 |
| SAM2.1Long | Small | 79.6 | 75.9 | 83.3 | 80.4 | 76.6 | 84.1 |
| SAM2.1Plus | Small | 77.3 | 73.5 | 81.1 | 77.6 | 73.8 | 81.5 |
| SAM2 | Base+ | 75.4 | 71.9 | 78.8 | 74.6 | 71.2 | 78.1 |
| SAM2Long | Base+ | 78.4 | 74.7 | 82.1 | 78.5 | 74.7 | 82.2 |
| SAM2Plus | Base+ | 75.4 | 71.6 | 79.2 | 77.1 | 73.1 | 81.1 |
| SAM2.1 | Base+ | 78.0 | 74.6 | 81.5 | 77.7 | 74.2 | 81.2 |
| SAM2.1Long | Base+ | 80.5 | 76.8 | 84.2 | 80.8 | 77.1 | 84.5 |
| SAM2.1Plus | Base+ | 77.4 | 73.6 | 81.2 | 78.3 | 74.5 | 82.1 |
| SAM2 | Large | 76.3 | 73.0 | 79.5 | 75.5 | 72.2 | 78.9 |
| SAM2Long | Large | 80.8 | 77.1 | 84.5 | 80.8 | 76.8 | 84.7 |
| SAM2Plus | Large | 77.4 | 73.6 | 81.2 | 79.0 | 74.9 | 83.0 |
| SAM2.1 | Large | 78.6 | 75.1 | 82.0 | 79.6 | 76.1 | 83.2 |
| SAM2.1Long | Large | 81.1 | 77.5 | 84.7 | 81.2 | 77.6 | 84.9 |
| SAM2.1Plus | Large | 79.6 | 75.8 | 83.4 | 79.6 | 75.8 | 83.4 |

| Method | Backbone | LVOS v2 Train J&F | LVOS v2 Train J | LVOS v2 Train F | LVOS v2 Val J&F | LVOS v2 Val J | LVOS v2 Val F |
|---|---|---|---|---|---|---|---|
| SAM2 | Tiny | 94.5 | 92.9 | 96.1 | 77.8 | 74.5 | 81.2 |
| SAM2Long | Tiny | 95.2 | 93.6 | 96.9 | 81.4 | 77.7 | 85.0 |
| SAM2Plus | Tiny | 95.2 | 93.6 | 96.9 | 80.0 | 76.3 | 83.6 |
| SAM2.1 | Tiny | 95.0 | 93.4 | 96.7 | 81.6 | 77.9 | 85.2 |
| SAM2.1Long | Tiny | 95.2 | 93.6 | 96.9 | 82.4 | 78.8 | 85.9 |
| SAM2.1Plus | Tiny | 95.1 | 93.5 | 96.8 | 83.5 | 79.7 | 87.3 |
| SAM2 | Small | 94.6 | 92.9 | 96.2 | 79.7 | 76.2 | 83.3 |
| SAM2Long | Small | 95.2 | 93.5 | 96.9 | 83.2 | 79.5 | 86.8 |
| SAM2Plus | Small | 95.1 | 93.5 | 96.8 | 81.4 | 77.7 | 85.2 |
| SAM2.1 | Small | 95.4 | 93.7 | 97.0 | 82.1 | 78.6 | 85.6 |
| SAM2.1Long | Small | 95.6 | 94.0 | 97.3 | 84.3 | 80.7 | 88.0 |
| SAM2.1Plus | Small | 95.4 | 93.7 | 97.0 | 84.8 | 81.0 | 88.5 |
| SAM2 | Base+ | 95.3 | 93.7 | 96.9 | 80.2 | 76.8 | 83.6 |
| SAM2Long | Base+ | 95.5 | 93.9 | 97.2 | 82.3 | 78.8 | 85.9 |
| SAM2Plus | Base+ | 95.5 | 93.9 | 97.2 | 82.9 | 79.3 | 86.5 |
| SAM2.1 | Base+ | 95.6 | 94.0 | 97.2 | 83.1 | 79.6 | 86.5 |
| SAM2.1Long | Base+ | 95.6 | 94.0 | 97.2 | 85.2 | 81.5 | 88.9 |
| SAM2.1Plus | Base+ | 95.6 | 94.0 | 97.2 | 86.3 | 82.6 | 90.0 |
| SAM2 | Large | 95.2 | 93.6 | 96.8 | 83.0 | 79.6 | 86.4 |
| SAM2Long | Large | 95.7 | 94.1 | 97.3 | 85.2 | 81.8 | 88.7 |
| SAM2Plus | Large | 95.7 | 94.1 | 97.4 | 85.5 | 81.9 | 89.1 |
| SAM2.1 | Large | 95.3 | 93.7 | 96.9 | 84.0 | 80.7 | 87.4 |
| SAM2.1Long | Large | 95.7 | 94.0 | 97.3 | 85.3 | 81.9 | 88.8 |
| SAM2.1Plus | Large | 95.8 | 94.1 | 97.4 | 85.6 | 82.0 | 89.3 |

| Method | Backbone | GPU Memory (MB) | Speed (FPS) |
|---|---|---|---|
| SAM2 | Tiny | 4165 | 30.87 |
| SAM2Long | Tiny | 5707 | 13.99 |
| SAM2Plus | Tiny | 4171 | 28.38 |
| SAM2.1 | Tiny | 4165 | 30.21 |
| SAM2.1Long | Tiny | 5707 | 12.81 |
| SAM2.1Plus | Tiny | 4173 | 28.16 |
| SAM2 | Small | 4195 | 26.87 |
| SAM2Long | Small | 5763 | 13.32 |
| SAM2Plus | Small | 4205 | 26.63 |
| SAM2.1 | Small | 4193 | 26.65 |
| SAM2.1Long | Small | 5811 | 12.81 |
| SAM2.1Plus | Small | 4203 | 26.33 |
| SAM2 | Base+ | 4447 | 21.32 |
| SAM2Long | Base+ | 5971 | 11.69 |
| SAM2Plus | Base+ | 4453 | 20.35 |
| SAM2.1 | Base+ | 4449 | 20.11 |
| SAM2.1Long | Base+ | 5971 | 11.71 |
| SAM2.1Plus | Base+ | 4453 | 20.26 |
| SAM2 | Large | 5497 | 13.69 |
| SAM2Long | Large | 6779 | 8.47 |
| SAM2Plus | Large | 5505 | 13.55 |
| SAM2.1 | Large | 5499 | 13.13 |
| SAM2.1Long | Large | 6913 | 8.51 |
| SAM2.1Plus | Large | 5503 | 13.46 |

| Method | LVOS v2 Val J&F | J (seen) | F (seen) | J (unseen) | F (unseen) |
|---|---|---|---|---|---|
| LWL [42] | 60.6 | 58.0 | 64.3 | 57.2 | 62.9 |
| CFBI [16] | 55.0 | 52.9 | 59.2 | 51.7 | 56.2 |
| STCN [43] | 60.6 | 57.2 | 64.0 | 57.5 | 63.8 |
| RDE [44] | 62.2 | 56.7 | 64.1 | 60.8 | 67.2 |
| DeAOT [45] | 63.9 | 61.5 | 69.0 | 58.4 | 66.6 |
| XMem [46] | 64.5 | 62.6 | 69.1 | 60.6 | 65.6 |
| SAM 2 [2] | 79.8 | 80.0 | 86.6 | 71.6 | 81.1 |
| SAM 2.1 [2] | 84.1 | 80.7 | 87.4 | 80.6 | 87.7 |
| SAM2Long [4] | 84.2 | 82.3 | 89.2 | 79.1 | 86.2 |
| SAM2.1Long [4] | 85.9 | 81.7 | 88.6 | 83.0 | 90.5 |
| SAM2Plus | 84.8 | 82.2 | 89.4 | 80.3 | 87.3 |
| SAM2.1Plus | 86.3 | 81.6 | 89.0 | 83.3 | 91.2 |

| Method | KF-IoU | AHFSS-DT | LVOS v2 Val J&F | LVOS v2 Val J | LVOS v2 Val F | GPU Memory (MB) | Speed (FPS) |
|---|---|---|---|---|---|---|---|
| SAM2 | | | 80.2 | 76.8 | 83.6 | 4447 | 21.32 |
| SAM2 | √ | | 81.2 | 77.5 | 84.8 | 4450 | 20.86 |
| SAM2 | √ | √ | 82.9 | 79.3 | 86.5 | 4453 | 20.35 |
| SAM2.1 | | | 83.1 | 79.6 | 86.5 | 4449 | 20.11 |
| SAM2.1 | √ | | 84.5 | 80.8 | 88.2 | 4452 | 20.19 |
| SAM2.1 | √ | √ | 86.3 | 82.6 | 90.0 | 4453 | 20.26 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yin, J.; Wu, F.; Su, H.; Huang, P.; Qixuan, Y. Improvement of SAM2 Algorithm Based on Kalman Filtering for Long-Term Video Object Segmentation. Sensors 2025, 25, 4199. https://doi.org/10.3390/s25134199
