1. Introduction

Teleoperation has been employed across various domains, including on-road vehicles [1], military operations [2], industrial automation [3], and medical surgery [4]. Recent studies have focused on improving teleoperation performance under varying communication conditions, frequently incorporating human-in-the-loop approaches that enhance overall control performance and safety, as summarized in Table 1. The variability in operational environments and network quality is largely influenced by the type of network connecting operators and devices, as well as by the specific teleoperation application. One of the most critical characteristics of teleoperation systems is the presence of communication delays, which can cause a discrepancy between the operator's intent and the actual movement of the controlled device [5,6,7]. These delays can pose significant risks to system performance and operator safety. To mitigate their adverse effects, recent research has developed delay compensation techniques based on both physical modeling and model-free methodologies.

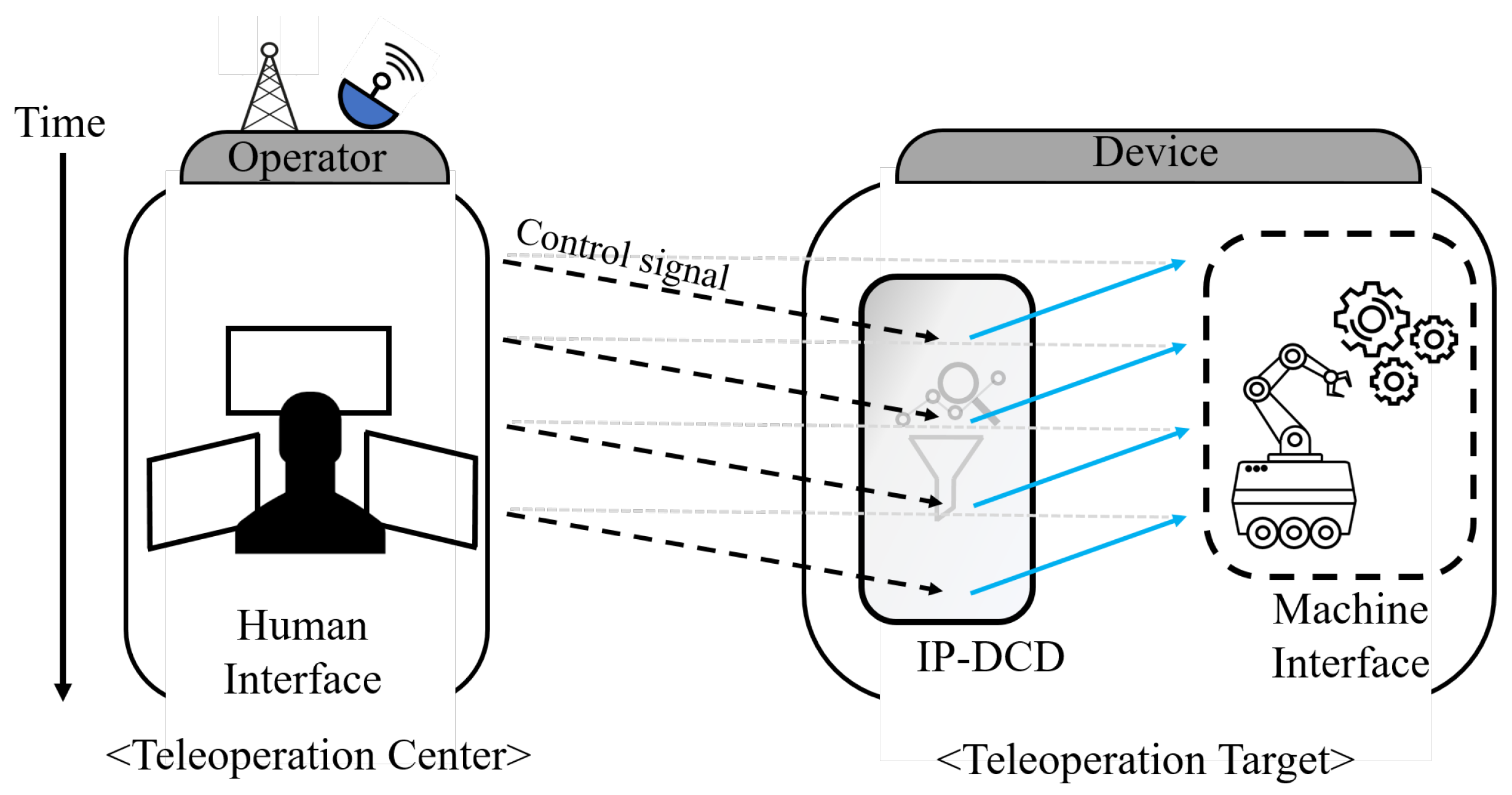

As depicted in Figure 1, communication delays can generally be classified into control delay, which arises during the transmission of a remote control signal, and sensor delay, which occurs during the reception of a sensor signal [15]. Numerous techniques have been proposed to ensure the stability and performance of teleoperation systems by mitigating both control and sensor delays, some of them successfully using model predictive control (MPC) [16]. Subsequently, more general predictive structures such as the Smith predictor gained prominence because strict MPC-based predictors require highly accurate plant models [17]. Early work improved sliding-mode control, design, and networked control by introducing probabilistic analyses and robust filtering [18,19,20]. However, while model-based approaches can predict control commands and sensor values from physical motion, accumulated model errors can introduce discrepancies [15]. Therefore, some studies have adopted model-free predictors and pursued more robust passivity-based approaches [21]. Variable-transformation (wave-scattering) methods later proved robust to nonlinear dynamics and time-varying delays precisely because they bypass explicit plant modeling [22,23]. In addition, several studies have applied model-free predictors to passivity control and energy-balance monitoring, contributing greatly to the stability of teleoperation as a bilateral controller [24,25,26,27]. Meanwhile, model-free approaches often perform worse than model-based ones, and researchers have therefore proposed various improvements [11,15,21,28,29]. These model-free predictors have since been supplemented and modified to adapt to delay fluctuations caused by uncertain network conditions [30]. Nevertheless, some methods are still criticized for residual high-frequency oscillations [31]. Table 2 summarizes these approaches.
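To make the idea behind the Smith predictor concrete, the following minimal Python sketch assumes a hypothetical first-order discrete-time plant `y[k+1] = a*y[k] + b*u[k-d]`, a proportional controller with gain `kp`, and a perfect internal model. The function name and parameters are illustrative and are not taken from [17]. The predictor feeds the controller the measured output corrected by the difference between the undelayed and the delayed model outputs, so the controller effectively acts on a delay-free model.

```python
def smith_predictor_loop(a, b, kp, ref, delay, steps):
    """Simulate a P controller with a Smith predictor on a delayed first-order plant.

    Hypothetical plant: y[k+1] = a*y[k] + b*u[k - delay].
    The internal model uses the same a and b (perfect-model assumption).
    Returns the measured plant outputs y[0..steps-1].
    """
    y = 0.0                      # measured plant output
    xm = 0.0                     # output of the undelayed internal model
    u_queue = [0.0] * delay      # commands still in transit to the plant (oldest first)
    xm_queue = [0.0] * delay     # model outputs from the last `delay` steps (oldest first)
    outputs = []
    for _ in range(steps):
        xm_delayed = xm_queue[0] if delay else xm
        # Smith predictor feedback: measurement + (undelayed model - delayed model)
        y_fb = y + (xm - xm_delayed)
        u = kp * (ref - y_fb)
        outputs.append(y)
        # The plant receives the command that was issued `delay` steps ago.
        u_queue.append(u)
        u_applied = u_queue.pop(0)
        xm_queue.append(xm)
        xm_queue.pop(0)
        y = a * y + b * u_applied
        xm = a * xm + b * u
    return outputs


# Illustrative usage: the same loop without delay and with a 5-step delay.
base = smith_predictor_loop(0.9, 0.1, kp=2.0, ref=1.0, delay=0, steps=50)
delayed = smith_predictor_loop(0.9, 0.1, kp=2.0, ref=1.0, delay=5, steps=55)
```

With a perfect model, the delayed response equals the delay-free closed-loop response shifted by `delay` samples. Once the model differs from the plant, the correction term no longer cancels the measured output, which is the model-error accumulation problem that motivated the model-free approaches discussed in this section.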

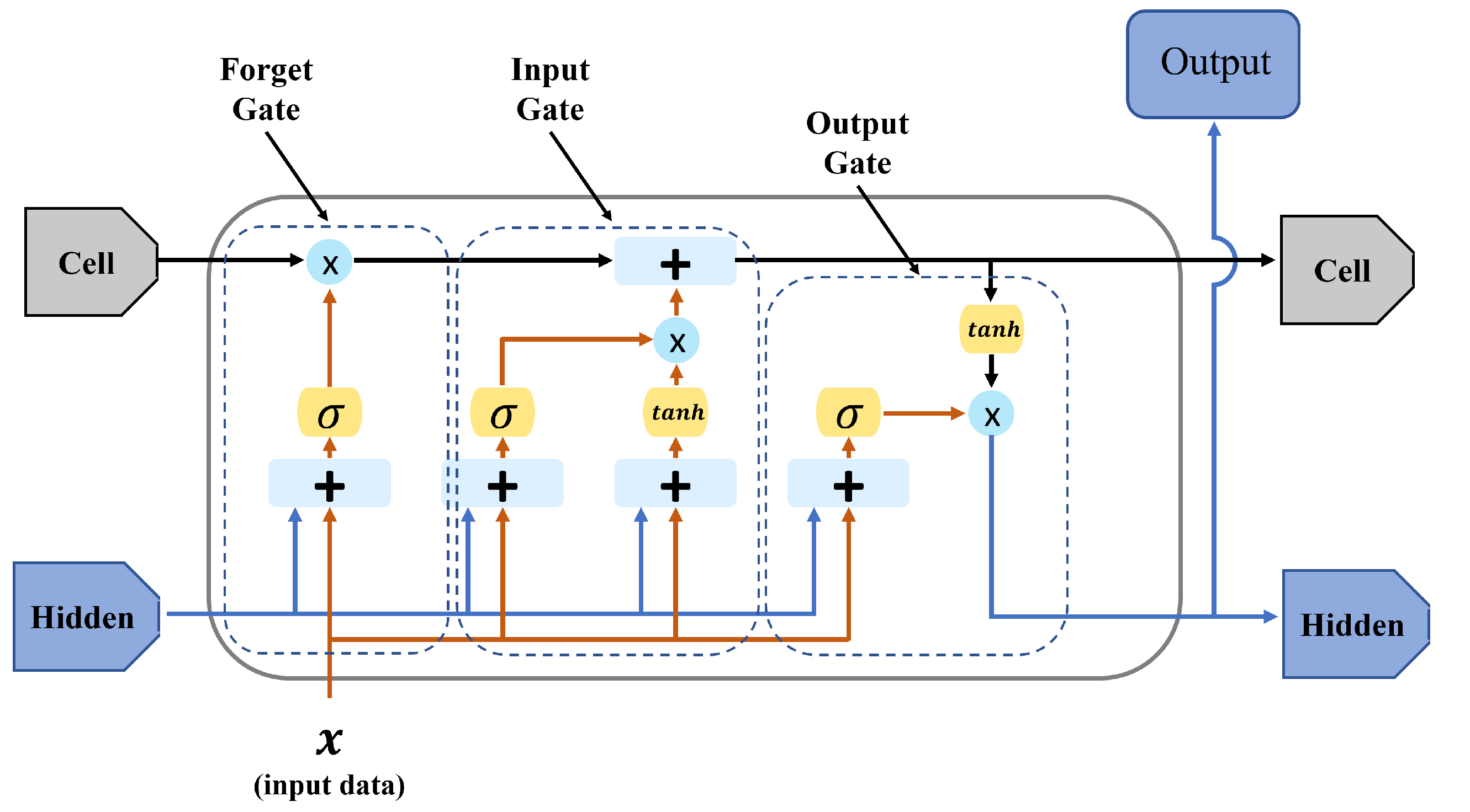

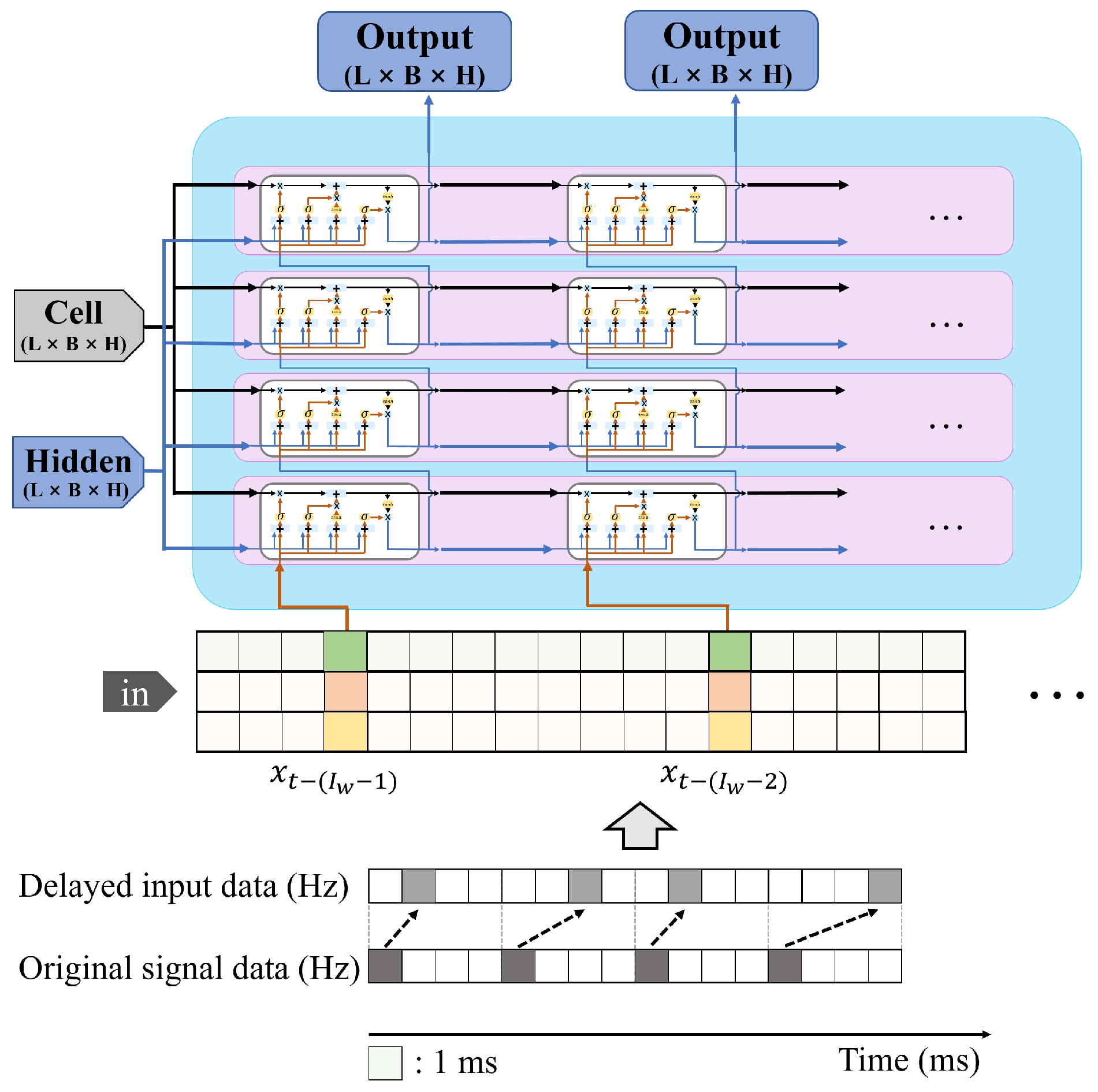

Another important trend is the rapid development of AI. Deep learning-based predictors utilizing RNN (Recurrent Neural Network)-based time series forecasting (TSF) are now actively studied [

39]. Sophisticated models using LSTM and GRU, which represent RNNs, can be interpreted as data-driven model-free predictors; unlike other model-free approaches, they do not require strict dynamics. Recently, predictors utilizing techniques such as LSTM, a type of RNN, and high-degree polynomial linear regression for unavoidable transmission delays have been reported, and demonstrations for real-time operation have been conducted [

40]. In addition, studies have explored LSTM-based predictors that compensate for input delays using LSTM-integrated MPC in nonlinear time-delay systems [

41]. LSTM or GRU offers many strengths in time series processing, and recently, Attention-based models such as the Transformer, famous for GPT, have also been widely studied in the TSF field [

42]. Although incorporating Attention mechanisms could enhance prediction accuracy by selectively emphasizing critical input features, their application introduces additional computational overhead and increased memory requirements. Specifically, Attention mechanisms involve frequent computation of weight matrices, thereby elevating computational complexity and inference latency, which are critical drawbacks for real-time teleoperation tasks performed in resource-constrained edge computing environments. Furthermore, Attention mechanisms typically offer meaningful benefits primarily when modeling longer sequences or large-scale datasets. In contrast, LSTM and GRU are more computationally efficient for short-term predictions (∼few seconds), aligning precisely with the goals of this research. Therefore, this study applied supplementary techniques as a preprocessing step to enhance LSTM-based prediction under dynamic network conditions [

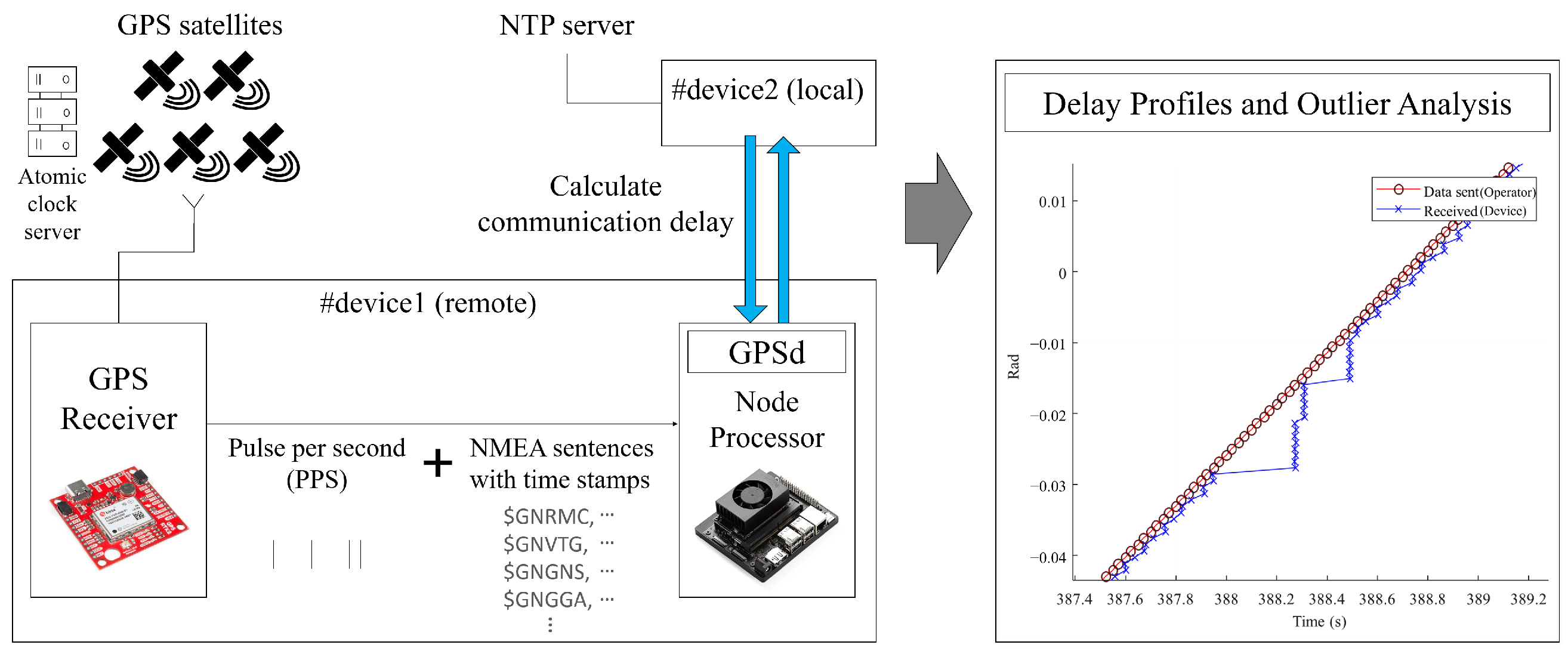

43]. Between GRU and LSTM, GRU converges faster during training, but LSTM typically demonstrates superior accuracy for slightly longer sequence predictions. Considering these trade-offs and the requirements of this research, LSTM was selected for this study. To the best of the authors’ knowledge, most model-free predictors using neural networks estimate the next physical value over time, and there are few studies on predicting values after a specific communication delay

. In particular, to effectively compensate for control delay, it is important to precisely measure the one-way communication delay rather than round-trip time (RTT). Such compensation should be based on this one-way delay measurement, especially when dealing with realistic and time-varying delays instead of constant delays. For this reason, we propose the IPT-DCD (Interpolation Predictor for Teleoperation under Dynamic Communication Delay), as shown in

Figure 2.
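The distinction between one-way delay and RTT can be illustrated with a small Python sketch, assuming the operator and vehicle clocks are already synchronized (for example by an NTP implementation such as chrony). The `Exchange` record and the timestamp values are hypothetical and chosen only for illustration; they show how halving the RTT misestimates the control delay when the uplink and downlink are asymmetric.

```python
from dataclasses import dataclass


@dataclass
class Exchange:
    """Timestamps (seconds) of one command/feedback exchange on synchronized clocks."""
    cmd_sent: float      # operator sends a control command (operator clock)
    cmd_received: float  # vehicle receives the command (vehicle clock)
    fb_sent: float       # vehicle sends sensor feedback (vehicle clock)
    fb_received: float   # operator receives the feedback (operator clock)


def control_delay(x: Exchange) -> float:
    """One-way control (uplink) delay; meaningful only if the clocks are synchronized."""
    return x.cmd_received - x.cmd_sent


def sensor_delay(x: Exchange) -> float:
    """One-way sensor (downlink) delay."""
    return x.fb_received - x.fb_sent


def rtt_half(x: Exchange) -> float:
    """Naive estimate: half the round-trip time, excluding vehicle-side processing."""
    rtt = (x.fb_received - x.cmd_sent) - (x.fb_sent - x.cmd_received)
    return rtt / 2.0


# Hypothetical asymmetric exchange: 120 ms uplink, 30 ms downlink, 10 ms processing.
ex = Exchange(cmd_sent=0.000, cmd_received=0.120, fb_sent=0.130, fb_received=0.160)
print(f"control delay = {control_delay(ex) * 1e3:.0f} ms")  # 120 ms
print(f"sensor delay  = {sensor_delay(ex) * 1e3:.0f} ms")   # 30 ms
print(f"RTT/2         = {rtt_half(ex) * 1e3:.0f} ms")       # 75 ms
```

In this example, a compensator driven by RTT/2 would assume 75 ms for a control delay that is actually 120 ms, which is why a one-way measurement is preferable when the two directions differ.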

Contribution

- This paper proposes IPT-DCD, a method that compensates for communication delays by predicting delayed signals with an interpolation method.
- IPT-DCD is formulated as a data-driven, model-free framework, which enables flexible adaptation to various systems through training on samples collected from the target operating environment.
- IPT-DCD enhances the previously introduced PT-DCD by integrating the BSI (Backward Shifting and Interpolation) technique, thereby improving the robustness of its prediction performance.
- We conducted a series of experiments across diverse scenarios with varying proportions of delay outliers, showing that IPT-DCD maintains its performance even under unstable conditions.
- The proposed approach exhibits robustness against communication delays and is applicable to a wide range of teleoperation applications, in particular offering reliable compensation for dynamic delay outliers.
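Because the predictor is trained on samples from the target environment and, unlike next-step forecasters, targets the value after a specific communication delay, each training pair must combine a history window with a target lying one delay ahead. The Python/NumPy sketch below shows one way such pairs could be built. The function name, the window length, the fixed sampling period, feeding the delay as an extra input feature, and rounding each measured delay to whole samples are all assumptions for illustration, not the preprocessing actually used in PT-DCD or IPT-DCD.

```python
import numpy as np


def make_delay_horizon_pairs(signal, delays_s, window, dt):
    """Build (history window, delayed target) pairs for a delay-aware predictor.

    signal   : 1-D array of a uniformly sampled signal (e.g., steering angle)
    delays_s : 1-D array, measured one-way delay (seconds) at each sample index
    window   : number of past samples fed to the model
    dt       : sampling period in seconds
    Returns X with shape (n, window + 1), whose last column is the delay in seconds,
    and y with shape (n,), the signal value one delay after the window ends.
    """
    signal = np.asarray(signal, dtype=float)
    delays_s = np.asarray(delays_s, dtype=float)
    xs, ys = [], []
    for end in range(window - 1, len(signal)):
        steps_ahead = int(round(delays_s[end] / dt))  # delay expressed in samples
        target = end + steps_ahead
        if target >= len(signal):
            continue  # the delayed target is not in the recorded data
        history = signal[end - window + 1 : end + 1]
        xs.append(np.append(history, delays_s[end]))  # delay as an extra input feature
        ys.append(signal[target])
    return np.array(xs), np.array(ys)


# Illustrative usage: a ramp sampled every 10 ms with a constant 20 ms delay.
X, y = make_delay_horizon_pairs(np.arange(10.0), np.full(10, 0.02), window=3, dt=0.01)
```

Under these assumptions each target sits two samples after its window, so a time-varying delay trace simply changes the horizon per sample rather than requiring a separate model per delay value.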

5. Discussion

5.1. Performance Comparison Between the PT-DCD and the IPT-DCD

The statistical analysis showed a significant difference between IPT-DCD and PT-DCD, indicating that the method applied in IPT-DCD behaves distinctly from PT-DCD. By introducing the BSI process, IPT-DCD performs predictions at uniform time intervals and reduced the RMSE under all experimental conditions. Under the training condition, PT-DCD achieved a greater RMSE reduction (24.38%) than IPT-DCD (16.37%), indicating that IPT-DCD performs relatively worse under stable conditions. However, as the contamination ratio increases, the two methods exhibit opposite performance trends, revealing their distinct operating characteristics and reinforcing the novelty of the BSI-based approach.
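The BSI procedure itself is not restated in this section. As a rough illustration only, the Python/NumPy sketch below assumes one plausible reading of "backward shifting and interpolation": each received sample is shifted back by its measured one-way delay to recover when it was generated, and the shifted samples are then linearly interpolated onto a uniform time grid so that a predictor can operate at fixed intervals. The function name and grid spacing are hypothetical.

```python
import numpy as np


def backward_shift_and_interpolate(recv_times, delays, values, dt):
    """Hypothetical BSI-style resampling onto a uniform time grid.

    recv_times : arrival timestamps of the samples (seconds)
    delays     : measured one-way delay of each sample (seconds)
    values     : sample values (e.g., steering angle)
    dt         : spacing of the output grid (seconds)
    Returns (grid, resampled values).
    """
    recv_times = np.asarray(recv_times, dtype=float)
    delays = np.asarray(delays, dtype=float)
    values = np.asarray(values, dtype=float)
    # Backward shift: estimated generation time of each sample.
    gen_times = recv_times - delays
    # Delay outliers can reorder arrivals, so sort by generation time
    # (np.interp expects increasing x-coordinates).
    order = np.argsort(gen_times, kind="stable")
    gen_times, values = gen_times[order], values[order]
    # Uniform grid spanning the shifted samples, filled by linear interpolation.
    grid = np.arange(gen_times[0], gen_times[-1] + dt / 2, dt)
    return grid, np.interp(grid, gen_times, values)


# Illustrative usage: a ramp whose second sample suffered a delay outlier
# and therefore arrived after the third and fourth samples.
gen = np.array([0.0, 0.1, 0.2, 0.3, 0.4])
delay = np.array([0.05, 0.30, 0.05, 0.05, 0.05])
grid, resampled = backward_shift_and_interpolate(gen + delay, delay, 2.0 * gen, dt=0.05)
```

Sorting after the shift is the step that matters for delay outliers: a late packet is placed back at its generation time instead of being treated as the newest sample.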

5.2. Impact of Outliers on the Performance of the PT-DCD and the IPT-DCD

PT-DCD and IPT-DCD were evaluated under four conditions, with contamination ratios of 0, 0.1, 0.3, and 0.5 representing the proportion of outliers in the communication delay. The case of 0.5 corresponds to a relatively extreme communication environment in which outliers account for half of all delay samples. Even under this condition, PT-DCD achieved an RMSE reduction of nearly 20%, and IPT-DCD achieved a larger reduction of approximately 30%.
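For readers reproducing this kind of evaluation, the Python/NumPy sketch below shows how a delay trace with a given contamination ratio might be generated and how a percentage RMSE reduction can be computed. The delay distributions (a Gaussian nominal delay and uniformly drawn outliers) and their parameters are placeholders chosen for illustration, not the experimental settings of this study, and the RMSE-reduction formula assumes the usual relative definition against the uncompensated signal.

```python
import numpy as np


def contaminated_delays(n, eps, rng, nominal=0.05, jitter=0.005, outlier_range=(0.2, 0.5)):
    """Delay trace (seconds) in which a fraction `eps` of the samples are outliers.

    All distribution parameters are hypothetical placeholders.
    """
    delays = np.clip(rng.normal(nominal, jitter, size=n), 0.0, None)
    n_out = int(round(eps * n))
    idx = rng.choice(n, size=n_out, replace=False)  # positions of the outliers
    delays[idx] = rng.uniform(*outlier_range, size=n_out)
    return delays


def rmse(pred, truth):
    pred, truth = np.asarray(pred, dtype=float), np.asarray(truth, dtype=float)
    return float(np.sqrt(np.mean((pred - truth) ** 2)))


def rmse_reduction_pct(uncompensated, compensated, truth):
    """Relative RMSE reduction (%) of a compensated signal versus the uncompensated one."""
    base = rmse(uncompensated, truth)
    return 100.0 * (base - rmse(compensated, truth)) / base


# Illustrative usage: a trace in which 30% of the delays are outliers.
trace = contaminated_delays(1000, 0.3, np.random.default_rng(0))
```

Under this definition, a 30% reduction means the compensated RMSE is 70% of the uncompensated RMSE on the same trace.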

5.3. Efficient Application of the PT-DCD and the IPT-DCD

An interesting observation is that IPT-DCD does not consistently outperform PT-DCD; rather, the two methods show opposite trends as the contamination ratio varies. Although unexpected, this result offers a potentially useful option for practical applications. By combining PT-DCD and IPT-DCD into a hybrid system that switches between them at a threshold on the contamination ratio, the system can adapt across a wide range of communication conditions, from typical delays to extreme cases. Although real-time measurement of the contamination ratio has not been extensively studied, a method for estimating it in real time was previously discussed in [51]. Using such real-time estimation to select the appropriate predictor dynamically is expected to further improve delay compensation performance.
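A hybrid selector of this kind could be sketched in Python as follows. The contamination-ratio estimator here is a simple stand-in (a sliding window that flags delays far from the window median, measured in units of the median absolute deviation) and is not the method of [51]; like any median-based rule, it degrades as the outlier fraction approaches one half. The class and function names, the threshold value, the window length, and the cutoff `k` are hypothetical, and the two predictors are represented by placeholder labels rather than the actual PT-DCD and IPT-DCD models.

```python
from collections import deque
from statistics import median


class ContaminationEstimator:
    """Sliding-window estimate of the fraction of delay outliers (hypothetical stand-in)."""

    def __init__(self, window=200, k=5.0):
        self.delays = deque(maxlen=window)
        self.k = k  # outlier cutoff in units of the median absolute deviation (MAD)

    def update(self, delay):
        """Add one delay measurement and return the current contamination estimate."""
        self.delays.append(delay)
        med = median(self.delays)
        mad = median(abs(d - med) for d in self.delays)
        if mad == 0.0:
            # Degenerate window (most delays identical): any deviation counts as an outlier.
            n_out = sum(1 for d in self.delays if d != med)
        else:
            n_out = sum(1 for d in self.delays if abs(d - med) > self.k * mad)
        return n_out / len(self.delays)


def select_predictor(eps_hat, threshold, pt_dcd, ipt_dcd):
    """Use PT-DCD at low contamination and switch to IPT-DCD at or above the threshold."""
    return ipt_dcd if eps_hat >= threshold else pt_dcd


# Illustrative usage with placeholder predictor labels and a hypothetical threshold.
est = ContaminationEstimator(window=10)
for d in [0.05] * 8 + [0.4, 0.4]:
    eps_hat = est.update(d)
chosen = select_predictor(eps_hat, threshold=0.15, pt_dcd="PT-DCD", ipt_dcd="IPT-DCD")
```

In a deployed system the threshold would be chosen from the crossover point observed in the experiments, and some hysteresis around it would likely be needed to avoid rapid switching between predictors.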

5.4. Towards Practical Application and Extension

Although teleoperation technologies can be applied in various fields (e.g., surgery, mobility, and unmanned ground vehicles), the degree of performance improvement may vary across systems, requiring system-specific research. To achieve the desired performance from predictors that mitigate communication delays, resampling the datasets of the control target is highly recommended. Readers should note that this study focused specifically on the vehicle steering angle in remote driving systems, so the proposed method may not perform optimally under other conditions. In particular, because the model is sensitive to its training parameters, sufficient performance improvement may not be achieved unless the training data are collected directly from the target system. Additionally, although high measurement integrity is required, real-world data are often noisy and unreliable. With the aid of clock synchronization tools such as chrony, this study assumed that steering torque data could be measured precisely and that communication delays could be computed accurately. Therefore, applying the proposed predictor to real-time systems requires accurate communication delay estimation and an environment that provides reliable measurement data.

6. Conclusions

We proposed IPT-DCD, a deep learning-based interpolation predictor designed to mitigate dynamic communication delays in teleoperation environments. Experimental results under varying outlier contamination ratios of communication delays demonstrated that IPT-DCD was more robust than PT-DCD under high contamination, whereas PT-DCD performed better under passive communication delays. These findings suggest that the two methods have complementary strengths in delay compensation: PT-DCD is more effective for passive delays, while IPT-DCD excels in the presence of delay outliers. Consequently, by dynamically selecting the predictor based on the contamination ratio, delay compensation can be optimized across a wide range of real-world communication scenarios. This work lays a foundation for practical, delay-resilient teleoperation. The proposed approach can be applied not only to teleoperation systems such as microsurgery [4], mobile robots [14], and unmanned ground vehicles (UGVs) [11], but also to other domains requiring predictive compensation mechanisms, such as impedance control in human–robot collaboration [52]. Future work may explore advanced hybrid frameworks, real-time estimation of the contamination ratio, and multi-modal prediction architectures.