1. Introduction

Meteorological forecasts play a critical role in disaster preparedness and significantly impact decision-making processes in various aspects of daily life and industry operations. Accurate weather forecasting is vital not only for personal choices such as attire selection or travel plans but also for industries such as agriculture, construction, aviation, maritime operations, and energy production, aiding them in efficient resource management [1,2,3,4,5,6,7]. Furthermore, accurate forecasts can significantly reduce risks and optimise future activities. The real-time processing of extensive datasets generated by sensor measurements and ensuring the accuracy and reliability of such data are paramount for effective forecasting.

Accurate weather forecasts are also crucial for reducing human casualties and property losses during natural disasters. Timely predictions facilitate early warnings and effective mitigation strategies [8]. Traditionally, weather data is collected via electronic sensors, manual methods, and various Internet of Things (IoT) devices, which provide detailed measurements of atmospheric conditions, including temperature, pressure, humidity, wind speed, and direction. IoT sensors significantly streamline data collection processes and enhance forecast accuracy through continuous monitoring and comprehensive data analysis [9].

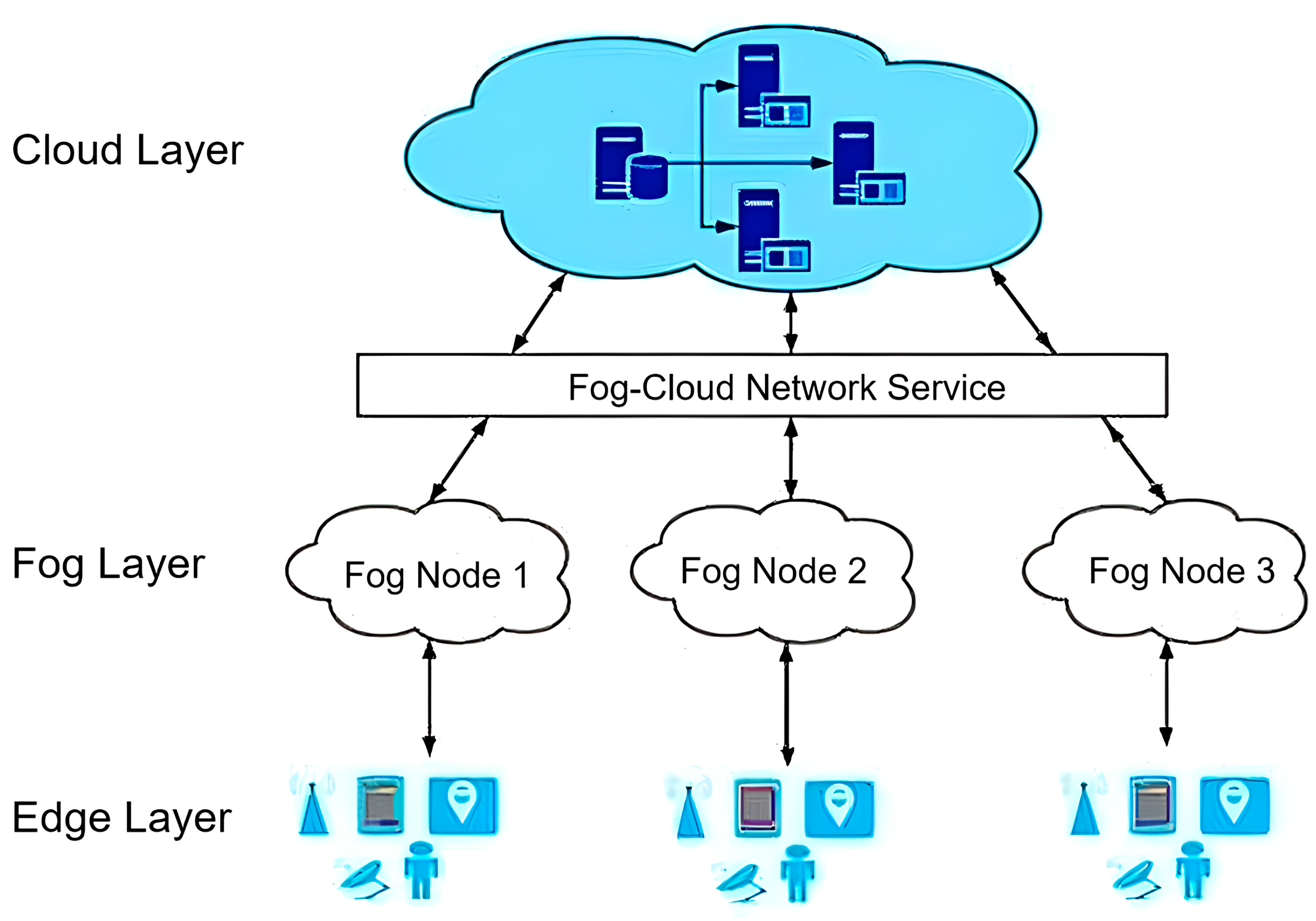

Following data collection, sensor data undergo preliminary transformations, including noise reduction, normalisation, and data structuring, to prepare for advanced analyses. Fog computing, a distributed computing model processing data close to its source rather than distant cloud servers, significantly improves processing efficiency by reducing latency and response time. This capability makes fog computing particularly beneficial for time-sensitive weather forecasts, ensuring swift and reliable predictions [10,11,12]. Fog computing and IoT technologies are increasingly pivotal in developing critical systems reliant on big data analysis across domains such as energy management, smart grids, and weather forecasting. Integrating these technologies enhances reliability, efficiency, and accuracy, leading to more informed decision-making [13,14].

Artificial Neural Networks (ANNs) have emerged as robust analytical tools in weather forecasting due to their ability to model complex, nonlinear data patterns, surpassing traditional predictive models in accuracy. ANNs, trained on extensive datasets, uncover previously unnoticed patterns, making them ideal for predicting intricate atmospheric processes [15]. Their application significantly improves forecast accuracy, positively impacting sectors such as agriculture, transportation, and disaster management. In particular, ANNs demonstrate substantial benefits in scenarios requiring rapid, precise forecasts, enabling efficient resource utilisation and early risk detection [16].

Long Short-Term Memory (LSTM) networks have recently shown remarkable effectiveness in modelling time-series meteorological data, notably addressing long-term dependency limitations common to traditional Recurrent Neural Networks (RNNs). Studies indicate the superior performance of LSTM networks in forecasting variables such as temperature, precipitation, wind speed, and air pollution. For instance, convolutional LSTM networks have shown significant success in short-term precipitation forecasts, while LSTM-based models have demonstrated effectiveness in wind speed predictions and air pollution forecasts [17,18,19,20,21]. Furthermore, deep-learning methods, including LSTM models, have proven their efficiency in short-term temperature forecasts [22].

In addition to standard LSTM architectures, Bidirectional Long Short-Term Memory (BiLSTM) networks have recently gained prominence in meteorological forecasting due to their ability to process temporal sequences in both forward and backward directions. This bidirectional structure enables BiLSTM models to capture richer contextual dependencies, thereby improving predictive accuracy in complex, nonlinear atmospheric phenomena. Recent studies have demonstrated that BiLSTM models outperform unidirectional LSTM and traditional ANN models in various temperature forecasting tasks. For instance, Miao et al. [23] demonstrated that a hybrid GCN-BiLSTM model significantly reduced RMSE values in multi-city daily temperature forecasts when compared to LSTM, ANN, and ARIMA models. Khokhar et al. [24] further validated BiLSTM’s advantage by applying it to over a century of monthly climate data, where it achieved lower error rates compared to traditional statistical models. Zrira et al. [25] showed that an attention-enhanced BiLSTM model provided superior accuracy in sea surface temperature prediction compared to several advanced machine learning algorithms, including LSTM and Transformer models. More recently, Zhang et al. [26] proposed a CEEMDAN–BO–BiLSTM hybrid framework that yielded a remarkably low MAPE of 0.31% in monthly temperature forecasting, significantly surpassing conventional BiLSTM and LSTM models. Collectively, these findings underscore BiLSTM’s capacity to enhance temperature prediction accuracy, especially when integrated with signal decomposition or spatial learning components, making it a powerful tool for weather forecasting in data-scarce or complex environments.

The existing literature underlines the advantages of integrating fog computing with machine learning methods. Abdulkareem et al. (2019) explored machine learning’s applications in fog computing, addressing aspects like resource management, accuracy, and security [27]. Kaur et al. (2020) developed an energy-efficient framework leveraging IoT and fog-cloud computing for early wildfire predictions [28]. Farooq et al. (2021) demonstrated ANN integration with fog computing for enhanced energy efficiency, and recent studies have focused on optimising energy consumption forecasts using IoT and deep learning [29]. Hybrid modelling approaches also show significant promise in enhancing forecasting accuracy, as demonstrated in recent studies [26,27,28,29,30,31,32].

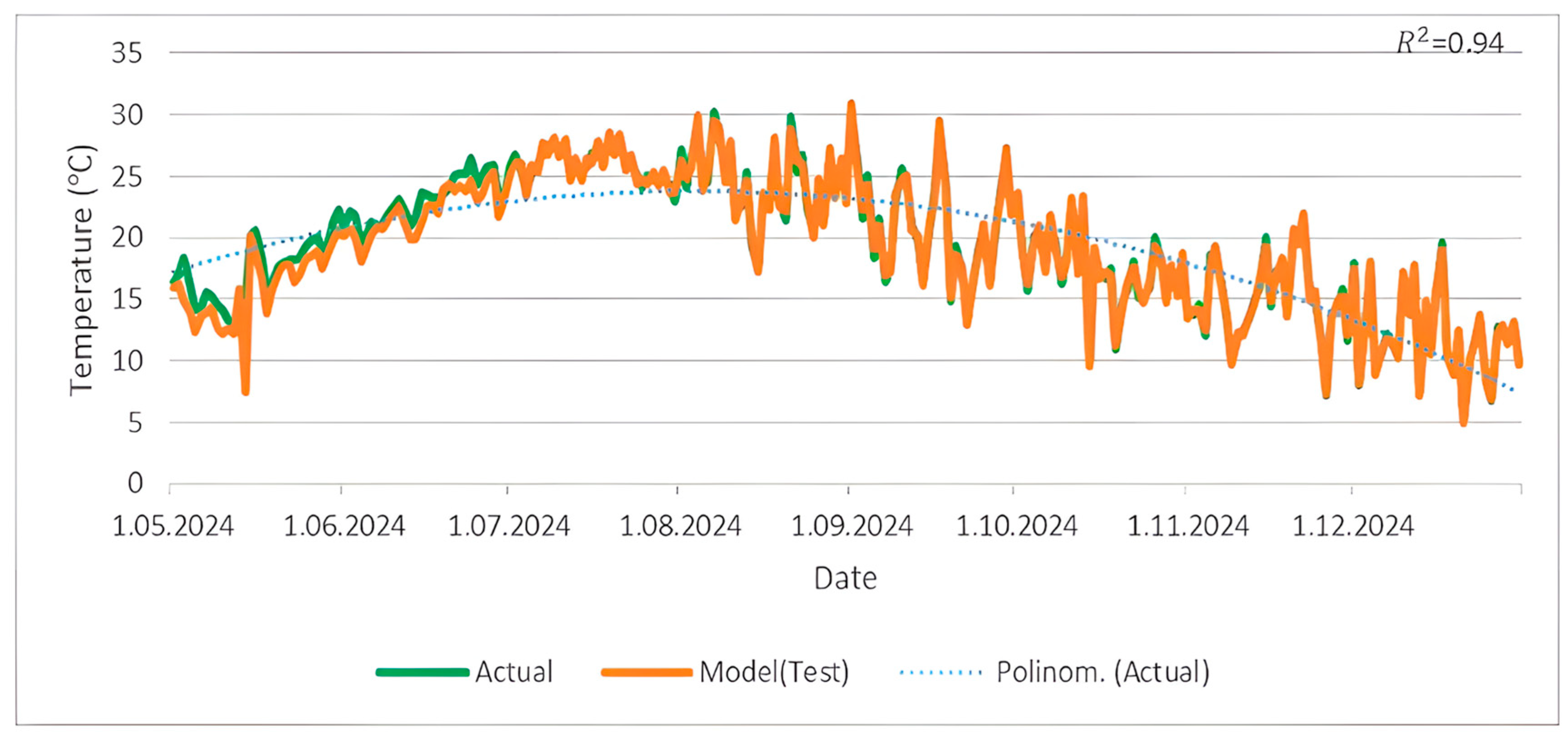

Although several studies have explored temperature forecasting using deep learning or IoT individually, few have combined wavelet-enhanced hybrid models with fog computing in real-time urban deployments under infrastructural constraints. To address this gap, this study presents a novel real-time temperature forecasting framework integrating IoT-based data acquisition, fog computing, and hybrid deep-learning models. A custom-built IoT system was installed atop a residential building in Şişli, Istanbul—selected for its representative urban characteristics—to collect hourly temperature, pressure, and humidity data from July 2022 to December 2024. The dataset was pre-processed using Discrete Wavelet Transform (DWT) to enhance signal quality and remove noise. Three hybrid models—W-ANN, W-LSTM, and W-BiLSTM—were then comparatively evaluated using TSMS ground-truth data for the May–December 2024 period. Among these, the W-BiLSTM model achieved the best performance, with a Mean Absolute Percentage Error (MAPE) of 2%, a test accuracy of 97%, and 94% concordance with official meteorological records. Although all models performed well in high-temperature scenarios, challenges remained in accurately predicting low-temperature events. The primary methodological contributions of this study are threefold: (i) the design and implementation of an IoT-based weather monitoring system in an urban environment characterised by data scarcity; (ii) the integration and comparative evaluation of three wavelet-enhanced hybrid deep-learning models (W-ANN, W-LSTM, and W-BiLSTM); and (iii) the proposal of a fog computing architecture, based on ESP32 (Espressif Systems, Shanghai, China) and AWS (Amazon Web Services, Seattle, WA, USA), enabling low-latency, edge-level data processing. 
These contributions collectively aim to provide a feasible and accurate approach to weather forecasting in regions with insufficient official infrastructure, thereby offering a valuable foundation for future smart city deployments and adaptive environmental monitoring systems.

3. Materials and Methods

3.1. Data Used

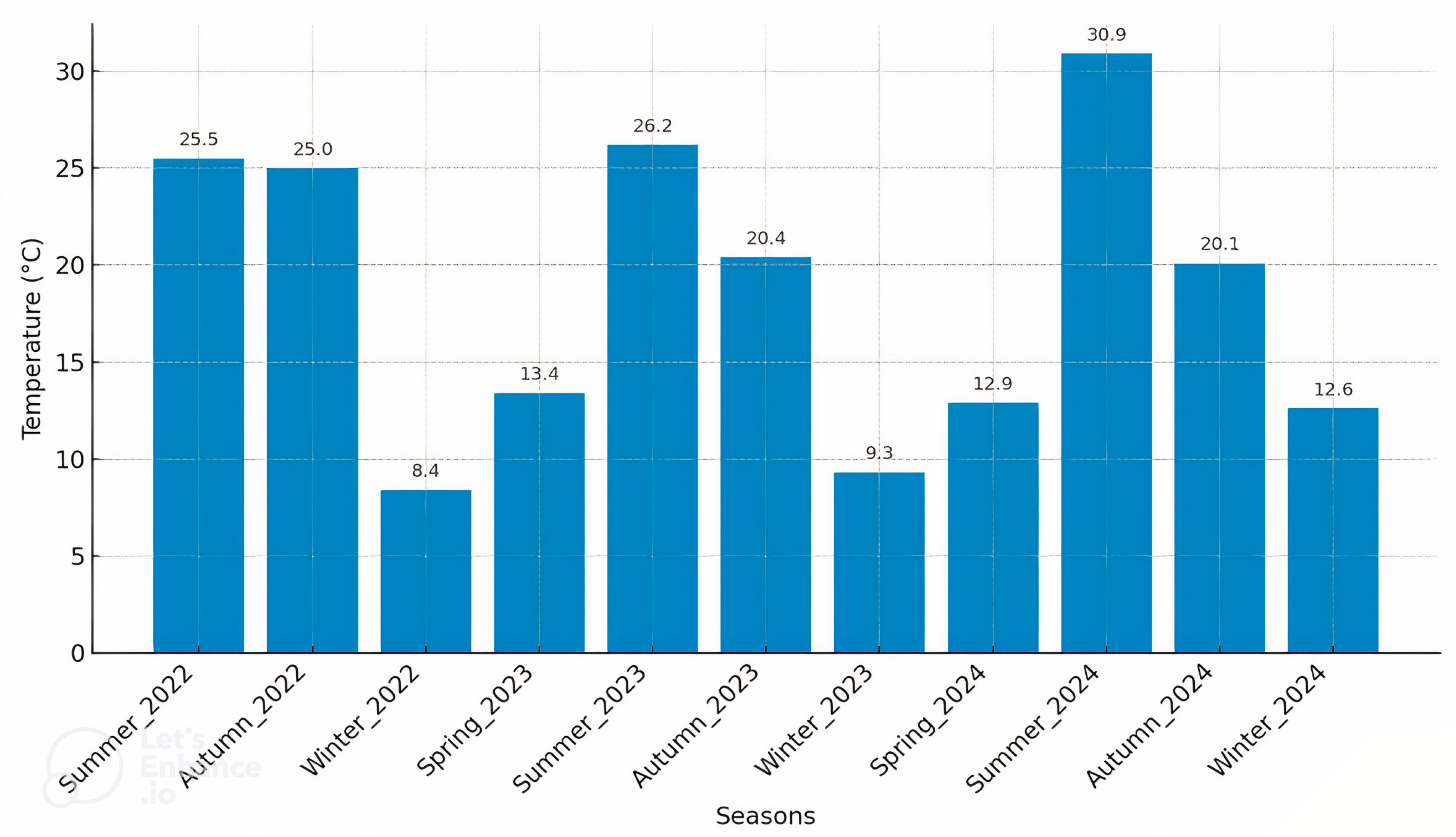

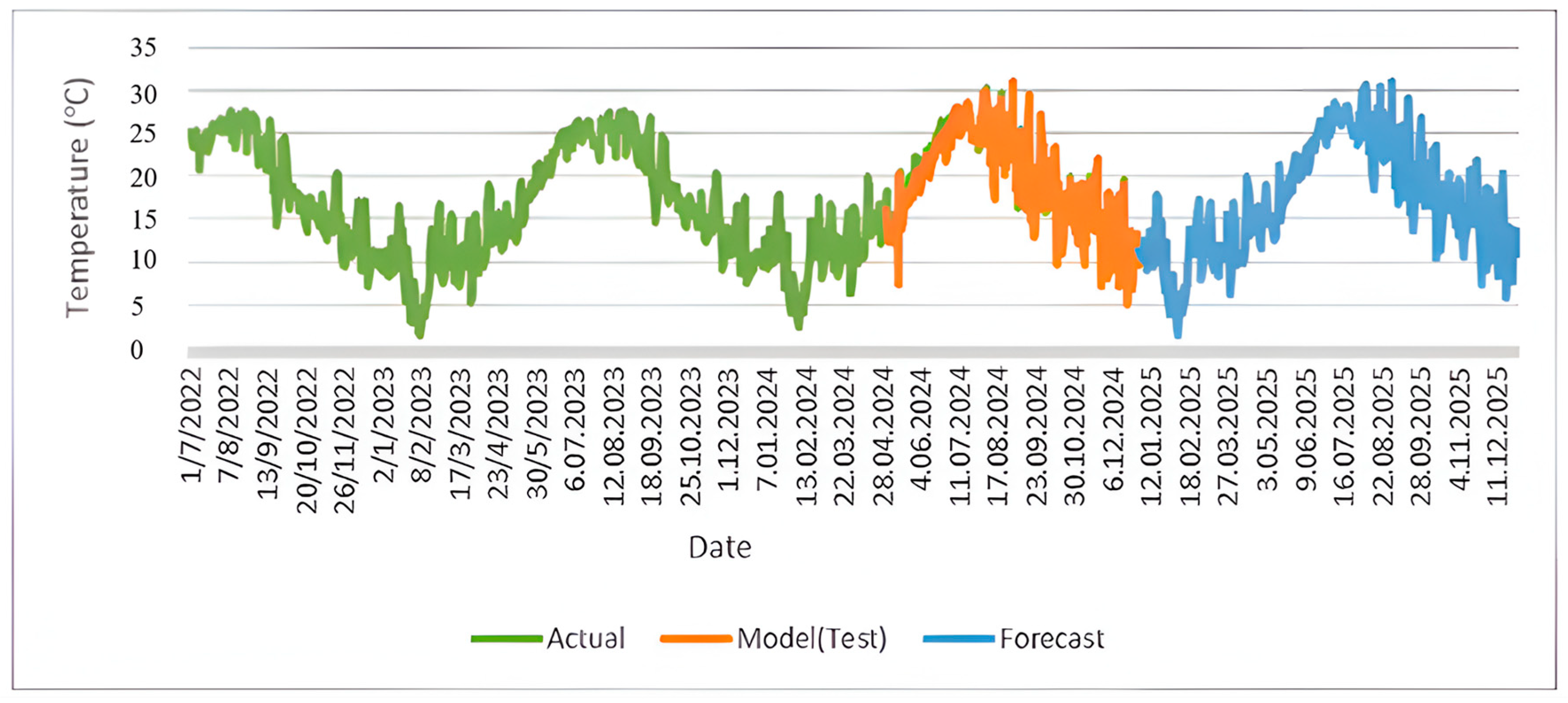

Critical weather parameters, including temperature, pressure, and humidity, were systematically collected using a custom-built IoT sensor system strategically positioned atop a residential building in Şişli, Istanbul. This location was specifically chosen due to its representative urban characteristics. The data collection spanned hourly measurements from July 2022 to December 2024, resulting in a comprehensive dataset comprising 21,960 hourly observations per parameter. Annual average temperatures were calculated to characterise the observed climatic conditions clearly. The average temperature for the partial measurement period from July to December 2022 was found to be 19.4 °C, while the subsequent full-year averages for 2023 and 2024 were determined as 16.1 °C and 16.8 °C, respectively. A detailed seasonal temperature analysis, illustrated in Figure 2, highlights distinct fluctuations across seasons. Notably, the summer of 2022 recorded an average temperature of 25.5 °C, transitioning to 25 °C in autumn 2022 and sharply declining to 8.4 °C in winter 2022. Similarly, fluctuations persisted throughout subsequent years; summer 2023 had an average of 26.2 °C, whereas winter 2023 averaged only 9.3 °C. The substantial variation observed from summer 2024 (30.9 °C) to winter 2024 (12.6 °C) further emphasises pronounced seasonal variability. Overall, these patterns demonstrate warmer temperatures during summer and autumn, contrasted by notably cooler conditions in winter and spring.

Due to the absence of direct temperature measurements for the year 2025, predictive models developed within this study were employed to generate temperature forecasts. The accuracy and reliability of these model predictions were rigorously validated through comparisons with observational data obtained from the TSMS. The close alignment between predicted and observed values confirmed the predictive capability and potential applicability of the developed forecasting models for practical meteorological purposes.

Generating Data for Weather Forecasting

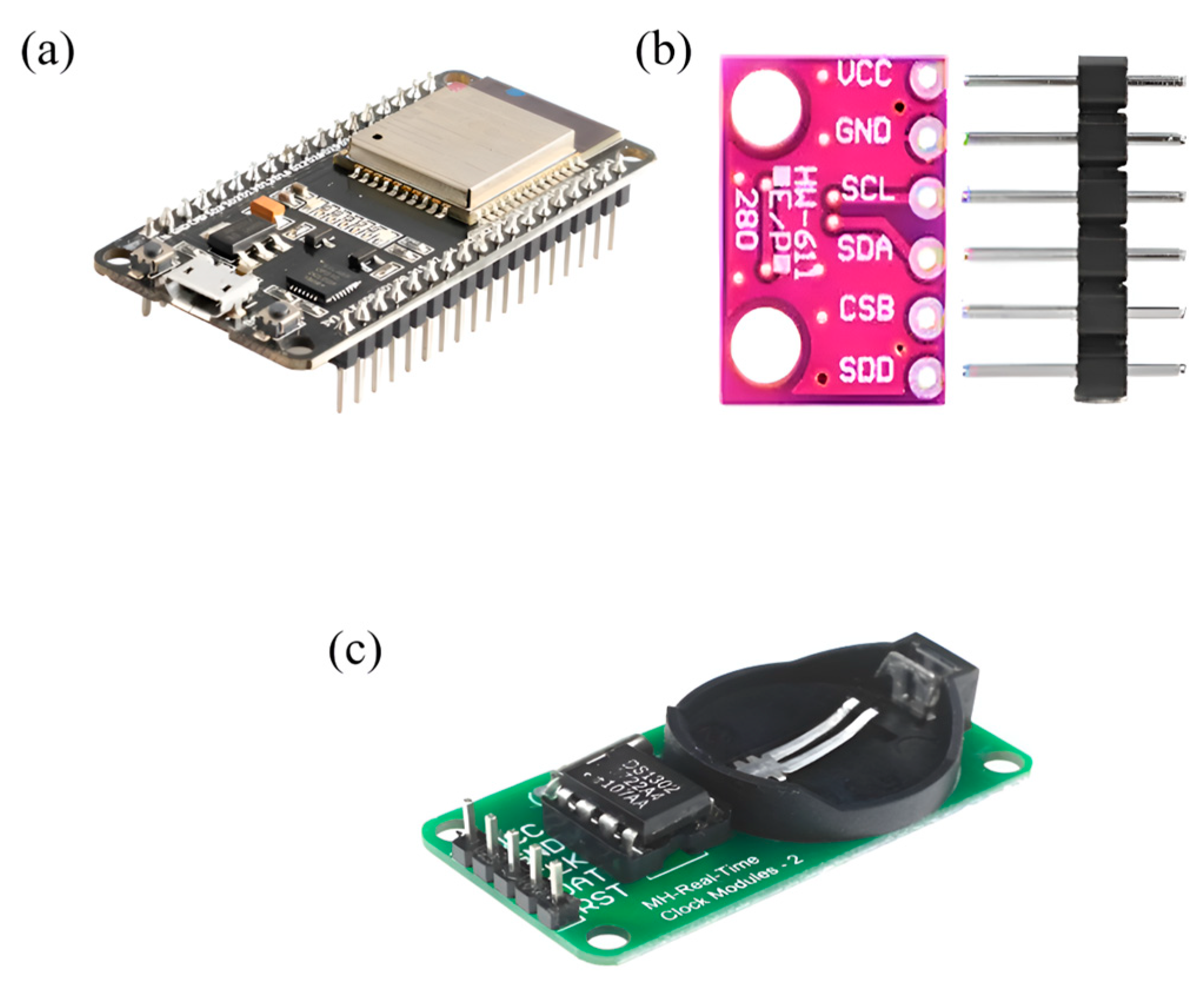

In the development of the platform, raw data were generated using an ESP32 microcontroller, supported by environmental sensors and a time regulator. As shown in Figure 3, the data acquisition system included (a) an ESP32 microcontroller for wireless processing and communication; (b) a BME280 (Bosch Sensortec GmbH, Reutlingen, Germany) sensor module for temperature, humidity, and pressure measurement; and (c) a DS1302 (Maxim Integrated, San Jose, CA, USA) real-time clock (RTC) module for maintaining accurate time-stamping throughout data collection.

In this study, a Bosch BME280 sensor was employed as a combined digital module for measuring humidity, pressure, and temperature. It operates based on proven detection principles and is housed in a compact metal-lidded LGA package with a height of 0.93 mm and a footprint of 2.5 mm × 2.5 mm. The sensor provides measurements with an accuracy of ±1 °C for temperature, ±1 hPa for pressure, and ±3% for relative humidity [34]. To accurately log the time of each observation, the DS1302 real-time clock (RTC) module was utilised. This chip operates within a wide voltage range of 2.5 V to 5.5 V and supports two power supplies. It also includes 31 bytes of static RAM and communicates with a microcontroller via a simple serial interface. The RTC module records real-time information such as seconds, minutes, hours, day, date, month, and year, thus ensuring the precise temporal annotation of meteorological data. Additionally, the ESP32 microcontroller was integrated into the system due to its high performance and embedded Wi-Fi and Bluetooth capabilities, which are essential for developing IoT-based applications. The ESP32 operates reliably across a temperature range from −40 °C to +125 °C, making it suitable for deployment in industrial environments [35].

3.2. Methodology

This study aims to enhance the accuracy and reliability of weather forecasting through a multi-layered approach incorporating IoT, fog computing, and advanced data processing techniques. The methodology is structured as follows.

Weather parameters, including temperature, humidity, and pressure, were collected on an hourly basis from July 2022 to December 2024, resulting in a total of 21,960 data points per parameter, corresponding to 915 days of observation. During the data acquisition process, partial data losses occurred due to sensor malfunctions and intermittent transmission failures. Specifically, temperature data exhibited a 1.5% loss, equating to approximately 329 missing values; humidity data showed a 2.3% loss, corresponding to approximately 505 missing values; and pressure data experienced a 1.8% loss, amounting to approximately 395 missing values. These missing observations were addressed through imputation techniques during the pre-processing stage, wherein missing values were estimated and replaced based on statistical and temporal patterns within the dataset. This procedure ensured the continuity and integrity of the data. Subsequently, all parameters were normalised using min–max scaling in preparation for further analysis and predictive modelling.
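The pre-processing steps above can be illustrated with a minimal sketch. The text does not name the imputation technique, so linear interpolation between neighbouring valid readings is used here purely as an assumption; the function names are illustrative and not taken from the study. The first helper also reproduces the observation count: 915 days at 24 readings per day gives 21,960.

```python
from datetime import date


def expected_hourly_count(start, end):
    """Number of hourly slots between two dates, both inclusive."""
    return ((end - start).days + 1) * 24


def interpolate_missing(values):
    """Fill None gaps by linear interpolation (assumed imputation method).

    Gaps at the start or end of the series are filled with the nearest
    valid observation.
    """
    filled = list(values)
    known = [i for i, v in enumerate(filled) if v is not None]
    if not known:
        raise ValueError("series has no valid observations")
    for i in range(known[0]):                      # leading gap
        filled[i] = filled[known[0]]
    for i in range(known[-1] + 1, len(filled)):    # trailing gap
        filled[i] = filled[known[-1]]
    for left, right in zip(known, known[1:]):      # interior gaps
        step = (filled[right] - filled[left]) / (right - left)
        for i in range(left + 1, right):
            filled[i] = filled[left] + step * (i - left)
    return filled


def min_max_scale(values):
    """Rescale a series to the interval [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


hours = expected_hourly_count(date(2022, 7, 1), date(2024, 12, 31))
clean = interpolate_missing([20.0, None, None, 23.0])
scaled = min_max_scale(clean)
```

In practice the scaling bounds would be taken from the training portion only and reused for the test period, so that no information from the evaluation data leaks into the model inputs.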

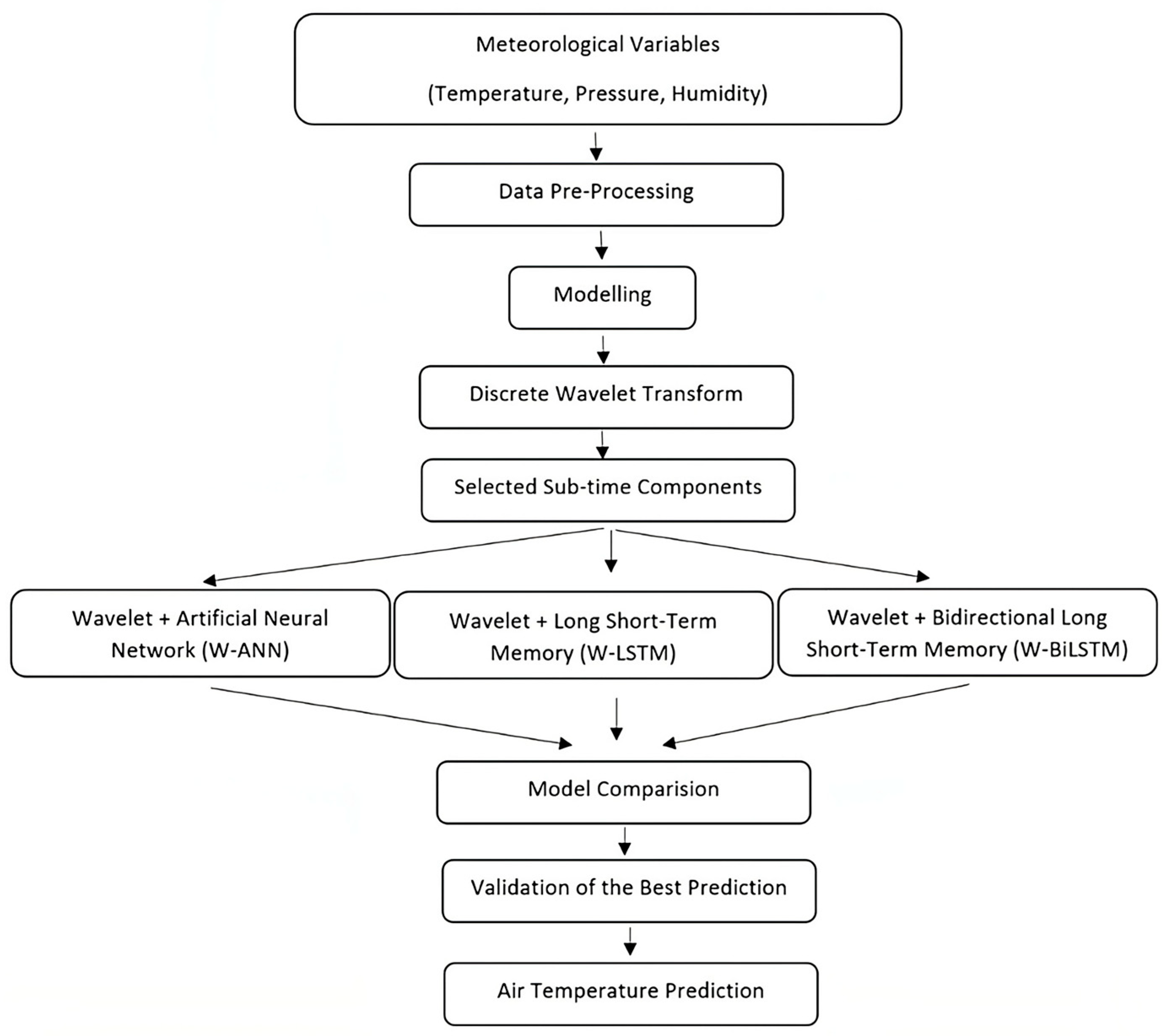

The forecasting process was conducted using three advanced hybrid models: W-ANN, W-LSTM, and W-BiLSTM. These models integrated Wavelet Transform for feature extraction with ANN- and LSTM-based architectures to enhance predictive performance. By leveraging the capacity of wavelet transformation to represent both temporal and frequency domain characteristics, the models exhibited improved capability in capturing complex patterns and trends inherent in meteorological variables.

A comparative analysis was performed to assess the forecasting accuracy of the W-ANN, W-LSTM, and W-BiLSTM models, with a particular focus on temperature prediction performance. The findings of this analysis provide valuable insights for the implementation of intelligent forecasting systems in real-world meteorological applications, particularly in environments where reliable measurement infrastructure is lacking.

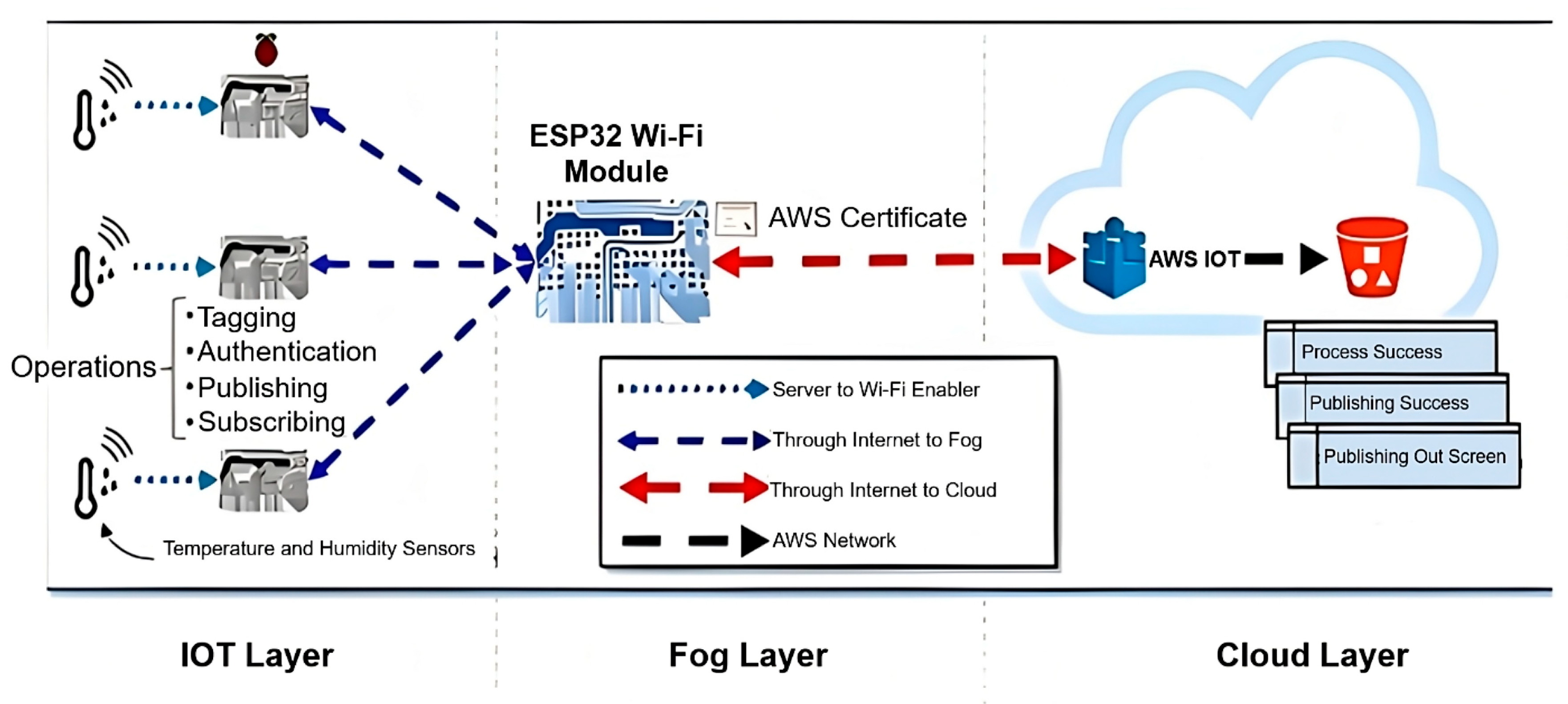

The architecture of the fog computing layer, as illustrated in Figure 4, was designed to enable efficient data processing between the IoT and cloud layers. This intermediate layer plays a crucial role in reducing latency and enhancing the performance of real-time predictions by bringing computational operations closer to the data source. Specifically, the fog layer, implemented using an ESP32 Wi-Fi module, was tasked with performing key functions such as data tagging, authentication, and publishing. The ESP32 was selected due to its technical advantages over other microcontrollers such as the ESP8266. In particular, the ESP32 features a dual-core processor, higher clock speed (up to 240 MHz), larger SRAM (520 KB), and integrated Wi-Fi and Bluetooth capabilities. These specifications make it more suitable for multitasking, low-latency communication, and energy-efficient IoT operations, all of which are essential for the fog computing environment required in this study. Temperature and humidity data collected via sensors were initially processed within this layer before being transmitted to the cloud for further analysis. This architecture ensured both data integrity and system reliability, while also significantly reducing processing delays [36]. To assess the practical viability of the proposed system, a basic financial estimation was also conducted. The total cost incurred in 2025 for deploying the fog-based forecasting system, including hardware components (e.g., ESP32, BME280, RTC module), cloud services, and system integration, amounted to approximately USD 520. This cost-effective structure underscores the feasibility of implementing the proposed solution in real-world IoT or smart city applications.
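The tagging, authentication, and publishing duties assigned to the fog layer can be sketched as follows. This is only an illustration of the idea in desktop Python, not the firmware used in the study: the device identifier, shared key, topic name, message layout, and the choice of an HMAC-SHA256 signature are all assumptions, since the text does not specify how readings are authenticated or transmitted.

```python
import hashlib
import hmac
import json

DEVICE_ID = "station-01"        # hypothetical device identifier
SHARED_KEY = b"replace-me"      # hypothetical pre-shared secret
TOPIC = "weather/readings"      # hypothetical topic name


def tag_reading(temperature_c, humidity_pct, timestamp_iso):
    """Data tagging: attach device identity and a timestamp to a raw reading."""
    return {
        "device_id": DEVICE_ID,
        "timestamp": timestamp_iso,
        "temperature_c": temperature_c,
        "humidity_pct": humidity_pct,
    }


def sign(message, key):
    """Authentication: HMAC-SHA256 over a canonical JSON encoding."""
    body = json.dumps(message, sort_keys=True, separators=(",", ":"))
    return hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()


def build_payload(message, key):
    """Wrap the tagged reading and its signature in one JSON envelope."""
    return json.dumps({"data": message, "signature": sign(message, key)})


def verify(payload, key):
    """Receiver-side check that the payload was not altered in transit."""
    envelope = json.loads(payload)
    expected = sign(envelope["data"], key)
    return hmac.compare_digest(expected, envelope["signature"])


def publish(payload, send):
    """Publishing: hand the payload to a caller-supplied transport function."""
    send(TOPIC, payload)


outbox = []
reading = tag_reading(21.4, 63.0, "2024-05-01T12:00:00Z")
payload = build_payload(reading, SHARED_KEY)
publish(payload, lambda topic, body: outbox.append((topic, body)))
```

Passing the transport in as a function keeps the sketch independent of any particular messaging client; on a real device this argument would be whatever publish call the chosen cloud service provides.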

The developed models were tested through simulations, in which temperature forecasts for the year 2025 were generated in the absence of direct observational data. The reliability of the predictions was validated by comparing the models’ outputs with historical and real-time data obtained from the TSMS, thereby confirming the consistency and accuracy of the forecasting approach. The integration of fog computing with IoT infrastructure and advanced predictive models substantially enhances the efficiency and responsiveness of weather forecasting systems.

3.3. Modelling

3.3.1. LSTM Model

LSTM models are recognised for their innovative and scalable approach to learning from sequential data, often outperforming CNN and RNN models, particularly in managing long-term dependencies. While RNNs are effective for short-term dependencies, they encounter difficulties with long-term dependencies due to the “vanishing gradient” and “exploding gradient” problems: gradients propagated back through many time steps either shrink towards zero, which stalls learning, or grow without bound, which destabilises training. To mitigate these limitations, LSTM models incorporate memory cells regulated by three key gates: the Forget Gate, which determines which information to discard; the Input Gate, which controls the new information stored; and the Output Gate, which regulates how much of the cell state is passed on as output. This gating mechanism allows LSTM models to effectively capture complex, long-term temporal relationships, making them particularly well-suited for tasks that require the analysis of sequential data.
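To make the gating mechanism concrete, the sketch below computes a single time step of an LSTM cell with a scalar input and a scalar hidden state. It follows the standard LSTM formulation rather than any configuration reported in this study; the parameter layout (one weight pair and bias per gate) and all names are illustrative assumptions.

```python
import math


def sigmoid(z):
    """Logistic function, used by the three gates."""
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a scalar LSTM cell.

    params maps each of "forget", "input", "candidate", "output"
    to a tuple (w_x, w_h, bias).
    """
    def pre_activation(name):
        w_x, w_h, bias = params[name]
        return w_x * x + w_h * h_prev + bias

    f = sigmoid(pre_activation("forget"))        # how much old memory to keep
    i = sigmoid(pre_activation("input"))         # how much new content to store
    g = math.tanh(pre_activation("candidate"))   # candidate new content
    o = sigmoid(pre_activation("output"))        # how much memory to expose

    c = f * c_prev + i * g       # updated cell state (long-term memory)
    h = o * math.tanh(c)         # updated hidden state (cell output)
    return h, c


# With all weights at zero every gate sits at 0.5 and the candidate is 0,
# so the cell simply halves its previous memory.
zero_params = {name: (0.0, 0.0, 0.0)
               for name in ("forget", "input", "candidate", "output")}
h, c = lstm_step(x=1.0, h_prev=0.0, c_prev=2.0, params=zero_params)
```

The additive update of the cell state is what lets gradients flow across many time steps: when the forget gate stays close to 1, past information is carried forward largely unchanged.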

3.3.2. ANN_NARX Model

Defining artificial intelligence (AI) is challenging due to its application across diverse fields, including medicine, physics, psychology, and computer science. AI technology aims to simulate complex human-like processes such as problem-solving, learning, decision-making, and language or image processing. It achieves these goals through machine learning, deep learning, natural language processing, and expert systems. Among these, ANNs are crucial, providing a solid foundation for AI in various applications, including modelling, prediction, and classification [37,38,39,40]. ANNs are inspired by biological nervous systems and mimic their functions, using artificial neurons and synapses to process data and generate outputs. The performance of an ANN improves through training. In this study, time series estimation was conducted using the ANN method. Specifically, the NARX model, known for its success in time series prediction, was employed [41,42]. The NARX model is noted for providing more accurate and faster predictions than traditional feedback networks [43,44]. The mathematical formulation of the NARX model is given in Equation (1) [45].

$$
y(t) = f\big(y(t-1),\, y(t-2),\, \ldots,\, y(t-n_y),\; x(t-1),\, x(t-2),\, \ldots,\, x(t-n_x)\big) \tag{1}
$$

In the equation, $y(t-1), y(t-2), \ldots, y(t-n_y)$ represent the previous output values of the network, $x(t-1), x(t-2), \ldots, x(t-n_x)$ are the previous input values of the network, and $f$ is the nonlinear mapping learned by the network. The parameter $n_x$ is the number of past input values supplied to the network, and the parameter $n_y$ indicates how many previous outputs are used as feedback.
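The lagged outputs and inputs described above can be arranged into training rows as in the following sketch. It only shows how the regressor vectors are built; the nonlinear mapping itself would be the trained network. The function name and the ordering of values within a row are illustrative assumptions.

```python
def narx_regressors(y, x, n_y, n_x):
    """Build NARX training pairs from an output series y and an input series x.

    Each feature row is [y(t-1), ..., y(t-n_y), x(t-1), ..., x(t-n_x)]
    and the matching target is y(t).
    """
    if len(y) != len(x):
        raise ValueError("y and x must have the same length")
    start = max(n_y, n_x)          # first t with a full history available
    features, targets = [], []
    for t in range(start, len(y)):
        past_outputs = [y[t - k] for k in range(1, n_y + 1)]
        past_inputs = [x[t - k] for k in range(1, n_x + 1)]
        features.append(past_outputs + past_inputs)
        targets.append(y[t])
    return features, targets


# Toy series: y could be temperature and x an exogenous variable such as pressure.
features, targets = narx_regressors(
    y=[1, 2, 3, 4, 5], x=[10, 20, 30, 40, 50], n_y=2, n_x=1
)
```

During training the true past outputs are available, as here. When forecasting several steps ahead, the model's own earlier predictions take the place of the unknown past outputs.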

3.3.3. BiLSTM Model

BiLSTM networks represent an extension of the conventional LSTM architecture, specifically designed to enhance the modelling of sequential data by incorporating information from both past and future time steps. Unlike standard LSTM networks, which process input sequences in a single (forward) temporal direction, BiLSTM networks employ two separate LSTM layers: one processes the sequence from the beginning to the end (forward pass), while the other processes it from the end to the beginning (backward pass). The outputs of both directions are subsequently concatenated or combined, allowing the model to access a more comprehensive temporal context at each time step.
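The forward pass, backward pass, and per-step combination can be sketched as below. To keep the example short, a plain tanh recurrent cell stands in for the LSTM cell, so this illustrates the bidirectional wrapping only and is not a BiLSTM implementation; the weights are arbitrary illustrative values.

```python
import math


def run_direction(sequence, w_x, w_h):
    """Run a simple tanh recurrent cell and return the hidden state at each step."""
    h, states = 0.0, []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states


def bidirectional(sequence, forward_weights=(0.5, 0.3), backward_weights=(0.4, 0.2)):
    """Pair forward and backward hidden states for every time step."""
    forward = run_direction(sequence, *forward_weights)
    # Process the reversed sequence, then flip the states back so that
    # index t of both lists refers to the same time step.
    backward = run_direction(sequence[::-1], *backward_weights)[::-1]
    return list(zip(forward, backward))


states = bidirectional([1.0, 2.0, 3.0])
```

At the first time step the forward state has seen only the first value, while the backward state already summarises the whole sequence; this is the additional context the bidirectional layout provides. For forecasting, the backward pass runs over the historical input window only, never over values beyond the forecast origin.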

This bidirectional structure enables BiLSTM networks to capture dependencies that span both directions in time, which is particularly valuable in tasks where future states influence the interpretation of previous ones—such as in speech recognition, natural language processing, and time-series forecasting. Internally, each LSTM cell in the BiLSTM model consists of memory gates (input, forget, and output gates) that regulate the flow of information, effectively mitigating the vanishing gradient problem commonly observed in traditional RNNs. As a result, BiLSTM networks are capable of learning long-term dependencies and subtle temporal patterns more effectively.

Due to their enhanced ability to model complex temporal relationships, BiLSTM networks have become increasingly prevalent in applications involving nonlinear, noisy, or highly dynamic data—such as meteorological forecasting and environmental monitoring.

3.3.4. Wavelet Transform

Wavelets are widely used in signal processing, data analysis, and image processing [46,47]. Wavelet functions are capable of detecting and analysing the details of signals at different scales. In this way, they separate the time-varying and frequency-varying properties of a signal, which gives a more comprehensive understanding of it and supports successful results in various application areas.

The continuous wavelet transform of a signal $x(t)$ is computed with wavelet functions as in Equation (2) [48].

$$
\psi(a,b) = \frac{1}{\sqrt{|a|}} \int_{-\infty}^{\infty} x(t)\, \Psi^{*}\!\left(\frac{t-b}{a}\right) dt \tag{2}
$$

where

$\psi(a,b)$ = continuous wavelet transform coefficient,

$a$ = scaling parameter,

$b$ = translation parameter,

$\Psi$ = wavelet function (mother wavelet), with $^{*}$ denoting the complex conjugate.

This process determines how a signal is represented in both time and frequency, revealing its spectral information. Wavelet functions and transforms are effective tools for analysing signal properties and are widely applied in signal processing, data analysis, and image processing [49,50].

The significance of pre-processing techniques in time series forecasting has been extensively highlighted in the literature, as such methods substantially enhance both the accuracy and robustness of predictive models. In this context, the present study proposes a hybrid forecasting framework that integrates DWT with deep-learning models to improve the predictive performance in air temperature forecasting. Meteorological variables including temperature, humidity, and pressure were used as inputs for the proposed system. These variables were decomposed using the Daubechies ‘d4’ wavelet, which is widely acknowledged for its effectiveness in denoising time series data and revealing latent patterns. A three-level DWT decomposition was applied to each variable, resulting in four sets of wavelet coefficients: one approximation ($a_3$) and three detail coefficients ($d_3$, $d_2$, $d_1$). These coefficients enabled a structured and multi-resolution representation of the original signals, thereby facilitating noise reduction and the identification of meaningful signal characteristics. Following the decomposition, a correlation-based feature selection method was applied. For each wavelet subcomponent, the correlation with the target variable (air temperature) was calculated. Unlike prior studies by Partal and Kişi (2007) and Partal and Cigizoglu (2009), which utilised stricter thresholds of 0.2 and 0.3, respectively [51,52], the present study employed a more inclusive threshold of 0.1. This approach was adopted to preserve weaker yet potentially informative relationships that may reflect subtle dynamics within atmospheric systems. The selected subcomponents were used as input for three hybrid forecasting models: W-ANN, W-LSTM, and W-BiLSTM. Their overall framework is illustrated in Figure 5. The W-ANN model was implemented using a Nonlinear Autoregressive with Exogenous Input (NARX) structure, while W-LSTM and W-BiLSTM employed advanced recurrent neural network architectures. Notably, W-BiLSTM utilised a bidirectional processing strategy, enabling the capture of information from both past and future time steps, thereby improving temporal representation capabilities. All models were built and trained using the TensorFlow framework, ensuring efficient implementation and optimal hyperparameter configuration.
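The decomposition and selection steps can be illustrated with a small, dependency-free sketch. Several assumptions are made here and are not taken from the study: ‘d4’ is read as the four-coefficient Daubechies filter, the signal is extended periodically at its edges, its length is divisible by 8, and the selection helper expects each subcomponent to have already been brought to the same length as the target series (that reconstruction step is not shown).

```python
import math

# Four-tap Daubechies low-pass filter and its matching high-pass filter.
_S3, _NORM = math.sqrt(3.0), 4.0 * math.sqrt(2.0)
LOW = [(1 + _S3) / _NORM, (3 + _S3) / _NORM, (3 - _S3) / _NORM, (1 - _S3) / _NORM]
HIGH = [LOW[3], -LOW[2], LOW[1], -LOW[0]]


def dwt_level(signal):
    """One DWT level with periodic extension: returns (approximation, detail)."""
    n = len(signal)
    if n % 2:
        raise ValueError("signal length must be even")
    approx, detail = [], []
    for i in range(0, n, 2):
        window = [signal[(i + k) % n] for k in range(4)]
        approx.append(sum(h * v for h, v in zip(LOW, window)))
        detail.append(sum(g * v for g, v in zip(HIGH, window)))
    return approx, detail


def decompose(signal, levels=3):
    """Multi-level DWT: returns {"d1": ..., "d2": ..., "d3": ..., "a3": ...}."""
    components, approx = {}, list(signal)
    for level in range(1, levels + 1):
        approx, detail = dwt_level(approx)
        components[f"d{level}"] = detail
    components[f"a{levels}"] = approx
    return components


def pearson(u, v):
    """Pearson correlation coefficient; 0.0 if either series is constant."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    cov = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    var_u = sum((a - mu) ** 2 for a in u)
    var_v = sum((b - mv) ** 2 for b in v)
    if var_u == 0 or var_v == 0:
        return 0.0
    return cov / math.sqrt(var_u * var_v)


def select_components(components, target, threshold=0.1):
    """Keep subcomponents whose |correlation| with the target meets the threshold."""
    return [name for name, series in components.items()
            if abs(pearson(series, target)) >= threshold]


signal = [float((i * 7) % 5) for i in range(16)]
parts = decompose(signal)
kept = select_components({"trend": [1, 2, 3, 4], "noise": [1, -1, -1, 1]},
                         target=[2, 4, 6, 8])
```

Because the filter pair is orthogonal, the total energy of the coefficients equals the energy of the original signal, which gives a quick sanity check on any implementation. A maintained wavelet library would normally be used in place of this hand-written transform.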

3.3.5. Model Performance Measures

Error measures such as Mean Squared Error (MSE) and Root Mean Squared Error (RMSE) are essential for evaluating the performance of regression models. RMSE is particularly advantageous because it retains the same unit as the dependent variable, making interpretation more intuitive. MAPE is commonly used for comparing model performance across different datasets, as it expresses prediction errors in percentage terms. The coefficient of determination (R-squared) evaluates the proportion of the variance in the dependent variable that is explained by the model, thereby indicating the overall goodness of fit.
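For reference, the four measures named above can be written out directly from their standard definitions, as in the sketch below. The function names are illustrative. Note that MAPE divides by the observed value, so it is undefined when an observation equals zero and becomes unstable for temperatures close to 0 °C.

```python
import math


def mse(actual, predicted):
    """Mean Squared Error."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root Mean Squared Error, in the same unit as the target variable."""
    return math.sqrt(mse(actual, predicted))


def mape(actual, predicted):
    """Mean Absolute Percentage Error, in percent."""
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an observed value is zero")
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


observed = [10.0, 20.0, 30.0]
forecast = [12.0, 18.0, 33.0]
scores = {
    "mse": mse(observed, forecast),
    "rmse": rmse(observed, forecast),
    "mape": mape(observed, forecast),
    "r2": r_squared(observed, forecast),
}
```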

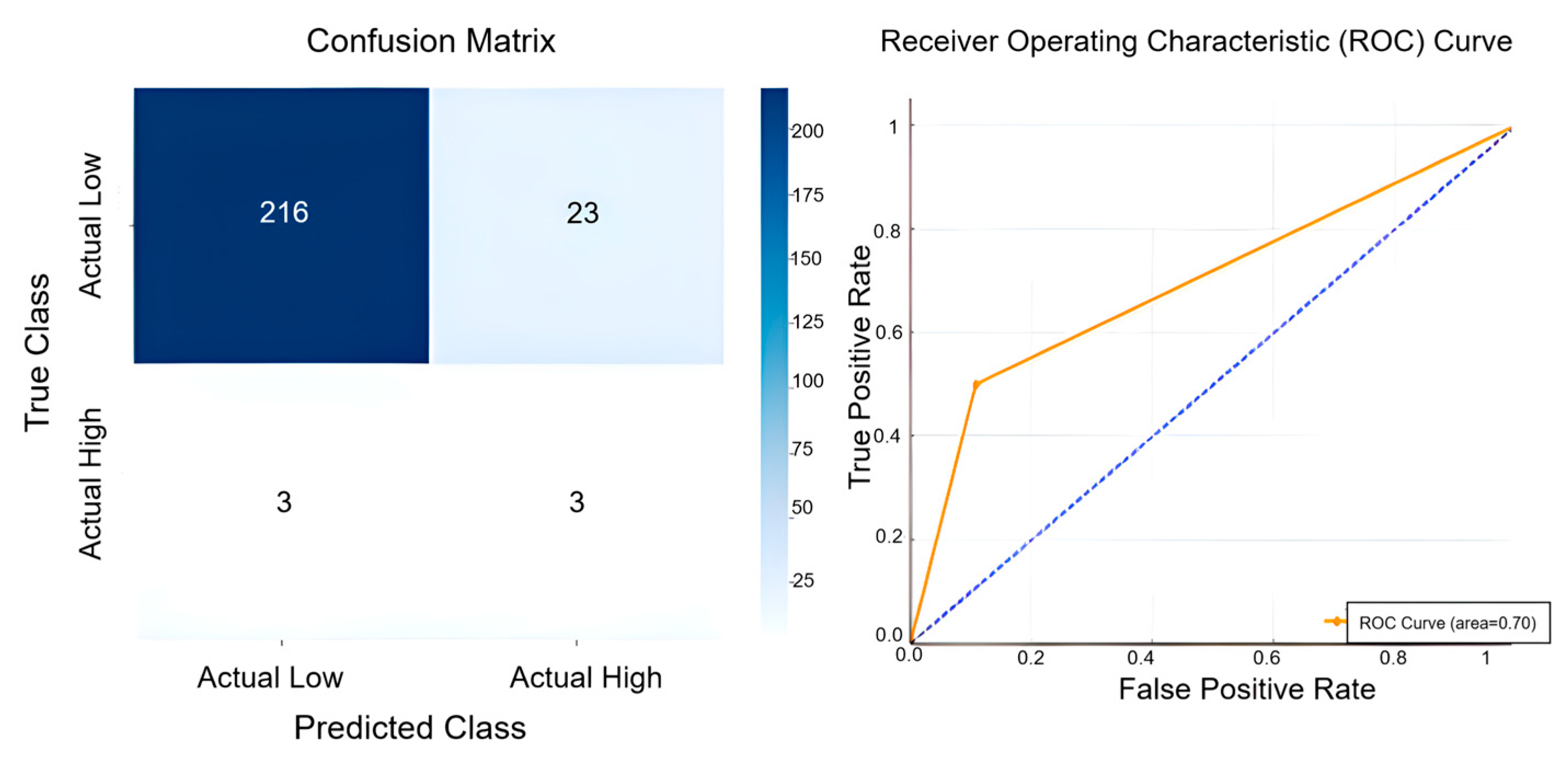

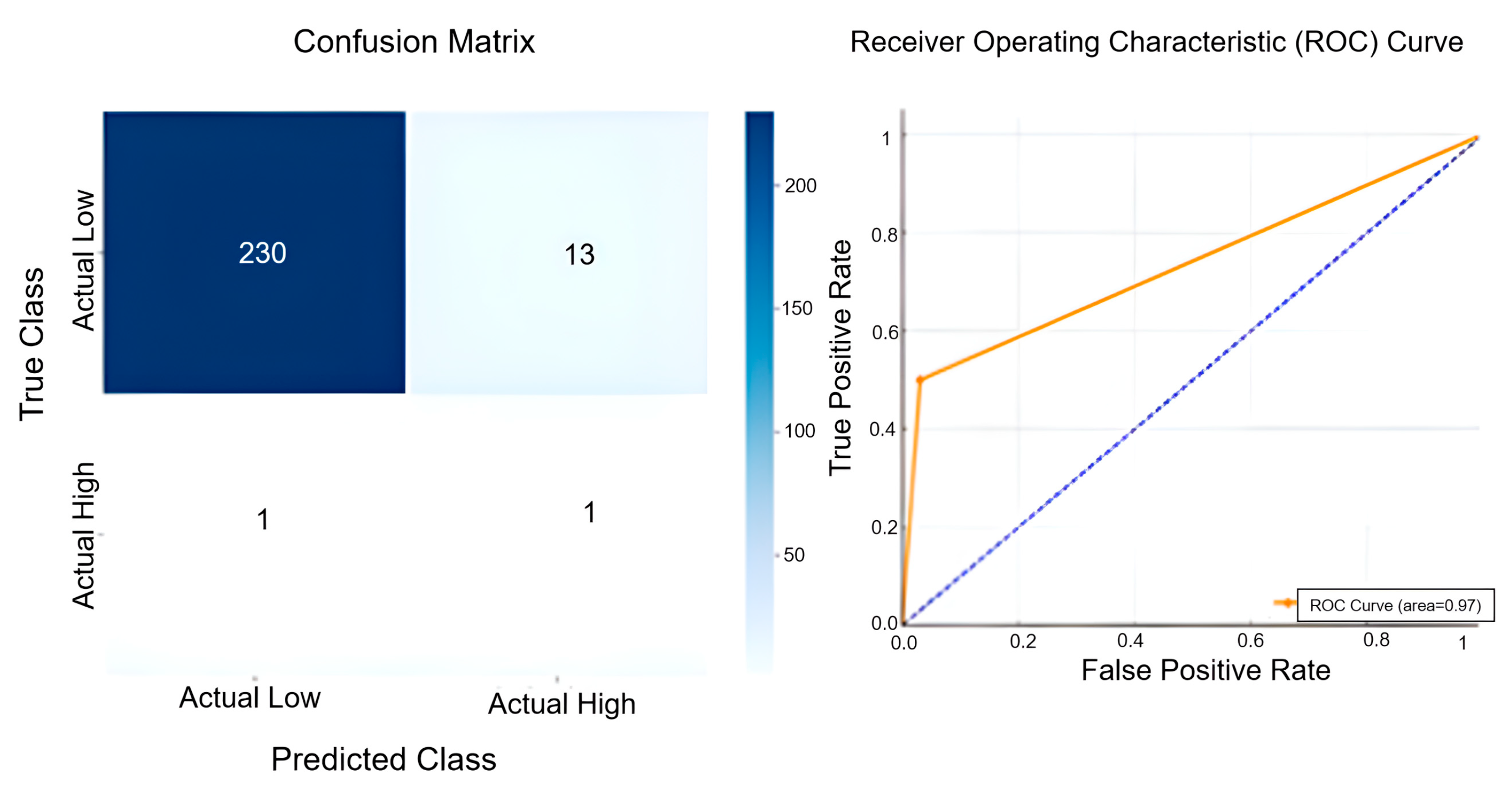

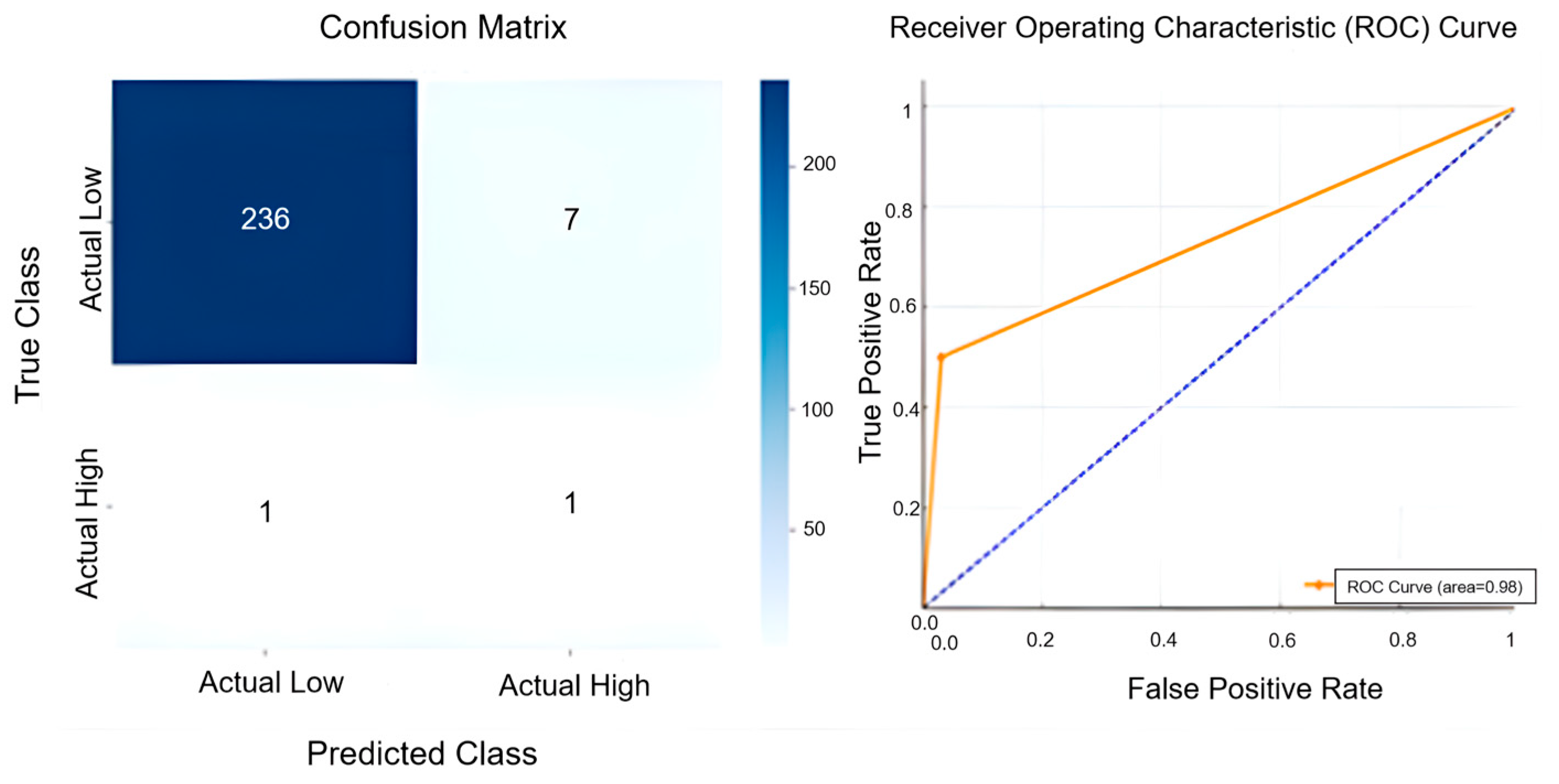

In cases where classification is involved, particularly when temperature predictions are divided into categories (e.g., low and high temperatures), the confusion matrix becomes a valuable tool. It provides insight into the model’s ability to correctly classify data points into different categories by showing the true positives, true negatives, false positives, and false negatives. This enables a more detailed analysis of where the model excels or struggles, such as distinguishing between low and high temperatures. The ROC (Receiver Operating Characteristic) curve and the AUC (Area Under the Curve) complement the confusion matrix by summarising the model’s discriminatory power across all classification thresholds rather than at a single cut-off.
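A minimal sketch of both tools is given below. The 15 °C cut-off between low and high temperatures is a hypothetical value chosen for illustration, as the text does not state the threshold used. The AUC is computed through its rank interpretation: the probability that a randomly chosen high-temperature case receives a higher score than a randomly chosen low-temperature case, with ties counted as one half.

```python
def confusion_counts(actual, predicted, threshold):
    """Count TP, TN, FP, FN, treating values >= threshold as the 'high' class."""
    counts = {"tp": 0, "tn": 0, "fp": 0, "fn": 0}
    for a, p in zip(actual, predicted):
        actual_high, predicted_high = a >= threshold, p >= threshold
        if actual_high and predicted_high:
            counts["tp"] += 1
        elif not actual_high and not predicted_high:
            counts["tn"] += 1
        elif predicted_high:
            counts["fp"] += 1      # predicted high, actually low
        else:
            counts["fn"] += 1      # predicted low, actually high
    return counts


def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    positives = [s for is_pos, s in zip(labels, scores) if is_pos]
    negatives = [s for is_pos, s in zip(labels, scores) if not is_pos]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


THRESHOLD_C = 15.0                       # hypothetical low/high cut-off
observed = [5.0, 12.0, 25.0, 30.0]
forecast = [8.0, 16.0, 22.0, 14.0]

matrix = confusion_counts(observed, forecast, THRESHOLD_C)
area = auc([t >= THRESHOLD_C for t in observed], forecast)
```

Here the forecast temperature itself serves as the score, so the AUC reflects how well the forecasts rank high-temperature hours above low-temperature hours regardless of where the cut-off is placed.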

Together, these error measures and evaluation tools make it possible to assess model performance comprehensively, compare different models, and make informed decisions to improve predictive accuracy and reliability.

6. Conclusions and Future Work

Accurate weather forecasting plays a vital role in safeguarding societies and supporting decision-making processes across various sectors. This study presented and evaluated three hybrid forecasting models—W-ANN, W-LSTM, and W-BiLSTM—each integrating DWT as a pre-processing technique. The experimental results demonstrated that all three hybrid approaches provided reasonable forecasting accuracy; however, the W-BiLSTM model yielded the highest overall performance, particularly in minimising prediction errors and improving classification around threshold values. The BiLSTM architecture’s ability to capture bidirectional temporal dependencies significantly contributed to its superior results. These findings support previous research indicating the effectiveness of hybrid deep-learning methods in managing the nonlinearity and complexity inherent in meteorological data. In addition, the study highlighted the importance of carefully optimising neural network architectures, such as through the number of hidden layer neurons, to maximise predictive performance.

Future studies may focus on testing these hybrid models across diverse climatic regions and extended temporal datasets to further validate generalisability. Moreover, the incorporation of attention mechanisms, ensemble learning strategies, or integration with Internet of Things (IoT)-enabled fog computing systems could further enhance forecasting capabilities. Such advancements hold the potential to improve the accuracy, responsiveness, and practical applicability of weather prediction systems in real-world contexts.