Abstract

In a multi-cell network, interference management between adjacent cells is a key factor that determines the performance of the entire cellular network. In particular, to control inter-cell interference while providing high data rates to users, it is essential for the base station (BS) of each cell to control its downlink transmit power appropriately. However, as the number of cells increases, controlling the downlink transmit power at each BS becomes increasingly difficult. In this paper, we propose a multi-agent deep reinforcement learning (MADRL)-based transmit power control scheme to maximize the sum rate in multi-cell networks. In particular, the proposed scheme incorporates a long short-term memory (LSTM) architecture into the MADRL scheme to retain state information across time slots and use that information for subsequent action decisions, thereby improving the sum rate performance. In the proposed scheme, the agent of each BS uses only its local channel state information; consequently, it does not need to receive signaling messages from adjacent agents. The simulation results show that the proposed scheme outperforms the existing MADRL scheme, reducing the amount of signaling exchanged between links while improving the sum rate.

1. Introduction

As the number of users requiring high-data-rate services in cellular networks rises, cell radii become smaller, which increases the importance of managing inter-cell interference [1,2,3]. In multi-cell networks, effective interference management is crucial for maintaining signal quality, maximizing spectral efficiency, ensuring reliable quality of service (QoS), and expanding network capacity [4]. The signal transmitted by a base station (BS) not only reaches its intended user but also causes unintended interference to users in other cells. Hence, effective transmit power control not only improves data rates by delivering stronger signals to intended users but also improves overall spectral efficiency by mitigating inter-cell interference [5]. Optimizing the transmit power of BSs is therefore essential to maintain signal quality, mitigate interference, and maximize spectral efficiency. Such intelligent transmit power control strategies enhance overall system capacity, improve user QoS, and achieve fair radio resource control across cells [6]. However, as more BSs are added, controlling the transmit power of each BS in a multi-cell network becomes increasingly difficult.

Recently, an increasing number of studies have applied reinforcement learning (RL) to the power control problem in wireless networks [7,8,9]. RL is a branch of machine learning (ML) in which an agent interacts with an environment and learns, through trial and error, a behavioral strategy that maximizes its cumulative reward [10]. Because RL can capture long-term dependencies and rapidly adapt to dynamically changing wireless environments, it has been widely used to solve transmit power control problems [11]. Deep reinforcement learning (DRL), which integrates deep neural networks (DNNs) with RL, effectively models state–action relationships in complex, high-dimensional environments, making it suitable for even more challenging wireless environments [12]. However, solving the power control problem for interacting BSs in a multi-cell network using RL or DRL can be challenging because it requires considering both the dynamic nature of the wireless environment and the interactions between BSs [13]. Consequently, many researchers have introduced multi-agent deep reinforcement learning (MADRL) to solve the power control problem in multi-cell networks. In MADRL, each agent learns an optimal policy in a dynamic environment by cooperating or competing with the other agents [12,14].

The transmit power control problem is inherently non-convex and computationally complex, and the challenge becomes more severe as the network size increases. Researchers have integrated techniques from geometric programming, game theory, information theory, and Pareto theory into multi-agent algorithms to address these issues [15]. These integrated approaches aim to maximize the system capacity and scalability of large-scale networks by supporting distributed decision-making, and they have prompted investigations into various MADRL-based transmit power control strategies, including centralized, distributed, and decentralized learning methods [8,16]. Centralized methods leverage complete, real-time state information to achieve superior performance, but each agent must transmit its state information to a central server, which incurs significant signaling overhead and increases the complexity of the central server. In contrast, distributed and decentralized learning methods limit each agent's observation to a portion of the environment, thereby reducing the signaling overhead; however, this approach may result in slower convergence and lower model accuracy [17]. To overcome these challenges, power control studies have widely adopted the centralized training with decentralized execution (CTDE) framework, which combines the benefits of centralized training and decentralized execution to model multi-agent systems effectively [18,19]. The CTDE framework has also been applied to various wireless radio resource management (RRM) problems, such as controlling the BS's transmit power [20,21]. In recent wireless resource management research, long short-term memory (LSTM) has been actively studied as a means of handling time-varying wireless environments [22,23,24,25].
LSTM, a variant of recurrent neural networks (RNNs), can learn long-term dependencies in data more accurately than conventional DNNs or convolutional neural networks (CNNs), and its sequence-oriented structure efficiently models the relationship between past and future states. Recent studies have therefore combined LSTM with RL to exploit LSTM's strength in capturing long-term dependencies and predicting time-series data [24,25].

The contributions of this paper are as follows. First, we propose a novel LSTM-based multi-agent actor–critic (MAAC) network that controls the transmit power of each BS in multi-cell networks to maximize the sum rate. Second, the proposed scheme uses only local channel state information (CSI), which dramatically reduces the overhead of the signaling messages used to exchange information between agents. Third, the simulation results show that the proposed scheme outperforms conventional deep Q-network (DQN)-based and QMIX-based MADRL schemes. In particular, the DQN-based MADRL scheme requires signaling messages to exchange information between agents, which results in significant signaling overhead, whereas the proposed scheme does not require any information from other agents.

2. Related Works

Researchers have extensively studied transmit power control in cellular networks using a wide range of approaches, including model-based optimization techniques, data-driven methods, and RL. For instance, a weighted minimum mean square error (WMMSE)-based scheme transforms the sum rate maximization problem into a weighted mean square error minimization problem for each user or transmitter–receiver pair and iteratively solves the resulting convex subproblems to find a solution to the original non-convex problem [26]. Similarly, fractional programming (FP) addresses optimization problems whose objectives are expressed as ratios of functions by employing techniques such as the Dinkelbach method and the quadratic transform [27]. Although these model-based optimization techniques perform well, they suffer from high computational complexity, sensitivity to initial conditions, and poor scalability because they rely on the instantaneous global CSI of all receivers.

To overcome these problems of model-based optimization techniques, researchers have recently proposed data-driven methods for transmit power control in wireless networks. For example, the authors of [28] proposed training a DNN that allocates transmit power on reused channels to suppress interference. However, this approach depends on the quality of existing model-based optimization techniques and has limitations such as high offline computational costs and difficulty in adapting to dynamic environmental changes. The authors of [29] introduced a CNN-based distributed processing method for the transmit power control problem, aiming to reduce the signaling overhead caused by message exchanges and to support real-time processing. However, since CNNs were originally designed for image processing, this approach may be limited in terms of scalability and generalization in complex multi-cell network environments. The authors of [30] proposed a scalable graph neural network (GNN)-based architecture for transmit power control and beamforming optimization that satisfies permutation equivariance, provides high computational efficiency, and generalizes well to large-scale problems. However, these methods rely on training data that may not accurately represent real-world conditions, which makes tracking changes over time difficult and incurs high training data costs.

To address the limitations of model-based optimization techniques and data-driven methods, several studies have proposed RL-based approaches for RRM and interference management in various wireless networks [31,32,33]. The authors of [31] proposed a DRL-based distributed resource control scheme in which a DQN determines the resource blocks and transmit power of vehicle-to-vehicle (V2V) links in an environment where V2V and vehicle-to-infrastructure (V2I) networks share radio resources and interfere with each other; the scheme reduces power consumption and delay while maximizing the sum rate of the V2I and V2V links. The authors of [32] proposed an RL-based joint beamforming scheme for V2I systems that accounts for alignment overhead and optimizes antenna beamwidths and beam alignment spacing by leveraging past and future compensation, thereby achieving better average throughput and link stability than existing approaches. The authors of [33] proposed an RL-based joint power control and channel allocation scheme that utilizes statistical CSI to address severe interference caused by high-density access points in dense wireless local area network environments. Based on the correlation between transmit power and channel, the authors derived the optimal joint strategy through offline Q-learning.

A practical cellular network consists of multiple cells that interfere with one another. Hence, RL-based transmit power control should take inter-cell interference into consideration. Accordingly, research based on MADRL or the CTDE framework is actively being conducted to model the characteristics of multi-cell networks effectively and to improve performance [34]. The authors of [34] used a DQN parameter-sharing method based on a single meta-agent approach to control the transmit power of BSs in a multi-cell network. However, this method may incur additional signaling overhead because parameters are shared between agents even during the inference phase. The authors of [19] used a QMIX-based MADRL scheme to control the transmit power of BSs in multi-cell networks. This approach significantly reduces the signaling overhead because each BS performs transmit power control using only local CSI, eliminating the need to share CSI with neighboring BSs. The authors of [25] examined a dynamic multi-channel access scenario in which users connect to a single channel in each time slot and randomly join and leave the network. To improve throughput and fairness among active users, that study proposed a distributed multi-agent reinforcement learning (MARL) approach that deterministically selects channel access policies over multiple consecutive time slots and employs LSTM to continuously track changes in user states and traffic over time. Because it captures strong temporal dependencies, this approach supports effective policy learning and adaptation in wireless environments.

The remainder of this paper is organized as follows: Section 2 reviews the related work and its limitations. Section 3 describes the system model. Section 4 details the proposed scheme, including the states, actions, and rewards used in MADRL. Section 5 presents the simulation results, and Section 6 concludes this paper. For the sake of clarity, the main symbols and their descriptions used in this paper are summarized in Table 1.

Table 1.

Symbols and descriptions.

3. System Model

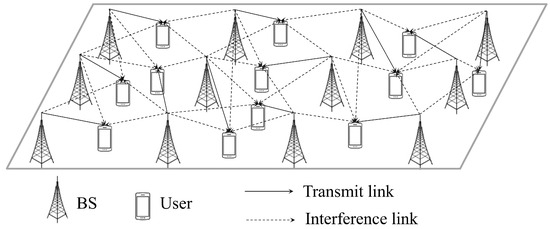

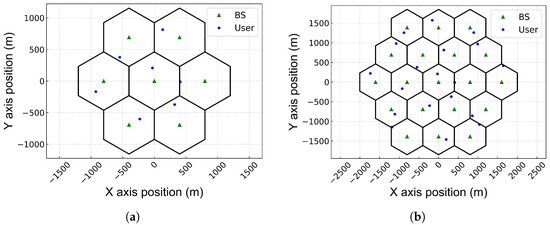

We consider a downlink communication scenario in a multi-cell network comprising multiple BSs and multiple users, as shown in Figure 1. This type of network scenario has been extensively used to model wireless ad hoc networks [35] and is equally applicable to modeling multi-cell wireless networks [34]. The set of BS indices is denoted by $\mathcal{K} = \{1, 2, \ldots, K\}$, where K is the total number of BSs in the multi-cell network. Each user is assumed to be served by a single BS; i.e., each user has one desired link and multiple interfering links, and the user served by BS k is referred to as user k. Each BS and each user has a single antenna. Each BS is located at the center of its cell, and users are randomly distributed within the boundaries of that cell.

Figure 1.

A system model.

The system operates as a fully synchronized time-slotted network with a fixed slot duration T. All cells use the same frequency band, which makes inter-cell interference a critical issue. We adopt a block-fading model, in which the wireless channel remains constant within each time slot and varies from one slot to the next. Specifically, the downlink channel gain from BS k to user j at time slot t is modeled as follows [34]:

$$g_{k,j}^{(t)} = \left| h_{k,j}^{(t)} \right|^{2} \beta_{k,j}^{(t)},$$

where $h_{k,j}^{(t)}$ represents the small-scale Rayleigh fading component, $\beta_{k,j}^{(t)}$ represents the large-scale fading component, which includes both path loss and log-normal shadowing, and $|\cdot|$ is the modulus operation. The small-scale fading is modeled using Jakes' fading model as a first-order complex Gauss–Markov process as follows [36]:

$$h_{k,j}^{(t)} = \rho\, h_{k,j}^{(t-1)} + \sqrt{1 - \rho^{2}}\, e_{k,j}^{(t)},$$

where $e_{k,j}^{(t)}$ are independent and identically distributed (i.i.d.) circularly symmetric complex Gaussian (CSCG) random variables with unit variance, and the correlation coefficient is given by $\rho = J_0(2\pi f_d T)$, with $J_0(\cdot)$ being the zeroth-order Bessel function of the first kind. T and $f_d$ are the slot duration and the maximum Doppler frequency, respectively. The large-scale fading component is modeled as follows:

$$\beta_{k,j}^{(t)} = 10^{\left( -\mathrm{PL}(d) + s_{k,j}^{(t)} \right)/10},$$

where d is the distance between BS k and user j, $\mathrm{PL}(d)$ is the distance-based path loss in dB derived from the 3GPP TR 25.942 standard [37], and the term $s_{k,j}^{(t)}$ (in dB) represents log-normal shadowing, which remains nearly constant over many time slots. The shadowing process is updated as follows:

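where denotes the log-normal shadowing standard deviation, is drawn from , and are i.i.d. Gaussian random variables with unit variance. The downlink transmit power vector of BSs at time slot t is denoted by

$$\mathbf{p}^{(t)} = \left[ p_1^{(t)}, p_2^{(t)}, \ldots, p_K^{(t)} \right]^{\mathsf{T}},$$

where $p_k^{(t)}$ is the transmit power of BS k at time slot t. The signal-to-interference-plus-noise ratio (SINR) received by user k in time slot t can then be expressed as follows:

$$\mathrm{SINR}_k^{(t)} = \frac{g_{k,k}^{(t)}\, p_k^{(t)}}{\sum_{j \in \mathcal{K},\, j \neq k} g_{j,k}^{(t)}\, p_j^{(t)} + \sigma^{2}},$$

where $\sigma^{2}$ is the additive white Gaussian noise (AWGN) power over the channel bandwidth. The downlink spectral efficiency of user k at time slot t is given by

$$C_k^{(t)} = \log_2\left( 1 + \mathrm{SINR}_k^{(t)} \right).$$

At each time slot t, the transmit power control problem is to maximize the sum rate:

$$\max_{\mathbf{p}^{(t)}} \; \sum_{k \in \mathcal{K}} C_k^{(t)} \quad \text{s.t.} \quad 0 \le p_k^{(t)} \le P_{\max}, \;\; \forall k \in \mathcal{K},$$

where $P_{\max}$ is the maximum transmit power of each BS. However, finding the optimal transmit power vector is known to be an NP-hard problem [38].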
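The channel and rate model above can be prototyped in a few lines of NumPy. This is a minimal sketch, not the simulator used in this paper, and all function names are hypothetical. Several values are assumptions:

- the 20 ms slot and 10 Hz Doppler frequency;
- the 8 dB shadowing deviation;
- the 1e-13 W noise power;
- the path loss constants 128.1 and 37.6, which are the TR 25.942 macro-cell form commonly quoted for a 2 GHz carrier.

The shadowing is drawn once and held fixed rather than following the paper's update rule.

```python
import math
import numpy as np

def bessel_j0(x, terms=30):
    """Zeroth-order Bessel function of the first kind, via its power series."""
    return sum((-1) ** m * (x / 2.0) ** (2 * m) / math.factorial(m) ** 2
               for m in range(terms))

def path_loss_db(d_km):
    """ASSUMED path loss constants (TR 25.942 macro-cell form at 2 GHz); d in km."""
    return 128.1 + 37.6 * np.log10(d_km)

def large_scale_gain(d_km, shadow_db):
    """Linear large-scale gain from path loss and log-normal shadowing (both in dB)."""
    return 10.0 ** ((-path_loss_db(d_km) + shadow_db) / 10.0)

def gauss_markov_step(h_prev, rho, rng):
    """Jakes-style first-order Gauss-Markov update of the small-scale fading."""
    shape = np.shape(h_prev)
    e = (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2.0)
    return rho * h_prev + np.sqrt(1.0 - rho ** 2) * e

def sinr_and_sum_se(G, p, noise):
    """G[k, j]: gain from BS k to user j (user k is served by BS k).
    Returns per-user SINR and the sum spectral efficiency in bit/s/Hz."""
    rx = G * p[:, None]                  # rx[k, j]: power from BS k received at user j
    signal = np.diag(rx)
    interference = rx.sum(axis=0) - signal
    sinr = signal / (interference + noise)
    return sinr, float(np.sum(np.log2(1.0 + sinr)))

# Example: one slot for K = 3 links with hypothetical distances (km).
rng = np.random.default_rng(1)
K = 3
T, f_d = 0.02, 10.0                      # ASSUMED slot duration (s) and Doppler (Hz)
rho = bessel_j0(2.0 * math.pi * f_d * T)
d = rng.uniform(0.1, 1.0, size=(K, K))
beta = large_scale_gain(d, rng.normal(0.0, 8.0, size=(K, K)))   # ASSUMED 8 dB shadowing
h = (rng.standard_normal((K, K)) + 1j * rng.standard_normal((K, K))) / np.sqrt(2.0)
h = gauss_markov_step(h, rho, rng)
G = np.abs(h) ** 2 * beta
sinr, sum_se = sinr_and_sum_se(G, np.full(K, 1.0), noise=1e-13)  # 1 W = 30 dBm per BS
```

Here `rho` close to 1 means slowly varying fading. With `rho = 0` the update would draw an independent Rayleigh channel in every slot.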

4. Proposed LSTM-Based MAAC Network for Transmit Power Control

4.1. Typical MADRL Network

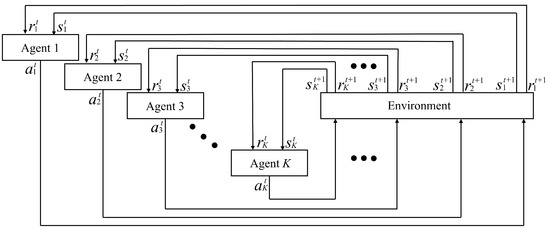

Conventional RL uses a single agent to maximize long-term rewards based on observations of the environment; therefore, it has difficulty optimizing the individual rewards of multiple devices with different characteristics. In contrast, MARL aims to enhance overall system performance by taking each device's unique properties into consideration. Specifically, MADRL extends single-agent RL with DNNs that enable cooperation or competition among agents in complex environments. Figure 2 shows a typical structure of the MARL framework [39]. Each agent (agent 1, agent 2, …, agent K) observes its own state $s_k^{(t)}$, selects an action $a_k^{(t)}$ based on its policy, and then receives a corresponding reward $r_k^{(t)}$ from the environment. The collective actions of all agents affect the environment, which updates the states and rewards for the next time step, $t+1$.

Figure 2.

A typical MARL structure.

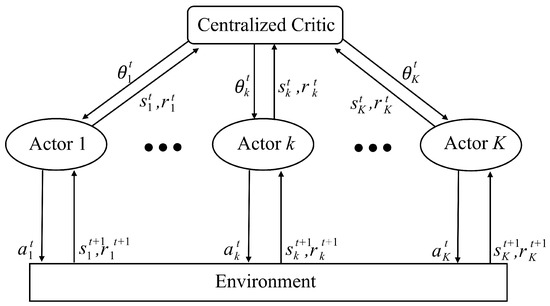

Multi-agent systems (MASs) are distributed systems where autonomous agents interact to achieve a common goal, each making decisions based solely on its local observations [40]. However, individual agents often cannot fully capture the global state, making traditional Markov decision process (MDP) assumptions hard to satisfy and necessitating more complex models like partially observable Markov decision processes (POMDPs) or Markov games [41]. Moreover, the joint action space expands exponentially with the number of agents, complicating the evaluation of each agent’s impact on overall performance. To address these challenges, the CTDE framework is widely adopted [17,18,19]. In CTDE, a centralized critic evaluates the joint state–action value using local information from all agents during training, and during execution, each agent makes real-time decisions based solely on its local observations. This approach combines the benefits of robust, centralized learning with the efficiency of decentralized execution. Figure 3 illustrates a CTDE structure, where each actor (actor 1, actor 2, …, actor K) independently interacts with the environment by selecting actions based on its local state and receiving rewards.

Figure 3.

A typical CTDE-based MARL structure.

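The decentralized POMDP can be expressed as a tuple $\langle \mathcal{K}, \mathcal{S}, \mathcal{A}, P, R, \mathcal{O}, \gamma \rangle$ [41]. Here, $\mathcal{K}$ is the set of agent indices, which correspond to the distributed BSs in this paper; $\mathcal{S}$ is the global state space, which contains the local observations of all agents; and $\mathcal{A}$ is the global action space such that $\mathcal{A} = \mathcal{A}_1 \times \mathcal{A}_2 \times \cdots \times \mathcal{A}_K$, where × is the Cartesian product operator. $P(\mathbf{s}' \mid \mathbf{s}, \mathbf{a})$ is the probability of transitioning into state $\mathbf{s}'$ by taking action $\mathbf{a}$ in state $\mathbf{s}$. At time t, $\mathbf{s}^{(t)} \in \mathcal{S}$ and $\mathbf{a}^{(t)} \in \mathcal{A}$ denote the global state and action, respectively. $R(\mathbf{s}, \mathbf{a})$ is the reward function for being in state $\mathbf{s}$ and taking action $\mathbf{a}$, and all agents may share the same reward function. $o_k \in \mathcal{O}_k$ denotes the local observation of agent k, and the joint observation space is given by $\mathcal{O} = \mathcal{O}_1 \times \mathcal{O}_2 \times \cdots \times \mathcal{O}_K$. $\gamma \in [0, 1)$ is the discount factor, which determines the present value of future rewards.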

4.2. Proposed MAAC Network

In the MAS described in this paper, BS k is identical to agent k for all $k \in \mathcal{K}$. That is, each BS is treated as an independent agent within the MADRL framework, and the two terms are used interchangeably. Modeling the decision-making and interactions of the BSs as the behavior of agents allows MADRL to be applied directly to the transmit power control problem. We define the local state of agent k in time slot t as follows:

$$s_k^{(t)} = \left[ g_{k,k}^{(t)},\; I_k^{(t)} \right],$$

where $g_{k,k}^{(t)}$ represents the instantaneous channel gain between BS k and its associated user at time slot t, and $I_k^{(t)}$ denotes the interference-plus-noise power observed by the user served by BS k. The interference is estimated from the transmit powers that the other BSs used in the previous time slot and is combined with the noise power. $I_k^{(t)}$ can then be expressed as follows:

$$I_k^{(t)} = \sum_{j \in \mathcal{K},\, j \neq k} g_{j,k}^{(t)}\, p_j^{(t-1)} + \sigma^{2}.$$

At the beginning of time slot t, each user calculates the instantaneous interference by combining the transmit powers of the previous time slot t−1 with the channel gains measured in the current time slot t [19]. After adding the noise power to this interference, each user obtains the interference-plus-noise power, which is used as an input to the RL agent.
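As a minimal sketch, each agent's two-element local state could be assembled as follows. The function name is hypothetical. The sketch assumes the gain-matrix convention G[k, j] (gain from BS k to user j) and that user k evaluates interference from the other BSs' previous-slot powers, as described above.

```python
import numpy as np

def local_states(G, p_prev, noise):
    """Return a (K, 2) array whose row k is s_k = [g_kk, I_k].

    g_kk : current desired-link gain from BS k to its own user
    I_k  : interference from the other BSs' previous-slot powers, plus noise
    Each row uses only quantities observed at user k, so no information
    has to be exchanged between agents.
    """
    rx_prev = G * p_prev[:, None]        # rx_prev[j, k]: power from BS j at user k
    desired = np.diag(G)
    interference = rx_prev.sum(axis=0) - np.diag(rx_prev)
    return np.stack([desired, interference + noise], axis=1)

G = np.array([[1.0, 0.1], [0.2, 1.0]])
states = local_states(G, p_prev=np.array([1.0, 1.0]), noise=0.1)
```

Row k depends on the previous powers of the other BSs only, never on BS k's own previous power. This matches the definition of the interference term.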

In MADRL, reducing the number of state features decreases the dimensionality of the neural network's input layer. This simplifies learning, suppresses unnecessary noise during training, improves learning stability and generalization, and ultimately helps the agent find the optimal policy more effectively. The global state composed of the local states can be expressed as follows:

$$\mathbf{s}^{(t)} = \left[ s_1^{(t)}, s_2^{(t)}, \ldots, s_K^{(t)} \right].$$
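Agent k estimates or computes $s_k^{(t)}$ based on its local observation $o_k^{(t)}$. Specifically, agent k uses the pilot signal or interference level contained in $o_k^{(t)}$ to determine or update $s_k^{(t)}$. We define the action of each agent as its transmit power. Given the local state $s_k^{(t)}$, agent k selects an action $a_k^{(t)} = p_k^{(t)} \in [0, P_{\max}]$. The current policy $\pi_k(a_k^{(t)} \mid s_k^{(t)})$ represents the probability of action $a_k^{(t)}$ being selected under local state $s_k^{(t)}$. We define the global action as follows:

$$\mathbf{a}^{(t)} = \left[ a_1^{(t)}, a_2^{(t)}, \ldots, a_K^{(t)} \right].$$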
After all agents simultaneously select their actions, the environment provides a reward $r^{(t)}$ according to the reward function $R(\mathbf{s}^{(t)}, \mathbf{a}^{(t)})$. We define the reward function as follows:

$$r^{(t)} = \omega \sum_{k \in \mathcal{K}} C_k^{(t)},$$

where $\omega$ is a weighting factor. In MADRL training, an excessively large reward scale can prevent convergence, so the weighting factor is used to rescale the reward. The reward is thus the weighted sum of the spectral efficiencies of all users. Because every agent is rewarded for the network-wide sum rather than for its own rate, this reward structure promotes cooperative behavior among agents, accelerates and stabilizes the learning process, and ultimately enables more efficient transmit power control across the entire network.
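A minimal sketch of this shared reward follows. It assumes the SINR definition of the system model and a hypothetical default of ω = 0.1, and the function name is hypothetical. Every agent receives the same scalar.

```python
import numpy as np

def team_reward(G, p, noise, omega=0.1):
    """r = omega * sum_k log2(1 + SINR_k); the same value is given to every agent.
    G[k, j] is the gain from BS k to user j; omega is a hypothetical scale."""
    rx = G * p[:, None]
    signal = np.diag(rx)
    sinr = signal / (rx.sum(axis=0) - signal + noise)
    return omega * float(np.sum(np.log2(1.0 + sinr)))

# The ranking of candidate power vectors does not depend on omega > 0.
G = np.array([[1.0, 0.3], [0.3, 1.0]])
candidates = [np.array([1.0, 1.0]), np.array([1.0, 0.1]), np.array([0.1, 1.0])]
best_small = max(range(3), key=lambda i: team_reward(G, candidates[i], 0.1, omega=0.1))
best_large = max(range(3), key=lambda i: team_reward(G, candidates[i], 0.1, omega=1.0))
```

Because ω only rescales the reward, it changes the magnitude of the critic's targets and gradients, which is what matters for convergence. It does not change which power vector maximizes the reward.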

The proposed MAS is based on the deep deterministic policy gradient (DDPG) approach, a model-free deterministic actor–critic method designed for continuous action spaces. In this framework, the actor directly outputs an action value, and the critic evaluates the state–action pair. Target networks are updated via soft updates to stabilize training, and experience replay is used to reduce data correlations and ensure more stable learning. The critic network takes the states and actions of all agents as its input and computes the Q-value $Q(\mathbf{s}, \mathbf{a}; \theta^{Q})$, where $\theta^{Q}$ represents the parameters of the critic network. Each agent's target actor network $\mu_k'$ generates the action $a_k'^{(t+1)} = \mu_k'(s_k^{(t+1)}; \theta^{\mu_k'})$ for the next state $s_k^{(t+1)}$, and the target critic network computes the target Q-value as follows:

$$y^{(t)} = \mathbf{r}^{(t)} + \gamma\, Q'\left( \mathbf{s}^{(t+1)}, \mathbf{a}'^{(t+1)}; \theta^{Q'} \right),$$
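where $\mathbf{r}^{(t)}$ is the reward vector, which may include individual rewards for each agent, $\gamma$ is the discount factor, $\mathbf{a}'^{(t+1)}$ collects the target actions of all agents, and $\theta^{Q'}$ denotes the parameters of the target critic network. The critic network is updated to minimize the mean squared error (MSE) between the current Q-value and the target Q-value as follows:

$$\mathcal{L}(\theta^{Q}) = \mathbb{E}_{B}\left[ \left( y^{(t)} - Q\left( \mathbf{s}^{(t)}, \mathbf{a}^{(t)}; \theta^{Q} \right) \right)^{2} \right],$$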
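where B represents the distribution of experiences sampled from the replay buffer. Each agent k's actor network $\mu_k(\cdot\,; \theta^{\mu_k})$ selects an action $a_k = \mu_k(s_k; \theta^{\mu_k})$ based on its local observation $s_k$. Each agent updates its policy to maximize the Q-value evaluated by the critic network as follows:

$$\max_{\theta^{\mu_k}} \; \mathbb{E}_{B}\left[ Q\left( \mathbf{s}, a_1, \ldots, \mu_k(s_k; \theta^{\mu_k}), \ldots, a_K; \theta^{Q} \right) \right].$$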
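The joint action composed of all agents' actor outputs is thus updated to maximize the critic's Q-value. For agent k, whose expected return is denoted by $J(\theta^{\mu_k})$, the deterministic policy gradient theorem states that the gradient of its expected cumulative reward, $\nabla_{\theta^{\mu_k}} J(\theta^{\mu_k})$, is as follows:

$$\nabla_{\theta^{\mu_k}} J(\theta^{\mu_k}) = \mathbb{E}_{B}\left[ \nabla_{\theta^{\mu_k}} \mu_k(s_k; \theta^{\mu_k})\, \nabla_{a_k} Q\left( \mathbf{s}, \mathbf{a}; \theta^{Q} \right) \Big|_{a_k = \mu_k(s_k; \theta^{\mu_k})} \right].$$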
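When each agent k updates its policy $\mu_k$, it first calculates how its action affects the Q-function using $\nabla_{a_k} Q(\mathbf{s}, \mathbf{a}; \theta^{Q})$. Since the actor network determines $a_k = \mu_k(s_k; \theta^{\mu_k})$, we then apply the chain rule to combine $\nabla_{a_k} Q$ with $\nabla_{\theta^{\mu_k}} \mu_k$ and obtain the gradient with respect to $\theta^{\mu_k}$.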
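The critic target, the MSE loss, and the chain-rule form of the deterministic policy gradient can be checked numerically on a toy problem. In this sketch the critic and actor are hypothetical one-dimensional functions chosen so that the gradient has a closed form:

- the critic Q(s, a) = −(a − c·s)², which is maximized at a = c·s;
- a linear actor μ(s; θ) = θ·s.

The chain-rule gradient is compared with a finite-difference estimate, and gradient ascent then drives θ toward c. The discount factor, step size, and data are assumptions.

```python
import numpy as np

def td_target(rewards, next_q, gamma=0.99):
    """Critic target y = r + gamma * Q'(s', a') for a mini-batch."""
    return np.asarray(rewards) + gamma * np.asarray(next_q)

def critic_mse(q_values, targets):
    """MSE between the current Q(s, a) and the fixed targets y."""
    return float(np.mean((np.asarray(targets) - np.asarray(q_values)) ** 2))

C = 0.5  # hypothetical: the toy critic is maximized at a = C * s

def q_toy(s, a):
    """Toy critic Q(s, a) = -(a - C * s)^2."""
    return -(a - C * s) ** 2

def dq_da(s, a):
    """Analytic dQ/da of the toy critic."""
    return -2.0 * (a - C * s)

def dpg_gradient(theta, states):
    """Chain rule: batch mean of d mu/d theta * dQ/da at a = mu(s).
    For the linear actor mu(s; theta) = theta * s, d mu/d theta = s."""
    actions = theta * states
    return float(np.mean(states * dq_da(states, actions)))

def finite_difference_gradient(theta, states, eps=1e-6):
    """Central difference of the batch-mean Q(s, mu(s; theta)) w.r.t. theta."""
    objective = lambda th: np.mean(q_toy(states, th * states))
    return float((objective(theta + eps) - objective(theta - eps)) / (2.0 * eps))

rng = np.random.default_rng(0)
states = rng.uniform(0.1, 1.0, size=32)   # hypothetical mini-batch of scalar states

theta = 0.0
for _ in range(200):                      # gradient ascent on the actor parameter
    theta += 0.5 * dpg_gradient(theta, states)
```

In the full scheme, the same chain rule is applied by automatic differentiation through the actor network. The critic's gradient with respect to agent k's action is taken while the other agents' actions are held fixed.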

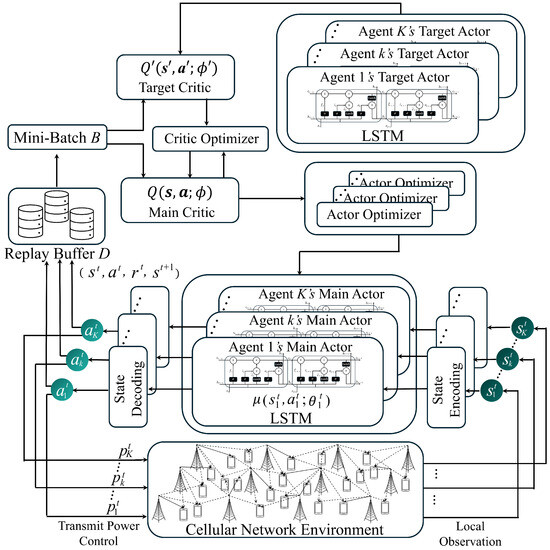

Figure 4 shows the proposed MADRL network for determining the transmit power of BSs in the multi-cell network. The main components of the actor network are an encoder, a forward LSTM, and a decoder. First, the encoder maps the local state to a hidden representation using a fully connected layer followed by a ReLU activation. Next, the LSTM processes these encoded features to capture temporal dependencies. Finally, the decoder refines the LSTM output using batch normalization and LeakyReLU and then passes it through a final fully connected layer with sigmoid activation to produce a transmit power value bounded by $[0, P_{\max}]$. The critic network has a similar structure, consisting of an encoder, an LSTM, and a decoder that use the same activation functions. However, the critic takes the concatenated states and actions of all agents as its input and outputs the Q-value for each agent's state–action pair.

Figure 4.

A proposed LSTM-based MAAC network.

The proposed network includes an LSTM to continuously track state changes over time and preserve historical observation data. DRL combined with LSTM has been shown to achieve faster convergence and better performance than the traditional DQN algorithm [24]. Moreover, LSTM addresses the weakness of conventional neural networks in retaining long-term information and offers excellent prediction performance in time-varying wireless environments. The LSTM gates help suppress the influence of unnecessary past information during training. The final output is a transmit power value in the range $[0, P_{\max}]$, and the information used to produce it is retained in the LSTM cell state for subsequent time slots. This mechanism allows the model to autonomously summarize and utilize important state information without taking the transmit power of the previous time slot as a separate input.
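The following NumPy sketch makes the actor data flow of Figure 4 concrete. It runs one forward step through the encoder, LSTM, and decoder stages. The LSTM hidden and cell states are kept between calls, so each slot's output depends on earlier slots.

This is a hypothetical illustration, not the trained network. The class and method names are hypothetical, weights are random, and batch normalization is omitted. The sizes (two state features, 16 hidden units) and p_max = 1 W are assumptions. Raw channel gains span many orders of magnitude, so some input scaling (for example, a dB scale) would likely be needed in practice; that step is not shown.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class LstmActorSketch:
    """Hypothetical actor: FC + ReLU encoder -> LSTM cell -> LeakyReLU + FC + sigmoid.
    Batch normalization is omitted; weights are random, not trained."""

    def __init__(self, state_dim=2, hidden=16, p_max=1.0, seed=0):
        rng = np.random.default_rng(seed)
        self.p_max = p_max
        self.hidden = hidden
        self.w_enc = rng.normal(0.0, 0.1, (hidden, state_dim))
        self.b_enc = np.zeros(hidden)
        # Stacked weights for the input, forget, candidate, and output gates.
        self.w_x = rng.normal(0.0, 0.1, (4 * hidden, hidden))
        self.w_h = rng.normal(0.0, 0.1, (4 * hidden, hidden))
        self.b = np.zeros(4 * hidden)
        self.w_dec = rng.normal(0.0, 0.1, (1, hidden))
        self.b_dec = np.zeros(1)
        self.reset()

    def reset(self):
        """Clear the recurrent memory (e.g., at the start of an episode)."""
        self.h = np.zeros(self.hidden)
        self.c = np.zeros(self.hidden)

    def act(self, local_state):
        """Return a transmit power in [0, p_max] and update the LSTM memory."""
        x = np.maximum(0.0, self.w_enc @ local_state + self.b_enc)   # encoder
        z = self.w_x @ x + self.w_h @ self.h + self.b
        i, f, g, o = np.split(z, 4)
        i, f, g, o = sigmoid(i), sigmoid(f), np.tanh(g), sigmoid(o)
        self.c = f * self.c + i * g      # cell state carries information across slots
        self.h = o * np.tanh(self.c)
        y = np.where(self.h > 0.0, self.h, 0.01 * self.h)            # LeakyReLU
        return float(self.p_max * sigmoid(self.w_dec @ y + self.b_dec)[0])

actor = LstmActorSketch(p_max=1.0)
powers = [actor.act(np.array([0.8, 0.2])) for _ in range(3)]
```

Calling `act` repeatedly with the same local state can return different powers, because the LSTM memory changes between slots. Calling `reset` restores the initial behavior.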

In the proposed LSTM-based MAAC network, the target actor and critic parameters are softly updated as follows:

$$\theta^{\mu_k'} \leftarrow \tau\, \theta^{\mu_k} + (1 - \tau)\, \theta^{\mu_k'}, \qquad \theta^{Q'} \leftarrow \tau\, \theta^{Q} + (1 - \tau)\, \theta^{Q'},$$

where $\tau$ is the soft update parameter; $\theta^{\mu_k}$ and $\theta^{Q}$ represent the current parameters of the actor and critic networks, respectively; and $\theta^{\mu_k'}$ and $\theta^{Q'}$ are the parameters of the corresponding target networks. This strategy gradually blends the new parameters into the target networks, ensuring consistent representation and more stable training. The critic optimizer updates the parameters of the critic network by minimizing the MSE between the current and target Q-values; that is, it performs gradient descent on the critic loss so that the critic network can accurately estimate the value of state–action pairs. The actor optimizer, in contrast, updates the parameters of each agent's actor network by minimizing the negative of the Q-value estimated by the critic network. This effectively maximizes the estimated Q-value of the actions chosen by the actor, guiding each agent toward actions that are expected to yield higher rewards. Hence, while the critic optimizer focuses on learning accurate value estimates, the actor optimizer improves the policy by steering action selection toward actions that maximize future rewards.
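The soft update can be written as a short helper over parameter arrays. In this minimal sketch, parameters are stored in plain dictionaries of NumPy arrays. This is an assumption: a deep learning framework would iterate over its own parameter tensors. The value τ = 0.01 and the function name are hypothetical.

```python
import numpy as np

def soft_update(target, online, tau):
    """Polyak averaging: target <- tau * online + (1 - tau) * target.
    Replaces each entry of the target dict with the blended array."""
    for name, w in online.items():
        target[name] = tau * w + (1.0 - tau) * target[name]
    return target

# After n updates toward a fixed online value, the target has moved by 1 - (1 - tau)^n.
online = {"w": np.ones(3)}
target = {"w": np.zeros(3)}
for _ in range(100):
    soft_update(target, online, tau=0.01)
```

With τ = 1 this reduces to a hard copy. A small τ makes the target networks lag the online networks, which keeps the critic's targets from shifting abruptly between updates.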

The total number of parameters of the actor network is calculated by summing the contributions of its components. Let I denote the input dimension, H the hidden dimension, and A the output dimension. The encoder projects the input dimension I into the hidden dimension H using a fully connected layer with biases. The LSTM has four gates: input, forget, output, and candidate cell. Each gate requires parameters for both the input dimension I and the hidden dimension H, so the four gates together contribute four such parameter sets. The decoder then maps the hidden dimension H to the output dimension A. The actor network parameter count can then be expressed as follows:

Similarly, for a critic network whose input is the concatenation of the states and actions of all K agents, with dimension $K(I + A)$, the total number of parameters can be expressed as follows:

The numbers of parameters of the actor and critic networks in (20) and (21) quantify the model complexity and the computational requirements for training the networks. The procedure of the proposed scheme is summarized in Algorithm 1. Training begins by initializing the parameters of the actor and centralized critic networks, along with their corresponding target networks (lines 1–2). An empty replay buffer D is created to store state, action, and reward tuples (line 3). The main training loop proceeds over a specified number of episodes and time steps (lines 4–6). At each time step t, each agent observes its local state $s_k^{(t)}$ and selects an action $a_k^{(t)}$ based on the policy of its actor network (lines 7–11). These actions are then executed jointly in the environment, which returns the next states and the corresponding rewards for all agents (line 12). The resulting experience tuples are stored in the replay buffer (line 13). A mini-batch is randomly sampled from D (lines 15–16) and used to update the critic network by computing the target Q-values and minimizing the loss function (lines 18–22). The actor network of each agent is then updated by maximizing the critic's Q-value output with respect to its local action. Finally, the target networks are softly updated toward the parameters of the main (online) networks using the soft update parameter $\tau$ (lines 23–34), which ensures training stability by gradually reflecting the newly learned parameters in the target networks.
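The parameter-counting rule described above can be checked with a short function. This sketch follows the prose literally: one fully connected encoder layer, an LSTM whose four gates each have input weights, recurrent weights, and one bias vector, and a single fully connected output layer. Batch-normalization parameters are not counted.

Two assumptions should be flagged. First, the prose sizes the LSTM input as I, but an LSTM that consumes the encoder output would have input size H; set `lstm_in=H` for that case. Second, PyTorch's `nn.LSTM` keeps two bias vectors (`bias_ih` and `bias_hh`), which adds 4H parameters. The function name and layer sizes are hypothetical, and the result is not guaranteed to match (20) and (21) exactly.

```python
def param_count(in_dim, hidden, out_dim, lstm_in=None):
    """Parameter count of an encoder -> LSTM -> decoder network.

    in_dim  : input dimension (I for the actor, K * (I + A) for the critic)
    hidden  : hidden dimension H
    out_dim : output dimension
    lstm_in : LSTM input size; defaults to in_dim, following the text
    """
    lstm_in = in_dim if lstm_in is None else lstm_in
    encoder = in_dim * hidden + hidden                          # weights + biases
    lstm = 4 * (lstm_in * hidden + hidden * hidden + hidden)    # 4 gates, one bias each
    decoder = hidden * out_dim + out_dim
    return encoder + lstm + decoder

# Example with hypothetical sizes: K = 7 agents, I = 2, A = 1, H = 64.
K, I, A, H = 7, 2, 1, 64
actor_params = param_count(I, H, A)
critic_params = param_count(K * (I + A), H, 1)   # ASSUMES a scalar Q-value output
```

Because the critic's input grows with K(I + A), its encoder and LSTM input weights grow linearly with the number of agents. The actor's size does not depend on K.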

Algorithm 1.

The training of the proposed network with K agents.

5. Simulation Results

5.1. Simulation Environment

We evaluate the performance of the proposed power control scheme based on the LSTM-based MAAC network in hexagonal 7-cell and 19-cell networks, where each BS serves one user, i.e., there is one user per cell. We assume a hexagonal network topology because the hexagonal grid has been widely adopted in various fields owing to its tiling efficiency. Moreover, we employ 7-cell and 19-cell topologies because most conventional power control studies have considered these configurations [19,34]. The proposed MADRL network could be extended to other configurations, such as a triangular 6-cell layout or other geometries. However, the hexagonal layout is generally preferred over a triangular layout in cellular networks because of its uniform coverage, equal distances between neighboring cells, efficient frequency reuse, geometric properties, and simplicity in network planning. As the network grows, the number of agents increases, which makes DQN-based methods that require signaling between agents less attractive; consequently, methods without signaling exchanges between agents, such as QMIX or the proposed scheme, become more advantageous. Larger topologies also increase the simulation time. Each BS is positioned at the center of its cell, and the distance between neighboring BSs is 800 m. Users are randomly distributed within each cell but are located at least 100 m away from the BS. We assume that the association between each BS and its user remains fixed throughout the simulation. Figure 5 illustrates the layouts of the 7-cell and 19-cell networks.

Figure 5.

Multi-cell network layout. (a) A 7-cell network; (b) a 19-cell network.
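The layouts in Figure 5 can be generated programmatically. The sketch below places BSs on a hexagonal grid with an 800 m inter-site distance: one ring of neighbors gives 7 cells, and two rings give 19. It then drops one user uniformly in each hexagonal cell, at least 100 m from the BS. The grid orientation, the rejection-sampling approach, and all function names are assumptions made for illustration.

```python
import numpy as np

def hex_bs_positions(rings=1, isd=800.0):
    """BS coordinates (m) of a hexagonal layout: rings=1 -> 7 cells, rings=2 -> 19 cells."""
    pts = []
    for q in range(-rings, rings + 1):
        for r in range(-rings, rings + 1):
            if abs(q + r) <= rings:                     # axial hex coordinates
                pts.append((isd * (q + r / 2.0), isd * r * np.sqrt(3) / 2.0))
    return np.array(pts)

def in_hex_cell(offset, isd=800.0):
    """True if a point, given relative to its BS, lies in that BS's hexagonal cell."""
    angles = np.deg2rad([0.0, 60.0, 120.0])            # directions to neighboring BSs
    normals = np.stack([np.cos(angles), np.sin(angles)], axis=1)
    return bool(np.all(np.abs(normals @ offset) <= isd / 2.0))

def drop_user(bs_xy, rng, isd=800.0, min_dist=100.0):
    """Place one user uniformly in the cell, at least min_dist from the BS."""
    radius = isd / np.sqrt(3)                          # hexagon circumradius
    while True:                                        # rejection sampling
        rho = radius * np.sqrt(rng.uniform())          # uniform over the enclosing disk
        phi = rng.uniform(0.0, 2.0 * np.pi)
        offset = np.array([rho * np.cos(phi), rho * np.sin(phi)])
        if rho >= min_dist and in_hex_cell(offset, isd):
            return bs_xy + offset

rng = np.random.default_rng(0)
bs = hex_bs_positions(rings=1)                         # 7-cell layout
users = np.array([drop_user(xy, rng) for xy in bs])
```

Because each user lies inside its own hexagon, its serving BS is also its nearest BS. This is consistent with the one-user-per-cell assumption.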

The maximum transmit power of each BS is 30 dBm, and the AWGN power is set to dBm over a 10 MHz channel. The path loss between a BS and a user is modeled according to 3GPP TR 25.942, i.e., the path loss is given by (in dB), where d is the BS-to-user distance in km. The simulation parameters used in this paper are summarized in Table 2.

Table 2.

Simulation parameters.

We set the replay buffer size and the mini-batch size to 1000 and 32, respectively. To make each episode an independent learning process, we reset the agents' channel states at the end of each episode. The parameters for training the proposed network are listed in Table 3.
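The replay settings above (capacity 1000, mini-batch size 32) can be realized with a first-in-first-out buffer and uniform sampling. The following is a minimal sketch under that assumption; the class and method names are hypothetical. The buffer stores the joint transition used by the centralized critic.

```python
import random
from collections import deque

class ReplayBuffer:
    """FIFO experience replay: the oldest transitions are discarded once full."""

    def __init__(self, capacity=1000, seed=None):
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state):
        """Store one joint transition (global state, joint action, reward, next state)."""
        self.buffer.append((state, action, reward, next_state))

    def sample(self, batch_size=32):
        """Uniformly sample a mini-batch without replacement."""
        batch = self.rng.sample(list(self.buffer), batch_size)
        states, actions, rewards, next_states = zip(*batch)
        return states, actions, rewards, next_states

    def __len__(self):
        return len(self.buffer)
```

Sampling uniformly from the last 1000 transitions breaks the strong correlation between consecutive time slots. This is the purpose of experience replay noted in Section 4.2.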

Table 3.

Proposed scheme parameters.

We compared the performance of the proposed scheme with that of five schemes: FP, DQN-based MADRL, QMIX-based MADRL, maximum power, and random power schemes. First, the FP scheme is centralized and requires instantaneous global CSI from all receivers [27]. Because obtaining instantaneous CSI from all receivers is difficult in practice, the FP scheme serves as an idealized benchmark rather than a practical technique. For the FP scheme, we ran up to 100 iterations to determine the transmit power in each time slot. Second, the DQN-based MADRL scheme uses a single meta-agent approach to share the DQN parameters (weights and biases) among agents [34]. Because all agents follow the same policy, this approach can produce consistent behavior across the entire system, but it may entail additional signaling overhead and computational complexity because the DQN parameters are shared among the agents even during the inference phase. Third, the QMIX-based MADRL scheme estimates the global Q-value by combining the local Q-values of the individual agents [19]. Additionally, we consider a maximum power scheme, in which all BSs transmit at the maximum power, and a random power scheme, in which each BS randomly selects its transmit power.

5.2. Signaling Overhead Due to Information Exchanges Between Agents

In a multi-cell network, it is highly impractical for an agent to obtain instantaneous global CSI of the desired and interfering links. Moreover, exchanging information between agents to obtain the CSI of neighboring agents incurs signaling overhead and limits scalability as the network grows. The DQN-based MADRL scheme of [34] collects both global and local CSI from neighboring agents, nearby BSs, and BSs connected via desired links, while accounting for time delays. Hence, the DQN-based MADRL scheme incurs significant signaling overhead. In contrast, the QMIX-based MADRL scheme collects only local CSI and uses three defined states per agent [19]. Similarly, the proposed scheme collects only local CSI, but it uses only two defined states per agent, the smallest amount of CSI among the compared MADRL schemes.

The signaling overhead in each time slot depends on two factors: the number of signaling messages exchanged between agents and the number of parameters per signaling message. The DQN-based scheme of [34] requires information from other agents, which causes signaling message exchanges. In contrast, the QMIX-based and proposed schemes use only the local information of each agent, so no signaling messages are exchanged. In the DQN-based scheme of [34], the defined state of each agent includes 57 parameters, 50 of which are received from neighboring agents [19]. Consequently, the signaling overhead of the DQN-based scheme is approximately $50b$ bits per agent per time slot, where $b$ is the number of bits per parameter. If 32-bit single precision is used, $b$ equals 32 bits [42].
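As a back-of-the-envelope check, the per-slot overhead can be computed as below. This assumes that each of the 50 parameters received from neighboring agents is sent as one 32-bit single-precision value and that each BS hosts one agent. Headers, quantization, and retransmissions are ignored (our assumption), so the result is only a rough estimate, not a measured value.

```python
BITS_PER_PARAM = 32          # IEEE 754 single precision
PARAMS_FROM_NEIGHBORS = 50   # state parameters received from other agents (DQN-based scheme)

def dqn_signaling_overhead_bits(num_agents, params=PARAMS_FROM_NEIGHBORS,
                                bits_per_param=BITS_PER_PARAM):
    """Return (bits per agent, bits over all agents) for one time slot."""
    per_agent = params * bits_per_param
    return per_agent, per_agent * num_agents

for cells in (7, 19):
    per_agent, total = dqn_signaling_overhead_bits(cells)
    print(f"{cells}-cell network: {per_agent} bits/agent, {total} bits/slot in total")
```

Under this accounting, the DQN-based scheme exchanges 1600 bits per agent per slot, or 11,200 and 30,400 bits per slot in the 7-cell and 19-cell networks, respectively. The local-CSI schemes exchange none.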

5.3. Average Data Rate Performance

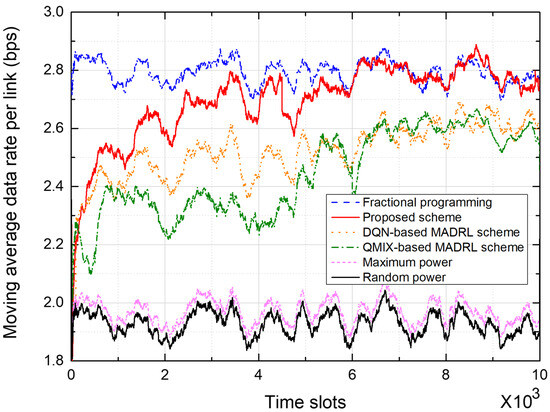

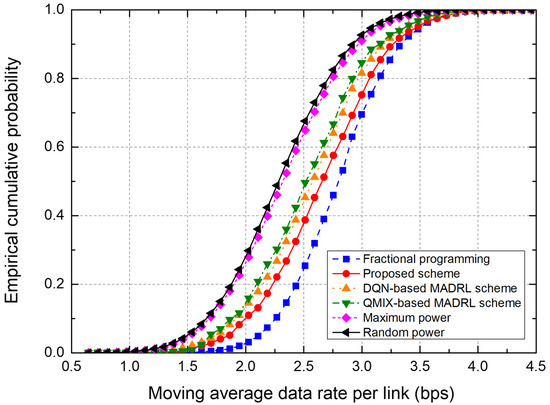

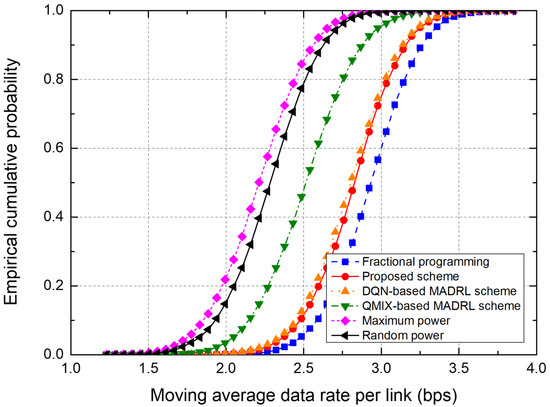

To evaluate the performance of the power control schemes, we use two metrics: the moving average data rate per link and its empirical cumulative distribution function (CDF). The moving average data rate, i.e., the data rate averaged over 300 time slots, is a major performance metric because it indicates the cell capacity in terms of data rate. Therefore, we plot the moving average data rate over time and the empirical distribution of the moving average data rate over the entire simulation duration.
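Both metrics can be computed from a per-slot data-rate trace, as sketched below. The trailing window of 300 time slots follows the text. How the start of the trace is handled is our assumption: during the first 299 slots, the sketch averages over the samples available so far.

```python
import numpy as np

def moving_average(rates, window=300):
    """Trailing moving average of a per-slot data-rate trace."""
    x = np.asarray(rates, dtype=float)
    csum = np.concatenate(([0.0], np.cumsum(x)))
    t = np.arange(1, len(x) + 1)              # number of slots observed so far
    start = np.maximum(t - window, 0)         # first slot inside the window
    return (csum[t] - csum[start]) / (t - start)

def empirical_cdf(samples):
    """Sorted sample values and their empirical CDF levels k/n."""
    xs = np.sort(np.asarray(samples, dtype=float))
    ps = np.arange(1, len(xs) + 1) / len(xs)
    return xs, ps
```

Plotting `ps` against `xs` as a step curve gives the empirical CDF of the moving average data rate.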

Figure 6 and Figure 7 show the performance of the power control schemes in the 7-cell network. Figure 6 shows the moving average data rate per link during training. In the MADRL-based power control schemes, the data rate initially fluctuates but gradually stabilizes and converges. In particular, the proposed scheme outperforms the conventional DQN-based and QMIX-based schemes and also converges quickly. Over the last 200 time slots, the proposed scheme outperforms the DQN-based scheme by about % and the QMIX-based scheme by about %, in terms of the moving average data rate.
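Percentage gains over the last 200 time slots can be computed from the moving-average curves with a helper like the one below. The text does not state the exact formula. We assume the gain is the relative difference between the means of the two curves over the final 200 slots.

```python
import numpy as np

def relative_gain_percent(proposed, baseline, last=200):
    """Percentage gain of `proposed` over `baseline`, using the means of the final `last` slots."""
    p = float(np.mean(np.asarray(proposed, dtype=float)[-last:]))
    b = float(np.mean(np.asarray(baseline, dtype=float)[-last:]))
    return 100.0 * (p - b) / b
```

A negative value means the first curve is below the baseline over that interval.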

Figure 6.

Moving average data rate in a 7-cell network.

Figure 7.

Empirical CDF of the moving average data rate in a 7-cell network.

Figure 7 shows the empirical CDF of the moving average data rate from the test on the 7-cell network. The FP scheme shows the best performance because the transmit powers of all BSs are controlled in a centralized manner on the basis of instantaneous CSI from all receivers. The proposed scheme outperforms the conventional DQN- and QMIX-based schemes. Note that the proposed scheme uses only local CSI at each agent, which significantly reduces the signaling overhead by eliminating information exchange between agents. By contrast, the DQN-based MADRL scheme requires information exchange between agents and thus incurs significant signaling overhead.

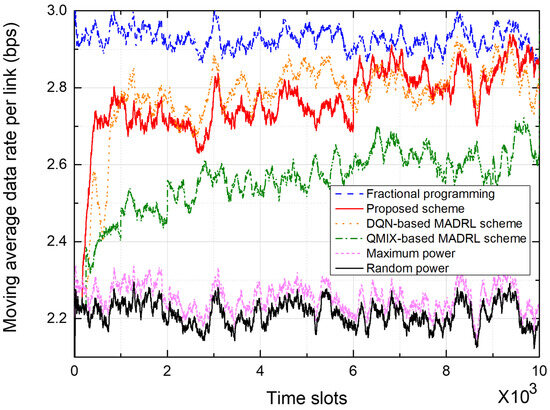

Figure 8 and Figure 9 show the performance of the power control schemes in the 19-cell network. Figure 8 shows the moving average data rate per link during training. Over the last 200 time slots, the proposed scheme outperforms the DQN-based scheme by about % and the QMIX-based scheme by about %, in terms of the moving average data rate. Notably, the DQN-based MADRL scheme converges slowly in the 7-cell network, as shown in Figure 6, but converges at a rate similar to that of the proposed scheme in the 19-cell network. In the DQN-based MADRL scheme, as the number of agents increases, more diverse channel data can be learned from a larger number of neighboring agents. Because DQN training benefits from a larger channel dataset, the scheme converges faster. Moreover, parameter sharing in the DQN-based scheme spans more agents in the 19-cell network than in the 7-cell network. This improves performance because a single shared model can learn the wireless channels more comprehensively [34]. However, the DQN-based scheme requires signaling messages to exchange information between agents, so its signaling overhead grows significantly with the number of agents.

Figure 8.

Moving average data rate in a 19-cell network.

Figure 9.

Empirical CDF of the moving average data rate in a 19-cell network.

Figure 9 shows the empirical CDF of the moving average data rate from the test on the 19-cell network. The FP scheme shows the best performance owing to centralized control of the transmit power. The proposed and DQN-based schemes show similar performance, even though the proposed scheme uses only local CSI at each agent. Moreover, the QMIX-based MADRL scheme performs considerably worse than the proposed and DQN-based schemes.

As the network topology expands, the number of agents increases. This in turn increases the number of states and complicates the interference patterns, leading to longer convergence times. Figure 6 and Figure 8 show that the convergence time increased by approximately 15–20% in the 19-cell network compared with the 7-cell network. We expect a similar, roughly linear or mildly polynomial, increase in larger networks. However, because the LSTM in the proposed scheme compresses and retains relevant past sequence information in its hidden state, the proposed scheme is expected to mitigate this increase in convergence time [24].
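The following NumPy sketch illustrates how an LSTM carries information across time slots. It implements a single LSTM cell followed by a sigmoid output that maps a local observation to a transmit power in $[0, P_{\max}]$. It is not the authors' network. The two-element observation, the hidden size of 16, the random untrained weights, and the output head are hypothetical choices made only to show the recurrence: the cell state $c_t$ and hidden state $h_t$ are updated every slot and reset at the start of an episode.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class LSTMPowerActor:
    """Minimal LSTM cell plus a sigmoid head mapping local observations to power (hypothetical)."""

    def __init__(self, obs_dim=2, hidden_dim=16, p_max_w=1.0, seed=0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(hidden_dim)
        # Stacked weights for the input, forget, candidate, and output gates.
        self.W = rng.uniform(-scale, scale, (4 * hidden_dim, obs_dim + hidden_dim))
        self.b = np.zeros(4 * hidden_dim)
        self.w_out = rng.uniform(-scale, scale, hidden_dim)
        self.hidden_dim = hidden_dim
        self.p_max_w = p_max_w                # 1 W corresponds to 30 dBm
        self.reset()

    def reset(self):
        """Clear the memory, e.g., at the start of an episode."""
        self.h = np.zeros(self.hidden_dim)
        self.c = np.zeros(self.hidden_dim)

    def step(self, obs):
        """Consume one time slot's observation and return a power in [0, p_max_w]."""
        H = self.hidden_dim
        z = self.W @ np.concatenate([np.asarray(obs, dtype=float), self.h]) + self.b
        i, f = sigmoid(z[:H]), sigmoid(z[H:2 * H])
        g, o = np.tanh(z[2 * H:3 * H]), sigmoid(z[3 * H:])
        self.c = f * self.c + i * g           # cell state keeps long-term memory
        self.h = o * np.tanh(self.c)          # hidden state summarizes the history
        return self.p_max_w * sigmoid(self.w_out @ self.h)
```

Feeding the same observation in two consecutive slots generally yields different powers, because the second call also sees the hidden state left by the first. Calling `reset()` restores the initial memory.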

In Figure 6, Figure 7, Figure 8 and Figure 9, the proposed scheme outperforms the conventional QMIX-based scheme of [19]. Both CTDE and QMIX utilize only the agents’ instantaneous observations and actions at the current time slot as inputs. Consequently, they cannot capture the temporal variability of the wireless channel or any history of past power-control decisions, making them overly sensitive to transient noise or interference spikes and prone to unstable policy convergence. Furthermore, QMIX’s mixing network combines only single-time-step Q-values in a monotonic manner, which means it cannot account for dynamic changes in agent interactions or any history of those interactions. In contrast, the proposed network incorporates the LSTM to continuously track state changes over time and preserve historical observation data, where the state consists of local CSI and neighbor observations. Hence, the proposed LSTM-based MAAC network can make decisions based not only on the current channel state but also on historical interference patterns and previous power-control actions. Consequently, embedding an LSTM-based sequence model within each agent effectively overcomes the lack of temporal modeling and partial observability issues found in CTDE and QMIX.
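The monotonic, single-time-step mixing described above can be sketched in the spirit of QMIX. Hypernetworks map the global state to mixing weights, and taking absolute values keeps those weights non-negative, so $Q_{\text{tot}}$ is non-decreasing in every agent's Q-value. This simplified version uses linear hypernetworks and a linear state-dependent bias, which are our assumptions rather than the configuration of [19]. Note that it receives only the current Q-values and state, with no memory of earlier slots.

```python
import numpy as np

def elu(x):
    # exp is evaluated only on the clipped negative part to avoid overflow
    return np.where(x > 0, x, np.exp(np.minimum(x, 0.0)) - 1.0)

def make_hypernet(n_agents, state_dim, embed_dim=8, seed=0):
    """Random (untrained) linear hypernetwork weights; purely illustrative."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(size=(n_agents * embed_dim, state_dim)),
        "B1": rng.normal(size=(embed_dim, state_dim)),
        "W2": rng.normal(size=(embed_dim, state_dim)),
        "V": rng.normal(size=state_dim),
        "embed_dim": embed_dim,
    }

def qmix_mix(agent_qs, state, hyper):
    """Combine per-agent Q-values of one time step into a global Q-value."""
    agent_qs = np.asarray(agent_qs, dtype=float)
    state = np.asarray(state, dtype=float)
    n, e = agent_qs.shape[0], hyper["embed_dim"]
    w1 = np.abs(hyper["W1"] @ state).reshape(n, e)   # non-negative first-layer weights
    b1 = hyper["B1"] @ state
    w2 = np.abs(hyper["W2"] @ state)                 # non-negative second-layer weights
    b2 = float(hyper["V"] @ state)                   # state-dependent bias
    hidden = elu(agent_qs @ w1 + b1)                 # ELU is monotonically increasing
    return float(hidden @ w2 + b2)
```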

5.4. Complexity Performance

We evaluate the space and time complexity of the proposed scheme in comparison with the DQN- and QMIX-based MADRL schemes. The space complexity, i.e., the amount of memory required, is represented by the number of model parameters. The time complexity is represented by the number of multiply–accumulate (MAC) operations.

In the DQN-based scheme, a fully connected network is used per agent, consisting of an input layer with 57 neurons, an output layer with 10 neurons, and three hidden layers with 200, 100, and 40 neurons, respectively. The QMIX-based scheme has a decision network consisting of one actor network and two critic networks, a mixing network, and a hypernetwork. Here, each subnetwork of the decision network has an input layer with three neurons, an output layer with a single neuron, and three hidden layers with 128 neurons each. The mixing network and hypernetwork also use a fully connected layer of 128 neurons to generate a global Q-value and corresponding weights and biases.
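Per-network counts for plain fully connected layers can be checked by hand. Each layer has $n_{\text{in}} n_{\text{out}}$ weights plus $n_{\text{out}}$ biases and needs $n_{\text{in}} n_{\text{out}}$ MACs per input sample. The sketch below applies this rule to the layer sizes listed above. It counts a single forward pass of one network and ignores activations, the QMIX mixing network and hypernetwork, and the proposed LSTM, so its numbers are not expected to match Table 4. As far as we know, thop's default rule for linear layers also counts input features times output elements, without biases.

```python
def mlp_complexity(layer_sizes):
    """Parameters and MACs of a fully connected network for one input sample."""
    params = macs = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        params += n_in * n_out + n_out   # weights + biases
        macs += n_in * n_out             # one MAC per weight
    return params, macs

dqn = mlp_complexity([57, 200, 100, 40, 10])         # DQN network per agent
qmix_subnet = mlp_complexity([3, 128, 128, 128, 1])  # one QMIX decision subnetwork
print("DQN:", dqn, "QMIX subnetwork:", qmix_subnet)
```

This gives 36,150 parameters and 35,800 MACs for the DQN network. Each QMIX decision subnetwork has 33,665 parameters and requires 33,280 MACs.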

Table 4 summarizes the number of parameters and the number of MAC operations for the DQN-based, QMIX-based, and proposed schemes. These values were obtained by profiling each scheme with the “thop.profile” function of the thop library under PyTorch version 1.13.1 [43]. The proposed scheme has higher complexity than the DQN-based scheme but significantly lower space and time complexity than the QMIX-based scheme. In particular, compared with the QMIX-based scheme, the proposed scheme reduces the number of parameters by about % and the number of MAC operations by about % in a 7-cell network. Although the DQN-based scheme has lower complexity, its signaling overhead increases with the number of agents.

Table 4.

Complexity comparison.

6. Conclusions

In multi-cell networks, controlling the transmit power of each BS is highly important to mitigate inter-cell interference and maximize the overall cell capacity. In this paper, we developed a novel transmit power control scheme that leverages a MADRL approach within the framework of CTDE. In particular, the proposed scheme uses only local CSI at each agent without exchanging information between agents, which significantly reduces the signaling overhead between agents. The proposed MADRL network is based on an actor–critic network and incorporates an LSTM architecture to process local CSI at each agent, thereby allowing the algorithm to dynamically adapt to variations in the wireless channel over time. Moreover, by processing local CSI through an LSTM network, the proposed method is able to retain state information over multiple time slots, allowing for more informed and adaptive decision-making in the face of non-stationary channel conditions. The proposed LSTM-based MAAC network outperforms the conventional DQN-based or QMIX-based MADRL networks in terms of the average data rate of the entire network. Moreover, the proposed network uses only two states in each agent, which makes convergence faster. Furthermore, by using the CTDE framework, our approach benefits from robust centralized training while retaining the scalability and practicality of decentralized execution. This combination enables our solution to effectively capture the complex temporal and spatial dynamics present in multi-cell networks.

When applying the proposed LSTM-based MAAC network to practical systems, it is important to take into account the inference latency and the integration with existing radio resource management (RRM) systems. First, the inference time may be non-negligible, depending on the BS hardware, even though the proposed scheme significantly reduces the space and time complexity compared with the QMIX-based scheme. In that case, quantizing the LSTM network from 32-bit floating point to 8-bit integers, according to the hardware specifications, can reduce both the required memory and the computational complexity at the cost of some performance. Second, it may be difficult to integrate the proposed machine learning-based technology into existing RRM systems due to vendor-specific implementations. However, 3GPP has recently introduced machine learning techniques in RRM, and the open radio access network (O-RAN) alliance has also adopted machine learning techniques [44]. Therefore, the proposed distributed power control scheme is expected to be applicable to 6G systems.
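A minimal illustration of the 8-bit option is symmetric per-tensor post-training quantization, in which each float32 weight tensor is stored as int8 values plus one float scale. The sketch below is a generic NumPy example, not a procedure from the paper. Practical deployments would typically rely on the quantization tooling of the inference framework and may quantize activations as well.

```python
import numpy as np

def quantize_int8(w):
    """Symmetric per-tensor quantization of float32 weights to int8."""
    w = np.asarray(w, dtype=np.float32)
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize_int8(q, scale):
    """Recover approximate float32 weights from int8 values and the scale."""
    return q.astype(np.float32) * np.float32(scale)
```

Storage drops by a factor of four (one byte instead of four per weight). The rounding error of each weight is at most half of the scale.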

Future research may include federated or distributed training to reduce centralized training overhead, as well as more practical environments such as multi-cell, multi-user networks with dynamic user mobility. First, federated learning or distributed learning models can be studied to reduce the centralized training overhead. In general, distributed learning aims to accelerate training by spreading the computation across multiple nodes, whereas federated learning enables collaborative training among nodes that keep their raw data local. The choice between federated and distributed learning depends on specific requirements related to data privacy, resource availability, and the scale of the data and computation involved. In particular, the signaling overhead exchanged between nodes should be taken into account. Second, future research could jointly consider user selection, scheduling, and power control in a multi-cell multi-user network that serves multiple users per cell, with users moving at different speeds.
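For the federated option, a well-known aggregation rule is federated averaging (FedAvg), in which a server replaces the model with the sample-weighted mean of locally trained parameters. The sketch below is a generic illustration under our own assumptions: parameters are stored as NumPy arrays in a dict, and weights are proportional to local sample counts. It also shows that each round still uploads every parameter, which is the inter-node signaling overhead mentioned above.

```python
import numpy as np

def fedavg(local_params, num_samples):
    """Sample-weighted average of each named parameter array across clients."""
    total = float(sum(num_samples))
    return {
        name: sum((n / total) * params[name] for params, n in zip(local_params, num_samples))
        for name in local_params[0]
    }

def upload_bits(params, bits_per_param=32):
    """Bits one client uploads per round if every parameter is sent as float32."""
    return sum(v.size for v in params.values()) * bits_per_param
```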

Author Contributions

Conceptualization, H.K. and J.S.; software, H.K.; validation, H.K. and J.S.; investigation, H.K. and J.S.; writing—original draft preparation, H.K. and J.S.; writing—review and editing, J.S.; supervision, J.S.; project administration, J.S.; funding acquisition, J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (No. 2022R1F1A1062696).

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Xu, Y.; Gui, G.; Gacanin, H.; Adachi, F. A survey on resource allocation for 5G heterogeneous networks: Current research, future trends, and challenges. IEEE Commun. Surv. Tutor. 2021, 23, 668–695.

- Lohan, P.; Kantarci, B.; Ferrag, M.A.; Tihanyi, N.; Shi, Y. From 5G to 6G networks: A survey on AI-based jamming and interference detection and mitigation. IEEE Open J. Commun. Soc. 2024, 5, 3920–3974.

- Yi, M.; Yang, P.; Chen, M. A DRL-driven intelligent joint optimization strategy for computation offloading and resource allocation in ubiquitous edge IoT systems. IEEE Trans. Emerg. Top. Comput. Intell. 2023, 7, 39–54.

- Agarwal, B.; Togou, M.A.; Marco, M.; Muntean, G.-M. A comprehensive survey on radio resource management in 5G HetNets: Current solutions, future trends and open issues. IEEE Commun. Surv. Tutor. 2022, 24, 2495–2534.

- Hussain, F.; Hassan, S.A.; Hussain, R.; Hossain, E. Machine learning for resource management in cellular and IoT networks: Potentials, current solutions, and open challenges. IEEE Commun. Surv. Tutor. 2020, 22, 1251–1275.

- Xiang, H.; Yang, Y.; He, G.; Huang, J.; He, D. Multi-agent deep reinforcement learning-based power control and resource allocation for D2D communications. IEEE Commun. Lett. 2022, 11, 1659–1663.

- Giannopoulos, A.; Spantideas, S.; Capsalis, N.; Gkonis, P.; Karkazis, P.; Sarakis, L.; Trakadas, P.; Capsalis, C. WIP: Demand-driven power allocation in wireless networks with deep Q-learning. In Proceedings of the 2021 IEEE 22nd International Symposium on a World of Wireless, Mobile and Multimedia Networks (WoWMoM), Pisa, Italy, 7–11 July 2021; pp. 1–4.

- Zhu, H.; Wu, Q.; Wu, X.-J.; Fan, Q.; Fan, P.; Wang, J. Decentralized power allocation for MIMO-NOMA vehicular edge computing based on deep reinforcement learning. IEEE Internet Things J. 2022, 9, 12770–12782.

- Ji, Z.; Qin, Z. Federated learning for distributed energy-efficient resource allocation. In Proceedings of the 2022 IEEE International Conference on Communications (ICC), Seoul, Republic of Korea, 16–20 May 2022; pp. 1–6.

- Wang, X.; Wang, S.; Liang, X.; Zhao, D.; Huang, J.; Xu, X.; Dai, B.; Miao, Q. Deep reinforcement learning: A survey. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 5064–5078.

- Bi, Z.; Zhou, W. Deep reinforcement learning based power allocation for D2D network. In Proceedings of the 2020 IEEE 91st Vehicular Technology Conference (VTC2020-Spring), Antwerp, Belgium, 25–28 May 2020; pp. 1–5.

- Luong, N.C.; Hoang, D.T.; Gong, S.; Niyato, D.; Wang, P.; Liang, Y.-C.; Kim, D.I. Applications of deep reinforcement learning in communications and networking: A survey. IEEE Commun. Surv. Tutor. 2019, 21, 3133–3174.

- Meng, F.; Chen, P.; Wu, L.; Cheng, J. Power allocation in multi-user cellular networks: Deep reinforcement learning approaches. IEEE Trans. Wirel. Commun. 2020, 19, 6255–6267.

- Wang, J.; Hong, Y.; Wang, J.; Xu, J.; Tang, Y.; Han, Q.-L.; Kurths, J. Cooperative and competitive multi-agent systems: From optimization to games. IEEE/CAA J. Autom. Sin. 2022, 9, 763–783.

- Das, S.K.; Mudi, R.; Rahman, M.S.; Rabie, K.M.; Li, X. Federated reinforcement learning for wireless networks: Fundamentals, challenges and future research trends. IEEE Open J. Veh. Technol. 2024, 5, 1400–1440.

- Lee, S.; Yu, H.; Lee, H. Multiagent Q-learning-based multi-UAV wireless networks for maximizing energy efficiency: Deployment and power control strategy design. IEEE Internet Things J. 2022, 9, 6434–6442.

- Kopic, A.; Perenda, E.; Gacanin, H. A collaborative multi-agent deep reinforcement learning-based wireless power allocation with centralized training and decentralized execution. IEEE Trans. Commun. 2024, 72, 7006–7016.

- Dai, P.; Wang, H.; Hou, H.; Qian, X.; Yu, W. Joint spectrum and power allocation in wireless networks: A two-stage multi-agent reinforcement learning method. IEEE Trans. Emerg. Top. Comput. Intell. 2024, 8, 2364–2374.

- Wang, Z.; Zong, J.; Zhou, Y.; Shi, Y.; Wong, V.W.S. Decentralized multi-agent power control in wireless networks with frequency reuse. IEEE Trans. Commun. 2021, 70, 1666–1681.

- Park, C.; Kim, G.S.; Park, S.; Jung, S.; Kim, J. Multi-agent reinforcement learning for cooperative air transportation services in city-wide autonomous urban air mobility. IEEE Trans. Intell. Veh. 2023, 8, 4016–4030.

- Nasir, Y.S.; Guo, D. Deep actor-critic learning for distributed power control in wireless mobile networks. In Proceedings of the 2020 54th Asilomar Conference on Signals, Systems, and Computers, Pacific Grove, CA, USA, 1–4 November 2020; pp. 1–5.

- Yao, Y.; Zhou, H.; Erol-Kantarci, M. Deep reinforcement learning-based radio resource allocation and beam management under location uncertainty in 5G mmWave networks. In Proceedings of the 2022 IEEE Symposium on Computers and Communications (ISCC), Rhodes, Greece, 30 June–3 July 2022; pp. 1–6.

- Xu, Y.; Liy, X.; Zhou, W.; Yu, G. Generative adversarial LSTM networks learning for resource allocation in UAV-served M2M communications. IEEE Wirel. Commun. Lett. 2021, 26, 1601–1605.

- Lin, Y.; Wang, M.; Zhou, X.; Ding, G.; Mao, S. Dynamic spectrum interaction of UAV flight formation communication with priority: A deep reinforcement learning approach. IEEE Trans. Cogn. Commun. Netw. 2020, 6, 892–903.

- Sohaib, M.; Jeong, J.; Jeon, S.-W. Dynamic multichannel access via multi-agent reinforcement learning: Throughput and fairness guarantees. IEEE Trans. Wirel. Commun. 2021, 21, 3994–4008.

- Shi, Q.; Razaviyayn, M.; Luo, Z.-Q.; He, C. An iteratively weighted MMSE approach to distributed sum-utility maximization for a MIMO interfering broadcast channel. IEEE Trans. Signal Process. 2011, 59, 4331–4340.

- Shen, K.; Yu, W. Fractional programming for communication systems—Part I: Power control and beamforming. IEEE Trans. Signal Process. 2018, 66, 2616–2630.

- Ahmad, I.; Becvar, Z.; Mach, P. Coordinated machine learning for channel reuse and transmission power allocation for D2D communication. In Proceedings of the IEEE Global Communications Conference, Cape Town, South Africa, 8–12 December 2024; pp. 2701–2706.

- Lee, W.; Kim, M.; Cho, D.-H. Deep power control: Transmit power control scheme based on convolutional neural network. IEEE Commun. Lett. 2018, 22, 1276–1279.

- Shen, Y.; Shi, Y.; Zhang, J.; Letaief, K.B. Graph neural networks for scalable radio resource management: Architecture design and theoretical analysis. IEEE J. Sel. Areas Commun. 2021, 39, 101–115.

- Han, D.; So, J. Energy-efficient resource allocation based on deep Q-network in V2V communications. Sensors 2023, 23, 1295.

- Lee, L.; Kim, H.; So, J. Reinforcement learning-based joint beamwidth and beam alignment interval optimization in V2I communications. Sensors 2024, 24, 837.

- Zhao, G.; Li, Y.; Xu, C.; Han, Z.; Xing, Y.; Yu, S. Joint power control and channel allocation for interference mitigation based on reinforcement learning. IEEE Access 2019, 7, 177254–177265.

- Nasir, Y.S.; Guo, D. Multi-agent deep reinforcement learning for dynamic power allocation in wireless networks. IEEE J. Sel. Areas Commun. 2019, 37, 2239–2250.

- Zhou, Y.; Zhuang, W. Throughput analysis of cooperative communication in wireless ad hoc networks with frequency reuse. IEEE Trans. Wirel. Commun. 2014, 14, 205–218.

- Liang, L.; Kim, J.; Jha, S.C.; Sivanesan, K.; Li, G.Y. Spectrum and power allocation for vehicular communications with delayed CSI feedback. IEEE Commun. Lett. 2017, 6, 458–461.

- Technical Specification Group Radio Access Network; Radio Frequency (RF) System Scenarios (Release 18), Document 3GPP TR 25.942 V18.0.0, 3rd Generation Partnership Project. March 2024. Available online: https://www.3gpp.org/ftp/Specs/archive/25_series/25.942/25942-i00.zip (accessed on 1 November 2024).

- Luo, Z.-Q.; Zhang, S. Dynamic spectrum management: Complexity and duality. IEEE J. Sel. Top. Signal Process. 2008, 2, 57–73.

- Majid, A.Y.; Saaybi, S.; Francois-Lavet, V.; Prasad, R.V.; Verhoeven, C. Deep reinforcement learning versus evolution strategies: A comparative survey. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 11939–11957.

- Rosenberger, J.; Urlaub, M.; Rauterberg, F.; Lutz, T.; Selig, A.; Bühren, M.; Schramm, D. Deep reinforcement learning multi-agent system for resource allocation in industrial internet of things. Sensors 2022, 22, 4099.

- Zhao, X.; Zhang, Y.A. Joint beamforming and scheduling for integrated sensing and communication systems in URLLC: A POMDP approach. IEEE Trans. Commun. 2024, 72, 6145–6161.

- IEEE Std 754-2019 (Revision of IEEE 754-2008); IEEE Standard for Floating-Point Arithmetic. IEEE: New York, NY, USA, 2019; pp. 1–84.

- Liu, K.; Li, L.; Zhang, X. Fast and accurate identification of kiwifruit diseases using a lightweight convolutional neural network architecture. IEEE Access 2025, 13, 84826–84843.

- Mhatre, S.; Adelantado, F.; Ramantas, K.; Verikoukis, C. Intelligent QoS-aware slice resource allocation with user association parameterization for beyond 5G O-RAN-based architecture using DRL. IEEE Trans. Veh. Technol. 2025, 74, 3096–3109.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).