Benchmarking Accelerometer and CNN-Based Vision Systems for Sleep Posture Classification in Healthcare Applications

Abstract

1. Introduction

- The gap between vision and inertial sensing modalities is bridged through comparative benchmarking.

- Practical trade-offs that are often overlooked in modality-specific research, such as interpretability, intrusiveness, and robustness, are analyzed.

- Recommendations for modality selection or hybrid system design are provided according to the constraints of applications such as home care, hospital monitoring, or elderly assistance.

- By comparing these two complementary modalities under consistent conditions, using accuracy, confusion matrices, precision, recall, and F1-score, this study provides critical insights into their relative strengths. For instance, image-based models offer high spatial resolution and visual interpretability but require consistent lighting and unobstructed views, which makes them suitable for controlled environments. In contrast, accelerometer-based systems are privacy-preserving, unobtrusive, and cost-effective, and are well suited to wearable or in-bed monitoring in home or clinical settings.
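The per-class precision, recall, and F1-score and the overall accuracy used in this comparison can all be derived from a confusion matrix. The NumPy sketch below is illustrative only: the function name `per_class_metrics` and the convention that rows are true classes and columns are predicted classes are assumptions, and classes that are never predicted (or never present) are assigned a score of 0 rather than raising a division error.

```python
import numpy as np

def per_class_metrics(cm):
    """Per-class precision, recall, F1 and overall accuracy.

    Assumed convention: cm[i, j] = number of samples whose true class is i
    and whose predicted class is j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)                 # correctly classified samples per class
    predicted = cm.sum(axis=0)       # column sums: how often each class was predicted
    actual = cm.sum(axis=1)          # row sums: how many samples truly belong to each class
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, predicted, out=zeros.copy(), where=predicted > 0)
    recall = np.divide(tp, actual, out=zeros.copy(), where=actual > 0)
    denom = precision + recall
    # F1 is the harmonic mean of precision and recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    accuracy = tp.sum() / cm.sum()
    return precision, recall, f1, accuracy
```

As a consistency check on the reported figures, the image-based Left Side class has precision 1.00 and recall 0.83582, and 2 × 1.00 × 0.83582 / (1.00 + 0.83582) ≈ 0.91057, which matches its F1-score in the results table.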

2. Materials and Methods

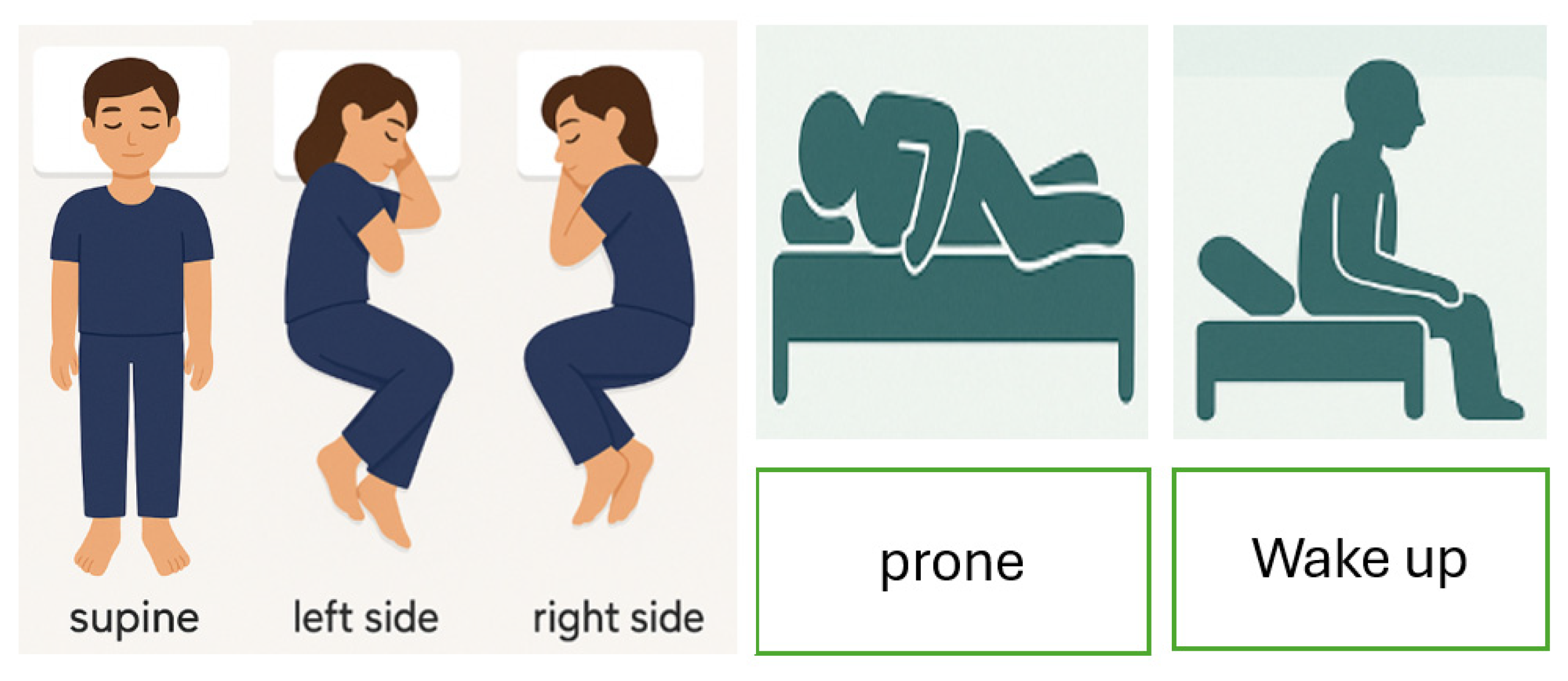

2.1. Dataset

2.1.1. Camera-Based Method

- Random Rotation: Each input image is randomly rotated within a range of −15 to +15 degrees, which helps the model become invariant to slight angular variations in body posture. A range of ±15° maintains the semantic integrity of the posture (e.g., “supine” rotated 10° is still clearly “supine”). Larger rotations could distort the posture enough to confuse the label (e.g., a 90° rotation could make “left side” look like “supine”).

- Random X and Y Reflection: Images are randomly flipped horizontally and vertically. This simulates mirrored sleep positions such as switching from left to right side and improves robustness to sensor or camera orientation.

- Random Scaling: Each image is scaled by a random factor between 0.1× and 3× its original size, which accounts for differences in camera zoom or in the distance from the subject.

- Random Translation in X and Y: Images are randomly shifted up to ±15 pixels horizontally and vertically. This process allows the model to handle positional shifts of the person in the bed or frame.
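The four augmentations above can be sketched in NumPy as a single affine warp per image. This is a minimal illustration, not the authors' implementation: the function names, the independent 50% probability for each reflection, the choice to reflect, scale, and rotate about the image centre before translating, the nearest-neighbour resampling, and the zero fill for pixels that map from outside the frame are all assumptions.

```python
import numpy as np

def sample_augmentation(rng):
    """Draw one random parameter set using the ranges listed above."""
    return {
        "angle_deg": rng.uniform(-15.0, 15.0),   # random rotation in degrees
        "flip_x": bool(rng.integers(0, 2)),      # horizontal reflection, p = 0.5 (assumed)
        "flip_y": bool(rng.integers(0, 2)),      # vertical reflection, p = 0.5 (assumed)
        "scale": rng.uniform(0.1, 3.0),          # random scaling factor
        "tx": rng.uniform(-15.0, 15.0),          # horizontal shift in pixels
        "ty": rng.uniform(-15.0, 15.0),          # vertical shift in pixels
    }

def augment(image, p):
    """Warp an (H, W) or (H, W, C) image: reflection, scaling and rotation about
    the image centre, then translation. Uses inverse mapping with
    nearest-neighbour sampling; pixels mapped from outside the frame are 0."""
    h, w = image.shape[:2]
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    theta = np.deg2rad(p["angle_deg"])
    flip = np.diag([-1.0 if p["flip_x"] else 1.0, -1.0 if p["flip_y"] else 1.0])
    rot = np.array([[np.cos(theta), -np.sin(theta)],
                    [np.sin(theta),  np.cos(theta)]])
    forward = rot @ (p["scale"] * np.eye(2)) @ flip   # acts on (x, y) offsets from the centre
    inverse = np.linalg.inv(forward)
    ys, xs = np.mgrid[0:h, 0:w]
    # Undo the translation, then map every output pixel back to its source pixel
    offsets = np.stack([xs.ravel() - cx - p["tx"], ys.ravel() - cy - p["ty"]])
    src = inverse @ offsets
    src_x = np.rint(src[0] + cx).astype(int)
    src_y = np.rint(src[1] + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros((h * w,) + image.shape[2:], dtype=image.dtype)
    out[valid] = image[src_y[valid], src_x[valid]]
    return out.reshape(image.shape)
```

For example, `augment(img, sample_augmentation(np.random.default_rng(0)))` returns a randomly warped copy of `img` with the same shape. Drawing a fresh parameter set for every image in every epoch is what turns this deterministic warp into random augmentation.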

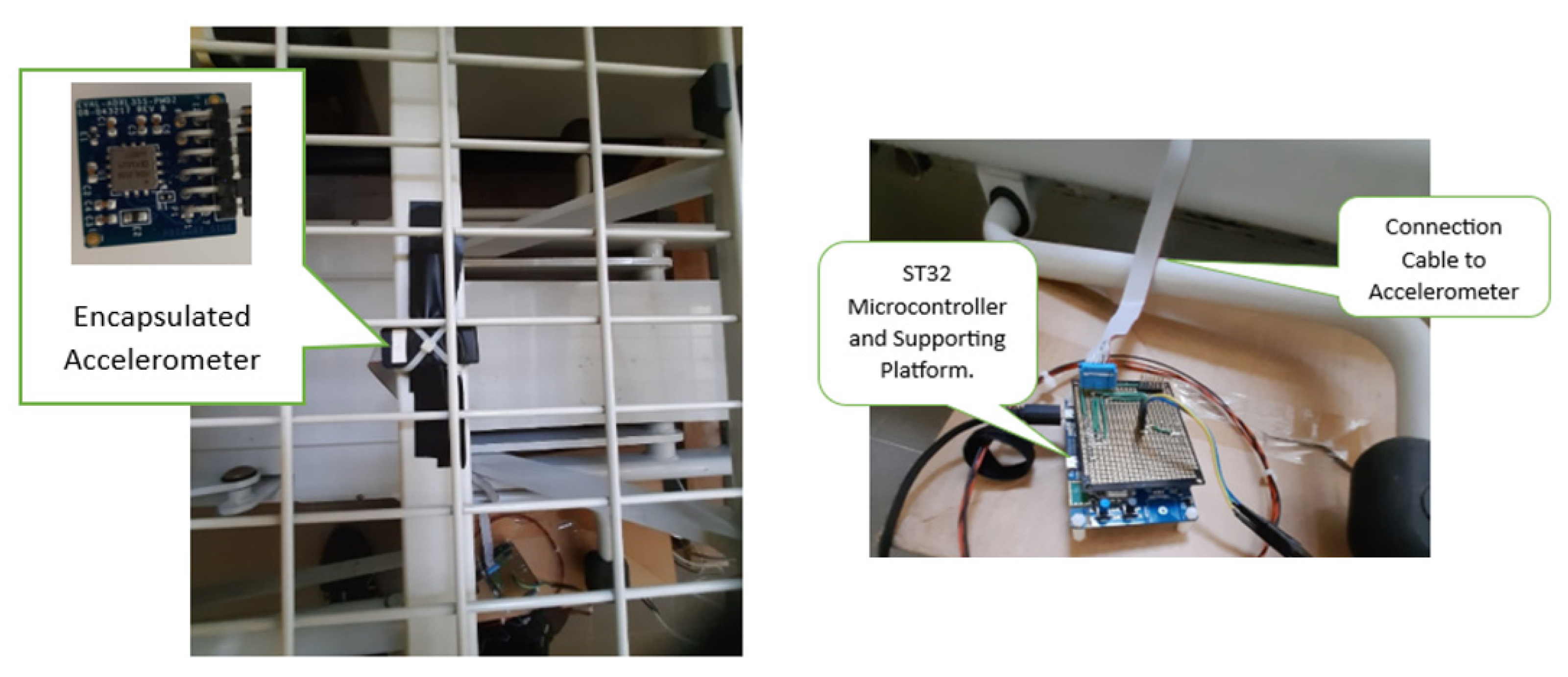

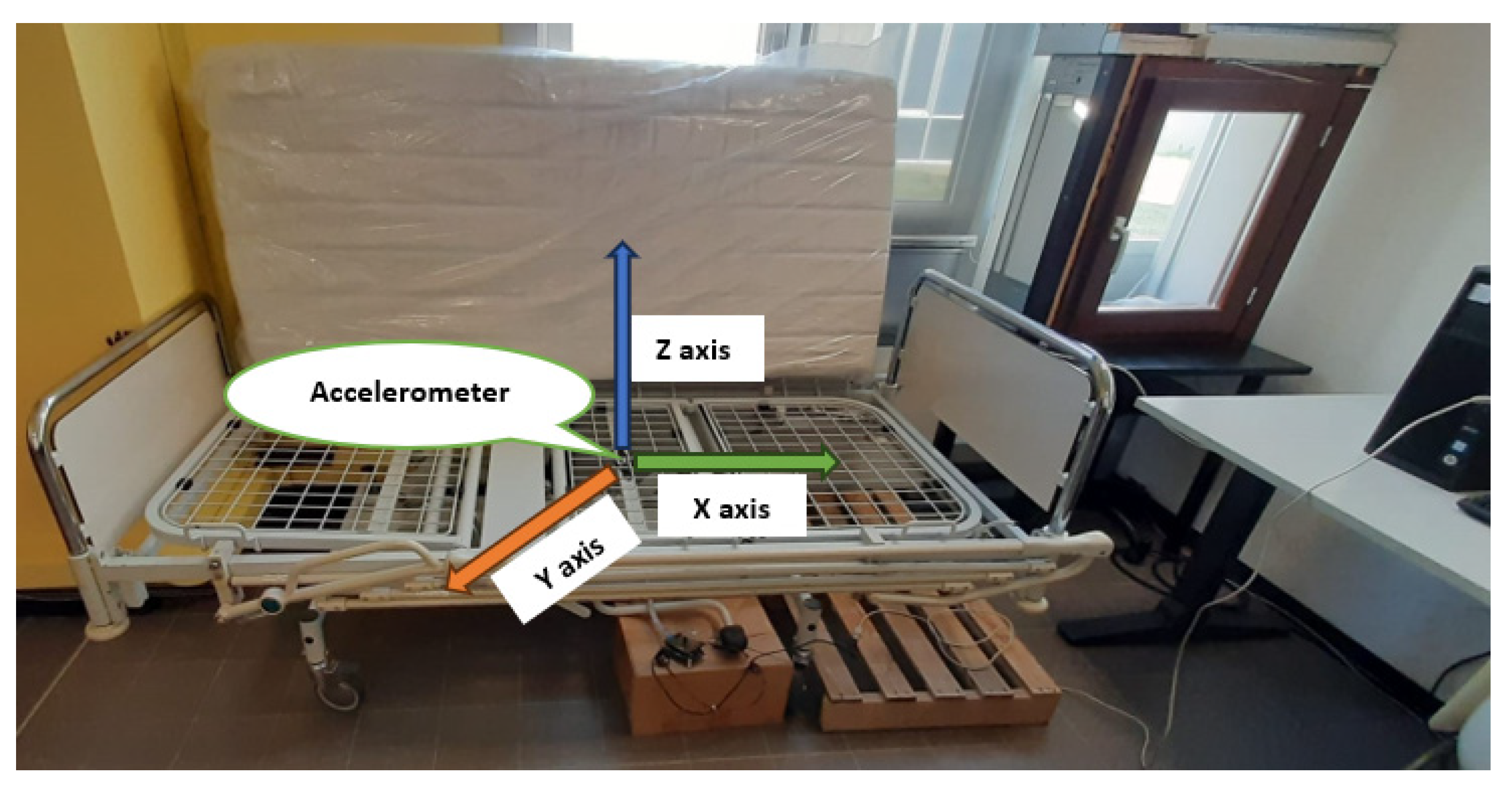

2.1.2. Accelerometer-Based Method

- Sum of segment values: represents the total accumulated acceleration, an energy-like measure. Different postures involve varying amounts of movement and different body orientations relative to gravity.

- Standard deviation: captures the variability, or spread, of the values in the segment. This variability differs by posture. In supine or prone postures, movement is generally less variable because the body is more stable. Side postures usually show more variability because of how the limbs and torso interact with the bed surface and because of slight adjustments made to maintain comfort.

- Count above the global mean: the number of samples in the segment that are above the mean of the full signal, indicating the activity level. This feature helps distinguish postures by measuring how often the acceleration exceeds a typical baseline.

- Maximum value: the peak acceleration within the segment.

- Spike count: the number of values in the segment that exceed 10% of the global signal range, i.e., the difference between the maximum and minimum values among these 143 samples. This feature captures sharp changes such as micro-movements or twitches, whose occurrence varies by posture.
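A minimal NumPy sketch of the five features follows. It is not the authors' code: the function names, the population standard deviation (ddof = 0), the non-overlapping segmentation, and the literal reading of the spike criterion (a sample counts as a spike when its value is greater than 0.1 × (max − min) of the full signal) are assumptions. The 143-sample segment length is used only as a default taken from the text and may not match the authors' windowing.

```python
import numpy as np

def segment_features(segment, full_signal):
    """Return [sum, std, count_above_mean, max, spike_count] for one segment.

    Assumptions: 'global' statistics come from `full_signal`, the standard
    deviation is the population std, and a spike is any sample whose value
    exceeds 10% of the global range (max - min).
    """
    segment = np.asarray(segment, dtype=float)
    full_signal = np.asarray(full_signal, dtype=float)
    global_mean = full_signal.mean()
    global_range = full_signal.max() - full_signal.min()
    return np.array([
        segment.sum(),                                   # energy-like accumulated acceleration
        segment.std(),                                   # variability within the segment
        np.count_nonzero(segment > global_mean),         # samples above the global mean
        segment.max(),                                   # peak acceleration
        np.count_nonzero(segment > 0.1 * global_range),  # spike count
    ])

def extract_features(signal, segment_len=143):
    """Split `signal` into non-overlapping segments (a trailing partial segment
    is dropped) and stack one feature row per segment."""
    signal = np.asarray(signal, dtype=float)
    n_segments = len(signal) // segment_len
    return np.stack([
        segment_features(signal[k * segment_len:(k + 1) * segment_len], signal)
        for k in range(n_segments)
    ])
```

Each row of the resulting matrix is one five-element feature vector, which is the kind of compact input a small feedforward classifier can consume directly.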

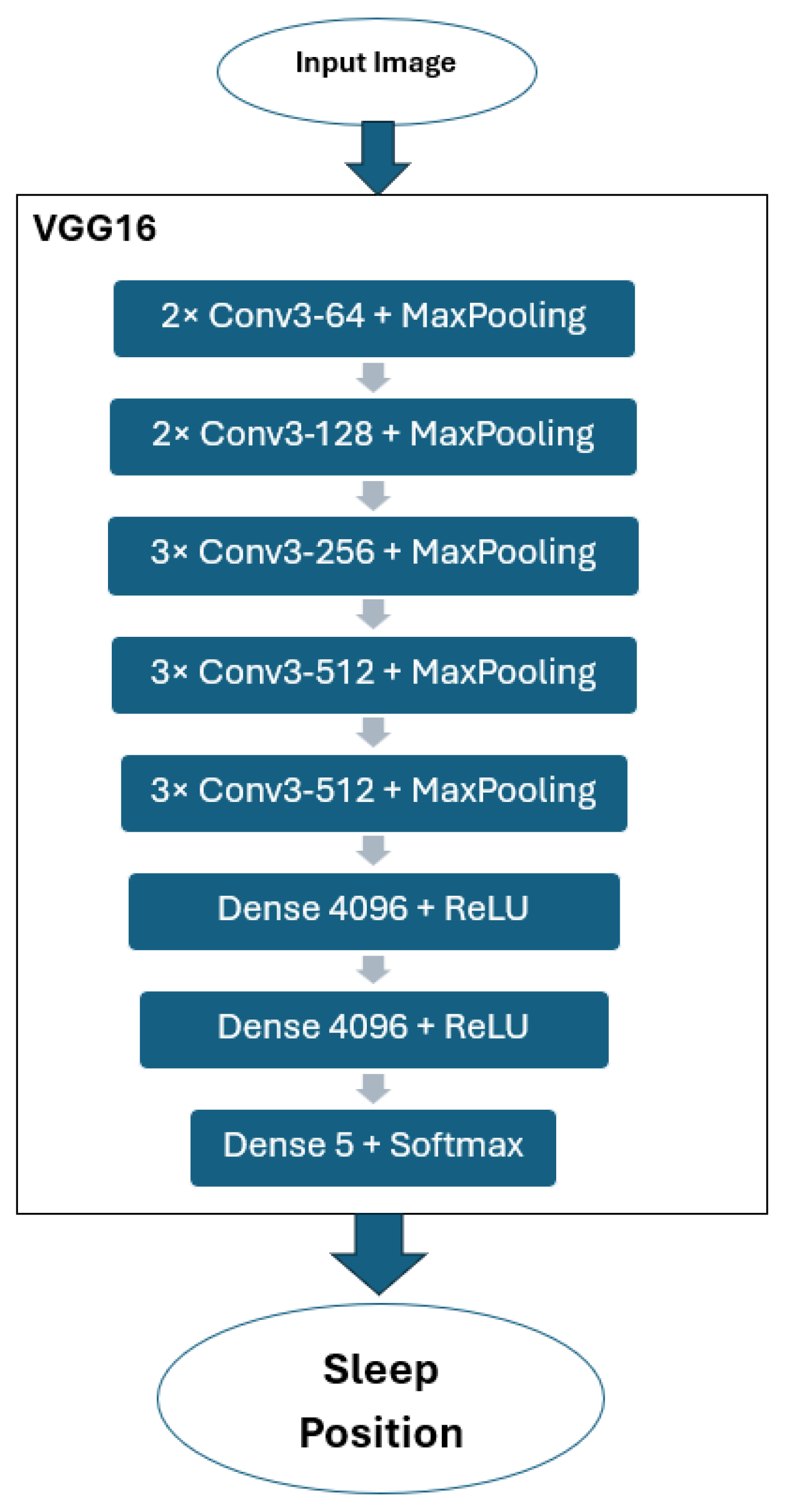

2.2. VGG16

- Conv3-64 means a 3 × 3 convolution with 64 filters.

- MaxPooling is a 2 × 2 pooling operation with stride 2.

- ReLU activation is applied after each convolutional and dense layer (except the last).
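As a cross-check on the VGG16 layer table, the sketch below walks through the five convolutional blocks (2, 2, 3, 3, and 3 Conv3 layers with 64, 128, 256, 512, and 512 filters) and the three dense layers (4096, 4096, and 5 units) to compute the final feature-map size and the parameter count. It assumes "same" padding for every 3 × 3 convolution and one bias per filter or unit, as in the standard VGG16; the constant and function names are illustrative.

```python
# (number of Conv3 layers, number of filters) per block, as in the VGG16 layer table
CONV_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]
DENSE_UNITS = [4096, 4096, 5]   # two hidden dense layers + 5-class softmax output

def vgg16_summary(input_size=224, in_channels=3, blocks=CONV_BLOCKS, dense=DENSE_UNITS):
    """Return (final spatial size, channels, conv parameters, dense parameters).

    Assumes 'same' padding, so each 3x3 convolution keeps the spatial size,
    and that each 2x2 max-pool with stride 2 halves it.
    """
    size, channels, conv_params = input_size, in_channels, 0
    for n_layers, filters in blocks:
        for _ in range(n_layers):
            conv_params += 3 * 3 * channels * filters + filters  # weights + biases
            channels = filters
        size //= 2                                               # 2x2 max-pool, stride 2
    fan_in = size * size * channels                              # flattened feature map
    dense_params = 0
    for units in dense:
        dense_params += fan_in * units + units                   # weights + biases
        fan_in = units
    return size, channels, conv_params, dense_params
```

With the defaults it returns `(7, 512, 14714688, 119566341)`: a 7 × 7 × 512 feature map and about 134.3 million parameters in total, most of them in the first dense layer. Passing `dense=[4096, 4096, 1000]` reproduces the 138,357,544 parameters of the original 1000-class ImageNet VGG16.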

2.3. Feedforward Neural Network

- Input data are passed through the network layer by layer.

- Each neuron computes a weighted sum of its inputs, adds a bias, and applies an activation function:

$$a = f\left(\mathbf{w}^{\top}\mathbf{x} + b\right)$$

- $\mathbf{w}$: weight vector.

- $\mathbf{x}$: input vector.

- $b$: bias term.

- $f$: activation function (e.g., ReLU, sigmoid, tanh).

- $a$: output of the neuron.

- This computation continues through all hidden layers to produce the final output.

- The network’s predicted output $\hat{y}$ is compared with the true target $y$ using a loss function based on the mean squared error (MSE), as used for regression, where $n$ is the number of samples:

$$L = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

- The error is propagated backward through the network.

- The gradient of the loss function with respect to each weight is computed using the chain rule.

- Weights are updated using gradient descent according to the following formula:

$$w_{\text{new}} = w_{\text{old}} - \eta\,\frac{\partial L}{\partial w}$$

- $w_{\text{old}}$: current weight before the update.

- $w_{\text{new}}$: updated weight after applying gradient descent.

- $\eta$: learning rate, a small positive value that controls the size of each step taken opposite to the direction of the gradient.

- If $\eta$ is too large, training may overshoot or diverge.

- If $\eta$ is too small, training becomes very slow.

- $\frac{\partial L}{\partial w}$: gradient of the loss function $L$ with respect to the weights.
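The forward pass, MSE loss, backpropagation, and gradient-descent update described above can be illustrated with a small NumPy network. This is a sketch under stated assumptions, not the authors' implementation: ReLU hidden activations, a linear output layer, He-style random initialization, full-batch updates, and one-hot targets are choices made here for illustration, and the class name `FeedforwardNet` is hypothetical. The layer sizes in the usage note (a hypothetical five-feature input, two hidden layers of 20 neurons, five outputs) mirror the configuration reported in Section 3.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def relu_grad(z):
    return (z > 0).astype(float)

class FeedforwardNet:
    """Fully connected network: each layer computes f(W a + b). Hidden layers use
    ReLU (assumed); the output layer is linear. Trained with MSE + gradient descent."""

    def __init__(self, sizes, rng):
        # W[i] has shape (units_out, units_in); He-style initialization (assumed)
        self.W = [rng.normal(0.0, np.sqrt(2.0 / m), size=(n, m))
                  for m, n in zip(sizes[:-1], sizes[1:])]
        self.b = [np.zeros(n) for n in sizes[1:]]

    def forward(self, X):
        """X: (batch, features). Returns output, layer inputs, pre-activations."""
        acts, pre, a = [X], [], X
        for i, (W, b) in enumerate(zip(self.W, self.b)):
            z = a @ W.T + b                                # weighted sum plus bias
            pre.append(z)
            a = z if i == len(self.W) - 1 else relu(z)     # linear output layer
            acts.append(a)
        return a, acts, pre

    def loss(self, X, Y):
        y_hat, _, _ = self.forward(X)
        return np.mean((Y - y_hat) ** 2)                   # mean squared error

    def gradients(self, X, Y):
        """Backpropagation: apply the chain rule from the output layer backward."""
        y_hat, acts, pre = self.forward(X)
        delta = 2.0 * (y_hat - Y) / Y.size                 # dL/dz at the output layer
        grads_W, grads_b = [], []
        for i in reversed(range(len(self.W))):
            grads_W.insert(0, delta.T @ acts[i])           # dL/dW[i]
            grads_b.insert(0, delta.sum(axis=0))           # dL/db[i]
            if i > 0:
                delta = (delta @ self.W[i]) * relu_grad(pre[i - 1])
        return grads_W, grads_b

    def step(self, X, Y, eta):
        """Gradient-descent update: w_new = w_old - eta * dL/dw."""
        gW, gb = self.gradients(X, Y)
        for i in range(len(self.W)):
            self.W[i] -= eta * gW[i]
            self.b[i] -= eta * gb[i]
```

A typical use would be `net = FeedforwardNet([5, 20, 20, 5], np.random.default_rng(0))` followed by repeated calls to `net.step(X, Y, eta)`, where `Y` holds one-hot class targets. Comparing `gradients` against central finite differences of `loss` is a quick way to confirm that the backpropagation code matches the chain-rule derivation.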

3. Experiment and Result Analysis

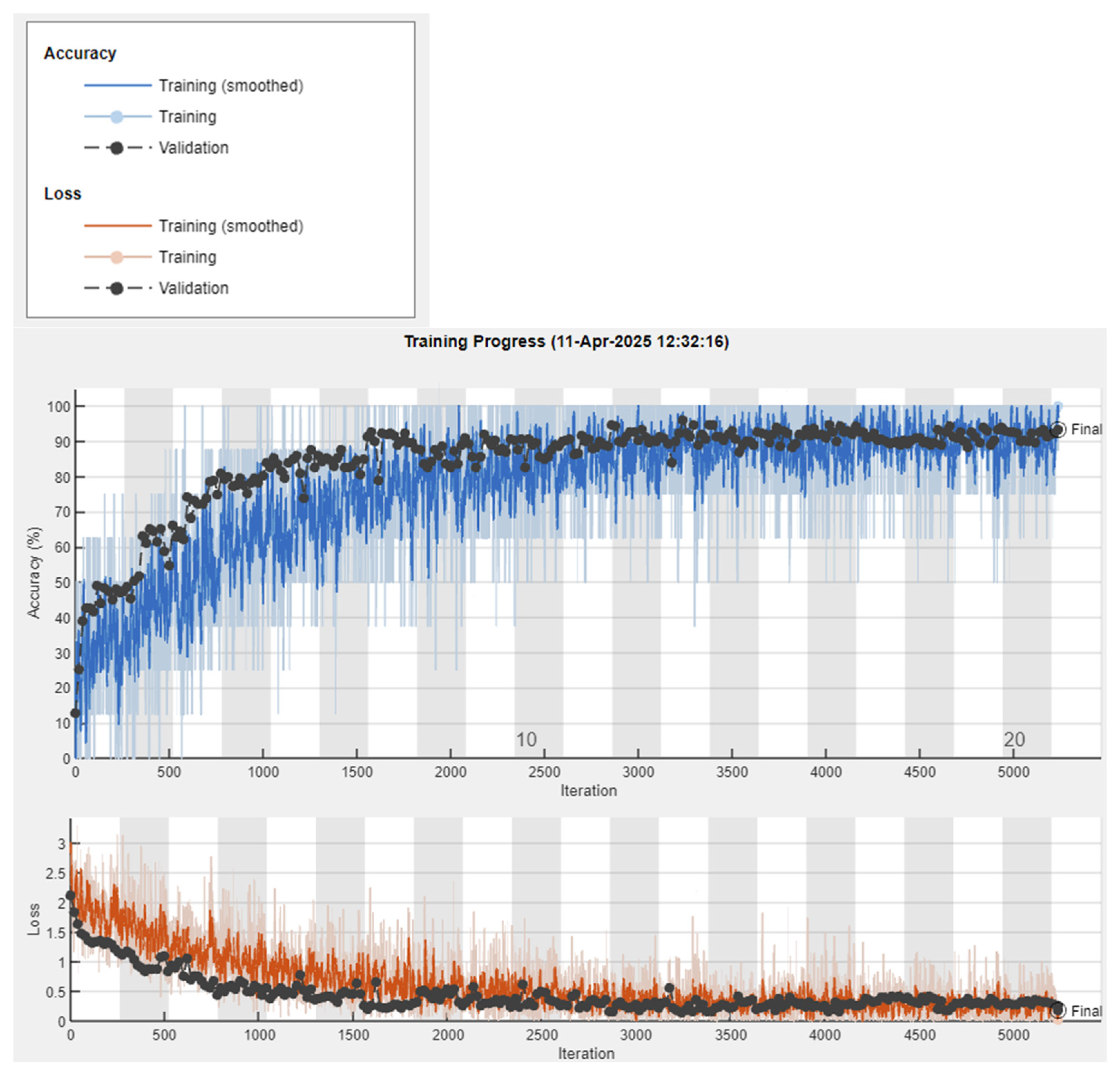

- The convolutional base of VGG16 (Transfer layer) is used as a fixed feature extractor.

- On top of this, a new classification head was added. This head includes three fully connected layers: a first hidden layer with 1024 neurons, a second hidden layer with 512 neurons, and an output layer with one neuron per posture class. To enhance training stability and generalization, each of the two hidden fully connected layers is followed by batch normalization, a Leaky ReLU activation with a slope of 0.2 for negative inputs, and a dropout layer with a rate of 0.4.

- To prevent overfitting, L2 regularization with a small weight decay factor of 10⁻⁵ is applied to all fully connected layers. This setup helps the model generalize to unseen data.

- The network is trained using the Adam optimizer with an initial learning rate of 10⁻⁵. A piecewise learning rate schedule is applied, which reduces the learning rate by a factor of 0.5 every 10 epochs.

- Training is configured to run for up to 100 epochs with a mini-batch size of 8. Validation is performed on an augmented version of the test dataset every 20 iterations, and early stopping is enabled with a patience of 100 validation checks.
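The learning-rate schedule and the early-stopping rule above can be expressed independently of any framework. The sketch below assumes a 0-based epoch counter and counts a validation check as an improvement only when the loss is strictly lower than the best value seen so far; both conventions are assumptions, and the names `piecewise_lr` and `EarlyStopping` are hypothetical.

```python
def piecewise_lr(epoch, initial_lr=1e-5, drop_factor=0.5, drop_period=10):
    """Learning rate for a 0-based epoch: multiplied by drop_factor every drop_period epochs."""
    return initial_lr * drop_factor ** (epoch // drop_period)

class EarlyStopping:
    """Stop once the validation loss has failed to improve on `patience`
    consecutive validation checks (assumed definition of patience)."""

    def __init__(self, patience=100):
        self.patience = patience
        self.best = float("inf")
        self.checks_without_improvement = 0

    def update(self, val_loss):
        """Record one validation check; return True if training should stop."""
        if val_loss < self.best:
            self.best = val_loss
            self.checks_without_improvement = 0
        else:
            self.checks_without_improvement += 1
        return self.checks_without_improvement >= self.patience
```

Under these assumptions the rate stays at 10⁻⁵ for epochs 0 to 9, drops to 5 × 10⁻⁶ for epochs 10 to 19, and so on, reaching about 2 × 10⁻⁸ in the last block of a 100-epoch run.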

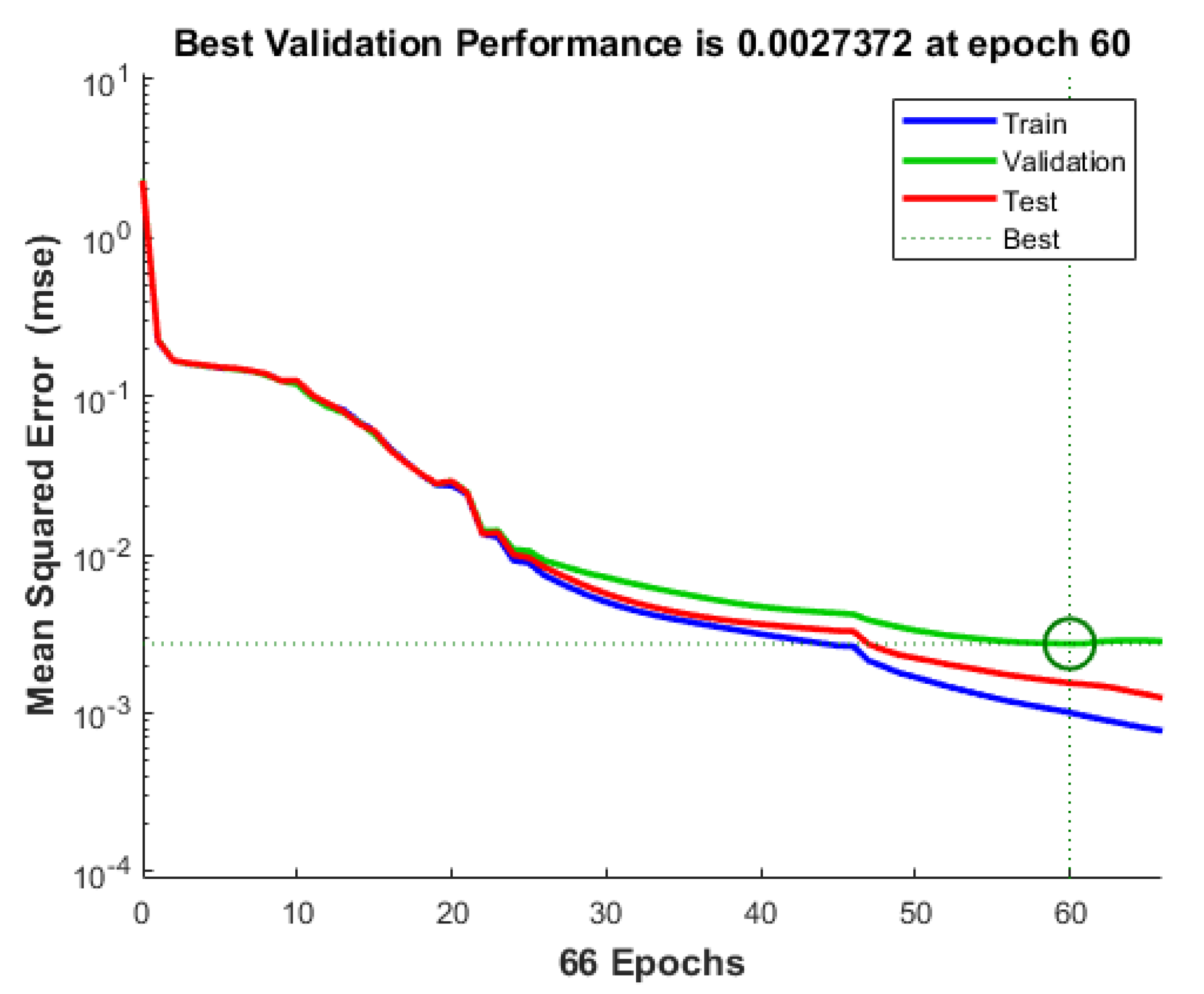

- Two hidden layers, each containing 20 neurons.

- The maximum number of epochs is 1000.

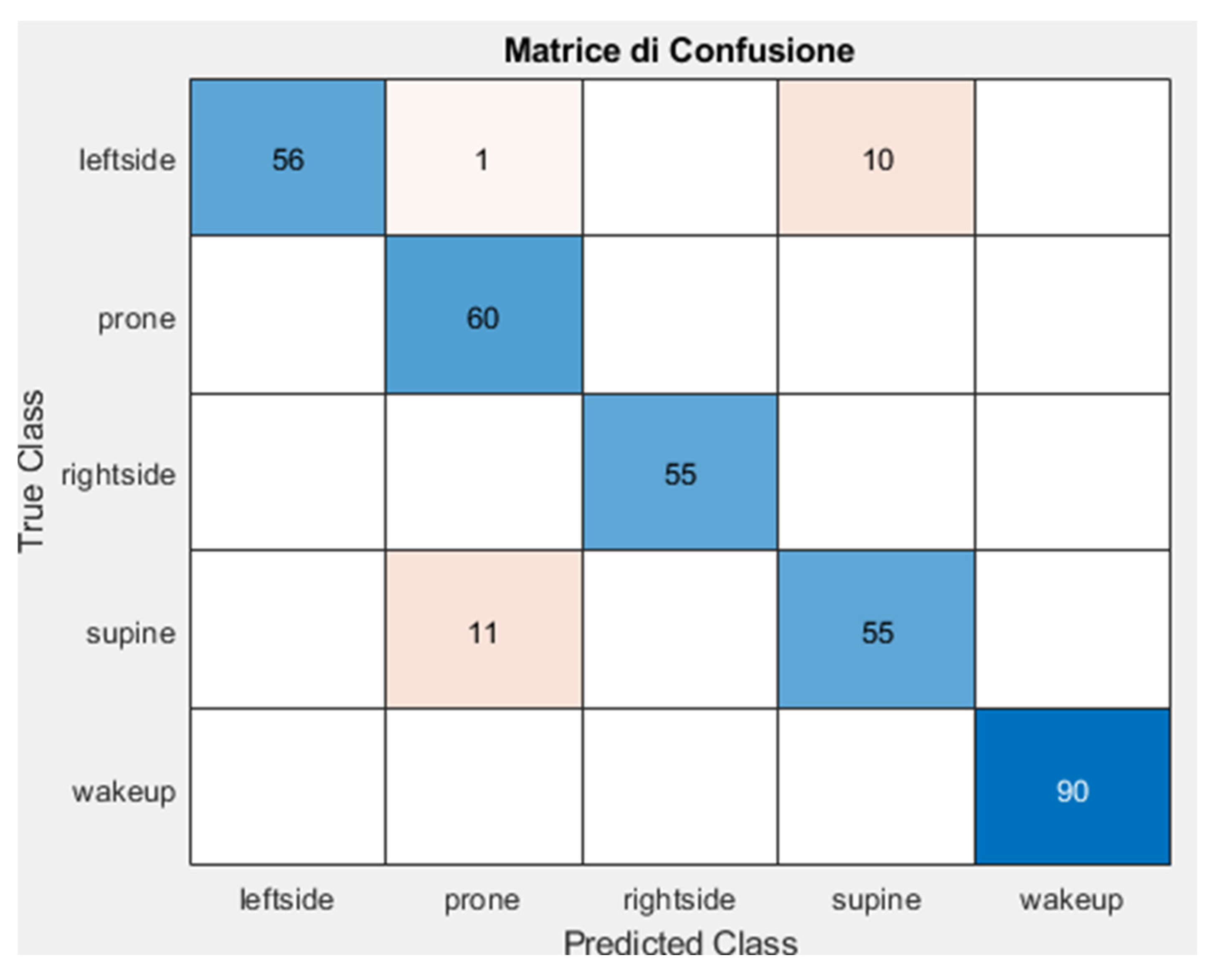

3.1. Image Classification with Visual Geometry Group 16 (VGG16)

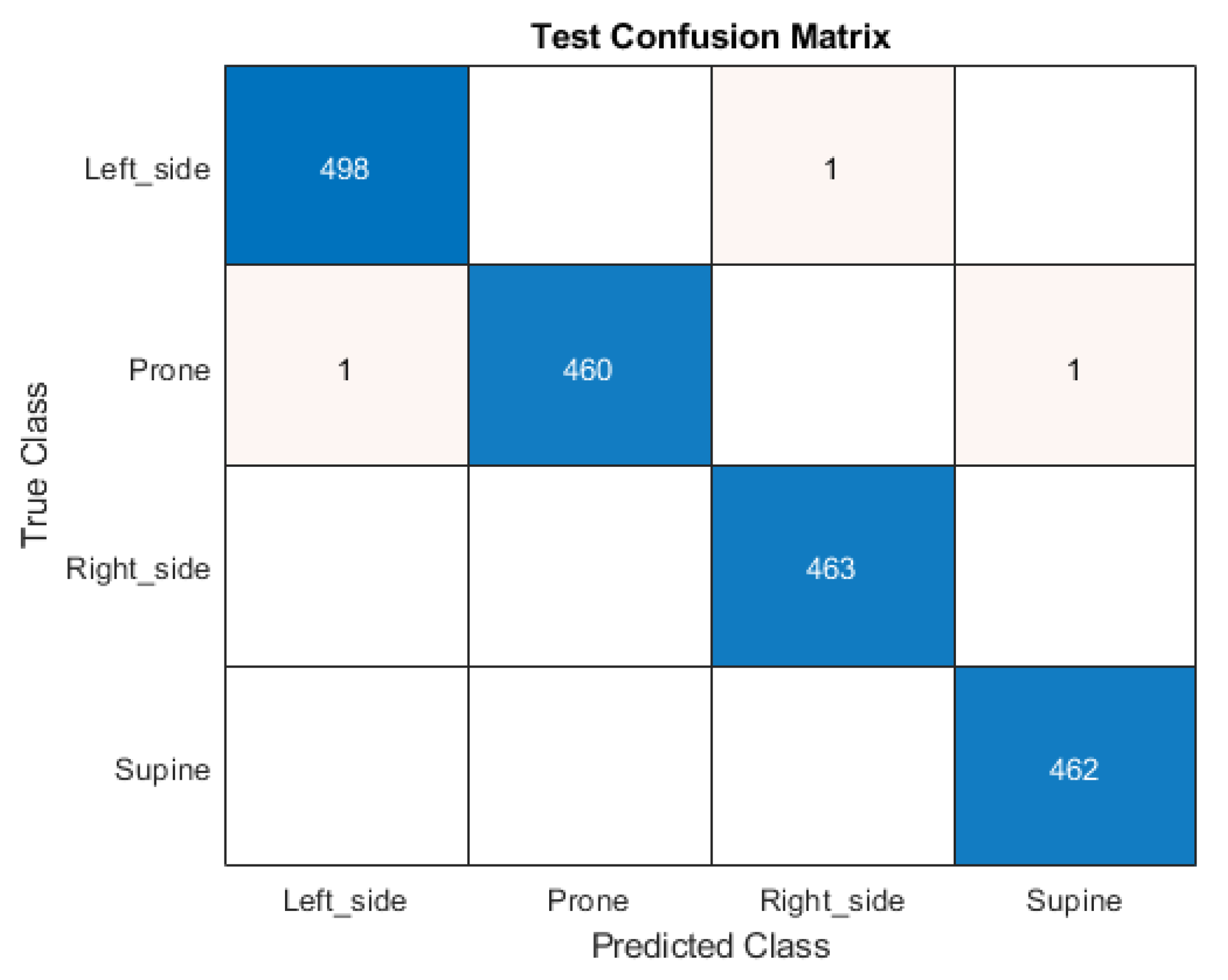

3.2. Accelerometer-Based Sleep Posture Classification

3.3. Comparison of Image-Based and Accelerometer-Based Sleep Position Classification

4. Limits and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Layer Type | Configuration |
|---|---|
| Input | 224 × 224 RGB image (three channels) |
| Conv Block 1 | 2 × Conv3-64 + MaxPooling |
| Conv Block 2 | 2 × Conv3-128 + MaxPooling |
| Conv Block 3 | 3 × Conv3-256 + MaxPooling |
| Conv Block 4 | 3 × Conv3-512 + MaxPooling |
| Conv Block 5 | 3 × Conv3-512 + MaxPooling |
| Fully Connected 1 | Dense 4096 + ReLU |
| Fully Connected 2 | Dense 4096 + ReLU |
| Fully Connected 3 (Output Layer) | Dense 5 + Softmax |

| Class | Precision | Recall | F1-Score |
|---|---|---|---|
| Left Side | 1.00 | 0.83582 | 0.91057 |
| Right Side | 1.00 | 1.00000 | 1.00000 |
| Supine | 0.84615 | 0.83333 | 0.83969 |
| Prone | 0.83333 | 1.00000 | 0.90909 |
| Wake-up | 1.00 | 1.00000 | 1.00000 |
| Overall accuracy: 93.49% | | | |

| Class | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|
| Left Side | 99.8 | 99.8 | 99.8 |
| Prone | 100 | 99.567 | 99.783 |
| Right Side | 99.784 | 100 | 99.892 |
| Supine | 99.784 | 100 | 99.892 |
| Wake-up | 100 | 100 | 100 |
| Overall accuracy: 99.84% | | | |

| Class | Image-Based Model, Precision/Recall/F1 (%) | Accelerometer Model, Precision/Recall/F1 (%) |
|---|---|---|
| Left Side | 100/83.58/91.06 | 99.8/99.8/99.8 |
| Prone | 83.33/100/90.91 | 100/99.57/99.78 |
| Right Side | 100/100/100 | 99.78/100/99.89 |
| Supine | 84.62/83.33/83.97 | 99.78/100/99.89 |
| Wake-up | 100/100/100 | 100/100/100 |

| Model | Data Size (MB) | Training Time (s) |
|---|---|---|
| Image-Based Model | 343.15 | 1680 |
| Accelerometer Model | 22.3 | 18 |

| Aspect | Image-Based Model | Accelerometer-Based Model |
|---|---|---|
| Data Size | Larger; requires substantial storage for RGB image sequences | Lightweight; low storage needs due to compact time-series data |
| Training Time | Slower; requires substantial computational resources (GPU) | Faster; can be trained quickly owing to the lightweight dataset |
| Power Efficiency | High power consumption due to intensive computation and GPU use | Low-power devices such as microcontrollers are sufficient |
| Privacy | Captures identifiable visual data, raising privacy concerns | Non-invasive and private, with no exposure of visual identity |
| Accuracy | High, especially in controlled environments with clear visuals | Good accuracy using motion data; may drop if motion is minimal |
| Feature Engineering | CNNs learn features automatically from the data | Requires prior feature extraction (e.g., sum, std, max, spike count) |
| Environmental Sensitivity | Sensitive to lighting, occlusions, and camera angles | Sensitive to external vibrations and sensor placement |
| Hardware Requirements | Requires camera(s), stable lighting, and a good viewing angle | Requires a microcontroller, an accelerometer sensor, and cabling |
| Deployment Environment | Best in controlled, well-lit rooms with minimal occlusion | Works in dark or varied lighting but needs vibration control |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hoang, M.L.; Matrella, G.; Giannetto, D.; Craparo, P.; Ciampolini, P. Benchmarking Accelerometer and CNN-Based Vision Systems for Sleep Posture Classification in Healthcare Applications. Sensors 2025, 25, 3816. https://doi.org/10.3390/s25123816
