1. Introduction

Federated learning (FL) is a novel approach to securing machine learning (ML) by distributing workloads across multiple machines in a specific domain, allowing clients to train ML models collaboratively without exposing raw data [1]. Within smart grid (SG) infrastructure, FL remains a new concept whose application has not yet been fully realized, with open concerns around efficiency gains and security measures [2]. Nair et al. [3] proposed a privacy-preserving FL framework for the Internet of Medical Things (IoMT) that leverages edge computing to process sensitive medical data securely. Their approach ensures data privacy while enabling efficient distributed learning, highlighting the critical role of edge intelligence in safeguarding user information.

SG represents a significant evolution in energy management systems, integrating advanced technologies such as the Internet of Things (IoT), ML, and edge computing to optimize energy distribution and consumption. IoT devices such as smart meters are now widely adopted across the industry; they automate meter reading for electricity providers by communicating over the internet [3,4,5]. These devices collect vast amounts of energy consumption data, which are then used to extrapolate power consumption patterns and make predictions for individual homes and commercial buildings. These predictions are critical for ensuring that power grid requirements are met on a city-wide scale, enabling utilities to balance supply and demand, reduce energy waste, and improve overall grid reliability [5]. Moreover, IoT applications interconnect devices that collect and exchange data over the internet, enabling real-time monitoring and control [3,6]. In the context of SG, IoT devices such as smart meters play a critical role in automating energy consumption monitoring and optimization.

As more manual processes in this domain are digitized, data-driven decision-making has become increasingly prevalent. However, this shift towards digitization and data reliance also introduces significant challenges, particularly for data security and user privacy. For example, the detailed energy consumption data collected by smart meters can reveal intimate details about an individual’s lifestyle, habits, and daily routines [7,8,9]. This raises serious privacy concerns, as unauthorized access to such data could lead to misuse or exploitation. Moreover, aggregating and analyzing such data on centralized servers creates vulnerabilities, as these servers become attractive targets for cyberattacks such as Distributed Denial of Service (DDoS) attacks or data breaches [10].

To address these challenges, there is a growing need for robust privacy-preserving techniques that can safeguard user data while still enabling the benefits of data-driven energy management. One such technique is homomorphic encryption (HE), a cryptographic method that allows computations to be performed on encrypted data without the need for decryption [4,5,11]. This property ensures that sensitive information remains secure throughout the data processing pipeline, making HE particularly well-suited for applications in FL. By integrating HE into FL frameworks, it is possible to perform secure model aggregation and training without exposing raw data, thereby preserving user privacy while still achieving accurate and reliable predictions [12]. To build on the privacy preservation that FL offers, we introduce HE as one of the core components of the FL framework. Like other encryption schemes, HE comprises a key generation function, an encryption function, and a decryption function; in addition, it provides an evaluation function [13]. The novelty of HE is that it allows data to be manipulated while encrypted, so the data is never exposed in a decrypted state, thus preserving its privacy during the model aggregation process [13]. We include HE to strengthen the privacy preservation offered by peer-to-peer federated learning (P2PFL) by incorporating it into model aggregation for federated energy consumption prediction models. Therefore, this paper aims to develop a novel IoT framework combining FL, Edge Computing (EC), and HE principles to predict energy consumption patterns in an SG system while preserving user privacy End-to-End (E2E).
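To make the four HE functions concrete, the following minimal Python sketch implements key generation, encryption, decryption, and an evaluation (addition) function. It deliberately swaps in the Paillier cryptosystem, an additively homomorphic scheme, rather than CKKS, and it uses fixed, publicly known Mersenne primes, so it is insecure and purely illustrative. The function names (keygen, encrypt, decrypt, evaluate_add) are hypothetical and are not taken from the framework's code.

```python
import math
import secrets
from typing import Tuple

# Toy Paillier scheme (additively homomorphic), NOT CKKS and NOT secure:
# the primes are fixed, public, and far too small, purely for illustration.
P, Q = 2**31 - 1, 2**61 - 1


def keygen() -> Tuple[int, Tuple[int, int]]:
    """KeyGen: public key n = P*Q, secret key (lam, mu)."""
    n = P * Q
    lam = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P-1, Q-1)
    mu = pow(lam, -1, n)  # valid because the generator is g = n + 1
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    """Enc: c = (1 + m*n) * r^n mod n^2 with a fresh random unit r."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            return (1 + (m % n) * n) * pow(r, n, n2) % n2


def decrypt(n: int, sk: Tuple[int, int], c: int) -> int:
    """Dec: m = L(c^lam mod n^2) * mu mod n, where L(x) = (x - 1) // n."""
    lam, mu = sk
    m = (pow(c, lam, n * n) - 1) // n * mu % n
    return m - n if m > n // 2 else m  # map back to signed integers


def evaluate_add(n: int, c1: int, c2: int) -> int:
    """Eval: multiplying ciphertexts adds the underlying plaintexts."""
    return c1 * c2 % (n * n)


if __name__ == "__main__":
    n, sk = keygen()
    print(decrypt(n, sk, evaluate_add(n, encrypt(n, 20), encrypt(n, 22))))  # 42
```

Anyone holding only the public key n can combine ciphertexts without learning their contents; only the holder of the secret key can recover the resulting sum.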

1.1. Research Challenges

A common challenge when working with ML is model tuning. An incorrectly tuned model overfits or underfits the data, which can produce inaccurate predictions because the model fails to capture the true relationships in the data. Another challenge lies in utilizing HE to encrypt large amounts of data. While HE schemes such as Cheon–Kim–Kim–Song (CKKS) improve time complexity at the cost of accuracy, they still use a lot of memory when encrypting workloads, which is inefficient. A third challenge is the need for a secure communication protocol between peers in a peer-to-peer (P2P) context, together with a method for establishing P2P connections in the first place.

1.2. Research Scope and Contribution

The main contribution of this paper is the development and verification of an SG IoT framework for predicting energy consumption using peer-to-peer federated learning (P2PFL) and HE to preserve user privacy. This research offers a detailed review and analysis of existing frameworks and approaches to privacy preservation using FL and HE. We also explore the application of the framework in this space by developing a software simulation to evaluate the practicality of our framework in a real-world scenario. For the literature review, we examined work published over the last five years using well-known databases such as Google Scholar, Scopus, Elsevier ScienceDirect, and the IEEE Xplore digital library. Search terms used in this paper include federated learning framework, smart grid federated learning framework, federated learning IoT framework, and machine learning for federated learning.

The main contributions of this paper are summarized as follows:

We develop an algorithm for homomorphic encryption (HE) to preserve user privacy in smart grid (SG) systems. To this end, we optimize (predict) energy consumption using the FL model reported in Section 3.

We develop an SG IoT framework by integrating P2PFL architecture and HE principles. To this end, we formulate mathematical models by deriving efficient fully homomorphic encryption (FHE) through Equations (1)–(6) reported in Section 4.

In the context of IoT, we explore a practical application of the framework with SG IoT devices for energy consumption prediction and optimization. To this end, we develop a simulation model for system performance evaluation and validation. We also contribute code (written in Python) for system design and evaluation purposes. The source code is publicly available (https://github.com/FilUnderscore/SG-P2PFL-HE (accessed on 10 April 2025)) for further exploration in the emerging field of SG IoT energy consumption.

1.3. Structure of the Article

The rest of this paper is organized as follows. Related work on FL frameworks in an SG setting, as well as the unification of P2PFL and HE, is presented in Section 2. The SG IoT framework design is discussed in Section 3. The system simulation, as well as an HE optimization, is presented in Section 4. The research design and methodology are discussed in Section 5. The system evaluation and test results are presented in Section 6, which also discusses the practical implications. Finally, the paper is concluded in Section 7.

Table 1 lists the abbreviations used in this paper.

2. Related Work

Federated learning, as a distributed machine learning paradigm, enables multiple parties to collaboratively train a model while maintaining the privacy of their raw data [1]. This approach has been examined across a range of application domains and has been outlined in terms of its types, simulation environments, and implementation challenges.

In smart energy systems, adaptive federated learning methods have been developed for energy consumption forecasting based on smart meter data, where edge computing supports both privacy and efficiency in distributed environments [2]. Short-term probabilistic load forecasting has also been explored using federated learning, demonstrating effective prediction accuracy and privacy preservation when individual-level data remains decentralized [8]. Privacy-preserving frameworks using edge computing have additionally been applied in Internet of Things (IoT) contexts, enabling secure analytics on sensitive data without transmitting raw information to central servers [3]. In smart grids, substantial volumes of consumption data are collected through metering infrastructure, so privacy remains a core concern. Various frameworks have been introduced to protect data confidentiality while ensuring functionality in distributed energy monitoring systems [4].

Federated learning has been employed for electricity theft detection, where secure and decentralized training mechanisms support model development without requiring access to private consumption patterns [5]. Recent advancements also include heterogeneous federated learning frameworks capable of addressing class imbalance, thereby improving detection accuracy in real-world smart grid scenarios while maintaining the privacy of client-side data [14].

To overcome the limitations of centralized learning systems, peer-to-peer federated learning architectures have been adopted, allowing distributed devices to collaborate without a single aggregation server. Secure decentralized training protocols have been demonstrated to be effective in IoT-based settings [6], and alternative designs using peer-to-peer model exchanges have been found to achieve both resilience and scalability [11]. Security enhancements in federated learning have increasingly incorporated homomorphic encryption so that encrypted model updates can be processed without decryption. Federated learning frameworks with support for homomorphic encryption (HE) have enabled secure model aggregation and privacy-preserving training in edge-based environments [13]. Additional encryption schemes have been proposed that support scalable and efficient encrypted computation using compact ciphertexts in multi-party learning scenarios [15].

Privacy-preserving data aggregation techniques have also been developed using fog computing architectures that incorporate signcryption to protect data during query and aggregation operations [9]. Secure classification models have been designed for smart grid applications to balance data utility and privacy, supporting accurate and confidential system-level analytics [10]. In parallel, federated learning has been applied to detect intrusions in software-defined networks, showcasing the flexibility of this learning paradigm for broader infrastructure protection [7].

Generative models have also been used in federated learning systems to support privacy-aware data sharing and model robustness when access to raw data is limited [12]. Foundational research in fully homomorphic encryption [16] and federated model aggregation algorithms [17] underpins many of the secure and communication-efficient FL systems currently under development. Building on these foundations, recent work [14] has introduced heterogeneous federated learning frameworks designed for smart grid applications, addressing challenges such as data imbalance and non-independent and identically distributed (non-IID) data. While these approaches improve detection accuracy in scenarios such as electricity theft, they often lack evaluation under dynamic grid conditions, including fluctuating loads and intermittent connectivity. Validation of federated learning frameworks for smart grids often relies on real-world datasets. Publicly available smart meter datasets from London households have been widely used to evaluate model performance in decentralized energy forecasting scenarios [18,19]. These datasets offer high-frequency, multi-year readings, enabling the design and testing of scalable federated learning solutions suitable for smart grid environments.

2.1. Summary of Related Work

To contextualize our contribution, we present a comparative summary of existing research efforts that integrate various technologies within SG and FL frameworks.

Table 2 highlights the scope and limitations of recent studies that utilize combinations of FL, P2P networking, EC, HE, and IoT technologies. While many of these studies demonstrate significant progress in privacy-preserving model training, decentralized learning, and IoT-based energy forecasting, none offer a unified framework that simultaneously leverages all five core technologies. This underscores the novelty of our proposed SG IoT framework, which addresses these limitations by fully integrating P2PFL, EC, and CKKS-based HE within an IoT ecosystem for secure and scalable energy consumption prediction.

2.2. Research Gaps

While the existing literature (as summarized in Table 2) addresses privacy preservation within machine learning model design, it largely overlooks security considerations across the broader FL pipeline, particularly the protection of inter-client communications and the safeguarding of localized data on individual client devices. Although [14] utilizes CKKS homomorphic encryption to enhance privacy, its reliance on a centralized FL architecture introduces vulnerabilities, such as susceptibility to DDoS attacks and single points of failure. Meanwhile, ref. [15] proposes P2PFL-E, which applies FHE to peer-based aggregation, but restricts model aggregation to a single selected peer at any one time, potentially limiting scalability and resilience. A clear research gap exists in designing an IoT-based smart grid framework that fully integrates P2P federated learning with robust homomorphic encryption to ensure privacy-preserving, decentralized model training. To address this, the present work proposes a novel framework that combines P2PFL and HE techniques for secure and efficient energy consumption prediction in smart grid IoT environments.

3. Description of the Proposed Framework

Given the types of FL architecture that can be implemented, we propose an SG IoT framework that combines hierarchical and decentralized FL [1]. The key idea is to use edge devices both to perform model computations for the clients and to communicate with other edge devices in a decentralized manner. All devices use end-to-end encryption to ensure that the chain of privacy starts with the client [1].

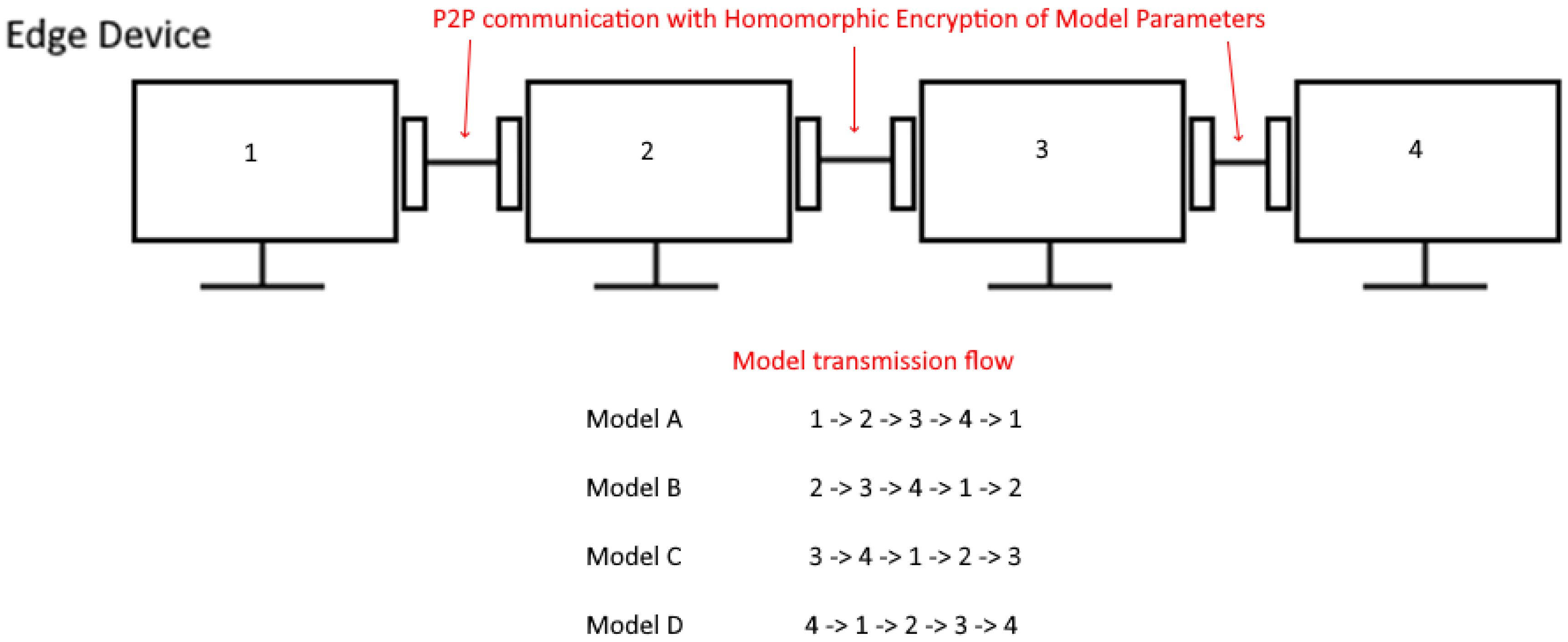

Our proposed framework is based on P2PFL principles (see Figure 1), in which edge devices connect to each other using P2P networking. Data exchanged between peers is encrypted using CKKS fully homomorphic encryption (FHE). Initially, a peer establishes a connection with a Central Registration Node (CRN), which acts as a repository from which any peer in the network can obtain the connection details of other peers. Likewise, any SG IoT device can connect to a specific edge device using E2E encryption. These devices act as “sensors” that collect data, which is encrypted and transmitted to the edge devices for processing and model training [11].
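The CRN's role can be sketched as a small in-memory registry. The following Python sketch is an assumption about one possible design, not the framework's implementation: the class name CentralRegistrationNode and its methods are hypothetical. It stores only connection metadata (host and port), assigns each peer a ring index on registration, and lets a peer look up the other peers and its successor in the ring.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Address = Tuple[str, int]  # (host, port) as seen by the CRN


@dataclass
class CentralRegistrationNode:
    """In-memory registry holding only connection metadata, never model data."""

    peers: List[Address] = field(default_factory=list)

    def register(self, host: str, port: int) -> int:
        """Register a peer (idempotently) and return its ring index."""
        entry = (host, port)
        if entry not in self.peers:
            self.peers.append(entry)
        return self.peers.index(entry)

    def query_peers(self, index: int) -> List[Address]:
        """Connection details of every registered peer except the caller."""
        return [p for i, p in enumerate(self.peers) if i != index]

    def successor(self, index: int) -> Address:
        """Next peer in ring order, to which encrypted models are forwarded."""
        return self.peers[(index + 1) % len(self.peers)]


if __name__ == "__main__":
    crn = CentralRegistrationNode()
    print(crn.register("10.0.0.1", 5000), crn.register("10.0.0.2", 5001))  # 0 1
    print(crn.successor(1))  # ('10.0.0.1', 5000)
```

A peer that registers twice receives its original index, which keeps the ring order stable across reconnections.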

3.1. Framework Architecture

The proposed framework (Figure 1a) is designed such that each SG IoT device (e.g., a smart meter) is connected, in parallel with other SG IoT devices, to an edge device in a secure manner. Each IoT device establishes a connection with its respective edge device using public-key cryptography for end-to-end encryption and sends encrypted power consumption data over it for model training (see Figure 1b). Before the exchange, the IoT device perturbs the power consumption data using Differential Privacy (DP). For public-key cryptography, we utilize Hypertext Transfer Protocol Secure (HTTPS), i.e., the Hypertext Transfer Protocol (HTTP) secured with Transport Layer Security (TLS), which strengthens security by preventing attacks such as Man-In-The-Middle (MITM) attacks and ensures E2E encryption on both the client and server side (client-to-client in a P2P context). In Figure 1c, each edge device preprocesses the received data and merges it into a single dataset to feed into an ML model. This model is then trained and encrypted for distribution to other edge devices in the network, which construct the global model in a privacy-preserving manner using a modified FL aggregation algorithm with FHE support. The edge device then connects (using HTTPS) to the CRN both to register itself and to query other edge devices, with which it exchanges FHE-encrypted models in a round-robin fashion (see Figure 2). Model aggregation is performed on the exchanged models as they circulate, until each model finds its way back to its originating device, where it is decrypted and yields the global model. This global model is then used to forecast future power consumption in the grid on a per-household basis, presented through data visualization (see Figure 3).
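The text does not state which DP mechanism the IoT devices apply. As one common choice, the sketch below assumes the Laplace mechanism, which adds noise drawn from Laplace(0, Δ/ε) to each reading, where Δ is the sensitivity of a single reading and ε is the privacy budget; both values, and the helper names perturb_readings and laplace_noise, are hypothetical. Laplace noise is sampled as the scaled difference of two unit-rate exponential draws, which has exactly the Laplace distribution.

```python
import random
from typing import List, Optional, Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample: scale times the difference of two Exp(1) draws."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def perturb_readings(readings: Sequence[float], sensitivity: float,
                     epsilon: float, seed: Optional[int] = None) -> List[float]:
    """Laplace mechanism applied independently to each reading (assumed DP variant)."""
    if sensitivity <= 0 or epsilon <= 0:
        raise ValueError("sensitivity and epsilon must be positive")
    rng = random.Random(seed)
    scale = sensitivity / epsilon  # larger epsilon means less noise and weaker privacy
    return [x + laplace_noise(scale, rng) for x in readings]


if __name__ == "__main__":
    print(perturb_readings([0.42, 0.37, 1.05], sensitivity=1.0, epsilon=0.5, seed=7))
```

Because each reading is perturbed independently, the total privacy loss over a series of readings composes across those readings; whether ε is budgeted per reading or per series is a design decision this sketch leaves open.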

3.2. Framework Threat Model

The edge devices and the CRN are honest-but-curious entities: they follow the protocol specifications but may attempt to infer individual households’ power consumption data. The households are fully trusted entities, as their smart meters cannot be altered and their data cannot be tampered with. External entities are malicious and may attempt to intercept, read, and modify data to influence the federated power consumption model. In our framework, since DP is applied to perturb the power consumption data from the households, communication between the households and edge devices reveals no more about individual consumption than the DP guarantee permits. Likewise, since the models are homomorphically encrypted while they are exchanged between edge devices, model data is not exposed in communication between edge devices, and the CRN handles only connection details rather than model data.

4. Theoretical Contribution to Fully Homomorphic Encryption

The theoretical foundation of our work is the development of a smart grid IoT framework by integrating P2PFL architecture and homomorphic encryption (HE) principles. The proposed framework is discussed in detail in Section 3. Another theoretical aspect of our work is the development of a fully HE technique. To this end, we mathematically derive efficient FHE encryption (see Equations (1)–(6)).

Specifically, we utilize the CKKS encryption scheme [12] to encrypt floating-point values (used heavily by tensors, which represent the model parameters as matrices). We opt for CKKS over the Brakerski–Gentry–Vaikuntanathan (BGV) encryption scheme because CKKS is much better at efficiently encrypting floating-point values, which are allowed to accumulate minor errors, resulting in approximate value encryption [16]. BGV, in contrast, performs exact arithmetic and is therefore better suited to cases where numerical accuracy and plaintext integrity must be preserved throughout the encryption stage, although this severely limits plaintext storage under hard memory constraints. Because numerical accuracy is not of utmost importance for ML workloads, CKKS is the better fit for encrypting values such as model parameters. Equation (1) transforms a tensor from matrix form to vector form using the get_tensor_as_vector function for more efficient FHE encryption.

To perform FHE encryption on an entire ML model, we need to encrypt all relevant model parameters so that no malicious actor can intercept and decipher any weight values, which could lead to data leakage in a secure setting. To achieve this, we encrypt every tensor that represents model parameters in an ML model. However, this can be a very expensive operation for tensors with dimensions larger than 784 × 69. Initial test results show that memory usage skyrockets when encrypting tensors of these dimensions, exceeding 4 GB, which makes the approach unsuitable for workloads involving especially large models with numerous parameters. As an optimization, we derive a mathematical model (see Equation (1)) that transforms each tensor using a mapping function that maps the tensor to a vector and stores its shape in a separate vector. This allows us to invert the mapping later, when we decrypt the encrypted tensor, by first creating a one-dimensional tensor and then reshaping it using the shape information recorded during the initial encryption step.
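The mapping described above, and its inverse, can be sketched as follows. In PyTorch the same effect is obtained with torch.flatten() and Tensor.reshape(shape); this sketch instead uses nested Python lists so that it runs without dependencies. The function name get_tensor_as_vector comes from the text, but this body is an assumption about what it does, and vector_to_tensor is a hypothetical name for the inverse mapping.

```python
from math import prod
from typing import Any, List, Tuple


def get_tensor_as_vector(tensor: Any) -> Tuple[List[float], List[int]]:
    """Map a nested-list tensor to (row-major vector, shape vector)."""
    shape: List[int] = []
    level = tensor
    while isinstance(level, list):  # record one length per dimension
        shape.append(len(level))
        level = level[0] if level else None
    vector: List[float] = []

    def walk(node: Any) -> None:
        if isinstance(node, list):
            for child in node:
                walk(child)
        else:
            vector.append(float(node))

    walk(tensor)
    return vector, shape


def vector_to_tensor(vector: List[float], shape: List[int]) -> Any:
    """Inverse mapping: rebuild the nested tensor from the vector and its shape."""
    if len(vector) != prod(shape):
        raise ValueError("vector length does not match shape")
    if not shape:
        return vector[0]  # scalar

    def build(offset: int, dims: List[int]) -> Any:
        if len(dims) == 1:
            return vector[offset:offset + dims[0]]
        stride = prod(dims[1:])  # elements per slice along the first axis
        return [build(offset + i * stride, dims[1:]) for i in range(dims[0])]

    return build(0, shape)


if __name__ == "__main__":
    v, s = get_tensor_as_vector([[1, 2, 3], [4, 5, 6]])
    print(v, s)  # [1.0, 2.0, 3.0, 4.0, 5.0, 6.0] [2, 3]
```

Only the flat vector would be encrypted; the shape vector travels alongside it in plaintext so the receiver can apply the inverse mapping after decryption.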

It is vital that the shape information is kept and exchanged, as it is the only way to restore a tensor via the inverse mapping once the initial mapping transformation has occurred. In our setting, all peers share the same model hyperparameters, which specify the number of parameters in the model as well as the shape of each tensor that represents them. The shape information does not need to be encrypted, because it reveals nothing about the parameter values, which are the sensitive content during model aggregation. Equation (2) performs vector addition of an encrypted tensor with an unencrypted tensor, the latter having been temporarily transformed into a vector using the mapping function defined in Equation (1).

Each peer generates a public key and a secret key and encrypts its model parameters with its own public key. The resulting ciphertext is then exchanged with other peers (while in turn retrieving the other peers' ciphertexts containing their model parameters), and the peers apply a variation of FHE that sums and multiplies (dividing via multiplication by an inverse) so that each can compute the global model parameters. Equation (3) computes a shared ciphertext from a list of ciphertexts by applying a specific order of transformations (i.e., addition). In Equation (4), we compute the shared model by decrypting the shared ciphertext and averaging the N models, where N is the number of peers. In this example, these transformations compute the global model ciphertext using the Federated Averaging (FedAvg) algorithm [17].
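Under assumed notation (the symbols below are illustrative and are not guaranteed to match Equations (1)–(4)), the steps above can be summarized as follows. Here T is a tensor of shape d₁ × ⋯ × d_k, φ is the tensor-to-vector mapping with shape vector s, ⊕ denotes homomorphic addition of a plaintext vector to a ciphertext, θ_j are the flattened parameters of peer j for j = 0, …, N−1, and peer i holds the key pair (pk_i, sk_i).

$$
\begin{aligned}
\phi(T) &= (\mathbf{v}, \mathbf{s}), \qquad \mathbf{v} \in \mathbb{R}^{d_1 d_2 \cdots d_k}, \quad \mathbf{s} = (d_1, \dots, d_k), \qquad \phi^{-1}(\mathbf{v}, \mathbf{s}) = T \\
\mathrm{Dec}_{sk}\big(\mathrm{Enc}_{pk}(\mathbf{v}_1) \oplus \mathbf{v}_2\big) &\approx \mathbf{v}_1 + \mathbf{v}_2 \\
c_i &= \mathrm{Enc}_{pk_i}(\theta_i) \oplus \theta_{(i+1) \bmod N} \oplus \cdots \oplus \theta_{(i+N-1) \bmod N} \\
\theta_{\mathrm{global}} &= \frac{1}{N}\,\mathrm{Dec}_{sk_i}(c_i) \approx \frac{1}{N} \sum_{j=0}^{N-1} \theta_j
\end{aligned}
$$

The approximation signs reflect the approximate arithmetic of CKKS; with an exact scheme they would be equalities.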

Once all the tensors representing the model parameters have been encrypted, we transmit the encrypted tensors in a round-robin fashion to each peer within the P2P network and perform aggregation using a variant of the FedAvg algorithm [17]. Each peer adds its own local model parameters to the encrypted tensors representing another peer's local model parameters (using Equation (3)) and then passes the encrypted model on to the next peer in round-robin fashion (see Figure 2), until the encrypted model returns to the peer that initiated the aggregation request. The initiating peer then decrypts the encrypted model, obtaining the sum of all peer model parameters in a decrypted state, i.e., tensors representing the sum of all model parameters in the P2P network. We then divide each of these tensors by the number of models that were summed (see Equation (4)) to obtain the global FL model, without any risk of model data leakage at any stage of the aggregation process while the model is in the possession of a different peer. This process is repeated for each peer in the network, allowing every peer to derive the same global model while maintaining both security and privacy within the network.
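The round-robin aggregation can be simulated end to end in a few lines. The sketch below is illustrative only: it swaps in a toy, insecure Paillier scheme (additively homomorphic, exact integer arithmetic) for CKKS, encodes floating-point weights as fixed-point integers with an assumed scale of 10^6, and uses hypothetical function names. The initiating peer encrypts its own parameters, every other peer adds its plaintext parameters to the ciphertext in ring order, and the initiator decrypts the sum and divides by the number of peers.

```python
import math
import secrets
from typing import List, Sequence, Tuple

# Toy Paillier parameters: public Mersenne primes, for illustration only (insecure).
P, Q = 2**31 - 1, 2**61 - 1
SCALE = 10**6  # assumed fixed-point scale for encoding float weights as integers


def keygen() -> Tuple[int, Tuple[int, int]]:
    n = P * Q
    lam = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P-1, Q-1)
    return n, (lam, pow(lam, -1, n))  # mu = lam^-1 mod n since g = n + 1


def encrypt(n: int, m: int) -> int:
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            return (1 + (m % n) * n) * pow(r, n, n2) % n2


def add_plain(n: int, c: int, m: int) -> int:
    """Ciphertext + plaintext: multiply by g^m = 1 + m*n (mod n^2)."""
    return c * (1 + (m % n) * n) % (n * n)


def decrypt(n: int, sk: Tuple[int, int], c: int) -> int:
    lam, mu = sk
    m = (pow(c, lam, n * n) - 1) // n * mu % n
    return m - n if m > n // 2 else m  # recover negative values


def round_robin_fedavg(local_weights: Sequence[Sequence[float]],
                       initiator: int) -> List[float]:
    """Aggregate every peer's flattened weights around the ring, then average."""
    peers = len(local_weights)
    n, sk = keygen()  # key pair of the initiating peer
    cts = [encrypt(n, round(w * SCALE)) for w in local_weights[initiator]]
    for step in range(1, peers):  # forward to the next peer in ring order
        peer = (initiator + step) % peers
        cts = [add_plain(n, c, round(w * SCALE))
               for c, w in zip(cts, local_weights[peer])]
    # Back at the initiator: decrypt the sum, undo the scaling, divide by N.
    return [decrypt(n, sk, c) / SCALE / peers for c in cts]


if __name__ == "__main__":
    weights = [[0.5, -1.0], [0.7, -0.8], [0.3, -1.2]]  # three peers, two parameters
    print([round_robin_fedavg(weights, i) for i in range(len(weights))])
    # [[0.5, -1.0], [0.5, -1.0], [0.5, -1.0]]
```

Running the ring once per initiator, as in the usage lines, mirrors the text's observation that every peer derives the same global model; intermediate peers only ever handle ciphertexts.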

6. Results and Discussion

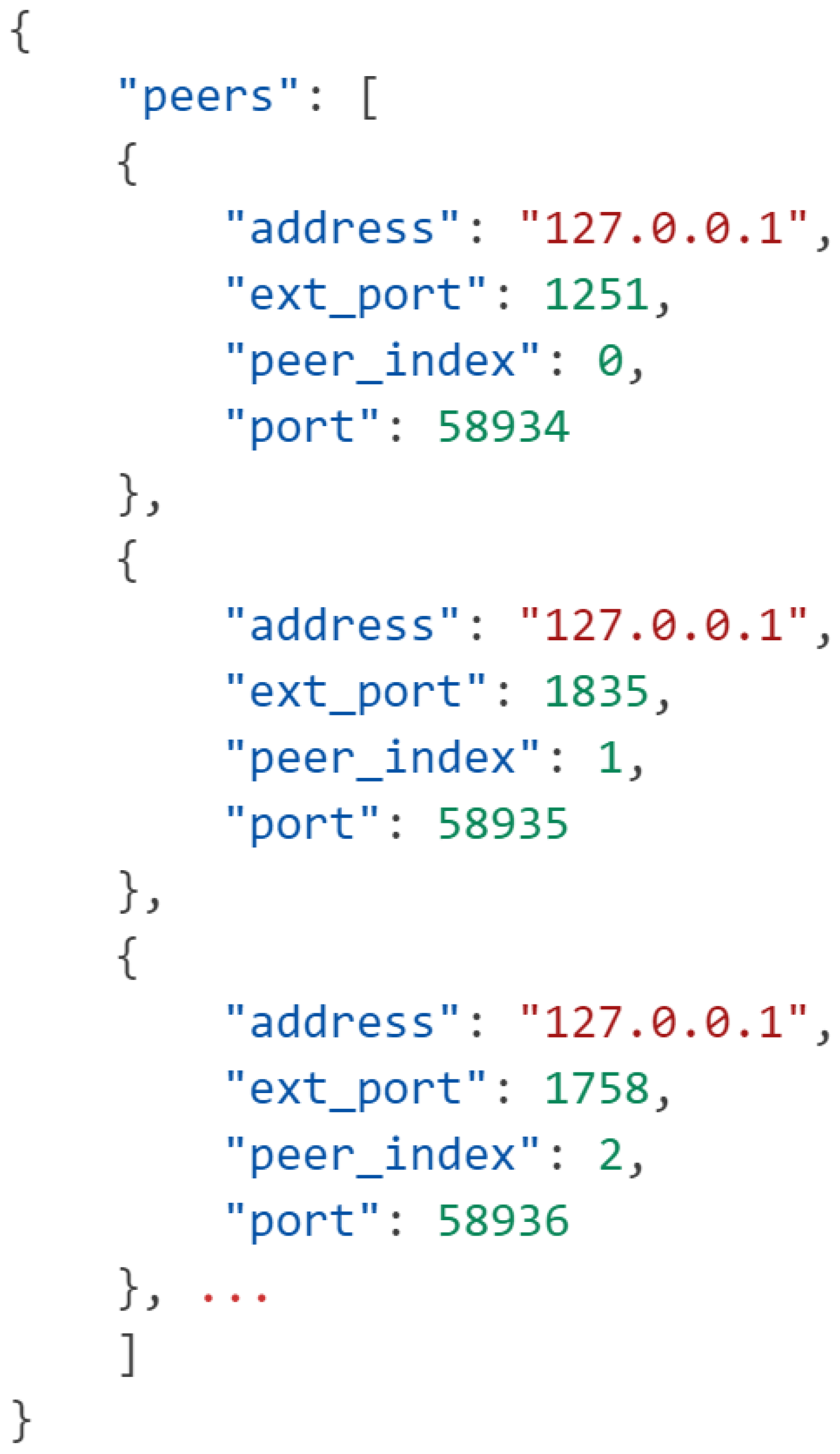

We observe that the framework successfully computes an FL model from 100 separate ML models running on separate instances that communicate through the previously established P2P architecture (see Figure 6). The CRN registers the address and port from which each peer established a connection and receives the peer's communication port from the client itself. Additionally, the CRN determines each peer's index relative to the others to streamline the model aggregation process later. Because establishing the P2P architecture requires little information, we eliminate any personally identifiable information that could be used to link a client to a data source, thus preserving user privacy. The average runtime per peer is 25 s, of which 0.08% is spent performing CKKS FHE, 82.66% training local models, and the remaining 17.26% on model aggregation and prediction.

On scalability, we validate the system’s performance for 100 peers. In principle, the framework can scale to thousands of peers sharing one CRN, although network congestion between peers would become a major issue due to our round-robin approach to model aggregation. In simulation, the size of the model parameters after encryption and encoding is measured in megabytes, and this payload is distributed across peers during model aggregation with the round-robin approach. Equation (5) gives the resulting volume of model data transmitted per peer, and Equation (6) extrapolates this to all 100 peers, yielding network-wide transmission costs measured in gigabytes.
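Equations (5) and (6) are not reproduced here, so the following Python sketch only illustrates how round-robin traffic grows under an explicitly assumed cost model: each of N peers initiates one ring pass of its encrypted model, each pass makes N hops (the last hop returns the ciphertext to its initiator), and each hop carries one ciphertext of S MB. Under these assumptions each peer sends N·S MB and the network carries N²·S MB per aggregation round. The function name and the cost model are hypothetical and may differ from the paper's equations.

```python
from typing import Tuple


def round_robin_traffic_mb(model_mb: float, peers: int) -> Tuple[float, float]:
    """Return (MB sent per peer, MB carried network-wide) for one aggregation round.

    Assumed cost model (hypothetical, not the paper's Equations (5)-(6)):
    every peer starts one ring pass of its encrypted model; a pass makes `peers`
    hops (the last one returns the ciphertext to its initiator), so each peer
    forwards `peers` ciphertexts of `model_mb` MB each.
    """
    if peers < 1 or model_mb < 0:
        raise ValueError("need peers >= 1 and model_mb >= 0")
    per_peer_mb = peers * model_mb
    total_mb = peers * per_peer_mb  # grows quadratically with the number of peers
    return per_peer_mb, total_mb


if __name__ == "__main__":
    # Purely illustrative 1 MB ciphertext, not the size measured in the simulation.
    print(round_robin_traffic_mb(1.0, 100))  # (100.0, 10000.0)
```

With a purely illustrative 1 MB ciphertext and 100 peers, this model gives 100 MB per peer and 10,000 MB (about 10 GB) network-wide; the quadratic growth in N is why congestion becomes the limiting factor at thousands of peers.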

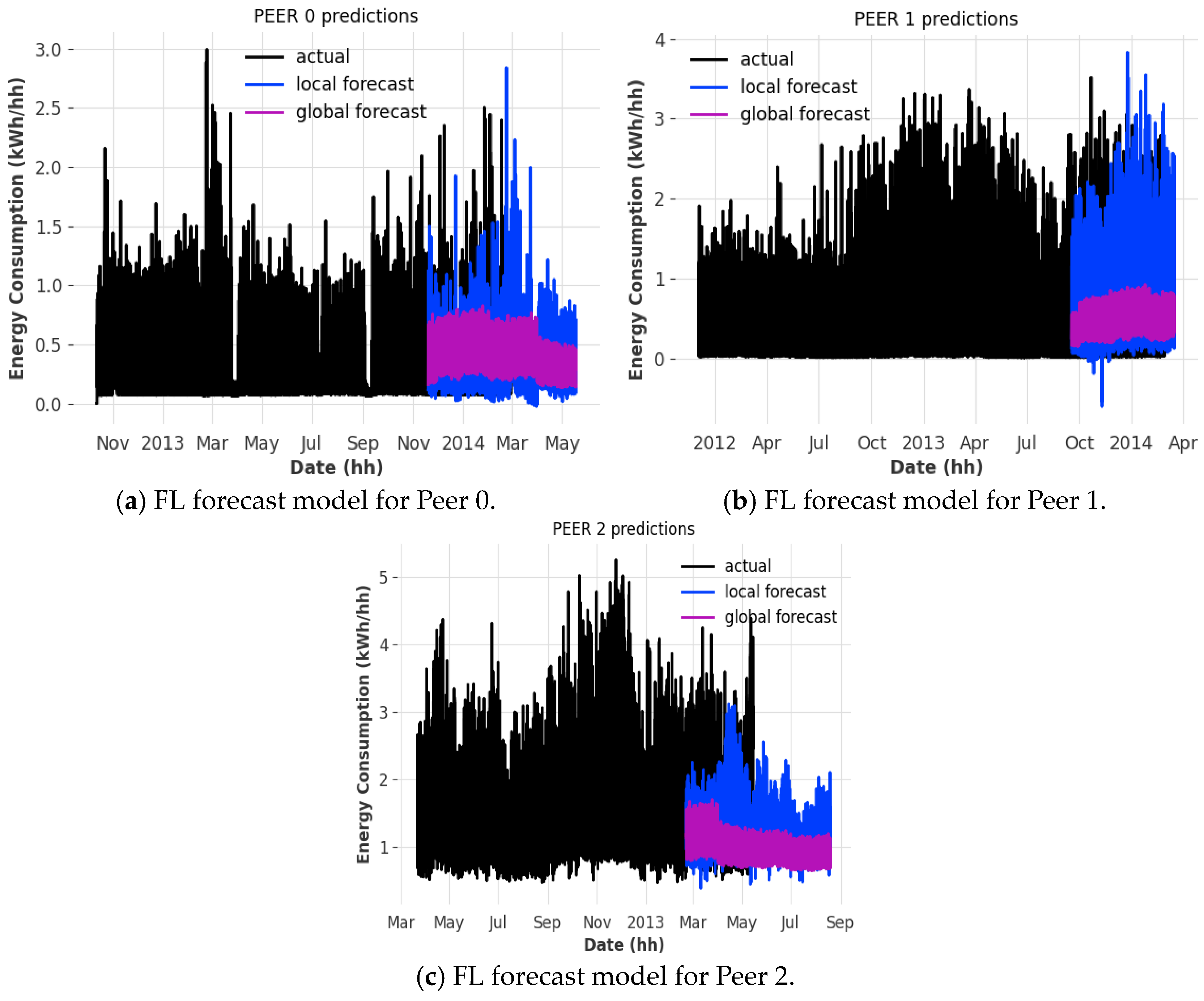

One can observe that, compared with the local forecast model, the FL model predicts power consumption trends with some degree of accuracy depending on the client it runs on, showing similar trends and patterns depending on the date (see Figure 3).

We observe that the individual losses of the local models trained before aggregation (see Figure 5) hover between 0.1% and 1%, given the simulation parameters used when training and validating the models against the test dataset. As is generally the case with FL models, the more models that participate in the FL aggregation process, the more accurate the FL model tends to be. Note also that each peer is trained with the same hyperparameters (see Table 3), which can result in non-optimal model performance because data patterns vary across datasets, and this can cause dramatic fluctuations in the output of the FL model [6]. A potential solution to this issue would be adaptive hyperparameter optimization, in which each peer derives a set of hyperparameters from its past data and uses them to train a more accurate model [6]. Furthermore, this would enhance the performance of the global model, as individual hyperparameters only affect model parameters during the training process.
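Per-peer hyperparameter selection can be sketched with a deliberately simple stand-in. The framework's actual model and hyperparameters (Table 3) are not reproduced here; instead, the sketch below assumes a simple exponential smoothing forecaster and lets each peer choose its smoothing factor alpha by minimizing one-step-ahead squared error on its own historical readings. The function names and the candidate grid are hypothetical; the same pattern would apply to, for example, a learning rate or a look-back window.

```python
from typing import Sequence, Tuple

CANDIDATE_ALPHAS: Tuple[float, ...] = (0.1, 0.3, 0.5, 0.7, 0.9)  # hypothetical grid


def one_step_mse(series: Sequence[float], alpha: float) -> float:
    """One-step-ahead mean squared error of simple exponential smoothing."""
    level = series[0]
    squared_errors = []
    for x in series[1:]:
        squared_errors.append((x - level) ** 2)  # the forecast for x is the current level
        level = alpha * x + (1 - alpha) * level  # update the smoothed level
    return sum(squared_errors) / len(squared_errors)


def select_alpha(history: Sequence[float],
                 grid: Sequence[float] = CANDIDATE_ALPHAS) -> float:
    """Each peer picks the smoothing factor that best fits its own past readings."""
    if len(history) < 2:
        raise ValueError("need at least two readings")
    return min(grid, key=lambda a: one_step_mse(history, a))


if __name__ == "__main__":
    print(select_alpha([0, 0, 0, 10, 10, 10, 10, 10]))  # 0.9 (abrupt level shift)
    print(select_alpha([5, 6, 4, 6, 4, 6, 4, 6, 4]))  # 0.1 (noisy but stationary)
```

A peer whose consumption shifts abruptly favors a large alpha, while a peer with noisy but stationary consumption favors a small one, which is the kind of per-client variation a single shared hyperparameter set cannot capture.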

6.1. Practical System Implications

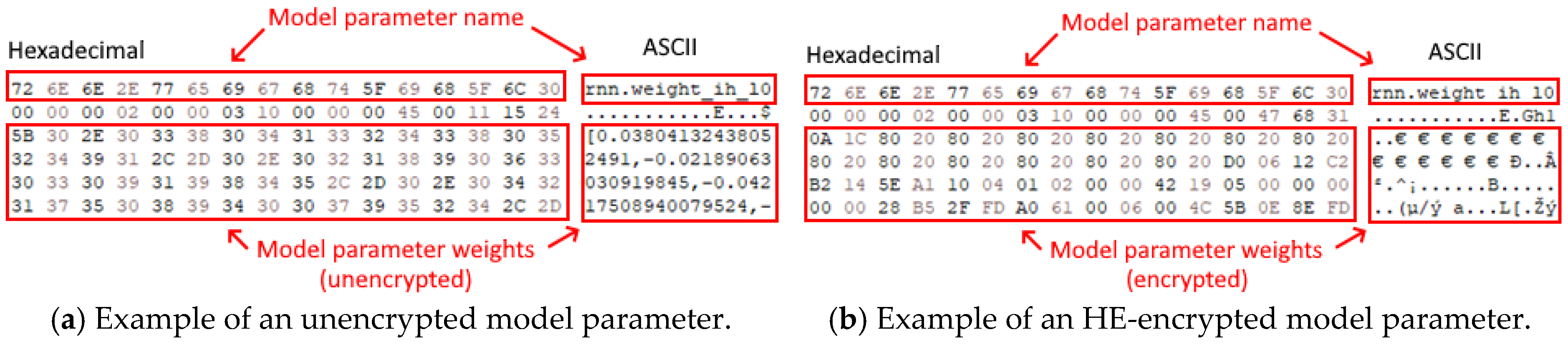

To verify that the HE implementation functions as expected, we compare the model data stored locally on a client with the same model data fetched from another client; comparing the binary output in hexadecimal form shows that the payloads differ. In Figure 7, one can observe that the model parameter “rnn.weight_ih_l0” is encrypted from its original value using HE and then distributed to other clients in the P2P network. Each client then averages its own weights against the encrypted models received from other clients using FedAvg to derive the FL model securely, preventing any data leakage from the model data.

Network planners can benefit from the P2P architecture that the framework utilizes: direct connections between peers, rather than routing through a centralized server acting as a middleman, reduce communication delays, and less infrastructure is required, which reduces cost. The catch is that individual peers need enough computational power to perform ML workloads in a reasonable amount of time. The cost will ultimately depend on the trade-off between fast, high-performance output on the one hand and power efficiency and low cost on the other. In either case, privacy preservation remains a key benefit of the P2P architecture, as a decentralized network is harder to attack than one relying on a central authoritative host. In case of a DDoS attack, the single point of failure in a P2PFL network is the CRN. Should the primary CRN be taken offline, backup CRNs can be on standby, ready to take over, as each peer holds a numbered list of backup registration node addresses to poll and connect to in order.
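The failover behavior can be sketched as an ordered poll over the CRN address list. In the sketch below, which is an assumption rather than the framework's code, the first address is the primary CRN and the rest are backups in their numbered order; try_connect is a hypothetical callable that reports whether a connection succeeded (in practice it might wrap an HTTPS request with a timeout). Network errors, including Python's built-in ConnectionError and TimeoutError (both subclasses of OSError), are treated the same as a refused connection.

```python
from typing import Callable, Optional, Sequence


def connect_to_registration_node(
    crn_addresses: Sequence[str],
    try_connect: Callable[[str], bool],
) -> Optional[str]:
    """Return the first reachable CRN address (primary first), or None."""
    for address in crn_addresses:  # index 0 is the primary, then backups in order
        try:
            if try_connect(address):
                return address
        except OSError:
            pass  # network errors count as an unreachable node
    return None  # no registration node is reachable


if __name__ == "__main__":
    reachable = {"crn-backup-2"}
    nodes = ["crn-primary", "crn-backup-1", "crn-backup-2"]
    print(connect_to_registration_node(nodes, lambda a: a in reachable))  # crn-backup-2
```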

Following HE, the memory footprint of the encrypted model parameters increases by 126% (see Figure 8) due to the additional data needed to support decryption and evaluation of the ciphertext. For IoT devices, this overhead could be reduced by splitting the model parameters into chunks and sending them for aggregation between peers one at a time, reconstructing the parameters once the round-robin cycle is complete and thereby reducing the memory consumed by HE at any one time.
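The chunking idea can be sketched on a flattened parameter vector. In the framework, each chunk would be encrypted, aggregated around the ring, and decrypted separately, so only one chunk's ciphertext needs to be held in memory at a time; that step is omitted here. The chunk size is an assumed parameter: with CKKS, a natural choice is the number of plaintext slots, which is half the polynomial modulus degree. The function names are hypothetical.

```python
from typing import Iterator, List, Sequence


def chunk_vector(vector: Sequence[float], chunk_size: int) -> Iterator[List[float]]:
    """Yield consecutive fixed-size chunks of a flattened parameter vector."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    for start in range(0, len(vector), chunk_size):
        yield list(vector[start:start + chunk_size])


def reassemble(chunks: Sequence[Sequence[float]]) -> List[float]:
    """Concatenate chunks in order once every chunk has completed its cycle."""
    out: List[float] = []
    for chunk in chunks:
        out.extend(chunk)
    return out


if __name__ == "__main__":
    params = [0.1 * i for i in range(10)]
    chunks = list(chunk_vector(params, 4))
    # Each chunk would be encrypted, aggregated around the ring, and decrypted here.
    assert reassemble(chunks) == params
    print([len(c) for c in chunks])  # [4, 4, 2]
```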

6.2. Open Issues and Future Directions

While our proposed framework uses CKKS for single-key encryption, extending it to support multi-key FHE (MKFHE) would enable secure aggregation across decentralized peers without requiring a shared secret key. This would eliminate the round-robin ciphertext exchange bottleneck and reduce network bandwidth saturation in large-scale P2P networks. Future work will explore MKFHE schemes combined with adaptive quantization techniques to minimize ciphertext size, enabling efficient multi-party computations on resource-constrained edge devices.

To address the latency introduced by HTTPS/TLS in large networks, we plan to integrate lightweight cryptographic primitives (e.g., lattice-based signatures) with hardware-assisted trusted execution environments (TEEs) for peer authentication. This hybrid approach would balance security robustness with computational efficiency, particularly for time-sensitive smart grid applications. The memory overhead of CKKS remains a barrier for low-end IoT devices. Future studies will optimize HE operations for edge hardware by leveraging model pruning, federated dropout, and FPGA-accelerated encryption kernels. Collaborations with SG testbeds and simulation environments (e.g., GridLAB-D) will validate these optimizations under real-world grid conditions, including dynamic load fluctuations and intermittent connectivity. Integrating the framework with an SG testbed and adopting MKFHE for scalable aggregation are also suggested as future work.

Moreover, in practical applications, SG systems often encounter dynamic load fluctuations and intermittent connectivity, which can disrupt P2P communication and affect FL model performance. To address these issues, future iterations of the framework will explore adaptive load-aware scheduling and asynchronous FL strategies to reduce sensitivity to fluctuating data availability and network instability. Integrating buffering and retry logic at the edge level can further enhance robustness against temporary disconnections.
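The buffering and retry logic mentioned above can be sketched as follows. This is an assumption about one reasonable design rather than a planned implementation: send_with_retry retries a failing send with exponential backoff, and OutboundBuffer queues outgoing encrypted payloads while a peer is unreachable and flushes them in order once it reappears. Both names are hypothetical, and connection failures are modeled with Python's built-in ConnectionError.

```python
import time
from collections import deque
from typing import Any, Callable, Deque, TypeVar

T = TypeVar("T")


def send_with_retry(send: Callable[[], T], max_attempts: int = 5,
                    base_delay_s: float = 0.5,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call send(); on ConnectionError wait base_delay_s * 2**attempt and retry."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return send()
        except ConnectionError:
            if attempt == max_attempts - 1:
                raise  # give up and surface the last error
            sleep(base_delay_s * 2 ** attempt)  # 0.5 s, 1 s, 2 s, ... by default
    raise AssertionError("unreachable: the loop always returns or raises")


class OutboundBuffer:
    """Hold outgoing payloads while a peer is offline and flush them in order."""

    def __init__(self) -> None:
        self.pending: Deque[Any] = deque()

    def enqueue(self, payload: Any) -> None:
        self.pending.append(payload)

    def flush(self, send: Callable[[Any], None]) -> int:
        """Send queued payloads oldest-first; stop at the first failure."""
        sent = 0
        while self.pending:
            try:
                send(self.pending[0])
            except ConnectionError:
                break  # keep this and later payloads for the next flush
            self.pending.popleft()
            sent += 1
        return sent
```

Injecting the sleep function keeps the backoff testable without real delays, and flushing oldest-first preserves the ring order of the round-robin exchange.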