A Multimodal Deep Learning Approach for Legal English Learning in Intelligent Educational Systems

Abstract

1. Introduction

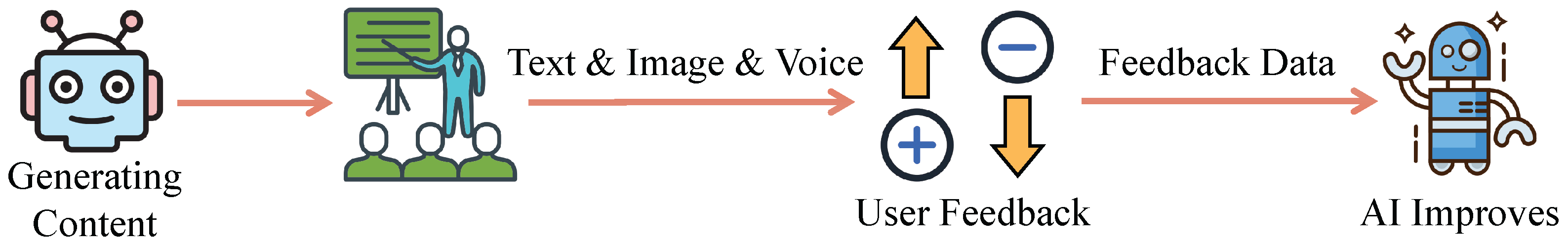

- Deep fusion of multimodal data: This system is the first to integrate image, speech, and text data for legal English question answering. Deep learning models extract and interpret legal information from images and speech, which removes the text-only input restriction of traditional systems and gives learners richer, more comprehensive learning materials.

- Cross-modal question-answering system: The system models the semantic relationship between images and text and also accepts spoken legal questions, drawing on image evidence for a combined analysis. Learners interact through speech or image input, and the system returns real-time feedback and scores for their responses, helping them master legal English usage.

- Multimodal semantic fusion model: The system combines CLIP for image–text semantic alignment, a Transformer-based ASR model for speech transcription and parsing, and Sentence-BERT for encoding legal questions. On this basis, we propose a unified vision–language–speech embedding framework that supports fine-grained semantic integration across modalities. An affordance-driven masking mechanism and a multi-level alignment strategy further direct the model’s attention to legally relevant information, enabling more accurate reasoning and feedback in complex legal scenarios.

- Real-world scenario simulation and teaching feedback: By integrating image and speech sensors, the system simulates real courtroom scenarios and case analysis tasks, providing a more practical interactive learning experience. Through this interaction, learners improve both their legal English expression and their legal reasoning in realistic legal contexts.
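As a rough illustration of the unified embedding idea in the list above, the following sketch fuses per-modality embeddings into one query vector and ranks candidate answers by cosine similarity. It is a minimal sketch under stated assumptions, not the authors' implementation: the toy 4-dimensional vectors, the equal fusion weights, and the helper names `fuse` and `rank_answers` are hypothetical, and plain weighted averaging stands in for the learned fusion.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def cosine(a, b):
    """Cosine similarity of two equal-length vectors."""
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))


def fuse(embeddings, weights):
    """Weighted average of unit-normalized modality embeddings, re-normalized.

    Assumption: simple averaging replaces the learned fusion layer.
    """
    dim = len(embeddings[0])
    total = sum(weights)
    fused = [0.0] * dim
    for emb, w in zip(embeddings, weights):
        for i, x in enumerate(l2_normalize(emb)):
            fused[i] += (w / total) * x
    return l2_normalize(fused)


def rank_answers(query, candidates):
    """Return candidate indices by descending similarity, plus the raw scores."""
    scores = [cosine(query, c) for c in candidates]
    order = sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)
    return order, scores


# Toy embeddings standing in for encoder outputs (hypothetical values).
image_emb = [0.9, 0.1, 0.0, 0.2]
text_emb = [0.8, 0.2, 0.1, 0.1]
speech_emb = [0.7, 0.0, 0.2, 0.3]
query = fuse([image_emb, text_emb, speech_emb], weights=[1.0, 1.0, 1.0])

candidates = [
    [0.85, 0.1, 0.1, 0.2],  # points in nearly the same direction as the query
    [0.0, 1.0, 0.0, 0.0],   # points in a mostly unrelated direction
]
order, scores = rank_answers(query, candidates)
print(order, [round(s, 3) for s in scores])
```

Normalizing each modality before averaging keeps one encoder with large-magnitude outputs from dominating the fused vector; a trained system would learn this weighting instead.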

2. Related Work

2.1. Multimodal Semantic Understanding Technologies

2.2. Sensor-Supported Language Learning Systems

2.3. Legal Language Education and Interactive Question-Answering Technologies

3. Materials and Methods

3.1. Data Collection

3.2. Data Preprocessing and Augmentation

3.2.1. Image Data Preprocessing and Augmentation

3.2.2. Audio Data Preprocessing and Augmentation

3.3. Proposed Method

3.3.1. Visual-Language Encoding Module

3.3.2. Speech Recognition and Semantic Parsing Module

3.3.3. Question–Answer Matching and Feedback Module

4. Results and Discussion

4.1. Hardware and Software

4.2. Hyperparameters

4.3. Evaluation Metrics

4.4. Baseline

4.5. Experimental Results of Question-Answering Models

4.6. Experimental Results of Multimodal Matching Models

4.7. Experimental Results of User Study on Learning Improvement

4.8. Generalization Evaluation on Open Multimodal Dataset

4.9. Ablation Study on Learners with Varying English Proficiency

4.10. Discussion

4.10.1. Practical Implications and Performance Analysis

4.10.2. Methodological Reflections and Scientific Contributions

4.11. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Grifoni, P.; D’ulizia, A.; Ferri, F. When language evolution meets multimodality: Current status and challenges toward multimodal computational models. IEEE Access 2021, 9, 35196–35206.

- Garner, B.A. Legal Writing in Plain English: A Text with Exercises; University of Chicago Press: Chicago, IL, USA, 2023.

- Chalkidis, I.; Jana, A.; Hartung, D.; Bommarito, M.; Androutsopoulos, I.; Katz, D.M.; Aletras, N. LexGLUE: A benchmark dataset for legal language understanding in English. arXiv 2021, arXiv:2110.00976.

- Trott, K.C. Examining the Effects of Legal Articulation in Memory Accuracy. Master’s Thesis, The University of Regina (Canada), Regina, SK, Canada, 2022.

- Danylchenko-Cherniak, O. Applying Design Thinking Principles to Modern Legal English Education. Philol. Treatises 2024, 16, 32–50.

- Pasa, B.; Sinni, G. New Frontiers of Legal Knowledge: How Design Provotypes Can Contribute to Legal Change. Ducato, R., Strowel, A., Marique, E., Eds.; 2024, p. 27. Available online: https://www.torrossa.com/en/resources/an/5826118#page=27 (accessed on 25 May 2025).

- Sheredekina, O.; Karpovich, I.; Voronova, L.; Krepkaia, T. Case technology in teaching professional foreign communication to law students: Comparative analysis of distance and face-to-face learning. Educ. Sci. 2022, 12, 645.

- Salmerón-Manzano, E.; García, A.A.; Zapata, A.; Manzano-Agugliaro, F. Generic Skills Assessment in The Master Of Laws At University Of Almeria. In Proceedings of the INTED2021 Proceedings, Online, 8–9 March 2021; IATED: Valencia, Spain, 2021; pp. 1154–1163.

- Mazer, L.; Varban, O.; Montgomery, J.R.; Awad, M.M.; Schulman, A. Video is better: Why aren’t we using it? A mixed-methods study of the barriers to routine procedural video recording and case review. Surg. Endosc. 2022, 36, 1090–1097.

- Hloviuk, I.; Zavtur, V.; Zinkovskyy, I.; Fedorov, O. The use of video and audio recordings provided by victims of domestic violence as evidence. Soc. Leg. Stud. 2024, 1, 145–154.

- Mangaroska, K.; Martinez-Maldonado, R.; Vesin, B.; Gašević, D. Challenges and opportunities of multimodal data in human learning: The computer science students’ perspective. J. Comput. Assist. Learn. 2021, 37, 1030–1047.

- Lewis, K.O.; Popov, V.; Fatima, S.S. From static web to metaverse: Reinventing medical education in the post-pandemic era. Ann. Med. 2024, 56, 2305694.

- Al-Tarawneh, A.; Al-Badawi, M.; Hatab, W.A. Professionalizing legal translator training: Prospects and opportunities. Theory Pract. Lang. Stud. 2024, 14, 541–549.

- Nhac, T.H. Enhancing Legal English skills for Law students through simulation-based activities. Int. J. Learn. Teach. Educ. Res. 2023, 22, 533–549.

- Soto, J.H.B. Technology-Enhanced Approaches for Teaching English to Law Students: Innovations and Best Practices. Cienc. Lat. Rev. Multidiscip. 2024, 8, 4567–4592.

- Xatamova, N.; Ashurov, J. The Future of Legal English Learning: Integrating AI into ESP Education. SPAST Rep. 2024, 1, 87–102.

- Peng, Y.T.; Lei, C.L. Using Bidirectional Encoder Representations from Transformers (BERT) to predict criminal charges and sentences from Taiwanese court judgments. PeerJ Comput. Sci. 2024, 10, e1841.

- Schmidt, T.; Strasser, T. Artificial intelligence in foreign language learning and teaching: A CALL for intelligent practice. Angl. Int. J. Engl. Stud. 2022, 33, 165–184.

- Tang, H.; Hu, Y.; Wang, Y.; Zhang, S.; Xu, M.; Zhu, J.; Zheng, Q. Listen as you wish: Fusion of audio and text for cross-modal event detection in smart cities. Inf. Fusion 2024, 110, 102460.

- Yang, X.; Tan, L. Multimodal knowledge graph construction for intelligent question answering systems: Integrating text, image, and audio data. Aust. J. Electr. Electron. Eng. 2025, 1–10.

- Al-Saadawi, H.F.T.; Das, B.; Das, R. A systematic review of trimodal affective computing approaches: Text, audio, and visual integration in emotion recognition and sentiment analysis. Expert Syst. Appl. 2024, 255, 124852.

- Kim, D.; Kang, P. Cross-modal distillation with audio–text fusion for fine-grained emotion classification using BERT and Wav2vec 2.0. Neurocomputing 2022, 506, 168–183.

- Yun, L. Application of English semantic understanding in multimodal machine learning. In Proceedings of the 2024 International Conference on Artificial Intelligence, Deep Learning and Neural Networks (AIDLNN), Guangzhou, China, 22–24 September 2024; pp. 233–237.

- Bandyopadhyay, D.; Hasanuzzaman, M.; Ekbal, A. Seeing through VisualBERT: A causal adventure on memetic landscapes. arXiv 2024, arXiv:2410.13488.

- Lim, J.; Kim, K. Wav2vec-vc: Voice conversion via hidden representations of wav2vec 2.0. In Proceedings of the ICASSP 2024-2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 10326–10330.

- Bonaccorsi, D. Speech-Text Cross-Modal Learning through Self-Attention Mechanisms. Ph.D. Thesis, Politecnico di Torino, Torino, Italy, 2023.

- Wang, Y.; Xiao, B.; Bouferguene, A.; Al-Hussein, M.; Li, H. Vision-based method for semantic information extraction in construction by integrating deep learning object detection and image captioning. Adv. Eng. Inform. 2022, 53, 101699.

- Ge, Y.; Ge, Y.; Liu, X.; Wang, J.; Wu, J.; Shan, Y.; Qie, X.; Luo, P. Miles: Visual bert pre-training with injected language semantics for video-text retrieval. In Proceedings of the European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2022; pp. 691–708.

- Nguyen, C.D.; Vu-Le, T.A.; Nguyen, T.; Quan, T.; Luu, A.T. Expand BERT representation with visual information via grounded language learning with multimodal partial alignment. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 5665–5673.

- Li, Y.; Liang, F.; Zhao, L.; Cui, Y.; Ouyang, W.; Shao, J.; Yu, F.; Yan, J. Supervision exists everywhere: A data efficient contrastive language-image pre-training paradigm. arXiv 2021, arXiv:2110.05208.

- Lee, J.; Kim, J.; Shon, H.; Kim, B.; Kim, S.H.; Lee, H.; Kim, J. Uniclip: Unified framework for contrastive language-image pre-training. Adv. Neural Inf. Process. Syst. 2022, 35, 1008–1019.

- Pan, X.; Ye, T.; Han, D.; Song, S.; Huang, G. Contrastive language-image pre-training with knowledge graphs. Adv. Neural Inf. Process. Syst. 2022, 35, 22895–22910.

- Pepino, L.; Riera, P.; Ferrer, L. Emotion recognition from speech using wav2vec 2.0 embeddings. arXiv 2021, arXiv:2104.03502.

- Cai, J.; Song, Y.; Wu, J.; Chen, X. Voice disorder classification using Wav2vec 2.0 feature extraction. J. Voice 2024, in press.

- Sadhu, S.; He, D.; Huang, C.W.; Mallidi, S.H.; Wu, M.; Rastrow, A.; Stolcke, A.; Droppo, J.; Maas, R. Wav2vec-c: A self-supervised model for speech representation learning. arXiv 2021, arXiv:2103.08393.

- Chen, L.; Deng, Y.; Wang, X.; Soong, F.K.; He, L. Speech bert embedding for improving prosody in neural tts. In Proceedings of the ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 6563–6567.

- Jiang, Y.; Sharma, B.; Madhavi, M.; Li, H. Knowledge distillation from bert transformer to speech transformer for intent classification. arXiv 2021, arXiv:2108.02598.

- Xie, J.; Zhao, Y.; Zhu, D.; Yan, J.; Li, J.; Qiao, M.; He, G.; Deng, S. A machine learning-combined flexible sensor for tactile detection and voice recognition. ACS Appl. Mater. Interfaces 2023, 15, 12551–12559.

- Rita, P.; Ramos, R.; Borges-Tiago, M.T.; Rodrigues, D. Impact of the rating system on sentiment and tone of voice: A Booking.com and TripAdvisor comparison study. Int. J. Hosp. Manag. 2022, 104, 103245.

- Liu, Y.; Zhang, Y.A.; Zeng, M.; Zhao, J. A novel distance measure based on dynamic time warping to improve time series classification. Inf. Sci. 2024, 656, 119921.

- Yu, S.; Fang, C.; Yun, Y.; Feng, Y. Layout and image recognition driving cross-platform automated mobile testing. In Proceedings of the 2021 IEEE/ACM 43rd International Conference on Software Engineering (ICSE), Virtual, 25–28 May 2021; pp. 1561–1571.

- al Khatib, A.; Shaban, M.S. Innovative Instruction of Law Teaching and Learning: Using Visual Art, Creative Methods, and Technology to Improve Learning. Int. J. Membr. Sci. Technol. 2023, 10, 361–371.

- Balayn, A.; Soilis, P.; Lofi, C.; Yang, J.; Bozzon, A. What do you mean? Interpreting image classification with crowdsourced concept extraction and analysis. In Proceedings of the Web Conference 2021, Ljubljana, Slovenia, 19–23 April 2021; pp. 1937–1948.

- Zhang, J.; Hu, J. Enhancing English education with Natural Language Processing: Research and development of automated grammar checking, scoring systems, and dialogue systems. Appl. Comput. Eng. 2024, 102, 12–17.

- Nypadymka, A.; Danchenko, L. From motivation to mastery: English language learning in legal education. In Proceedings of the Science and Education in the Conditions of Today’s Challenges, International Scientific and Practical Conference, Chernihiv, Ukraine, 23 May 2023; pp. 342–346.

- Danylchenko-Cherniak, O. Digital approaches in legal English education: Bridging tradition and technology. Stud. Comp. Educ. 2024, 77–83.

- Martinez-Gil, J. A survey on legal question–answering systems. Comput. Sci. Rev. 2023, 48, 100552.

- Yang, X.; Wang, Z.; Wang, Q.; Wei, K.; Zhang, K.; Shi, J. Large language models for automated q&a involving legal documents: A survey on algorithms, frameworks and applications. Int. J. Web Inf. Syst. 2024, 20, 413–435.

- Hockly, N. Artificial intelligence in English language teaching: The good, the bad and the ugly. Relc J. 2023, 54, 445–451.

- Moorhouse, B.L.; Li, Y.; Walsh, S. E-classroom interactional competencies: Mediating and assisting language learning during synchronous online lessons. Relc J. 2023, 54, 114–128.

- Bellei, C.; Muñoz, G. Models of regulation, education policies, and changes in the education system: A long-term analysis of the Chilean case. J. Educ. Chang. 2023, 24, 49–76.

- García-Cabellos, J.M.; Peláez-Moreno, C.; Gallardo-Antolín, A.; Pérez-Cruz, F.; Díaz-de María, F. SVM classifiers for ASR: A discussion about parameterization. In Proceedings of the 2004 12th European Signal Processing Conference, Vienna, Austria, 6–10 September 2004; pp. 2067–2070.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 4171–4186.

- Antol, S.; Agrawal, A.; Lu, J.; Mitchell, M.; Batra, D.; Zitnick, C.L.; Parikh, D. Vqa: Visual question answering. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2425–2433.

- Guo, W.; Wang, J.; Wang, S. Deep Multimodal Representation Learning: A Survey. IEEE Access 2019, 7, 63373–63394.

- Yang, S.; Luo, S.; Han, S.C.; Hovy, E. MAGIC-VQA: Multimodal And Grounded Inference with Commonsense Knowledge for Visual Question Answering. arXiv 2025, arXiv:2503.18491.

- Xing, Z.; Hu, X.; Fu, C.W.; Wang, W.; Dai, J.; Heng, P.A. EchoInk-R1: Exploring Audio-Visual Reasoning in Multimodal LLMs via Reinforcement Learning. arXiv 2025, arXiv:2505.04623.

- Bewersdorff, A.; Hartmann, C.; Hornberger, M.; Seßler, K.; Bannert, M.; Kasneci, E.; Kasneci, G.; Zhai, X.; Nerdel, C. Taking the next step with generative artificial intelligence: The transformative role of multimodal large language models in science education. Learn. Individ. Differ. 2025, 118, 102601.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. arXiv 2021, arXiv:2103.00020.

- Li, J.; Selvaraju, R.R.; Gotmare, A.D.; Joty, S.; Xiong, C.; Hoi, S. Align before Fuse: Vision and Language Representation Learning with Momentum Distillation. arXiv 2021, arXiv:2107.07651.

- Chuang, Y.S.; Liu, C.L.; Lee, H.Y.; Lee, L.S. SpeechBERT: An Audio-and-text Jointly Learned Language Model for End-to-end Spoken Question Answering. arXiv 2020, arXiv:1910.11559.

| Data Type | Quantity |
|---|---|
| Case Scene Images (CourtListener) | 1247 |
| Evidence Document Images (FindLaw) | 1372 |
| Legal Document Scans (Wikimedia Commons) | 1098 |
| Legal QA Text Pairs (Supreme Court/FindLaw/Harvard Law Review) | 1520 |
| Learner Response Audios (Recorded by Blue Yeti) | 1214 |

| Module Name | Input Modalities | Core Models Used | Primary Function | Output Format |
|---|---|---|---|---|
| Visual-Language Encoding Module | Case image, question text | ResNet-50, SBERT, Multi-head Attention | Extracts deep visual and textual features and generates a unified semantic representation | 1024-dim fused vector |
| Speech Recognition and Semantic Parsing | Learner’s spoken response | Transformer ASR, Diffusion Transformer | Converts audio to text and extracts legal semantic structure aligned with visual-question context | Sequence of 512-dim vectors |
| Question–Answer Matching and Feedback | Fused visual, text, and speech features | ViT-B/16, OPT-1.3B LLM, Feedback Generator | Performs multimodal answer matching and generates adaptive, interpretable feedback for learners | Matching score and feedback |
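The output formats in the module table above imply a simple data contract between the three modules. The sketch below makes that contract explicit and checks it at run time. Only the two dimensions (1024 and 512) come from the table. Everything else is an assumption made for illustration: the names `MatchResult`, `mean_pool`, `reduce_fused`, and `match`, the pair-averaging projection, the cosine-based score, the 0.8 threshold, and the feedback strings are hypothetical and do not reproduce the ViT-B/16 or OPT-1.3B components.

```python
import math
from dataclasses import dataclass
from typing import List

FUSED_DIM = 1024   # fused visual-text vector size, taken from the table
SPEECH_DIM = 512   # per-step speech vector size, taken from the table


@dataclass
class MatchResult:
    score: float    # matching score scaled to [0, 1]
    feedback: str   # learner-facing message (hypothetical wording)


def mean_pool(sequence: List[List[float]]) -> List[float]:
    """Average a non-empty sequence of equal-length vectors into one vector."""
    dim = len(sequence[0])
    return [sum(step[i] for step in sequence) / len(sequence) for i in range(dim)]


def reduce_fused(fused: List[float]) -> List[float]:
    """Stand-in for a learned projection: average adjacent pairs (1024 -> 512)."""
    return [(fused[2 * i] + fused[2 * i + 1]) / 2 for i in range(len(fused) // 2)]


def match(fused: List[float], speech_seq: List[List[float]],
          threshold: float = 0.8) -> MatchResult:
    """Check the documented shapes, then score agreement by cosine similarity."""
    if len(fused) != FUSED_DIM:
        raise ValueError(f"expected {FUSED_DIM}-dim fused vector, got {len(fused)}")
    if not speech_seq or any(len(step) != SPEECH_DIM for step in speech_seq):
        raise ValueError(f"expected a non-empty sequence of {SPEECH_DIM}-dim vectors")
    a, b = reduce_fused(fused), mean_pool(speech_seq)
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    cos = dot / norm if norm > 0 else 0.0
    score = min(1.0, max(0.0, (cos + 1) / 2))  # map [-1, 1] onto [0, 1]
    if score >= threshold:
        feedback = "Response matches the reference."
    else:
        feedback = "Response diverges from the reference; review the key terms."
    return MatchResult(score=score, feedback=feedback)


# Identical constant vectors point in the same direction, so they match fully.
result = match([1.0] * FUSED_DIM, [[1.0] * SPEECH_DIM, [1.0] * SPEECH_DIM])
print(result)
```

Validating shapes at the module boundary catches a mismatched encoder early, which is usually cheaper than debugging a silently wrong similarity score downstream.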

| Model | BLEU | ROUGE | Precision | Recall | Accuracy |
|---|---|---|---|---|---|
| Traditional ASR+SVM Classifier [52] | 0.62 | 0.65 | 0.64 | 0.61 | 0.63 |
| Image-Retrieval-based QA Model [54] | 0.68 | 0.70 | 0.69 | 0.67 | 0.68 |
| Unimodal BERT QA System [53] | 0.74 | 0.77 | 0.76 | 0.74 | 0.75 |
| Proposed Method | 0.83 | 0.86 | 0.88 | 0.85 | 0.87 |
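To make the BLEU and ROUGE columns above concrete, the following sketch computes simplified unigram versions of both on a single sentence pair. It is illustrative only: this section does not state which BLEU or ROUGE variants the authors used, so the unigram-only scope, the whitespace tokenization, the function names, and the example sentences are all assumptions.

```python
import math
from collections import Counter


def clipped_overlap(candidate, reference):
    """Count candidate tokens that also occur in the reference, clipped per token."""
    cand, ref = Counter(candidate), Counter(reference)
    return sum(min(count, ref[token]) for token, count in cand.items())


def bleu1(candidate, reference):
    """Unigram BLEU: clipped precision times the brevity penalty."""
    if not candidate:
        return 0.0
    precision = clipped_overlap(candidate, reference) / len(candidate)
    if len(candidate) >= len(reference):
        brevity_penalty = 1.0
    else:
        brevity_penalty = math.exp(1 - len(reference) / len(candidate))
    return brevity_penalty * precision


def rouge1_recall(candidate, reference):
    """ROUGE-1 recall: share of reference tokens recovered by the candidate."""
    if not reference:
        return 0.0
    return clipped_overlap(candidate, reference) / len(reference)


# Hypothetical learner answer scored against a hypothetical reference answer.
reference = "the defendant waived the right to counsel".split()
candidate = "the defendant waived counsel".split()

print(round(bleu1(candidate, reference), 4))
print(round(rouge1_recall(candidate, reference), 4))
```

In this toy pair every candidate token appears in the reference, so precision is perfect and the BLEU score is reduced only by the brevity penalty, while ROUGE-1 recall reflects the three reference tokens the learner omitted.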

| Model | Matching Accuracy | Recall@1 | Recall@5 | MRR |
|---|---|---|---|---|
| VisualBERT | 0.71 | 0.69 | 0.77 | 0.73 |
| CLIP | 0.79 | 0.77 | 0.84 | 0.80 |
| Word2Vec | 0.72 | 0.71 | 0.78 | 0.75 |
| SpeechBERT | 0.77 | 0.75 | 0.82 | 0.80 |
| LXMERT | 0.74 | 0.72 | 0.80 | 0.76 |
| ALBEF | 0.80 | 0.78 | 0.84 | 0.82 |
| BLIP | 0.81 | 0.80 | 0.86 | 0.83 |
| Proposed Method | 0.85 | 0.83 | 0.90 | 0.87 |
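The retrieval metrics in the table above, Recall@k and mean reciprocal rank (MRR), can be computed from ranked candidate lists as sketched below. This follows the standard definitions for queries with a single correct item. The authors' evaluation code is not shown in this section, so the function names and the toy rankings are assumptions.

```python
def recall_at_k(ranked_lists, gold_items, k):
    """Fraction of queries whose gold item appears among the top-k candidates."""
    hits = sum(1 for ranked, gold in zip(ranked_lists, gold_items)
               if gold in ranked[:k])
    return hits / len(gold_items)


def mean_reciprocal_rank(ranked_lists, gold_items):
    """Average of 1/rank of the gold item; a missing gold item contributes 0."""
    total = 0.0
    for ranked, gold in zip(ranked_lists, gold_items):
        if gold in ranked:
            total += 1.0 / (ranked.index(gold) + 1)
    return total / len(gold_items)


# Three toy queries; the gold answer "a" is ranked 1st, 2nd, and 3rd.
ranked = [["a", "b", "c"], ["b", "a", "c"], ["c", "b", "a"]]
gold = ["a", "a", "a"]

print(recall_at_k(ranked, gold, 1))
print(recall_at_k(ranked, gold, 2))
print(mean_reciprocal_rank(ranked, gold))
```

Recall@k only asks whether the correct item is somewhere in the top k, whereas MRR also rewards placing it higher, which is why the two columns can rank models differently.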

| Method | Understanding Improvement | Expression Improvement | Satisfaction Score |
|---|---|---|---|
| Textbook-based Teaching | 0.45 | 0.40 | 0.60 |
| Traditional Oral Practice System | 0.52 | 0.48 | 0.65 |
| Unimodal QA System | 0.63 | 0.58 | 0.72 |
| Proposed Method | 0.78 | 0.75 | 0.88 |

| Model | BLEU | ROUGE | Precision | Recall | Accuracy |
|---|---|---|---|---|---|
| Traditional ASR+SVM | 0.62 | 0.64 | 0.63 | 0.60 | 0.63 |
| Image Retrieval QA | 0.67 | 0.68 | 0.68 | 0.65 | 0.67 |
| Unimodal BERT QA | 0.73 | 0.76 | 0.75 | 0.73 | 0.73 |
| Proposed Method | 0.83 | 0.85 | 0.87 | 0.83 | 0.86 |

| Group | Understanding Improvement | Expression Improvement | Satisfaction Score |
|---|---|---|---|
| High-Level Learners | 0.81 | 0.79 | 0.89 |
| Medium-Level Learners | 0.76 | 0.74 | 0.86 |
| Low-Level Learners | 0.72 | 0.69 | 0.83 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Y.; Huang, C.; Gao, S.; Lyu, Y.; Chen, X.; Liu, S.; Bao, D.; Lv, C. A Multimodal Deep Learning Approach for Legal English Learning in Intelligent Educational Systems. Sensors 2025, 25, 3397. https://doi.org/10.3390/s25113397
