An Object Feature-Based Recognition and Localization Method for Wolfberry

Abstract

1. Introduction

- A novel feature fusion algorithm is proposed that uses the wavelet transform to achieve pixel-level fusion of features from different color spaces.

- A K-means clustering-based method in the Lab color space is proposed to accurately locate the grasping points on the branches.

- A coordinate prediction method for branch clamping points is proposed that derives their positions from the position of the fruit, offering high applicability and robustness.

2. Materials and Methods

2.1. Image Collection

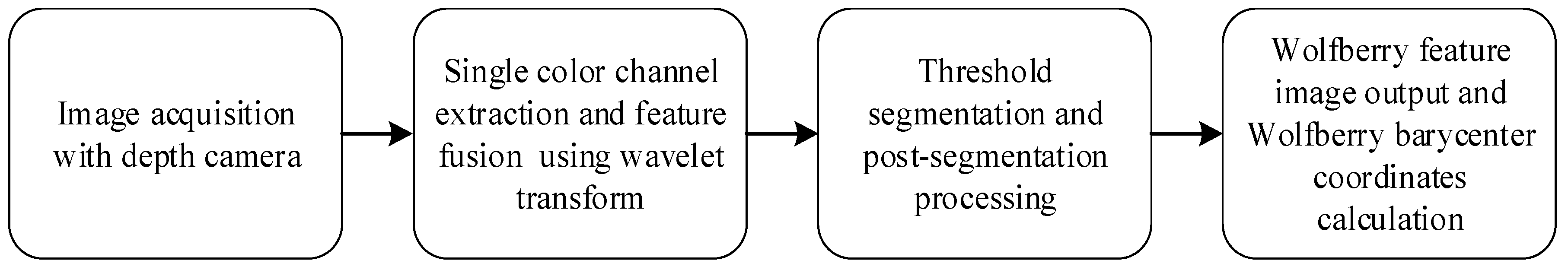

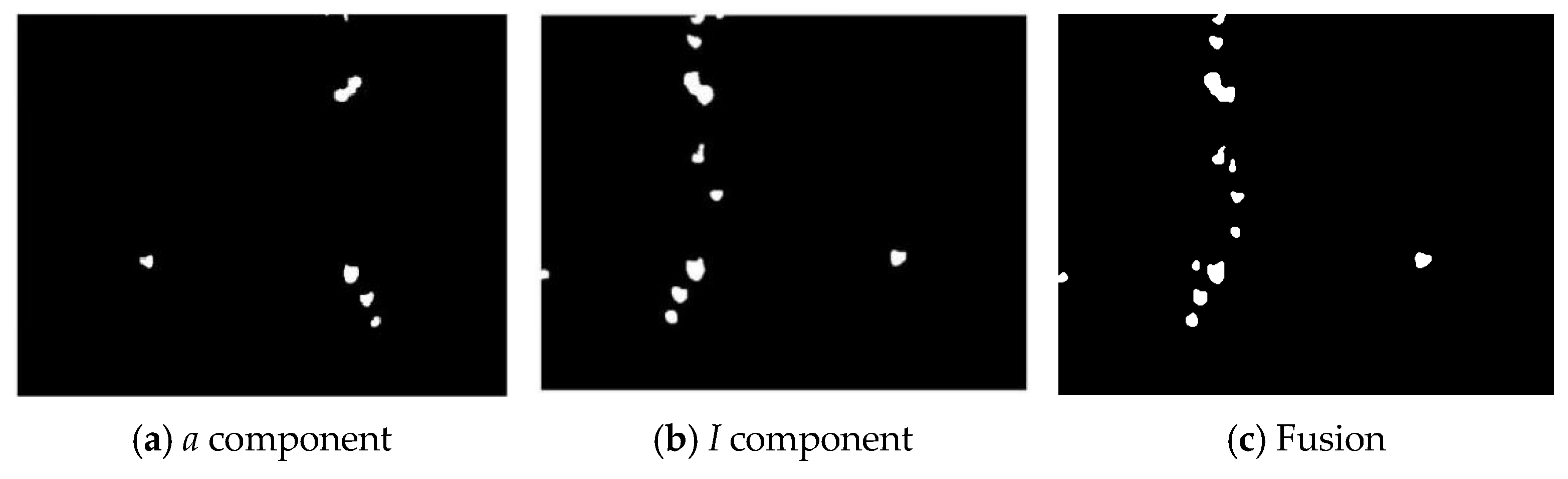

2.2. Image Segmentation of Wolfberry Fruits Based on Feature Fusion

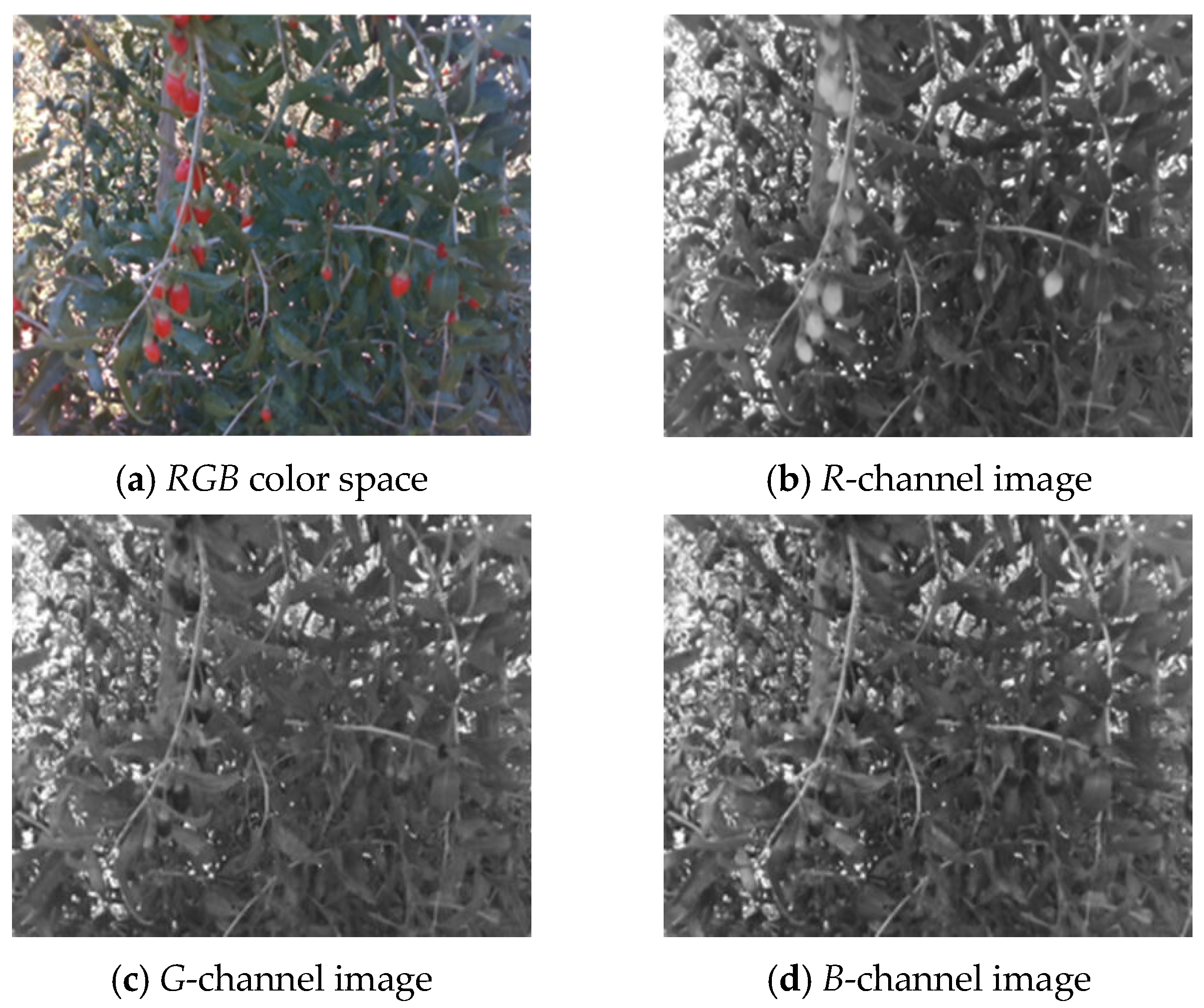

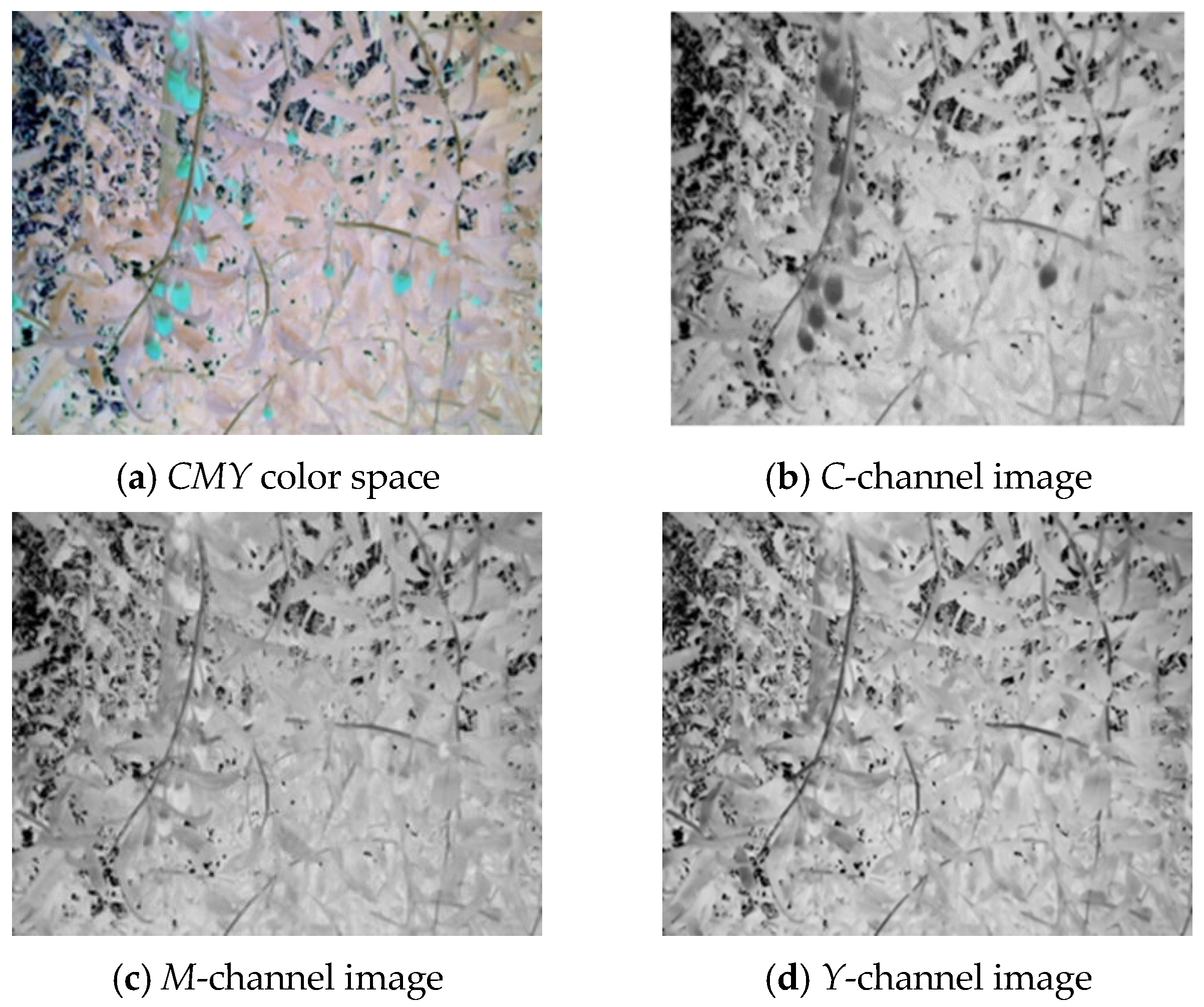

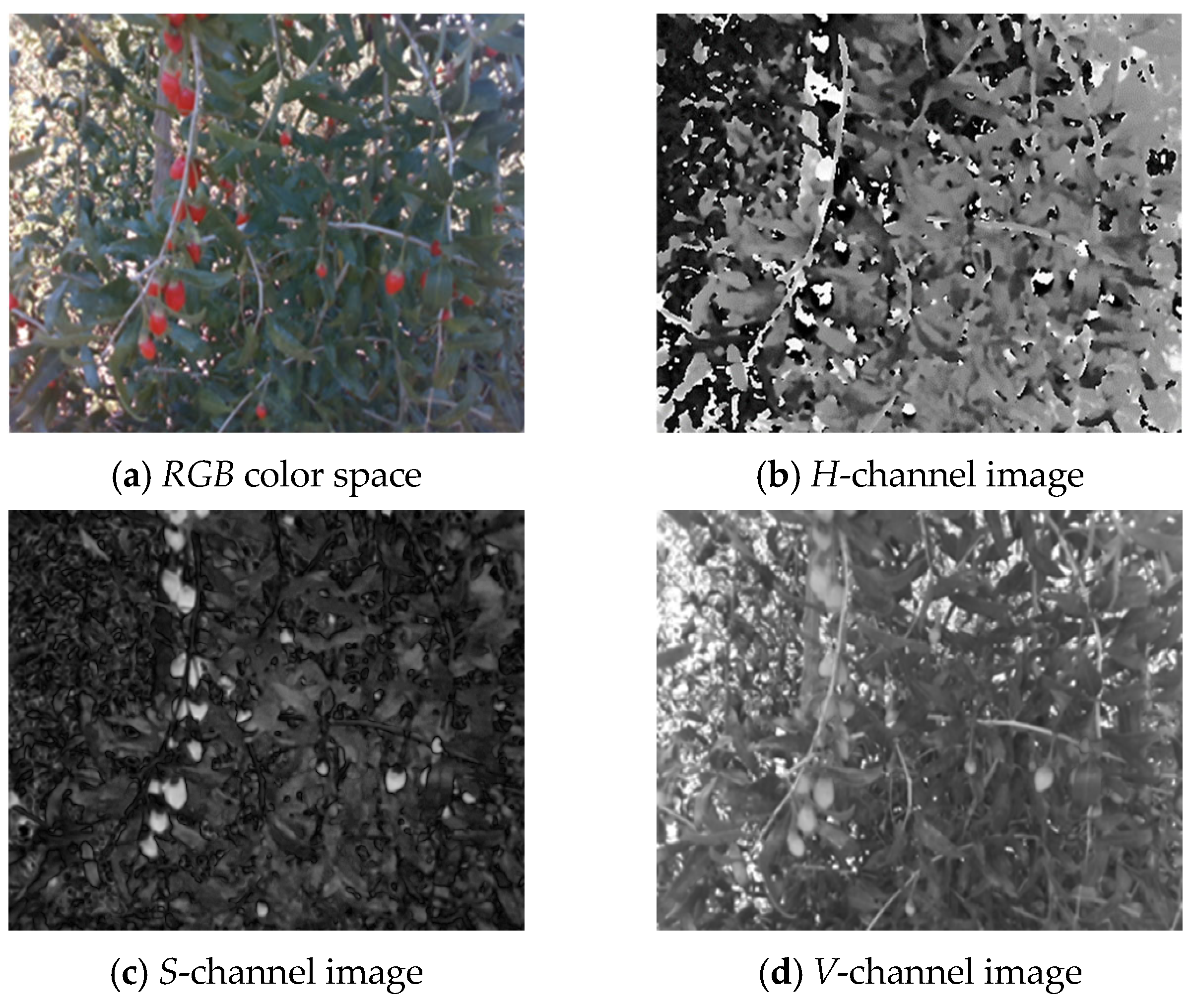

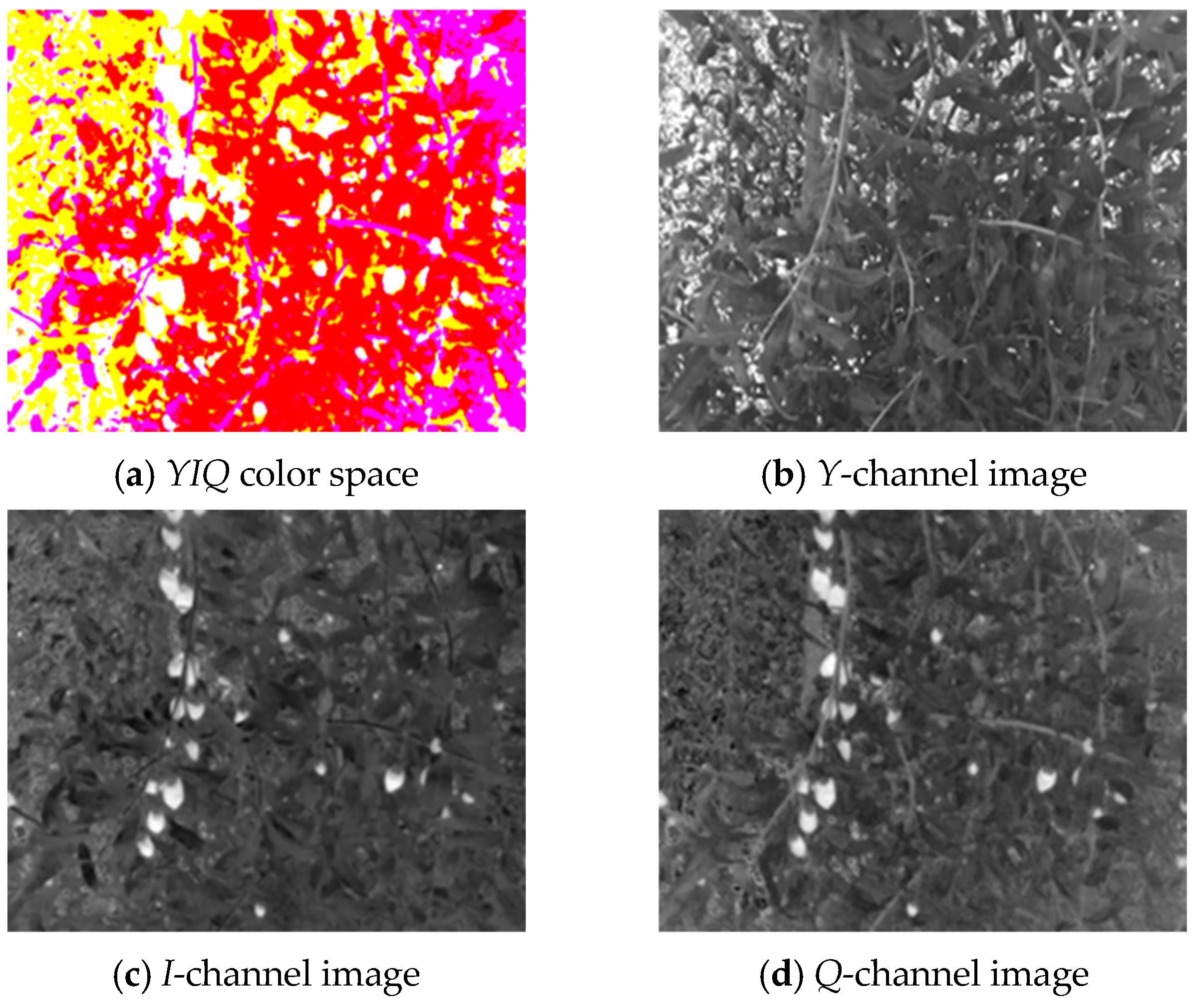

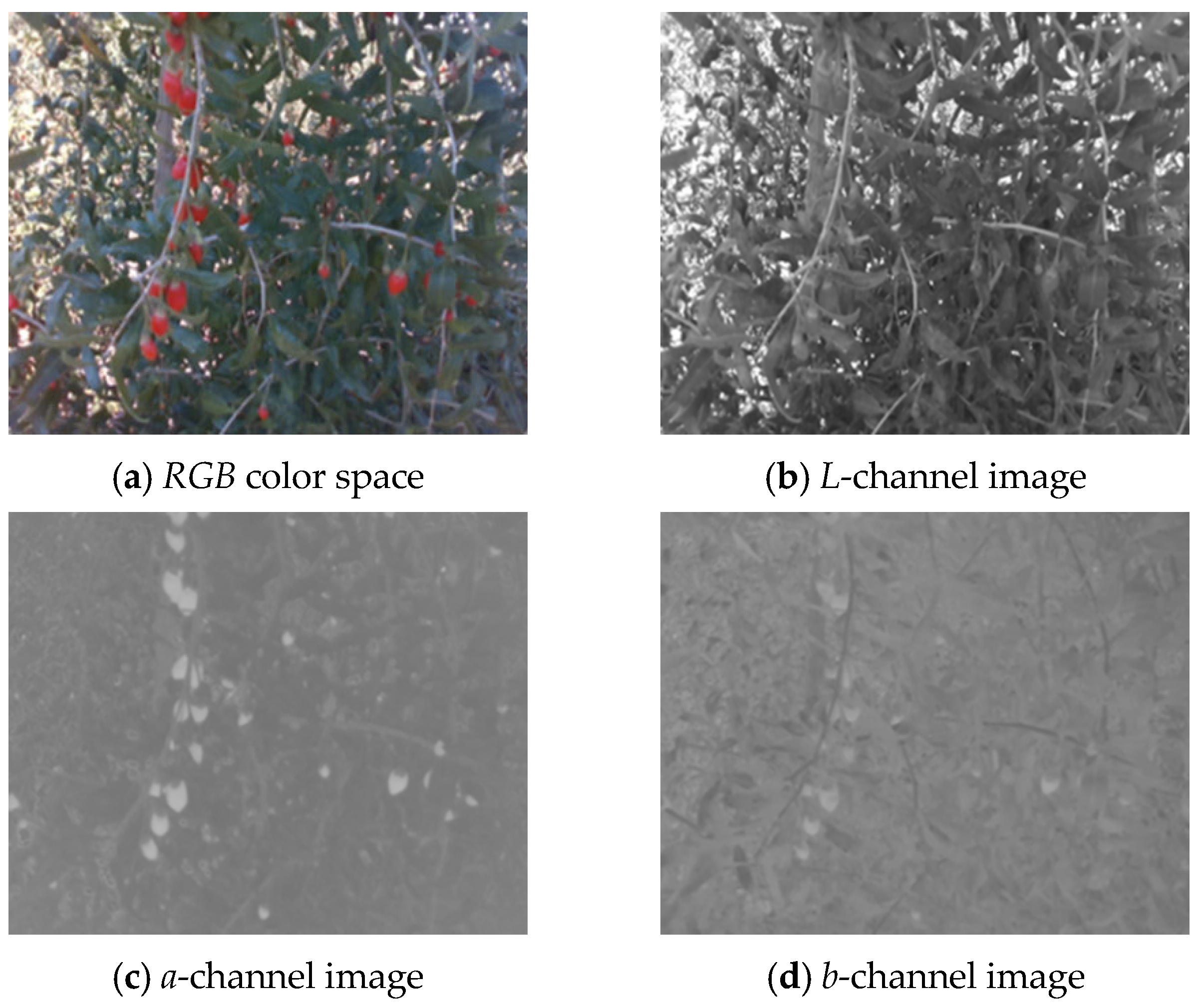

2.2.1. Feature Image Extraction Based on Color Space Models

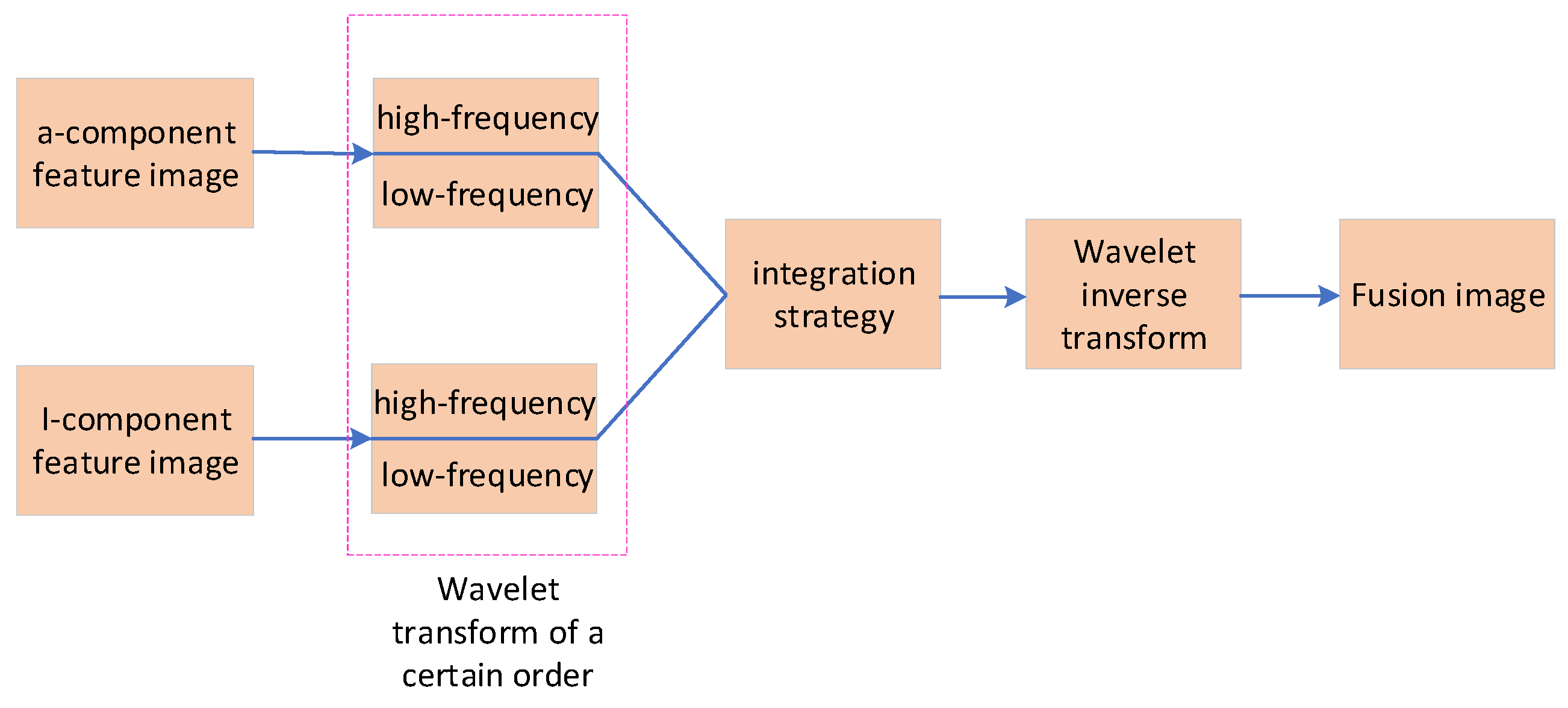

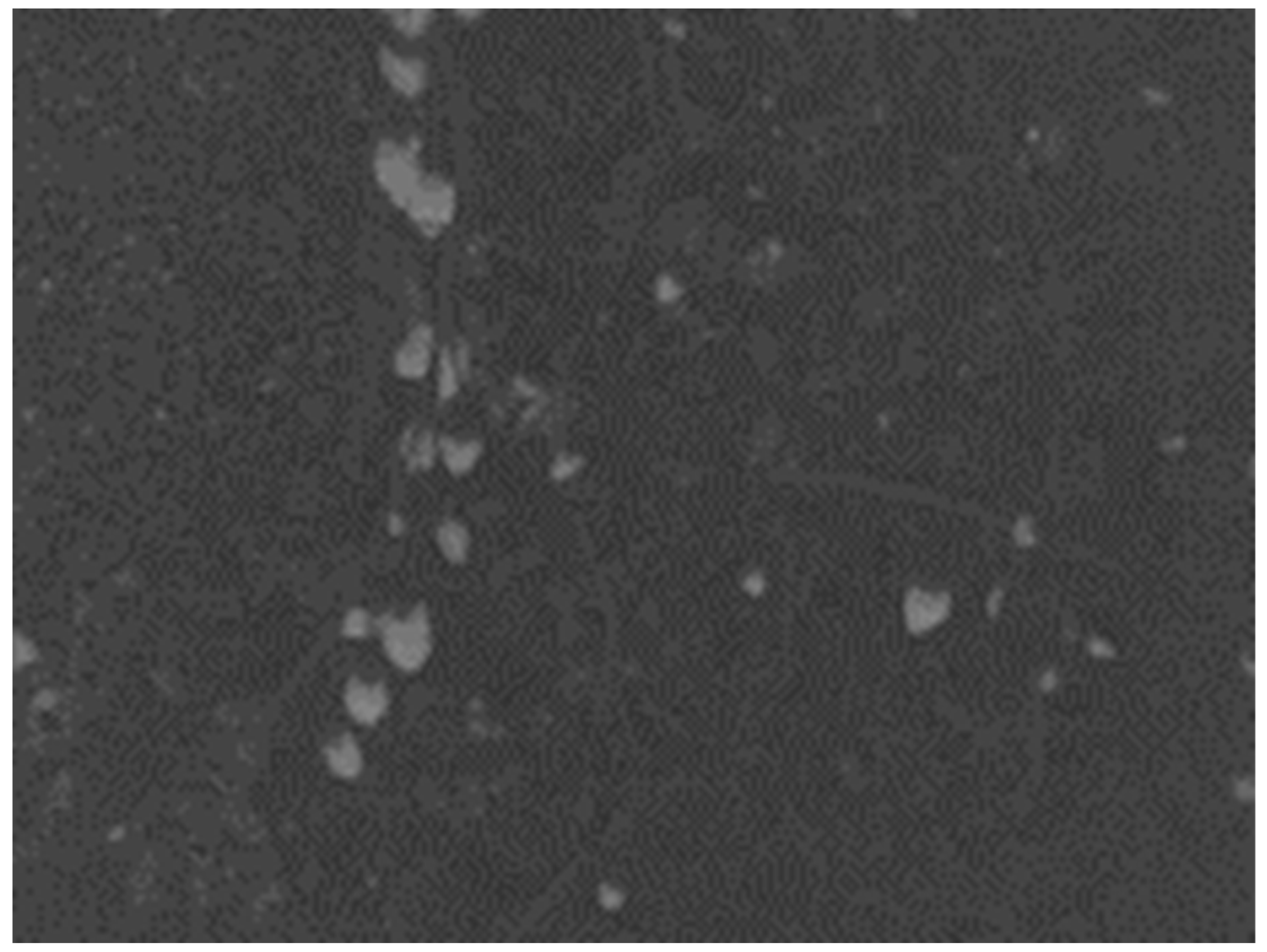
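The two feature images used in this work, the a-component of Lab and the I-component of YIQ, can be illustrated with a short pure-Python sketch. It assumes 8-bit sRGB input, converts to CIE L\*a\*b\* through XYZ with the D65 white point, and uses the common NTSC coefficients for YIQ; the exact conversion constants and output scaling used in this work are not stated here, and the function names (`rgb_to_lab`, `rgb_to_yiq`, `feature_images`) are hypothetical. Reddish pixels such as ripe fruit give positive a\* and I values, while green foliage typically gives negative ones, which is what makes these channels useful for separating fruit from background.

```python
# Sketch: per-pixel extraction of the a-component (CIE L*a*b*) and the
# I-component (YIQ) feature images.
# Assumptions: 8-bit sRGB input, D65 white point, NTSC YIQ coefficients.


def srgb_to_linear(c):
    """Undo the sRGB gamma curve for one 8-bit channel value (0-255)."""
    c = c / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def rgb_to_lab(r, g, b):
    """Convert one 8-bit sRGB pixel to CIE L*a*b* (D65)."""
    rl, gl, bl = (srgb_to_linear(v) for v in (r, g, b))
    # Linear sRGB -> CIE XYZ
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl
    xn, yn, zn = 0.95047, 1.0, 1.08883  # D65 reference white

    def f(t):
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def rgb_to_yiq(r, g, b):
    """Convert one RGB pixel to NTSC YIQ (same scale as the input)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    i = 0.596 * r - 0.274 * g - 0.322 * b
    q = 0.211 * r - 0.523 * g + 0.312 * b
    return y, i, q


def feature_images(image):
    """Return (a_img, i_img) for an image given as rows of (R, G, B) tuples."""
    a_img = [[rgb_to_lab(*px)[1] for px in row] for row in image]
    i_img = [[rgb_to_yiq(*px)[1] for px in row] for row in image]
    return a_img, i_img


# Usage: one reddish "fruit" pixel and one green "leaf" pixel
a_img, i_img = feature_images([[(200, 30, 40), (60, 140, 50)]])
print(a_img, i_img)
```

Library routines rescale these values: for 8-bit images, OpenCV's `cv2.cvtColor(..., cv2.COLOR_RGB2LAB)` stores a\* offset by 128, so the absolute gray levels of a feature image depend on the implementation used.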

2.2.2. Wavelet Transformation-Based Feature Image Fusion Algorithm

- (1) Convert the RGB color space image into Lab and YIQ color space images.

- (2) Extract the a-channel image (a-component feature image) from the Lab color space and the I-channel image (I-component feature image) from the YIQ color space.

- (3) Convert the a-component and I-component feature images to double-precision floating-point data.

- (4) Set the wavelet decomposition level to 3 and perform wavelet decomposition on the a-component and I-component feature images to obtain their low-frequency (approximation) and high-frequency (detail) coefficients.

- (5) Fuse the low-frequency and high-frequency coefficients according to a specific fusion strategy to obtain the fused multi-scale coefficients, and then reconstruct the fused wolfberry feature image using the inverse wavelet transform.
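The fusion steps above can be sketched without external libraries. The mother wavelet and the fusion rule are not specified in these steps, so this sketch assumes a Haar wavelet (in an averaging form that reconstructs exactly), averages the low-frequency coefficients, and keeps whichever high-frequency coefficient has the larger magnitude, a common rule in wavelet image fusion. It also assumes both feature images have the same size, with height and width divisible by 2^3. All function names are hypothetical; in practice a library such as PyWavelets (`pywt.wavedec2` and `pywt.waverec2`) would normally be used.

```python
# Sketch: 3-level Haar wavelet fusion of two same-sized feature images.
# Assumed fusion rule: average the low-frequency (approximation) band and
# keep the larger-magnitude high-frequency (detail) coefficient.


def haar_1d(v):
    """One Haar step (averaging form): pairwise means and half-differences."""
    approx = [(v[k] + v[k + 1]) / 2 for k in range(0, len(v), 2)]
    detail = [(v[k] - v[k + 1]) / 2 for k in range(0, len(v), 2)]
    return approx, detail


def ihaar_1d(approx, detail):
    """Exact inverse of haar_1d."""
    out = []
    for a, d in zip(approx, detail):
        out.extend([a + d, a - d])
    return out


def transpose(m):
    return [list(col) for col in zip(*m)]


def dwt2(img):
    """One 2D level (rows, then columns). Returns LL and (LH, HL, HH)."""
    rows = [haar_1d(r) for r in img]
    lo, hi = [r[0] for r in rows], [r[1] for r in rows]
    lo_cols = [haar_1d(c) for c in transpose(lo)]
    hi_cols = [haar_1d(c) for c in transpose(hi)]
    ll = transpose([c[0] for c in lo_cols])
    lh = transpose([c[1] for c in lo_cols])
    hl = transpose([c[0] for c in hi_cols])
    hh = transpose([c[1] for c in hi_cols])
    return ll, (lh, hl, hh)


def idwt2(ll, details):
    """Inverse of dwt2."""
    lh, hl, hh = details
    lo = transpose([ihaar_1d(a, d) for a, d in zip(transpose(ll), transpose(lh))])
    hi = transpose([ihaar_1d(a, d) for a, d in zip(transpose(hl), transpose(hh))])
    return [ihaar_1d(a, d) for a, d in zip(lo, hi)]


def haar_wavedec2(img, level):
    """Multi-level decomposition; details[0] holds the finest level."""
    approx = [[float(v) for v in row] for row in img]  # step (3): to double
    details = []
    for _ in range(level):
        approx, band = dwt2(approx)
        details.append(band)
    return approx, details


def haar_waverec2(approx, details):
    """Inverse transform, from the coarsest level back to full size."""
    for band in reversed(details):
        approx = idwt2(approx, band)
    return approx


def max_abs(band_a, band_i):
    """Keep the coefficient with the larger magnitude at each position."""
    return [[p if abs(p) >= abs(q) else q for p, q in zip(ra, ri)]
            for ra, ri in zip(band_a, band_i)]


def fuse(img_a, img_i, level=3):
    """Decompose both images, fuse the coefficients, and reconstruct."""
    low_a, det_a = haar_wavedec2(img_a, level)
    low_i, det_i = haar_wavedec2(img_i, level)
    low = [[(p + q) / 2 for p, q in zip(ra, ri)] for ra, ri in zip(low_a, low_i)]
    det = [tuple(max_abs(ba, bi) for ba, bi in zip(da, di))
           for da, di in zip(det_a, det_i)]
    return haar_waverec2(low, det)


# Usage with two toy 8x8 "feature images"
a_demo = [[(r + c) % 5 for c in range(8)] for r in range(8)]
i_demo = [[(r * c) % 7 for c in range(8)] for r in range(8)]
fused = fuse(a_demo, i_demo)
print(len(fused), len(fused[0]))  # 8 8
```

Fusing an image with itself returns the original image, and fusing two constant images returns their mean; both are quick checks that decomposition and reconstruction are consistent.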

2.2.3. Post-Processing of the Fused Image

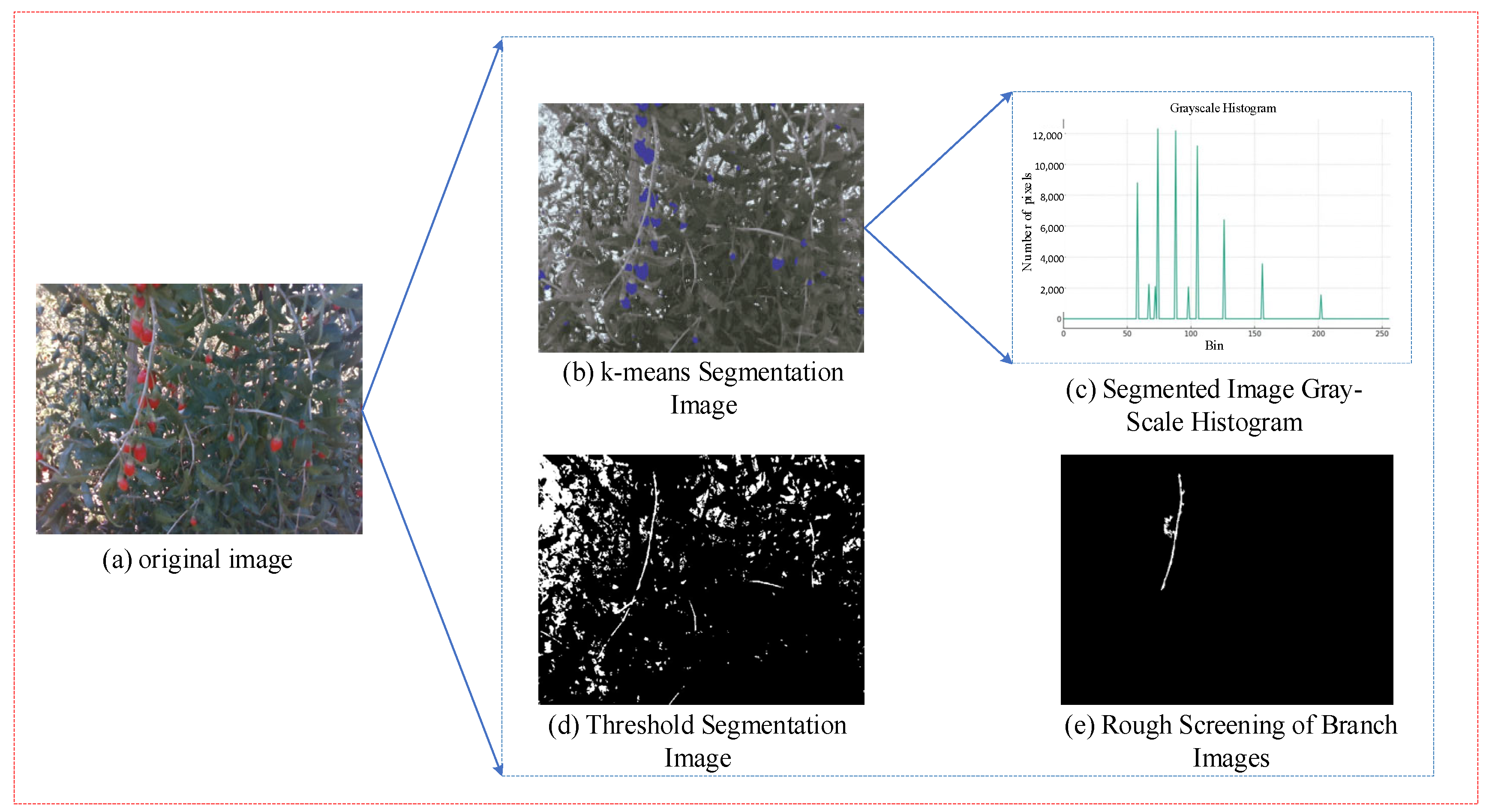

2.3. Clustering-Based Branch Recognition and Localization Method

2.3.1. Clustering Segmentation Based on the Lab Color Space

- (1) Randomly select k points in the image as the initial cluster centers.

- (2) Compute the distance from each sample point to each center, and assign each sample point to the class of its nearest center.

- (3) Recalculate the center of each class, using the mean of all sample features in that class as the new center's feature.

- (4) Repeat steps (2) and (3) until the termination condition is met (for example, the cluster centers no longer change or a maximum number of iterations is reached).
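The four K-means steps can be sketched in plain Python. The sketch assumes each pixel is represented by its (L\*, a\*, b\*) tuple, uses squared Euclidean distance, and stops when the assignments no longer change or after `max_iter` rounds; the value of k, the exact features, and the stopping rule used in this work are not given here, and the names `kmeans`, `nearest`, `max_iter`, and `seed` are hypothetical. Library implementations such as `cv2.kmeans` or scikit-learn's `KMeans` follow the same loop.

```python
import random

# Sketch of steps (1)-(4): plain K-means on per-pixel colour features.
# Assumptions: each pixel is an (L*, a*, b*) tuple, squared Euclidean
# distance, stop when assignments no longer change or after max_iter rounds.


def nearest(point, centers):
    """Index of the center closest to point (squared Euclidean distance)."""
    return min(
        range(len(centers)),
        key=lambda j: sum((x - c) ** 2 for x, c in zip(point, centers[j])),
    )


def kmeans(points, k, max_iter=100, seed=0):
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]  # step (1): random initial centers
    labels = None
    for _ in range(max_iter):
        new_labels = [nearest(p, centers) for p in points]  # step (2): assign
        if new_labels == labels:  # step (4): assignments stable, stop
            break
        labels = new_labels
        for j in range(k):  # step (3): recompute each center as its class mean
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the previous center if a class becomes empty
                centers[j] = [sum(dim) / len(members) for dim in zip(*members)]
    return centers, labels


# Usage: reddish (fruit-like) vs greenish (leaf-like) Lab values
pixels = [(50, 60, 40), (52, 58, 42), (51, 62, 39),
          (40, -30, 30), (42, -28, 31), (41, -32, 29)]
centers, labels = kmeans(pixels, k=2)
print(labels)
```

Because the initial centers are random, real segmentations are usually run with a fixed seed or several restarts so that the branch cluster is identified reproducibly.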

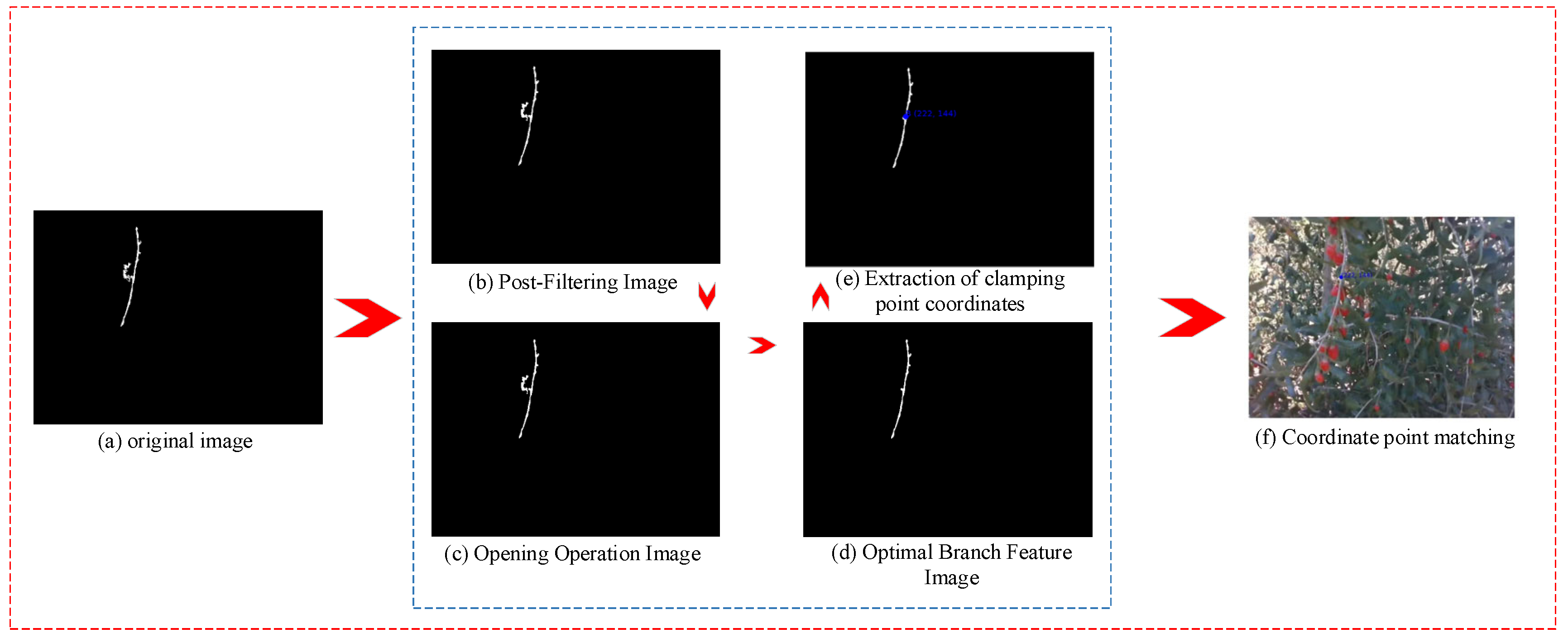

- (1) Convert the input image into a grayscale image and binarize it.

- (2) Find all contours in the binary image and compute the height of each contour's bounding rectangle; the contour with the greatest height is taken as the longest contour.

- (3) Create a mask image that covers only the longest contour.

- (4) Apply the mask to the original image so that only the tallest region is retained.
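The contour-filtering steps above can be sketched as follows. With OpenCV, step (2) would typically use `cv2.findContours` and `cv2.boundingRect`; to stay dependency-free, this sketch substitutes 8-connected component labeling for contour extraction. The bounding rectangle of a region's outer contour coincides with that of the region itself, so the height comparison should be unchanged. The binarization threshold of 127 and all function names are assumptions for illustration.

```python
from collections import deque

# Sketch of steps (1)-(4): keep only the region with the tallest bounding
# rectangle. Assumption: 8-connected component labeling stands in for
# contour extraction (e.g. cv2.findContours) to avoid dependencies.


def binarize(gray, threshold=127):
    """Step (1): 1 where the gray level exceeds the (assumed) threshold."""
    return [[1 if v > threshold else 0 for v in row] for row in gray]


def tallest_region_mask(binary):
    """Steps (2)-(3): mask covering the region with the tallest bounding box."""
    h, w = len(binary), len(binary[0])
    seen = [[False] * w for _ in range(h)]
    best, best_height = [], 0
    for r0 in range(h):
        for c0 in range(w):
            if not binary[r0][c0] or seen[r0][c0]:
                continue
            # Breadth-first search over one 8-connected foreground region
            seen[r0][c0] = True
            queue, region = deque([(r0, c0)]), []
            while queue:
                r, c = queue.popleft()
                region.append((r, c))
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        nr, nc = r + dr, c + dc
                        if 0 <= nr < h and 0 <= nc < w and binary[nr][nc] and not seen[nr][nc]:
                            seen[nr][nc] = True
                            queue.append((nr, nc))
            rows = [r for r, _ in region]
            height = max(rows) - min(rows) + 1  # bounding-rectangle height
            if height > best_height:
                best, best_height = region, height
    mask = [[0] * w for _ in range(h)]
    for r, c in best:
        mask[r][c] = 1
    return mask


def apply_mask(image, mask, background=0):
    """Step (4): keep pixels inside the mask, replace the rest with background."""
    return [[px if m else background for px, m in zip(row, mrow)]
            for row, mrow in zip(image, mask)]


# Usage: a tall thin "branch" (column 1) and a short wide blob (columns 3-4)
gray = [
    [0, 200, 0, 0, 0],
    [0, 200, 0, 0, 0],
    [0, 200, 0, 220, 220],
    [0, 200, 0, 220, 220],
    [0, 200, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
mask = tallest_region_mask(binarize(gray))
kept = apply_mask(gray, mask)
```

Selecting by bounding-rectangle height rather than area favors long, thin structures such as branches over compact blobs of similar color.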

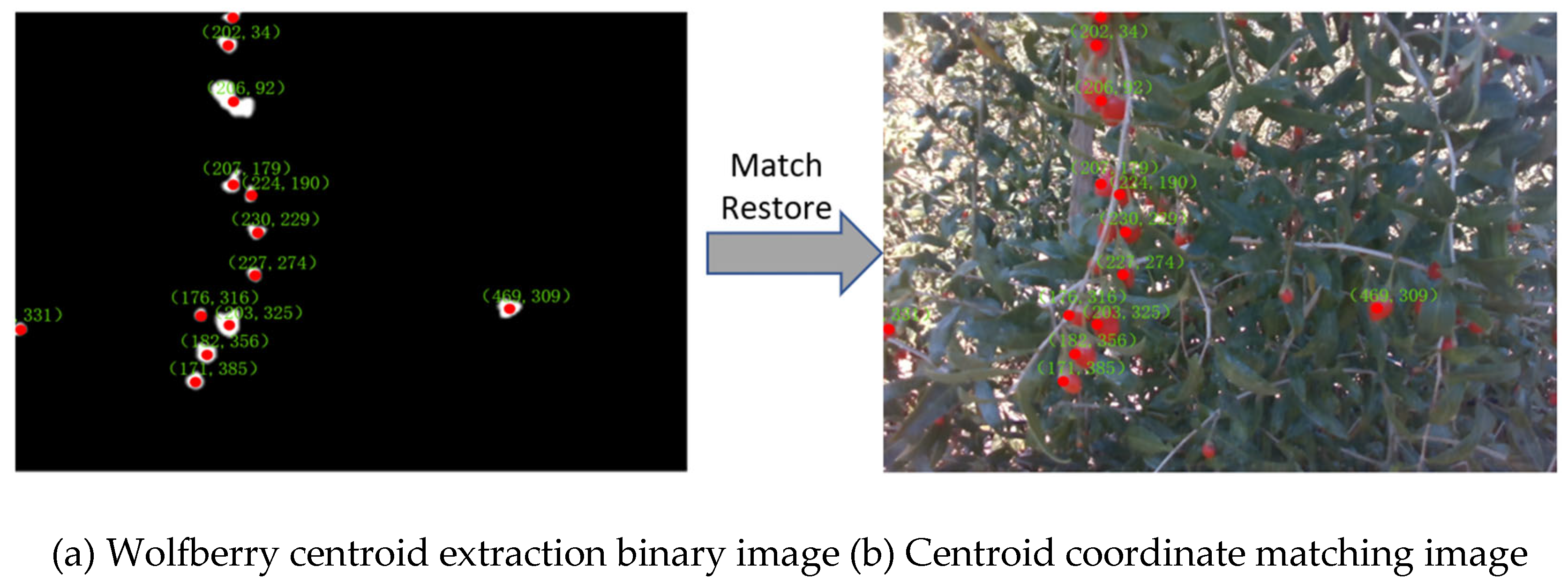

2.3.2. Branch Clamping Point Localization

3. Results and Discussion

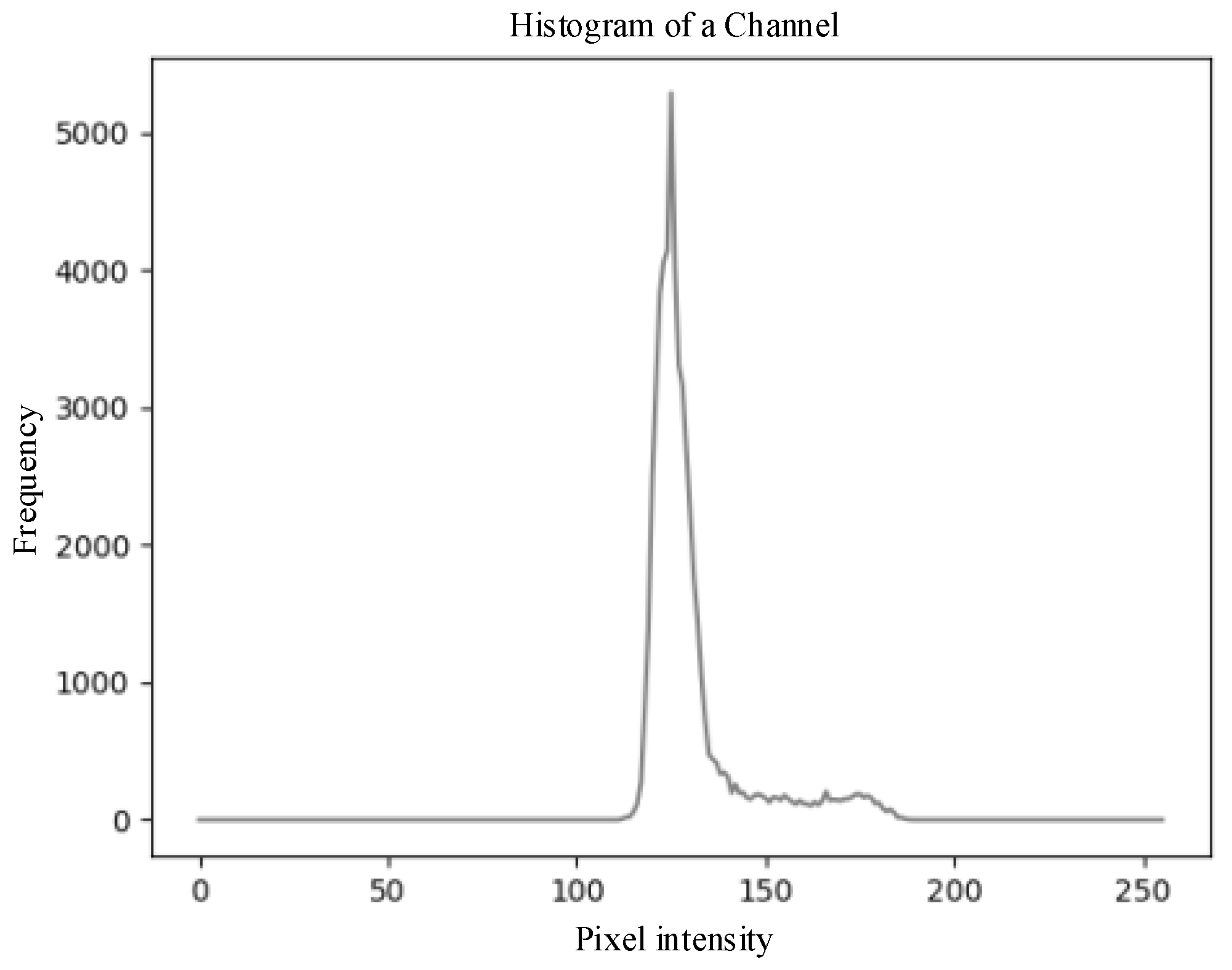

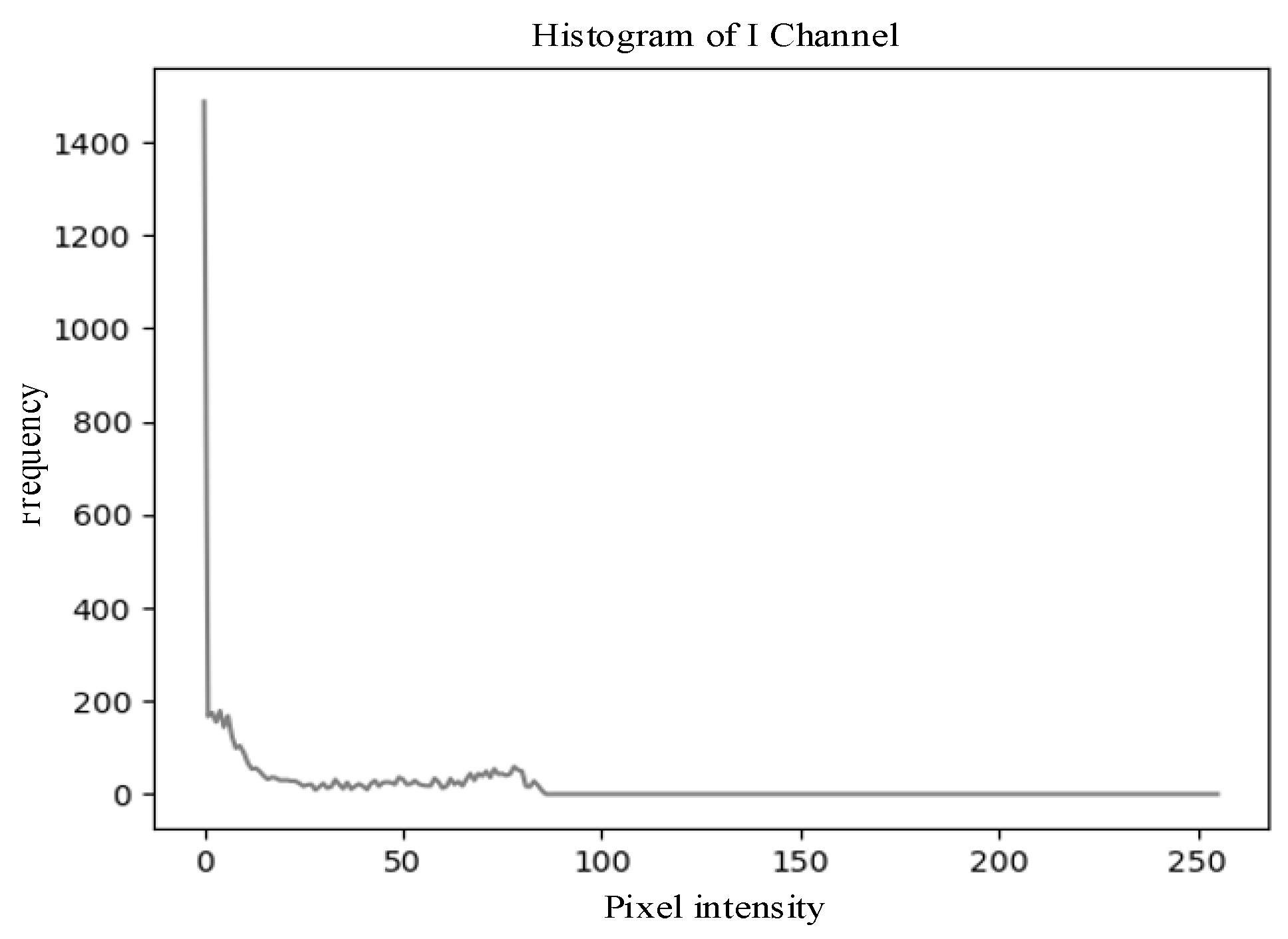

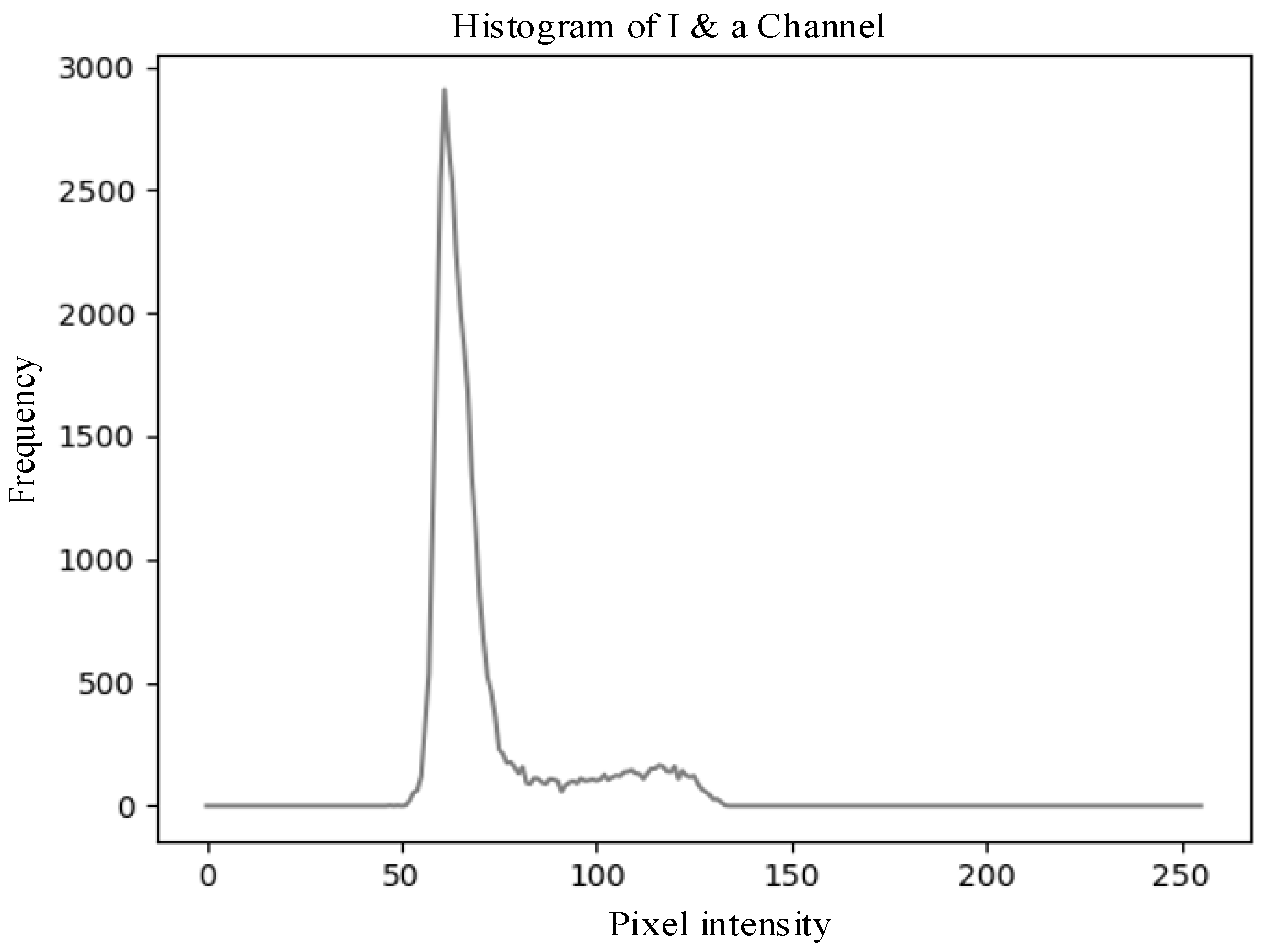

3.1. Feature Characteristics of Wolfberry Images

3.2. Wolfberry Fruit Image Segmentation Experiment

3.3. Wolfberry Branch Image Segmentation Experiment

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Image Type | Gray-Level Distribution | Segmentation Threshold Range |
|---|---|---|
| a-component feature image | 110–180 | 80–180 |
| I-component feature image | 0–90 | 20–80 |
| Fusion image | 50–130 | 70–130 |

| Image Type | Segmentation Correctness |
|---|---|
| a-component feature image | 57% |
| I-component feature image | 73% |
| Fusion image | 78% |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, R.; Tan, D.; Ju, X.; Wang, J. An Object Feature-Based Recognition and Localization Method for Wolfberry. Sensors 2025, 25, 3365. https://doi.org/10.3390/s25113365
