YOLOv8-MFD: An Enhanced Detection Model for Pine Wilt Diseased Trees Using UAV Imagery

Abstract

1. Introduction

- (1)

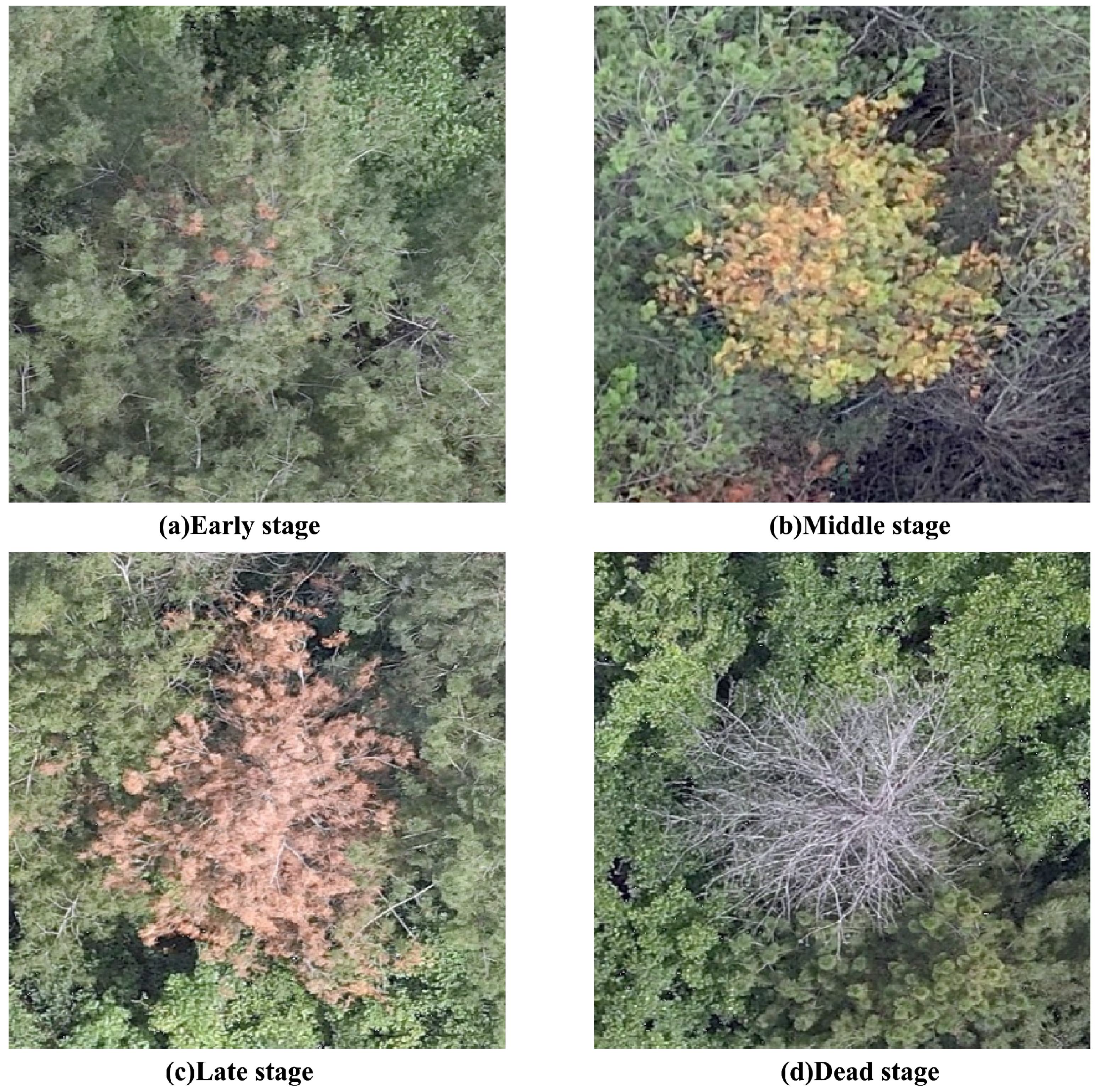

- Based on the UAV platform, we constructed a high-quality dataset of PWD that included early, middle, late, and death stages of disease characterization for both small and large targets.

- (2)

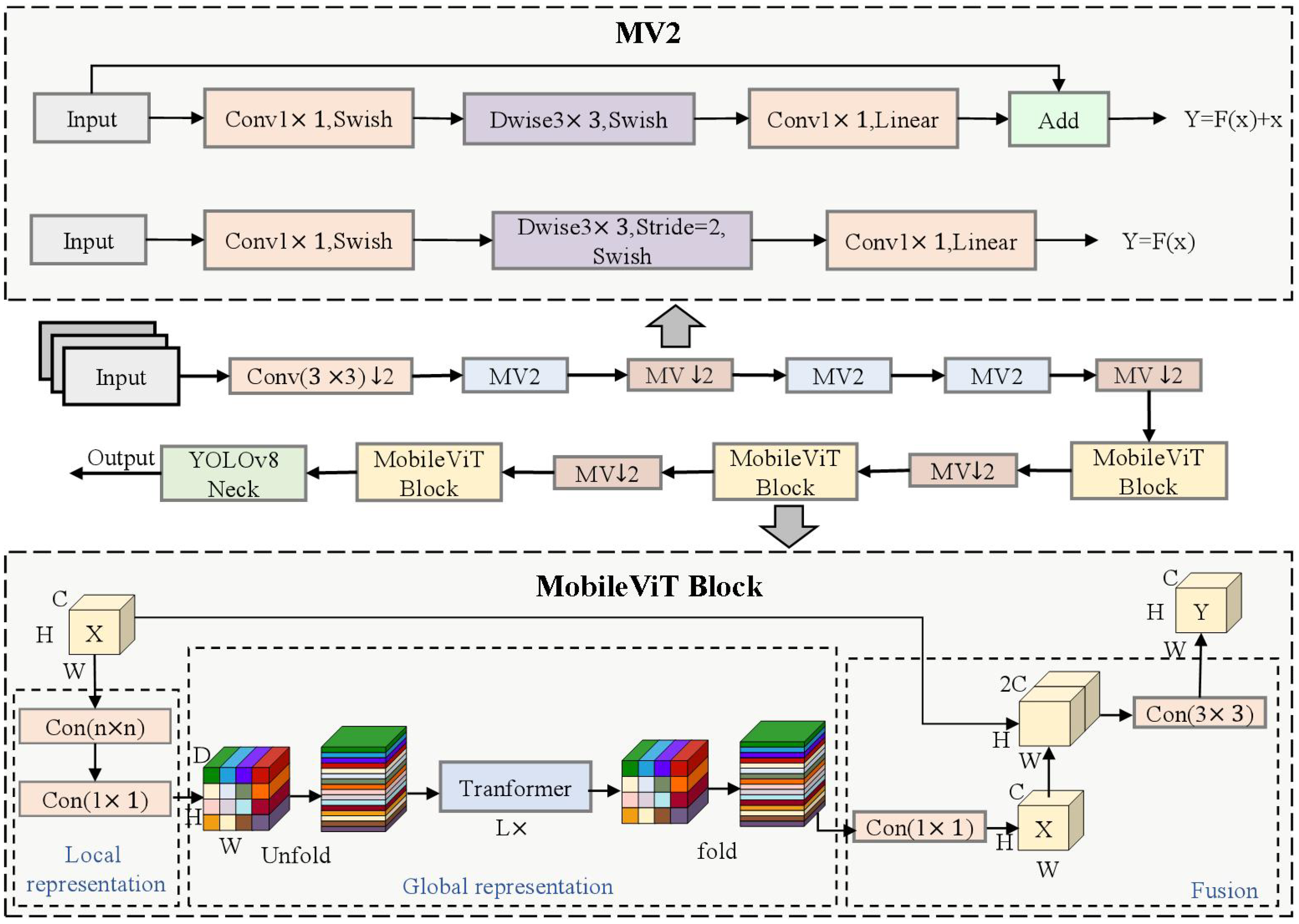

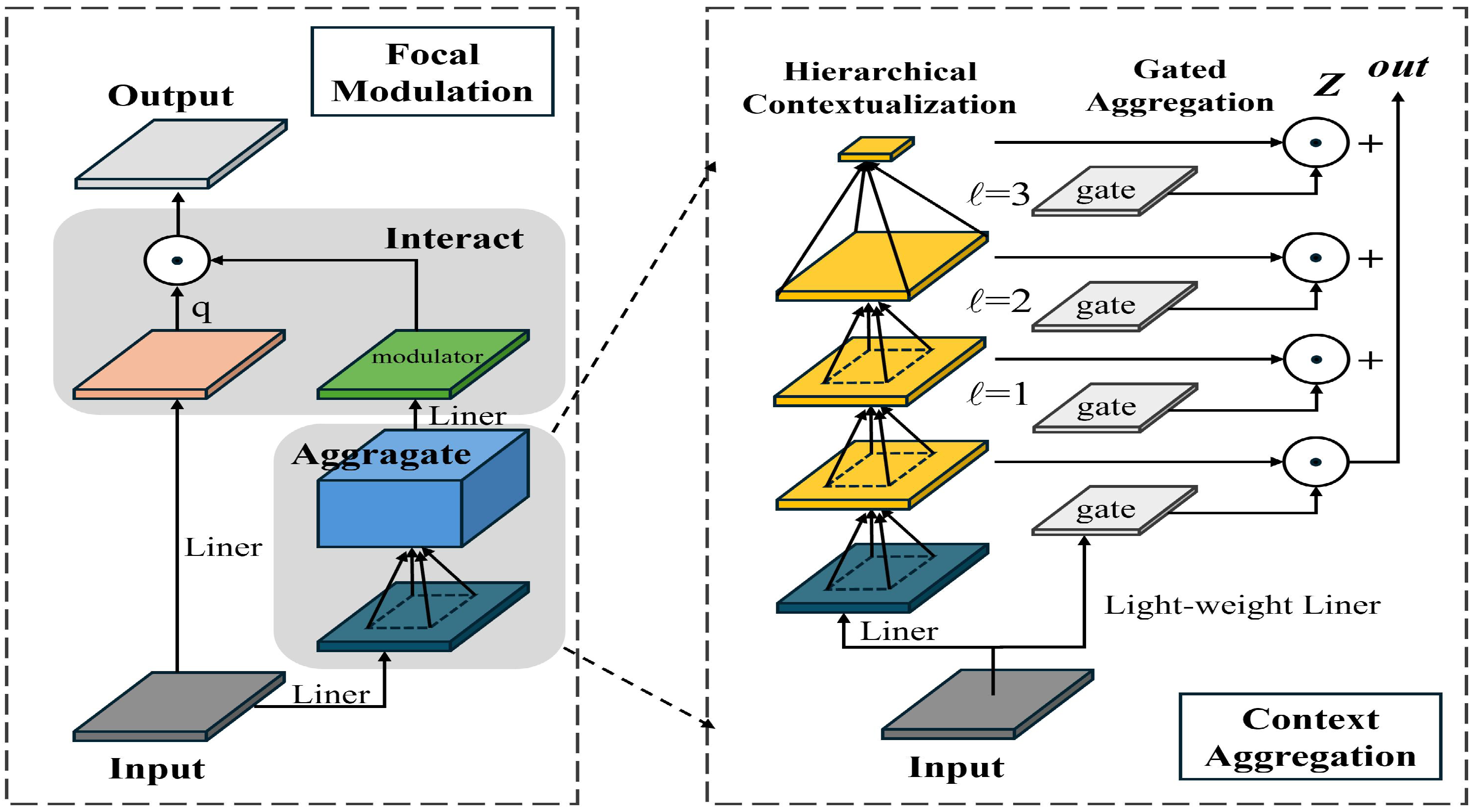

- We propose a method that integrates the MobileViT feature extraction network into the backbone of the YOLOv8 network, enabling it to extract both local details of the target and capture extensive contextual information. This allows the model to adapt to complex environments and minimize background interference, better distinguishing between red broad-leaved trees and diseased and withered pine trees. The incorporation of the Focal Modulation module effectively mitigates the impact of uneven illumination by fusing distant context information. Additionally, the Dynamic Head’s dynamic adjustment capability ensures that the model can adapt flexibly to targets of varying scales, thereby significantly reducing errors resulting from differences in target shapes or sizes.

- (3) We conducted a comprehensive evaluation on a self-built PWD dataset. Ablation experiments quantified the contribution of each module, and comparative experiments against other detectors demonstrated the effectiveness of the proposed model and its advantages over them.

2. Materials and Methods

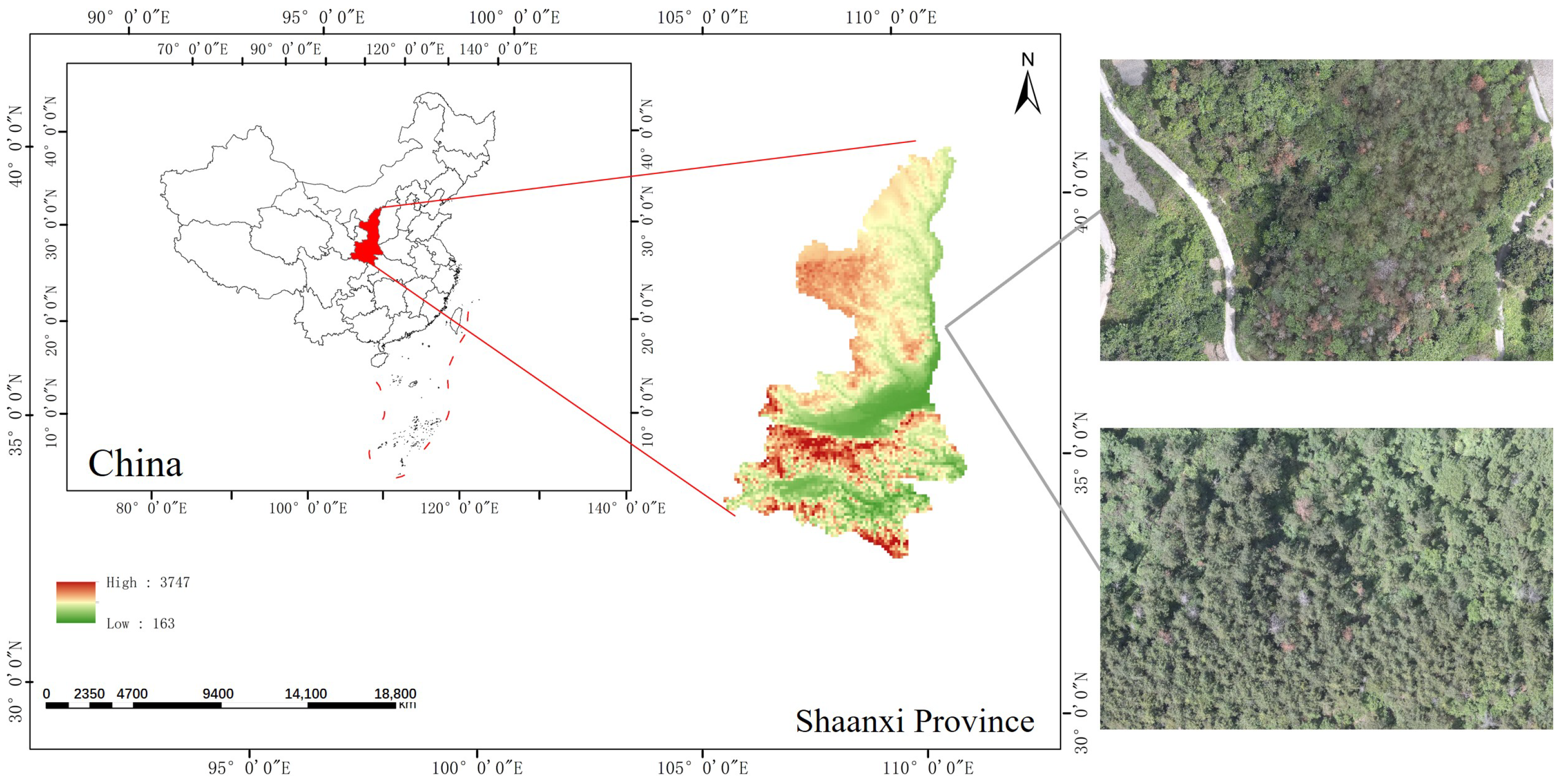

2.1. Study Area

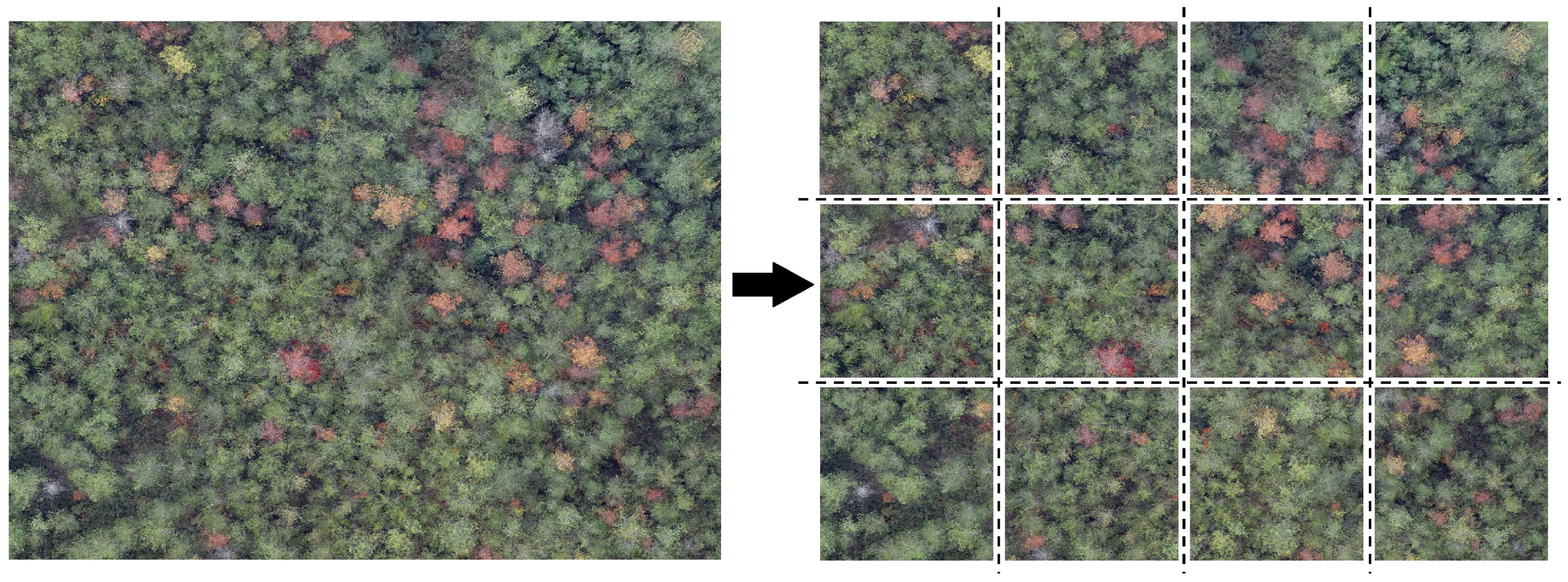

2.2. Data Collection and Annotation

- Geometric transformations: Horizontal and vertical flipping were applied to generate mirror images, thereby enriching the spatial diversity of the dataset;

- Photometric adjustments: brightness was scaled linearly to simulate varying illumination, with attenuation (factor 0.7) and enhancement (factor 1.2);
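
A minimal NumPy sketch of the two augmentation types listed above, assuming uint8 image tiles of shape (H, W, 3) and YOLO-format labels (class, x_center, y_center, width, height, normalized to [0, 1]). The function names, the rounding and clipping of scaled pixel values, and the choice to apply each transform separately rather than in combination are assumptions; the source does not specify them.

```python
import numpy as np


def hflip(image: np.ndarray, boxes: np.ndarray):
    """Mirror left-right; only the normalized x-centre of each box changes."""
    out = boxes.copy()
    out[:, 1] = 1.0 - out[:, 1]
    return image[:, ::-1].copy(), out


def vflip(image: np.ndarray, boxes: np.ndarray):
    """Mirror top-bottom; only the normalized y-centre of each box changes."""
    out = boxes.copy()
    out[:, 2] = 1.0 - out[:, 2]
    return image[::-1, :].copy(), out


def scale_brightness(image: np.ndarray, factor: float) -> np.ndarray:
    """Linear brightness change (assumed: round, then clip to the uint8 range)."""
    scaled = np.rint(image.astype(np.float64) * factor)
    return np.clip(scaled, 0, 255).astype(np.uint8)


def augment(image: np.ndarray, boxes: np.ndarray):
    """Return the original plus four single-transform variants (hypothetical pipeline)."""
    variants = [(image, boxes), hflip(image, boxes), vflip(image, boxes)]
    for factor in (0.7, 1.2):  # attenuation and enhancement factors from the text
        variants.append((scale_brightness(image, factor), boxes.copy()))
    return variants
```

Flips only move box centres (widths and heights are unchanged), while brightness scaling leaves the labels untouched.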

2.3. Improved YOLOv8 by MFD

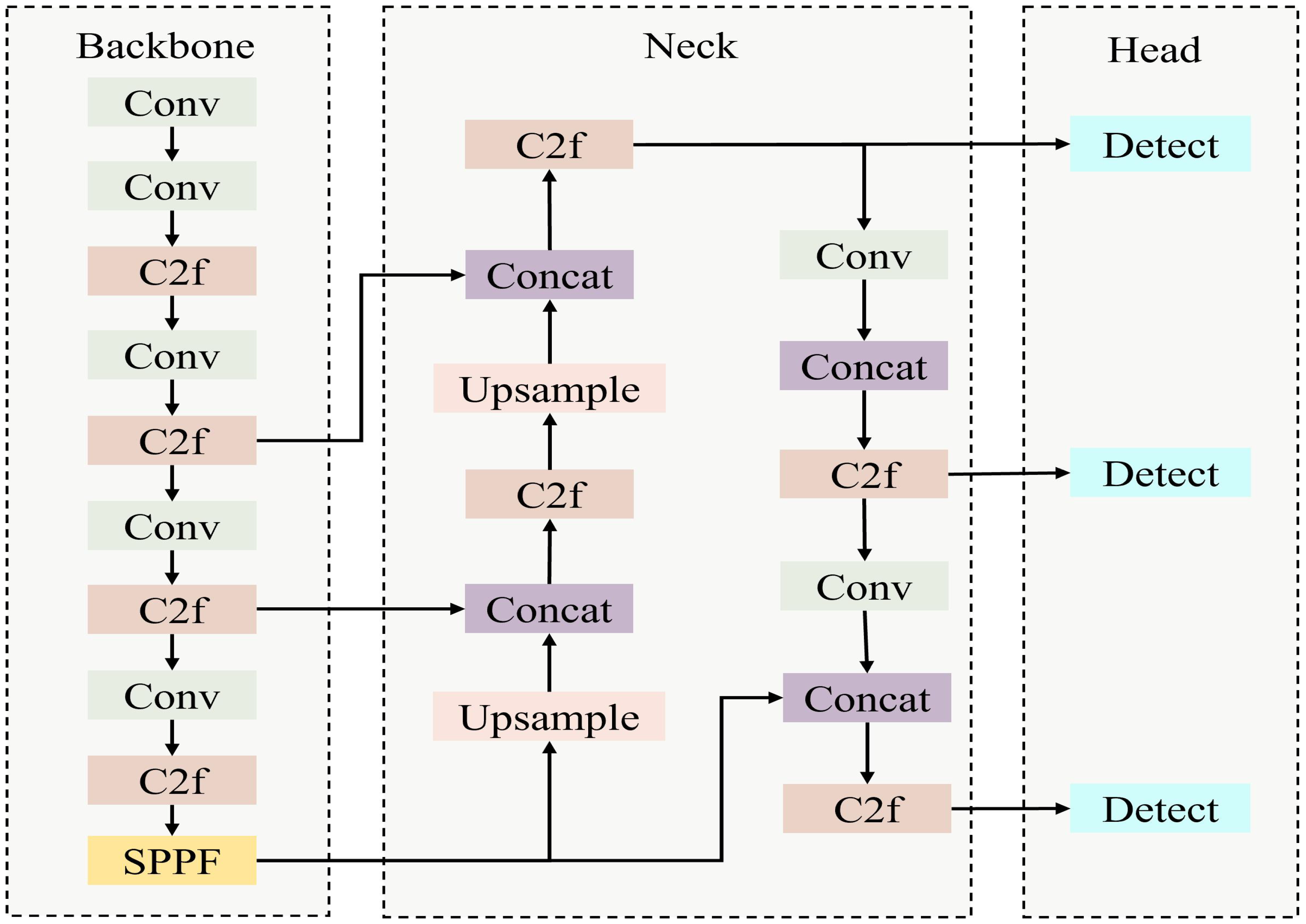

2.3.1. YOLOv8 as Basic Algorithm

2.3.2. MobileViT Backbone Module Principles
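
MobileViT (Mehta and Rastegari) pairs convolution with a transformer: a 3×3 convolution encodes local detail, a 1×1 convolution projects to a wider embedding, the map is unfolded into non-overlapping patches so that self-attention can relate distant patches, and the result is folded back, projected, and fused with the block input. This is how contribution (2) can claim both local detail and wide context from one backbone. Below is a minimal PyTorch sketch of one such block in the original (v1) style; the class name, embedding width, patch size, depth, and head count are assumptions, not the authors' settings. MobileViTv2, the variant named in the backbone comparison table, replaces multi-head attention with separable self-attention, which this sketch does not implement.

```python
import torch
import torch.nn as nn


class MobileViTBlockSketch(nn.Module):
    """Hypothetical MobileViT (v1-style) block: local conv -> attention over patches -> fusion."""

    def __init__(self, channels: int, dim: int = 64, patch: int = 2, depth: int = 2, heads: int = 4):
        super().__init__()
        self.patch = patch
        # Local representation: 3x3 conv for spatial detail, 1x1 conv to the embedding width.
        self.local = nn.Sequential(
            nn.Conv2d(channels, channels, 3, padding=1, bias=False),
            nn.BatchNorm2d(channels),
            nn.SiLU(),
            nn.Conv2d(channels, dim, 1, bias=False),
        )
        # Global representation: a small pre-norm transformer encoder.
        layer = nn.TransformerEncoderLayer(dim, heads, dim_feedforward=2 * dim, dropout=0.0,
                                           activation="gelu", batch_first=True, norm_first=True)
        self.transformer = nn.TransformerEncoder(layer, depth, norm=nn.LayerNorm(dim),
                                                 enable_nested_tensor=False)
        self.proj = nn.Conv2d(dim, channels, 1, bias=False)
        # Fusion: concatenate with the block input and mix with a 3x3 conv.
        self.fuse = nn.Sequential(
            nn.Conv2d(2 * channels, channels, 3, padding=1, bias=False),
            nn.BatchNorm2d(channels),
            nn.SiLU(),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        b, _, h, w = x.shape
        p = self.patch
        if h % p or w % p:
            raise ValueError("feature map height and width must be divisible by the patch size")
        y = self.local(x)
        d = y.shape[1]
        nh, nw = h // p, w // p
        # Unfold (B, d, H, W) -> (B*p*p, nh*nw, d): each sequence holds one
        # within-patch pixel position across all patches.
        y = y.reshape(b, d, nh, p, nw, p).permute(0, 3, 5, 2, 4, 1).reshape(b * p * p, nh * nw, d)
        y = self.transformer(y)
        # Fold back to (B, d, H, W).
        y = y.reshape(b, p, p, nh, nw, d).permute(0, 5, 3, 1, 4, 2).reshape(b, d, h, w)
        return self.fuse(torch.cat([x, self.proj(y)], dim=1))
```

The block preserves the input shape, so it can replace a convolutional stage in a backbone without changing the surrounding layers.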

2.3.3. Focal Modulation SPPF Module Principles
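
Focal Modulation (Yang et al., 2022) replaces self-attention with a cheaper scheme: a query projection is multiplied element-wise by a modulator built from context aggregated hierarchically with depthwise convolutions of growing kernel size, plus a globally pooled context, each weighted by learned gates. The following minimal PyTorch sketch uses channel-first tensors; the class name, the defaults (two focal levels with kernels 3 and 5), and the 1×1-convolution projections are assumptions modeled on the published design rather than the authors' code. Because it preserves the input shape, a layer like this could occupy the position of YOLOv8's SPPF block; how the authors combined it with SPPF is not stated in this section.

```python
import torch
import torch.nn as nn


class FocalModulationSketch(nn.Module):
    """Hypothetical channel-first focal modulation layer (after Yang et al., 2022)."""

    def __init__(self, dim: int, focal_level: int = 2, focal_window: int = 3, focal_factor: int = 2):
        super().__init__()
        self.focal_level = focal_level
        # One 1x1 projection produces the query, the initial context, and L+1 gates.
        self.f = nn.Conv2d(dim, 2 * dim + focal_level + 1, kernel_size=1)
        self.h = nn.Conv2d(dim, dim, kernel_size=1)     # turns aggregated context into a modulator
        self.proj = nn.Conv2d(dim, dim, kernel_size=1)  # output projection
        self.act = nn.GELU()
        # Hierarchical contextualization: depthwise convs with growing kernels (3, 5, ...).
        self.levels = nn.ModuleList()
        for k in range(focal_level):
            ks = focal_factor * k + focal_window
            self.levels.append(nn.Sequential(
                nn.Conv2d(dim, dim, ks, padding=ks // 2, groups=dim, bias=False),
                nn.GELU(),
            ))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        c = x.shape[1]
        q, ctx, gates = torch.split(self.f(x), [c, c, self.focal_level + 1], dim=1)
        ctx_all = torch.zeros_like(q)
        for level, layer in enumerate(self.levels):
            ctx = layer(ctx)  # each level widens the receptive field
            ctx_all = ctx_all + ctx * gates[:, level:level + 1]
        # Global context: average-pool the last level and add it with its own gate.
        ctx_global = self.act(ctx.mean(dim=(2, 3), keepdim=True))
        ctx_all = ctx_all + ctx_global * gates[:, self.focal_level:]
        return self.proj(q * self.h(ctx_all))
```

The gated sum lets each pixel draw on nearby and image-wide context in learned proportions, which is the mechanism the text credits with reducing sensitivity to uneven illumination.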

2.3.4. Dynamic Head Module Principles
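
Dynamic Head (Dai et al., 2021) applies three attentions in sequence to a feature tensor arranged as levels × space × channels: scale-aware attention weights each pyramid level, spatial-aware attention (deformable convolution in the original) focuses on discriminative locations, and task-aware attention applies a dynamic ReLU whose channel-wise coefficients are predicted from pooled features. The sketch below is a simplified, hypothetical block: the deformable spatial step is replaced by an ordinary shared 3×3 convolution, all levels are assumed to be resized to one resolution beforehand (as the DyHead paper does), and the coefficient offsets only approximate the dynamic-ReLU parameterization.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class DyHeadBlockSketch(nn.Module):
    """Simplified DyHead-style block: scale-aware -> (stand-in) spatial -> task-aware."""

    def __init__(self, channels: int, reduction: int = 4):
        super().__init__()
        self.scale_conv = nn.Conv2d(channels, 1, kernel_size=1)
        # Stand-in for the deformable spatial-aware attention of the original design.
        self.spatial_conv = nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        hidden = max(channels // reduction, 4)
        # Hyper-function predicting four coefficients (a1, a2, b1, b2) per channel.
        self.task_fc = nn.Sequential(
            nn.Linear(channels, hidden), nn.ReLU(inplace=True), nn.Linear(hidden, 4 * channels)
        )

    def forward(self, feats: torch.Tensor) -> torch.Tensor:
        # feats: (B, L, C, H, W) with every pyramid level at the same resolution.
        b, l, c, h, w = feats.shape
        x = feats.reshape(b * l, c, h, w)
        # 1) Scale-aware: one hard-sigmoid weight per level from pooled features.
        scale_w = F.hardsigmoid(F.relu(self.scale_conv(F.adaptive_avg_pool2d(x, 1))))
        x = self.spatial_conv(x * scale_w)
        # 3) Task-aware: dynamic ReLU, max(a1 * x + b1, a2 * x + b2), per channel.
        theta = 2 * torch.sigmoid(self.task_fc(x.mean(dim=(2, 3)))) - 1  # values in (-1, 1)
        a1, a2, b1, b2 = theta.reshape(b * l, 4, c, 1, 1).unbind(dim=1)
        out = torch.maximum(x * (a1 + 1.0) + 0.5 * b1, x * a2 + 0.5 * b2)
        return out.reshape(b, l, c, h, w)
```

Offsetting `a1` by 1 starts the block close to an ordinary ReLU, so the learned coefficients refine rather than replace the baseline activation.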

2.3.5. Fusion of YOLOv8 and MFD PWD Detection Method

2.4. Model Training and Evaluation

2.4.1. Training Environment and Parameters

2.4.2. Evaluation Indicators
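
The result tables report precision, recall, F1, and mAP@0.5. For one class at an IoU threshold of 0.5, these follow from greedily matching confidence-ranked predictions to ground-truth boxes: P = TP/(TP + FP), R = TP/(TP + FN), F1 = 2PR/(P + R), and AP is the area under the interpolated precision–recall curve; mAP@0.5 averages AP over classes. The pure-Python sketch below handles one image and one class. Its all-point interpolation is one common convention (Ultralytics, for example, integrates over 101 sampled recall points by default), so values may differ slightly from the authors' toolkit; the function names are illustrative.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match(preds, gts, thr=0.5):
    """preds: [(score, box)], gts: [box]. Returns [(score, is_true_positive)]."""
    used, results = set(), []
    for score, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best, best_j = 0.0, -1
        for j, g in enumerate(gts):
            if j not in used and iou(box, g) > best:
                best, best_j = iou(box, g), j
        if best >= thr:
            used.add(best_j)  # each ground truth can be matched only once
            results.append((score, True))
        else:
            results.append((score, False))
    return results


def precision_recall_f1(results, n_gt):
    tp = sum(1 for _, is_tp in results if is_tp)
    p = tp / len(results) if results else 0.0
    r = tp / n_gt if n_gt else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def average_precision(results, n_gt):
    """All-point interpolated AP over confidence-ranked detections."""
    if n_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in sorted(results, key=lambda r: r[0], reverse=True):
        tp += is_tp
        fp += not is_tp
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    ap, prev_r = 0.0, 0.0
    for i, r in enumerate(recalls):
        ap += (r - prev_r) * max(precisions[i:])  # precision envelope at recall >= r
        prev_r = r
    return ap
```

For two ground-truth boxes and three predictions of which the first and third overlap a target (IoU 1.0 and 0.81), this gives P = 2/3, R = 1.0, F1 = 0.8, and AP = 5/6.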

3. Results

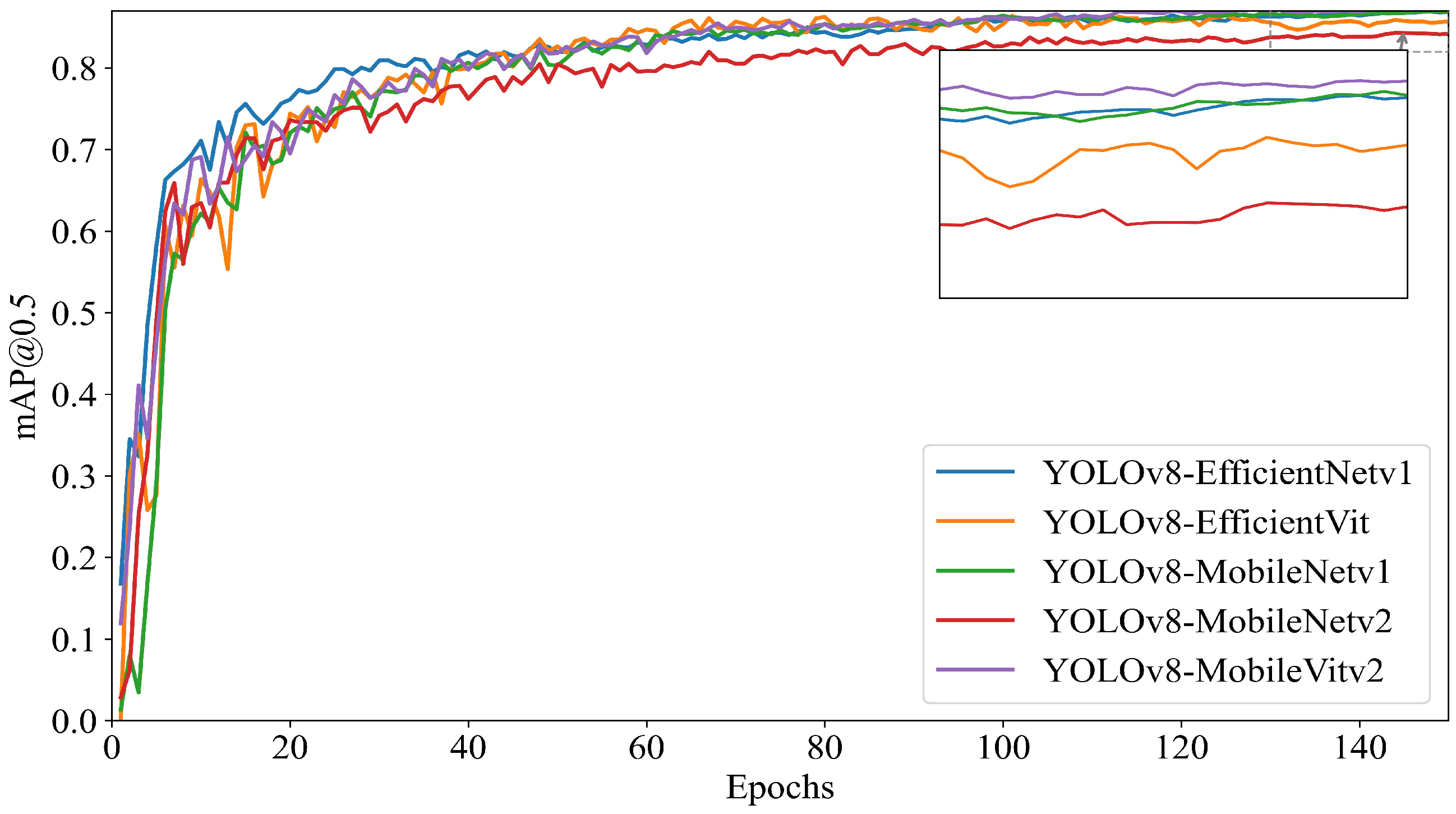

3.1. Performance Comparison of Backbone Networks in YOLOv8

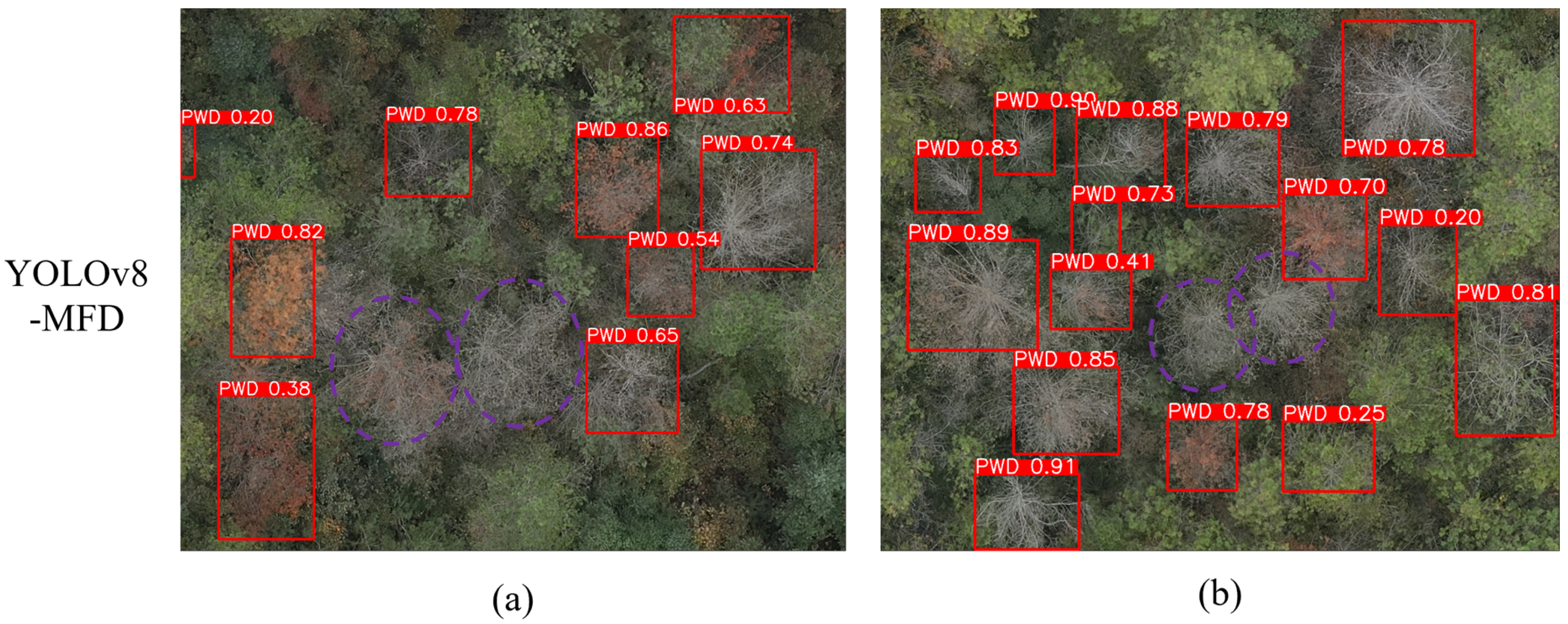

3.2. Enhanced Multi-Scale Detection in YOLOv8-MobileViT

3.3. Comparison of the Performance Metrics of Initial Models

4. Discussion

4.1. Model Innovations and Advantages

4.2. Limitations and Future Work

4.2.1. Limitations

4.2.2. Future Work

4.3. Practical Applications and Generalization Potential

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Number | Date | Storage (GB) | Image Size (pixels) |
|---|---|---|---|
| Image1 | July 2024 | 1.61 | 37,256 × 45,079 |
| Image2 | July 2024 | 2.42 | 43,790 × 33,858 |
| Image3 | August 2024 | 2.97 | 46,742 × 38,909 |
| Image4 | August 2024 | 4.81 | 34,807 × 37,098 |
| Image5 | September 2024 | 6.34 | 41,884 × 40,646 |
| Image6 | September 2024 | 5.91 | 43,939 × 36,080 |
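
The orthomosaics in the table above are tens of thousands of pixels on each side, far larger than a detector's input, so they have to be cut into tiles before training or inference. The source does not state the tile size or overlap; this sketch assumes 640 × 640 tiles (the default Ultralytics input size) with a configurable overlap, and shifts the last tile on each axis back so it ends exactly at the image edge. The function names are hypothetical.

```python
import math

# Orthomosaic sizes in pixels, copied from the table above.
MOSAICS = {
    "Image1": (37256, 45079),
    "Image2": (43790, 33858),
    "Image3": (46742, 38909),
    "Image4": (34807, 37098),
    "Image5": (41884, 40646),
    "Image6": (43939, 36080),
}


def tile_starts(length: int, tile: int = 640, overlap: int = 0) -> list[int]:
    """Tile start offsets along one axis; the last tile is shifted back to end at the edge."""
    if length <= tile:
        return [0]
    stride = tile - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than the tile size")
    n = math.ceil((length - tile) / stride) + 1
    starts = [i * stride for i in range(n)]
    starts[-1] = length - tile
    return starts


def count_tiles(width: int, height: int, tile: int = 640, overlap: int = 0) -> int:
    return len(tile_starts(width, tile, overlap)) * len(tile_starts(height, tile, overlap))


for name, (w, h) in MOSAICS.items():
    print(name, count_tiles(w, h), "tiles without overlap,",
          count_tiles(w, h, overlap=64), "with 64 px overlap")
```

Shifting the last tile back, rather than padding, keeps every tile fully inside the mosaic at the cost of a slightly larger overlap at the right and bottom edges.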

| Item | Configuration |
|---|---|
| Operating System | Windows 10 |
| CPU | Intel Core i7-12700 (Intel Corporation, Santa Clara, CA, USA) |
| GPU | NVIDIA GeForce RTX 4060 Ti (NVIDIA Corporation, Santa Clara, CA, USA) |
| GPU Memory | 16 GB |
| Programming Language | Python 3.11.5 (Python Software Foundation, Wilmington, DE, USA) |
| Framework | PyTorch 2.0.0 + cu118 (Meta AI, Menlo Park, CA, USA) |
| CUDA Version | 12.6 (NVIDIA Corporation, Santa Clara, CA, USA) |

| Model | Precision | Recall | F1 | mAP@0.5 | Model Size (MB) |
|---|---|---|---|---|---|
| YOLOv8_Base | 0.914 | 0.799 | 0.853 | 0.842 | 5.96 |
| YOLOv8_EfficientNetv1 | 0.914 | 0.800 | 0.853 | 0.868 | 14.1 |
| YOLOv8_EfficientViT | 0.913 | 0.817 | 0.862 | 0.857 | 8.34 |
| YOLOv8_MobileNetv1 | 0.911 | 0.808 | 0.856 | 0.869 | 11.8 |
| YOLOv8_MobileNetv2 | 0.892 | 0.790 | 0.838 | 0.842 | 7.51 |
| YOLOv8_MobileViTv2 | 0.926 | 0.838 | 0.880 | 0.873 | 6.58 |
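
F1 is the harmonic mean of precision and recall, F1 = 2PR/(P + R). Recomputing it from the rounded precision and recall in the backbone comparison table is a quick consistency check; each recomputed value agrees with the reported F1 to within 0.0005. The values below are copied from the table, and the helper name is illustrative.

```python
# (precision, recall, reported F1) copied from the backbone comparison table above.
BACKBONES = {
    "YOLOv8_Base": (0.914, 0.799, 0.853),
    "YOLOv8_EfficientNetv1": (0.914, 0.800, 0.853),
    "YOLOv8_EfficientViT": (0.913, 0.817, 0.862),
    "YOLOv8_MobileNetv1": (0.911, 0.808, 0.856),
    "YOLOv8_MobileNetv2": (0.892, 0.790, 0.838),
    "YOLOv8_MobileViTv2": (0.926, 0.838, 0.880),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


for name, (p, r, reported) in BACKBONES.items():
    print(f"{name:22s} reported {reported:.3f}  recomputed {f1_score(p, r):.4f}")
```

Because F1 is a harmonic mean, it rewards balanced precision and recall; the MobileViTv2 backbone has the highest F1 largely because of its higher recall.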

| MobileViT | Focal Modulation | DyHead | Precision | Recall | F1 | mAP@0.5 | Model Size (MB) |
|---|---|---|---|---|---|---|---|
| × | × | × | 0.914 | 0.799 | 0.853 | 0.842 | 5.96 |
| ✓ | × | × | 0.926 | 0.838 | 0.880 | 0.873 | 6.58 |
| × | ✓ | × | 0.889 | 0.792 | 0.838 | 0.847 | 6.17 |
| × | × | ✓ | 0.931 | 0.832 | 0.879 | 0.873 | 9.33 |
| ✓ | ✓ | × | 0.886 | 0.812 | 0.847 | 0.852 | 7.95 |
| ✓ | × | ✓ | 0.923 | 0.844 | 0.882 | 0.878 | 9.82 |
| × | ✓ | ✓ | 0.903 | 0.790 | 0.843 | 0.855 | 9.53 |
| ✓ | ✓ | ✓ | 0.925 | 0.847 | 0.884 | 0.882 | 10.2 |
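
The ablation table is easier to read as changes relative to the unmodified YOLOv8 baseline (mAP@0.5 = 0.842, 5.96 MB). This short sketch, with values copied from the table and illustrative names, expresses each configuration's gain in mAP@0.5 in percentage points and its size overhead in MB; for the full model it gives +4.0 points for an extra 4.24 MB.

```python
BASELINE_MAP50, BASELINE_SIZE_MB = 0.842, 5.96

# (mAP@0.5, model size in MB) copied from the ablation table above.
ABLATION = {
    "MobileViT": (0.873, 6.58),
    "Focal Modulation": (0.847, 6.17),
    "DyHead": (0.873, 9.33),
    "MobileViT + Focal Modulation": (0.852, 7.95),
    "MobileViT + DyHead": (0.878, 9.82),
    "Focal Modulation + DyHead": (0.855, 9.53),
    "MobileViT + Focal Modulation + DyHead": (0.882, 10.2),
}


def deltas(map50: float, size_mb: float) -> tuple[float, float]:
    """(mAP@0.5 gain in percentage points, size increase in MB) versus the baseline."""
    return round((map50 - BASELINE_MAP50) * 100, 1), round(size_mb - BASELINE_SIZE_MB, 2)


for name, (map50, size_mb) in ABLATION.items():
    gain, extra = deltas(map50, size_mb)
    print(f"{name:40s} {gain:+.1f} pts mAP@0.5   {extra:+.2f} MB")
```

Read this way, MobileViT alone gives most of the accuracy gain (+3.1 points) for the smallest size increase (+0.62 MB), whereas DyHead adds about 3.4 MB.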

| Model | Precision | Recall | F1 | mAP@0.5 | Model Size (MB) | GFLOPs | FPS |
|---|---|---|---|---|---|---|---|
| YOLOv3 | 0.872 | 0.774 | 0.820 | 0.803 | 17.4 | 13 | 142.7 |
| YOLOv5 | 0.902 | 0.806 | 0.851 | 0.837 | 3.72 | 4.2 | 177.3 |
| YOLOv8 | 0.914 | 0.799 | 0.853 | 0.842 | 5.96 | 8.2 | 164.38 |
| RT-DETR | 0.655 | 0.621 | 0.637 | 0.622 | 66.2 | 108 | 50.42 |
| YOLOv9 | 0.923 | 0.824 | 0.871 | 0.863 | 4.6 | 7.8 | 92.27 |
| YOLOv10 | 0.924 | 0.806 | 0.860 | 0.857 | 5.8 | 8.4 | 144.36 |
| Proposed | 0.925 | 0.847 | 0.884 | 0.882 | 10.2 | 11.8 | 60.76 |
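
The FPS column converts to average per-image latency as 1000 / FPS. By this measure the proposed model (60.76 FPS) needs about 16.5 ms per image versus about 6.1 ms for YOLOv8 (164.38 FPS), roughly 2.7 times slower in exchange for +4.0 points of mAP@0.5. The sketch below, with values copied from the table and an illustrative helper name, lists every model this way; FPS figures depend on the test hardware, input size, and batch size, which the table does not state.

```python
# (mAP@0.5, FPS) copied from the model comparison table above.
RESULTS = {
    "YOLOv3": (0.803, 142.7),
    "YOLOv5": (0.837, 177.3),
    "YOLOv8": (0.842, 164.38),
    "RT-DETR": (0.622, 50.42),
    "YOLOv9": (0.863, 92.27),
    "YOLOv10": (0.857, 144.36),
    "Proposed": (0.882, 60.76),
}


def latency_ms(fps: float) -> float:
    """Average time per image implied by a throughput in frames per second."""
    return 1000.0 / fps


# Sort by accuracy so the speed cost of each accuracy step is easy to see.
for name, (map50, fps) in sorted(RESULTS.items(), key=lambda kv: kv[1][0], reverse=True):
    print(f"{name:9s} mAP@0.5 = {map50:.3f}   {latency_ms(fps):6.2f} ms/image")
```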

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shi, H.; Wang, Y.; Feng, X.; Xie, Y.; Zhu, Z.; Guo, H.; Jin, G. YOLOv8-MFD: An Enhanced Detection Model for Pine Wilt Diseased Trees Using UAV Imagery. Sensors 2025, 25, 3315. https://doi.org/10.3390/s25113315

Shi H, Wang Y, Feng X, Xie Y, Zhu Z, Guo H, Jin G. YOLOv8-MFD: An Enhanced Detection Model for Pine Wilt Diseased Trees Using UAV Imagery. Sensors. 2025; 25(11):3315. https://doi.org/10.3390/s25113315

Chicago/Turabian Style: Shi, Hua, Yonghang Wang, Xiaozhou Feng, Yufen Xie, Zhenhui Zhu, Hui Guo, and Guofeng Jin. 2025. "YOLOv8-MFD: An Enhanced Detection Model for Pine Wilt Diseased Trees Using UAV Imagery" Sensors 25, no. 11: 3315. https://doi.org/10.3390/s25113315

APA Style: Shi, H., Wang, Y., Feng, X., Xie, Y., Zhu, Z., Guo, H., & Jin, G. (2025). YOLOv8-MFD: An Enhanced Detection Model for Pine Wilt Diseased Trees Using UAV Imagery. Sensors, 25(11), 3315. https://doi.org/10.3390/s25113315