Classification of Electroencephalography Motor Execution Signals Using a Hybrid Neural Network Based on Instantaneous Frequency and Amplitude Obtained via Empirical Wavelet Transform

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset Description

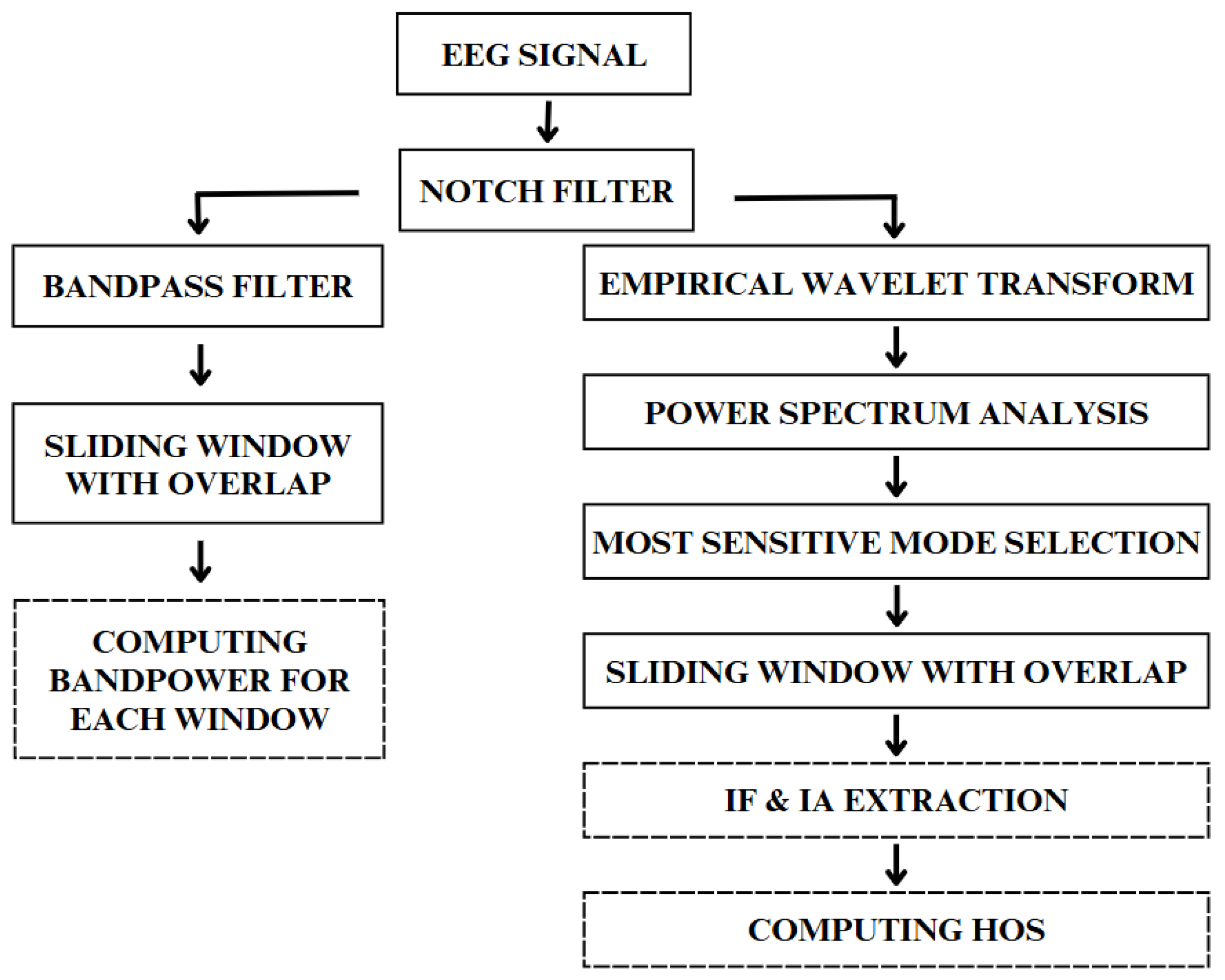

2.2. Preprocessing

- Mean absolute deviation (MAD) measures the spread (dispersion) of the data distribution (Equation (4)).

- Skewness (Sk) describes the asymmetry of the data distribution around its mean (Equation (6)).

- Kurtosis (Kt) quantifies the flatness (or peakedness) of the data distribution relative to a Gaussian distribution (Equation (7)); combined with skewness, it helps identify departures from linearity, stationarity, and Gaussianity in signals [45].
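The three statistics can be computed per channel as sketched below. Equations (4), (6), and (7) are not reproduced in this section, so this minimal sketch assumes the common population definitions: MAD around the mean, skewness as m3/m2^1.5, and Pearson (non-excess) kurtosis as m4/m2^2, which equals 3 for a Gaussian. These match `scipy.stats.skew(x)` and `scipy.stats.kurtosis(x, fisher=False)` with their default `bias=True`; the study may instead use bias-corrected or excess-kurtosis variants. The helper names (`mad`, `skewness`, `kurtosis`) and the random example epoch are hypothetical.

```python
import numpy as np


def mad(x, axis=-1):
    """Mean absolute deviation of x around its mean, along `axis`."""
    x = np.asarray(x, dtype=float)
    return np.mean(np.abs(x - x.mean(axis=axis, keepdims=True)), axis=axis)


def _central_moment(x, order, axis=-1):
    """Population (biased) central moment of the given order along `axis`."""
    x = np.asarray(x, dtype=float)
    return np.mean((x - x.mean(axis=axis, keepdims=True)) ** order, axis=axis)


def skewness(x, axis=-1):
    """Population skewness m3 / m2**1.5 (0 for a symmetric distribution)."""
    m2 = _central_moment(x, 2, axis)
    return _central_moment(x, 3, axis) / m2 ** 1.5


def kurtosis(x, axis=-1):
    """Pearson (non-excess) kurtosis m4 / m2**2 (3 for a Gaussian)."""
    m2 = _central_moment(x, 2, axis)
    return _central_moment(x, 4, axis) / m2 ** 2


if __name__ == "__main__":
    # Hypothetical epoch: 4 channels x 1000 samples of Gaussian noise.
    rng = np.random.default_rng(0)
    epoch = rng.standard_normal((4, 1000))
    # One value per channel and statistic, stacked into a feature vector.
    features = np.concatenate([mad(epoch), skewness(epoch), kurtosis(epoch)])
    print(features.shape)  # (12,)
```

Operating along the last axis lets a `(channels, samples)` epoch yield one value per channel for each statistic. A constant (flat) channel has m2 = 0, so skewness and kurtosis are undefined there and NumPy returns `nan`; such channels would need to be handled before feature extraction.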

2.3. Deep Learning Models

- Input layer: receives the input data as multichannel time-series matrices.

- Convolutional layers: a stack of two 1D convolutional layers (32 filters of size 5 and 64 filters of size 3, respectively) captures local temporal features of the multichannel signal.

- Pooling layers: max-pooling layers with a stride of 2 roughly halve the temporal dimension and help reduce overfitting [87].

- Bidirectional LSTM layer: a bidirectional long short-term memory (BiLSTM) layer with 64 units lets the model learn long-range temporal dependencies in both the forward and backward directions, which is important for modeling the dynamics of gestures [88].

- Dense layers: a fully connected layer with 64 neurons (ReLU activation) followed by an output layer with a single neuron (sigmoid activation) produces the binary prediction.
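The layer stack above can be sketched in Keras (TensorFlow, which the reference list cites). This is a minimal sketch, not the authors' code: the ReLU activations in the convolutional layers, `"same"` padding, a pool size of 2 with one pooling layer after each convolution, the Adam optimizer, the binary cross-entropy loss, and the example input size (`n_timesteps=512`, `n_channels=16`) are all assumptions, and the function name `build_hnn` is hypothetical. The 64 BiLSTM units are read here as 64 per direction, as Keras' `Bidirectional` wrapper does.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers


def build_hnn(n_timesteps: int, n_channels: int) -> tf.keras.Model:
    """Hypothetical CNN-BiLSTM binary classifier following the listed layers."""
    # Input: one multichannel time-series matrix per example.
    inputs = tf.keras.Input(shape=(n_timesteps, n_channels))
    # Conv block 1: 32 filters of size 5 (ReLU and "same" padding assumed).
    x = layers.Conv1D(32, kernel_size=5, activation="relu", padding="same")(inputs)
    # Max pooling with stride 2 halves the time axis (pool size 2 assumed).
    x = layers.MaxPooling1D(pool_size=2, strides=2)(x)
    # Conv block 2: 64 filters of size 3.
    x = layers.Conv1D(64, kernel_size=3, activation="relu", padding="same")(x)
    x = layers.MaxPooling1D(pool_size=2, strides=2)(x)
    # BiLSTM: 64 units per direction; outputs are concatenated (128 values).
    x = layers.Bidirectional(layers.LSTM(64))(x)
    # Fully connected layer with 64 ReLU neurons.
    x = layers.Dense(64, activation="relu")(x)
    # Single sigmoid neuron gives the probability of the positive class.
    outputs = layers.Dense(1, activation="sigmoid")(x)
    model = tf.keras.Model(inputs, outputs)
    # Optimizer and loss are assumptions, not taken from the study.
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model


if __name__ == "__main__":
    model = build_hnn(n_timesteps=512, n_channels=16)
    model.summary()
    # Dummy forward pass on two all-zero examples.
    probs = model.predict(np.zeros((2, 512, 16), dtype="float32"), verbose=0)
    print(probs.shape)  # (2, 1)
```

With 16 input channels this sketch has 83,169 trainable parameters, of which 66,048 belong to the BiLSTM, so the recurrent layer dominates the model size while the two pooling layers shrink the sequence it has to process fourfold.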

2.4. Performance Metrics

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wolpaw, J.R.; Birbaumer, N.; McFarland, D.J.; Pfurtscheller, G.; Vaughan, T.M. Brain–computer interfaces for communication and control. Clin. Neurophysiol. 2002, 113, 767–791.

2. Chaudhary, U.; Birbaumer, N.; Ramos-Murguialday, A. Brain–computer interfaces for communication and rehabilitation. Nat. Rev. Neurol. 2016, 12, 513–525.

3. Rao, R.P.N. Brain-Computer Interfacing: An Introduction; Cambridge University Press: Cambridge, UK, 2013.

4. Sieghartsleitner, S.; Sebastián-Romagosa, M.; Ortner, R.; Cho, W.; Guger, C. BCIs for stroke rehabilitation. In Brain-Computer Interfaces; Elsevier: Amsterdam, The Netherlands, 2025; pp. 131–150.

5. Ang, K.K.; Guan, C. Brain–computer interface for neurorehabilitation of upper limb after stroke. Proc. IEEE 2015, 103, 944–953.

6. Sieghartsleitner, S.; Sebastián-Romagosa, M.; Cho, W.; Grünwald, J.; Ortner, R.; Scharinger, J.; Kamada, K.; Guger, C. Upper extremity training followed by lower extremity training with a brain–computer interface rehabilitation system. Front. Neurosci. 2024, 18, 1346607.

7. Sebastián-Romagosa, M.; Cho, W.; Ortner, R.; Sieghartsleitner, S.; Von Oertzen, T.J.; Kamada, K.; Laureys, S.; Allison, B.Z.; Guger, C. Brain–computer interface treatment for gait rehabilitation in stroke patients. Front. Neurosci. 2023, 17, 1256077.

8. Farwell, L.A.; Donchin, E. Talking off the top of your head: Toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr. Clin. Neurophysiol. 1988, 70, 510–523.

9. Kohler, J.; Ottenhoff, M.C.; Goulis, S.; Angrick, M.; Colon, A.J.; Wagner, L.; Tousseyn, S.; Kubben, P.L.; Herff, C. Synthesizing speech from intracranial depth electrodes using an encoder-decoder framework. arXiv 2021, arXiv:2111.01457.

10. Wairagkar, M.; Card, N.S.; Singer-Clark, T.; Hou, X.; Iacobacci, C.; Hochberg, L.R.; Brandman, D.M.; Stavisky, S.D. An instantaneous voice synthesis neuroprosthesis. bioRxiv 2024.

11. Collinger, J.L.; Wodlinger, B.; Downey, J.E.; Wang, W.; Tyler-Kabara, E.C.; Weber, D.J.; McMorland, A.J.; Velliste, M.; Boninger, M.L.; Schwartz, A.B. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet 2013, 381, 557–564.

12. Hochberg, L.R.; Bacher, D.; Jarosiewicz, B.; Masse, N.Y.; Simeral, J.D.; Vogel, J.; Haddadin, S.; Liu, J.; Cash, S.S.; Van Der Smagt, P.; et al. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature 2012, 485, 372–375.

13. Müller-Putz, G.R.; Scherer, R.; Pfurtscheller, G.; Rupp, R. EEG-based neuroprosthesis control: A step towards clinical practice. Neurosci. Lett. 2005, 382, 169–174.

14. Murphy, D.P.; Bai, O.; Gorgey, A.S.; Fox, J.; Lovegreen, W.T.; Burkhardt, B.W.; Atri, R.; Marquez, J.S.; Li, Q.; Fei, D.Y. Electroencephalogram-based brain–computer interface and lower-limb prosthesis control: A case study. Front. Neurol. 2017, 8, 696.

15. Rebsamen, B.; Burdet, E.; Guan, C.; Zhang, H.; Teo, C.L.; Zeng, Q.; Ang, M.; Laugier, C. A brain-controlled wheelchair based on P300 and path guidance. In Proceedings of the First IEEE/RAS-EMBS International Conference on Biomedical Robotics and Biomechatronics, Pisa, Italy, 20–22 February 2006; IEEE: Piscataway Township, NJ, USA, 2006; pp. 1101–1106.

16. Na, R.; Hu, C.; Sun, Y.; Wang, S.; Zhang, S.; Han, M.; Yin, W.; Zhang, J.; Chen, X.; Zheng, D. An embedded lightweight SSVEP-BCI electric wheelchair with hybrid stimulator. Digit. Signal Process. 2021, 116, 103101.

17. Choi, K. Control of a vehicle with EEG signals in real-time and system evaluation. Eur. J. Appl. Physiol. 2012, 112, 755–766.

18. Choudhari, V.; Han, C.; Bickel, S.; Mehta, A.D.; Schevon, C.; McKhann, G.M.; Mesgarani, N. Brain-Controlled Augmented Hearing for Spatially Moving Conversations in Multi-Talker Environments. Adv. Sci. 2024, 11, 2401379.

19. Münßinger, J.I.; Halder, S.; Kleih, S.C.; Furdea, A.; Raco, V.; Hösle, A.; Kübler, A. Brain painting: First evaluation of a new brain–computer interface application with ALS-patients and healthy volunteers. Front. Neurosci. 2010, 4, 182.

20. Zhu, H.Y.; Hieu, N.Q.; Hoang, D.T.; Nguyen, D.N.; Lin, C.T. A human-centric metaverse enabled by brain–computer interface: A survey. IEEE Commun. Surv. Tutor. 2024, 26, 2120–2145.

21. Baseler, H.; Sutter, E.; Klein, S.; Carney, T. The topography of visual evoked response properties across the visual field. Electroencephalogr. Clin. Neurophysiol. 1994, 90, 65–81.

22. Li, J.; Pu, J.; Cui, H.; Xie, X.; Xu, S.; Li, T.; Hu, Y. An online P300 brain–computer interface based on tactile selective attention of somatosensory electrical stimulation. J. Med. Biol. Eng. 2019, 39, 732–738.

23. Huang, R.S.; Jung, T.P.; Makeig, S. Event-related brain dynamics in continuous sustained-attention tasks. In Proceedings of the International Conference on Foundations of Augmented Cognition, Beijing, China, 22–27 July 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 65–74.

24. Pfurtscheller, G.; Da Silva, F.L. Event-related EEG/MEG synchronization and desynchronization: Basic principles. Clin. Neurophysiol. 1999, 110, 1842–1857.

25. Pfurtscheller, G.; Neuper, C. Motor imagery and direct brain–computer communication. Proc. IEEE 2001, 89, 1123–1134.

26. Tang, Z.; Sun, S.; Zhang, S.; Chen, Y.; Li, C.; Chen, S. A brain-machine interface based on ERD/ERS for an upper-limb exoskeleton control. Sensors 2016, 16, 2050.

27. Dekleva, B.M.; Chowdhury, R.H.; Batista, A.P.; Chase, S.M.; Yu, B.M.; Boninger, M.L.; Collinger, J.L. Motor cortex retains and reorients neural dynamics during motor imagery. Nat. Hum. Behav. 2024, 8, 729–742.

28. Neuper, C.; Wörtz, M.; Pfurtscheller, G. ERD/ERS patterns reflecting sensorimotor activation and deactivation. Prog. Brain Res. 2006, 159, 211–222.

29. Gruenwald, J.; Znobishchev, A.; Kapeller, C.; Kamada, K.; Scharinger, J.; Guger, C. Time-variant linear discriminant analysis improves hand gesture and finger movement decoding for invasive brain–computer interfaces. Front. Neurosci. 2019, 13, 901.

30. Schober, T.; Wenzel, K.; Feichtinger, M.; Schwingenschuh, P.; Strebel, A.; Krausz, G.; Pfurtscheller, G. Restless legs syndrome: Changes of induced electroencephalographic beta oscillations—An ERD/ERS study. Sleep 2004, 27, 147–150.

31. Shuqfa, Z.; Belkacem, A.N.; Lakas, A. Decoding multi-class motor imagery and motor execution tasks using Riemannian geometry algorithms on large EEG datasets. Sensors 2023, 23, 5051.

32. Mohamed, A.F.; Jusas, V. Developing Innovative Feature Extraction Techniques from the Emotion Recognition Field on Motor Imagery Using Brain–Computer Interface EEG Signals. Appl. Sci. 2024, 14, 11323.

33. Molla, M.K.I.; Ahamed, S.; Almassri, A.M.; Wagatsuma, H. Classification of Motor Imagery Using Trial Extension in Spatial Domain with Rhythmic Components of EEG. Mathematics 2023, 11, 3801.

34. Sadiq, M.T.; Yu, X.; Yuan, Z.; Fan, Z.; Rehman, A.U.; Li, G.; Xiao, G. Motor imagery EEG signals classification based on mode amplitude and frequency components using empirical wavelet transform. IEEE Access 2019, 7, 127678–127692.

35. Siviero, I.; Brusini, L.; Menegaz, G.; Storti, S.F. Motor-imagery EEG signal decoding using multichannel-empirical wavelet transform for brain computer interfaces. In Proceedings of the 2022 IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI), Ioannina, Greece, 27–30 September 2022; IEEE: Piscataway Township, NJ, USA, 2022; pp. 1–4.

36. Jolliffe, I.T. Principal Component Analysis for Special Types of Data; Springer: Berlin/Heidelberg, Germany, 2002.

37. Xu, N.; Gao, X.; Hong, B.; Miao, X.; Gao, S.; Yang, F. BCI competition 2003-data set IIb: Enhancing P300 wave detection using ICA-based subspace projections for BCI applications. IEEE Trans. Biomed. Eng. 2004, 51, 1067–1072.

38. Alomari, M.H.; Samaha, A.; AlKamha, K. Automated classification of L/R hand movement EEG signals using advanced feature extraction and machine learning. arXiv 2013, arXiv:1312.2877.

39. Yu, X.; Chum, P.; Sim, K.B. Analysis the effect of PCA for feature reduction in non-stationary EEG based motor imagery of BCI system. Optik 2014, 125, 1498–1502.

40. Kim, H.; Yoshimura, N.; Koike, Y. Characteristics of kinematic parameters in decoding intended reaching movements using electroencephalography (EEG). Front. Neurosci. 2019, 13, 1148.

41. Mohamed, A.K.; Marwala, T.; John, L. Single-trial EEG discrimination between wrist and finger movement imagery and execution in a sensorimotor BCI. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; IEEE: Piscataway Township, NJ, USA, 2011; pp. 6289–6293.

42. Wan, B.; Zhou, Z.; Ming, D.; Qi, H. Detection of ERD/ERS signals based on ICA and PSD. J. Tianjin Univ. 2008, 41, 1383–1390.

43. Zou, Y.; Zhao, X.; Chu, Y.; Xu, W.; Han, J.; Li, W. A supervised independent component analysis algorithm for motion imagery-based brain computer interface. Biomed. Signal Process. Control 2022, 75, 103576.

44. Lotte, F.; Bougrain, L.; Cichocki, A.; Clerc, M.; Congedo, M.; Rakotomamonjy, A.; Yger, F. A review of classification algorithms for EEG-based brain–computer interfaces: A 10 year update. J. Neural Eng. 2018, 15, 031005.

45. Kevric, J.; Subasi, A. Comparison of signal decomposition methods in classification of EEG signals for motor-imagery BCI system. Biomed. Signal Process. Control 2017, 31, 398–406.

46. Li, Y.; Wen, P.P. Clustering technique-based least square support vector machine for EEG signal classification. Comput. Methods Programs Biomed. 2011, 104, 358–372.

47. Wu, W.; Gao, X.; Hong, B.; Gao, S. Classifying single-trial EEG during motor imagery by iterative spatio-spectral patterns learning (ISSPL). IEEE Trans. Biomed. Eng. 2008, 55, 1733–1743.

48. Wang, H.; Zhang, Y. Detection of motor imagery EEG signals employing Naïve Bayes based learning process. Measurement 2016, 86, 148–158.

49. Siuly, S.; Li, Y. Improving the separability of motor imagery EEG signals using a cross correlation-based least square support vector machine for brain–computer interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2012, 20, 526–538.

50. Sadiq, M.T.; Yu, X.; Yuan, Z.; Zeming, F.; Rehman, A.U.; Ullah, I.; Li, G.; Xiao, G. Motor imagery EEG signals decoding by multivariate empirical wavelet transform-based framework for robust brain–computer interfaces. IEEE Access 2019, 7, 171431–171451.

51. Shan, W.; Wang, Y.; He, Q.; Xie, P. EEG Recognition of Motor Imagery Based on EWT in Driving Assistance. In Proceedings of the 2017 7th International Conference on Mechatronics, Computer and Education Informationization (MCEI 2017), Shenyang, China, 16–18 June 2017; Atlantis Press: Dordrecht, The Netherlands, 2017; pp. 11–15.

52. Lee, H.K.; Choi, Y.S. Application of continuous wavelet transform and convolutional neural network in decoding motor imagery brain–computer interface. Entropy 2019, 21, 1199.

53. Karbasi, M.; Jamei, M.; Malik, A.; Kisi, O.; Yaseen, Z.M. Multi-steps drought forecasting in arid and humid climate environments: Development of integrative machine learning model. Agric. Water Manag. 2023, 281, 108210.

54. Yücelbaş, C.; Yücelbaş, Ş.; Özşen, S.; Tezel, G.; Küççüktürk, S.; Yosunkaya, Ş. Automatic detection of sleep spindles with the use of STFT, EMD and DWT methods. Neural Comput. Appl. 2018, 29, 17–33.

55. Gilles, J. Empirical wavelet transform. IEEE Trans. Signal Process. 2013, 61, 3999–4010.

56. Elouaham, S.; Dliou, A.; Jenkal, W.; Louzazni, M.; Zougagh, H.; Dlimi, S. Empirical wavelet transform based ecg signal filtering method. J. Electr. Comput. Eng. 2024, 2024, 9050909.

57. Nayak, A.B.; Shah, A.; Maheshwari, S.; Anand, V.; Chakraborty, S.; Kumar, T.S. An empirical wavelet transform-based approach for motion artifact removal in electroencephalogram signals. Decis. Anal. J. 2024, 10, 100420.

58. Anuragi, A.; Sisodia, D.S. Empirical wavelet transform based automated alcoholism detecting using EEG signal features. Biomed. Signal Process. Control 2020, 57, 101777.

59. Al-Nashash, H.A.; Zalzala, A.M.; Thakor, N.V. A neural networks approach to EEG signals modeling. In Proceedings of the 25th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (IEEE Cat. No. 03CH37439), Cancun, Mexico, 17–21 September 2003; IEEE: Piscataway Township, NJ, USA, 2003; Volume 3, pp. 2451–2454.

60. Tangwiriyasakul, C.; Mocioiu, V.; van Putten, M.J.; Rutten, W.L. Classification of motor imagery performance in acute stroke. J. Neural Eng. 2014, 11, 036001.

61. Chang, T.; Liao, J.; Wu, Y.; Quan, Y.; Chen, S.; Zhao, W.; Li, Y.; Yu, P.; Fang, Y.; Zong, Y.; et al. Improving Performance of Electroencephalography-Based Malignant Brain Tumors Screening from Neural Modulation of Motor Execution. SSRN. p. 5044979. Available online: https://ssrn.com/abstract=5044979 (accessed on 21 May 2025).

62. Ahmed, S.S.; Khan, M.; Bukhari, S.M.; Khan, R.A. Robust Feature Engineering Techniques for Designing Motor Imagery-Based Bci Systems. SSRN. p. 5081953. Available online: https://ssrn.com/abstract=5081953 (accessed on 21 May 2025).

63. Chen, C.; Chen, P.; Belkacem, A.N.; Lu, L.; Xu, R.; Tan, W.; Li, P.; Gao, Q.; Shin, D.; Wang, C.; et al. Neural activities classification of left and right finger gestures during motor execution and motor imagery. Brain-Comput. Interfaces 2021, 8, 117–127.

64. Rakhmatulin, I.; Dao, M.S.; Nassibi, A.; Mandic, D. Exploring convolutional neural network architectures for EEG feature extraction. Sensors 2024, 24, 877.

65. Lun, X.; Yu, Z.; Chen, T.; Wang, F.; Hou, Y. A simplified CNN classification method for MI-EEG via the electrode pairs signals. Front. Hum. Neurosci. 2020, 14, 338.

66. Shanmugam, S.; Dharmar, S. A CNN-LSTM hybrid network for automatic seizure detection in EEG signals. Neural Comput. Appl. 2023, 35, 20605–20617.

67. de Benito-Gorron, D.; Lozano-Diez, A.; Toledano, D.T.; Gonzalez-Rodriguez, J. Exploring convolutional, recurrent, and hybrid deep neural networks for speech and music detection in a large audio dataset. EURASIP J. Audio Speech Music Process. 2019, 2019, 9.

68. Rajagukguk, R.A.; Ramadhan, R.A.; Lee, H.J. A review on deep learning models for forecasting time series data of solar irradiance and photovoltaic power. Energies 2020, 13, 6623.

69. Haghighi, E.B.; Palm, G.; Rahmati, M.; Yazdanpanah, M.J. A new class of multi-stable neural networks: Stability analysis and learning process. Neural Netw. 2015, 65, 53–64.

70. Simard, P.Y.; Steinkraus, D.; Platt, J.C. Best practices for convolutional neural networks applied to visual document analysis. In Proceedings of the International Conference on Document Analysis and Recognition (ICDAR), Edinburgh, UK, 6 August 2003; Volume 3.

71. Smit, D.J.; Boomsma, D.I.; Schnack, H.G.; Pol, H.E.H.; de Geus, E.J. Individual differences in EEG spectral power reflect genetic variance in gray and white matter volumes. Twin Res. Hum. Genet. 2012, 15, 384–392.

72. Chen, A.C.; Feng, W.; Zhao, H.; Yin, Y.; Wang, P. EEG default mode network in the human brain: Spectral regional field powers. Neuroimage 2008, 41, 561–574.

73. Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

74. Venugopal, A.; Resende Faria, D. Boosting EEG and ECG Classification with Synthetic Biophysical Data Generated via Generative Adversarial Networks. Appl. Sci. 2024, 14, 10818.

75. Dere, M.D.; Jo, J.H.; Lee, B. Event-driven edge deep learning decoder for real-time gesture classification and neuro-inspired rehabilitation device control. IEEE Trans. Instrum. Meas. 2023, 72, 4011612.

76. Chen, Y.; Akutagawa, M.; Emoto, T.; Kinouchi, Y. The removal of EMG in EEG by neural networks. Physiol. Meas. 2010, 31, 1567.

77. Chen, Y.; Akutagawa, M.; Katayama, M.; Zhang, Q.; Kinouchi, Y. Neural network based EEG denoising. In Proceedings of the 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–25 August 2008; IEEE: Piscataway Township, NJ, USA, 2008; pp. 262–265.

78. McFarland, D.J.; Miner, L.A.; Vaughan, T.M.; Wolpaw, J.R. Mu and beta rhythm topographies during motor imagery and actual movements. Brain Topogr. 2000, 12, 177–186.

79. Lee, B. EMG-EEG Dataset for Upper-Limb Gesture Classification; IEEE: Piscataway Township, NJ, USA, 2023.

80. MathWorks Help Center. Butterworth Filter Design. 2004. Available online: https://www.mathworks.com/help/signal/ref/butter.html (accessed on 14 May 2025).

81. MATLAB Central File Exchange. Empirical Wavelets. 2025. Available online: https://ww2.mathworks.cn/help/wavelet/ug/empirical-wavelet-transform.html (accessed on 23 April 2025).

82. Huang, N.E. Hilbert-Huang Transform and Its Applications; World Scientific: Singapore, 2014; Volume 16.

83. Biju, K.; Hakkim, H.A.; Jibukumar, M. Ictal EEG classification based on amplitude and frequency contours of IMFs. Biocybern. Biomed. Eng. 2017, 37, 172–183.

84. Al Ghayab, H.R.; Li, Y.; Siuly, S.; Abdulla, S. A feature extraction technique based on tunable Q-factor wavelet transform for brain signal classification. J. Neurosci. Methods 2019, 312, 43–52.

85. Kutlu, Y.; Kuntalp, D. Feature extraction for ECG heartbeats using higher order statistics of WPD coefficients. Comput. Methods Programs Biomed. 2012, 105, 257–267.

86. Barrowclough, J.; Nnamoko, N.; Korkontzelos, I. Personalised Affective Classification Through Enhanced EEG Signal Analysis. Appl. Artif. Intell. 2025, 39, 2450568.

87. Sharma, S.; Mehra, R. Implications of pooling strategies in convolutional neural networks: A deep insight. Found. Comput. Decis. Sci. 2019, 44, 303–330.

88. Yang, J.; Huang, X.; Wu, H.; Yang, X. EEG-based emotion classification based on bidirectional long short-term memory network. Procedia Comput. Sci. 2020, 174, 491–504.

89. Hayaty, M.; Muthmainah, S.; Ghufran, S.M. Random and synthetic over-sampling approach to resolve data imbalance in classification. Int. J. Artif. Intell. Res. 2020, 4, 86–94.

90. Berrar, D. Cross-validation. In Reference Module in Life Sciences Encyclopedia of Bioinformatics and Computational Biology; Elsevier: Amsterdam, The Netherlands, 2019.

91. Nti, I.K.; Nyarko-Boateng, O.; Aning, J. Performance of machine learning algorithms with different K values in K-fold cross-validation. Int. J. Inf. Technol. Comput. Sci. 2021, 13, 61–71.

92. Cho, J.H.; Jeong, J.H.; Lee, S.W. NeuroGrasp: Real-time EEG classification of high-level motor imagery tasks using a dual-stage deep learning framework. IEEE Trans. Cybern. 2021, 52, 13279–13292.

93. Kim, S.; Shin, D.Y.; Kim, T.; Lee, S.; Hyun, J.K.; Park, S.M. Enhanced recognition of amputated wrist and hand movements by deep learning method using multimodal fusion of electromyography and electroencephalography. Sensors 2022, 22, 680.

94. Tryon, J.; Trejos, A.L. Evaluating convolutional neural networks as a method of EEG–EMG fusion. Front. Neurorobot. 2021, 15, 692183.

95. Du, Y.; Xu, Y.; Wang, X.; Liu, L.; Ma, P. EEG temporal–spatial transformer for person identification. Sci. Rep. 2022, 12, 14378.

| Classifier | Variant | Accuracy [%] | F1 Score [%] |
|---|---|---|---|
| HNN | 1st with feature extraction (band power) for all channels | 74.56 | 22.77 |
| LDA | 1st with feature extraction (band power) for all channels | 50.12 | 22.04 |
| SVM | 1st with feature extraction (band power) for all channels | 67.82 | 25.33 |
| HNN | 1st without feature extraction for all channels | 74.77 | 14.08 |
| HNN | 2nd with feature extraction (IF + IA) for all channels | 81.91 | 36.89 |
| LDA | 2nd with feature extraction (IF + IA) for all channels | 73.08 | 18.35 |
| SVM | 2nd with feature extraction (IF + IA) for all channels | 75.75 | 17.78 |
| HNN | 2nd without feature extraction for all channels | 76.36 | 16.80 |
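The table reports both accuracy and F1 score. The metric definitions of Section 2.4 are not reproduced here, so the sketch below assumes the standard binary definitions with class 1 as the positive class: accuracy = (TP + TN)/N and F1 = 2TP/(2TP + FP + FN). The study may average F1 differently (for example, per class). The helper names and the toy labels are hypothetical and invented for illustration; they are not the study's data.

```python
from typing import Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple[int, int, int, int]:
    """Return (TP, FP, TN, FN) for binary labels in {0, 1}, class 1 positive."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            tp += 1
        elif t == 0 and p == 1:
            fp += 1
        elif t == 0 and p == 0:
            tn += 1
        else:  # t == 1 and p == 0 (assumes binary labels)
            fn += 1
    return tp, fp, tn, fn


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of correctly classified examples."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return (tp + tn) / (tp + fp + tn + fn)


def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Harmonic mean of precision and recall, written as 2TP / (2TP + FP + FN)."""
    tp, fp, _, fn = confusion_counts(y_true, y_pred)
    denom = 2 * tp + fp + fn
    return 0.0 if denom == 0 else 2 * tp / denom


if __name__ == "__main__":
    # Toy labels: 8 negatives, 2 positives (imbalanced on purpose).
    y_true = [0] * 8 + [1] * 2
    majority_only = [0] * 10  # always predicts the majority class
    print(accuracy(y_true, majority_only), f1_score(y_true, majority_only))  # 0.8 0.0
```

On these toy labels, a classifier that always predicts the majority class reaches 80% accuracy with an F1 score of 0, which illustrates why accuracy alone can be misleading when one class dominates.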

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zych, P.; Filipek, K.; Mrozek-Czajkowska, A.; Kuwałek, P. Classification of Electroencephalography Motor Execution Signals Using a Hybrid Neural Network Based on Instantaneous Frequency and Amplitude Obtained via Empirical Wavelet Transform. Sensors 2025, 25, 3284. https://doi.org/10.3390/s25113284


Chicago/Turabian Style: Zych, Patryk, Kacper Filipek, Agata Mrozek-Czajkowska, and Piotr Kuwałek. 2025. "Classification of Electroencephalography Motor Execution Signals Using a Hybrid Neural Network Based on Instantaneous Frequency and Amplitude Obtained via Empirical Wavelet Transform" Sensors 25, no. 11: 3284. https://doi.org/10.3390/s25113284
