Violins Unveiled: A Photogrammetric Framework Integrating Multiband and Spectroscopic Data for In-Depth Examination of Two Musical Instruments

Abstract

1. Introduction

1.1. Fundamentals and Advantages of Photogrammetry in Cultural Heritage

1.2. Integration Challenges of Multiband Data in 3D Models

1.3. Multiband Photogrammetry and Spectroscopic Techniques for Historical Violin Analysis

2. Materials and Methods

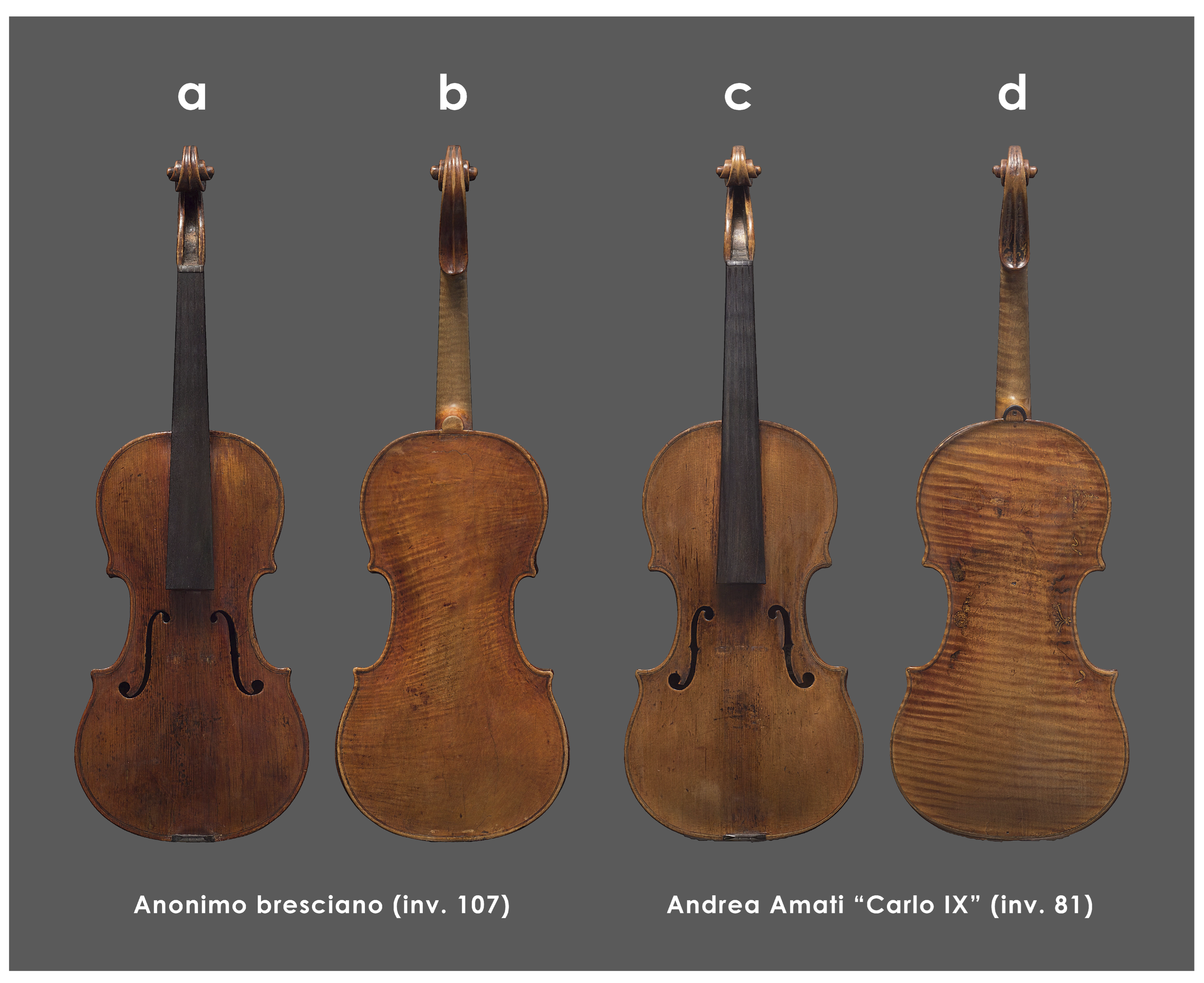

2.1. Historical Violins from the Museo del Violino in Cremona

2.2. Analytical Methods

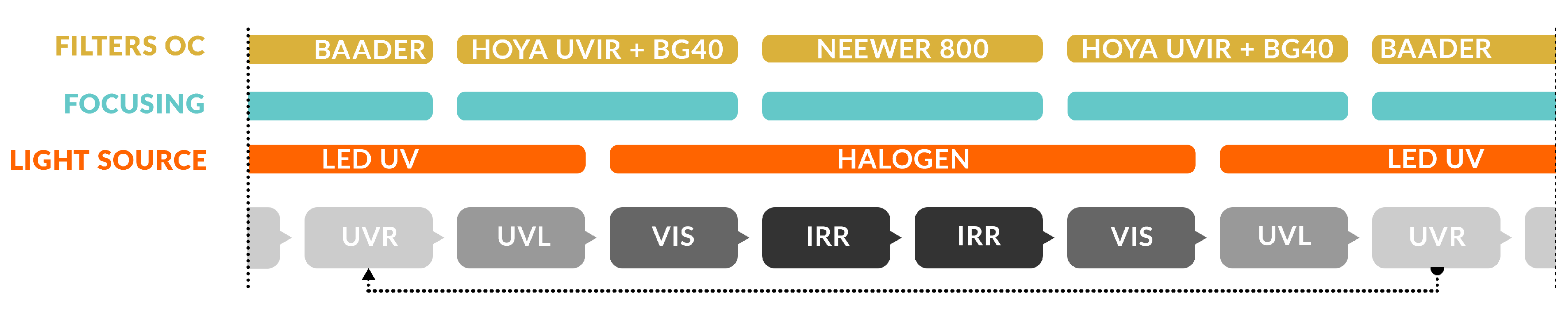

2.2.1. Multiband Photogrammetry

- 504 images (126 × 4 bands) for Inv. 107;

- 476 images (119 × 4 bands) for Inv. 81.
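The totals above follow from shooting every camera station once per band. A minimal sketch of that bookkeeping is given below. It assumes the four bands are the acquired ones (VIS, UVL, UVR, IRR) and uses a hypothetical file naming scheme (`<object>_<station>_<band>.tif`) that is not taken from the original workflow.

```python
from collections import defaultdict

# Assumption: the four acquired bands, as listed in the acquisition table.
BANDS = ("VIS", "UVL", "UVR", "IRR")


def total_images(stations: int, bands: int = len(BANDS)) -> int:
    """Total image count when every camera station is shot once per band."""
    return stations * bands


def group_by_station(filenames):
    """Group hypothetical names such as 'inv107_003_UVL.tif' by station prefix."""
    stations = defaultdict(dict)
    for name in filenames:
        stem = name.rsplit(".", 1)[0]        # drop the file extension
        prefix, band = stem.rsplit("_", 1)   # split off the band suffix
        stations[prefix][band] = name
    return dict(stations)


def incomplete_stations(stations):
    """Station prefixes that lack one or more of the expected bands."""
    return sorted(p for p, found in stations.items() if set(found) != set(BANDS))


if __name__ == "__main__":
    print(total_images(126), total_images(119))  # 504 476
```

A check of this kind is useful before the VIS images are swapped for the other bands, since the substitution only works if every station has a matching image in each band.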

2.2.2. 3D Data Processing

- Image alignment and tie point sparse cloud generation;

- Filtering of the worst tie points by Gradual selection (removing 1/10 of the tie points for each criterion: projection accuracy, reprojection error, and reconstruction uncertainty);

- Camera optimization;

- Depth map calculation (depth filtering: aggressive);

- Meshing (interpolation: enabled);

- Decimation (to between 20k and 50k polygons);

- VIS texture generation;

- NM (Normal Map) generation based on the high-resolution model;

- Export of the VIS model (along with NM) in OBJ format;

- Substitution of the VIS images with those of the MBI bands;

- Re-texturing (keep UV);

- Export of the MBI texture.
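The tie point filtering step in the list above can be illustrated with a small, software-independent sketch. It does not call any photogrammetry package and is not the vendor's implementation. It assumes that a higher value is worse for each metric and that the three criteria are applied one after another to the surviving points; the dictionary keys are hypothetical names.

```python
# Hypothetical metric names, in the order given in the processing list.
CRITERIA = ("projection_accuracy", "reprojection_error", "reconstruction_uncertainty")


def worst_fraction_indices(values, fraction=0.1):
    """Indices of the `fraction` of entries with the highest (worst) values."""
    n_remove = int(len(values) * fraction)
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    return set(order[:n_remove])


def gradual_selection(points, criteria=CRITERIA, fraction=0.1):
    """Drop the worst `fraction` of tie points for each criterion in turn."""
    kept = list(points)
    for key in criteria:
        drop = worst_fraction_indices([p[key] for p in kept], fraction)
        kept = [p for i, p in enumerate(kept) if i not in drop]
    return kept


if __name__ == "__main__":
    # Synthetic tie points with deterministic, made-up metric values.
    points = [
        {
            "id": i,
            "projection_accuracy": (i * 7) % 101,
            "reprojection_error": (i * 13) % 97,
            "reconstruction_uncertainty": (i * 17) % 89,
        }
        for i in range(1000)
    ]
    print(len(gradual_selection(points)))
```

Under these assumptions, 1000 tie points are reduced to 900, then 810, then 729. In practice the camera optimization would be rerun after the filtering, as in the list above.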

2.2.3. Morphological Evaluation with a Structured Light Scanner (Artec Eva)

2.2.4. Physically Based Rendering and Sketchfab

- VIS: Base Color;

- UVL: Anisotropy (value: 0.001);

- IRR: Cavity (value: 0.001);

- UVR: Clear Coat (value: 0.001);

- UVFC: Sheen (value: 0.001);

- IRFC: Emission (value: 0.01);

- NM: Normal Map.
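The layer-to-channel assignment listed above can be kept as a small lookup table, for instance to document an upload or to verify that no material channel is used twice. The sketch below only encodes the values from the list; the class and function names are illustrative and it does not call any Sketchfab API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChannelSlot:
    layer: str              # imaging layer (band or derived map)
    slot: str               # PBR material channel hosting the texture
    value: Optional[float]  # channel value from the list; None where none is given


SLOTS = (
    ChannelSlot("VIS", "Base Color", None),
    ChannelSlot("UVL", "Anisotropy", 0.001),
    ChannelSlot("IRR", "Cavity", 0.001),
    ChannelSlot("UVR", "Clear Coat", 0.001),
    ChannelSlot("UVFC", "Sheen", 0.001),
    ChannelSlot("IRFC", "Emission", 0.01),
    ChannelSlot("NM", "Normal Map", None),
)


def slot_for(layer: str) -> ChannelSlot:
    """Return the channel assignment for a given imaging layer."""
    for entry in SLOTS:
        if entry.layer == layer:
            return entry
    raise KeyError(layer)


def slots_are_unique(slots=SLOTS) -> bool:
    """True if every layer occupies a different material channel."""
    names = [entry.slot for entry in slots]
    return len(names) == len(set(names))


if __name__ == "__main__":
    for entry in SLOTS:
        print(f"{entry.layer:5s} -> {entry.slot} ({entry.value})")
```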

2.2.5. Spectroscopic Analysis

3. Results and Discussion

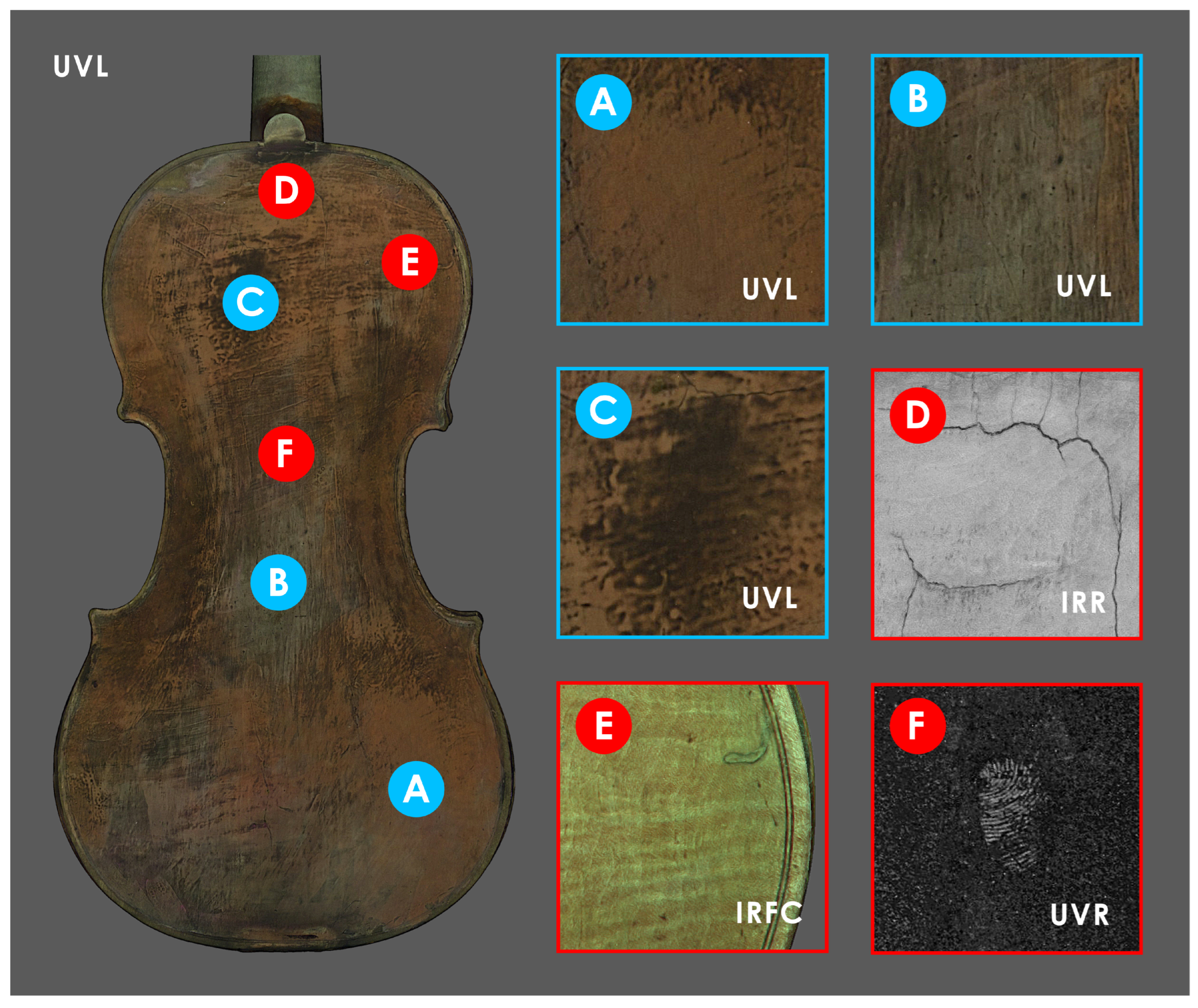

3.1. Multiband Photogrammetry

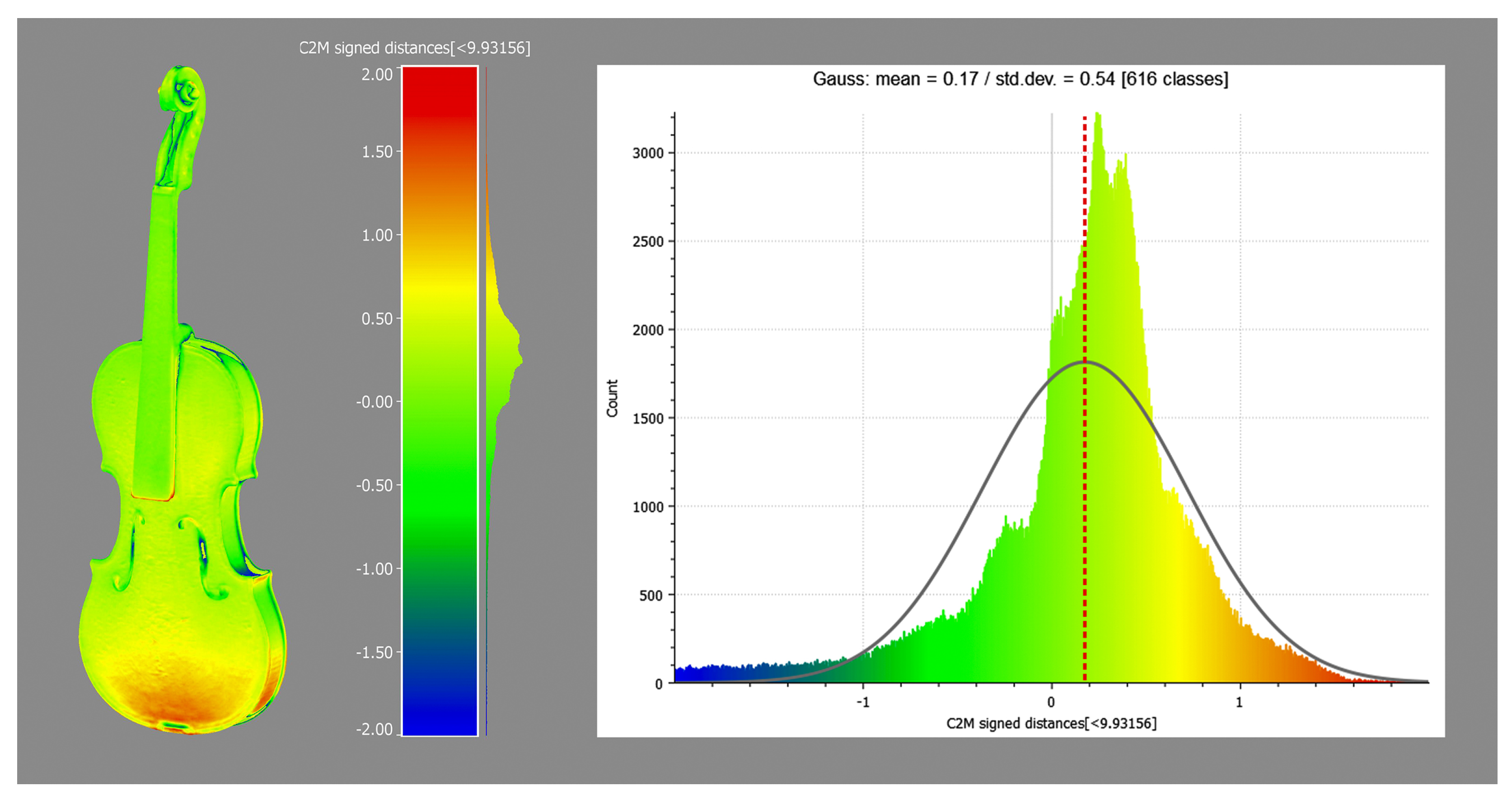

3.2. Metrological and Dimensional Assessment

3.3. Orthomosaics Projections

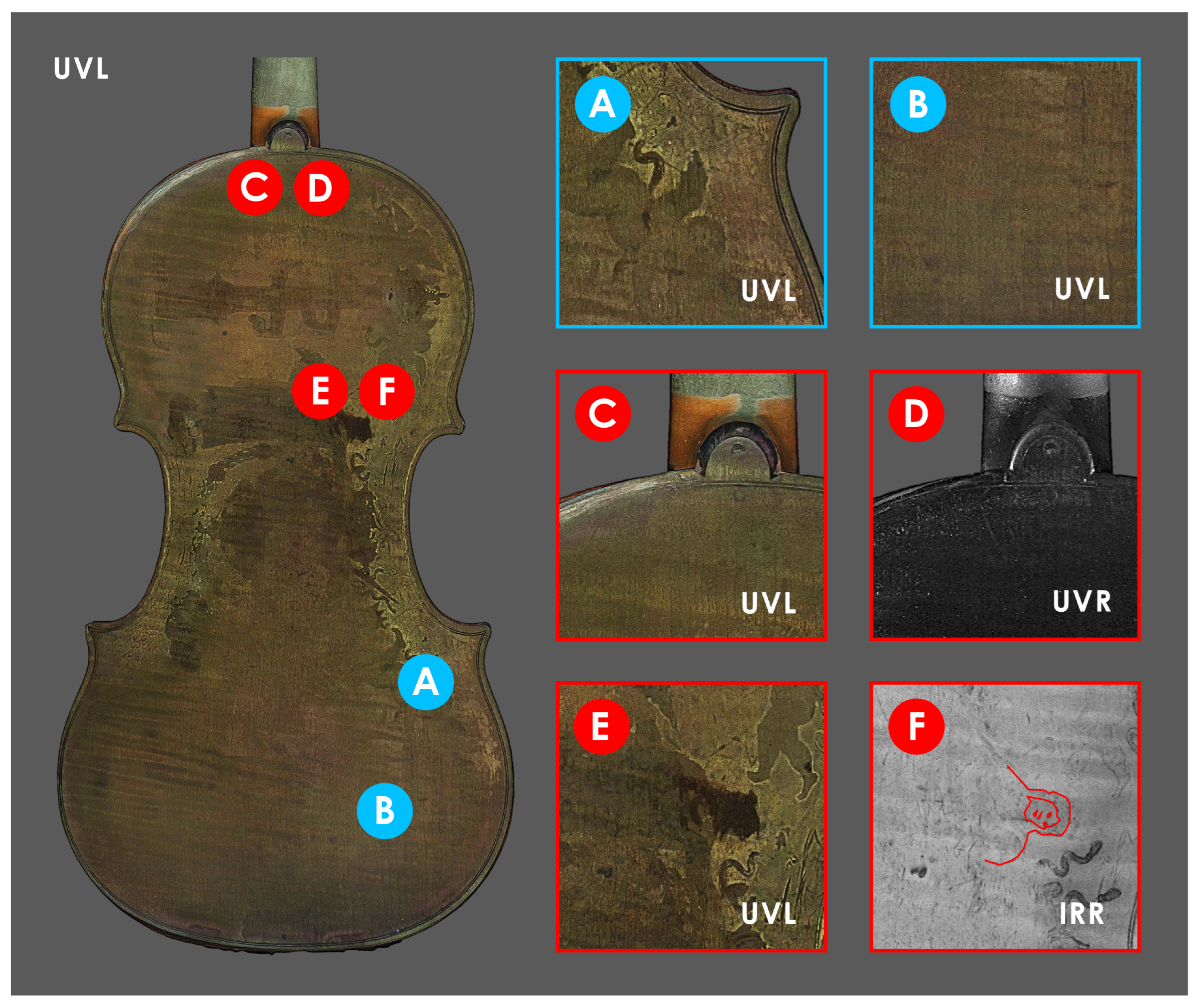

3.4. Identification of ROIs and Spectroscopic Investigation

3.4.1. Andrea Amati “Carlo IX” (Inv. 81)

3.4.2. Anonimo Bresciano (Inv. 107)

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CH | Cultural Heritage |
| MBI | Multiband Imaging |
| PBR | Physically Based Rendering |
| LiDAR | Light Detection and Ranging |
| SLAM | Simultaneous Localization and Mapping |
| V-RTI | Virtual Reflectance Transformation Imaging |
| CAD | Computer-Aided Design |
| GIS | Geographical Information System |
| HBIM | Heritage Building Information Modelling |
| PCA | Principal Component Analysis |
| MBI 3D | Multiband Imaging 3D |
| ROI | Region of Interest |
| UVR | Ultraviolet Reflectography |
| VIS | Visible Light |
| IRR | Infrared Reflectography |
| UVL | Ultraviolet Luminescence |
| MMI | Maximization of Mutual Information |
| SURF | Speeded-Up Robust Features |
| IRFC | Infrared False Color |
| UVFC | Ultraviolet False Color |
| NM | Normal Map |
| ICP | Iterative Closest Point |
| RMSE | Root Mean Square Error |
| ER-FTIR | External Reflection Fourier-Transform Infrared spectroscopy |
| GCP | Ground Control Point |
| KKT | Kramers–Kronig Transformation |

| Spectral Band | On-Camera Filters | Spectral Range | Light Source |
|---|---|---|---|
| UVR | Baader UV | 230–390 nm | 4 UV LED 365 nm |
| UVL | Hoya UV-IR Cut + Schott BG40 | 400–700 nm | 4 UV LED 365 nm |
| VIS | Hoya UV-IR Cut + Schott BG40 | 400–700 nm | 2 Halogen bulbs |
| IRR | Neewer 800 | 800–1000 nm | 2 Halogen bulbs |

| FUJI X-T30 | | Spectral Band | Camera Settings |
|---|---|---|---|
| Sensor | CMOS, 26.1 megapixels | VIS | 3 s, f/8, ISO 200 |
| Sensor Size | APS-C, 23.5 × 15.6 mm | UVR | 3 s, f/8, ISO 200 |
| Image Size | 6240 × 4160 | IRR | 3 s, f/8, ISO 200 |
| Lens | Minolta MC Rokkor-PF 50 mm f/1.7 | UVL | 15 s, f/8, ISO 200 |
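Two quantities follow directly from the camera table above: the pixel pitch of the sensor, and the extra exposure given to the UVL band relative to the others. The short sketch below works them out from the tabulated values only; the function names are illustrative.

```python
import math


def pixel_pitch_um(sensor_mm: float, pixels: int) -> float:
    """Pixel pitch in micrometres from sensor dimension (mm) and pixel count."""
    return sensor_mm / pixels * 1000.0


def exposure_difference_stops(t_long_s: float, t_short_s: float) -> float:
    """Exposure difference in stops between two shutter times at equal f-number and ISO."""
    return math.log2(t_long_s / t_short_s)


if __name__ == "__main__":
    # Sensor size 23.5 x 15.6 mm, image size 6240 x 4160 pixels (from the table).
    print(round(pixel_pitch_um(23.5, 6240), 2))   # horizontal pitch, about 3.77 um
    print(round(pixel_pitch_um(15.6, 4160), 2))   # vertical pitch, 3.75 um
    # UVL is exposed for 15 s, the other bands for 3 s, all at f/8 and ISO 200.
    print(round(exposure_difference_stops(15, 3), 2))  # about 2.32 stops
```

The longer UVL exposure therefore amounts to a factor of five in exposure time, or roughly 2.3 stops, with aperture and ISO unchanged.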

| | Inv. 107 | Inv. 81 |
|---|---|---|
| Images (each band) | 126 | 119 |
| Reprojection error | 0.499 pix | 0.459 pix |
| Polygons | 2,901,226 | 3,285,132 |
| Texture | 8192 × 8192 pix | 8192 × 8192 pix |
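To give a sense of scale, the polygon counts in the table can be compared with the 20k to 50k decimation target from the processing steps. The sketch below assumes that the tabulated counts refer to the high-resolution meshes before decimation, which the table itself does not state.

```python
# Assumption: these are the high-resolution polygon counts before decimation.
HIGH_RES_POLYGONS = {"Inv. 107": 2_901_226, "Inv. 81": 3_285_132}
DECIMATION_TARGETS = (20_000, 50_000)  # range given in the processing steps
TEXTURE_SIDE_PX = 8192


def reduction_factor(high_res: int, target: int) -> float:
    """How many times fewer polygons the decimated mesh has."""
    return high_res / target


def texel_count(side_px: int) -> int:
    """Number of texels in a square texture."""
    return side_px * side_px


if __name__ == "__main__":
    for name, count in HIGH_RES_POLYGONS.items():
        factors = [round(reduction_factor(count, t), 1) for t in DECIMATION_TARGETS]
        print(name, factors)
    print(texel_count(TEXTURE_SIDE_PX))
```

Under that assumption the meshes are reduced by a factor of roughly 58 to 164, which is why the normal map is generated from the high-resolution model: it carries the fine surface detail that the decimated geometry can no longer represent.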


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Di Iorio, F.; Fiocco, G.; Angeloni, R.; Es Sebar, L.; Croci, S.; Cacciatori, F.; Malagodi, M.; Pozzi, F.; Grassini, S. Violins Unveiled: A Photogrammetric Framework Integrating Multiband and Spectroscopic Data for In-Depth Examination of Two Musical Instruments. Sensors 2025, 25, 3278. https://doi.org/10.3390/s25113278

Di Iorio F, Fiocco G, Angeloni R, Es Sebar L, Croci S, Cacciatori F, Malagodi M, Pozzi F, Grassini S. Violins Unveiled: A Photogrammetric Framework Integrating Multiband and Spectroscopic Data for In-Depth Examination of Two Musical Instruments. Sensors. 2025; 25(11):3278. https://doi.org/10.3390/s25113278

Chicago/Turabian Style: Di Iorio, Federico, Giacomo Fiocco, Riccardo Angeloni, Leila Es Sebar, Sara Croci, Fausto Cacciatori, Marco Malagodi, Federica Pozzi, and Sabrina Grassini. 2025. "Violins Unveiled: A Photogrammetric Framework Integrating Multiband and Spectroscopic Data for In-Depth Examination of Two Musical Instruments" Sensors 25, no. 11: 3278. https://doi.org/10.3390/s25113278

APA Style: Di Iorio, F., Fiocco, G., Angeloni, R., Es Sebar, L., Croci, S., Cacciatori, F., Malagodi, M., Pozzi, F., & Grassini, S. (2025). Violins Unveiled: A Photogrammetric Framework Integrating Multiband and Spectroscopic Data for In-Depth Examination of Two Musical Instruments. Sensors, 25(11), 3278. https://doi.org/10.3390/s25113278