1. Introduction

Autonomous Underwater Vehicles (AUVs) have revolutionized oceanographic research and exploration by enabling autonomous operations in deep, inaccessible, and hazardous underwater environments. A pivotal challenge in their deployment, however, lies in achieving precise underwater navigation. Unlike terrestrial or aerial systems, AUVs cannot reliably use Global Navigation Satellite Systems (GNSSs), because the signals are rapidly attenuated in water; GNSS is therefore only available during brief surface intervals [1]. Consequently, navigation predominantly relies on inertial systems such as Fiber-Optic Gyroscopes (FOGs) combined with dead reckoning based on Doppler Velocity Logs (DVLs). While these systems offer high accuracy, their substantial cost (particularly for high-precision FOG and DVL units) is a significant barrier to affordability, especially for smaller AUVs, where they may constitute over … of total platform expenses.

To bridge this gap, recent advancements focus on integrating low-cost Micro-Electro-Mechanical System (MEMS)-based inertial sensors with acoustic positioning through sensor fusion techniques such as Kalman filtering [2].

In this work, we present a novel, cost-effective navigation solution for AUVs combining MEMS-based inertial measurement with acoustic positioning, optimized for real-time operation on low-power microcontrollers. By prioritizing computational efficiency without sacrificing performance, this system aims to democratize AUV access for scientific and commercial applications—from marine biodiversity mapping to underwater infrastructure inspection—where high-cost solutions remain prohibitive. The integration of modular, low-power hardware further ensures scalability across AUV classes, underscoring our goal to redefine the balance between precision, affordability, and operational versatility in underwater navigation.

2. Related Work

Underwater navigation makes extensive use of inertial navigation. Because the errors of pure inertial navigation grow too quickly for it to be usable for more than a few minutes [3], it is normally integrated into multi-sensor data fusion [4].

Combined navigation and sensor data fusion in underwater applications is mostly done using extended Kalman filters [5,6,7]. In [8], an unscented Kalman filter is used to model the distribution of quantization noise. For three-dimensional navigation, error state Kalman filters are used in combination with quaternion calculations, as detailed in [9]. Such filters are typically implemented with 32-bit or 64-bit floating-point arithmetic. Before sufficient computing power was available, Kalman filters were often implemented in square root form [10,11]. Square root Kalman filters are typically used for simple systems with a small number of optimizable states. Now that digital signal processors can perform millions of fixed-point multiplications per second, it has become viable to use square root Kalman filters for complex navigation and data fusion tasks as well. This is attractive because embedded-class processors are often much more cost- and power-efficient than application-class processors.

In [12], a navigation solution for AUVs is proposed based on tightly coupled data fusion of an inertial sensor and a DVL, in which a chi-square detection-aided dual-factor adaptive filter is used to suppress outliers. Ref. [13] proposes Inertial Navigation System (INS)/DVL data fusion using a square-root unscented information filter, which ensures the symmetry and positive definiteness of the covariance or information matrix and enhances the stability of the filter. Ref. [14] proposes a virtual beam predictor based on multi-output least-squares support vector regression to allow data fusion when not all DVL beams are available. In [15], an extended exponential weighted Kalman filter is combined with a long short-term memory neural network to make DVL/INS data fusion more robust against outliers. These advanced filtering algorithms often come with high computational complexity and are therefore difficult to realize in small, low-cost AUVs. Ref. [16] therefore proposed a low-cost AUV navigation solution using an extended Kalman filter with only five states in combination with complementary filtering.

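In summary, the reviewed literature shows that reliable underwater data fusion depends on numerically precise and stable filter implementations, while the more advanced filters impose a computational load that is hard to meet on small, low-cost platforms. Our proposed method combines low-cost hardware with implementation techniques that preserve this precision.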

While data fusion of MEMS inertial sensors and USBL is well established [16,17,18], our contribution focuses on implementing this fusion using 16-bit fixed-point arithmetic and on analyzing the impact of rounding errors in this context. Our method effectively reduces the influence of the rounding errors introduced by the 16-bit fixed-point representation. The reduced computational cost allows us to use a high-dimensional state vector without losing real-time capability on an inexpensive microcontroller. This can, for example, be used to perform calibration and data fusion with the same filter.

3. System Overview

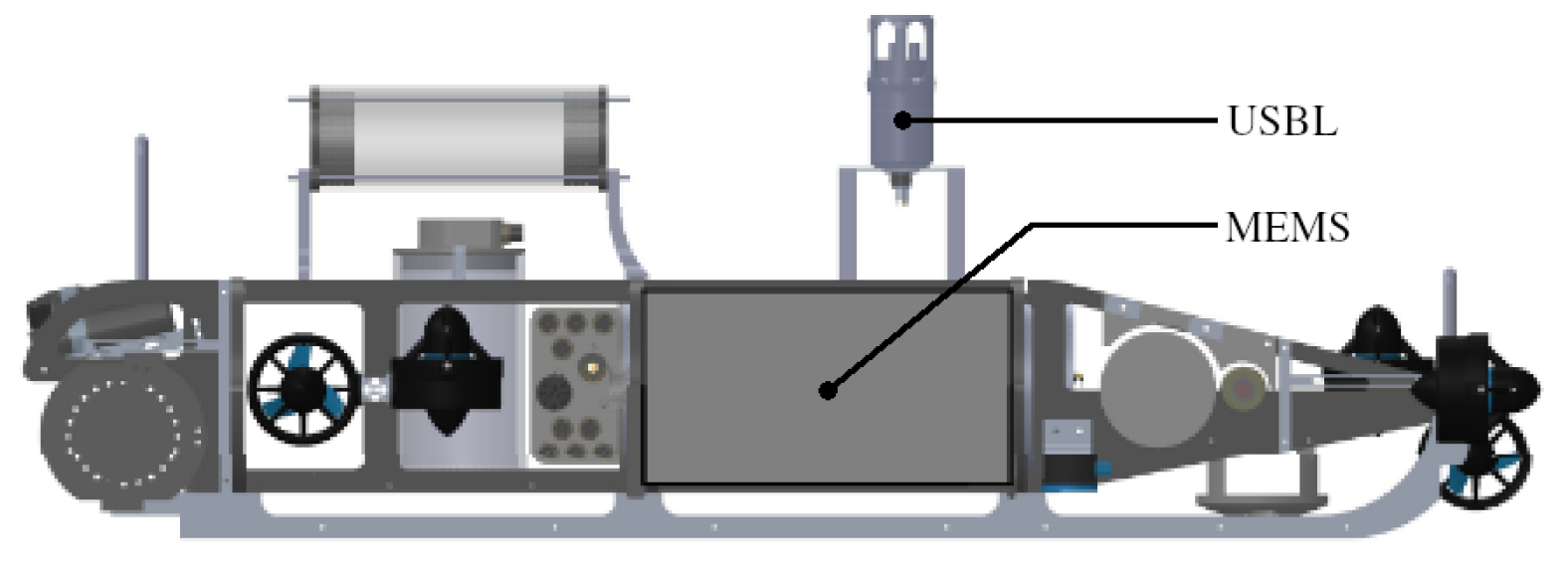

The navigation solution used in this work consists of a Seatrac X150 Ultra-Short Baseline (USBL) beacon, an Advanced Navigation Spatial MEMS inertial sensor, and a Microchip dsPIC33CH512MP508 microcontroller. For laboratory tests, the MEMS sensor was replaced by a much cheaper Bosch BNO055. The microcontroller and the MEMS sensor were directly connected via an RS232 interface, and acceleration, gyroscope, and magnetic measurements were sent to the microcontroller with a sampling rate of 100 Hz. The microcontroller’s main loop also operated at 100 Hz, synchronizing itself to the MEMS sampling rate. A second RS232 interface linked the microcontroller to the AUV’s main PC to transmit the position and velocity estimates. This connection also facilitated the transfer of additional data, such as USBL pings, to the microcontroller and enabled the storage of raw MEMS measurements for post-processing. When using the BNO055 sensor, an I2C interface was employed to connect it to the microcontroller. The dsPIC33CH microcontroller family is a dual-core system, with both cores featuring DSP units. In our setup, one core was dedicated to real-time signal processing, while the other handled communication with the host PC and the MEMS sensor.

4. Data Fusion Method

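A common way to describe the position and motion of a vehicle in three-dimensional space is an indirect or error state Kalman filter that combines a quaternion equation with linear algebra equations, as described in detail in [9]. The proposed data fusion method uses an error state Kalman filter with the 15-dimensional state vector

$$\mathbf{x} = \begin{bmatrix} \mathbf{p}^T & \mathbf{v}^T & \boldsymbol{\theta}^T & \mathbf{a}_b^T & \boldsymbol{\omega}_b^T \end{bmatrix}^T, \tag{1}$$

including position $\mathbf{p}$, velocity $\mathbf{v}$, rotation vector $\boldsymbol{\theta}$, accelerometer bias $\mathbf{a}_b$, and gyroscope bias $\boldsymbol{\omega}_b$, each of which is three-dimensional.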

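The measurement input vector

$$\mathbf{u} = \begin{bmatrix} \mathbf{a}_m^T & \boldsymbol{\omega}_m^T \end{bmatrix}^T \tag{2}$$

consists of the accelerometer measurement $\mathbf{a}_m$ and the gyroscope measurement $\boldsymbol{\omega}_m$.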

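For the nominal state, the rotation vector is replaced by the unit quaternion $\mathbf{q} = \operatorname{Exp}(\boldsymbol{\theta})$, where $\operatorname{Exp}(\cdot)$ is the quaternion exponential map. Quaternion multiplications are written as $\mathbf{q}_1 \otimes \mathbf{q}_2$ to distinguish them from ordinary matrix multiplications.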

The state transition $\mathbf{x}_{k+1} = f(\mathbf{x}_k, \mathbf{u}_k)$ is defined in Equations (3)–(5), where the index $k$ marks the current time step and $k+1$ marks the next time step of a time-discrete system. $T$ is the sampling period, $\mathbf{g}$ is the gravity vector, and $\mathbf{R}\{\boldsymbol{\theta}\}$ is the exponential map of the rotation vector $\boldsymbol{\theta}$ as a rotation matrix.
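A minimal Python/NumPy sketch of one nominal-state step is given below. It assumes that Equations (3)–(5) follow the common first-order discretization of [9]: $\mathbf{R}\{\boldsymbol{\theta}\}$ rotates body-frame vectors into the navigation frame, the biases are subtracted from the raw measurements and kept constant during prediction, and the navigation z-axis points up, so that $\mathbf{g} = [0, 0, -9.81]^T$ m/s². All function names are hypothetical, and the paper's equations may differ in detail.

```python
import numpy as np

def quat_exp(theta):
    """Quaternion exponential map: rotation vector -> unit quaternion [w, x, y, z]."""
    angle = np.linalg.norm(theta)
    if angle < 1e-12:
        # First-order approximation for very small rotations
        q = np.concatenate(([1.0], 0.5 * theta))
        return q / np.linalg.norm(q)
    axis = theta / angle
    return np.concatenate(([np.cos(angle / 2)], np.sin(angle / 2) * axis))

def quat_mul(q1, q2):
    """Hamilton product q1 (x) q2 for quaternions stored as [w, x, y, z]."""
    w1, x1, y1, z1 = q1
    w2, x2, y2, z2 = q2
    return np.array([
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    ])

def quat_to_rot(q):
    """Rotation matrix of a unit quaternion (body frame -> navigation frame)."""
    w, x, y, z = q
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])

def propagate(p, v, q, a_b, w_b, a_m, w_m, T, g=np.array([0.0, 0.0, -9.81])):
    """One nominal-state step (assumed form); biases stay constant in the prediction."""
    R = quat_to_rot(q)
    acc = R @ (a_m - a_b) + g                  # navigation-frame acceleration
    p_next = p + v * T + 0.5 * acc * T**2
    v_next = v + acc * T
    q_next = quat_mul(q, quat_exp((w_m - w_b) * T))
    return p_next, v_next, q_next / np.linalg.norm(q_next), a_b, w_b
```

At rest with level orientation, the accelerometer measures $[0, 0, 9.81]^T$ m/s², so the navigation-frame acceleration cancels and position and velocity remain zero.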

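The state transition function can be linearized around a given working point $\mathbf{x}_k$, $\mathbf{u}_k$ into the matrices $\mathbf{F}$ and $\mathbf{G}$ such that $f(\mathbf{x}_k + \delta\mathbf{x}, \mathbf{u}_k + \delta\mathbf{u}) \approx f(\mathbf{x}_k, \mathbf{u}_k) + \mathbf{F}\,\delta\mathbf{x} + \mathbf{G}\,\delta\mathbf{u}$. Similar to [9], $\mathbf{F}$ and $\mathbf{G}$ can be derived as shown in Equations (6) and (7). $\mathbf{I}$ is the $3 \times 3$ identity matrix and $[\cdot]_\times$ is the skew operator, such that $[\mathbf{a}]_\times \mathbf{b} = \mathbf{a} \times \mathbf{b}$. The rotation matrix is written as $\mathbf{R} = \mathbf{R}\{\boldsymbol{\theta}\}$.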
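Assuming a locally defined rotation error as in [9], with $\mathbf{R}$ rotating body-frame vectors into the navigation frame, the blocks of $\mathbf{F}$ and $\mathbf{G}$ can be assembled as sketched below for the state order of Equation (1) and the input order of Equation (2). The position block is kept to first order in $T$; this, the block layout, and the helper names (`skew`, `rot_exp`, `error_state_jacobians`) are assumptions for illustration rather than a transcription of Equations (6) and (7).

```python
import numpy as np

def skew(a):
    """Skew operator: skew(a) @ b equals the cross product a x b."""
    return np.array([[0.0, -a[2], a[1]],
                     [a[2], 0.0, -a[0]],
                     [-a[1], a[0], 0.0]])

def rot_exp(theta):
    """Rodrigues formula: rotation vector -> rotation matrix."""
    angle = np.linalg.norm(theta)
    K = skew(theta)
    if angle < 1e-12:
        return np.eye(3) + K
    return (np.eye(3) + np.sin(angle) / angle * K
            + (1 - np.cos(angle)) / angle**2 * K @ K)

def error_state_jacobians(R, a_m, w_m, a_b, w_b, T):
    """First-order F (15x15) and G (15x6) for states [p, v, theta, a_b, w_b]."""
    I = np.eye(3)
    F = np.eye(15)
    F[0:3, 3:6] = I * T                        # position <- velocity
    F[3:6, 6:9] = -R @ skew(a_m - a_b) * T     # velocity <- rotation error
    F[3:6, 9:12] = -R * T                      # velocity <- accelerometer bias
    F[6:9, 6:9] = rot_exp((w_m - w_b) * T).T   # rotation <- rotation error
    F[6:9, 12:15] = -I * T                     # rotation <- gyroscope bias
    G = np.zeros((15, 6))
    G[3:6, 0:3] = R * T                        # velocity <- accelerometer input
    G[6:9, 3:6] = I * T                        # rotation <- gyroscope input
    return F, G
```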

For data fusion and filtering, the state vector $\mathbf{x}_k$ is estimated at each time step $k$ as a state estimate vector $\hat{\mathbf{x}}_k$ with covariance matrix $\mathbf{P}_k$. The Kalman filter splits the estimation into a prediction step and a correction step. The prediction step is defined in Equations (8) and (9), where $\mathbf{Q}$ is the covariance matrix of $\mathbf{u}_k$. The prediction step yields the a priori estimate, marked with the superscript $-$.
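Assuming the standard form of Equations (8) and (9), namely $\hat{\mathbf{x}}^-_{k+1} = f(\hat{\mathbf{x}}_k, \mathbf{u}_k)$ and $\mathbf{P}^-_{k+1} = \mathbf{F}\mathbf{P}_k\mathbf{F}^T + \mathbf{G}\mathbf{Q}\mathbf{G}^T$, the covariance part can be sketched as follows. The two-state constant-velocity model is a hypothetical toy example, not the 15-state filter.

```python
import numpy as np

def predict_covariance(P, F, G, Q):
    """A priori covariance P^- = F P F^T + G Q G^T."""
    P_minus = F @ P @ F.T + G @ Q @ G.T
    return 0.5 * (P_minus + P_minus.T)     # re-symmetrize against round-off

# Toy 1D constant-velocity model (position, velocity) driven by an acceleration input
T = 0.01
F = np.array([[1.0, T], [0.0, 1.0]])
G = np.array([[0.5 * T**2], [T]])
Q = np.array([[0.04]])                    # variance of the acceleration input
P = np.diag([1.0, 0.25])
P_minus = predict_covariance(P, F, G, Q)
```

This explicit product form is what the square root formulation below avoids: it never forms $\mathbf{P}$ itself, only its Cholesky factor.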

Given an external observation $\mathbf{z}_k$ of the system state, the associated observation matrix $\mathbf{H}$, and the observation covariance matrix $\mathbf{R}_z$, the correction step can be defined as shown in Equations (10)–(12). The intermediate matrix $\mathbf{K}$ is the Kalman gain.
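Likewise assuming the standard form of Equations (10)–(12), the correction step can be sketched as below. For brevity, the innovation uses the linear prediction $\mathbf{H}\hat{\mathbf{x}}^-$; with a nonlinear observation model such as the USBL model of Section 4.4, the predicted observation would come from the observation function instead. The function name is hypothetical.

```python
import numpy as np

def correct(x_minus, P_minus, z, H, R_z):
    """Kalman correction: gain, state update, and covariance update."""
    S_innov = H @ P_minus @ H.T + R_z                 # innovation covariance
    K = np.linalg.solve(S_innov, H @ P_minus).T       # K = P^- H^T S^-1 (symmetric P^-, S)
    x_plus = x_minus + K @ (z - H @ x_minus)
    P_plus = (np.eye(len(x_minus)) - K @ H) @ P_minus
    return x_plus, P_plus, K
```

For a scalar state with prior variance 4 and measurement variance 4, the gain is 0.5 and the posterior variance halves to 2.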

The Kalman filter steps can be transferred into square root form. In this work, the square root form based on Cholesky factors is used. The Cholesky factor of a matrix $\mathbf{A}$ is written here as $\mathbf{S}_A$, an upper triangular matrix such that $\mathbf{A} = \mathbf{S}_A^T \mathbf{S}_A$. To create the square root form, all covariance matrices are replaced by their Cholesky factors. As shown in [19], the prediction step in square root form can be written as in Equation (13), where $\mathbf{S}_k$ is the Cholesky factor of the covariance of the state estimate and $\boldsymbol{\Theta}$ is an orthogonal matrix used to compress the stacked matrix back to its original size.
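One common way to write the square root prediction under the upper triangular convention $\mathbf{A} = \mathbf{S}_A^T\mathbf{S}_A$ is to stack $\mathbf{S}_k\mathbf{F}^T$ on top of $\mathbf{S}_Q\mathbf{G}^T$ and triangularize the stack; the top block of the result is the a priori factor, because an orthogonal transformation leaves $\mathbf{A}^T\mathbf{A}$ unchanged. The sketch below assumes this form of Equation (13) and uses NumPy's floating-point `numpy.linalg.qr` as a stand-in for the fixed-point Householder routine of Section 4.1; `sqrt_predict` is a hypothetical name.

```python
import numpy as np

def sqrt_predict(S, F, S_Q, G):
    """Upper-triangular S^- with (S^-)^T S^- = F P F^T + G Q G^T, where P = S^T S, Q = S_Q^T S_Q."""
    n = S.shape[0]
    stacked = np.vstack((S @ F.T, S_Q @ G.T))    # (n + m) x n pre-array
    R = np.linalg.qr(stacked, mode="r")          # orthogonal factor Theta is discarded
    return R[:n, :n]
```

Row signs of the QR result may differ from a conventional Cholesky factor, which does not matter because only $\mathbf{S}^T\mathbf{S}$ is used.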

The correction step is transferred into its square root form according to [19], resulting in Equation (14). After the Kalman gain $\mathbf{K}$ and the updated Cholesky factor $\mathbf{S}_k$ have been extracted from the result of Equation (14), the new state estimate $\hat{\mathbf{x}}_k$ can be obtained in the same way as in Equation (11).
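A well-known array form of the square root correction triangularizes the pre-array $\begin{bmatrix}\mathbf{S}_{R_z} & \mathbf{0}\\ \mathbf{S}^-\mathbf{H}^T & \mathbf{S}^-\end{bmatrix}$, where $\mathbf{S}^-$ is the a priori factor. The result holds a Cholesky factor $\mathbf{X}$ of the innovation covariance in the top-left block, a block $\mathbf{Y}$ with $\mathbf{X}^T\mathbf{Y} = \mathbf{H}\mathbf{P}^-$ in the top-right block, and the updated factor in the bottom-right block, so the Kalman gain follows as $\mathbf{K} = \mathbf{Y}^T\mathbf{X}^{-T}$. The sketch assumes that Equation (14) has this structure; floating-point QR again stands in for the fixed-point Householder routine, and the names are hypothetical.

```python
import numpy as np

def sqrt_correct(x_minus, S_minus, z, H, S_Rz):
    """Square root correction via one QR; returns x^+, S^+ and the Kalman gain K."""
    m, n = H.shape
    pre = np.block([[S_Rz, np.zeros((m, n))],
                    [S_minus @ H.T, S_minus]])
    post = np.linalg.qr(pre, mode="r")
    X = post[:m, :m]          # X^T X = H P^- H^T + R_z (innovation covariance)
    Y = post[:m, m:]          # X^T Y = H P^-
    S_plus = post[m:, m:]     # (S^+)^T S^+ = P^- - K H P^-
    K = np.linalg.solve(X, Y).T          # K = Y^T X^-T
    x_plus = x_minus + K @ (z - H @ x_minus)
    return x_plus, S_plus, K
```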

Each time Equations (13) and (14) are evaluated, an orthogonal transformation $\boldsymbol{\Theta}$ has to be found that creates the required zeros in the bottom left part of the result matrix. This is the same process as a QR decomposition, which splits a matrix into an orthogonal and a triangular factor. The orthogonal factor can be discarded, and the triangular factor corresponds to the left-hand side of Equation (13) or (14). There are multiple methods for performing the QR decomposition; the most common ones use either Givens rotations or Householder transformations. Householder transformations are generally more efficient for non-sparse matrices, because they operate on a whole column at once instead of on individual elements. Therefore, Householder transformations are used in this work.

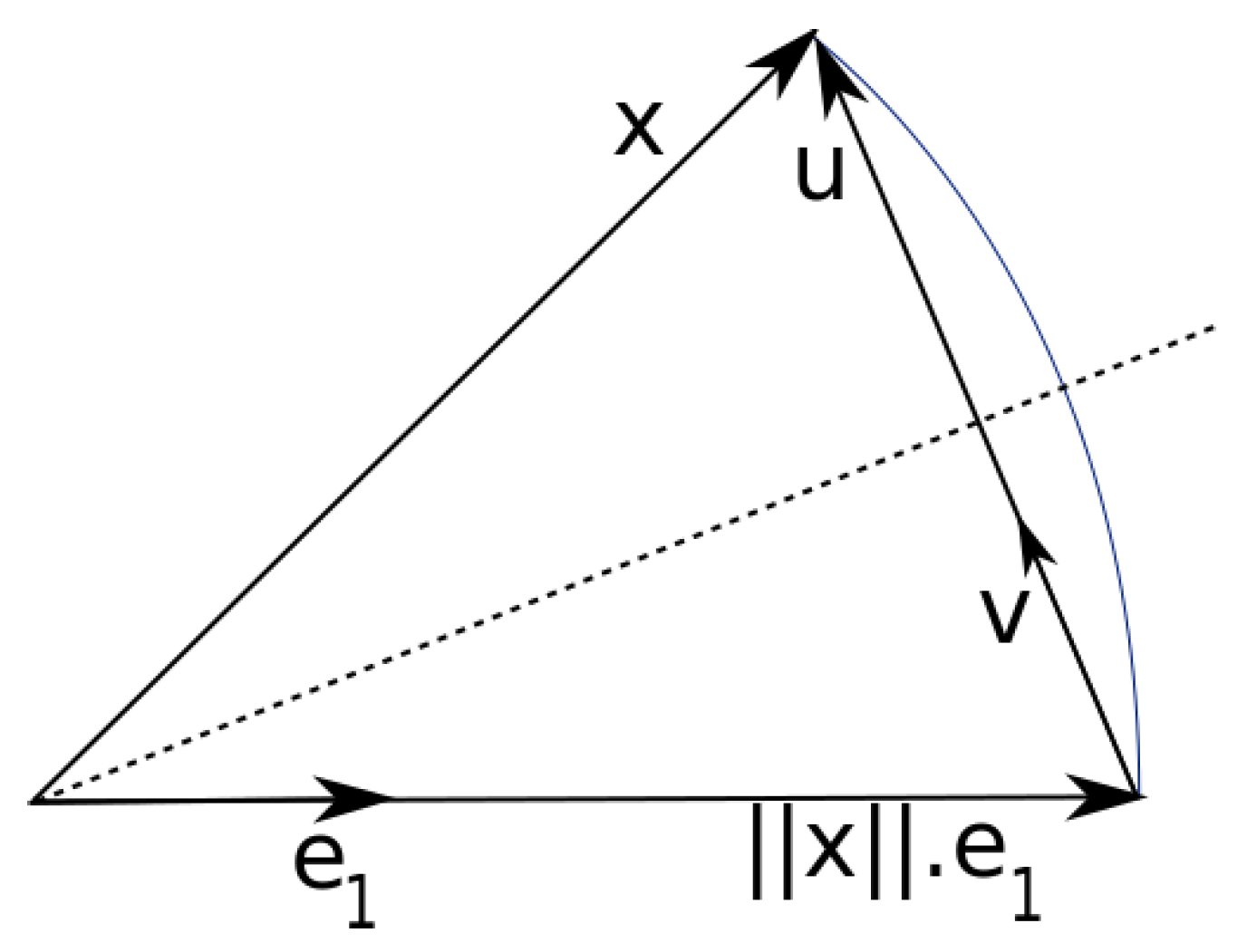

4.1. QR Decomposition Using the Householder Transformation

As described in [20], the Householder transformation can be performed as a series of Householder reflections, in each of which all column vectors of a matrix $\mathbf{A}$ are mirrored in the same hyperplane. As shown in Figure 1, the column vector is called $\mathbf{a}$, the normal vector of the hyperplane is $\mathbf{n}$, and the basis vector of the target direction is $\mathbf{e}_1$. To create a triangular matrix, the reflection plane must be positioned such that all elements of the first column vector except the first become 0, i.e., the column vector is mapped onto the first basis vector $\mathbf{e}_1$. Because an orthogonal transformation does not change the lengths of the column vectors, the first column vector $\mathbf{a}_1$ must therefore become $\pm\lVert\mathbf{a}_1\rVert\,\mathbf{e}_1$ after the transformation.

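The unnormalized normal vector $\mathbf{w}$ of the hyperplane can be found by subtracting the desired first column vector $-\operatorname{sign}(a_{11})\,\lVert\mathbf{a}_1\rVert\,\mathbf{e}_1$ from the current first column vector $\mathbf{a}_1$, as shown in Equation (15). The normalized normal vector $\mathbf{n}$ is then calculated from it, as shown in Equation (16).

$$\mathbf{w} = \mathbf{a}_1 + \operatorname{sign}(a_{11})\,\lVert\mathbf{a}_1\rVert\,\mathbf{e}_1 \tag{15}$$

$$\mathbf{n} = \frac{\mathbf{w}}{\lVert\mathbf{w}\rVert} \tag{16}$$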

The sign of the first element of the new column vector is arbitrary, but it should be opposite to the sign of the current first element $a_{11}$. This prevents cancellation in the first element of $\mathbf{w}$, which would otherwise make $\mathbf{w}$ very small and lead to large rounding errors.
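A small worked example, taking $\mathbf{w} = \mathbf{a}_1 + \operatorname{sign}(a_{11})\lVert\mathbf{a}_1\rVert\,\mathbf{e}_1$ as in Equation (15) for the illustrative column $\mathbf{a}_1 = [3, 4]^T$, shows how the reflection maps the column onto the first axis with the sign opposite to $a_{11}$:

$$
\lVert\mathbf{a}_1\rVert = 5,\qquad
\mathbf{w} = \begin{bmatrix}3\\4\end{bmatrix} + 5\begin{bmatrix}1\\0\end{bmatrix} = \begin{bmatrix}8\\4\end{bmatrix},\qquad
\mathbf{w}^T\mathbf{w} = 80,
$$

$$
\mathbf{a}_1 - 2\,\mathbf{w}\,\frac{\mathbf{w}^T\mathbf{a}_1}{\mathbf{w}^T\mathbf{w}}
= \begin{bmatrix}3\\4\end{bmatrix} - 2\begin{bmatrix}8\\4\end{bmatrix}\frac{40}{80}
= \begin{bmatrix}-5\\0\end{bmatrix}.
$$

With the same sign instead, the first element of $\mathbf{w}$ would be $a_{11} - \lVert\mathbf{a}_1\rVert$; for a column that is already almost aligned with $\mathbf{e}_1$, such as $[5, 0.01]^T$, this difference is only about $-10^{-5}$, and most significant bits cancel.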

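To mirror each column vector $\mathbf{a}_j$ in the plane with the normal vector $\mathbf{n}$, $\mathbf{a}_j$ is first projected onto $\mathbf{n}$, and this projection is then subtracted twice from $\mathbf{a}_j$: $\mathbf{a}_j \leftarrow \mathbf{a}_j - 2\,\mathbf{n}\,(\mathbf{n}^T\mathbf{a}_j)$.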

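In each step, two square root operations are required to determine the lengths of $\mathbf{a}_1$ and $\mathbf{w}$. The second square root can be saved by using $\mathbf{w}$ directly instead of $\mathbf{n}$, i.e., $\mathbf{a}_j \leftarrow \mathbf{a}_j - 2\,\mathbf{w}\,(\mathbf{w}^T\mathbf{a}_j)/(\mathbf{w}^T\mathbf{w})$, which only requires the squared length $\mathbf{w}^T\mathbf{w}$.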

The same process is then applied to the matrix $\mathbf{A}$ again, except that one more column and one more row are omitted each time. This is repeated until $\mathbf{A}$ becomes an upper triangular matrix.
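The complete procedure of this subsection can be sketched in plain floating-point Python as below: one reflection per column, the sign chosen opposite to the leading element, and the unnormalized $\mathbf{w}$ used together with its squared length so that only one square root is needed per column. This illustrates the algorithm and is not the authors' fixed-point code; `householder_triangularize` is a hypothetical name.

```python
import math

def householder_triangularize(A):
    """Return the upper-triangular n x n factor R with R^T R = A^T A.

    A is an m x n matrix given as a list of rows (m >= n); the orthogonal factor is not formed.
    """
    R = [list(row) for row in A]
    m, n = len(R), len(R[0])
    for k in range(min(m - 1, n)):
        # First square root: length of the active part of column k
        norm_a = math.sqrt(sum(R[i][k] ** 2 for i in range(k, m)))
        if norm_a == 0.0:
            continue
        # Sign opposite to the leading element avoids cancellation in w[0]
        sign = 1.0 if R[k][k] >= 0.0 else -1.0
        w = [R[i][k] for i in range(k, m)]
        w[0] += sign * norm_a
        ww = sum(wi * wi for wi in w)        # squared length replaces the second square root
        # Reflect every remaining column: a_j <- a_j - 2 w (w^T a_j) / (w^T w)
        for j in range(k, n):
            factor = 2.0 * sum(w[i - k] * R[i][j] for i in range(k, m)) / ww
            for i in range(k, m):
                R[i][j] -= factor * w[i - k]
        for i in range(k + 1, m):
            R[i][k] = 0.0                     # clear round-off residue below the diagonal
    # Each pass works on one row and one column fewer; keep the top n x n block
    return [row[:n] for row in R[:n]]
```

For $\mathbf{A} = \begin{bmatrix}3 & 1\\4 & 2\end{bmatrix}$, the first reflection uses $\mathbf{w} = [8, 4]^T$ and yields $\begin{bmatrix}-5 & -2.2\\0 & 0.4\end{bmatrix}$ up to round-off.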

4.2. Fixed-Point Implementation

To implement the whole filter on a microcontroller with only fixed-point arithmetic, a new data structure has been designed to hold the Cholesky factor $\mathbf{S}$ of the covariance of the state estimate. It adds a per-row exponent to a matrix whose elements are stored in fixed-point representation. As shown in Equation (17), the matrix $\mathbf{S}$ is split into a diagonal exponent matrix $\mathbf{E} = \operatorname{diag}(2^{e_1}, \dots, 2^{e_n})$, containing exponents with base 2, and a mantissa matrix $\mathbf{M}$, containing fixed-point values:

$$\mathbf{S} = \mathbf{E}\,\mathbf{M}. \tag{17}$$

This way, the $\mathbf{E}$ matrix can be ignored during the QR decomposition, because it is diagonal and thus also triangular. Only the $\mathbf{M}$ matrix needs to be QR-decomposed. This means that the computation-intensive Householder transformations can be calculated entirely in fixed-point arithmetic, while the complete $\mathbf{S}$ matrix retains some of the advantages of floating-point numbers, notably a large dynamic range.
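The split of Equation (17) can be illustrated with the following sketch, which stores each row of a matrix as one base-2 exponent plus 16-bit Q15 mantissas. The normalization rule (the largest magnitude in each row is scaled into $[0.5, 1)$) and the function names are assumptions; the paper does not state how the exponents are chosen or updated.

```python
import math

Q = 15  # Q15 format: an int16 mantissa m represents m / 2**15

def split_rows(S):
    """Split S (list of rows) into per-row exponents e_i and int16 mantissas M.

    Row i is reconstructed as 2**e_i * M[i] / 2**Q, i.e., S = E M with E = diag(2**e_i).
    """
    exps, mants = [], []
    for row in S:
        peak = max(abs(v) for v in row)
        # frexp: peak = f * 2**e with 0.5 <= f < 1, so every |v / 2**e| < 1 fits Q15
        e = math.frexp(peak)[1] if peak > 0 else 0
        m = [max(-32768, min(32767, round(v / 2.0**e * 2**Q))) for v in row]
        exps.append(e)
        mants.append(m)
    return exps, mants

def join_rows(exps, mants):
    """Reconstruct floating-point rows from exponents and Q15 mantissas."""
    return [[2.0**e * m / 2**Q for m in row] for e, row in zip(exps, mants)]

S = [[1000.0, -3.5, 0.0], [0.001, 0.0002, 0.0]]
exps, mants = split_rows(S)   # exps == [10, -9]; mants[0] == [32000, -112, 0]
```

With a single exponent shared by the whole matrix (10, dictated by the first row), the entry 0.001 would be stored as round(0.001 · 2^15 / 2^10) = round(0.032) = 0, which is the loss of small covariance entries that a per-row exponent avoids.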

4.3. Stochastic Rounding

When limited-precision values such as 16-bit signed integers are used for the mantissas in $\mathbf{M}$, each multiplication of two of these numbers yields a 32-bit integer, which must be rounded before it can be stored in a 16-bit register again. With the typical Round to Nearest (RN) approach, this can lead to unfavorable effects, as the rounding errors often accumulate quickly over the iterations of the filter. Replacing RN with Stochastic Rounding (SR) makes the behavior much more predictable, because the accumulated rounding error can then be described as a random walk [22], whose expected magnitude grows only with the square root of the number of iterations. In addition to multiplications, the Householder algorithm also requires square root and division operations, whose results have to be rounded as well. The SR implementations for these cases are shown in Algorithms 1–3. The pseudocode uses ≫ for a bitwise right shift and ≪ for a bitwise left shift, and it relies on two Pseudorandom Number Generator (PRNG) functions that return 32-bit and 16-bit random values, respectively.

Algorithm 1: Rounding the last n bits of a 32-bit integer with stochastic rounding.

Algorithm 2: Integer division with stochastic rounding.

Algorithm 3: Integer square root with stochastic rounding (function SQRT_STOCHASTIC: a while loop with a conditional update computes the root, followed by stochastic rounding of the last bit).

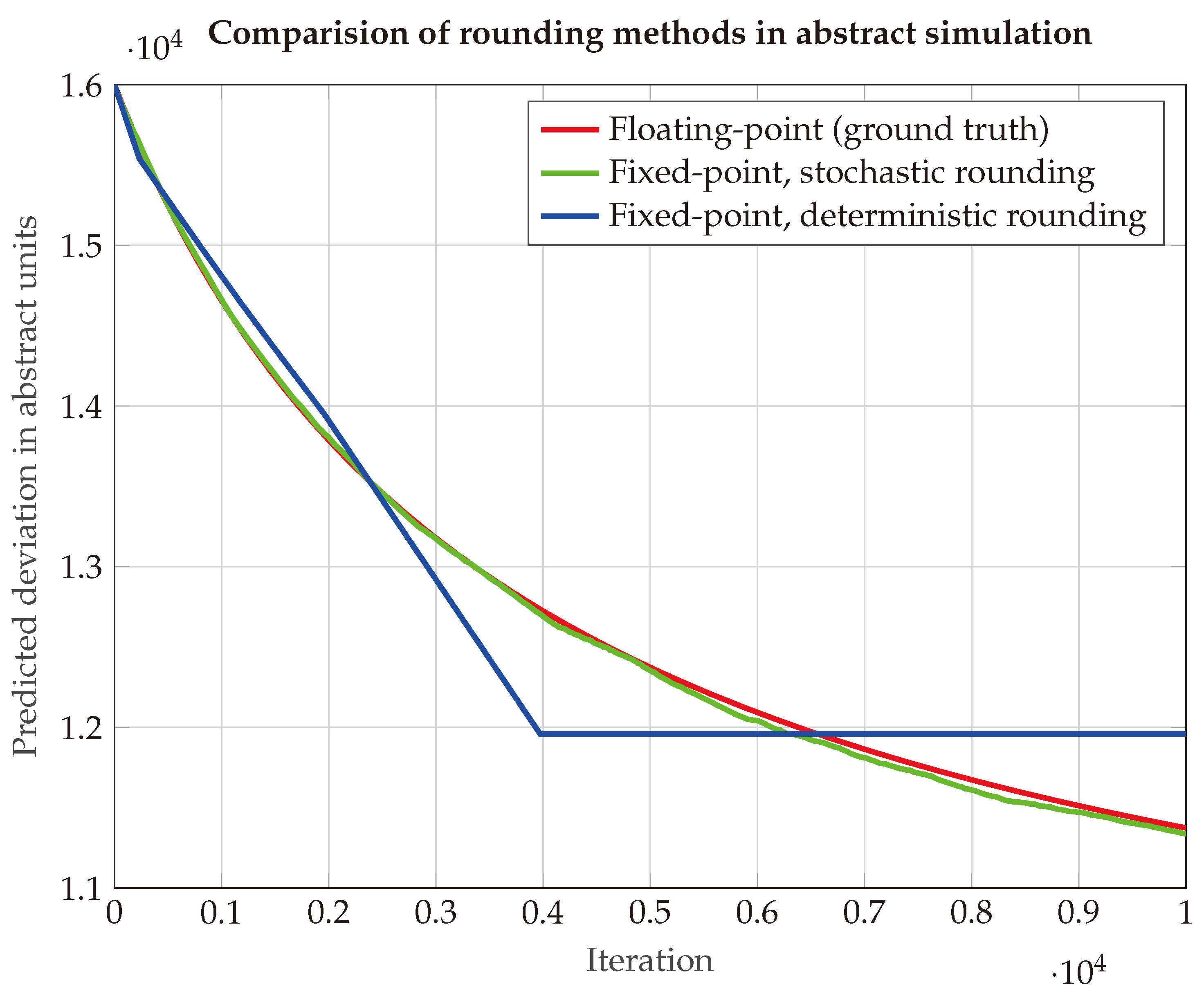

To illustrate the effectiveness of stochastic rounding, a simplified abstract system is constructed. The system has a three-dimensional state vector and uses the identity matrix as its state transition model. At each iteration, the filter receives a one-dimensional observation (a linear combination of the system states) with a constant standard deviation. After each update, the predicted state uncertainty is evaluated using the filter's covariance matrix or, for the fixed-point implementation, its square root. The predicted standard deviation is expected to decrease as the filter incorporates more observations over time.

As can be seen in Figure 2, the result of the SR implementation is much closer to the floating-point result. Because of the low precision of the 16-bit representation, only a very limited number of step sizes is possible. The RN implementation always takes the nearest possible step size and, as a consequence, diverges quickly from the floating-point result.
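The stagnation of RN can be reproduced with a toy loop that is separate from the filter experiment of Figure 2: a Q15 value is repeatedly multiplied by the Q15 constant 32735/32768 (about 0.999). Both constants are arbitrary illustrative choices. Once the value drops to 496 or below, the exact decrease per step is less than half a unit, so RN always rounds back to the same value, whereas SR keeps the expected value equal to the exact product.

```python
import random

C = 32735          # Q15 constant, about 0.999 (illustrative choice)
X0 = 16384         # Q15 start value 0.5 (illustrative choice)
STEPS = 5000

def mul_q15_rn(x, c):
    """Q15 multiply, round to nearest (ties up): 32-bit product back to 16 bits."""
    return (x * c + (1 << 14)) >> 15

def mul_q15_sr(x, c, rng):
    """Q15 multiply with stochastic rounding of the 15 discarded bits."""
    prod = x * c
    frac = prod & ((1 << 15) - 1)
    return (prod >> 15) + (1 if rng.getrandbits(15) < frac else 0)

def decay(steps, rng=None):
    """Apply x <- x * C repeatedly; RN if rng is None, otherwise SR."""
    x = X0
    for _ in range(steps):
        x = mul_q15_rn(x, C) if rng is None else mul_q15_sr(x, C, rng)
    return x

exact = X0 * (C / 2**15) ** STEPS       # floating-point reference, about 106.3
rn = decay(STEPS)                       # stalls at 496
rng = random.Random(0)
sr_mean = sum(decay(STEPS, rng) for _ in range(10)) / 10   # close to the reference on average
```

Averaged over runs, SR follows the exponential decay, while RN stays stuck more than four times above the reference.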

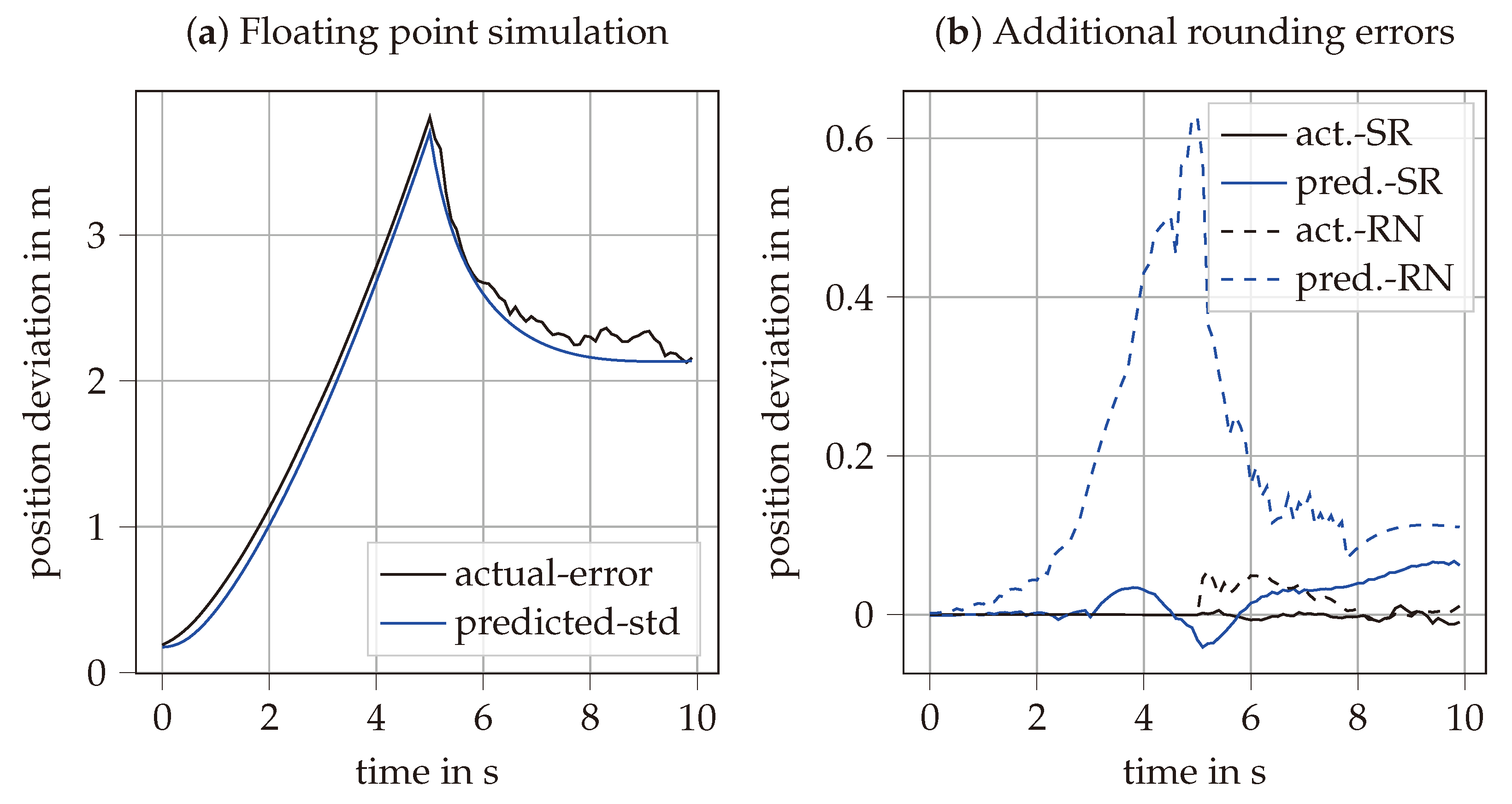

To assess the impact of rounding errors in a more realistic scenario, the following setup was simulated. The initial three-dimensional position, velocity, and orientation are assumed to be known. Then, for 5 s, only noisy inertial measurements are available. After the first 5 s, an additional noisy position measurement becomes available. The noise spectral density is … for the three-dimensional angular velocity measurement, … for the acceleration measurement, and … for the additional position measurement.

Figure 3a shows the simulation results for floating-point arithmetic, averaged over 100 repeated simulations, with the absolute actual error plotted against the predicted error. In the first 5 s, the position estimation uncertainty increases, mainly because of the acceleration measurement noise. After the position measurement becomes available, the uncertainty stabilizes at a level above 2 m.
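As a rough plausibility check for the pure inertial phase, the standard result for twice-integrated white noise can be used. Assuming an accelerometer noise spectral density $N_a$ (in m/s²/√Hz), a perfectly known initial state, and no coupling of orientation errors into the acceleration, the velocity and position standard deviations grow with time $t$, and a sampled simulation at rate $f_s$ draws each acceleration sample with standard deviation $\sigma_a$:

$$
\sigma_v(t) = N_a\sqrt{t}, \qquad \sigma_p(t) = N_a\sqrt{\frac{t^3}{3}}, \qquad \sigma_a = N_a\sqrt{f_s}.
$$

For $f_s = 100$ Hz, the rate of the system in Section 3, this gives $\sigma_a = 10\,N_a$. The $t^{3/2}$ growth of $\sigma_p$ is consistent with an uncertainty that rises progressively faster during the first 5 s.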

Figure 3b shows the difference between the results obtained with floating-point and fixed-point arithmetic. Consistent with Figure 2, the SR method predicts the uncertainty significantly more accurately than RN. This difference has minimal impact on the actual error during the pure inertial phase. However, once the position measurement is available, the inaccurately predicted uncertainty affects the data fusion, resulting in a slight increase in the actual error.

4.4. Tightly Coupled Data Fusion with USBL

The data fusion with USBL measurements can be implemented using either a Loosely Coupled (LC) or a Tightly Coupled (TC) approach [23]. The TC method is chosen here because it allows separate certainty values for the range and angle measurements. The known beacon position $\mathbf{b}$ in global coordinates is first transferred to the body coordinate system, as shown in Equation (18). Then, the observation vector $\mathbf{z}$, consisting of range, azimuth angle, and elevation angle, can be predicted using the function $h$ given in Equation (19).
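A minimal sketch of the predicted observation is shown below. It assumes that $\mathbf{R}$ rotates body-frame vectors into the navigation frame, that the beacon position in body coordinates is $\mathbf{R}^T(\mathbf{b} - \mathbf{p})$ (lever arms between the USBL transducer and the vehicle origin are neglected), that azimuth is measured from the body x-axis toward the body y-axis, and that elevation is positive toward the body z-axis. Real USBL devices use their own angle conventions, so these choices and the function name are assumptions.

```python
import numpy as np

def predict_usbl(p, R, b_nav):
    """Predict [range, azimuth, elevation] of a beacon at b_nav seen from the pose (p, R)."""
    b = R.T @ (b_nav - p)                       # beacon position in body coordinates
    distance = np.linalg.norm(b)
    azimuth = np.arctan2(b[1], b[0])
    elevation = np.arctan2(b[2], np.hypot(b[0], b[1]))
    return np.array([distance, azimuth, elevation]), b
```

With a 90° yaw, a beacon 10 m north of the vehicle appears straight ahead in body coordinates, i.e., at zero azimuth and elevation.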

This function is then linearized around the current state estimate to obtain the observation matrix $\mathbf{H}$ given in Equation (20). Here, the derivative of the observation vector with respect to the position vector follows from the chain rule as the derivative of the observation with respect to the beacon position in body coordinates, given in Equation (21), multiplied by the derivative of the body-frame beacon position with respect to the vehicle position. The derivative of the observation with respect to the rotation vector $\boldsymbol{\theta}$ is obtained in the same way.
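With the assumptions $\mathbf{b}^B = \mathbf{R}^T(\mathbf{b} - \mathbf{p})$ (no lever arm) and a locally defined rotation error $\mathbf{R} \leftarrow \mathbf{R}\,\mathbf{R}\{\delta\boldsymbol{\theta}\}$, the chain rule gives $\partial\mathbf{b}^B/\partial\mathbf{p} = -\mathbf{R}^T$ and $\partial\mathbf{b}^B/\partial\delta\boldsymbol{\theta} = [\mathbf{b}^B]_\times$. The sketch below computes the resulting $3 \times 6$ block of $\mathbf{H}$ for range, azimuth, and elevation (azimuth from the body x-axis, elevation positive toward the body z-axis) and checks it against central finite differences. These conventions and all names are assumptions, not a transcription of Equations (20) and (21).

```python
import numpy as np

def skew(a):
    """Skew operator: skew(a) @ b equals the cross product a x b."""
    return np.array([[0.0, -a[2], a[1]], [a[2], 0.0, -a[0]], [-a[1], a[0], 0.0]])

def rot_exp(theta):
    """Rodrigues formula: rotation vector -> rotation matrix."""
    angle = np.linalg.norm(theta)
    K = skew(theta)
    if angle < 1e-12:
        return np.eye(3) + K
    return np.eye(3) + np.sin(angle) / angle * K + (1 - np.cos(angle)) / angle**2 * K @ K

def h(p, R, b_nav):
    """Range, azimuth, elevation of the beacon in body coordinates (no lever arm)."""
    x, y, z = R.T @ (b_nav - p)
    return np.array([np.sqrt(x * x + y * y + z * z), np.arctan2(y, x), np.arctan2(z, np.hypot(x, y))])

def usbl_jacobian(p, R, b_nav):
    """Analytic d h / d[p, delta_theta] (3 x 6) via the chain rule."""
    b = R.T @ (b_nav - p)
    x, y, z = b
    rho2 = x * x + y * y
    rho = np.sqrt(rho2)
    r2 = rho2 + z * z
    dh_db = np.array([
        b / np.sqrt(r2),                                        # range
        [-y / rho2, x / rho2, 0.0],                             # azimuth
        [-x * z / (rho * r2), -y * z / (rho * r2), rho / r2],   # elevation
    ])
    return np.hstack((dh_db @ -R.T, dh_db @ skew(b)))

def numeric_jacobian(p, R, b_nav, eps=1e-6):
    """Central finite differences for comparison (rotation perturbed locally)."""
    J = np.zeros((3, 6))
    for i in range(3):
        d = np.zeros(3)
        d[i] = eps
        J[:, i] = (h(p + d, R, b_nav) - h(p - d, R, b_nav)) / (2 * eps)
        J[:, 3 + i] = (h(p, R @ rot_exp(d), b_nav) - h(p, R @ rot_exp(-d), b_nav)) / (2 * eps)
    return J
```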

5. Sensor Calibration

While sensor calibration typically requires precise reference measurement systems, the previously described Kalman filter can also be extended to estimate the sensors' orientation together with calibration parameters. This way, the calibration can be performed without additional reference measurements. To this end, the state vector of Equation (1) is extended as shown in Equation (22), where $\mathbf{s}_a$ and $\mathbf{s}_g$ are the per-axis sensitivity correction factors of the accelerometer and the gyroscope, and $\boldsymbol{\alpha}_a$ and $\boldsymbol{\alpha}_g$ are the axis misalignment angles of the accelerometer and the gyroscope.

To define the axis misalignment angles, a reference coordinate system is needed. Here, it is defined as the Accelerometer Orthogonal Frame (AOF) [24], whose x-axis coincides with the measured accelerometer x-axis. The y-axis of the AOF lies in the plane spanned by the x- and y-axes of the accelerometer, and the z-axis of the AOF then follows from orthogonality. Therefore, three calibration angles are needed to align the accelerometer with the AOF, and six calibration angles are needed to align the gyroscope. An accelerometer measurement vector $\mathbf{a}_m$ can be transformed into the AOF by multiplying it by the matrix $\mathbf{T}_a$, as shown in Equation (23). Similarly, the gyroscope measurement is aligned as shown in Equation (24).

The state transition $f$, previously defined in Equations (3)–(5), is extended as shown in Equations (25)–(27), where the operator ⊙ denotes the elementwise multiplication of two vectors.
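One plausible form of the corrected measurements is sketched below. It assumes the small-angle misalignment matrices commonly used with the AOF convention of [24] (an upper triangular matrix with three angles for the accelerometer and a full matrix with six angles for the gyroscope) and the order "subtract bias, scale each axis with ⊙, then align". The angle ordering, the order of operations, and the function names are assumptions; Equations (23)–(27) may differ.

```python
import numpy as np

def accel_alignment(alpha):
    """Accelerometer -> AOF misalignment matrix from 3 small angles (yz, zy, zx)."""
    a_yz, a_zy, a_zx = alpha
    return np.array([[1.0, -a_yz, a_zy],
                     [0.0, 1.0, -a_zx],
                     [0.0, 0.0, 1.0]])

def gyro_alignment(gamma):
    """Gyroscope -> AOF misalignment matrix from 6 small angles (yz, zy, xz, zx, xy, yx)."""
    g_yz, g_zy, g_xz, g_zx, g_xy, g_yx = gamma
    return np.array([[1.0, -g_yz, g_zy],
                     [g_xz, 1.0, -g_zx],
                     [-g_xy, g_yx, 1.0]])

def correct_measurement(raw, bias, sensitivity, T):
    """Assumed model: subtract bias, scale per axis (elementwise product), then align."""
    return T @ (sensitivity * (raw - bias))
```

Because the first column of the accelerometer matrix is $[1, 0, 0]^T$, the sensor x-axis maps onto the AOF x-axis, and the zero in the bottom row of the second column keeps the sensor y-axis in the AOF x-y plane, matching the frame definition given earlier.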

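The new state transition is linearized around a given working point $\mathbf{x}_k$, as shown in Equation (28), which replaces the matrix $\mathbf{F}$ previously defined in Equation (6); its additional submatrices follow from differentiating Equations (25)–(27) with respect to the calibration states.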

Integrated MEMS sensor modules often include magnetometers. The magnetometer can be included in the calibration, as shown in [25]. Since magnetometer measurements are not involved in the prediction step, the state transition function and the transition matrix are not affected.

6. Results

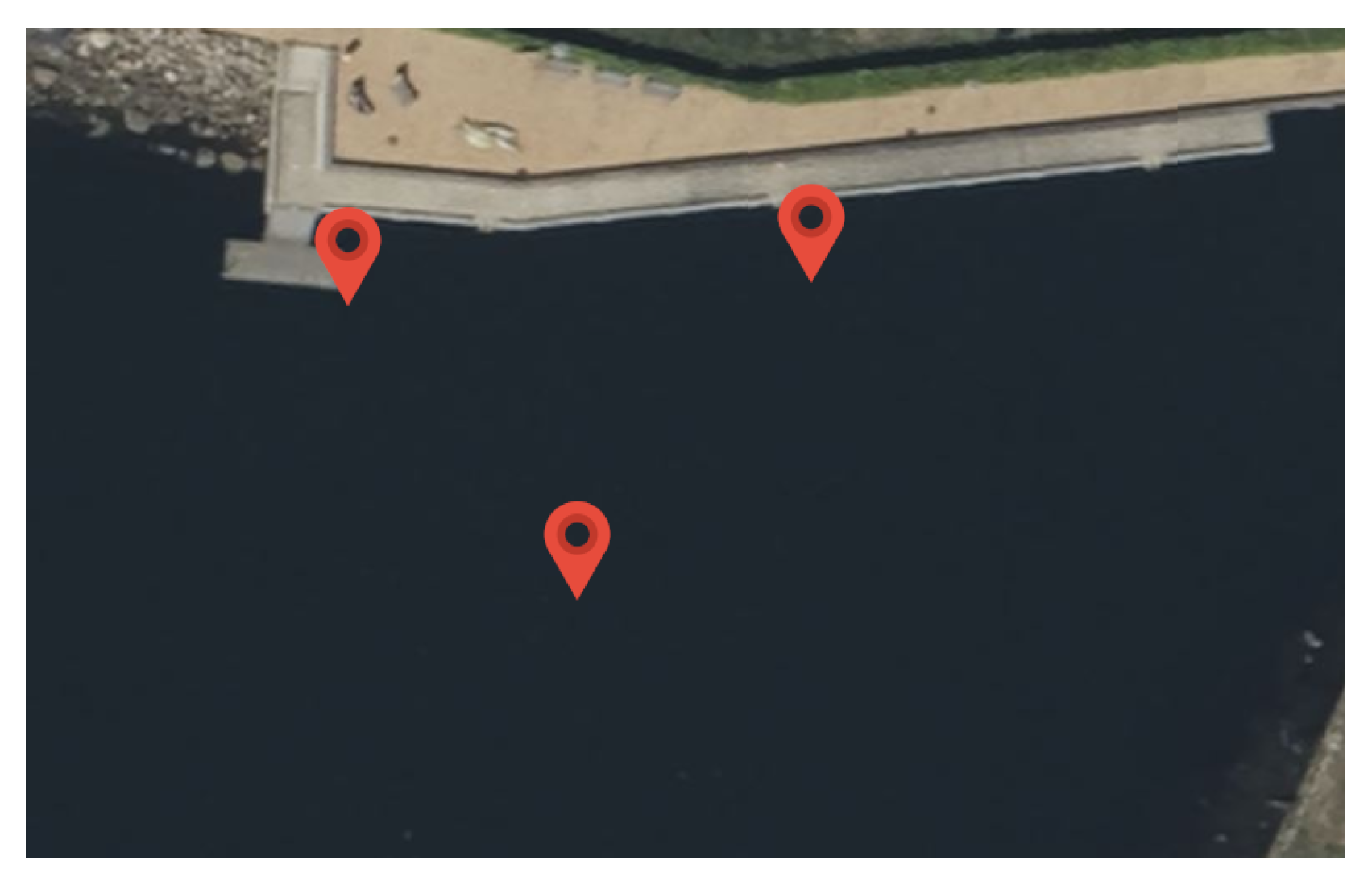

For the field test, the AUV was driving close to the surface on a triangular path marked with buoys, as shown in

Figure 4. The test side was located in the Kiel Fjord, and the triangle had a side length of around 30 m. The water depth at the test side was between 3 m and 8 m. The vehicle used for the test was a custom-designed AUV with a length of about 1 m and a weight of about 50 kg. The placement of the navigational sensors is shown in

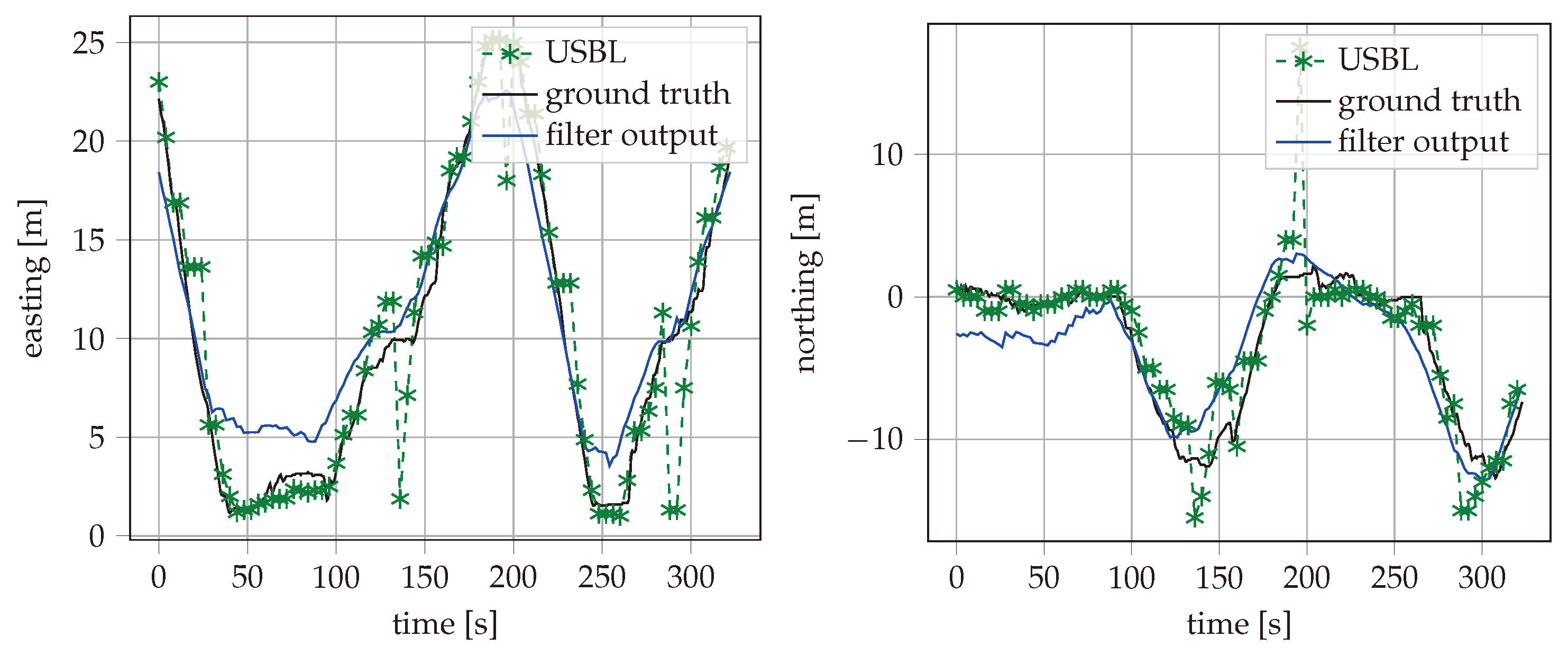

Figure 5. It is noted that both sensors were relative close to each other in the horizontal direction, so rotations around the vertical axes had less impact on the lever arm. The vehicle was driving in non-autonomous mode using a tethering cable, and the actual path was recorded from above the water to measure the reference position.

During the field test, the AUV was driven for about an hour while data from the MEMS and the USBL were recorded. Because our method performs joint calibration and data fusion, its accuracy improves over time. For this reason, only the position accuracy during a 10 min period at the end of the test was used for the evaluation. The recorded reference position, the USBL fixes, and the vehicle path estimated with the best filter configuration are shown in Figure 6 for the evaluation period. The east and north positions are also plotted separately over time in Figure 7. Different configurations of the described filter were applied to the data recorded in the field test, and the maximum error and the Root Mean Squared Error (RMSE) of the estimated positions were calculated over the same 10 min period. The configurations differ in the enabled input data (inertial measurements of the MEMS, USBL measurements with either TC or LC data fusion, and feedback of the thruster set points) and in the implementation method (floating-point, or fixed-point with SR or RN rounding). The results are summarized in Table 1. The RMSE of the filter output is close to the RMSE of the raw USBL measurements, even though USBL measurements are only available at 5 s intervals. In addition, the maximum error is strongly reduced from … m to … m. The SR method clearly reduces the precision gap between the 16-bit fixed-point and the 32-bit floating-point implementations: the RMSE of the SR fixed-point implementation is only 5% higher than that of the floating-point implementation, whereas the RN implementation has a 16% higher error. The comparison with a few-state Kalman filter extended with complementary filtering, as described in [16], showed a clear advantage of our proposed method. The complementary filtering setup was limited to LC USBL data fusion. As shown in Table 1, its maximum error was almost identical to that of our method with LC USBL data fusion, while our method with TC USBL data fusion achieved a lower maximum error.
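The two metrics can be computed as sketched below. The sketch assumes that the estimated and reference positions have already been resampled to common timestamps and that the error is the horizontal (east/north) Euclidean distance; the paper does not state these details, and the function name is hypothetical.

```python
import math

def position_errors(estimate, reference):
    """RMSE and maximum of the horizontal error between two equal-length lists of (east, north) pairs."""
    if len(estimate) != len(reference):
        raise ValueError("estimate and reference must have the same number of samples")
    errors = [math.hypot(e[0] - r[0], e[1] - r[1]) for e, r in zip(estimate, reference)]
    rmse = math.sqrt(sum(err * err for err in errors) / len(errors))
    return rmse, max(errors)
```

Because the RMSE averages squared errors over the whole window while the maximum error reflects a single worst sample, the two metrics can rank filter configurations differently, as in the LC and TC comparison above.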

An advantage of the proposed method is its ability to perform the calibration jointly with the data fusion. To test the effectiveness of this approach, the bias parameters of the MEMS sensor estimated in a standalone calibration in the laboratory were compared with those estimated in the joint calibration during the field test. The results are presented in Table 2. The estimated gyroscope bias parameters closely match between the two calibrations. The accelerometer bias parameters differ strongly, which is also reflected in their large estimation uncertainty (around …). The accelerometer calibration works much better in the laboratory because the sensor can be freely rotated there, whereas in the field test the sensor's z-axis is always aligned with gravity. For the same reason, the sensitivity and orthogonality parameters are difficult to estimate in a short field test. These parameters were therefore fixed during the field test and not included in the comparison.

Existing methods can be broadly classified into three categories. The first relies on high-precision sensors, offering high accuracy at a high cost [2,4,12]. The second uses low-cost MEMS sensors combined with complex, high-rate, many-state data fusion algorithms, which reduce hardware costs but impose a significant computational load [6]. The third also employs low-cost sensors but minimizes the computational demand through simplified data fusion techniques [16]. Our method belongs to the second category, with the key advantage that the data fusion is offloaded to a dedicated signal processing unit. This removes the computational burden from the application processor, leaving its full processing power available for other tasks. Table 3 compares the advantages and disadvantages of these approaches.

7. Conclusions

This work presents a novel implementation method that achieves near-floating-point precision on a limited 16-bit microcontroller, enabling the development of a low-cost, low-power standalone navigation system. While the microcontroller's power consumption may be a minor part of the overall power budget of an AUV, the approach also offers enhanced numerical stability, which is beneficial regardless of computational constraints. The implementation uses a square-root form of the error state Kalman filter to obtain a numerically stable inertial navigation system that supports data fusion with external sensors. It has been adapted and optimized for fixed-point calculation on a 16-bit microcontroller, and a stochastic rounding method has been applied to increase the precision of the fixed-point implementation. The algorithm has been adapted for TC USBL data fusion, and additional optimizable states have been added to calibrate the bias, sensitivity, and orthogonality errors of the MEMS sensor.

The proposed implementation has been tested on different calibration and data fusion tasks. Data collected in a field test have been used to compare the performance of different filter configurations. It was shown that the accuracy of the fixed-point calculation can be brought close to the floating-point performance using the SR method. In addition, TC data fusion with USBL and the inclusion of thruster feedback led to a significant error reduction.