Spatial Visual Imagery (SVI)-Based Electroencephalograph Discrimination for Natural CAD Manipulation

Abstract

1. Introduction

2. Related Work

2.1. Research on Spatial Visual Imagery (SVI) EEG

2.2. Research on the Spatial Features of Imagery-Related EEG

3. Experiment

3.1. Purpose of the Experiment

3.2. Experiment Details

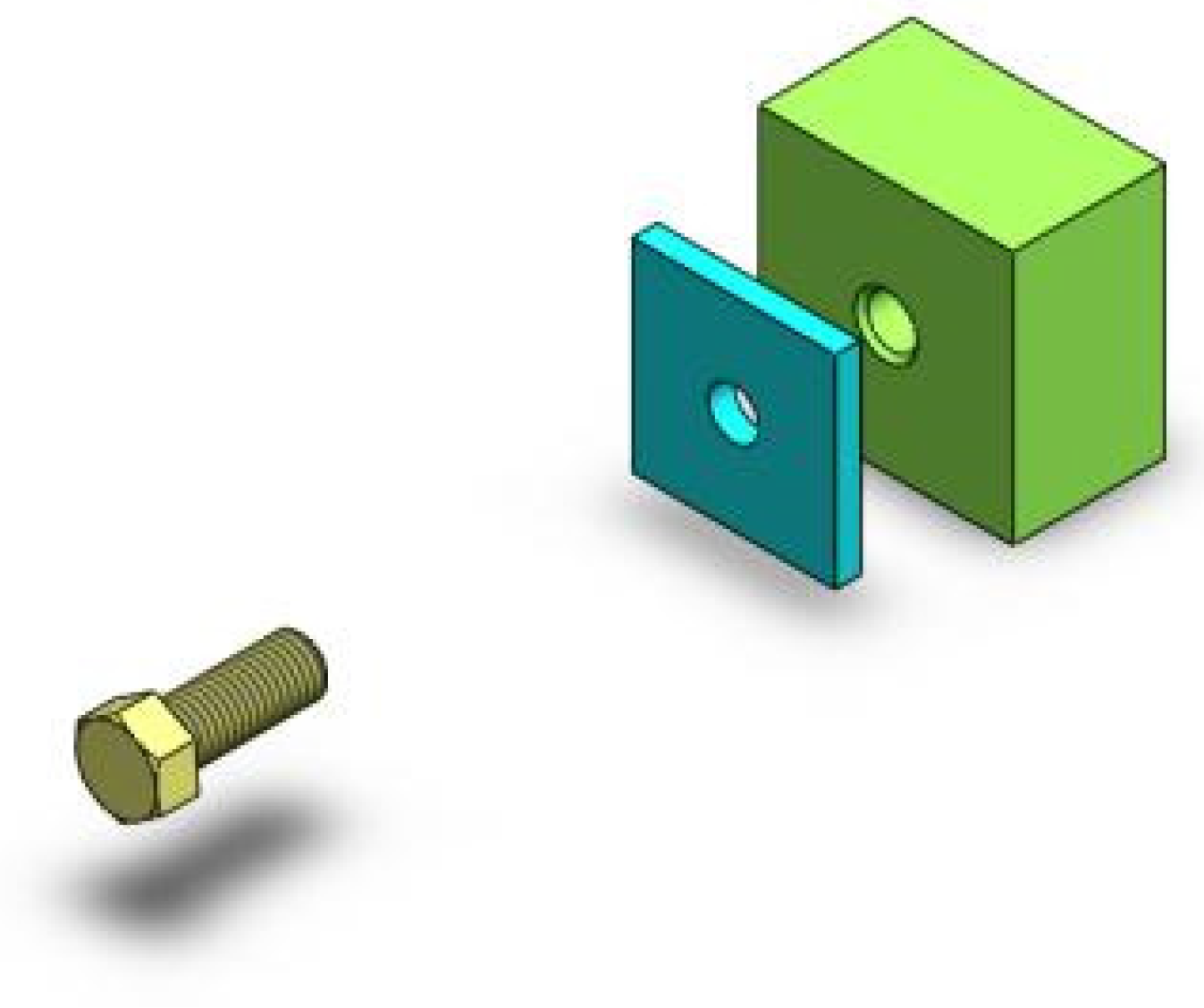

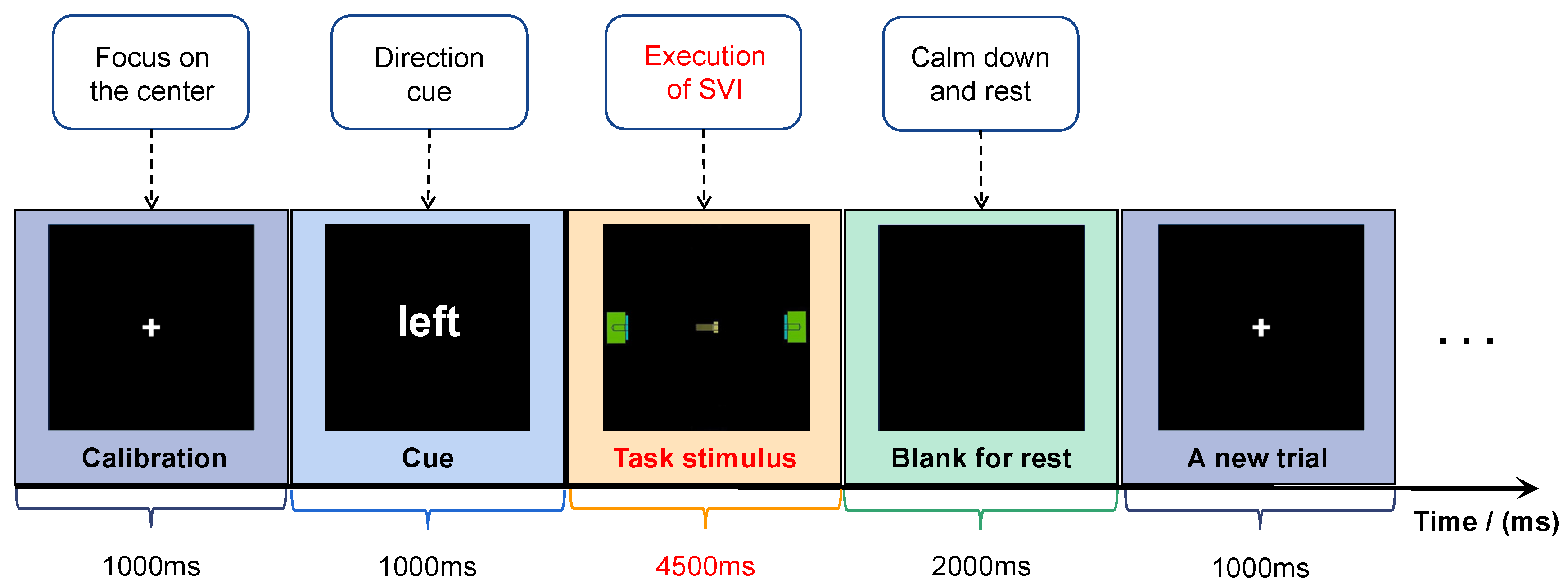

3.2.1. Experimental Protocol

3.2.2. Implementation of the Experiment

3.2.3. Subjects and Environment

3.2.4. Data Acquisition and Preprocessing

4. Feature Extraction of SVI

4.1. Spatial Feature Extraction for SVI

4.1.1. A Conduction Pathway-Based Hypothesis for Feature Extraction

4.1.2. CSP Features of SVI
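
For orientation, here is a minimal NumPy sketch of standard two-class common spatial pattern (CSP) feature extraction. It assumes band-pass-filtered trials stored as arrays of shape (channels, samples), keeps m filters from each end of the eigenvalue spectrum, and uses the log of normalized variance as the feature. These choices, the function names, and the synthetic data are illustrative assumptions, not the settings used for the SVI features.

```python
import numpy as np


def mean_normalized_cov(trials):
    """Average trace-normalized spatial covariance of trials shaped (channels, samples)."""
    covs = []
    for x in trials:
        x = x - x.mean(axis=1, keepdims=True)  # remove each channel's mean
        c = x @ x.T
        covs.append(c / np.trace(c))
    return np.mean(covs, axis=0)


def csp_filters(trials_a, trials_b, m=2):
    """Return 2*m CSP spatial filters (one per row) for classes A and B."""
    ca = mean_normalized_cov(trials_a)
    cb = mean_normalized_cov(trials_b)
    # Whitening matrix P such that P (Ca + Cb) P^T = I
    evals, evecs = np.linalg.eigh(ca + cb)
    p = np.diag(evals ** -0.5) @ evecs.T
    # In the whitened space the eigenvalues of P Ca P^T lie in [0, 1],
    # and those of P Cb P^T are one minus them (shared eigenvectors).
    s_evals, s_evecs = np.linalg.eigh(p @ ca @ p.T)
    order = np.argsort(s_evals)[::-1]  # largest class-A variance first
    w = s_evecs[:, order].T @ p
    # Keep the m filters at each extreme of the eigenvalue spectrum
    return np.vstack([w[:m], w[-m:]])


def csp_features(trial, filters):
    """Log of the normalized variance of each spatially filtered signal."""
    z = filters @ trial
    var = z.var(axis=1)
    return np.log(var / var.sum())


# Synthetic example: class A has extra power on channel 0, class B on channel 3
rng = np.random.default_rng(0)
gain_a = np.array([[3.0], [1.0], [1.0], [1.0]])
gain_b = np.array([[1.0], [1.0], [1.0], [3.0]])
trials_a = [gain_a * rng.standard_normal((4, 200)) for _ in range(20)]
trials_b = [gain_b * rng.standard_normal((4, 200)) for _ in range(20)]
W = csp_filters(trials_a, trials_b, m=1)
feat_a = csp_features(trials_a[0], W)
feat_b = csp_features(trials_b[0], W)
```

The first filter emphasizes variance that is large for class A and small for class B, and the last does the opposite, so a class-A trial yields a larger first feature and a class-B trial a larger last feature.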

4.1.3. Cross-Correlation (CC)-Based FC Features of SVI
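
One common way to build a cross-correlation-based functional connectivity (FC) matrix is to take, for every channel pair, the peak absolute normalized cross-correlation within a small lag window. The NumPy sketch below does this; the lag window (`max_lag`), the peak-picking rule, and the function name are hypothetical choices for illustration, not the parameters behind the SVI features.

```python
import numpy as np


def cc_connectivity(trial, max_lag=10):
    """Channel-by-channel FC matrix from normalized cross-correlation.

    trial: array of shape (channels, samples).
    Entry (i, j) is the largest |r| over lags -max_lag..max_lag (hypothetical rule).
    """
    n_ch, n_s = trial.shape
    # z-score each channel so the correlation is independent of signal scale
    z = (trial - trial.mean(axis=1, keepdims=True)) / trial.std(axis=1, keepdims=True)
    fc = np.eye(n_ch)
    for i in range(n_ch):
        for j in range(i + 1, n_ch):
            full = np.correlate(z[i], z[j], mode="full") / n_s
            zero_lag = n_s - 1  # index of lag 0 in the 'full' output
            window = full[zero_lag - max_lag: zero_lag + max_lag + 1]
            fc[i, j] = fc[j, i] = np.max(np.abs(window))
    return fc


# Synthetic example: channel 1 is channel 0 delayed by 5 samples; channel 2 is independent
rng = np.random.default_rng(1)
s = rng.standard_normal(500)
x = np.vstack([s, np.roll(s, 5), rng.standard_normal(500)])
fc = cc_connectivity(x, max_lag=10)
```

Taking the peak over lags makes the measure tolerant of small conduction delays between electrodes, which is why the delayed copy in the example still scores close to 1.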

4.1.4. Coherence-Based FC Features of SVI
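
Coherence-based FC can be sketched in a similar way: estimate auto- and cross-spectra by averaging Hann-windowed, half-overlapping segments (Welch's method), form the magnitude-squared coherence |Sxy|² / (Sxx·Syy), and average it over a frequency band for each channel pair. The sampling rate, segment length, and 8–13 Hz band below are assumptions chosen for the synthetic example, not values from the study.

```python
import numpy as np


def welch_spectra(x, y, fs, nperseg=128):
    """Averaged auto- and cross-spectra using Hann-windowed, 50%-overlapping segments."""
    step = nperseg // 2
    win = np.hanning(nperseg)
    sxx = syy = sxy = 0
    for start in range(0, len(x) - nperseg + 1, step):
        fx = np.fft.rfft(win * x[start:start + nperseg])
        fy = np.fft.rfft(win * y[start:start + nperseg])
        sxx = sxx + np.abs(fx) ** 2
        syy = syy + np.abs(fy) ** 2
        sxy = sxy + fx * np.conj(fy)
    freqs = np.fft.rfftfreq(nperseg, d=1 / fs)
    return freqs, sxx, syy, sxy


def coherence_connectivity(trial, fs, band=(8.0, 13.0), nperseg=128):
    """Channel-by-channel matrix of band-averaged magnitude-squared coherence."""
    n_ch = trial.shape[0]
    fc = np.eye(n_ch)
    for i in range(n_ch):
        for j in range(i + 1, n_ch):
            f, sxx, syy, sxy = welch_spectra(trial[i], trial[j], fs, nperseg)
            coh = np.abs(sxy) ** 2 / (sxx * syy)  # values in [0, 1]
            mask = (f >= band[0]) & (f <= band[1])
            fc[i, j] = fc[j, i] = coh[mask].mean()
    return fc


# Synthetic example (hypothetical 250 Hz sampling): channels 0 and 1 share a 10 Hz rhythm
fs = 250.0
t = np.arange(0, 20, 1 / fs)
rng = np.random.default_rng(2)
shared = np.sin(2 * np.pi * 10 * t)
x = np.vstack([
    shared + 0.5 * rng.standard_normal(t.size),
    shared + 0.5 * rng.standard_normal(t.size),
    rng.standard_normal(t.size),
])
fc = coherence_connectivity(x, fs)
```

Averaging over many segments is what makes the estimate meaningful: with a single segment the ratio would equal 1 at every frequency. `scipy.signal.coherence(x, y, fs=..., nperseg=...)` provides an equivalent Welch-based estimate if SciPy is available.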

4.2. Analysis of Spatial Features for SVI

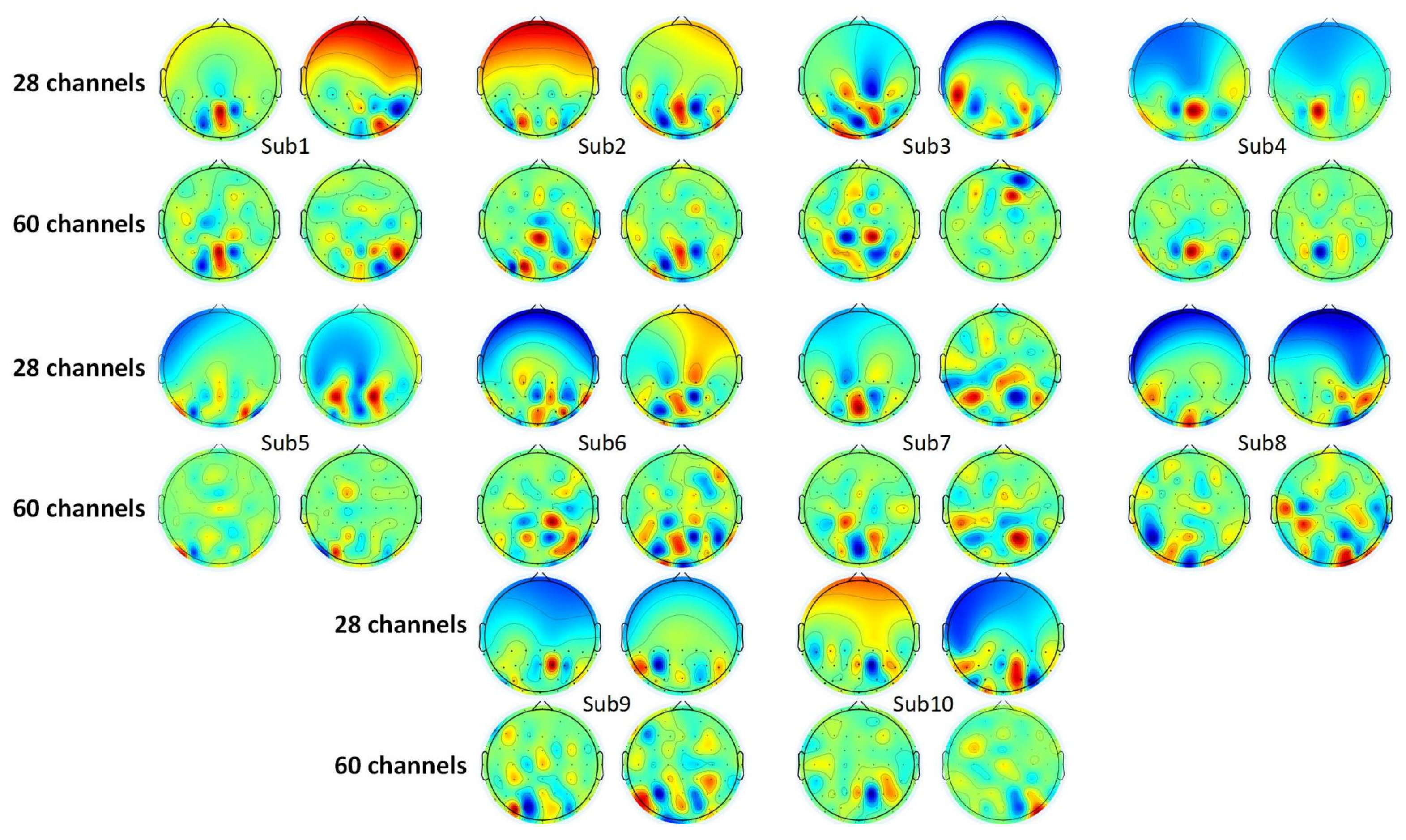

4.2.1. Analysis of CSP Features

4.2.2. Analysis of Cross-Correlation Features

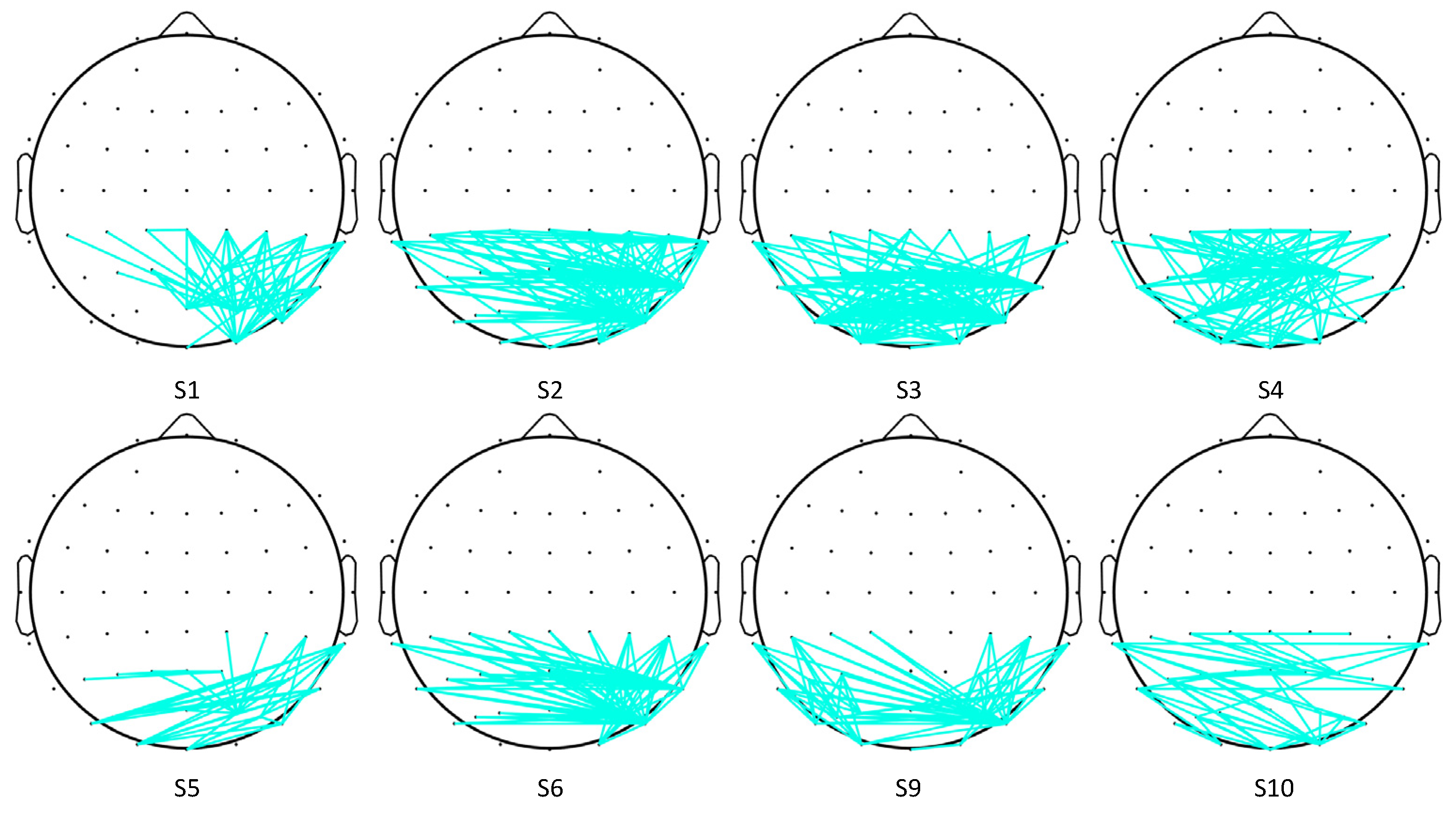

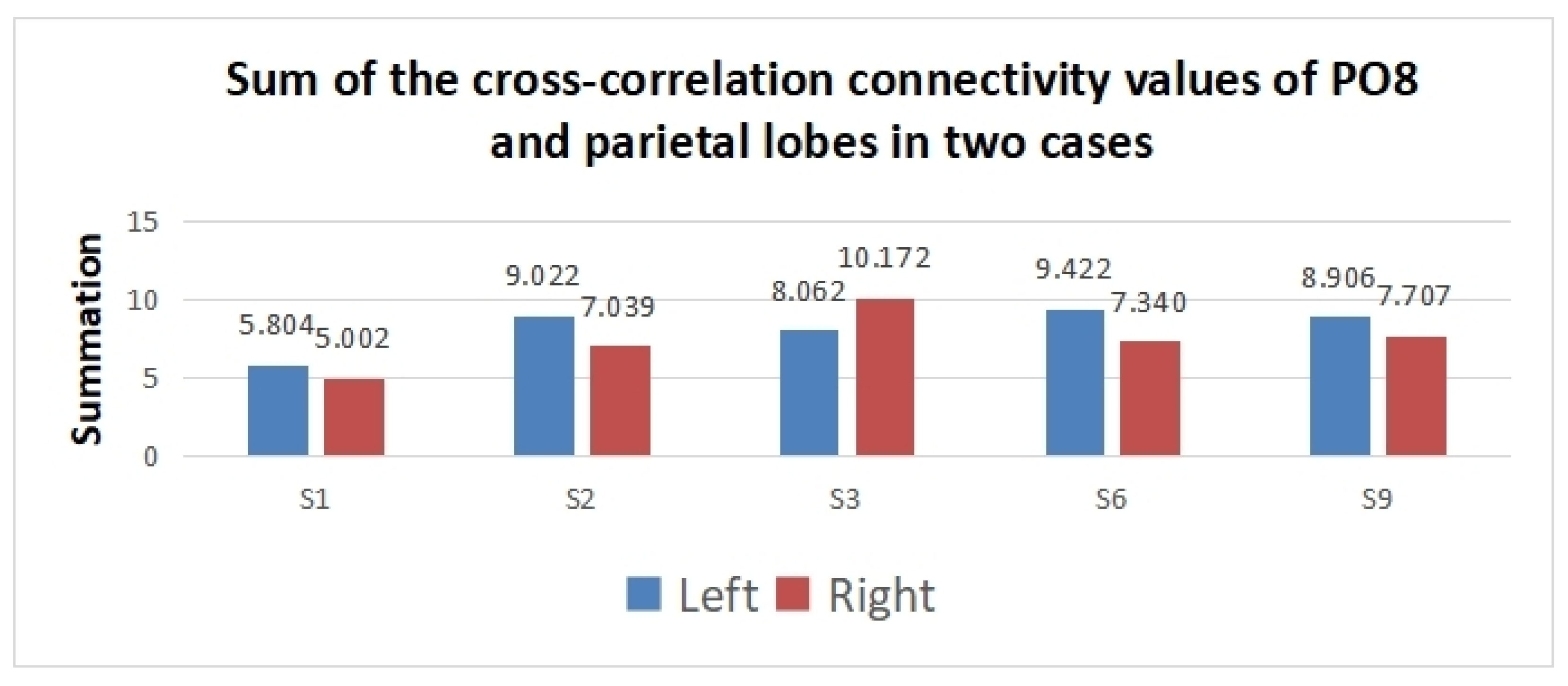

4.2.3. Analysis of Coherence Features

5. Spatial Feature-Based Discrimination Model for SVI

5.1. Structure of Discrimination Model

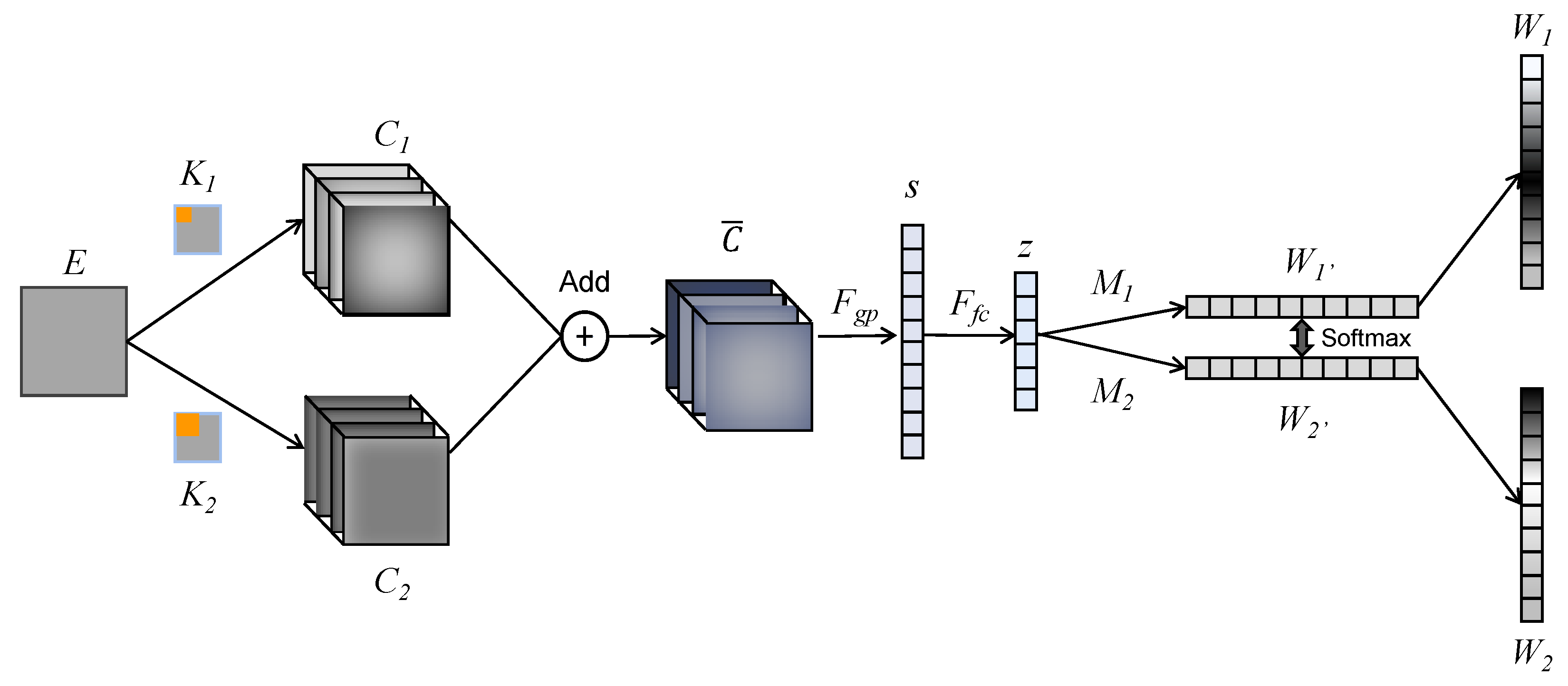

5.2. Selective Kernel Network (SKN)-Based SVI Discrimination Model
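
To make the selective-kernel idea concrete, the sketch below runs the forward pass of a single, simplified selective kernel (SK) unit in NumPy. It has three stages: two convolution branches with 3×3 and 5×5 kernels (split), an element-wise sum followed by global average pooling and a small fully connected layer (fuse), and a per-channel softmax across the branches that weights their outputs (select). Treating an FC matrix as a one-channel image, the 8×8 input size, the layer widths, and the random untrained weights are all assumptions. Batch normalization and the dilated convolutions of the original SK design are omitted, and this is not the discrimination model itself.

```python
import numpy as np


def conv2d_same(x, w):
    """Naive zero-padded 'same' 2-D convolution (cross-correlation, as in DL libraries).

    x: (c_in, h, w_in); w: (c_out, c_in, k, k) with odd k. Returns (c_out, h, w_in).
    """
    c_out, _, k, _ = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    h, wd = x.shape[1:]
    out = np.zeros((c_out, h, wd))
    for i in range(h):
        for j in range(wd):
            patch = xp[:, i:i + k, j:j + k]  # (c_in, k, k)
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out


def init_params(c_in, c, d, rng):
    """Random weights for one SK unit (hypothetical sizes, untrained)."""
    return {
        "w3": rng.standard_normal((c, c_in, 3, 3)) * 0.1,
        "w5": rng.standard_normal((c, c_in, 5, 5)) * 0.1,
        "fc": rng.standard_normal((d, c)) * 0.1,
        "a": rng.standard_normal((c, d)) * 0.1,
        "b": rng.standard_normal((c, d)) * 0.1,
    }


def sk_unit(x, params):
    # Split: two branches with different receptive fields, ReLU activation
    u1 = np.maximum(conv2d_same(x, params["w3"]), 0.0)
    u2 = np.maximum(conv2d_same(x, params["w5"]), 0.0)
    # Fuse: element-wise sum, global average pooling, compact descriptor z
    s = (u1 + u2).mean(axis=(1, 2))  # (c,)
    z = np.maximum(params["fc"] @ s, 0.0)  # (d,)
    # Select: per-channel softmax over the two branches
    logits = np.stack([params["a"] @ z, params["b"] @ z])  # (2, c)
    logits -= logits.max(axis=0, keepdims=True)
    att = np.exp(logits) / np.exp(logits).sum(axis=0, keepdims=True)
    v = att[0][:, None, None] * u1 + att[1][:, None, None] * u2
    return v, att


rng = np.random.default_rng(3)
fc_map = rng.random((1, 8, 8))  # stand-in for one 8x8 channel-by-channel FC matrix
params = init_params(c_in=1, c=4, d=2, rng=rng)
v, att = sk_unit(fc_map, params)
```

The attention weights for each output channel sum to 1, so the unit learns, per channel, how much to rely on the small versus the large receptive field.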

5.3. Data Processing

5.4. Discrimination Performance

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| ID | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Score | 58 | 72 | 66 | 70 | 59 | 68 | 54 | 58 | 70 | 56 |

| ID | Strategy |
|---|---|
| 1 | Imagine the movement of the screw itself |
| 2 | Imagine the movement of the screw itself |
| 3 | Imagine big arrows following the screw |
| 4 | Imagine the movement of the screw itself |
| 5 | Imagine the movement of the screw itself |
| 6 | Imagine an extending strap following the screw |
| 7 | Imagine the movement of the screw itself |
| 8 | Imagine a series of arrows following the screw |
| 9 | Imagine the movement of the screw itself |
| 10 | Imagine the movement of the screw itself |

| ID | f1 | f2 | f3 | f4 | f5 | f6 |
|---|---|---|---|---|---|---|
| 1 | p = 2.6 × 10⁻⁸, h = 1 | p = 5.5 × 10⁻¹, h = 0 | p = 7.0 × 10⁻¹, h = 0 | p = 1.8 × 10⁻¹, h = 0 | p = 3.5 × 10⁻⁵, h = 1 | p = 3.4 × 10⁻¹², h = 1 |
| 2 | p = 8.4 × 10⁻¹², h = 1 | p = 1.4 × 10⁻³, h = 1 | p = 4.0 × 10⁻², h = 1 | p = 5.8 × 10⁻⁴, h = 1 | p = 8.6 × 10⁻⁴, h = 1 | p = 9.2 × 10⁻⁶, h = 1 |
| 3 | p = 1.6 × 10⁻¹, h = 0 | p = 2.5 × 10⁻³, h = 1 | p = 2.5 × 10⁻³, h = 1 | p = 9.6 × 10⁻², h = 0 | p = 2.1 × 10⁻³, h = 1 | p = 5.2 × 10⁻⁴, h = 1 |
| 4 | p = 2.3 × 10⁻⁴, h = 1 | p = 2.9 × 10⁻², h = 1 | p = 1.7 × 10⁻², h = 1 | p = 6.5 × 10⁻¹, h = 0 | p = 1.7 × 10⁻¹, h = 0 | p = 1.2 × 10⁻⁵, h = 1 |
| 5 | p = 1.3 × 10⁻⁴, h = 1 | p = 4.3 × 10⁻⁶, h = 1 | p = 1.4 × 10⁻¹, h = 0 | p = 5.3 × 10⁻¹, h = 0 | p = 1.8 × 10⁻³, h = 1 | p = 2.3 × 10⁻⁴, h = 1 |
| 6 | p = 2.0 × 10⁻⁵, h = 1 | p = 9.8 × 10⁻⁵, h = 1 | p = 5.2 × 10⁻¹, h = 0 | p = 1.8 × 10⁻¹, h = 0 | p = 9.4 × 10⁻³, h = 1 | p = 1.3 × 10⁻⁵, h = 1 |
| 7 | p = 4.9 × 10⁻⁴, h = 1 | p = 6.2 × 10⁻⁶, h = 1 | p = 3.8 × 10⁻², h = 1 | p = 3.7 × 10⁻², h = 1 | p = 5.5 × 10⁻³, h = 1 | p = 1.7 × 10⁻², h = 1 |
| 8 | p = 4.4 × 10⁻³, h = 1 | p = 1.1 × 10⁻¹, h = 0 | p = 1.5 × 10⁻³, h = 1 | p = 4.5 × 10⁻², h = 1 | p = 1.9 × 10⁻¹, h = 0 | p = 8.0 × 10⁻⁸, h = 1 |
| 9 | p = 1.3 × 10⁻⁶, h = 1 | p = 4.5 × 10⁻³, h = 1 | p = 3.4 × 10⁻¹, h = 0 | p = 9.3 × 10⁻¹, h = 0 | p = 3.1 × 10⁻⁴, h = 1 | p = 1.1 × 10⁻⁵, h = 1 |
| 10 | p = 5.1 × 10⁻⁴, h = 1 | p = 9.9 × 10⁻², h = 0 | p = 1.5 × 10⁻¹, h = 0 | p = 7.1 × 10⁻¹, h = 0 | p = 4.5 × 10⁻⁴, h = 1 | p = 9.5 × 10⁻⁴, h = 1 |
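
The h column above is consistent with the decision rule h = 1 whenever p < 0.05 and h = 0 otherwise (for example, p = 4.0 × 10⁻² gives h = 1, while p = 9.6 × 10⁻² gives h = 0). The sketch below checks that rule against every cell and counts, per feature, how many subjects reached significance. The level α = 0.05 is inferred from the table rather than stated in the source, and the underlying statistical test is not identified here.

```python
ALPHA = 0.05  # inferred from the table, not stated in the source

# p-values for features f1..f6, one list per subject ID (copied from the table)
P_VALUES = {
    1: [2.6e-8, 5.5e-1, 7.0e-1, 1.8e-1, 3.5e-5, 3.4e-12],
    2: [8.4e-12, 1.4e-3, 4.0e-2, 5.8e-4, 8.6e-4, 9.2e-6],
    3: [1.6e-1, 2.5e-3, 2.5e-3, 9.6e-2, 2.1e-3, 5.2e-4],
    4: [2.3e-4, 2.9e-2, 1.7e-2, 6.5e-1, 1.7e-1, 1.2e-5],
    5: [1.3e-4, 4.3e-6, 1.4e-1, 5.3e-1, 1.8e-3, 2.3e-4],
    6: [2.0e-5, 9.8e-5, 5.2e-1, 1.8e-1, 9.4e-3, 1.3e-5],
    7: [4.9e-4, 6.2e-6, 3.8e-2, 3.7e-2, 5.5e-3, 1.7e-2],
    8: [4.4e-3, 1.1e-1, 1.5e-3, 4.5e-2, 1.9e-1, 8.0e-8],
    9: [1.3e-6, 4.5e-3, 3.4e-1, 9.3e-1, 3.1e-4, 1.1e-5],
    10: [5.1e-4, 9.9e-2, 1.5e-1, 7.1e-1, 4.5e-4, 9.5e-4],
}
# Reported h values for f1..f6 (copied from the table)
H_REPORTED = {
    1: [1, 0, 0, 0, 1, 1],
    2: [1, 1, 1, 1, 1, 1],
    3: [0, 1, 1, 0, 1, 1],
    4: [1, 1, 1, 0, 0, 1],
    5: [1, 1, 0, 0, 1, 1],
    6: [1, 1, 0, 0, 1, 1],
    7: [1, 1, 1, 1, 1, 1],
    8: [1, 0, 1, 1, 0, 1],
    9: [1, 1, 0, 0, 1, 1],
    10: [1, 0, 0, 0, 1, 1],
}


def decide(p, alpha=ALPHA):
    """Reject the null hypothesis (h = 1) when p falls below alpha."""
    return 1 if p < alpha else 0


# Cells where the rule disagrees with the reported h (subject ID, feature index)
mismatches = [
    (subject, k + 1)
    for subject, ps in P_VALUES.items()
    for k, p in enumerate(ps)
    if decide(p) != H_REPORTED[subject][k]
]

# Number of subjects for which each feature f1..f6 reached significance
significant_per_feature = [
    sum(decide(ps[k]) for ps in P_VALUES.values()) for k in range(6)
]
```

Under this rule there are no mismatches; f6 is significant for all ten subjects and f1 for nine, while f4 is significant for only three.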

| Parameter | Value |
|---|---|
| Ratio of training set to testing set | 3:1 |
| Optimizer | Adam |
| Loss function | Binary cross-entropy |
| Learning rate | 5 × 10⁻⁴ |
| Dropout value | 0.2 |
| Training epochs | 400 |
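
The settings above can be collected into one configuration object. The sketch below does this with a plain Python dataclass and shows one way to realize the 3:1 training-to-testing ratio by shuffling trial indices. The class and function names, the shuffling, the seed, and the example trial count are hypothetical; how trials were actually assigned to the two sets is not stated here.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Training settings copied from the table (class name is hypothetical)."""
    train_to_test: tuple = (3, 1)  # ratio of training set to testing set
    optimizer: str = "adam"
    loss: str = "binary_crossentropy"
    learning_rate: float = 5e-4
    dropout: float = 0.2
    epochs: int = 400


def split_indices(n_trials, cfg, seed=0):
    """Shuffle trial indices and split them by cfg.train_to_test (shuffling is an assumption)."""
    idx = list(range(n_trials))
    random.Random(seed).shuffle(idx)
    n_train_parts, n_test_parts = cfg.train_to_test
    n_train = n_trials * n_train_parts // (n_train_parts + n_test_parts)
    return idx[:n_train], idx[n_train:]


cfg = TrainConfig()
train_idx, test_idx = split_indices(200, cfg)  # 200 trials is an arbitrary example
```

If the model were built in Keras (an assumption; no framework is named here), these values would map onto `model.compile(optimizer=keras.optimizers.Adam(learning_rate=cfg.learning_rate), loss="binary_crossentropy")`, `keras.layers.Dropout(cfg.dropout)`, and `model.fit(..., epochs=cfg.epochs)`.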

| ID | OD+CNN | PSD+SVM | HHTMS+SVM | CSP+SVM | Cross-Correlation+SKN | Coherence+SKN | Three Inputs+MFFM |
|---|---|---|---|---|---|---|---|
| 1 | 0.70 | 0.68 | 0.52 | 0.85 | 0.84 | 0.75 | 0.87 |
| 2 | 0.75 | 0.72 | 0.62 | 0.87 | 0.87 | 0.84 | 0.93 |
| 3 | 0.76 | 0.68 | 0.65 | 0.75 | 0.78 | 0.84 | 0.86 |
| 4 | 0.68 | 0.73 | 0.66 | 0.87 | 0.74 | 0.65 | 0.75 |
| 5 | 0.60 | 0.70 | 0.65 | 0.76 | 0.75 | 0.78 | 0.83 |
| 6 | 0.70 | 0.70 | 0.68 | 0.81 | 0.82 | 0.88 | 0.92 |
| 7 | 0.72 | 0.67 | 0.66 | 0.79 | 0.72 | 0.59 | 0.63 |
| 8 | 0.68 | 0.61 | 0.60 | 0.82 | 0.71 | 0.66 | 0.73 |
| 9 | 0.80 | 0.69 | 0.62 | 0.84 | 0.83 | 0.83 | 0.88 |
| 10 | 0.63 | 0.55 | 0.58 | 0.80 | 0.78 | 0.68 | 0.76 |
| Average | 0.70 | 0.67 | 0.62 | 0.82 | 0.78 | 0.75 | 0.82 |
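
The Average row follows directly from the per-subject rows. The sketch below recomputes each method's mean accuracy over the ten subjects (values copied from the table) and rounds it to two decimals. It also shows that CSP+SVM and Three Inputs+MFFM both average 0.816 before rounding, so their 0.82 tie in the table holds at three decimals as well.

```python
# Per-subject accuracies for subjects 1..10, copied from the table
ACCURACY = {
    "OD+CNN": [0.70, 0.75, 0.76, 0.68, 0.60, 0.70, 0.72, 0.68, 0.80, 0.63],
    "PSD+SVM": [0.68, 0.72, 0.68, 0.73, 0.70, 0.70, 0.67, 0.61, 0.69, 0.55],
    "HHTMS+SVM": [0.52, 0.62, 0.65, 0.66, 0.65, 0.68, 0.66, 0.60, 0.62, 0.58],
    "CSP+SVM": [0.85, 0.87, 0.75, 0.87, 0.76, 0.81, 0.79, 0.82, 0.84, 0.80],
    "Cross-Correlation+SKN": [0.84, 0.87, 0.78, 0.74, 0.75, 0.82, 0.72, 0.71, 0.83, 0.78],
    "Coherence+SKN": [0.75, 0.84, 0.84, 0.65, 0.78, 0.88, 0.59, 0.66, 0.83, 0.68],
    "Three Inputs+MFFM": [0.87, 0.93, 0.86, 0.75, 0.83, 0.92, 0.63, 0.73, 0.88, 0.76],
}

# Unrounded mean accuracy per method
means = {method: sum(accs) / len(accs) for method, accs in ACCURACY.items()}
# Rounded to two decimals, as in the Average row
averages = {method: round(m, 2) for method, m in means.items()}
```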

| Method | CSP+SVM | Cross-Correlation+SKN | Coherence+SKN | Multi-Input+MFFM |
|---|---|---|---|---|
| OD+CNN | 1.08 × 10⁻⁴ | 1.30 × 10⁻³ | 0.17 | 4.90 × 10⁻³ |
| PSD+SVM | 3.57 × 10⁻⁶ | 2.13 × 10⁻⁵ | 3.93 × 10⁻² | 6.45 × 10⁻⁴ |
| HHTMS+SVM | 2.00 × 10⁻⁸ | 3.14 × 10⁻⁸ | 1.90 × 10⁻³ | 2.20 × 10⁻⁵ |
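
The test that produced these p-values is not named here. Purely as an illustration, the sketch below computes a pooled-variance (Student's) unpaired two-sample t statistic over the ten per-subject accuracies listed in the accuracy table. Treating subjects as independent samples is an assumption; a paired test would be an equally plausible design. For OD+CNN versus CSP+SVM this gives t ≈ 4.93 with 18 degrees of freedom.

```python
import math
from statistics import mean, variance


def student_t_unpaired(a, b):
    """Pooled-variance two-sample t statistic (mean(b) - mean(a)) and its degrees of freedom."""
    na, nb = len(a), len(b)
    # statistics.variance is the sample variance (n - 1 denominator)
    sp2 = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    se = math.sqrt(sp2 * (1 / na + 1 / nb))
    return (mean(b) - mean(a)) / se, na + nb - 2


# Per-subject accuracies copied from the accuracy table
OD_CNN = [0.70, 0.75, 0.76, 0.68, 0.60, 0.70, 0.72, 0.68, 0.80, 0.63]
CSP_SVM = [0.85, 0.87, 0.75, 0.87, 0.76, 0.81, 0.79, 0.82, 0.84, 0.80]

t, df = student_t_unpaired(OD_CNN, CSP_SVM)
```

Converting t to a p-value requires the t distribution; `scipy.stats.ttest_ind(a, b)` returns both the statistic and the two-sided p-value, and `scipy.stats.ttest_rel` is the paired counterpart.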

| ID | Method | Accuracy | AUC | Precision | Recall | F-Measure |
|---|---|---|---|---|---|---|
| 1 | MFFM | 0.87 | 0.87 | 0.85 | 0.85 | 0.85 |
| 2 | MFFM | 0.93 | 0.93 | 0.91 | 0.91 | 0.91 |
| 3 | MFFM | 0.86 | 0.84 | 0.83 | 0.83 | 0.83 |
| 4 | CSP+SVM | 0.87 | 0.86 | 0.85 | 0.85 | 0.85 |
| 5 | MFFM | 0.83 | 0.83 | 0.82 | 0.82 | 0.82 |
| 6 | MFFM | 0.92 | 0.92 | 0.90 | 0.90 | 0.90 |
| 7 | CSP+SVM | 0.79 | 0.79 | 0.78 | 0.78 | 0.78 |
| 8 | CSP+SVM | 0.82 | 0.80 | 0.81 | 0.81 | 0.80 |
| 9 | MFFM | 0.88 | 0.88 | 0.87 | 0.87 | 0.87 |
| 10 | CSP+SVM | 0.80 | 0.79 | 0.78 | 0.78 | 0.78 |
| Average | -- | 0.86 | 0.85 | 0.84 | 0.84 | 0.84 |
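
The Method column agrees with choosing, for each subject, the highest-accuracy entry in the accuracy table; for every subject that winner is either CSP+SVM or the fused MFFM model. The sketch below reproduces that selection from those two columns and recovers the 0.86 average accuracy. It only re-derives the tabulated numbers.

```python
# Per-subject accuracies for subjects 1..10, copied from the accuracy table
CSP_SVM = [0.85, 0.87, 0.75, 0.87, 0.76, 0.81, 0.79, 0.82, 0.84, 0.80]
MFFM = [0.87, 0.93, 0.86, 0.75, 0.83, 0.92, 0.63, 0.73, 0.88, 0.76]

# For each subject keep whichever method scored higher (no ties occur in these data)
best = []
for subject, (csp, fused) in enumerate(zip(CSP_SVM, MFFM), start=1):
    if fused > csp:
        best.append((subject, "MFFM", fused))
    else:
        best.append((subject, "CSP+SVM", csp))

average_accuracy = sum(acc for _, _, acc in best) / len(best)
```

The unrounded average is 0.857, which the table reports as 0.86.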

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cao, B.; Niu, H.; Hao, J.; Yang, X.; Ye, Z. Spatial Visual Imagery (SVI)-Based Electroencephalograph Discrimination for Natural CAD Manipulation. Sensors 2024, 24, 785. https://doi.org/10.3390/s24030785

Cao B, Niu H, Hao J, Yang X, Ye Z. Spatial Visual Imagery (SVI)-Based Electroencephalograph Discrimination for Natural CAD Manipulation. Sensors. 2024; 24(3):785. https://doi.org/10.3390/s24030785

Chicago/Turabian Style: Cao, Beining, Hongwei Niu, Jia Hao, Xiaonan Yang, and Zinian Ye. 2024. "Spatial Visual Imagery (SVI)-Based Electroencephalograph Discrimination for Natural CAD Manipulation" Sensors 24, no. 3: 785. https://doi.org/10.3390/s24030785

APA Style: Cao, B., Niu, H., Hao, J., Yang, X., & Ye, Z. (2024). Spatial Visual Imagery (SVI)-Based Electroencephalograph Discrimination for Natural CAD Manipulation. Sensors, 24(3), 785. https://doi.org/10.3390/s24030785