XAI GNSS—A Comprehensive Study on Signal Quality Assessment of GNSS Disruptions Using Explainable AI Technique

Abstract

1. Introduction

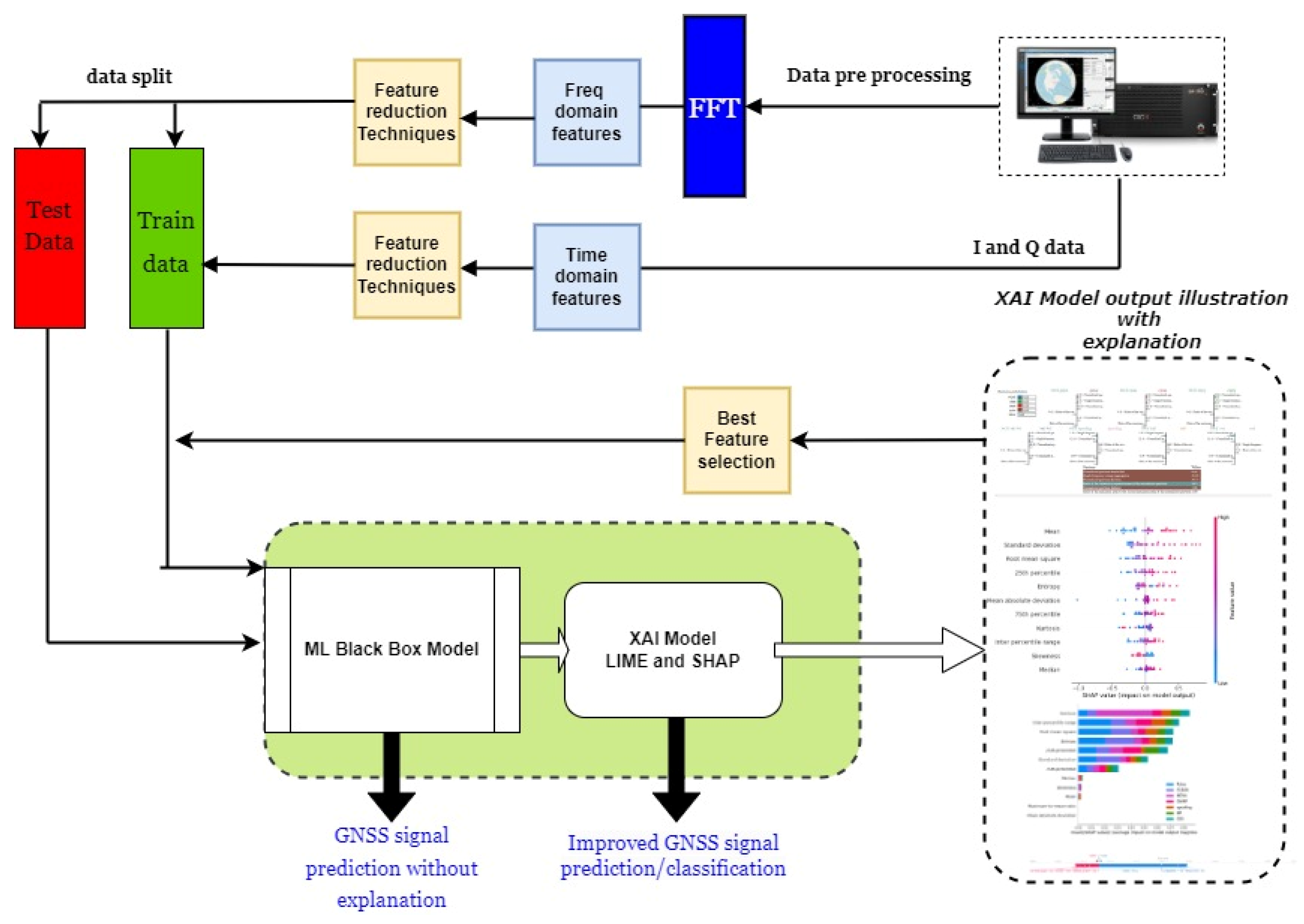

- In this paper, we recorded a synthetic GNSS dataset under various disruptions at different Jamming-to-Signal Ratio (JSR) levels using the Skydel simulator. The dataset was used to evaluate the performance of different ML models on signal prediction and classification tasks.

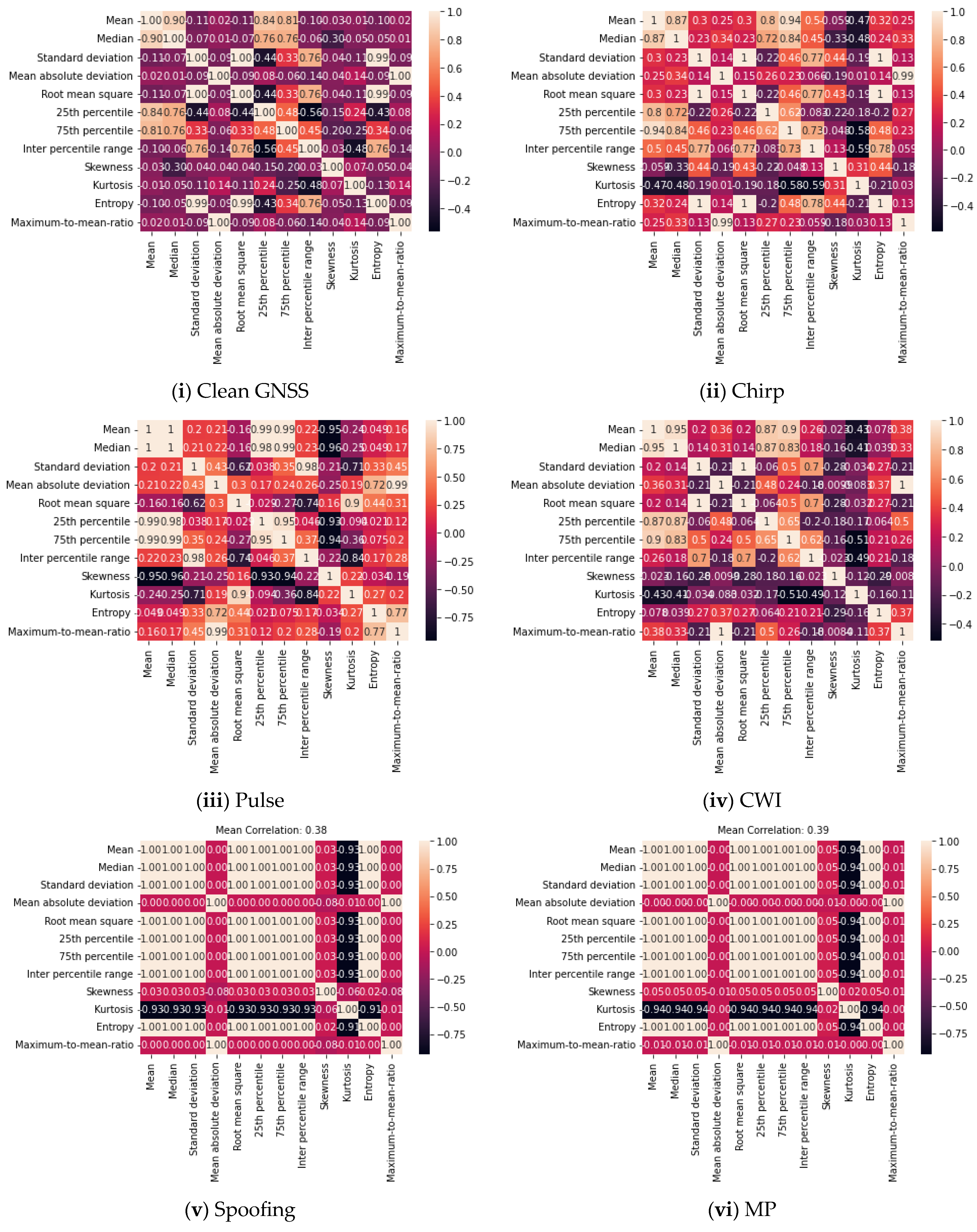

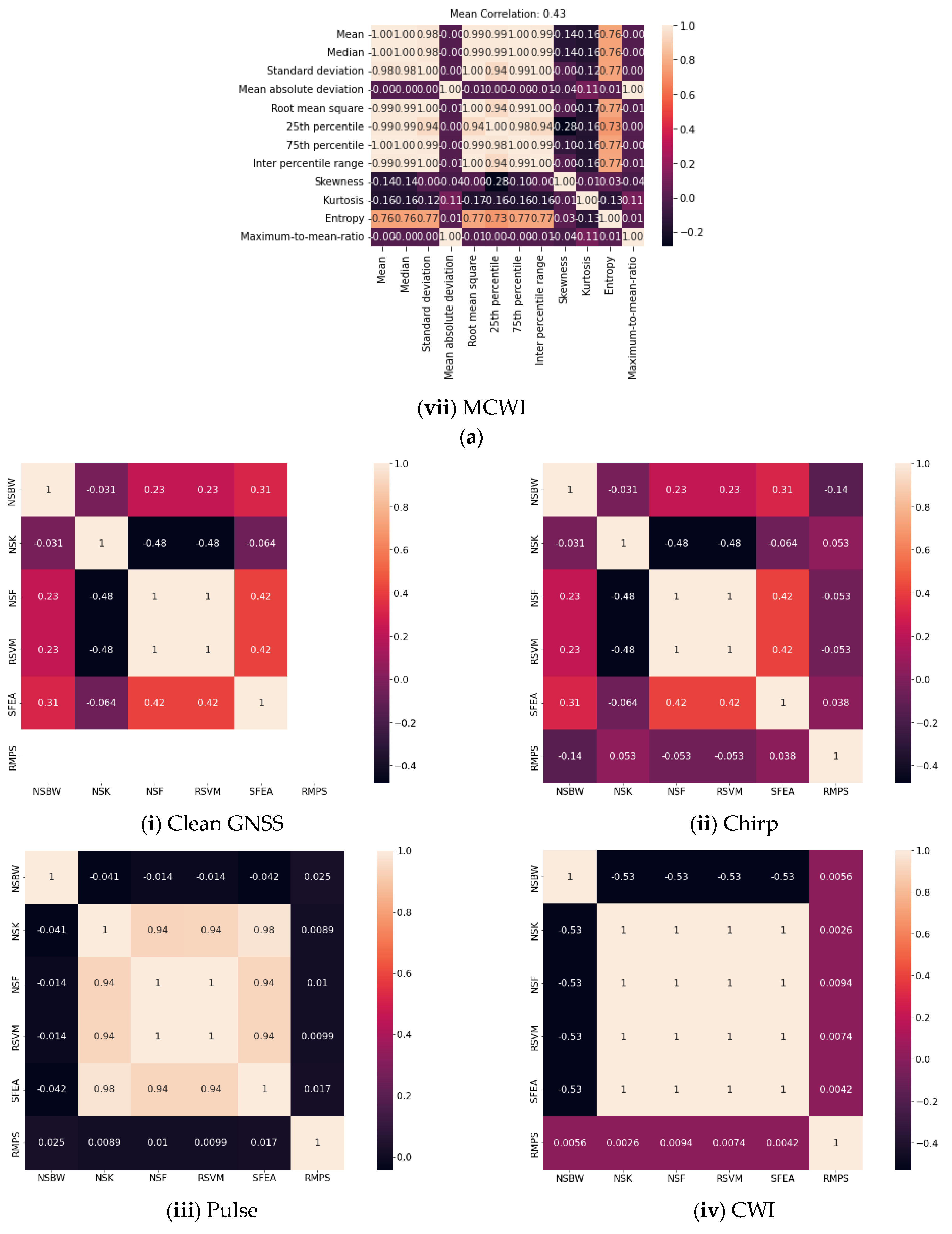

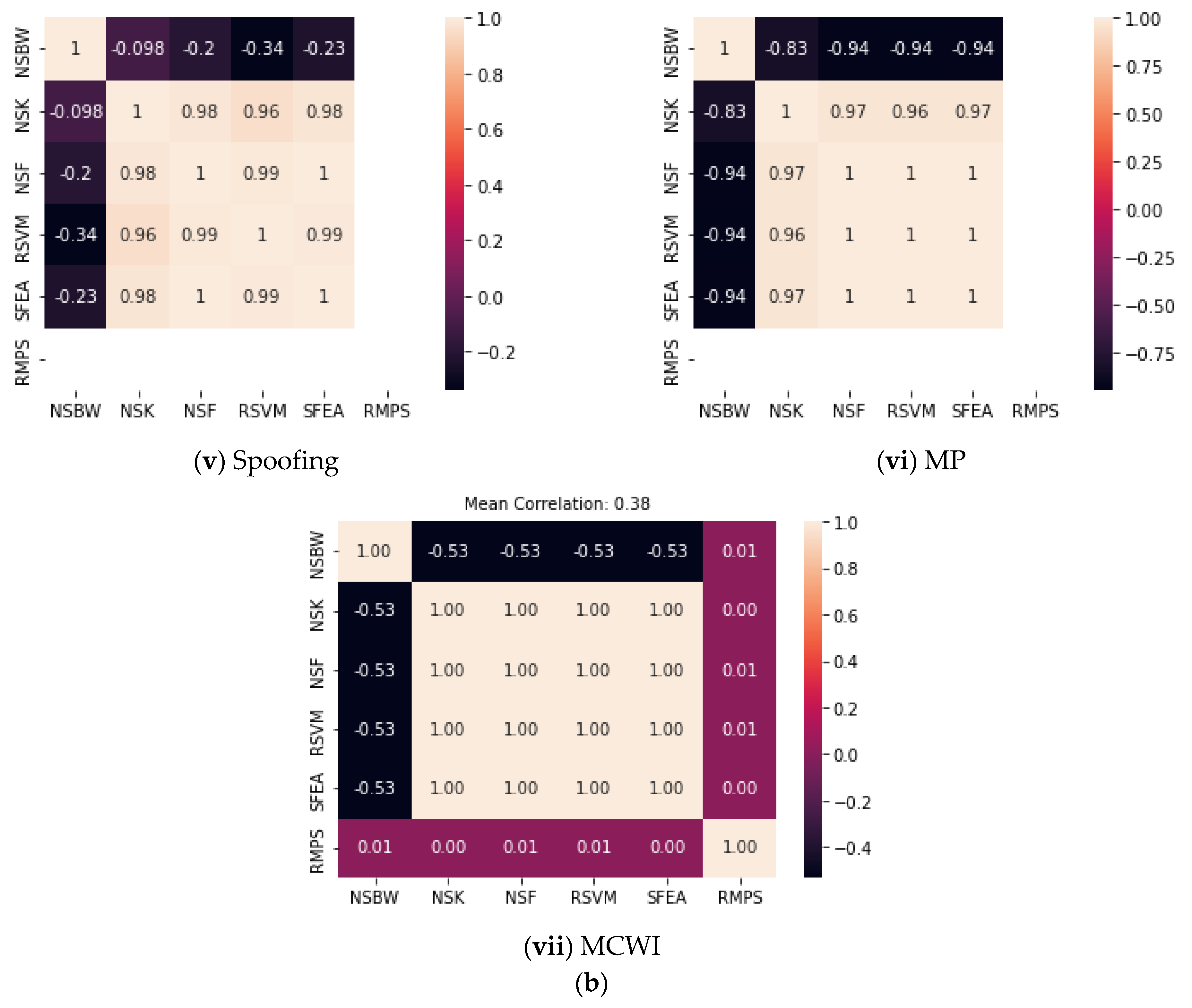

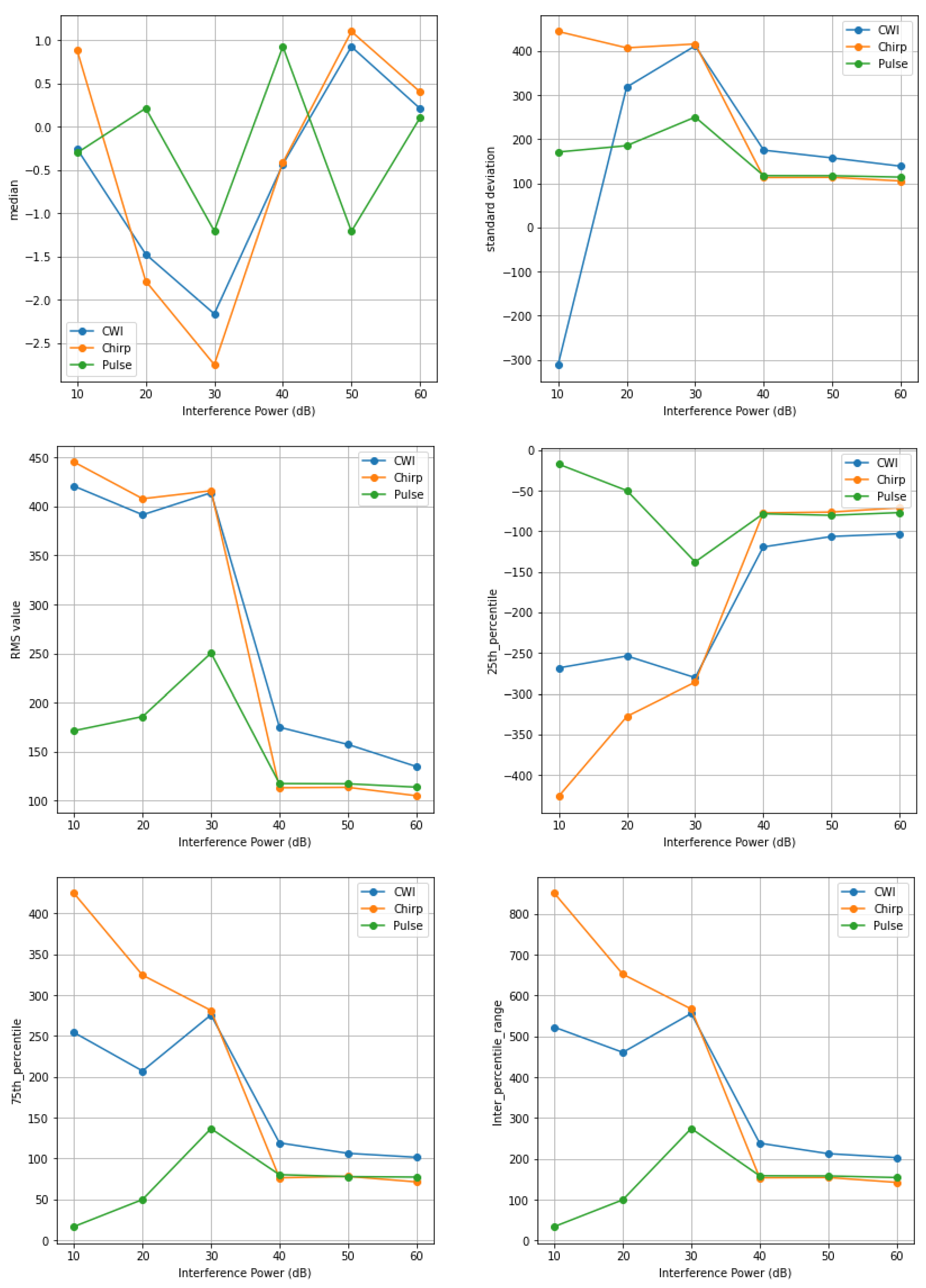

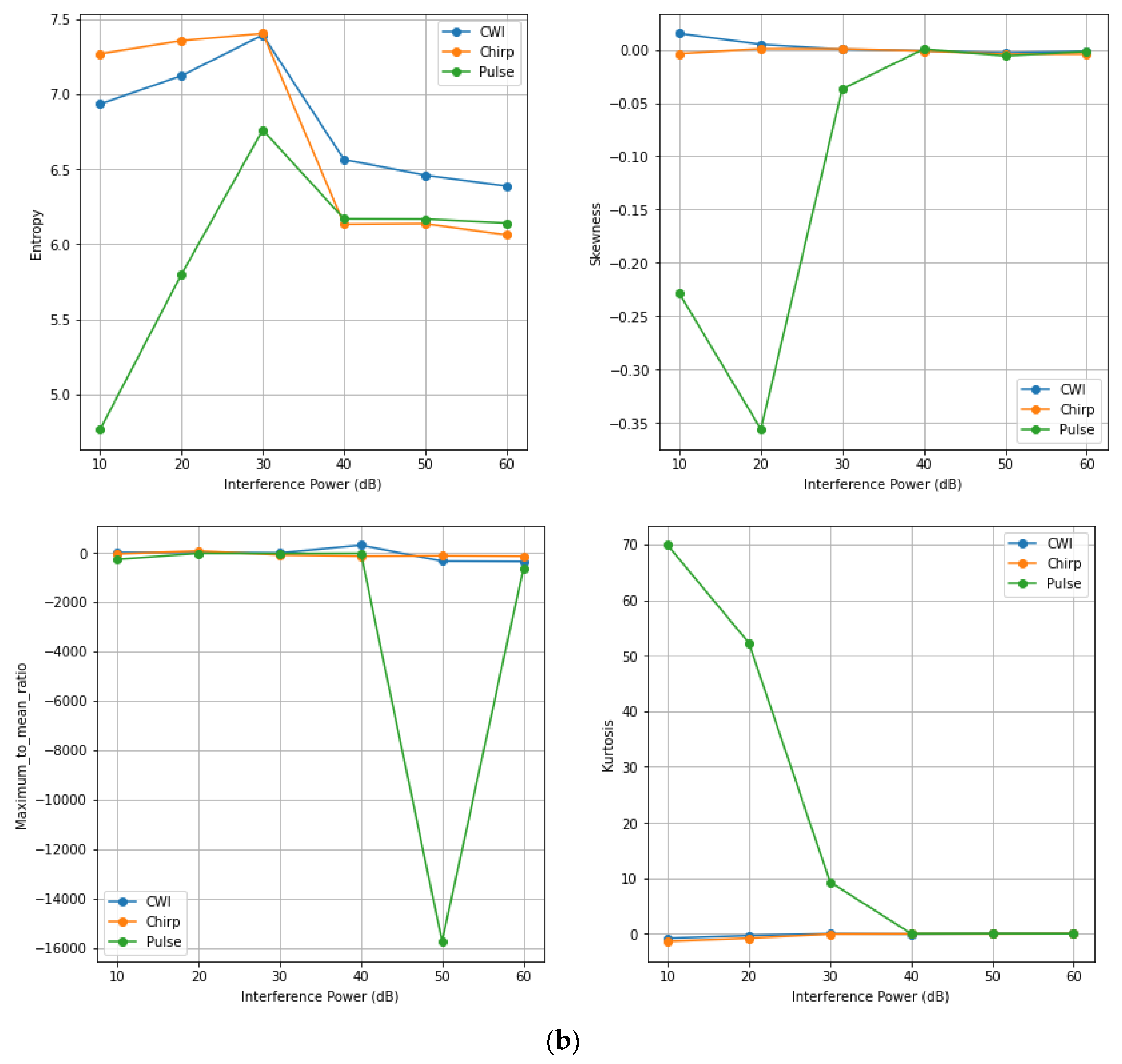

- We extracted a set of time-domain and frequency-domain attributes, described them with statistical parameters, and compared them to identify strongly and weakly correlated features, as visualized in the correlation plot.

- We used the global (SHAP) and local (LIME) XAI explanation models to identify the most influential features, presenting them as feature rankings, a summary plot, and a force plot with detailed explanations. Using only these key features to predict and classify the signal disruptions improved the evaluation metrics.
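The correlation screening described in the contributions above can be sketched as follows. This is a minimal illustration, assuming a feature matrix with one row per signal window and one column per feature; the use of the Pearson coefficient, the 0.8 threshold, and the helper name `correlated_pairs` are assumptions for the example, not details taken from the study.

```python
import numpy as np


def correlated_pairs(features, names, threshold=0.8):
    """Return the Pearson matrix and the feature pairs with |r| >= threshold.

    `features` is an (n_samples, n_features) array; `threshold` is a hypothetical cut-off.
    """
    corr = np.corrcoef(features, rowvar=False)  # columns are treated as variables
    pairs = []
    n = corr.shape[0]
    for i in range(n):
        for j in range(i + 1, n):  # upper triangle only, diagonal skipped
            if abs(corr[i, j]) >= threshold:
                pairs.append((names[i], names[j], float(corr[i, j])))
    return corr, pairs


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    mean = rng.normal(size=200)
    median = mean + 0.05 * rng.normal(size=200)  # toy feature that nearly duplicates `mean`
    kurt = rng.normal(size=200)                  # toy feature independent of both
    X = np.column_stack([mean, median, kurt])
    _, pairs = correlated_pairs(X, ["mean", "median", "kurtosis"])
    for a, b, r in pairs:
        print(f"{a} ~ {b}: r = {r:.2f}")
```

Strongly correlated pairs flagged this way carry largely redundant information, so one member of each pair is usually a candidate for removal before training.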

2. Literature Review

3. GNSS Signal Disruption Simulation

GNSS Signal Quality Monitoring Setup
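The dataset described in the Introduction was recorded at several Jamming-to-Signal Ratio levels, where JSR (dB) = 10 log10(P_J / P_S) for jammer power P_J and signal power P_S. The sketch below shows how a continuous-wave (CW) interferer could be scaled to a target JSR on a toy complex baseband record. It is an illustration only: the sample rate, tone offset, BPSK-like signal, and function names are hypothetical, and the study itself generated its disruptions with the Skydel simulator rather than with code like this.

```python
import numpy as np


def add_cw_jammer(signal, jsr_db, tone_hz, fs):
    """Add a complex CW tone to `signal` so that P_J / P_S equals `jsr_db` (in dB)."""
    p_s = np.mean(np.abs(signal) ** 2)          # average signal power
    p_j = p_s * 10.0 ** (jsr_db / 10.0)         # jammer power required for the target JSR
    n = np.arange(signal.size)
    tone = np.sqrt(p_j) * np.exp(2j * np.pi * tone_hz * n / fs)  # constant power p_j
    return signal + tone, tone


def measured_jsr_db(signal, jammer):
    """JSR in dB computed from the separate signal and jammer components."""
    return 10.0 * np.log10(np.mean(np.abs(jammer) ** 2) / np.mean(np.abs(signal) ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    fs = 4e6                                    # hypothetical sample rate (Hz)
    sig = rng.choice([-1.0, 1.0], size=4000).astype(complex)  # toy BPSK-like sequence
    for jsr in (10, 20, 30):
        _, jam = add_cw_jammer(sig, jsr, tone_hz=250e3, fs=fs)
        print(jsr, round(measured_jsr_db(sig, jam), 2))
```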

4. Statistical Quality Assessment of GNSS Signal

4.1. Time Domain Features

1. Mean
2. Median
3. Standard Deviation
4. Mean Absolute Deviation
5. Root Mean Square Error
6. 25th Percentile
7. 75th Percentile
8. Inter-percentile Range
9. Skewness
10. Kurtosis
11. Entropy
12. Maximum-to-Mean Ratio (MTMR)
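Only the feature names are listed above. The sketch below computes all twelve for one window of samples, assuming common textbook definitions rather than the paper's own formulas: population statistics (ddof = 0), mean absolute deviation about the mean, the "root mean square error" entry taken as the RMS value of the samples, inter-percentile range as the 75th minus the 25th percentile, excess kurtosis, Shannon entropy (in bits) of a 32-bin amplitude histogram, and MTMR as the maximum of |x| divided by its mean. The function name `time_domain_features` and the `bins` parameter are hypothetical.

```python
import numpy as np


def time_domain_features(x, bins=32):
    """Twelve time-domain features of a 1-D sample window `x` (assumed definitions)."""
    x = np.asarray(x, dtype=float)
    mean = x.mean()
    std = x.std()                                    # population std; assumes a non-constant window
    centred = x - mean
    p25, p75 = np.percentile(x, [25, 75])
    hist, _ = np.histogram(x, bins=bins)
    prob = hist[hist > 0] / hist.sum()               # empirical probabilities of occupied bins
    amp = np.abs(x)
    return {
        "mean": mean,
        "median": np.median(x),
        "std": std,
        "mad": np.mean(np.abs(centred)),             # mean absolute deviation about the mean
        "rms": np.sqrt(np.mean(x ** 2)),             # listed above as "Root Mean Square Error"
        "p25": p25,
        "p75": p75,
        "ipr": p75 - p25,                            # inter-percentile range
        "skewness": np.mean(centred ** 3) / std ** 3,
        "kurtosis": np.mean(centred ** 4) / std ** 4 - 3.0,  # excess kurtosis
        "entropy": -np.sum(prob * np.log2(prob)),    # Shannon entropy in bits
        "mtmr": amp.max() / amp.mean(),              # maximum-to-mean ratio of |x|
    }


if __name__ == "__main__":
    rng = np.random.default_rng(2)
    for name, value in time_domain_features(rng.normal(size=5000)).items():
        print(f"{name:9s} {value: .4f}")
```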

4.2. Frequency Domain Features

1. Normalized Spectrum Bandwidth (NSBW)
2. Normalized Spectrum Kurtosis (NSK)
3. Normalized Spectrum Flatness (NSF)
4. Ratio of the Variance to the Squared Mean of the Normalized Spectrum (RVSM)
5. Single-Frequency Energy Aggregation (SFEA)
6. Ratio of the Maximum Peak to the Second Maximum Peak of the Normalized Spectrum (RMPS)
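As with the time-domain list, only the feature names appear here. The following sketch uses one plausible set of definitions, all of them assumptions: the normalized spectrum is the FFT power spectrum scaled to unit sum; NSBW is the fraction of bins within 3 dB of the peak; NSK is the (non-excess) kurtosis of the normalized spectrum values; NSF is their geometric-to-arithmetic mean ratio; RVSM is their variance over their squared mean; SFEA is the largest single-bin share of energy; and RMPS compares the two strongest local maxima. The helper name and the small `eps` guard against log(0) are also illustrative.

```python
import numpy as np


def frequency_domain_features(x, eps=1e-12):
    """Six spectral features of a baseband record `x` (assumed definitions)."""
    power = np.abs(np.fft.fft(np.asarray(x))) ** 2     # unscaled periodogram
    s = power / power.sum()                              # unit-sum normalized spectrum
    mu, var = s.mean(), s.var()
    # Local maxima of the circular spectrum, strongest first.
    is_peak = (s > np.roll(s, 1)) & (s >= np.roll(s, -1))
    peaks = np.sort(s[is_peak])[::-1]
    rmps = peaks[0] / peaks[1] if peaks.size > 1 else np.inf
    return {
        "nsbw": np.mean(s >= 0.5 * s.max()),             # share of bins within 3 dB of the peak
        "nsk": np.mean((s - mu) ** 4) / var ** 2,        # kurtosis of the spectrum values
        "nsf": np.exp(np.mean(np.log(s + eps))) / mu,    # geometric mean / arithmetic mean
        "rvsm": var / mu ** 2,                           # variance over squared mean
        "sfea": s.max(),                                 # largest single-bin energy share
        "rmps": rmps,                                    # strongest peak / second peak
    }


if __name__ == "__main__":
    n = np.arange(1024)
    rng = np.random.default_rng(3)
    noise = rng.normal(size=1024) + 1j * rng.normal(size=1024)
    cwi = noise + 10 * np.exp(2j * np.pi * 0.1 * n)      # toy CW interferer on the noise
    for label, rec in (("noise", noise), ("noise + CW", cwi)):
        print(label, {k: round(float(v), 3) for k, v in frequency_domain_features(rec).items()})
```

Under these definitions a narrowband interferer such as CWI concentrates energy in a few bins, which pushes SFEA, RVSM, and NSK up and NSF down relative to a noise-like clean record.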

5. XAI Analysis for GNSS Signal Disruption Classification

5.1. Data Preparation

5.2. ML Algorithm Training and Testing

5.3. Local Explanations Results—LIME Technique

5.4. Local Explanations Results—SHAP Technique

6. Discussion

Major Findings and Future Scope

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


(a)

| AI Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| DT | 74 | 74 | 75 | 74 |
| RF | 75 | 75 | 75 | 75 |
| KNN | 79 | 79 | 79 | 79 |
| SVM | 81 | 82 | 81 | 81 |
| AdaBoost | 29 | 29 | 43 | 33 |

(b)

| AI Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| DT | 66 | 65 | 64 | 65 |
| RF | 71 | 73 | 72 | 73 |
| KNN | 41 | 41 | 41 | 43 |
| SVM | 16 | 12 | 16 | 6 |
| AdaBoost | 57 | 48 | 57 | 50 |

(a)

| AI Model | Class | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| DT | MCWI | 100 | 100 | 100 |
| | MP | 100 | 97 | 99 |
| | Chirp | 19 | 23 | 20 |
| | Clean | 3 | 3 | 3 |
| | CWI | 100 | 100 | 100 |
| | Pulse | 100 | 100 | 100 |
| | Spoofing | 98 | 100 | 99 |
| RF | MCWI | 100 | 100 | 100 |
| | MP | 100 | 97 | 99 |
| | Chirp | 80 | 70 | 80 |
| | Clean | 16 | 17 | 17 |
| | CWI | 100 | 100 | 100 |
| | Pulse | 100 | 100 | 100 |
| | Spoofing | 98 | 100 | 99 |
| KNN | MCWI | 100 | 100 | 100 |
| | MP | 100 | 97 | 99 |
| | Chirp | 31 | 35 | 33 |
| | Clean | 26 | 23 | 24 |
| | CWI | 100 | 100 | 100 |
| | Pulse | 100 | 100 | 100 |
| | Spoofing | 98 | 100 | 99 |
| SVM | MCWI | 91 | 100 | 95 |
| | MP | 100 | 97 | 99 |
| | Chirp | 50 | 68 | 57 |
| | Clean | 38 | 33 | 35 |
| | CWI | 100 | 72 | 84 |
| | Pulse | 100 | 97 | 99 |
| | Spoofing | 98 | 100 | 99 |
| AdaBoost | MCWI | 0 | 0 | 0 |
| | MP | 0 | 0 | 0 |
| | Chirp | 50 | 100 | 67 |
| | Clean | 0 | 0 | 0 |
| | CWI | 100 | 100 | 100 |
| | Pulse | 50 | 100 | 67 |
| | Spoofing | 0 | 0 | 0 |

(b)

| AI Model | Class | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| DT | MCWI | 100 | 99 | 100 |
| | MP | 99 | 100 | 99 |
| | Chirp | 100 | 100 | 100 |
| | Clean | 100 | 100 | 100 |
| | CWI | 100 | 100 | 100 |
| | Pulse | 100 | 100 | 100 |
| | Spoofing | 100 | 100 | 100 |
| RF | MCWI | 100 | 100 | 100 |
| | MP | 100 | 97 | 99 |
| | Chirp | 80 | 70 | 80 |
| | Clean | 16 | 17 | 17 |
| | CWI | 100 | 100 | 100 |
| | Pulse | 100 | 100 | 100 |
| | Spoofing | 98 | 100 | 99 |
| KNN | MCWI | 34 | 39 | 36 |
| | MP | 27 | 43 | 33 |
| | Chirp | 40 | 42 | 41 |
| | Clean | 26 | 25 | 26 |
| | CWI | 99 | 91 | 95 |
| | Pulse | 42 | 31 | 36 |
| | Spoofing | 30 | 17 | 22 |
| SVM | MCWI | 0 | 0 | 0 |
| | MP | 32 | 4 | 8 |
| | Chirp | 0 | 0 | 0 |
| | Clean | 28 | 6 | 9 |
| | CWI | 100 | 72 | 84 |
| | Pulse | 6 | 1 | 1 |
| | Spoofing | 98 | 100 | 99 |
| AdaBoost | MCWI | 33 | 99 | 50 |
| | MP | 0 | 0 | 0 |
| | Chirp | 4 | 12 | 14 |
| | Clean | 0 | 0 | 0 |
| | CWI | 100 | 100 | 100 |
| | Pulse | 100 | 100 | 100 |
| | Spoofing | 0 | 0 | 0 |
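The per-class precision, recall, and F1-score values above are standard confusion-matrix quantities (reported in percent; the function below returns fractions). A minimal NumPy sketch, assuming integer class labels; the function name is hypothetical, and the macro average printed in the usage example is an assumption, since the averaging scheme behind the summary tables is not stated in this section.

```python
import numpy as np


def per_class_metrics(y_true, y_pred, n_classes):
    """Confusion matrix, per-class precision/recall/F1, and overall accuracy.

    Undefined ratios (a class never predicted or never present) are reported as 0.
    """
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)              # rows: true class, columns: predicted class
    tp = np.diag(cm).astype(float)
    pred_totals = cm.sum(axis=0)
    true_totals = cm.sum(axis=1)
    precision = np.divide(tp, pred_totals, out=np.zeros_like(tp), where=pred_totals > 0)
    recall = np.divide(tp, true_totals, out=np.zeros_like(tp), where=true_totals > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return cm, precision, recall, f1, tp.sum() / cm.sum()


if __name__ == "__main__":
    classes = ["MCWI", "MP", "Chirp", "Clean", "CWI", "Pulse", "Spoofing"]
    rng = np.random.default_rng(6)
    y_true = rng.integers(0, len(classes), size=500)
    # Toy predictions: each label is kept with probability 0.8, otherwise replaced at random.
    y_pred = np.where(rng.random(500) < 0.8, y_true, rng.integers(0, len(classes), size=500))
    _, p, r, f1, acc = per_class_metrics(y_true, y_pred, len(classes))
    for name, pi, ri, fi in zip(classes, p, r, f1):
        print(f"{name:9s} P={100 * pi:5.1f} R={100 * ri:5.1f} F1={100 * fi:5.1f}")
    print(f"accuracy = {100 * acc:.1f}%, macro F1 = {100 * f1.mean():.1f}%")
```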

(a)

| Feature | DT | RF | KNN | SVM | AdaBoost | Average | Rank |
|---|---|---|---|---|---|---|---|
| Normalized spectrum kurtosis | 1 | 1 | 1 | 1 | 2 | 1 | 1 |
| Ratio of the variance to the squared mean of the normalized spectrum | 6 | 2 | 4 | 3 | 1 | 2.6 | 3 |
| Normalized spectrum flatness | 2 | 4 | 3 | 4 | 4 | 2.83 | 3 |
| Ratio of the maximum peak to the second maximum peak of the normalized spectrum | 5 | 6 | 5 | 5 | 5 | 4.3 | 4 |
| Normalized spectrum bandwidth | 3 | 5 | 6 | 6 | 6 | 4.3 | 4 |
| Single-frequency energy aggregation | 4 | 3 | 2 | 2 | 3 | 2.3 | 2 |

(b)

| Feature | DT | RF | KNN | SVM | AdaBoost | Average | Rank |
|---|---|---|---|---|---|---|---|
| Mean | 10 | 10 | 3 | 2 | 12 | 7.4 | 7 |
| Median | 9 | 9 | 2 | 3 | 11 | 6.8 | 7 |
| Standard deviation | 5 | 5 | 8 | 12 | 4 | 6.8 | 7 |
| Mean absolute deviation | 8 | 12 | 1 | 1 | 10 | 6.4 | 6 |
| Root mean square error | 1 | 2 | 7 | 4 | 6 | 4 | 4 |
| 25th percentile | 9 | 6 | 6 | 6 | 1 | 5.6 | 6 |
| 75th percentile | 6 | 7 | 4 | 5 | 9 | 6.2 | 6 |
| Inter-percentile range | 3 | 4 | 5 | 7 | 8 | 5.4 | 5 |
| Skewness | 8 | 8 | 9 | 8 | 3 | 7.2 | 7 |
| Kurtosis | 2 | 3 | 11 | 9 | 2 | 5.4 | 5 |
| Entropy | 4 | 1 | 10 | 10 | 5 | 6 | 6 |
| Maximum-to-mean ratio | 7 | 11 | 12 | 11 | 7 | 9.6 | 10 |
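The Average and Rank columns in panel (b) are consistent with taking the arithmetic mean of the five per-model ranks and rounding it to the nearest integer. The sketch below reproduces that aggregation for a subset of the panel (b) rows; the rounding rule is inferred from the table values rather than stated in the text, and the names `RANKS` and `aggregate_ranks` are hypothetical.

```python
from statistics import mean

# Per-model ranks (DT, RF, KNN, SVM, AdaBoost) copied from panel (b).
RANKS = {
    "Root mean square error": [1, 2, 7, 4, 6],
    "Inter-percentile range": [3, 4, 5, 7, 8],
    "Kurtosis": [2, 3, 11, 9, 2],
    "Mean": [10, 10, 3, 2, 12],
    "Maximum-to-mean ratio": [7, 11, 12, 11, 7],
}


def aggregate_ranks(ranks):
    """Return (feature, average rank, rounded rank), most important feature first.

    Python's round() rounds halves to even; no .5 ties occur in these rows.
    """
    rows = [(name, mean(r), round(mean(r))) for name, r in ranks.items()]
    return sorted(rows, key=lambda row: row[1])   # stable sort keeps ties in input order


if __name__ == "__main__":
    for name, avg, rank in aggregate_ranks(RANKS):
        print(f"{name:24s} {avg:5.1f} {rank:3d}")
```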

(a)

| No. of Features | Metric | DT | RF | KNN | SVM | AdaBoost |
|---|---|---|---|---|---|---|
| K = 1 | Accuracy | 0.4500 | 0.2857 | 0.4750 | 0.4607 | 0.4223 |
| | Precision | 0.4524 | 0.1667 | 0.5125 | 0.3794 | 0.3901 |
| | Recall | 0.4500 | 0.2857 | 0.4750 | 0.4607 | 0.4356 |
| | F1-score | 0.4504 | 0.1837 | 0.4852 | 0.3719 | 0.3421 |
| K = 2 | Accuracy | 0.9750 | 0.7143 | 0.9786 | 0.8250 | 0.5682 |
| | Precision | 0.9763 | 0.5656 | 0.9798 | 0.8558 | 0.5321 |
| | Recall | 0.9750 | 0.7143 | 0.9786 | 0.8250 | 0.5789 |
| | F1-score | 0.9749 | 0.6172 | 0.9785 | 0.8158 | 0.5971 |
| K = 3 | Accuracy | 0.9867 | 0.8571 | 0.9788 | 0.9250 | 0.6210 |
| | Precision | 0.9244 | 0.7667 | 0.9425 | 0.9384 | 0.6535 |
| | Recall | 0.9462 | 0.8571 | 0.9532 | 0.9250 | 0.7152 |
| | F1-score | 0.9764 | 0.8023 | 0.9642 | 0.9234 | 0.7543 |
| K = 4 | Accuracy | 0.9899 | 0.9672 | 0.9635 | 0.9964 | 0.6458 |
| | Precision | 1 | 0.9989 | 0.9785 | 0.9965 | 0.7342 |
| | Recall | 1 | 1 | 0.9826 | 0.9964 | 0.6735 |
| | F1-score | 1 | 1 | 1 | 0.9964 | 0.7211 |
| K = 5 | Accuracy | 1 | 0.9929 | 1 | 0.9964 | 0.6712 |
| | Precision | 1 | 0.9932 | 1 | 0.9965 | 0.7443 |
| | Recall | 1 | 0.9929 | 1 | 0.9964 | 0.6990 |
| | F1-score | 1 | 0.9929 | 1 | 0.9964 | 0.7124 |
| K = 6 | Accuracy | 1 | 0.8571 | 1 | 0.9250 | 0.6876 |
| | Precision | 1 | 0.7667 | 1 | 0.9384 | 0.7342 |
| | Recall | 1 | 0.8571 | 1 | 0.9250 | 0.6735 |
| | F1-score | 1 | 0.8023 | 1 | 0.9234 | 0.7211 |

(b)

| No. of Features | Metric | DT | RF | KNN | SVM | AdaBoost |
|---|---|---|---|---|---|---|
| K = 1 | Accuracy | 0.6250 | 0.5714 | 0.7036 | 0.4286 | 0.3246 |
| | Precision | 0.6252 | 0.4048 | 0.7038 | 0.1917 | 0.0869 |
| | Recall | 0.6250 | 0.5714 | 0.7036 | 0.4286 | 0.3458 |
| | F1-score | 0.6243 | 0.4524 | 0.7026 | 0.2633 | 0.3675 |
| K = 2 | Accuracy | 0.4524 | 0.5714 | 0.7036 | 0.4571 | 0.4412 |
| | Precision | 0.6252 | 0.4048 | 0.7038 | 0.2489 | 0.2357 |
| | Recall | 0.6250 | 0.5714 | 0.7036 | 0.4571 | 0.4043 |
| | F1-score | 0.6243 | 0.4524 | 0.7026 | 0.3205 | 0.3215 |
| K = 3 | Accuracy | 0.7500 | 0.8536 | 0.7786 | 0.7786 | 0.6548 |
| | Precision | 0.7489 | 0.7822 | 0.7786 | 0.7313 | 0.5436 |
| | Recall | 0.7500 | 0.8536 | 0.7786 | 0.7786 | 0.4265 |
| | F1-score | 0.7494 | 0.8060 | 0.7785 | 0.7262 | 43,445 |
| K = 4 | Accuracy | 0.7480 | 0.8536 | 0.7786 | 0.8250 | 0.6550 |
| | Precision | 0.7421 | 0.7822 | 0.7782 | 0.7576 | 0.5436 |
| | Recall | 0.7464 | 0.8536 | 0.7786 | 0.8250 | 0.4336 |
| | F1-score | 0.7439 | 0.8060 | 0.7783 | 0.7772 | 0.4987 |
| K = 5 | Accuracy | 0.7480 | 0.8393 | 0.7786 | 0.7750 | 0.6548 |
| | Precision | 0.7394 | 0.7692 | 0.7588 | 0.7740 | 0.5436 |
| | Recall | 0.7464 | 0.8393 | 0.8286 | 0.7750 | 0.4265 |
| | F1-score | 0.7425 | 0.7916 | 0.7809 | 0.7741 | 43,445 |
| K = 6 | Accuracy | 0.7464 | 0.8429 | 0.7750 | 0.8286 | 0.6753 |
| | Precision | 0.7394 | 0.7727 | 0.7740 | 0.7588 | 0.5964 |
| | Recall | 0.7464 | 0.8429 | 0.7750 | 0.8286 | 0.4413 |
| | F1-score | 0.7425 | 0.7952 | 0.7741 | 0.7809 | 0.4842 |
| K = 7 | Accuracy | 0.7464 | 0.8429 | 0.7750 | 0.8286 | 0.6759 |
| | Precision | 0.7394 | 0.7727 | 0.7740 | 0.7588 | 0.61225 |
| | Recall | 0.7464 | 0.8429 | 0.7750 | 0.8286 | 0.6709 |
| | F1-score | 0.7425 | 0.7952 | 0.7741 | 0.7809 | 0.4007 |
| K = 8 | Accuracy | 0.7464 | 0.8429 | 0.7750 | 0.8286 | 0.6986 |
| | Precision | 0.7394 | 0.7727 | 0.7740 | 0.7588 | 0.6543 |
| | Recall | 0.7464 | 0.8429 | 0.7750 | 0.8286 | 0.6709 |
| | F1-score | 0.7425 | 0.7952 | 0.7741 | 0.7809 | 0.4346 |
| K = 9 | Accuracy | 0.7464 | 0.8429 | 0.7750 | 0.8286 | 0.6986 |
| | Precision | 0.7394 | 0.7727 | 0.7740 | 0.7588 | 0.6543 |
| | Recall | 0.7464 | 0.8429 | 0.7750 | 0.8286 | 0.6709 |
| | F1-score | 0.7425 | 0.7952 | 0.7741 | 0.7809 | 0.4346 |
| K = 10 | Accuracy | 0.7464 | 0.8429 | 0.7750 | 0.8286 | 0.6986 |
| | Precision | 0.7394 | 0.7727 | 0.7740 | 0.7588 | 0.6543 |
| | Recall | 0.7464 | 0.8429 | 0.7750 | 0.8286 | 0.6709 |
| | F1-score | 0.7425 | 0.7952 | 0.7741 | 0.7809 | 0.4346 |
| K = 11 | Accuracy | 0.7464 | 0.8429 | 0.7750 | 0.8286 | 0.6986 |
| | Precision | 0.7394 | 0.7727 | 0.7740 | 0.7588 | 0.6543 |
| | Recall | 0.7464 | 0.8429 | 0.7750 | 0.8286 | 0.6709 |
| | F1-score | 0.7425 | 0.7952 | 0.7741 | 0.7809 | 0.4346 |
| K = 12 | Accuracy | 0.7464 | 0.8429 | 0.7750 | 0.8286 | 0.6986 |
| | Precision | 0.7394 | 0.7727 | 0.7740 | 0.7588 | 0.6543 |
| | Recall | 0.7464 | 0.8429 | 0.7750 | 0.8286 | 0.6709 |
| | F1-score | 0.7425 | 0.7741 | 0.7809 | 0.7862 | 0.4346 |
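Panels (a) and (b) report how the metrics change when only the K highest-ranked features are retained. A minimal sketch of such a sweep, assuming a feature ranking is already available (for example from the Rank column of the feature-ranking table); to stay dependency-light it uses a hand-written k-nearest-neighbour classifier, one of the five model families compared, on synthetic data. The data, the function names, and the choice of three neighbours are hypothetical and do not reproduce the study's training setup.

```python
import numpy as np


def knn_predict(X_train, y_train, X_test, k=3):
    """Plain k-nearest-neighbour classifier: Euclidean distance, majority vote."""
    d = np.linalg.norm(X_test[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]      # indices of the k closest training rows
    votes = y_train[nearest]
    return np.array([np.bincount(v).argmax() for v in votes])  # ties go to the lowest label


def top_k_sweep(X_train, y_train, X_test, y_test, ranking, k_neighbors=3):
    """Accuracy when keeping only the first K ranked columns, for K = 1..len(ranking)."""
    scores = {}
    for K in range(1, len(ranking) + 1):
        cols = list(ranking[:K])
        pred = knn_predict(X_train[:, cols], y_train, X_test[:, cols], k_neighbors)
        scores[K] = float(np.mean(pred == y_test))
    return scores


if __name__ == "__main__":
    rng = np.random.default_rng(4)
    y = rng.integers(0, 3, size=300)
    informative = y[:, None] * 3.0 + rng.normal(size=(300, 2))  # class-dependent columns
    noise = rng.normal(size=(300, 4))                            # uninformative columns
    X = np.hstack([informative, noise])
    ranking = [0, 1, 2, 3, 4, 5]                                 # hypothetical ranking
    for K, acc in top_k_sweep(X[:200], y[:200], X[200:], y[200:], ranking).items():
        print(f"K = {K}: accuracy = {acc:.3f}")
```

In this toy setup, appending the uninformative columns tends to dilute the distance metric, which is one plausible reason why adding lower-ranked features need not improve, and can degrade, the metrics.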

| Model | Class | SHAP Acc | SHAP Prec | SHAP Rec | SHAP F1 | PCA Acc | PCA Prec | PCA Rec | PCA F1 | Backward Elimination Acc | Backward Elimination Prec | Backward Elimination Rec | Backward Elimination F1 | Forward Selection Acc | Forward Selection Prec | Forward Selection Rec | Forward Selection F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DT | MCWI | 100 | 100 | 100 | 100 | 97 | 95 | 97 | 92 | 97 | 95 | 97 | 92 | 97 | 95 | 97 | 92 |
| | MP | 95 | 100 | 97 | 99 | 98 | 89 | 91 | 90 | 98 | 89 | 91 | 90 | 98 | 89 | 91 | 90 |
| | Chirp | 24 | 19 | 23 | 20 | 21 | 19 | 21 | 20 | 23 | 17 | 32 | 23 | 25 | 22 | 26 | 23 |
| | Clean | 72 | 76 | 78 | 83 | 82 | 18 | 12 | 12 | 12 | 18 | 15 | 12 | 16 | 22 | 23 | 9 |
| | CWI | 100 | 100 | 100 | 100 | 98 | 88 | 90 | 93 | 98 | 88 | 90 | 93 | 98 | 88 | 90 | 83 |
| | Pulse | 100 | 100 | 100 | 100 | 97 | 76 | 81 | 90 | 97 | 76 | 81 | 90 | 97 | 72 | 80 | 79 |
| | Spoofing | 98 | 98 | 100 | 99 | 97 | 95 | 97 | 92 | 97 | 95 | 97 | 92 | 97 | 95 | 97 | 92 |
| RF | MCWI | 94 | 97 | 93 | 97 | 98 | 89 | 91 | 90 | 98 | 89 | 91 | 90 | 98 | 89 | 91 | 90 |
| | MP | 98 | 100 | 97 | 99 | 95 | 94 | 92 | 91 | 88 | 93 | 92 | 89 | 90 | 91 | 88 | 86 |
| | Chirp | 98 | 80 | 70 | 80 | 72 | 76 | 71 | 76 | 67 | 66 | 63 | 61 | 60 | 65 | 66 | 67 |
| | Clean | 19 | 16 | 17 | 17 | 18 | 13 | 17 | 15 | 12 | 13 | 15 | 12 | 12 | 13 | 13 | 11 |
| | CWI | 100 | 100 | 100 | 100 | 97 | 76 | 81 | 90 | 97 | 76 | 81 | 90 | 97 | 76 | 81 | 90 |
| | Pulse | 100 | 100 | 100 | 100 | 97 | 95 | 97 | 92 | 97 | 95 | 97 | 92 | 97 | 95 | 97 | 92 |
| | Spoofing | 98 | 98 | 100 | 99 | 98 | 89 | 91 | 90 | 98 | 89 | 91 | 90 | 98 | 89 | 91 | 90 |
| KNN | MCWI | 100 | 100 | 100 | 100 | 93 | 85 | 87 | 80 | 81 | 64 | 72 | 72 | 77 | 75 | 75 | 72 |
| | MP | 99 | 100 | 97 | 99 | 95 | 88 | 87 | 80 | 75 | 65 | 64 | 60 | 61 | 64 | 66 | 63 |
| | Chirp | 37 | 31 | 35 | 33 | 34 | 32 | 24 | 24 | 29 | 31 | 33 | 29 | 30 | 28 | 29 | 33 |
| | Clean | 27 | 26 | 23 | 24 | 32 | 37 | 31 | 24 | 19 | 19 | 24 | 21 | 20 | 27 | 32 | 35 |
| | CWI | 100 | 100 | 100 | 100 | 95 | 94 | 93 | 90 | 94 | 78 | 73 | 71 | 68 | 72 | 73 | 78 |
| | Pulse | 100 | 100 | 100 | 100 | 97 | 94 | 93 | 90 | 89 | 91 | 89 | 88 | 79 | 81 | 80 | 81 |
| | Spoofing | 100 | 98 | 100 | 99 | 87 | 97 | 95 | 93 | 71 | 75 | 73 | 74 | 70 | 71 | 72 | 71 |
| SVM | MCWI | 95 | 91 | 100 | 95 | 75 | 65 | 75 | 79 | 70 | 68 | 69 | 78 | 64 | 61 | 56 | 64 |
| | MP | 96 | 100 | 97 | 99 | 98 | 88 | 90 | 82 | 80 | 88 | 81 | 79 | 79 | 88 | 79 | 79 |
| | Chirp | 65 | 50 | 68 | 57 | 97 | 76 | 81 | 90 | 97 | 76 | 81 | 90 | 97 | 76 | 81 | 90 |
| | Clean | 34 | 38 | 33 | 35 | 97 | 95 | 97 | 92 | 97 | 95 | 97 | 92 | 97 | 95 | 97 | 92 |
| | CWI | 87 | 100 | 72 | 84 | 98 | 89 | 91 | 90 | 98 | 89 | 91 | 90 | 98 | 89 | 91 | 90 |
| | Pulse | 98 | 100 | 97 | 99 | 78 | 76 | 72 | 70 | 67 | 69 | 73 | 72 | 77 | 74 | 77 | 71 |
| | Spoofing | 97 | 98 | 100 | 99 | 84 | 74 | 75 | 77 | 86 | 81 | 71 | 69 | 73 | 76 | 77 | 72 |
| AdaBoost | MCWI | 25 | 23 | 26 | 23 | 24 | 25 | 24 | 19 | 22 | 21 | 24 | 23 | 27 | 22 | 24 | 26 |
| | MP | 45 | 46 | 45 | 15 | 44 | 32 | 37 | 39 | 42 | 41 | 32 | 33 | 39 | 41 | 40 | 34 |
| | Chirp | 53 | 50 | 100 | 67 | 53 | 54 | 51 | 43 | 44 | 35 | 45 | 32 | 48 | 42 | 43 | 35 |
| | Clean | 54 | 56 | 45 | 55 | 54 | 52 | 41 | 44 | 50 | 43 | 47 | 42 | 43 | 41 | 39 | 38 |
| | CWI | 56 | 54 | 56 | 45 | 45 | 51 | 42 | 41 | 44 | 48 | 50 | 46 | 52 | 43 | 39 | 44 |
| | Pulse | 49 | 50 | 65 | 67 | 53 | 54 | 56 | 47 | 45 | 56 | 54 | 50 | 54 | 52 | 46 | 46 |
| | Spoofing | 51 | 56 | 54 | 45 | 59 | 46 | 46 | 43 | 34 | 43 | 34 | 44 | 41 | 43 | 42 | 39 |
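PCA is one of the feature-reduction baselines compared against SHAP-based selection above. Unlike SHAP ranking, which keeps a subset of the original features, PCA builds new features as linear combinations of all of them. A minimal NumPy sketch via the singular value decomposition, assuming z-scored inputs and a caller-chosen number of components; the function name and preprocessing are assumptions, not the study's exact pipeline.

```python
import numpy as np


def pca_transform(X, n_components):
    """Project z-scored X onto its leading principal components.

    Returns the projected data and the variance fraction explained by each kept component.
    """
    X = np.asarray(X, dtype=float)
    Z = (X - X.mean(axis=0)) / X.std(axis=0)        # z-score each feature (no constant columns)
    _, S, Vt = np.linalg.svd(Z, full_matrices=False)
    explained = S ** 2 / np.sum(S ** 2)              # variance ratio per component, descending
    scores = Z @ Vt[:n_components].T                 # coordinates in the reduced space
    return scores, explained[:n_components]


if __name__ == "__main__":
    rng = np.random.default_rng(5)
    a = rng.normal(size=500)
    X = np.column_stack([a, a + 0.01 * rng.normal(size=500), rng.normal(size=500)])
    scores, ratio = pca_transform(X, 2)
    print(scores.shape, np.round(ratio, 3))
```

Because each principal component mixes every input feature, a PCA-reduced model is harder to explain per feature than one trained on a SHAP-selected subset.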

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Elango, A.; Landry, R.J. XAI GNSS—A Comprehensive Study on Signal Quality Assessment of GNSS Disruptions Using Explainable AI Technique. Sensors 2024, 24, 8039. https://doi.org/10.3390/s24248039
