Abstract

Humanoid robots are typically designed for static environments, but real-world applications demand robust performance under dynamic, uncertain conditions. This paper introduces a perceptive motion planning and control algorithm that enables humanoid robots to navigate and operate effectively in environments with unpredictable kinematic and dynamic disturbances. The proposed algorithm ensures synchronized multi-limb motion while maintaining dynamic balance, utilizing real-time feedback from force, torque, and inertia sensors. Experimental results demonstrate the algorithm’s adaptability and robustness in handling complex tasks, including walking on uneven terrain and responding to external disturbances. These findings highlight the potential of perceptive motion planning in enhancing the versatility and resilience of humanoid robots in uncertain environments. The results have potential applications in search-and-rescue missions, healthcare robotics, and industrial automation, where robots operate in unpredictable or dynamic conditions.

1. Introduction

Humanoid robots are typically designed and work for static environments, but real-world applications demand robust performance under dynamic, uncertain conditions. Many significant studies and achievements have been made in recent years based on some well-known hardware platforms, such as HRP series humanoid robots [1,2,3], Atlas [4], Cassie [5], and Digit [6]. HRP-2 humanoid robot which has 1540 mm height, 58 kg weight and 30 degrees of the freedom, is one of the earliest series of platforms for researchers to implement their algorithms. Atlas is a highly advanced humanoid robot developed by Boston Dynamics, known for its impressive agility, balance, and ability to perform complex movements such as running, jumping, and navigating challenging environments. Cassis and Digit are humanoid robots designed for advanced mobility and task execution, with Cassis specializing in human-robot interaction and Digit focusing on delivery and logistics tasks with exceptional agility and dexterity. Many advanced control algorithms have been applied in these excellent platforms to draw maximum performance. These algorithms aim to achieve a more responsive and agile motion by improving the dynamic balance of the robot or manipulated objects.

Path planning, a critical aspect of humanoid robot control, has seen the integration of various intelligent techniques. For instance, neural network-based approaches have shown promise in optimizing robotic paths. Cellular Neural Networks (CNNs) have been utilized for real-time, collision-free path generation, leveraging analogies with harmonic functions to ensure smooth and dynamic trajectories in complex environments [7,8]. Other works, such as crossover recombination-based optimization algorithms, effectively address multi-constraint optimization problems like UAV path planning by enhancing path smoothness and adaptability in three-dimensional spaces [9]. Additionally, methods tailored for specific contexts, such as path planning for needle insertion in deformable soft tissues, illustrate the broader applicability of path planning techniques across diverse domains [10,11].

Static balance is easier and safer, as the system remains stable even if all joints freeze. Kajita [12] introduced a seminal method for achieving stable walking in humanoid robots using Zero Moment Point (ZMP) control combined with preview control. This approach leverages the preview gain, which is determined by solving the Riccati equation [13]. Achieving dynamic balance with uncertainties in robots presents two main challenges. First, humanoid robots or manipulated objects may be underactuated, meaning not all poses, velocities, or accelerations are feasible due to limited contact points and friction constraints [14]. Second, the system must adapt to dynamic uncertainties from both internal factors, like model errors and communication delays, and external factors, such as slippery surfaces or unexpected forces [15]. The integration of advanced filters, such as the Model Predictive-Based Unscented Kalman Filter (MP-UKF), has demonstrated improved adaptiveness in nonlinear and high-dynamic systems. This approach compensates for model errors in real time, enhancing the performance of navigation systems in environments with high maneuverability demands [16].

Traditional methods are generally relying on offline motion planning and linear control, which is inadequate for fully exploiting hardware capabilities and responding effectively to dynamically changing environments. In contrast, Model Predictive Control (MPC) offers trajectory generation in the nonlinear domain, enabling the optimal use of the robot’s kinematic range and torque limits [17]. Furthermore, frequent motion replanning based on the robot’s current state enhances robustness against disturbances. As a result, the application of MPC in legged robotic systems has been a growing area of research over the past decade. In ICRA 2022, there are 19 out of 28 workshop papers related to MPC [17]. In the early stages of legged robotics research, MPC was introduced for the online generation of walking patterns in bipedal and humanoid robots, aiming to enhance responsiveness to disturbances and user inputs. Here, the term online generation means that the trajectory keeps updating while the robot is moving.

There are many different types of MPC algorithms. In general, MPC uses the model of the system under control to predict its behavior in a defined prediction horizon [18]. Following that, the objectives of the system are defined as a cost function and the control problem becomes minimizing the errors between the model predicted values and reference values. In addition to dynamic modeling-based control approaches, reinforcement learning has emerged as a compelling alternative for controlling humanoid robots. Leveraging tools like Isaac Sim and Gym provided by NVIDIA, researchers from ETH Zurich and NVIDIA have demonstrated a novel and promising method for controlling various types of legged robots [19]. By training numerous robots in parallel within a high-fidelity physics simulation environment, these systems achieve real-world locomotion after addressing key sim-to-real challenges.

In addition, there are always unpredictable factors in a humanoid robot’s operating environment, which we can refer to as environment uncertainties. These uncertainties include variations in friction, changes in terrain slope, and unpredictability in object poses or external disturbances. Significant research efforts have been devoted to addressing uncertainties in control systems, including approaches such as uncertain iterative optimal attitude control [20] and uncertain optimal design strategies [21]. However, these uncertainties remain a substantial challenge, especially for robots performing tasks such as manipulation and locomotion. Such tasks require adaptive strategies to effectively respond to dynamic changes in the environment. While traditional Model Predictive Control (MPC) can handle certain types of environmental uncertainties to ensure stable walking, its capabilities are limited when faced with more complex or unpredictable scenarios.

MPC in humanoid robots, while effective for planning and optimization, has several drawbacks. First, MPC is computationally intensive due to its reliance on solving optimization problems at every time step, which can lead to latency and limit real-time performance, especially in highly dynamic environments [22]. This computational burden increases with the complexity of the robot’s model and the number of constraints considered. Additionally, MPC requires accurate models of the robot’s dynamics [17], which can be challenging to obtain due to uncertainties or approximations in the robot’s mechanical structure and external forces like friction or disturbances. Another issue is that MPC often struggles with nonlinearity in humanoid movements [23], and achieving fast responses or agile behaviors may require more specialized or approximated control strategies. Lastly, tuning MPC parameters can be difficult, requiring extensive trial and error to balance control performance and computational efficiency [24].

In this paper, a perceptive motion reference system for humanoid robots operating in dynamic and uncertain environments is developed. It is designed to improve dynamic balancing and motion synchronization. Instead of relying on traditional time-based reference tracking, this method shifts to a path-following approach guided by real-time sensory information, especially force, torque, and inertia data. The perceptive reference is derived from dynamic balance criteria such as the Zero Moment Point (ZMP). Compared to traditional MPC algorithms, the key difference lies in its adaptability: when disturbances occur, the system temporarily pauses the motion and re-synchronizes the movements without requiring complete trajectory re-planning, providing robustness and adaptability. This method is particularly suited to underactuated systems where perfect control is not feasible, and external disturbances (like soft ground or object blocking) are common. The rest of this paper is organized as follows: Section 2 details the perceptive motion reference framework, focusing on how it addresses dynamic balance and environmental uncertainties in multi-limb robotic systems. Section 3 presents the trajectory planning methodology, illustrating the synchronization of legs and the Center of Gravity (CoG) using the proposed perceptive approach. Section 4 provides simulation and experimental results, demonstrating the framework’s robustness in handling disturbances and unexpected conditions, and finally, Section 5 concludes the paper with a summary.

2. Perceptive Motion Reference Framework

2.1. System Overview

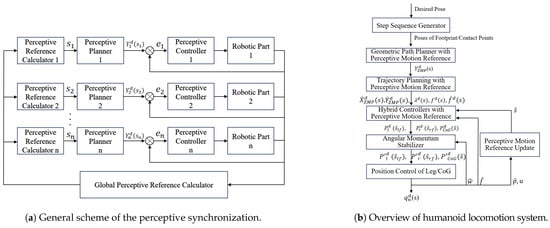

Figure 1a illustrates the overall perceptive planning and control framework. The central concept is the use of a perceptive motion reference, denoted as a scalar s representing the system’s state derived from sensory data. This reference is used to plan the desired motion trajectory of subsystems, such as limbs based on the value of s. The system operates in a closed loop by continuously computing s in real time and determining the required motion, such as the desired pose of the i-th limb, represented as , according to the perceptive plan. The control error, like the error for the i-th limb, , is also calculated based on this reference. The direct relationship between the perceptive reference and the system state aids in achieving coordinated synchronization. This integration means that planning is now part of the real-time control loop.

Figure 1.

Locomotion system structure.

In humanoid locomotion, this system enables the robot to intuitively swing its leg when the Zero Moment Point (ZMP) shifts to specific areas, ensuring dynamic balance, while also minimizing the L2-norm error of the ZMP. Figure 1b shows the overall locomotion system and intermediate parameters, where all the desired values, including force, orientation, and speed, are functions of the perceptive motion reference s. This flexibility helps set more reasonable desired values when the assumptions made during planning, such as timing or spatial constraints, are not met. For instance, trajectory generation algorithms [25] may assume an instant transition between the single support phase (SSP) and double support phase (DSP), but foot landing can be affected by various unpredictable factors like contact point irregularities, causing deviations in planned trajectories. Our controllers adjust the desired values according to the current perceptive motion reference, which account for the impact of any invalid planning assumptions.

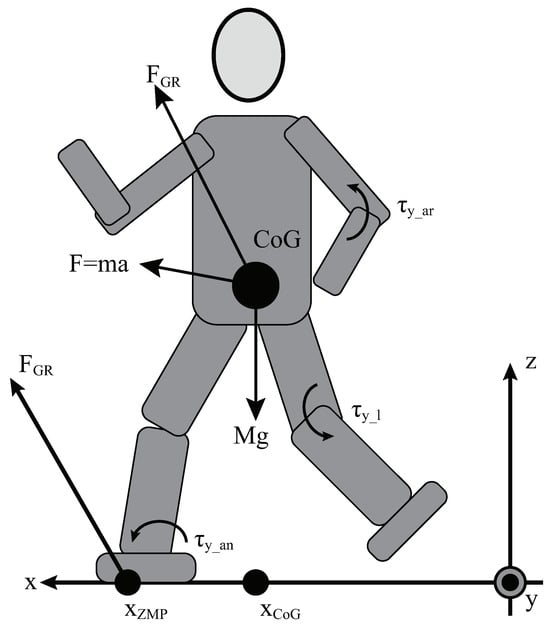

Figure 2 is a 3D linear inverted pendulum model of a humanoid robot. To maintain a consistently low spin angular momentum, similar to human walking on flat ground, a whole-body angular stabilizer uses torque from arm swings and ankle rotations (denoted as and which are illustrated in Figure 2) to counterbalance the additional angular momentum [26] caused by leg swings (as in Figure 2). This stabilization minimizes the deviation of the Center of Gravity (CoG). The process introduces the centroidal moment pivot (CMP), a key ground reference through which the shifted ground reaction force (FGR) can pass through the CoG. The CMP is controlled to align with the ZMP when the horizontal component of angular momentum around the CoG is neutralized. For the sake of brevity, the specific details of this control are not elaborated in the paper. However, this approach allows us to assume that the net moment around the CoG is zero. In flat ground walking tasks, the desired angular position is set to a constant value of zero.

Figure 2.

A simple illustration of a dynamic model for a humanoid robot.

2.2. Design of the Perceptive Motion Reference

As discussed earlier, the perceptive reference s replaces time as the motion reference for planning and synchronizing the movements of both the legs and the Center of Gravity (CoG). Unlike traditional path-following methods, various studies [27,28] suggest that this non-time-based motion reference should incorporate the following: First, the system’s inherent characteristics, including its kinematics, dynamics, and transfer functions, which are closely linked to the system states that are critical for the task; second, the key objective or essential criterion of the task, such as controlled values like distance traveled, tangential speed, etc.

In our locomotion framework, we adopt the Zero Moment Point (ZMP) balance criterion [25], where the ZMP must remain within the center of the support polygon at all times during walking. The ZMP’s position is connected to the CoG through a linear inverted pendulum dynamic model and is constrained by the variable support polygon created by the feet. Therefore, the distance traveled by the ZMP along the planned reference path is chosen as the perceptive motion reference s. For a given geometric reference path of the ZMP on a 2D xy-plane, it can be expressed as piecewise functions , where and (m being the number of ZMP path segments), and . The scalar parameter s is calculated based on the real-time projected point of the current ZMP position onto the specified ZMP geometric reference path. The ZMP’s traveled distance along the reference path, as calculated in Equation (1), represents the support point of the 3D inverted pendulum system. This distance contains vital dynamic information that can continuously guide the movement of other body parts. Similarly to time, the ZMP traveled distance is a one-dimensional parameter, which makes it convenient to use as a motion reference for parameterizing the body’s trajectories.

Accordingly, the perceptive motion reference for a foot (denoted by for the left foot or for the right foot) is defined by the distance traveled along its reference path, starting from the initial swing point to the current projected position of the left or right foot on that path. These reference values are computed based on the current value of s to ensure balance, as will be explained in the following section.

2.3. Perceptive Motion Spatial Planning

The 3D LIP model in Figure 2 can describe the system dynamics and relationship between the CoG and ZMP as the following equation (, and are the three direction components of ground reaction force F):

Equation (2) also encapsulates the dynamics of scenarios involving manipulated objects with a significant volume, where a single contact point exists with a robotic gripper or finger on the object’s surface. Similarly, it applies to floating space manipulators interacting with objects of a comparatively larger mass. Consequently, the subsequent analysis can be generalized to encompass specific cases of dexterous manipulation and space-based robotic systems.

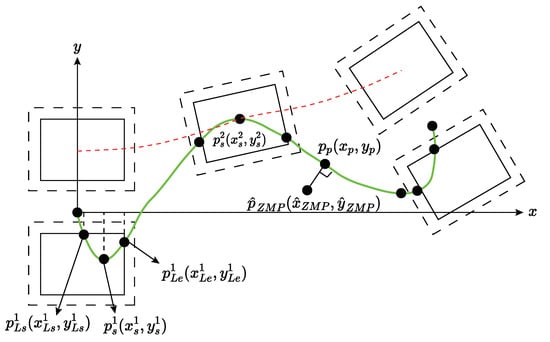

In humanoid locomotion, Figure 3 shows the ZMP reference path (green curves) and the left foot’s path (red-dashed line) on the x–y plane. Dotted squares represent actual footprints, and solid squares mark valid support polygons to prevent ZMP from reaching the footplate edges and tilting the robot. The origin is set between the feet at the task’s start. For each leg swing, the ZMP path must stay within the support leg’s valid polygon to maintain balance, linking leg motion references (, ) to the main reference s as described in Equation (3), where i is the step number. and correspond to the main reference s end and start values for the i-th left foot swing, while and indicate the start and end values of the left foot’s motion reference for that step.

Figure 3.

A geometric illustration of reference path planning.

2.4. Perceptive Motion Temporal Planning

Our primary consideration is dynamic balance, which requires the ZMP controlled by CoG motion to be located in the support polygon formed by one’s foot. This requires us to develop a foot trajectory generator that initiates the swing when the LIP and walking direction align, and design a ZMP tracking system to ensure the CoG trajectory keeps the ZMP on its reference path. According to the perceptive reference, a third-order polynomial trajectory of the ZMP and feet is planned for the smoothness of the trajectory. The boundary conditions are set based on the desired forward walking speed , determined by either user input or the perception module. Using these conditions, two segments of the ZMP trajectories can be derived as Equation (4).

Based on this condition, three pieces of the right foot trajectory in 3D can be obtained with the boundary conditions. It is quite similar for other steps. Generally, as mentioned in [7], the ZMP preview controller performs much better than a linear-quadratic optimal controller if it considers the future reference position and minimizes ZMP errors accordingly. We define the LIP input variable u as the temporal second derivative of the acceleration of the CoG:

The ZMP equation can be translated into a discretized dynamical system [10] with the control cycle duration T. And, the mentioned ZMP future reference position can be obtained as:

Equation (6) is derived from a discretized dynamical system [30] with the control cycle duration T, and k is the step. Based on Equation (6), we can have the optimal input of the minimized performance index in a sized preview window, and it can be described with:

To optimize, we can use an online solver. The optimal controller that minimizes J is:

The gain values , and can be derived from [30].

3. Perceptive Motion Control

3.1. Dynamics Model

The full dynamics model of the humanoid robot is established in [31]:

where is the system inertia matrix, represents the gravity and Coriolis forces, is the control input map, and is a transformation matrix, converting external forces F into the corresponding generalized forces acting on the system. Here, we defined the point on the external surface where a shifted ground reaction force vector F would pass through and go across the CoG as Centroid Moment Pivot (CMP). The constraint equation can be described as:

Hence, we can have a conversion from ZMP to CMP, and it can be described as:

3.2. Translational Dynamics Equilibrium Perceptive Model

In the single-support phase, where the only external force is the ground reaction force F on the stance foot, the CoG position can be described using forward kinematics as and in a local coordinate system at the ankle. The translation equilibrium is then expressed as:

In the single-foot stance phase, CoG has accordingly in joint space based on inverse kinematics. Hence, Equation (12) can be expressed as:

There should be a common constraint for each foot’s friction , , , and . The friction constraint directly influences whether the desired CoG state is valid and achievable based on the potential ground reaction forces on the feet. And the acceleration relationship can be expressed as and .

3.3. Rotational Dynamics Equilibrium Perceptive Model

With the actuated ankle joint, which connects the foot to the rest of the body, remaining stationary, the torque equilibrium around the joint is maintained as:

where is the overall torque around the ankle joint and it equals the angular momentum of the robot around the ankle joint . According to Equations (13) and (14), the dynamics model in terms of the angular momentum would be expressed as:

where:

By shifting the rotation axis from the ankle to the ZMP in the sagittal and frontal planes, the angular momentum can be expressed as . Taking the derivative of both sides, the torque around the ZMP, can be represented as:

3.4. Impact Dynamics Equilibrium Perceptive Model

An impact model is required to link the analysis across different single-leg support phases. For instance, when the left leg, acting as the swing leg, lands at time and becomes the support leg, there is a brief double-leg support phase where the robot’s configuration q remains unchanged. The right leg then lifts off and becomes the swing leg. The hybrid controller ensures a smooth, non-bouncing landing, with the vertical velocity of the landing foot set to zero post-impact. However, the horizontal velocity changes, and this is linearly represented by a 6 × 6 matrix . Additionally, the roles of the left and right feet are swapped using a permutation matrix , allowing the single-leg support analysis to be reused. The impact effect in joint space is detailed in [32] as:

Here, and represent the velocity vectors just after and just before the impact. To ensure the desired CoG reference path aligns with the impact dynamics described in Equation (17), the final velocity of the i-th step just before impact must match the initial velocity of the -th step as shown below:

Assuming , where is a scalar, and with the foot planner determining and , the equation can be reformulated as a condition for as:

Hence, a feasible series of solution is provided.

3.5. Perceptive Control Law Formulation

The “hybrid” leg controller operates as a velocity closed-loop during the swing phase and a dynamic model-based closed-loop when the leg is in support. A landing event-triggered switch is used to transition between these two control modes.

For the velocity controller, the reference velocity vector for the left and right foot is provided as or , depending on the global perceptive reference . The foot speed error is calculated as , where is the transformation matrix from world coordinates to the robot’s local frame at the pelvis. The velocity controller is then designed accordingly as:

where, represents the Jacobian matrix of the swing leg, and , are the parameters for the PI controller. The desired joint velocity is then forwarded to the lower-level joint velocity controllers for execution. To minimize unwanted bouncing and oscillations, the transition from the velocity controller (V) for the swing foot to the force controller (F) for the stance foot is based on a force-related condition. These relationship can be represented with equations:

where represents the expected contact force on a single foot during the double support phase, while is the ground reaction force in the z axis, as measured by the 6-axis force–torque sensors on the ankles (providing outputs for forces , , and torques , , ). The switch to the force controller (F) occurs at when . Once the switch is made, the stance leg controller, designed with feedforward and feedback mechanisms, ensures the CoG reference path and the ZMP position are achieved. The control inputs are joint torques (for ) and the reference velocity , where , making increase over time. The resulting control error is then computed with:

The controller would firstly be designed as:

Let the angular momentum dynamics mentioned in Equation (15) be with , then we can have:

And with Equation (9), we can know as:

Finally, the designed controller with and can ensure the dynamical balance of the humanoid robot system, and can be represented as:

4. Simulation and Experiment

4.1. Hardware Platform

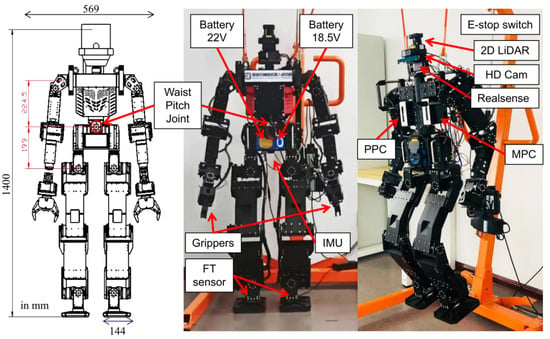

Our humanoid robot which is shown in Figure 4, developed at the Shenzhen Academy of Robotics (SZAR), weighs 44 kg and features two 6-DOF legs, 6-DOF arms, a 2-DOF head, and a 2-DOF waist. It uses 29 ROBOTIS brushless motors powered by a 22 V battery, with integrated sensors for precise control. Two six-axis force/torque sensors at the ankles and an IMU at the body’s center provide data for ZMP and dynamic state estimation. The robot’s hardware is similar to the THORMANG3 model. The system is powered by two onboard Intel® NUC computers (i5, 8 GB RAM, 128 GB SSD). One handles motion control at 100 Hz (MPC), while the other handles perception tasks (PPC) with SLAM, using LiDAR and cameras. Both wirelessly connect to an external operation PC for user interface and monitoring, all running Ubuntu 18.04 with ROS Melodic.

Figure 4.

The full-size humanoid robot hardware. (Left): design paper with size; (Middle): real robot with shell; (Right): real robot without shell.

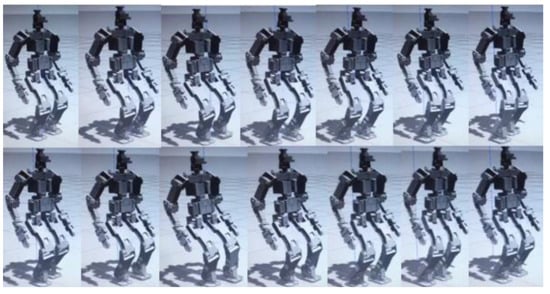

4.2. Simulation of Biped Robot Locomotion Under Disturbances

To initially validate the proposed method under ideal conditions, a dynamic simulation was conducted in Gazebo, free from disturbances and sensor noise. The target locomotion parameters were set to 0.1 m step length, a cycle time of 1.0 s, and four forward steps, as depicted in Figure 5. In the absence of external disturbances, the perceptive framework successfully generated a smooth Zero Moment Point (ZMP) trajectory, along with corresponding foot placement and center of gravity (CoG) paths, as illustrated in Figure 5.

Figure 5.

Simulation test a (0.1 m, 1.0 s, 4 steps forward) without external disturbance, with the simplified dynamic model and IMU, F/T sensor noise.

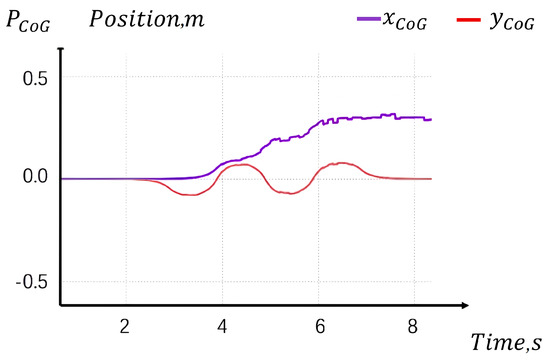

As shown in Figure 6, the overall Center of Mass (CoM) tracking performance closely matches that of the time-based framework. The locomotion process was completed within the expected duration, approximately 4 s for simulation (a).

Figure 6.

Simulation test result of a: The reference CoG position without any disturbance.

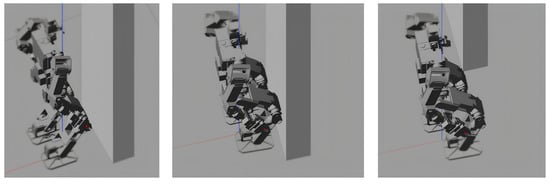

Subsequently, an unexpected obstacle was introduced, temporarily blocking the robot’s right foot (depicted within the red rectangle in Figure 7). Specifically, a static, wall-like obstacle impeded the right foot for 0.2 s. This can be interpreted as an external force being applied in the opposite direction along the x-axis for 0.2 s.

Figure 7.

Simulation test b (0.1 m, 1.0 s, 4 steps forward) with additional external disturbance.

In Figure 5, obtained from simulation (a), the robot demonstrates a distinct yet stable gait over different time intervals (please refer to the attached video for further explanation). This variation is reflected in the time-dependent displacement , influenced by uncertainties in the Linear Inverted Pendulum (LIP) model, control errors, and disturbances originating from both the ground and the robot itself.

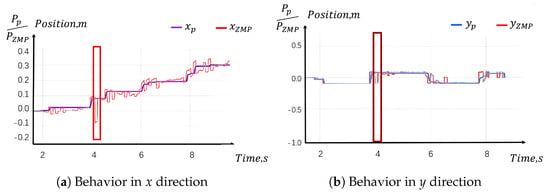

In simulation test (b), a temporary blocking force is applied to the right knee while the robot is in the leg-swinging phase. As illustrated in Figure 8, this external force significantly impacts the actual Zero Moment Point (ZMP) values along both the x and y axes, deviating from the desired path. However, the perceptive planner is capable of pausing temporarily, as it maintains its reference value at the moment the force is applied. Consequently, the desired right foot pose is also paused, along with the center of gravity (CoG) and the left foot. The additional time created by this pause is effectively used by the ZMP ankle and pelvis controller to correct the ZMP deviations. As a result, the remainder of the planned gait is executed successfully without the need for re-planning, as shown in Figure 8. This demonstrates a key feature of the system, where the reference “waits” until the control error is minimized.

Figure 8.

Simulation test b result: The ZMP and projected ZMP with an unexpected disturbance.

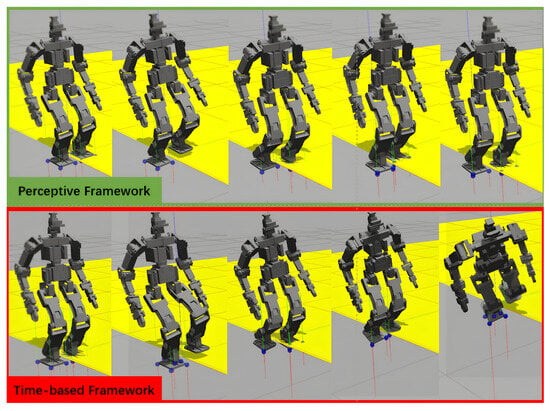

To clearly demonstrate the robustness and advantages of the proposed method, we evaluate its performance on soft or elastic surfaces, such as desert terrain or artificial elastic materials. These surfaces require significantly greater deformation or depth along the z axis to generate the desired ground reaction force. However, traditional robot perception algorithms struggle to detect such soft or elastic surfaces in advance, as they appear similar to normal ground in both 2D and 3D before contact is made. To create a more challenging scenario, we modified half of the ground’s elastic properties in the Gazebo simulation (with a stiffness parameter of kp = 500, resembling the elasticity of an inflated yoga ball), as illustrated in yellow in Figure 9. The gray areas in Figure 9 represent hard, rigid surfaces, comparable to standard concrete (with a stiffness parameter of kp = 500,000).

Figure 9.

Simulation test c (0.1 m, 1.0 s, 3 steps forward) with the hard ground in gray and the soft and elastic ground in yellow as an unexpected disturbance.

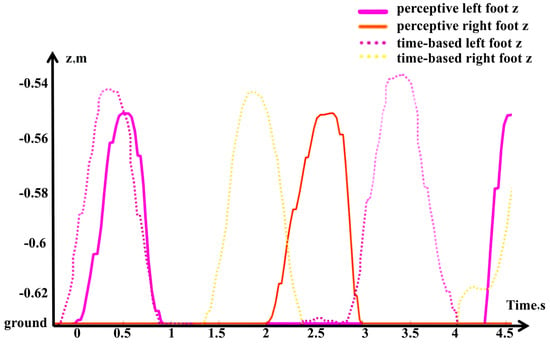

In the force/position hybrid control of the left leg, the position control error increases unexpectedly in order to generate the desired ground reaction force, due to the unanticipated softness of the ground. With the original z axis velocity, the left foot requires more time to step deeper compared to the right foot, which is on the rigid gray surface. As shown in the lower row of snapshots in Figure 9, the time-based control triggered the second step according to the global clock, but prematurely. This behavior is confirmed in Figure 10, where the first right foot swing occurs approximately 0.5 s later, and the second left foot swing about 1.5 s later in the perceptive framework compared to the time-based approach. In contrast, the perceptive framework demonstrates adaptability and robustness in this test, successfully completing the locomotion task.

Figure 10.

Simulation test c result: Walking on soft/elastic ground (yellow) simulation with feet z axis position comparison between perceptive framework and time-based framework.

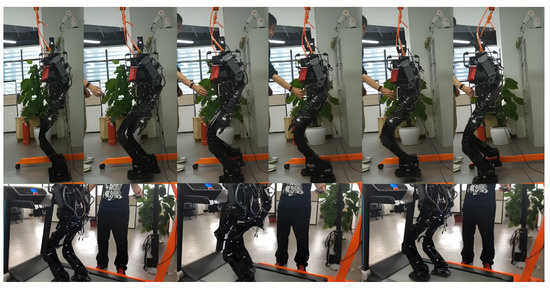

4.3. Experimental Test of Biped Robot Under Unexpected Disturbances

To evaluate the method under more realistic conditions, locomotion experiments were performed using a full-size humanoid robot on a standard treadmill, as shown in Figure 11. After several trials, the proposed method enabled the robot to achieve fast forward walking at a linear speed of up to 0.42 m/s while maintaining dynamic balance, further validating the method’s robustness.

Figure 11.

Locomotion with external disturbance and max-speed experiments on real full-size humanoid.

5. Discussion

A significant challenge in comparing humanoid robot control algorithms lies in the lack of standardized evaluation frameworks. Unlike fields such as computer vision, humanoid robot control is highly hardware-dependent, with algorithms often tailored to specific proprietary platforms, making cross-platform comparisons impractical. Furthermore, the implementation of these algorithms is complex, requiring months of expertise and calibration to reproduce results on different systems. While metrics like ZMP trajectory tracking exist for stable walking, universally applicable benchmarks for dynamic and complex behaviors remain elusive, underscoring the need for shared evaluation criteria in future research.

The simulation and experimental results demonstrated the effectiveness of the perceptive motion planning framework under various conditions, including external disturbances and environmental uncertainties. The simulation results clearly show the system’s robustness when external disturbances, such as temporary foot blockage, are introduced. Specifically, the framework’s ability to pause motion, synchronize corrective actions, and maintain ZMP within the desired trajectory highlights its superiority over traditional time-based control. This robustness can be attributed to the dynamic recalibration of the perceptive reference in real-time. In practice, this feature could significantly enhance the humanoid robot’s performance in unstructured or unpredictable environments, such as disaster response or crowded public spaces.

Following that, we have the test performance when facing the elastic ground. When comparing locomotion on rigid versus elastic surfaces, the perceptive framework demonstrated remarkable adaptability. Unlike traditional control, which heavily relies on precise timing, the perceptive approach adjusted the foot placement and gait cycle dynamically. The delayed initiation of the next step while compensating for the increased depth on the elastic terrain illustrates the flexibility of the framework. This result is significant for applications requiring the traversal of varied terrains, such as exploration or construction robotics.

Lastly, a real-world experiment has been conducted. The experimental validation on a treadmill further confirmed the framework’s real-world viability. The robot achieved a maximum speed of 0.42 m/s while maintaining balance. This success underlines the practical implementation of perceptive motion planning for real-time humanoid control.

The perceptive framework’s ability to outperform time-based counterparts in scenarios with dynamic uncertainties, as evidenced by the simulation and experimental data, provides a compelling argument for its broader adoption. The reduced need for re-planning and the capability to adapt to unexpected conditions without interrupting the task pipeline are particularly noteworthy.

6. Conclusions

In conclusion, this paper presents a theoretical analysis and the experimental validation of a perceptive motion reference framework for controlling multiple robotic limbs on a “floating base” system. The proposed method simplifies synchronized motion control across limbs without requiring the temporal coordination of individual movements. It also enhances the system’s robustness and adaptability by quickly adjusting to control errors or external disturbances, maintaining the execution of planned trajectories without the need for re-planning.

While the current study focuses on humanoid platforms and balance criteria, several avenues for future research could expand the capabilities and applications of this framework. First, extending the framework to integrate advanced machine learning algorithms for adaptive motion prediction could further improve performance in highly dynamic and uncertain environments. Second, exploring the integration of tactile sensors and advanced proprioceptive sensing might enhance the robot’s ability to interact more effectively with complex and variable terrains.

Additionally, the framework’s adaptability could be tested on other multi-limbed robots, including quadrupeds and exoskeleton systems, to assess its generalizability. Future works could also investigate the application of this methodology in real-time collaborative tasks involving human–robot interaction, such as industrial assembly lines or medical assistive robotics. Furthermore, optimizing computational efficiency to enable faster decision-making in dense obstacle environments remains a critical area for development.

Finally, the integration of this perceptive motion reference framework with external object manipulation and coordinated multi-robot systems opens up exciting possibilities in logistics, search-and-rescue operations, and extraterrestrial exploration.

By addressing these challenges and opportunities, the proposed framework has the potential to significantly advance the state-of-the-art in humanoid robot motion planning and control.

Author Contributions

Conceptualization, S.W.; Methodology, Z.J. and Y.W.; Software, Y.W.; Validation, Y.W.; Formal analysis, S.W.; Investigation, Z.J.; Resources, Z.J. and Y.W.; Data curation, Y.W.; Writing—original draft preparation, Y.W.; Writing—review and editing, Y.W. and S.B.; Visualization, Y.W.; Supervision, S.B.; Project administration, Z.J.; Funding acquisition, J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Guangdong Basic and Applied Basic Research Foundation (Grant No.: 2021A1515011423).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

Author Zhiyong Jiang was employed by the company China Electronics Technology Group Corporation. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Hirukawa, H.; Kanehiro, F.; Kaneko, K.; Kajita, S.; Fujiwara, K.; Kawai, Y.; Tomita, F.; Hirai, S.; Tanie, K.; Isozumi, T.; et al. Humanoid robotics platforms developed in HRP. Robot. Auton. Syst. 2004, 48, 165–175. [Google Scholar] [CrossRef]

- Kaneko, K.; Harada, K.; Kanehiro, F.; Miyamori, G.; Akachi, K. Humanoid robot HRP-3. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 2471–2478. Available online: https://ieeexplore.ieee.org/document/4650604 (accessed on 28 October 2024).

- Kaneko, K.; Kanehiro, F.; Morisawa, M.; Akachi, K.; Miyamori, G.; Hayashi, A.; Kanehira, N. Humanoid robot HRP-4—Humanoid robotics platform with lightweight and slim body. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 4400–4407. Available online: https://ieeexplore.ieee.org/document/6094465/ (accessed on 28 October 2024).

- Feng, S.; Whitman, E.; Xinjilefu, X.; Atkeson, C.G. Optimization based full body control for the Atlas robot. In Proceedings of the 2014 IEEE-RAS International Conference on Humanoid Robots, Madrid, Spain, 18–20 November 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 120–127. Available online: https://ieeexplore.ieee.org/document/7041347 (accessed on 28 October 2024).

- Gong, Y.; Hartley, R.; Da, X.; Hereid, A.; Harib, O.; Huang, J.-K.; Grizzle, J. Feedback control of a Cassie bipedal robot: Walking, standing, and riding a Segway. In Proceedings of the 2019 American Control Conference (ACC), Philadelphia, PA, USA, 10–12 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 4559–4566. Available online: https://ieeexplore.ieee.org/document/8814833 (accessed on 28 October 2024).

- Castillo, G.A.; Weng, B.; Zhang, W.; Hereid, A. Robust feedback motion policy design using reinforcement learning on a 3D Digit bipedal robot. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 5136–5143. Available online: https://ieeexplore.ieee.org/document/9636467 (accessed on 28 October 2024).

- Zhong, Y.; Shirinzadeh, B.; Yuan, X. Optimal robot path planning with cellular neural network. Int. J. Intell. Mechatron. Robot. (IJIMR) 2011, 1, 20–39. [Google Scholar] [CrossRef]

- Zhong, Y.; Shirinzadeh, B.; Tian, Y. A new neural network for robot path planning. In Proceedings of the 2008 IEEE/ASME International Conference on Advanced Intelligent Mechatronics, Xi’an, China, 2–5 July 2008; IEEE: New York, NY, USA, 2008; pp. 1361–1366. [Google Scholar] [CrossRef]

- Zhou, Q.; Gao, S.; Qu, B.; Gao, X.; Zhong, Y. Crossover recombination-based global-best brain storm optimization algorithm for UAV path planning. In Proceedings of the Romanian Academy Series A—Mathematics, Physics, Technical Sciences, Information Science; Publishing House of the Romanian Academy: Bucharest, Romania, 2022; Volume 23, pp. 207–216. [Google Scholar]

- Bahwini, T.; Zhong, Y.; Gu, C. Path planning in the presence of soft tissue deformation. In International Journal on Interactive Design and Manufacturing (IJIDeM); Springer: Berlin/Heidelberg, Germany, 2019; Volume 13, pp. 1603–1616. [Google Scholar] [CrossRef]

- Hills, J.; Zhong, Y. Cellular neural network-based thermal modelling for real-time robotic path planning. In International Journal of Agile Systems and Management; Inderscience Publishers Ltd.: Geneva, Switzerland, 2014; Volume 7, pp. 261–281. [Google Scholar] [CrossRef]

- Kajita, S.; Kanehiro, F.; Kaneko, K.; Fujiwara, K.; Harada, K.; Yokoi, K.; Hirukawa, H. Biped walking pattern generation by using preview control of zero-moment point. In Proceedings of the 2003 IEEE International Conference on Robotics and Automation (Cat. No. 03CH37422), Taipei, Taiwan, 14–19 September 2003; IEEE: Piscataway, NJ, USA, 2003; Volume 2, pp. 1620–1626. Available online: https://ieeexplore.ieee.org/document/1241826/ (accessed on 28 October 2024).

- Yang, C. Interval Riccati equation-based and non-probabilistic dynamic reliability-constrained multi-objective optimal vibration control with multi-source uncertainties. J. Sound Vib. 2025, 595, 118742. [Google Scholar] [CrossRef]

- Adu-Bredu, A.; Gibson, G.; Grizzle, J. Exploring kinodynamic fabrics for reactive whole-body control of underactuated humanoid robots. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 10397–10404. Available online: https://ieeexplore.ieee.org/document/10342091/ (accessed on 28 October 2024).

- Liu, W.; Liang, X.; Zheng, M. Task-constrained motion planning considering uncertainty-informed human motion prediction for human–robot collaborative disassembly. IEEE/ASME Trans. Mechatronics 2023, 28, 2056–2063. Available online: https://ieeexplore.ieee.org/document/10138312/ (accessed on 28 October 2024). [CrossRef]

- Hu, G.; Ni, L.; Gao, B.; Zhu, X.; Wang, W.; Zhong, Y. Model predictive based unscented Kalman filter for hypersonic vehicle navigation with INS/GNSS integration. IEEE Access 2019, 8, 4814–4823. [Google Scholar] [CrossRef]

- Katayama, S.; Murooka, M.; Tazaki, Y. Model predictive control of legged and humanoid robots: Models and algorithms. Adv. Robot. 2023, 37, 298–315. [Google Scholar] [CrossRef]

- Harbi, I.; Rodriguez, J.; Liegmann, E.; Makhamreh, H.; Heldwein, M.L.; Novak, M.; Rossi, M.; Abdelrahem, M.; Trabelsi, M.; Ahmed, M.; et al. Model-predictive control of multilevel inverters: Challenges, recent advances, and trends. IEEE Trans. Power Electron. 2023, 38, 10845–10868. [Google Scholar] [CrossRef]

- Rudin, N.; Hoeller, D.; Reist, P.; Hutter, M. Learning to walk in minutes using massively parallel deep reinforcement learning. In Proceedings of the Conference on Robot Learning, Auckland, New Zealand, 14–18 December 2022; PMLR. pp. 91–100. Available online: https://proceedings.mlr.press/v164/rudin22a.html (accessed on 28 October 2024).

- Yang, C.; Fan, Z.; Lu, W.; Gao, H. Uncertain iterative optimal attitude control method for periodic satellite with reliability constraint. IEEE Trans. Aerosp. Electron. Syst. 2024, 60, 6461–6473. Available online: https://ieeexplore.ieee.org/document/10540193 (accessed on 28 October 2024). [CrossRef]

- Yang, C.; Wang, Q.; Lu, W.; Li, Y. Integrated uncertain optimal design strategy for truss configuration and attitude–vibration control in rigid–flexible coupling structure with interval uncertainties. Nonlinear Dyn. 2024, 1–24. [Google Scholar] [CrossRef]

- Forbes, M.G.; Patwardhan, R.S.; Hamadah, H.; Gopaluni, R.B. Model predictive control in industry: Challenges and opportunities. IFAC-PapersOnLine 2015, 48, 531–538. [Google Scholar] [CrossRef]

- Rutschmann, M.; Satzinger, B.; Byl, M.; Byl, K. Nonlinear model predictive control for rough-terrain robot hopping. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1859–1864. Available online: https://ieeexplore.ieee.org/document/6385865/ (accessed on 28 October 2024).

- Kashyap, A.K.; Parhi, D.R. Optimization of stability of humanoid robot NAO using ant colony optimization tuned MPC controller for uneven path. Soft Comput. 2021, 25, 5131–5150. [Google Scholar] [CrossRef]

- Popovic, M.B.; Goswami, A.; Herr, H. Ground reference points in legged locomotion: Definitions, biological trajectories and control implications. Int. J. Robot. Res. 2005, 24, 1013–1032. [Google Scholar] [CrossRef]

- Xi, N.; Tarn, T.-J.; Bejczy, A.K. Intelligent planning and control for multirobot coordination: An event-based approach. IEEE Trans. Robot. Autom. 1996, 12, 439–452. Available online: https://ieeexplore.ieee.org/document/499825 (accessed on 28 October 2024).

- Xi, N.; Tarn, T.-J. Stability analysis of non-time referenced internet-based telerobotic systems. Robot. Auton. Syst. 2000, 32, 173–178. [Google Scholar] [CrossRef]

- Latella, C.; Traversaro, S.; Ferigo, D.; Tirupachuri, Y.; Rapetti, L.; Andrade Chavez, F.J.; Nori, F.; Pucci, D. Simultaneous floating-base estimation of human kinematics and joint torques. Sensors 2019, 19, 2794. [Google Scholar] [CrossRef] [PubMed]

- Wahrmann, D.; Wu, Y.; Sygulla, F.; Hildebrandt, A.-C.; Wittmann, R.; Seiwald, P.; Rixen, D. Time-variable, event-based walking control for biped robots. Int. J. Adv. Robot. Syst. 2018, 15, 1729881418768918. [Google Scholar] [CrossRef]

- Hamed, K.A.; Kim, J.; Pandala, A. Quadrupedal locomotion via event-based predictive control and QP-based virtual constraints. IEEE Robot. Autom. Lett. 2020, 5, 4463–4470. Available online: https://ieeexplore.ieee.org/document/9113252 (accessed on 28 October 2024). [CrossRef]

- Kuindersma, S.; Permenter, F.; Tedrake, R. An efficiently solvable quadratic program for stabilizing dynamic locomotion. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 2589–2594. Available online: https://ieeexplore.ieee.org/document/6907230 (accessed on 28 October 2024).

- Chevallereau, C.; Formal’sky, A.; Djoudi, D. Tracking a joint path for the walk of an underactuated biped. Robotica 2004, 22, 15–28. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).