3D Road Boundary Extraction Based on Machine Learning Strategy Using LiDAR and Image-Derived MMS Point Clouds

Abstract

1. Introduction

- We propose a new methodology that integrates machine learning for the extraction of road boundaries and surfaces from point clouds, utilizing object space constraints applicable to both MLS and MMS data;

- We present a novel approach for road edge segmentation that enables the detection of various road structures, such as curbs, turns at intersections, traffic islands, and roundabouts;

- We conducted experiments with datasets acquired from an MLS and an MMS and assessed the accuracy of the results by comparing them with reference ground truth measured manually using the RTK-GNSS technique.

2. Related Studies

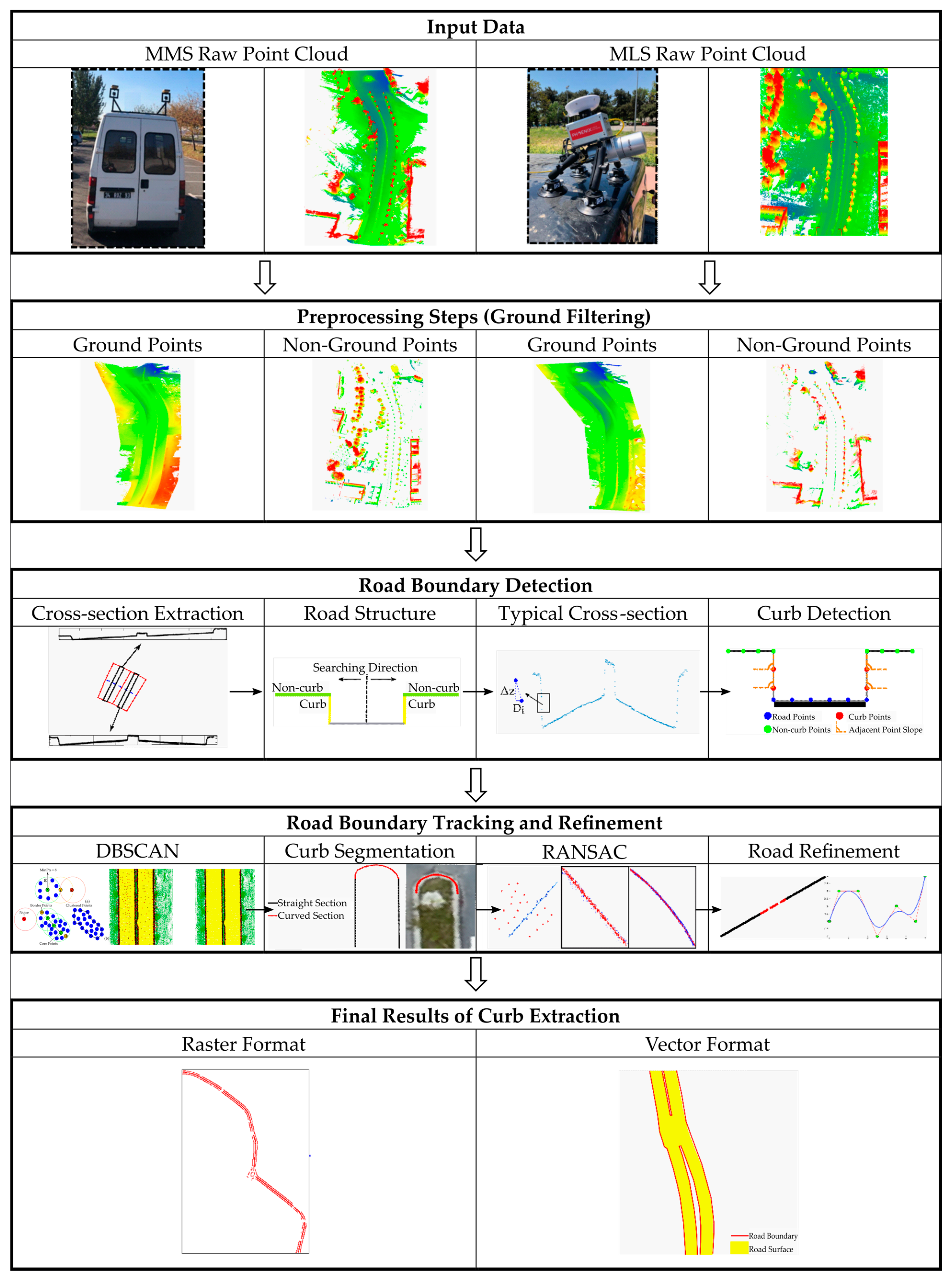

3. Methodology

3.1. Pre-Processing Steps

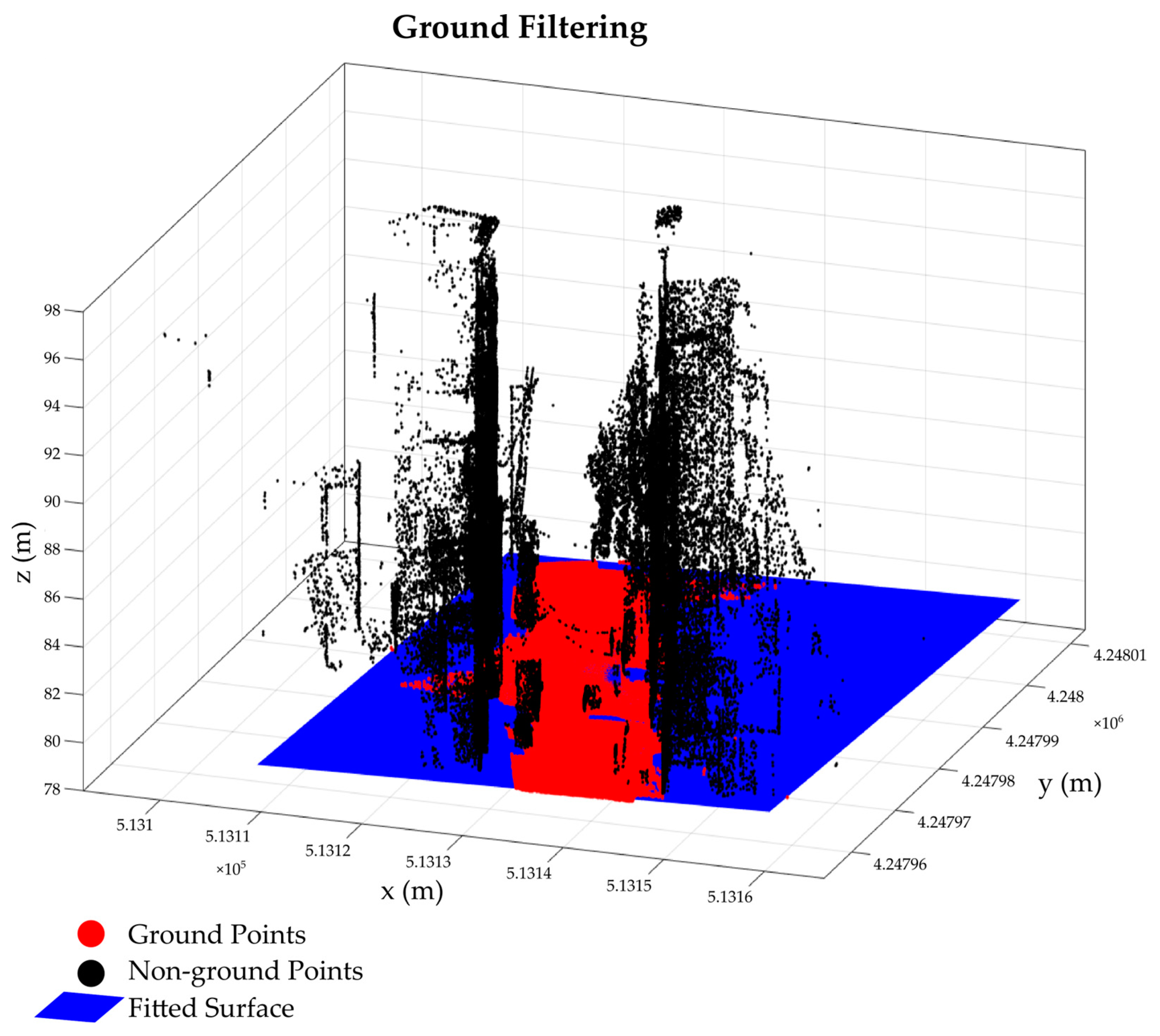

3.1.1. Ground Filtering

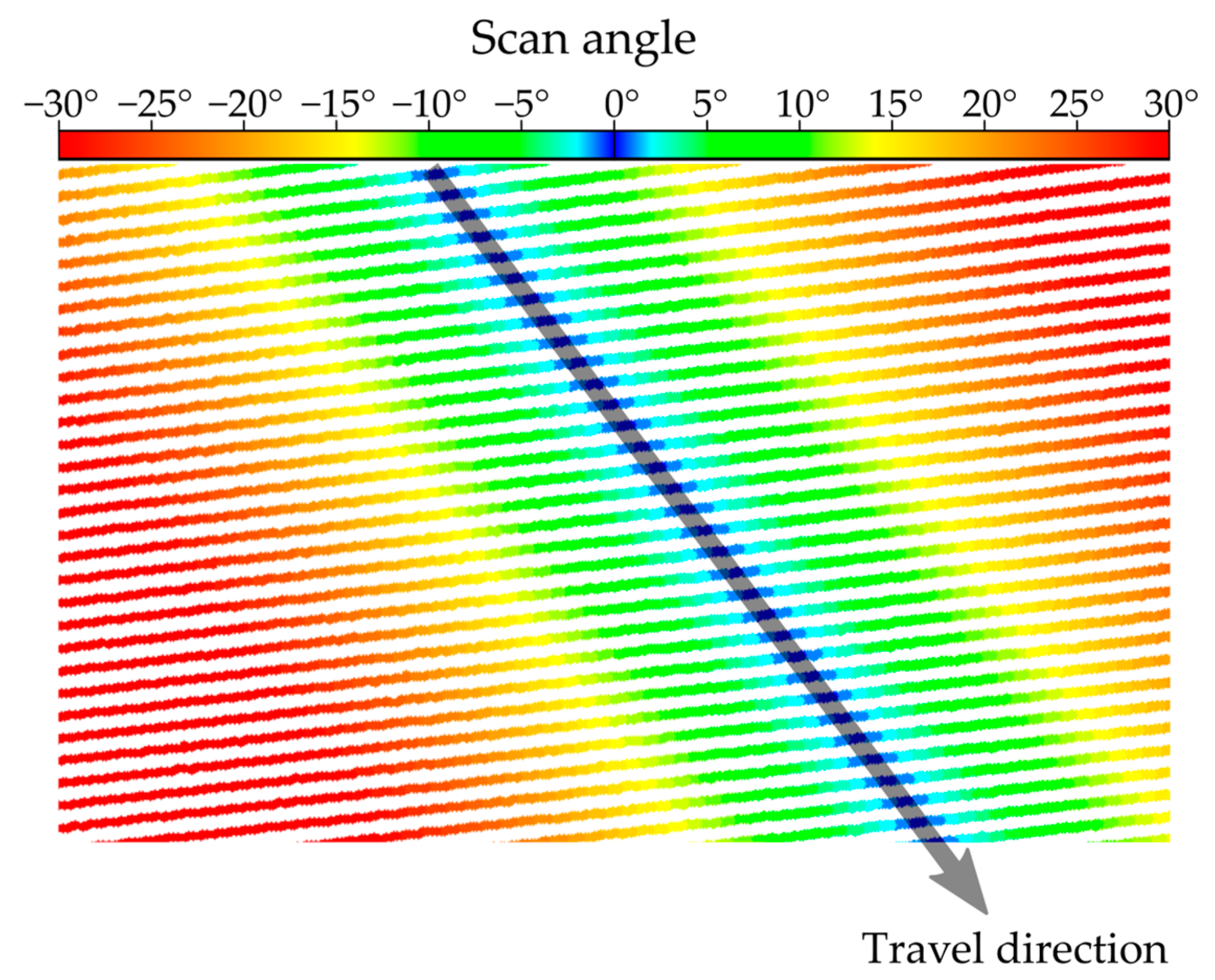

3.1.2. Extracting the Vehicle Trajectory from Raw LAS Data

3.2. Road Boundary Detection and Road Surface Extraction

3.2.1. Partitioning Point Clouds into Sections

3.2.2. Extracting Road Boundaries Using Slope and Elevation Threshold

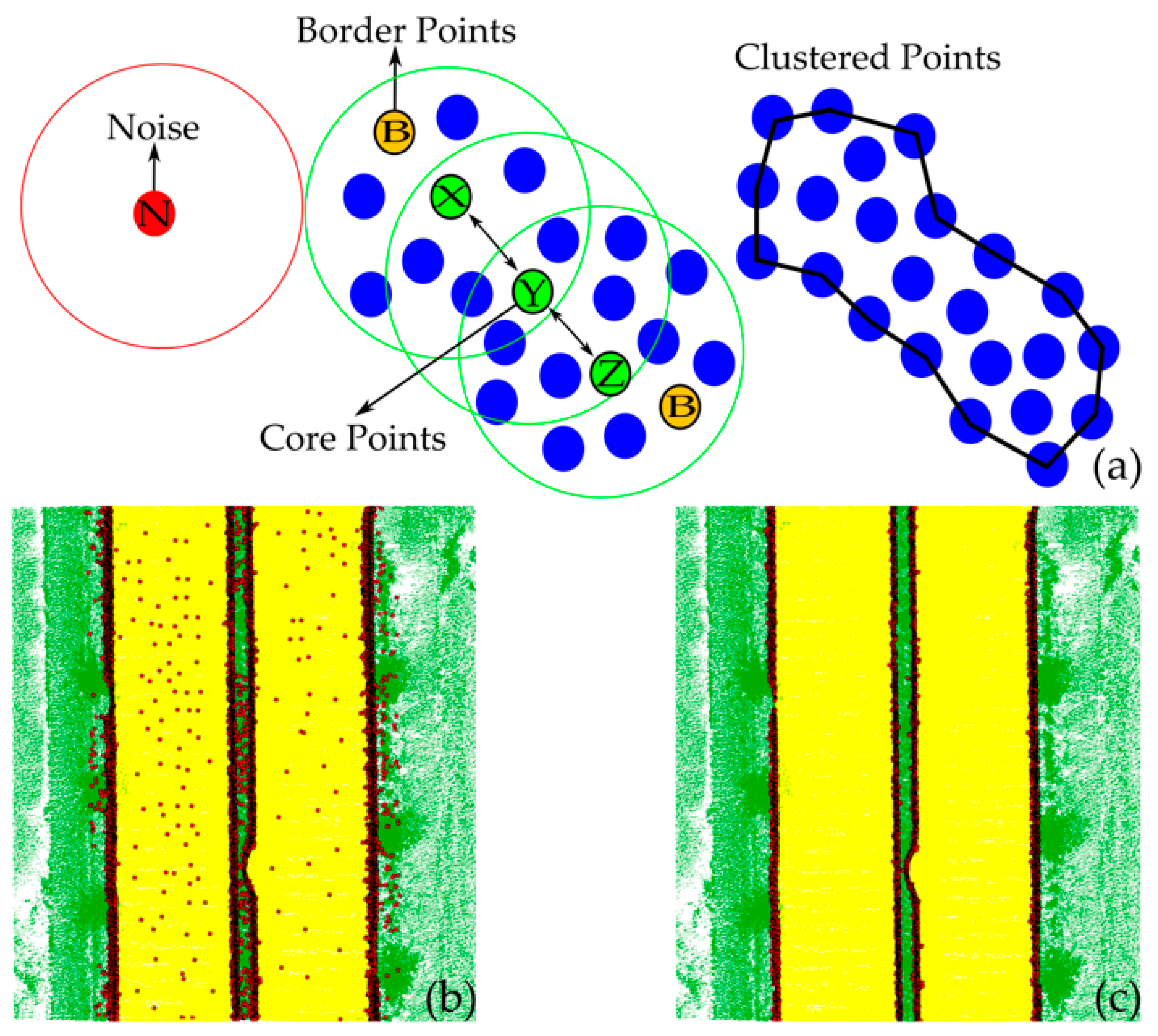

3.2.3. Road Boundary Tracking and Refinement

- A random point, X, is chosen from the LiDAR point cloud, and the surrounding points are examined using the parameters minPts and ε (eps). The distances from X to all other points are calculated, and every neighbor of X whose distance from X is less than or equal to ε, i.e., that lies within the circle of radius ε centered on X, is retained.

- The point X is considered a core point if its ε-neighborhood contains at least minPts points. If it has fewer than minPts points in its ε-neighborhood but lies within the ε-neighborhood of a core point, it is identified as a border point. Finally, if the point is neither a core point nor a border point, it is considered a noise point. In Figure 9a, points X, Y, and Z are selected as core points within the ε-neighborhood, satisfying the condition minPts > 8.

- The DBSCAN method creates clusters based on the concepts of density-reachability and density-connectivity. A point is density-reachable from a core point if a chain of core points, each within distance ε of the next, links the two; notably, point Z can be density-reachable from point X. Two points are density-connected if both are density-reachable from a common core point. The method thus forms clusters by connecting core points and their neighbors in dense regions within a distance of ε [66].
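The three steps above can be sketched in a few lines of Python. This is a minimal, brute-force illustration of textbook DBSCAN, not the implementation used in this study. The function names, the toy 2D coordinates, and the fixed `eps` value are assumptions chosen for the example (the study selects ε automatically), and `min_pts` is assumed to count the query point itself.

```python
import math

def region_query(points, i, eps):
    """Return indices of all points within distance eps of points[i] (i included)."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

def dbscan(points, eps, min_pts):
    """Return (labels, core): a cluster id per point (-1 = noise) and the core indices."""
    labels = [None] * len(points)          # None means "not yet assigned"
    neighbors = [region_query(points, i, eps) for i in range(len(points))]
    # Core points: the eps-neighborhood holds at least min_pts points.
    core = {i for i, nb in enumerate(neighbors) if len(nb) >= min_pts}
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None or i not in core:
            continue
        # Grow a new cluster from this unassigned core point.
        labels[i] = cluster
        frontier = list(neighbors[i])
        while frontier:
            j = frontier.pop()
            if labels[j] is None:
                labels[j] = cluster        # density-reachable from a core point
                if j in core:              # only core points extend the chain
                    frontier.extend(neighbors[j])
        cluster += 1
    # Anything never reached from a core point is noise.
    labels = [-1 if lab is None else lab for lab in labels]
    return labels, core

# Hypothetical toy data: two dense groups, one border point, one isolated point.
pts = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1), (0.2, 0.1),
       (5.0, 5.0), (5.1, 5.0), (5.0, 5.1), (5.1, 5.1), (10.0, 10.0)]
labels, core = dbscan(pts, eps=0.15, min_pts=4)
```

In this toy example the point at (0.2, 0.1) has too few neighbors to be a core point but lies within ε of two core points, so it joins their cluster as a border point, while the isolated point at (10.0, 10.0) is labeled as noise.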

4. Experiments

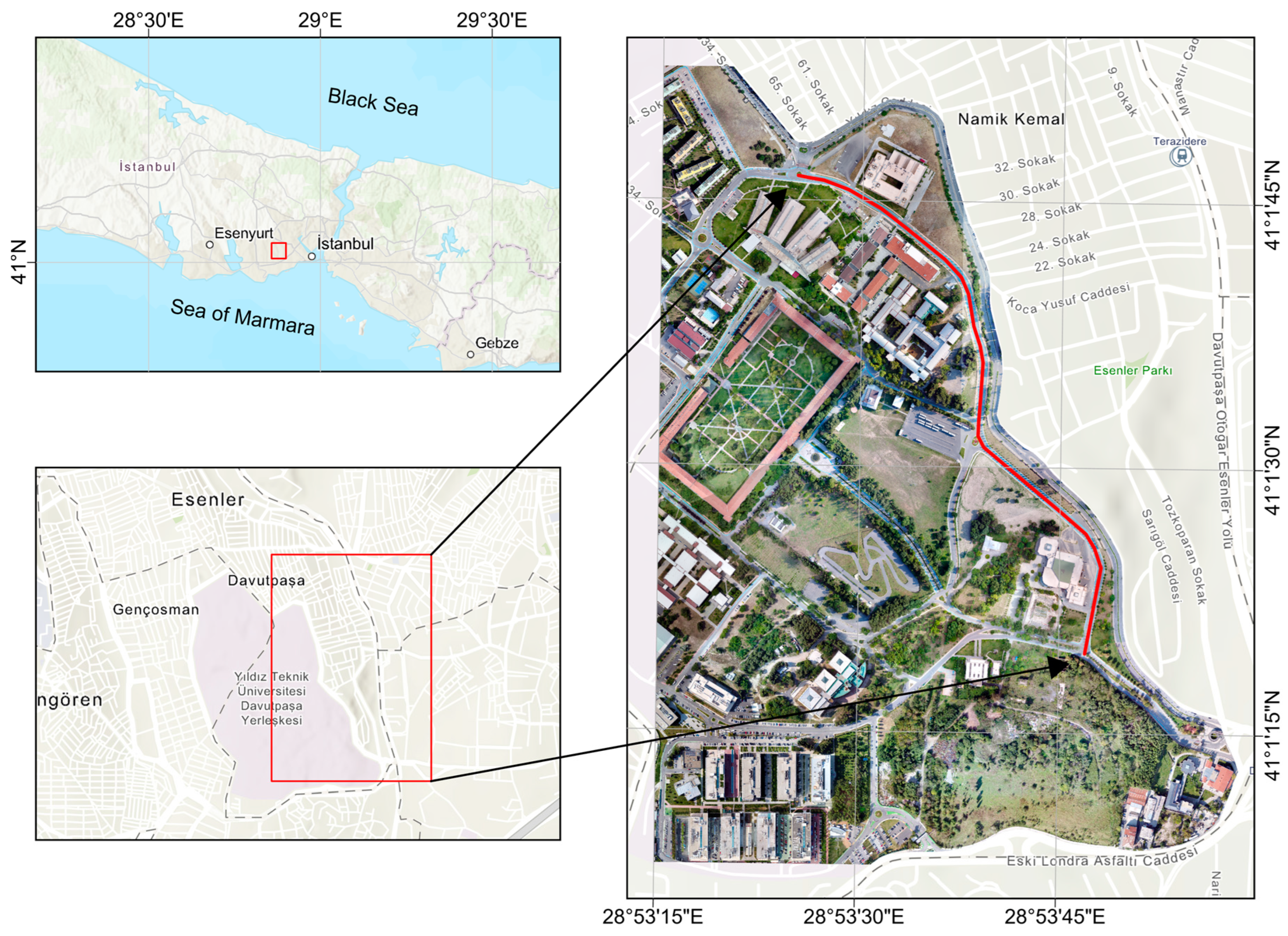

Study Areas, Data Acquisition Systems, and Datasets

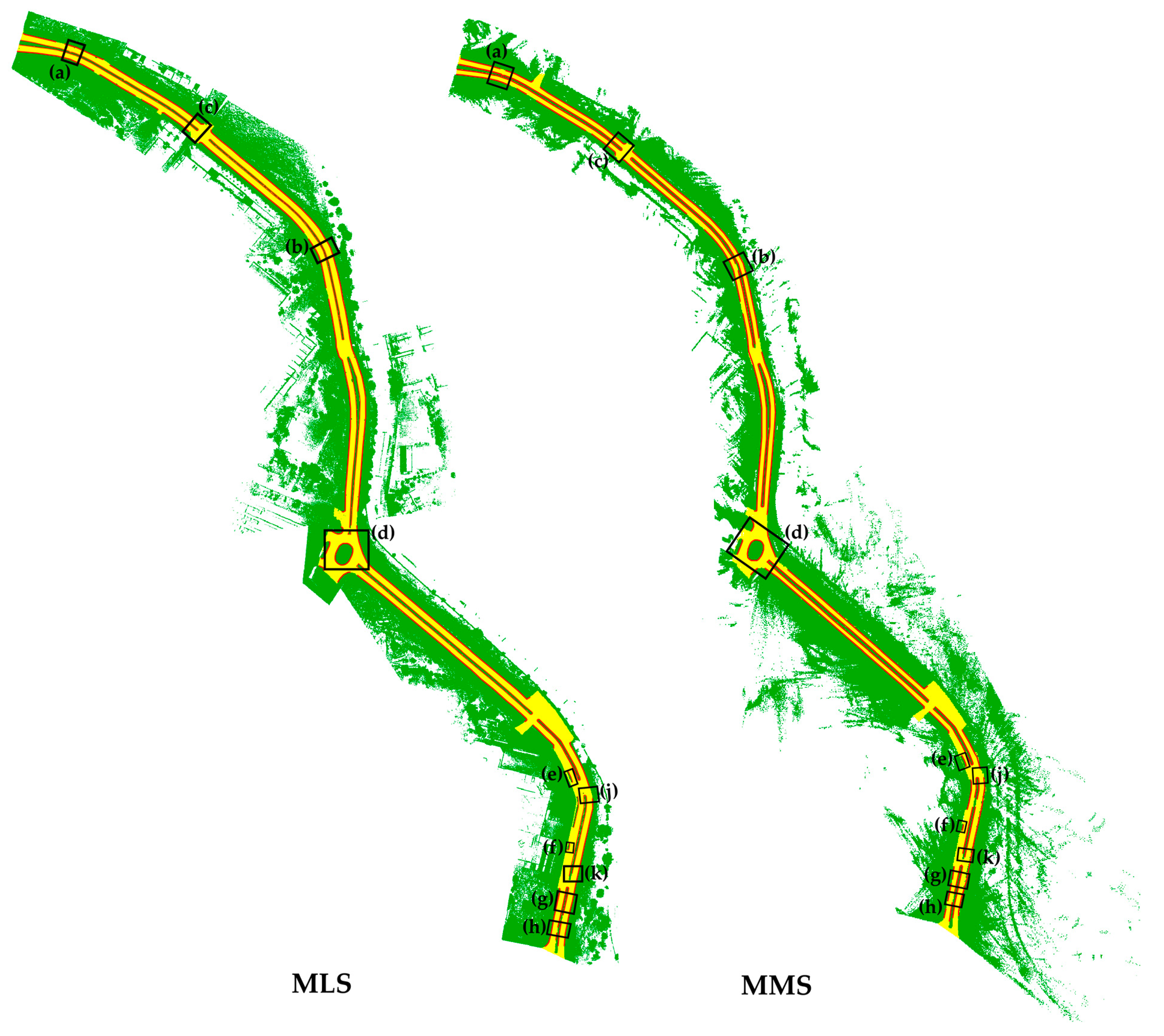

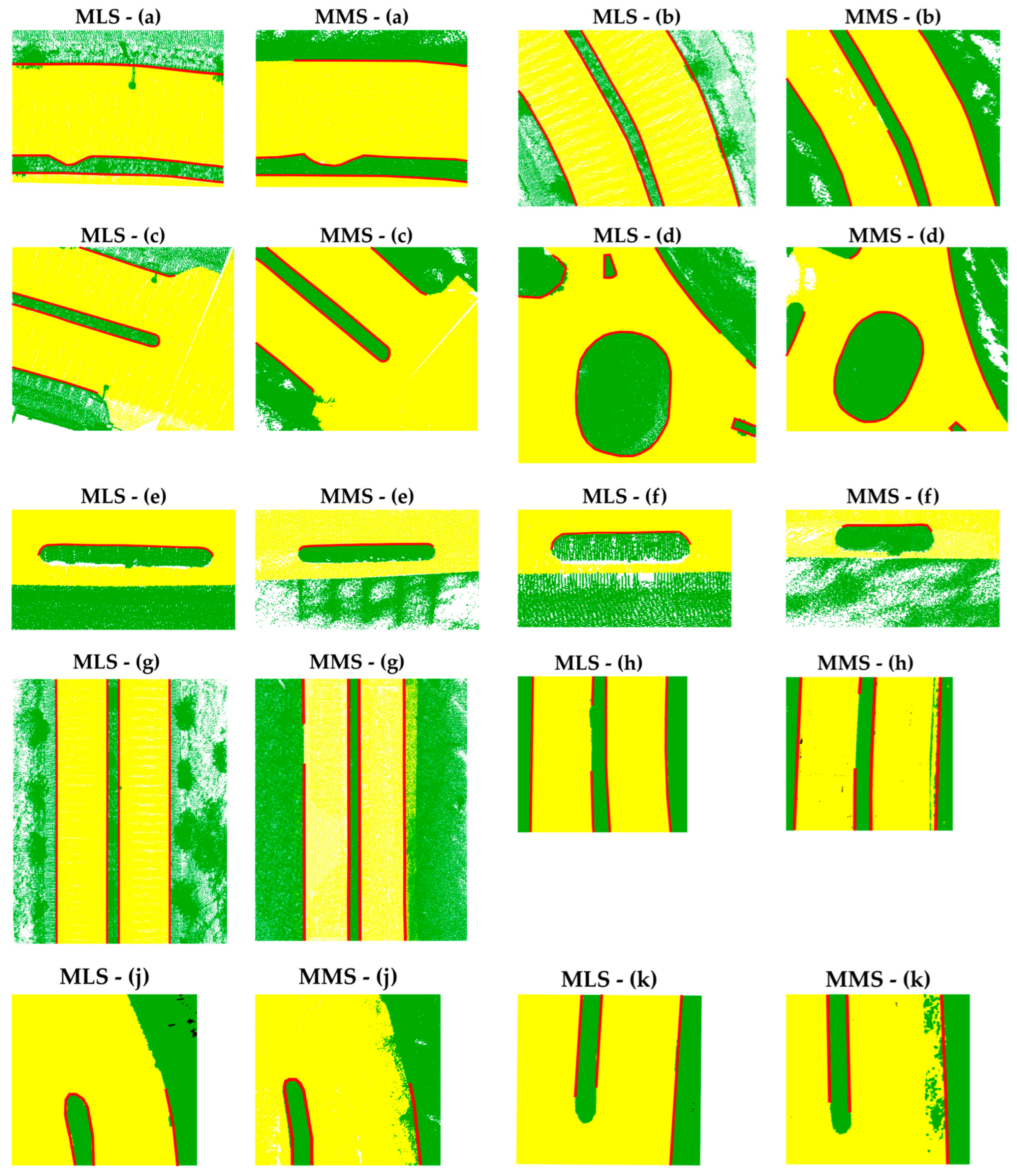

5. Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- McCall, J.C.; Trivedi, M.M. Video-based lane estimation and tracking for driver assistance: Survey, system, and evaluation. IEEE Trans. Intell. Transp. Syst. 2006, 7, 20–37.

- Williams, K.; Olsen, M.J.; Roe, G.V.; Glennie, C. Synthesis of transportation applications of mobile LiDAR. Remote Sens. 2013, 5, 4652–4692.

- Ma, L.; Li, Y.; Li, J.; Wang, C.; Wang, R.; Chapman, M.A. Mobile laser scanned point-clouds for road object detection and extraction: A review. Remote Sens. 2018, 10, 1531.

- Zhou, J.; Cheng, L.; Bischof, W.F. Online learning with novelty detection in human-guided road tracking. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3967–3977.

- Zai, D.; Li, J.; Guo, Y.; Cheng, M.; Lin, Y.; Luo, H.; Wang, C. 3-D road boundary extraction from mobile laser scanning data via supervoxels and graph cuts. IEEE Trans. Intell. Transp. Syst. 2017, 19, 802–813.

- Findley, D.J.; Cunningham, C.M.; Hummer, J.E. Comparison of mobile and manual data collection for roadway components. Transp. Res. Part C Emerg. Technol. 2011, 19, 521–540.

- Wang, W.; Yang, N.; Zhang, Y.; Wang, F.; Cao, T.; Eklund, P. A review of road extraction from remote sensing images. J. Traffic Transp. Eng. 2016, 3, 271–282.

- Yadav, M.; Khan, P.; Singh, A.K.; Lohani, B. An automatic hybrid method for ground filtering in mobile laser scanning data of various types of roadway environments. Autom. Constr. 2021, 126, 103681.

- Sha, Z.; Chen, Y.; Lin, Y.; Wang, C.; Marcato, J.; Li, J. A supervoxel approach to road boundary enhancement from 3-d lidar point clouds. IEEE Geosci. Remote Sens. Lett. 2020, 19, 1–5.

- Farhadmanesh, M.; Cross, C.; Mashhadi, A.H.; Rashidi, A.; Wempen, J. Highway asset and pavement condition management using mobile photogrammetry. Transp. Res. Rec. 2021, 2675, 296–307.

- Biçici, S.; Zeybek, M. Effectiveness of training sample and features for random forest on road extraction from unmanned aerial vehicle-based point cloud. Transp. Res. Rec. 2021, 2675, 401–418.

- Nebiker, S.; Cavegn, S.; Loesch, B. Cloud-Based geospatial 3D image spaces—A powerful urban model for the smart city. ISPRS Int. J. Geo-Inf. 2015, 4, 2267–2291.

- Frentzos, E.; Tournas, E.; Skarlatos, D. Developing an image based low-cost mobile mapping system for GIS data acquisition. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 235–242.

- Elhashash, M.; Albanwan, H.; Qin, R. A review of mobile mapping systems: From sensors to applications. Sensors 2022, 22, 4262.

- Mi, X.; Yang, B.; Dong, Z.; Chen, C.; Gu, J. Automated 3D road boundary extraction and vectorization using MLS point clouds. IEEE Trans. Intell. Transp. Syst. 2021, 23, 5287–5297.

- Helala, M.A.; Pu, K.Q.; Qureshi, F.Z. Road boundary detection in challenging scenarios. In Proceedings of the 2012 IEEE Ninth International Conference on Advanced Video and Signal-Based Surveillance, Beijing, China, 18–21 September 2012; pp. 428–433.

- Strygulec, S.; Müller, D.; Meuter, M.; Nunn, C.; Ghosh, S.; Wöhler, C. Road boundary detection and tracking using monochrome camera images. In Proceedings of the 16th International Conference on Information Fusion, Istanbul, Turkey, 9–12 July 2013; pp. 864–870.

- Boyko, A.; Funkhouser, T. Extracting roads from dense point clouds in large scale urban environment. ISPRS J. Photogramm. Remote Sens. 2011, 66, S2–S12.

- Stewart, B.; Reading, I.; Thomson, M.; Binnie, T.D.; Dickinson, K.; Wan, C. Adaptive lane finding in road traffic image analysis. In Proceedings of the Seventh International Conference on Road Traffic Monitoring and Control, London, UK, 26–28 April 1994; pp. 133–136.

- Melo, J.; Naftel, A.; Bernardino, A.; Santos-Victor, J. Detection and classification of highway lanes using vehicle motion trajectories. IEEE Trans. Intell. Transp. Syst. 2006, 7, 188–200.

- Wen, C.; You, C.; Wu, H.; Wang, C.; Fan, X.; Li, J. Recovery of urban 3D road boundary via multi-source data. ISPRS J. Photogramm. Remote Sens. 2019, 156, 184–201.

- Manandhar, D.; Shibasaki, R. Auto-extraction of urban features from vehicle-borne laser data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2002, 34, 650–655.

- Ibrahim, S.; Lichti, D. Curb-based street floor extraction from mobile terrestrial LiDAR point cloud. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39, 193–198.

- Jaakkola, A.; Hyyppä, J.; Hyyppä, H.; Kukko, A. Retrieval algorithms for road surface modelling using laser-based mobile mapping. Sensors 2008, 8, 5238–5249.

- Yoon, J.; Crane, C.D. Evaluation of terrain using LADAR data in urban environment for autonomous vehicles and its application in the DARPA urban challenge. In Proceedings of the 2009 ICCAS-SICE, Fukuoka City, Japan, 18–21 August 2009; pp. 641–646.

- Pu, S.; Rutzinger, M.; Vosselman, G.; Elberink, S.O. Recognizing basic structures from mobile laser scanning data for road inventory studies. ISPRS J. Photogramm. Remote Sens. 2011, 66, S28–S39.

- Kumar, P.; McElhinney, C.P.; Lewis, P.; McCarthy, T. Automated road markings extraction from mobile laser scanning data. Int. J. Appl. Earth Obs. Geoinf. 2014, 32, 125–137.

- Yang, B.; Fang, L.; Li, J. Semi-automated extraction and delineation of 3D roads of street scene from mobile laser scanning point clouds. ISPRS J. Photogramm. Remote Sens. 2013, 79, 80–93.

- Guan, H.; Li, J.; Yu, Y.; Chapman, M.; Wang, C. Automated road information extraction from mobile laser scanning data. IEEE Trans. Intell. Transp. Syst. 2014, 16, 194–205.

- Xu, S.; Wang, R.; Zheng, H. Road curb extraction from mobile LiDAR point clouds. IEEE Trans. Geosci. Remote Sens. 2016, 55, 996–1009.

- Wijesoma, W.S.; Kodagoda, K.S.; Balasuriya, A.P. Road-boundary detection and tracking using ladar sensing. IEEE Trans. Robot. Autom. 2004, 20, 456–464.

- Kim, B.; Park, S.; Kim, E. Hough transform-based road boundary localization. Int. J. Fuzzy Log. Intell. Syst. 2017, 17, 162–169.

- Huang, R.; Chen, J.; Liu, J.; Liu, L.; Yu, B.; Wu, Y. A practical point cloud based road curb detection method for autonomous vehicle. Information 2017, 8, 93.

- Nebikera, S.; Muttenz, S.-S. 3D Imagery for Infrastructure Management–Mobile Mapping meets the Cloud. In Proceedings of the 56th Photogrammetric Week, Stuttgart, Germany, 11–15 September 2017.

- Anguelov, D.; Dulong, C.; Filip, D.; Frueh, C.; Lafon, S.; Lyon, R.; Ogale, A.; Vincent, L.; Weaver, J. Google street view: Capturing the world at street level. Computer 2010, 43, 32–38.

- Aufrere, R.; Mertz, C.; Thorpe, C. Multiple sensor fusion for detecting location of curbs, walls, and barriers. In Proceedings of the IEEE IV2003 Intelligent Vehicles Symposium, Columbus, OH, USA, 9–11 June 2003; pp. 126–131.

- Yamaguchi, K.; Watanabe, A.; Naito, T.; Ninomiya, Y. Road region estimation using a sequence of monocular images. In Proceedings of the 2008 19th International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008; pp. 1–4.

- Zhao, G.; Yuan, J. Curb detection and tracking using 3D-LIDAR scanner. In Proceedings of the 19th IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 437–440.

- Broome, M.; Gadd, M.; De Martini, D.; Newman, P. On the road: Route proposal from radar self-supervised by fuzzy LiDAR traversability. AI 2020, 1, 558–585.

- Oniga, F.; Nedevschi, S.; Meinecke, M.M. Curb detection based on elevation maps from dense stereo. In Proceedings of the 2007 IEEE International Conference on Intelligent Computer Communication and Processing, Cluj-Napoca, Romania, 6–8 September 2007; pp. 119–125.

- Fernández, C.; Llorca, D.F.; Stiller, C.; Sotelo, M.A. Curvature-based curb detection method in urban environments using stereo and laser. In Proceedings of the 2015 IEEE Intelligent Vehicles Symposium, Seoul, Republic of Korea, 28 June–1 July 2015; pp. 579–584.

- Balali, V.; Golparvar-Fard, M. Segmentation and recognition of roadway assets from car-mounted camera video streams using a scalable non-parametric image parsing method. Autom. Constr. 2015, 49, 27–39.

- Uslu, B.; Golparvar-Fard, M.; de la Garza, J.M. Image-based 3D reconstruction and recognition for enhanced highway condition assessment. In Proceedings of the International Workshop on Computing in Civil Engineering, Miami, FL, USA, 19–22 June 2011; pp. 67–76.

- Balali, V.; Jahangiri, A.; Machiani, S.G. Multi-class US traffic signs 3D recognition and localization via image-based point cloud model using color candidate extraction and texture-based recognition. Adv. Eng. Inform. 2017, 32, 263–274.

- Gonçalves, J.; Pinhal, A. Mobile Mapping System Based on Action Cameras. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 167–171.

- Hasler, O.; Blaser, S.; Nebiker, S. Performance evaluation of a mobile mapping application using smartphones and augmented reality frameworks. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 2, 741–747.

- Romero, L.M.; Guerrero, J.A.; Romero, G. Road curb detection: A historical survey. Sensors 2021, 21, 6952.

- Chen, Z.; Deng, L.; Luo, Y.; Li, D.; Junior, J.M.; Gonçalves, W.N.; Nurunnabi, A.A.M.; Li, J.; Wang, C.; Li, D. Road extraction in remote sensing data: A survey. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102833.

- Zhao, H.; Xi, X.; Wang, C.; Pan, F. Ground surface recognition at voxel scale from mobile laser scanning data in urban environment. IEEE Geosci. Remote Sens. Lett. 2019, 17, 317–321.

- Guo, D.; Yang, G.; Qi, B.; Wang, C. Curb detection and compensation method for autonomous driving via a 3-D-LiDAR sensor. IEEE Sens. J. 2022, 22, 19500–19512.

- Husain, A.; Vaishya, R. A time efficient algorithm for ground point filtering from mobile LiDAR data. In Proceedings of the 2016 International Conference on Control, Computing, Communication and Materials (ICCCCM), Allahabad, India, 21–22 October 2016; pp. 1–5.

- Beaton, A.E.; Tukey, J.W. The fitting of power series, meaning polynomials, illustrated on band-spectroscopic data. Technometrics 1974, 16, 147–185.

- Yadav, M.; Singh, A.K.; Lohani, B. Extraction of road surface from mobile LiDAR data of complex road environment. Int. J. Remote Sens. 2017, 38, 4655–4682.

- Zhong, M.; Sui, L.; Wang, Z.; Yang, X.; Zhang, C.; Chen, N. Recovering missing trajectory data for mobile laser scanning systems. Remote Sens. 2020, 12, 899.

- Yu, Y.; Li, J.; Guan, H.; Wang, C.; Yu, J. Semiautomated extraction of street light poles from mobile LiDAR point-clouds. IEEE Trans. Geosci. Remote Sens. 2014, 53, 1374–1386.

- Puente, I.; Akinci, B.; González-Jorge, H.; Díaz-Vilariño, L.; Arias, P. A semi-automated method for extracting vertical clearance and cross sections in tunnels using mobile LiDAR data. Tunn. Undergr. Space Technol. 2016, 59, 48–54.

- Sun, P.; Zhao, X.; Xu, Z.; Wang, R.; Min, H. A 3D LiDAR data-based dedicated road boundary detection algorithm for autonomous vehicles. IEEE Access 2019, 7, 29623–29638.

- Wu, B.; Yu, B.; Huang, C.; Wu, Q.; Wu, J. Automated extraction of ground surface along urban roads from mobile laser scanning point clouds. Remote Sens. Lett. 2016, 7, 170–179.

- Liu, Z.; Wang, J.; Liu, D. A new curb detection method for unmanned ground vehicles using 2D sequential laser data. Sensors 2013, 13, 1102–1120.

- De Blasiis, M.R.; Di Benedetto, A.; Fiani, M. Mobile laser scanning data for the evaluation of pavement surface distress. Remote Sens. 2020, 12, 942.

- Jung, J.; Che, E.; Olsen, M.J.; Parrish, C. Efficient and robust lane marking extraction from mobile lidar point clouds. ISPRS J. Photogramm. Remote Sens. 2019, 147, 1–18.

- Barbarella, M.; Di Benedetto, A.; Fiani, M. A Method for Obtaining a DEM with Curved Abscissa from MLS Data for Linear Infrastructure Survey Design. Remote Sens. 2022, 14, 889.

- Rodríguez-Cuenca, B.; Garcia-Cortes, S.; Ordóñez, C.; Alonso, M.C. An approach to detect and delineate street curbs from MLS 3D point cloud data. Autom. Constr. 2015, 51, 103–112.

- Ester, M.; Kriegel, H.-P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the KDD, 1996; AAAI Press: Portland, OR, USA; pp. 226–231.

- Yan, W.Y.; Morsy, S.; Shaker, A.; Tulloch, M. Automatic extraction of highway light poles and towers from mobile LiDAR data. Opt. Laser Technol. 2016, 77, 162–168.

- Hossain, M.Z.; Islam, M.J.; Miah, M.W.R.; Rony, J.H.; Begum, M. Develop a dynamic DBSCAN algorithm for solving initial parameter selection problem of the DBSCAN algorithm. Indones. J. Electr. Eng. Comput. Sci. 2021, 23, 1602–1610.

- Chen, H.; Liang, M.; Liu, W.; Wang, W.; Liu, P.X. An approach to boundary detection for 3D point clouds based on DBSCAN clustering. Pattern Recognit. 2022, 124, 108431.

- Rahmah, N.; Sitanggang, I.S. Determination of optimal epsilon (eps) value on DBSCAN algorithm to clustering data on peatland hotspots in Sumatra. In Proceedings of the IOP Conference Series: Earth and Environmental Science; IOP Publishing Ltd.: Bristol, UK, 2016; p. 012012.

- Awrangjeb, M. Using point cloud data to identify, trace, and regularize the outlines of buildings. Int. J. Remote Sens. 2016, 37, 551–579.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Suleymanoglu, B.; Gurturk, M.; Yilmaz, Y.; Soycan, A.; Soycan, M. Comparison of Unmanned Aerial Vehicle-LiDAR and Image-Based Mobile Mapping System for Assessing Road Geometry Parameters via Digital Terrain Models. Transp. Res. Rec. 2023, 2677, 03611981231157730.

- Gurturk, M.; Yusefi, A.; Aslan, M.F.; Soycan, M.; Durdu, A.; Masiero, A. The YTU dataset and recurrent neural network based visual-inertial odometry. Measurement 2021, 184, 109878.

| Parameter | Description | MLS Dataset | MMS Dataset |
|---|---|---|---|
| Δh | Height difference threshold for ground filtering | 0.2 m | 0.2 m |
| Bw | Segment width | 5 m | 5 m |
| CSw | Cross-section width | 0.02 m | 0.02 m |
| St | Slope threshold | 45° | 45° |
| H | Elevation difference for detecting road curbs | Hmin = 0.05 m, Hmax = 0.30 m | Hmin = 0.05 m, Hmax = 0.30 m |
| Epsilon (ε) | Search radius used when counting the minimum number of points | Automatic | Automatic |
| MinPts | Minimum number of points | 7 | 6 |
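As an illustration of how the slope threshold St and the elevation range H listed above might act together, the following sketch tests whether the step between two consecutive cross-section points looks like a curb. It is one plausible reading of these parameters, not the procedure used in this study. The function name, the representation of a point as an (offset, elevation) pair in metres, and the rule that both conditions must hold at once are assumptions.

```python
import math

def is_curb_candidate(p, q, slope_deg=45.0, h_min=0.05, h_max=0.30):
    """Check one pair of consecutive cross-section points.

    p, q: (offset along the cross-section, elevation), both in metres (assumed layout).
    Returns True when the step is steep enough and its height is in the curb range.
    """
    run = abs(q[0] - p[0])                      # horizontal distance
    rise = abs(q[1] - p[1])                     # elevation difference
    slope = math.degrees(math.atan2(rise, run)) # 0 deg = flat, 90 deg = vertical
    return slope >= slope_deg and h_min <= rise <= h_max

# Hypothetical point pairs: a curb-like step, flat pavement, and a tall wall.
curb = is_curb_candidate((0.00, 0.00), (0.02, 0.12))
flat = is_curb_candidate((0.00, 0.00), (0.50, 0.02))
wall = is_curb_candidate((0.00, 0.00), (0.05, 1.00))
```

Under these assumptions the elevation range rejects steep but tall structures such as walls, while the slope threshold rejects gentle changes in the pavement surface.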

| Dataset | Lr (m) | Le (m) | TP (m) | FP (m) | FN (m) |
|---|---|---|---|---|---|
| MLS | 4052.2 | 3832.2 | 3777.5 | 54.6 | 187.7 |
| MMS | 4052.2 | 3658.1 | 3422.8 | 235.2 | 395.0 |

| Dataset | Completeness (%) | Correctness (%) | Quality (%) |
|---|---|---|---|
| MLS | 93.2 | 98.6 | 93.9 |
| MMS | 84.5 | 93.6 | 84.5 |
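The percentages above can be related to the tabulated lengths with a short calculation. The formulas in this sketch are an assumption inferred from the numbers rather than taken from the text: completeness = TP / Lr, correctness = TP / Le, and quality = TP / (TP + FP + FN). The function name and dictionary layout are likewise illustrative.

```python
def boundary_metrics(l_ref, l_ext, tp, fp, fn):
    """Return (completeness, correctness, quality) in percent.

    l_ref: reference boundary length Lr (m); l_ext: extracted length Le (m);
    tp, fp, fn: true-positive, false-positive, and false-negative lengths (m).
    The three formulas are assumed, not quoted from the paper.
    """
    completeness = 100.0 * tp / l_ref
    correctness = 100.0 * tp / l_ext
    quality = 100.0 * tp / (tp + fp + fn)
    return completeness, correctness, quality

# Lengths copied from the table of Lr, Le, TP, FP, and FN.
lengths = {
    "MLS": dict(l_ref=4052.2, l_ext=3832.2, tp=3777.5, fp=54.6, fn=187.7),
    "MMS": dict(l_ref=4052.2, l_ext=3658.1, tp=3422.8, fp=235.2, fn=395.0),
}
results = {name: boundary_metrics(**vals) for name, vals in lengths.items()}
```

With these assumed definitions, the computed values agree with the reported percentages to within about 0.1 percentage point for both datasets.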

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Suleymanoglu, B.; Soycan, M.; Toth, C. 3D Road Boundary Extraction Based on Machine Learning Strategy Using LiDAR and Image-Derived MMS Point Clouds. Sensors 2024, 24, 503. https://doi.org/10.3390/s24020503
