A Survey on Reduction of Energy Consumption in Fog Networks—Communications and Computations

Abstract

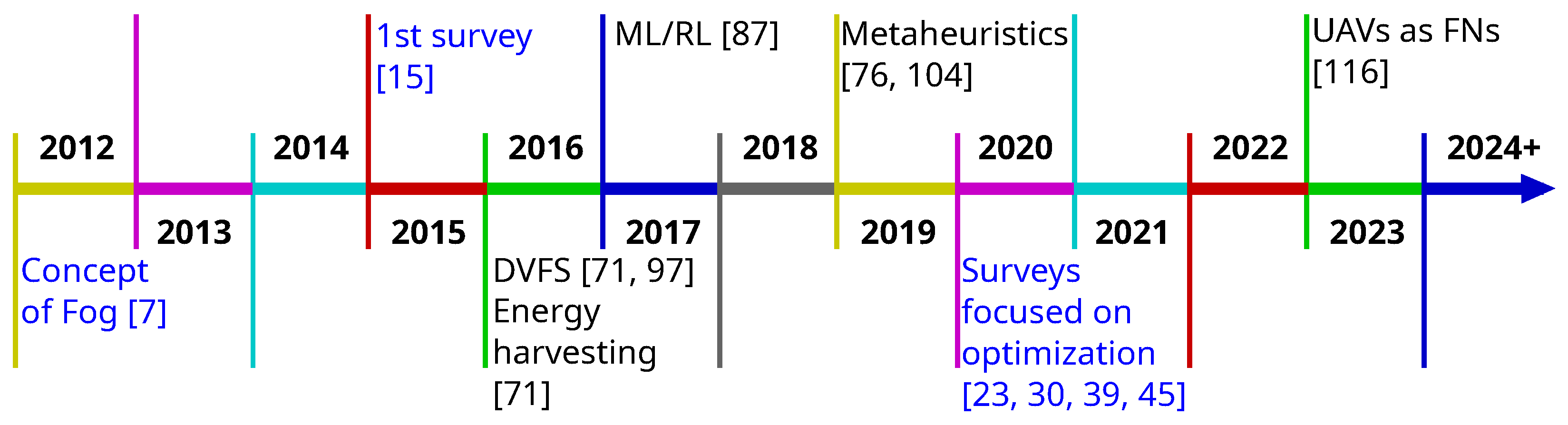

1. Introduction

1.1. Motivation

1.2. Contributions

- We provide a thorough examination of the published survey works through the lens of energy consumption. Forty-two such works are listed in Table 1, and their contents are compared with ours.

- We provide a holistic perspective on the energy consumption of fog networks related to both computing and communication and include perspectives of both wireless and wired parts of the network.

- Our detailed study includes a survey of network models, their parameterization, and optimization methods and technologies used to reduce the energy consumption of fog networks.

- Our analysis of optimization in the fog network is comprehensive, extracting key information such as objective functions, constraints, and decision variables from analyzed works. We analyze each work presenting multiple optimization problems and solutions. Together, this allows readers to compare and contrast optimization methods between works and within them.

- We discuss the results presented in the surveyed works with a focus on energy reduction but taking into account other metrics such as delay. Baseline solutions are consistently listed.

- We point out future research directions based on the current trends observed in the surveyed works and on our own experience.

1.3. Work Outline

2. Related Work

2.1. Overview of Published Surveys

- (1)

- (2) Energy–Fog vs. Cloud: comparing and contrasting the energy consumption of solutions based on fog (possibly including cloud) and those based solely on cloud.

- (3) Energy for Communication: energy spent on the transmission of data between nodes in the network. We examine whether the works discuss/present mathematical models describing the energy consumption in the fog. We tick the General column if the topic is at least briefly tackled. The Model column is ticked if network models (including traffic, nodes, and communications) are discussed in the survey, while the Parameterization column is ticked if the model parameter values are surveyed.

- (4) Energy for Computation: energy spent on performing computations, i.e., the processing of data by devices in the network. This aspect is split into General, Model, and Param., just like the Energy for Communication aspect.

- (5) Energy-saving Methods: the optimization of fog using energy as an objective and/or constraint (the Optimization column) and the utilization of technologies minimizing energy consumption (the Technologies column). The Multiple column refers to works which describe multiple optimization problems and/or propose multiple methods for solving them. It is ticked when the survey paper analyzes all optimization problems and methods from the surveyed works.

| Research Work (Chronological Order) | Work Focus (Group) | 1. Energy Consumption of Fog in General | 2. Energy–Fog vs. Cloud | 3. Energy for Communication: General | 3. Energy for Communication: Model | 3. Energy for Communication: Param. | 4. Energy for Computation: General | 4. Energy for Computation: Model | 4. Energy for Computation: Param. | 5. Energy-Saving Methods: Optim. | 5. Energy-Saving Methods: Tech. | 5. Energy-Saving Methods: Multi. |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Yi et al. [15] (2015) | Current issues and future work directions (a) | ∘ | - | - | - | - | - | - | - | - | ∘ | - |

| Mao et al. [16] (2017) | Computation and communication models and resource management (c) | + | ∘ | + | + | - | + | + | - | + | + | - |

| Taleb et al. [17] (2017) | Network architecture, orchestration, and underlying technologies (a) | + | - | + | - | - | - | - | - | + | - | - |

| Hu et al. [18] (2017) | Key technologies of fog computing (a) | + | ∘ | + | - | - | + | - | - | + | - | - |

| Jalali et al. [10] (2017) | Power consumption, network design (a) | + | + | + | - | ∘ | + | - | ∘ | + | ∘ | - |

| Abbas et al. [19] (2018) | Extensively covers multiple areas (c) | + | - | + | - | - | + | - | - | + | - | - |

| Nath et al. [9] (2018) | Extensively covers multiple areas (c) | + | - | + | - | - | + | - | - | + | - | - |

| Mouradian et al. [20] (2018) | Categorizing and reviewing articles on architectures and fog-related algorithms (c, d) | + | ∘ | + | - | - | + | - | - | + | - | - |

| Mahmud et al. [21] (2018) | Taxonomy, resource and service provisioning (a, b) | + | - | - | - | - | - | - | - | - | - | - |

| Mukherjee et al. [22] (2018) | Fog computing architectures and models (b, c) | + | ∘ | + | + | - | + | + | - | + | - | - |

| Svorobej et al. [23] (2019) | Simulating fog and edge computing (d, e) | + | - | + | ∘ | - | + | - | - | + | - | - |

| Yousefpour et al. [24] (2019) | Comparison of fog computing and similar paradigms, taxonomy (b, c) | + | - | + | ∘ | - | + | - | - | + | ∘ | - |

| Moura and Hutchison [25] (2019) | Usage of Game Theory in wireless communications (d, e) | + | - | + | - | - | + | - | - | + | ∘ | - |

| Hameed Mohsin and Al Omary [26] (2020) | Energy saving and security in IoT and Fog (e) | + | - | + | - | - | + | - | - | + | ∘ | - |

| Qu et al. [27] (2020) | QoS and energy optimization in fog and other computing paradigms (d) | + | + | + | + | - | + | - | - | + | ∘ | - |

| Rahimi et al. [28] (2020) | Fog computing utilized by smart homes (e) | + | - | - | - | - | - | - | - | + | - | - |

| Caiza et al. [29] (2020) | Fog computing at industrial level (e) | + | - | - | - | - | - | - | - | + | ∘ | - |

| Bendechache et al. [30] (2020) | Simulations of resource management in cloud, fog, and edge (d, e) | + | - | - | - | - | + | - | - | + | - | - |

| Qureshi et al. [31] (2020) | Resource allocation schemes for real-time, high-performance computing (d, e) | + | - | - | - | - | + | - | - | + | + | - |

| Habibi et al. [32] (2020) | Various architectures of fog, e.g., reference, software, security (a, b) | + | + | - | - | - | - | - | - | + | - | - |

| Aslanpour et al. [33] (2020) | Comparison of performance evaluation metrics for cloud, fog, and edge (b, d) | + | + | - | - | - | + | - | - | + | - | - |

| Lin et al. [34] (2020) | Computation offloading—modeling and optimization (d) | + | ∘ | + | + | - | + | + | - | + | + | - |

| Asim et al. [35] (2020) | Computational intelligence algorithms in cloud and edge computing (d, e) | + | + | + | - | - | + | - | - | + | - | - |

| Fakhfakh et al. [36] (2021) | Formal verification of properties of fog and cloud networks (d, e) | + | - | - | - | - | - | - | - | - | - | - |

| QingQingChang et al. [37] (2021) | Health monitoring with fog-based IoT (e) | + | - | - | - | - | - | - | - | ∘ | - | - |

| Alomari et al. [38] (2021) | Taxonomy of SDN-based cloud and fog (b, d) | + | + | - | - | - | + | - | - | + | - | - |

| Ben Dhaou et al. [39] (2021) | Technologies and implementation of E-health using edge devices (e) | + | ∘ | + | - | - | + | - | - | + | + | - |

| Laroui et al. [40] (2021) | Extensively covers multiple areas (c) | + | + | + | - | - | - | - | - | + | - | - |

| Hajam and Sofi [41] (2021) | Smart city applications using IoT, fog, and cloud (e) | + | + | + | - | - | + | - | - | + | ∘ | - |

| Mohamed et al. [42] (2021) | Smart city applications using IoT, fog, and cloud (e) | + | + | + | - | - | + | - | - | + | ∘ | - |

| Martinez et al. [43] (2021) | Design, management, and evaluation of various fog systems (d) | + | - | - | - | - | - | - | - | + | - | - |

| Kaul et al. [44] (2022) | Nature-inspired algorithms for computing optimization (d, e) | + | ∘ | - | - | - | + | + | - | + | ∘ | - |

| Al-Araji et al. [45] (2022) | Fuzzy logic and algorithms in fog computing (d, e) | + | ∘ | - | - | - | - | - | - | + | - | - |

| Rahimikhanghah et al. [46] (2022) | Resource scheduling methods in fog and cloud (d) | + | ∘ | - | - | - | + | - | - | + | + | - |

| Tran-Dang et al. [47] (2022) | Resource allocation in fog using reinforcement learning (d, e) | + | + | + | - | - | + | ∘ | - | + | - | - |

| Acheampong et al. [48] (2022) | Computation offloading—metric, factors, and algorithms (d) | + | ∘ | + | - | - | + | - | - | + | ∘ | - |

| Goudarzi et al. [49] (2022) | Scheduling, taxonomy of application, and environmental architectures (b, d) | + | ∘ | - | - | - | - | - | - | + | ∘ | - |

| Ben Ammar et al. [50] (2022) | Computation offloading and energy harvesting (d, e) | + | ∘ | + | + | + | + | ∘ | - | + | + | - |

| Dhifaoui et al. [51] (2022) | Edge, fog, and cloud computing in smart agriculture (e) | + | + | + | - | - | + | - | - | + | - | - |

| Sadatdiynov et al. [52] (2023) | Optimization methods for computation offloading (d) | + | ∘ | + | + | - | + | ∘ | - | + | ∘ | - |

| Avan et al. [53] (2023) | Scheduling for delay-sensitive applications (d) | + | + | + | - | - | + | - | - | + | - | - |

| Zhou et al. [54] (2023) | Computation offloading, overview of MEC (a, d) | + | ∘ | + | - | - | + | - | - | + | - | - |

| Patsias et al. [55] (2023) | Optimization methods for computation offloading (d) | + | - | + | - | - | + | - | - | + | ∘ | - |

| Shareef et al. [56] (2024) | Handling imbalanced IoT data with fog (d, e) | + | - | + | - | - | - | - | - | + | - | - |

| Our work (2024) | Energy consumption in fog—modeling, parameterization, and optimization (d, e) | + | + | + | + | + | + | + | + | + | + | + |

- (a)

- (b) Taxonomy and comparison with similar paradigms: works in this category focus on presenting similarities and differences between fog computing and other similar paradigms, e.g., cloud computing, edge computing, and Mobile/Multi-Access Edge Computing (MEC). Works in this category include [21,22,24,32,33,38,49]. All of them also belong to other categories.

- (c) Broad and deep survey: works in this category thoroughly examine multiple topics. These works survey numerous sources: over 100 in the case of [19,20,40] and over 200 in the case of [9,16,22]. The work [24], with over 400 cited works, perfectly fits into this category. It also fits into the category Taxonomy and comparison with similar paradigms.

- (d) Resource allocation and optimization: this category refers to works that study the management of fog networks, resource allocation strategies, and various optimization algorithms. Works that broadly survey resource allocation and optimization include [20,27,34,43,46,48,52,54,55]. Other works are more focused on particular scenarios: Alomari et al. [38] study resource allocation in fog and cloud based on SDN, while Ben Ammar et al. [50] examine energy harvesting edge devices, Avan et al. focus on delay-sensitive applications in [53], and Qureshi et al. focus on high-performance computing in [31]. There are also works that focus on particular solutions to the optimization problems: nature-inspired algorithms in [35,44], algorithms based on fuzzy logic in [45], Reinforcement Learning (RL) algorithms in [47], and algorithms based on game theory in [25]. Bendechache et al. and Svorobej et al. focus on the simulation environments rather than the resource management problems themselves in [23,30], while Aslanpour et al. provide a set of performance metrics that can be used in network optimization [33]. Goudarzi et al. examine scheduling applications while providing a taxonomy for application and network architectures [49]. Fakhfakh et al. perform the formal verification of network properties and optimal solutions in [36]. Finally, Shareef et al. [56] examine various aspects of processing imbalanced data generated by IoT devices.

- (e) Focused on particular topic: there are multiple surveys focused on particular aspects of fog, which are not included in the previous categories: energy and security in fog and IoT [26], fog-based smart homes [28], fog computing at an industrial scale [29], health monitoring with edge devices/IoT [37,39], smart cities [41,42], and smart agriculture [51]. The focus of our work is complementary to them. The rest of the works in the category Focused on particular topic also belong to other categories and have already been discussed.

2.2. Discussion of Overlapping Works

2.3. Scope of the Survey Discussion

3. Modeling the Fog

3.1. Network

| Work | Application Type | Communication: Things-Fog | Communication: Intra-Fog | Communication: Fog-Cloud | Computation: Things | Computation: Fog | Computation: Cloud | Discrete Tasks/Continuous Workload | Comments |

|---|---|---|---|---|---|---|---|---|---|

| 1. Energy spent only by things—mobile devices | |||||||||

| Huang et al. [68] (2012) | Offloading | E and D | N/A | D | E and D | N/A | D | Tasks (e, f) | Single MD, no computing nodes in the fog tier. |

| Sardellitti et al. [69] (2015) | Offloading | E and D | N/A | N/A | N/A (*) | D | N/A | Tasks (a) | Inter-FN interference; local execution is mentioned multiple times yet no equations nor costs are provided. |

| Muñoz et al. [70] (2015) | Offloading | E and D | N/A | N/A | E and D | D | N/A | Tasks (c) | Single MD, separate modeling of Things-Fog UL and DL, single FN. |

| Mao et al. [71] (2016) | Offloading | E and D | N/A | N/A | E and D | – | N/A | Tasks (a) | Single FN, single MD, DVFS for MD. |

| Dinh et al. [72] (2017) | Offloading | E and D | N/A | D | E and D | D | D | Tasks (a) | Single MD; DVFS for MD; cloud is modeled not in conjunction with FNs, but in a separate scenario. |

| You et al. [73] (2017) | Offloading | E and D | N/A | N/A | E and D | D | N/A | Tasks (c) | Single FN. |

| Liu et al. [74] (2018) | Offloading | E and D | – | D | E and D | D | D | Tasks and Workload (c) | Social ties between MDs, single FN with multiple servers. |

| Feng et al. [75] (2018) | Offloading | E and D | N/A | N/A | E | – | N/A | Tasks (c) | Single FN. |

| Cui et al. [76] (2019) | Offloading | E and D | D | N/A | E and D | D | N/A | Tasks and Workload (a) | Single FN, multiple small cell BSs acting as relay nodes. |

| Kryszkiewicz et al. [77] (2019) | Offloading | E | N/A | N/A | E | – | N/A | Workload (a) | Single MD, single FN. |

| He et al. [78] (2020) | Offloading | E and D | N/A | N/A | E and D | D | N/A | Tasks (a) | |

| Shahidinejad and Ghobaei-Arani [79] (2020) | Offloading | E and D | D | D | E and D | D | D | Tasks (a) | Resource provisioning, edge gateways acting as relay nodes. |

| Nath and Wu [80] (2020) | Offloading and task caching | E and D | – | – | E and D | D | N/A | Tasks (a) | One or more FNs, FNs can cache tasks, FNs can fetch tasks from other FNs or cloud, fetching incurs costs but neither energy nor delay. |

| Bai and Qian [81] (2021) | Offloading | E and D | N/A | D | E and D | D | – | Tasks (a) | |

| Vu et al. [82] (2021) | Offloading | E and D | N/A | E and D | E and D | D | D | Tasks (a) | Includes direct transmission things-cloud. |

| Bian et al. [83,84] (2022) | Data aggr.—distributed training | E and D (*) | N/A | N/A | E and D (*) | N/A | N/A | – | Delay and energy spent are random in time, their models are not given; a single FN coordinating distributed learning; [84] is the longer version. |

| Yin et al. [85] (2024) | Offloading | E and D | N/A | N/A | E and D | D | N/A | Tasks (a) | Single FN, single MD, DVFS for MD. |

| 2. Energy spent only by fog nodes | |||||||||

| Ouesis et al. [86] (2015) | Offloading | – | E and D | N/A | N/A | D | N/A | Tasks (a) | |

| Xu et al. [87] (2017) | Offloading | D | – | E and D | N/A | E and D | D | Workload (c) | Transmission to cloud and processing in cloud are modeled jointly; single FN with multiple servers; resource provisioning. |

| Chen et al. [88] (2018) | Offloading | E and D | D | N/A | N/A | E and D | N/A | Tasks (a) | Things–fog transmission costs spent by the FN. |

| Murtaza et al. [89] (2020) | Offloading | D | N/A | D | N/A | E and D | D | Tasks (a) | Service provisioning. |

| Gao et al. [90] (2020) | Offloading | N/A | E | – | N/A | E and D | – | Workload (c) | 2 tiers of FNs, DVFS for FNs. |

| Vakilian et al. [91] (2020) | Offloading | N/A | D | D | N/A | E and D | D | Workload (c) | |

| Vakilian et al. [92] (2021) | Offloading | N/A | D | D | N/A | E and D | D | Workload (c) | |

| Vakilian et al. [93] (2021) | Offloading | N/A | D | – (*) | N/A | E and D | – | Workload (c) | Transmission to a cloud is mentioned throughout the model, but the mathematical description is missing. |

| Abdel-Basset et al. [94] (2021) | Offloading | N/A | – | N/A | N/A | E and D | N/A | Tasks (a) | Multiple VMs, each VM can be thought of as a separate FN. |

| Sun and Chen [95] (2023) | Offloading & Service caching | D | N/A | – (*) | – (*) | E and D | N/A | Tasks (a) | Includes non-energy costs paid by FNs. Local processing and transmission to cloud are mentioned without description. |

| 3. Energy spent only by the cloud | |||||||||

| Do et al. [96] (2015) | Streaming | N/A | N/A | – | N/A | N/A | E | Workload (c) | Energy spent for computing by cloud refers to video processing, energy expressed in terms of carbon footprint. |

| 4. Energy spent by nodes in multiple tiers of the network | |||||||||

| Deng et al. [97] (2016) | Offloading | – | – | D | N/A | E and D | E and D | Tasks and Workload (a, b) | Multiple clouds, DVFS for FNs and clouds. |

| Sarkar and Misra [98] (2016) | Offloading | E and D | – | E and D | N/A | E and D | E and D | Tasks and Workload (a) | |

| Zhang et al. [99] (2017) | Offloading | N/A | E and D | E and D | N/A | E and D | E and D | Workload (c) | Incomplete/ambiguous model descriptions due to magazine style. |

| Sarkar et al. [100] (2018) | Offloading & data aggr. | E and D | – | E and D | N/A | E and D | E and D | Tasks and Workload (a) | Additional energy cost due to infinite processing in cloud. Multiple VMs per FN, intra-FN, and inter-FN resource management. |

| Wang et al. [101] (2019) | Offloading | E and D | N/A | N/A | E and D | E and D | N/A | Tasks (a) | Includes transmission between MDs. |

| Sun et al. [102] (2019) | Content caching | E and D | N/A | E | N/A | N/A | E | – | Energy spent for computing by cloud refers to signal processing. |

| Kopras et al. [103] (2019) | Offloading | E and D | – | E and D | N/A | E and D | E and D | Tasks and Workload (a) | |

| Djemai et al. [104] (2019) | Offloading | E and D | E and D | E and D | E and D | E and D | E and D | Tasks (e, f) | |

| Abbasi et al. [105] (2020) | Offloading | – | – | D | N/A | E and D | E and D | Tasks and Workload (a, b) | Model taken directly from [97]. |

| Roy et al. [106] (2020) | Offloading | E and D | – | – | N/A | E and D | E and D | Tasks (b) | 2 tiers of FNs: dew and edge; each FN and cloud has multiple VMs; no modeling of intra-fog and fog–cloud transmission despite being shown as part of the system; includes failure and repair times of nodes. |

| Wang and Chen [107] (2020) | Offloading | E and D | N/A | N/A | E and D | E and D | N/A | Tasks (a) | Single FN, DVFS for MDs and for an FN. |

| Kopras et al. [108] (2020) | Offloading | N/A | E and D | E and D | N/A | E and D | E and D | Tasks (d) | |

| Khumalo et al. [109] (2020) | Offloading | E and D | N/A | D | N/A | E and D | D | Tasks (a) | |

| Gazori et al. [110] (2020) | Offloading | D | D | D | N/A | E and D | E and D | Tasks (a) | 2 tiers of FNs, multiple VMs per FN and cloud. |

| Zhang et al. [111] (2020) | Offloading | E and D | N/A | N/A | E and D | E and D | N/A | Workload (c) | Single FN, multiple RRHs. |

| Alharbi and Aldossary [112] (2021) | Offloading | E | E | E | N/A | E | E | Workload (a) | Two tiers of FNs. |

| Ghanavati et al. [113] (2022) | Offloading | E and D | – | N/A (*) | N/A | E and D | N/A (*) | Tasks (b) | Transmission between the MDs and broker (gateway or AP); broker–FNs transmission has no costs; transmission to a cloud is mentioned without description. |

| Kopras et al. [114] (2022) | Offloading | D | E and D | E and D | N/A | E and D | E and D | Tasks (a) | DVFS for FNs. |

| Kopras et al. [115] (2023) | Offloading | E and D | E and D | E and D | N/A | E and D | E and D | Tasks (a) | DVFS for FNs. |

| Jiang et al. [116] (2023) | Offloading | E and D | N/A | – | E and D | E and D | – | Tasks (a) | UAVs act like FNs, transmission through IRSs, additional flying-related energy costs for UAVs, DVFS for MDs and FNs, cloud coordinates the network. |

| Daghayeghi and Nickray [117] (2024) | Offloading | E and D | E and D | E and D | N/A | E and D | E and D | Tasks (a) | Scheduling nodes between MDs and FNs, multiple clouds. |

- Only those in the things tier,

- Only in fog,

- Only in the cloud, as well as

- Devices in multiple tiers.

3.2. Application Type

3.2.1. Computation Offloading

3.2.2. Data Processing and Aggregation

3.2.3. Content Distribution

3.3. Tasks and Traffic

3.3.1. Discrete Computational Tasks

3.3.2. Continuous Computational Workload

3.3.3. Other Types of Tasks

3.4. Model of Energy Spent on Communication

3.4.1. Wireless Transmission

- High-level power consumption model,

- Measurement-based estimation,

- Transmitter and receiver components power estimation.

3.4.2. Wired Transmission

- Network nodes cannot be switched off or completely deactivated for connectivity reasons unless they are MDs and the end user decides so. However, their link-related parts (wired transmission) can be adapted or even deactivated if the device has a modular structure. This refers to Adaptive Link Rate (ALR) in Ethernet networks [148] and data rate adaptation in EONs [149]. The modules need to be related to links (e.g., Network Interface Cards (NICs) in a router) in order to be dynamically switched between active and sleep states. Attempts are made to make the idle (load-independent) power as small as possible and to change the profile of power dependency on load from a linear one to a cubic one [150]. Dynamic Voltage and Frequency Scaling (DVFS) is the technology behind these attempts.

- Network links can be completely deactivated if there are alternative paths between the pair of nodes located at their ends. This provides higher power savings than link adaptation but comes with higher costs in terms of the calculation of alternative paths, traffic rerouting, and the time needed to activate and deactivate devices installed along the links (including NICs). Moreover, the reconfiguration process may lead to jitter and packets arriving out of order. Therefore, the reconfiguration has to be planned in advance with rather conservative traffic predictions and fair margins on link utilization [151].
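The two energy-saving options listed above can be illustrated with a minimal Python sketch. It is not taken from any of the surveyed works: the function names, the parameters `p_idle` and `p_max`, and the load-to-power formula are assumptions chosen only for illustration. The first function contrasts a linear and a cubic power-versus-load profile of a link module, and the second checks the precondition for deactivating a link, namely that the network stays connected through alternative paths.

```python
from collections import deque


def port_power(load, p_idle, p_max, exponent):
    """Power drawn by a link module at a normalized load in [0, 1].

    Assumed illustrative model: idle power plus a load-dependent part.
    exponent=1 gives a linear profile, exponent=3 a cubic one.
    """
    if not 0.0 <= load <= 1.0:
        raise ValueError("load must be in [0, 1]")
    return p_idle + (p_max - p_idle) * load ** exponent


def still_connected(nodes, links, removed):
    """Return True if all nodes remain reachable after removing one link.

    Links are undirected pairs; `removed` is the link considered for sleep.
    """
    node_set = set(nodes)
    adjacency = {n: set() for n in node_set}
    for a, b in links:
        if {a, b} != set(removed):
            adjacency[a].add(b)
            adjacency[b].add(a)
    # Breadth-first search from an arbitrary node.
    start = next(iter(node_set))
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for neighbour in adjacency[current]:
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return len(seen) == len(node_set)


if __name__ == "__main__":
    # Hypothetical values: 1 W idle, 5 W at full load, half-loaded link.
    print(port_power(0.5, 1.0, 5.0, 1))  # linear profile
    print(port_power(0.5, 1.0, 5.0, 3))  # cubic profile
    ring = [("A", "B"), ("B", "C"), ("C", "D"), ("D", "A")]
    print(still_connected(["A", "B", "C", "D"], ring, ("A", "B")))
```

With these hypothetical values, the half-loaded module draws 3.0 W under the linear profile and 1.5 W under the cubic one, which is why a cubic profile is attractive at partial load. The connectivity check covers only the first condition for link deactivation; rerouting cost, activation time, and utilization margins would have to be modeled separately.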

3.5. Model of Energy Spent on Computation

3.5.1. Function of Clock Frequency—Nonlinear Models

3.5.2. Function of the Number of Servers

3.6. Section Summary

4. Fog Scenarios and Parameterization

4.1. What Constitutes a Scenario?

4.2. Scenario Parameterization—Comparison of Surveyed Works

4.2.1. Classification Rules for Works in Table 4

4.2.2. Differences and Similarities in Chosen Scenarios

4.3. Section Summary

5. Optimization of Energy-Saving in the Fog

5.1. Optimization Problem Formulation

| SID. Work | Scenario | Objective Function | Constraints | Decision Variables | Optim. Methods |

|---|---|---|---|---|---|

| 1. Convex optimization | |||||

| C1. Do et al. [96] (2015) | One cloud streaming video to multiple FNs | Maximize the utility (amount of video streaming) minus cost (cloud energy—carbon footprint) | Computational capacity of cloud | Amount of streaming to each FN | Proximal algorithm, ADMM |

| C2. Ouesis et al. [86] (2015) | Multiple FNs receiving tasks from MDs, FNs processing tasks or sending to other FNs | Minimize energy spent on the transmission of tasks by the FNs | Max. delay, FN computing rates | FN transmission power, allocation of computational resources to tasks | Reformulation into a convex problem, Lagrange method |

| C3. Sardellitti et al. [69] (2015) | Single MD offloading tasks to a single FN through a BS | Minimize energy consumption spent by the MD on transmission | Max. delay, max. MD transmission power | MD transmit covariance matrix, FN computing resources used | Reformulation into a convex problem, water-filling algorithm |

| C4. Sardellitti et al. [69] (2015) | Multiple MDs offloading tasks to a single FN through multiple BSs, multiple MDs transmitting without offloading | Weighted sum of MD transmission energy consumption | Max. delay for offloading MDs, min. rate for non-offloading MDs, max. MD transmission power | MD transmit covariance matrices, allocation of FN computing resources to tasks | SCA |

| C5. Sardellitti et al. [69] (2015) | Multiple MDs offloading tasks to a single FN through multiple BSs, multiple MDs transmitting without offloading (decentralized for each BS) | As in C4 | As in C4 | As in C4 | SCA, separation of delay constraint in covariance matrices, dual decomposition |

| C6. Sardellitti et al. [69] (2015) | As in C5 | As in C4 | As in C4 | As in C4 | Decomposition using slack variables, SCA with or without second-order information |

| C7.1. Muñoz et al. [70] (2015) | One MD transmitting (UL) a task to a single FN | Minimize energy consumption spent on transmission by the MD | Min. transmission | MD transmission covariance matrix | Water-filling algorithm |

| C7.2 | One MD receiving (DL) offloaded task from the FN | Maximize DL transmission rate | Max. FN transmission power | FN transmission covariance matrix | As in C7.1 |

| C7.3 | One MD offloading a task to a single FN, includes C7.1 and C7.2 | Minimize total energy consumption spent by the MD | Max. delay, max. DL transmission power | Portions of tasks offloaded and processed locally, UL and DL transmission times | Problem reformulation in terms of portion of tasks offloaded and UL transmission rate, analytically finding opt. rate, finding opt. offloaded portion through gradient descent |

| C8. Muñoz et al. [70] (2015) | As in C7.3 | Minimize total energy consumption spent by the MD | Max. DL transmission power | Portions of tasks offloaded and processed locally, UL and DL transmission times | Mathematically proving that partial offloading can never be opt. and finding the threshold above which local processing is opt. |

| C9. Dinh et al. [72] (2017) | One MD offloading tasks to multiple FNs or processing them locally | Minimize the weighted sum of latency and MD energy consumption | One-task-one-node | Allocation of tasks | LP relaxation |

| C10. Dinh et al. [72] (2017) | As in C9 | As in C9 | As in C9 | As in C9 | SDR |

| C11. Dinh et al. [72] (2017) | As in C10 | As in C10 | As in C10 | Allocation of tasks, MD operating frequency | As in C10 |

| C12. You et al. [73] (2017) | Multiple MDs offloading tasks (also partially) to a single FN with infinite computing capacity (TDMA) or processing them locally | Minimize the sum of energy consumption of all MDs weighted by unspecified fairness | Max. delay, one task per time slot | Size of offloaded portions of tasks, time slot allocation | Lagrange method, bisection search, this problem is used as a subproblem for other optimizations from [73] |

| C13. You et al. [73] (2017) | Multiple MDs offloading tasks (also partially) to a single FN (TDMA) or processing them locally | As in C12 | As in C12 | As in C12 | Lagrange method, utilizing solution C12, 2D search for Lagrange multipliers (time slots, offloaded portions) |

| C14. You et al. [73] (2017) | Multiple MDs offloading tasks (also partially) to a single FN (OFDMA) or processing them locally | As in C13 | Max. delay | Size of offloaded portions of tasks, subchannel allocation | Relaxation-and-rounding |

| C15. Feng et al. [75] (2018) | Multiple MDs offloading tasks (also partially) to a single FN or processing them locally | Minimize energy consumption of an MD with the highest consumption | Min. transmit rate, assignment of an IoT device to one and only one subcarrier | Subcarrier allocation, size of offloaded portions of tasks | Lagrange method, relaxation, subgradient projection |

| C16. Chen et al. [88] (2018) | Multiple MDs offloading load to FNs, FNs sharing load between each other—decentralized decision-making | Minimize delay over time | Max. energy cost over time, max. energy cost per time slot, max. delay per time slot | Task allocation to nodes (from one FN to another) | Lyapunov optimization |

| C17. Vakilian and Fanian [91] (2020) | Multiple FNs receiving offloaded workloads and processing them or sending them to other FNs or cloud for processing | Minimize weighted and normalized sum of energy consumption of FNs and delay | Processing rate of FNs | Allocation of workload to nodes | SCS |

| C18. He et al. [78] (2020) | A single MD offloading tasks to multiple FNs with potential non-colluding adversaries sensing its presence | Minimize energy consumption of an MD over time | Max. delay, max. task drop rate, max. likelihood of detection by adversaries, one-task-one-node | Task allocation to nodes, task dropping, MD transmission power | Lyapunov optimization |

| C19. He et al. [78] (2020) | A single MD offloading tasks to multiple FNs with potential colluding adversaries sensing its presence | As in C18 | As in C18 | As in C18 | As in C18 |

| C20.1 Gao et al. [90] (2020) | Multiple FNs receiving offloaded workload and processing it or sending it (or portions of it) to higher-tier FNs or cloud | Minimize average total power consumption of all FNs | Stability of queues, max. size of queues | Allocation of workload to nodes, FN transmit power, FN operating frequency | Lyapunov optimization, decomposition into C20.2, C20.3, and C20.4, workload prediction |

| C20.2 | FNs processing workload | Minimize drift-plus-penalty for FN frequency decision | – | FN operating frequency | Polynomial (3rd degree) minimization |

| C20.3 | Lower-tier FN wirelessly transmitting workload to higher-tier FN | Minimize drift-plus-penalty for transmit power decision | – | FN transmit power | Water-filling algorithm |

| C20.4 | Lower-tier FN processing workload or sending it to higher-tier FN, higher-tier FN processing workload or sending it to cloud | Minimize drift-plus-penalty for offloading decision | – | Offloading decision | Polynomial (1st degree) minimization |

| C21. Vu et al. [82] (2021) | Multiple MDs offloading tasks to multiple FNs or a cloud, or processing them locally | Minimize the sum of energy consumption of all MDs | One-task-one-node, max. delay | Allocation of tasks to nodes, uplink, downlink, and computing rates of cloud and of FNs | Relaxation-and-rounding |

| C22. Vu et al. [82] (2021) | As in C21 | As in C21 | As in C21 | As in C21 | Relaxation, improved BB algorithm |

| C23.1 Vu et al. [82] (2021) | As in C21 | As in C21 | As in C21 | As in C21 | FFBD into the following problems |

| C23.2 | Multiple MDs offloading tasks to multiple FNs or a cloud, or processing them locally | Minimize the sum of energy consumption of all MDs | – | Allocation of tasks to nodes | Integer programming |

| C23.3 | Checking if a solution to C23.2 is feasible | – | One-task-one-node, max. delay | Uplink, downlink, and computing rates of cloud and of FNs | None (verification of C23.2) |

| C24. Alharbi and Aldossary [112] (2021) | Multiple MDs offloading tasks to two tiers of FNs (with lower tier nodes being called edge nodes) and cloud DCs | Minimize total power consumption | One-task-one-node, min. number of networking equipment such as routers, switches, terminals, and gateways | A total of 15 different variables | MILP |

| C25.1 Yin et al. [85] (2024) | Single EH MD offloading tasks to a single FN or processing them locally | Minimize the weighted sum of MD energy consumption and delay over time | One-task-one-node, battery level, stability of MD task queue | Task allocation to nodes, MD operating frequency, MD transmit power, harvested energy | Lyapunov optimization, decomposition into subproblems C25.2 and C25.3 |

| C25.2 | Single MD optimizing EH | Minimize the product of harvested energy and virtual battery level | Max. harvested energy | Harvested energy | Exhaustive search |

| C25.3 | Single MD offloading tasks to a single FN or processing them locally | As in C25.1 | As in C25.1 | Task allocation to nodes, MD operating frequency, MD transmit power | Lyapunov optimization |

| 2. Heuristics | |||||

| H1. Huang et al. [68] (2012) | Single MD offloading parts of tasks (directed graphs) to the cloud or processing them locally | Minimize energy consumption of the MD over time | Max. percentage of tasks exceeding delay constraint, stability of the system | Subtask allocation to nodes | Lyapunov optimization, 1-opt local search algorithm |

| H2. You et al. [73] (2017) | Multiple MDs offloading tasks (also partially) to a single FN (TDMA) or processing them locally | Minimize the sum of energy consumption of all MDs weighted by unspecified fairness | Max. delay, one task per time slot | Size of offloaded portions of tasks, time slot allocation | Lagrange method, utilizing solution C12, greedy time slot allocation, 1D search for offloading Lagrange multiplier |

| H3. You et al. [73] (2017) | Multiple MDs offloading tasks (also partially) to a single FN (OFDMA) or processing them locally | Minimize the sum of energy consumption of all MDs weighted by unspecified fairness | Max. delay | Size of offloaded portions of tasks, subchannel allocation | Sequentially performing the following: assigning one subchannel to each MD according to priority, determining the total subchannel number and offloaded data size for each MD, assigning specific subchannels to MDs according to priority, finding the final offloaded data size for each MD |

| H4. Kopras et al. [108] (2020) | Single MD offloading tasks (directed graphs) to multiple FNs or cloud | Minimize the sum of energy consumption of all devices | Scheduling in nodes and links (one task per time slot), max. delay | Allocation of tasks to nodes | Clustering of nodes, exhaustive search over clusters |

| H5. Alharbi and Aldossary [112] (2021) | As in C24 | As in C24 | As in C24 | As in C24 | Heuristic based on sequentially checking whether a task can be processed by an edge node, then FN, then cloud |

| 3. Metaheuristics | |||||

| MH1. Djemai et al. [104] (2019) | Multiple MDs offloading tasks (directed graphs) to multiple FNs or cloud nodes, or processing them locally | Minimize the sum of energy consumption of all devices plus penalties for delay violations | Min. memory, min. computing capability | Allocation of tasks to nodes | Discrete PSO |

| MH2. Cui et al. [76] (2019) | Multiple MDs offloading tasks to an FN through relay nodes or processing them locally | Minimize energy consumption of MDs and delay (multi-objective) | One-task-one-node, processing rate of MDs and FN | Task allocation (binary—local or to the FN) | Modified NSGAII with task allocation as chromosomes and MDs as genes |

| MH3. Wang and Chen [107] (2020) | Multiple MDs offloading tasks to a single FN or processing them locally | Minimize total delay | One-task-one-node, max. task delay, max. task energy consumption | Task allocation to nodes, computation capability (operating frequency) of MDs and an FN | HGSA |

| MH4. Abbasi et al. [105] (2020) | Offloaded workload being shared between multiple FNs and clouds | Minimize fitness (a function of energy consumption and delay—not clearly stated) | Max. computing capacity of FNs | Allocation of workload to nodes, FN-cloud traffic rates, cloud on/off state, cloud operating frequency, number of turned on machines at cloud | NSGAII with assignment of workload to nodes as genes |

| MH5. Roy et al. [106] (2020) | Multiple MDs offloading tasks consisting of subtasks to two tiers of FNs or a cloud | Maximize fitness (combination of availability, delay, and power consumption) | Constraints included in the objective function | Allocation of subtasks to nodes | Adaptive PSO, GA |

| MH6. Vakilian et al. [92] (2021) | Multiple FNs receiving offloaded workloads and processing them or sending them to other FNs or cloud for processing | Minimize the weighted sum of normalized energy consumption of FNs and normalized delay | Processing rate of FNs | Allocation of workload to nodes | ABC |

| MH7. Vakilian et al. [93] (2021) | As in MH6 | Minimize the weighted sum of energy consumption of FNs and delay adjusted by fairness (processing rate of a given FN divided by the total processing rate of all FNs) | As in MH6 | As in MH6 | Cuckoo algorithm |

| MH8. Abdel-Basset et al. [94] (2021) | Single FN allocating offloaded tasks to multiple VMs | Minimize the weighted sum of total energy consumption and the highest delay over VMs | One-task-one-node | Allocation of tasks to VMs | MPA |

| MH9. Abdel-Basset et al. [94] (2021) | As in MH8 | As in MH8 | As in MH8 | As in MH8 | Modified MPA |

| MH10. Abdel-Basset et al. [94] (2021) | As in MH8 | As in MH8 | As in MH8 | As in MH8 | Improved modified MPA |

| MH11. Ghanavati et al. [113] (2022) | Multiple MDs offloading sets of tasks to multiple FNs or a cloud | Minimize the weighted sum of energy spent by FNs and total delays for each set of tasks | One-task-one-node | Allocation of tasks to nodes | AMO |

| MH12. Daghayeghi et al. [117] (2024) | Multiple MDs offloading tasks to FNs or cloud DCs | Minimize total energy consumption and delay (multi-objective) | One-task-one-node, max. delay, computing capacity | Task allocation to nodes | SPEA-II modified with differential evolution |

| 4. Machine Learning | |||||

| ML1. Xu et al. [87] (2017) | Single FN with multiple servers processing offloaded workload or sending it to a cloud for processing | Expected cost (delay, battery depreciation, backup power usage) over time with discount factor giving less weight to cost later in the future | Not clearly defined | Number of active servers in an FN, fraction of workload offloaded to the cloud | Utilizing RL to solve PDS-based Bellman equations |

| ML2. Wang et al. [101] (2019) | Multiple MDs offloading tasks to other MDs or an FN, or processing them locally | Minimize the weighted sum of energy consumption and delay, and exceeded delay penalties | MD battery level | Task allocation (wait, process locally, offload to an FN, offload to other MDs) | Deep RL scheduling |

| ML3. Wang et al. [101] (2019) | As in ML2 | As in ML2 | As in ML2 | As in ML2 | DDS, deep Q-learning |

| ML4. Nath and Wu [80] (2020) | Multiple MDs offloading tasks to one FN or processing them locally, FN caching previous tasks, FN fetching (downloading to cache) tasks from the cloud | Minimize the weighted sum of energy consumption, delay, and fetching cost over time | One-task-one-node, max. cached data size, max. delay | Allocation of tasks to nodes, caching decisions, fetching from cloud decisions, MD operating frequency, MD transmission power | Deep RL, DDPG |

| ML5. Nath and Wu [80] (2020) | Multiple MDs offloading tasks to one of multiple FNs (each MD can only offload to one FN) or processing them locally, FNs caching previous tasks, FNs fetching tasks from the cloud or other FNs | As in ML4 | As in ML4 | Allocation of tasks to nodes, caching decisions, fetching from cloud decisions, fetching from FNs decisions, MD operating frequency, MD transmission power | As in ML4 |

| ML6. Bai and Qian [81] (2021) | Multiple MDs offloading tasks to multiple FNs or a cloud | Minimize the weighted sum of energy spent by MDs and delay | One-task-one-node | Allocation of tasks to nodes, allocation of channel resources to tasks, allocation of FN computing resources to tasks | A2C algorithm |

| 5. Game Theory | |||||

| G1. Chen et al. [88] (2018) | Multiple MDs offloading loads to FNs, FNs sharing load between each other—decentralized decision-making | Minimize delay over time | Max. energy cost over time, max. energy cost per time slot, max. delay per time slot | Task allocation to nodes (from one FN to another) | Best-response algorithm |

| 6. Mixed Approach | |||||

| MA1.1 Deng et al. [97] (2016) | Offloaded workload being shared between multiple FNs and clouds | Minimize the sum of energy consumption of FNs and clouds | Max. delay, max. computing capacity of FNs, max. FN-cloud traffic rate | Allocation of workload to nodes, FN-cloud traffic rates, cloud on/off state, cloud operating frequency, number of turned on machines at cloud | Approximation—decomposition into MA1.2, MA1.3, and MA1.4 |

| MA1.2 | Workload being shared between multiple FNs | Minimize the weighted sum of delay and energy consumption of FNs | Max. computing capacity of FNs | Allocation of workload to nodes | Convex optimization—interior-point method |

| MA1.3 | Workload being shared between multiple clouds | Minimize the sum of energy consumption of clouds | Max. delay, max. computing capacity of clouds | Allocation of workload to nodes, FN-cloud traffic rates, cloud on/off state, cloud operating frequency, number of turned on machines at cloud | Non-convex optimization - generalized Benders decomposition |

| MA1.4 | Workload being transmitted between multiple FNs and clouds | Minimize total transmission delay | – | FN-cloud traffic rates | Hungarian algorithm |

| MA2.1 Mao et al. [71] (2016) | Single EH MD offloading tasks to a single FN or processing them locally | Minimize weighted sum of delay and dropped task penalty over time | One-task-one-node, MD battery level | Allocation of tasks to nodes, MD transmission power, MD operating frequency, MD harvested energy | Proving opt. frequency should be constant for a task, using Lyapunov optimization to transform MA2.1 to a per-slot MA2.2 deterministic problem |

| MA2.2 | As in MA2.1 | Minimize weighted sum of virtual energy queue length, delay, and dropped task penalty over time | As in MA2.1 | As in MA2.1 | Finding opt. harvested energy with LP leading into MA2.3 |

| MA2.3 | As in MA2.2 | As in MA2.2 | As in MA2.2 | Allocation of tasks to nodes, MD transmission power, MD operating frequency | Finding opt. values for frequency and trans. power, separately calculating costs for local execution, offloading, and dropping the task and choosing the lowest one |

| MA3. Liu et al. [74] (2018) | Energy harvesting MDs offloading workload (or portions of it) to a single FN (consisting of multiple servers) or cloud, or processing it locally | For each MD, minimize execution cost (delay + dropped task penalty) increased by weighted execution costs of other MDs in its “social group” | Stability of queues, battery level of MDs | Allocation of workload to nodes | Game theory—partial penalization, convex optimization—formulation of KKT conditions, convex optimization—semi-smooth Newton method with Armijo line search |

| MA4.1 Sun et al. [102] (2019) | Multiple MDs receiving content from a cloud through RRHs or D2D transmitters | Minimize the sum of energy consumption of all devices | – | On-off states of cloud processors, communication modes of MDs | Machine Learning—Deep RL |

| MA4.2 | As in MA4.1 | Minimize the sum of network-wide precoding vectors | Min. transmission rates, max. transmission power, computing resources of cloud | MDs data rates | Convex optimization |

| MA5.1 Zhang et al. [111] (2020) | Multiple MDs offloading load to one FN through RRHs or processing it locally | Minimize energy consumption divided by the size of processed tasks over time | Stability of queues | FN operating frequency (for computation and transmission), MD operating frequency, a fraction of offloaded load, FN transmission power, subchannel allocation | Lyapunov optimization, decomposition into MA5.2, MA5.3, MA5.4, MA5.5 |

| MA5.2 | Single MD offloading load to one FN through RRHs or processing it locally | Minimize Lyapunov drift-plus-penalty for the offloading decision | – | A fraction of offloaded load | Polynomial minimization |

| MA5.3 | Multiple MDs offloading load to one FN through RRHs | Minimize Lyapunov drift-plus-penalty for the subchannel and transmit power allocation | – | FN transmission power, subchannel allocation | Game theory—two-side swap matching game, non-convex optimization—geometric programming |

| MA5.4 | One FN processing offloaded load | Minimize Lyapunov drift-plus-penalty for FN computing resource allocation | – | FN operating frequency for computation and transmission | Convex optimization—decomposition |

| MA5.5 | One MD processing tasks locally | Minimize Lyapunov drift-plus-penalty for MD computing resource allocation | – | MD operating frequency for computation | As in MA5.4 |

| MA6. Kopras et al. [108] (2020) | Single edge node offloading tasks (directed graphs) to multiple FNs or cloud | Minimize the sum of energy consumption of all devices | Scheduling in nodes and links (one task per time slot), max. delay | Allocation of tasks to nodes | Heuristic—clustering of nodes, metaheuristic—discrete PSO |

| MA7.1 Bian et al. [83,84] (2022) | Single FN distributing parameters to and from multiple MDs in distributed training scheme | Minimize regret (a function of delay) | Wireless channel capacity, max. expected energy consumption of MDs, fairness—min. expected MD selection rates | Selection of MDs for training | Convex optimization—Lyapunov optimization, transformation into MA7.2 |

| MA7.2 | As in MA7.1 | Minimize weighted sum of virtual queues (for energy consumption and fairness) and UCB term (estimated mean regret plus exploration cost) | Max. number of MDs the FN can communicate with in each round | Selection of MDs for training | UCB-based bandit algorithm, transformation into MA7.3 |

| MA7.3 | As in MA7.1 | Minimize weighted sum of virtual queues | Selection of MD with the lowest UCB term, max. number of MDs the FN can communicate with during each round | Selection of MD for training | Heuristic—greedy approach |

| MA8.1 Kopras et al. [114] (2022) | Multiple FNs receiving tasks offloaded from multiple MDs and processing them or sending them to other FNs or cloud for processing | Minimize the sum of energy consumption of all devices | One-task-one-node, max. delay | Allocation of tasks to nodes, FN operating frequency | Decomposition into MA8.2 and MA8.3 |

| MA8.2 | An FN processing offloaded task | Minimize the energy consumption of the FN | Max. delay | FN operating frequency | Convex optimization—SCA, Lagrange method |

| MA8.3 | Multiple FNs processing offloaded tasks or sending them to other FNs or cloud for processing | Minimize the sum of energy consumption of all devices | One-task-one-node | Allocation of tasks to nodes | Hungarian algorithm |

| MA9.1. Kopras et al. [114] (2022) | Multiple FNs processing offloaded tasks or sending them to other FNs or cloud for processing | Minimize the sum of energy consumption of all devices | One-task-one-node, max. delay | Allocation of tasks to nodes, FN operating frequency | Decomposition into 2 subproblems MA9.2 and MA9.3 |

| MA9.2 | An FN processing offloaded task | Minimize the energy consumption of the FN | Max. delay | FN operating frequency | Convex optimization—SCA, Lagrange method |

| MA9.3 | Multiple FNs processing offloaded tasks or sending them to other FNs or cloud for processing | Minimize the sum of energy consumption of all devices | One-task-one-node | Allocation of tasks to nodes | Heuristic—greedy algorithm |

| MA10.1 Kopras et al. [115] (2023) | Multiple MDs offloading tasks to multiple FNs or a cloud | Minimize the sum of energy consumption of all devices | One-task-one-node, max. delay | Allocation of MD-FN transmission, allocation of tasks to nodes, FN operating frequency | Decomposition into 3 subproblems MA10.2, MA10.3, and MA10.4 |

| MA10.2 | An FN processing offloaded task | Minimize the energy consumption of the FN | Max. delay | FN operating frequency | Rational (3rd degree) function minimization |

| MA10.3 | An MD offloading a task to one of multiple FNs | Minimize the sum of energy consumption of all devices | – | Allocation of MD-FN transmission for all possible computation allocations | Exhaustive search |

| MA10.4 | Multiple FNs processing offloaded tasks or sending them to other FNs or cloud for processing | As in MA10.3 | One-task-one-node | Allocation of tasks to nodes | Hungarian algorithm |

| MA11.1 Kopras et al. [115] (2023) | Multiple MDs offloading tasks to multiple FNs or a cloud | As in MA10.4 | One-task-one-node, max. delay | Allocation of MD-FN transmission, allocation of tasks to nodes, FN operating frequency | Decomposition into 3 subproblems MA11.2, MA11.3, and MA11.4 |

| MA11.2 | An FN processing offloaded task | Minimize the energy consumption of the FN | Max. delay | FN operating frequency | Rational (3rd degree) function minimization |

| MA11.3 | An MD offloading a task to one of multiple FNs | Minimize the sum of energy consumption of all devices | – | Allocation of MD-FN transmission | Heuristic—always transmitting with the lowest MD-FN cost |

| MA11.4 | Multiple FNs processing offloaded tasks or sending them to other FNs or cloud for processing | Minimize the sum of energy consumption of all devices | One-task-one-node | Allocation of tasks to nodes | Hungarian algorithm |

| MA12.1 Sun and Chen [95] (2023) | Multiple MDs offloading tasks to FNs (each MD connected to a single FN) or processing them locally, FNs caching services | Maximize utility (price paid by MDs minus costs including energy) for the network provider | One-task-one-node, max. FN power consumption, max. FN storage | MD transmission power, FN operating frequency, offloading cost (paid by an MD to an FN), cache location | Reducing incentive constraints, decomposition into MA12.2 and MA12.3 |

| MA12.2 | FNs processing offloaded tasks | Maximize utility | Max. FN power consumption | FN operating frequency | Convex optimization |

| MA12.3 | Multiple MDs offloading tasks to FNs (each MD connected to a single FN) or processing them locally, FNs caching services | As in MA12.2 | Max. FN storage | MD transmission power, cache location | Exhaustive generation of all possible cache locations, heuristic—greedy algorithm to find opt. cache locations, convex optimization—block-coordinate descent to find MD transmission power |

| MA13. Jiang et al. [116] (2023) | Multiple MDs offloading tasks to one or more UAVs acting like FNs | Minimize the weighted sum of energy spent by MDs and UAVs | One-task-one-node, max. computing capacity of MDs and UAVs | Locations of UAVs, task allocation to nodes, computing allocation to tasks, transmission matrix | Quantitative passive beamforming, machine learning—A2C algorithm with multi-head agent, multi-task learning; metaheuristic—LWS refinement |
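Several rows of the table above (e.g., C25.1, H1, MA2.1, and MA5.1) list Lyapunov optimization as part of the solution. The sketch below illustrates only the generic per-slot drift-plus-penalty rule that such methods build on; it is not the formulation of any surveyed work. The action set, the energy and service values, and the weight `v` are hypothetical and chosen purely for illustration.

```python
def drift_plus_penalty_action(queue, actions, v):
    """Return the action minimizing v * energy - queue * service for one slot.

    `actions` maps a name to a (energy, service) pair; both values are
    hypothetical (e.g., joules and megabits per slot).
    """
    return min(actions, key=lambda name: v * actions[name][0] - queue * actions[name][1])


def simulate(arrivals, actions, v):
    """Run the rule over a sequence of per-slot arrivals.

    Returns the total energy spent and a trace of (action, queue) pairs.
    """
    queue, total_energy, trace = 0.0, 0.0, []
    for arrived in arrivals:
        name = drift_plus_penalty_action(queue, actions, v)
        energy, service = actions[name]
        # Standard queue update: serve first, then admit new arrivals.
        queue = max(queue - service, 0.0) + arrived
        total_energy += energy
        trace.append((name, queue))
    return total_energy, trace


# Hypothetical action set: (energy per slot, data served per slot).
ACTIONS = {"idle": (0.0, 0.0), "local": (1.0, 2.0), "offload": (3.0, 5.0)}

if __name__ == "__main__":
    energy, trace = simulate([1.0, 1.0, 1.0], ACTIONS, v=1.0)
    print(energy, trace)
```

A larger `v` puts more weight on energy and lets the queue grow longer before a costly action is taken, which is the energy-delay trade-off that the Lyapunov-based works in the table exploit.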

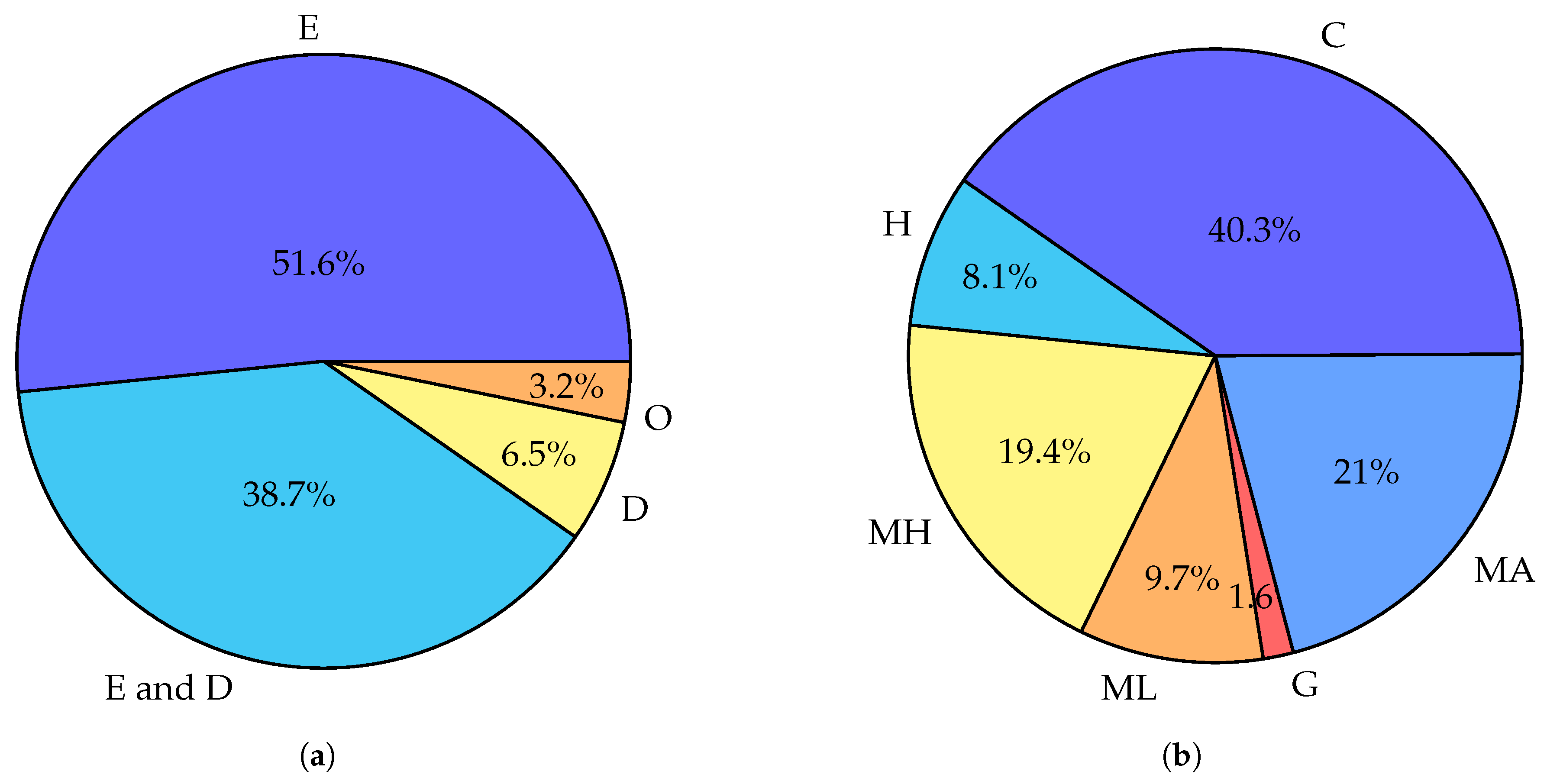

5.2. Optimization Method Classification

5.2.1. Optimization Method Attributes

5.2.2. Optimization Method Families

5.3. Comparison of Works on Fog Optimization

5.4. Summary of Optimization Methods and Results

5.4.1. Energy as a Sole Objective

5.4.2. Energy as One of a Few Objectives

5.4.3. Energy Only as a Constraint

5.5. Section Summary

- Use the well-tested convex optimization methods if the problem you are examining can be solved with them.

- Otherwise, test the performance of the existing metaheuristics or Machine Learning (ML) algorithms on your problem. Adjusting the method to better suit your particular problem is advisable.

- As shown in Table 5, it can be beneficial to split the problem into smaller subproblems where each subproblem can be solved with a different method.
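The last recommendation can be illustrated with a minimal sketch of a two-stage decomposition, loosely in the spirit of the rows that separate the choice of FN operating frequency from the allocation of tasks to nodes. Everything in it is an assumption made for illustration: the energy model `kappa * cycles * freq ** 2`, the delay model `cycles / freq`, the parameter values, and the one-to-one matching of tasks to nodes. The allocation stage uses an exhaustive search over permutations as a simple stand-in; for larger instances, a polynomial-time assignment method such as the Hungarian algorithm would be the usual choice.

```python
from itertools import permutations
from math import inf


def min_energy_frequency(cycles, max_delay, f_min, f_max):
    """Subproblem 1: lowest frequency meeting the delay limit, or None if infeasible.

    Under the assumed model, energy grows with frequency, so the lowest
    feasible frequency is also the energy-optimal one.
    """
    freq = max(f_min, cycles / max_delay)
    return freq if freq <= f_max else None


def processing_energy(cycles, freq, kappa):
    """Assumed dynamic energy model: kappa * cycles * freq^2 (joules)."""
    return kappa * cycles * freq ** 2


def allocate(tasks, nodes):
    """Subproblem 2: one-to-one allocation of tasks to nodes with minimum total energy.

    tasks: list of (cycles, max_delay); nodes: list of (f_min, f_max, kappa).
    Returns (assignment, total_energy); assignment[i] is the node of task i,
    or None if no feasible allocation exists.
    """
    cost = []
    for cycles, max_delay in tasks:
        row = []
        for f_min, f_max, kappa in nodes:
            freq = min_energy_frequency(cycles, max_delay, f_min, f_max)
            row.append(inf if freq is None else processing_energy(cycles, freq, kappa))
        cost.append(row)

    best_total, best_assignment = inf, None
    # Exhaustive search: only practical for small instances.
    for perm in permutations(range(len(nodes)), len(tasks)):
        total = sum(cost[i][j] for i, j in enumerate(perm))
        if total < best_total:
            best_total, best_assignment = total, perm
    return best_assignment, best_total


if __name__ == "__main__":
    tasks = [(2e9, 1.0), (1e9, 1.0)]                       # (CPU cycles, max. delay in s)
    nodes = [(1e9, 3e9, 1e-27), (0.5e9, 1.5e9, 2e-27)]     # (f_min, f_max in Hz, kappa)
    print(allocate(tasks, nodes))
```

Because the frequency subproblem has a closed-form solution under the assumed model, it can be solved once per task-node pair, leaving a purely combinatorial allocation problem that can be handed to a different method.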

6. Discussion and Trends

6.1. Fog Technologies

6.2. Model-Based ML for Approaching Energy-Optimum

6.3. Security and Privacy

- Resource management security: Fog computing relies on distributed resources that are geographically dispersed, including devices and DCs. Ensuring the security of these resources is essential for maintaining overall system efficiency. By implementing robust security measures such as access control, encryption, and authentication, the energy efficiency of fog infrastructure can be optimized without compromising security.

- Task classification security: Task execution in fog architectures is based on task classification (e.g., with respect to required complexity, latency, reliability, or bandwidth). Examples of sensitive tasks include mission-critical tasks that require ultra-low latency and ultra-high reliability. The classification criteria may change, but in a multi-class classification with defined sensitivity levels (from non-critical tasks to highly critical ones), any misclassification may cause a fatal error in task execution, especially for tasks that lie close to the boundary between classes. Moreover, it may translate into energy overspending if the task is served with inappropriate resources.

- Threat mitigation: Compared with conventional cloud systems, energy-efficient fog devices may have less computational power and fewer resources. Consequently, it is critical to recognize potential security risks and weaknesses that may compromise overall energy efficiency. By quickly identifying and addressing threats, energy resources can be preserved and used effectively rather than being consumed by malicious activity.

- Data privacy and integrity: Fog computing involves processing and storing data at the network edge, closer to the data source. This raises issues with data integrity and privacy. It is imperative to guarantee that the information conveyed, handled, and retained within the fog infrastructure is shielded from unwanted access, manipulation, or corruption. With strong security measures in place, energy-efficient fog systems can be trusted with critical data, which increases their efficiency and dependability.

- Security-energy costs: Security procedures, threat detection, and mitigation algorithms come at an energy cost. At the same time, these algorithms may prevent the devices and network from energy overspending (e.g., by unnecessary retransmissions or network access attempts). Thus, it is necessary to thoroughly evaluate and balance the energy cost and benefit of security measures.
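The balance described in the last item can be made concrete with a deliberately simple expected-value sketch. It is an illustration under stated assumptions, not a model taken from the surveyed works: the function names, the single-attack-type model, and all parameter values are hypothetical.

```python
def expected_net_saving(security_cost_j, attack_probability, detection_rate, attack_waste_j):
    """Expected energy saved by a security measure minus its own cost (joules).

    Assumes a single attack type that wastes `attack_waste_j` joules
    (e.g., through unnecessary retransmissions) unless it is detected.
    """
    avoided = attack_probability * detection_rate * attack_waste_j
    return avoided - security_cost_j


def break_even_attack_probability(security_cost_j, detection_rate, attack_waste_j):
    """Attack probability above which the measure pays off in energy terms."""
    return security_cost_j / (detection_rate * attack_waste_j)


if __name__ == "__main__":
    # Hypothetical values: 2 J overhead, 80% detection, 50 J wasted per attack.
    print(expected_net_saving(2.0, 0.1, 0.8, 50.0))
    print(break_even_attack_probability(2.0, 0.8, 50.0))
```

Energy is only one side of such an evaluation; a measure with a negative net energy saving may still be justified by the privacy and integrity requirements listed above.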

6.4. Fog Economics

6.5. Future Research Directions

- Fog orchestration and management: As fog networks continue to grow, the efficient management and orchestration of resources will become crucial. Future research should focus on developing advanced fog management frameworks to automate resource allocation, load balancing, and service provisioning, as well as on the energy cost to be paid for such management algorithms.

- AI at the edge: Fog computing combined with AI will enable intelligent decision-making at the edge of the network, reducing latency and improving real-time processing capabilities. However, the AI/ML algorithms themselves can be extremely computationally complex and thus can be highly energy-consuming. A trade-off between the energy consumption induced by these algorithms and the energy savings they offer has to be examined.

- Security of fog computing: Securing fog nodes and their communication will become essential. The implementation of robust security mechanisms, including encryption, authentication, anomaly and intrusion detection, continuous network monitoring, and methods counteracting cyberattacks will inevitably be energy-consuming. The cost of security (also in terms of energy consumption) needs to be assessed.

- Industry-specific fog applications: Different industries will leverage fog communication and computing for specific applications. This includes smart cities, autonomous vehicles, healthcare, industrial automation, and more. The tailored fog solutions designed to address the unique requirements of each industry will have a different impact on their energy consumption. Cost-benefit analysis for these specific application scenarios is another direction of research to be conducted.

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| A2C | Advantage actor–critic |

| ABC | Artificial Bee Colony |

| ADMM | Alternating Direction Method of Multipliers |

| AI | Artificial Intelligence |

| ALR | Adaptive Line Rate |

| AMO | Ant Mating Optimization |

| AP | Access Point |

| BB | Branch and Bound |

| BS | Base Station |

| CDN | Content Delivery Network |

| CMOS | Complementary Metal-Oxide Semiconductor |

| CS | Contract-based under Symmetric information |

| CPU | Central Processing Unit |

| D2D | Device-to-Device |

| DAG | Directed Acyclic Graph |

| DC | Data Center |

| DDS | Deep Dynamic Scheduling |

| DDPG | Deep Deterministic Policy Gradient |

| DL | DownLink |

| DVFS | Dynamic Voltage and Frequency Scaling |

| EH | Energy Harvesting |

| EON | Elastic Optical Network |

| FFBD | Feasibility Finding Benders Decomposition |

| FI | Fog Instance |

| FLOP | Floating Point Operation |

| FLOPS | Floating Point Operations per Second |

| FN | Fog Node |

| GA | Genetic Algorithm |

| GPU | Graphics Processing Unit |

| HGSA | Hybrid Genetic Simulated Annealing |

| ICT | Information and Communication Technology |

| IRS | Intelligent Reflective Surface |

| IoT | Internet of Things |

| IP | Internet Protocol |

| ISP | Internet Service Provider |

| KKT | Karush–Kuhn–Tucker |

| LNA | Low Noise Amplifier |

| LO | Local Oscillator |

| LP | Linear Programming |

| LTE | Long Term Evolution |

| LWS | Lone Wolf Search |

| MCC | Mobile Cloud Computing |

| MD | Mobile Device |

| MDP | Markov Decision Process |

| MEC | Mobile/Multi-Access Edge Computing |

| MILP | Mixed-Integer Linear Programming |

| MIMO | Multiple-Input and Multiple-Output |

| MINLP | Mixed-Integer Non-Linear Programming |

| ML | Machine Learning |

| MPA | Marine Predators Algorithm |

| N/A | Not Applicable |

| NEP | Nash Equilibrium Problem |

| NIC | Network Interface Card |

| NFV | Network Function Virtualization |

| NOMA | Non-Orthogonal Multiple Access |

| NSGAII | Non-dominated Sorting Genetic Algorithm II |

| NTV | Near-Threshold Voltage |

| OFDMA | Orthogonal Frequency-Division Multiple Access |

| PA | Power Amplifier |

| PDS | Post-Decision State |

| PGN | Portable Game Notation |

| PON | Passive Optical Network |

| PSO | Particle Swarm Optimization |

| QoS | Quality of Service |

| RF | Radio Frequency |

| RL | Reinforcement Learning |

| ROI | Return on Investment |

| RRH | Remote Radio Head |

| SCA | Successive Convex Approximation |

| SCS | Splitting Conic Solver |

| SDN | Software Defined Network |

| SDR | SemiDefinite Relaxation |

| SID | Solution IDentifier |

| SoC | System-on-Chip |

| SPEA-II | Strength Pareto Evolutionary Algorithm II |

| TDMA | Time Division Multiple Access |

| UAV | Unmanned Aerial Vehicle |

| UCB | Upper Confidence Bound |

| UL | UpLink |

| VC | Virtual Cluster |

| VM | Virtual Machine |

| WDM | Wavelength Division Multiplexing |

References

- Cisco. Cisco Annual Internet Report (2018–2023); Technical Report; Cisco: San Jose, CA, USA, 2020.

- Ericsson. Ericsson Mobility Report November 2023; Technical Report; Ericsson: Stockholm, Sweden, 2023.

- Dinh, H.T.; Lee, C.; Niyato, D.; Wang, P. A survey of mobile cloud computing: Architecture, applications, and approaches. Wirel. Commun. Mob. Comput. 2011, 13, 1587–1611.

- Khan, A.u.R.; Othman, M.; Madani, S.A.; Khan, S.U. A Survey of Mobile Cloud Computing Application Models. IEEE Commun. Surv. Tutor. 2014, 16, 393–413.

- Satyanarayanan, M.; Bahl, P.; Caceres, R.; Davies, N. The Case for VM-Based Cloudlets in Mobile Computing. IEEE Pervasive Comput. 2009, 8, 14–23.

- Jiao, L.; Friedman, R.; Fu, X.; Secci, S.; Smoreda, Z.; Tschofenig, H. Cloud-based computation offloading for mobile devices: State of the art, challenges and opportunities. In Proceedings of the 2013 Future Network Mobile Summit, Lisbon, Portugal, 3–5 July 2013.

- Bonomi, F.; Milito, R.; Zhu, J.; Addepalli, S. Fog Computing and Its Role in the Internet of Things. In Proceedings of the MCC’12 First Edition of the MCC Workshop on Mobile Cloud Computing, Helsinki, Finland, 17 August 2012; ACM: New York, NY, USA, 2012; pp. 13–16.

- Vaquero, L.M.; Rodero-Merino, L. Finding Your Way in the Fog: Towards a Comprehensive Definition of Fog Computing. SIGCOMM Comput. Commun. Rev. 2014, 44, 27–32.

- Nath, S.B.; Gupta, H.; Chakraborty, S.; Ghosh, S.K. A Survey of Fog Computing and Communication: Current Researches and Future Directions. arXiv 2018, arXiv:1804.04365.

- Jalali, F.; Khodadustan, S.; Gray, C.; Hinton, K.; Suits, F. Greening IoT with Fog: A Survey. In Proceedings of the 2017 IEEE International Conference on Edge Computing (EDGE), Honolulu, HI, USA, 25–30 June 2017; pp. 25–31.

- Montevecchi, F.; Stickler, T.; Hintemann, R.; Hinterholzer, S. Energy-Efficient Cloud Computing Technologies and Policies for an Eco-Friendly Cloud Market. Final Study Report; Technical Report; European Commission, Directorate-General for Communications Networks, Content and Technology: Brussels, Belgium, 2020.

- Freitag, C.; Berners-Lee, M.; Widdicks, K.; Knowles, B.; Blair, G.S.; Friday, A. The real climate and transformative impact of ICT: A critique of estimates, trends, and regulations. Patterns 2021, 2, 100340.

- Shirazi, S.N.; Gouglidis, A.; Farshad, A.; Hutchison, D. The Extended Cloud: Review and Analysis of Mobile Edge Computing and Fog From a Security and Resilience Perspective. IEEE J. Sel. Areas Commun. 2017, 35, 2586–2595.

- Ometov, A.; Molua, O.L.; Komarov, M.; Nurmi, J. A Survey of Security in Cloud, Edge, and Fog Computing. Sensors 2022, 22, 927.

- Yi, S.; Li, C.; Li, Q. A Survey of Fog Computing: Concepts, Applications and Issues. In Proceedings of the 2015 Workshop on Mobile Big Data (Mobidata ’15), Hangzhou, China, 22–25 June 2015; ACM: New York, NY, USA, 2015; pp. 37–42.

- Mao, Y.; You, C.; Zhang, J.; Huang, K.; Letaief, K.B. A survey on mobile edge computing: The communication perspective. IEEE Commun. Surv. Tutor. 2017, 19, 2322–2358.

- Taleb, T.; Samdanis, K.; Mada, B.; Flinck, H.; Dutta, S.; Sabella, D. On Multi-Access Edge Computing: A Survey of the Emerging 5G Network Edge Cloud Architecture and Orchestration. IEEE Commun. Surv. Tutor. 2017, 19, 1657–1681.

- Hu, P.; Dhelim, S.; Ning, H.; Qiu, T. Survey on fog computing: Architecture, key technologies, applications and open issues. J. Netw. Comput. Appl. 2017, 98, 27–42.

- Abbas, N.; Zhang, Y.; Taherkordi, A.; Skeie, T. Mobile Edge Computing: A Survey. IEEE Internet Things J. 2018, 5, 450–465.

- Mouradian, C.; Naboulsi, D.; Yangui, S.; Glitho, R.H.; Morrow, M.J.; Polakos, P.A. A Comprehensive Survey on Fog Computing: State-of-the-Art and Research Challenges. IEEE Commun. Surv. Tutor. 2018, 20, 416–464.

- Mahmud, R.; Kotagiri, R.; Buyya, R. Fog Computing: A Taxonomy, Survey and Future Directions. In Internet of Everything: Algorithms, Methodologies, Technologies and Perspectives; Springer: Singapore, 2018; pp. 103–130.

- Mukherjee, M.; Shu, L.; Wang, D. Survey of Fog Computing: Fundamental, Network Applications, and Research Challenges. IEEE Commun. Surv. Tutor. 2018, 20, 1826–1857.

- Svorobej, S.; Takako Endo, P.; Bendechache, M.; Filelis-Papadopoulos, C.; Giannoutakis, K.M.; Gravvanis, G.A.; Tzovaras, D.; Byrne, J.; Lynn, T. Simulating Fog and Edge Computing Scenarios: An Overview and Research Challenges. Future Internet 2019, 11, 55.

- Yousefpour, A.; Fung, C.; Nguyen, T.; Kadiyala, K.; Jalali, F.; Niakanlahiji, A.; Kong, J.; Jue, J.P. All one needs to know about fog computing and related edge computing paradigms: A complete survey. J. Syst. Archit. 2019, 98, 289–330.

- Moura, J.; Hutchison, D. Game Theory for Multi-Access Edge Computing: Survey, Use Cases, and Future Trends. IEEE Commun. Surv. Tutor. 2019, 21, 260–288.

- Hameed Mohsin, A.; Al Omary, A. Security and Power Management in IoT and Fog Computing: A Survey. In Proceedings of the 2020 International Conference on Data Analytics for Business and Industry: Way Towards a Sustainable Economy (ICDABI), Sakheer, Bahrain, 26–27 October 2020.

- Qu, Z.; Wang, Y.; Sun, L.; Peng, D.; Li, Z. Study QoS Optimization and Energy Saving Techniques in Cloud, Fog, Edge, and IoT. Complexity 2020, 2020, 8964165.

- Rahimi, M.; Songhorabadi, M.; Kashani, M.H. Fog-based smart homes: A systematic review. J. Netw. Comput. Appl. 2020, 153, 102531.

- Caiza, G.; Saeteros, M.; Oñate, W.; Garcia, M.V. Fog computing at industrial level, architecture, latency, energy, and security: A review. Heliyon 2020, 6, e03706.

- Bendechache, M.; Svorobej, S.; Takako Endo, P.; Lynn, T. Simulating Resource Management across the Cloud-to-Thing Continuum: A Survey and Future Directions. Future Internet 2020, 12, 95.

- Qureshi, M.S.; Qureshi, M.B.; Fayaz, M.; Mashwani, W.K.; Belhaouari, S.B.; Hassan, S.; Shah, A. A comparative analysis of resource allocation schemes for real-time services in high-performance computing systems. Int. J. Distrib. Sens. Netw. 2020, 16, 1550147720932750.

- Habibi, P.; Farhoudi, M.; Kazemian, S.; Khorsandi, S.; Leon-Garcia, A. Fog Computing: A Comprehensive Architectural Survey. IEEE Access 2020, 8, 69105–69133.

- Aslanpour, M.S.; Gill, S.S.; Toosi, A.N. Performance evaluation metrics for cloud, fog and edge computing: A review, taxonomy, benchmarks and standards for future research. Internet Things 2020, 12, 100273.

- Lin, H.; Zeadally, S.; Chen, Z.; Labiod, H.; Wang, L. A survey on computation offloading modeling for edge computing. J. Netw. Comput. Appl. 2020, 169, 102781.

- Asim, M.; Wang, Y.; Wang, K.; Huang, P.-Q. A Review on Computational Intelligence Techniques in Cloud and Edge Computing. IEEE Trans. Emerg. Top. Comput. Intell. 2020, 4, 742–763.

- Fakhfakh, F.; Kallel, S.; Cheikhrouhou, S. Formal Verification of Cloud and Fog Systems: A Review and Research Challenges. JUCS J. Univers. Comput. Sci. 2021, 27, 341–363. [Google Scholar] [CrossRef]

- QingQingChang; Ahmad, I.; Liao, X.; Nazir, S. Evaluation and Quality Assurance of Fog Computing-Based IoT for Health Monitoring System. Wirel. Commun. Mob. Comput. 2021, 2021, 5599907. [Google Scholar] [CrossRef]

- Alomari, A.; Subramaniam, S.K.; Samian, N.; Latip, R.; Zukarnain, Z. Resource Management in SDN-Based Cloud and SDN-Based Fog Computing: Taxonomy Study. Symmetry 2021, 13, 734. [Google Scholar] [CrossRef]

- Ben Dhaou, I.; Ebrahimi, M.; Ben Ammar, M.; Bouattour, G.; Kanoun, O. Edge Devices for Internet of Medical Things: Technologies, Techniques, and Implementation. Electronics 2021, 10, 2104. [Google Scholar] [CrossRef]

- Laroui, M.; Nour, B.; Moungla, H.; Cherif, M.A.; Afifi, H.; Guizani, M. Edge and fog computing for IoT: A survey on current research activities & future directions. Comput. Commun. 2021, 180, 210–231. [Google Scholar]

- Hajam, S.S.; Sofi, S.A. IoT-Fog architectures in smart city applications: A survey. China Commun. 2021, 18, 117–140. [Google Scholar] [CrossRef]

- Mohamed, N.; Al-Jaroodi, J.; Lazarova-Molnar, S.; Jawhar, I. Applications of Integrated IoT-Fog-Cloud Systems to Smart Cities: A Survey. Electronics 2021, 10, 2918. [Google Scholar] [CrossRef]

- Martinez, I.; Hafid, A.S.; Jarray, A. Design, Resource Management, and Evaluation of Fog Computing Systems: A Survey. IEEE Internet Things J. 2021, 8, 2494–2516. [Google Scholar] [CrossRef]

- Kaul, S.; Kumar, Y.; Ghosh, U.; Alnumay, W. Nature-inspired optimization algorithms for different computing systems: Novel perspective and systematic review. Multimed. Tools Appl. 2022, 81, 26779–26801. [Google Scholar] [CrossRef]

- Al-Araji, Z.J.; Ahmad, S.S.S.; Kausar, N.; Farhani, A.; Ozbilge, E.; Cagin, T. Fuzzy Theory in Fog Computing: Review, Taxonomy, and Open Issues. IEEE Access 2022, 10, 126931–126956. [Google Scholar] [CrossRef]

- Rahimikhanghah, A.; Tajkey, M.; Rezazadeh, B.; Rahmani, A.M. Resource scheduling methods in cloud and fog computing environments: A systematic literature review. Clust. Comput. 2022, 25, 911–945. [Google Scholar] [CrossRef]

- Tran-Dang, H.; Bhardwaj, S.; Rahim, T.; Musaddiq, A.; Kim, D.S. Reinforcement learning based resource management for fog computing environment: Literature review, challenges, and open issues. J. Commun. Netw. 2022, 24, 83–98. [Google Scholar] [CrossRef]

- Acheampong, A.; Zhang, Y.; Xu, X.; Kumah, D.A. A Review of the Current Task Offloading Algorithms, Strategies and Approach in Edge Computing Systems. Comput. Model. Eng. Sci. 2023, 134, 35–88. [Google Scholar] [CrossRef]

- Goudarzi, M.; Palaniswami, M.; Buyya, R. Scheduling IoT Applications in Edge and Fog Computing Environments: A Taxonomy and Future Directions. ACM Comput. Surv. 2022, 55, 1–41. [Google Scholar] [CrossRef]

- Ben Ammar, M.; Ben Dhaou, I.; El Houssaini, D.; Sahnoun, S.; Fakhfakh, A.; Kanoun, O. Requirements for Energy-Harvesting-Driven Edge Devices Using Task-Offloading Approaches. Electronics 2022, 11, 383. [Google Scholar] [CrossRef]

- Dhifaoui, S.; Houaidia, C.; Saidane, L.A. Cloud-Fog-Edge Computing in Smart Agriculture in the Era of Drones: A Systematic Survey. In Proceedings of the 2022 IEEE 11th IFIP International Conference on Performance Evaluation and Modeling in Wireless and Wired Networks (PEMWN), Rome, Italy, 8–10 November 2022. [Google Scholar] [CrossRef]

- Sadatdiynov, K.; Cui, L.; Zhang, L.; Huang, J.Z.; Salloum, S.; Mahmud, M.S. A review of optimization methods for computation offloading in edge computing networks. Digit. Commun. Netw. 2023, 9, 450–461. [Google Scholar] [CrossRef]

- Avan, A.; Azim, A.; Mahmoud, Q.H. A State-of-the-Art Review of Task Scheduling for Edge Computing: A Delay-Sensitive Application Perspective. Electronics 2023, 12, 2599. [Google Scholar] [CrossRef]

- Zhou, S.; Jadoon, W.; Khan, I.A. Computing Offloading Strategy in Mobile Edge Computing Environment: A Comparison between Adopted Frameworks, Challenges, and Future Directions. Electronics 2023, 12, 2452. [Google Scholar] [CrossRef]

- Patsias, V.; Amanatidis, P.; Karampatzakis, D.; Lagkas, T.; Michalakopoulou, K.; Nikitas, A. Task Allocation Methods and Optimization Techniques in Edge Computing: A Systematic Review of the Literature. Future Internet 2023, 15, 254. [Google Scholar] [CrossRef]

- Shareef, F.; Ijaz, H.; Shojafar, M.; Naeem, M.A. Multi-Class Imbalanced Data Handling with Concept Drift in Fog Computing: A Taxonomy, Review, and Future Directions. ACM Comput. Surv. 2024, just accepted. [Google Scholar] [CrossRef]

- Roman, R.; Lopez, J.; Mambo, M. Mobile edge computing, Fog et al.: A survey and analysis of security threats and challenges. Future Gener. Comput. Syst. 2018, 78, 680–698. [Google Scholar] [CrossRef]

- Tange, K.; De Donno, M.; Fafoutis, X.; Dragoni, N. A Systematic Survey of Industrial Internet of Things Security: Requirements and Fog Computing Opportunities. IEEE Commun. Surv. Tutor. 2020, 22, 2489–2520. [Google Scholar] [CrossRef]

- Butun, I.; Sari, A.; Österberg, P. Hardware Security of Fog end devices for the Internet of Things. Sensors 2020, 20, 5729. [Google Scholar] [CrossRef]

- Verma, R.; Chandra, S. A Systematic Survey on Fog steered IoT: Architecture, Prevalent Threats and Trust Models. Int. J. Wirel. Inf. Netw. 2021, 28, 116–133. [Google Scholar] [CrossRef]

- Elazhary, H. Internet of Things (IoT), mobile cloud, cloudlet, mobile IoT, IoT cloud, fog, mobile edge, and edge emerging computing paradigms: Disambiguation and research challenges. J. Netw. Comput. Appl. 2019, 128, 105–140. [Google Scholar] [CrossRef]

- Gilbert, G.M.; Naiman, S.; Kimaro, H.; Bagile, B. A Critical Review of Edge and Fog Computing for Smart Grid Applications. In Proceedings of the 15th International Conference on Social Implications of Computers in Developing Countries (ICT4D), Dar es Salaam, Tanzania, 1–3 May 2019; Volume AICT-551, pp. 763–775. [Google Scholar] [CrossRef]

- Kumar, N.M.; Chand, A.A.; Malvoni, M.; Prasad, K.A.; Mamun, K.A.; Islam, F.; Chopra, S.S. Distributed Energy Resources and the Application of AI, IoT, and Blockchain in Smart Grids. Energies 2020, 13, 5739. [Google Scholar] [CrossRef]

- Taneja, M.; Davy, A. Resource aware placement of IoT application modules in Fog-Cloud Computing Paradigm. In Proceedings of the 2017 IFIP/IEEE Symposium on Integrated Network and Service Management (IM), Lisbon, Portugal, 8–12 May 2017; pp. 1222–1228. [Google Scholar] [CrossRef]

- Hosseinioun, P.; Kheirabadi, M.; Kamel Tabbakh, S.R.; Ghaemi, R. A new energy-aware tasks scheduling approach in fog computing using hybrid meta-heuristic algorithm. J. Parallel Distrib. Comput. 2020, 143, 88–96. [Google Scholar] [CrossRef]

- Rehman, A.U.; Ahmad, Z.; Jehangiri, A.I.; Ala’Anzy, M.A.; Othman, M.; Umar, A.I.; Ahmad, J. Dynamic Energy Efficient Resource Allocation Strategy for Load Balancing in Fog Environment. IEEE Access 2020, 8, 199829–199839. [Google Scholar] [CrossRef]

- OpenFog Consortium. OpenFog Reference Architecture for Fog Computing; OpenFog Consortium: Fremont, CA, USA, 2017. [Google Scholar]

- Huang, D.; Wang, P.; Niyato, D. A Dynamic Offloading Algorithm for Mobile Computing. IEEE Trans. Wirel. Commun. 2012, 11, 1991–1995. [Google Scholar] [CrossRef]

- Sardellitti, S.; Scutari, G.; Barbarossa, S. Joint Optimization of Radio and Computational Resources for Multicell Mobile-Edge Computing. IEEE Trans. Signal Inf. Process. Over Netw. 2015, 1, 89–103. [Google Scholar] [CrossRef]

- Muñoz, O.; Pascual-Iserte, A.; Vidal, J. Optimization of Radio and Computational Resources for Energy Efficiency in Latency-Constrained Application Offloading. IEEE Trans. Veh. Technol. 2015, 64, 4738–4755. [Google Scholar] [CrossRef]

- Mao, Y.; Zhang, J.; Letaief, K.B. Dynamic Computation Offloading for Mobile-Edge Computing With Energy Harvesting Devices. IEEE J. Sel. Areas Commun. 2016, 34, 3590–3605. [Google Scholar] [CrossRef]

- Dinh, T.Q.; Tang, J.; La, Q.D.; Quek, T.Q.S. Offloading in Mobile Edge Computing: Task Allocation and Computational Frequency Scaling. IEEE Trans. Commun. 2017, 65, 3571–3584. [Google Scholar] [CrossRef]

- You, C.; Huang, K.; Chae, H.; Kim, B.H. Energy-Efficient Resource Allocation for Mobile-Edge Computation Offloading. IEEE Trans. Wirel. Commun. 2017, 16, 1397–1411. [Google Scholar] [CrossRef]

- Liu, L.; Chang, Z.; Guo, X. Socially Aware Dynamic Computation Offloading Scheme for Fog Computing System with Energy Harvesting Devices. IEEE Internet Things J. 2018, 5, 1869–1879. [Google Scholar] [CrossRef]

- Feng, J.; Zhao, L.; Du, J.; Chu, X.; Yu, F.R. Energy-Efficient Resource Allocation in Fog Computing Supported IoT with Min-Max Fairness Guarantees. In Proceedings of the 2018 IEEE International Conference on Communications (ICC), Kansas City, MO, USA, 20–24 May 2018. [Google Scholar] [CrossRef]

- Cui, L.; Xu, C.; Yang, S.; Huang, J.Z.; Li, J.; Wang, X.; Ming, Z.; Lu, N. Joint Optimization of Energy Consumption and Latency in Mobile Edge Computing for Internet of Things. IEEE Internet Things J. 2019, 6, 4791–4803. [Google Scholar] [CrossRef]

- Kryszkiewicz, P.; Idzikowski, F.; Bossy, B.; Kopras, B.; Bogucka, H. Energy Savings by Task Offloading to a Fog Considering Radio Front-End Characteristics. In Proceedings of the 2019 IEEE 30th Annual International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC), Istanbul, Turkey, 8–11 September 2019. [Google Scholar] [CrossRef]

- He, X.; Jin, R.; Dai, H. PEACE: Privacy-Preserving and Cost-Efficient Task Offloading for Mobile-Edge Computing. IEEE Trans. Wirel. Commun. 2020, 19, 1814–1824. [Google Scholar] [CrossRef]

- Shahidinejad, A.; Ghobaei-Arani, M. Joint computation offloading and resource provisioning for edge-cloud computing environment: A machine learning-based approach. Softw. Pract. Exp. 2020, 50, 2212–2230. [Google Scholar] [CrossRef]

- Nath, S.; Wu, J. Deep reinforcement learning for dynamic computation offloading and resource allocation in cache-assisted mobile edge computing systems. Intell. Converg. Netw. 2020, 1, 181–198. [Google Scholar] [CrossRef]

- Bai, W.; Qian, C. Deep Reinforcement Learning for Joint Offloading and Resource Allocation in Fog Computing. In Proceedings of the 2021 IEEE 12th International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 20–22 August 2021; pp. 131–134. [Google Scholar] [CrossRef]

- Vu, T.T.; Nguyen, D.N.; Hoang, D.T.; Dutkiewicz, E.; Nguyen, T.V. Optimal Energy Efficiency With Delay Constraints for Multi-Layer Cooperative Fog Computing Networks. IEEE Trans. Commun. 2021, 69, 3911–3929. [Google Scholar] [CrossRef]

- Bian, S.; Wang, S.; Tang, Y.; Shao, Z. Social-Aware Edge Intelligence: A Constrained Graphical Bandit Approach. In Proceedings of the GLOBECOM 2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, 4–8 December 2022; pp. 6372–6377. [Google Scholar] [CrossRef]

- Wang, S.; Bian, S.; Tang, Y.; Shao, Z. Social-Aware Edge Intelligence: A Constrained Graphical Bandit Approach; Technical Report; ShanghaiTech University: Shanghai, China, 2022. [Google Scholar]

- Li, Y.; Guo, S.; Jiang, Q. Joint Task Allocation and Computation Offloading in Mobile Edge Computing with Energy Harvesting. IEEE Internet Things J. 2024, early access. [Google Scholar] [CrossRef]