Computer-Vision- and Deep-Learning-Based Determination of Flow Regimes, Void Fraction, and Resistance Sensor Data in Microchannel Flow Boiling

Abstract

1. Introduction

2. Materials and Methods

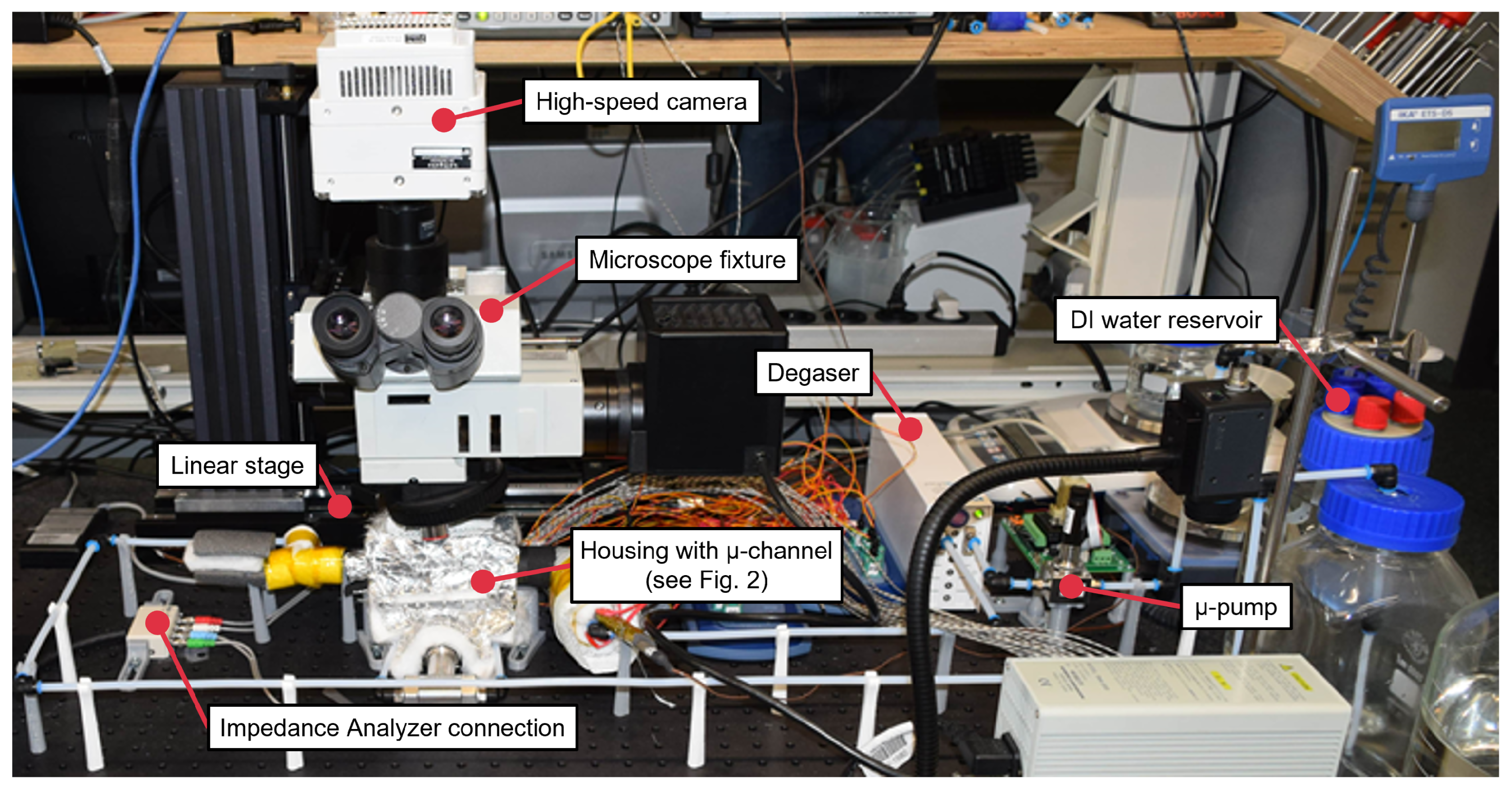

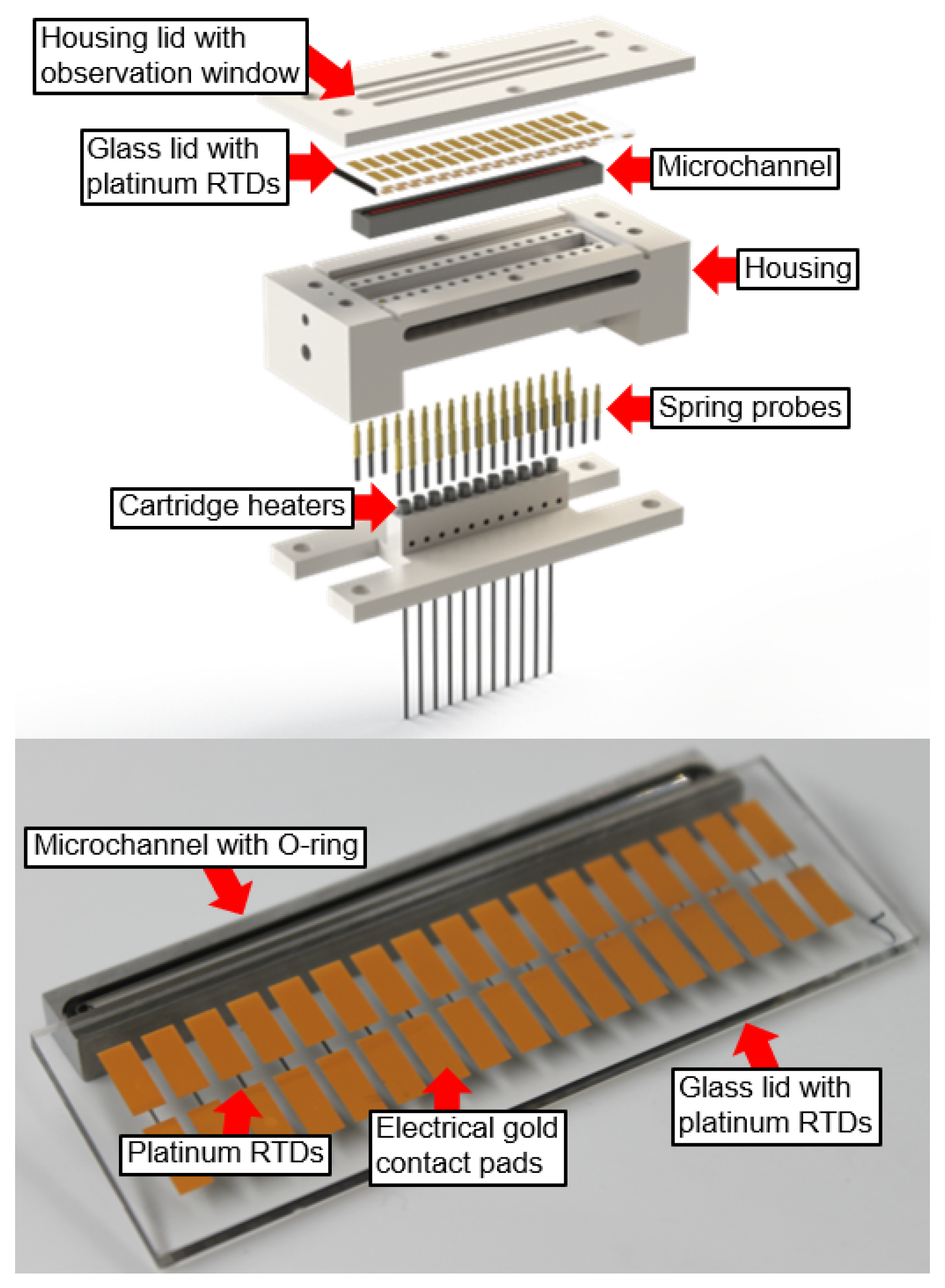

2.1. Experimental Flow Boiling Apparatus

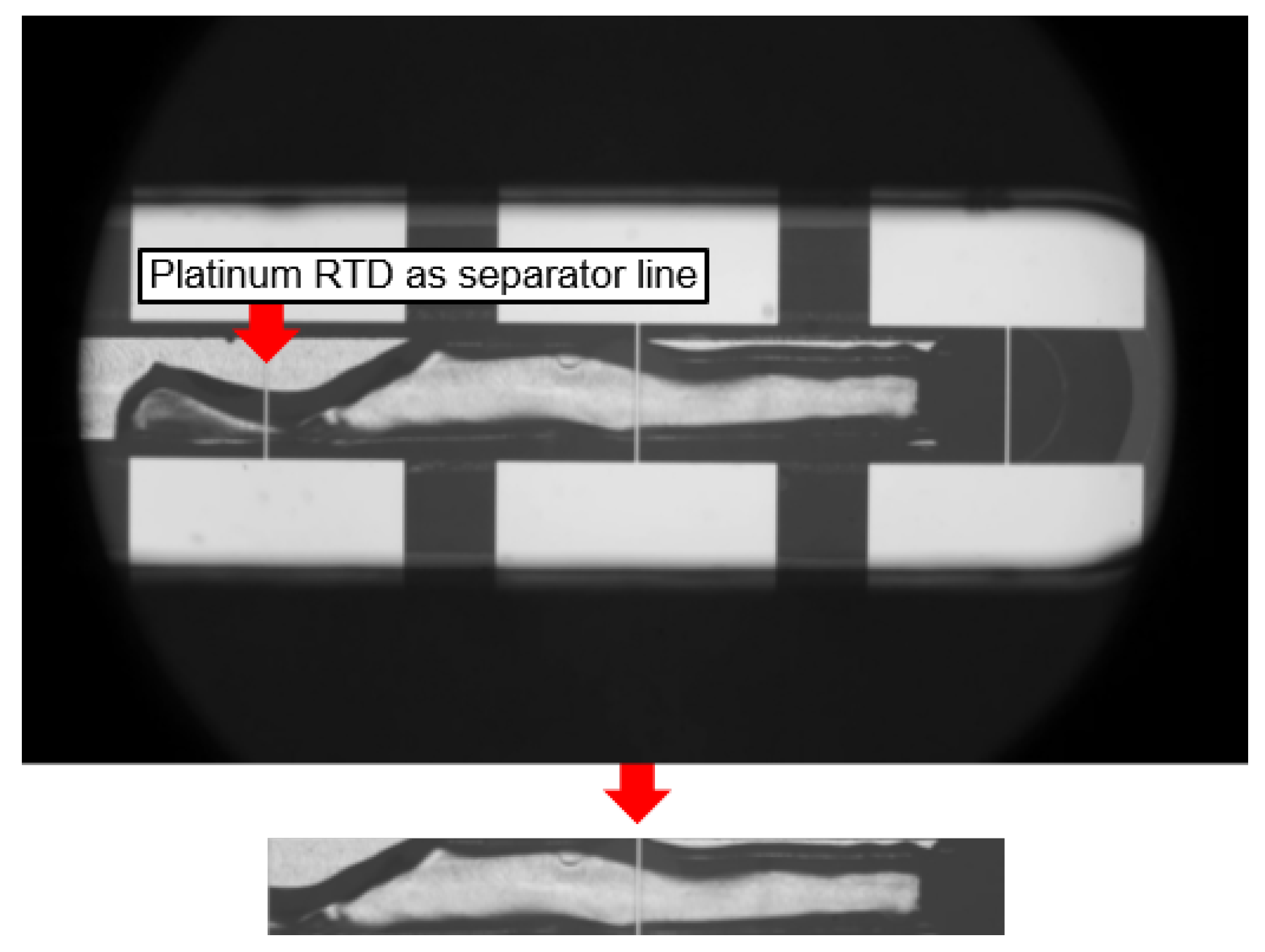

2.2. Video Data Pre-Processing

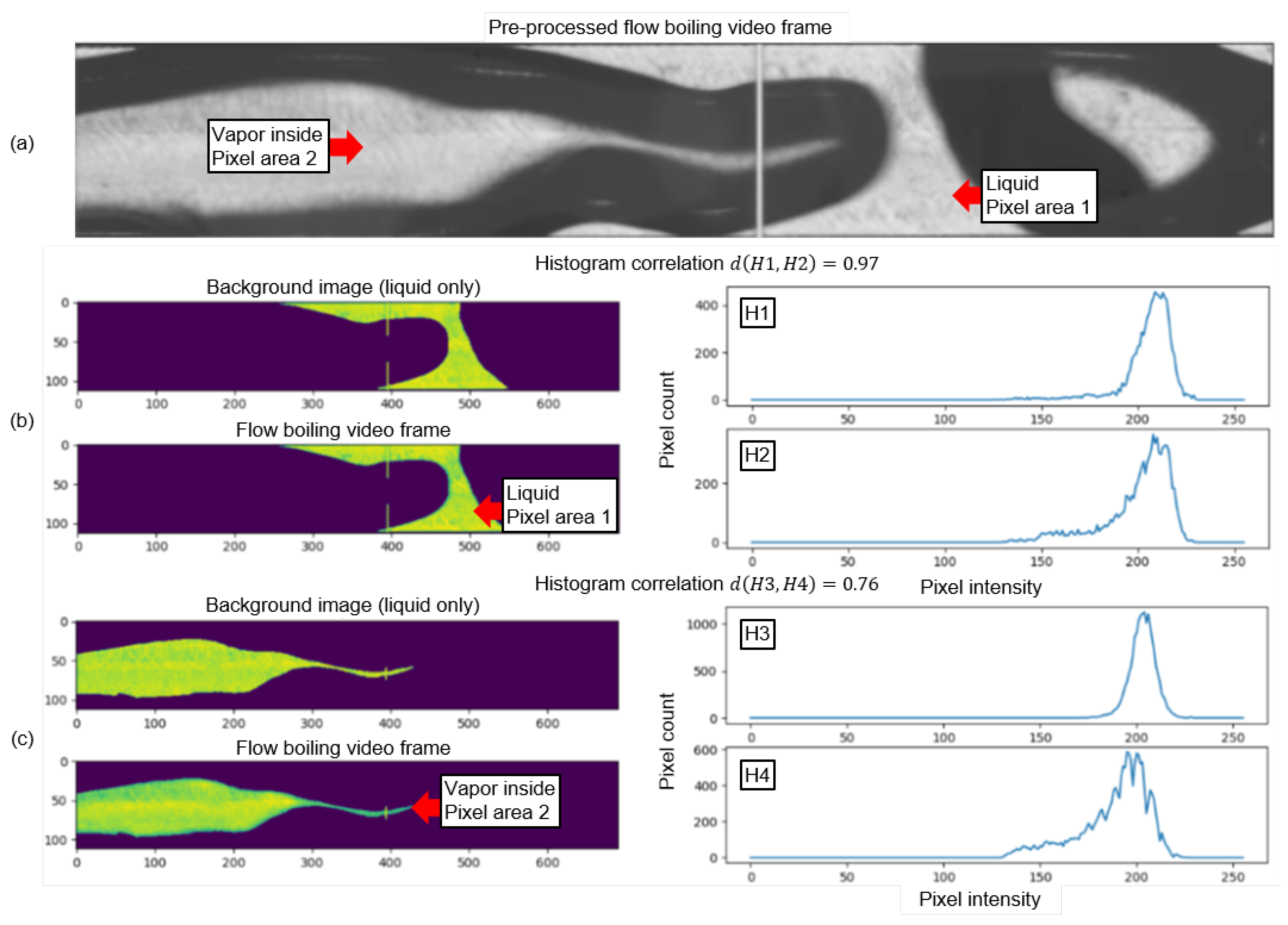

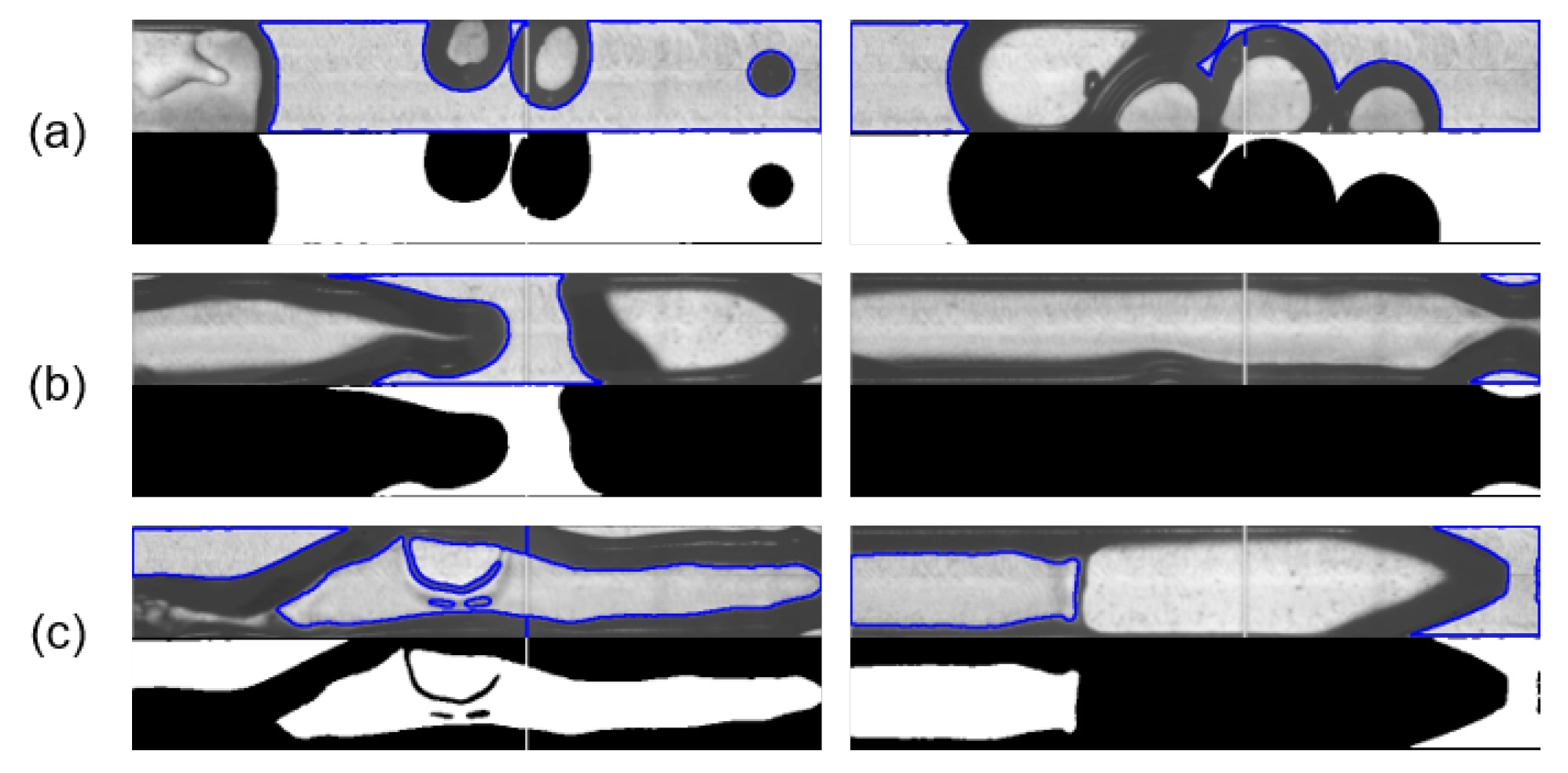

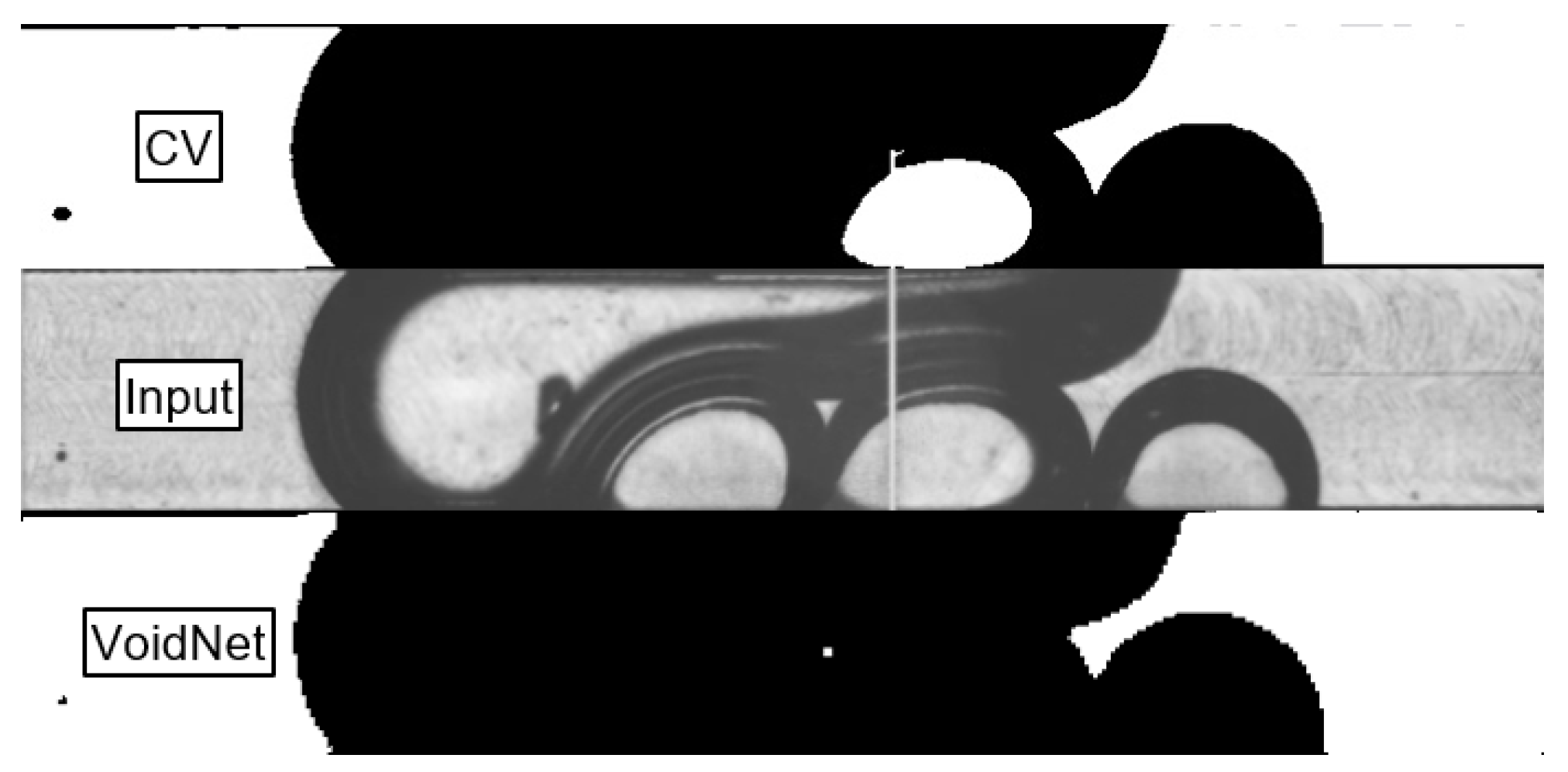

2.3. Computer Vision for Binary Image Segmentation

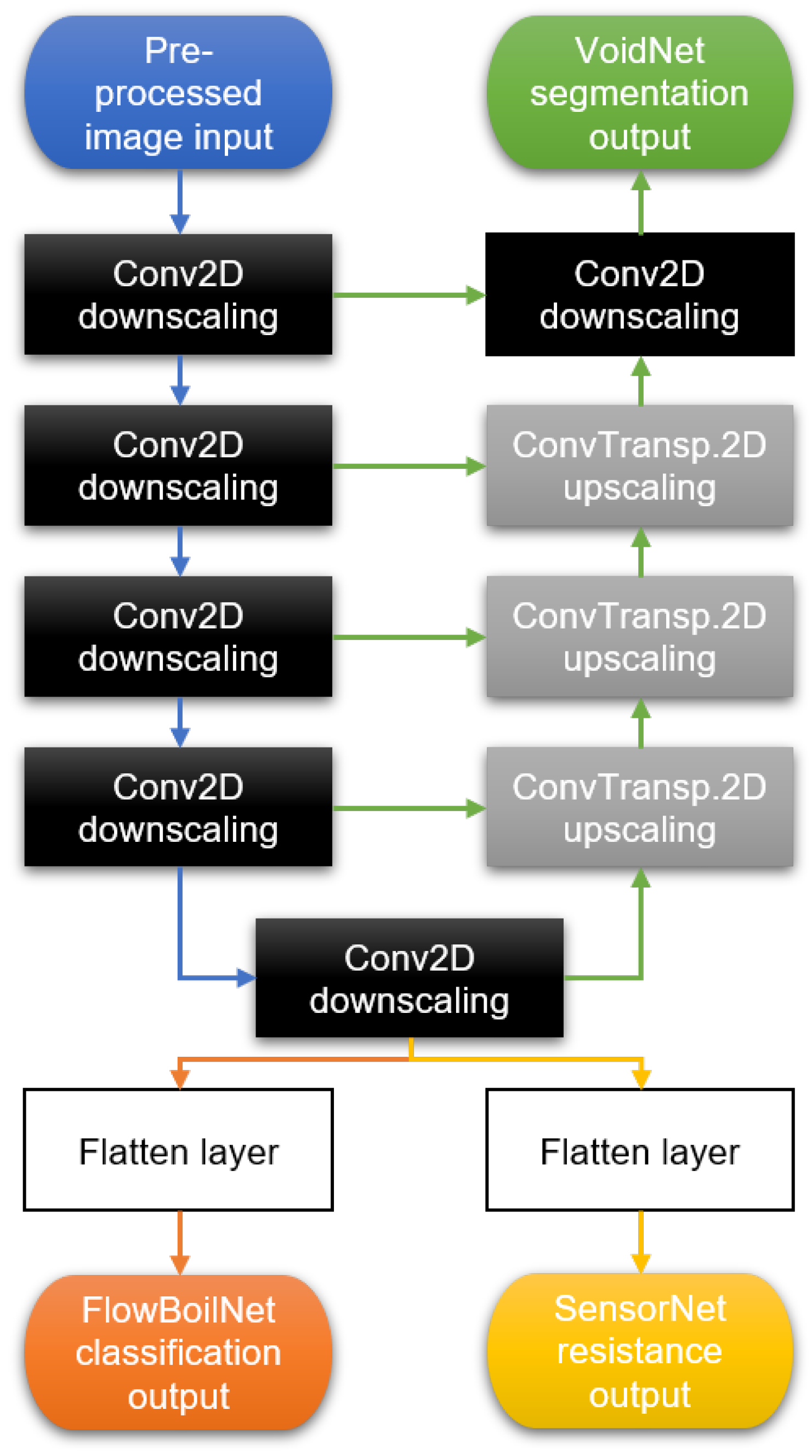

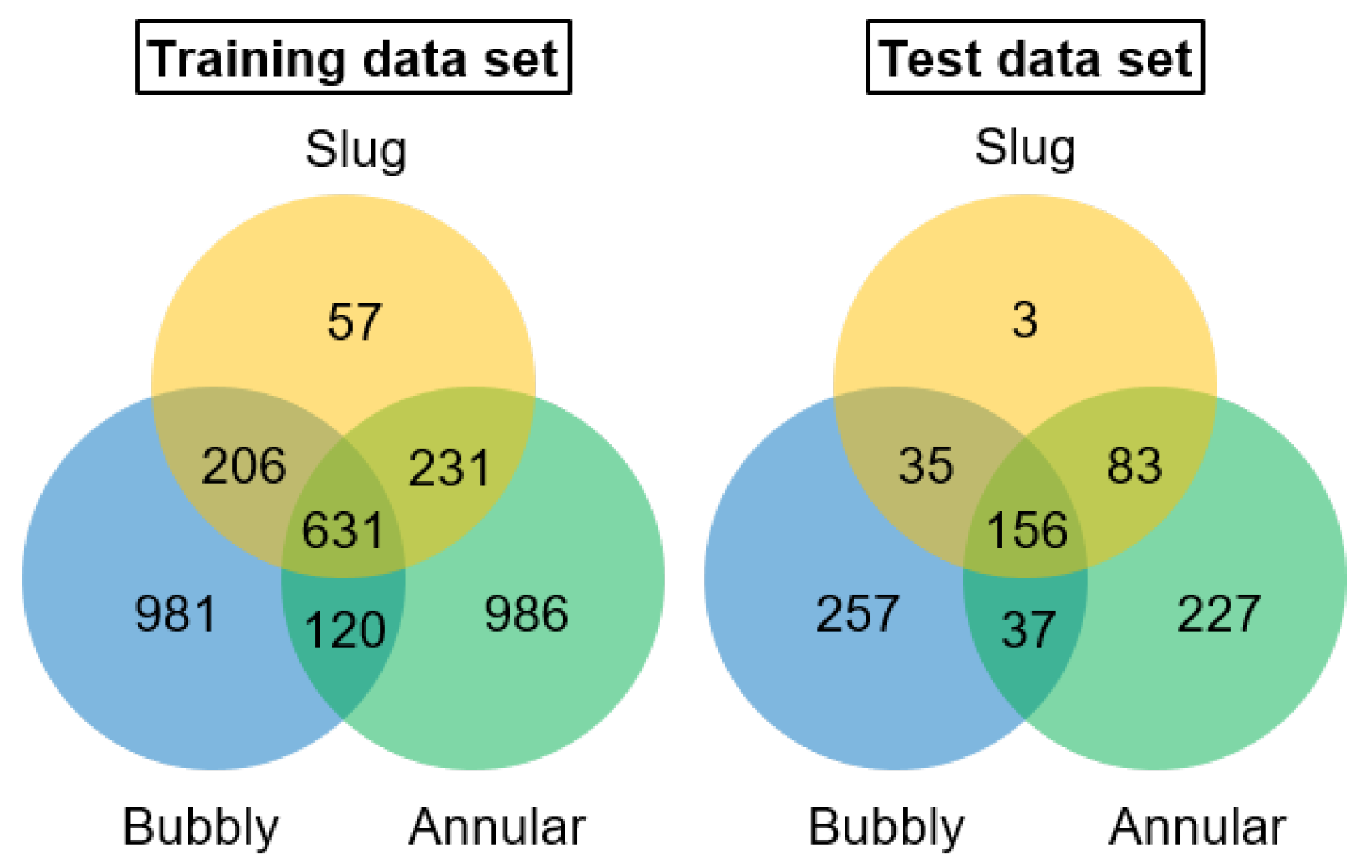

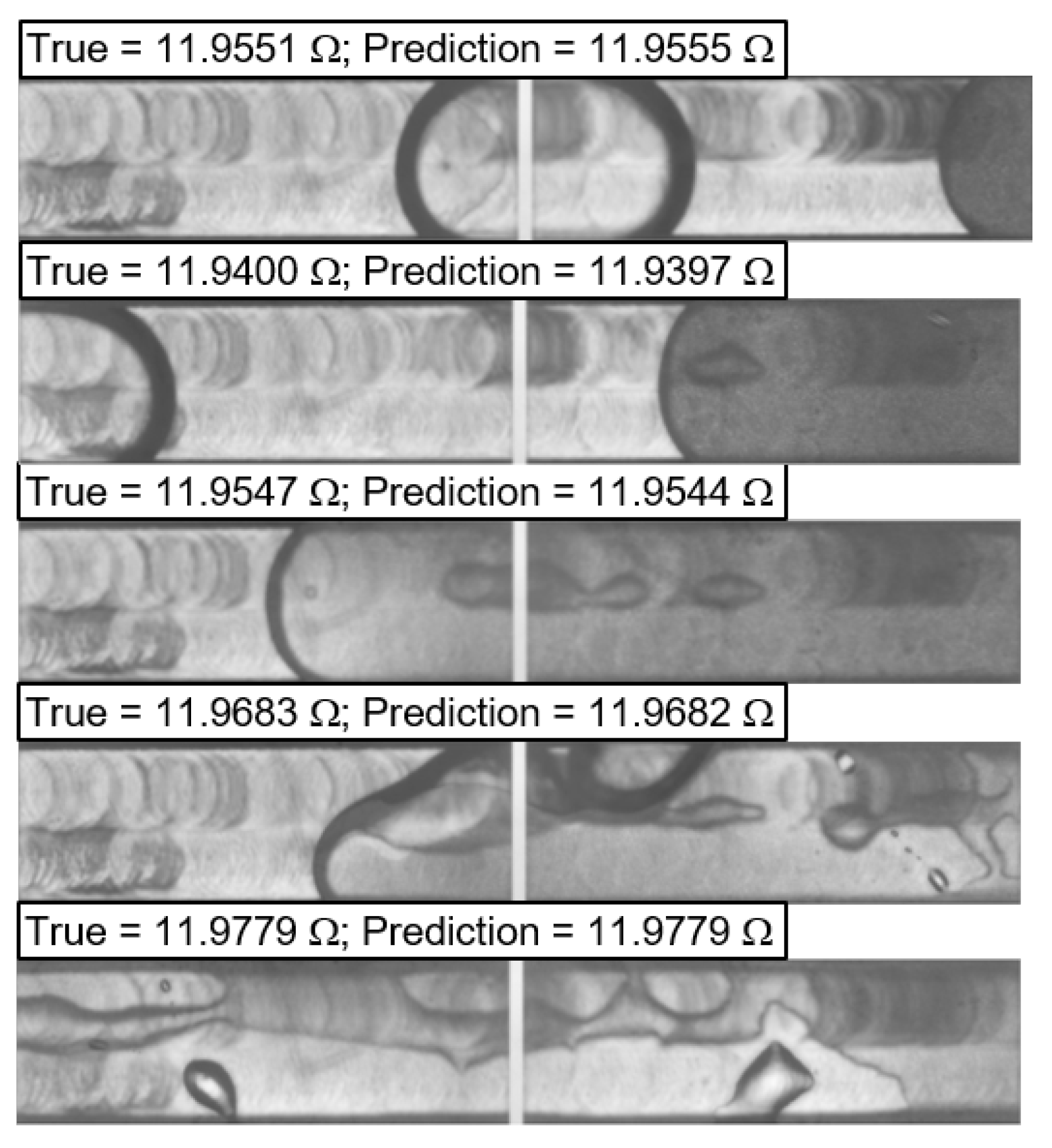

2.4. Deep Learning for Binary Image Segmentation, Flow Regime Classification and RTD Data Prediction

- The number of input-layer image channels was set to 3, as the video frames are in RGB format.

- The number of output-layer image channels was set to 1, since the masks are single-channel binary images, with 0 denoting pixels identified as vapor and 1 denoting pixels identified as liquid.

- The kernel size of the first convolutional layer was set to 7 × 7, with a rectified linear unit (ReLU) activation function. This change allowed larger images to be processed at half the computational cost.
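The channel conventions listed above can be illustrated with a small NumPy sketch. It is not the authors' network. It implements only a naive 7 × 7 convolution with ReLU applied to a 3-channel (RGB) frame. It also assumes, since the text does not say, that the single output channel passes through a sigmoid and is thresholded at 0.5 to obtain the binary mask (1 = liquid, 0 = vapor). The helper names `conv2d_relu`, `logits_to_mask` and `void_fraction` are hypothetical. The void fraction is taken here as the 2-D area fraction of vapor pixels, which is also an assumption.

```python
import numpy as np


def conv2d_relu(image, kernels, bias, stride=1):
    """Naive 'valid' 2-D convolution (cross-correlation, as in most deep
    learning frameworks) followed by a ReLU activation.

    image:   (H, W, C_in) array, e.g. an RGB frame with C_in = 3
    kernels: (C_out, k, k, C_in) array, e.g. k = 7 for a 7 x 7 kernel
    bias:    (C_out,) array
    returns: (H_out, W_out, C_out) feature maps
    """
    h, w, c_in = image.shape
    c_out, k, k_w, c_k = kernels.shape
    if k != k_w or c_k != c_in:
        raise ValueError("kernels must be square and match the input channels")
    h_out = (h - k) // stride + 1
    w_out = (w - k) // stride + 1
    out = np.empty((h_out, w_out, c_out))
    for i in range(h_out):
        for j in range(w_out):
            patch = image[i * stride:i * stride + k, j * stride:j * stride + k, :]
            # Sum over kernel rows, kernel columns and input channels for every output channel
            out[i, j, :] = np.tensordot(kernels, patch, axes=([1, 2, 3], [0, 1, 2])) + bias
    return np.maximum(out, 0.0)  # ReLU: max(0, x)


def logits_to_mask(logits, threshold=0.5):
    """Turn a single-channel (H, W) network output into a binary mask.
    Assumption: sigmoid output; 1 = liquid, 0 = vapor."""
    prob = 1.0 / (1.0 + np.exp(-logits))
    return (prob >= threshold).astype(np.uint8)


def void_fraction(mask):
    """Assumed area-based void fraction: share of pixels labelled vapor (0)."""
    return float(np.mean(mask == 0))


rng = np.random.default_rng(0)
frame = rng.random((32, 64, 3))                    # stand-in for an RGB video frame
kernels = 0.1 * rng.standard_normal((8, 7, 7, 3))  # 8 filters, 7 x 7, 3 input channels
features = conv2d_relu(frame, kernels, np.zeros(8))
print(features.shape)                              # (26, 58, 8)
mask = logits_to_mask(np.array([[2.0, -2.0], [-1.0, 3.0]]))
print(mask.tolist(), void_fraction(mask))          # [[1, 0], [0, 1]] 0.5
```

The explicit loops keep the arithmetic visible. A framework convolution layer would be used in practice.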

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional neural network |
| CV | Computer vision |
| DL | Deep learning |
| HSV | High-speed video |
| LSTM | Long short-term memory |
| MSE | Mean square error |
| ReLU | Rectified linear unit |
| RTD | Resistance temperature detector |


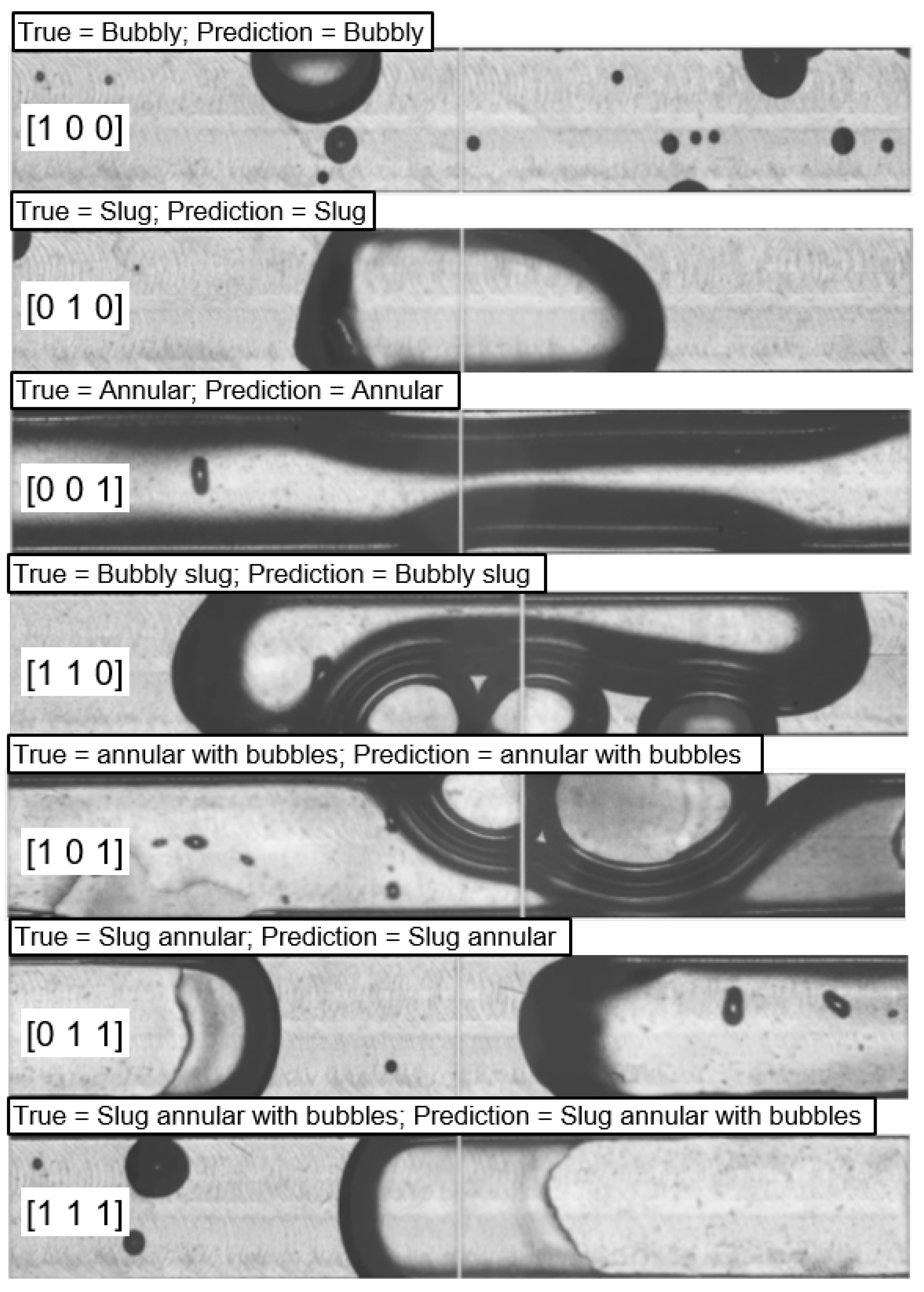

| Flow Regime | 3 × 1 Output Tensor |
|---|---|
| Bubbly | [1 0 0] |
| Slug | [0 1 0] |
| Annular | [0 0 1] |
| Bubbly slug | [1 1 0] |
| Annular with bubbles | [1 0 1] |
| Slug annular | [0 1 1] |
| Slug annular with bubbles | [1 1 1] |
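Read column by column, the flow regime table is a multi-hot encoding whose three entries appear to flag bubbles, slugs and annular flow. The following minimal NumPy sketch encodes and decodes these labels. Several details are assumptions for illustration, not taken from the text: per-element sigmoid probabilities, a 0.5 decision threshold, and element-wise binary cross entropy as the form of the "cross entropy" loss. `encode`, `decode` and `binary_cross_entropy` are hypothetical names.

```python
import numpy as np

# Order of the 3 x 1 output tensor as inferred from the table: [bubbles, slugs, annular]
REGIMES = {
    (1, 0, 0): "Bubbly",
    (0, 1, 0): "Slug",
    (0, 0, 1): "Annular",
    (1, 1, 0): "Bubbly slug",
    (1, 0, 1): "Annular with bubbles",
    (0, 1, 1): "Slug annular",
    (1, 1, 1): "Slug annular with bubbles",
}
LABELS = {name: np.array(vec, dtype=float) for vec, name in REGIMES.items()}


def encode(regime):
    """Return the 3-element target vector for a flow regime name."""
    return LABELS[regime]


def decode(probabilities, threshold=0.5):
    """Threshold per-element probabilities and look up the regime name.
    [0 0 0] does not appear in the table, so it is reported as unclassified."""
    key = tuple(int(p >= threshold) for p in probabilities)
    return REGIMES.get(key, "unclassified")


def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean element-wise binary cross entropy for multi-label targets."""
    y_prob = np.clip(y_prob, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(y_true * np.log(y_prob) + (1.0 - y_true) * np.log(1.0 - y_prob)))


print(encode("Bubbly slug"))                # [1. 1. 0.]
print(decode(np.array([0.9, 0.2, 0.7])))    # Annular with bubbles
print(binary_cross_entropy(encode("Slug"), np.array([0.1, 0.8, 0.2])))
```

Treating each entry as an independent yes/no decision lets mixed regimes such as "Slug annular with bubbles" be represented without a separate class for every combination.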

| Hyperparameter | VoidNet | FlowBoilNet | SensorNet |
|---|---|---|---|
| Loss function | Dice coefficient | Cross entropy | MSE |
| Optimizer | Adam | Adam | Adam |
| Learning rate | 10⁻⁵ | 10⁻⁴ | 10⁻⁶ |
| Batch size | 32 | 32 | 32 |
| Epochs | 50 | 50 | 50 |
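To make the hyperparameter table concrete, the sketch below collects the listed settings in a dictionary and implements, in NumPy, a soft Dice loss (1 minus the Dice coefficient), the mean squared error and a single Adam update. Several details are assumptions because the table does not state them: the smoothing constant, the Adam constants β₁ = 0.9, β₂ = 0.999 and ε = 10⁻⁸ (the defaults suggested by Kingma and Ba), and all function names. The actual training code may differ.

```python
import numpy as np

# Settings taken from the table, one entry per network
HYPERPARAMS = {
    "VoidNet":     {"loss": "dice", "optimizer": "adam", "learning_rate": 1e-5, "batch_size": 32, "epochs": 50},
    "FlowBoilNet": {"loss": "cross_entropy", "optimizer": "adam", "learning_rate": 1e-4, "batch_size": 32, "epochs": 50},
    "SensorNet":   {"loss": "mse", "optimizer": "adam", "learning_rate": 1e-6, "batch_size": 32, "epochs": 50},
}


def dice_loss(pred, target, smooth=1.0):
    """Soft Dice loss = 1 - Dice coefficient; pred holds probabilities in [0, 1]."""
    intersection = np.sum(pred * target)
    dice = (2.0 * intersection + smooth) / (np.sum(pred) + np.sum(target) + smooth)
    return float(1.0 - dice)


def mse_loss(pred, target):
    """Mean squared error, e.g. for regressing RTD sensor values."""
    return float(np.mean((np.asarray(pred) - np.asarray(target)) ** 2))


def adam_step(theta, grad, m, v, t, lr, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update (t starts at 1); returns new parameters and moments."""
    m = beta1 * m + (1.0 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad ** 2     # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)                # bias corrections
    v_hat = v / (1.0 - beta2 ** t)
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v


lr = HYPERPARAMS["VoidNet"]["learning_rate"]
theta, m, v = np.zeros(2), np.zeros(2), np.zeros(2)
theta, m, v = adam_step(theta, np.array([0.5, -2.0]), m, v, t=1, lr=lr)
print(theta)  # roughly [-1e-05, 1e-05]: the first step has magnitude close to lr
```

Because Adam normalizes each update by the running gradient magnitude, the first step is close to the learning rate in size regardless of the gradient scale. This is one reason the learning rate alone largely sets the step size in the table.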


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Schepperle, M.; Junaid, S.; Woias, P. Computer-Vision- and Deep-Learning-Based Determination of Flow Regimes, Void Fraction, and Resistance Sensor Data in Microchannel Flow Boiling. Sensors 2024, 24, 3363. https://doi.org/10.3390/s24113363
