Abstract

This review article aims to address common research questions in passive polarized vision for robotics. What kind of polarization sensing can we embed into robots? Can we find our geolocation and true north heading by detecting light scattering from the sky as animals do? How should polarization images be related to the physical properties of reflecting surfaces in the context of scene understanding? This review article is divided into three main sections to address these questions, as well as to assist roboticists in identifying future directions in passive polarized vision for robotics. After an introduction, three key interconnected areas will be covered in the following sections: embedded polarization imaging; polarized vision for robotics navigation; and polarized vision for scene understanding. We will then discuss how polarized vision, a type of vision commonly used in the animal kingdom, should be implemented in robotics; this type of vision has not yet been exploited in robotics service. Passive polarized vision could be a supplemental perceptive modality of localization techniques to complement and reinforce more conventional ones.

1. Introduction

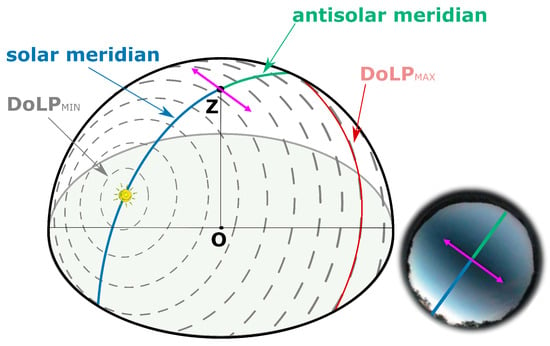

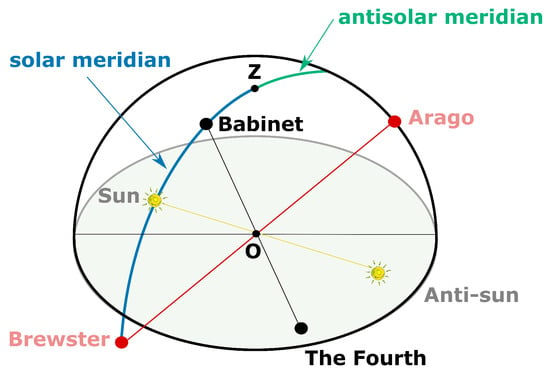

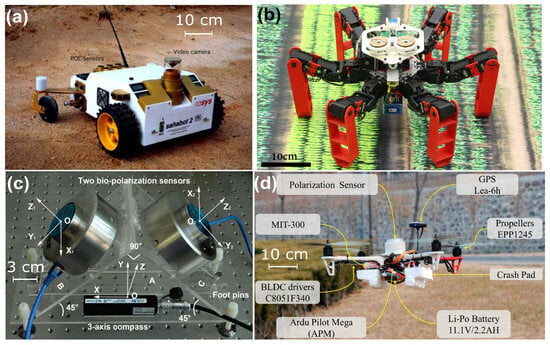

Navigating in unmapped environments, or in environments where Global Navigation Satellite System (GNSS) signals are denied, will be one of the 10 biggest challenges in robotics over the coming decade [1]. Currently, autonomous robots rely on GNSS, Inertial Navigation Systems (INS) and ground-based antennas to triangulate or correct GNSS signals (5G networks or Real-Time Kinematic (RTK) networks), astronomical navigation, gyrocompass navigation, and vision-based or lidar-based Simultaneous Localization And Mapping (SLAM). Surprisingly, passive polarized vision has not yet become standard in robotics to improve SLAM, geolocation, or true north heading detection, for instance. In contrast, animals are able to navigate or migrate over extremely long distances without using localization techniques developed by humans [2]. Migratory birds are a notable example: they are known for their astonishing navigation capabilities. Studies have shown that some of them, such as Savannah sparrows [3] or Catharus thrushes [4], can navigate by means of a Polarization-based Compass (PbC), which they use to calibrate their magnetic compass. However, the precise mechanism involved in this calibration remains unclear [5]. By contrast, how insects use sky polarization to navigate is better understood. For instance, desert ants use a powerful navigational tool termed optical path integration to locate their nest. When returning, desert ants follow the shortest possible route, a straight line, even in featureless or unfamiliar terrains. By integrating directional compass and distance information from their vision, desert ants calculate a vector from their visual inputs, and this leads them home [6].
Since pioneering behavioral experiments on desert ants by Piéron (1904) [7], who manipulated ant position in order to observe their behavior, and Santschi (1911) [8], who manipulated the light perceived by ants by using a mirror, it has taken about a century to understand how desert ants exploit sunlight for navigation purposes [6,9]. The ant-inspired path integrator has been recently implemented on board fully autonomous robots: firstly, a wheeled robot, called Sahabot in 2000 [10], then a legged robot, called AntBot in 2019 [11,12] (see Section 3.3 for further information).

Understanding how roboticists can exploit polarized sunlight or light reflection is at an early stage (Figure 1), but it is extremely relevant because this could pave the way for the development of a GNSS-free geolocation for autonomous outdoor navigation as it works in the animal kingdom [13]. It should be noted that long before the modern polarization sensing systems described below, between the 10th and 13th century, Viking navigators had been using skylight polarization for navigation to reach Greenland and then North America without a magnetic compass, instead using a sunstone working as an optical compass (see [14,15,16,17] and Section 3.1 for further details).

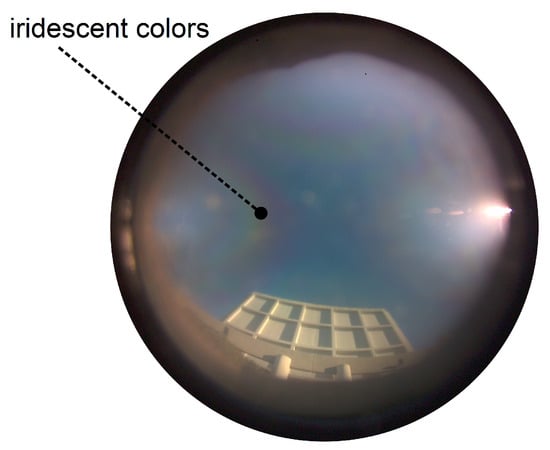

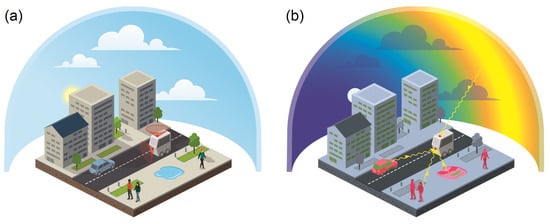

Figure 1.

Illustration of the available polarized light in the environment. Picture credits: Camille Dégargin (2023). (a) Visual environment as seen by the robot with unpolarized light, i.e., light intensity. (b) Visual environment as seen by the robot with polarized light, which can be either due to the light scattering from the sky or the light reflection from surrounding environment.

This review article was written to address common research questions in the field of autonomous robotics:

- What kind of polarization sensing can we embed into robots? (see Section 2)

- Can we geolocate ourselves and find the true north heading by detecting light scattering from the sky? (see Section 3)

- How do polarization images relate to the physical properties of reflecting surfaces in the context of scene understanding? (see Section 4)

Autonomous robots working in urban environments, e.g., for last-mile delivery services, will have to locate and position themselves with a spatial accuracy of better than 5 cm and 0.2 degrees by 2030. Mobile robots navigating through public environments (in urban areas or on a campus for instance, see Figure 1a) must meet the most stringent safety requirements. They must comply with the Machine Directive (ISO 3691-4 [18]), as well as autonomous vehicle standards such as ISO 26262 [19] (functional safety, FuSa) and ISO 21448 [20] (safety of the intended functionality, SOTIF). Fusing the outputs of polarized sensors with an Inertial Navigation System (INS) could provide a supplemental perceptive modality that complements and reinforces conventional localization techniques (3D LiDAR-based SLAM, GNSS, and visual–inertial odometry) and helps reach the level of performance required by ISO standards.

Robots will therefore use all the available visual information including that coming either from the light scattering of the sky or the light reflection from surrounding environment. Even if the sun is hidden or the sky is covered, light scattering remains available, and this includes relevant and robust information for robots’ navigation (Figure 1b). Moreover, using polarized light reflection will be useful to improve visual contrast in order to better understand the visual scene through superior object detection.

To help researchers find relevant directions over the next decade in the field of passive polarized vision in autonomous vehicles, this review is divided into three main sections: Section 2, “Embedded polarization sensing”, focusing on polarimetric sensors which can be embedded on board robots; Section 3, “Polarized vision for navigation”, which will emphasize how polarized light scattering can be used for navigation purposes; and lastly Section 4, “Polarized vision for scene understanding”, which will suggest how polarized light reflection can be used to better understand a visual scene. Figure 1 illustrates the links between these three sections as they relate to the above main research questions. Addressing each of these questions requires dividing it into more specific research questions on how knowledge derived from the physical properties of polarized light can be transferred to sensors and then used for navigation purposes.

Previous review articles have focused on the progress of bio-inspired polarized sensors. They provided exhaustive overviews of polarized sensors manufactured by nanotechnology [21], of polarization-based orientation estimation algorithms and the combination of polarized sensors with INS, GNSS, SLAM, and other localization systems [22,23], or of polarization-based geolocation [23,24]. They did not focus on passive polarized vision in autonomous vehicles, for which dynamic accuracy is more relevant than static accuracy. Despite the growing interest in bio-inspired polarized sensors for navigation purposes, few of them have been implemented on board mobile robots.

In Section 2, we will introduce the various technological solutions to embed polarization imaging into robots. Current state-of-the-art polarization acquisition techniques will be introduced. Only technologies relevant to mobile robotics, namely passive and linear Stokes polarimetry, will be presented in this section. Section 2 will be divided into the following five subsections:

- Section 2.1. Stokes formalism;

- Section 2.2. State-of-the-art of polarization analysis techniques;

- Section 2.3. Calibration and preprocessing;

- Section 2.4. Extension to multispectral polarimetric sensing;

- Section 2.5. Summary and future directions in embedded polarization sensing.

In Section 3, we will introduce the various implementations of polarization-based navigation systems on board autonomous robots. Starting with a brief historical overview of polarization navigation, we will then describe the skylight polarization pattern from the simplest model to the most advanced ones. Next, we will present an exhaustive overview of polarized sensors implemented on board vehicles or autonomous robots for heading or attitude estimation. Lastly, recent developments in polarization-based geolocation will be presented. Section 3 will be divided into the following five subsections:

- Section 3.1. Historical overview of polarization navigation;

- Section 3.2. The skylight’s polarization pattern;

- Section 3.3. Polarization-based sensors dedicated to navigation;

- Section 3.4. Methods for combining polarization-based geolocation to an integrated navigation system;

- Section 3.5. Summary and future directions in polarized vision for navigation.

In Section 4, we will introduce the use of polarized vision for scene understanding. Polarization has been widely used in the classification of materials [25] or reconstruction of object shape [26]; this section will only focus on applications that can be directly extended to autonomous vehicles. After recalling and explaining the mathematical formulas linking the polarization parameters to the normal orientation, object detection will be described. Then, we will present shape from polarization, which exploits most of the physical information, and the latest techniques using polarization imaging to improve depth estimation and facilitate pose estimation in robots. Section 4 will be divided into the following five subsections:

- Section 4.1. Polarization and reflection;

- Section 4.2. Detection and classification;

- Section 4.3. Shape from polarization;

- Section 4.4. 3D-depth with polarization cues;

- Section 4.5. Summary and future directions in polarized vision for scene understanding.

In Section 5, we will deal with lessons learned from this review and provide new lines of research and future directions in the sensing of polarized light in robotics for the next 10 years.

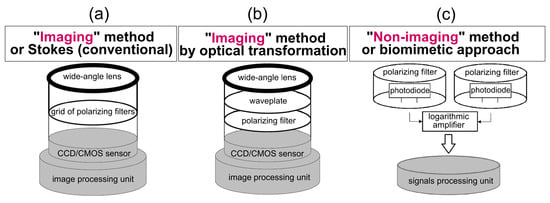

2. Embedded Polarization Imaging

We present here the state of the art on polarimetric techniques that allow for the capture of the polarization characteristics of an unknown beam of light. We will first consider Stokes formalism. We focus on technologies that seem appropriate for the framework of on-board acquisition systems in mobile robotics: passive and linear Stokes imaging polarimeters. Non-imaging sensors used for navigation are detailed in Section 3.3. Further information about point-source sensors can be found in [21].

2.1. Stokes Formalism

The linear polarization state of light depends on the material properties of the objects in the scene, but also on the geometry of the incident light beam (angle of incidence and angle of reflection), and on the state of polarization of the incident light. All the polarization information about the scene is contained in the four-component Stokes vector $\mathbf{S} = [S_0, S_1, S_2, S_3]^T$, sometimes referred to as $[I, Q, U, V]^T$ [27,28]. The first component is related to the total energy in the scene (polarized or not), the second and third components are related to the linear polarization, and the fourth component is related to circular polarization. A convenient representation of the Stokes vector is the Poincaré sphere [29], as shown in Figure 2.

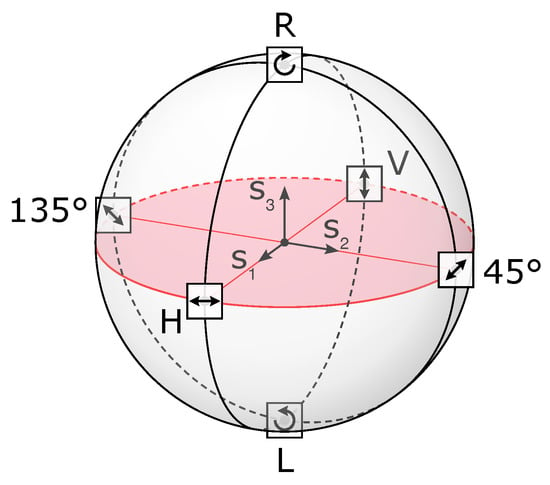

Figure 2.

Poincaré sphere. In the equatorial plane (in pink), we can find purely linear polarizations that are considered in our review. Adapted from original material under CC-BY license [30].

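The effect of any optical element that transforms an input Stokes vector $\mathbf{S}_{\mathrm{in}}$ into an output vector $\mathbf{S}_{\mathrm{out}}$ can be described by a $4 \times 4$ Mueller matrix $\mathbf{M}$ such that [31,32]:

$$\mathbf{S}_{\mathrm{out}} = \mathbf{M}\,\mathbf{S}_{\mathrm{in}}$$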

Mueller matrix estimation can be used to study and classify materials [33,34,35,36,37] or for biomedical applications [38,39]; however, in the following, we will restrict ourselves to Stokes estimation.
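As an illustration of how a Mueller matrix maps an input Stokes vector to an output one, the following minimal sketch applies the textbook Mueller matrix of an ideal linear polarizer to unpolarized light. The function name `linear_polarizer_mueller` and the example values are illustrative assumptions, not taken from the cited works.

```python
import numpy as np

def linear_polarizer_mueller(theta_rad):
    """Mueller matrix of an ideal linear polarizer (transmission axis at theta_rad)."""
    c, s = np.cos(2 * theta_rad), np.sin(2 * theta_rad)
    return 0.5 * np.array([
        [1.0, c,     s,     0.0],
        [c,   c * c, c * s, 0.0],
        [s,   c * s, s * s, 0.0],
        [0.0, 0.0,   0.0,   0.0],
    ])

# Unpolarized light of unit intensity: only the first Stokes component is non-zero.
s_in = np.array([1.0, 0.0, 0.0, 0.0])

# A horizontal polarizer transmits half the energy, fully linearly polarized.
s_out = linear_polarizer_mueller(0.0) @ s_in
print(s_out)
```

Under these assumptions, the output carries half of the input intensity and is fully polarized along the polarizer axis.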

In outdoor robotic conditions, i.e., in environments with passive illumination, beams with significant elliptical polarization are rarely encountered since single (first-order) scattering only produces linear polarization [40,41]. For practical purposes, we will therefore limit ourselves to linear polarization for the description of Stokes formalism and thus consider that $S_3 = 0$, which should be confirmed for each application.

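Estimating the linear Stokes vector can be performed through the measurement of four elementary intensities acquired through a linear polarizer oriented at 0°, 45°, 90° and −45°, and therefore named $I_0$, $I_{45}$, $I_{90}$, and $I_{-45}$:

$$\mathbf{S} = \begin{bmatrix} S_0 \\ S_1 \\ S_2 \end{bmatrix} = \begin{bmatrix} \tfrac{1}{2}\left(I_0 + I_{45} + I_{90} + I_{-45}\right) \\ I_0 - I_{90} \\ I_{45} - I_{-45} \end{bmatrix}$$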

This is probably the most popular method for capturing linear Stokes parameters. However, reduced schemes using only three measures also exist [42]. It should be noted that the choice of configuration affects the system condition number, which impacts on performance metrics such as the signal-to-noise ratio (SNR) [43], specifically and thoroughly in ref. [44] for a four-polarizer filter array sensor. Moreover, it has been demonstrated that the polarization angles used for the polarization state analyzer (PSA) that minimize noise influence form a regular polyhedron in the Poincaré space (which is a unit disk for linear polarization or a unit sphere in the general case) [42].
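The four-intensity estimation can be sketched as follows, assuming ideal polarizers and taking $S_0$ as half the sum of the four intensities (one common convention; $S_0 = I_0 + I_{90}$ is an equally valid choice for ideal analyzers). The function name is hypothetical, and the −45° channel is written `i135` since both angles denote the same analyzer orientation.

```python
import numpy as np

def linear_stokes_from_intensities(i0, i45, i90, i135):
    """Linear Stokes parameters [S0, S1, S2] from four analyzer intensities.

    Inputs may be scalars or images of identical shape.
    """
    i0, i45, i90, i135 = (np.asarray(x, dtype=float) for x in (i0, i45, i90, i135))
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity (assumed convention)
    s1 = i0 - i90                       # horizontal vs. vertical
    s2 = i45 - i135                     # +45 deg vs. -45 deg
    return np.stack([s0, s1, s2], axis=0)

# Fully horizontally polarized light of unit intensity seen through ideal analyzers.
stokes = linear_stokes_from_intensities(1.0, 0.5, 0.0, 0.5)
print(stokes)
```

The same function applies pixel-wise when the four arguments are registered intensity images.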

It should be noted that $\mathbf{S}$ is not an algebraic vector (it has no additive inverse, for instance) and that not every vector in $\mathbb{R}^4$ is a Stokes vector. Stokes vector components must fulfill:

$$S_0 \geq 0, \qquad S_0^2 \geq S_1^2 + S_2^2 + S_3^2$$
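The physical admissibility conditions on a Stokes vector, namely a non-negative first component and a polarized part that does not exceed the total intensity, can be checked numerically. The helper below is a minimal sketch; its name and the tolerance `tol` are assumptions introduced for illustration.

```python
import numpy as np

def is_physical_stokes(s, tol=1e-9):
    """Return True if s = [S0, S1, ...] satisfies S0 >= 0 and S0^2 >= sum of other squares."""
    s = np.asarray(s, dtype=float)
    s0, rest = s[0], s[1:]
    # tol absorbs floating-point rounding for fully polarized states.
    return bool(s0 >= 0.0 and s0 ** 2 + tol >= np.sum(rest ** 2))

print(is_physical_stokes([1.0, 0.6, 0.8, 0.0]))  # fully polarized: admissible
print(is_physical_stokes([1.0, 1.0, 1.0, 0.0]))  # polarized part exceeds total intensity
```

Such a check can be useful to flag pixels where noise or misregistration has produced non-physical estimates.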

A generalized measurement framework can be derived for any polarimeter using N polarization channels. The principle is to perform intensity measurements in N different configurations of the PSA after proper calibration, meaning proper determination of the N analyzer vectors. Since in our case $S_3 = 0$, we can write the following:

$$\mathbf{I} = \mathbf{W}\,\mathbf{S}$$

where $\mathbf{I}$ is the $N \times 1$ vector of measured intensities, $\mathbf{S} = [S_0, S_1, S_2]^T$, and $\mathbf{W}$ is the $N \times 3$ polarimetric measurement matrix formed by the analyzer vectors. Provided the N configurations are properly chosen, the Stokes vector is estimated by using $\hat{\mathbf{S}} = \mathbf{W}^{+}\,\mathbf{I}$, where $\mathbf{W}^{+}$ is often called the Data Reduction Matrix (DRM) or the analysis matrix, computed using the pseudo-inverse method [45,46].
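The N-channel framework can be sketched numerically as follows, assuming ideal linear analyzers whose analyzer vectors are $\tfrac{1}{2}[1, \cos 2\theta, \sin 2\theta]$. The function names and the test Stokes vector are illustrative assumptions. The sketch also compares the condition number of the measurement matrix for two angle sets, since the choice of configuration affects noise propagation.

```python
import numpy as np

def analyzer_matrix(angles_deg):
    """N x 3 measurement matrix W for ideal linear analyzers at the given angles."""
    a = np.deg2rad(np.asarray(angles_deg, dtype=float))
    return 0.5 * np.column_stack([np.ones_like(a), np.cos(2 * a), np.sin(2 * a)])

def estimate_linear_stokes(intensities, angles_deg):
    """Least-squares Stokes estimate using the pseudo-inverse of W (the DRM)."""
    drm = np.linalg.pinv(analyzer_matrix(angles_deg))  # 3 x N
    return drm @ np.asarray(intensities, dtype=float)

angles = [0.0, 45.0, 90.0, 135.0]
s_true = np.array([2.0, 0.6, -0.8])            # hypothetical linear Stokes vector
intensities = analyzer_matrix(angles) @ s_true  # noise-free measurements
s_hat = estimate_linear_stokes(intensities, angles)

# Evenly spread angles give a better-conditioned system than clustered ones.
cond_four = np.linalg.cond(analyzer_matrix(angles))
cond_poor = np.linalg.cond(analyzer_matrix([0.0, 10.0, 20.0]))
print(s_hat, cond_four, cond_poor)
```

With real hardware, the ideal `analyzer_matrix` would be replaced by a calibrated measurement matrix.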

One can derive polarization metrics from the linear Stokes vector, for instance the Degree of Linear Polarization $DoLP$:

$$DoLP = \frac{\sqrt{S_1^2 + S_2^2}}{S_0}$$

and the Angle of Linear Polarization $AoLP$:

$$AoLP = \frac{1}{2}\,\operatorname{atan2}(S_2, S_1)$$

Both $DoLP$ and $AoLP$ are useful for skylight navigation, as detailed in Section 3.2. Many authors instead report the Degree of Polarization $DoP$:

$$DoP = \frac{\sqrt{S_1^2 + S_2^2 + S_3^2}}{S_0}$$

In our specific case, with no circular polarization, evaluating $DoP$ comes down to evaluating $DoLP$.
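These metrics can be computed pixel-wise from the linear Stokes parameters. The sketch below uses the usual definitions, $DoLP = \sqrt{S_1^2 + S_2^2}/S_0$ and $AoLP = \tfrac{1}{2}\operatorname{atan2}(S_2, S_1)$; the function name and the small constant `eps`, which guards against division by zero in dark pixels, are assumptions.

```python
import numpy as np

def dolp_aolp(s0, s1, s2, eps=1e-12):
    """Degree and angle (radians) of linear polarization from S0, S1, S2."""
    s0, s1, s2 = (np.asarray(x, dtype=float) for x in (s0, s1, s2))
    dolp = np.sqrt(s1 ** 2 + s2 ** 2) / np.maximum(s0, eps)
    aolp = 0.5 * np.arctan2(s2, s1)  # two-argument arctangent resolves the quadrant
    return dolp, aolp

# Light fully polarized at +45 degrees.
dolp, aolp = dolp_aolp(1.0, 0.0, 1.0)
print(dolp, np.rad2deg(aolp))
```

Using the two-argument arctangent avoids the quadrant ambiguity and the division by zero that a plain $\arctan(S_2/S_1)$ would suffer when $S_1 = 0$.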

2.2. State-of-the-Art Polarization State Analyzers (PSA)

Two main categories of Polarization State Analyzers (PSAs) exist, both of them providing Stokes information: scanning and snapshot systems. All Division-of-Time (DoT) polarimeters belong to the class of scanning instruments, i.e., several sequential acquisitions are needed to obtain the polarimetric information, but some of these are fast enough to be compatible with robotic applications. Thus, we first present the DoT techniques, and then present the snapshot techniques, namely Replication of Aperture (RoAp), Division of Amplitude (DoAmp), Division of Aperture (DoAp) and Division of Focal Plane (DoFP).

Table 1, inspired by that of Tyo et al. [47], lists the various polarimetric imaging techniques detailed below. It is worth noting that we only include the technologies that permit the capture of two to four Stokes parameters in an efficient way.

Table 1.

Pros and cons of various imaging Stokes polarimeter architectures (inspired by [47]). For two configurations (RoAp and DoAp, labeled with an ’), obtaining only 3 Stokes parameters is reported, but obtaining 4 seems reasonably straightforward. For the Division of Focal Plane, most systems provide 3 Stokes parameters; getting the fourth parameter is not straightforward but has been demonstrated in prototype polarimeters.

2.2.1. PSA Using Division of Time (DoT)

PSA with Rotating Polarization Elements

Conceptually, this is the simplest kind of PSA. A single rotating element (a polarizer or a waveplate in front of a fixed polarizer) is located in the optical path between the object and the sensor. N azimuth angles are considered for the rotating element, as in Equation (5). With a rotating polarizer, the maximum rank for the analysis matrix $\mathbf{W}$ is 3, however many angular configurations are measured. Only a partial Stokes vector is therefore analyzed. To analyze all the Stokes information, it is necessary to use as a polarization element an extra waveplate whose retardance is not an integer multiple of $\pi$. Typically, a quarter-wave plate is used. Tyo [43] showed that a waveplate with a retardance of approximately 132° optimizes the system SNR (provided that optimum angles are chosen). This type of assembly, whether the waveplates are motorized or not, remains slow, but does potentially present the best optical quality. However, it may be necessary to register the different images before calculating the Stokes parameters (see Section 2.3.3). Such techniques have been successfully used for skylight polarization estimation [48,49,50].
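The rank limitation can be verified numerically. The sketch below builds the four-component analyzer vectors for (i) an ideal rotating polarizer and (ii) an ideal rotating retarder followed by a fixed horizontal polarizer, then compares matrix ranks. The function names, the set of eight angles, and the sign convention of the circular term are assumptions; the rank does not depend on that sign.

```python
import numpy as np

def rotating_polarizer_rows(angles_deg):
    """Analyzer vectors (N x 4) of an ideal rotating linear polarizer."""
    a = np.deg2rad(np.asarray(angles_deg, dtype=float))
    return 0.5 * np.column_stack(
        [np.ones_like(a), np.cos(2 * a), np.sin(2 * a), np.zeros_like(a)]
    )

def rotating_retarder_rows(angles_deg, retardance_deg):
    """Analyzer vectors (N x 4) of a rotating retarder before a fixed horizontal polarizer."""
    a = np.deg2rad(np.asarray(angles_deg, dtype=float))
    d = np.deg2rad(retardance_deg)
    c, s = np.cos(2 * a), np.sin(2 * a)
    return 0.5 * np.column_stack([
        np.ones_like(a),
        c ** 2 + s ** 2 * np.cos(d),
        c * s * (1.0 - np.cos(d)),
        -s * np.sin(d),  # sign depends on the handedness convention
    ])

angles = np.arange(0.0, 180.0, 22.5)  # eight azimuths
rank_polarizer = np.linalg.matrix_rank(rotating_polarizer_rows(angles))
rank_half_wave = np.linalg.matrix_rank(rotating_retarder_rows(angles, 180.0))
rank_quarter_wave = np.linalg.matrix_rank(rotating_retarder_rows(angles, 90.0))
print(rank_polarizer, rank_half_wave, rank_quarter_wave)
```

In this idealized model, the rotating polarizer and the half-wave plate give no access to the fourth Stokes component, whereas the quarter-wave plate yields a full-rank system.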

PSA Using Liquid Crystal Cells

It is advantageous to replace the mechanically rotating polarization element with an electrically controlled liquid crystal cell. See [71] for an overview of the physics and use of these liquid crystals. This idea, due to Wolff [51], gave rise to many works in the 2000s. In this first implementation, polarization is rotated by two twisted nematic liquid crystal cells controlled in a binary manner; therefore, a maximum of four directions of analysis is obtained. It is reasonable to estimate that a rate of 25 frames per second (fps) can be reached. Gandorfer accelerated this setup by using ferroelectric liquid crystal cells (smectic C) [53], thus achieving 250 fps. Some experimental works improved this principle by using a single tunable ferroelectric liquid crystal cell, which allows a continuous adjustment of the polarization rotation [56,72] and provides three Stokes parameters. Optimized use of modulator control and chromatism also gives access to the fourth Stokes parameter, but at the cost of higher noise [73].

Commercial liquid crystal polarimetric cameras were marketed as early as the 2000s by BossaNova [57,58]. Today, cameras based solely on liquid crystal modulators are no longer an optimum solution and have mainly been replaced by Polarimetric Filter Array (PFA) cameras (see Section 2.2.5) when only the linear polarization is of interest. However, liquid crystal modulators remain interesting for analyzing circular polarization [74] (impossible for commercial PFA cameras) and also for generating polarization states in Mueller imaging as Polarization State Generators.

Liquid crystal techniques for skylight observation were reported in Refs. [55,59].

2.2.2. PSA Using Replication-of-Aperture (RoAp)

These systems are conceptually very straightforward: as many cameras and optics as needed are placed next to each other. Systems dedicated to three Stokes parameters, measuring along three or four polarization directions, were reported [60,61,62]. These systems are rather expensive and require both a calibration of the different systems and a registration of the different polarization images before estimating Stokes parameters.

2.2.3. PSA Using Division of Amplitude (DoAmp)

Use of Beam Splitters

This type of assembly is conceptually quite simple, since it consists of dividing the beam into as many sub-beams as there are measurements to be performed. In practice, if one wants to access all the Stokes information, this leads to relatively heavy setups, since four analysis arms are required. The optical elements must be of high quality and the images must be mechanically or digitally registered. An apparently high-performance compact version has been proposed [63]; it allows access to all the linear polarization information. A simplified version consists of placing oneself in a monostatic configuration and analyzing only two crossed polarization components by dividing the beam using a Wollaston prism. The latter makes it possible to shift the two polarization components and thus juxtapose them on the detector [64]. It should be noted that a version providing full Stokes information has already been implemented in the infrared [75].

A noteworthy approach combining DoT and DoAmp has been suggested [65]. It forms Stokes components calculated from images acquired simultaneously, therefore with a reduced shift between the different images. In this case, only the first three Stokes components are considered, and two measurement arms are used, preceded by a ferroelectric liquid crystal modulator acting as a rotator. Thus, $I_0$ and $I_{90}$ are simultaneously acquired, and afterwards $I_{45}$ and $I_{-45}$. An approach combining DoA and DoFP is also possible [76].

Use of PGA

The measurement of the different polarization components can also be performed via Polarization Gratings Arrays (PGAs) [77,78]. They are composed of anisotropic diffraction optical elements to spatially separate polarization information. PGAs have the property of producing chromatic dispersion proportional to the polarization state of the light, generating a pattern that can be focused and captured on a focal plane. This technique has the advantage of capturing polarization information using a spectral band with a spectral resolution down to 1 nm [79] and allows spectropolarimetric imaging with a simple and compact design.

2.2.4. PSA Using Division of Aperture (DoAp)

This technique is rather similar to the division-of-amplitude method, but the system is more compact since it uses only one camera [66] at the expense of a loss of definition in the polarization images. The optical system is also more complex. It was implemented in the middle-wave infrared but could be considered in the visible range [66].

2.2.5. PSA Using Division of Focal Plane (DoFP)

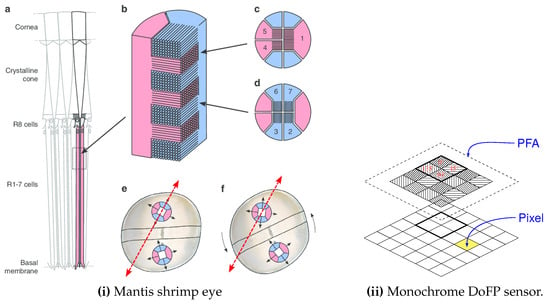

The idea illustrated in Figure 3 takes up that already proposed by Bayer for RGB cameras [80]: the pixels do not all capture the same state of polarization. An array of microfilters (aluminum nanowires), often referred to as a Polarizer Filter Array (PFA) and composed of a pattern of four pixelated polarizers repeated many times over the grid, is placed in front of the sensor. These four polarizers allow capturing vertical, horizontal, 45° and −45° linear polarizations. This idea, proposed by [67] and implemented in particular by Gruev et al. [68], has been commercially developed by 4D Technology [69] and especially Sony Semiconductors, which provides sensors to camera integrators [70]. Tremendous progress has been made with this technology over the past ten years. Whereas 4D Technology puts the PFA on top of the microlens array, Sony puts the PFA between the microlens array and the sensor itself, which greatly reduces polarization crosstalk, as shown in Figure 4.

Figure 3.

(i) Mantis shrimp eye is a good example of Division of Focal Plane as far as polarization is concerned (originally published in [81] and made available under CC-BY-SA license [82]). Subfigure (i).a highlights a rhabdom (in pink), which can be seen as a waveguide. The cornea acts as a sensor. A section of the rhabdom is shown in Subfigure (i).b, with retinular cells made of microvilli stacks (here coloured in red and blue), as described in Subfigures (i).c and (i).d. These microvilli act as polarizers. Since each rhabdom contains microvilli in crossed directions, each rhabdom allows selection of two crossed polarizations. Since rhabdoms are shifted by 45° between the ventral and dorsal hemispheres as depicted in Subfigures (i).e and (i).f, the eye can actually sense 4 equally spaced directions of polarization. In Subfigure (i).e, polarization direction (red arrow) is aligned with a set of microvilli in the dorsal hemisphere, so the polarization direction is easily detected. In Subfigure (i).f, the eye has rotated by 22.5°; polarization direction (red arrow) is aligned with none of the sets of microvilli in the dorsal or ventral hemispheres, so the eye cannot detect the polarization direction. Subfigure (ii) describes a modern polarization-sensitive camera sensor, such as Sony Polarsens IMX264MZR, which mimics the mantis shrimp eye, with micropolarizers with different orientations placed side by side in front of the photosensitive sensor.

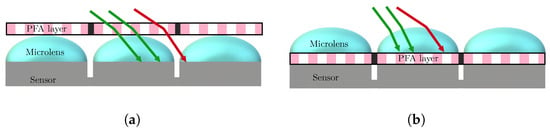

Figure 4.

Two assembly schemes for PFA integration: on-glass (a) and on-chip (b) schemes. In both schemes, most rays (depicted as green arrows) hit the right pixel. For the on-glass scheme, some oblique rays (red arrows) may hit the wrong pixel, which is not possible with the on-chip scheme. Therefore the on-chip scheme used in PolarSens Sony Sensors, with the PFA between the microlenses and the sensor, greatly reduces polarimetric crosstalk. Reproduced with permission from Yilbert Gimenez [83].

The cameras based upon Sony PolarSens sensors proved to be very successful in the scientific community and have been the subject of much literature concerning their characterization and calibration [84,85,86,87,88,89] and demosaicing preprocessing [90,91,92,93]. Among other things, they have enabled the development of applications for driving assistance [94,95] and autonomous navigation [96].

Commercially available cameras have resolutions of 5 to 12 Mpixels and provide 12-bit information with moderate noise for less than $1500. Depending on their communication interface, they can be operated up to 90 fps. If we stick to the acquisition of linear polarization, they have supplanted the DoT and DoA technologies. Since the operating rate only depends on the sensor technology, PFA cameras able to operate up to 7000 fps are reported [97,98]. To acquire the complete Stokes vector, one can combine two PFAs (one of which is equipped with a retarder waveplate) [76] in a hybrid DoA–DoFP architecture or place a liquid crystal modulator in front of the camera (DoT–DoFP architecture) [99]. A laboratory device acquiring the full Stokes information using a single PFA has been proposed [100].

In DoFP PFA systems, the most common polarization arrangement is a repeating $2 \times 2$ pattern of analyzers, introduced by Chun et al. [67]. Other spatial arrangement patterns for micropolarizers have been found to be less sensitive to visual artifacts in the reconstructed images [101,102,103], but none have been implemented in a camera to our knowledge.

2.3. Calibration and Preprocessing Operations

The aforementioned hardware systems, whatever their characteristics, provide raw data that must be preprocessed in order to be used for navigation operations. Such raw data, without any corrections or preprocessing, usually result in polarimetric data full of artifacts.

2.3.1. PSA Calibration

To precisely estimate the Stokes vector from intensity measurements, $\mathbf{W}$ must be estimated very accurately. This compensates for the imperfect polarization optics, i.e., the transmission, diattenuation, and polarization angle characteristics. A first solution consists of a component-wise calibration using a reference metrology polarimeter [104]. Another popular solution is a block calibration of the whole system from the camera responses. It consists of generating a set of M well-known reference polarization states using an ‘ideal’ polarizer (the Stokes vectors of which are gathered in a matrix named $\mathbf{S}_{\mathrm{ref}}$) and taking a set of N measurements with the PSA for each of the reference states, gathered in a matrix named $\mathbf{I}_{\mathrm{ref}}$. Therefore, we can write the following:

$$\mathbf{I}_{\mathrm{ref}} = \mathbf{W}\,\mathbf{S}_{\mathrm{ref}}$$

An estimate of $\mathbf{W}$ can then be computed using the pseudo-inverse method [45,46] by $\hat{\mathbf{W}} = \mathbf{I}_{\mathrm{ref}}\,\mathbf{S}_{\mathrm{ref}}^{+}$, and thus the Stokes vector is estimated by using $\hat{\mathbf{S}} = \hat{\mathbf{W}}^{+}\,\mathbf{I}$, where $\hat{\mathbf{W}}^{+}$ is often called the Data Reduction Matrix (DRM). Alternative estimators have also been considered, like Singular Value Decomposition (SVD) [105] or the Eigenvalue Calibration Method (ECM) [106]. Some works assume that the polarization measurement is mainly affected by signal-dependent Poisson shot noise and Gaussian noise. These considerations have been used to select the optimal reference polarization states to take into account both Poisson and Gaussian noises [85,107,108].
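The block calibration can be illustrated with a small noise-free simulation. In the sketch below, an imperfect four-channel linear PSA is simulated by perturbing an ideal measurement matrix, reference states are generated with an ideal polarizer at known angles, and the measurement matrix is recovered with the pseudo-inverse. All names, the perturbation level, and the number of reference states are assumptions chosen for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)

def ideal_w(angles_deg):
    """Ideal N x 3 measurement matrix of linear analyzers."""
    a = np.deg2rad(np.asarray(angles_deg, dtype=float))
    return 0.5 * np.column_stack([np.ones_like(a), np.cos(2 * a), np.sin(2 * a)])

# Hypothetical imperfect PSA: ideal analyzers plus small random deviations.
w_true = ideal_w([0.0, 45.0, 90.0, 135.0]) + 0.02 * rng.standard_normal((4, 3))

# M = 12 fully linearly polarized reference states (3 x M matrix).
ref = np.deg2rad(np.arange(0.0, 180.0, 15.0))
s_ref = np.vstack([np.ones_like(ref), np.cos(2 * ref), np.sin(2 * ref)])

# Measurements of each reference state (N x M), here without noise.
i_ref = w_true @ s_ref

# Calibration: estimate W, then derive the data reduction matrix.
w_hat = i_ref @ np.linalg.pinv(s_ref)
drm = np.linalg.pinv(w_hat)

# Use the calibrated DRM on a new, partially polarized state.
s_test = np.array([1.0, 0.3, -0.2])
s_est = drm @ (w_true @ s_test)
print(s_est)
```

With measurement noise, the recovery is no longer exact, and the choice of reference states influences how noise propagates into the estimate.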

Depending on the type of PSA, calibration may be simplified; for instance, a CCD camera equipped with a rotating polarizer may not require a pixel-to-pixel characterization. A polarimeter including a liquid crystal (LC) cell will require a careful characterization of the LC cell (whose behavior may depend on wavelength and temperature). A PFA CMOS camera may require a full characterization since both the CMOS sensor and the PFA exhibit pixel-to-pixel variations [109].

Calibration methods have been specifically designed for DoFP PFA polarimeters, like the super-pixel method [84,87,110], which jointly calibrates a group of pixels instead of calibrating each pixel independently. A recent study evaluated the efficiency of this method for extreme camera lens configurations (focal lengths and apertures) [89]. Other advanced calibration methods have been studied which do not need precise and cumbersome instruments [111,112] or spatially uniform illumination [85]. In some cases, for instance if the camera is used in an 8-bit mode, especially when it is based on a Sony PolarSens sensor, a single overall calibration may be sufficient. In this case, only the average values of the transmission ratio and the orientation angle are considered over the whole image [113].

2.3.2. Spatial Reconstruction of DoFP Images

In the case of DoFP polarimeters, there is a spatial sampling of the analyzers, i.e., the focal plane array is spatially modulated. Thus, each pixel senses only a specific polarization state of a specific point in the scene. A very easy solution consists of subsampling the original raw image into four linear polarization direction images, but this operation produces Instantaneous Field of View (IFoV) errors resulting in strong artifacts in $DoLP$ and $AoLP$ images. A registration seems mandatory [114,115]. An alternative consists of reconstructing directional images produced by PFA cameras to their full resolution, in order to avoid possible interpretation errors by computer vision algorithms. This aims to estimate the values of each of the missing polarization channels at a pixel location. The operation is called demosaicing, and has been extensively studied in the literature, especially for RGB Bayer microfilter patterns.
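The simple subsampling strategy can be sketched as follows. The mapping `LAYOUT` between positions in the 2 × 2 super-pixel and analyzer angles is an assumption made for illustration; the actual layout depends on the sensor and should be taken from its datasheet. The function name is hypothetical as well.

```python
import numpy as np

# Assumed super-pixel layout: (row offset, column offset) -> analyzer angle in degrees.
LAYOUT = {(0, 0): 90, (0, 1): 45, (1, 0): 135, (1, 1): 0}

def split_dofp_mosaic(raw):
    """Split a raw DoFP mosaic into four quarter-resolution channel images."""
    raw = np.asarray(raw)
    if raw.shape[0] % 2 or raw.shape[1] % 2:
        raise ValueError("raw image must have even height and width")
    # Strided slicing picks one pixel per super-pixel for each analyzer angle.
    return {angle: raw[r::2, c::2] for (r, c), angle in LAYOUT.items()}

raw = np.arange(16).reshape(4, 4)  # toy 4 x 4 mosaic
channels = split_dofp_mosaic(raw)
print(channels[0])
```

Each channel image samples slightly different scene points, which is the origin of the IFoV errors; demosaicing instead interpolates the missing channels at every pixel location.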

As in the spectral case, demosaicing algorithms can benefit from several assumptions, like spatial correlation or polarization channel correlations. Nevertheless, several algorithms are dedicated to DoFP, including the physical constraint [116]. These are based either on filtering [117,118], adaptive filtering [119,120], linear systems theory [114], motion [121,122], or learning approaches [123,124,125].

2.3.3. Spatial Registration of Elementary Polarization Images

Many polarimeters exhibit a spatial shift between the various polarization channels. It can be due to an imperfect alignment (Division of Amplitude polarimeters), to imperfect components, for instance wedge effect (Division of Time polarimeters), or to a rigid relative motion between the camera and the scene (Division of Time polarimeters). This shift, even if it is a subpixel shift, is likely to produce strong artifacts in images for instance [126]. Efficient solutions can be applied to circumvent this phenomenon [127,128].

Images produced by PFA DoFP cameras also exhibit a shift when subsampled without demosaicing (see Section 2.3.2) and should also be registered [129].

Images produced by scanning polarimeters exhibit polarization artifacts at the edges of moving objects. This can be solved by optical flow techniques [130], but this solution remains rather computationally intensive [131]. For this very reason, DoFP PFA polarimeters are now far more popular than DoT polarimeters.

2.3.4. Denoising Polarization Images

As with any imaging system, an imaging polarimetric system is likely to be affected by noise. An abundant literature deals with this issue; a starting point could be Refs. [43,132,133,134]. This noise can be due to physics (Poisson noise) or to imperfect or miscalibrated instruments (Gaussian noise, shift between polarimetric bands, etc.). Modern PSAs, provided they are carefully used and carefully calibrated, can produce intensity images with a reduced noise level, but the polarimetric pipeline leading to DoLP and AoLP estimation may amplify this noise [135]. Recently, a metric named Accuracy of Polarization Measurement Redundancy (APMR) was proposed to quantify this polarimetric noise in intensity images [136], but it is not necessarily correlated with the noise affecting the Stokes parameters.

To reduce noise, temporal averaging of intensity images is an obvious solution, although it is not always practicable. Filtering solutions originally dedicated to luminance or intensity imaging, such as BM3D [137], can be successfully adapted to polarization imaging, after Stokes estimation [138]. Another popular solution consists of using more than the minimum of three PSA configurations necessary to reconstruct the first three Stokes parameters, which is naturally possible for commercial PFA DoFP architectures since they include four polarization directions by construction. It can be combined with other solutions consisting of calibrating the PSA (see Section 2.3.1) and, if necessary, compensating for IFoV errors (see Section 2.3.2).
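The use of more than three analyzer configurations can be sketched as a least-squares problem. The example below is an illustration under stated assumptions, not the calibration-aware estimators of the cited works: it assumes ideal linear analyzers following $I(\alpha) = \tfrac{1}{2}\,(S_0 + S_1\cos 2\alpha + S_2\sin 2\alpha)$, and the function names are hypothetical.

```python
import numpy as np


def stokes_from_intensities(intensities, angles_deg=(0, 45, 90, 135)):
    """Least-squares estimate of (S0, S1, S2) from N >= 3 analyzer images.

    `intensities` has the analyzer index on its first axis, e.g. shape
    (4,) for a single pixel or (4, H, W) for four direction images.
    Ideal analyzers are assumed (no calibration terms).
    """
    a = np.deg2rad(np.asarray(angles_deg, dtype=np.float64))
    # One row per analyzer: I = 0.5 * (S0 + S1*cos(2a) + S2*sin(2a))
    W = 0.5 * np.stack([np.ones_like(a), np.cos(2 * a), np.sin(2 * a)], axis=1)
    I = np.asarray(intensities, dtype=np.float64)
    # The pseudo-inverse (3 x N) averages out part of the noise when N > 3.
    return np.tensordot(np.linalg.pinv(W), I, axes=(1, 0))


def dolp_aolp(S):
    """Degree and angle of linear polarization from (S0, S1, S2)."""
    S0, S1, S2 = S
    dolp = np.sqrt(S1**2 + S2**2) / np.maximum(S0, 1e-12)
    aolp = 0.5 * np.arctan2(S2, S1)  # radians, in (-pi/2, pi/2]
    return dolp, aolp
```

With the four directions of a PFA sensor the system is overdetermined by one equation, which is what provides the redundancy mentioned above; a calibrated analyzer matrix could replace `W` without changing the rest of the sketch.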

2.4. Extension to Multispectral Polarimetric Sensing

The principles described earlier are compatible with broadband imaging, since linear polarizers usually exhibit a rather flat response over the visible range (and beyond). Nevertheless, when using waveplates or liquid crystal devices, a narrow spectral filter may be required since the retardance of such components depends on the wavelength, even for achromatic waveplates. Not taking this phenomenon into account results in errors in the estimated polarization parameters [139].

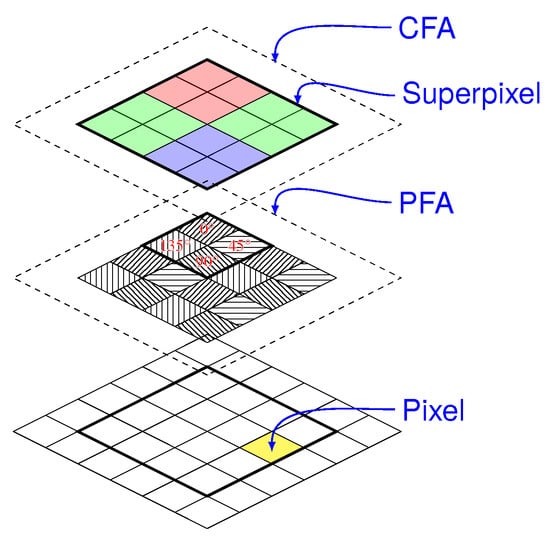

Multispectral sensing can be considered, generally at the expense of further division of space or time [140]. The first implementations consisted of a rotating wheel equipped with spectral filters [141,142]. As a snapshot alternative, color polarization filter arrays, referred to as CPFAs, have been commercially released in the past few years [70,143]: they mix two principles, the CFA (Color Filter Array) and the PFA, as described in Figure 5.

Figure 5.

Color polarization filter array, such as those implemented in commercial sensors Sony IMX250MYR and IMX253MYR. An efficient demosaicing procedure is required.

With such devices, we obtain 12-channel mosaiced images, so the information is rather sparse for each channel. Efficient demosaicing algorithms are required to prevent color and polarization reconstruction artifacts [125,144,145]. Alternative geometries combining CFA and PFA have been proposed in order to maximize the signal-to-noise ratio or minimize the reconstruction artifacts [145,146,147].

2.5. Summary and Future Directions in Embedded Polarization Sensing

Efficiently capturing linear polarization in 2D in the field of autonomous navigation could benefit from several recent technological developments.

First, most snapshot polarimeters capture the filtered intensities in only one spectral band and in the visible part of the spectrum. This is no longer a real limit with color PFAs, at the expense of a loss in spatial resolution and an attenuation due to the use of spectral filters. This latter point has recently been overcome with a very promising solution consisting of using metasurfaces as routers [148]. The loss of spatial resolution could also be solved through the use of vertically stacked detectors, mimicking a mantis shrimp’s eye, as suggested and implemented by Garcia et al. [149,150] and Altaqui et al. [151]. This could enable the snapshot capture of spectropolarimetric information [152], which could be relevant to computer vision algorithms such as visibility restoration [153].

Second, robotic navigation using polarization sensing only considers linear polarization since skylight contains mainly linear polarization information. Using circular polarization may help, especially when navigation safety is concerned, as suggested by Geng et al. [154]. This will require that full Stokes snapshot PSAs are available. Several solutions could be considered, for instance using two PFA cameras [76], but promising solutions using only one camera also exist [155].

Finally, it seems that imaging sensors have already reached a mature level that has enlarged the audience of polarimetry. Open-source software toolkits such as Polanalyzer [156] and Pola4all [157] will be useful in the near future to help end users in robotics applications and beyond in the implementation of efficient solutions.

4. Polarized Vision for Scene Understanding

In nature, light polarization occurs mainly due to two physical phenomena: light scattering and light reflection [275]. As an illustration of the latter, many animal species such as water fleas and butterflies are sensitive to the polarization of light and exploit this ability to discriminate water [276]. This section focuses on how robots may take advantage of sensing polarized light to understand scenes through detection, estimation of 3D shapes, and depth and pose estimation. Ref. [277] may be the first in the literature to emphasize, in the computer vision field, how the polarization parameters of light are related to the estimation of object normals. In this section, after recalling and deriving the mathematical formulae linking the polarization parameters to the normal orientation, the direct applications, i.e., object detection and discrimination, will be described. Shape from polarization, which exploits most of the physical information, will then be presented, and the section will end with the latest techniques using polarization imaging to improve depth estimation and/or facilitate pose estimation in robots.

4.1. Polarization and Reflection

The reflection model employed here is a simplified one, providing a first approximation of the use of polarimetric imaging for the detection and 3D reconstruction of objects. In practice, reflected light is a combination of two types of reflection: diffuse and specular. On reaching an interface between two media with different properties, light is partially reflected and partially transmitted. Considering a beam traveling through a first medium (characterized by a refractive index $n_1$) and then reaching the interface with a second medium (characterized by a refractive index $n_2$), the directions of the reflected and transmitted beams are given by Snell’s law:

$$n_1 \sin\theta_i = n_2 \sin\theta_t, \qquad \theta_r = \theta_i \tag{12}$$

where $\theta_i$, $\theta_t$, and $\theta_r$ are the angles of incidence, transmission, and reflection, respectively. In addition, the incident, transmitted, and reflected beams lie in the same plane, which contains the normal to the surface and is called the plane of incidence.

4.1.1. Recall of Fresnel Formulae

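The Fresnel formulae can be determined by solving Maxwell’s equations while respecting the continuity conditions imposed at the interface on the electric and magnetic fields. Letting r and t denote the reflection and transmission coefficients, i.e., the ratios of the complex amplitudes of the reflected and transmitted electric fields to the amplitude of the incident electric field, we have the following (see [28]):

$$
r_\parallel = \frac{\tan(\theta_i-\theta_t)}{\tan(\theta_i+\theta_t)}, \quad
r_\perp = -\frac{\sin(\theta_i-\theta_t)}{\sin(\theta_i+\theta_t)}, \quad
t_\parallel = \frac{2\sin\theta_t\cos\theta_i}{\sin(\theta_i+\theta_t)\cos(\theta_i-\theta_t)}, \quad
t_\perp = \frac{2\sin\theta_t\cos\theta_i}{\sin(\theta_i+\theta_t)} \tag{13}
$$

where ‖ (resp. ⊥) denotes the components in the plane of incidence (resp. normal to the plane of incidence), and $\theta_i$ and $\theta_t$ are the angles of incidence and transmission.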
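As a numerical illustration of Snell’s law and of the Fresnel amplitude coefficients, the following sketch evaluates them for a real-index interface. It uses the cosine form of the coefficients, which is algebraically equivalent to the tangent/sine form and avoids the 0/0 indeterminate value at normal incidence. The function names and the default indices (air to a dielectric with index 1.5) are assumptions made for the example, and the sketch is only valid below the critical angle.

```python
import numpy as np


def snell_transmission_angle(theta_i, n1=1.0, n2=1.5):
    """Transmission angle from n1*sin(theta_i) = n2*sin(theta_t)."""
    return np.arcsin(n1 * np.sin(theta_i) / n2)


def fresnel_amplitudes(theta_i, n1=1.0, n2=1.5):
    """Fresnel amplitude coefficients (r_par, r_perp, t_par, t_perp).

    Real refractive indices are assumed, with theta_i below the critical
    angle when n1 > n2. Sign convention: r_par = tan(ti-tt)/tan(ti+tt),
    r_perp = -sin(ti-tt)/sin(ti+tt).
    """
    theta_t = snell_transmission_angle(theta_i, n1, n2)
    ci, ct = np.cos(theta_i), np.cos(theta_t)
    r_perp = (n1 * ci - n2 * ct) / (n1 * ci + n2 * ct)
    r_par = (n2 * ci - n1 * ct) / (n2 * ci + n1 * ct)
    t_perp = 2 * n1 * ci / (n1 * ci + n2 * ct)
    t_par = 2 * n1 * ci / (n2 * ci + n1 * ct)
    return r_par, r_perp, t_par, t_perp
```

At the Brewster angle, `np.arctan(n2 / n1)`, the parallel reflection coefficient vanishes, so the reflected beam is fully polarized perpendicular to the plane of incidence.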

4.1.2. Partial Polarizer

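The Mueller matrices of reflection and transmission are directly related to the Mueller matrix of a partial polarizer, written here with the Stokes reference axis taken perpendicular to the plane of incidence:

$$
M = \frac{1}{2}
\begin{pmatrix}
\alpha_\perp + \alpha_\parallel & \alpha_\perp - \alpha_\parallel & 0 & 0 \\
\alpha_\perp - \alpha_\parallel & \alpha_\perp + \alpha_\parallel & 0 & 0 \\
0 & 0 & 2\sqrt{\alpha_\perp \alpha_\parallel} & 0 \\
0 & 0 & 0 & 2\sqrt{\alpha_\perp \alpha_\parallel}
\end{pmatrix} \tag{14}
$$

where $\alpha_\perp$ and $\alpha_\parallel$ are the intensity ratio coefficients for the components perpendicular and parallel to the plane of incidence, respectively. Therefore, assuming the incoming light is unpolarized, the outgoing light is partially linearly polarized with a degree of polarization (DoP) equal to the following:

$$\mathrm{DoP} = \frac{\left|\alpha_\perp - \alpha_\parallel\right|}{\alpha_\perp + \alpha_\parallel} \tag{15}$$

In addition, it can be deduced that the polarized vibrations are orthogonal to the plane of incidence if $\alpha_\perp > \alpha_\parallel$ and parallel otherwise.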
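The link between the partial polarizer and the degree of polarization can be checked numerically. The sketch below builds the standard Mueller matrix of a linear partial polarizer from two intensity ratio coefficients, here called `a_perp` and `a_par` (hypothetical names), with the Stokes reference axis assumed to be perpendicular to the plane of incidence, and applies it to unpolarized light.

```python
import numpy as np


def partial_polarizer_mueller(a_perp, a_par):
    """Mueller matrix of a linear partial polarizer.

    a_perp, a_par: intensity ratios for the components perpendicular and
    parallel to the plane of incidence. The reference axis of the Stokes
    vector is assumed to be the perpendicular direction.
    """
    m = np.sqrt(a_perp * a_par)
    return 0.5 * np.array([
        [a_perp + a_par, a_perp - a_par, 0.0, 0.0],
        [a_perp - a_par, a_perp + a_par, 0.0, 0.0],
        [0.0, 0.0, 2.0 * m, 0.0],
        [0.0, 0.0, 0.0, 2.0 * m],
    ])


def degree_of_polarization(S):
    """DoP of a Stokes vector S = (S0, S1, S2, S3)."""
    return np.sqrt(S[1]**2 + S[2]**2 + S[3]**2) / S[0]


# Unpolarized light through a partial polarizer with ratios 0.3 and 0.1.
S_out = partial_polarizer_mueller(0.3, 0.1) @ np.array([1.0, 0.0, 0.0, 0.0])
dop = degree_of_polarization(S_out)  # equals |0.3 - 0.1| / (0.3 + 0.1)
```

The sign of the second Stokes component of `S_out` indicates the polarization direction: positive means orthogonal to the plane of incidence under the reference-axis assumption above.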

4.1.3. Specular Reflections

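To study the polarization properties of specularly reflected light, Equation (15) can be used by replacing the perpendicular and parallel ratio coefficients by the Fresnel reflection ratios $F_\perp = |r_\perp|^2$ and $F_\parallel = |r_\parallel|^2$ obtained from Equation (13). If we denote $\theta_r$ as the angle of reflection and n as the real refractive index of the medium on which the beam is reflected, and assume that the refractive index of air is equal to 1, $F_\perp$ and $F_\parallel$ can be rewritten:

$$
F_\perp = \left(\frac{\cos\theta_r - \sqrt{n^2-\sin^2\theta_r}}{\cos\theta_r + \sqrt{n^2-\sin^2\theta_r}}\right)^{2}, \qquad
F_\parallel = \left(\frac{n^2\cos\theta_r - \sqrt{n^2-\sin^2\theta_r}}{n^2\cos\theta_r + \sqrt{n^2-\sin^2\theta_r}}\right)^{2}
$$

Consequently, Equation (15) for the degree of polarization can be rewritten as a function of the angle of reflection $\theta_r$ and the refractive index n [278]:

$$
\mathrm{DoP}(\theta_r) = \frac{2\sin^2\theta_r\cos\theta_r\sqrt{n^2-\sin^2\theta_r}}{n^2-\sin^2\theta_r-n^2\sin^2\theta_r+2\sin^4\theta_r}
$$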

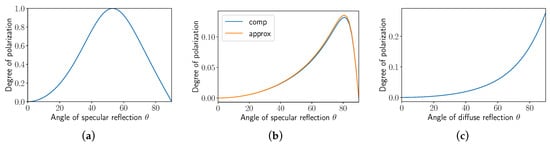

Figure 14a shows the plot of the previous equation with a refractive index n set to 1.5. As highlighted there, an ambiguity occurs when trying to determine the angle $\theta_r$ from the DoP: the curve increases up to the Brewster angle and decreases beyond it, so that two angles correspond to the same DoP value. The previous formulation of the DoP is only valid for dielectric materials. To derive a formula for a metallic object, the complex refractive index of the medium $\hat{n} = n(1 + i\kappa)$, where $\kappa$ is the attenuation index, must be taken into account [279]. The following approximation can be applied if we consider the visible region of the spectrum of light [231]:

$$|\hat{n}|^2 = n^2\left(1+\kappa^2\right) \gg 1$$

Figure 14.

Relationship between the DoP (Degree of Polarization) and the angle of reflection for (a) dielectric specular reflection, (b) metallic specular reflection and (c) diffuse dielectric reflection.

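Applying the same considerations as for dielectric objects, Equation (15) for the degree of polarization can be rewritten as the following:

$$
\mathrm{DoP}(\theta_r) = \frac{2\,n\tan\theta_r\sin\theta_r}{\tan^2\theta_r\sin^2\theta_r + |\hat{n}|^2}
$$

where $\theta_r$ is the angle of reflection and $|\hat{n}|$ is the modulus of the complex refractive index. The plot presented in Figure 14b assumes a metallic medium and again reveals an ambiguity in the determination of the angle $\theta_r$ from the measured DoP. Nevertheless, contrary to a dielectric object, the maximum occurs for a high value of the angle $\theta_r$, around 80°, and the reconstruction of the shape of smoothly curved objects can be carried out without solving this ambiguity.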
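The behavior of the two specular curves can be reproduced with a short sketch. The dielectric expression is the standard closed form for a real index n; the metallic expression is the usual approximation valid when the squared modulus of the complex index $n(1+i\kappa)$ is much larger than 1. The values n = 0.82 and κ = 5.99 are illustrative assumptions chosen for this example, not values taken from the figures.

```python
import numpy as np


def dop_specular_dielectric(theta, n=1.5):
    """DoP of specularly reflected, initially unpolarized light (dielectric)."""
    s2 = np.sin(theta) ** 2
    root = np.sqrt(n**2 - s2)
    return 2 * s2 * np.cos(theta) * root / (n**2 - s2 - n**2 * s2 + 2 * s2**2)


def dop_specular_metal(theta, n=0.82, kappa=5.99):
    """Approximate DoP for a metal with complex index n*(1 + i*kappa).

    Assumes |n_hat|^2 = n^2 * (1 + kappa^2) >> 1; n and kappa here are
    illustrative values only.
    """
    mod2 = n**2 * (1 + kappa**2)
    ts = np.tan(theta) * np.sin(theta)
    return 2 * n * ts / (ts**2 + mod2)


theta = np.deg2rad(np.linspace(1.0, 89.0, 881))  # 0.1 degree steps
peak_dielectric = np.rad2deg(theta[np.argmax(dop_specular_dielectric(theta))])
peak_metal = np.rad2deg(theta[np.argmax(dop_specular_metal(theta))])
```

For the dielectric case the peak sits at the Brewster angle, arctan(n), where the DoP reaches 1, and any DoP value below 1 is reached on both sides of it, which is the ambiguity discussed above. For the metallic approximation the peak is obtained where tan θ · sin θ equals the modulus of the complex index, which pushes it toward grazing angles when that modulus is large.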

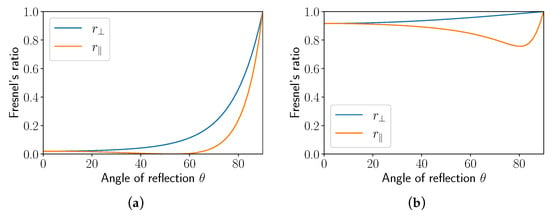

In addition, as can be seen in Figure 15, the orthogonal Fresnel ratio is always greater than the parallel one in both cases: dielectric and metallic media. Therefore, we can deduce that specularly reflected light becomes polarized orthogonally to the incidence plane. Since diffusely reflected light is polarized parallel to the incidence plane (see Section 4.1.4), the polarization contrast that can be measured for materials exhibiting both types of reflection tends to be reduced. Active polarization imaging, which is outside the scope of this review article, could be used to improve the contrast of such objects.

Figure 15.

Fresnel’s ratio for specular reflection according to the angle of reflection: (a) dielectric object with refractive index equal to 1.33, (b) metallic object with a complex refractive index.

4.1.4. Diffuse Reflections

Diffuse reflection that provides polarized light is generally considered to result from light that first penetrates the surface and becomes partially polarized by refraction. Then, within the medium, the light is randomly scattered and becomes depolarized. Part of the light is then refracted back into the air and becomes polarized. To obtain an expression of the degree of polarization according to the angle of diffuse reflection, Equation (15) can be used by replacing the perpendicular and parallel ratio coefficients by the Fresnel transmission ratios $T_\perp$ and $T_\parallel$ obtained from Equation (13). With $\theta_d$ denoting the angle of diffuse reflection, n denoting the refractive index of the medium from which the beam is refracted, and assuming that the refractive index of air is equal to 1, $T_\perp$ and $T_\parallel$ can be rewritten:

$$
T_\perp = \frac{4\cos\theta_d\sqrt{n^2-\sin^2\theta_d}}{\left(\cos\theta_d+\sqrt{n^2-\sin^2\theta_d}\right)^{2}}, \qquad
T_\parallel = \frac{4\,n^2\cos\theta_d\sqrt{n^2-\sin^2\theta_d}}{\left(n^2\cos\theta_d+\sqrt{n^2-\sin^2\theta_d}\right)^{2}}
$$

The degree of polarization of the light in the case of diffuse reflection from dielectric objects can then be rewritten:

$$
\mathrm{DoP}(\theta_d) = \frac{\left(n-\frac{1}{n}\right)^{2}\sin^2\theta_d}{2+2n^2-\left(n+\frac{1}{n}\right)^{2}\sin^2\theta_d+4\cos\theta_d\sqrt{n^2-\sin^2\theta_d}}
$$

Figure 14c shows the plot of the function linking the DoP to the angle $\theta_d$. As can be seen, the DoP is lower in the case of a diffuse reflection than in the case of a specular reflection. Nevertheless, the angle $\theta_d$ can be determined from the DoP without any ambiguity if the refractive index n of the medium is known.
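Because the diffuse curve is monotonic, the angle can be recovered from a measured DoP by a simple one-dimensional search. The sketch below uses the standard closed-form DoP for diffuse reflection from a dielectric and inverts it by bisection; the function names, the default index n = 1.5 and the number of iterations are assumptions made for the example.

```python
import numpy as np


def dop_diffuse(theta, n=1.5):
    """DoP of diffusely reflected light from a dielectric of index n."""
    s2 = np.sin(theta) ** 2
    num = (n - 1.0 / n) ** 2 * s2
    den = (2 + 2 * n**2 - (n + 1.0 / n) ** 2 * s2
           + 4 * np.cos(theta) * np.sqrt(n**2 - s2))
    return num / den


def zenith_from_diffuse_dop(dop, n=1.5, iters=60):
    """Invert dop_diffuse by bisection on [0, pi/2].

    Relies on the DoP increasing monotonically with the angle; the
    refractive index n must be known (here it is an assumed value).
    """
    lo, hi = 0.0, np.pi / 2
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if dop_diffuse(mid, n) < dop:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


theta_true = np.deg2rad(40.0)
theta_est = zenith_from_diffuse_dop(dop_diffuse(theta_true))
```

A closed-form inverse also exists for this expression; bisection is used here only because it makes the role of monotonicity explicit. In practice the low DoP values of diffuse reflection make this inversion sensitive to measurement noise.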

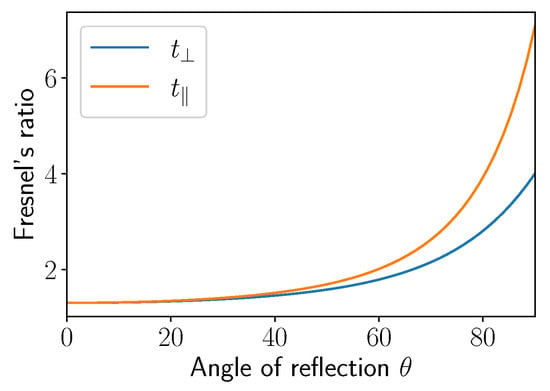

Also, contrary to specular reflections, as illustrated in Figure 16, the orthogonal Fresnel ratio is lower than the parallel one, which leads to the conclusion that light obtained by diffuse reflection is always polarized parallel to the incidence plane.

Figure 16.

Fresnel ratio for diffuse reflection from a dielectric object according to the angle of reflection [231].

4.2. Detection and Classification

Before finding applications in robotics, detection and segmentation of objects based on polarimetric imaging were initially developed in the field of computer vision [277]. In Ref. [280], the physical basis was developed and detailed to highlight the capabilities of polarimetric imaging to distinguish metallic materials from dielectric materials. More advanced classification techniques can be found in [25]. Subsequently, the benefits of this modality for enhancing the perception of transparent objects were revealed [281]. This task is essential in robotic gripping systems to manipulate transparent objects with ease [282], and improvements are continuously being made [283].

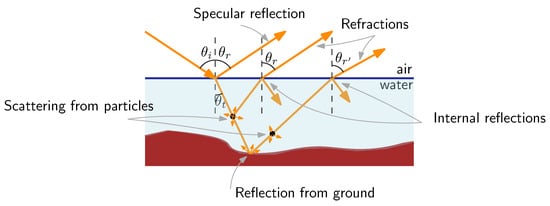

Autonomous robots are often based on bio-inspired systems regarding the perception task. Polarimetric cues are used by many water beetles and insects to search for bodies of water [276,284]. For instance, in ground robotics this modality has been exploited to detect water hazards or mud in conjunction with 3D sensing techniques such as LIDAR [285,286], stereo-vision [287,288], and mono-depth [289]. Figure 17 shows that the light reflected by water is made up of a proportion linked to the specular reflection as well as a proportion linked to refraction as described in the previous subsection. As shown in Figure 17, refraction is a combination of light scattered by particles in the water and light reflected by the ground. The Mueller matrix that models this phenomenon can be determined from the following:

In this expression, the Mueller matrices of reflection and refraction are combined with the Mueller matrix of a depolarizer and with an absorption coefficient that accounts for both the particles in the water and the ground. The reflection and refraction matrices can both be computed using the generic Mueller matrix defined in Equation (14), replacing its ratio coefficients by the appropriate Fresnel coefficients defined in Equation (13): the reflection coefficients for the reflection case and the transmission coefficients for refraction. Using Equation (12) enables us to write the Mueller matrices as a function of the angle of reflection and the refractive index of water n.

Figure 17.

Reflection and refraction of light on water.

Glass or transparent object segmentation remains a major issue for mobile robotics in urban environments, where it is needed to prevent collisions or misunderstanding of the scene. For instance, a learning-based method proposed in Ref. [290] that handles both polarization parameters and colorimetric information tends to outperform standard methods. More generally, the benefits of polarimetric imaging in urban scenes are still growing since it drastically improves segmentation tasks. Among these, we can cite road classification [94,291,292,293] and semantic segmentation [294]. Advanced classification tasks can also be performed, such as land mine detection [295,296] and astronomical solid body identification [297,298,299]. To increase segmentation quality, the polarization modality can be used advantageously with infrared imaging [293,300,301] or multispectral imaging [296]. Reflection removal [302] can also be seen as a direct application of the polarization properties of transparent surfaces to provide high-quality images for navigation tasks.

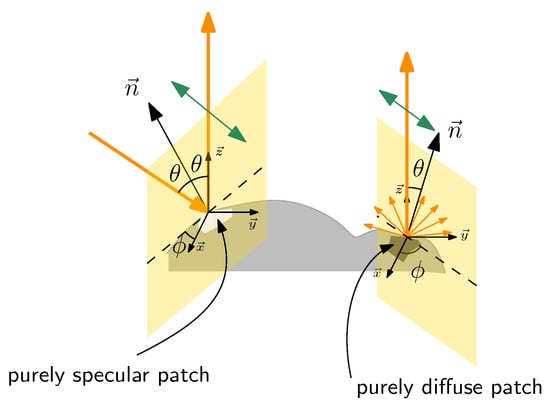

4.3. Shape from Polarization

In most robotics tasks, the perception of three-dimensional objects, the estimation of depth, and 3D reconstruction are all essential. As presented in Section 4.1, the polarization parameters of the light reflected or refracted from an object are directly related to the normal of the surface. Historically introduced by Wolff and Boult [303], the determination of surface normals from the measured polarization parameters led to a specific field of computer vision named “Shape from Polarization”. Assuming as a first approach that an orthographic lens is used in front of the polarimetric sensor, all light rays are parallel to the optical axis of the camera, as illustrated in Figure 18. In this frame, the unit normal can be written as the following:

$$
\vec{n} = \begin{pmatrix} \sin\theta\cos\phi \\ \sin\theta\sin\phi \\ \cos\theta \end{pmatrix}
$$

where $\theta$ and $\phi$ are the zenith and azimuth angles, respectively.

Figure 18.

Illustration of the “Shape from Polarization” basis with the two types of reflection: specular and diffuse. The direction of polarization is indicated in green.

Finally, the shape of the object is obtained by integrating the normal field. The zenith angle $\theta$ and the azimuth angle $\phi$ are related to the degree and the angle of polarization, respectively. Depending on the nature of the surface, highly reflective or diffuse, some ambiguities appear in the determination of the normals:

- Diffuse reflection: As long as the refractive index is known, there is no ambiguity in determining the zenith angle $\theta$ from the DoP. The main drawback is that the DoP is lower for diffuse reflection. An ambiguity remains regarding the azimuth angle $\phi$, which is equal to AoLP or AoLP + π, since the light is polarized in the plane defined by the normal and the reflected ray (Figure 18).

- Specular reflection: Assuming the refractive index is known, as shown in Figure 14, an ambiguity appears in the determination of the zenith angle $\theta$ from the DoP. In the same way, there is an ambiguity in the determination of the azimuth angle $\phi$, which is equal to AoLP ± π/2, since the light is polarized orthogonally to the incidence plane (Figure 18).
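The two azimuth ambiguities listed above can be made concrete with a small sketch. It assumes an orthographic camera whose optical axis is the z axis, a unit normal parametrized by a zenith angle and an azimuth angle, and an AoLP measured in the image plane; the function names are hypothetical and the disambiguation itself (priors, shading, depth, learning) is deliberately left out.

```python
import numpy as np


def normal_from_angles(theta, phi):
    """Unit surface normal from zenith angle theta and azimuth angle phi."""
    return np.array([np.sin(theta) * np.cos(phi),
                     np.sin(theta) * np.sin(phi),
                     np.cos(theta)])


def azimuth_candidates(aolp, reflection="diffuse"):
    """Candidate azimuth angles (radians, in [0, 2*pi)) for a measured AoLP.

    Diffuse: polarization lies in the incidence plane -> aolp or aolp + pi.
    Specular: polarization is orthogonal to it       -> aolp +/- pi/2.
    """
    if reflection == "diffuse":
        candidates = (aolp, aolp + np.pi)
    elif reflection == "specular":
        candidates = (aolp + np.pi / 2, aolp - np.pi / 2)
    else:
        raise ValueError("reflection must be 'diffuse' or 'specular'")
    return tuple(float(np.mod(c, 2 * np.pi)) for c in candidates)


# Two normals compatible with the same diffuse measurement.
zenith = np.deg2rad(30.0)
phi_a, phi_b = azimuth_candidates(np.deg2rad(20.0), "diffuse")
n_a = normal_from_angles(zenith, phi_a)
n_b = normal_from_angles(zenith, phi_b)
```

The two candidate normals share the same z component and have opposite x and y components, which is why an additional cue is needed before the normal field can be integrated into a surface.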

Shape from polarization started with the 3D reconstruction of objects having some priors about their shape to facilitate the disambiguation process [278,304,305,306]. Active lighting sources [279,307], multi-spectral imaging [308,309,310], multi-view [278] and Shape from Shading techniques [311,312,313,314] were also used in addition to shape from polarization to get the most out of these techniques. Under flash illumination and using deep learning, Deschaintre et al. [315] captured the shapes of objects, including the bidirectional reflectance distribution function, by using polarization considerations. It is important to point out that multi-spectral imaging [309,310] in conjunction with polarization imaging enables the estimation of both the refractive index and the normals. Smith et al. [313] started with objects that provide both specular and diffuse reflections under controlled illumination, and later conducted experiments with unknown lighting [316]. To manage the ambiguity between diffuse-dominated and specular-dominated reflection [317], they were the first to introduce deep learning and to provide a lighting-invariant algorithm based on shape from shading. Yang et al. [318] succeeded in using deep learning to reconstruct an object with shape from polarization information only. Knowing the polarization pattern of the blue sky [319] can also help to determine the object’s shape, but this method is not suitable for real-time use. The evolution of shape from polarization is summarized in Table 2.

Table 2.

Evolution of Shape from Polarization in the literature.

4.4. Three-Dimensional Depth with Polarization Cues

Thanks to its ability to estimate the normals, polarization imaging is increasingly involved in 3D depth estimation. To improve the 3D reconstruction of objects, Kadambi et al. [321] combine polarization imaging with an aligned depth map obtained from a Kinect. Disambiguation is initiated by the depth map and the integration process starts with depth map estimation and is then improved by including the estimation of normals.

4.4.1. Stereo-Vision Systems

Also, in some stereo-vision systems, polarization imaging can help with the reconstruction of specular or transparent surfaces: Berger et al. [288] used a pair of polarimetric cameras to estimate the depth of a scene including water areas. Instead of using the polarization parameters to simplify the matching process, Fukao et al. [322] integrated all the measured parameters in a cost volume construction, from which the surface normals are then estimated. In a study carried out by Zhu and Smith [323], the pair comprises one RGB camera and one camera equipped with a linear polarizer (which could be replaced by a polarimetric camera). Even if restricted to controlled lighting, high-quality 3D reconstruction can be obtained by combining polarization imaging and a binocular stereo vision system thanks to the fusion scheme proposed by Tian et al. [324]. Cui et al. [325] proposed a multi-view acquisition system using polarization imaging that enables dense 3D reconstruction adapted to texture-less regions and non-Lambertian objects.

4.4.2. Pose Estimation and SLAM

Pose estimation and SLAM (Simultaneous Localization and Mapping) are also of major importance in the field of robotics, particularly for navigation tasks and scene analysis. Yang et al. [170] were the first authors to propose a polarimetric dense monocular SLAM system that can reconstruct 3D scenes in real time and provide improved results compared to conventional techniques when some regions are specular or texture-less.

Cui et al. [326] developed a relative pose estimation algorithm from polarimetric images which reduced the number of required point correspondences to two, but limited the analysis to diffuse reflection. Highly reflective and transparent objects are handled in Ref. [327], whose authors developed a network called PPP-net (Pose Polarimetric Prediction Network) that uses a two-step framework. After learning, the fusion of polarization information and physical cues provides the object mask, normal map and NOCS (Normalized Object Coordinate Space) map required by a final regression network for monocular 6D object pose estimation [328]. Additionally, a learning-based algorithm that focuses on human pose and shape estimation was recently developed by Zou et al. [329].

4.5. Summary and Future Directions in Polarized Vision for Scene Understanding

Polarization imaging is becoming an indispensable modality for robotics, both as a means of providing additional clues regarding the nature of objects and as a major contributor to 3D object recognition. Nevertheless, as presented in this section, polarization imaging cannot be a standalone system providing all the necessary information. Ambiguities remain regarding the azimuth and zenith angles, and priors on the refractive index or on shapes are sometimes unavoidable in robotics. Methods based on deep learning seem to overcome most of these limitations. Huang et al. [330] used a combination of stereo-vision and a polarization system to recover normals and disparity through a deep learning-based algorithm. Assuming only diffuse reflection, ambiguities were solved and, in addition, the authors succeeded in overcoming the restriction of using orthographic cameras. Consequently, standard stereo-vision systems can be advantageously replaced by a pair of color-polarized cameras.

Improved perception of the real world through polarization extends applications to more advanced systems such as event cameras [331,332] or iTOF (indirect Time Of Flight) cameras [333]. One solution to the major challenges in scene understanding and 3D estimation could be to fuse polarization cues across various wavelengths with a 3D sensor to provide robust reconstruction in the presence of specular or translucent objects, which can be found indoors or outdoors. Extending the fusion of polarization imaging and multispectral imaging to the detection task appears to be relevant for scene understanding.

5. Conclusions

The principles of polarization that we use today have been known since the 19th century, but due to the lack of experimental imaging systems able to operate in real time, few applications were reported until the late 1990s, whatever the field. The availability of digital cameras and liquid crystal modulators made it possible to implement such systems, and a variety of applications such as skylight navigation were considered. Commercial systems emerged in the early 2010s due to a major increase in the availability of high-definition low-noise commercial cameras able to sense linear polarization at 50–100 fps.

The Division-of-Focal Plane (DoFP) camera for linear polarization image capture is one of these cameras and appears to be the best-suited solution to robotic applications. Like the color filter arrays, this technology seems to have reached significant maturity in terms of performance and repeatability of the measurement, such that its use could be generalized in the future. A variation of this technology also makes it possible to make a joint acquisition of color and polarization images. In our opinion, an effort toward standardization and the definition of dedicated preprocessing pipelines remains to be made, possibly with open-source software toolkits.

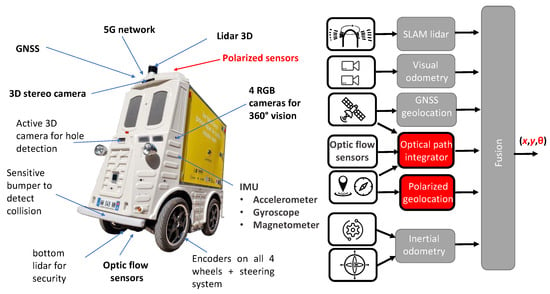

There are several advantages to using sky polarization for robotics navigation: this technology is undetectable, it has immunity to GNSS signal spoofing or jamming, the celestial heading detection estimate is driftless, and it could work at night by moonlight, making it exploitable in urban environments for civilian applications such as automated last-mile delivery service. An autonomous vehicle such as that proposed by the French company TwinswHeel (Figure 19) could use polarization for guidance as early as 2030.

Figure 19.

Logistics droid ciTHy L from TwinswHeel (payload up to 300 kg). This delivery droid is currently equipped with an Integrated Navigation System (INS) based on a triple redundancy of locations: 1st 3D Lidar, 2nd Stereo Camera, and 3rd GNSS + IMU + 4 wheels with encoders. The optical path integrator + polarized geolocation will be the 4th redundancy of location to make the robot geolocation more robust in all weather conditions and complex environments. The ciTHy L picture is courtesy of Vincent and Benjamin Talon, Co-founders of TwinswHeel (https://www.twinswheel.fr/, accessed on 19 March 2024).

The polarimetric systems described in this manuscript can estimate geolocation with sufficient precision using only skylight, and these systems are so lightweight and inexpensive that they could be embedded into terrestrial, aerial, or underwater autonomous vehicles. Improvements in the technology will enable such vehicles to operate using, for instance, the detection of multispectral polarization patterns. However, UV usage, detection of surrounding light in panoramic view and operation in complex weather conditions remain challenges.

Moreover, current popular polarimetric sensors are megapixel cameras, which are too bulky and expensive for applications in automotive or service robotics. An alternative could take its inspiration from nature: some animals detect the celestial heading with a visual system corresponding to the equivalent of very low-definition sensors, which was corroborated by simulations with neural networks using low-definition images. Therefore, an artificial retina, consisting of one thousand pixels (instead of one million pixels for a classical camera) and a dedicated trained processing unit could be the first step toward a low-cost polarimetric device aimed at autonomous navigation.

In this review, we also presented the benefits of polarimetric imaging for robots to help them better understand the world in which they will operate. The detection of transparent or potentially dangerous surfaces can be facilitated by analyzing the polarization of light reflected from surfaces. In an even more advanced way, we have seen how the 3D shape of objects can be estimated from the measurement of polarization parameters. Algorithms based on neural networks can now overcome the constraints associated with shape-from-polarization techniques, making it possible to generalize the reconstruction of objects outdoors under a variety of lighting conditions.

Autonomous robots working in urban environments, e.g., for last-mile delivery services, will have to locate and position themselves with a spatial accuracy of better than 5 cm and 0.2 degree by 2030. At the same time, in public areas, they must meet the most stringent safety requirements. Fusing the outputs of polarized sensors with an INS could provide a supplemental perceptive modality that complements and reinforces conventional localization techniques (3D LiDAR-based SLAM, GNSS, and visual–inertial odometry, see Figure 19) in order to reach the requested level of performance.

Author Contributions

All authors wrote Section 1—“Introduction” and Section 5—“Conclusions”. P.-J.L. and L.B. wrote Section 2—“Embedded polarization sensing”. J.R.S., S.V., T.K.-M. and A.M. wrote Section 3—“Polarized vision for robotics navigation”. O.M. wrote Section 4—“Polarized vision for scene understanding”. All authors wrote the first draft of the paper, prepared, and then revised the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

J.R.S. has received funding from the Excellence Initiative of Aix-Marseille Université-A*Midex, a French “Investissements d’Avenir” programme AMX-21-ERC-02 and AMX-20-TRA-043 helping him to succeed in this project. This research work was also supported by the SUD Provence-Alpes-Côte d’Azur Region (PACA) (Grant #2021/08135). A.M. was supported by a CIFRE doctoral fellowship from the ANRT and Safran Electronics and Defense (agreement #2021/0056). T.K.M. was supported by an inter-doctoral school fellowship from Aix Marseille University. This work was also supported by the ANR JCJC SPIASI project, grant ANR-18-CE10-0005 of the French Agence Nationale de la Recherche.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We thank Léo Poughon from both the STELLANTIS group and the Institute of Movement Sciences and Étienne-Jules Marey (CNRS/Aix Marseille University, ISM UMR7287), for their fruitful discussions concerning inputs of the PILONE PbC device. We also thank Laura Eshelman from Polaris Sensor Technologies Inc. (Huntsville, AL, USA) for providing us with a picture of SkyPASS. The authors would like to thank David Wood for revising the English of the manuscript. We also thank the three anonymous referees for their valuable comments.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| AoLP | Angle of Linear Polarization (sometimes referred to as Angle of Polarization) |
| APMR | Accuracy of Polarization Measurement Redundancy |
| BM3D | Block-Matching and 3D filtering |
| DoA | Division-of-Aperture |
| DoFP | Division-of-Focal Plane |
| DoLP | Degree of Linear Polarization |
| DoP | Degree of Polarization |
| DoT | Division-of-Time |
| DRA | Dorsal Rim Area |
| ENU | East North Up |
| fps | frames per second |
| GNSS | Global Navigation Satellite System |
| GPS | Global Positioning System |
| IFoV | Instantaneous Field of View |
| INS | Inertial Navigation System |
| ISO | International Organization for Standardization |
| NCP | North Celestial Pole |
| PbC | Polarization-based Compass |
| PFA | Polarimetric Filter Array |
| PG | Polarization Gratings |
| PSA | Polarization State Analyzer |
| RGB | Red Green Blue |
| RMSE | Root Mean Squared Error |
| SLAM | Simultaneous Localization And Mapping |
| SNR | Signal-to-Noise Ratio |
| UAV | Unmanned Aerial Vehicle |
| UV | UltraViolet |

References

- Yang, G.Z.; Bellingham, J.; Dupont, P.E.; Fischer, P.; Floridi, L.; Full, R.; Jacobstein, N.; Kumar, V.; McNutt, M.; Merrifield, R.; et al. The grand challenges of Science Robotics. Sci. Robot. 2018, 3, eaar7650.

- Horváth, G.; Lerner, A.; Shashar, N. Polarized Light and Polarization Vision in Animal Sciences; Springer: Berlin/Heidelberg, Germany, 2014; Volume 2.

- Able, K.; Able, M. Manipulations of polarized skylight calibrate magnetic orientation in a migratory bird. J. Comp. Physiol. A 1995, 177, 351–356.

- Cochran, W.W.; Mouritsen, H.; Wikelski, M. Migrating Songbirds Recalibrate Their Magnetic Compass Daily from Twilight Cues. Science 2004, 304, 405–408.

- Åkesson, S. The Ecology of Polarisation Vision in Birds. In Polarized Light and Polarization Vision in Animal Sciences, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2014; pp. 275–292.

- Wehner, R. Desert Navigator: The Journey of an Ant; Harvard University Press: Cambridge, MA, USA, 2020.

- Pieron, H. Du rôle du sens musculaire dans l’orientation de quelques espèces de fourmis. Bull. Inst. Gen. Psychol. 1904, 4, 168–186.

- Santschi, F. Observations et remarques critiques sur le mécanisme de l’orientation chez les fourmis. Rev. Suisse Zool. 1911, 19, 303–338.

- Papi, F. Animal navigation at the end of the century: A retrospect and a look forward. Ital. J. Zool. 2001, 68, 171–180.

- Lambrinos, D.; Möller, R.; Labhart, T.; Pfeifer, R.; Wehner, R. A mobile robot employing insect strategies for navigation. Robot. Auton. Syst. 2000, 30, 39–64.

- Dupeyroux, J.; Serres, J.R.; Viollet, S. AntBot: A six-legged walking robot able to home like desert ants in outdoor environments. Sci. Robot. 2019, 4, eaau0307.

- Dupeyroux, J.; Viollet, S.; Serres, J.R. An ant-inspired celestial compass applied to autonomous outdoor robot navigation. Robot. Auton. Syst. 2019, 117, 40–56.

- Barta, A.; Suhai, B.; Horváth, G. Polarization Cloud Detection with Imaging Polarimetry. In Polarized Light and Polarization Vision in Animal Sciences; Springer: Berlin/Heidelberg, Germany, 2014; pp. 585–602.

- Hegedüs, R.; Åkesson, S.; Wehner, R.; Horváth, G. Could Vikings have navigated under foggy and cloudy conditions by skylight polarization? On the atmospheric optical prerequisites of polarimetric Viking navigation under foggy and cloudy skies. Proc. R. Soc. A Math. Phys. Eng. Sci. 2007, 463, 1081–1095.

- Horváth, G.; Barta, A.; Pomozi, I.; Suhai, B.; Hegedüs, R.; Åkesson, S.; Meyer-Rochow, B.; Wehner, R. On the trail of Vikings with polarized skylight: Experimental study of the atmospheric optical prerequisites allowing polarimetric navigation by Viking seafarers. Philos. Trans. R. Soc. B Biol. Sci. 2011, 366, 772–782.

- Ropars, G.; Gorre, G.; Le Floch, A.; Enoch, J.; Lakshminarayanan, V. A depolarizer as a possible precise sunstone for Viking navigation by polarized skylight. Proc. R. Soc. A Math. Phys. Eng. Sci. 2012, 468, 671–684.

- Takacs, P.; Szaz, D.; Pereszlenyi, A.; Horvath, G. Speedy bearings to slacked steering: Mapping the navigation patterns and motions of Viking voyages. PLoS ONE 2023, 18, e0293816.

- Standard ISO 3691-4:2023; Industrial Trucks—Safety Requirements and Verification—Part 4: Driverless Industrial Trucks and Their Systems. ISO: Geneva, Switzerland, 2023. Available online: https://www.iso.org/obp/ui/fr/#iso:std:iso:3691:-4:ed-2:v1:en (accessed on 19 March 2024).

- Standard ISO 26262-1:2018; Road Vehicles—Functional Safety—Part 1: Vocabulary. ISO: Geneva, Switzerland, 2018. Available online: https://www.iso.org/obp/ui/fr/#iso:std:iso:26262:-1:ed-2:v1:en (accessed on 19 March 2024).

- Standard ISO 21448:2022; Road Vehicles—Safety of the Intended Functionality. ISO: Geneva, Switzerland, 2022. Available online: https://www.iso.org/obp/ui/fr/#iso:std:iso:21448:ed-1:v1:en (accessed on 19 March 2024).

- Li, S.; Kong, F.; Xu, H.; Guo, X.; Li, H.; Ruan, Y.; Cao, S.; Guo, Y. Biomimetic Polarized Light Navigation Sensor: A Review. Sensors 2023, 23, 5848.

- Kong, F.; Guo, Y.; Zhang, J.; Fan, X.; Guo, X. Review on bio-inspired polarized skylight navigation. Chin. J. Aeronaut. 2023, 36, 14–37.

- Li, Q.; Dong, L.; Hu, Y.; Hao, Q.; Wang, W.; Cao, J.; Cheng, Y. Polarimetry for bionic geolocation and navigation applications: A review. Remote Sens. 2023, 15, 3518.

- Liu, Y.; Wenzhou, Z.; Fan, C.; Zhang, L. A Review of Bionic Polarized Light Localization Methods. In Proceedings of the 2023 5th International Conference on Intelligent Control, Measurement and Signal Processing (ICMSP), Chengdu, China, 19–21 May 2023; pp. 793–803.

- Tominaga, S.; Kimachi, A. Polarization imaging for material classification. Opt. Eng. 2008, 47, 123201.

- Li, X.; Yan, L.; Qi, P.; Zhang, L.; Goudail, F.; Liu, T.; Zhai, J.; Hu, H. Polarimetric Imaging via Deep Learning: A Review. Remote Sens. 2023, 15, 1540.

- Stokes, G.G. On the composition and resolution of streams of polarized light from different sources. Trans. Camb. Philos. Soc. 1852, 9, 339–416.

- Goldstein, D.H. Polarized Light, 3rd ed.; CRC Press Inc.: Boca Raton, FL, USA, 2010; p. 808.

- Poincaré, H. Théorie Mathématique de la Lumière; Georges Carré: Paris, France, 1892; Volume II.

- Geek3. Poincaré Sphere. Available online: https://commons.wikimedia.org/wiki/File:Poincare-sphere_arrows.svg (accessed on 20 March 2024).

- Perrin, F. Polarization of Light Scattered by Isotropic Opalescent Media. J. Chem. Phys. 1942, 10, 415–427.

- Mueller, H. The foundation of optics. J. Opt. Soc. Am. 1948, 38, 661.

- Jones, D.; Goldstein, D.; Spaulding, J. Reflective and polarimetric characteristics of urban materials. In Polarization: Measurement, Analysis, and Remote Sensing VII; Proceedings of Defense and Security Symposium, Orlando, FL, USA; SPIE: Bellingham, WA, USA, 2006; Volume 6240.

- Hoover, B.G.; Tyo, J.S. Polarization components analysis for invariant discrimination. Appl. Opt. 2007, 46, 8364–8373.

- Wang, P.; Chen, Q.; Gu, G.; Qian, W.; Ren, K. Polarimetric Image Discrimination With Depolarization Mueller Matrix. IEEE Photonics J. 2016, 8, 6901413.

- Quéau, Y.; Leporcq, F.; Lechervy, A.; Alfalou, A. Learning to classify materials using Mueller imaging polarimetry. In Proceedings of the Fourteenth International Conference on Quality Control by Artificial Vision, Mulhouse, France, 15–17 May 2019; SPIE: Bellingham, WA, USA, 2019; Volume 11172.

- Kupinski, M.; Li, L. Evaluating the Utility of Mueller Matrix Imaging for Diffuse Material Classification. J. Imaging Sci. Technol. 2020, 64, 060409-1–060409-7.

- Pierangelo, A.; Nazac, A.; Benali, A.; Validire, P.; Cohen, H.; Novikova, T.; Ibrahim, B.H.; Manhas, S.; Fallet, C.; Antonelli, M.R.; et al. Polarimetric imaging of uterine cervix: A case study. Opt. Express 2013, 21, 14120–14130.

- Van Eeckhout, A.; Lizana, A.; Garcia-Caurel, E.; Gil, J.J.; Sansa, A.; Rodríguez, C.; Estévez, I.; González, E.; Escalera, J.C.; Moreno, I.; et al. Polarimetric imaging of biological tissues based on the indices of polarimetric purity. J. Biophotonics 2018, 11, e201700189.

- Slonaker, R.; Takano, Y.; Liou, K.N.; Ou, S.C. Circular polarization signal for aerosols and clouds. In Atmospheric and Environmental Remote Sensing Data Processing and Utilization: Numerical Atmospheric Prediction and Environmental Monitoring; Proceedings of Optics and Photonics 2005, San Diego, CA, USA; SPIE: Bellingham, WA, USA, 2005; Volume 5890, p. 5890.

- Gassó, S.; Knobelspiesse, K.D. Circular polarization in atmospheric aerosols. Atmos. Chem. Phys. 2022, 22, 13581–13605.

- Tyo, J.S. Optimum linear combination strategy for an N-channel polarization-sensitive imaging or vision system. JOSA A 1998, 15, 359–366.

- Tyo, J.S. Design of Optimal Polarimeters: Maximization of Signal-to-Noise Ratio and Minimization of Systematic Error. Appl. Opt. 2002, 41, 619–630.

- Perkins, R.; Gruev, V. Signal-to-noise analysis of Stokes parameters in division of focal plane polarimeters. Opt. Express 2010, 18, 25815–25824.

- Bass, M. Handbook of Optics: Volume II - Design, Fabrication, and Testing; Sources and Detectors; Radiometry and Photometry; McGraw-Hill Education: Chicago, IL, USA, 2010.

- Mu, T.; Pacheco, S.; Chen, Z.; Zhang, C.; Liang, R. Snapshot linear-Stokes imaging spectropolarimeter using division-of-focal-plane polarimetry and integral field spectroscopy. Sci. Rep. 2017, 7, 42115.

- Tyo, J.S.; Goldstein, D.L.; Chenault, D.B.; Shaw, J.A. Review of passive imaging polarimetry for remote sensing applications. Appl. Opt. 2006, 45, 5453–5469.

- Voss, K.J.; Liu, Y. Polarized radiance distribution measurements of skylight. I. System description and characterization. Appl. Opt. 1997, 36, 6083–6094.

- Kreuter, A.; Zangerl, M.; Schwarzmann, M.; Blumthaler, M. All-sky imaging: A simple, versatile system for atmospheric research. Appl. Opt. 2009, 48, 1091–1097.

- Wang, Y.; Hu, X.; Lian, J.; Zhang, L.; Xian, Z.; Ma, T. Design of a Device for Sky Light Polarization Measurements. Sensors 2014, 14, 14916–14931.

- Wolff, L.B.; Mancini, T.A.; Pouliquen, P.; Andreou, A.G. Liquid crystal polarization camera. IEEE Trans. Robot. Autom. 1997, 13, 195–203.

- Chipman, R.A. Polarimetry. In Handbook of Optics; Book Section 22; McGraw-Hill: New York, NY, USA, 1995; Volume 2.

- Gandorfer, A.M. Ferroelectric retarders as an alternative to piezoelastic modulators for use in solar Stokes vector polarimetry. Opt. Eng. 1999, 38, 1402–1408.

- Blakeney, S.L.; Day, S.E.; Stewart, J.N. Determination of unknown input polarisation using a twisted nematic liquid crystal display with fixed components. Opt. Commun. 2002, 214, 1–8.

- Pust, N.J.; Shaw, J.A. Dual-field imaging polarimeter using liquid crystal variable retarders. Appl. Opt. 2006, 45, 5470–5478.

- Gendre, L.; Foulonneau, A.; Bigué, L. Imaging linear polarimetry using a single ferroelectric liquid crystal modulator. Appl. Opt. 2010, 49, 4687–4699.

- Lefaudeux, N.; Lechocinski, N.; Breugnot, S.; Clemenceau, P. Compact and robust linear Stokes polarization camera. In Polarization: Measurement, Analysis, and Remote Sensing VIII; Proceedings of the SPIE Defense and Security Symposium, Orlando, FL, USA; SPIE: Bellingham, WA, USA, 2008; Volume 6972, p. 69720B.

- Vedel, M.; Breugnot, S.; Lechocinski, N. Full Stokes polarization imaging camera. In Polarization Science and Remote Sensing V; Proceedings of Optical Engineering + Applications; Shaw, J.A., Tyo, J.S., Eds.; SPIE: Bellingham, WA, USA, 2011; Volume 8160, p. 81600X-13.

- Zhang, Y.; Zhao, H.; Song, P.; Shi, S.; Xu, W.; Liang, X. Ground-based full-sky imaging polarimeter based on liquid crystal variable retarders. Opt. Express 2014, 22, 8749–8764.

- Horváth, G.; Barta, A.; Gál, J.; Suhai, B.; Haiman, O. Ground-based full-sky imaging polarimetry of rapidly changing skies and its use for polarimetric cloud detection. Appl. Opt. 2002, 41, 543–559.

- Wang, D.; Liang, H.; Zhu, H.; Zhang, S. A Bionic Camera-Based Polarization Navigation Sensor. Sensors 2014, 14, 13006–13023.

- Fan, C.; Hu, X.; Lian, J.; Zhang, L.; He, X. Design and Calibration of a Novel Camera-Based Bio-Inspired Polarization Navigation Sensor. IEEE Sens. J. 2016, 16, 3640–3648.

- de Leon, E.; Brandt, R.; Phenis, A.; Virgen, M. Initial results of a simultaneous Stokes imaging polarimeter. In Polarization Science and Remote Sensing III; Proceedings of SPIE Optical Engineering + Applications, San Diego, CA, USA, 29–30 August 2007; Shaw, J.A., Tyo, J.S., Eds.; SPIE: Bellingham, WA, USA, 2007; Volume 6682, p. 668215.

- Fujita, K.; Itoh, Y.; Mukai, T. Development of simultaneous imaging polarimeter for asteroids. Adv. Space Res. 2009, 43, 325–327.

- Gu, D.F.; Winker, B.; Wen, B.; Mansell, J.; Zachery, K.; Taber, D.; Chang, T.; Choi, S.; Ma, J.; Wang, X.; et al. Liquid crystal tunable polarization filters for polarization imaging. In Liquid Crystals XII; Proceedings of Photonic Devices + Applications; SPIE: Bellingham, WA, USA, 2008; Volume 7050, p. 7050.

- Pezzaniti, J.L.; Chenault, D.B. A division of aperture MWIR imaging polarimeter. In Polarization Science and Remote Sensing II; Proceedings of Optics and Photonics 2005, San Diego, CA, USA; SPIE: Bellingham, WA, USA, 2005; Volume 5888, p. 58880V-12.

- Chun, C.; Fleming, D.; Torok, E. Polarization-sensitive thermal imaging. In Automatic Object Recognition IV; Proceedings of the SPIE’s International Symposium on Optical Engineering and Photonics in Aerospace Sensing, Orlando, FL, USA; SPIE: Bellingham, WA, USA, 1994; Volume 2234, p. 2234.