Enhancing Intrusion Detection Systems for IoT and Cloud Environments Using a Growth Optimizer Algorithm and Conventional Neural Networks

Abstract

1. Introduction

Paper Contributions

- Propose an alternative method for securing IoT environments that combines deep learning (DL) with feature selection techniques.

- Use a convolutional neural network (CNN) model to analyze network traffic records and extract complex feature representations.

- Develop a modified version of the Growth Optimizer (GO) for improved intrusion detection in IoT environments. The modification incorporates the operators of the Whale Optimization Algorithm (WOA). The proposed method, called MGO, is applied to the discrete feature selection problem.

- Evaluate the performance of MGO against established methods on four real-world intrusion datasets.

2. Background

2.1. Growth Optimizer

2.1.1. Learning Stage

2.1.2. Reflection Stage

2.2. Whale Optimization Algorithm
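For reference, a single position update of the standard WOA (Mirjalili and Lewis, 2016) can be sketched as below: with probability 0.5 a whale either encircles the best solution (when |A| < 1) or moves relative to a randomly chosen whale (when |A| ≥ 1), and otherwise it follows a logarithmic spiral around the best solution. This is a minimal Python sketch under stated assumptions: scalar coefficients A and C per whale, spiral constant b = 1, and l drawn uniformly from [−1, 1]. The function name `woa_update` is hypothetical, and the sketch does not show how MGO combines these operators with GO.

```python
import math
import random

def woa_update(x, best, population, t, max_iter, b=1.0, rng=random):
    """One position update of the standard WOA for a single whale (hypothetical helper).

    x: current position; best: best position found so far;
    population: positions used for random exploration;
    t / max_iter: current and maximum iteration; b: spiral shape constant (assumed 1).
    """
    a = 2.0 - 2.0 * t / max_iter      # decreases linearly from 2 to 0
    A = 2.0 * a * rng.random() - a    # coefficient controlling step size and direction
    C = 2.0 * rng.random()            # random weight on the reference position
    p = rng.random()                  # chooses encircling/search vs. spiral update
    l = rng.uniform(-1.0, 1.0)        # spiral parameter
    if p < 0.5:
        # |A| < 1: exploit by encircling the best whale;
        # |A| >= 1: explore by moving relative to a randomly chosen whale
        ref = best if abs(A) < 1 else rng.choice(population)
        return [rj - A * abs(C * rj - xj) for xj, rj in zip(x, ref)]
    # spiral (bubble-net) update around the best whale
    return [abs(bj - xj) * math.exp(b * l) * math.cos(2 * math.pi * l) + bj
            for xj, bj in zip(x, best)]

# Usage: move one whale of a small random population toward the current best.
rng = random.Random(42)
population = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(5)]
best = population[0]
moved = woa_update(population[1], best, population, t=3, max_iter=10, rng=rng)
print(moved)
```

Calling this update for every whale in every iteration gives the basic WOA loop; because a shrinks from 2 to 0, large |A| values (exploration) become rarer as the search progresses.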

3. Proposed Method

3.1. Prepare IoT Dataset

3.2. CNN for Feature Extraction

3.3. Feature Selection-Based MGO Approach
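The contributions state that MGO is applied to discrete feature selection over the features extracted by the CNN. A common way to use a continuous optimizer for this task, shown here only as a minimal sketch, is to threshold each position vector into a binary feature mask and score the mask with a wrapper fitness that trades classification error against subset size. The threshold of 0.5, the weight `alpha = 0.99`, and the helper names `to_mask`, `fitness`, and `toy_error` are assumptions for illustration; the exact encoding and fitness used by MGO are not specified here.

```python
from typing import Callable, Sequence

def to_mask(position: Sequence[float], threshold: float = 0.5) -> list[bool]:
    """Map a continuous search-agent position to a binary feature mask (assumed threshold rule)."""
    return [value > threshold for value in position]

def fitness(position: Sequence[float],
            error_fn: Callable[[list[bool]], float],
            alpha: float = 0.99) -> float:
    """Assumed wrapper fitness to minimize: alpha * error + (1 - alpha) * selected fraction."""
    mask = to_mask(position)
    n_selected = sum(mask)
    if n_selected == 0:
        return float("inf")  # an empty subset cannot be evaluated by a classifier
    return alpha * error_fn(mask) + (1 - alpha) * n_selected / len(mask)

def toy_error(mask: list[bool]) -> float:
    """Hypothetical stand-in for a classifier's validation error: features 0 and 2 are informative."""
    return 0.1 if mask[0] and mask[2] else 0.4

compact = fitness([0.9, 0.1, 0.7, 0.2], toy_error)  # selects features 0 and 2
bloated = fitness([0.9, 0.8, 0.7, 0.6], toy_error)  # selects all four features
print(compact, bloated)  # the compact subset has the lower (better) fitness
```

In a real pipeline, `toy_error` would be replaced by training and validating a classifier on the selected feature columns, and the optimizer would search over positions to minimize `fitness`.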

4. Experimental Series and Results

4.1. Evaluation Measures

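- Average accuracy ($AVG_{Acc}$): the rate of correctly detected intrusions, computed as

  $$AVG_{Acc} = \frac{1}{N_r}\sum_{k=1}^{N_r} Acc^{k}, \qquad Acc = \frac{TP + TN}{TP + TN + FP + FN},$$

  where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, $Acc^{k}$ is the accuracy obtained in the $k$-th iteration, and $N_r$ indicates the number of iterations.

- Average recall ($AVG_{Rec}$): the percentage of actual intrusions that are predicted as positive, also called the true positive rate (TPR):

  $$AVG_{Rec} = \frac{1}{N_r}\sum_{k=1}^{N_r} Rec^{k}, \qquad Rec = \frac{TP}{TP + FN}$$

- Average precision ($AVG_{Prec}$): the proportion of true positive samples among all samples predicted as positive:

  $$AVG_{Prec} = \frac{1}{N_r}\sum_{k=1}^{N_r} Prec^{k}, \qquad Prec = \frac{TP}{TP + FP}$$

- Average F1-measure ($AVG_{F1}$): the harmonic mean of precision and recall:

  $$AVG_{F1} = \frac{1}{N_r}\sum_{k=1}^{N_r} F1^{k}, \qquad F1 = \frac{2 \times Prec \times Rec}{Prec + Rec}$$

- Average G-mean ($AVG_{GM}$): the geometric mean of precision and recall:

  $$AVG_{GM} = \frac{1}{N_r}\sum_{k=1}^{N_r} GM^{k}, \qquad GM = \sqrt{Prec \times Rec}$$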
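The measures above can be computed from confusion-matrix counts as in the minimal Python sketch below, which treats detection as a binary task (intrusion vs. normal) and averages each measure over several iterations. The counts are hypothetical, not results from the paper, and the helper names are illustrative; for multi-class datasets the per-class scores would also have to be averaged, which this sketch does not cover. The last lines check that the G-mean and F1 columns of the per-dataset results table are consistent with the square root of precision times recall and with the harmonic mean, using the GO test row on KDD99.

```python
import math
from statistics import mean

def metrics_from_counts(tp: int, tn: int, fp: int, fn: int) -> dict[str, float]:
    """Accuracy, precision, recall, F1 and G-mean for one binary confusion matrix."""
    acc = (tp + tn) / (tp + tn + fp + fn)
    prec = tp / (tp + fp)
    rec = tp / (tp + fn)                 # true positive rate
    f1 = 2 * prec * rec / (prec + rec)   # harmonic mean of precision and recall
    gm = math.sqrt(prec * rec)           # geometric mean of precision and recall
    return {"accuracy": acc, "precision": prec, "recall": rec, "f1": f1, "gmean": gm}

def average_over_runs(runs: list[dict[str, float]]) -> dict[str, float]:
    """Average every measure over the iterations: (1 / N) * sum of the per-iteration values."""
    return {key: mean(run[key] for run in runs) for key in runs[0]}

# Hypothetical (TP, TN, FP, FN) counts from three iterations; not taken from the paper.
runs = [metrics_from_counts(90, 85, 10, 15),
        metrics_from_counts(92, 88, 8, 12),
        metrics_from_counts(88, 90, 12, 10)]
averages = average_over_runs(runs)
print({k: round(v, 4) for k, v in averages.items()})

# Consistency check against the GO test row on KDD99 (precision 84.249, recall 83.350).
p, r = 84.249, 83.350
print(round(math.sqrt(p * r), 3), round(2 * p * r / (p + r), 3))  # 83.798 83.797
```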

4.2. Experimental Setup

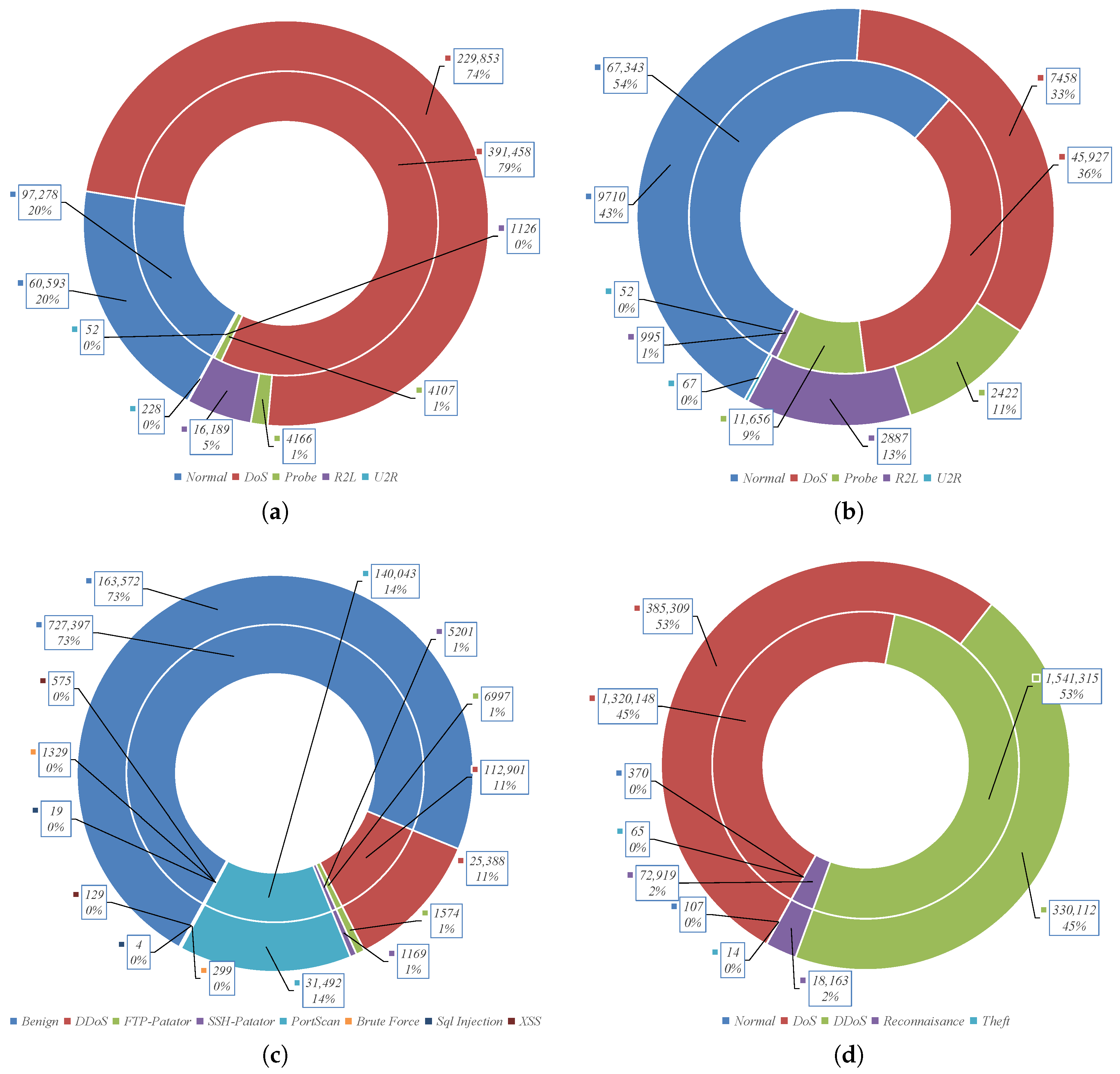

4.3. Experimental Datasets

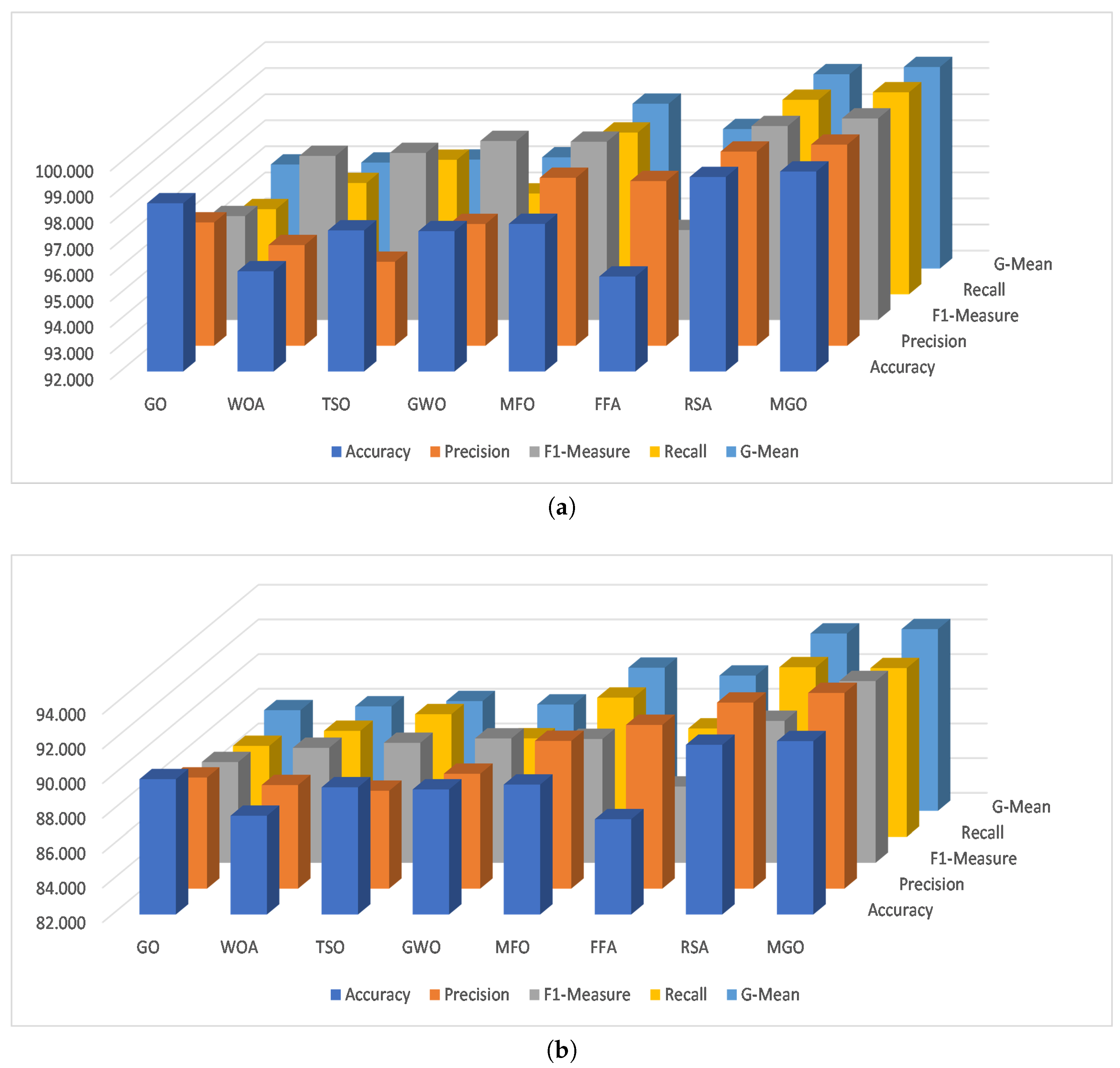

4.4. Results and Discussion

4.5. Comparison with Existing Methods

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Debicha, I.; Bauwens, R.; Debatty, T.; Dricot, J.M.; Kenaza, T.; Mees, W. TAD: Transfer learning-based multi-adversarial detection of evasion attacks against network intrusion detection systems. Future Gener. Comput. Syst. 2023, 138, 185–197.

2. Lata, S.; Singh, D. Intrusion detection system in cloud environment: Literature survey & future research directions. Int. J. Inf. Manag. Data Insights 2022, 2, 100134.

3. Schueller, Q.; Basu, K.; Younas, M.; Patel, M.; Ball, F. A hierarchical intrusion detection system using support vector machine for SDN network in cloud data center. In Proceedings of the 2018 28th International Telecommunication Networks and Applications Conference (ITNAC), Sydney, Australia, 21–23 November 2018; pp. 1–6.

4. Wei, J.; Long, C.; Li, J.; Zhao, J. An intrusion detection algorithm based on bag representation with ensemble support vector machine in cloud computing. Concurr. Comput. Pract. Exp. 2020, 32, e5922.

5. Peng, K.; Leung, V.; Zheng, L.; Wang, S.; Huang, C.; Lin, T. Intrusion detection system based on decision tree over big data in fog environment. Wirel. Commun. Mob. Comput. 2018, 2018.

6. Modi, C.; Patel, D.; Borisanya, B.; Patel, A.; Rajarajan, M. A novel framework for intrusion detection in cloud. In Proceedings of the Fifth International Conference on Security of Information and Networks, Jaipur, India, 25–27 October 2012; pp. 67–74.

7. Kumar, G.R.; Mangathayaru, N.; Narasimha, G. An improved k-Means Clustering algorithm for Intrusion Detection using Gaussian function. In Proceedings of the International Conference on Engineering & MIS 2015, Istanbul, Turkey, 24–26 September 2015; pp. 1–7.

8. Zhao, X.; Zhang, W. An anomaly intrusion detection method based on improved k-means of cloud computing. In Proceedings of the 2016 Sixth International Conference on Instrumentation & Measurement, Computer, Communication and Control (IMCCC), Harbin, China, 21–23 July 2016; pp. 284–288.

9. Hodo, E.; Bellekens, X.; Hamilton, A.; Dubouilh, P.L.; Iorkyase, E.; Tachtatzis, C.; Atkinson, R. Threat analysis of IoT networks using artificial neural network intrusion detection system. In Proceedings of the 2016 International Symposium on Networks, Computers and Communications (ISNCC), Yasmine Hammamet, Tunisia, 11–13 May 2016; pp. 1–6.

10. Almiani, M.; AbuGhazleh, A.; Al-Rahayfeh, A.; Atiewi, S.; Razaque, A. Deep recurrent neural network for IoT intrusion detection system. Simul. Model. Pract. Theory 2020, 101, 102031.

11. Wu, K.; Chen, Z.; Li, W. A novel intrusion detection model for a massive network using convolutional neural networks. IEEE Access 2018, 6, 50850–50859.

12. Alazab, M.; Khurma, R.A.; Awajan, A.; Camacho, D. A new intrusion detection system based on moth–flame optimizer algorithm. Expert Syst. Appl. 2022, 210, 118439.

13. Samadi Bonab, M.; Ghaffari, A.; Soleimanian Gharehchopogh, F.; Alemi, P. A wrapper-based feature selection for improving performance of intrusion detection systems. Int. J. Commun. Syst. 2020, 33, e4434.

14. Zhou, Y.; Cheng, G.; Jiang, S.; Dai, M. Building an efficient intrusion detection system based on feature selection and ensemble classifier. Comput. Netw. 2020, 174, 107247.

15. Mojtahedi, A.; Sorouri, F.; Souha, A.N.; Molazadeh, A.; Mehr, S.S. Feature Selection-based Intrusion Detection System Using Genetic Whale Optimization Algorithm and Sample-based Classification. arXiv 2022, arXiv:2201.00584.

16. Talita, A.; Nataza, O.; Rustam, Z. Naïve bayes classifier and particle swarm optimization feature selection method for classifying intrusion detection system dataset. J. Phys. Conf. Ser. 2021, 1752, 012021.

17. Fatani, A.; Dahou, A.; Al-Qaness, M.A.; Lu, S.; Elaziz, M.A. Advanced feature extraction and selection approach using deep learning and Aquila optimizer for IoT intrusion detection system. Sensors 2021, 22, 140.

18. Dahou, A.; Abd Elaziz, M.; Chelloug, S.A.; Awadallah, M.A.; Al-Betar, M.A.; Al-qaness, M.A.; Forestiero, A. Intrusion Detection System for IoT Based on Deep Learning and Modified Reptile Search Algorithm. Comput. Intell. Neurosci. 2022, 2022, 6473507.

19. Karuppusamy, L.; Ravi, J.; Dabbu, M.; Lakshmanan, S. Chronological salp swarm algorithm based deep belief network for intrusion detection in cloud using fuzzy entropy. Int. J. Numer. Model. Electron. Netw. Devices Fields 2022, 35, e2948.

20. Zhang, Q.; Gao, H.; Zhan, Z.H.; Li, J.; Zhang, H. Growth Optimizer: A powerful metaheuristic algorithm for solving continuous and discrete global optimization problems. Knowl.-Based Syst. 2023, 261, 110206.

21. Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

22. Abualigah, L.; Abd Elaziz, M.; Sumari, P.; Geem, Z.W.; Gandomi, A.H. Reptile Search Algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 2022, 191, 116158.

23. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

24. Fatani, A.; Abd Elaziz, M.; Dahou, A.; Al-Qaness, M.A.; Lu, S. IoT Intrusion Detection System Using Deep Learning and Enhanced Transient Search Optimization. IEEE Access 2021, 9, 123448–123464.

25. Yang, X.S.; He, X. Firefly algorithm: Recent advances and applications. Int. J. Swarm Intell. 2013, 1, 36–50.

26. Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

27. Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward generating a new intrusion detection dataset and intrusion traffic characterization. ICISSP 2018, 1, 108–116.

28. Koroniotis, N.; Moustafa, N.; Sitnikova, E.; Turnbull, B. Towards the development of realistic botnet dataset in the internet of things for network forensic analytics: Bot-iot dataset. Future Gener. Comput. Syst. 2019, 100, 779–796.

29. Wu, P. Deep learning for network intrusion detection: Attack recognition with computational intelligence. Ph.D. Thesis, UNSW Sydney, Kensington, Australia, 2020.

30. Farahnakian, F.; Heikkonen, J. A deep auto-encoder based approach for intrusion detection system. In Proceedings of the 2018 20th International Conference on Advanced Communication Technology (ICACT), Chuncheon, Republic of Korea, 11–14 February 2018; pp. 178–183.

31. Churcher, A.; Ullah, R.; Ahmad, J.; Ur Rehman, S.; Masood, F.; Gogate, M.; Alqahtani, F.; Nour, B.; Buchanan, W.J. An experimental analysis of attack classification using machine learning in IoT networks. Sensors 2021, 21, 446.

32. Ma, T.; Wang, F.; Cheng, J.; Yu, Y.; Chen, X. A hybrid spectral clustering and deep neural network ensemble algorithm for intrusion detection in sensor networks. Sensors 2016, 16, 1701.

33. Javaid, A.; Niyaz, Q.; Sun, W.; Alam, M. A deep learning approach for network intrusion detection system. In Proceedings of the 9th EAI International Conference on Bio-Inspired Information and Communications Technologies (Formerly BIONETICS), New York, NY, USA, 3–5 December 2015; pp. 21–26.

34. Tang, T.A.; Mhamdi, L.; McLernon, D.; Zaidi, S.A.R.; Ghogho, M. Deep learning approach for network intrusion detection in software defined networking. In Proceedings of the 2016 International Conference on Wireless Networks and Mobile Communications (WINCOM), Fez, Morocco, 26–29 October 2016; pp. 258–263.

35. Imamverdiyev, Y.; Abdullayeva, F. Deep learning method for denial of service attack detection based on restricted boltzmann machine. Big Data 2018, 6, 159–169.

36. Alkadi, O.; Moustafa, N.; Turnbull, B.; Choo, K.K.R. A deep blockchain framework-enabled collaborative intrusion detection for protecting IoT and cloud networks. IEEE Internet Things J. 2020, 8, 9463–9472.

37. Vinayakumar, R.; Alazab, M.; Soman, K.; Poornachandran, P.; Al-Nemrat, A.; Venkatraman, S. Deep learning approach for intelligent intrusion detection system. IEEE Access 2019, 7, 41525–41550.

38. Laghrissi, F.; Douzi, S.; Douzi, K.; Hssina, B. Intrusion detection systems using long short-term memory (LSTM). J. Big Data 2021, 8, 65.

39. Alkahtani, H.; Aldhyani, T.H. Intrusion detection system to advance internet of things infrastructure-based deep learning algorithms. Complexity 2021, 2021, 5579851.

40. Luque, A.; Carrasco, A.; Martín, A.; de Las Heras, A. The impact of class imbalance in classification performance metrics based on the binary confusion matrix. Pattern Recognit. 2019, 91, 216–231.

| Dataset | Algorithm | Train Accuracy | Train Precision | Train F1 | Train Recall | Train GM | Test Accuracy | Test Precision | Test F1 | Test Recall | Test GM |
|---|---|---|---|---|---|---|---|---|---|---|---|
| KDD99 | GO | 98.515 | 93.483 | 92.652 | 91.835 | 92.655 | 90.615 | 84.249 | 83.797 | 83.350 | 83.798 |
| | WOA | 92.275 | 92.414 | 97.304 | 93.126 | 92.769 | 84.375 | 82.501 | 87.351 | 85.225 | 83.852 |
| | TSO | 95.439 | 91.027 | 97.437 | 94.919 | 92.953 | 87.536 | 80.791 | 87.479 | 87.016 | 83.846 |
| | GWO | 95.513 | 94.062 | 98.482 | 92.383 | 93.219 | 87.618 | 84.131 | 88.533 | 84.488 | 84.310 |
| | MFO | 96.073 | 97.631 | 98.371 | 97.123 | 97.377 | 88.175 | 87.763 | 88.420 | 89.225 | 88.491 |
| | FFA | 91.988 | 97.328 | 91.538 | 93.368 | 95.328 | 84.318 | 91.609 | 84.285 | 85.698 | 88.604 |
| | RSA | 99.910 | 99.909 | 99.906 | 99.910 | 99.910 | 92.040 | 89.684 | 89.985 | 92.040 | 90.855 |
| | MGO | 99.910 | 99.959 | 99.946 | 99.933 | 99.946 | 92.040 | 90.841 | 90.941 | 91.040 | 90.941 |
| NSL-KDD | GO | 97.108 | 95.104 | 93.017 | 91.020 | 93.040 | 70.224 | 72.200 | 69.794 | 67.544 | 69.833 |
| | WOA | 91.947 | 92.080 | 96.968 | 92.797 | 92.438 | 67.951 | 71.131 | 68.907 | 68.801 | 69.956 |
| | TSO | 95.078 | 90.657 | 97.067 | 94.558 | 92.587 | 71.330 | 71.298 | 69.697 | 70.810 | 71.053 |
| | GWO | 95.182 | 93.724 | 98.143 | 92.052 | 92.884 | 71.066 | 72.151 | 69.948 | 67.936 | 70.012 |
| | MFO | 95.745 | 97.297 | 98.035 | 96.795 | 97.046 | 71.626 | 76.122 | 69.844 | 72.676 | 74.379 |
| | FFA | 91.660 | 96.991 | 91.201 | 93.040 | 94.995 | 67.437 | 75.873 | 62.944 | 68.817 | 72.259 |
| | RSA | 99.201 | 99.158 | 99.148 | 99.201 | 99.180 | 76.107 | 82.171 | 71.731 | 76.107 | 79.081 |
| | MGO | 99.214 | 99.458 | 99.437 | 99.416 | 99.437 | 76.725 | 83.105 | 79.759 | 76.672 | 79.824 |
| BIoT | GO | 99.068 | 99.107 | 99.076 | 99.045 | 99.076 | 99.141 | 98.100 | 98.371 | 98.644 | 98.372 |
| | WOA | 99.472 | 99.472 | 99.472 | 99.472 | 99.472 | 98.956 | 98.957 | 99.005 | 98.964 | 98.960 |
| | TSO | 99.460 | 99.459 | 99.459 | 99.460 | 99.460 | 98.986 | 98.941 | 99.005 | 98.981 | 98.961 |
| | GWO | 99.477 | 99.476 | 99.476 | 99.477 | 99.477 | 98.990 | 98.975 | 99.019 | 98.959 | 98.967 |
| | MFO | 99.480 | 99.480 | 99.480 | 99.480 | 99.480 | 98.998 | 99.013 | 99.020 | 99.009 | 99.011 |
| | FFA | 99.479 | 99.478 | 99.478 | 99.479 | 99.478 | 98.954 | 99.007 | 98.949 | 98.968 | 98.987 |
| | RSA | 98.829 | 98.829 | 98.829 | 98.829 | 98.829 | 99.020 | 99.098 | 99.070 | 99.038 | 99.068 |
| | MGO | 99.629 | 99.529 | 99.629 | 99.729 | 99.629 | 99.220 | 99.188 | 99.218 | 99.248 | 99.218 |
| CIC2017 | GO | 99.130 | 99.239 | 99.204 | 99.170 | 99.204 | 99.170 | 99.020 | 99.215 | 99.410 | 99.215 |
| | WOA | 99.690 | 99.490 | 99.450 | 99.690 | 99.590 | 99.430 | 99.240 | 99.190 | 99.430 | 99.335 |
| | TSO | 99.680 | 99.750 | 99.680 | 99.710 | 99.730 | 99.420 | 99.480 | 99.420 | 99.450 | 99.465 |
| | GWO | 99.370 | 99.430 | 99.380 | 99.560 | 99.495 | 99.110 | 99.180 | 99.120 | 99.300 | 99.240 |
| | MFO | 99.360 | 99.370 | 99.480 | 99.430 | 99.400 | 99.100 | 99.120 | 99.220 | 99.170 | 99.145 |
| | FFA | 99.450 | 99.480 | 99.600 | 99.740 | 99.610 | 99.200 | 99.220 | 99.350 | 99.490 | 99.355 |
| | RSA | 99.911 | 99.910 | 99.889 | 99.911 | 99.910 | 99.911 | 99.907 | 99.888 | 99.911 | 99.909 |
| | MGO | 99.941 | 99.920 | 99.926 | 99.931 | 99.926 | 99.941 | 99.947 | 99.942 | 99.936 | 99.942 |

| Phase | Measure | GO | WOA | TSO | GWO | MFO | FFA | RSA | MGO |
|---|---|---|---|---|---|---|---|---|---|
| Training | Accuracy | 3.7500 | 3.5000 | 3.5000 | 4.0000 | 4.7500 | 3.0000 | 5.6250 | 7.8750 |
| | Precision | 2.5000 | 3.2500 | 2.7500 | 3.7500 | 5.2500 | 5.0000 | 5.5000 | 8.0000 |
| | F1-Measure | 1.7500 | 3.2500 | 4.2500 | 4.7500 | 5.2500 | 3.2500 | 5.5000 | 8.0000 |
| | Recall | 1.2500 | 3.5000 | 4.5000 | 3.0000 | 5.2500 | 5.0000 | 5.5000 | 8.0000 |
| | GM | 2.0000 | 2.7500 | 3.5000 | 3.7500 | 5.2500 | 5.2500 | 5.5000 | 8.0000 |
| Testing | Accuracy | 4.7500 | 3.0000 | 4.0000 | 3.5000 | 4.2500 | 1.7500 | 6.8750 | 7.8750 |
| | Precision | 2.5000 | 2.7500 | 2.7500 | 3.2500 | 4.7500 | 5.5000 | 6.7500 | 7.7500 |
| | F1-Measure | 2.2500 | 2.6250 | 4.1250 | 4.5000 | 5.0000 | 2.5000 | 7.0000 | 8.0000 |
| | Recall | 1.5000 | 3.2500 | 5.0000 | 2.0000 | 4.7500 | 4.5000 | 7.2500 | 7.7500 |
| | GM | 1.2500 | 2.7500 | 3.7500 | 3.5000 | 4.5000 | 5.2500 | 7.0000 | 8.0000 |
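The values in the ranking table appear to be mean ranks over the four datasets: on each dataset the eight algorithms are ranked from 1 (lowest score) to 8 (highest score), tied scores share the average of their positions, and every row therefore sums to 36 (= 1 + 2 + ... + 8). The following minimal Python sketch reproduces the training-accuracy row from the per-dataset results table above under that assumption; the helper name `average_ranks` is hypothetical.

```python
from statistics import mean

ALGORITHMS = ["GO", "WOA", "TSO", "GWO", "MFO", "FFA", "RSA", "MGO"]

# Training accuracy per dataset, copied from the per-dataset results table.
TRAIN_ACCURACY = {
    "KDD99":   [98.515, 92.275, 95.439, 95.513, 96.073, 91.988, 99.910, 99.910],
    "NSL-KDD": [97.108, 91.947, 95.078, 95.182, 95.745, 91.660, 99.201, 99.214],
    "BIoT":    [99.068, 99.472, 99.460, 99.477, 99.480, 99.479, 98.829, 99.629],
    "CIC2017": [99.130, 99.690, 99.680, 99.370, 99.360, 99.450, 99.911, 99.941],
}

def average_ranks(scores: list[float]) -> list[float]:
    """Rank scores ascending (1 = lowest, n = highest); tied scores share their mean position."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    pos = 0
    while pos < len(order):
        end = pos
        # extend the group while the next score ties the current one
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[pos]]:
            end += 1
        shared = (pos + 1 + end + 1) / 2  # mean of the 1-based positions pos+1 .. end+1
        for k in range(pos, end + 1):
            ranks[order[k]] = shared
        pos = end + 1
    return ranks

per_dataset = [average_ranks(values) for values in TRAIN_ACCURACY.values()]
mean_ranks = [mean(column) for column in zip(*per_dataset)]
for name, rank in zip(ALGORITHMS, mean_ranks):
    print(f"{name}: {rank:.4f}")  # GO: 3.7500 ... MGO: 7.8750, matching the Training/Accuracy row
```

On KDD99, RSA and MGO tie at 99.910, so both receive rank 7.5; this is why RSA's mean rank ends in .625 and MGO's in .875.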

| Dataset | Work | Accuracy (%) |
|---|---|---|
| KDD Cup 99 | Wu [29] | 85.24 |
| | Farahnakian et al. [30] | 96.53 |
| | MGO | 92.04 |
| NSL-KDD | Ma et al. [32] SCDNN | 72.64 |
| | Javaid et al. [33] STL | 74.38 |
| | Tang et al. [34] DNN | 75.75 |
| | Imamverdiyev et al. [35] Gaussian–Bernoulli RBM | 73.23 |
| | MGO | 76.725 |
| BIoT | Alkadi et al. [36] (BiLSTM) | 98.91 |
| | Alkadi et al. [36] (NB) | 97.5 |
| | Alkadi et al. [36] (SVM) | 97.8 |
| | Churcher et al. [31] (KNN) | 99 |
| | Churcher et al. [31] (SVM) | 79 |
| | Churcher et al. [31] (DT) | 96 |
| | Churcher et al. [31] (NB) | 94 |
| | Churcher et al. [31] (RF) | 95 |
| | Churcher et al. [31] (ANN) | 97 |
| | Churcher et al. [31] (LR) | 74 |
| | MGO | 99.22 |
| CICIDS2017 | Vinayakumar et al. [37] | 94.61 |
| | Laghrissi et al. [38] | 85.64 |
| | Alkahtani et al. [39] | 80.91 |
| | MGO | 99.941 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fatani, A.; Dahou, A.; Abd Elaziz, M.; Al-qaness, M.A.A.; Lu, S.; Alfadhli, S.A.; Alresheedi, S.S. Enhancing Intrusion Detection Systems for IoT and Cloud Environments Using a Growth Optimizer Algorithm and Conventional Neural Networks. Sensors 2023, 23, 4430. https://doi.org/10.3390/s23094430
