Reinforcement Learning-Based Approach for Minimizing Energy Loss of Driving Platoon Decisions †

Abstract

1. Introduction

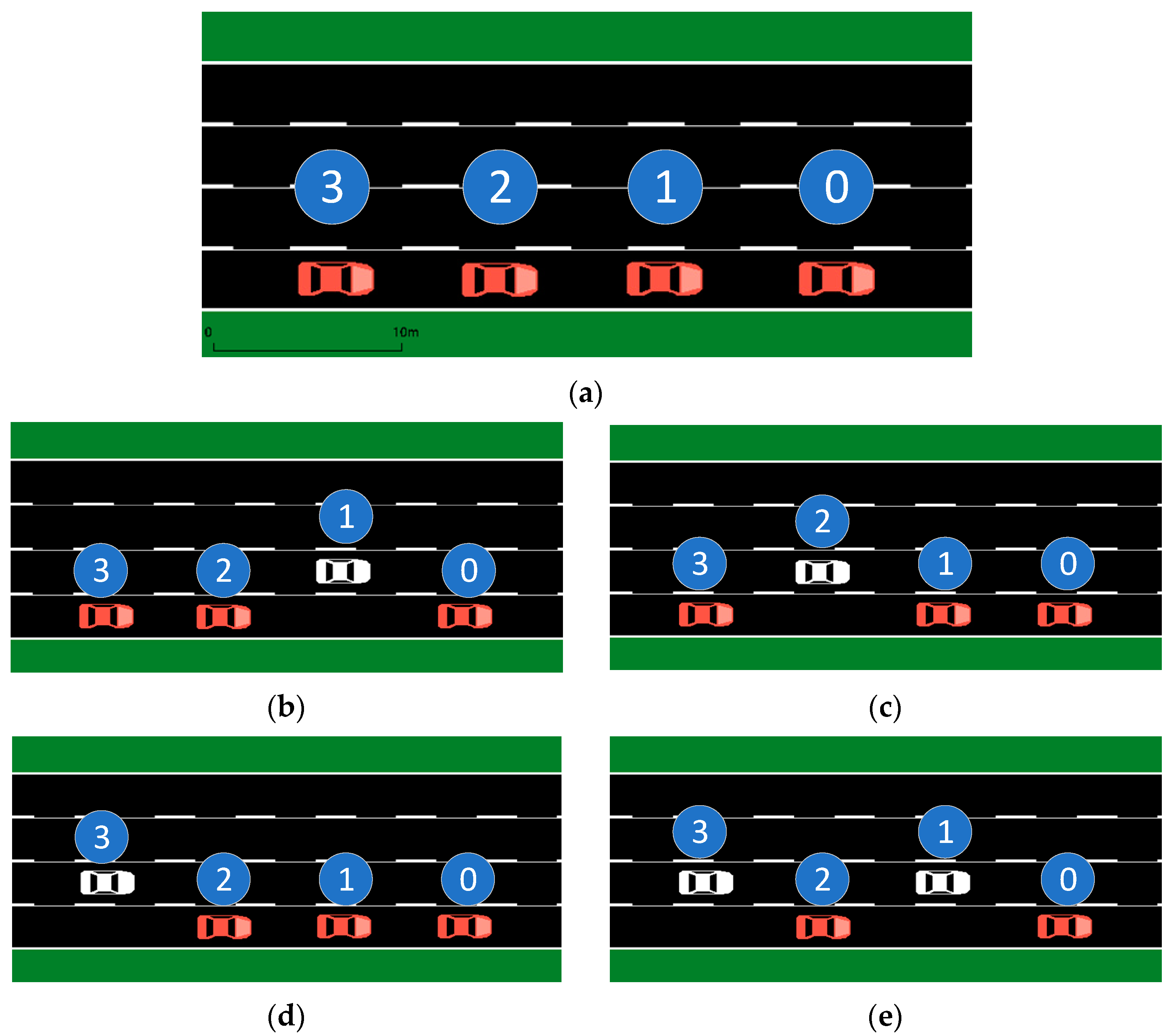

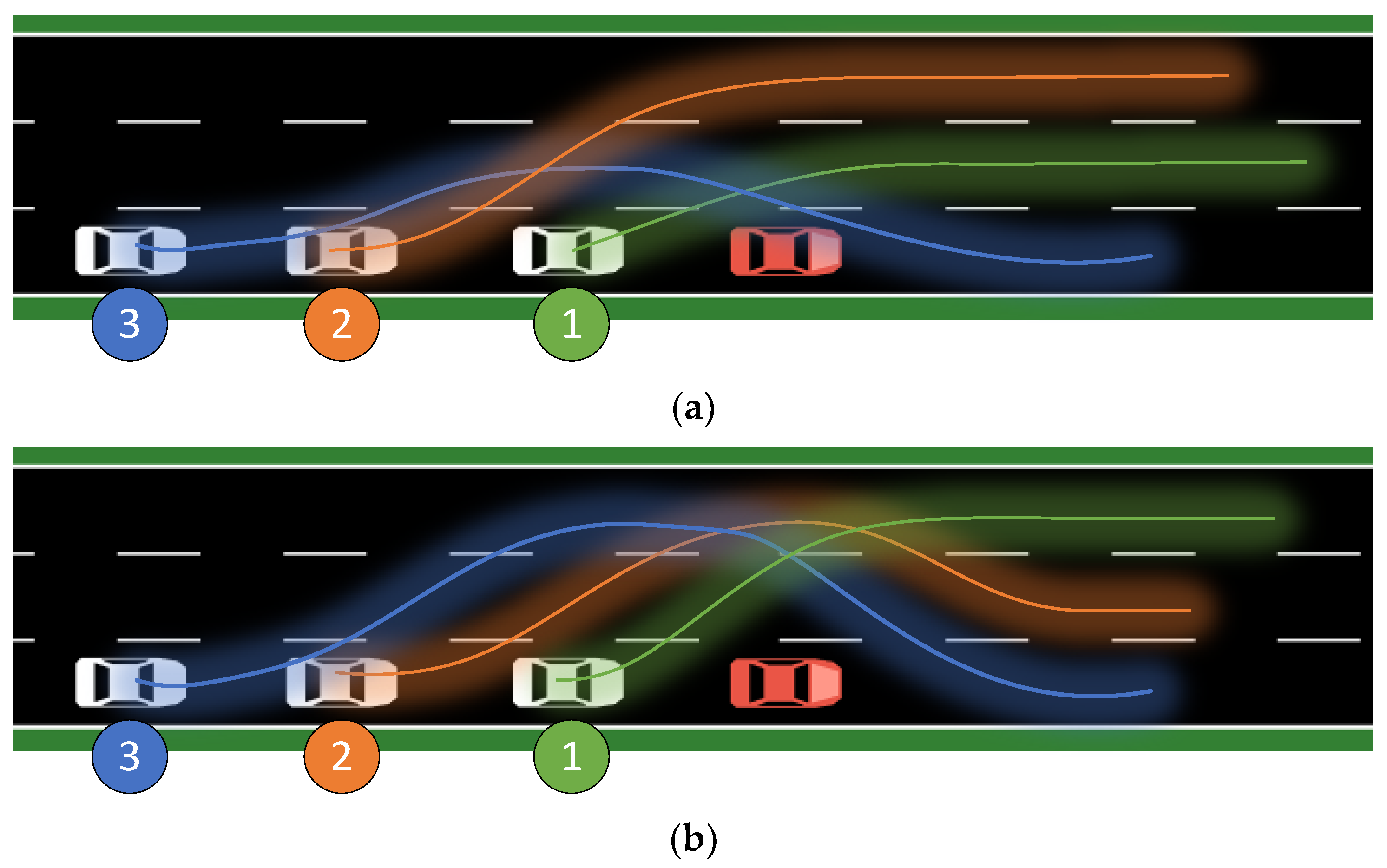

- A hypothetical scenario is constructed in which a platoon of vehicles travels on a two-lane highway with no other vehicles present, and the lead vehicle of the platoon suffers a serious traffic accident.

- To prevent further damage, reinforcement learning (specifically, a policy gradient algorithm) is used to find the most efficient strategy for the member vehicles, reducing the impact of the collision on the platoon.

- While solving the collision avoidance problem, the reinforcement learning algorithm also evaluates the damage caused by the vehicles' behavior and computes the strategy that minimizes that damage.

- To overcome the limitations of traditional algorithms, reinforcement learning (policy gradient) is applied to the behavioral decisions of the platoon, which represents a step forward and an active topic for future research.

2. Platoon Algorithms with RL

2.1. The CACC Car-Following Model

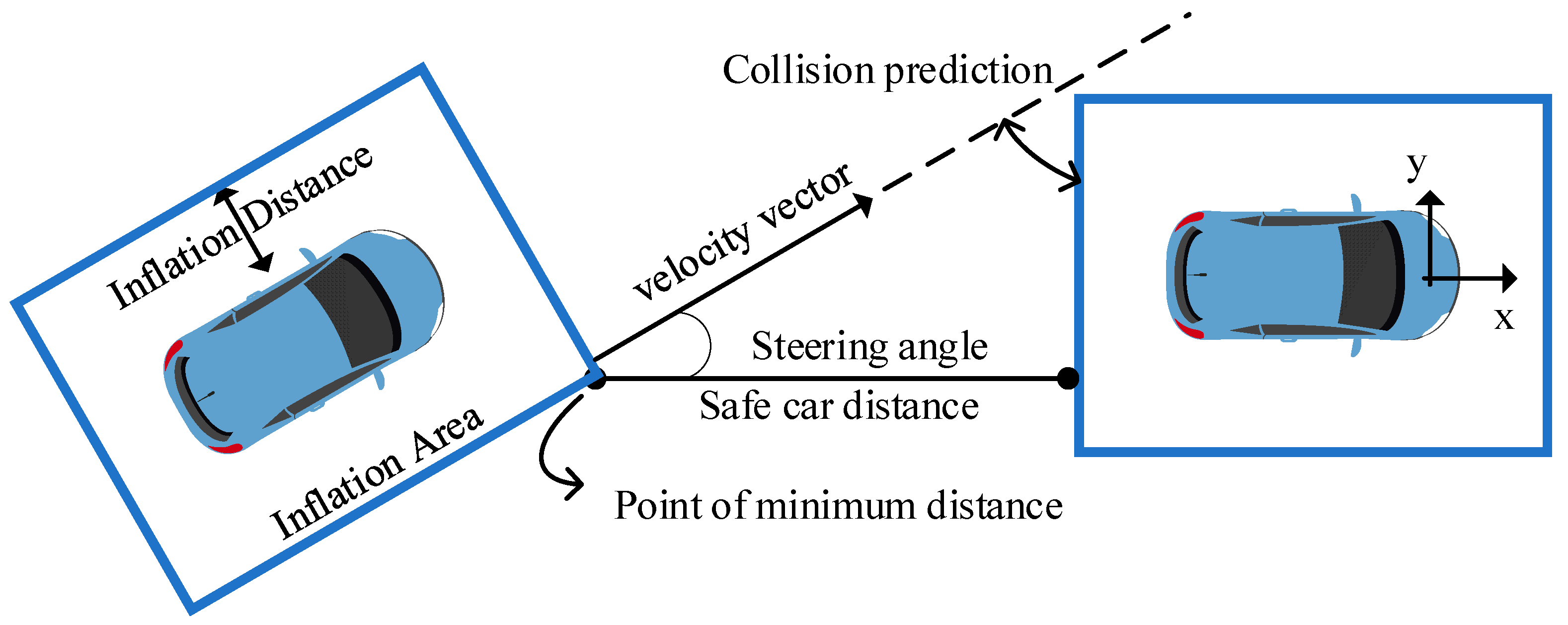

2.2. Proposed Car Dynamics Cost Model

- When , the collision is elastic; .

- When , the collision is inelastic; .

- When , the collision is completely inelastic; .
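The three cases above are conventionally distinguished by the coefficient of restitution, written here as e (an assumed symbol that may differ from the paper's notation): e = 1 for an elastic collision, 0 < e < 1 for an inelastic one, and e = 0 for a completely inelastic one. For a one-dimensional collision of two point masses, the kinetic energy lost is ΔE = ½ · m₁m₂/(m₁ + m₂) · (1 − e²)(v₁ − v₂)². The following is a minimal sketch under that textbook idealization (no friction, rotation, or crush modeling), with hypothetical vehicle masses and speeds:

```python
def collision_energy_loss(m1: float, v1: float, m2: float, v2: float, e: float) -> float:
    """Kinetic energy (J) dissipated in a 1-D collision of two point masses.

    m1, m2: masses (kg); v1, v2: velocities along the lane (m/s);
    e: coefficient of restitution (assumed notation), 0 <= e <= 1.
    """
    if not 0.0 <= e <= 1.0:
        raise ValueError("coefficient of restitution must lie in [0, 1]")
    reduced_mass = m1 * m2 / (m1 + m2)
    return 0.5 * reduced_mass * (1.0 - e * e) * (v1 - v2) ** 2


def collision_type(e: float) -> str:
    """Classify a collision by its coefficient of restitution."""
    if e == 1.0:
        return "elastic"
    if e == 0.0:
        return "completely inelastic"
    return "inelastic"


# Hypothetical example: a 1500 kg follower at 20 m/s strikes a stopped 1500 kg leader.
for e in (1.0, 0.5, 0.0):
    loss = collision_energy_loss(1500.0, 20.0, 1500.0, 0.0, e)
    print(f"e = {e}: {collision_type(e)}, energy loss = {loss:.0f} J")
```

For these example values the printed losses are 0 J, 112500 J, and 150000 J for e = 1, 0.5, and 0, so at a given closing speed the completely inelastic case dissipates the most energy.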

2.3. Markov Decision Process (MDP)

2.4. Policy Gradient Algorithm

2.5. Gaussian Policy Function
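A Gaussian policy is the standard choice for continuous actions such as a commanded deceleration. For a policy π(a|s,θ) = N(a; μ(s,θ), σ²), what a policy gradient method needs is the score ∇θ ln π(a|s,θ). The sketch below assumes a linear mean μ(s,θ) = θᵀφ(s) with a fixed standard deviation σ, in which case the score is (a − μ)φ(s)/σ². The feature vector φ, the fixed σ, and the example numbers are illustrative assumptions rather than the paper's stated design.

```python
import math
import random
from typing import List, Sequence


def gaussian_mean(theta: Sequence[float], features: Sequence[float]) -> float:
    """Assumed linear mean: mu(s) = theta . phi(s)."""
    return sum(t * f for t, f in zip(theta, features))


def gaussian_log_prob(action: float, mu: float, sigma: float) -> float:
    """Log-density of N(mu, sigma^2) evaluated at action."""
    var = sigma * sigma
    return -0.5 * math.log(2.0 * math.pi * var) - (action - mu) ** 2 / (2.0 * var)


def gaussian_score(action: float, theta: Sequence[float],
                   features: Sequence[float], sigma: float) -> List[float]:
    """grad_theta log pi(action | s) = (action - mu) / sigma^2 * phi(s)."""
    mu = gaussian_mean(theta, features)
    scale = (action - mu) / (sigma * sigma)
    return [scale * f for f in features]


def sample_action(theta: Sequence[float], features: Sequence[float],
                  sigma: float, rng: random.Random) -> float:
    """Draw a continuous action (e.g. a deceleration command) from the policy."""
    return rng.gauss(gaussian_mean(theta, features), sigma)


rng = random.Random(0)
theta = [0.5, -1.0]  # hypothetical policy parameters
phi = [2.0, 1.0]     # hypothetical state features
a = sample_action(theta, phi, sigma=0.5, rng=rng)
print(a, gaussian_score(a, theta, phi, sigma=0.5))
```

Keeping σ fixed keeps the example short. σ can also be learned, for example by parameterizing it as an exponential of a linear function so that it stays positive.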

2.6. Softmax Policy Function

3. Proposed Model

3.1. Vehicle Dynamics and Network Parameters

| Algorithm 1 Policy gradient for platoon |
|---|
| Input: a differentiable policy parameterization |
| Algorithm parameter: step size |
| Initialize policy parameter , environment and state |
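The sketch below is a minimal illustration of the generic Monte Carlo policy gradient update (REINFORCE, here with a running-average baseline b to reduce variance), θ ← θ + α(G − b)∇θ ln π(a|s,θ), combined with a softmax policy of the kind named in Section 2.6. For a softmax over action preferences, ∂ ln π(a)/∂θ_b = 1[b = a] − π(b). It assumes one-step episodes with a single choice among three hypothetical maneuvers whose rewards are the negatives of made-up, normalized energy losses. The maneuver names, loss values, baseline, and step size α = 0.1 (one of the learning rates in the parameter table) are illustrative assumptions and may differ from the exact steps of Algorithm 1.

```python
import math
import random
from typing import List

# Hypothetical maneuvers and normalized energy losses; illustrative values only.
ACTIONS = ["brake_in_lane", "change_left", "change_right"]
ENERGY_LOSS = [1.5, 0.4, 0.9]


def softmax(prefs: List[float]) -> List[float]:
    """Softmax policy: pi(a) = exp(h_a) / sum_b exp(h_b)."""
    m = max(prefs)  # subtract the maximum for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [x / total for x in exps]


def reinforce(episodes: int = 3000, alpha: float = 0.1, seed: int = 0) -> List[float]:
    """REINFORCE with a running-average baseline on one-step episodes."""
    rng = random.Random(seed)
    theta = [0.0] * len(ACTIONS)  # one action preference per maneuver
    baseline = 0.0
    for t in range(1, episodes + 1):
        probs = softmax(theta)
        a = rng.choices(range(len(ACTIONS)), weights=probs, k=1)[0]
        g = -ENERGY_LOSS[a]             # one-step episode: return = reward = -loss
        advantage = g - baseline        # baseline uses only past returns (0.0 at first)
        baseline += (g - baseline) / t  # then fold g into the running mean
        for b in range(len(theta)):
            # gradient of log pi(a) w.r.t. theta_b for a softmax policy
            grad_log_pi = (1.0 if b == a else 0.0) - probs[b]
            theta[b] += alpha * advantage * grad_log_pi
    return softmax(theta)


final = reinforce()
for name, p in zip(ACTIONS, final):
    print(f"{name}: {p:.3f}")
```

With these made-up losses the learned policy concentrates on `change_left`, the maneuver with the smallest loss. In a multi-step episode, G would be the discounted return from each time step, and the preferences would be functions of state features instead of one free parameter per action.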

3.2. Policy Gradient Decision-Making Behavior

3.2.1. State Space and Action Space

3.2.2. Mission Analysis

3.2.3. Observation Space Information Filtering

3.2.4. Reward Function Settings

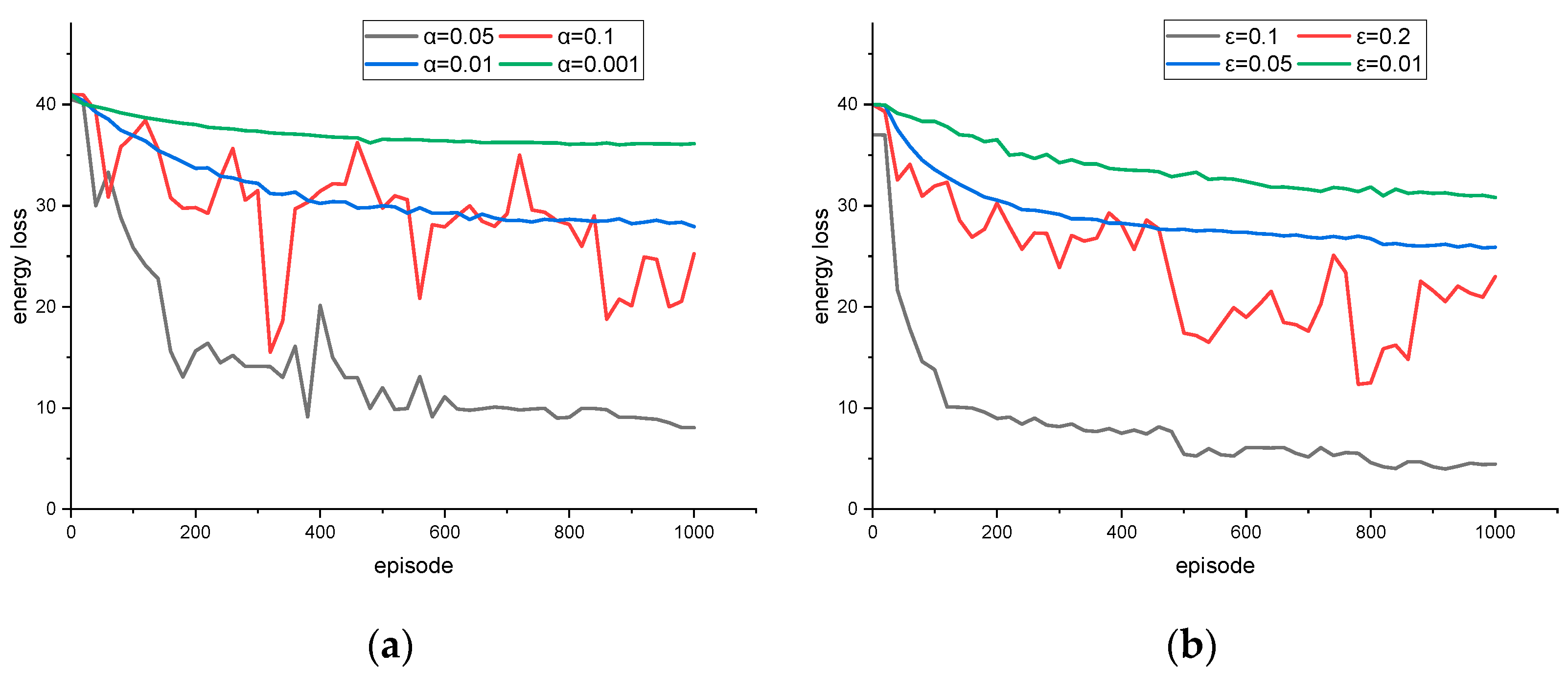

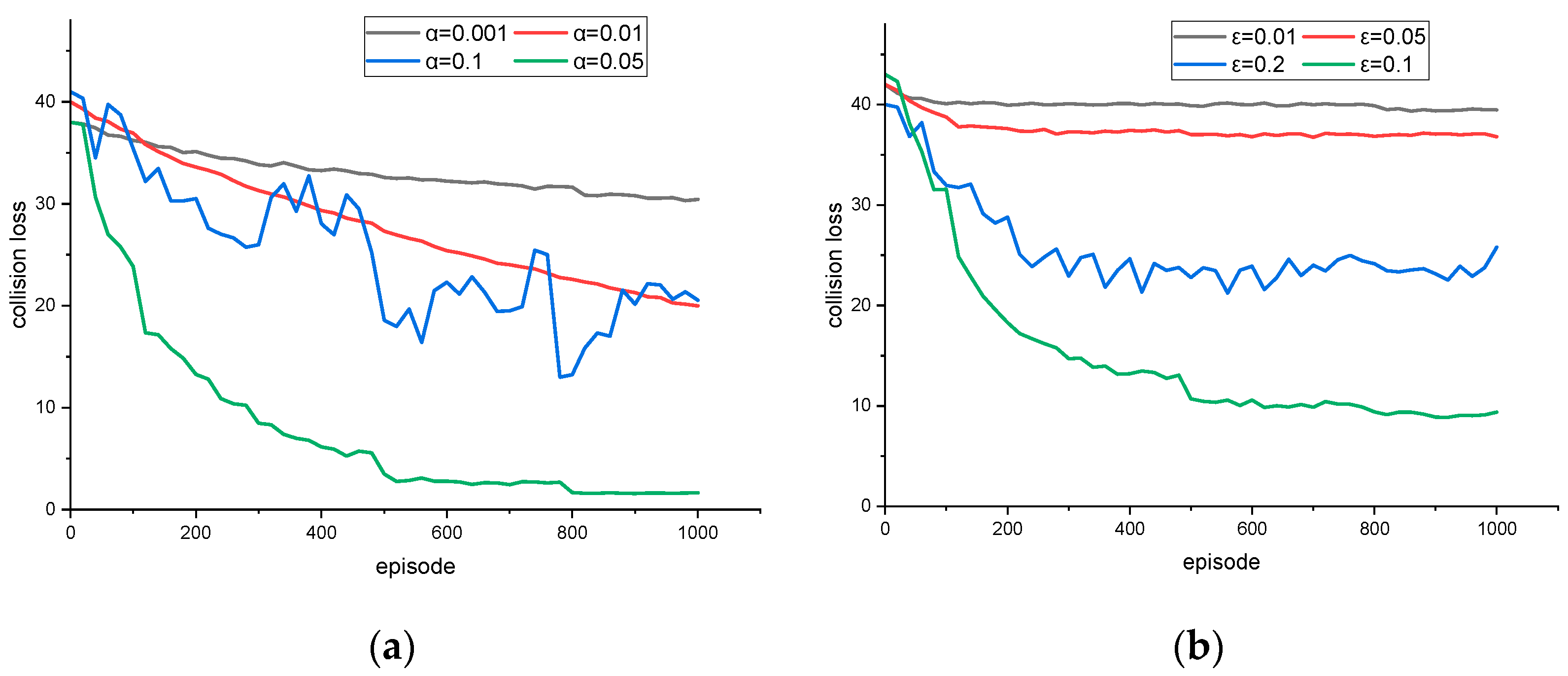

4. Analysis of Simulation Results

Analysis of Three-Lane Scenario Simulation Results

5. Conclusions

- This study used short-range communication networks only for small-scale vehicle communication; how reinforcement learning copes with more vehicles and additional network types (such as base stations) should be analyzed specifically.

- In more complex road environments, the reinforcement learning system should use multiple agents, and whether those agents should be distributed or centralized needs to be considered.

- The crash scenario in this study is only one of many low-probability situations; a more general decision algorithm that minimizes loss across such situations should be sought.

- Road traffic flow, traffic calming methods, and crash behavior decisions are endogenous to one another, and their correlation should be studied.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Parameter | Value |
|---|---|
| Car speed | 120 km/h |
| Headway | 1.5 s |
| Response time | 1 s |
| Deceleration | 8 m/s² |
| Routing packet size | 512 bytes |
| Simulation distance | 1000 m |
| Maximum rate | 10 MB/s |
| Number of nodes | 4 |
| Communication distance | 250 m |
| Hello packet interval | 1 s |
| Transfer protocol | UDP |
| Packet interval | 0.1 s |
| MAC layer protocol | IEEE 802.11 |
| Channel transmission rate | 3 Mbps |
| Learning rate α | 0.001, 0.01, 0.05, 0.1 |
| Exploration rate ε | 0.01, 0.05, 0.1, 0.2 |
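The kinematic entries in the table can be related with a short worked calculation. The following back-of-the-envelope check assumes idealized constant-deceleration kinematics and is an illustration, not a result reported in the paper. It converts the 120 km/h car speed to m/s, computes the spacing implied by the 1.5 s headway, and compares it with the distance a follower needs to stop after a 1 s response time at 8 m/s².

```python
def kmh_to_ms(speed_kmh: float) -> float:
    """Convert km/h to m/s."""
    return speed_kmh / 3.6


def stopping_distance(speed_ms: float, response_time_s: float, decel_ms2: float) -> float:
    """Distance travelled during the response time plus constant-deceleration braking."""
    reaction = speed_ms * response_time_s
    braking = speed_ms ** 2 / (2.0 * decel_ms2)
    return reaction + braking


v = kmh_to_ms(120.0)                   # car speed, about 33.3 m/s
gap = v * 1.5                          # spacing implied by a 1.5 s headway, about 50 m
stop = stopping_distance(v, 1.0, 8.0)  # 1 s response, 8 m/s^2 braking, about 102.8 m
print(f"speed {v:.1f} m/s, headway gap {gap:.1f} m, stopping distance {stop:.1f} m")
print(f"shortfall if the leader stops instantly: {stop - gap:.1f} m")
```

Under these assumptions the stopping distance (about 102.8 m) is roughly twice the 50 m headway gap, so a follower cannot stop short of a leader that halts almost instantly, as in the crash scenario described in the Introduction. This is consistent with the need for the collision-mitigation decisions studied here.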

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gu, Z.; Liu, Z.; Wang, Q.; Mao, Q.; Shuai, Z.; Ma, Z. Reinforcement Learning-Based Approach for Minimizing Energy Loss of Driving Platoon Decisions. Sensors 2023, 23, 4176. https://doi.org/10.3390/s23084176
