Real-Time Recognition and Localization Based on Improved YOLOv5s for Robot’s Picking Clustered Fruits of Chilies

Abstract

1. Introduction

2. Related Work

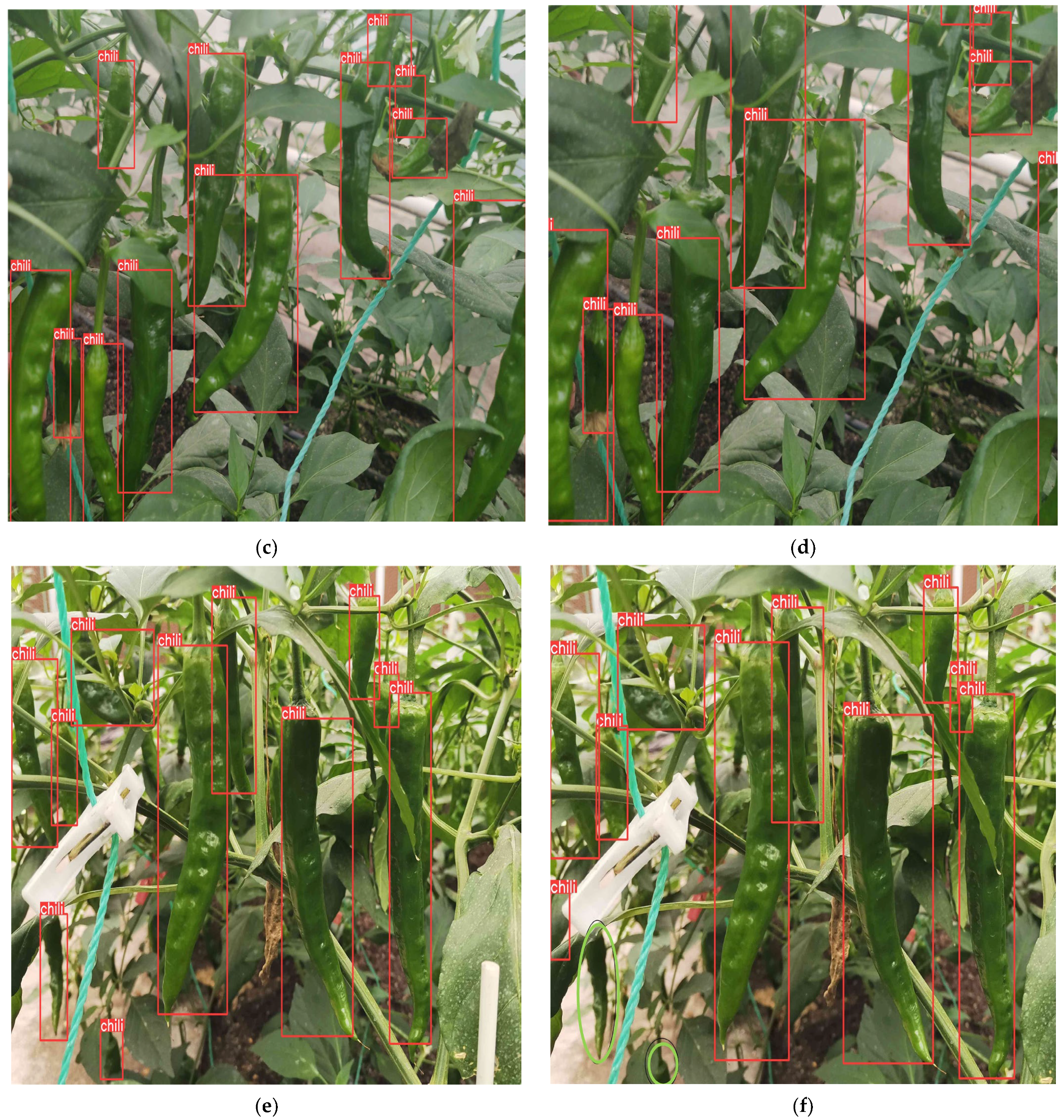

3. Problem Statement

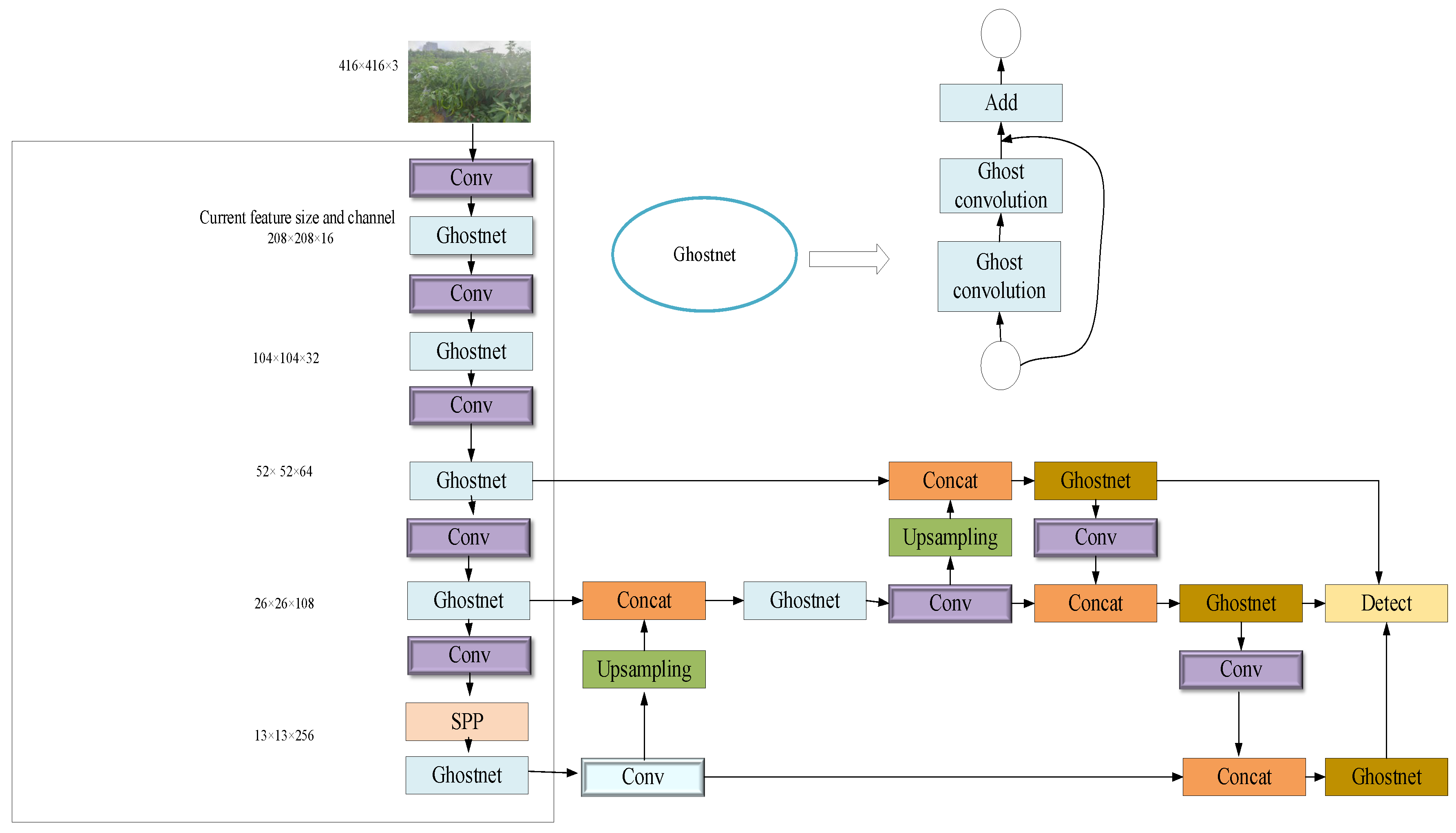

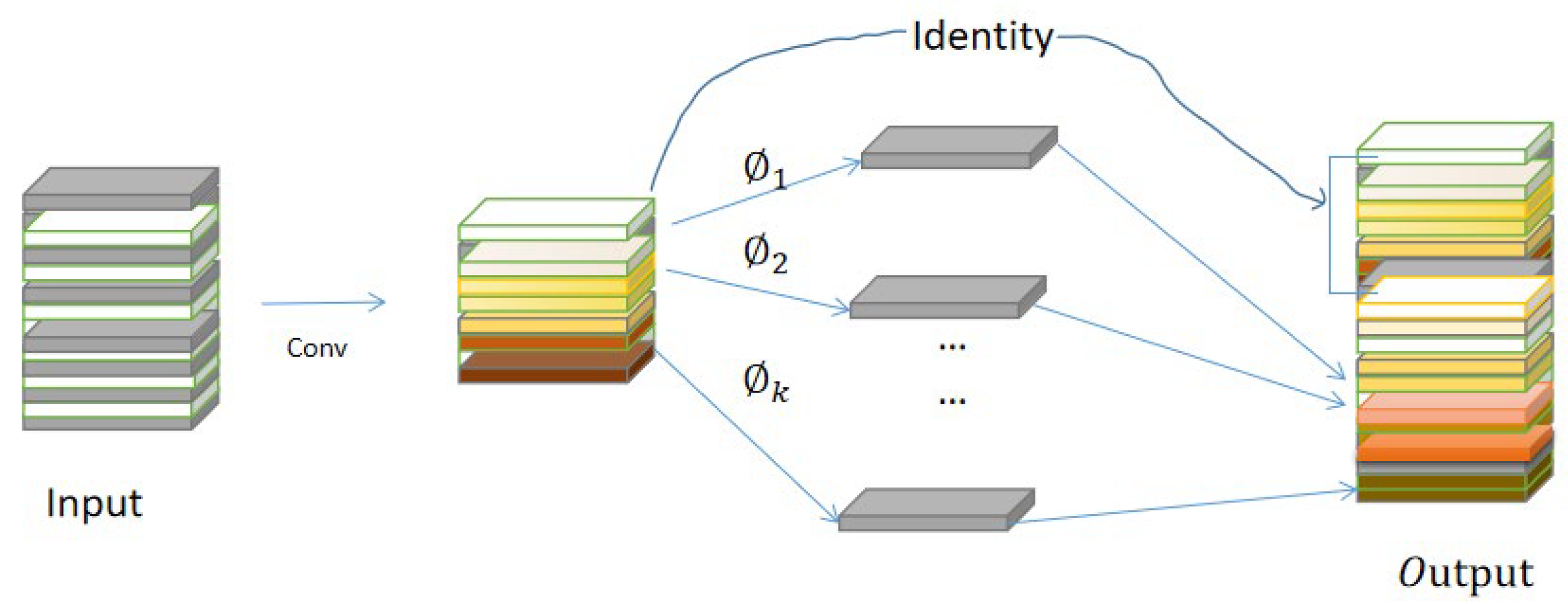

3.1. GhostNet Module

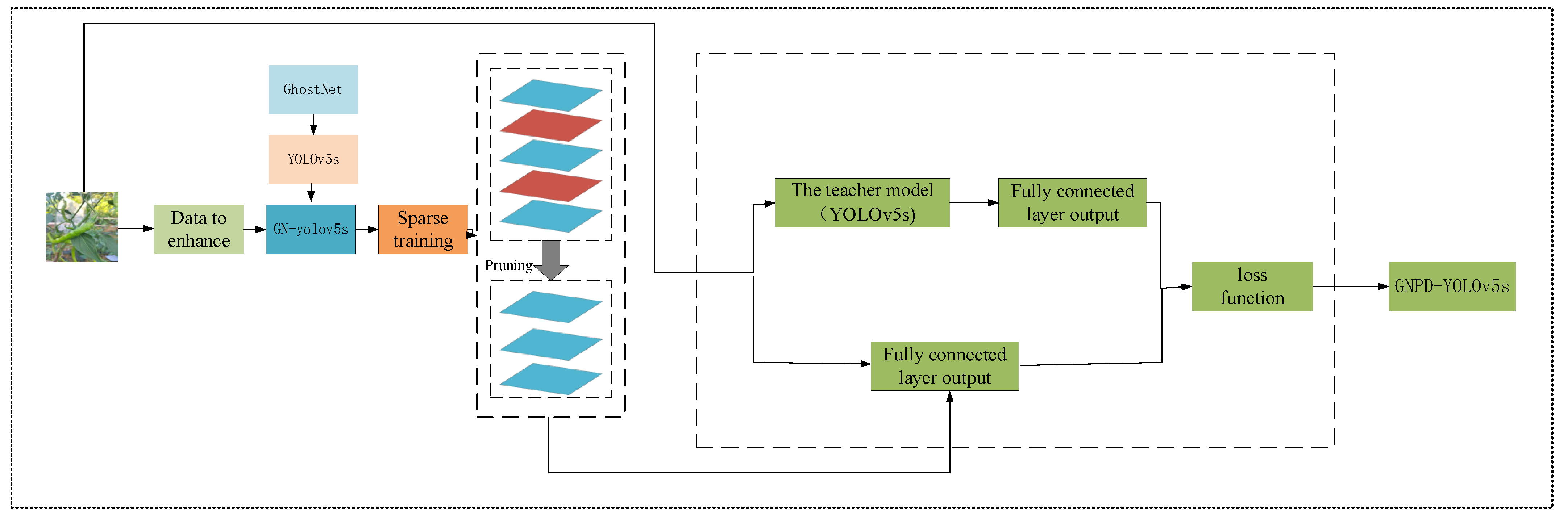

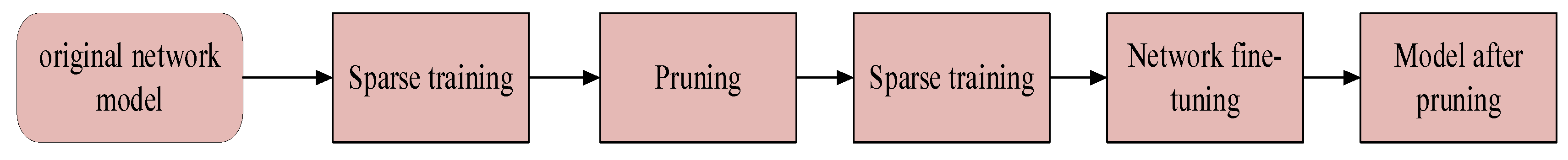
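The Ghost module replaces part of an ordinary convolution with cheap linear operations. To show roughly where the savings come from, the sketch below counts multiplications for a standard convolution and for a Ghost module, following the cost analysis in the GhostNet paper (Han et al., 2020). The layer shape (64 to 128 channels, 80 × 80 output, 3 × 3 kernels), the ratio s = 2 and the cheap-operation kernel size d = 3 are illustrative assumptions. They are not the configuration used in GN-YOLOv5s.

```python
def conv_cost(c_in: int, n_out: int, h_out: int, w_out: int, k: int) -> int:
    """Multiplications of a standard k x k convolution producing n_out maps."""
    return n_out * h_out * w_out * c_in * k * k


def ghost_cost(c_in: int, n_out: int, h_out: int, w_out: int,
               k: int, s: int = 2, d: int = 3) -> int:
    """Multiplications of a Ghost module with ratio s and cheap-op kernel d.

    A primary k x k convolution yields m = n_out / s intrinsic maps. Each
    intrinsic map then feeds (s - 1) cheap d x d depthwise (linear) ops, and
    the intrinsic and ghost maps are concatenated back to n_out channels.
    """
    if n_out % s != 0:
        raise ValueError("n_out must be divisible by the ratio s")
    m = n_out // s
    primary = m * h_out * w_out * c_in * k * k
    cheap = (s - 1) * m * h_out * w_out * d * d
    return primary + cheap


# Illustrative layer: 64 -> 128 channels on an 80 x 80 map, 3 x 3 kernels.
std = conv_cost(64, 128, 80, 80, 3)
ghost = ghost_cost(64, 128, 80, 80, 3, s=2, d=3)
speedup = std / ghost
# Closed form from the GhostNet analysis: s*c*k^2 / (c*k^2 + (s-1)*d^2)
closed_form = 2 * 64 * 9 / (64 * 9 + 1 * 9)
print(f"standard={std:,}  ghost={ghost:,}  speed-up={speedup:.3f}")
# prints: standard=471,859,200  ghost=239,616,000  speed-up=1.969
```

With s = 2 the cost roughly halves. As the input channel count c grows, the ratio approaches s.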

3.2. Model Compression

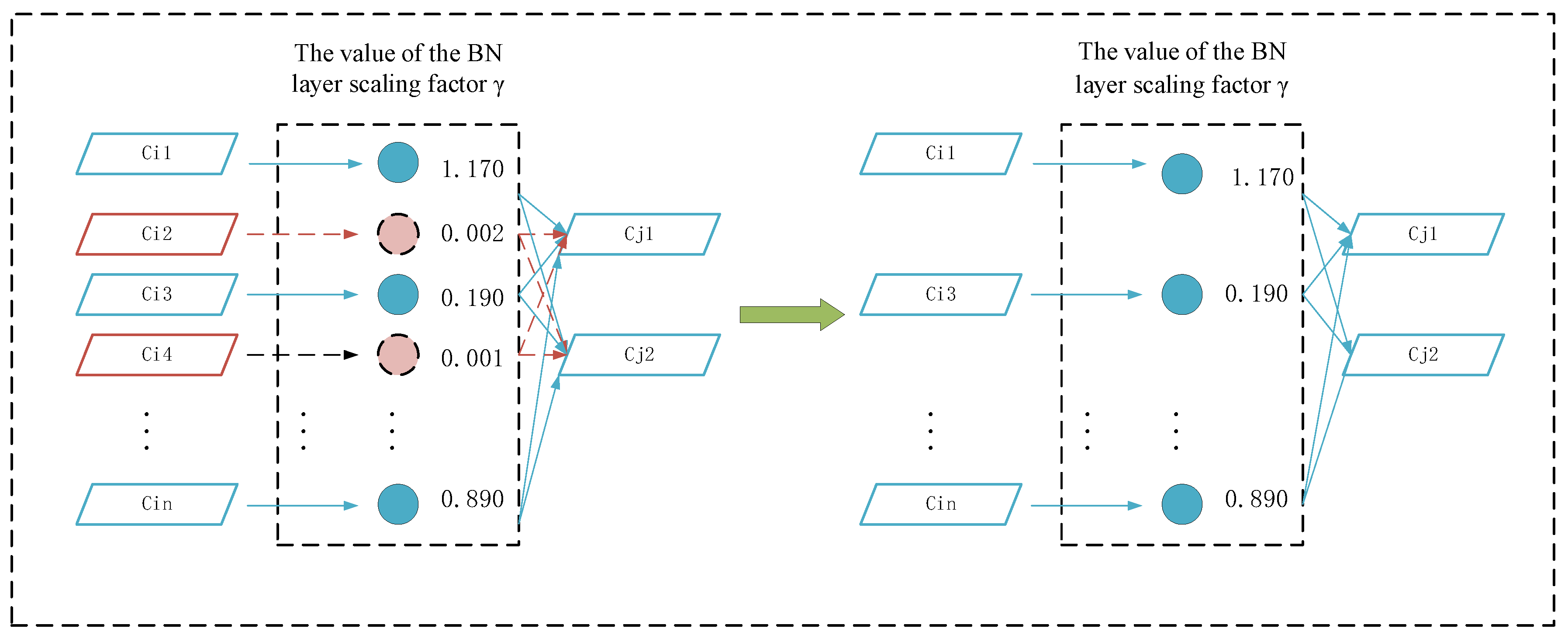

3.2.1. Channel Pruning

- (1) Sparse training
- (2) Channel pruning
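The two steps above are often implemented in the network-slimming style. Sparse training adds an L1 penalty on the batch-normalization scaling factors γ, and channel pruning then removes channels whose |γ| falls below a global threshold. This section does not spell out the penalty or the threshold rule, so the following pure-Python sketch assumes that scheme. The penalty weight `lam`, the learning rate, the pruning ratio and the helper names are hypothetical. A real implementation would also have to slice the matching convolution weights.

```python
def sign(x: float) -> int:
    """Return +1, 0 or -1 (a subgradient of |x|, taking 0 at x = 0)."""
    return (x > 0) - (x < 0)


def sparse_step(gammas, grads, lr=0.01, lam=1e-4):
    """One SGD step on BN scale factors with an extra L1 penalty lam * sum|gamma|.

    The penalty adds lam * sign(gamma) to each task-loss gradient, so the
    scale factors of unimportant channels drift toward zero.
    """
    return [g - lr * (dg + lam * sign(g)) for g, dg in zip(gammas, grads)]


def prune_masks(layer_gammas, prune_ratio=0.5):
    """Keep channels whose |gamma| reaches one global threshold.

    The threshold is chosen so that roughly prune_ratio of all channels
    fall below it. Every layer keeps at least its strongest channel.
    """
    mags = sorted(abs(g) for layer in layer_gammas for g in layer)
    k = int(len(mags) * prune_ratio)
    threshold = mags[k] if k < len(mags) else float("inf")
    masks = []
    for layer in layer_gammas:
        mask = [abs(g) >= threshold for g in layer]
        if not any(mask):  # never empty a layer entirely
            best = max(range(len(layer)), key=lambda i: abs(layer[i]))
            mask[best] = True
        masks.append(mask)
    return masks


# Toy scale factors for two 4-channel layers after sparse training.
layers = [[0.9, 0.01, 0.5, 0.02], [0.03, 0.7, 0.001, 0.4]]
masks = prune_masks(layers, prune_ratio=0.5)
print(masks)  # [[True, False, True, False], [False, True, False, True]]
```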

3.2.2. Knowledge Distillation

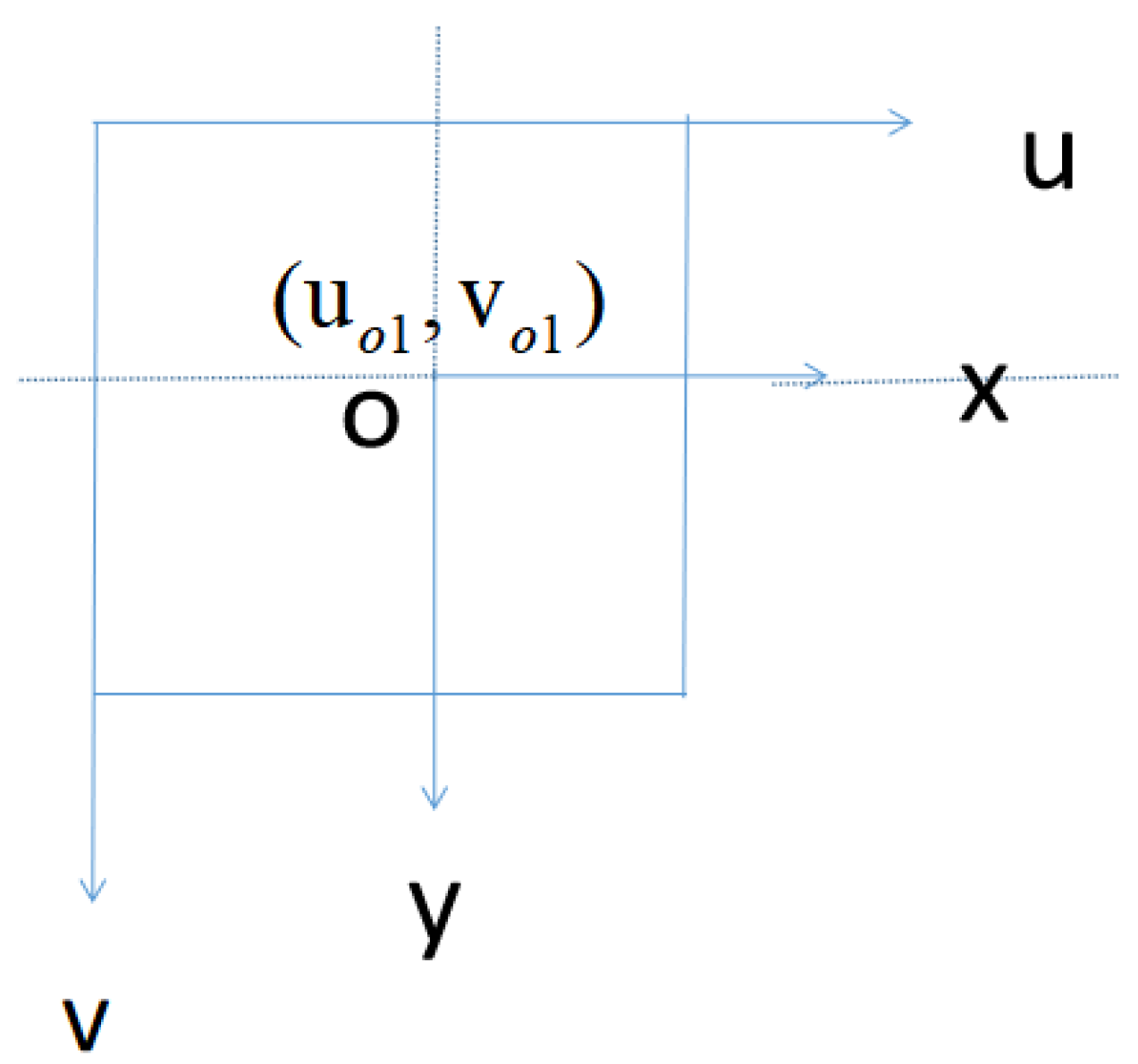

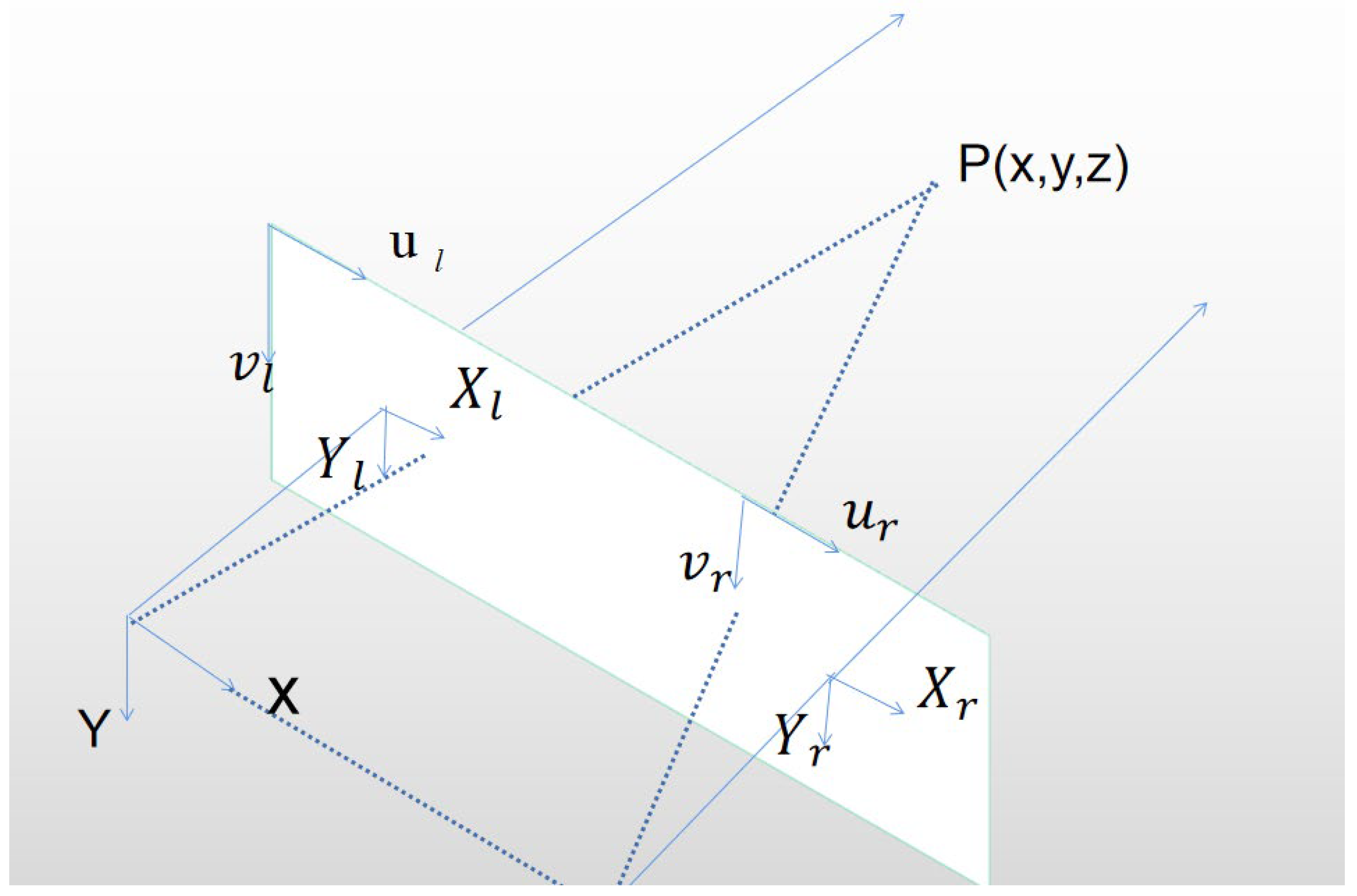
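Knowledge distillation trains the compact student network to match a larger teacher. The sketch below assumes the classic soft-target formulation of Hinton et al. It compares temperature-softened softmax outputs with a KL divergence, scales the result by T², and blends it with the ordinary hard-label loss. The temperature `T`, the weight `alpha` and the toy logits are assumptions. A detector such as YOLOv5 would apply distillation to its classification, objectness and box outputs, and the exact loss used for GNPD-YOLOv5s is not reproduced here.

```python
import math


def softmax(logits, T=1.0):
    """Temperature-scaled softmax, shifted by the max logit for stability."""
    scaled = [z / T for z in logits]
    top = max(scaled)
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, T=4.0):
    """Soft-target loss: T^2 * KL(p_teacher || p_student) at temperature T."""
    p_t = softmax(teacher_logits, T)
    p_s = softmax(student_logits, T)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)
    return T * T * kl


def distill_objective(hard_loss, student_logits, teacher_logits, alpha=0.5, T=4.0):
    """Blend the ordinary (hard-label) loss with the soft-target term."""
    return (1 - alpha) * hard_loss + alpha * kd_loss(student_logits, teacher_logits, T)


teacher = [4.0, 1.0, -2.0]
student = [2.5, 1.5, -1.0]
print(round(kd_loss(student, teacher), 4))
```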

3.2.3. Location of Chili Fruit
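Once a chili is detected, its image position has to be converted into 3-D coordinates for the picking robot. This section does not give the sensor setup or calibration, so the sketch below makes several assumptions. It assumes a calibrated, rectified stereo pair and takes the centre of the detection box as the target pixel. It then recovers depth from disparity (Z = fB/d) and back-projects the pixel with the pinhole model. All intrinsic values, the baseline and the disparity are hypothetical. With an RGB-D camera, Z would instead be read from the aligned depth map.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole intrinsics in pixels (hypothetical calibration values)."""
    fx: float
    fy: float
    cx: float
    cy: float


def stereo_depth(disparity_px: float, focal_px: float, baseline_mm: float) -> float:
    """Depth of a rectified stereo match: Z = f * B / d (same unit as B)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_mm / disparity_px


def pixel_to_camera(u: float, v: float, z: float, K: Intrinsics):
    """Back-project pixel (u, v) at depth z into camera coordinates."""
    return ((u - K.cx) * z / K.fx, (v - K.cy) * z / K.fy, z)


def box_center(x1: float, y1: float, x2: float, y2: float):
    """Centre of an axis-aligned detection box."""
    return ((x1 + x2) / 2, (y1 + y2) / 2)


K = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
u, v = box_center(300, 200, 380, 300)  # (340.0, 250.0)
z = stereo_depth(disparity_px=60.0, focal_px=600.0, baseline_mm=60.0)  # 600.0 mm
print(pixel_to_camera(u, v, z, K))  # (20.0, 10.0, 600.0)
```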

4. Experiment

4.1. The Chili Dataset

Acquisition of Image Data

4.2. Analysis of Experimental Results

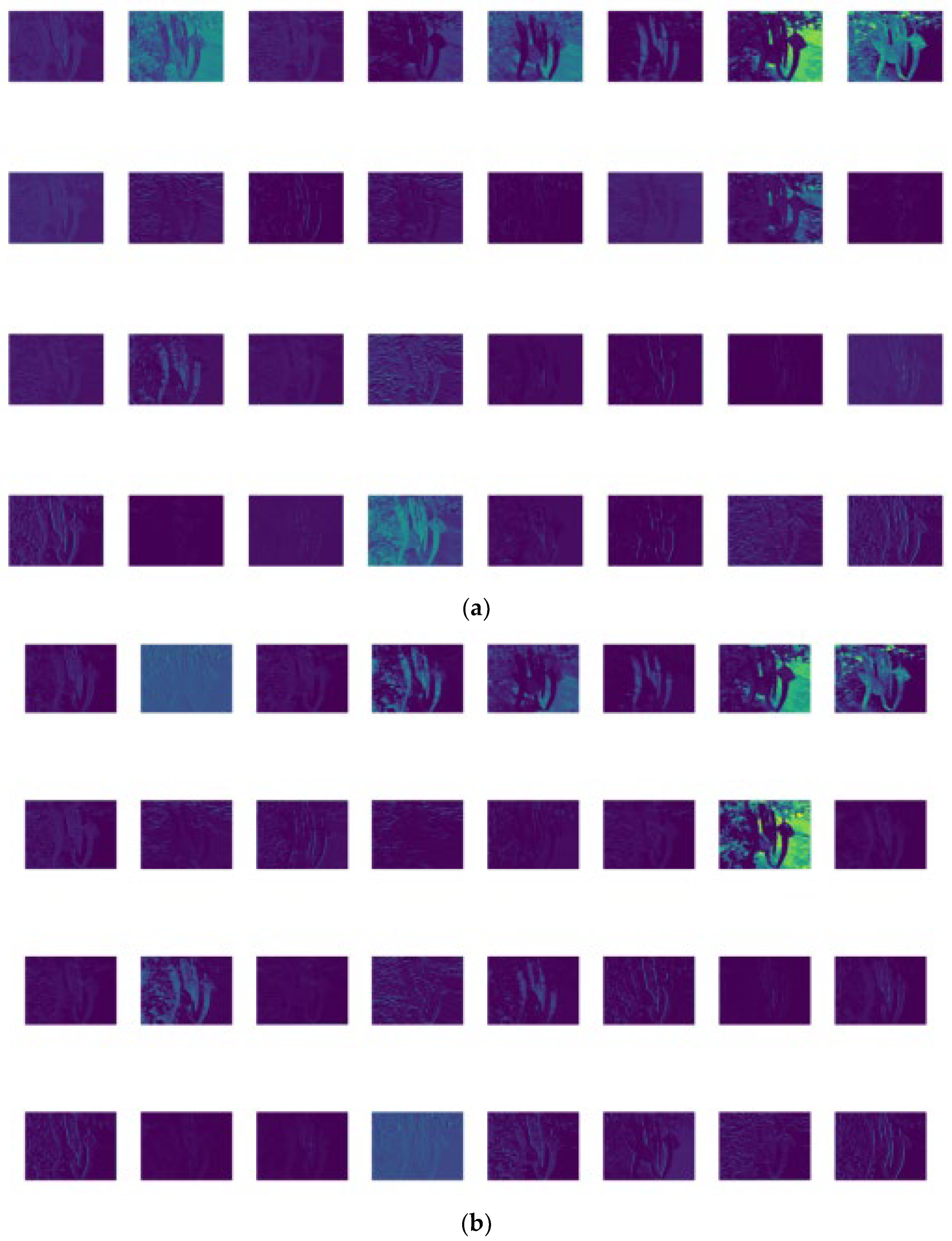

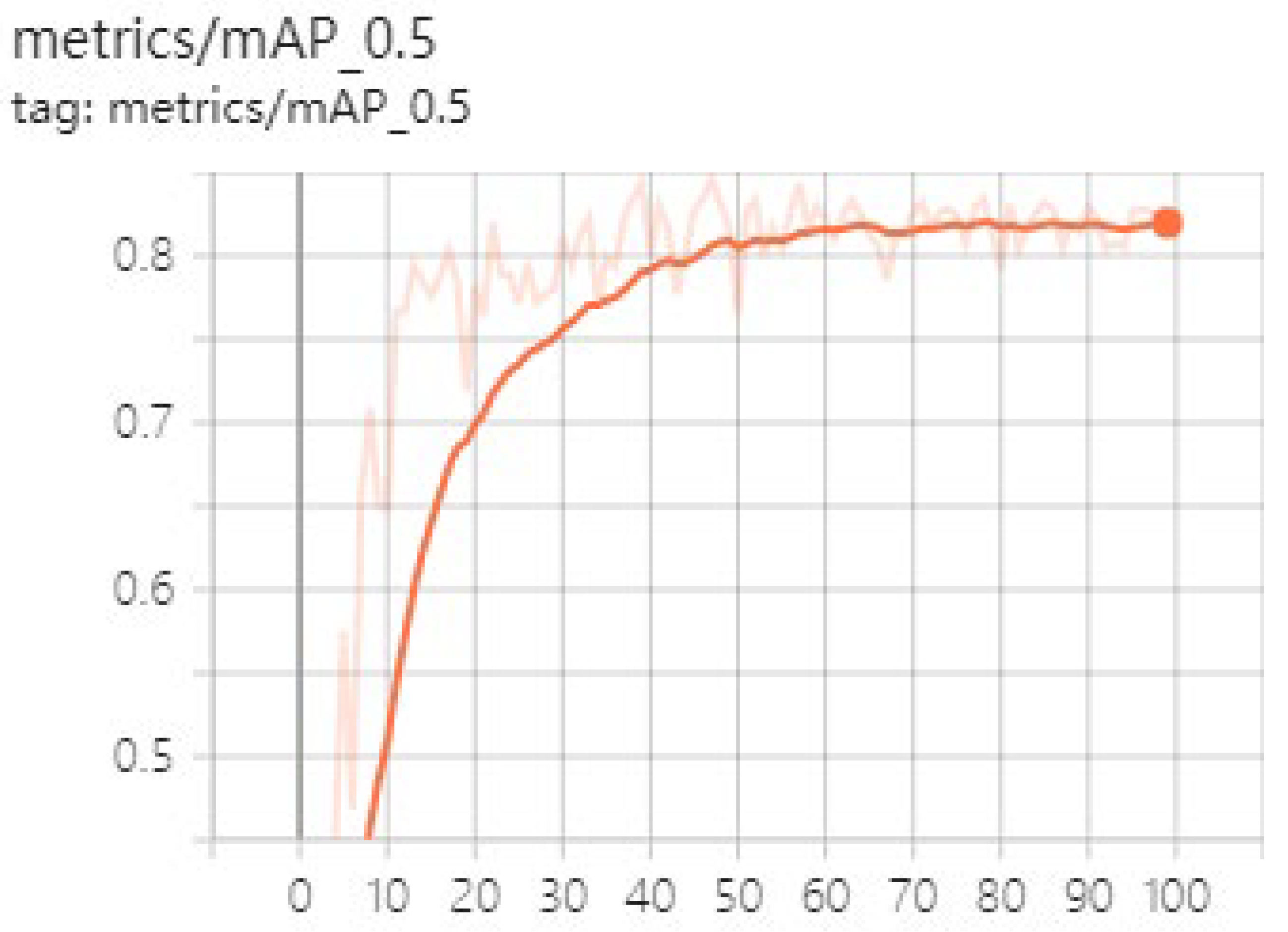

4.2.1. Sparse Training

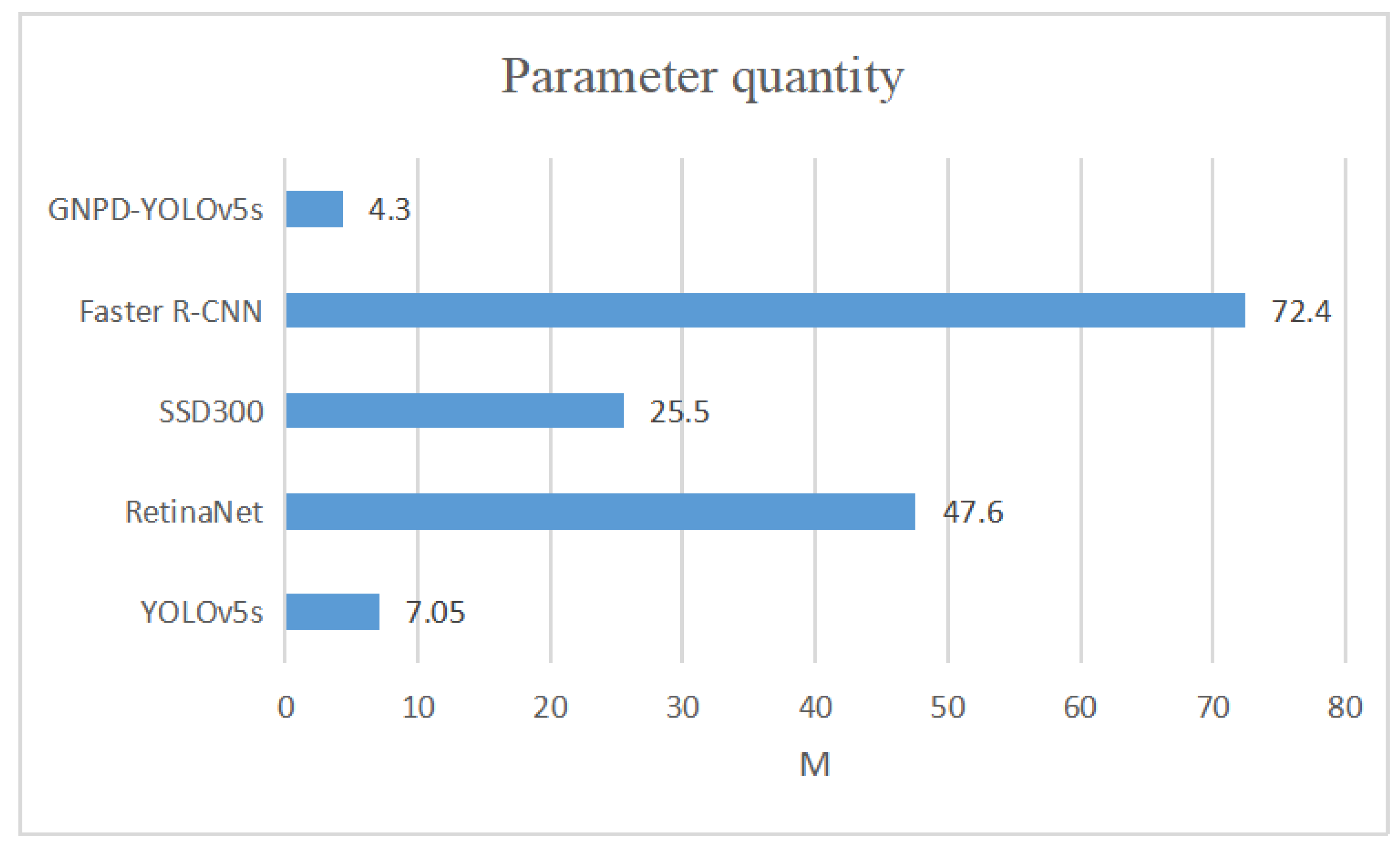

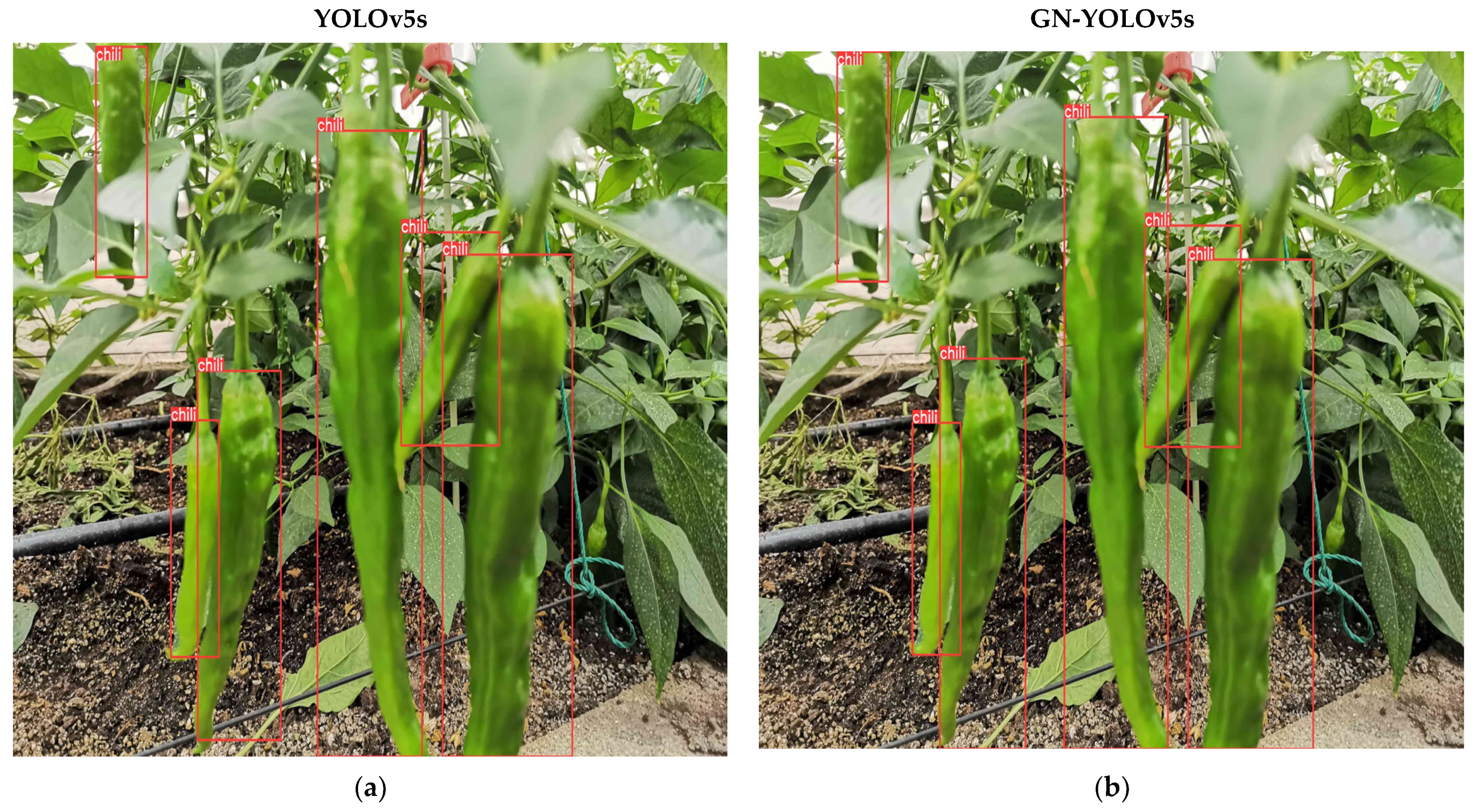

4.2.2. Comparative Experimental Analysis of Recognition Models

4.2.3. Ablation Experiment

4.3. Three-Dimensional Spatial Localization of the Chili Target

4.3.1. Spatial Localization of Chili

4.3.2. Chili Ranging with Different Degrees of Shading

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Dataset | Unoccluded Samples | Occluded Samples | Total |
|---|---|---|---|
| Training set | 5324 | 2671 | 6995 |
| Validation set | 673 | 291 | 964 |
| Test set | 644 | 235 | 879 |

| Model | Recall | mAP | Inference Time/(ms/Frame) |
|---|---|---|---|
| RetinaNet | 0.863 | 0.745 | 37 |
| Faster R-CNN | 0.942 | 0.831 | 162 |
| SSD300 | 0.792 | 0.769 | 32 |
| SSD512 | 0.812 | 0.774 | 65 |
| GNPD-YOLOv5s | 0.927 | 0.869 | 14 |
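The table reports per-frame latency, so converting it to frames per second makes the real-time comparison easier to read. The sketch below uses only the latencies in the table and assumes that each value is the complete per-frame processing time.

```python
latency_ms = {
    "RetinaNet": 37,
    "Faster R-CNN": 162,
    "SSD300": 32,
    "SSD512": 65,
    "GNPD-YOLOv5s": 14,
}
# Frames per second = 1000 ms / per-frame latency in ms.
fps = {name: 1000.0 / ms for name, ms in latency_ms.items()}
for name, rate in sorted(fps.items(), key=lambda kv: -kv[1]):
    print(f"{name:>14}: {rate:6.1f} FPS")
speedup_vs_frcnn = latency_ms["Faster R-CNN"] / latency_ms["GNPD-YOLOv5s"]
```

At 14 ms per frame, GNPD-YOLOv5s runs at roughly 71 FPS, about 11.6 times faster than Faster R-CNN. Faster R-CNN has the higher recall (0.942 versus 0.927) but the lower mAP (0.831 versus 0.869).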

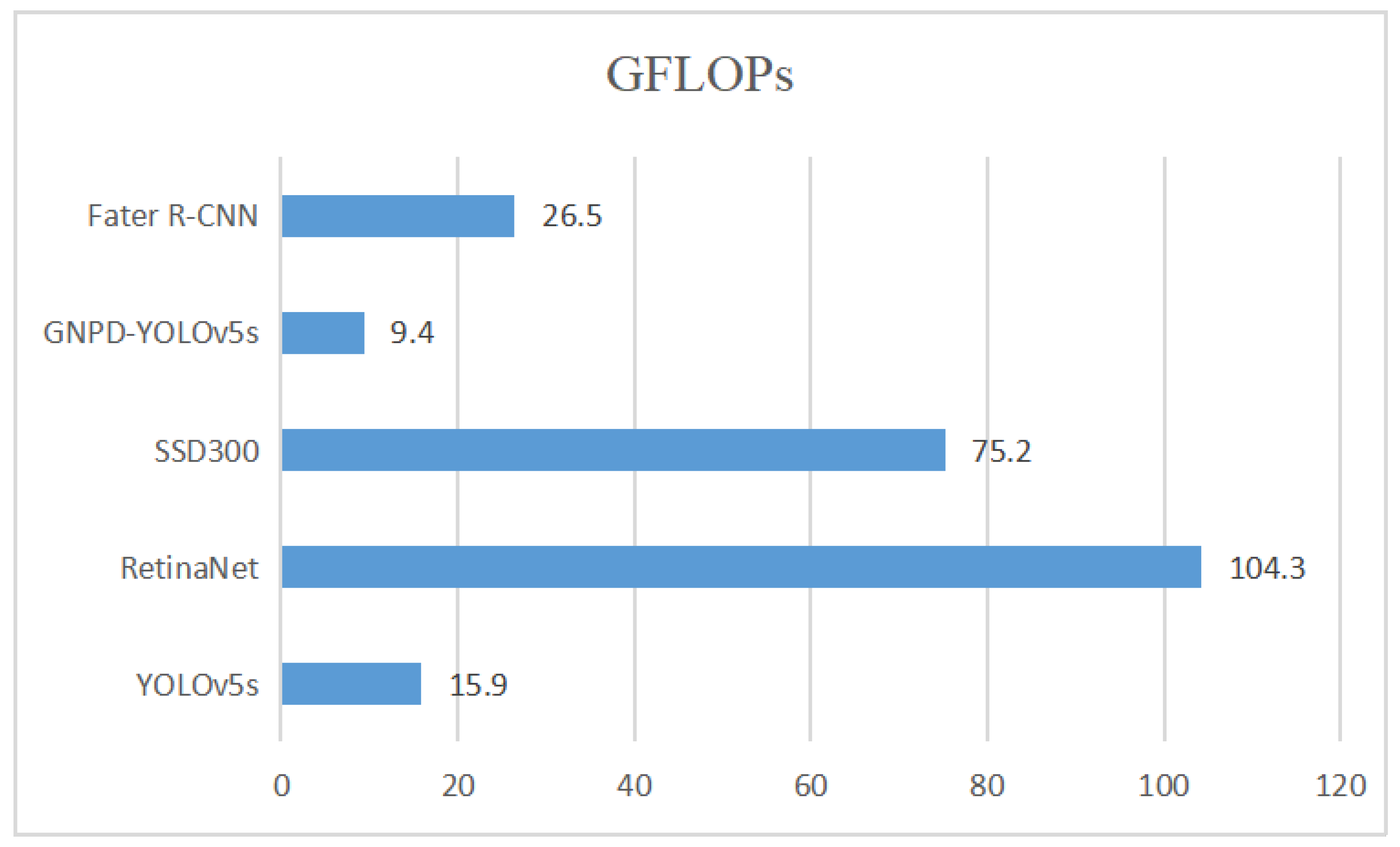

| Model | Recall | Computation/GFLOPs | Model Size/MB | mAP | Inference Time/(ms/Frame) |
|---|---|---|---|---|---|
| YOLOv5s | 0.942 | 15.9 | 14.74 | 0.892 | 29 |
| GN-YOLOv5s | 0.921 | 13.2 | 12.86 | 0.873 | 18 |
| GN-YOLOv5s + Channel pruning | 0.915 | 9.4 | 7.84 | 0.856 | 16 |
| GNPD-YOLOv5s | 0.927 | 9.4 | 7.84 | 0.869 | 14 |
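The ablation table gives enough information to compute the relative savings of the full GNPD-YOLOv5s pipeline over the YOLOv5s baseline. The sketch below uses only values from the table.

```python
baseline = {"GFLOPs": 15.9, "size_MB": 14.74, "latency_ms": 29, "mAP": 0.892}  # YOLOv5s
final = {"GFLOPs": 9.4, "size_MB": 7.84, "latency_ms": 14, "mAP": 0.869}       # GNPD-YOLOv5s


def reduction_pct(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, in percent."""
    return 100.0 * (before - after) / before


for key in ("GFLOPs", "size_MB", "latency_ms"):
    print(f"{key}: -{reduction_pct(baseline[key], final[key]):.1f}%")
map_drop = baseline["mAP"] - final["mAP"]
print(f"mAP: -{map_drop:.3f}")
```

Compared with YOLOv5s, GNPD-YOLOv5s reduces computation by about 40.9%, model size by about 46.8% and inference time by about 51.7%, at a cost of 0.023 in mAP. Relative to the pruned model, knowledge distillation leaves GFLOPs and model size unchanged but raises mAP from 0.856 to 0.869 and recall from 0.915 to 0.927.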

| Trial | Theoretical Position | Measured Position (Target Moved in 20 mm Steps) | Z-Axis Error/mm |
|---|---|---|---|
| 1 | (100 mm, 100 mm, 100 mm) | (100.62 mm, 100.54 mm, 100.06 mm) | 0.06 |
| 2 | (100 mm, 100 mm, 120 mm) | (100.58 mm, 100.64 mm, 120.12 mm) | 0.12 |
| 3 | (100 mm, 100 mm, 140 mm) | (100.55 mm, 100.29 mm, 140.08 mm) | 0.08 |
| 4 | (100 mm, 100 mm, 160 mm) | (100.69 mm, 100.78 mm, 161.62 mm) | 1.62 |
| 5 | (100 mm, 100 mm, 180 mm) | (100.81 mm, 100.96 mm, 180.24 mm) | 0.24 |
| 6 | (100 mm, 100 mm, 200 mm) | (100.027 mm, 100.45 mm, 200.43 mm) | 0.43 |
| 7 | (100 mm, 100 mm, 220 mm) | (100.95 mm, 100.43 mm, 220.16 mm) | 0.16 |
| 8 | (100 mm, 100 mm, 240 mm) | (100.34 mm, 101.15 mm, 241.84 mm) | 1.84 |
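The localization results can be checked with a short script. The sketch below copies the measured and theoretical coordinates as printed and computes, for each trial, the absolute depth deviation |Δz| and the full 3-D Euclidean error. The reported error coincides with |Δz| in every trial, so it describes depth error only. The x and y deviations, which reach 1.15 mm, are not included in it.

```python
import math

# Theoretical positions: x = y = 100 mm, z from 100 to 240 mm in 20 mm steps.
theoretical = [(100, 100, z) for z in range(100, 241, 20)]
measured = [
    (100.62, 100.54, 100.06),
    (100.58, 100.64, 120.12),
    (100.55, 100.29, 140.08),
    (100.69, 100.78, 161.62),
    (100.81, 100.96, 180.24),
    (100.027, 100.45, 200.43),
    (100.95, 100.43, 220.16),
    (100.34, 101.15, 241.84),
]
reported = [0.06, 0.12, 0.08, 1.62, 0.24, 0.43, 0.16, 1.84]

z_err = [abs(m[2] - t[2]) for m, t in zip(measured, theoretical)]
euclid = [math.dist(m, t) for m, t in zip(measured, theoretical)]
for i, (dz, e3, rep) in enumerate(zip(z_err, euclid, reported), start=1):
    print(f"trial {i}: |dz|={dz:.2f} (reported {rep:.2f})  3-D error={e3:.2f} mm")
print(f"mean |dz| = {sum(z_err) / len(z_err):.3f} mm, max 3-D error = {max(euclid):.2f} mm")
```

Over the eight trials the mean depth error is about 0.57 mm. The largest 3-D error, about 2.20 mm, occurs in trial 8.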

| Trial | Occluded Area (%) | Ranging Result (m) |
|---|---|---|
| 1 | 20 | 0.608 |
| 2 | 30 | 0.609 |
| 3 | 40 | 0.553 |
| 4 | 50 | 0.562 |
| 5 | 60 | 0.574 |
| 6 | 70 | 0.586 |
| 7 | 80 | 0.547 |
| 8 | 90 | Not identified |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, S.; Xie, M. Real-Time Recognition and Localization Based on Improved YOLOv5s for Robot’s Picking Clustered Fruits of Chilies. Sensors 2023, 23, 3408. https://doi.org/10.3390/s23073408
