LSTM-Based Projectile Trajectory Estimation in a GNSS-Denied Environment †

Abstract

1. Introduction

- to detail an LSTM-based approach that estimates projectile positions, velocities and Euler angles from the embedded IMU, the magnetic field reference, pre-flight parameters and a time vector.

- to present BALCO (BALlistic COde) [21], the simulator used to generate the dataset. It provides true-to-life trajectories of several projectiles according to the ammunition parameters.

- to investigate different normalization forms of the LSTM input data in order to evaluate their contribution to the estimation accuracy. For this purpose, several LSTMs are trained with different input data normalizations.

- to study the impact of the local navigation frame rotation on the estimation accuracy. Rotating the local navigation frame during the training step yields similar variation ranges along the three axes, especially for the lateral position, which is extremely small compared to the two other axes. This method shares the same goal as normalization but without any information loss.

- to examine the influence of inertial sensor models on estimation accuracy. For this purpose, two inertial sensor error models are compared through their effect on the LSTM predictions.

- to compare the LSTM accuracy to that of a Dead-Reckoning algorithm on finned mortar trajectories. Estimation methods are evaluated through error criteria based on the Root Mean Square Error and the impact point error.

2. Related Work

2.1. Model-Based Projectile Trajectory Estimation

2.2. AI-Based Trajectory Estimation

- Surveillance and target recognition, where machine learning algorithms applied to computer vision detect, identify and track objects of interest. For example, the Maven project, presented by the US Department of Defense (DoD), focuses on automatic target identification and localization from images collected by Aerial Vehicles [11].

- Predictive maintenance to establish the optimal time to change a part of a system, as the US Army does on F-16 aircraft [12].

- Military training where AI is used in training simulation software to improve efficiency through various scenarios, such as AIMS (Artificial Intelligence for Military Simulation) [13].

- Analysis and decision support to extract and deal with relevant elements in an information flow, to analyze a field or to predict events. The Defense Advanced Research Projects Agency (DARPA) aims to equip US Army helicopter pilots with augmented reality helmets to support them in operations [14].

- Cybersecurity, as military systems are strongly sensitive to cyberattacks leading to loss and theft of critical information. To this end, the DeepArmor program from SparkCognition uses AI to protect, detect and block networks, computer programs and data from cyber threats [15].

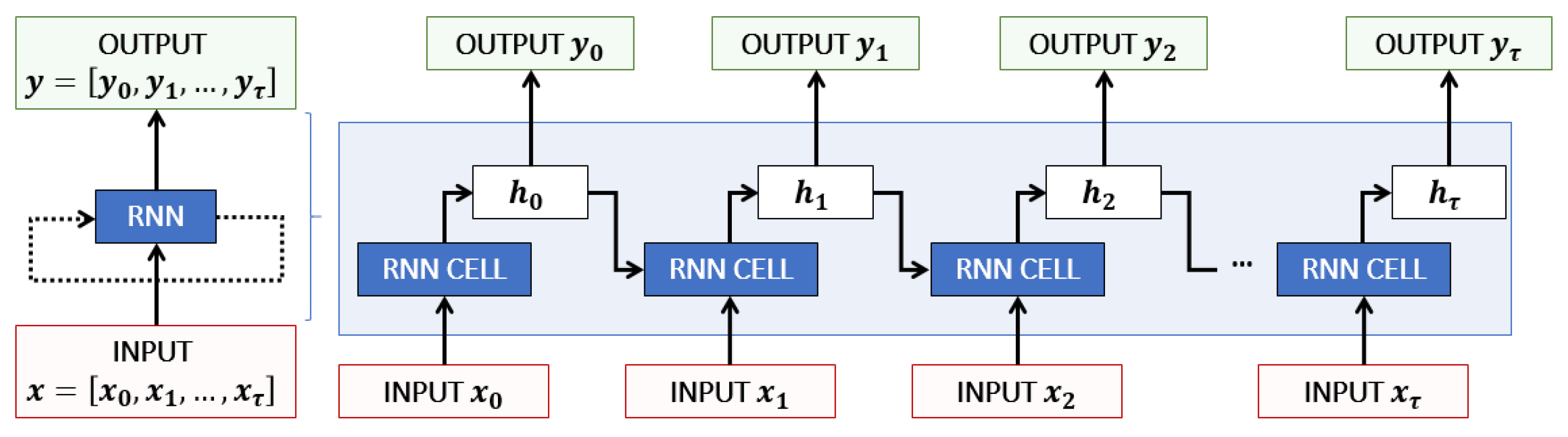

2.2.1. Recurrent Neural Networks (RNNs)

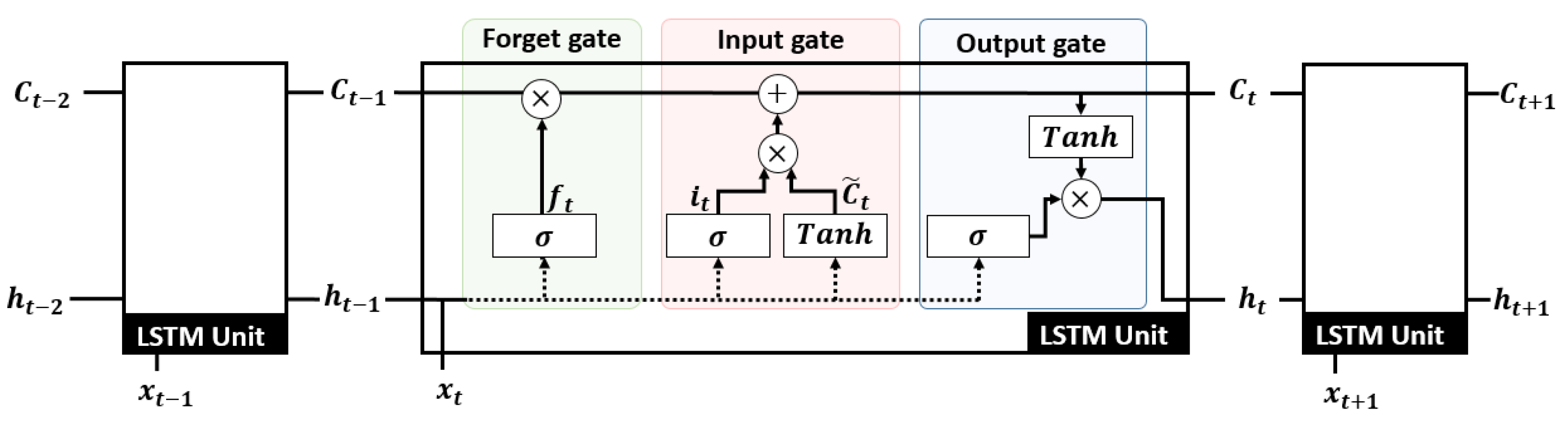

2.2.2. Long Short-Term Memory Cell

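- the forget gate filters, through a Sigmoid function $\sigma$, the data contained in the concatenation of $h_{t-1}$ and $x_t$. Data are forgotten for values close to 0 and memorized for values close to 1. The forget gate model is:

  $$f_t = \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right)$$

- the input gate extracts relevant information from $[h_{t-1}, x_t]$ by applying a Sigmoid and a Tanh function. The input gate is represented by the following:

  $$i_t = \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right), \qquad \tilde{c}_t = \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right)$$

  The memory cell $c_t$ is updated from the forget gate $f_t$ and the input gate $i_t$ and $\tilde{c}_t$, to memorize pertinent data:

  $$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t$$

- the output gate defines the next hidden state $h_t$ containing information about previous inputs. The hidden state is updated with the memory cell $c_t$ normalized by a Tanh function and $[h_{t-1}, x_t]$ normalized by a Sigmoid function:

  $$o_t = \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right), \qquad h_t = o_t \odot \tanh(c_t)$$

  with $W_f$, $W_i$, $W_c$, $W_o$ and $b_f$, $b_i$, $b_c$, $b_o$ the different gate weights and biases.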
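The gate updates described above can be sketched in a few lines of Python. This is a minimal illustration of the standard LSTM cell formulation, not the implementation used in this work: the function names, the `params` layout and the zero-valued weights in the usage lines are all hypothetical.

```python
import math


def sigmoid(z):
    """Logistic function used by the forget, input and output gates."""
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, v, b):
    """Return W @ v + b, with W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM cell update.

    params maps 'f', 'i', 'c', 'o' to a (W, b) pair (hypothetical layout).
    """
    z = h_prev + x_t  # concatenation [h_{t-1}, x_t]
    f = [sigmoid(a) for a in affine(*params["f"][:1], z, params["f"][1])]
    i = [sigmoid(a) for a in affine(*params["i"][:1], z, params["i"][1])]
    c_tilde = [math.tanh(a) for a in affine(*params["c"][:1], z, params["c"][1])]
    o = [sigmoid(a) for a in affine(*params["o"][:1], z, params["o"][1])]
    # Memory cell: keep part of the old state, add part of the candidate.
    c = [ft * cp + it * ct for ft, cp, it, ct in zip(f, c_prev, i, c_tilde)]
    # Hidden state: Tanh-normalized memory cell, filtered by the output gate.
    h = [ot * math.tanh(ck) for ot, ck in zip(o, c)]
    return h, c


# Usage with one hidden unit, one input feature and all-zero weights:
# every gate then outputs 0.5 and the candidate memory is 0.
zero_params = {g: ([[0.0, 0.0]], [0.0]) for g in "fico"}
h, c = lstm_step([1.0], [0.0], [2.0], zero_params)
```

With zero weights the forget gate halves the previous memory, which makes the roles of the three gates easy to check by hand.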

3. Problem Formulation

3.1. The Projectile Trajectory Dataset BALCO (BALlistic COde)

- the inertial measurements in the body frame b and in the sensor frame s, i.e., gyrometer, accelerometer and magnetometer measurements. Three kinds of inertial measurements are available:

  - the Perfect IMU measurements, performed in the body frame b (red frame in Figure 3): the ideal case where the three inertial sensors are perfectly aligned with the projectile gravity center and no sensor error model is applied, so that the measurements contain neither noise nor bias. These measurements are not exploited in this work but are needed to generate the realistic inertial data.

  - the IMU measurements, performed in the sensor frame s (green frame in Figure 3): derived from the Perfect IMU measurements by adding a sensor error model. This error model, specific to each sensor axis, includes a misalignment, a sensitivity factor, a bias and a noise (assumed to be zero-mean white Gaussian noise). This measurement thus models an IMU embedded in a finned projectile.

  - the IMU DYN measurements, performed in the sensor frame s (green frame in Figure 3): derived from the IMU measurements by applying a transfer function to each sensor. The coefficients a and b of the sensor transfer function are defined via BALCO. This sensor model reproduces the response of the three sensors over the operating range.

- the magnetic field reference in the local navigation frame n, assumed constant during the projectile flight.

- the flight parameters, which are, in the case of a finned projectile, the fin angle used to stabilize the projectile, the initial velocity at barrel exit and the barrel elevation angle, which is relatively large in order to obtain ballistic trajectories with short ranges.

- a time vector, in which each sample is the time-step index multiplied by the IMU sampling period.

- the reference trajectory, i.e., the projectile position, velocity and Euler angles in the local navigation frame n at the IMU frequency. This trajectory is used to evaluate the LSTM accuracy and to compute errors.
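The per-axis sensor error model listed above (misalignment, sensitivity factor, bias and zero-mean white Gaussian noise) can be illustrated with a short sketch. The order in which the four terms are applied, the function name and every numerical value below are assumptions made for the example; they are not taken from BALCO. The IMU DYN transfer function is not reproduced here.

```python
import random


def apply_sensor_error(true_xyz, misalignment, scale, bias, noise_std, rng):
    """Corrupt one ideal 3-axis sample with a simple error model (hypothetical).

    misalignment: 3x3 matrix as a list of rows (identity = perfectly aligned)
    scale, bias, noise_std: one value per sensor axis
    rng: random.Random instance, passed in so results are reproducible
    """
    # Sensitivity (scale) factor applied to each axis.
    scaled = [s * v for s, v in zip(scale, true_xyz)]
    # Axis mixing caused by misalignment.
    mixed = [sum(m * v for m, v in zip(row, scaled)) for row in misalignment]
    # Constant bias plus zero-mean white Gaussian noise.
    return [m + b + rng.gauss(0.0, sd) for m, b, sd in zip(mixed, bias, noise_std)]


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
rng = random.Random(0)

# Noise switched off: only the scale factor and bias act on the sample.
sample = apply_sensor_error(
    [1.0, 2.0, 3.0], identity, [2.0, 1.0, 1.0], [0.1, 0.0, 0.0], [0.0, 0.0, 0.0], rng
)

# Noise switched on (illustrative standard deviation).
noisy = apply_sensor_error(
    [1.0, 2.0, 3.0], identity, [1.0, 1.0, 1.0], [0.0, 0.0, 0.0], [0.01, 0.01, 0.01], rng
)
```

Passing the random generator explicitly keeps the sketch deterministic for a given seed, which is convenient when the same trajectory must be regenerated.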

3.2. Data Characteristics and LSTM Requirements

- the inertial data, including IMU or IMU DYN measurements in the sensor frame s and the reference magnetic field in the local navigation frame n presented in Section 3.1.

- the flight parameters. In the case of a finned projectile, the three flight parameters are the fin angle, the initial velocity and the barrel elevation angle.

- the time vector, built from the considered time step k and the IMU sampling period.

- trained to estimate 9 output features, which are the projectile position, velocity and Euler angles in the navigation frame n.

- trained to estimate 3 output features, which are the projectile position expressed in the navigation frame n.

- trained to estimate 3 output features, which are the projectile velocity expressed in the navigation frame n.

- trained to estimate 3 output features, which are the projectile Euler angles in the navigation frame n.
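To make the input layout concrete, the sketch below stacks the inputs listed above into one feature row per time step and cuts the sequence into fixed-length windows. It assumes three-axis gyrometer, accelerometer and magnetometer data, which gives 16 features per row; the window length of 20 timesteps matches the value given in the training settings, but the overlapping stride of one step, the function names and the numerical values are assumptions.

```python
def build_features(gyro, accel, mag, mag_ref, flight_params, dt):
    """Return one 16-value row per time step (hypothetical layout).

    Row = gyro(3) + accel(3) + mag(3) + magnetic reference(3)
          + flight parameters(3) + time(1), with time = k * dt.
    """
    rows = []
    for k, (g, a, m) in enumerate(zip(gyro, accel, mag)):
        rows.append([*g, *a, *m, *mag_ref, *flight_params, k * dt])
    return rows


def sliding_windows(rows, length):
    """Cut the sequence into overlapping windows of `length` time steps."""
    return [rows[s:s + length] for s in range(len(rows) - length + 1)]


# Usage with 25 dummy samples and an illustrative 1 ms sampling period.
n = 25
gyro = [(0.0, 0.0, 0.0)] * n
accel = [(0.0, 0.0, -9.81)] * n
mag = [(0.2, 0.0, 0.4)] * n
rows = build_features(gyro, accel, mag, (0.2, 0.0, 0.4), (0.0, 100.0, 60.0), 0.001)
windows = sliding_windows(rows, 20)
```

The magnetic reference and the flight parameters are constant over a flight, so they are simply repeated in every row.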

4. LSTM Input Data Preprocessing

4.1. LSTM Input Data Normalization

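- Min/Max normalization: $x_{MM} = \dfrac{x - x_{min}}{x_{max} - x_{min}}$, with $x_{max}$ and $x_{min}$ the maximum and minimum of x.

- Standard Deviation normalization: $x_{STD} = \dfrac{x - \mu_x}{\sigma_x}$, with x the quantity to normalize, $\mu_x$ its mean and $\sigma_x$ its standard deviation. Thus, $x_{STD}$ is a quantity with zero mean and a standard deviation of one.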
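Both normalizations are short enough to write out directly. The sketch below is illustrative only: it assumes the Min/Max form maps to the range [0, 1] and that the population standard deviation is used, and the function names are hypothetical. In practice the statistics would be computed on the training data and reused for the test data.

```python
import statistics


def min_max_normalize(xs):
    """Scale values to [0, 1] using the minimum and maximum of xs."""
    lo, hi = min(xs), max(xs)
    return [(x - lo) / (hi - lo) for x in xs]  # undefined for constant xs


def standardize(xs):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mu = statistics.fmean(xs)
    sigma = statistics.pstdev(xs)
    return [(x - mu) / sigma for x in xs]  # undefined for constant xs


values = [2.0, 4.0, 6.0]
mm = min_max_normalize(values)
std = standardize(values)
```

A feature that is constant over a flight, such as a flight parameter, has zero range and zero standard deviation within that flight, so its statistics have to come from the whole dataset rather than from a single simulation.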

4.2. Local Navigation Frame Rotation
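As an illustration of why rotating the local navigation frame loses no information, the sketch below rotates a position about the vertical axis and then applies the inverse rotation. The axis convention (x downrange, y lateral, z vertical), the 45° angle, the position values and the function name are assumptions made for the example; the rotation actually applied during training is not specified here.

```python
import math


def rotate_about_z(p, angle_rad):
    """Rotate a 3D point about the z axis by angle_rad."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    x, y, z = p
    return (c * x - s * y, s * x + c * y, z)


angle = math.radians(45.0)
p = (1000.0, 1.0, 200.0)                 # lateral component is tiny
p_rot = rotate_about_z(p, angle)         # both horizontal axes now comparable
p_back = rotate_about_z(p_rot, -angle)   # inverse rotation recovers p
```

Unlike a normalization that rescales each axis separately, the rotation is exactly invertible, so estimates produced in the rotated frame can be mapped back to the original frame.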

5. Results and Analysis

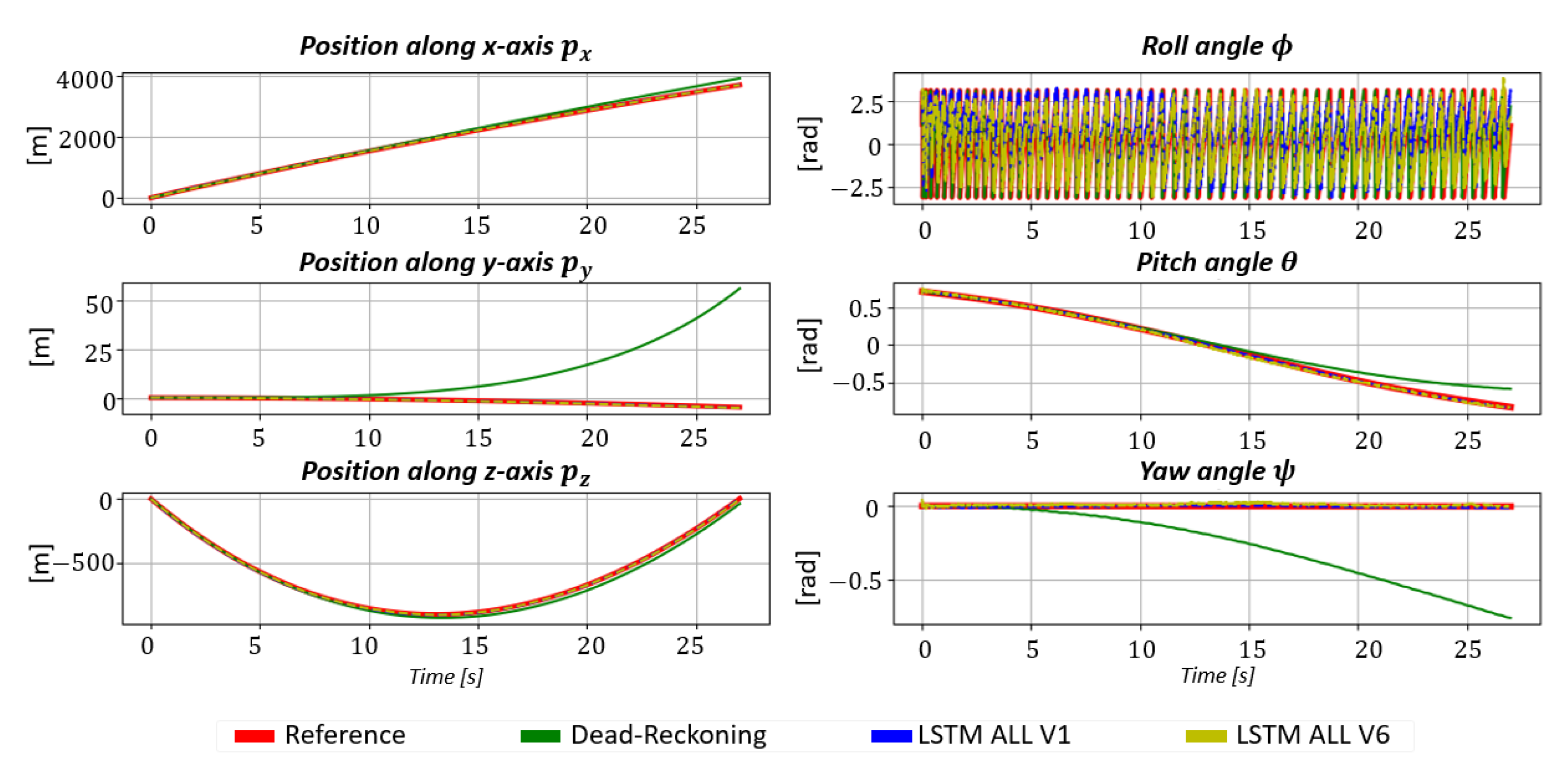

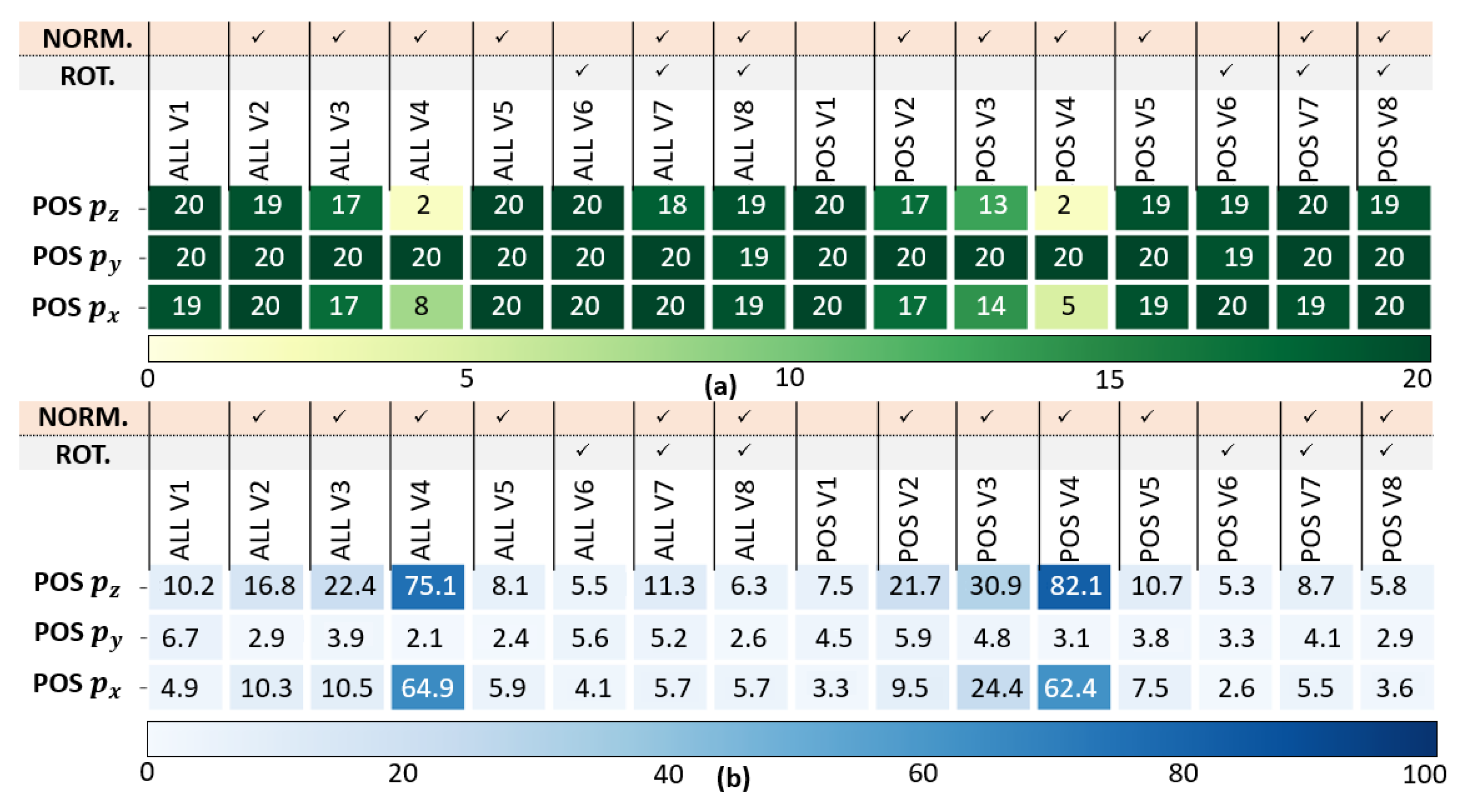

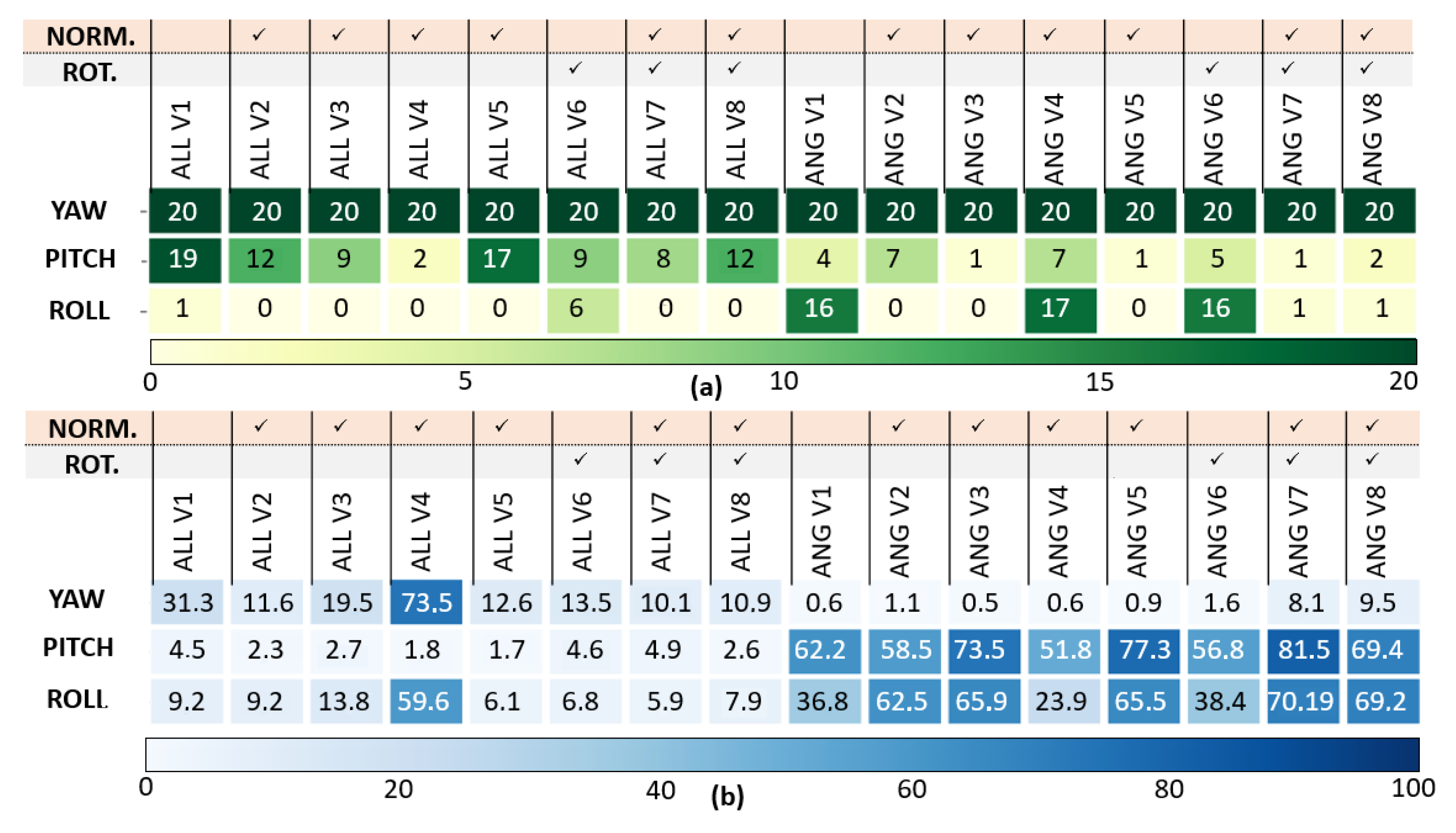

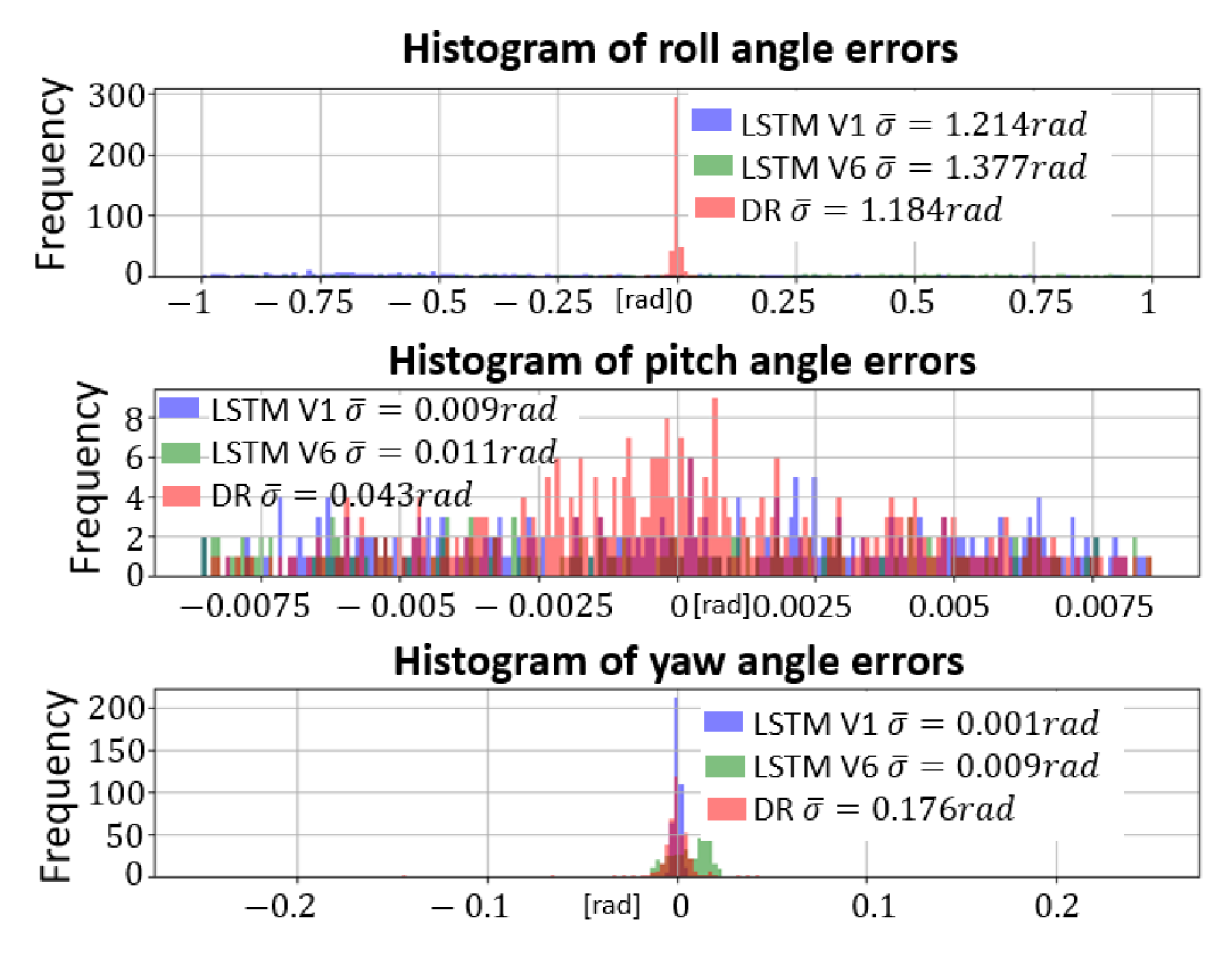

5.1. Impact of the Input Data Normalization and the Local Navigation Frame Rotation

5.1.1. Qualitative Validation: One Finned Projectile Fire Simulation

5.1.2. Quantitative Evaluation: Analysis on the Whole Test Dataset

- Success Rate: number of simulations where the LSTM RMSE is strictly smaller than the Dead-Reckoning RMSE.

- Error Rate: LSTM error expressed as a percentage of the Dead-Reckoning error.
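The two criteria can be computed from per-simulation RMSE values as sketched below. The Success Rate follows the definition above. The exact formula of the Error Rate is not given here, so the version in the sketch (total LSTM RMSE as a percentage of total Dead-Reckoning RMSE) is an assumption, as are the function names and the sample values.

```python
import math


def rmse(reference, estimate):
    """Root Mean Square Error between two equally long sequences."""
    n = len(reference)
    return math.sqrt(sum((r - e) ** 2 for r, e in zip(reference, estimate)) / n)


def success_rate(lstm_rmse, dr_rmse):
    """Count simulations where the LSTM RMSE is strictly below Dead-Reckoning's."""
    return sum(1 for l, d in zip(lstm_rmse, dr_rmse) if l < d)


def error_rate(lstm_rmse, dr_rmse):
    """ASSUMED form: total LSTM RMSE as a percentage of total DR RMSE."""
    return 100.0 * sum(lstm_rmse) / sum(dr_rmse)


# Illustrative per-simulation RMSE values (not results from this work).
lstm = [1.0, 3.0, 2.0]
dead_reckoning = [2.0, 3.0, 4.0]
wins = success_rate(lstm, dead_reckoning)
rate = error_rate(lstm, dead_reckoning)
```

Because the comparison is strict, a simulation where both methods reach the same RMSE does not count as a success.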

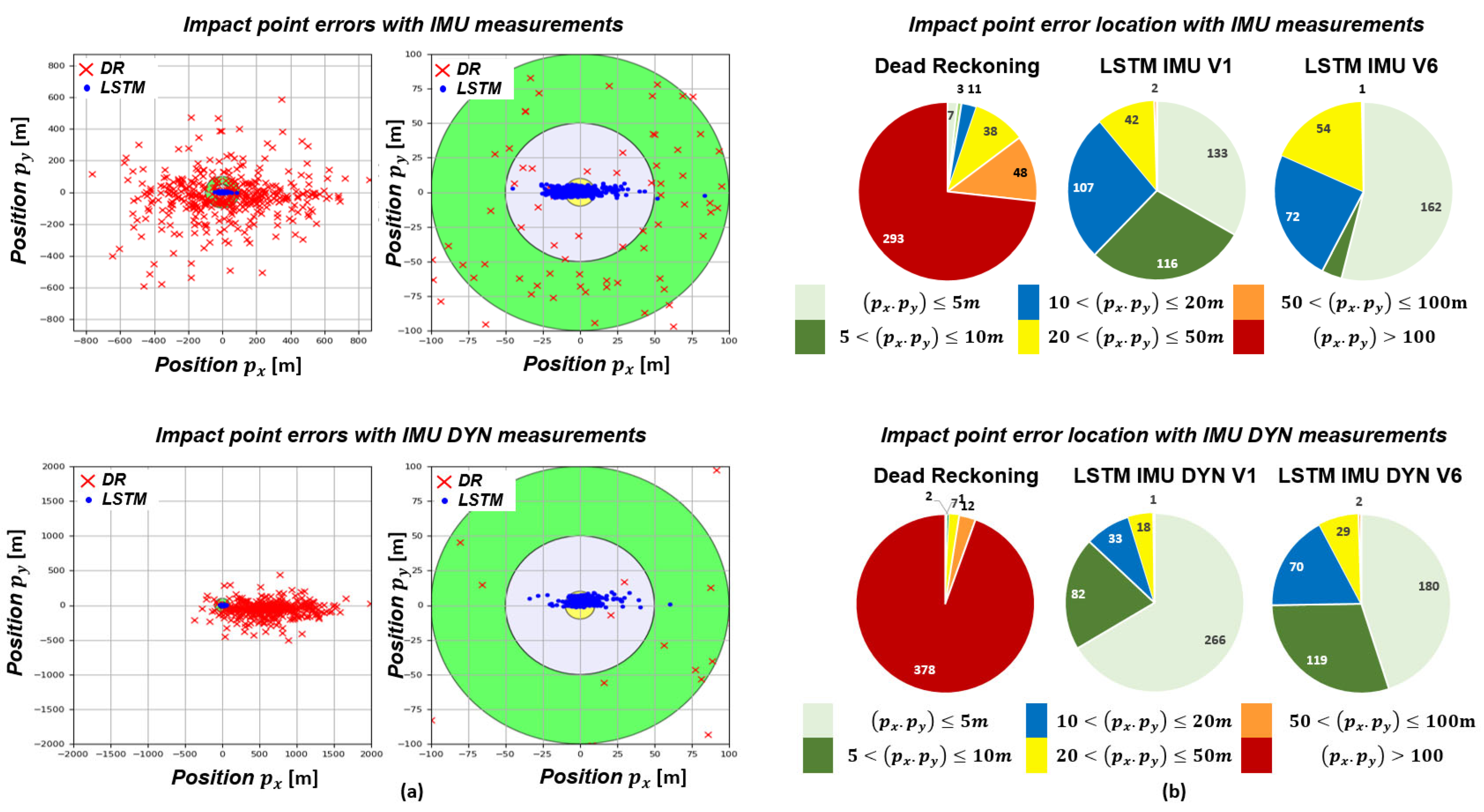

5.2. Impact of Inertial Measurement Type and Local Navigation Frame Rotation on Estimation Accuracy

5.2.1. Impact of the Local Navigation Frame Rotation and IMU Measurement

5.2.2. Impact of the Local Navigation Frame Rotation and IMU DYN Measurement

5.2.3. Evaluation Metric

- Mean Absolute Error: $MAE = \dfrac{1}{N}\sum_{k=1}^{N} \left| x_k - \hat{x}_k \right|$, with $x$ the reference, $\hat{x}$ the estimate and $N$ the number of samples.

- SCORE: Number of simulations in the test dataset where the considered method obtains the smallest RMSE.
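A minimal sketch of both metrics follows. The method labels, the sample values and the tie-breaking rule (the first listed method wins a tie) are assumptions made for the example.

```python
def mean_absolute_error(reference, estimate):
    """Average absolute difference between reference and estimate."""
    return sum(abs(r - e) for r, e in zip(reference, estimate)) / len(reference)


def score(rmse_by_method):
    """Count, per method, the simulations where it has the smallest RMSE.

    rmse_by_method maps a method label to its list of per-simulation RMSEs.
    """
    methods = list(rmse_by_method)
    n_sims = len(rmse_by_method[methods[0]])
    wins = {m: 0 for m in methods}
    for k in range(n_sims):
        best = min(methods, key=lambda m: rmse_by_method[m][k])
        wins[best] += 1
    return wins


mae = mean_absolute_error([1.0, 2.0, 3.0], [2.0, 2.0, 5.0])
scores = score({"lstm": [1.0, 3.0, 2.0], "dead_reckoning": [2.0, 1.0, 4.0]})
```

The SCORE values always sum to the number of simulations, which gives a quick consistency check when more than two methods are compared.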

5.2.4. Errors at Impact Point

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Groves, P.D. Principles of GNSS, inertial, and multisensor integrated navigation systems. IEEE Aerosp. Electron. Syst. Mag. 2015, 30, 26–27.

2. Zhao, H.; Li, Z. Ultra-tight GPS/IMU integration based long-range rocket projectile navigation. Def. Sci. J. 2016, 66, 64–70.

3. Fairfax, L.D.; Fresconi, F.E. Loosely-coupled GPS/INS state estimation in precision projectiles. In Proceedings of the 2012 IEEE/ION Position, Location and Navigation Symposium, Myrtle Beach, SC, USA, 23–26 April 2012; pp. 620–624.

4. Wells, L.L. The projectile GRAM SAASM for ERGM and Excalibur. In Proceedings of the IEEE 2000. Position Location and Navigation Symposium (Cat. No. 00CH37062), San Diego, CA, USA, 13–16 March 2000; pp. 106–111.

5. Duckworth, G.L.; Baranoski, E.J. Navigation in GNSS-denied environments: Signals of opportunity and beacons. In Proceedings of the NATO Research and Technology Organization (RTO) Sensors and Technology Panel (SET) Symposium; 2007; pp. 1–14.

6. Ruegamer, A.; Kowalewski, D. Jamming and spoofing of gnss signals – An underestimated risk?! Proc. Wisdom Ages Chall. Mod. World 2015, 3, 17–21.

7. Schmidt, D.; Radke, K.; Camtepe, S.; Foo, E.; Ren, M. A survey and analysis of the GNSS spoofing threat and countermeasures. ACM Comput. Surv. (CSUR) 2016, 48, 1–31.

8. Roux, A.; Changey, S.; Weber, J.; Lauffenburger, J.P. Projectile trajectory estimation: Performance analysis of an Extended Kalman Filter and an Imperfect Invariant Extended Kalman Filter. In Proceedings of the 2021 9th International Conference on Systems and Control (ICSC), Caen, France, 24–26 November 2021; pp. 274–281.

9. Combettes, C.; Changey, S.; Adam, R.; Pecheur, E. Attitude and velocity estimation of a projectile using low cost magnetometers and accelerometers. In Proceedings of the 2018 IEEE/ION Position, Location and Navigation Symposium (PLANS), Monterey, CA, USA, 23–26 April 2018; pp. 650–657.

10. Fiot, A.; Changey, S.; Petit, N. Attitude estimation for artillery shells using magnetometers and frequency detection of accelerometers. Control Eng. Pract. 2022, 122, 105080.

11. Tarraf, D.C.; Shelton, W.; Parker, E.; Alkire, B.; Carew, D.G.; Grana, J.; Levedahl, A.; Léveillé, J.; Mondschein, J.; Ryseff, J.; et al. The Department of Defense Posture for Artificial Intelligence: Assessment and Recommendations; Technical Report; Rand National Defense Research Institute: Santa Monica, CA, USA, 2019.

12. Zeldam, S. Automated Failure Diagnosis in Aviation Maintenance Using Explainable Artificial Intelligence (XAI). Master’s Thesis, University of Twente, Enschede, The Netherlands, 2018.

13. Ustun, V.; Kumar, R.; Reilly, A.; Sajjadi, S.; Miller, A. Adaptive synthetic characters for military training. arXiv 2021, arXiv:2101.02185.

14. Svenmarck, P.; Luotsinen, L.; Nilsson, M.; Schubert, J. Possibilities and challenges for artificial intelligence in military applications. In Proceedings of the NATO Big Data and Artificial Intelligence for Military Decision Making Specialists’ Meeting, Bordeaux, France, 30 June–1 July 2018; pp. 1–16.

15. Ventre, D. Artificial Intelligence, Cybersecurity and Cyber Defence; John Wiley & Sons: Hoboken, NJ, USA, 2020.

16. Shi, Z.; Xu, M.; Pan, Q.; Yan, B.; Zhang, H. LSTM-based flight trajectory prediction. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

17. Gaiduchenko, N.E.; Gritsyk, P.A.; Malashko, Y.I. Multi-Step Ballistic Vehicle Trajectory Forecasting Using Deep Learning Models. In Proceedings of the 2020 International Conference Engineering and Telecommunication (En&T), Dolgoprudny, Russia, 25–26 November 2020; pp. 1–6.

18. Al-Molegi, A.; Jabreel, M.; Ghaleb, B. STF-RNN: Space time features-based recurrent neural network for predicting people next location. In Proceedings of the 2016 IEEE Symposium Series on Computational Intelligence (SSCI), Athens, Greece, 6–9 December 2016; pp. 1–7.

19. Sherstinsky, A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306.

20. Yu, Y.; Si, X.; Hu, C.; Zhang, J. A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput. 2019, 31, 1235–1270.

21. Wey, P.; Corriveau, D.; Saitz, T.A.; de Ruijter, W.; Strömbäck, P. BALCO 6/7-DoF trajectory model. In Proceedings of the 29th International Symposium on Ballistics, Edinburgh, Scotland, 9–13 May 2016; Volume 1, pp. 151–162.

22. Liu, F.; Su, Z.; Zhao, H.; Li, Q.; Li, C. Attitude measurement for high-spinning projectile with a hollow MEMS IMU consisting of multiple accelerometers and gyros. Sensors 2019, 19, 1799.

23. Rogers, J.; Costello, M. Design of a roll-stabilized mortar projectile with reciprocating canards. J. Guid. Control Dyn. 2010, 33, 1026–1034.

24. Jardak, N.; Adam, R.; Changey, S. A Gyroless Algorithm with Multi-Hypothesis Initialization for Projectile Navigation. Sensors 2021, 21, 7487.

25. Radi, A.; Zahran, S.; El-Sheimy, N. GNSS Only Reduced Navigation System Performance Evaluation for High-Speed Smart Projectile Attitudes Estimation. In Proceedings of the 2021 International Telecommunications Conference (ITC-Egypt), Alexandria, Egypt, 13–15 July 2021; pp. 1–4.

26. Aykenar, M.B.; Boz, I.C.; Soysal, G.; Efe, M. A Multiple Model Approach for Estimating Roll Rate of a Very Fast Spinning Artillery Rocket. In Proceedings of the 2020 IEEE 23rd International Conference on Information Fusion (FUSION), Rustenburg, South Africa, 6–9 July 2020; pp. 1–7.

27. Changey, S.; Pecheur, E.; Bernard, L.; Sommer, E.; Wey, P.; Berner, C. Real time estimation of projectile roll angle using magnetometers: In-flight experimental validation. In Proceedings of the 2012 IEEE/ION Position, Location and Navigation Symposium, Myrtle Beach, SC, USA, 23–26 April 2012; pp. 371–376.

28. Zhao, H.; Su, Z. Real-time estimation of roll angle for trajectory correction projectile using radial magnetometers. IET Radar Sonar Navig. 2020, 14, 1559–1570.

29. Changey, S.; Beauvois, D.; Fleck, V. A mixed extended-unscented filter for attitude estimation with magnetometer sensor. In Proceedings of the 2006 American Control Conference, Minneapolis, MN, USA, 14–16 June 2006; p. 6.

30. Schmidt, G.T. Navigation sensors and systems in GNSS degraded and denied environments. Chin. J. Aeronaut. 2015, 28, 1–10.

31. Fiot, A.; Changey, S.; Petit, N.C. Estimation of air velocity for a high velocity spinning projectile using transverse accelerometers. In Proceedings of the 2018 AIAA Guidance, Navigation, and Control Conference, Kissimmee, FL, USA, 8–12 January 2018; p. 1349.

32. Changey, S.; Fleck, V.; Beauvois, D. Projectile attitude and position determination using magnetometer sensor only. In Proceedings of the Intelligent Computing: Theory and Applications III; SPIE: Orlando, FL, USA, 2005; Volume 5803, pp. 49–58.

33. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

34. Hochreiter, S.; Bengio, Y.; Frasconi, P.; Schmidhuber, J. Gradient flow in recurrent nets: The difficulty of learning long-term dependencies. In A Field Guide to Dynamical Recurrent Neural Networks; IEEE Press: Piscataway, NJ, USA, 2001.

35. Park, S.H.; Kim, B.; Kang, C.M.; Chung, C.C.; Choi, J.W. Sequence-to-sequence prediction of vehicle trajectory via LSTM encoder-decoder architecture. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 1672–1678.

36. Sørensen, K.A.; Heiselberg, P.; Heiselberg, H. Probabilistic maritime trajectory prediction in complex scenarios using deep learning. Sensors 2022, 22, 2058.

37. Barsoum, E.; Kender, J.; Liu, Z. Hp-gan: Probabilistic 3d human motion prediction via gan. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1418–1427.

38. Hou, L.h.; Liu, H.j. An end-to-end lstm-mdn network for projectile trajectory prediction. In Proceedings of the Intelligence Science and Big Data Engineering, Big Data and Machine Learning: 9th International Conference, IScIDE 2019, Nanjing, China, 17–20 October 2019; pp. 114–125.

39. Corriveau, D. Validation of the NATO Armaments Ballistic Kernel for use in small-arms fire control systems. Def. Technol. 2017, 13, 188–199.

40. Roux, A.; Changey, S.; Weber, J.; Lauffenburger, J.P. Cnn-based invariant extended kalman filter for projectile trajectory estimation using imu only. In Proceedings of the 2021 International Conference on Control, Automation and Diagnosis (ICCAD), Grenoble, France, 3–5 November 2021; pp. 1–6.

41. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

| Name | Normalization | Rotation |
|---|---|---|
| | No | No |
| | | No |
| | | No |
| | | No |
| | | No |
| | No | Yes |
| | | Yes |
| | | Yes |

| Category | Parameter | Value |
|---|---|---|
| Dataset | Training Dataset: | 100 Simulations (Validation: 10 Simulations) |
| | Test Dataset: | 20 Simulations |
| Input data | Batch size: | 64 (20 timesteps) |
| | Input data: | (with IMU measurements) |
| | Cost function: | Mean Squared Error (MSE) |
| Training | Optimization algorithm: | ADAM [41] (Learning rate: ) |
| | LSTM layer: | 2 (Hidden units: 64–128) |

| Category | Parameter | Value |
|---|---|---|
| Dataset | Training Dataset: | 4000 Simulations (Validation: 400 Simulations) |
| | Test Dataset: | 400 Simulations |
| LSTM name | No normalization & No rotation | (with IMU measurements) |
| | | (with IMU DYN measurements) |
| | No normalization & Rotation | (with IMU measurements) |
| | | (with IMU DYN measurements) |
| Input data | Batch size: | 64 (20 timesteps) |
| | Input data: | |
| | Cost function: | Mean Squared Error (MSE) |
| Training | Optimization algorithm: | ADAM [41] (Learning rate: 1 × ) |
| | LSTM layer: | 2 (Hidden units: 64–128) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Roux, A.; Changey, S.; Weber, J.; Lauffenburger, J.-P. LSTM-Based Projectile Trajectory Estimation in a GNSS-Denied Environment. Sensors 2023, 23, 3025. https://doi.org/10.3390/s23063025
