1. Introduction

Biometrics is the science of establishing the identity of an individual based on the physical and/or behavioral traits of the person in either a fully automated or semi-automated manner [1]. Currently, conventional biometrics, such as fingerprint, face, iris, voice, and DNA, have been extensively studied in the literature and widely adopted in real-life scenarios. However, each of these biometrics has weaknesses: they are noncancelable, disclosable, and easily stolen, and they do not provide liveness detection [1,2,3,4]. Brain electrical activity measured at the scalp (through electroencephalography, or EEG) is a potential replacement for these biometrics, since it is influenced by many genetic factors and is highly individual. Recent studies in the biometric research community support this statement [5,6]. EEG has several unique advantages: it is cancelable, not disclosable, and not easily stolen, and it provides liveness detection [2].

Our ability to precisely measure physical phenomena related to magnetic resonance and electric currents allows us to observe brain activity related to cerebral blood flow and to the electrical activity of neurons. Brain activity can be measured using various imaging techniques, such as functional magnetic resonance imaging (fMRI) [7], functional ultrasound imaging (fUS), magnetoencephalography (MEG), functional near-infrared spectroscopy (fNIRS), and electroencephalography (EEG) [8]. Compared to other brain imaging techniques, EEG recordings can be acquired using portable and low-cost devices. EEG is recorded by placing electrodes on the scalp according to the international 10–20 system [8]. The recorded EEG waveforms are divided into frequency bands (delta, theta, alpha, beta, and gamma), typically ranging from 0.5 Hz to 40 Hz [8]. The slowest brain rhythms are related to an inactive brain state, while the fastest are related to information processing [9]. EEG is widely employed for medical purposes, allowing physicians to diagnose epilepsy [10], strokes [11], Alzheimer’s disease (AD) [12], and other brain disorders [13,14]. Additionally, it is the basic brain imaging technique for brain–computer interfaces (BCIs) [15,16]. In recent years, EEG signals have also been employed for biometric purposes [2,17].
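The band decomposition above can be illustrated with a minimal sketch that measures how much of a signal's power falls in each band. The band edges, the 250 Hz sampling rate, and the helper name `band_powers` are assumptions made for illustration. Exact band boundaries differ between studies. The PSD is a plain NumPy periodogram.

```python
import numpy as np

# Assumed band edges (Hz); exact boundaries differ between studies.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 40.0),
}

def band_powers(x, fs):
    """Mean periodogram power of the 1-D signal x inside each band."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()  # remove the DC offset
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    # Periodogram estimate; the one-sided factor of 2 is omitted because
    # only relative band powers are compared here.
    psd = np.abs(np.fft.rfft(x)) ** 2 / (fs * x.size)
    return {
        name: float(psd[(freqs >= lo) & (freqs < hi)].mean())
        for name, (lo, hi) in BANDS.items()
    }

fs = 250.0                          # assumed sampling rate (Hz)
t = np.arange(int(5 * fs)) / fs     # one 5 s trial
powers = band_powers(np.sin(2 * np.pi * 10.0 * t), fs)
print(max(powers, key=powers.get))  # prints "alpha": a 10 Hz rhythm
```

Per-band powers of this kind are one simple form of the PSD features discussed later in this section.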

The adoption of EEG for biometric purposes raises several scientific questions about the overall biometric system. To carry out a biometric study using EEG signals, decisions must be made regarding (1) the acquisition protocol, (2) feature engineering, and (3) the decision system (or classifier) [2]. The acquisition protocol is strongly related to the EEG device and the EEG responses that we intend to exploit. Two types of EEG devices can be used for biometric purposes: (1) medical-grade systems and (2) low-cost systems [2,18,19,20]. Medical-grade systems contain a large number of electrodes and use wet sensors, which produce high-quality signals. However, the deployment of wet sensors can be impractical in EEG biometric applications. Low-cost systems, on the other hand, contain a small number of electrodes and use dry sensors to reduce cost and improve the usability of the system [19,20,21].

A crucial decision in an EEG-based biometric system concerns the brain responses used. These responses should have properties that differ between individuals, and a particular stimulation protocol must be followed to elicit them. In the literature, three types of brain stimulation are adopted: resting state, sensory (audio/visual) stimuli, and cognitive tasks. During the resting state, the subject is usually asked to sit on a chair in a quiet environment, with eyes either open or closed. The resting-state scheme is the least demanding, since no additional equipment is needed to generate an external stimulus; the users passively produce EEG signals without following additional instructions during data collection [4,5]. Most works on EEG biometrics use EEG signals recorded during a resting state [5,21,22,23,24,25,26,27]. However, these systems need to collect a large amount of EEG data. Furthermore, the instruction “to rest” may be interpreted in many different ways by the users [4]. A sensory stimulus protocol, on the other hand, measures brain responses that are the direct result of a specific sensory event [6,28,29,30,31,32,33,34,35,36]. A drawback of this approach is the need for external devices to produce the stimulus that generates the required EEG signals, resulting in a more complicated system. Finally, cognitive stimuli can be used as an alternative to the above protocols. A cognitive stimulus protocol directs the subject(s) to perform various intentional tasks during data collection [20,24,37,38,39]. However, cognitive task stimuli suffer from the same ambiguity problem as the resting state [4].

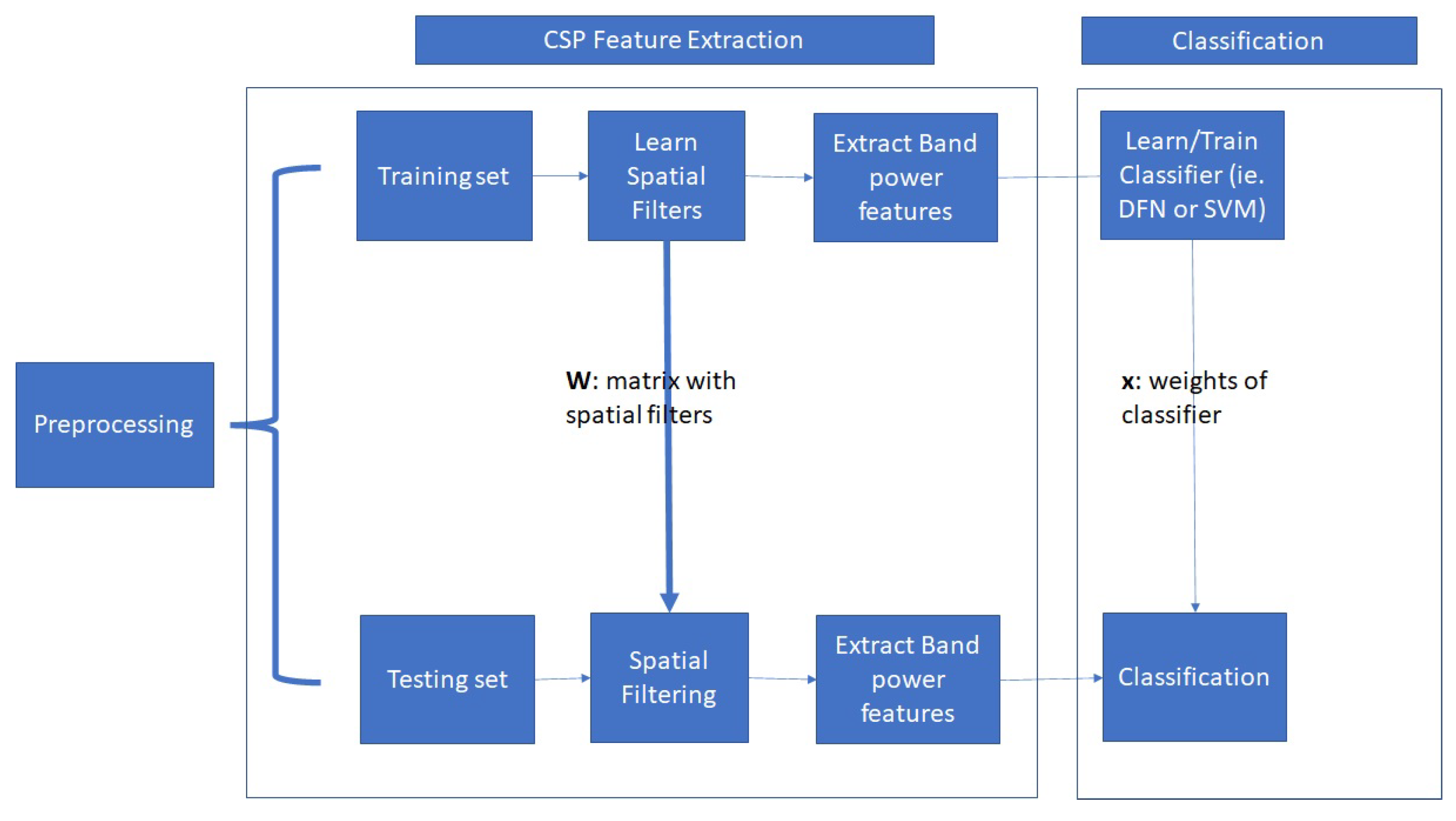

Feature engineering plays a significant role in the person identification problem. Hence, EEG features have been extracted from many different domains, such as the time domain, the frequency domain, and the brain connectivity (i.e., graph) domain. Extraction methods based on auto-regressive (AR) modeling, the power spectral density (PSD) energy of EEG channels, wavelet packet decomposition (WPD), and phase locking values (PLV) have been used extensively in person identification with EEG signals. In the time domain, the most used features are based on AR modeling. In particular, AR coefficients have been extracted from several kinds of recordings. They were computed either on each EEG band or over the entire EEG frequency band, from resting-state EEG signals [5,21,24], attention tasks [24], steady-state visual evoked potentials (SSVEPs) [35], and visual stimulation [40]. Additionally, features based on energy or statistical measures of a time series were used in [27,28,33,34]. Raw EEG time series, after some basic filtering, have also been used directly as input (hence as features) in deep learning (DL) schemes [28,36]. Furthermore, to exploit the structure of the EEG spectrum, PSD features were extracted for various EEG biometric tasks [20,22,25,26,30]. Since both the time domain and the frequency domain provide valuable information about EEG signals, it is natural to exploit the time–frequency distribution using wavelet-based features [24,29]. Finally, in recent years, the connectivity between brain areas has been studied extensively in EEG biometrics. For example, in [24,26,37], PLV values were used to model the brain’s connectivity. In [23,25,31], functional connectivity (FC) features, such as eigenvector centrality, were proposed for person identification using EEG signals. From the above analysis, we observe that spatial patterns have seen limited use in EEG-based person identification.
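The AR features mentioned above can be illustrated with a short sketch. It assumes the Yule-Walker (autocorrelation) estimator, a separate model per channel, and a default model order of 6. These are illustrative choices and not necessarily those of the cited works. The helpers `ar_coefficients` and `ar_features` are hypothetical names.

```python
import numpy as np

def ar_coefficients(x, order):
    """Yule-Walker estimate of a_1..a_p in x[n] = sum_k a_k x[n-k] + e[n]."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    n = x.size
    # Biased autocorrelation estimates r[0], ..., r[order]
    r = np.array([np.dot(x[: n - k], x[k:]) / n for k in range(order + 1)])
    # Symmetric Toeplitz matrix R[i, j] = r[|i - j|]
    lags = np.abs(np.subtract.outer(np.arange(order), np.arange(order)))
    return np.linalg.solve(r[lags], r[1:])

def ar_features(trial, order=6):
    """Concatenate per-channel AR coefficients of a (channels, samples) trial.

    The default order of 6 is an assumption for illustration only."""
    return np.concatenate([ar_coefficients(ch, order) for ch in trial])
```

Applied to a trial with C channels, `ar_features` returns a vector of C × order coefficients. Such a vector could then be passed to any of the classifiers discussed below.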

After feature engineering, the next step of an EEG biometric system is the classifier. In the EEG biometric literature, various classifiers have been used. They can be divided into four large families: similarity-based (or distance-based) methods, kernel-based methods, discriminant analysis methods, and neural networks [2,4]. A representative example of the similarity-based methods is the k-nearest neighbors (kNN) classifier. It compares the similarity (or distance) between the template (training) feature samples and the query (test) samples. The kNN has been used widely in EEG biometrics. In [29], short-time Fourier transform (STFT)-based features were extracted from SSVEP signals and used as input to a kNN classifier, while in [30] PSD features were utilized. In addition, in [34], statistical features (i.e., normalized variances) extracted from SSVEP signals were fed to a kNN classifier. Finally, distance-based classifiers have been used to identify a person in [5,21,23,26].
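A minimal sketch of the kNN decision rule described above follows. It is written in plain NumPy, and the function name `knn_predict` is hypothetical. With `k=1` and the Manhattan metric, it reduces to the minimum-L1-distance matching that the SSVEP study [35] is described as using. Larger `k` gives a majority vote.

```python
import numpy as np

def knn_predict(train_X, train_y, query_X, k=1, metric="l1"):
    """Label each query by majority vote among its k nearest templates.

    With k=1 this reduces to minimum-distance matching."""
    train_X = np.asarray(train_X, dtype=float)
    query_X = np.asarray(query_X, dtype=float)
    train_y = np.asarray(train_y)
    diff = query_X[:, None, :] - train_X[None, :, :]  # (queries, templates, features)
    if metric == "l1":        # Manhattan distance
        dist = np.abs(diff).sum(axis=2)
    elif metric == "l2":      # Euclidean distance
        dist = np.sqrt((diff ** 2).sum(axis=2))
    else:
        raise ValueError(f"unknown metric: {metric}")
    nearest = np.argsort(dist, axis=1)[:, :k]
    preds = []
    for idx in nearest:
        labels, counts = np.unique(train_y[idx], return_counts=True)
        preds.append(labels[np.argmax(counts)])  # ties go to the smallest label
    return np.array(preds)
```

Each row of `train_X` is one enrolled template, for example an AR or PSD feature vector, and `train_y` holds the corresponding identities.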

Kernel-based methods rely on the “kernel” trick, where the original finite-dimensional space is mapped into a space of much higher dimension. The assumption is that identities are easier to separate in this high-dimensional space. The support vector machine (SVM) is the most representative kernel-based method and has been adopted in many EEG biometric studies to distinguish between persons [20,21,27]. In contrast to the SVM with the “kernel” trick, linear discriminant analysis (LDA) separates the data of different classes with hyperplanes by projecting the data into a lower-dimensional space. Furthermore, LDA assumes that the classes are normally distributed with equal covariance. LDA is one of the most popular classification algorithms in EEG biometrics [19,21,33,37,40].
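The equal-covariance assumption of LDA can be made concrete with a small NumPy sketch of the Gaussian, shared-covariance form of LDA used as a classifier. Its discriminant scores are linear in the features, so the class boundaries are hyperplanes. The class name `SharedCovLDA` and the small ridge term are assumptions for illustration. In practice, scikit-learn's `LinearDiscriminantAnalysis` and `SVC` classes are common ready-made choices.

```python
import numpy as np

class SharedCovLDA:
    """Gaussian classifier with one covariance matrix pooled over all classes."""

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        self.means_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        # Pooled within-class covariance: the equal-covariance assumption
        centered = X - self.means_[np.searchsorted(self.classes_, y)]
        cov = centered.T @ centered / (X.shape[0] - len(self.classes_))
        cov += 1e-6 * np.eye(X.shape[1])  # assumed small ridge for stability
        priors = np.array([np.mean(y == c) for c in self.classes_])
        # Linear scores g_c(x) = x^T S^-1 mu_c - 0.5 mu_c^T S^-1 mu_c + log pi_c
        self.W_ = np.linalg.solve(cov, self.means_.T)  # columns S^-1 mu_c
        self.b_ = -0.5 * np.sum(self.means_.T * self.W_, axis=0) + np.log(priors)
        return self

    def decision_function(self, X):
        return np.asarray(X, dtype=float) @ self.W_ + self.b_

    def predict(self, X):
        return self.classes_[np.argmax(self.decision_function(X), axis=1)]
```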

In the field of EEG biometrics, neural networks (NNs), and especially deep NNs (DNNs), have also received attention. An important difference between the various DNN approaches is the input to the network: raw EEG time series or extracted EEG features. Convolutional NNs (CNNs) were used in [36] with raw EEG time series as input. In [24], CNNs were applied both to raw EEG and to various input features, such as AR coefficients, wavelets, and PLV values. Furthermore, adversarial CNNs were used in [28] with raw EEG time series as input. In [41], CNNs with PSD features as input were used to discriminate between persons. Overall, deep NNs are valuable for identifying a person from EEG signals. However, the quantity of data and the training time required for efficient deep NNs are major concerns for their widespread deployment in EEG biometric systems.

SSVEP signals have rarely been used for person identification. However, SSVEP-based person identification systems could leverage the advantages of current SSVEP protocols applied in BCI systems, such as the ability to design a speller. In [29], the authors proposed an SSVEP-based biometric system with a single stimulus frequency, varied from 6 to 9 Hz, and collected SSVEP signals from five users. To determine the user identity, features based on the short-time Fourier transform and the standard EEG bands were extracted and then used as input to a kNN classifier. In [34], eight subjects participated in an experiment, and six stimulus frequencies were used to elicit SSVEP signals. Normalized variances were obtained for each stimulus frequency and each channel and used as features, and a kNN classifier decided the identity of a user. In [35], SSVEP signals from twenty-five subjects were used, with four different stimulus frequencies to evoke the SSVEP responses. Mel-frequency cepstral coefficients (MFCCs) and AR reflection coefficients were used as discriminative features. The Manhattan (L1) distance was used to evaluate the similarity between users, and the decision was based on the minimum distance. In [36], two SSVEP datasets (5 and 12 individuals) with 3 stimulus frequencies were used. To decide the identity of a user, the authors applied CNNs to raw SSVEP signals and to enhanced SSVEP signals, where the enhanced signals were obtained using canonical correlation analysis (CCA).
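CCA is usually applied to SSVEPs by correlating the multichannel EEG with sine/cosine reference signals at each candidate stimulus frequency. This is the standard CCA frequency detector, shown below as a minimal NumPy sketch; it may not be the exact enhancement procedure of [36]. The helper names, the two harmonics, and the use of the first canonical variate as an "enhanced" single-channel signal are assumptions for illustration.

```python
import numpy as np

def reference_signals(freq, fs, n_samples, n_harmonics=2):
    """Sine/cosine references at freq and its harmonics, (samples, 2*n_harmonics)."""
    t = np.arange(n_samples) / fs
    cols = []
    for h in range(1, n_harmonics + 1):
        cols.append(np.sin(2 * np.pi * h * freq * t))
        cols.append(np.cos(2 * np.pi * h * freq * t))
    return np.stack(cols, axis=1)

def cca(X, Y):
    """Largest canonical correlation between X and Y (samples in rows), plus the
    matching canonical variate of X, i.e. a spatially filtered EEG signal."""
    qx, _ = np.linalg.qr(X - X.mean(axis=0))
    qy, _ = np.linalg.qr(Y - Y.mean(axis=0))
    u, s, _ = np.linalg.svd(qx.T @ qy)  # singular values = canonical correlations
    return float(min(s[0], 1.0)), qx @ u[:, 0]

def detect_frequency(eeg, fs, candidates, n_harmonics=2):
    """Return the candidate frequency whose references best match eeg (samples, channels)."""
    scores = [cca(eeg, reference_signals(f, fs, eeg.shape[0], n_harmonics))[0]
              for f in candidates]
    return candidates[int(np.argmax(scores))], scores
```

The candidate with the highest canonical correlation is taken as the attended stimulus. The canonical variate returned by `cca` concentrates the SSVEP component spread across channels into a single signal.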

We observe that spatial patterns of individuals have not been extracted from SSVEP signals, and all reported works were applied to a limited number of individuals and stimulus frequencies. The number of stimulus frequencies is an important aspect of the overall system, since it defines and restricts the design options. A small number of stimulus frequencies does not allow us to design a biometric system using properties of an SSVEP speller. By using an SSVEP speller as a basic component, we can design a biometric system that simultaneously recognizes the user through SSVEP responses and passwords. Beyond SSVEP-based biometrics, EEG biometrics in general has made limited use of spatial filtering approaches. To the authors’ knowledge, spatial patterns of EEG have not been investigated extensively, except in [33], where spatial filters were applied to auditory evoked potentials (AEPs). The novelties and contributions of our work are as follows:

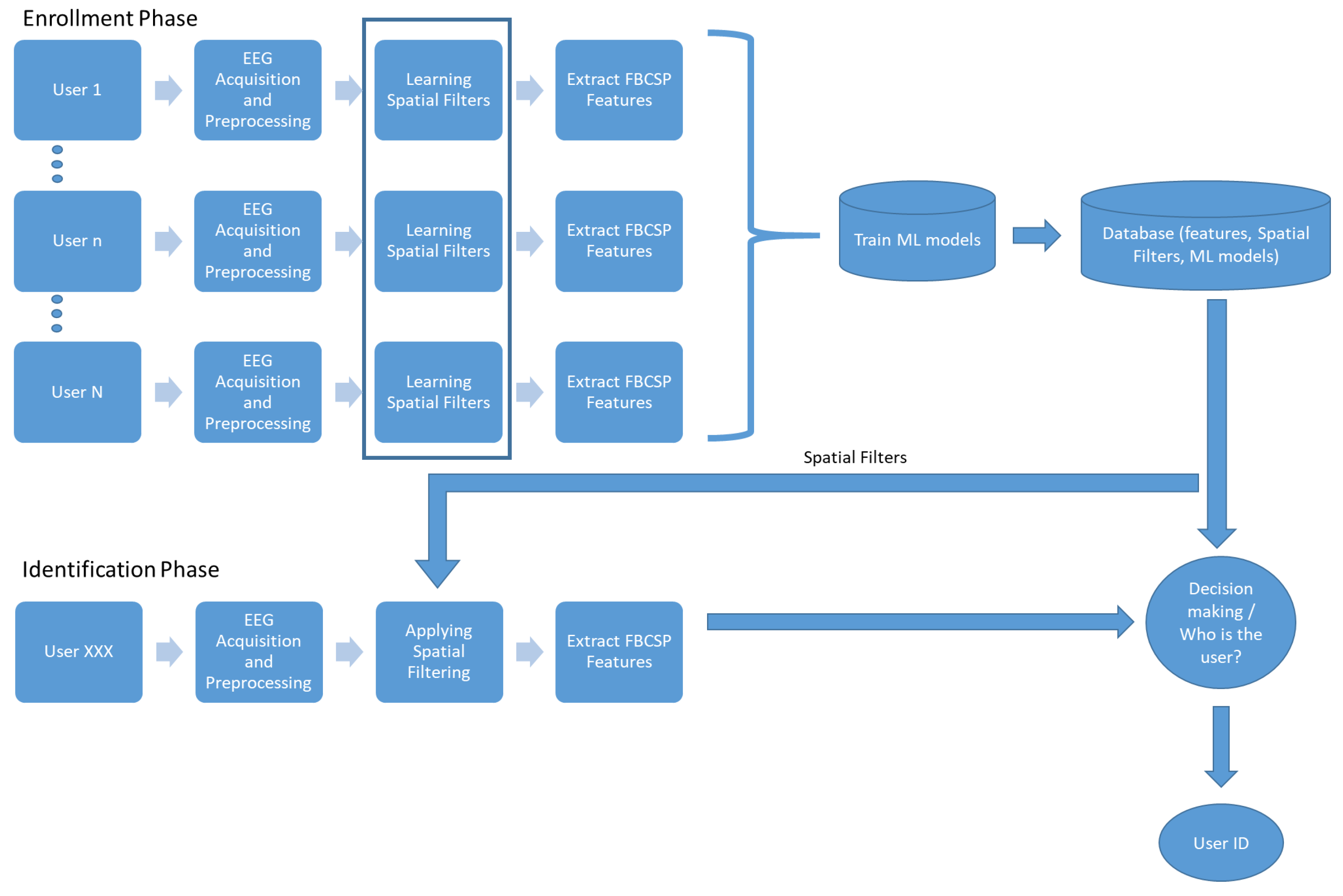

- The use of SSVEP signals for person identification;

- A new DL-based framework for person identification using SSVEP signals;

- A new kind of spatial feature for EEG-based person identification, using the filter bank common spatial patterns (FBCSP) methodology in multi-class problems;

- An extension of current SSVEP-based biometric systems to a larger number of stimulus frequencies, sufficient to design a speller.
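The core of the FBCSP features listed above is the common spatial patterns (CSP) algorithm. The sketch below shows two-class CSP computed by whitening, log-variance features, and a one-vs-rest extension to several identities. It is a minimal NumPy sketch under stated assumptions, not the implementation used in this work. The filter bank itself is omitted: in FBCSP, the same steps would be repeated on each band-pass-filtered sub-band. The helper names and `n_pairs` are hypothetical choices.

```python
import numpy as np

def mean_covariance(trials):
    """Average trace-normalised spatial covariance of (channels, samples) trials."""
    covs = []
    for x in trials:
        x = x - x.mean(axis=1, keepdims=True)
        c = x @ x.T
        covs.append(c / np.trace(c))
    return np.mean(covs, axis=0)

def csp_filters(trials_a, trials_b, n_pairs=2):
    """Rows are spatial filters; the first n_pairs favour class b, the last favour class a."""
    ca, cb = mean_covariance(trials_a), mean_covariance(trials_b)
    evals, evecs = np.linalg.eigh(ca + cb)
    P = np.diag(evals ** -0.5) @ evecs.T    # whitening: P (Ca + Cb) P^T = I
    lam, B = np.linalg.eigh(P @ ca @ P.T)   # ascending eigenvalues in [0, 1]
    W = B.T @ P
    keep = np.r_[np.arange(n_pairs), np.arange(len(lam) - n_pairs, len(lam))]
    return W[keep]

def log_var_features(W, x):
    """Normalised log-variance of the spatially filtered trial x."""
    v = (W @ x).var(axis=1)
    return np.log(v / v.sum())

def one_vs_rest_csp(trials, labels, n_pairs=2):
    """One CSP filter set per identity (that identity vs. all others)."""
    labels = np.asarray(labels)
    return {
        c: csp_filters([t for t, l in zip(trials, labels) if l == c],
                       [t for t, l in zip(trials, labels) if l != c], n_pairs)
        for c in np.unique(labels)
    }

def csp_feature_vector(filter_sets, x):
    """Concatenate the features of every one-vs-rest filter set, in label order."""
    return np.concatenate([log_var_features(W, x) for _, W in sorted(filter_sets.items())])
```

Because each filter set is trained for one identity against all others, the resulting filters are personalized, which is the property discussed in Section 5.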

This paper is organized as follows. In Section 2, we provide information about the SSVEP datasets and the proposed methodology for person identification; more specifically, we describe in detail the spatial filtering method and the neural network architecture. In Section 3, we describe the experimental settings. In Section 4, we present a comprehensive comparison of our approach with well-known classifiers. Finally, in Section 5, we discuss our work, give concluding remarks, and outline future directions.

4. Results

SSVEP signals are generated by stationary localized sources and distributed sources in the parietal and occipital areas of the brain. Our overarching goal in these experiments is to examine two questions. First, do SSVEP signals contain information that can be used to discriminate between individuals? Second, does this information include spatial patterns that are distinguishable between individuals? Table 3 reports the results of our first experiment for all reported methods. Its aim was to examine whether our algorithm can identify persons using SSVEP signals elicited by stimuli at various frequencies. In this experiment, the length of an SSVEP trial is 5 s. The DFN method achieves a better person recognition rate than all other methods, and this holds across a wide range of frequencies: the DFN performs better across the 40 different stimulus frequencies ranging from 8 to 15 Hz, as reflected in the average CRR over all stimuli. On average, the DFN achieves a CRR of 99%, compared to 94% and 93% for SVM-FBCSP and kNN-FBCSP, respectively. Moreover, for some stimulus frequencies, such as 9 Hz and 15 Hz, the CRR is perfect (100%). These observations indicate that the DFN method, in conjunction with a carefully chosen stimulus frequency, can provide excellent person identification. To examine whether the differences observed in Table 3 are statistically significant, we conducted a one-way ANOVA. It compared the effect of the classification method on CRR values across all reported methods. There was a significant difference in accuracy among the three classification methods at the p < 0.05 level, F(2,117) = 279, p < 0.001. Furthermore, post hoc analysis revealed that the accuracy of the proposed method differed significantly from that of the other methods. These results indicate that the choice of PI method has a statistically significant effect on the final model’s performance. The above experiments were implemented in Matlab on a PC with an Intel(R) Core(TM) i5-4690K CPU @ 3.50 GHz, 16 GB RAM, and 64-bit Windows 10. In a typical case, spatial filter training took up to 1.38 s, while network training took up to 11.29 s.
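The reported degrees of freedom follow directly from the one-way ANOVA formula. A minimal NumPy sketch of the F statistic makes this explicit. It assumes that each of the 40 stimulus frequencies contributes one CRR value per method, giving 3 × 40 = 120 observations and df = (3 − 1, 120 − 3) = (2, 117). Likewise, 10 trial durations would give (2, 27). The function name is hypothetical. SciPy's `scipy.stats.f_oneway` additionally returns the p-value.

```python
import numpy as np

def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over the given groups."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    k = len(groups)
    n_total = sum(g.size for g in groups)
    grand_mean = np.concatenate(groups).mean()
    ss_between = sum(g.size * (g.mean() - grand_mean) ** 2 for g in groups)
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    df_between, df_within = k - 1, n_total - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within
```

For example, `one_way_anova(crr_dfn, crr_svm, crr_knn)` with 40 hypothetical per-frequency CRR values in each array yields degrees of freedom (2, 117).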

In general, it is desirable to use short SSVEP signals, because they lead to EEG-based biometric systems that are more comfortable and offer a better user experience. Hence, we performed additional experiments to examine the behavior of the methods for different SSVEP signal durations. Table 4 reports the CRR averaged over all stimulus frequencies for various SSVEP trial durations. The DFN method provides the best recognition rate among all methods for all trial durations. We again conducted a one-way ANOVA to compare the effect of the method on CRR values. There was a significant difference in accuracy among the three classification methods at the p < 0.05 level, F(2,27) = 5.89, p = 0.008. Post hoc analysis revealed significant differences in accuracy between the DFN and each of the other methods, but not between the SVM-FBCSP and the kNN-FBCSP. These results indicate that the DFN outperforms the SVM-FBCSP and the kNN-FBCSP regardless of the trial duration chosen in our experimental settings.

In our study, we also evaluated the usability of the compared methods. This gives a more compact view of the proposed algorithms and allows comparison with other EEG-based PI systems reported in the literature. Table 5 reports the averaged U measure for various trial durations. The best U value (101.2288) is obtained by the DFN method with a trial duration of 0.5 s. Compared with the approaches reported in [4] in terms of the U measure, our approach presents the best performance. The key aspect that allows our method to outperform the methods reported in [4] is its high CRR for very short EEG trials. Other methods have achieved very high CRR, similar to ours; however, they require longer EEG trials and more channels.

We conducted similar experiments on a second SSVEP dataset (the EPOC dataset) to examine the behavior of our approach in different settings. Note that the SSVEP signals in this dataset were acquired with the portable EPOC device. Table 6 reports the CRR values for various stimulus frequencies; the DFN method presents the best performance among the compared methods. Table 7 reports the averaged CRR values for various EEG trial durations; again, the DFN method presents the best performance for all trial durations except 1 s. Finally, Table 8 reports the utility (U) values; the DFN method provides the best U value when the trial duration is 0.5 s. Comparing the results on the two SSVEP datasets, all methods perform best on the Speller dataset, in terms of either the CRR or the U measure. This is expected to some degree, since the EEG signals in the EPOC dataset are very noisy owing to the experimental settings and the EEG devices used. However, the results on the EPOC dataset should not be underestimated, since they indicate that a portable SSVEP-based PI system can be designed and used in real applications outside the lab.

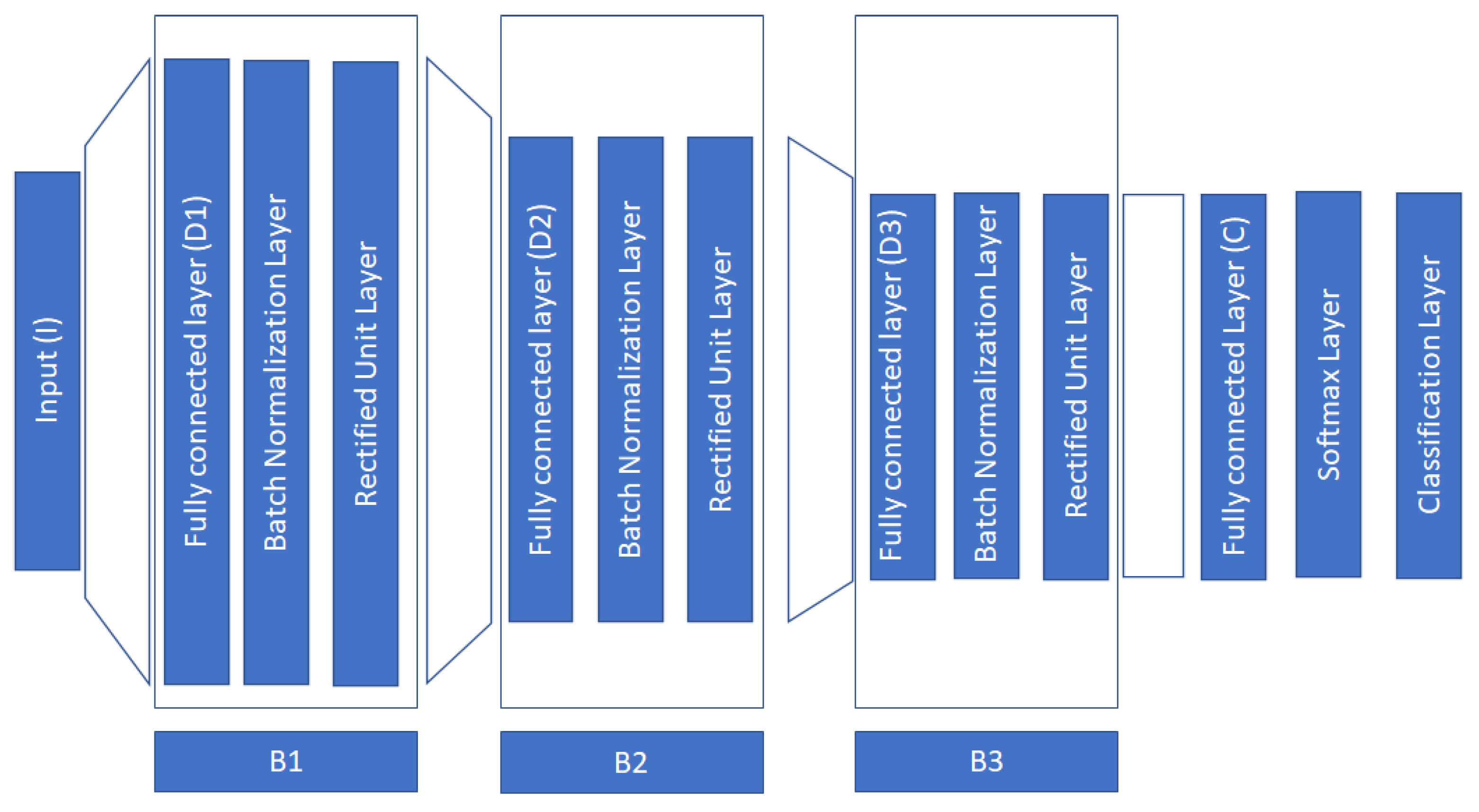

When a new DL architecture is proposed, it is typical to conduct an ablation study to examine how its building blocks affect the overall performance of the network. We conducted such a study by removing the major blocks of our architecture, as described in Figure 1. More specifically, our architecture contains three major blocks (B1, B2, B3), and we conducted experiments in which we removed each block, starting from the “top” of the deep architecture. The results, provided in Table 9, show the significance of block B1, which is responsible for projecting the input into a much higher dimension. Including block B1 in the deep architecture significantly boosts the performance of the final neural network.

5. Discussion and Conclusions

In this study, a large number of subjects were used to design an EEG-based biometric system, and a large number of SSVEP stimuli were used to test the performance of the proposed approach. The results provide evidence that a wide range of stimulus frequencies can be used without sacrificing system performance. In addition, the experiments with the EPOC device provide evidence that wearable EEG devices can be used to detect SSVEP responses for biometric purposes. Finally, a further characteristic of our approach is the use of CSP features, which, to our knowledge, are used here for the first time to identify persons. The CSP algorithm allows us to design personalized spatial filters to identify a person, and our results show that the spatial patterns of the brain have discriminative properties for person identification.

This work demonstrates that the proposed DFN outperformed more modern neural network architectures such as CNNs. For person identification, the proposed DFN consistently achieved the highest performance among the evaluated methods. Additionally, it performed better than CNN architectures in SSVEP-related biometric scenarios [36]. This suggests that, with a proper choice of features, a deep MLP architecture can boost the performance of conventional neural networks. Furthermore, modern deep learning architectures are ordinarily trained on high-performance computing facilities, due to the large size of their input features and the complexity of their models. Our work shows that a traditional MLP with deep layers and a small input size can provide efficient performance and overcome the computational requirements of modern approaches.

In the BCI community, a BCI speller is a tool that measures brain signals and converts them into commands without the participation of the peripheral nervous system. When an SSVEP-based BCI speller is adopted, we can design a graphical interface that resembles the traditional QWERTY keyboard. This property of the SSVEP speller allows us to design a two-step authentication system. In such a system, the user enters a PIN through the SSVEP speller while the authentication system simultaneously recognizes the user through his/her SSVEP responses. This combines two modalities for the authentication process: what you know and who you are [33]. Furthermore, such systems could be very helpful when the user is unable to enter a password by conventional means such as a keyboard. These situations arise for people with conditions that affect the central nervous system, such as Parkinson’s disease, amyotrophic lateral sclerosis (ALS), and tetraplegia. However, in the design of SSVEP-based biometric systems, we must consider the fatigue evoked by the flicker [51]. To alleviate this effect, it is important to design systems that use short SSVEP signals. Problems also arise from the SSVEP illiteracy phenomenon, in which some people do not produce usable SSVEP responses [52]. Finally, the flickering luminance or chromatic stimuli used to evoke SSVEPs can trigger epileptic responses, so special care must be taken with respect to this issue [51].
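The two-step scheme described above reduces to a simple acceptance rule: both the knowledge factor and the biometric factor must agree with the claimed identity. The following is a minimal sketch of that rule. All names (`AuthAttempt`, `authenticate`, `stored_pins`) are hypothetical. The speller decoding and the SSVEP-based identification are assumed to happen upstream. A real system would store salted PIN hashes rather than plain PINs.

```python
import hmac
from dataclasses import dataclass

@dataclass
class AuthAttempt:
    claimed_user: str
    decoded_pin: str      # PIN symbols decoded by the SSVEP speller (assumed upstream)
    identified_user: str  # identity predicted from the SSVEP responses (assumed upstream)

def authenticate(attempt, stored_pins):
    """Accept only if what you know (PIN) and who you are (EEG) both match."""
    expected = stored_pins.get(attempt.claimed_user)
    if expected is None:
        return False
    # Constant-time comparison of the PIN strings
    knows_pin = hmac.compare_digest(attempt.decoded_pin.encode(), expected.encode())
    is_user = attempt.identified_user == attempt.claimed_user
    return knows_pin and is_user
```

Because both checks must pass, a stolen PIN alone or a matching EEG identity alone is not sufficient for access.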

The non-stationarity of EEG signals is considered a major challenge in EEG signal processing, and much effort has been devoted to addressing it [53]. EEG non-stationarities can arise from neurophysiological mechanisms related to learning, plasticity, and aging. They can also arise from experimental conditions, such as recording quality, device configuration, and small changes in electrode locations. Hence, a significant issue affecting the performance and acceptability of an EEG-based biometric system is the permanence of EEG features over time. To address this, EEG signals must be collected across multiple sessions for the particular acquisition protocol. Many studies in the literature have followed this approach; however, the interval between sessions is typically one month. This makes it difficult to study the performance of EEG-based biometric systems over longer intervals (more than a year). One solution could be to re-calibrate the EEG database at specific time intervals. Furthermore, from an algorithmic perspective, lifelong learning algorithms could be very useful in EEG-based biometric systems. Such algorithms can take into account the time-varying distribution of EEG features and covariate shifts of the features [53]. Future directions of our study include extending our approach to authentication and continuous authentication scenarios [24]. In addition, multi-modal systems combining portable EEG devices with gait analysis using IoT devices [54] could provide valuable information for person identification.

In concluding this paper, we emphasize that we have proposed a new methodology for person identification using SSVEP signals, based on the combination of personalized spatial filters and deep neural networks. The results on two SSVEP datasets show the usefulness of our approach, especially in terms of the usability measure, which allows us to compare our methodology with other brain biometric methodologies. Comparisons with other classification schemes, such as SVM-FBCSP and kNN-FBCSP, show the superiority of our approach. Additionally, the results of the ablation study justify the proposed deep architecture over its reduced variants. Finally, comparisons with other methodologies in terms of the U measure highlight the main advantage of our approach: a high recognition rate using EEG signals of very short duration (0.5 s).