Accurate 3D to 2D Object Distance Estimation from the Mapped Point Cloud Data

Abstract

1. Introduction

- Triangulation measurement systems consist of a camera and a laser transmitter positioned at a fixed angle to each other. The position of the laser transmitter relative to the camera, including the distance between them (the baseline), is known. The laser projects a pattern (e.g., a spot) onto the target object, and this pattern is visible in the camera image. Depending on the distance to the surface, the spot appears at a different position in the camera's field of view, so the distance between the laser source and the target object can be calculated by trigonometry.

- Time-of-flight measurement systems measure the time a laser pulse needs to travel from the moment it is emitted until the light reflected by the target is detected. Because this is a round trip, the distance to the target is half of the path length computed from the measured time, the speed of light, and the refractive index of the medium. Such systems are used for long-range distance estimation in applications such as space LiDAR.

- Phase-shift measurement systems require a continuous-wave laser whose intensity is modulated; the distance is determined from the phase difference between the emitted and the reflected beams.
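The three ranging principles above reduce to simple closed-form relations. The Python sketch below is illustrative only and is not part of the proposed method. It assumes a planar camera-laser geometry in which both angles are measured from the baseline (so the law of sines applies), a pinhole camera whose optical axis is perpendicular to the baseline for the pixel-to-angle helper, and a single modulation frequency for the phase-shift case, where the result is unique only within half the modulation wavelength. All function names are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum


def triangulation_distance(baseline_m: float, laser_angle: float, camera_angle: float) -> float:
    """Laser-to-target distance by the law of sines (angles in radians).

    laser_angle: angle at the laser between the baseline and the laser beam.
    camera_angle: angle at the camera between the baseline and the line of sight to the spot.
    """
    apex = math.pi - laser_angle - camera_angle  # angle of the triangle at the target
    if apex <= 0:
        raise ValueError("the rays do not intersect in front of the sensor")
    return baseline_m * math.sin(camera_angle) / math.sin(apex)


def camera_angle_from_pixel(u: float, cx: float, focal_px: float) -> float:
    """Camera angle from the spot's image column (assumed pinhole model, optical axis
    perpendicular to the baseline, image u axis pointing toward the laser)."""
    return math.pi / 2 - math.atan((u - cx) / focal_px)


def time_of_flight_distance(round_trip_s: float, refractive_index: float = 1.0) -> float:
    """Half of the round-trip path travelled at c / n."""
    return SPEED_OF_LIGHT / refractive_index * round_trip_s / 2.0


def phase_shift_distance(phase_rad: float, mod_freq_hz: float, refractive_index: float = 1.0) -> float:
    """d = c * dphi / (4 * pi * f_mod * n); unambiguous only below c / (2 * f_mod * n)."""
    return SPEED_OF_LIGHT / refractive_index * phase_rad / (4.0 * math.pi * mod_freq_hz)
```

For example, a 1 µs round trip in air (n ≈ 1) corresponds to about 150 m, and a phase shift of π at a 10 MHz modulation frequency to about 7.5 m.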

- We present robust point cloud processing for distance estimation using a bird's eye view (BEV) map and image-based triangulation;

- We propose an image-based approach for metric distance estimation from mapped point cloud data, in which 3D bounding boxes predicted by the E-RPN (Euler-region proposal network) model are projected onto the image;

- We demonstrate the viability of extracting all parameters that define the objects' bounding boxes from the BEV representation, including their height and elevation, even though the BEV representation generally struggles to capture features related to the height coordinate.

2. Related Work

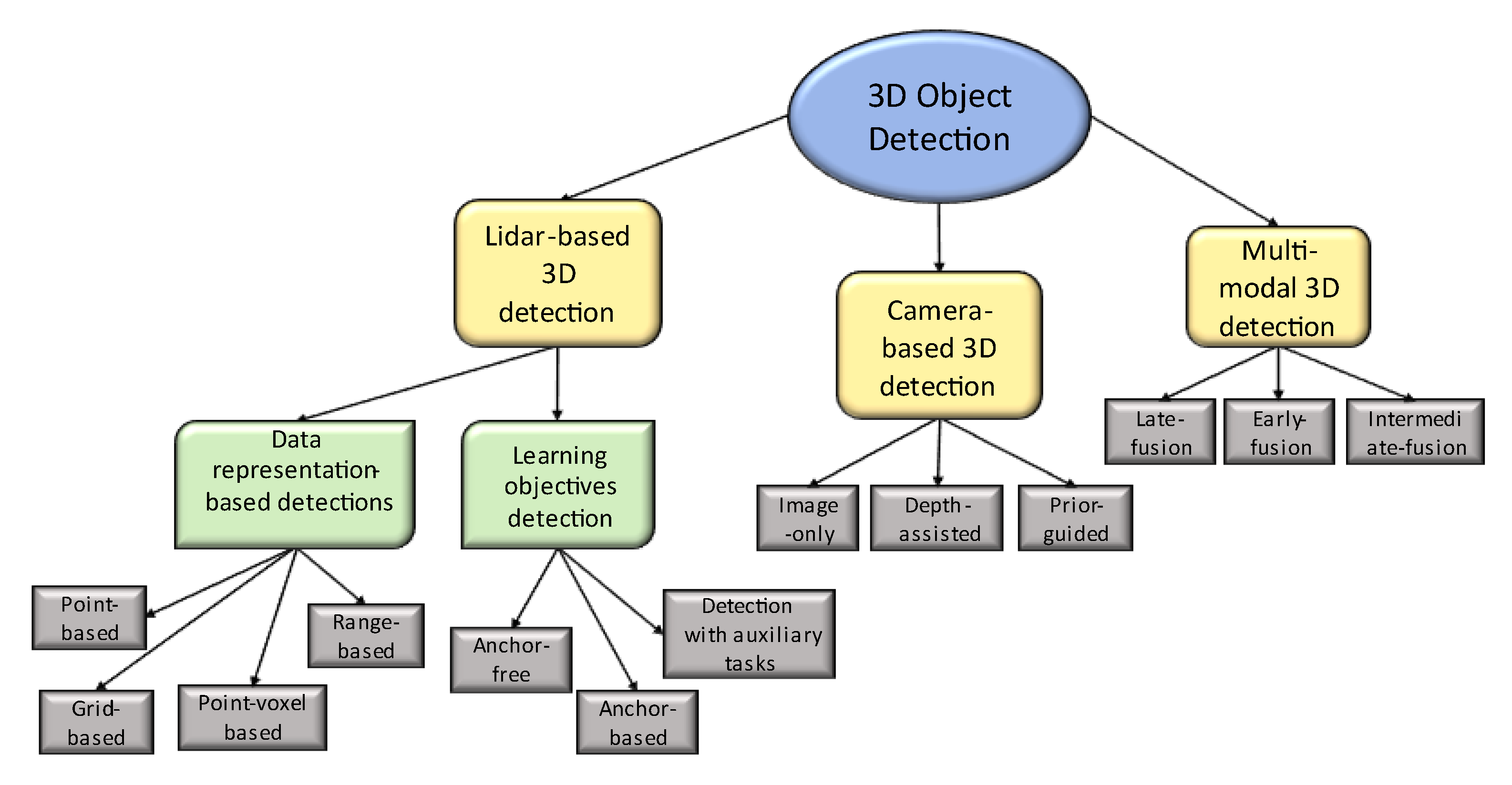

2.1. 3D Object Detection

- LiDAR-based detection, grouped by:

    - data representation (points, grids, point-voxels, and range images);

    - learning objective;

- Camera-based detection (monocular 3D object detection);

- Multimodal detection.

2.1.1. Point-Based Detection

2.1.2. Grid-Based 3D Detection

2.2. Distance Estimation from 3D Object Detection

3. Proposed Method

3.1. KITTI Dataset

3.2. Point Cloud Conversion

3.3. Bird’s Eye View Representation

| Algorithm 1. Pseudo-code for LiDAR to BEV conversion |
|---|
| start: PointCloud2BEV function (points) |
| input variables: |
| Resolution = 0.1 # meters per pixel |
| Side range = (−20, 20) # 20 m to the left and 20 m to the right |
| Forward range = (0, 50) # points behind the vehicle are not considered |
| Height range = (−1.8, 1.25) # assuming the LiDAR position to be at 1.25 m |
| processing: |
| Create a 2D bird's eye view representation of the point cloud data |
| Extract the points for each axis |
| Apply three filters for the front-to-back, side-to-side, and height ranges |
| Convert the points to pixel positions based on the resolution |
| Shift the pixels so that the minimum is at (0, 0) |
| Assign height values within the range (−1.8, 1.25 m) |
| Rescale the height values to between 0 and 255 |
| Fill the pixel values into an image array |
| output: Return the BEV image |
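Algorithm 1 can be expressed as a short NumPy sketch. It follows the listed steps and parameters, but several details are our assumptions rather than the authors' code: the KITTI Velodyne axis convention (x forward, y left, z up), image rows running from the far edge of the forward range toward the vehicle, inclusive range bounds, and keeping the highest point when several points fall into the same pixel. The function name `point_cloud_to_bev` is hypothetical.

```python
import numpy as np


def point_cloud_to_bev(points, res=0.1, side_range=(-20.0, 20.0),
                       fwd_range=(0.0, 50.0), height_range=(-1.8, 1.25)):
    """Height-encoded BEV image from an (N, >=3) LiDAR array (x forward, y left, z up)."""
    points = np.asarray(points, dtype=float)
    x, y, z = points[:, 0], points[:, 1], points[:, 2]

    # Three filters: front-to-back (x), side-to-side (-y, so side_range[0] is the
    # left edge) and height (z). Bounds are treated as inclusive (assumption).
    keep = ((x >= fwd_range[0]) & (x <= fwd_range[1])
            & (-y >= side_range[0]) & (-y <= side_range[1])
            & (z >= height_range[0]) & (z <= height_range[1]))
    x, y, z = x[keep], y[keep], z[keep]

    # Convert metres to pixel indices and shift so the minimum lands at (0, 0):
    # column 0 is the left edge, row 0 is the far edge of the forward range.
    width = int(round((side_range[1] - side_range[0]) / res)) + 1
    height = int(round((fwd_range[1] - fwd_range[0]) / res)) + 1
    cols = np.clip(np.floor((-y - side_range[0]) / res).astype(np.int64), 0, width - 1)
    rows = np.clip(np.floor((fwd_range[1] - x) / res).astype(np.int64), 0, height - 1)

    # Keep heights inside the range and rescale them to 0..255.
    lo, hi = height_range
    z = np.clip(z, lo, hi)
    values = ((z - lo) / (hi - lo) * 255.0).astype(np.uint8)

    # Fill the image; if several points share a pixel, keep the highest (assumption).
    bev = np.zeros((height, width), dtype=np.uint8)
    np.maximum.at(bev, (rows, cols), values)
    return bev
```

A KITTI Velodyne scan is stored as float32 (x, y, z, reflectance) records, so it can be loaded with `np.fromfile(path, dtype=np.float32).reshape(-1, 4)`; the reflectance column is ignored here. With the default parameters the output is a 501 × 401 single-channel image. Empty pixels and points at the lowest allowed height both map to 0, which a real pipeline may want to distinguish.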

3.4. Bounding Box Derivation

- Limit the complex angle to be in boundaries ;

- Invert the rigid-body transformation matrix (3 × 4 as );

- Recreate a 3D bounding box in the Velodyne coordinate system and then convert it to camera coordinates. The translational difference between the sensors is estimated by considering the following matrix multiplication:

- The target boxes are rescaled, their yaw angles are obtained, and the boxes are drawn on the image.
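The steps above can be sketched in NumPy under the KITTI calibration conventions, where a homogeneous LiDAR point is mapped to pixel coordinates by P2 · R0_rect · Tr_velo_to_cam (the matrix names used in the KITTI calibration files). This is an illustrative sketch, not the authors' implementation: the exact equations of this subsection are not reproduced here, the box is assumed to be parameterized by its geometric centre, dimensions, and yaw in the LiDAR frame, and all function names are hypothetical. The yaw is recovered from its complex (real, imaginary) encoding with atan2, as in Complex-YOLO, which keeps it within [−π, π].

```python
import numpy as np


def yaw_from_complex(im: float, re: float) -> float:
    """Yaw encoded as the complex number re + i*im; atan2 keeps it in [-pi, pi]."""
    return float(np.arctan2(im, re))


def inverse_rigid_transform(tr: np.ndarray) -> np.ndarray:
    """Invert a 3x4 rigid-body transform [R | t] into [R^T | -R^T t]."""
    rot, t = tr[:, :3], tr[:, 3]
    inv = np.zeros((3, 4))
    inv[:, :3] = rot.T
    inv[:, 3] = -rot.T @ t
    return inv


def box_corners_velo(x, y, z, l, w, h, yaw):
    """Eight corners of a 3D box in LiDAR coordinates (x forward, y left, z up).

    Assumption: (x, y, z) is the geometric centre of the box and yaw rotates about z.
    """
    dx = np.array([1, 1, -1, -1, 1, 1, -1, -1]) * l / 2
    dy = np.array([1, -1, -1, 1, 1, -1, -1, 1]) * w / 2
    dz = np.array([-1, -1, -1, -1, 1, 1, 1, 1]) * h / 2
    c, s = np.cos(yaw), np.sin(yaw)
    rot_z = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return (rot_z @ np.vstack([dx, dy, dz])).T + np.array([x, y, z])


def project_velo_to_image(pts_velo, tr_velo_to_cam, r0_rect, p2):
    """Project (N, 3) LiDAR points: pixel ~ P2 @ R0_rect @ Tr_velo_to_cam @ [x y z 1]^T.

    tr_velo_to_cam is 3x4, r0_rect is 3x3, p2 is 3x4 (KITTI calibration layout).
    Returns (N, 2) pixel coordinates and (N, 3) rectified camera coordinates.
    """
    n = pts_velo.shape[0]
    hom = np.hstack([pts_velo, np.ones((n, 1))])      # (N, 4) homogeneous LiDAR points
    cam = r0_rect @ (tr_velo_to_cam @ hom.T)          # (3, N) rectified camera frame
    img = p2 @ np.vstack([cam, np.ones((1, n))])      # (3, N) homogeneous image points
    return (img[:2] / img[2]).T, cam.T
```

Corners whose depth (third column of the returned camera coordinates) is not positive lie behind the camera and should be discarded before the box is drawn.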

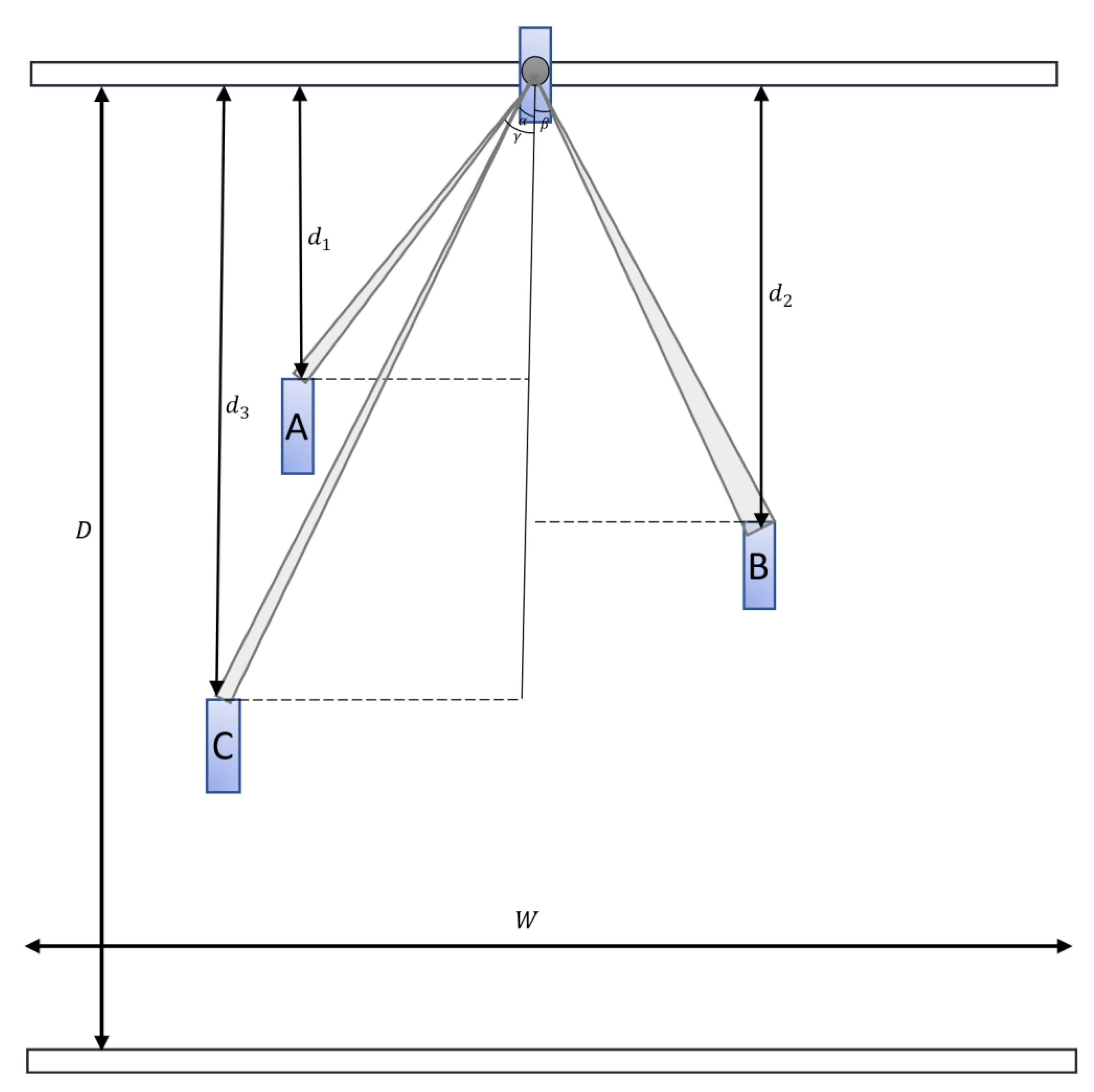

3.5. Distance Estimation

3.6. Evaluation

4. Experiments and Results

5. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement


Conflicts of Interest

References

1. Simon, M.; Milz, S.; Amende, K.; Gross, H.M. Complex-YOLO: Real-time 3D Object Detection on Point Clouds. arXiv 2018, arXiv:1803.06199v2.

2. Zhang, S.; Wang, C.; Dong, W.; Fan, B. A Survey on Depth Ambiguity of 3D Human Pose Estimation. Appl. Sci. 2022, 12, 10591.

3. Davydov, Y.; Chen, W.; Lin, Y. Supervised Object-Specific Distance Estimation from Monocular Images for Autonomous Driving. Sensors 2022, 22, 8846.

4. Huang, L.; Zhe, T.; Wu, J.; Wu, Q.; Pei, C.; Chen, D. Robust Inter-Vehicle Distance Estimation Method Based on Monocular Vision. IEEE Access 2019, 7, 46059–46070.

5. Sun, L.; Manabe, Y.; Yata, N. Double Sparse Representation for Point Cloud Registration. ITE Trans. Media Technol. Appl. 2019, 7, 148–158.

6. Geiger, A.; Lenz, P.; Urtasun, R. Are We Ready for Autonomous Driving? The KITTI Vision Benchmark Suite; CVPR: Washington, DC, USA, 2012.

7. Godard, C.; Mac Aodha, O.; Brostow, G. Unsupervised Monocular Depth Estimation with Left-Right Consistency. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

8. Godard, C.; Mac Aodha, O.; Firman, M.; Brostow, G. Digging into Self-Supervised Monocular Depth Estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

9. Abbasi, R.; Bashir, A.K.; Alyamani, H.J.; Amin, F.; Doh, J.; Chen, J. LiDAR Point Cloud Compression, Processing and Learning for Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2023, 24, 962–979.

10. Barrera, A.; Guindel, C.; Beltrán, J.; García, F. BirdNet+: End-to-End 3D Object Detection in LiDAR Bird's Eye View. In Proceedings of the 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020.

11. Liebe, C.C.; Coste, K. Distance Measurement Utilizing Image-Based Triangulation. IEEE Sens. J. 2013, 13, 234–244.

12. Mao, J.; Shi, S.H.; Wang, X.; Li, H. 3D Object Detection for Autonomous Driving: A Review and New Outlooks. arXiv 2022, arXiv:2206.09474v1.

13. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

14. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. Adv. Neural Inf. Process. Syst. 2017, 30, 5105–5114.

15. Ngiam, J.; Caine, B.; Han, W.; Yang, B.; Chai, Y.; Sun, P.; Zhou, Y.; Yi, X.; Alsharif, O.; Nguyen, P.; et al. StarNet: Targeted Computation for Object Detection in Point Clouds. arXiv 2019, arXiv:1908.11069v3.

16. Ali, W.; Abdelkarim, S.; Zidan, M.; Zahran, M.; El Sallab, A. YOLO3D: End-to-End Real-Time 3D Oriented Object Bounding Box Detection from LiDAR Point Cloud (ECCV Workshops). arXiv 2018, arXiv:1808.02350v1.

17. Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-View 3D Object Detection Network for Autonomous Driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

18. Lee, S.; Han, K.; Park, S.; Yang, X. Vehicle Distance Estimation from a Monocular Camera for Advanced Driver Assistance Systems. Symmetry 2022, 14, 2657.

19. Kumar, G.A.; Lee, J.H.; Hwang, J.; Park, J.; Youn, S.H.; Kwon, S. LiDAR and Camera Fusion Approach for Object Distance Estimation in Self-Driving Vehicles. Symmetry 2020, 12, 324.

20. Bochkovskiy, A.; Wang, C.; Liao, H.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

21. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

22. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

23. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

24. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2015, 37, 1904–1916.

25. Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A New Backbone that can Enhance Learning Capability of CNN. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshop (CVPR Workshop), Seattle, WA, USA, 13–19 June 2020.

26. Nguyen, M.D. Complex YOLOv4. 2020. Available online: https://github.com/maudzung/Complex-YOLOv4-Pytorch (accessed on 7 July 2022).

27. Franca, J.G.D.; Gazziro, M.A.; Ide, A.N.; Saito, J.H. A 3D Scanning System Based on Laser Triangulation and Variable Field of View. In Proceedings of the IEEE International Conference on Image Processing, Genova, Italy, 14 September 2005; p. I-425.

28. Li, E.; Wang, S.; Li, C.; Li, D.; Wu, X.; Hao, Q. SUSTech POINTS: A Portable 3D Point Cloud Interactive Annotation Platform System. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 1108–1115.

29. Zhou, T.; Brown, M.; Snavely, N.; Lowe, D.G. Unsupervised Learning of Depth and Ego-Motion from Video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6612–6619.

30. Ding, M.; Zhang, Z.; Jiang, X.; Cao, Y. Vision-based Distance Measurement in Advanced Driving Assistance Systems. Appl. Sci. 2020, 10, 7276.

31. Liang, H.; Ma, Z.; Zhang, Q. Self-supervised Object Distance Estimation Using a Monocular Camera. Sensors 2022, 22, 2936.

32. Kim, J.B. Efficient Vehicle Detection and Distance Estimation Based on Aggregated Channel Features and Inverse Perspective Mapping from a Single Camera. Symmetry 2019, 11, 1205.

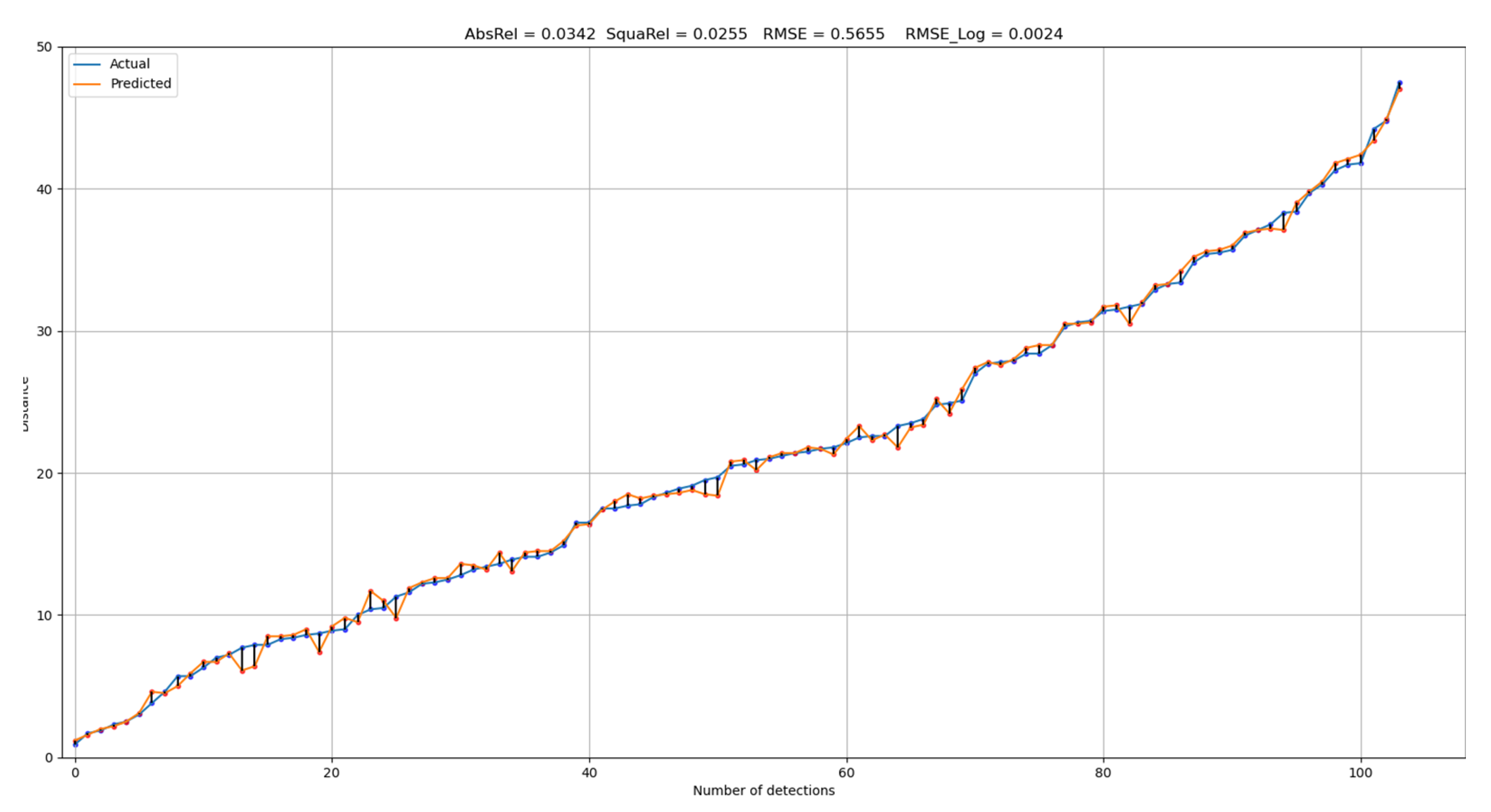

| Distance (m) | AbsRel | SquaRel | RMSE | RMSLE |
|---|---|---|---|---|
| 0–10 | 0.0836 | 0.0615 | 0.6477 | 0.0078 |
| 10–20 | 0.0337 | 0.0282 | 0.6208 | 0.0019 |
| 20–30 | 0.0160 | 0.0105 | 0.4969 | 0.0004 |
| 30–40 | 0.0100 | 0.0068 | 0.4843 | 0.0002 |
| 40–50 | 0.0103 | 0.0056 | 0.4843 | 0.0002 |
| Overall | 0.0342 | 0.0255 | 0.5655 | 0.0024 |
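The error metrics in the table above are commonly defined as in the monocular depth-estimation literature: AbsRel is the mean of |d − d*|/d*, SquaRel (often written SqRel) the mean of (d − d*)²/d*, RMSE the root-mean-square error, and RMSLE the root-mean-square error of the log distances, where d is the estimate and d* the ground truth; the threshold accuracy often reported alongside them is the fraction of estimates with max(d/d*, d*/d) < 1.25^k. The sketch below assumes these standard definitions with the natural logarithm. The formulas behind the reported values are not restated in this section, so normalization details may differ; the function names and the 10 m bin edges (taken from the table rows) are illustrative.

```python
import numpy as np


def distance_metrics(pred, gt):
    """Common error metrics between estimated (pred) and ground-truth (gt) distances in metres."""
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    if pred.shape != gt.shape or pred.size == 0:
        raise ValueError("pred and gt must be non-empty arrays of equal shape")
    if np.any(pred <= 0) or np.any(gt <= 0):
        raise ValueError("distances must be positive")
    diff = pred - gt
    ratio = np.maximum(pred / gt, gt / pred)  # symmetric ratio for threshold accuracy
    return {
        "AbsRel": float(np.mean(np.abs(diff) / gt)),
        "SqRel": float(np.mean(diff ** 2 / gt)),
        "RMSE": float(np.sqrt(np.mean(diff ** 2))),
        "RMSLE": float(np.sqrt(np.mean((np.log(pred) - np.log(gt)) ** 2))),
        "delta<1.25": float(np.mean(ratio < 1.25)),
        "delta<1.25^2": float(np.mean(ratio < 1.25 ** 2)),
        "delta<1.25^3": float(np.mean(ratio < 1.25 ** 3)),
    }


def metrics_by_range(pred, gt, edges=(0, 10, 20, 30, 40, 50)):
    """Evaluate separately per ground-truth range [lo, hi); empty bins are skipped."""
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    results = {}
    for lo, hi in zip(edges[:-1], edges[1:]):
        mask = (gt >= lo) & (gt < hi)
        if mask.any():
            results[f"{lo}-{hi}"] = distance_metrics(pred[mask], gt[mask])
    return results
```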

| Number of Detections per Scene | Car 1 | Car 2 | Car 3 | Car 4 | Car 5 | Car 6 | Car 7 | |

|---|---|---|---|---|---|---|---|---|

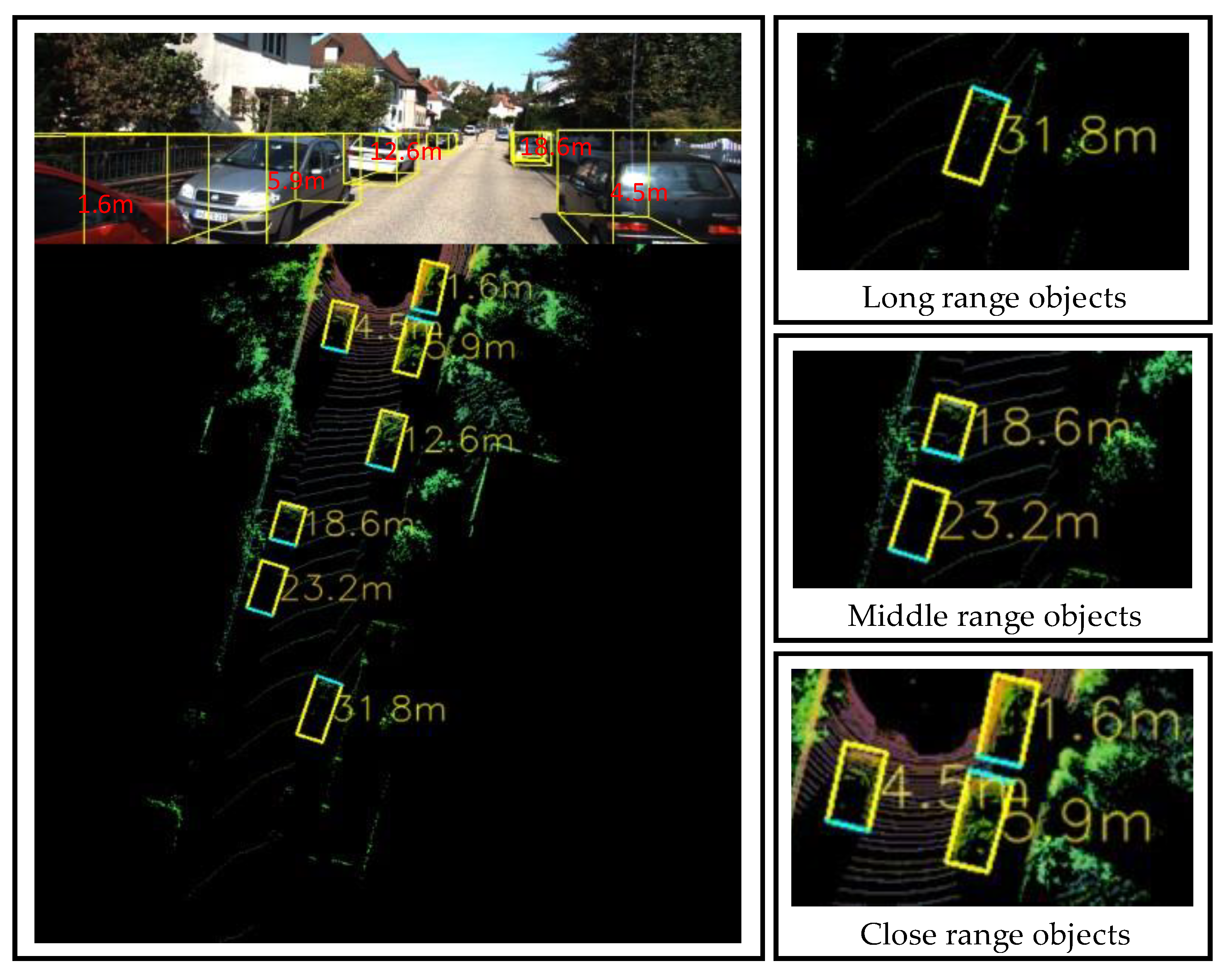

| Distance Verification | Actual (m) | 1.7 | 4.6 | 5.7 | 12.5 | 18.9 | 23.5 | 31.5 |

| Estimated (m) | 1.6 | 4.5 | 5.9 | 12.6 | 18.6 | 23.2 | 31.8 | |

| Absolute error (m) | 0.1 | 0.1 | 0.2 | 0.1 | 0.3 | 0.3 | 0.3 | |

| Relative error (%) | 5.88 | 2.17 | 3.51 | 0.80 | 1.59 | 1.28 | 0.95 | |

| Average error (m) | 0.2 | |||||||

| Average error (%) | 2.31 | |||||||
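The absolute and relative errors in the table follow directly from the actual and estimated distances. The short Python check below recomputes them (relative error = absolute error / actual distance × 100) together with the two averages, using only the values listed in the table; `error_summary` is a hypothetical helper name.

```python
# Actual and estimated distances (m) for Car 1 ... Car 7, copied from the table above.
ACTUAL_M = [1.7, 4.6, 5.7, 12.5, 18.9, 23.5, 31.5]
ESTIMATED_M = [1.6, 4.5, 5.9, 12.6, 18.6, 23.2, 31.8]


def error_summary(actual, estimated):
    """Per-object absolute (m) and relative (%) errors plus their averages."""
    abs_err = [abs(e - a) for a, e in zip(actual, estimated)]
    rel_err = [100.0 * d / a for d, a in zip(abs_err, actual)]
    return abs_err, rel_err, sum(abs_err) / len(abs_err), sum(rel_err) / len(rel_err)


if __name__ == "__main__":
    abs_err, rel_err, mean_abs, mean_rel = error_summary(ACTUAL_M, ESTIMATED_M)
    # Rounded to the precision used in the table, these reproduce its rows.
    print([round(v, 1) for v in abs_err])
    print([round(v, 2) for v in rel_err])
    print(round(mean_abs, 1), round(mean_rel, 2))
```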

| Study | Methodology | Error Metric | Accuracy (in %) | |||||||
|---|---|---|---|---|---|---|---|---|---|---|
| | Stereo | Monocular | LiDAR | AbsRel | SquaRel | RMSE | RMSLE | |||
| Lee et al. [18] | ✓ | 0.047 | 0.116 | 2.091 | 0.076 | 0.982 | 0.996 | 1.000 | ||
| Zhou et al. [29] | ✓ | 0.183 | 6.709 | 0.270 | 0.734 | 0.902 | 0.959 | |||
| Ding et al. [30] | ✓ | 0.071 | 3.740 | 0.934 | 0.979 | 0.992 | ||||
| Liang et al. [31] | ✓ | 0.101 | 0.715 | 0.178 | 0.899 | 0.981 | 0.990 | |||
| Ours | ✓ | 0.0342 | 0.0255 | 0.5655 | 0.0024 | 0.980 | 1.000 | 1.000 | ||

| Distance (m) | Ours | Lee et al. [18] | Kim et al. [32] | Kumar et al. [19] |
|---|---|---|---|---|
| 0–10 | 0.913 | 0.983 | 0.980 | × |
| 10–20 | 1.000 | 0.987 | 0.922 | × |
| 20–30 | 1.000 | 0.984 | 0.917 | 0.980 |
| 30–40 | 1.000 | 0.995 | 0.913 | × |
| 40–50 | 1.000 | 0.975 | 0.912 | 0.963 |
| 50–60 | × | 0.974 | × | × |
| 60–70 | × | 0.931 | × | × |
| 70–80 | × | 0.963 | × | 0.960 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Usmankhujaev, S.; Baydadaev, S.; Kwon, J.W. Accurate 3D to 2D Object Distance Estimation from the Mapped Point Cloud Data. Sensors 2023, 23, 2103. https://doi.org/10.3390/s23042103